Sub-Sequential Physics-Informed Learning with State Space Model

Abstract

Physics-Informed Neural Networks (PINNs) are a kind of deep-learning-based numerical solvers for partial differential equations (PDEs). Existing PINNs often suffer from failure modes of being unable to propagate patterns of initial conditions. We discover that these failure modes are caused by the simplicity bias of neural networks and the mismatch between PDE’s continuity and PINN’s discrete sampling. We reveal that the State Space Model (SSM) can be a continuous-discrete articulation allowing initial condition propagation, and that simplicity bias can be eliminated by aligning a sequence of moderate granularity. Accordingly, we propose PINNMamba, a novel framework that introduces sub-sequence modeling with SSM. Experimental results show that PINNMamba can reduce errors by up to 86.3% compared with state-of-the-art architecture. Our code is available at \urlhttps://github.com/miniHuiHui/PINNMamba.

1 Introduction

In the past few years, Physics-Informed Neural Networks (PINNs) (Raissi et al., 2019) have emerged as a novel approach for numerically solving partial differential equations (PDEs). PINN takes a neural network , whose parameters are trained with physics PDE residual loss, as the numerical solution of the PDE, where and are spatial and temporal coordinates. The core idea behind PINNs is to take advantage of the universal approximation property of neural networks (Hornik et al., 1989) and automatic differentiation implemented by mainstream deep learning frameworks, such as PyTorch (Paszke et al., 2019) and Tensorflow (Abadi et al., 2016), so that PINNs can achieve potentially more precise and efficient PDE solution approximation compared with traditional numerical approaches like finite element methods (Reddy, 1993).

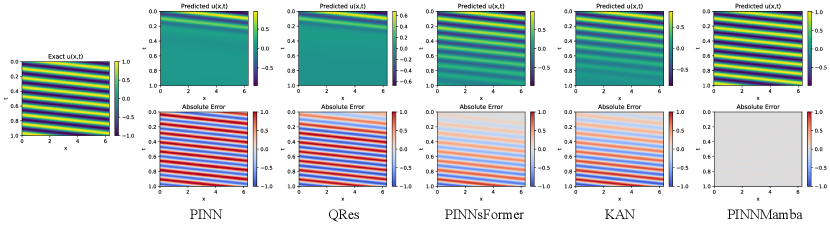

The mainstream PINNs predominantly employ multilayer perceptrons (MLPs) as their backbone architecture. However, despite the universal approximation capability of MLPs, they do not always guarantee the accurate learning of numerical solutions to PDEs in practice. This phenomenon is observed as the failure modes in PINNs, in which case PINN provides a completely wrong approximation (Krishnapriyan et al., 2021). As illustrated in Fig. 1, the failure modes often manifest as a temporal gradual distortion. This distortion arises because MLPs lack the necessary inductive bias to effectively capture the temporal dependencies of a system, ultimately hindering the accurate propagation of physical patterns informed by the initial conditions.

To introduce such an inductive bias, several sequence-to-sequence approaches have been proposed (Krishnapriyan et al., 2021; Zhao et al., 2024; Yang et al., 2022; Gao et al., 2022). Specifically, Krishnapriyan et al. (2021) propose training a new network at each time step and recursively using its output as the initial condition for the next step. This method incurs significant computational and memory overhead while exhibiting poor generalization. Furthermore, Transformer-based approaches (Zhao et al., 2024; Yang et al., 2022; Gao et al., 2022) are proposed to address the time-dependency issue. Yet, these methods are based on discrete sequences, making their model produce incorrect pattern mutations in some cases due to their ignorance of the basic principle that PINNs approximate continuous dynamical systems. Thus, there is still an open question:

How can we effectively introduce sequentiality to PINNs?

To answer this question, we need to understand the essential difficulties of training PINNs. First, PINNs assume temporal continuity, whereas, during their actual training, the spatio-temporal collection points used to construct the PDE residual loss are sampled discretely. We define this nature of PINN as Continuous-Discrete Mismatch. In the absence of a well-defined continuous-discrete articulation, the real trajectory of physical system is not necessarily recovered correctly in the training process, since such Continuous-Discrete Mismatch would block the propagation of the initial condition.

To respect the inherent Continuous-Discrete Mismatch, we reveal that the State Space Models (SSM) (Kalman, 1960) can be a good continuous-discrete articulation. SSMs parametrically model a discrete temporal sequence as a continuous dynamic system. An SSM’s discrete form approximates the instantaneous states and rates of change of its continuous form via integrating the system’s dynamics over each time interval, which more accurately responds to the trajectory of a continuous system. Meanwhile, the SSM unifies the scale of derivatives of different orders, making it easier to be optimized. So far, SSMs have shown their insane capacity in language (Gu & Dao, 2023) and vision (Liu et al., 2024a) tasks, but its potential for solving PDEs remains unexplored. We propose to construct PINNs with SSMs to unleash their excellent properties of continuous-discrete articulation.

Next, we remark that the simplicity bias (Shah et al., 2020) of neural networks is another crucial contributing factor to PINN training difficulty. The simplicity bias will lead the model to choose the pattern with the simplest hypothesis. This results in an over-smoothed solution when approximating PDEs. Because, for data-free PINNs, there might be a very simple function in the feasible domain that can make the residual loss zero. For example, for convection equation, leads to zero empirical loss on every collection point except when . While the correct pattern is hard to fight against over-smoothed patterns during training.

A major way to eliminate simplicity bias is to construct agreements over diversity predictions (Teney et al., 2022). Following this principle, we propose a novel sub-sequence alignment approach, which allows the diverse predictions of time-varying SSMs to form such agreements. Sub-sequence modeling adopts a medium sequence granularity, forming the time dependency that a small sequence fails to capture while avoiding the optimization problem along with the long sequence. Meanwhile, the alignment of the sub-sequence predictions ensures the global pattern propagation and the formation of an agreement that eliminates simplicity bias.

In this paper, we introduce a novel learning framework to solve physics PDE’s numerically, named PINNMamba, which performs time sub-sequences modeling with the Selective SSMs (Mamba) (Gu & Dao, 2023). PINNMamba successfully captures the temporal dependence within the PDE when training the continuous dynamical systems with discretized collection points. To the best of our knowledge, PINNMamba is the first data-free SSM-based model that effectively solve physics PDE. Experiments show that PINNMamba outperforms other PINN approaches such as PINNsFormer (Zhao et al., 2024) and KAN (Liu et al., 2024c) on multiple hard problems, achieving a new state-of-the-art.

Contributions. We make the following contributions:

-

•

We reveal that the mismatch between the discrete nature of the training collection points and the continuous nature of the function approximated by the PINNs is an important factor that prevents the propagation of the initial condition pattern over time in PINNs.

-

•

We also note that the simplicity bias of neural networks is a key contributing factor to the over-smoothing pattern that causes gradual distortion in PINNs.

-

•

We propose PINNMamba, which eliminates the discrete-continuity mismatch with SSM and combats simplicity bias with sub-sequential modeling, resulting in state-of-the-art on several PINN benchmarks.

2 Preliminary

Due to page limit, we discuss related works in Appendix A.

Physics-Informed Neural Networks. The PDE systems that are defined on spatio-temporal set and described by equation constraints, boundary conditions, and initial conditions can be formulated as:

| (1) |

| (2) |

| (3) |

where is the solution of the PDE, is the spatial coordinate, is the boundary of , is the temporal coordinate and is the time horizon. The denote the operators defined by PDE equations, initial conditions, and boundary conditions respectively.

A physics-driven PINN first builds a finite collection point set , and its spatio (temporal) boundary (), then employs a neural network which is parameterized by to approximate by optimizing the residual loss as defined in Eq. 7:

| (4) |

| (5) |

| (6) |

| (7) |

where ,, are the weights for loss that are adjustable by auto-balancing or hyperparameters. denotes -norm.

State Space Models. An SSM describes and analyzes a continuous dynamic system. It is typically described by:

| (8) | ||||

| (9) |

where is hidden state of time , is the derivative of . is the input state of time , is the output state, and are state transition matrices.

In real-world applications, we can only sample in discrete time for building a deep SSM model. We usually omit the term in deep SSM models because it can be easily implemented by residual connection (He et al., 2016). So we create a discrete time counterpart:

| (10) | ||||

| (11) |

with discretization rules such as zero-order hold (ZOH):

| (12) | ||||

| (13) |

where and is discrete time state transfer and input matrix, and is a step size parameter. By parameterizing using HiPPO matrix (Gu et al., 2020), and parameterizing with input-dependency, a time-varying Selective SSM can be constructed (Gu & Dao, 2023). Such a Selective SSM can capture the long-time continual dependencies in dynamic systems. We will argue that this makes SSM a good continuous-discrete articulation for modeling PINN.

3 Why PINNs present Failure Modes?

A counterintuitive fact of PINNs is that the failure modes are not devoid of optimizing their residual loss to a very low degree. As shown in Fig. 2, for the convection equation, the converged PINN almost completely crashes in the domain, but its loss maintains a near-zero degree at almost every collection point. This is the result of the combined effects of the simplicity bias (Shah et al., 2020; Pezeshki et al., 2021) of neural networks and the Continuous-Discrete Mismatch of PINNs, as shown in Fig. 3. The simplicity bias is the phenomenon that the model tends to choose the one with the simplest hypothesis among all feasible solutions, which we demonstrate in Fig. 3 (b). Continuous-Discrete Mismatch refers to the inconsistency between the continuity of the PDE and the discretization of PINN’s training process. As shown in Eq. 4 - 6, to construct the empirical loss for the PINN training process, we need to determine a discrete and finite set of collection points on . This is usually done with a grid or uniform sampling. But a PDE system is usually continuous and its solutions should be regular enough to satisfy the differential operator , , and .

Continuous-Discrete Mismatch. Continuous-Discrete Mismatch will cause correct local patterns hard to propagate over the global domain. Because the loss on discrete collection points does not necessarily respond to the correct pattern on the continuous domain, instead, only responds to its small neighborhood. To show such Continuous-Discrete Mismatch, we first present the following theorem:

Theorem 3.1.

Let . Then for differential operator there exist infinitely many functions parametrized by , s.t.

By Theorem 3.1, enforcing the PDE only at a finite set of points does not guarantee a globally correct solution. This can be performed by simply constructing a Bump function in a small neighborhood of points in so that it satisfies for . This means that the information of the equation determined by the initial conditional differential operator may act only on a small neighborhood of collection points with . The other collection points in the , on the other hand, might fall into a local optimum that can make defined by Eq. 4 to near 0. Because the function determined by and together on the collection points at may not be generalized outside its small neighborhood. The detailed proof of Theorem 3.1 can be found in Appendix B.

Simplicity Bias. Meanwhile, the simplicity bias of neural networks will make the PINNs always tend to choose the simplest solution in optimizing . This implies that PINN will easily fall into an over-smoothed solution. For example, as shown in Fig. 2, the PINN’s prediction is 0 in most regions. The loss of this over-smoothed feasible solution is almost identical to that of the true solution, and the existence of an insurmountable ridge between the two loss basins results in a PINN that is extremely susceptible to falling into local optimums. As in Fig 3, the over-smoothed pattern yields an advantage against the correct pattern.

Under the effect of difficulty in passing locally correct patterns to the global due to Continuous-Discrete Mismatch and over-smoothing due to simplicity bias, PINNs present failure modes. Therefore, to address such failure modes, the key points in designing the PINN models lie in: (1) a mechanism for information propagation in continuous time and (2) a mechanism to eliminate the simplicity bias of models.

4 Combating Failure Mode with State-Space Model and Sub-sequential Alignment

To address the problems in Section 3, we propose (1) a discrete state-space-based encoder that models the sequences of individual collection points in continuous dynamics, to match with Continuous-Discrete Mismatch, and propagates the information from the initial condition to subsequent times (Section 4.1). and (2) a sub-sequence contrastive alignment mechanism that aligns different outputs of the same collection point in different sub-sequences, to form an agreement that eliminates simplicity bias (Section 4.2).

4.1 Continuous Time Propagation of Initial Condition Information with State Space Model

As we discussed in Section 3, the Continuous-Discrete Mismatch of PINNs raises the intrinsic difficulty of modeling, since the time dependency in a dynamic PDE system is not captured spontaneously by discrete sampling. We argue that such a dynamic time dependency can be modeled by SSM. To this end, we first consider the PDE as a spatially infinite-dimensional ODE to simplify the problem. We view the solution in a function space that, if we let:

| (14) |

be a function , by -point spatial sampling:

| (15) |

| (16) |

Sequential Modeling Continuity with SSM. In continuous time, we now model the function to the dynamic system described by SSM as in Eq. 8 and 9. Here we let , where is the Spatio-Temporal Embedding in Fig 4. After temporal discretization and , we get:

| (17) |

Reversibly, by the inverse of the discretization rule defined by Eq. 12, 13, we can restore this temporal dependency to continuous time. This kind of restoration can help achieve PINN’s generalization to any moment in .

Pattern Propagation by Joint Optimization. Combine Eq. 4 with 17, in a sequence start with , the sum of loss of collection points at time , would be:

| (18) |

where . In Eq. 18, we notice that the should satisfy both the initial condition and the equation by jointly optimizing the losses:

| (19) | |||

| (20) |

Thereby, for each collection point, the numerical value of its solution should be jointly optimized by Eq. 18, 19, and 20, thus receiving the pattern defined by the initial conditions.

Uniformed Derivatives Scale. Another benefit that can be got from SSM is, by parameterizing differential state matrix in Eq.8 with HiPPO matrix (Gu et al., 2020) which contains the derivative information, we can align the derivatives of the system with respect to time on a uniform scale. This uniform scale will help to reduce the problem of ruggedness on the loss landscape due to gradient vanishing or exploding.

Time-Varying SSM. In practice, we use the time-varying Selective SSM (Gu & Dao, 2023), instead of the function defined by Eq. 17 being the SSM on a linear time-invariant system. The time-varying SSM has two advantages, one is that such input-dependent models typically have stronger representational capabilities (Xu et al., 2024), while the other is that it will make diverse predictions that help to eliminate simplicity bias in the model, as we will discuss in section 4.2. This time-variance will make time-dependent, and therefore, Eq. 17 and 18 need minor adjustments. These adjustments won’t impact the initial condition propagation, and we will discuss them in Appendix C.

4.2 Eliminating Simplicity Bias of Models with Sub-Sequence Contrastive Alignment

Although SSM can make the information about the initial conditions propagate in time coordinates, it still cannot mitigate the simplicity bias of neural networks. The model is still prone to falling into an over-smoothed local optimum. There are two key points to address this over-smoothness caused by simplicity bias: (1) appropriate sequence granularity to guarantee a smooth optimization process. (2) Mitigating the effect of simplicity bias through the diversity of model prediction paradigms (Pagliardini et al., 2023).

Sequence Granularity. A proper sequence granularity ensures smooth propagation of the initial conditions while making the model easier to optimize. As shown in Fig. 5, there are three ways to define sequence, which are pseudo sequence (Zhao et al., 2024), long sequence (Nguyen et al., 2024a), and the proposed sub-sequence. We propose to use a sub-sequence with medium granularity overlapping. The sub-sequential modeling can avoid: (1) the difficulty of crossing the loss barrier that makes the model trapping in the over-smooth local optimum, which is caused by the huge inertia of long sequence; (2) the difficulty of broadcasting information globally on the time coordinate, that caused by construct on small neighborhoods of a collection point in pseudo sequence. Sub-sequence takes only the first output in the sequence as the output value of the current collection point. Its successors’ values will pass information crossing the time coordinate through subsequences alignment and form diverse predictions to eliminate simplicity bias.

Contrastive Alignment for Information Propagation. As shown in Fig. 5, we construct a sub-sequence for each collection point together with its finite successors, which form overlapping collection points. By aligning the predictions of these collection points with a contrastive loss, each collection point becomes a soft relay of the pattern. Thus, it forms the propagation of patterns in the whole time domain.

Eliminating the Simplicity Bias. Previous work (Teney et al., 2022; Pagliardini et al., 2023) has pointed out that the agreement obtained from diverse predictions is the key to eliminating the effects of simplicity bias. We argue that this agreement from diverse predictions is naturally obtained in the sub-sequence alignment. This is because the fact that, since the SSM we constructed in section 4.1 is time-varying and a collection point will be at different time coordinates in different sub-sequences, the predictions for this collection point are naturally diverse. And we force these diverse predictions to arrive at a consensus by contrastive alignment.

5 PINNMamba

In conjunction with the high-level ideas described in Section 4, in this section, we present PINNMamba, a novel physics-informed learning framework that effectively combats the failure modes in the PINNs.

Sub-Sequential I/O. As shown in Fig. 4, PINNMamba first samples the grid of collection points over the entire spatio-temporal domain bounded by the PDE. We assume that the grid picks spatial coordinates and temporal coordinates, and denote the temporal sampling interval as . For a collection point , we construct a sequence with its temporal successors:

| (21) |

PINNMamba takes such sequences as the input of models. For each sequence , PINNMamba computes a sub-sequence prediction corresponding to every collection point in the sequence, where denote the tentative prediction of collection point in a sequence start with time . The will be taken as the output of collection point and the rest of the sequence will be used to construct the sub-sequence contrastive alignment loss we will discuss later in Section 4.2. The residual losses of the model w.r.t the sub-sequence will be:

| (22) |

| (23) |

| (24) |

Model Architecture. As shown in Fig. 4, PINNMamba employs an encoder-only architecture, which encodes fixed-size input sub-sequence into a sub-sequence prediction with the same length. First, for each token in the sequence, an MLP-based Spatio-Temporal Embedding layer first embeds the coordinates into high-dimensional representation. The embeddings will be sent to a Mamba-based encoder, which consists of several PINNMamba blocks.

The PINNMamba block employed here consists of two branches: (1) the first is a stack of a linear projection layer, a 1d-convolution layer, a Wavelet activation (Zhao et al., 2024), and an SSM layer with parallel scan (Gu & Dao, 2023); (2) the second is a stake of a linear projection layer and a Wavelet activation. The two branches are then connected with an element-wise multiplication, followed by another linear projection and residual connection. With input , the PINNMamba block can be formulated as:

| (25) |

| (26) |

| (27) |

where is Wavelet activation function (Zhao et al., 2024), in which are learnable. denotes an element-wise multiplication.

Sub-Sequence Contrastive Alignment. PINNMamba predicts the same collection multiple times in different subsequences. For example, the collection point appears on sequences from to . We align the predictions on these subsequences to make the information defined by the initial conditions propagate over time. To do this, for each subsequence, we design a contrastive loss with the last subsequence for alignment:

| (28) |

Thus, the empirical loss for PINNMamba is defined as:

| (29) |

6 Experiments

Setup. We evaluate the performance of PINNMamba on three standard PDE benchmarks: convection, wave, and reaction equations, all of which are identified as being affected by failure modes (Krishnapriyan et al., 2021; Zhao et al., 2024). The details of those PDEs can be found in Appendix D. We compare PINNMamba with four baseline models, vanilla PINN (Raissi et al., 2019), QRes (Bu & Karpatne, 2021), PINNsFormer (Zhao et al., 2024), and KAN (Liu et al., 2024c) . For fair comparison, we sample 101101 collection points with uniformly grid sampling, following previous work (Zhao et al., 2024; Wu et al., 2024). We also evaluate on PINNacle Benchmark (Hao et al., 2024) and Navier–Stokes equation (Raissi et al., 2019).

| Convection | Reaction | Wave | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Model | #Params | Loss | rMAE | rRMSE | Loss | rMAE | rRMSE | Loss | rMAE | rRMSE |

| PINN | 527361 | 0.0239 | 0.8514 | 0.8989 | 0.1991 | 0.9803 | 0.9785 | 0.0320 | 0.4101 | 0.4141 |

| QRes | 396545 | 0.0798 | 0.9035 | 0.9245 | 0.1991 | 0.9826 | 0.9830 | 0.0987 | 0.5349 | 0.5265 |

| PINNsFormer | 453561 | 0.0068 | 0.4527 | 0.5217 | 3e-6 | 0.0146 | 0.0296 | 0.0216 | 0.3559 | 0.3632 |

| KAN | 891 | 0.0250 | 0.6049 | 0.6587 | 7e-6 | 0.0166 | 0.0343 | 0.0067 | 0.1433 | 0.1458 |

| PINNMamba | 285763 | 0.0001 | 0.0188 | 0.0201 | 1e-6 | 0.0094 | 0.0217 | 0.0002 | 0.0197 | 0.0199 |

Training Details. We train PINNMamba and all the baseline models 1000 epochs with L-BFGS optimizer (Liu & Nocedal, 1989). We set the sub-sequence length to 7 for PINNMamba, and keep the original pseudo-sequence setup for PINNsFormers. The weights of loss terms are set to for all three equations, as we find that strengthening the boundary conditions can lead to better convergence. is set to 1000 for convection and reaction equations, and auto-adapted by for wave equation. All experiments are implemented in PyTorch 2.1.1 and trained on an NVIDIA H100 GPU. More training details are in Appendix E. Our code and weights are available at \urlhttps://github.com/miniHuiHui/PINNMamba.

Metrics. To evaluate the performance of the models, we take relative Mean Absolute Error (rMAE, a.k.a relative error) and relative Root Mean Square Error (rRMSE, a.k.a relative error) following common practive (Zhao et al., 2024; Wu et al., 2024). The metrics are formulated as:

| (30) | ||||

| (31) |

where N is the number of test points, is the ground truth solution, and is the model’s prediction.

6.1 Main Results

We present the rMAE and rRMSE for approximating convection, reaction and wave equation’s solution in Table 1. Our model consistently outperforms other model architectures, achieving new state-of-the-art. Notably, as shown in Fig. 6, for the convection equation, PINNMamba allows sufficient propagation of information about the initial conditions, whereas on all the other models there is a varying degree of distortion in the time coordinates. As shown in Fig. 8, PINNMamba can further optimize at the boundary, resulting in a lower error than KAN and PINNsFormer for reaction equations. For problems as intrinsically difficult to optimize as the wave, as in Fig. 7, PINNMamba effectively combats simplicity bias and aligns the scales of multi-order differentiation, and thus achieves significantly higher accuracy. This illustrates that PINNMamba can be effective against PINN’s failure modes. It’s also worth noting that, PINNMamba has the lowest number of parameters (except KAN), while achieving consistently the best performance.

| Method | Convection | Reaction | Wave |

|---|---|---|---|

| PINNMamba | 0.0188 | 0.0094 | 0.0197 |

| +gPINN | 0.0172 | 0.0123 | 0.0264 |

| +vPINN | 0.0236 | 0.0092 | 0.0169 |

| +RoPINN | 0.0102 | 0.0099 | 0.0121 |

| +NTK | 0.0179 | 0.0079 | 0.0147 |

| +NTK+RoPINN | 0.0127 | 0.0072 | 0.0106 |

6.2 Combination with Other Methods

Since PINNMamba mainly focuses on model architecture, it can be integrated with other methods effortlessly. We explore the feasibility and their performance in combination with advanced training paradigm, as well as loss balancing.

Training Paradigm. We show the rMAE of PINNMamba when integrated with advanced strategies in Table 2. We observe that gPINN (Yu et al., 2022) and vPINN (Kharazmi et al., 2019) erratically deliver some performance gains on some tasks. This is due to the fact that the regularization provided by gPINN and vPINN in the form of a loss function through the gradient and variational residuals has little effect on PINNMamba, since SSM itself is sufficiently regularized. RoPINN (Wu et al., 2024) reduces the PINNMamba’s error on convection and wave equations by about 40%, since it complements the spatial continuity dependency.

Neural Tangent Kernel. Dynamic tuning of losses via Neural Tangent Kernel(NTK) (Wang et al., 2022b) has been shown to have the effect of smoothing out the loss landscape. PINNMamba also works well with the NTK-adopted loss function. As shown in Table 2, NTK can reduce PINNMamba error by 5-25%. The combination of RoPINN and NTK can further improve the overall performance of PINNMamba, which demonstrates the excellent suitability of PINNMamba with other PINN optimization methods.

6.3 Loss-Error Consistency Analysis

Our other interest is the role of PINNMamba for the elimination of simplicity bias. Models affected by simplicity bias that fall into over-smoothing solutions will show inconsistent decreasing trends in loss and error during training. As shown in Fig. 9, in the training process for solving convection equations, the rMAE of PINN doesn’t descend as and . This suggests that PINN is trapped in an over-smoothing solution, which is in agreement with our observation in Fig. 6. As a comparison, we find that PINNMamba’s losses descent processes show a high degree of consistency with its error descent process. This indicates that PINNMamba does not tend to fall into a local optimum of oversimplified patterns. Instead, it tends to exhibit patterns that are consistent with the original PDEs.

6.4 Ablation Study

| Method | Convection | Reaction | Wave |

|---|---|---|---|

| PINNMamba | 0.0188 | 0.0094 | 0.0197 |

| -Sub-Sequence Align | 0.1436 | 0.0291 | 0.0481 |

| -Sub +Long Sequence | 0.6492 | 0.6731 | 0.3391 |

| -Time Varying SSM | 0.0241 | 0.0179 | 0.0664 |

| -SSM | 0.7785 | 0.9836 | 0.3341 |

| -Wavelet +Tanh | 0.4531 | 0.0299 | 0.3151 |

To verify the validity of the various components of the PINNMamba, as shown in Table 3, we evaluate the performance of models subtracting these components from PINNMamba.

Sub-Sequence. We remove the sub-sequence alignment, which leads to a decrease in model performance, indicating the significance of the agreement formed through alignment in eliminating simplicity bias. After replacing the sub-sequence with a long sequence of the entire domain, the model shows failure modes, in line with the sequence granularity analysis in Section 4.2.

Time-Varying SSM. We replace the selective SSM (Gu & Dao, 2023) with a linear time-invariant structure SSM (Gu et al., 2022), and there is some decrease in model performance, illustrating the role of predictive diversity in eliminating simplicity bias. And when we remove SSM completely and switch to MLP instead, the model has severe failure modes. This demonstrates that SSM’s adaptation for Continuous-Discrete Mismatch allows the initial condition information to propagate sufficiently in time coordinates.

In addition, we also conducted a sensitivity analysis of the choice of sub-sequence length, activation. See Appendix F.

6.5 Experiments on Complex Problems

To further demonstrate the generalization of our method, we tested our model on partial PINNacle Benchmark (Hao et al., 2024) and Navier-Stokes equations. As shown in Fig. 10, PINNMamba achieves the lowest error on the N-S equation. Just like PINNsFormer, PINNMamba also gets out-of-memory on some problems in PINNacle, which we identify as a major limitation of sequence-based methods. We discuss the details of PINNacle experiments in Appendix G.

7 Conclusion

In this paper, we reveal that the mismatch between discrete training of PINNs and the continuous nature of PDEs, as well as simplicity bias are the key of failure modes. In combating with such failure modes, we propose PINNMamba, an SSM-based sub-sequence learning framework. PINNMamba successfully eliminates the failure modes, and meanwhile becomes the new state-of-the-art PINN architecture.

Impact Statement

The development of physics-informed neural networks represents a transformative approach to solving differential equations by integrating physical laws directly into the learning process. This work explores novel advancements in PINN architecture, to improve accuracy and eliminate the potential failure modes. By refining PINN architectures, this study contributes to the broader adoption of physics-informed machine learning in fields such as computational fluid dynamics, material science, and engineering simulations. The proposed enhancements lead to more robust and scalable models, facilitating real-world applications where conventional PINNs struggle with over-smoothing. There is no known negative impact from this study at this time.

References

- Abadi et al. (2016) Abadi, M., Barham, P., Chen, J., Chen, Z., Davis, A., Dean, J., Devin, M., Ghemawat, S., Irving, G., Isard, M., et al. : a system for machine learning. In 12th USENIX symposium on operating systems design and implementation (OSDI 16), pp. 265–283, 2016.

- Bu & Karpatne (2021) Bu, J. and Karpatne, A. Quadratic residual networks: A new class of neural networks for solving forward and inverse problems in physics involving pdes. In Proceedings of the 2021 SIAM International Conference on Data Mining (SDM), pp. 675–683. SIAM, 2021.

- Cai et al. (2021) Cai, S., Wang, Z., Wang, S., Perdikaris, P., and Karniadakis, G. E. Physics-informed neural networks for heat transfer problems. Journal of Heat Transfer, 143(6):060801, 2021.

- Cho et al. (2024) Cho, W., Jo, M., Lim, H., Lee, K., Lee, D., Hong, S., and Park, N. Parameterized physics-informed neural networks for parameterized PDEs. In Proceedings of the 41st International Conference on Machine Learning, Proceedings of Machine Learning Research, pp. 8510–8533. PMLR, 2024.

- Dao & Gu (2024) Dao, T. and Gu, A. Transformers are SSMs: Generalized models and efficient algorithms through structured state space duality. In Proceedings of the 41st International Conference on Machine Learning, Proceedings of Machine Learning Research, pp. 10041–10071. PMLR, 2024.

- Fan (2000) Fan, E. Extended tanh-function method and its applications to nonlinear equations. Physics Letters A, 277(4-5):212–218, 2000.

- Fu et al. (2023) Fu, D. Y., Dao, T., Saab, K. K., Thomas, A. W., Rudra, A., and Re, C. Hungry hungry hippos: Towards language modeling with state space models. In The Eleventh International Conference on Learning Representations, 2023.

- Gao et al. (2022) Gao, Z., Shi, X., Wang, H., Zhu, Y., Wang, Y. B., Li, M., and Yeung, D.-Y. Earthformer: Exploring space-time transformers for earth system forecasting. Advances in Neural Information Processing Systems, 35:25390–25403, 2022.

- Gao et al. (2023) Gao, Z., Yan, L., and Zhou, T. Failure-informed adaptive sampling for pinns. SIAM Journal on Scientific Computing, 45(4):A1971–A1994, 2023.

- Gu & Dao (2023) Gu, A. and Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv preprint arXiv:2312.00752, 2023.

- Gu et al. (2020) Gu, A., Dao, T., Ermon, S., Rudra, A., and Ré, C. Hippo: Recurrent memory with optimal polynomial projections. Advances in neural information processing systems, 33:1474–1487, 2020.

- Gu et al. (2022) Gu, A., Goel, K., and Re, C. Efficiently modeling long sequences with structured state spaces. In International Conference on Learning Representations, 2022.

- Hao et al. (2024) Hao, Z., Yao, J., Su, C., Su, H., Wang, Z., Lu, F., Xia, Z., Zhang, Y., Liu, S., Lu, L., et al. Pinnacle: A comprehensive benchmark of physics-informed neural networks for solving pdes. In Advances in Neural Information Processing Systems, 2024.

- He et al. (2016) He, K., Zhang, X., Ren, S., and Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770–778, 2016.

- Hornik et al. (1989) Hornik, K., Stinchcombe, M., and White, H. Multilayer feedforward networks are universal approximators. Neural networks, 2(5):359–366, 1989.

- Hu et al. (2024) Hu, Z., Daryakenari, N. A., Shen, Q., Kawaguchi, K., and Karniadakis, G. E. State-space models are accurate and efficient neural operators for dynamical systems. arXiv preprint arXiv:2409.03231, 2024.

- Jin et al. (2021) Jin, X., Cai, S., Li, H., and Karniadakis, G. E. Nsfnets (navier-stokes flow nets): Physics-informed neural networks for the incompressible navier-stokes equations. Journal of Computational Physics, 426:109951, 2021.

- Kalman (1960) Kalman, R. E. A new approach to linear filtering and prediction problems. 1960.

- Kharazmi et al. (2019) Kharazmi, E., Zhang, Z., and Karniadakis, G. E. Variational physics-informed neural networks for solving partial differential equations. arXiv preprint arXiv:1912.00873, 2019.

- Krishnapriyan et al. (2021) Krishnapriyan, A., Gholami, A., Zhe, S., Kirby, R., and Mahoney, M. W. Characterizing possible failure modes in physics-informed neural networks. Advances in neural information processing systems, 34:26548–26560, 2021.

- Liu & Nocedal (1989) Liu, D. C. and Nocedal, J. On the limited memory bfgs method for large scale optimization. Mathematical programming, 45(1):503–528, 1989.

- Liu et al. (2024a) Liu, Y., Tian, Y., Zhao, Y., Yu, H., Xie, L., Wang, Y., Ye, Q., Jiao, J., and Liu, Y. VMamba: Visual state space model. In Advances in Neural Information Processing Systems, 2024a.

- Liu et al. (2024b) Liu, Z., Ma, P., Wang, Y., Matusik, W., and Tegmark, M. Kan 2.0: Kolmogorov-arnold networks meet science. arXiv preprint arXiv:2408.10205, 2024b.

- Liu et al. (2024c) Liu, Z., Wang, Y., Vaidya, S., Ruehle, F., Halverson, J., Soljačić, M., Hou, T. Y., and Tegmark, M. Kan: Kolmogorov-arnold networks. arXiv preprint arXiv:2404.19756, 2024c.

- Mao et al. (2020) Mao, Z., Jagtap, A. D., and Karniadakis, G. E. Physics-informed neural networks for high-speed flows. Computer Methods in Applied Mechanics and Engineering, 360:112789, 2020.

- Nair & Hinton (2010) Nair, V. and Hinton, G. E. Rectified linear units improve restricted boltzmann machines. In Proceedings of the 27th international conference on machine learning (ICML-10), pp. 807–814, 2010.

- Nguyen et al. (2024a) Nguyen, E., Poli, M., Durrant, M. G., Kang, B., Katrekar, D., Li, D. B., Bartie, L. J., Thomas, A. W., King, S. H., Brixi, G., et al. Sequence modeling and design from molecular to genome scale with evo. Science, 386(6723):eado9336, 2024a.

- Nguyen et al. (2024b) Nguyen, P. C., Cheng, X., Azarfar, S., Seshadri, P., Nguyen, Y. T., Kim, M., Choi, S., Udaykumar, H., and Baek, S. PARCv2: Physics-aware recurrent convolutional neural networks for spatiotemporal dynamics modeling. In Proceedings of the 41st International Conference on Machine Learning, Proceedings of Machine Learning Research, pp. 37649–37666. PMLR, 2024b.

- Pagliardini et al. (2023) Pagliardini, M., Jaggi, M., Fleuret, F., and Karimireddy, S. P. Agree to disagree: Diversity through disagreement for better transferability. In International Conference on Learning Representations, 2023.

- Paszke et al. (2019) Paszke, A., Gross, S., Massa, F., Lerer, A., Bradbury, J., Chanan, G., Killeen, T., Lin, Z., Gimelshein, N., Antiga, L., et al. Pytorch: An imperative style, high-performance deep learning library. Advances in neural information processing systems, 32, 2019.

- Pezeshki et al. (2021) Pezeshki, M., Kaba, O., Bengio, Y., Courville, A. C., Precup, D., and Lajoie, G. Gradient starvation: A learning proclivity in neural networks. Advances in Neural Information Processing Systems, 34:1256–1272, 2021.

- Poli et al. (2023) Poli, M., Massaroli, S., Nguyen, E., Fu, D. Y., Dao, T., Baccus, S., Bengio, Y., Ermon, S., and Ré, C. Hyena hierarchy: Towards larger convolutional language models. In International Conference on Machine Learning, pp. 28043–28078. PMLR, 2023.

- Raissi et al. (2019) Raissi, M., Perdikaris, P., and Karniadakis, G. E. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. Journal of Computational physics, 378:686–707, 2019.

- Reddy (1993) Reddy, J. An Introduction to the Finite Element Method. McGraw-Hill, 1993.

- Sain & Massey (1969) Sain, M. and Massey, J. Invertibility of linear time-invariant dynamical systems. IEEE Transactions on automatic control, 14(2):141–149, 1969.

- Shah et al. (2020) Shah, H., Tamuly, K., Raghunathan, A., Jain, P., and Netrapalli, P. The pitfalls of simplicity bias in neural networks. Advances in Neural Information Processing Systems, 33:9573–9585, 2020.

- Smith et al. (2023) Smith, J. T., Warrington, A., and Linderman, S. Simplified state space layers for sequence modeling. In The Eleventh International Conference on Learning Representations, 2023.

- Teney et al. (2022) Teney, D., Abbasnejad, E., Lucey, S., and Van den Hengel, A. Evading the simplicity bias: Training a diverse set of models discovers solutions with superior ood generalization. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp. 16761–16772, 2022.

- Wang et al. (2022a) Wang, C., Li, S., He, D., and Wang, L. Is l^2 physics informed loss always suitable for training physics informed neural network? Advances in Neural Information Processing Systems, 35:8278–8290, 2022a.

- Wang et al. (2022b) Wang, S., Yu, X., and Perdikaris, P. When and why pinns fail to train: A neural tangent kernel perspective. Journal of Computational Physics, 449:110768, 2022b.

- Wong et al. (2022) Wong, J. C., Ooi, C. C., Gupta, A., and Ong, Y.-S. Learning in sinusoidal spaces with physics-informed neural networks. IEEE Transactions on Artificial Intelligence, 5(3):985–1000, 2022.

- Wu et al. (2023) Wu, C., Zhu, M., Tan, Q., Kartha, Y., and Lu, L. A comprehensive study of non-adaptive and residual-based adaptive sampling for physics-informed neural networks. Computer Methods in Applied Mechanics and Engineering, 403:115671, 2023.

- Wu et al. (2024) Wu, H., Luo, H., Ma, Y., Wang, J., and Long, M. RoPINN: Region optimized physics-informed neural networks. In Advances in Neural Information Processing Systems, 2024.

- Xu et al. (2023) Xu, C., Cao, B. T., Yuan, Y., and Meschke, G. Transfer learning based physics-informed neural networks for solving inverse problems in engineering structures under different loading scenarios. Computer Methods in Applied Mechanics and Engineering, 405:115852, 2023.

- Xu et al. (2024) Xu, C., Yu, F., Li, M., Zheng, Z., Xu, Z., Xiong, J., and Chen, X. Infinite-dimensional feature interaction. In Advances in Neural Information Processing Systems, 2024.

- Yang et al. (2022) Yang, T.-Y., Rosca, J., Narasimhan, K., and Ramadge, P. J. Learning physics constrained dynamics using autoencoders. Advances in Neural Information Processing Systems, 35:17157–17172, 2022.

- Yu et al. (2022) Yu, J., Lu, L., Meng, X., and Karniadakis, G. E. Gradient-enhanced physics-informed neural networks for forward and inverse pde problems. Computer Methods in Applied Mechanics and Engineering, 393:114823, 2022.

- Zhao et al. (2024) Zhao, Z., Ding, X., and Prakash, B. A. Pinnsformer: A transformer-based framework for physics-informed neural networks. In International Conference on Learning Representations, 2024.

- Zheng et al. (2024) Zheng, J., LiweiNo, Xu, N., Zhu, J., XiaoxuLin, and Zhang, X. Alias-free mamba neural operator. In Advances in Neural Information Processing Systems, 2024.

- Zhu et al. (2024) Zhu, L., Liao, B., Zhang, Q., Wang, X., Liu, W., and Wang, X. Vision mamba: Efficient visual representation learning with bidirectional state space model. In Proceedings of the 41st International Conference on Machine Learning, Proceedings of Machine Learning Research, pp. 62429–62442. PMLR, 2024.

Appendix A Related Works

Physics-Informed Neural Networks. Physics-Informed Neural Networks (Raissi et al., 2019) are a class of deep learning models designed to solve problems governed by physical laws described in PDEs. They integrate physics-based constraints directly into the training process in the loss function, allowing them to numerically solve many key physical equations, such as Navier-Stokes equations(Jin et al., 2021), Euler equations (Mao et al., 2020), heat equatuons (Cai et al., 2021). Several advanced learning schemes such as gPINN (Kharazmi et al., 2019), vPINN(Yu et al., 2022), and RoPINN(Wu et al., 2024), model architectures such as QRes (Bu & Karpatne, 2021), FLS (Wong et al., 2022), PINNsFormer (Zhao et al., 2024), KAN (Liu et al., 2024c, b) are proposed in terms of convergence, optimization, and generalization.

Failure Modes in PINNs. Despite these efforts, PINN still has some inherently intractable failure modes. Krishnapriyan et al. (2021) identify several types of equations that are vulnerable to difficulties in solving by PINNs. These equations are usually manifested by the presence of a parameter in them that makes their pattern behave as a high frequency or a complex state (Cho et al., 2024), failing to propagate the initial condition. In such cases, an empirical loss constructed using a collection point can easily fall into an over-smooth solution (e.g. can make the loss of all collection points except whose descend to 0 for 1d-wave equations). Several methods regarding optimization (Wu et al., 2024; Wang et al., 2022a), sampling (Gao et al., 2023; Wu et al., 2023), model architecture (Zhao et al., 2024; Cho et al., 2024; Nguyen et al., 2024b), transfer learning (Xu et al., 2023; Cho et al., 2024) are proposed to mitigate such failure modes. However, the above approaches do not focus on the fact that a PDE system should be modeled as a continuous dynamic, leading to difficulties in generalization over a wide range of problems.

State Space Models. The state space model (Kalman, 1960) is a mathematical representation of a physical system in terms of state variables. Modern SSMs (Gu et al., 2022; Smith et al., 2023; Gu & Dao, 2023) combine the representational power of neural networks with their own superior long-range dependency capturing and parallel computing capabilities and thus are widely used in many fields, such as language modeling (Fu et al., 2023; Poli et al., 2023; Gu & Dao, 2023; Dao & Gu, 2024), computer vision (Zhu et al., 2024; Liu et al., 2024a), and genomics (Gu & Dao, 2023; Nguyen et al., 2024a). Specifically, Structured SSMs (S4) (Gu et al., 2022) decomposing the structured state matrices as the sum of a low-rank and normal terms to improve the efficiency of state-space-based deep models. Further, Selective SSMs (Mamba) (Gu & Dao, 2023) eliminates the Linear Time Invariance (Sain & Massey, 1969) of SSMs by introducing a gating mechanism, allowing the model to selectively propagate or forget information and greatly enhancing the model performance. In physics, SSMs are used in conjunction with Neural Operator to form a data-driven solution to PDEs (Zheng et al., 2024; Hu et al., 2024). However, these methods are data-driven which lack generalization ability in some scenarios where real data is not available. Unlike these methods, our approach, PINNMamba is fully physics-driven, relying only on residuals constructed using PDEs without any training data.

Appendix B Proof of Theorem 3.1

We start with a function such that is non-zero almost everywhere. Such a function exists because is a non-zero differential operator. For example, if is the Laplacian, a non-harmonic function can be chosen.

Lemma B.1 (Existence of Base Function).

Let be a non-degenerate differential operator on , where is a domain. There exists a function such that:

Proof.

Since is non-degenerate (i.e., not identically zero), there exists at least one function and a point such that:

By continuity of (assuming smooth coefficients for ), there is an open neighborhood around where .

Construct a smooth bump function with: on a smaller neighborhood , and outside . Define . Then is non-zero on and smooth everywhere. Let be a countable dense subset of . For each , repeat the above construction to obtain a function such that: in a neighborhood of , , and the supports are pairwise disjoint.

Define the function:

where are chosen such that the series converges in (e.g., ).

The set is open and dense in . Since on this dense open set, the zero set is contained in the complement of , which is nowhere dense and hence has Lebesgue measure zero. Therefore:

∎

Lemma B.2 (Local Correction Functions).

Let be a non-degenerate differential operator on , and let . There exist smooth functions and radii such that for each :

1. Compact Support: ,

2. Non-Vanishing Action: ,

3. Disjoint Supports: for .

Proof.

Let be the minimal distance between distinct points in . For all , choose radii such that:

This ensures the balls are pairwise disjoint.

For each , since is non-degenerate, there exists a smooth function such that:

This is because, when is non-degenerate, its action cannot vanish on all smooth functions at . For instance, if contains a derivative , take near .

Then for each , construct a smooth bump function satisfying:

1. on ,

2. outside ,

3. everywhere.

Therefore, define the localized function:

By construction:

1. ,

2. on , so

Since , the distance between any two balls and is at least . Thus, the supports of and are disjoint for .

Therefore, the functions satisfy all required conditions.

∎

We now state the one-dimensional case of Theorem 3.1 here:

Lemma B.3 (One-Dimensional Case of Theorem 3.1).

Let . Then for differential operator there exist infinitely many functions parametrized by , s.t.

Proof.

Define the corrected function:

where is the local correction function defined in Lemma B.2, are scalars chosen such that:

Since , we can solve for :

Outside the union of supports , we have:

since outside . By construction, almost everywhere.

The parameters can be varied infinitely by varying : The bump functions can be scaled, translated, or reshaped (e.g., Gaussian vs. polynomial) while retaining the properties of Local Correction in Lemma B.2 and varying : For each , choose from a continuum , where ensures disjointness.

Thus, the family is uncountably infinite.

The set by definition has Lebesgue measure zero in . The corrections are confined to the measure-zero set . Therefore:

∎

Theorem B.4 (Theorem 3.1).

Let . Then for differential operator there exist infinitely many functions parametrized by , s.t.

Proof.

It is trivial to generalize the Lemma B.3 to the case , by constructing:

where . Adjust such that:

This gives a linear system for , which is solvable because the are linearly independent. ∎

Appendix C Linear Time-Varying System

To adjust the given Linear Time-Invariant system to a Linear Time-Varying system, we replace the constant matrices , , and with their time-varying counterparts , , and . The state transition matrix in the LTI system becomes the product of time-varying matrices from time to . The resulting time-varying output equation is:

| (32) |

where is the state transition matrix from time to , defined as:

| (33) |

and the term represents the free response due to the initial condition .

The summation includes contributions from all inputs up to time , with capturing the time-varying dynamics.

To adjust the Eq. 18 to a Time-Varying system The state transition term becomes the time-ordered product , and the output now explicitly depends on time-varying dynamics. The adjusted equation becomes:

| (34) |

This modification ensures consistency with the Time-Varying system’s time-dependent parameters while preserving the structure of the original loss function.

Appendix D PDEs Setups

D.1 1-D Convection

The 1-D convection equation, also known as the 1-D advection equation, is a partial differential equation that models the transport of a scalar quantity (such as temperature, concentration, or momentum) due to fluid motion at a constant velocity . It is a fundamental equation in fluid dynamics and transport phenomena. The equation is given by:

| (35) | |||

where is the constant convection (advection) speed. As increases, the equation will be harder for PINN to approximate. It is a well-known equation with failure mode for PINN. We set following common practice (Zhao et al., 2024; Wu et al., 2024).

The 1-D convection equation’s analytical solution is given by:

| (36) |

D.2 1-D Reaction

The 1-D reaction equation is a partial differential equation that models how a chemical species reacts over time and (optionally) varies along a single spatial dimension. The equation is given by:

| (37) | |||

where is the growth rate coefficient. As increases, the equation will be harder for PINN to approximate. It is a well-known equation with failure mode for PINN. We set following common practice (Zhao et al., 2024; Wu et al., 2024).

The 1-D reaction equation’s analytical solution is given by:

| (38) |

D.3 1-D Wave

The 1-D wave equation is a partial differential equation that describes how a wave propagates through a medium, such as a vibrating string. We consider such an equation given by:

| (39) | |||

where is a wave frequency coefficient. We set as 3 following common practice (Zhao et al., 2024; Wu et al., 2024). The wave equation contains second-order derivative terms in the equation and first-order derivative terms in the initial condition, which is considered to be hard to optimize (Wu et al., 2024). This example illustrates that PINNMamba can better capture the time continuum because its differentiation for time is directly defined by the matrix, whose differential scale is uniform for multiple orders.

The 1-D wave equation’s analytical solution is given by:

| (40) |

D.4 2-D Navier-Stokes

The 2-D Navier-Stokes equation describes the motion of fluid in two spatial dimensions and . It is fundamental in fluid dynamics and is used to model incompressible fluid flows. We consider such an equation given by:

| (41) |

where , , and are the x-coordinate velocity field, y-coordinate velocity field, and pressure field, respectively. We set and following common practice (Zhao et al., 2024; Raissi et al., 2019).

The 2-dimensional Navier-Stokes equation doesn’t have an analytical solution that can be described by existing mathematical symbols, we take Raissi et al. (2019)’s finite-element numerical simulation as ground truth.

D.5 PINNNacle

PINNacle (Hao et al., 2024) contains 16 hard PDE problems, which can be classified as Burges, Poisson, Heat, Navier-Stokes, Wave, Chaotic, and other High-dimensional problems. We only test PINNmamba on 6 problems, because solving the remaining problems with a sequence-based PINN model will cause an out-of-memory issue, even on the most advanced NVIDIA H100 GPU. Please refer to the original paper of PINNacle (Hao et al., 2024) for the details of the benchmark.

Appendix E Training Details

Hyperparameters. We provide the training hyperparameters of the main experiments in Table 4.

| Model | Hyperparameter Type | Value |

| PINN | network depth | 4 |

| network width | 512 | |

| QRes | network depth | 4 |

| network width | 256 | |

| KAN | network width | [2,5,5,1] |

| grid size | 5 | |

| grid_epsilon | 1.0 | |

| PINNsFormer | # of encoder | 1 |

| # of decoder | 1 | |

| embedding size | 32 | |

| attention head | 2 | |

| MLP hidden width | 512 | |

| sequence length | 5 | |

| sequence interval | 1e-4 | |

| PINNMamba | # of encoder | 1 |

| embedding size | 32 | |

| width | 8 | |

| MLP hidden width | 512 | |

| sequence length | 7 | |

| sequence interval | 1e-2 |

Computation Overhead. We report the training time and memory consumption of baseline models and PINNMamba on the convection equation in Table 5.

| Method | Memory Overhead | Training Time |

|---|---|---|

| PINN | 1605 MB | 0.28 s/it |

| QRes | 1561 MB | 0.38 s/it |

| PINNsFormer | 8703 MB | 1.82 s/it |

| KAN | 1095 MB | 2.73 s/it |

| PINNMamba | 7899 MB | 1.99 s/it |

Appendix F Sensitivity Analysis

PINNMamba can be further improved by hyper-parameters tuning, we test the sub-sequence length, interval and activation selection in this section.

Sub-sequence Length. We test the effect of different sub-sequence lengths on model performance. As shown in Table 6, we test the length of 3, 5, 7, 9, 21. Length achieves the best performance on reaction and wave equations, while achieves the best performance on convection equation.

| Convection | Reaction | Wave | ||||

|---|---|---|---|---|---|---|

| Length | rMAE | rRMSE | rMAE | rRMSE | rMAE | rRMSE |

| 3 | 0.6698 | 0.7271 | 0.0150 | 0.0331 | 0.5288 | 0.533926 |

| 5 | 0.0092 | 0.0099 | 0.0131 | 0.0286 | 0.0278 | 0.0303 |

| 7 | 0.0188 | 0.0201 | 0.0094 | 0.0217 | 0.0197 | 0.0199 |

| 9 | 0.0410 | 0.0444 | 0.0105 | 0.0246 | 0.0343 | 0.0374 |

| 21 | 1.0263 | 1.0596 | 0.0884 | 0.1588 | 0.0458 | 0.0493 |

Sub-Sequence Interval. We test the effect of different sub-sequence intervals on model performance. As shown in Table 7, we test the intervals of , , , . The interval achieves the best performance on convection and wave equations, while achieves the best performance on reaction. Note that, when , we cannot build the sub-sequence contrastive alignment.

| Convection | Reaction | Wave | ||||

|---|---|---|---|---|---|---|

| Interval | rMAE | rRMSE | rMAE | rRMSE | rMAE | rRMSE |

| 2e-3 | 0.0249 | 0.0257 | 0.0739 | 0.1389 | 0.1693 | 0.1903 |

| 5e-3 | 0.0243 | 0.0287 | 0.0083 | 0.0185 | 0.2492 | 0.2690 |

| 1e-2 | 0.0188 | 0.0201 | 0.0094 | 0.0217 | 0.0197 | 0.0199 |

| 1e-1 | 1.2169 | 1.3480 | 0.4324 | 0.5034 | 0.0666 | 0.0703 |

Activation Function. We test the activation function’s effect on the performance of PINNMamba. We report the results of ReLU (Nair & Hinton, 2010), Tanh (Fan, 2000), and Wavelet (Zhao et al., 2024) in Table 8.

| Convection | Reaction | Wave | ||||

|---|---|---|---|---|---|---|

| Activation | rMAE | rRMSE | rMAE | rRMSE | rMAE | rRMSE |

| ReLU | 0.4695 | 0.4722 | 0.0865 | 0.1583 | 0.4139 | 0.4203 |

| Tanh | 0.4531 | 0.4601 | 0.0299 | 0.0568 | 0.3515 | 0.3539 |

| Wavelet | 0.0188 | 0.0201 | 0.0094 | 0.0217 | 0.0197 | 0.0199 |

Appendix G Complex Problem Results

G.1 2D Navier-Stokes Equations

Although PINN can already handle Navier-Stokes equations well, we still tested the performance of PINN Mamba on Navier-Stokes equations to check the generalization performance of our method on high-dimensional problems. As shown in Fig. 11, our method achieves good results on Navier-Stokes pressure prediction. Since there is no initial condition information for the N-S equation for pressure, we took the data from the only collection point for pattern alignment.

G.2 PINNacle Benchmark

Like PINNsFormer, PINNMamba is a sequence model. The sequence model suffers from Out-of-Memory problems when dealing with some of the problems in the PINNacle Benchmark (Hao et al., 2024), even when running on the advanced Nvidia H100 GPU. We report here the results of the sub-problems for which results can be obtained in Table 9. PINNMamba can solve the Out-of-Memory problem by distributed training over multiple cards, which we leave as a follow-up work.

| PINN | PINNsFormer | PINNMamba | ||||

|---|---|---|---|---|---|---|

| Equation | rMAE | rRMSE | rMAE | rRMSE | rMAE | rRMSE |

| Burgers 1d-C | 1.1e-2 | 3.3e-2 | 9.3e-3 | 1.4e-2 | 3.7e-3 | 1.1e-3 |

| Burgers 2d-C | 4.5e-1 | 5.2e-1 | OOM | OOM | OOM | OOM |

| Poisson 2d-C | 7.5e-1 | 6.8e-1 | 7.2e-1 | 6.6e-1 | 6.2 e-1 | 5.7e-1 |

| Poisson 2d-CG | 5.4e-1 | 6.6e-1 | 5.4e-1 | 6.3e-1 | 1.2e-1 | 1.4e-1 |

| Poisson 3d-CG | 4.2e-1 | 5.0e-1 | OOM | OOM | OOM | OOM |

| Poisson 2d-MS | 7.8e-1 | 6.4e-1 | 1.3e+0 | 1.1e+0 | 7.2e-1 | 6.0e-1 |

| Heat 2d-VC | 1.2e+0 | 9.8e-1 | OOM | OOM | OOM | OOM |

| Heat 2d-MS | 4.7e-2 | 6.9e-2 | OOM | OOM | OOM | OOM |

| Heat 2d-CG | 2.7e-2 | 2.3e-2 | OOM | OOM | OOM | OOM |

| NS 2d-C | 6.1e-2 | 5.1e-2 | OOM | OOM | OOM | OOM |

| NS 2d-CG | 1.8e-1 | 1.1e-1 | 1.0e-1 | 7.0e-2 | 1.1e-2 | 7.8e-3 |

| Wave 1d-C | 5.5e-1 | 5.5e-1 | 5.0e-1 | 5.1e-1 | 1.0e-1 | 1.0e-1 |

| Wave 2d-CG | 2.3e+0 | 1.6e+0 | OOM | OOM | OOM | OOM |

| Chaotic GS | 2.1e-2 | 9.4e-2 | OOM | OOM | OOM | OOM |

| High-dim PNd | 1.2e-3 | 1.1e-3 | OOM | OOM | OOM | OOM |

| High-dim HNd | 1.2e-2 | 5.3e-3 | OOM | OOM | OOM | OOM |