Structured linear quadratic control computations over 2D grids

Abstract

In this paper, we present a structured solver based on the preconditioned conjugate gradient method (PCGM) for solving the linear quadratic (LQ) optimal control problem for sub-systems connected in a two-dimensional (2D) grid structure. Our main contribution is the development of a structured preconditioner based on a fixed number of inner-outer iterations of the nested block Jacobi method. We establish that the proposed preconditioner is positive-definite. Moreover, the proposed approach retains structure in both spatial dimensions as well as in the temporal dimension of the problem. The arithmetic complexity of each PCGM step scales as , where is the length of the time horizon. The computations involved at each step of the proposed PCGM are decomposable and amenable to distributed implementation on parallel processors connected in a 2D grid structure with localized data exchange. We also provide results of numerical experiments performed on two example systems.

Notations

In this paper, we use and to denote the set of real numbers and natural numbers, respectively. We denote an -dimensional real vector with and a real matrix with rows and columns with . denotes all elements of the set except element . denotes the transpose of a matrix. means the symmetric matrix is positive definite (i.e., there exists such that for all ) and means is a positive semi-definite (i.e., for all ). “”” represents construction of block diagonal matrix from input arguments and “” represents concatenation of input arguments as column vector.

I Introduction

We are interested in solving finite-horizon linear-quadratic (LQ) optimal control problems with discrete-time dynamics arising from the interconnection of sub-systems in a two-dimensional (2D) grid graph structure as shown in Fig.1.

Each node is a sub-system and is labeled with subscript with and , where is the total number of sub-systems along the vertical direction and is the total number of sub-systems in the horizontal direction. We assume that there is a bi-directional flow of information between adjacent sub-systems, as shown in Fig.1. That is, each sub-system is coupled, at max, with of the neighbouring sub-systems. Spatio-temporal structure of this kind is relevant in many applications such as automated water irrigation networks [1], radial power distribution networks [2], control of traffic signals [3], spreading processes such as wildfires [4, 5] and in the discretization of 2D partial differential equations [6].

The LQ optimal control problem that we are interested in is a large-scale quadratic program with O(KN T) decision variables, where T is the length of the time horizon. We can solve this problem by solving the linear system of equations that arise from the corresponding first-order optimality conditions, known as KKT conditions. Due to the special spatio-temporal structure of the problem, this linear system of equations is also highly structured.

Previous research has studied the temporal structure of the LQ optimal control problem in [7, 8, 9, 10], but these approaches do not utilize the structure in the spatial dimensions of the problem. Structured solvers for LQ optimal control problems related to path-graph networks (i.e., 1D chains) are proposed in [11, 12, 13], and the computations are decomposable and amenable to distributed implementation on parallel processors with path-graph data exchange. However, these approaches do not exploit the structure in both spatial dimensions simultaneously, as we do in this paper. In [14, 15], structured methods are proposed for solving optimal control problems of multi-agent networks, which can be used to solve the LQ optimal control problem we are interested in. However, these approaches often take a large number of iterations to converge. All of these approaches offer decomposable computations, but they do not exploit the complete three-dimensional spatio-temporal structure of the problem. In [16], a structured solver is proposed for a special case where sub-systems are connected in a directed tree graph structure.

The main contribution of this paper extends the developments presented in [13] to the case of 2D grids. We present a structured solver based on preconditioned conjugate gradient method (PCGM) for solving the KKT conditions. Specifically, we propose a structured preconditioner based on nested block Jacobi method (NBJM) to improve the conditioning of the linear system. The proposed approach retains structure in all three dimensions of the problem, extending the work in [13] which explored structure in two dimensions. We show that the proposed preconditioner is positive-definite. Moreover, the arithmetic complexity of each PCGM step scales as . The computations are decomposeable and amenable to distributed implementation on parallel processors connected in a 2D grid with localized data exchange.

The rest of the paper is organized as follows. In Section II, we present the structured formulation of the problem and the corresponding KKT conditions. In Section III, we provide an overview of the PCGM. The structured preconditioner based on NBJM is developed in Section IV. We explore performance of the proposed PCGM numerically, for a 2D mass-spring-damper network and an automated water irrigation network, and present results in Section V. Finally, some concluding remarks and possible directions of future work are presented in VI.

II Problem Formulation

The state space dynamics of each sub-system are

| (1) | ||||

where and are the state and input of sub-system at time , respectively. Initial conditions are given by and the spatial boundary conditions are given by , and , for .

We are interested in the following finite-horizon linear-quadratic (LQ) optimal control problem:

| (2a) | ||||

| subject to | ||||

| (2b) | ||||

| (2c) | ||||

| (2d) | ||||

| (2e) | ||||

where . For , and , it is assumed that , and . Moreover, for every , , , but , so that can be removed as a decision variable.

Note that the problem (2) is a large-scale LQ optimal control problem with decision variables. While the cost is separable across the sub-systems in both dimensions as well as the time horizon, the problem is not separable due the spatial coupling between the states of neighbouring sub-systems, and the inter-temporal coupling in the equality constraint (2b). Solution of problem (2) can obtained by solving the large-scale linear system of equations arising from the corresponding first-order optimality conditions known as KKT conditions. However, due to the structured coupling of variables (i.e., states) in the equality constraint (2b), this linear system of equations is also structured.

II-A Structured Reformulation

By defining , , , and , problem (2) can be reformulated as the following quadratic program:

| (3a) | ||||

| subject to | ||||

| (3b) | ||||

| (3c) | ||||

| (3d) | ||||

where for and , ,

Note that and . All the matrices in vertically stacked QP (3) are block diagonal except which is block tri-diagonal due to the spatial coupling of system dynamics along the vertical direction in the optimal control problem (2).

Now, defining , , , and , problem (3) can be reformulated by stacking across the horizontal dimension of the 2D grid:

| (4a) | ||||

| subject to | ||||

| (4b) | ||||

| (4c) | ||||

where

and . Matrix is a block tri-diagonal matrix of size and each of it’s diagonal blocks are again block tri-diagonal of size .

II-B KKT Conditions

For problem (4), the first-order optimality conditions, also known as KKT conditions, are given by

| (6a) | ||||

| (6b) | ||||

| (6c) | ||||

where . The variables are Lagrange multipliers. Since and are positive definite, the problem (5) is strictly convex and the KKT conditions are necessary and sufficient for optimality [17]. Notice that, (6) is a large-scale, sparse and structured linear system of equations whose size scales as . We can solve (6) as

| (7a) | ||||

| (7b) | ||||

| (7c) | ||||

where , and .

Note that matrices and are block diagonal with block size independent of and . Therefore, computation of and can be carried out efficiently. However, solving (7a) is computationally more expensive. Since and are positive definite so their inverse are also positive definite, and is full row rank. Therefore, is a positive definite and has a block tri-diagonal structure

| (8) |

where

and . Note that each has a block penta-diagonal structure because are block tri-diagonal of size . Let , with when is even and otherwise. Also define,

| (9a) | ||||

| (9b) | ||||

| (9c) | ||||

| (9d) | ||||

where

for , , with , and . Given this, for becomes a block tri-diagonal matrix given by

| (11) |

where and . This structured splitting of will be needed in the next section.

III Preconditioned Conjugate Gradient Method

The positive definite linear system of equations (7a) can be solved using conjugate gradient method (CGM) [18]. Let be the error between -th iterate of the CGM and the exact solution of (7a). It can be shown that satisfies the following [19, Thm. 6.29]:

| (12) |

where , is the condition number and , ) are the maximum and minimum eigenvalue of , respectively. It shows that the CGM converges faster if is closer to . Therefore, to improve the performance of CGM, the system of equations (7a) is transformed such that the condition number of the transformed coefficient matrix is improved. The transformed system of equations is known as preconditioned system. Let be a positive definite matrix, then solving (7a) is equivalent to solving the transformed system

| (13) |

where and . An efficient implementation of preconditioned CGM (PCGM) is given in Algorithm 1 from [20].

Note that lines 7 to 14 constitute one PCG step. The transformed system is never formed explicitly. Instead, the preconditioning is performed by solving a linear system of equations at lines 3 and 12 of Algorithm 1. Choice of preconditioner determines the overall complexity of PCGM. Ideally, if we use the preconditioner , this will result in . However, the linear system to be solved at the preconditioning lines 3 and 12 becomes the original problem. The incomplete sparse LU factorization of the coefficient matrix can be used as a preconditioner [21, 22]. However, to ensure that the resulting preconditioner is both positive definite and effective, it may be necessary to use incomplete LU factors that are denser (i.e., have less structure) than .

In the next section, we devise a structured preconditioning approach based on nested block Jacobi iterations. We apply a fixed number of inner iterations at each outer iteration of block Jacobi method to approximately implement lines 3 and 12 with . The proposed approach is inspired by the ideas from [23, 24, 25, 26, 27, 28]. It extends the developments presented in [13] where same ideas have already been explored within the context of 1D chains.

IV Nested Block Jacobi Preconditioner

Let is a matrix splitting such that

| (14a) | ||||

| (14b) | ||||

Then, the block Jacobi method for solving (7a) involves the following:

| (15) |

where is the number of block Jacobi iterations. These iterations converge to the solution of (7a) if and only if

| (16) |

where denotes spectral radius [20, Thm. 2.16]. Given that is a positive definite block tri-diagonal matrix, and the splitting (14), condition (16) always holds when (15) is solved exactly [20, Lem. 4.7 with Thm. 4.18]. However, instead of solving (15) exactly, we propose a fixed number of inner iterations. It is apparent from (11), that has a block tri-diagonal structure with and for . Let such that

| (17a) | ||||

| (17b) | ||||

Then for each outer iteration , we propose number of inner iterations. This results in a nested block Jacobi method (NBJM) given in Algorithm 2.

Note that for the proposed splitting (17) of the positive definite block tri-diagonal matrix , condition always holds [20, Lem. 4.7 with Thm. 4.18].

IV-A Positive-definiteness of Preconditioner

We propose to apply fixed number of inner-outer iterations of NBJM (i.e., Algorithm 2), with at lines 3 and 12 in Algorithm 1. Executing this is equivalent to the use of a positive definite preconditioner. First, we present the following technical lemma which leads to the main result.

Lemma 1.

Given that for the proposed splitting (17) of the positive definite block tri-diagonal matrix , condition always holds. Let is a positive even integer i.e., , the inverse of can be expressed as

| (18) |

where and .

Proof.

First note that for the proposed splitting (17) of , with and for , it holds that . Then using , note that

and

for . Note that and for all . Therefore, and as claimed. ∎

Remark 2.

Now we present the main result.

Theorem 3.

Given and , the iterate of Algorithm 2 satisfies with , where and .

Proof.

Noting that for each outer iteration of Algorithm 2, we execute number of inner iterations with . Therefore, . Then note that , and using that

and

for . Therefore, and consequently .. ∎

IV-B Decomposable Computations

Note that we do not explicitly construct the preconditioner . Instead, at each PCG step, we execute number of outer iterations of Algorithm 2 such that for each outer iteration , number of inner iterations are performed. At each inner iteration, we need to solve

| (19) |

Let, where each for . Then, the computations in (19) can be decomposed into smaller problems

| (20) |

for and , where , and represents the decomposition of vector , accordingly. Note that for each smaller (20), the coefficient matrix is a block banded matrix of size , where the subblocks are of size independent of , and . Therefore for each and , the linear system (20) can be solved using block Cholesky factorization of with arithmetic complexity of . Note that this factorization is performed once at the start of Algorithm 1. Therefore, the computations at preconditioning lines 3 and 12 decompose into parallel threads with per thread arithmetic complexity of . By defining where each and for and partitioning and accordingly, the matrix vector products at line 7 of Algorithm 1 can also be decomposed into parallel threads connected in a 2D grid structure with localized data exchange. In Table I, we present the arithmetic complexity of each line of Algorithm 1. It shows the overall arithmetic complexity as well as per-thread, along with the overhead of scalar-value data exchange for each thread in case of parallel implementation on processors. The arithmetic complexity of each PCG step is dominated by the preconditioning line 12. With and being fixed, the overall arithmetic complexity of each PCG step becomes . Note that the computations involved to form the dot products at lines 8 and 13 and to update the infinity norm of the residual at line 11 are sequential in nature. In case of parallel implementation, they can be carried out by performing a backward-forward across the 2D grid with localized scalar data exchange. It should be noted that the total arithmetic complexity of PCGM to converge to a solution of desired accuracy depends on the total number of steps performed. In worst case, the number of steps can be of . This will result in arithmetic complexity. However, it often performs much better than this worst case complexity, as illustrated in the next section.

|

|

||||||

|---|---|---|---|---|---|---|---|

| Alg. 1 | Computations |

|

|

||||

| Line 7: | |||||||

| Line 8: | |||||||

| Line 9: | 0 | ||||||

| Line 10: | 0 | ||||||

| Line 11: | |||||||

| Line 12: | |||||||

| Line 13: | |||||||

| Line 14: | 0 | ||||||

V Numerical Experiments

In this section, we present results of the numerical experiments for solving LQ optimal control for two cases.

Case (I): We consider a 2D grid of mass-spring-damper systems with masses, where each sub-system has the discrete-time state space dynamics of the form (1) with four states and two inputs. The cost function has and for , , . The mass, spring constant, and damping coefficient parameters are randomly selected between and to create a heterogeneous network.

Case (II): We consider an LQ optimal control problem of an automated irrigation network under distant-downstream control [30] shown in Fig. 2. The network consists of controlled pools in the primary channel and controlled pools in each secondary channel originating from the primary channel’s pools. The dynamics of each controlled pool can be described by a sub-system in the form of (1) with and . That is, there is a direct coupling between the states of sub-systems and in the primary channel and and in the secondary channels. However, there is no inter-coupling between the states of subsystems in the secondary channels. The control inputs are the adjustment of the water-level reference, and the states are the deviation of state trajectory from equilibrium. For each sub-system , the number of states , and the number of inputs . The corresponding cost function has and .

The LQ optimal control problem for both cases is solved by solving the corresponding KKT conditions of the form (6). We compare results of the following three methods for solving (7a).

-

•

The proposed PCGM in Algorithm 1, with proposed nested block Jacobi preconditioning with and .

-

•

A standard nested block Jacobi method (see e.g. [28]).

-

•

MATLAB’s Backslash (CHOLMOD [31]) with sparse matrix representation

The experiment are conducted with varied from to such that the number of scalar variables in the largest problems for both cases are of . We consider the processor time of a single thread implementation which serves as a proxy for the overall arithmetic complexity of each method. We set the stopping criteria for the infinity norm of the residuals of the PCGM and standard nested block Jacobi method to . Note that for solving (7a) with the standard nested block Jacobi method the outer iterations are performed until stopping criteria is met, while the inner iterations are fixed to . All computations are performed on a Windows PC with Intel Core-i7 processor with clock speed and 16GB of RAM.

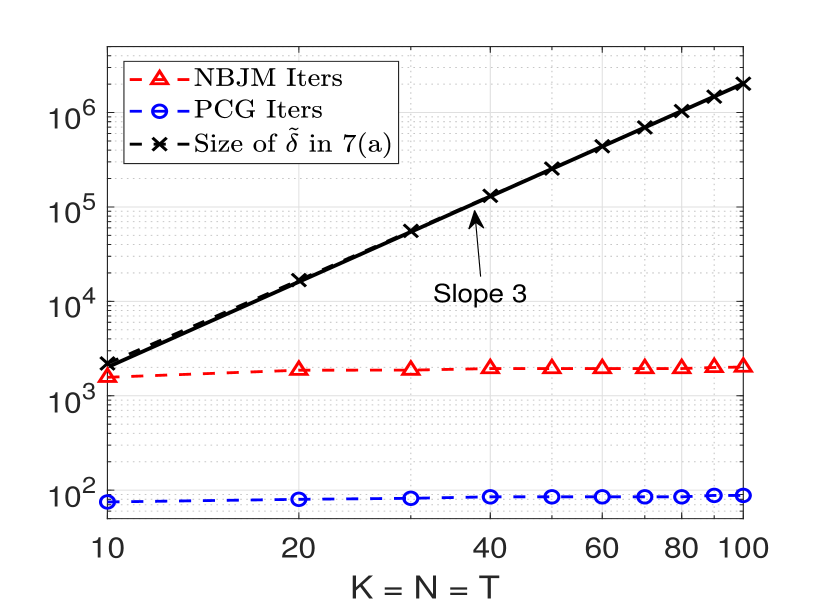

Case (I): 2D Grid of spring-mass-damper network. Fig. 5 shows the total number of steps of the proposed PCGM with and total number of outer steps of the standard NBJM with against the total number of variables in (7a). It shows that the standard NBJM consistently takes a large number of steps, in the order of thousands. On the other hand, our proposed PCGM consistently takes fewer number of steps lesser than hundred as compared to the size of the system. For instance, for , the total number of unknown are while the total number of PCGM steps performed are only. Such small number of steps of proposed PCGM are particularly favourable for a distributed implementation resulting in a small data exchange overhead.

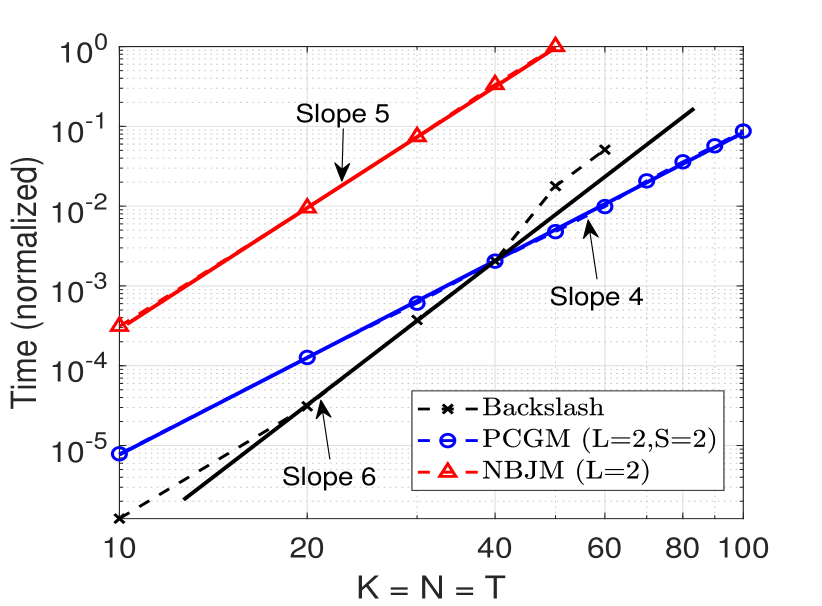

Fig. 5 shows the normalized processor time for solving (7a) using all three approaches. It shows that along the line , the per step arithmetic complexity of PCGM and standard NBJM scales as . However, due to the large number of step performed by NBJM, the overall processor time required is an order larger than the proposed PCGM. In contrast, for smaller systems MATLAB’s Backslash performs well but its overall processor time scales as . This is because Backslash invokes sparse cholesky factorization from CHOLMOD [31]. Despite its superior capabilities including permutation of variables for computational benefit, the number of fill-ins during the factorization increases exponentially since the structure within the sub blocks of is lost. Moreover, for problems with greater than , processor time for Backslash also includes memory management overhead, thus no longer a good proxy for arithmetic complexity. For problems with and above, Backslash went out of memory. While the proposed PCGM performs much better with an added scope of the decomposibility of computations.

Fig. 5 illustrates the actual condition number and the achieved conditioning of the transformed system using the structured preconditioner given in Thm. 3. It can be seen that the conditioning is improved by almost two order of magnitude with the proposed approach.

Case (II): Automated Irrigation Network. Fig. 8 compares the total number of PCGM steps and standard NBJM steps with the size of the system. It can be seen that the number of steps performed by NBJM increases as with the size of the problem reaching up to for . As such, for larger systems, the results of NBJM are not included. By contrast, the increase in the number of steps of PCGM is but the steps performed are much smaller than the size of the system.

Fig.8 shows the overall processor time of the three approaches. The processor time of NBJM increases as and that of Backslash is . Whereas the increase in the processor time of the proposed PCGM is which reflects the linear increase in the number of steps of PCGM along the line . Moreover, for problem size larger than , Backslash suffers from memory management issues and it went out of memory for problems larger than .

Finally, Fig.8 shows the condition number of in (7a) and of the preconditioned system. It can be seen that there is an order of magnitude improvement in the conditioning with and .

VI Conclusions

In this paper, we have proposed a structured PCGM for solving LQ optimal control problems of systems with 2D grid structure. The per step arithmetic complexity of the proposed approach scales linearly in each spatial dimension as well as in temporal dimension. The computations are amenable to distributed implementation on parallel processors with localized data exchange mirroring the 2D grid structure of the problem. Future works include the development of analytical bounds on the achieved conditioning and an implementation of the proposed approach on parallel processing architectures. It will enable us to perform a deeper analysis of the distributed performance of proposed approach along with the issues related with the data exchange overhead.

VII Acknowledgement

We would like to thank Prof. Michael Cantoni and Dr. Farhad Farokhi from the University of Melbourne, Australia for their insights and the helpful discussions about this work. This work was supported by the Air Force Office of Scientific Research Grant FA2386-19-1-4076 and the NSW Defence Innovation Network.

References

- [1] Y. Li, M. Cantoni, and E. Weyer, “On Water-Level Error Propagation in Controlled Irrigation Channels,” in Proceedings of the 44th IEEE Conference on Decision and Control. IEEE, 2005, pp. 2101–2106.

- [2] Q. Peng and S. H. Low, “Distributed optimal power flow algorithm for radial networks, I: Balanced single phase case,” IEEE Transactions on Smart Grid, vol. 9, no. 1, pp. 111–121, 2018.

- [3] M. Liu, J. Zhao, S. P. Hoogendoorn, and M. Wang, “An optimal control approach of integrating traffic signals and cooperative vehicle trajectories at intersections,” Transportmetrica B, vol. 10, no. 1, pp. 971–987, 2022.

- [4] I. Karafyllidis and A. Thanailakis, “A model for predicting forest fire spreading using cellular automata,” Ecological Modelling, vol. 99, no. 1, pp. 87–97, 1997.

- [5] V. L. Somers and I. R. Manchester, “Priority maps for surveillance and intervention of wildfires and other spreading processes,” Proceedings - IEEE International Conference on Robotics and Automation, vol. 2019-May, pp. 739–745, 2019.

- [6] H. Maurer and H. D. Mittelmann, “Optimization techniques for solving elliptic control problems with control and state constraints: Part 1. Boundary control,” Computational Optimization and Applications, vol. 16, pp. 29–55, 2000.

- [7] C. V. Rao, S. J. Wright, and J. B. Rawlings, “Application of Interior-Point Methods to Model Predictive Control,” Journal of Optimization Theory and Applications, vol. 99, no. 3, pp. 723–757, dec 1998.

- [8] Y. Wang and S. Boyd, “Fast Model Predictive Control Using Online Optimization,” IEEE Transactions on Control Systems Technology, vol. 18, no. 2, pp. 267–278, 2010.

- [9] A. Shahzad, E. C. Kerrigan, and G. A. Constantinides, “A Stable and Efficient Method for Solving a Convex Quadratic Program with Application to Optimal Control,” SIAM Journal on Optimization, vol. 22, no. 4, pp. 1369–1393, jan 2012.

- [10] I. Nielsen and D. Axehill, “Direct Parallel Computations of Second-Order Search Directions for Model Predictive Control,” IEEE Transactions on Automatic Control, vol. 64, no. 7, pp. 2845–2860, 2019.

- [11] F. Rey, P. Hokayem, and J. Lygeros, “ADMM for Exploiting Structure in MPC Problems,” IEEE Transactions on Automatic Control, pp. 1–1, 2020.

- [12] A. Zafar, M. Cantoni, and F. Farokhi, “Optimal control computation for cascade systems by structured Jacobi iterations,” IFAC-PapersOnLine, vol. 52, no. 20, pp. 291–296, 2019.

- [13] ——, “Structured Preconditioning of Conjugate Gradients for Path-Graph Network Optimal Control Problems,” IEEE Transactions on Automatic Control, vol. 67, no. 8, pp. 4115–4122, aug 2022.

- [14] A. Nedic, A. Ozdaglar, and P. A. Parrilo, “Constrained Consensus and Optimization in Multi-Agent Networks,” IEEE Transactions on Automatic Control, vol. 55, no. 4, pp. 922–938, 2010.

- [15] A. Falsone, K. Margellos, S. Garatti, and M. Prandini, “Dual Decomposition for Multi-Agent Distributed Optimization with Coupling Constraints,” Automatica, vol. 84, pp. 149–158, 2017.

- [16] A. Zafar, F. Farokhi, and M. Cantoni, “Linear Quadratic Control Computation for Systems with a Directed Tree Structure,” IFAC-PapersOnLine, vol. 53, no. 2, pp. 6536–6541, 2020.

- [17] J. Nocedal and S. J. Wright, Numerical Optimization. Springer, 2000.

- [18] M. R. Hestenes and E. Stiefel, “Methods of Conjugate Gradients for Solving Linear Systems,” Journal of Research of the National Bureau of Standards, vol. 49, no. 1, pp. 409–436, 1952.

- [19] Y. Saad, “Iterative Methods for Sparse Linear Systems, Second Edition,” Methods, p. 528, 2003.

- [20] W. Hackbusch, Iterative Solution of Large Sparse Systems of Equations, ser. Applied Mathematical Sciences. Cham: Springer International Publishing, 2016, vol. 95.

- [21] M. Benzi and M. Tůma, “A Robust Incomplete Factorization Preconditioner for Positive Definite Matrices,” Numerical Linear Algebra with Applications, vol. 10, no. 5-6, pp. 385–400, 2003.

- [22] J. Xia and Z. Xin, “Effective and Robust Preconditioning of General SPD Matrices via Structured Incomplete Factorization,” SIAM Journal on Matrix Analysis and Applications, vol. 38, no. 4, pp. 1298–1322, jan 2017.

- [23] N. K. Nichols, “On the Convergence of Two-Stage Iterative Processes for Solving Linear Equations,” SIAM Journal on Numerical Analysis, vol. 10, no. 3, pp. 460–469, jun 1973.

- [24] O. G. Johnson, C. A. Micchelli, and G. Paul, “Polynomial preconditioners for conjugate gradient calculations,” SIAM Journal on Numerical Analysis, vol. 20, no. 2, pp. 362–376, 1983.

- [25] P. J. Lanzkron, D. J. Rose, and D. B. Szyld, “Convergence of nested classical iterative methods for linear systems,” Numerische Mathematik, vol. 58, no. 1, pp. 685–702, dec 1990.

- [26] V. Migallón and J. Penadés, “Convergence of two-stage iterative methods for Hermitian positive definite matrices,” Applied Mathematics Letters, vol. 10, no. 3, pp. 79–83, 1997.

- [27] Z.-h. Cao, H.-b. Wu, and Z.-y. Liu, “Two-stage iterative methods for consistent Hermitian positive semidefinite systems,” Linear Algebra and its Applications, vol. 294, no. 1-3, pp. 217–238, jun 1999.

- [28] Z.-H. Cao, “Convergence of Nested Iterative Methods for Symmetric P-Regular Splittings,” SIAM Journal on Matrix Analysis and Applications, vol. 22, no. 1, pp. 20–32, jan 2000.

- [29] R. A. Horn and C. R. Johnson, Matrix Analysis. Cambridge University Press, 2013.

- [30] M. Cantoni, E. Weyer, Y. Li, S. K. Ooi, I. Mareels, and M. Ryan, “Control of Large-Scale Irrigation Networks,” Proceedings of the IEEE, vol. 95, no. 1, pp. 75–91, jan 2007.

- [31] Y. Chen, T. A. Davis, W. W. Hager, and S. Rajamanickam, “Algorithm 887: CHOLMOD, supernodal sparse cholesky factorization and update/downdate,” ACM Transactions on Mathematical Software, vol. 35, no. 3, pp. 1–14, 2008.