Streamlining Image Editing with Layered Diffusion Brushes

Abstract.

Denoising diffusion models have recently gained prominence as powerful tools for a variety of image generation and manipulation tasks. Building on this, we propose a novel tool for real-time editing of images that provides users with fine-grained region-targeted supervision in addition to existing prompt-based controls. Our novel editing technique, termed Layered Diffusion Brushes, leverages prompt-guided and region-targeted alteration of intermediate denoising steps, enabling precise modifications while maintaining the integrity and context of the input image. We provide an editor based on Layered Diffusion Brushes modifications, which incorporates well-known image editing concepts such as layer masks, visibility toggles, and independent manipulation of layers—regardless of their order. Our system renders a single edit on a 512x512 image within 140 ms using a high-end consumer GPU, enabling real-time feedback and rapid exploration of candidate edits.

We validated our method and editing system through a user study involving both natural images (using inversion) and generated images, showcasing its usability and effectiveness compared to existing techniques such as InstructPix2Pix and Stable Diffusion Inpainting for refining images. Our approach demonstrates efficacy across a range of tasks, including object attribute adjustments, error correction, and sequential prompt-based object placement and manipulation, demonstrating its versatility and potential for enhancing creative workflows.

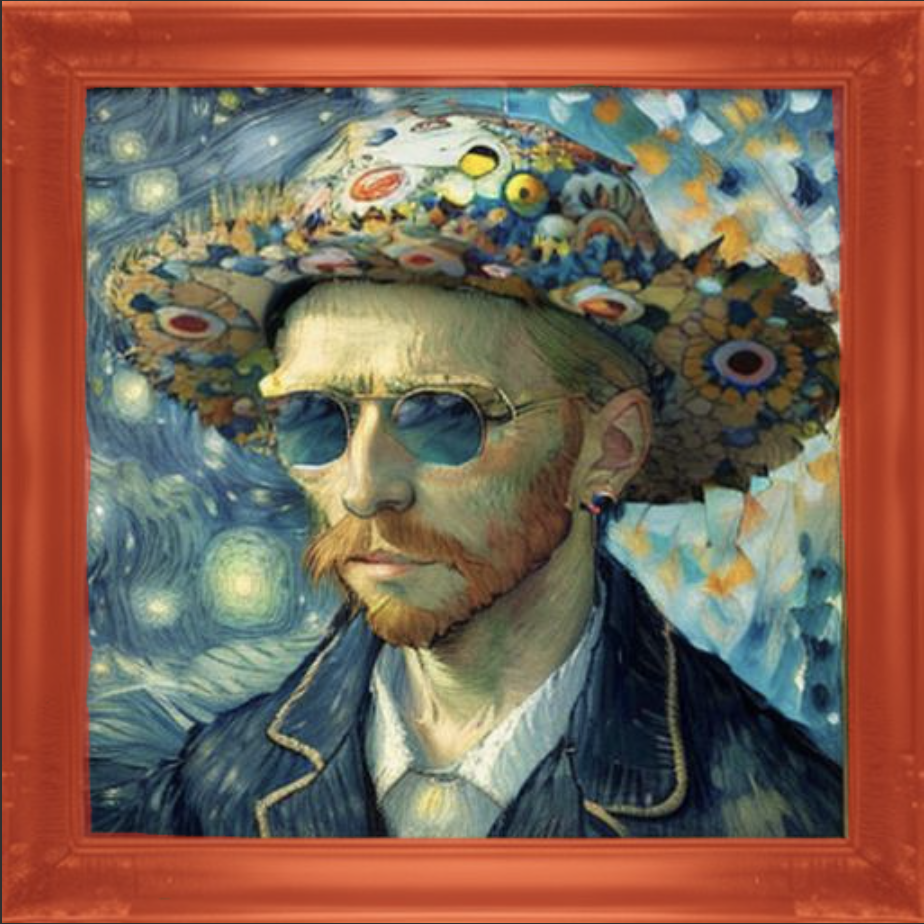

Edit prompt

(type)

sunglasses

(add objects)

hat, Takashi Murakami style

(change style)

starry night, van gogh style

(fix problems, maintaining style)

sky, Leonid Afremov style

(mixing styles)

ornate frame

(change attribute / texture)

Layer Mask

Edited

image

A 5 by 3 photo grid, showing the input photo (first column), the mask used for fine-tuning (column 2), and the results of each method (columns 3 - 5). The methods that are compared are Adobe Photoshop (content-aware fill), inpainting, and Layered Diffusion Brushes. Row 1: shows the results on a portrait of a dog, Row 2: a monkey eating a Banana, and Row 3: A palm tree on the beach at sunset.

1. Introduction

Image editing is a continuously evolving area of research in image processing, which has seen significant advancements in recent years with the advent of advanced text-to-image (T2I) techniques. T2I methods are a group of machine learning models that are able to generate images from textual descriptions and include Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Denoising Diffusion Models (DMs) (Zhang et al., 2023). Among these, DMs (Ho et al., 2020) have garnered considerable attention due to various characteristics, including a simple training scheme, great performance in generating high-quality images with a wide range of styles, not being prone to mode collapse, and being easy to employ in different tasks.

Despite showing exceptional results in different applications, e.g. image synthesis, super-resolution (Saharia et al., 2022c), inpainting, etc., DMs models are prone to several disadvantages, such as being sensitive to the choice of the original noise distribution, and being difficult to control (Wang et al., 2020). These limitations can lead to a high degree of stochastic behaviour, often requiring many “generations” before a desirable result is achieved. Additionally, due to the nature of the LDM generation technique, the results are often hard to fine-tune. Therefore, a region-targeted image editing tool has the potential to significantly expand the capabilities of these models.

In this work, we introduce Layered Diffusion Brushes, a novel Latent Diffusion Model-based (LDM) (Rombach et al., 2022) editing and fine-tuning tool that users, including artists, can utilize for efficiently making real-time region-specific adjustments and modifications to images. The core of our technique, Layered Diffusion Brushes, uses a user-specified mask to introduce targeted random noise patterns into the latent image at specific steps during the reverse diffusion process, which are then denoised using both mask and prompt guidance into new image content over subsequent steps. This produces targeted changes to the specified regions while preserving the original context from the rest of the image. Our technique results in changes that are well-integrated with the rest of the generated image, without affecting unmasked areas. By employing a latent caching technique, our system is capable of rendering edits in as little as 140 ms on consumer hardware, enabling rapid feedback and refinement. In particular, we provide for a “scroll” gesture that allows users to rapidly adjust the random noise seed and thus explore variations of the desired edit.

We initially developed an early prototype of this work, with the goal of re-randomizing targeted regions for fine-tuning, and conducted a user study to assess its usability and features. Based on the feedback received from this first study, we made significant improvements and revamped the tool. These enhancements included caching in the backend to improve inference speed, a completely redesigned user interface (UI) featuring improved masking controls, and more streamlined adjustments.

On top of the core Layered Diffusion Brushes technique, we implement a layering system which allows multiple edits to be stacked on top of each other. Edit layers can be switched, hidden, or deleted without affecting other layers, despite all operating on the same latent image. Multiple edit layers with their corresponding masks and prompts are shown in Figure 1.

To evaluate the performance, usability, and effectiveness of Layered Diffusion Brushes, we conducted a user study with artists who are experienced in editing images and also have basic familiarity with AI image editing and generative tools. Our user study aimed to assess the efficacy of Layered Diffusion Brushes in improving the experience of users in fine-tuning real AI-generated images and providing artistic control, and the creativity support and functionality of our tool in comparison to two other well-known existing image editing tools, InstructPix2Pix (Brooks et al., 2022) and SD-Inpainting (Rombach et al., 2022).

2. Background and Related Works

Image editing is the task of modifying existing images in terms of appearance, structure, or content, and includes tasks ranging from subtle adjustments to major transformations. This is different from some other related tasks, such as image generation (Saharia et al., 2022b; Rombach et al., 2022), image restoration (Ho et al., 2022) and enhancement that focuses on improving the quality of images.

As discussed in section 1, image editing has been an active field of research for years. With recent advancements in generative AI, many studies have attempted to harness the power of these new AI models in various image editing tasks for generated and real images. One such development has been the increasing utilization of GANs in a range of image manipulation tasks (Abdal et al., 2021; Lang et al., 2021; Pan et al., 2023). However, one of the main challenges faced in using GANs for image editing is the process of GAN-inversion (Richardson et al., 2021). While producing decent global changes, these methods often fail to create localized edits(Bar-Tal et al., 2022). Drag-GAN (Pan et al., 2023) proposed a GAN-based image editing for the controlled deformation of objects in an image. Their method shows interesting results for the manipulation of the pose and layout of certain objects, but it lacks generalizability and is limited to certain scenarios.

On the other hand, LDMs offer a distinct approach to image editing by learning the underlying distribution of image data and generating new images based on that distribution. Many studies have endeavoured to harness the power of controllable DMs in various image editing tasks. Examples include text and image-driven image manipulation studies (Kim et al., 2022; Hu et al., 2022).

2.1. DM-based image editing

Huang et al. surveyed existing DM-based image editing techniques and categorized them into three groups: training-based approaches, testing-time fine-tuning methods, and training and fine-tuning-free methods (Huang et al., 2024). Per the survey, these methods utilize various types of inputs to control the editing process, such as text, masks, segmentation maps, or dragging points. This survey also categorizes image editing methods based on the types of edits they can accomplish, including semantic editing (adding, removing, or replacing objects; background change; and emotional expression modification), stylistic editing (colour, texture, or style change), and structural editing (moving objects; changing size, position, shape, or pose of objects; and viewpoint change).

Our system requires no training or fine-tuning, putting it in the latter category of models and enabling its use with any existing pretrained model.

One of the most common group of works in training-based approaches is instructional editing via full supervision (Brooks et al., 2022; Guo and Lin, 2023; Geng et al., 2023). Many such approaches, while attempting to make localized changes to images, end up creating some degree of global change as well, which is not desirable. InstructPix2Pix (Brooks et al., 2022) is a well-known method in this category, which constructs a large dataset of instruction-image pairs using Stable Diffusion(Rombach et al., 2022) and Prompt-to-Prompt (Hertz et al., 2022), and trains an instructional image editing diffusion model. While this method is widely recognized and is one of the benchmarks in our study, it faces a challenge in expressing certain types of edits solely through text and instruction, such as changing the colour or style of a single object amongst several similar objects in an image. This limitation underscores the utility of allowing users to specify regions for editing purposes.

Another group of works, including Prompt-to-Prompt (Hertz et al., 2022) and Zero-shot Image-to-Image Translation (Parmar et al., 2023), edit images by modifying the attention maps through discerning the intrinsic principles in the attention layers and then utilizing them to modify the attention operations. One of the challenges with these works is obtaining optimal results when making multiple small and fine-tuned edits.

Some other techniques utilize inversion (Choi et al., 2021) to preserve a subject while modifying the context. Textual Inversion (Gal et al., 2022) and DreamBooth (Ruiz et al., 2023) synthesize new views of a given subject given 3–5 images of the subject and a target text.

Finally, among training and fine-tuning-free methods, modification of inversion and sampling formulas are common techniques. Through the inversion process, a real image transforms into a noisy latent space, and then the sampling process is used to generate an edited result given the target prompt. We utilize the Direct Inversion approach (Ju et al., 2023), which is fast and efficient for obtaining the noisy latent space, to support editing real images. However, we employ a different approach for editing the image that modifies the latent space during sampling.

Another common category of image manipulation is image inpainting, which involves adding new content to an image. Traditional inpainting techniques can also be utilized for object removal by providing no text prompt and guidance (Avrahami et al., 2022). While these models are effective for generating new content in images, they are not suitable for making small and targeted adjustments (Avrahami et al., 2022; Lugmayr et al., 2022; Saharia et al., 2022a). We also compare our proposed method with Stable Diffusion Inpainting (SD-Inpainting) (Rombach et al., 2022).

Similar to many other works in the literature, including DiffEdit (Couairon et al., 2022), Blended Diffusion (Avrahami et al., 2022), Inpainting (Yu et al., 2023), and Blended Latent Diffusion (Avrahami et al., 2023), we utilize masks for creating our edits. However, in contrast, our objective is to fine-tune the image and make localized adjustments to the existing image while keeping the remaining content intact. Unlike the method in (Avrahami et al., 2023), our method modifies the original latent space, which results in better preservation of the original context of the image and more natural blending of the results into the image, as well as enabling substantial performance optimizations.

In (Avrahami et al., 2023), LDM’s latent representation is obtained using a VAE, which is lossy and results in the encoded image not being reconstructed exactly, even before any noise addition. (Avrahami et al., 2023) incorporates a background reconstruction strategy to address this, which increases computation and lowers inference time. However, since one of the primary goals of this study is to maximize speed for a real-time user experience, this method is not feasible. On the other hand, we store the latent for regeneration in memory, resulting in much faster results.

2.2. Layers in image editing

Layer-based image editing has long been a cornerstone in the field of computer graphics (Porter and Duff, 1984). Recently, some works have delved into merging this concept into the evolving landscape of machine learning-driven methodologies (Bar-Tal et al., 2022; Sarukkai et al., 2024). These layered representations offer a dynamic platform for the manipulation of distinct components within an image. Often, the essence of this process lies in transforming a singular input image into a multi-layered representation.

Collage Diffusion (Sarukkai et al., 2024) presents an interesting layer-based scheme by modifying text-image cross-attention with the layers’ alpha masks to generate globally harmonized images that respect a user’s desired scene composition. They also introduce an editing framework utilizing a modified Blended Latent Diffusion (Avrahami et al., 2023), assuming a pre-existing layered structure as input, harnessing the power of machine learning to synthesize cohesive image outputs from these pre-arranged layers.

One significant improvement provided by our method, unlike Collage Diffusion, is the ability to have completely independent layers. Additionally, we employ a different approach, focusing on user-oriented and real-time editing experiences.

Therefore, our method is a training and fine-tuning-free approach that utilizes masks and text as input, offering a broad range of edits, including most semantic and stylistic editing tasks, and some structural edits, such as changing the size of objects.

3. Methods

3.1. Layered Diffusion Brushes Formulation

As mentioned in section 1, we use an LDM-based variant of image generative models. Similar to (Lugmayr et al., 2022; Avrahami et al., 2023), we make intermediate adjustments to the latent space using the pre-trained LDM. Therefore, Layered Diffusion Brushes does not require any additional training and only modifies the latents during the reverse diffusion process.

1 presents the algorithm for generating intermediate latents. This part simply caches two latents, and . Here, serves as the initial latent for editing. In this formulation, , where is the total number of steps to create the initial image, and is the total number of steps for editing. represents the latent used for blending the edited latent in layer 1.

We first initialize the sample and noise level () if the image is generated using the DM. If a real image is used, we obtain the initial noise latent for the image using the Direct Inversion method presented in (Ju et al., 2023). Based on our testing, this method is significantly faster in terms of speed and comparable in terms of performance to other methods in the literature, including null text inversion (Mokady et al., 2023) and Negative-Prompt Inversion (Miyake et al., 2023) methods.

Algorithm 2 contains the main backbone of the system, which handles both editing and layering functionalities.

To initiate an edit, the algorithm begins by generating a new noise pattern sampled from . Here, we generate a new noise pattern from a different seed (set per-layer). Then, is scaled to match the variance of obtained from the previous step. This ensures that the additive noise is not too different from the latent for editing, which would result in visual artifacts. The new noise is added to the original latent, controlled by the mask and . The effect of using different values for and will be discussed in detail in 3.

During the edit loop, at step , in each layer, the latent of the new seed and the previous layer’s latent are merged using the mask, thus confining the influence of the new latent to the masked region. Subsequently, the new latent region is progressively denoised and integrated into the existing image contents from steps through . To keep the UI simple and reduce the number of user-controlled parameters, we fix the value of in our implementation. Preliminary tests suggested that the value of works well for many applications.

Finally, there is separate logic that tracks all the layers and their corresponding parameters, which is triggered whenever a new layer is created or if the user switches between the layers. Consequently, all the layers are created and stacked one by one, and the appropriate is provided to the Algorithm 2 to perform the edit.

When creating a new layer, the user specifies a mask . For each layer and the corresponding mask , the user specifies an integer (per 2), which is the total number of steps that the noise is introduced to the latent, and a tunable parameter to control the additive noise strength.

We show the overview of the core algorithm of the proposed method in 2 and also present the updated pseudocode for the denoising process:

3.2. User-Interface Design

Our goal with Layered Diffusion Brushes was to design a practical tool for artists and graphic designers to use. In particular, we aimed to design a set of controls that would be intuitive and flexible, without overwhelming users with excessive choices. Figure 3 provides an overview of the user interface. As illustrated in the figure, the UI comprises the following primary sections (each section highlighted with the corresponding number on the image):

a picture of a table containing different tasks for the user to work with

-

(1)

Model Section

-

•

This section in the UI enables users to load various model combinations, including pre-trained models, schedulers, and LoRA (Hu et al., 2021).

-

•

-

(2)

Generation section

-

•

This section allows users to either generate a new image using a seed and prompt combination, or upload a real image that will be inverted.

-

•

-

(3)

Image Canvas

-

•

This canvas serves as the workspace where users interact with and make edits to images.

-

•

-

(4)

Editing Section

-

•

This section empowers users to create and access different layers and adjust various controls to make desired edits.

-

•

Two types of interactions are provided for performing edits, as shown in Figure 4:

Box Mode: Users can click or drag on the image to move a resizable square mask around the image. This option enables users to quickly and interactively explore how various parts of the image will change in response to a given set of editing settings (prompt and strength), simply by moving the cursor.

Custom Mask Mode: Users can draw the mask in any shape they like using a resizable paintbrush, clicking and dragging to add to the mask. Users can navigate between new generation samples by scrolling the mouse up or down while hovering over the image, allowing them to rapidly explore variations on their edit.

We envision that users could use the box option first to choose a good location to place their edit and refine the prompt/strength settings, followed by the custom mask to refine the shape and appearance of the edit.

Proper utilization of the layering capability enables the tool to achieve more complex effects: users can stack, hide and unhide layers and adjust the strength of each layer. Furthermore, each layer can be edited independently without substantially affecting the appearance of edits in higher or lower layers.

3.3. Hyperparameters

Here are the main hyperparameters used in the Layered Diffusion Brushes editing method:

-

•

Number of regeneration steps (): An integer value that specifies the number of steps that the Layered Diffusion Brushes will run to make the edit

-

•

Brush Strength (): A float number that corresponds to the value in equation (1) which controls how strong the noise should be applied at the targeted region. Initial , specified by the user has a value between 0 and 100, which will be scaled later on in the algorithm using the following equation:

(1) where and are the new noise latent and latent for regeneration respectively (as notes on …), is the acceptable range for the variance of the (we used =0.25), is the corresponding mask, and is the width of the (W=512)

-

•

Seed Number (): An integer number that will be used for generating the Gaussian noise pattern in the specified region. As with normal image generation, the UI provides buttons to randomize the seed or reuse the previous seed. Moving the box around (in box mode) or using the scroll wheel (in custom mask mode) will adjust the seed automatically.

-

•

Brush Size : An integer value that dictates the radius of the box when utilized in box mode, or the size of the brush in custom mask mode. The formulation of in Equation 1 aims to mitigate the impact of varying brush sizes on the quality of edits by considering the number masked pixels and adjusting accordingly.

The number of edit steps () and the brush hardness () parameters jointly control the magnitude of the intermediate additive noise and ultimately the amount of applied change to the latent image. Higher values for the brush hardness will result in a bigger change. Figure 5 (top row) shows the effect of a wide range of different hardness values while keeping other parameters the same. As shown, if the mask strength is too high, the LDM cannot fully denoise, and the additive noise causes artifacts. Conversely, if the value is too small, Layered Diffusion Brushes will not be able to make sufficient adjustments. We use a generalized version of strength in order to make the parameter more intuitive, as discussed above.

Similarly, we tested the effect of using different values while keeping the rest of the parameters stationary. As demonstrated, if the editing is run for fewer steps (lower ) , the LDM cannot recover from it and will produce artifacts, while if has a higher value, the area inside the mask will be denoised too much and cannot properly blend well with the rest of the image.

Additionally, there is a correlation between the two parameters. When the noise is introduced in later stages of diffusion (higher value of ), the model has less time to recover from the additive noise. Therefore, it is recommended to adjust the value to a higher number. Conversely, if the noise is introduced in the early stages of diffusion, and if the value of is not large enough, the additive noise will be dissolved into the input image latent and several stages of diffusion will eliminate its effect. Therefore, if is small, the value should be larger to achieve optimal results.

, ,

, ,

,

,

,

,

,

,

,

,

image with mask

image with mask

,

,

,

,

,

,

,

,

,

\DescriptionA 6 by 2 photo grid illustrating the effect of each of the parameters in the Layered Diffusion Brushes. The input image is a photo of hot balloons racing (Row 1, column 1). A mask is drawn on one of the balloons in the input image for fine-tuning (Row 2, column 1). On the rest of the images, different parameters result in different edited images. Row 1 shows the effect of using different values for alpha while n is fixed. When the alpha is too small, the changes are too minimal and not visible (Row 1, column 2). Also, a very large alpha creates artifacts on the final image (Row 1, column 6). Row 2 shows the effect of using different values for n while keeping alpha fixed. When n is too small, the changes are too minimal and not visible (Row 2, column 2), while a very large n results in artifacts on the final image (Row 2, column 6).

,

\DescriptionA 6 by 2 photo grid illustrating the effect of each of the parameters in the Layered Diffusion Brushes. The input image is a photo of hot balloons racing (Row 1, column 1). A mask is drawn on one of the balloons in the input image for fine-tuning (Row 2, column 1). On the rest of the images, different parameters result in different edited images. Row 1 shows the effect of using different values for alpha while n is fixed. When the alpha is too small, the changes are too minimal and not visible (Row 1, column 2). Also, a very large alpha creates artifacts on the final image (Row 1, column 6). Row 2 shows the effect of using different values for n while keeping alpha fixed. When n is too small, the changes are too minimal and not visible (Row 2, column 2), while a very large n results in artifacts on the final image (Row 2, column 6).

3.4. User Study

We conducted a user study in order to evaluate the effectiveness of Layered Diffusion Brushes for providing targeted image fine-tuning, in comparison with InstructPix2Pix and the SD-Inpainting methods.

3.4.1. Participants

We recruited a cohort of seven expert participants with extensive experience in using image editing software. The group comprised four females and three males, with an average age of 30.4 years. As part of our participant selection criteria, we ensured that all participants possessed at least a basic level of familiarity with AI image generation techniques and were regular users of editing software such as Adobe Photoshop for creating visual art. Two participants were proficient in image generative models and Stable Diffusion, while the remaining five were graphic design students who used Adobe Photoshop and Illustrator on a daily basis.

3.4.2. Study Procedure and Task Description

The study was conducted remotely; participants were provided a link to access the tool. Each user engaged in two types of tasks: free-form tasks where users generated an image for editing using a fixed prompt and seed (type 1), and pre-determined tasks where the user worked with existing real images from the MagicBrush dataset (Zhang et al., 2024) (type 2).

The study started with a brief introduction to each of the methods. Following this, participants received a short tutorial on how to navigate the user interface (UI). Subsequently, they were provided with a 5-minute window to explore the various options and sections of the tool, becoming familiar with the use of each section.

A dedicated task section was incorporated into the user interface (UI) specifically for the user study. Each type of task comprised three rounds of edits using the three methods: Layered Diffusion Brushes, InstructPix2Pix, and SD-Inpainting.

Each user was assigned a unique user ID, and tasks were randomly selected and pre-assigned to users. Throughout the study, users interacted with the task table to load, select, and save each task. An example of the task section is illustrated in Figure 6.

a picture of a table containing different tasks for the user to work with

For the type 1 tasks, we selected specific types of edits that showcase various functionalities and capabilities of the system. Here are the description of edit types along with an example used during the user study:

-

(1)

Stack layers and create sequential edits (draw with Layered Diffusion Brushes:

-

•

Input image: photo of a beautiful beach.

-

•

Layer 1: boat (Introduce a boat in the sea)

-

•

Layer 2: rocks (Scatter weathered rocks along the shoreline)

-

•

Layer 3: birds (Populate the sky above the boat with a flock of birds.

-

•

-

(2)

Modify attributes and features of objects:

-

•

Input image: portrait of a young man

-

•

Layer 1: blond (Transform a person’s hair color to blond).

-

•

Layer 2: joker (Perform facial manipulation by swapping one person’s face with another’s, reshaping identities.)

-

•

-

(3)

Correct image imperfections and errors:

-

•

Input image: portrait of a man holding an umbrella

-

•

Layer 1: remove the rod that is mistakenly placed

-

•

Lyaer 2: fix the extra part on the side of the coat

-

•

-

(4)

Enhance discernibility of similar objects through modification:

-

•

Input image: aerial photo of a pool table with balls

-

•

Layer 1: change the colour of s specific ball (third ball from the left) to red

-

•

-

(5)

Target specific regions for style transfer, refining aesthetics:

-

•

Input image: Mona Lisa by Leonardo da Vinci

-

•

Layer 1: make the left part of the background similar to van gogh stary night style.

-

•

In our study design, we strategically chose the combination of seeds and prompts to encompass and evaluate these functionalities. Each user was given three seed-prompt items and tasked with creating and editing up to three layers of edits. For the majority of the tasks, , i.e. the total number of steps for image generation was set to . All the images were generated using Dreamshaper-7 (Civitai, 2024) and DDIM Scheduler.

For the Layered Diffusion Brushes method, users started by selecting a layer with an existing edit instruction from the task table, then created the corresponding layer in the UI. They had the option of choosing either the box option or the custom mask option. The task was followed by drawing the mask, tweaking the controls or edit prompt if needed, and completing the edit. Once the task was complete, the user saved the edit and moves on to the next task.

Users followed a similar procedure for the InstructPix2Pix and SD-Inpainting methods, with the exception of creating layers, as these methods do not incorporate layering capabilities. After completing each layer edit task, users saved the edits, and the user interface (UI) stacked subsequent edits onto the edited image. For the InstructPix2Pix method, users were required to write the instruction prompt and then adjust the image and text guidance scales and regeneration steps to finalize the edit. On the other hand, for the SD-Inpainting method, users drew a mask and controlled the edit using the strength control. Completion times for each task were recorded for both methods.

Type 2 tasks, corresponding to the MagicBrush dataset (Zhang et al., 2024), were more structured, with the mask, edit prompt, and input images provided by the dataset. MagicBrush utilized crowd workers to collect manual edits using DALL-E 2 (Ramesh et al., 2022). This process involved 5,313 editing sessions and 10,388 editing iterations, resulting in a robust benchmark for instructional image editing. Additionally, the dataset provides manually annotated masks and instructions for each edit. We selected a subset of 35 input images, each containing up to three layers of edits. Users selected each image, started with the provided mask, could modify the mask if necessary, adjusted the control parameters and prompt, and saved and completed the task for each method. 7 provides examples of generated images for each of the methods during the user study.

“What if there was no plant life along the building” / “no plants”

“”Replace the wooden figure with a barbie doll.” / “parking meter with barbie doll on top”

“”Replace the wooden figure with a barbie doll.” / “parking meter with barbie doll on top”

“Have the woman wear a hat.” / “woman wearing a hat”

“Have the woman wear a hat.” / “woman wearing a hat”

InstructPix2Pix

InstructPix2Pix

SD-Inpainting

SD-Inpainting

Layered Diffusion

Layered Diffusion

Brushes (Ours)

MagicBrush (dataset ground truth)

MagicBrush (dataset ground truth)

A 6 by 2 photo grid illustrating the effect of each of the parameters in the Layered Diffusion Brushes. The input image is a photo of hot balloons racing (Row 1, column 1). A mask is drawn on one of the balloons in the input image for fine-tuning (Row 2, column 1). On the rest of the images, different parameters result in different edited images. Row 1 shows the effect of using different values for alpha while n is fixed. When the alpha is too small, the changes are too minimal and not visible (Row 1, column 2). Also, a very large alpha creates artifacts on the final image (Row 1, column 6). Row 2 shows the effect of using different values for n while keeping alpha fixed. When n is too small, the changes are too minimal and not visible (Row 2, column 2). Also a very large n results in artifacts on the final image (Row 2, column 6).

3.4.3. Evaluation Survey

After completing the image editing tasks, the participants were asked to complete a three stage evaluation survey. The first part included a System Usability Scale (SUS) form to rate the usability, ease of use, design, and performance of each method. SUS is a standard usability evaluation survey which is widely used in user-experience literature (Brooke, 1996). The participants were presented with 10 questions about each of the methods and were asked to rate each system on a scale of 1 to 5 for each question. A rating of 1 indicated strong disagreement, while a rating of 5 indicated strong agreement. The questions were designed to assess the participants’ perceptions of the effectiveness, ease of use, and overall user experience of each tool.

-

I think that I would like to use this tool frequently.

-

I found the tool unnecessarily complex.

-

I thought the tool was easy to use.

-

I think that I would need the support of a technical person to be able to use this tool.

-

I found the various functions in this tool were well integrated.

-

I thought there was too much inconsistency in this tool.

-

I would imagine that most people would learn to use this tool very quickly.

-

I found the tool very cumbersome to use.

-

I felt very confident using the tool.

-

I needed to learn a lot of things before I could get going with this tool.

SUS consists of positive and negative phrasing questions. Q2, 4, 6, 8, and 10 are negatively framed, therefore on the chart, red colours means better SUS score and Q1, 3, 5, 7, and 9 are considered positively framed and hence, more green colours demonstrate better score.

The SUS survey was followed by a creativity support index (CSI) questionnaire (Cherry and Latulipe, 2014), excluding questions about collaboration as it is not relevant for our tool. The CSI measures dimensions of Exploration, Expressiveness, Immersion, Enjoyment, and Results Worth Effort in a tool. CSI helps in understanding how well Layered Diffusion Brushes support creative work overall, as well as pointing out which aspects of creativity support may need attention.

The survey was followed by an interview with each participant to gather specific feedback and insights based on their artistic background and experience using the different tools. These processes provided valuable information on the strengths and weaknesses of each tool, as well as how it can be improved to better serve users.

The following multiple-choice questions were also asked for evaluating the performance of each method:

-

•

How much time did it take you to complete the image editing task using the tool you used in this study? [Much less time/About the same/Much more time]

-

•

How did you find each of the tools in terms of effectiveness in achieving the desired edits? [Very effective/Somewhat effective/Neutral/Somewhat ineffective/Very ineffective]

-

•

How does each of the tools you used perform in terms of time to complete the editing task? [Much faster/Somewhat faster/Acceptable/Somewhat slower/Much slower]

-

•

How likely are you to use each of these tools as an AI image editing tool in the future? [Very likely/Somewhat likely/Neutral/Somewhat unlikely/Very unlikely]

The entire study, including filling out the evaluation surveys, took not more than 90 minutes.

4. Results

Figure 7 presents the outcomes of utilizing different editing techniques employed in this work on the MagicBrush dataset for a single-layer edit. During the study, users performed up to three layers of edits on a single image, depending on the task. For each method, all the images in columns 3-5 were generated by the participants during the user study. The input images showcased in column 1 are the MagicBrush provided input images, and Column 2, i.e., the image with a mask, represents the provided MagicBrush mask region. Users had the option to modify the existing mask or update the edit prompt/instruction and tune parameters to achieve optimal results. As demonstrated, the Layered Diffusion Brushes method is able to effectively make targeted adjustments to the image, which are well-integrated with the image.

4.1. Evaluation Survey results

4.1.1. System Usability Scale (SUS)

Figure 11 presents the results of the SUS survey among participants after using the Layered Diffusion Brushes, SD-Inpainting, and InstructPix2Pix. Based on the bar charts, participants indicated that they are more likely to use Layered Diffusion Brushes compared to InstructPix2Pix and SD-Inpainting, and that they find it the easiest tool to use. In addition, participants in Q4 expressed that they would not require technical assistance to use the system in the future, indicating its overall good design. These findings were further supported by the interview feedback. For example, when asked about their understanding of the different parameters in the tool, one participant stated: “I believe that I understand the functionality of each parameter. I need to increase the mask strength value if I want to make bigger changes. The tool is quite intuitive and easy to use, and I think I can easily use it without needing any technical support.” This feedback highlights that the tool has a user-friendly design and can be easily understood and used by a wide range of users. Based on the survey results, the SUS score for diffusion brush is obtained as 80.35%, while InstructPix2PiX and SD-Inpainting achieve a score of 38.21% and 37.5% respectively.

A stacked bar chart showing the SUS survey responses of Q1 to Q10 for Photoshop. For odd questions, green colors show more desirable feedback. Even questions are designed with negative wording and more red colors show more favorable feedback.

4.2. Creativity Support Index results

Figure 12 illustrates the outcomes of the post-study CSI survey. Overall, participants expressed positivity towards Layered Diffusion Brushes, indicating that it enhanced their enjoyment, exploration, expressiveness, and immersion, while also deeming the results worth their effort.

5. Discussion and Future Work

As mentioned in Section 1, we initially developed an earlier version of Layered Diffusion Brushes with the objective of re-randomizing targeted regions for fine-tuning (e.g. fixing small details that were generated incorrectly). Subsequently, we conducted a user study to assess its usability and features. In the first user study, we compared the early version of Diffusion Brush with Inpainting and manual editing in Photoshop, involving five expert users.

While the majority of participants acknowledged that Diffusion Brush was faster than manual editing, some participants suggested that even faster editing would be significantly beneficial, aiding in random idea generation for artists. To address this feedback, we incorporated a caching mechanism, as explained in Algorithm 1, designed an efficient frontend to communicate with the machine learning backbone, and highly optimized the overall pipeline, achieving as little as 140 ms of inference time for a single edit on a high-end consumer GPU.

Furthermore, a few users struggled with finding the optimal brush strength control, a similar challenge observed in SD-inpainting as well. To address this, we devised a more generalized approach. While the earlier version of our system also supported multiple masks, these masks were not fully independent, and deleting or hiding them was not possible without performing operations in a specific order. This observation prompted the creation of a more streamlined and flexible mask management system.

Additionally, insights gathered from the first round of interviews indicated the need for further improvement in various aspects of the tool’s functionality and user experience. These inputs guided us in refining the tool and enhancing its usability for a wider range of users. Lastly, in the first user study, three participants specifically mentioned this feedback. One participant stated, “I really like the tool as it is right now; it certainly provides value for me in my editing tasks and makes my life easier. But one feature that I would love to see is to be able to tell the system how to make these changes. I still want to use the masking editing, but if I can tell it what to do it would be great.”. Based on the findings of the new user study, it is evident that this feature has been well-implemented into the system. All users participating in the current study affirmed the effectiveness of this feature.

During the user study, one of the most common comments regarding the usability of different methods was that users found it challenging to find the optimal settings for InstructPix2Pix and SD-inpainting. For example, one user mentioned, “In InstructPix2Pix, increasing the image guidance scale often distorts the edited image too much, and if the text guidance scale is too high, the edited image looks completely different. After many trials and errors, when I find a good combination, the next image behaves differently. Also, SD-inpainting half the times fails to produce a satisfactory result.”

Another user, who is an expert in graphic design, suggested, “Layers are very helpful. I would like to see the control numbers on top of them as I change them, not beside them. Also, having an undo button is crucial and would be very helpful. Additionally, I would suggest adding a blend option to each layer, similar to Photoshop” These suggestions will be taken into consideration for future improvements.

The CSI score results revealed that one participant responded neutrally or negatively to certain aspects, likely due to their being accustomed to the Photoshop tool. Furthermore, there was notable variability in immersion scores, with several participants giving lower ratings. This variability suggests that while some users felt deeply engaged with the tool, others may have encountered challenges or distractions affecting their immersive experience. Analyzing specific factors such as interface design, task complexity, and user preferences could offer insights into enhancing immersion in future iterations of Layered Diffusion Brushes. Despite this variability, the majority of participants found the tool effective and engaging, highlighting its potential usefulness in creative workflows.

Diffusion Brush offers the unique advantage of generating an unlimited number of adjustments to an image. By setting a mask and fixing the hyperparameters within a layer, users can experiment with various random generator settings to create a vast array of localized changes. This potential for adjustment and rapid exploration was highly praised by artists who used the system during the user study. They highlighted this feature as one of the major strengths of the system and appreciated the ability to explore a variety of possibilities for their work. This sets the Diffusion Brush apart from traditional editing software that often has limited options for localized adjustments.

Diffusion Brush was purposefully developed for a specific demographic who could ultimately utilize the tool for the refinement of AI-generated images. An essential consideration revolves around ensuring that the Diffusion Brush’s practicality aligns with the requirements of its intended user cohort. The SUS results demonstrated that the Diffusion Brush is more usable than the other methods, so we highlight that one of the core contributions of the proposed method is the enhancement of usability and controllability over prior methods. Additionally,

The users appreciated the real-time functionality of the tool and its ability to provide instant results. As expressed by one user, “I think out of the three, diffusion brush has the most potential. I really like the [box] option because, at least in terms of producing results, I appreciate not having to wait. In Adobe Photoshop and Illustrator, I remember using prompts and waiting a long time to get the desired result, whereas with diffusion brush, I could see the result instantly. In the other two methods, as well as Photoshop, I just have to wait for loading.”

6. Conclusion

In this paper, we introduced Layered Diffusion Brushes, a novel LDM-based tool designed for real-time layer-based refinement and editing of images. At its core, our method applies new random noise patterns within targeted regions during the reverse diffusion process, combining them with the intermediate input image latent and guided with edit prompts. This approach empowers the model to enact precise modifications while preserving the integrity of the rest of the image.

Layered Diffusion Brushes incorporates familiar image editing techniques and concepts, enabling users to efficiently harness the power of DMs by stacking multiple independent adjustments to different regions in images. Our formulation, coupled with distinctive design choices, renders it an ideal tool for both users and artists seeking enhanced creative control.

Furthermore, a comprehensive user study demonstrated the effectiveness of Layered Diffusion Brushes compared to other popular AI image editing tools, including InstaPix2Pix and SD-inpainting, in terms of usability and support for creativity. We anticipate that this tool holds potential implications for enhancing creative workflows and versatility in AI-based image editing and related tasks, such as object attribute adjustments, error correction, style mixing, prompt-driven object placement, and manipulation. We are excited to see how this tool will be utilized in practice.

References

- (1)

- Abdal et al. (2021) Rameen Abdal, Peihao Zhu, Niloy J Mitra, and Peter Wonka. 2021. Styleflow: Attribute-conditioned exploration of stylegan-generated images using conditional continuous normalizing flows. ACM Transactions on Graphics (ToG) 40, 3 (2021), 1–21.

- Avrahami et al. (2023) Omri Avrahami, Ohad Fried, and Dani Lischinski. 2023. Blended latent diffusion. ACM Transactions on Graphics (TOG) 42, 4 (2023), 1–11.

- Avrahami et al. (2022) Omri Avrahami, Dani Lischinski, and Ohad Fried. 2022. Blended diffusion for text-driven editing of natural images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 18208–18218.

- Bar-Tal et al. (2022) Omer Bar-Tal, Dolev Ofri-Amar, Rafail Fridman, Yoni Kasten, and Tali Dekel. 2022. Text2live: Text-driven layered image and video editing. In Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XV. Springer, 707–723.

- Brooke (1996) John Brooke. 1996. SUS-A Quick and Dirty Usability Scale. Usability evaluation in industry 189, 194 (1996).

- Brooks et al. (2022) Tim Brooks, Aleksander Holynski, and Alexei A Efros. 2022. Instructpix2pix: Learning to follow image editing instructions. arXiv preprint arXiv:2211.09800 (2022).

- Cherry and Latulipe (2014) Erin Cherry and Celine Latulipe. 2014. Quantifying the creativity support of digital tools through the creativity support index. ACM Transactions on Computer-Human Interaction (TOCHI) 21, 4 (2014), 1–25.

- Choi et al. (2021) Jooyoung Choi, Sungwon Kim, Yonghyun Jeong, Youngjune Gwon, and Sungroh Yoon. 2021. Ilvr: Conditioning method for denoising diffusion probabilistic models. arXiv preprint arXiv:2108.02938 (2021).

- Civitai (2024) Civitai. 2024. Dreamshaper - 8 — Stable Diffusion Checkpoint. https://civitai.com/models/4384/dreamshaper Accessed on Feb 19, 2024.

- Couairon et al. (2022) Guillaume Couairon, Jakob Verbeek, Holger Schwenk, and Matthieu Cord. 2022. Diffedit: Diffusion-based semantic image editing with mask guidance. arXiv preprint arXiv:2210.11427 (2022).

- Gal et al. (2022) Rinon Gal, Yuval Alaluf, Yuval Atzmon, Or Patashnik, Amit H Bermano, Gal Chechik, and Daniel Cohen-Or. 2022. An image is worth one word: Personalizing text-to-image generation using textual inversion. arXiv preprint arXiv:2208.01618 (2022).

- Geng et al. (2023) Zigang Geng, Binxin Yang, Tiankai Hang, Chen Li, Shuyang Gu, Ting Zhang, Jianmin Bao, Zheng Zhang, Han Hu, Dong Chen, et al. 2023. Instructdiffusion: A generalist modeling interface for vision tasks. arXiv preprint arXiv:2309.03895 (2023).

- Guo and Lin (2023) Qin Guo and Tianwei Lin. 2023. Focus on Your Instruction: Fine-grained and Multi-instruction Image Editing by Attention Modulation. arXiv preprint arXiv:2312.10113 (2023).

- Hertz et al. (2022) Amir Hertz, Ron Mokady, Jay Tenenbaum, Kfir Aberman, Yael Pritch, and Daniel Cohen-Or. 2022. Prompt-to-prompt image editing with cross attention control. arXiv preprint arXiv:2208.01626 (2022).

- Ho et al. (2020) Jonathan Ho, Ajay Jain, and Pieter Abbeel. 2020. Denoising diffusion probabilistic models. Advances in neural information processing systems 33 (2020), 6840–6851.

- Ho et al. (2022) Jonathan Ho, Chitwan Saharia, William Chan, David J Fleet, Mohammad Norouzi, and Tim Salimans. 2022. Cascaded Diffusion Models for High Fidelity Image Generation. J. Mach. Learn. Res. 23, 47 (2022), 1–33.

- Hu et al. (2021) Edward J Hu, Yelong Shen, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, Lu Wang, and Weizhu Chen. 2021. Lora: Low-rank adaptation of large language models. arXiv preprint arXiv:2106.09685 (2021).

- Hu et al. (2022) Minghui Hu, Yujie Wang, Tat-Jen Cham, Jianfei Yang, and Ponnuthurai N Suganthan. 2022. Global context with discrete diffusion in vector quantised modelling for image generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 11502–11511.

- Huang et al. (2024) Yi Huang, Jiancheng Huang, Yifan Liu, Mingfu Yan, Jiaxi Lv, Jianzhuang Liu, Wei Xiong, He Zhang, Shifeng Chen, and Liangliang Cao. 2024. Diffusion Model-Based Image Editing: A Survey. arXiv preprint arXiv:2402.17525 (2024).

- Ju et al. (2023) Xuan Ju, Ailing Zeng, Yuxuan Bian, Shaoteng Liu, and Qiang Xu. 2023. Direct inversion: Boosting diffusion-based editing with 3 lines of code. arXiv preprint arXiv:2310.01506 (2023).

- Kim et al. (2022) Gwanghyun Kim, Taesung Kwon, and Jong Chul Ye. 2022. Diffusionclip: Text-guided diffusion models for robust image manipulation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2426–2435.

- Lang et al. (2021) Oran Lang, Yossi Gandelsman, Michal Yarom, Yoav Wald, Gal Elidan, Avinatan Hassidim, William T Freeman, Phillip Isola, Amir Globerson, Michal Irani, et al. 2021. Explaining in style: Training a gan to explain a classifier in stylespace. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 693–702.

- Lugmayr et al. (2022) Andreas Lugmayr, Martin Danelljan, Andres Romero, Fisher Yu, Radu Timofte, and Luc Van Gool. 2022. Repaint: Inpainting using denoising diffusion probabilistic models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 11461–11471.

- Miyake et al. (2023) Daiki Miyake, Akihiro Iohara, Yu Saito, and Toshiyuki Tanaka. 2023. Negative-prompt inversion: Fast image inversion for editing with text-guided diffusion models. arXiv preprint arXiv:2305.16807 (2023).

- Mokady et al. (2023) Ron Mokady, Amir Hertz, Kfir Aberman, Yael Pritch, and Daniel Cohen-Or. 2023. Null-text inversion for editing real images using guided diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 6038–6047.

- Pan et al. (2023) Xingang Pan, Ayush Tewari, Thomas Leimkühler, Lingjie Liu, Abhimitra Meka, and Christian Theobalt. 2023. Drag your gan: Interactive point-based manipulation on the generative image manifold. In ACM SIGGRAPH 2023 Conference Proceedings. 1–11.

- Parmar et al. (2023) Gaurav Parmar, Krishna Kumar Singh, Richard Zhang, Yijun Li, Jingwan Lu, and Jun-Yan Zhu. 2023. Zero-shot Image-to-Image Translation. In ACM SIGGRAPH 2023 Conference Proceedings (¡conf-loc¿, ¡city¿Los Angeles¡/city¿, ¡state¿CA¡/state¿, ¡country¿USA¡/country¿, ¡/conf-loc¿) (SIGGRAPH ’23). Association for Computing Machinery, New York, NY, USA, Article 11, 11 pages. https://doi.org/10.1145/3588432.3591513

- Porter and Duff (1984) Thomas Porter and Tom Duff. 1984. Compositing digital images. In Proceedings of the 11th annual conference on Computer graphics and interactive techniques. 253–259.

- Ramesh et al. (2022) Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen. 2022. Hierarchical text-conditional image generation with clip latents. arXiv preprint arXiv:2204.06125 1, 2 (2022), 3.

- Richardson et al. (2021) Elad Richardson, Yuval Alaluf, Or Patashnik, Yotam Nitzan, Yaniv Azar, Stav Shapiro, and Daniel Cohen-Or. 2021. Encoding in style: a stylegan encoder for image-to-image translation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2287–2296.

- Rombach et al. (2022) Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. 2022. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 10684–10695.

- Ruiz et al. (2023) Nataniel Ruiz, Yuanzhen Li, Varun Jampani, Yael Pritch, Michael Rubinstein, and Kfir Aberman. 2023. DreamBooth: Fine Tuning Text-to-Image Diffusion Models for Subject-Driven Generation. arXiv:2208.12242 [cs.CV]

- Saharia et al. (2022a) Chitwan Saharia, William Chan, Huiwen Chang, Chris Lee, Jonathan Ho, Tim Salimans, David Fleet, and Mohammad Norouzi. 2022a. Palette: Image-to-image diffusion models. In ACM SIGGRAPH 2022 Conference Proceedings. 1–10.

- Saharia et al. (2022b) Chitwan Saharia, William Chan, Saurabh Saxena, Lala Li, Jay Whang, Emily L Denton, Kamyar Ghasemipour, Raphael Gontijo Lopes, Burcu Karagol Ayan, Tim Salimans, et al. 2022b. Photorealistic text-to-image diffusion models with deep language understanding. Advances in neural information processing systems 35 (2022), 36479–36494.

- Saharia et al. (2022c) Chitwan Saharia, Jonathan Ho, William Chan, Tim Salimans, David J Fleet, and Mohammad Norouzi. 2022c. Image super-resolution via iterative refinement. IEEE Transactions on Pattern Analysis and Machine Intelligence (2022).

- Sarukkai et al. (2024) Vishnu Sarukkai, Linden Li, Arden Ma, Christopher Ré, and Kayvon Fatahalian. 2024. Collage Diffusion. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV). 4208–4217.

- Wang et al. (2020) Lei Wang, Wei Chen, Wenjia Yang, Fangming Bi, and Fei Richard Yu. 2020. A state-of-the-art review on image synthesis with generative adversarial networks. IEEE Access 8 (2020), 63514–63537.

- Yu et al. (2023) Tao Yu, Runseng Feng, Ruoyu Feng, Jinming Liu, Xin Jin, Wenjun Zeng, and Zhibo Chen. 2023. Inpaint anything: Segment anything meets image inpainting. arXiv preprint arXiv:2304.06790 (2023).

- Zhang et al. (2023) Chenshuang Zhang, Chaoning Zhang, Mengchun Zhang, and In So Kweon. 2023. Text-to-image Diffusion Model in Generative AI: A Survey. arXiv preprint arXiv:2303.07909 (2023).

- Zhang et al. (2024) Kai Zhang, Lingbo Mo, Wenhu Chen, Huan Sun, and Yu Su. 2024. Magicbrush: A manually annotated dataset for instruction-guided image editing. Advances in Neural Information Processing Systems 36 (2024).