Stochastic Three-Operator Splitting Algorithms for Nonconvex and Nonsmooth Optimization Arising from FLASH Radiotherapy

Abstract

Radiation therapy (RT) aims to deliver tumoricidal doses with minimal radiation-induced normal-tissue toxicity. Compared to conventional RT (of conventional dose rate), FLASH-RT (of ultra-high dose rate) can provide additional normal tissue sparing, which however has created a new nonconvex and nonsmooth optimization problem that is highly challenging to solve. In this paper, we propose a stochastic three-operator splitting (STOS) algorithm to address the FLASH optimization problem. We establish the convergence and convergence rates of the STOS algorithm under the nonconvex framework for both unbiased gradient estimators and variance-reduced gradient estimators. These stochastic gradient estimators include the most popular ones, such as SGD, SAGA, SARAH, and SVRG, among others. The effectiveness of the STOS algorithm is validated using FLASH radiotherapy planning for patients.

Key words. Nonconvex optimization, stochastic three-operator splitting, unbiased stochastic gradient, variance-reduced stochastic gradient, FLASH radiotherapy

AMS subject classifications. 90C06, 90C15, 90C26, 90C90

1 Introduction

1.1 Background

Radiation therapy (RT) has long been a cornerstone of cancer treatment. With the continuous advancement of medical technology, various radiation therapy techniques have become available, such as IMPT and PBS [54, 18, 27]. Although these techniques have been widely adopted, complete eradication of cancerous tissue still depends on the delivered dose, which is limited by the risk of severe radiation-induced side effects. A new technique called FLASH radiotherapy (FLASH-RT) has emerged. FLASH-RT delivers ultra-high dose rates, several orders of magnitude higher than conventional therapy [34]. Clinical data [34, 52] indicate that FLASH-RT can achieve better control with fewer side effects, primarily because irradiating tissues at ultra-high dose rates (at least 40 Gy/s) potentially reduces normal-tissue toxicity while retaining the tumor-killing effect. Proton irradiation can achieve this ultra-high dose rate: the unique depth-dose characteristics of proton beams allow ultra-high dose rates to be delivered to deep tissues [37, 8]. Additionally, clinical data [48, 34] have demonstrated that FLASH proton radiotherapy, owing to its superior immune response capabilities, has become an important treatment modality for certain acute and advanced cancers.

While FLASH proton radiotherapy may become the primary radiotherapy method for certain tumors, current research on FLASH proton radiotherapy planning has mainly focused on optimizing the dose distribution. However, each spot is constrained not only in dose distribution but also in dose rate distribution, which highlights the importance of a new treatment optimization method, FLASH proton radiotherapy with dose and dose rate optimization [19, 55, 14]. Mathematically, it can be formulated as the following constrained nonconvex optimization problem:

| (1.1) |

where is the spot weight to be optimized, is the dose objective, represents the forward system matrix, is a block matrix composed of the forward matrix that only maps to the dose in the ROI, is a vector associated with DVH constraints, and represents the planning monitor unit. Here exhibits a large-scale summation structure, representing the sum of planning objectives. See Section 4 for more details. Currently, most research on solving (1.1) applies a nonstandard alternating direction method of multipliers (ADMM) to a convex relaxation of the problem. A classical technique for constrained optimization is to move the constraints into the objective via indicator functions, which yields a sum of several terms. Here, we employ this technique to equivalently transform (1.1) into the following large-scale nonconvex optimization problem:

| (1.2) |

where , the set . This transformation allows us to apply classical splitting algorithms to solve (1.2). The three-operator splitting algorithm [6] is an efficient algorithm for solving this kind of composite optimization. However, in our case, has a large-scale summation structure, and computing can be computationally expensive, reducing the efficiency of the method. This prompts us to propose a novel stochastic three-operator splitting algorithm and motivates the research presented below.

1.2 Model and Algorithm

Driven by the FLASH proton radiotherapy problem (1.2) and to broaden the applicability of our research, we consider the following general optimization problem:

| (1.3) |

where and are proper lower semi-continuous functions. We do not assume convexity for , , or throughout this paper. Corresponding to the FLASH proton radiotherapy problem (1.2), we know with a large-scale summation structure, while and correspond to indicator functions of two constraint sets. Problem (1.3) not only corresponds to the FLASH proton radiotherapy problem but also captures many other applications, particularly in signal/image processing and computer vision, that involve multiple regularization terms. For instance, deep learning-based models in image processing often include both sparsity regularization terms and neural network-based regularization terms [31]. Non-negative low-rank matrix decompositions have both low-rank and non-negativity constraints [29, 40, 33]. In compressive sensing, a highly significant type of regularization known as L1-L2 sparse regularization allows the compressive sensing problem to be formulated as a three-term composite nonconvex optimization problem [57, 43]. In robust principal component analysis (RPCA), the optimization problem includes constraints not only on the data term but also on the low-rank factor and sparse factor, which can be formulated as a three-term composite minimization [17]. Operator splitting is an important method for solving composite optimization problems of type (1.3). Classic algorithms such as Forward-Backward Splitting (FBS) and Douglas-Rachford Splitting (DRS) can be employed when (1.3) . In [15], Attouch, Bolte, and Svaiter established the convergence of FBS for nonconvex and nonsmooth optimization under the assumption that is Lipschitz continuous. In [12], Li and Pong applied the DRS algorithm to nonconvex feasibility problems and established its convergence under similar assumptions.

The above splitting algorithms are not suitable for (1.3) because they require computing the proximal operator of in each iteration. Computing this operator is much more expensive or even infeasible compared to computing the proximal operators of and separately. To address this limitation, Davis and Yin [6] proposed a three-operator splitting algorithm for solving (1.3). In the three-operator splitting algorithm, each sub-problem involves solving the proximal operator of a single function, either or . When the proximal operators of and are easy to solve, the three-operator splitting algorithm becomes a simple and effective method. Davis and Yin [6] established the convergence of a three-operator splitting algorithm for convex optimization. Liu and Yin in [56] proved the convergence of the three-operator splitting method when is weakly convex, and and are twice continuously differentiable with bounded Hessians. In [9] Bian and Zhang established the global convergence of a three-operator splitting algorithm for solving nonconvex optimization problems and provided local convergence rates.

However, when solving large-scale problems, the three-operator splitting (TOS) algorithm can be inefficient because computing is time-consuming. To overcome this challenge, we propose a stochastic version of the TOS algorithm by replacing with its stochastic approximation. This leads to a new stochastic three-operator splitting (STOS) algorithm that allows for more efficient computations. We present STOS in Algorithm 1 below.

| (1.4a) | |||

| (1.4b) | |||

| (1.4c) | |||

Note that the STOS algorithm also extends several classical algorithms. When , Algorithm 1 becomes the stochastic Forward-Backward Splitting (FBS) algorithm. When , Algorithm 1 corresponds to the classical Douglas-Rachford Splitting (DRS) algorithm. When and , it becomes the stochastic gradient descent algorithm. Here, can be either an unbiased gradient estimator (such as SGD) or a variance-reduced gradient estimator (such as SAGA, SVRG, or SARAH).
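To make the structure of the iteration concrete, the following is a minimal sketch of a Davis-Yin-type three-operator step in which the exact gradient of the smooth term is replaced by a stochastic estimate. The ordering of the steps, the function names (`stos`, `prox_f`, `prox_g`, `grad_estimator`), the toy least-squares data, and the step size are all assumptions chosen for illustration; they are not taken from Algorithm 1 as printed in the paper.

```python
import numpy as np

def stos(prox_f, prox_g, grad_estimator, x0, gamma, n_iter, rng):
    """Davis-Yin-type three-operator iteration with a stochastic gradient (illustrative)."""
    x = np.array(x0, dtype=float)
    for _ in range(n_iter):
        y = prox_f(x, gamma)                         # proximal step on the first term
        v = grad_estimator(y, rng)                   # stochastic estimate of the smooth gradient
        z = prox_g(2.0 * y - x - gamma * v, gamma)   # proximal step on the second term
        x = x + (z - y)                              # update of the governing sequence
    return prox_f(x, gamma)

# Synthetic toy problem (hypothetical data):
#   minimize 0.5 * mean((A x - b)^2) + lam * ||x||_1   subject to x >= 0
rng = np.random.default_rng(0)
n, d, batch = 200, 20, 20
A = rng.standard_normal((n, d))
x_true = np.zeros(d)
x_true[:5] = 1.0
b = A @ x_true
lam = 0.01

def objective(x):
    return 0.5 * np.mean((A @ x - b) ** 2) + lam * np.abs(x).sum()

def prox_nonneg(x, gamma):
    # Projection onto the nonnegative orthant (prox of its indicator function).
    return np.maximum(x, 0.0)

def prox_l1(x, gamma):
    # Soft-thresholding, the prox of gamma * lam * ||.||_1.
    return np.sign(x) * np.maximum(np.abs(x) - gamma * lam, 0.0)

def sgd_estimator(x, rng):
    # Mini-batch gradient of the least-squares term, sampled without replacement.
    idx = rng.choice(n, size=batch, replace=False)
    return A[idx].T @ (A[idx] @ x - b[idx]) / batch

x_hat = stos(prox_nonneg, prox_l1, sgd_estimator, np.zeros(d),
             gamma=0.1, n_iter=500, rng=rng)
```

In this sketch, passing the identity map as `prox_f` reduces the loop to a stochastic forward-backward iteration, which mirrors the special cases described above.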

1.3 Related work

Stochastic splitting algorithms for solving large-scale nonconvex optimization problems have received extensive interest in the last two decades.

When in (1.3), the classical stochastic gradient descent algorithm [22] can solve this kind of problem. In [46], Ghadimi and Lan proposed the randomized stochastic gradient (RSG) method to solve (1.3) with and presented the first non-asymptotic convergence analysis of SGD. They demonstrated that, under the assumptions that is smooth and is an unbiased estimator with bounded variance, the RSG method achieves a solution satisfying with an iteration complexity of . Further, the stochastic gradient descent algorithm has also been combined with certain variance-reduced gradient estimators. For instance, Shamir [42] proposed nonconvex SVRG and considered the problem of computing several leading eigenvectors. Allen-Zhu and Hazan [59] also proposed SVRG, showing its linear convergence rate. Later, Reddi et al. [49] introduced the nonconvex SAGA method and demonstrated its linear convergence to the global optimal solution for a class of nonconvex optimization problems.

When in (1.3), this problem can be solved by the classical proximal gradient descent (PGD) algorithm. Ghadimi, Lan, and Zhang [47] introduced a randomized stochastic projected gradient (RSPG) algorithm to solve (1.3) with a constraint set when . It is worth noting that when the constraint set , the RSPG algorithm becomes the stochastic PGD algorithm. Further, the PGD algorithm combined with variance-reduced gradient estimators has also received a lot of attention. Reddi et al. [50] integrated proximal gradient descent with the variance-reduced estimators SAGA and SVRG, referred to as PROXSAGA and PROXSVRG, respectively. They showed that, under the assumptions that is nonsmooth convex and is smooth nonconvex, the number of proximal oracle calls needed to achieve an -accurate solution is . Pham et al. [41] combined proximal gradient descent with the SARAH stochastic estimator, referred to as ProxSARAH. Under the assumptions that is smooth nonconvex and is nonconvex nonsmooth, they demonstrated that ProxSARAH finds an -stationary point with complexity for having finite-sum structure.

The study of (1.3) with three-term composite structures is limited in the nonconvex stochastic setting. Metel and Takeda [38] proposed the stochastic proximal gradient method for solving (1.3) and showed that the iteration complexity is when the distance to the subdifferential mapping of loss is less than . Yurtsever, Mangalick, and Sra [3] proposed a stochastic version of a three-operator splitting algorithm to solve (1.3) by combining unbiased and bounded-variance stochastic gradient estimators, and showed that finding an -stationary point requires an iteration complexity of based on a variational inequality. Driggs et al. [7] proposed a stochastic proximal alternating linearization algorithm when is a cross-term in (1.3), which combines a class of variance-reduced stochastic gradient estimators. The algorithm finds a -stationary point with a complexity of . Further, for a class of large-scale three-block composite optimization problems in deep learning, Bian, Liu, and Zhang [11] propose a new stochastic three-block splitting algorithm for (1.3) with a cross-term.

1.4 Contributions

In this paper, we propose a stochastic three-operator splitting (STOS) algorithm to solve the nonconvex and nonsmooth problem (1.3). The main contributions of our paper can be summarized as follows:

1. We propose a stochastic three-operator splitting (STOS) algorithm for solving (1.3), which can be combined with two types of stochastic gradient estimators: unbiased estimators and variance-reduced estimators. In contrast to the stochastic TOS in [3], which considers only unbiased gradient estimators, our STOS (Algorithm 1) covers both types, including the most commonly used gradient estimators. Hence, an appropriate gradient estimator can be selected for each application.

2. When the unbiased gradient estimator is incorporated, we establish the convergence and obtain an convergence rate for the STOS algorithm under the variance-bounded assumption. When a variance-reduced gradient estimator is incorporated, we construct a stability function for the STOS algorithm. Furthermore, if the objective function is semi-algebraic, we obtain the convergence and convergence rate of STOS. Compared to the stochastic TOS in [3], our theoretical analysis does not require the convexity of and .

3. In the numerical experiments, we apply the STOS algorithm to FLASH proton radiotherapy with dose and dose rate optimization. All current research work uses nonstandard ADMM algorithms to solve a convex relaxation of this problem. In this paper, we solve the nonconvex problem directly using the stochastic three-operator splitting algorithm. To the best of our knowledge, this is the first time a stochastic algorithm has been adopted to solve the FLASH proton radiotherapy optimization problem. All test results show that STOS significantly outperforms the nonstandard ADMM algorithm.

Notations and paper organization. Before presenting the main content of the paper, we list some notations used throughout. We denote as the n-dimensional Euclidean space and as the inner product. The norm induced by the inner product is denoted as . An extended-real-valued function is proper if it is never and its domain, dom is nonempty. The function is closed if it is proper and lower semicontinuous. A point is a stationary point of a function if . is a critical point of if is differentiable at and . A function is coercive if . We call a -convex function if is a convex function. When , is a strongly convex function. When , is a weakly convex function. When , is a convex function.

2 Notation and preliminaries

This section provides the necessary notations and definitions for our paper. For STOS (Algorithm 1), two types of gradient estimators can be used: unbiased gradient estimators and variance-reduced gradient estimators. The definitions for these two types of gradient estimators are presented below.

2.1 Unbiased Gradient Estimator

A class of gradient estimators often used in stochastic algorithms is the class of unbiased gradient estimators, such as the classical stochastic gradient descent (SGD) estimator [21]. In this paper, we analyze STOS (Algorithm 1) combined with unbiased gradient estimators, which we define as follows.

Definition 2.1 (Unbiased Gradient Estimator).

The stochastic gradient estimator is unbiased if

Remark 2.2.

In many applications, the function has a finite-sum structure, such as in empirical risk minimization problems encountered in deep learning. In this case, randomness mainly arises from the way samples are drawn from the sum, and the unbiased gradient estimator can be expressed in the following unified form:

| (2.1) |

where is a sampling vector drawn from a certain distribution . The random variable can take different forms for different sampling methods. A representative sampling method is -nice sampling without replacement, which forms the stochastic gradient descent (SGD) estimator commonly used in deep learning.

Below we give the specific form of SGD. Other sampling methods can also produce an unbiased stochastic gradient, such as sampling with replacement and independent sampling without replacement. For more details, we refer readers to [2].

Definition 2.3 (SGD [21]).

The SGD estimator is defined as follows:

where is a random subset uniformly drawn from of fixed batch size .

Remark 2.4.

For the -nice sampling without replacement, let with for and otherwise, and in (2.1), resulting in SGD estimator.
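The unbiasedness of the mini-batch estimator under uniform sampling without replacement can be checked numerically on a small finite sum. The sketch below is only an illustration: the least-squares components, the sizes `n`, `d`, `b_size`, and the helper names are hypothetical and not taken from the paper.

```python
import itertools
import numpy as np

def minibatch_gradient(grad_i, x, batch):
    """Average of the component gradients over the sampled indices."""
    return sum(grad_i(i, x) for i in batch) / len(batch)

# Hypothetical finite sum: H(x) = (1/n) * sum_i 0.5 * (a_i . x - b_i)^2
rng = np.random.default_rng(0)
n, d, b_size = 5, 3, 2
A = rng.standard_normal((n, d))
b = rng.standard_normal(n)
x = rng.standard_normal(d)

def grad_i(i, x):
    return A[i] * (A[i] @ x - b[i])

full_gradient = A.T @ (A @ x - b) / n

# Uniform sampling without replacement gives every batch of size b_size the
# same probability, so the expectation is a plain average over all batches.
batches = list(itertools.combinations(range(n), b_size))
expected_estimate = sum(minibatch_gradient(grad_i, x, J) for J in batches) / len(batches)
```

Here `expected_estimate` is the exact expectation of the estimator over the sampling distribution, so comparing it with `full_gradient` tests unbiasedness without any Monte Carlo error.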

2.2 Variance-Reduced Gradient Estimator

Variance-reduced gradient estimators have been proposed and widely adopted in stochastic algorithms, such as SVRG (stochastic variance reduced gradient) [44], SAG (stochastic average gradient) [39], and SDCA (stochastic dual coordinate ascent) [51]. In [7], a unified definition is provided for a class of variance-reduced gradient estimators. In this paper, we analyze the combination of STOS (Algorithm 1) with variance-reduced gradient estimators of this class. For convenience, we recall the definition below.

Definition 2.5 (Variance-reduced Gradient Estimator [7, Definition 2.1]).

A gradient estimator is called variance-reduced if there exist constants and such that

1. (MSE Bound). There exists a sequence having random variables of the form for some random vectors such that

and, with

(2.2)

2. (Geometric Decay). The sequence decays geometrically:

(2.3)

3. (Convergence of Estimator). For all sequences satisfying it follows that and .

The variance-reduced gradient estimator defined in Definition 2.5 is widely used in many algorithms, such as SAGA [1], SARAH [35], SAG [39], and SVRG [30, 44]. It has been verified in [7, 10] that these four estimators are variance-reduced gradient estimators of this type. Below, we provide the definitions for these four estimators.

Definition 2.6 (SAGA [1]).

The SAGA gradient approximation is defined as follows:

where is a mini-batch containing indices. The variables follow the update rules if and otherwise.
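As an illustration of the stored-gradient mechanism, here is a minimal sketch of a mini-batch SAGA estimator in its commonly used form: the batch average of "new minus stored" component gradients plus the average of all stored gradients. The class name, the initialisation of the table at the starting point, and the toy least-squares data are assumptions made for this example.

```python
import numpy as np

class SagaEstimator:
    """Mini-batch SAGA gradient estimator with a table of stored gradients (illustrative)."""

    def __init__(self, grad_i, n, x0):
        self.grad_i = grad_i
        self.n = n
        # One stored gradient per component, initialised at x0 (an assumption).
        self.table = np.stack([grad_i(i, x0) for i in range(n)])
        self.table_mean = self.table.mean(axis=0)

    def __call__(self, x, batch):
        batch = list(batch)  # indices are assumed to be distinct
        new = np.stack([self.grad_i(j, x) for j in batch])
        old = self.table[batch]
        estimate = (new - old).mean(axis=0) + self.table_mean
        # Refresh the stored gradients of the sampled indices only.
        self.table_mean = self.table_mean + (new - old).sum(axis=0) / self.n
        self.table[batch] = new
        return estimate

# Hypothetical finite sum with least-squares components.
rng = np.random.default_rng(0)
n, d = 6, 3
A = rng.standard_normal((n, d))
b = rng.standard_normal(n)

def grad_i(i, x):
    return A[i] * (A[i] @ x - b[i])

def full_gradient(x):
    return A.T @ (A @ x - b) / n

x0, x1 = rng.standard_normal(d), rng.standard_normal(d)
saga = SagaEstimator(grad_i, n, x0)
g0 = saga(x0, [0, 1])   # the table was built at x0, so this equals the full gradient
g1 = saga(x1, [1, 3])   # afterwards only rows 1 and 3 hold gradients at x1
```

The storage cost of the table grows linearly with the number of components, which is the trade-off against SGD discussed in Remark 2.10.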

Definition 2.7 (SARAH [35]).

The SARAH estimator reads for as

For , define random variables with and , where is a fixed chosen parameter. Let be a random subset uniformly drawn from of fixed batch size . Then for the SARAH gradient approximation reads as
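The recursive structure described above can be sketched as follows: with probability 1/p the estimator restarts from a full gradient, and otherwise it adds a mini-batch gradient difference to the previous estimate. The class name, the toy data, and the way the restart is drawn are assumptions for illustration only.

```python
import numpy as np

class SarahEstimator:
    """SARAH-type recursive gradient estimator with random restarts (illustrative)."""

    def __init__(self, grad_i, n, x0, p):
        self.grad_i, self.n, self.p = grad_i, n, p
        self.prev_x = np.array(x0, dtype=float)
        self.prev_est = self.full_gradient(self.prev_x)  # initial step: full gradient

    def full_gradient(self, x):
        return sum(self.grad_i(i, x) for i in range(self.n)) / self.n

    def __call__(self, x, batch, rng):
        if rng.random() < 1.0 / self.p:
            est = self.full_gradient(x)                  # restart with probability 1/p
        else:
            diff = sum(self.grad_i(j, x) - self.grad_i(j, self.prev_x)
                       for j in batch) / len(batch)
            est = diff + self.prev_est                   # recursive update
        self.prev_x, self.prev_est = np.array(x, dtype=float), est
        return est

# Hypothetical finite sum with least-squares components.
rng = np.random.default_rng(0)
n, d = 6, 3
A = rng.standard_normal((n, d))
b = rng.standard_normal(n)

def grad_i(i, x):
    return A[i] * (A[i] @ x - b[i])

x0, x1 = rng.standard_normal(d), rng.standard_normal(d)

# With the full index set as the batch, the recursion telescopes to the full gradient.
sarah = SarahEstimator(grad_i, n, x0, p=1e12)
estimate = sarah(x1, batch=range(n), rng=rng)

# With p = 1 every call is a restart.
restart = SarahEstimator(grad_i, n, x0, p=1)
restart_estimate = restart(x1, batch=[0], rng=rng)
```

Unlike SAGA, this estimator keeps only the previous point and the previous estimate, but it is biased conditional on the past iterates whenever the recursive branch is taken with a strict sub-batch.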

Definition 2.8 (SAG [39]).

The SAG gradient approximation is defined as follows:

where is a mini-batch containing indices. The gradient history is updated as follows: and

Definition 2.9 (SVRG [44, 30]).

The SVRG gradient approximation is defined as follows:

where is a random subset uniformly drawn from of fixed batch size , and is a point updated every steps, where is the number of steps in the inner loop.
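For comparison, a minimal sketch of the standard mini-batch SVRG estimator is given below: a batch average of gradient differences between the current point and a snapshot point, corrected by the full gradient at the snapshot. The function name, argument names, and toy data are hypothetical; the snapshot schedule (how often the snapshot is refreshed) is left to the caller.

```python
import itertools
import numpy as np

def svrg_estimate(grad_i, x, snapshot, snapshot_full_grad, batch):
    """Mini-batch SVRG estimate at x, given a snapshot point and its full gradient."""
    correction = sum(grad_i(j, x) - grad_i(j, snapshot) for j in batch) / len(batch)
    return correction + snapshot_full_grad

# Hypothetical finite sum with least-squares components.
rng = np.random.default_rng(0)
n, d, b_size = 5, 3, 2
A = rng.standard_normal((n, d))
b = rng.standard_normal(n)

def grad_i(i, x):
    return A[i] * (A[i] @ x - b[i])

def full_gradient(x):
    return A.T @ (A @ x - b) / n

x, snapshot = rng.standard_normal(d), rng.standard_normal(d)
snapshot_full_grad = full_gradient(snapshot)

# Exact expectation over all batches of size b_size (uniform, without replacement).
batches = list(itertools.combinations(range(n), b_size))
mean_estimate = sum(
    svrg_estimate(grad_i, x, snapshot, snapshot_full_grad, J) for J in batches
) / len(batches)

# At the snapshot itself the correction vanishes for any batch.
at_snapshot = svrg_estimate(grad_i, snapshot, snapshot, snapshot_full_grad, (0, 1))
```

The estimator needs one full gradient per snapshot refresh but no per-component storage, which is the main practical difference from SAGA.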

Remark 2.10.

Both types of estimators can be combined with our algorithm, and each has strengths and weaknesses depending on the application. SGD is the simplest stochastic gradient estimator: it relies only on the gradient computed on each batch, without additional gradient storage or computation. However, SGD may suffer from relatively large variance, which requires a smaller step size to ensure convergence and leads to slow convergence rates. To address this shortcoming, several variance-reduced gradient estimators have been proposed, such as SVRG, SAGA, SAG, and SARAH. These gradient estimators reduce the variance, allowing for larger step sizes and faster convergence rates. However, they often require additional storage of gradients (or dual variables) and full gradient computations, so the faster convergence comes at the cost of more storage and computation. This can make variance-reduced gradient estimators difficult to use for some more complex problems, such as structured prediction and neural network learning. Therefore, for each problem, one must weigh the trade-off between SGD, with cheap iterations but slow convergence, and variance-reduced gradient estimators, with more expensive iterations but faster convergence. SAG, SVRG, SAGA, and SARAH all belong to the class of variance-reduced gradient estimators in [7], and this class contains both unbiased and biased estimators. For example, SAGA is unbiased, and SARAH is biased.

2.3 Kurdyka-Łojasiewicz property

The Kurdyka-Łojasiewicz (KL) property plays a crucial role in the convergence analysis of nonconvex optimization problems. We first recall the definition of KL property, which is particularly applicable to semi-algebraic functions. For a more detailed account, we refer readers to [16, 15, 24, 23, 25] and the references therein. Let and be two real numbers satisfying . We define the set .

Definition 2.11 (KL property).

A function has the Kurdyka-Łojasiewicz property at dom if there exist , a neighborhood of , and a continuous concave function such that:

(i) , and for all ;

(ii) for all the Kurdyka-Łojasiewicz inequality holds, i.e.,

If satisfies the Kurdyka-Łojasiewicz property at each point of dom , then it is called a KL function.

Roughly speaking, the KL property means that the function is sharp up to a reparameterization via , which is known as a desingularizing function for . A typical class of KL functions is the class of semi-algebraic functions, which includes the pseudo-norm and the rank function. More specifically, a detailed result on the KL property can be found in [16, Section 4.3]. Further arguments can be found in [24, Section 2] and [23, Corollary 16]. Semi-algebraic functions are easy to identify and encompass a wide range of possibly nonconvex functions that arise in applications, as shown in [16, 15, 24, 23, 25]. For completeness, we recall the definition of semi-algebraic functions.

Definition 2.12 (Semi-algebraic set).

A semi-algebraic set is a finite union of sets of the form

where and are real polynomials.

Definition 2.13 (Semi-algebraic function).

A function is semi-algebraic if its graph is semi-algebraic.

Lemma 2.14 (KL inequality in the semi-algebraic cases).

Let be a proper closed semi-algebraic function on . Then, satisfies the Kurdyka-Łojasiewicz property at all points in dom with for some and
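As a simple sanity check of the KL inequality with a power-type desingularizing function, consider the following worked example. The function $f(x) = x^2$, the reference point $\bar{x} = 0$, and the choice $\varphi(s) = c\,s^{1-\theta}$ with $\theta = 1/2$ and $c = 1$ are assumptions made for this illustration; they are not taken from the lemma as printed.

```latex
% Assumed setting: f(x) = x^2 on R, \bar{x} = 0,
% \varphi(s) = s^{1/2}, i.e. exponent \theta = 1/2 and constant c = 1.
\begin{align*}
\varphi'(s) &= \tfrac{1}{2}\, s^{-1/2}, \\
\varphi'\bigl(f(x) - f(\bar{x})\bigr)\,
  \operatorname{dist}\bigl(0, \partial f(x)\bigr)
  &= \tfrac{1}{2}\,(x^{2})^{-1/2}\,\lvert 2x \rvert
   = 1 \;\geq\; 1 \qquad (x \neq 0).
\end{align*}
```

So in this example the inequality holds with exponent $1/2$ on every punctured neighborhood of the minimizer.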

3 Convergence analysis

This section focuses on the convergence analysis of STOS (Algorithm 1) combined with two different types of stochastic gradient estimators: unbiased estimator and variance-reduced estimator. Our analysis is based on the following assumptions concerning the objective functions , and .

Assumption 3.1.

Functions , , and satisfy the following:

(a1) has a Lipschitz continuous gradient, i.e., there exists a constant such that

(a2) is a proper closed function with a nonempty mapping for any and for .

(a3) For each , has a Lipschitz continuous gradient, i.e., there exists a constant such that

If has a Lipschitz continuous gradient, then we can always find such that is convex, in particular, can be taken to be . We also present the optimality conditions for Algorithm 1:

| (3.1a) | |||

| (3.1b) | |||

These conditions will be frequently used in the convergence analysis.

3.1 Convergence rates for unbiased gradient estimator

In this subsection, we consider the stochastic nonconvex problem (1.3) with being the expectation of a function of a random variable, i.e., , where is a random variable with distribution :

| (3.2) |

As a special case of (3.2), if is a uniformly random vector defined on a finite support set , then (3.2) simplifies to the following finite-sum minimization problem:

where for . Here, the stochastic gradient estimator in Algorithm 1 adopts the following SGD estimator:

where is a set of samples from distribution . Our convergence analysis relies on the following assumptions.

Assumption 3.2.

We assume that

-

(i)

is an unbiased estimator of , i.e.,

-

(ii)

has bounded variance, i.e.,

Remark 3.3.

Definition 3.4.

For , we call an -stationary point if it satisfies

where denotes the distance between and the set .

In the following, we establish the convergence of STOS (Algorithm 1) with unbiased estimators. We show that STOS converges to an -stationary point and obtain its convergence rate. The proof of Theorem 3.5 is given in Appendix A.2.

Theorem 3.5.

Remark 3.6.

From the expression for , we know as . Thus, given and , it is always true that when is sufficiently small. Indeed, we can get a computable threshold for :

3.2 Convergence rates for variance-reduced gradient estimator

We have provided the convergence guarantee for STOS (Algorithm 1) when combined with unbiased gradient estimators. However, some variance-reduced gradient estimators, such as SARAH, can make the algorithm more effective for certain large-scale image processing problems [10]. To allow STOS to use a wider variety of stochastic gradient estimators, we further provide the convergence guarantee for STOS combined with the variance-reduced gradient estimator (Definition 2.5) and obtain its convergence rate. The convergence analysis is based on the Kurdyka-Łojasiewicz inequality. For this purpose, it is crucial to construct an energy function that decreases along the sequence generated by Algorithm 1. Thus, we define

| (3.3) | ||||

for . Denote . Next, we give the energy function associated with STOS (Algorithm 1) as follows:

| (3.4) |

The decreasing property of in expectation is given in the following theorem.

Theorem 3.7.

Remark 3.8.

We now show that choosing a suitable always makes . From the expression for we have that as . Thus it is always true that when is sufficiently small. Here we also provide a computable threshold for . Denote

where . Then when , we have .

The proof of Theorem 3.7 is provided in Appendix A.3. Next, we will establish the global convergence in expectation for the whole sequence generated by Algorithm 1. See Appendix A.3 for details of the proof.

Theorem 3.9.

Suppose that , , and are semi-algebraic functions with KL exponent , and the sequence is generated by Algorithm 1. Then, either is a critical point after a finite number of iterations, or almost surely satisfies the finite length property in expectation:

Moreover, there exists an integer such that for all ,

where and are both positive constants, and .

Furthermore, we derive the convergence rates for the sequence generated by Algorithm 1 based on the KL exponent. The calculation of the KL exponent is detailed in [13]. We omit the proof of convergence rate, which is similar to the proof of Theorem 3.7 in [10].

Theorem 3.10.

Suppose that , and Assumption 3.1 is satisfied. Moreover, suppose that , and are semi-algebraic functions with KL exponent . Let be a bounded sequence generated by STOS (Algorithm 1) using a variance-reduced gradient estimator. Then, the following convergence rates hold almost surely:

1. If then there exists an such that for all

2. If , then there exist and such that ;

3. If , then there exists a constant such that .

4 Numerical experiments

In this section, we implement the STOS algorithm on FLASH proton radiotherapy with dose and dose rate constraints. All experiments are run in MATLAB R2021a on a desktop equipped with a 3.9GHz 16-core AMD processor and 64GB memory.

4.1 FLASH proton radiotherapy with dose and dose rate optimization

4.1.1 Problem background

FLASH proton radiotherapy is an emerging cutting-edge technology that can deliver ultra-high doses of radiation in an extremely short period, within a fraction of a second. Although this new treatment modality is still in the experimental stage, it has demonstrated the potential to better protect normal tissues compared to conventional radiation. In clinical practice, proton beams can be utilized to deliver this high-dose radiation, making FLASH proton radiotherapy an intriguing prospect for future cancer treatment [19, 55].

However, the majority of research on FLASH proton radiotherapy focuses on problems with a minimum threshold on the number of protons delivered per spot, such as the well-known minimum-monitor-unit (MMU) problem [58, 28]. Recently, modeling the FLASH effect while satisfying both dose and dose rate constraints has been shown to be more effective [14]. Therefore, it becomes imperative to handle dose and dose rate constraints simultaneously with the MMU constraint in FLASH proton radiotherapy, which can be formulated as the following nonconvex constrained optimization problem:

| (4.1) | ||||

Here, represents proton spot weights to be optimized, and is the weighted vector of objective constraint values. Let where with all elements equal to . represents the forward system matrix, a linear operator mapping to , and denotes the -th row of . is the planning minimum spot weight threshold, known as the MMU constraint. The FLASH constraints are enforced in a region of interest (ROI), and we use to denote the partial forward system matrix, which maps only to the dose in the ROI (). The ROI, as defined in [14], is much smaller than the combined regions of the CTV, body, and OARs. Consequently, is significantly smaller than . Here, can be explicitly written as the following block matrix:

This shows that the constraint is equivalent to two constraints, one regarding dose rate: with being the lower bound of dose rate in ROI and the other regarding dose: with being the lower bound of dose in ROI. Here “” represents dot product, “” applies to each element, and is minimum spot duration. Additional physical interpretation of these two constraints regarding the FLASH effect can be found in [14].

4.1.2 Model and algorithm

To the best of our knowledge, the existing literature uses a nonstandard ADMM algorithm to solve a convex relaxation of problem (4.1). Transforming constrained optimization problems into unconstrained ones using indicator functions is a common technique in optimization. Here, we apply this technique to transform problem (4.1) into the following equivalent unconstrained optimization problem:

| (4.2) |

where we denote the set , the set . Here represents the indicator function of the set, defined as

We note that problem (4.2) can be solved by the classical three-operator splitting algorithm. Furthermore, since is typically on the order of millions in FLASH proton therapy optimization, our proposed STOS algorithm can effectively solve (4.2).

We apply the STOS method to solve (4.2) with , , and . However, we note that in our convergence analysis, we require the function to be smooth. Therefore, we approximate the indicator function using the distance function . This is mainly because for the first subproblem in Algorithm 1:

| (4.3) |

Here, is the projection operator onto set . It is worth noting that in (4.3), as , it approaches , which is the solution of the first subproblem in Algorithm 1 when . We can then apply STOS to solve (4.2) with , , and , which yields the following algorithm:

For the first subproblem, due to the smoothness of , we can directly compute the gradient and further utilize a quasi-Newton method in MATLAB to solve it. The second subproblem is easy to solve, and it has an explicit solution:
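To illustrate what an explicit solution of this kind can look like, the sketch below projects componentwise onto a set of the form "each spot weight is either zero or at least a threshold". That this is the constraint set behind the second subproblem is an assumption based on the description of the MMU constraint; the function name `project_mmu` and the argument `g` are hypothetical, and the block is not the formula printed in the paper.

```python
import numpy as np

def project_mmu(v, g):
    """Componentwise nearest point in {0} U [g, +inf), assuming g > 0.

    Values below g / 2 are closer to 0, values in [g / 2, g) are closer to g,
    and values of at least g are already feasible. The tie at exactly g / 2
    is resolved toward g here (either choice is a valid projection).
    """
    v = np.asarray(v, dtype=float)
    return np.where(v < 0.5 * g, 0.0, np.maximum(v, g))

weights = np.array([-5.0, 79.0, 81.0, 160.0, 200.0])
projected = project_mmu(weights, g=160.0)
```

Because the set is nonconvex, the projection is not unique at the midpoint between zero and the threshold, which is one reason the overall problem falls outside the convex theory.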

Further, we verify that , , and are semi-algebraic functions. According to [16, Section 4.3], and are semi-algebraic functions. From the appendix of [26], the set is a semi-algebraic set; therefore, the indicator function of is also semi-algebraic.

Next, we evaluate the STOS algorithm in two cases, FLASH proton RT for the lung and for the brain, and compare it with the nonstandard ADMM in [19], in which the nonlinearity of the constraint is linearized. In all experiments, the minimum spot weight threshold is fixed as suggested in [19] (specifically, 160 in our experiments). Before presenting the results, we introduce several quality measures used to assess the effectiveness and accuracy of therapy planning. One key metric is the Conformal Index, denoted as , which is defined as , where represents the PTV volume receiving at least of the prescription dose, represents the volume of PTV, and represents the total volume receiving at least of the prescription dose. The value of therefore ranges from 0 to 1, and ideally, . , , and represent the maximum dose received by the CTV (clinical target volume), cord, and lung, respectively. , , and represent the average dose received by the ROI, esophagus, and body, respectively. The quantitative dose coverage and dose coverage in percentage are calculated as and , providing an assessment of the degree to which the constraints related to and are met.
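The Conformal Index described above can be computed from a voxelized dose array as in the following sketch. It assumes the common form "squared covered PTV volume divided by the product of the PTV volume and the total covered volume", equal voxel volumes (so counts stand in for volumes), and a coverage level given as a fraction of the prescription dose; the function name, the `level` parameter, and the toy data are hypothetical.

```python
import numpy as np

def conformal_index(dose, ptv_mask, prescription, level=1.0):
    """Conformal Index from voxel counts (equal voxel volumes assumed)."""
    dose = np.asarray(dose, dtype=float)
    ptv_mask = np.asarray(ptv_mask, dtype=bool)
    covered = dose >= level * prescription            # voxels reaching the dose level
    v_ptv = np.count_nonzero(ptv_mask)                # PTV volume
    v_ptv_covered = np.count_nonzero(covered & ptv_mask)
    v_covered = np.count_nonzero(covered)             # total covered volume
    if v_ptv == 0 or v_covered == 0:
        return 0.0
    return v_ptv_covered ** 2 / (v_ptv * v_covered)

# Toy example: 4 PTV voxels, 3 of them covered, plus 1 covered voxel outside the PTV.
dose = np.array([60.0, 60.0, 60.0, 30.0, 60.0, 10.0])
ptv = np.array([True, True, True, True, False, False])
ci = conformal_index(dose, ptv, prescription=60.0)

# A plan that covers exactly the PTV attains the ideal value.
ci_ideal = conformal_index(np.where(ptv, 60.0, 0.0), ptv, prescription=60.0)
```

In the toy example the index is penalized twice: once for the uncovered PTV voxel and once for the covered voxel outside the PTV.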

4.1.3 FLASH proton radiotherapy for the lung

Radiation therapy is a common and effective treatment method for lung cancer. The first experiment of FLASH proton radiotherapy is conducted for the lung. The dose influence matrix, denoted as , is generated via MatRad [53], with spot width, and lateral spacing on dose grid. The beam angles are empirically chosen as . In this experiment, the regions of CTV, the body, and the organs at risk (spinal cord, lung, esophagus) are chosen for dose planning. The size of the dose influence matrix is . Matrix corresponds to a portion of on ROI and has a size of . The data with the length is shuffled and divided into 8 parts and 16 parts for testing our STOS algorithm. The parameter is set to Gy, while is set to Gy. The parameter for STOS is set to .
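The shuffling and partitioning of the data into 8 or 16 parts can be sketched as follows. This is only an illustration of one plausible way to form the mini-batches: the seed, the near-equal part sizes, and the function name are assumptions, and the paper does not specify how remainders are handled.

```python
import random


def shuffle_and_split(n_rows, n_parts, seed=0):
    """Shuffle the row indices 0..n_rows-1 and split them into n_parts batches.

    Part sizes differ by at most one, so every row belongs to exactly one part.
    """
    rng = random.Random(seed)  # fixed seed only for reproducibility of the sketch
    indices = list(range(n_rows))
    rng.shuffle(indices)
    base, extra = divmod(n_rows, n_parts)
    parts, start = [], 0
    for p in range(n_parts):
        size = base + (1 if p < extra else 0)
        parts.append(indices[start:start + size])
        start += size
    return parts


if __name__ == "__main__":
    for k in (8, 16):
        parts = shuffle_and_split(1000, k)
        print(k, [len(p) for p in parts])
```

Each part then indexes the rows of the data used to form one stochastic gradient, and one pass over all parts corresponds to one epoch.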

| Method | | CI | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| ADMM | 109.62 | 0.56 | 1.29 | 9.85 | 18.54 | 2.57 | 1.14 | 58.80 | 90.01 |
| STOS SGD | 118.51 | 0.67 | 1.19 | 7.28 | 17.49 | 1.95 | 1.00 | 67.76 | 92.94 |
| STOS SGD | 118.83 | 0.66 | 1.19 | 7.13 | 17.85 | 1.81 | 1.02 | 71.14 | 94.16 |
| STOS SGD | 118.82 | 0.65 | 1.19 | 7.14 | 17.86 | 1.81 | 1.02 | 71.63 | 94.21 |
| STOS SAGA | 109.50 | 0.66 | 1.18 | 8.09 | 16.93 | 1.86 | 1.01 | 71.54 | 94.17 |
| STOS SARAH | 109.53 | 0.66 | 1.18 | 8.11 | 16.96 | 1.86 | 1.01 | 71.09 | 94.31 |

Here, we tested three different stochastic gradient estimators: SGD, SAGA, and SARAH. In Figure 1, we first present the function values of all algorithms running the same number of epochs. From Figure 1 (a), it can be observed that both the STOS and TOS exhibit faster energy decay compared to ADMM. Additionally, the STOS achieves faster energy function descent compared to the TOS algorithm. In Figure 1 (b), we also show the results of STOS combined with SGD, SAGA, and SARAH. We can observe that the results of the three stochastic gradient estimators are similar, with SGD performing slightly better compared to the other two estimators. In summary, as shown in Figure 1, the energy results indicate that the stochastic methods are superior to the non-stochastic methods for FLASH proton RT. This observation highlights the effectiveness of combining stochastic and probabilistic mechanisms in the optimization process. Moreover, decomposing large-scale problems into smaller sub-problems makes each sub-problem easier to solve, thereby further expanding the applicability of the algorithm.
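To make the difference between the three estimators concrete, the following sketch implements single-sample versions of SGD, SAGA, and SARAH on a toy least-squares finite sum. The toy objective, the batch size of one, and all class and function names are assumptions made for illustration; the batch sizes and restart rules used in the experiments are not reproduced.

```python
def grad_i(A, b, x, i):
    """Gradient of the i-th term f_i(x) = 0.5 * (a_i . x - b_i)^2."""
    r = sum(a * xj for a, xj in zip(A[i], x)) - b[i]
    return [r * a for a in A[i]]


def full_gradient(A, b, x):
    """Gradient of the average (1/n) * sum_i f_i(x)."""
    n = len(A)
    g = [0.0] * len(x)
    for i in range(n):
        g = [s + v / n for s, v in zip(g, grad_i(A, b, x, i))]
    return g


def sgd_estimator(A, b, x, i):
    """SGD: the gradient of one sampled term (unbiased for uniform i)."""
    return grad_i(A, b, x, i)


class SagaEstimator:
    """SAGA: sampled gradient, minus its stored copy, plus the stored average."""

    def __init__(self, A, b, x0):
        self.A, self.b = A, b
        self.table = [grad_i(A, b, x0, i) for i in range(len(A))]
        self.avg = full_gradient(A, b, x0)

    def estimate(self, x, i):
        n = len(self.table)
        g_new, g_old = grad_i(self.A, self.b, x, i), self.table[i]
        est = [gn - go + av for gn, go, av in zip(g_new, g_old, self.avg)]
        # Refresh the stored gradient of term i and the running average.
        self.avg = [av + (gn - go) / n for gn, go, av in zip(g_new, g_old, self.avg)]
        self.table[i] = g_new
        return est


class SarahEstimator:
    """SARAH: recursive update v_k = grad_i(x_k) - grad_i(x_{k-1}) + v_{k-1}."""

    def __init__(self, A, b, x0):
        self.A, self.b = A, b
        self.reset(x0)

    def reset(self, x):
        """Restart the recursion from a full gradient (done periodically in practice)."""
        self.x_prev = list(x)
        self.v = full_gradient(self.A, self.b, x)

    def estimate(self, x, i):
        g_new = grad_i(self.A, self.b, x, i)
        g_old = grad_i(self.A, self.b, self.x_prev, i)
        self.v = [gn - go + v for gn, go, v in zip(g_new, g_old, self.v)]
        self.x_prev = list(x)
        return self.v
```

SGD and SAGA are unbiased when the index is sampled uniformly, whereas SARAH is a biased, recursive estimator; SAGA pays for its variance reduction with a stored gradient per term, and SARAH with occasional full-gradient restarts.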

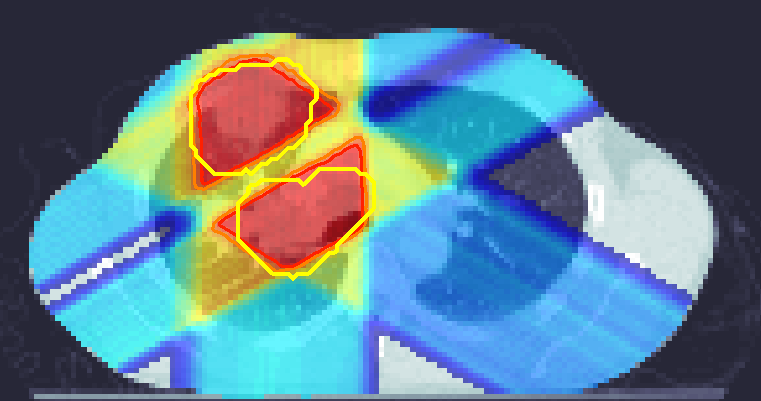

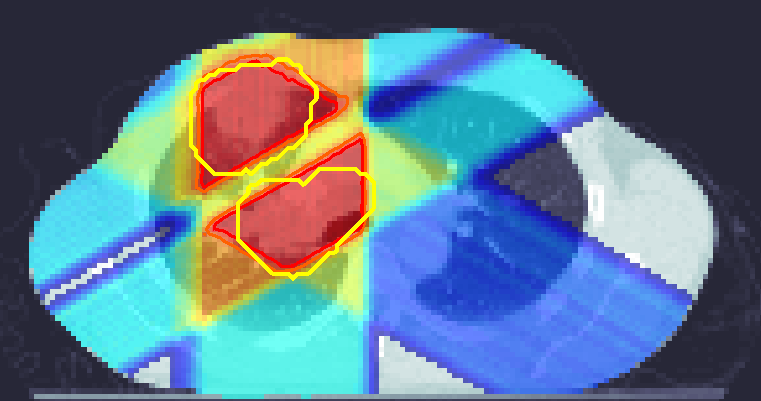

To further demonstrate the effectiveness of STOS, the DVH and DVRH are shown in Figure 2, and the values of some important indices are presented in Table 1. All the results demonstrate that the proposed STOS spares more high-dose OAR regions (e.g., ROI = PTV10mm) near the treatment targets than ADMM, and that it achieves better dose conformality to the treatment target (e.g., CI). In Figure 2 (a) and (b), we compare STOS combined with SGD against ADMM. In Figure 2 (c) and (d), we compare STOS combined with SGD, SAGA, and SARAH. In Figure 2 (a), it can be observed that the treatment plans obtained by STOS combined with SGD, SAGA, and SARAH exhibit lower dose on all OARs compared to those obtained by the ADMM algorithm. Although the results in Figure 2 (c) suggest that there is no significant difference between STOS combined with SGD and STOS combined with SAGA or SARAH, the numerical results provided in Table 1 indicate that STOS combined with SGD performs significantly better than SAGA and SARAH. For example, compared to ADMM, STOS combined with SGD reduces the mean dose to the ROI from 1.29 Gy to 1.19 Gy for the lung. For , STOS combined with SGD outperforms all other algorithms; for instance, is significantly lower than with the other algorithms. SAGA and SARAH obtain results similar to each other, but they achieve lower than SGD and the other algorithms. We also present the dose distribution in Figure 3, from which we observe that STOS combined with SGD provides a closer fit to the target region than SAGA or SARAH.
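For readers unfamiliar with these plots, a cumulative volume histogram can be computed as in the sketch below: for each level, it reports the fraction of a structure's voxels whose value is at least that level. Applied to per-voxel dose it gives a DVH, and applied to per-voxel dose rate it gives a DVRH. Equal voxel volumes and the function name are assumptions of this sketch, not details from the paper.

```python
def cumulative_volume_histogram(values, levels):
    """Fraction of voxels with value >= level, for each level.

    values -- per-voxel dose (for a DVH) or dose rate (for a DVRH) of one structure
    levels -- increasing thresholds at which the histogram is evaluated
    """
    n = len(values)
    if n == 0:
        return [0.0 for _ in levels]
    return [sum(1 for v in values if v >= lv) / n for lv in levels]


if __name__ == "__main__":
    roi_dose = [0.0, 10.0, 20.0, 30.0]
    print(cumulative_volume_histogram(roi_dose, [0.0, 15.0, 30.0, 40.0]))
```

A curve that lies lower for an OAR means less volume receives each dose level, while a dose-rate curve that lies higher for the ROI means more volume reaches each dose-rate level.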

4.1.4 FLASH proton radiotherapy for the brain

Brain tumors are also a challenging problem in the medical field. Compared to tumors in the lungs, brain tumors are more difficult to treat because of the intricate network of structures and pathways within the brain. In this experiment, we conduct FLASH proton radiation therapy for the brain. The beam angles are empirically chosen to be . The regions of the CTV, the body, and the organs at risk (carotid, cranial nerve, oral cavity) are chosen for dose planning, leading to a dose influence matrix of size , and the ROI is chosen for FLASH effect optimization, leading to the size of being . The brain data with a length of is shuffled and divided into 8 parts and 16 parts in the same way as the lung data. The is set to Gy and the is set to Gy. The parameter for STOS is set to .

| Method | | CI | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| ADMM | 106.02 | 0.68 | 12.11 | 59.75 | 59.07 | 11.02 | 0.49 | 65.88 | 96.21 |
| STOS SGD | 108.77 | 0.76 | 11.29 | 60.13 | 56.41 | 10.19 | 0.45 | 70.21 | 96.83 |
| STOS SGD | 107.89 | 0.79 | 11.34 | 60.00 | 57.29 | 10.17 | 0.45 | 72.21 | 97.17 |
| STOS SGD | 114.85 | 0.74 | 11.67 | 60.02 | 59.61 | 10.61 | 0.46 | 72.32 | 97.66 |
| STOS SAGA | 108.76 | 0.76 | 11.29 | 60.13 | 56.40 | 10.18 | 0.45 | 73.41 | 97.55 |
| STOS SARAH | 110.63 | 0.76 | 12.49 | 58.35 | 53.77 | 11.94 | 0.49 | 72.93 | 97.83 |

The objective function values after the same number of epochs are shown in Figure 4. Here, we compare ADMM with STOS combined with SGD, SAGA, and SARAH. All the stochastic algorithms decrease the objective function value much faster than ADMM. Figure 4 (b) compares SGD, SAGA, and SARAH, indicating that for brain tumors, SAGA leads to a faster decrease in the energy function than SGD and SARAH. Further, we provide the DVH and DVRH in Figure 5, and some important indices are presented in Table 2. All the results demonstrate that STOS combined with SGD, SAGA, and SARAH improves the dose rate to the ROI and reduces the dose to critical organs (carotid, cranial nerves, oral cavity). For instance, Figure 5 (b) shows that the dose rate DVH (DVRH) of STOS combined with SGD is noticeably higher than that of ADMM. In Figure 5 (d), the results of STOS combined with SGD, SAGA, and SARAH are very similar. The numerical results in Table 2 confirm these observations. For example, the (maximum dose) for SGD reaches 114.85 Gy, while ADMM achieves only 106.02 Gy (see Table 2). SGD significantly outperforms ADMM in this aspect. SARAH, on the other hand, reduces to 53.77 Gy, which is substantially lower than the 59.07 Gy of ADMM. Moreover, SARAH also yields much lower compared to ADMM. Furthermore, Figure 6 presents the dose distributions, which demonstrate that STOS combined with the SARAH gradient estimator achieves the best fit to the tumor region.

5 Conclusion

In this paper, we propose a stochastic three-operator splitting (STOS) algorithm, which combines two types of stochastic gradient estimators: unbiased gradient estimators and variance-reduced gradient estimators. We establish the convergence analysis and obtain the convergence rates for each type of gradient estimator. Furthermore, we validate the effectiveness of the STOS algorithm in the FLASH proton radiotherapy optimization. The extensive experiments demonstrate that STOS has attained state-of-the-art performance for the simultaneous dose and dose rate optimization for FLASH proton radiotherapy. The STOS algorithm has the capability to partition a large-scale problem into smaller subproblems, and the utilization of stochastic gradients leads to improved convergence.

Acknowledgments

The work of Jian-Feng Cai was supported in part by the Hong Kong Research Grants Council GRF Grants 16310620 and 16306821, in part by the Hong Kong ITF MHP/009/20, and in part by the Project of Hetao Shenzhen-Hong Kong Science and Technology Innovation Cooperation Zone under Grant HZQB-KCZYB-2020083. Xiaoqun Zhang was supported by Shanghai Municipal Science and Technology Major Project (2021SHZDZX0102) and NSFC (No. 12090024). Jiulong Liu was partially supported by the Key grant of Chinese Ministry of Education (2022YFC2504302) and the Fund of the Youth Innovation Promotion Association, CAS (2022002). Fengmiao Bian was also partially supported by an outstanding Ph.D. graduate development scholarship from Shanghai Jiao Tong University.

References

- [1] A. Defazio, F. Bach and S. Lacoste-Julien. SAGA: A fast incremental gradient method with support for non-strongly convex composite objectives. In Advances in Neural Information Processing Systems, pages 1646–1654, 2014.

- [2] A. Khaled and P. Richtarik. Better theory for SGD in the nonconvex world. ArXiv preprint arXiv:2002.03329, 2020.

- [3] A. Yurtsever, V. Mangalick, and S. Sra. Three Operator Splitting with a Nonconvex Loss Function. Proceedings of the 38-th International Conference on Machine Learning, (PMLR), 139, 2021.

- [4] C. Fang, J. Li, Z. Lin and T. Zhang. SPIDER: near-optimal non-convex optimization via stochastic path integrated differential estimator. 32nd Conference on Neural Information Processing Systems (NIPS), 2018.

- [5] D. Davis. The asynchronous palm algorithm for nonsmooth nonconvex problems. ArXiv preprint arXiv:1604.00526, 2016.

- [6] D. Davis and W. Yin. Three-Operator Splitting Scheme and its Optimization Applications. Set-Valued Var. Anal., 25:829–858, 2017.

- [7] D. Driggs, J. Tang, J. Liang, M. Davies and C. B. Schonlieb. SPRING: A fast stochastic proximal alternating method for non-smooth non-convex optimization. SIAM J. Imaging Sci., 14(04):1932–1970, 2021.

- [8] E. Beyreuther, M. Brand, S. Hans, et al. Feasibility of proton FLASH effect tested by zebrafish embryo irradiation. Radiother Oncol., 139:46–50, 2019.

- [9] F. Bian and X. Zhang. A three-operator splitting algorithm for nonconvex sparsity regularization. SIAM J. Sci. Comput, 43(4):A2809–A2839, 2021.

- [10] F. Bian, J. Liang and X. Zhang. A stochastic alternating direction method of multipliers for non-smooth and non-convex optimization. Inverse Problems, 37(7), 2021.

- [11] F. Bian, R. Liu and X. Zhang. A Stochastic Three-block Splitting Algorithm and Its Application to Quantized Deep Neural Networks. ArXiv preprint arXiv:2204.11065, 2022.

- [12] G. Li and T.K. Pong. Douglas–Rachford splitting for nonconvex optimization with application to nonconvex feasibility problems. Math. Program., Ser. A, 159:371–401, 2016.

- [13] G. Li and T.K. Pong. Calculus of the exponent of Kurdyka-Lojasiewicz inequality and its applications to linear convergence of first-order methods. Found. Comput. Math., 18(5):1199–1232, 2018.

- [14] Hao Gao, Jiulong Liu, Yuting Lin, Gregory N Gan, Guillem Pratx, Fen Wang, Katja Langen, Jeffrey D Bradley, Ronny L Rotondo, Harold H Li, et al. Simultaneous dose and dose rate optimization (SDDRO) of the FLASH effect for pencil-beam-scanning proton therapy. Medical Physics, 49(3):2014–2025, 2022.

- [15] H. Attouch, J. Bolte and B. F. Svaiter. Convergence of descent methods for semi-algebraic and tame problems: proximal algorithms, forward–backward splitting, and regularized Gauss–Seidel methods. Math. Program., 137(1-2):91–129, 2013.

- [16] H. Attouch, J. Bolte, P. Redont and A. Soubeyran. Proximal alternating minimization and projection methods for nonconvex problems : An approach based on the Kurdyka–Łojasiewicz inequality. Math. Oper. Res., 35:438–457, 2010.

- [17] H. Cai, J.-F. Cai and K. Wei. Accelerated Alternating Projections for Robust Principal Component Analysis. J. Mach. Learn. Res., 20:1–33, 2019.

- [18] H. Gao, B. Clasie, M. McDonald, K. M. Langen, T. Liu, and Y. Lin. Plan-delivery-time constrained inverse optimization method with minimum-MU-per-energy-layer(MMPEL) for efficient pencil beam scanning proton therapy. Med. Phys., 47(9):3892–3897, 2020.

- [19] H. Gao, B. Lin, Yuting Lin, Shujun Fu, Katja Langen, Tian Liu and Jeffery Bradley. Simultaneous dose and dose rate optimization (SDDRO) for FLASH proton therapy. Medical Physics, 47(12):6388–6395, 2020.

- [20] H. Ouyang, N. He, L. Q. Tran and A. Gray. Stochastic alternating direction method of multipliers. Proceedings of the 30-th International conference on machine learning, (JMLR), 2013.

- [21] H. Robbins and D. Siegmund. A convergence theorem for non-negative almost supermartingales and some applications. Optimizing Methods in Statistics, pages 233–257, 1971.

- [22] H. Robbins and S. Monro. A stochastic approximation method. Ann. Math. Statist., 22(3):400–407, 1951.

- [23] J. Bolte, A. Daniilidis, A. Lewis and M. Shiota. Clarke subgradients of stratifiable functions. SIAM J. Optim., 18:556–572, 2007.

- [24] J. Bolte, A. Daniilidis and A. Lewis. The Łojasiewicz inequality for nonsmooth subanalytic functions with applications to subgradient dynamical systems. SIAM J. Optim., 17(4):1205–1223, 2006.

- [25] J. Bolte, A. Daniilidis, O. Ley and L. Mazet. Characterizations of Łojasiewicz inequalities subgradient flows, talweg, convexity. Trans. Amer. Math. Soc., 362(6):3319–3363, 2010.

- [26] J. Bolte, S. Sabach and M. Teboulle. Proximal alternating linearized minimization for nonconvex and nonsmooth problems. Math. Program., Ser. A, 146:459–494, 2014.

- [27] J. D. Wilson, E. M. Hammond, G. S. Higgins and K. Petersson. Ultra-High Dose Rate (FLASH) Radiotherapy: Silver Bullet or Fool’s Gold? Front. Oncol., 9, 2020.

- [28] J.-F. Cai, R. Chen, J. Fan, and H. Gao. Minimum-monitor-unit optimization via a stochastic coordinate descent method. Physics in Medicine & Biology, 67(1):015009, 2022.

- [29] J. Flamant, S. Miron, and D. Brie. Quaternion non-negative matrix factorization: Definition, uniqueness, and algorithm. IEEE Trans. Image Process., 68:1870–1883, 2020.

- [30] J. Konecny, J. Liu, P. Richtarik and M. Takac. Mini-batch semi-stochastic gradient descent in the proximal setting. IEEE Journal of Selected Topics in Signal Processing, 10:242–255, 2016.

- [31] J. Li, C. Huang, R. Chan, H. Feng, M. K. Ng, and T. Zeng. Spherical Image Inpainting with Frame Transformation and Data-Driven Prior Deep Networks. SIAM J. Imag. Sci., 16(3):1177–1194, 2023.

- [32] J. Mairal, F. Bach, J. Ponce and G. Sapiro. Online dictionary learning for sparse coding. Proceedings of the 26th Annual International Conference on Machine Learning, (ICML), pages 689–696, 2009.

- [33] J. Pan and M. K. Ng. Separable Quaternion Matrix Factorization for Polarization Images. SIAM J. Imag. Sci., 16(3):1281–1307, 2023.

- [34] J. R. Hughes and J. L. Parsons. FLASH Radiotherapy: current knowledge and future insights using proton-beam therapy. Int. J. Mol. Sci., 21(18), 2020.

- [35] L. M. Nguyen, J. Liu, K. Scheinberg and M. Takac. SARAH: A novel method for machine learning problems using stochastic recursive gradient. In Proceedings of the 34th International Conference on Machine Learning, 70:2613–2621, 2017.

- [36] L. Mason, J. Baxter, P. Bartlett and M. Frean. Boosting algorithms as gradient descent. Conference on Neural Information Processing Systems, (NIPS), 12:512–518, 1999.

- [37] M. Buonanno, V. Grilj and D. J. Brenner. Biological effects in normal cells exposed to FLASH dose rate protons. Radiother. Oncol., 139:51–55, 2019.

- [38] M. R. Metel and A. Takeda. Stochastic proximal methods for non-smooth non-convex constrained sparse optimization. J. Mach. Learn. Res., 22:1–36, 2021.

- [39] M. Schmidt, N. L. Roux, and F. Bach. Minimizing finite sums with the stochastic average gradient. Math. Program., pages 1–30, 2016.

- [40] N. Gillis. The why and how of nonnegative matrix factorization. Regulariz. Optim. Kernels Supp. Vector Mach., 12:257–291, 2014.

- [41] N. H. Pham, L. M. Nguyen, D. T. Phan, and Q. T. Dinh. ProxSARAH: An Efficient Algorithmic Framework for Stochastic Composite Nonconvex Optimization. J. Mach. Learn. Res., 21:1–48, 2020.

- [42] O. Shamir. A stochastic PCA and SVD algorithm with an exponential convergence rate. Proceedings of the 32-th International Conference on Machine Learning, (PMLR), 2015.

- [43] P. Yin, Y. Lou, Q. He and J. Xin. Minimization of $\ell_{1-2}$ for compressed sensing. SIAM J. Sci. Comput., 37(1):A536–A563, 2015.

- [44] R. Johnson and T. Zhang. Accelerating stochastic gradient descent using predictive variance reduction. Advances in Neural Information Processing Systems, (NIPS), pages 315–323, 2013.

- [45] S. Ghadimi and G. Lan. Accelerated Gradient Methods for Nonconvex Nonlinear and Stochastic Optimization. Technical Report, 2013.

- [46] S. Ghadimi and G. Lan. Stochastic first- and zeroth-order methods for nonconvex stochastic programming. SIAM J. Optim., 23(4):2341–2368, 2013.

- [47] S. Ghadimi, G. Lan and H. Zhang. Mini-batch stochastic approximation methods for nonconvex stochastic composite optimization. Math. Program., Ser. A, 155:267–305, 2016.

- [48] S. Girdhani, E. Abel, A. Katsis, et al. FLASH: A novel paradigm changing tumor irradiation platform that enhances therapeutic ratio by reducing normal tissue toxicity and activating immune pathways. Cancer Res., 79, 2019.

- [49] S. J. Reddi, A. Hefny, S. Sra, B. Poczos, and A. Smola. Stochastic Variance Reduction for Nonconvex Optimization. Proceedings of the 33-th International Conference on Machine Learning, (PMLR), 2016.

- [50] S. J. Reddi, S. Sra, B. Poczos and A. J. Smola. Proximal Stochastic Methods for Nonsmooth Nonconvex Finite-Sum Optimization. 30th Conference on Neural Information Processing Systems (NIPS), 2016.

- [51] S. S. Shwartz and T. Zhang. Stochastic dual coordinate ascent methods for regularized loss. J. Mach. Learn. Res., 14(1):567–599, 2013.

- [52] V. Favaudon, L. Caplier, V. Monceau, et al. Ultrahigh dose-rate FLASH irradiation increases the differential response between normal and tumor tissue in mice. Sci. Transl. Med., 6(245), 2014.

- [53] Hans-Peter Wieser, Eduardo Cisternas, Niklas Wahl, Silke Ulrich, Alexander Stadler, Henning Mescher, Lucas-Raphael Müller, Thomas Klinge, Hubert Gabrys, Lucas Burigo, et al. Development of the open-source dose calculation and optimization toolkit matRad. Medical Physics, 44(6):2556–2568, 2017.

- [54] X. Zhang, D. Robertson, H. Li, S. Choi, A. K. Lee, X. R. Zhu, N. Sahoo, and M. T. Gillin. Intensity-modulated proton therapy treatment planning using single-field optimization: the impact of monitor unit constraints on plan quality. Med. Phys., 37(3):1210–9, 2010.

- [55] Y. Lin, B. Lin, S. Fu, M. Folkerts, E. Abel, J. Bradley and H. Gao. Sddro-joint: simultaneous dose and dose rate optimization with the joint use of transmission beams and bragg peaks for flash proton therapy. Physics in Medicine and Biology, 66(12):125011, 2021.

- [56] Y. Liu and W. Yin. An Envelope for Davis–Yin Splitting and Strict Saddle-Point Avoidance. J. Optim. Theory Appl., 181:567–587, 2019.

- [57] Y. Lou and M. Yan. Fast L1–L2 Minimization via a Proximal Operator. J. Sci. Comput., 74:767–785, 2018.

- [58] Y.-N. Zhu, X. Zhang, Y. Lin, C. Lominska, and H. Gao. An orthogonal matching pursuit optimization method for solving minimum-monitor-unit problems: Applications to proton IMPT, ARC and FLASH. Medical Physics, 2023.

- [59] Z. Allen-Zhu and E. Hazan. Variance Reduction for Faster NonConvex Optimization. Proceedings of the 33-th International Conference on Machine Learning, (PMLR), 2016.

Appendix A Proofs of main theorems

A.1 Basic Lemmas

We introduce some basic lemmas that are required in the convergence analysis. The proofs of Lemma A.1 and Lemma A.2 can be found in reference [9] and are not detailed here.

Lemma A.1.

Let . Then we have

Lemma A.2.

Next, we present a crucial lemma in the convergence analysis of the STOS algorithm with both the unbiased estimator and the variance-reduced estimator.

Lemma A.3.


Proof.

From the proof of [9, Lemma 3.3], we have

(A.2) While the gradient here is stochastic, the proof of the above inequality in [9] still holds. Next, we further estimate . Notice that

(A.3) where we have used in Assumption 3.1. Furthermore, using some basic inequalities, we get

(A.4) Substituting (A.4) into (A.3), we get

(A.5) Combining (A.5) with (A.2), we have

Using Lemma A.1, we obtain

This completes the proof. ∎

A.2 Convergence rates for unbiased gradient estimator


Proof of Theorem 3.5.

From Lemma A.3, we have

Since

we have

(A.6) Denote

and

Then (A.6) can be rewritten as

(A.7) Combining (A.7) with the estimate of in Lemma A.2, we have

Denote , we have

Further, we have

(A.8) By the optimality conditions (3.1), we know

This implies that

By Cauchy inequality, the update (1.4c) and Lemma A.2, we have

Taking expectations on both sides (over ) and using (A.8), we have

From [3, Lemma 3] and Assumption 3.2, we know

Thus, we have

Summing from to and choosing , we get

Take from randomly. Then we obtain

The proof of Theorem 3.5 is completed. ∎

A.3 Convergence rates for variance-reduced gradient estimator

We first recall a supermartingale convergence theorem; see [5] and [21] for details.

Lemma A.4 (Supermartingale Convergence Theorem).
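For orientation, a commonly used form of this result, a special case of the Robbins–Siegmund theorem [21], is recalled below. The notation $X_k$, $Y_k$, $\mathcal{F}_k$ is chosen here for illustration, and the exact formulation used in the paper may differ.

```latex
Let $(\mathcal{F}_k)_{k \ge 0}$ be a filtration, and let $(X_k)_{k \ge 0}$ and
$(Y_k)_{k \ge 0}$ be sequences of nonnegative, integrable random variables
adapted to $(\mathcal{F}_k)_{k \ge 0}$ such that
\[
  \mathbb{E}\left[ X_{k+1} \mid \mathcal{F}_k \right] + Y_k \le X_k
  \qquad \text{for all } k \ge 0 .
\]
Then, almost surely, $\sum_{k=0}^{\infty} Y_k < \infty$ and $X_k$ converges to a
nonnegative, finite random variable $X_\infty$.
```

In the proof that follows, an inequality of this type is applied with $X_k$ playing the role of a nonnegative merit quantity and $Y_k$ the role of the squared step terms, which is what yields their almost sure summability.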


Proof of Theorem 3.7.

We will complete the proof of this theorem using Lemma A.3. According to Cauchy inequality, we get the following estimate

(A.9) Combining (A.9) with (A.1) in Lemma A.3, we have

(A.10) Simplifying the equation (A.10), we get

For any , adding to both sides of the equation yields

(A.11) Recalling the definition of in (3.3) and taking the conditional expectation with respect to on both sides of (A.11) yields

This is equivalent to

(A.12) According to (2.3), we have

(A.13) Combining (A.13) with (A.12), we get

(A.14) We rearrange (A.14) as

Recalling the definition of in (3.4) and taking the expectation on both sides of the above inequality yields

(A.15) According to Lemma A.2, inequality (A.15) can be rewritten as

Denote

Then we have

(A.16) When , (A.16) shows that is a decreasing function. Further, according to Lemma A.4, we know

Again, according to Lemma A.2 and (1.4c) we have

∎

In the above, we have shown that the squared norms of the sequence are summable almost surely. However, we still need to show that the norms themselves are summable, i.e., that the sequence has finite length. To do so, we first provide a bound on the norm of the subgradient .

Lemma A.5.

Suppose that Assumption 3.1 holds and is twice continuously differentiable with a bounded Hessian, i.e., there exists such that for any . Let be a sequence generated by Algorithm 1 using variance-reduced gradient estimators. Denote . Then, for any , we have

where . Moreover, for any critical point , we have .


Proof.

First, we denote

According to the definition of in (3.3), we can compute

(A.17a) (A.17b) (A.17c) and

Since , we have

By the optimality condition , we know

Then, we have . By further estimates, we get

(A.18a) (A.18b) (A.18c) (A.18d) (A.18e) (A.18f) (A.18g) Then we have the following estimate

where Because , we get

Furthermore, by the Convergence of Estimator, we have . Then

(A.19) Suppose that is any cluster point of . Then there exists a subsequence satisfying . By the subproblem (1.4b) in Algorithm 1, for any , we have

This is equivalent to

which means . Because is a lower semi-continuous function, we know , thus . Further, by the continuity of and , we get

Combined with (A.19), we have

∎

Next, we present the random version of the KL property, which is taken from Lemma 4.5 in [7].

Theorem A.6.

Let be a bounded sequence of iterates of Algorithm 1 using a variance-reduced gradient estimator, and suppose that is not a critical point after a finite number of iterations. Let be a semi-algebraic function satisfying the Kurdyka–Łojasiewicz property (see Definition 2.11) with exponent . Then there exists an index and desingularizing function so that the following holds almost surely:

where is a non-decreasing sequence converging to for some , where is the set of cluster points of .

In the following, we provide the convergence for STOS (Algorithm 1) under the assumption that the objective functions , and are semi-algebraic.


Proof of Theorem 3.9.

If , then also satisfies the KL property with exponent , so we only consider the case . By Theorem A.6, there exists a function such that, almost surely,

Using the bound on in Lemma A.5 and Jensen's inequality, we have

(A.20) Because for some vectors , we have . By the geometric decay in Definition 2.5 we can bound the term :

Rearranging and multiplying by yields

(A.21) Substituting (A.21) into (A.20), we get

(A.22) Define . Then we have . Therefore, for Using the definition of , we can rewrite this inequality as

Next we prove that the above inequality holds for , for which an additional term needs to be added to the denominator. By , , and , there exists an index and a constant such that

Denote

Since the terms above are small compared to , we can find a constant such that

for all . Using the fact that , we get

Hence, for ,

By the concavity of ,

where the last inequality is due to the non-decreasing property of . Denote , then

Since , we have

(A.23) Applying Young’s inequality to (A.23) yields

Then we have

(A.24) Summing inequality (A.24) from to ,

This implies that

where . Using Jensen’s inequality yields

Since is bounded, we obtain

Together with (1.4c), we get

∎