Stochastic noise can be helpful for variational quantum algorithms

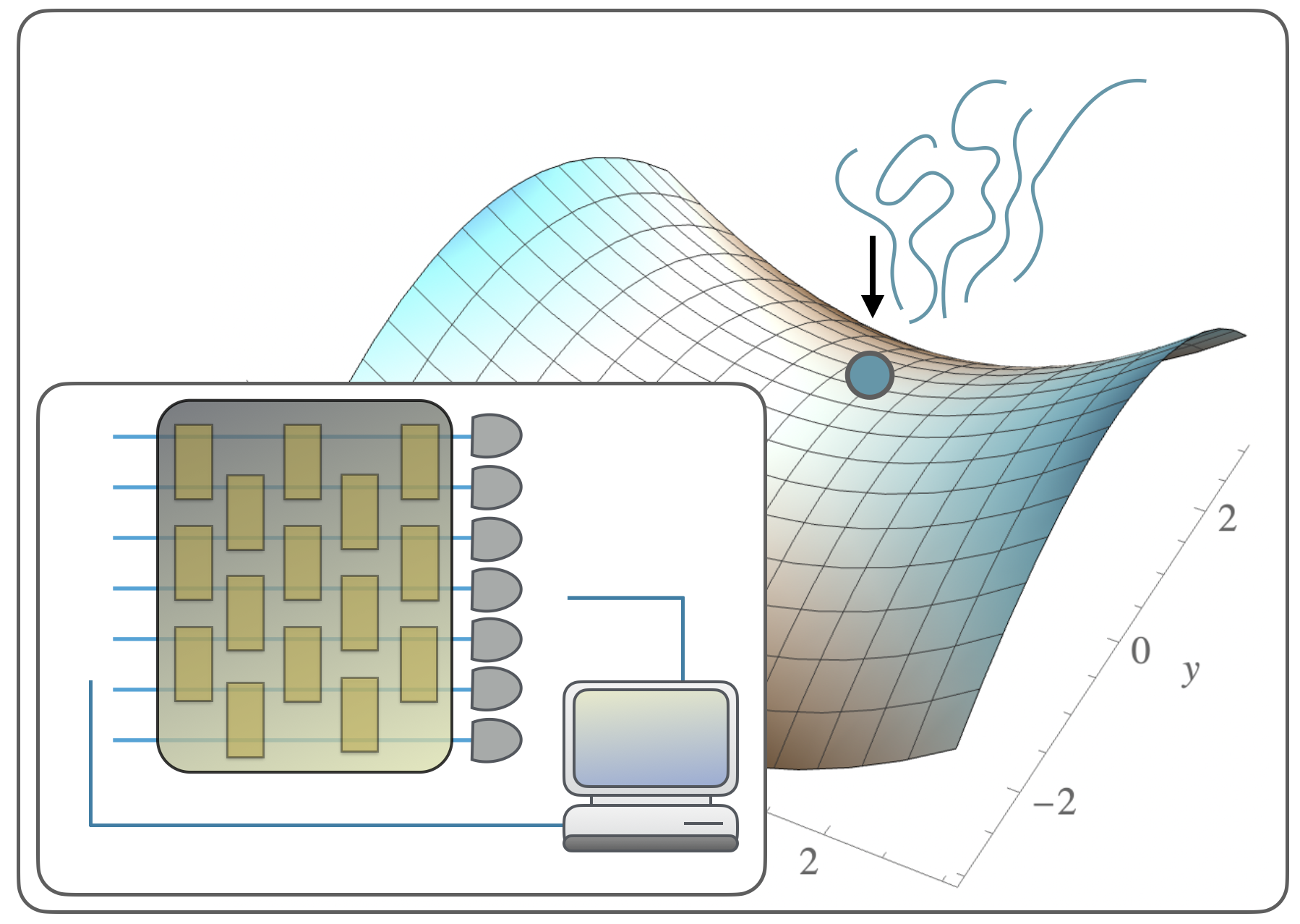

Saddle points constitute a crucial challenge for first-order gradient descent algorithms. In notions of classical machine learning, they are avoided for example by means of stochastic gradient descent methods. In this work, we provide evidence that the saddle points problem can be naturally avoided in variational quantum algorithms by exploiting the presence of stochasticity. We prove convergence guarantees and present practical examples in numerical simulations and on quantum hardware. We argue that the natural stochasticity of variational algorithms can be beneficial for avoiding strict saddle points, i.e., those saddle points with at least one negative Hessian eigenvalue. This insight that some levels of shot noise could help is expected to add a new perspective to notions of near-term variational quantum algorithms.

Quantum computing has for many years been a hugely inspiring theoretical idea. Already in the 1980ies it was suggested that quantum devices could possibly have superior computational capabilities over computers operating based on classical laws Feynman-1986 ; QuantumTuring . It is a relatively recent development that devices have been devised that may indeed have computational capabilities beyond classical means GoogleSupremacy ; Boixo ; SupremacyReview ; jurcevic_demonstration_2021 . These devices are going substantially beyond what was possible not long ago. And still, they are unavoidably noisy and imperfect for many years to come. The quantum devices that are available today and presumably will be in the near future are often conceived as hybrid quantum devices running variational quantum algorithms Cerezo_2021 , where a quantum circuit is addressed by a substantially larger surrounding classical circuit. This classical circuit takes measurements from the quantum device and appropriately varies variational parameters of the quantum device in an update. Large classes of variational quantum eigensolvers (VQE), the quantum approximate optimization algorithm (QAOA) and models for quantum-assisted machine learning are thought to operate along those lines, based on suitable loss functions to be minimized Peruzzo ; Kandala ; McClean_2016 ; QAOA ; Lukin ; bharti_2021_noisy ; VariationalReview . In fact, many near-term quantum algorithms in the era of noisy intermediate-scale quantum (NISQ) computing preskill_quantum_2018 belong to the class of variational quantum algorithms. While this is an exciting development, it puts a lot of burden to understanding how reasonable and practical classical control can be conceived.

Generally, when the optimization space is high-dimensional updates of the variational parameters are done via gradient evaluations PhysRevA.99.032331 ; bergholm2018pennylane ; pennylane ; Gradients , while zeroth-order and second-order methods are, in principle, also applicable, but typically only up to a limited number of parameters. This makes a lot of sense, as one may think that going downhill in a variational quantum algorithms is a good idea. That said, the concomitant classical optimization problems are generally not convex optimization problems and the variational landscapes are marred by globally suboptimal local optima and saddle points. In fact, it is known that the problems of optimizing variational parameters of quantum circuits are computationally hard in worst case complexity Bittel . While this is not of too much concern in practical considerations (since it is often sufficient to find a “good” local minimum instead of the global minimum) and resembles an analogous situation in classical machine learning, it does point to the fact that one should expect a rugged optimization landscape, featuring different local minima as well as saddle points. Such saddle points can indeed be a burden to feasible and practical classical optimization of variational quantum algorithms.

In this work, we establish the notion that in such situations, small noise levels can actually be of substantial help. More precisely, we show that some levels of statistical noise (specifically the kind of noise that naturally arises from a finite number of measurements to estimate quantum expectation values) can even be beneficial. We get inspiration from and build on a powerful mathematical theory in classical machine learning: there, theorems have been established that say that “noise” can help gradient-descent optimization not to get stuck at saddle points JordanSaddlePoints ; jain2017non . Building on such ideas we show that they can be adapted and developed to be applicable to the variational quantum algorithms setting. Then we argue that the “natural” statistical noise of a quantum experiment can play the role of the artificial noise that is inserted by hand in classical machine learning algorithms to avoid saddle points. We maintain the precise and rigorous mindset of Ref. JordanSaddlePoints , but show that the findings have practical importance and can be made concrete use of when running variational quantum algorithms on near-term quantum devices. In previous studies it has been observed anecdotally that small levels of noise can indeed be helpful for improving the optimization procedure duffield_bayesian_2022 ; Gradients ; Jansen ; Rosenkranz ; Lorenz ; Yelin2 ; gu_adaptive_2021 . What is more, variational algorithms have been seen as being noise-robust in a sense PhysRevA.102.052414 . That said, while in the past rigorous convergence guarantees have been formulated for convex loss functions of VQAs Gradients ; gu_adaptive_2021 , in this work we focus on the non-convex scenario, where saddle points and local minima are present. Such a systematic and rigorous analysis of the type we have conducted that explains the origin of the phenomenon of noise facilitating optimization has been lacking.

It is important to stress that for noise we refer to in our theorems is the type of noise that adds stochasticity to the gradient estimations, such as the use of a finite number of measurements or the zero-average fluctuations that are involved in real experiments. Also, instances of global depolarizing noise are covered as discussed in Appendix .2. Thus, in this case, noise does not mean the generic quantum noise that results from the interaction with the environment characterized by completely positive and trace-preserving (CPTP) maps, which can be substantially detrimental to the performance of the algorithm DePalmaVQAs ; Stilck_Fran_a_2021 . In addition, it has been shown that noisy CPTP maps in the circuit may significantly worsen the problem of barren plateaus NoisyBP_Wang_2021 ; McClean_2018 which is one of the main obstacles to the scalability of variational quantum algorithms (VQAs).

We perform numerical experiments, and we show examples where optimizations with gradient descent without noise get stuck at saddle points, whereas if we add some noise, we can escape this problem and get to the minimum—convincingly demonstrating the functioning of the approach. We verify the latter not only in a numerical simulation, but also making use of data of a real IBM quantum machine.

Preliminaries

In our work, we will show how a class of saddle points, the so-called strict saddle points, can be avoided in noisy gradient descent. In developing our machinery, we build strongly on the rigorous results laid out in Ref. JordanSaddlePoints and uplift them to the quantum setting at hand. For this, we do method development in its own right. First, we introduce some useful definitions and theorems (see Ref. JordanSaddlePoints for a more in-depth discussion).

Throughout this work, we consider the problem of minimizing a function . We indicate its gradient at as and its Hessian matrix at point as . We denote as the norm of a vector. and denote respectively the Hilbert-Schmidt norm and the largest eigenvalue norm of a matrix. We denote as the minimum eigenvalue of a matrix.

Definition 1 (-Lipschitz function).

A function is -Lipschitz if and only if

| (1) |

for every and .

Definition 2 (-strong smoothness).

A differentiable function is called -strongly smooth if and only if its gradient is a -Lipschitz function, i.e.,

| (2) |

for every and .

Definition 3 (Stationary point).

If is differentiable, is defined a stationary point if

| (3) |

Definition 4 (-approximate stationary point).

If is differentiable, is defined an -approximate stationary point if

| (4) |

Definition 5 (Local minimum, local maximum, and saddle point).

If is differentiable, a stationary point is a

-

•

local minimum, if there exists such that for any with .

-

•

Local maximum, if there exists such that for any with .

-

•

Saddle point, otherwise.

Definition 6 (-Lipschitz Hessian).

A twice differentiable function has -Lipschitz Hessian matrix if and only if

| (5) |

for every and (where is the Hilbert-Schmidt norm).

Definition 7 (Gradient descent).

Given a differentiable function , the gradient descent algorithm is defined by the update rule

| (6) |

where is called learning rate.

The convergence time of the gradient descent algorithm is given by the following theorem JordanSaddlePoints .

Theorem 8 (Gradient descent complexity).

Given a -strongly smooth function , for any , if we set the learning rate as , then the number of iterations required by the gradient descent algorithm such that it will visit an -approximate stationary point is

where is the initial point and is the value of computed in the global minimum.

It is important to note that this result does not depend on the number of free parameters. Also, the stationary point at which the algorithm will converge is not necessarily a local minimum, but can also be a saddle point. Note that a generic saddle point satisfies where is the minimum eigenvalue. Now we define a subclass of saddle points.

Definition 9 (Strict saddle point).

is a strict saddle point for a twice differentiable function if and only if is a stationary point and if the minimum eigenvalue of the Hessian is .

Adding the strict condition, we remove the case in which a saddle point satisfies . Moreover, note that a local maximum respects our definition of strict saddle point. Analogously to Ref. JordanSaddlePoints , in this work, we focus on avoiding strict saddle points. Hence, it is useful to introduce the following definition.

Definition 10 (Second-order stationary point).

Given a twice differentiable function is a second-order stationary point if and only if

| (7) |

Definition 11 (-second-order stationary point).

For a -Hessian Lipschitz function is an -second-order stationary point if

| (8) |

Gradient descent makes a non-zero step only when the gradient is non-zero, and thus in the non-convex setting it will be stuck at saddle points. A simple variant of GD is the perturbed gradient descent (PGD) method JordanSaddlePoints which adds randomness to the iterates at each step.

Definition 12 (Perturbed gradient descent).

Given a differentiable function , the perturbed gradient descent algorithm is defined by the update rule

| (9) |

where is the learning rate and is a normally distributed random variable with mean and variance with .

In Ref. JordanSaddlePoints , the authors show that if we pick , PGD will find an -second-order stationary point in a number of iterations that has only a poly-logarithmic dependence on the number of free parameters, i.e., it has the same complexity of (standard) gradient descent up to poly-logarithmic dependence.

Theorem 13 (JordanSaddlePoints ).

Let the function be strongly smooth and such that it has a Lipschitz-Hessian. Then, for any , the algorithm starting at the point , with parameters and , will visit an second-order stationary point at least once in the following number of iterations, with probability at least

where and hide poly-logarithmic factors in and . is the initial point and is the value of computed in the global minimum.

This theorem has been proven in Ref. JordanSaddlePoints for Gaussian distributions, but the authors have pointed out that this is not strictly necessary and that it can be generalized to other types of probability distributions in which appropriate concentration inequalities can be applied (for a more in-depth discussion, see Ref. JordanSaddlePoints ).

In Ref. ExpTimeSaddle , it has been shown that although the standard GD (without perturbations) almost always escapes the saddle points asymptotically Lee2017 , there are (non-pathological) cases in which the optimization requires exponential time to escape. This highlights the importance of using gradient descent with perturbations.

Statistical noise in variational quantum algorithms

Our analysis focuses on variational quantum algorithms in which the loss function to be minimized has the following form

| (10) |

where is a Hermitian operator and is a parameterized unitary of the form

| (11) |

where and are respectively fixed unitaries and Hermitian operators. Theorem 13 above assumes that the loss function to minimize is -strongly smooth and has a -Lipschitz Hessian. To guarantee that these conditions are met for loss functions of parametrized quantum circuits, we provide the following theorem.

Theorem 14.

The loss function given in equation (10) with being a -dimensional vector is -strongly smooth and has a -Lipschitz Hessian. In particular, we have

| (12) | ||||

| (13) |

We provide a detailed proof in Appendix .1. It is important to observe that for typical VQAs, the observable associated to the loss function and the components have an operator norm that grows at most polynomially with the number of qubits, so also and will grow at most polynomially. This is because the circuit depth must be chosen to be at most , as well as are usually chosen to be Pauli strings in which case their operator norms is or to be linear combinations of many Pauli strings with coefficients (as in QAOA), therefore by the triangle inequality their operator norm is bounded by . Sometimes is also chosen to be a quantum state Cerezo_2021 , therefore with operator norm bounded by . Hence, the number of iterations in Theorem 13 does not grow exponentially in the number of qubits.

The previous results can be easily generalized for the case of differentiable and bounded loss functions which are functions of expectation values, i.e.,

| (14) |

In fact, we observe that if and are Lipschitz functions, then

In addition, if is a differentiable function with bounded derivatives on a convex set, then (because of the mean value theorem) is Lipschitz on this set. From this follows that if is a differentiable function with bounded derivatives of a quantum expectation value (whose image defines a bounded interval), then it is Lipschitz. Moreover the sum of Lipschitz functions is a Lipschitz function. Therefore, functions of expectation values commonly used in machine learning tasks, such as the mean squared error, satisfy the Lipschitz condition.

Moreover, Theorem 13 assumes that at each step of the gradient descent a normally distributed random variable is added to the gradient, namely . In VQAs the partial derivatives are commonly estimated using a finite number of measurements, such as by the so-called parameter shift rule GradientsQcomp . Here, the update rule for the gradient descent is

| (16) |

where is an estimator of the partial derivative obtained by a finite number of measurements from the quantum device. Moreover, we define

| (17) |

Note that is a random variable with zero expectation value. Therefore, we have

| (18) |

The “noise” will play the role of the noise that is added by hand in the perturbed-gradient descent algorithm 12. However, we cannot control exactly the distribution of such random variable, nor the variance. However, it is to be expected that in the limit of many measurement shots, by the central limit theorem, the noise encountered in practice will be close to the noise considered here, a Gaussian distribution.

Numerical and quantum experiments

In this section, we discuss the results of numerical and quantum experiments we have performed to show that stochasticity can help escape saddle points. Our results suggest that statistical noise leads to a non-vanishing probability of not getting stuck in a saddle point and thereby reaching a lower value of the loss function. These numerical experiments also complement the rigorous results that are proven to be valid under very precisely defined conditions, while the intuition developed here is expected to be more broadly applicable, so that the rigorous results can be seen as proxies for a more general mindset. Modify (16) We have also observed this phenomena in a real IBM quantum device. We have done so to convincingly stress the significance of our results in practice.

Let us first consider the Hamiltonian . The loss function we consider is defined as the expectation value of such a Hamiltonian over the parametrized quantum circuit qml.StronglyEntanglingLayers implemented in Pennylane bergholm2018pennylane , where two layers of the circuit are used. In all our experiments we first initialize the parameters in multiple randomly chosen values. Next, we select the initial points for which the optimization process gets stuck at a suboptimal loss-function value, thereby focusing on cases in which saddle points constitute a significant problem for the (noiseless) optimizer. This selection can be justified by the fact that the loss function defined by is trivial to begin with. The relevant aspect of this experiment is to study situations in which optimizer encounters saddle points. As such we exclusively investigate these specifically selected initial points by subsequently initializing the noisy optimizer with them.

As a proof of principle, we first show the results of an exact simulation (i.e., the expectation values are not estimated using a finite number of shots, but are calculated exactly) in which noise is added manually at each step of the gradient descent. The probability distribution associated to the noise is chosen to be a Gaussian distribution with mean and variance . Figure 2 shows the difference between the noiseless, and noisy calculations with the same initial conditions of the gradient descent, when the noise is from random Gaussian perturbations added manually. Figure 3 shows the performance of the experiment, defined as as a function of the noise parameter. Here, we can find a critical value of noise, leading to saddle-point avoidance. Figure 4 specifically addresses quantum noise levels, with simulated results about purely statistical noise levels (shot noise) and device noise (simulated by making use of the noise model of actual quantum hardware IBM Qiskit).

It should be noted that including device noise generally also means dealing with completely positive trace preserving maps that can lead to a different loss function, with new local minima, new saddle points and a flatter landscape NoisyBP_Wang_2021 . However, even in this case we observe an improvement in performance using the same initial parameters leading to the saddle point in the noiseless case. This is perfectly in line with the intuition developed here, as long as the effective noise emerging can be seen as a small perturbation of the reference circuit featuring a given loss landscape that is then in effect perturbed by stochastic noise.

Aside from the quantum machine learning example, we also provide another instance in variational quantum eigensolvers (VQEs). Here, we use the Hamiltonian associated to the Hydrogen molecule , which is a 4-qubit Hamiltonian obtained by the fermionic one performing a Jordan-Wigner transformation. We specifically use the same circuit from h2.xyz, the Hydrogen VQE example in Pennylane pennylane . Also here, given initial parameters that led to saddle points in the noiseless case, we find that starting by the same parameters and adding noise can lead to saddle-point avoidance. Results are shown in Fig. 5 where we compare the noiseless and noisy simulation.

To further provide evidence of the functioning of our approach suggested and the rigorous insights established, we put the findings into contact of the results of a real experiment example in the IBM Qiskit environment. We use the Hamiltonian that we used in our first numerical simulation with four qubits and two layers. We run the experiment using as initial condition the one that lead to a saddle point in the noiseless case. We use the IBMQ_Jakarta device with 10000 shots. The result in Figure 6 shows that it is possible to obtain a lower value of the cost function than that of the simulation without noise that has been stuck in a saddle point.

Conclusion and outlook

In this work, we have proposed small stochastic noise levels as an instrument to facilitate variational quantum algorithms. This noise can be substantial, but should not be too large: The way a noise level can strike the balance in overcoming getting stuck in saddle points and being detrimental is in some ways reminiscent of the phenomenon of stochastic resonance in statistical physics StochasticResonance . This is a phenomenon in which suitable small increases in levels of noise can increase in a metric of the quality of signal transmission, resonance or detection performance, rather than a decrease. Here, also fine tuned noise levels can facilitate resonance behavior and avoid getting trapped.

On a higher level, our work invites to think more deeply about the use of classical stochasic noise in variational quantum algorithms as well as ways to prove performance guarantees about such approaches. For example, Metropolis sampling inspired classical algorithms in which a stochastic process satisfying detailed balance is set up over variational quantum circuits may assist in avoiding getting stuck in rugged energy landscapes.

It is also interesting to note that the technical results obtained here provide further insights into an alternative interpretation of the setting discussed here. Instead of regarding the noise as stochastic noise that facilitates the optimization in the proven fashion established here, one may argue that the noise channels associated with the noise alter the variational landscapes in the first place PhysRevA.104.022403 ; PRXQuantum.2.040309 . For example, such quantum channels are known to be able to break parameter symmetries in over-parameterized variational algorithms. It is plausible to assume that these altered variational landscapes may be easier to optimize over. It is an interesting observation in its own right that the technical results obtained here also have implications to this alternative viewpoint, as the convergence guarantees are independent on the interpretation.

It is the hope that the present work puts the role of stochasticity in variational quantum computing into a new perspective, and contributes to a line of thought exploring the use of suitable noise and sampling for enhancing quantum computing schemes.

Author contributions

J. E. and J. L. have suggested to exploit classical stochastic noise in variational quantum algorithms, and to prove convergence guarantees for the performance of the resulting algorithms. J. L., A. A. M., F. W., and J. E. have proven the theorems of convergence. J. L. and F. W. have devised and conducted the numerical simulations. J. L. has performed quantum device experiments under the guidance of L. J. All authors have discussed the results and have written the manuscript.

Acknowledgments

J. L. is supported in part by International Business Machines (IBM) Quantum through the Chicago Quantum Exchange, and the Pritzker School of Molecular Engineering at the University of Chicago through AFOSR MURI (FA9550-21-1-0209). F. W., A. A. M. and J. E. thank the BMBF (Hybrid, MuniQC-Atoms, DAQC), the BMWK (EniQmA, PlanQK), the MATH+ Cluster of Excellence, the Einstein Foundation (Einstein Unit on Quantum Devices), the QuantERA (HQCC), the Munich Quantum Valley (K8), and the DFG (CRC 183) for support. LJ acknowledges support from the ARO (W911NF-18-1-0020, W911NF-18-1-0212), ARO MURI (W911NF-16-1-0349), AFOSR MURI (FA9550-19-1-0399, FA9550-21-1-0209), DoE Q-NEXT, NSF (EFMA-1640959, OMA-1936118, EEC-1941583), NTT Research, and the Packard Foundation (2013-39273). This research used resources of the Oak Ridge Leadership Computing Facility, which is a DOE Office of Science User Facility supported under Contract DE-AC05-00OR22725.

References

- (1) Feynman, R. P. Quantum mechanical computers. Found. Phys. 16, 507–531 (1986).

- (2) Deutsch, D. Quantum theory, the Church-Turing principle and the universal quantum computer. Proc. Roy. Soc. A 400, 97–117 (1985).

- (3) Arute, F. et al. Quantum supremacy using a programmable superconducting processor. Nature 574, 505–510 (2019).

- (4) Boixo, S. et al. Characterizing quantum supremacy in near-term devices. Nature Phys. 14, 595–600 (2018).

- (5) Hangleiter, D. & Eisert, J. Computational advantage of quantum random sampling. arXiv:2206.04079 (2022).

- (6) Jurcevic, P. et al. Demonstration of quantum volume 64 on a superconducting quantum computing system. Quantum Sci. Technol. 6, 025020 (2021).

- (7) Cerezo, M. et al. Variational quantum algorithms. Nature Rev. Phys. 3, 625–644 (2021).

- (8) Peruzzo, A. et al. A variational eigenvalue solver on a photonic quantum processor. Nature Comm. 5, 4213 (2014).

- (9) Kandala, A. et al. Hardware-efficient variational quantum eigensolver for small molecules and quantum magnets. Nature 549, 242 (2017).

- (10) McClean, J. R., Romero, J., Babbush, R. & Aspuru-Guzik, A. The theory of variational hybrid quantum-classical algorithms. New J. Phys. 18, 023023 (2016).

- (11) Farhi, E., Goldstone, J. & Gutmann, S. A quantum approximate optimization algorithm. arxiv:1411.4028 (2014).

- (12) Zhou, L., Wang, S.-T., Choi, S., Pichler, H. & Lukin, M. Quantum approximate optimization algorithm: Performance, mechanism, and implementation on near-term devices. Phys. Rev. X 10, 021067 (2020).

- (13) Bharti, K. et al. Noisy intermediate-scale quantum (NISQ) algorithms. Rev. Mod. Phys. 94, 015004 (2022).

- (14) Tilly, J. et al. The variational quantum eigensolver: a review of methods and best practices. arXiv:2111.05176 (2021).

- (15) Preskill, J. Quantum computing in the NISQ era and beyond. arXiv:1801.00862 (2018).

- (16) Schuld, M., Bergholm, V., Gogolin, C., Izaac, J. & Killoran, N. Evaluating analytic gradients on quantum hardware. Phys. Rev. A 99, 032331 (2019).

- (17) Bergholm, V., Izaac, J., Schuld, M., Gogolin, C. & Killoran, N. Pennylane: Automatic differentiation of hybrid quantum-classical computations. arXiv:1811.04968 (2018).

- (18) https://pennylane.ai/qml/demos/tutorial_vqe_qng.html#stokes2019.

- (19) Sweke, R. et al. Stochastic gradient descent for hybrid quantum-classical optimization. Quantum 4, 314 (2020).

- (20) Bittel, L. & Kliesch, M. Training variational quantum algorithms is NP-hard. Phys. Rev. Lett. 127, 120502 (2021).

- (21) Jin, C., Netrapalli, P., Ge, R., Kakade, S. M. & Jordan, M. I. On nonconvex optimization for machine learning: Gradients, stochasticity, and saddle points. arXiv:1902.04811 (2019).

- (22) Jain, P. & Kar, P. Non-convex optimization for machine learning. arXiv:1712.07897 (2017).

- (23) Duffield, S., Benedetti, M. & Rosenkranz, M. Bayesian learning of parameterised quantum circuits (2022). ArXiv:2206.07559 [quant-ph, stat].

- (24) Borras, K. et al. Impact of quantum noise on the training of quantum generative adversarial networks. arXiv:2203.01007 (2022).

- (25) Duffield, S., Benedetti, M. & Rosenkranz, M. Bayesian learning of parameterised quantum circuits. arXiv:2206.07559 (2022).

- (26) Oliv, M., Matic, A., Messerer, T. & Lorenz, J. M. Evaluating the impact of noise on the performance of the variational quantum eigensolver. arXiv:2209.12803 .

- (27) Patti, T. L., Najafi, K., Gao, X. & Yelin, S. F. Entanglement devised barren plateau mitigation. arXiv:2012.12658 (2020).

- (28) Gu, A., Lowe, A., Dub, P. A., Coles, P. J. & Arrasmith, A. Adaptive shot allocation for fast convergence in variational quantum algorithms. arXiv.2108.10434 (2021).

- (29) Gentini, L., Cuccoli, A., Pirandola, S., Verrucchi, P. & Banchi, L. Noise-resilient variational hybrid quantum-classical optimization. Phys. Rev. A 102, 052414 (2020).

- (30) De Palma, G., Marvian, M., Rouzé, C. & França, D. S. Limitations of variational quantum algorithms: a quantum optimal transport approach. arXiv:2204.03455 (2022).

- (31) França, D. S. & García-Patrón, R. Limitations of optimization algorithms on noisy quantum devices. Nature Phys. 17, 1221–1227 (2021).

- (32) Wang, S. et al. Noise-induced barren plateaus in variational quantum algorithms. Nature Comm. 12, 6961 (2021).

- (33) McClean, J. R., Boixo, S., Smelyanskiy, V. N., Babbush, R. & Neven, H. Barren plateaus in quantum neural network training landscapes. Nature Comm. 9 (2018).

- (34) Du, S. S. et al. Gradient descent can take exponential time to escape saddle points. arXiv:1705.10412 (2017).

- (35) Lee, J. D. et al. First-order methods almost always avoid saddle points. arXiv:1710.07406 (2017).

- (36) Schuld, M., Bergholm, V., Gogolin, C., Izaac, J. & Killoran, N. Evaluating analytic gradients on quantum hardware. Phys. Rev. A 99, 032331 (2019).

- (37) Benzi, R., Sutera, A. & Vulpiani, A. The mechanism of stochastic resonance. J. Phys. A 14, L453–L457 (1981).

- (38) Fontana, E., Fitzpatrick, N., Ramo, D. M. n., Duncan, R. & Rungger, I. Evaluating the noise resilience of variational quantum algorithms. Phys. Rev. A 104, 022403 (2021).

- (39) Haug, T., Bharti, K. & Kim, M. Capacity and quantum geometry of parametrized quantum circuits. PRX Quantum 2, 040309 (2021).

- (40) Patel, D., Coles, P. J. & Wilde, M. M. Variational quantum algorithms for semidefinite programming. arXiv:2112.08859 (2021).

- (41) Pólya, G. Über eine Aufgabe der Wahrscheinlichkeitsrechnung betreffend die Irrfahrt im Straßennetz. Math. Ann. 84, 149–160 (1921).

- (42) Montroll, E. W. Random walks in multidimensional spaces, especially on periodic lattices. J. Soc. Ind. Appl. Math. 4, 241–260 (1956).

- (43) Liu, J., Tacchino, F., Glick, J. R., Jiang, L. & Mezzacapo, A. Representation learning via quantum neural tangent kernels. arXiv:2111.04225 (2021).

- (44) Liu, J. et al. An analytic theory for the dynamics of wide quantum neural networks. arXiv:2203.16711 (2022).

- (45) Liu, J., Lin, Z. & Jiang, L. Laziness, barren plateau, and noise in machine learning. arXiv:2206.09313 (2022).

Supplementary material

.1 Strong smoothness and Lipschitz-Hessian property

In this section we provide a proof of Theorem 14. As stated in the main text we focus our analysis on ansatz circuits of the form

| (19) |

where and are respectively fixed unitaries and Hermitian operators. As a reminder, we want to show that the loss function

| (20) |

is -strongly smooth and has a -Lipschitz Hessian, with

| (21) | ||||

| (22) |

To begin, we state three important facts about Lipschitz constants of multi-variate functions.

Lemma 15.

If is differentiable with bounded partial derivatives, then

| (23) |

is the Lipschitz constant for .

The proof is given in Ref. WildeSDP (Lemma 7).

Lemma 16.

If is a function with all its components Lipschitz functions with Lipschitz constant , then has Lipschitz constant .

The proof is given in Ref. WildeSDP (Lemma 8). Equipped with these facts we can proceed to derive an upper bound for the Lipschitz constant of functions from to .

Lemma 17.

If is a differentiable function with bounded gradient, then its Lipschitz constant satisfies

| (24) |

where the -th component of .

Proof.

Next, we focus on loss functions of the type in equation (10).

Lemma 18.

Proof.

We introduce the standard multi-index notation. For this, we have

| (27) |

With this, the multi derivative of the loss reads

| (30) |

where we have expolited the generalized Leibniz formula

| (33) |

We have

| (35) | ||||

| (36) |

where we have used the triangle inequality and the multi-binomial theorem formula to write

| (37) |

the fact that

| (38) |

which follows immediately by Cauchy-Schwarz and the sub-additivity of the norm. Using the form of the parameterized unitary in Eq. (19), we can also observe that

| (39) |

where we have used that the subadditivity of the infinity norm and the fact that the spectral norm of a unitary matrix is given by the unity. Similarly, we have

| (40) |

Therefore, combining the previous two inequalities with Eq. (36), we have

| (41) |

where we have used . ∎

We are now ready to provide the proof of Theorem 14. Since the loss function is a combination of sin and cosin functions, its derivatives exist and are bounded, and from this it follows that the loss function is strongly smooth and its Hessian is Lipschitz. However, it is worth explicitly calculating and and bounding them to verify, for example, the scaling with the number of qubits.

Proof.

We have the -smooth constant defined by the smallest with

| (42) |

which means we need to consider the Lipschitz constant for the -dimensional function . Using Lemma 17 where and , we have

| (43) |

Applying Lemma 18, we find

| (44) |

where we have used the matrix spectral (operator) norm.

The -Hessian constant is defined as

| (45) |

where is the Hessian matrix and we have used the Hilbert-Schmidt matrix norm. Note that the Hilbert Schmidt norm of a matrix is the 2-norm of the matrix vectorization . We can now apply Lemma 17, where since the Hessian is a map from to . Defining we find

| (46) |

Thus, applying Lemma 18, we arrive at

| (47) |

∎

.2 Discussion on more general noise

In this section, we discuss the impact of more general noise. We assume that we have device noise that is constant in time, i.e., for each state preparation, effectively, we always encounter the same CPTP maps acting on the initial state. This will change the state at the end of the circuit to a noisy instance which can be modeled as the applications of parametrized unitary layers interspersed with suitable CPTP maps to the initial state :

| (48) |

where and are respectively noisy CPTP maps and the parametrized unitary channels. As a result, the loss function changes to:

| (49) |

i.e., we now have to optimize an inherently different loss function. Still, to estimate the noisy loss function , also here we will have to deal with statistical noise derived by the finite number of measurements. In particular for the case of global depolarizing noise with depolarizing noise parameter

| (50) |

the cost function will be

| (51) |

Therefore, in such case, the landscape of the cost function will be rescaled and shifted, but will preserve features of the noiseless-landscape like the position of saddle points.

.3 Additional numerical results

In this section, we show additional numerical results to the one reported in the main text. In Fig. 8, we show the estimated probability of avoiding the saddle point as a function of the number of shots, for the loss function given by the expectation value of the local Pauli Hamiltonian over the circuit qml.StronglyEntanglingLayers (see Fig. 7) in Pennylane bergholm2018pennylane where two layers have been used.

One could directly extend our results towards the description of situations involving more qubits. Here, we consider eight qubits and two layers of quantum gates. Now the loss landscape is richer and we can converge at more integers. For instance, we find convergence at , , , for different initial conditions. Figure 10 illustrates saddle-point avoidance with different noise levels when noise is selected from Gaussian distributions. Figure 9 illustrates the performances among different sizes of noise levels and one can again find a critical value of the noise which leads to the saddle-point avoidance.

In Fig. 11, we depict the performances as a function of the noise level obtained for the molecule experiment.

Furthermore, we try to find the relation between the convergence time and the noise size . With the same setup, we plot the dependence between the convergence time (the time where we approximately get the true minimum) and the size of the noise in the Gaussian distribution case, in Fig. 12. We find that the convergence time indeed decays when we add more noise, and we fit the scaling and find where , which is consistent with the bound in theory. In Section .4, we provide a heuristic derivation on the scaling by dimensional analysis and other analytic heuristics.

Finally, we examine the generality of initial points that could be trapped by saddle points. In Fig. 13, we have studied 1000 initial variational angles randomly sampled between in the same setup of Fig. 3 with four qubits. Under identical gradient descent conditions, we have observed that 305 initial points lead to saddle points rather than local minima in the absence of noise. Consequently, the estimated probability of getting stuck near saddle points in our study is approximately . Classical machine learning theory demonstrates that saddle points are not anomalies but rather common features in loss function landscapes, making stochastic gradient descents crucial for most traditional machine learning applications. We anticipate that in quantum machine learning, getting trapped by saddle points will also be a common occurrence, with the likelihood of entrapment increasing in higher-dimensional settings. Here’s a straightforward explanation: Saddle points are determined by the signs of Hessian eigenvalues. In models with parameters, there are p Hessian eigenvalues. Assuming equal chances of positive and negative eigenvalues, the probability of obtaining a positive semi-definite Hessian becomes exponentially small (). Therefore, as the model size increases, saddle points become increasingly prevalent.

.4 Analytic heuristics

In this section, we provide a set of analytic heuristics about predicting the noisy convergence and the critical noise with significant improvements in performance. Our derivation is physical and heuristic, but we expect that they will be helpful to understand the nature of the noisy dynamics during gradient descent in the quantum devices. The results developed corroborate the idea that a balance between too little and too much noise will have to be struck.

.4.1 Brownian motion and the Polya’s constant

One of the simplest heuristics about noisy gradient descent is the theory of Brownian motion. Define , also known as Polya’s constant, as the likelihood that a random walk on a -dimensional lattice has the capability to return to its starting point. It has been proven that polya1921aufgabe ,

| (52) |

but

| (53) |

In fact, has the closed formula montroll1956random

| (54) |

where is the number of training parameter in our case, and is the modified Bessel function of the first kind. One could compute numerical values of the probability for increasing . From to , it changes monotonically from 0.19 to 0.07. It is hard to compute the integral accurately because of damping, but it is clear that it is decaying and will vanish for large . In our problem, we could regard the process of noisy gradient descent as random walks in the space of variational angles. One could regard the returning probability roughly as the probability of coming back to the saddle point from the minimum. Thus, the statement about lattice random walk gives us intuition that it is less likely to return back when we have a large number of variational angles.

.4.2 Guessing by dimensional analysis

One of the primary progress of the technical result presented in Ref. ExpTimeSaddle is the dependence on the convergence time with the size of the noise . Here, we show that one could guess such a result in the small limit (where is the learning rate) simply by dimensional analysis. Starting from the definition of the gradient descent algorithm,

| (55) |

we can instead study the variation of the loss function

| (56) |

Here, we use the assumption where is small, such that we could expand the loss function change by the first order Taylor expansion. Now we define

| (57) |

and we have

| (58) |

If is a constant (and we could assume it is true since we are doing dimensional analysis), we get

| (59) |

In general, we can assume a time-dependent solution as

| (60) |

Now let us think about how the scaling of convergence time will be with noise. First, in the limit, for small the convergence time would get smaller, not larger (since it is immediately dominated by noise). So it is not possible that to some powers in . So the only possibility is

| (61) |

in the scaling of . We will thus focus on the first term in the small limit. Furthermore, from the form we know that .

Now let us count the dimension, assuming has the -dimension 1 and has the -dimension 0. From the gradient descent formula, has the -dimension 2, has the -dimension -2, and has the -dimension 1. The time is dimensionless since is dimensionless and appears in the exponent. Thus, since we know that , there must be an extra factor balancing the -dimension of . The only choice is , and we cannot use because we are studying the term with the -scaling . Thus, we immediately get, . That is how we get the dependence by dimensional analysis. Note that the estimation only works in the small limit. More generally, we have

| (62) |

if we assume that the expression of is analytic.

.4.3 Large-width limit

The dependence can also be made plausible using the quantum neural tangent kernel (QNTK) theory. The QNTK theory has been established Liu:2021wqr ; Liu:2022eqa ; Liu:2022rhw in the limit where we have a large number of trainable angles and a small learning rate , with the quadratic loss function. According to Ref. Liu:2022rhw , we use the loss function

| (63) |

Here, we make predictions on the eigenvalue of the operator towards . And we use as the variational ansatz. The gradient descent algorithm is

| (64) |

when there is no noise. Furthermore, we hereby model the noise by adding Gaussian random variables in each step of the update. Those random fluctuations are independently distributed through . Now, in the limit where is large, we have an analytic solution of the convergence time, given by

| (65) |

where . In the small limit, we have

| (66) |

This gives substance to the claim in the dimensional analysis.

.4.4 Critical noise from random walks

Moreover, using the result from Ref. Liu:2022rhw , we can also estimate the critical noise , namely, the critical value of phase transition of the noise size that leads to better performance and avoiding the saddle points.

In particular, here we will be interested in the case where the saddle-point avoidance is triggered purely by random walks without any extra potential. The assumption, although may not be real in the practical loss function landscape, might still provide some useful guidance.

According to Ref. Liu:2022rhw , we have

| (67) |

Here is the variance of the residual training error after averaging over the realizations of the noise. Imagine that now the gradient descent process is running from the saddle point to the exact local minimum, we have

| (68) |

where is the distance of the loss function from the saddle point to the local minimum (defined also in the main text), . So we get an estimate of the critical noise,

| (69) |

Here, in the most right hand side of the formula, we use the approximation where is small enough. This formula might be more generic beyond QNTK, since one could regard it as an analog of Einstein’s formula of Brownian motion,

| (70) |

with the averaging moving distance square , mass diffusivity , and time in the Brownian motion.

One could also show such a scaling in the linear model. Say that we have a linear loss function

| (71) |

with constants and . For simplicity, we assume that the initialization makes . The gradient descent relation is

| (72) |

One could find the closed-form solution

| (73) |

One could also find the change of the loss function

| (74) |

The convergence time could be estimated as

| (75) |

Now, instead, we add a random in the gradient descent dynamics, which is following the normal distribution . Now, the stochastic gradient descent equation is

| (76) |

which gives the solution

| (77) |

Thus, we get the loss function

| (78) |

The critical point can be identified as

| (79) |

where the standard deviation of the noise term will compensate the decay. Thus, we get

| (80) |

In the limit where the noise levels are small, we can study the behavior in the late time limit

| (81) |

So we get

| (82) |

which is

| (83) |

Thus, the linear model result is consistent with the derivation using QNTK with the quadratic loss.

.4.5 Phenomenological critical noise

In practice, random walks may not be the only source triggering the saddle-point avoidance, leading to the scaling in loss functions. Since saddle points have negative Hessian eigenvalues, those directions will provide driven forces with linear contributions in the loss function. In the linear model, we can estimate the critical noise as

| (84) |

which leads to the linear relation

| (85) |

In Fig. 14, we show the dependence of the critical noise on in our numerical example with four qubits from Fig. 3 and its linear fitting. We find that our theory is justified for a decent range of learning rate. In our numerical data, in smaller learning rates the increase of the critical noise might be smoother, while for larger critical noise, the growth is more close to linear scaling. If we fit for the exponent of we get .

One could also transform critical learning rates towards the number of shots if the noises are dominated from quantum measurements. One can assume the scaling

| (86) |

and we could obtain the optimal number of shots

| (87) |

One can then take as a good approximation, assuming that the saddle-point avoidance is dominated by negative saddle-point eigenvalues. is a constant depending on the circuit architecture and the loss function landscapes. This formalism could be useful to estimate the optimal number of shots used in variational quantum algorithms. For instance, we take and we get

| (88) |

In the situation of Fig. 3, we obtain

| (89) |

with estimated as from Qiskit. So we get

| (90) |

which is the optimal numbers of shots in this experiment with pure measurement noises. Here, .