Stochastic Dynamic Information Flow Tracking Game using

Supervised Learning for Detecting Advanced Persistent Threats

Abstract

Advanced persistent threats (APTs) are organized prolonged cyberattacks by sophisticated attackers with the intend of stealing critical information. Although APT activities are stealthy and evade detection by traditional detection tools, they interact with the system components to make progress in the attack. These interactions lead to information flows that are recorded in the form of a system log. Dynamic Information Flow Tracking (DIFT) has been proposed as one of the effective ways to detect APTs using the information flows. A DIFT-based detection mechanism dynamically performs security analysis on the information flows to detect possible attacks. However, wide range security analysis using DIFT results in a significant increase in performance overhead and high rates of false-positives and false-negatives. In this paper, we model the strategic interaction between APT and DIFT as a non-cooperative stochastic game. The game unfolds on a state space constructed from an information flow graph (IFG) that is extracted from the system log. The objective of the APT in the game is to choose transitions in the IFG to find an optimal path in the IFG from an entry point of the attack to an attack target. On the other hand, the objective of DIFT is to dynamically select nodes in the IFG to perform security analysis for detecting APT. Our game model has imperfect information as the players do not have information about the actions of the opponent. We consider two scenarios of the game (i) when the false-positive and false-negative rates are known to both players and (ii) when the false-positive and false-negative rates are unknown to both players. Case (i) translates to a game model with complete information and we propose a value iteration-based algorithm and prove the convergence. Case (ii) translates to an incomplete information game with unknown transition probabilities. In this case, we propose Hierarchical Supervised Learning (HSL) algorithm that integrates a neural network, to predict the value vector of the game, with a policy iteration algorithm to compute an approximate equilibrium. We implemented our algorithms for cases (i) and (ii) on real attack datasets for nation state and ransomware attacks and validated the performance of our approach.

keywords:

Cyber security, Stochastic games, Neural network, Advanced persistent threats, Dynamic information flow tracking1 Introduction

Advanced persistent threats (APTs) are sophisticated and prolonged cyberattacks that target specific high value organizations in sectors such as national defense, manufacturing, and the financial industry [14, 34]. APTs use advanced attack methods, including advanced exploits of zero-day vulnerabilities, as well as highly-targeted spear phishing and other social engineering techniques to gain access to a network and then remain undetected for a prolonged period of time. The attacking strategies of APTs are stealthy and are methodically designed to bypass conventional security mechanisms to cause more permanent, significant, and irreversible damages to the network.

Interaction of APTs with the network introduce information flows in the form of control flow and command flow commands which get recorded in the system log. Analyzing information flows is thus a promising approach to detect presence of APTs [15]. Dynamic information flow tracking (DIFT) [32] is a flow tracking-based mechanism that is widely used to detect APTs. DIFT tags information flows originating from suspicious input channels in the network and tracks the propagation of the tagged flows. DIFT then initiates security check points, referred to as traps, to analyze the tagged flows and verify the authenticity of the flows. As the ratio of the malicious flows to the benign flows is very small in the network, implementation of DIFT introduces significant performance overhead on the network and generates false-positives and false-negatives [15].

This paper models detection of APTs using a DIFT-based detection mechanism. The effectiveness of detection depends on both the security policy of the DIFT and also on the actions of the APT. We model the strategic interaction between APTs and DIFT as a stochastic game. The game unfolds on a state space that is defined using the information flow graph (IFG) constructed from the system log of the system. The objective of the DIFT is to select locations in the system, i.e., nodes in the IFG, to place the security check points while minimizing the errors due to generation of false-positives and false-negatives. On the other hand, the objective of the APT is to choose an attack path starting from an entry point to a target node in the IFG. We note that, while APTs aim to achieve a reachability objective, the aim of DIFT is an optimal node selection. The APT-DIFT game has imperfect information structure as APTs are unaware of the locations at which DIFT places security check points and DIFT is unaware of the path chosen by the APT for the attack. We consider two scenarios of the game: (i) when the false-positive and false-negative rates are known to both players and (ii) when the false-positive and false-negative rates are unknown to both players. While case (i) is a complete information game, case (ii) is an incomplete information game with unknown transition probabilities.

We make the following contributions in this paper:

-

We formulate a constant-sum stochastic game with total reward structure that models the interaction between the DIFT and APT. The game captures the information asymmetry between the attacker and the defender, false-positives, and false-negatives generated by DIFT.

-

When the transition probabilities are known, we present a value iteration algorithm to compute equilibrium strategy and prove convergence and polynomial-time complexity.

-

When the transition probabilities are unknown, we propose HSL (Hierarchical Supervised Learning), a supervised learning-based algorithm to compute an approximate equilibrium strategy of the incomplete information game. HSL integrates a neural network with policy iteration and utilizes the hierarchical structure of the state space of the APT-DIFT game to guarantee convergence.

-

We implement our algorithms on attack data, of a nation state attack and a ransomware attack, collected using Refinable Attack INvestigation (RAIN) system, and show the convergence of the algorithm.

This paper is organized is as follows: Section 2 summarizes the related work. Section 3 elaborates on information flow graph, and the attacker and defender models. Section 4 presents the formulation of the stochastic game between APT and DIFT. Section 5 discusses the min-max solution concept used for solving the game. Section 6 presents the value iteration algorithm for the model-based approach and the supervised learning-based approach for the model-free case. Section 7 illustrates the results and discussions on the experiments conducted. Section 8 concludes the paper.

2 Related work

Stochastic games model the strategic interactions among multiple agents or players in dynamical systems and are well studied in the context of security games [18], economic games [3], and resilience of cyber-physical systems [35]. While stochastic games provide a strong framework for modeling security problems, often solving stochastic games are hard. There exists dynamic programming-based approaches, value-iteration and policy iteration, for computing Nash equilibrium (NE) strategies of these games. However, in many stochastic game formulations dynamic programming-based approaches do not converge. References [1, 2] modeled and studied the interaction between defender and attacker in a cyber setting as a two-player partially observable stochastic game and presented an approach for synthesizing sub-optimal strategies.

Multi-agent reinforcement learning (MARL) algorithms have been proposed in the literature to obtain NE strategies of zero-sum and nonzero-sum stochastic games, when the game information such as transition probabilities and payoff functions of the players are unknown. However, algorithms with guaranteed convergence are available only for special cases [11, 12, 23, 24]. A Nash-Q learning algorithm is given in [12] that converges to NE of a general-sum game when the NE is unique. The algorithm in [12] is guaranteed to converge for discounted games with perfect information and unique NE. A Q-learning algorithm is presented in [11] for discounted, constant-sum games with unknown transition probabilities when the players have perfect information. An adaptive policy approach using a regularized Lagrange function is proposed in [23] for zero-sum games with unknown transition probabilities and irreducible state space. A stochastic approximation-based algorithm is presented in [24] for computing NE of discounted general-sum games with irreducible state space. While there exist algorithms that converge to NE of the game for special cases, there are no known algorithms to compute NE of a general stochastic game with incomplete information structure.

Detection of APTs are analyzed using game theory in the literature by modeling the interactions between APT and the detection mechanism as a game [25]. A dynamic game model is given in [13] to detect APTs that can adopt adversarial deceptions. A honeypot game theoretic model is introduced in [33] to address detection of APTs.

There has been some effort to model the detection of APTs by a DIFT-based detection mechanism using game-theoretic framework [22], [29], [21]. Reference [22] studied a DIFT model which selects the trap locations in a dynamic manner and proposed a min-cut based solution approach. Detection of APTs when the attack consists of multiple attackers, possibly with different capabilities, is studied in [29]. We note that, the game models in [22] and [29] did not consider false-negative and false-postive generation by DIFT and are hence non-stochastic. Recently, stochastic models of APT-DIFT games was proposed in [21], [30]. Reference [30] proposed a value iteration-based algorithm to obtain an -NE of the discounted game and [21] proposed a min-cut solution approach to compute an NE of the game. Formulations in [21], [30] assume that the transition probabilities of the game (false-positives and false-nagatives) are known.

DIFT-APT games with unknown transition probabilities are studied in [27], [28]. While reference [27] proposed a two-time scale algorithm to compute an equilibrium of the discounted game, [28] considered an average reward payoff structure. Moreover, the game models in [27], [28] resulted in a unichain structure on the state space of the game, unlike the game model considered in this paper. The unichain structure of the game is critically utilized in [27], [28] for developing the solution approach and deriving the results. Reference [19], the preliminary conference version of this work, studied DIFT-APT game with unknown transition probabilities and average reward payoff structure, when the state space graph is not a unichain structure. The approach in [19] approximated the payoff function to a convex function and utilized an input convex neural network (ICNN) architecture. The ICNN is integrated with an alternating optimization technique and empirical results are presented in [19] to learn approximate equilibrium strategies. In this paper, we do not restrict the payoff function to be convex and hence relax the condition for the neural network to be input convex.

3 Preliminaries

3.1 Information Flow Graph (IFG)

Information Flow Graph (IFG) provides a graphical representation of the whole-system execution during the entire period of monitoring. We use RAIN recording system [15] to construct the IFG. RAIN comprises a kernel module which logs all the system calls that are requested by the user-level processes in the target host. The target host then sends the recorded system recorded logs to the analysis host. The analysis host consists of a provenance graph builder, which then constructs the coarse-grained IFG. The coarse-IFG is then refined using various pruning techniques. A brief discussion on the pruning steps is included in Section 7. For more details, please refer [15].

IFGs are widely used by analysts for effective cyber response [10], [15]. IFGs are directed graphs, where the nodes in the graph form entities such as processes, files, network connections, and memory objects in the system. Edges correspond to the system calls and are oriented in the direction of the information flows and/or causality [10]. Let directed graph represent IFG of the system, where and . Given a system log, one can build the corase-grained-IFG which is then pruned and refined incrementally to obtain the corresponding IFG [15].

3.2 Attacker Model

This paper consider advanced cyberattacks called as APTs. APTs are information security threats that target sensitive information in specific organizations. APTs enter into the system by leveraging vulnerabilities in the system and implement multiple sophisticated methods to continuously and stealthily steal information [10]. A typical APT attack consists of multiple stages initiated by a successful penetration and followed by initial compromise, C&C communications, privilege escalation, internal reconnaissance, exfiltration, and cleanup [10]. Detection of APT attacks are very challenging as the attacker activities blend in seamlessly with normal system operation. Moreover, as APT attacks are customized, they can not be detected using signature-based detection methods such as firewalls, intrusion detection systems, and antivirus software.

3.3 Defender Model

Dynamic information flow tracking (DIFT) is a promising technique to detect security attacks on computer systems [7, 9, 32]. DIFT tracks the system calls to detect the malicious information flows from an adversary and to restrict the use of these malicious flows. DIFT architecture is composed of (i) tag sources, (ii) tag propagation rules, and (iii) tag sinks (traps). Tag sources are the suspicious locations in the system, such as keyboards, network interface, and hard disks, that are tagged/tainted as suspicious by DIFT. Tags are single bit or multiple bit markings depending on the level of granularity manageable with the available memory and resources. All the processed values of the tag sources are tagged and DIFT tracks the propagation of the tagged flows. When anomalous behavior is detected in the system, DIFT initiates security analysis and tagged flows are inspected by DIFT at specific locations, referred to as traps. DIFT conducts fine grain analysis at traps to detect the attack and to perform risk assessment. While tagging and trapping using DIFT is a promising detection mechanism against APTs, DIFT introduces performance overhead on the system. Performing security analysis (trapping) of tagged flows uses considerable amount of memory of the system [9]. Thus there is a tradeoff between system log granularity and performance [15].

4 Problem Formulation

This section formulates the interaction between the APT and the DIFT-based defense mechanism as a stochastic game where the decisions taken by the adversary (APT) and the defender (DIFT), referred to as agents/players, influences the system behavior. The objective of the adversary is to choose transitions in the IFG so as to reach the destination node set from the set of entry points . On the other hand, the objective of the defender is to dynamically choose locations to trap the information flow so as to secure the system from any possible attack. The state of the system denotes the location of the tagged information flow in the system. The defender cannot distinguish a malicious and a benign flow. On the other hand, the adversary does not know if the tagged flow will get trapped by the defender while choosing a transition. Thus the game is an imperfect information game. We note that, the state of the game is known here unlike in a partially observable game setting where the state of the game is unknown. The system’s current state and the joint actions of the agents together determine a probability distribution over the possible next states of the system.

4.1 State Space

The state of the game at a time step , denoted as , is defined as the position of the tagged information flow in the system. Let be the finite state space of the game. Here, denotes the state when the tagged flow drops out by abandoning the attack, denotes the state when DIFT detects the adversary after performing the security analysis, and denotes the state when DIFT performs security analysis and concludes a benign flow as malicious (false positive). Let be the destination (target) node set of the adversary and . Without loss of generality, let . We assume that both agents know the IFG and the destination set . The state corresponds to a virtual state that denote the starting point of the game. In the state space , is connected to all nodes in . Thus the state of the game at is .

4.2 Action Spaces

At every time instant in the game, the players choose their actions from their respective action sets. The action set of the adversary is defined as the possible set of transitions the adversarial flow can execute at a state. The defender has limited resources and hence can perform security analysis at one information flow at a time. Thus the defender’s action set is defined such that, at every decision point, the defender chooses one node to perform security analysis, among the possible nodes that the adversary can transition to.

Let the action set of the adversary and the defender at a state be and , respectively. At a state , the adversary chooses an action either to drop out, i.e., abort the attack, or to continue the attack by transitioning to a neighboring node. Thus . On the other hand, the defender’s action set at state , where represents that the tagged information flow is not trapped and represents that defender decides to perform security analysis at the node at instant .

The game originates at with state with and . The definition of action sets at captures the fact that the adversarial flow originates at one of the entry points. Performing security analysis at entry points can not detect the attack as there are not enough traces to analyze. Moreover, the game terminates at time if the state of the game . The set of states are referred to as the absorbing states of the game. For , . At a non-absorbing state, the players choose their actions from their respective action set and the game evolves until the state of the game is an absorbing state.

4.3 Transitions

The transition probabilities of the game are governed by the uncertainty associated with DIFT. DIFT is not capable of performing security analysis accurately due to the generation of false-positives and false-negatives. Consider a tagged flow incoming at node at time . Let the action chosen by the defender and the adversary at be and , respectively. If defender chooses not to trap an information flow, then the flow proceeds to the node in chosen by the adversary. That is, if , then . If the defender chooses to trap the node at which the adversary also decides to transition to, then the adversary is detected with probability and the flow transition to the node in corresponding to the action of the adversary with the remaining probability. That is, if , then with probability and with probability . If the defender decides to trap a node which is not the node the adversary decides to transition to, then the defender generates a false-positive with probability and the flow transition to the node in corresponding to the action of the adversary with the remaining probability. That is, if and , then with probability and with probability .

Let denote the probability of transitioning to a state from a state under actions and . Then,

| (1) |

The transition probabilities , for , denote the rates of generation of false-negatives and false-positives, respectively, at the different nodes in the IFG. We note that, different nodes in IFG have different capabilities to perform security analysis and depending on that the value of and are different. The numerical values of these transition probabilities also depend on the type of the attack. As APTs are tailored attacks that can manipulate the system operation and evade conventional security mechanisms such as firewalls, anti-virus software, and intrusion-detection systems, ’s and ’s are often unknown and hard to estimate accurately.

4.4 Strategies

A strategy for a player is a mapping which yields probability distribution over the player’s actions at every state. Consider mixed (stochastic) and stationary player strategies. When the strategy is stationary, the probability of choosing an action at a state depends only on the current state of the game. Let the stationary strategy space of the attacker be and that of the defender be . Then, and . Let and . Here, , where denotes the probability distribution over all the actions of the defender at state , i.e., out-neighboring nodes of in IFG and not trapping. For , is a probability distribution over all possible out-neighbors of the node in the IFG and . We note that, , for . Also, .

4.5 Payoffs

In the APT-DIFT game, the aim of the APT is to choose transitions in the IFG in order to reach destination by evading detection by DIFT. The aim of DIFT is to select the security check points to secure the system from the APT attack. Recall that APTs are stealthy attacks that undergo for a long period of time with the ultimate goal of stealing information over a long time. The destructive consequences of APTs are often unnoticeable until the final stages of the attack [4]. In this paper we consider the payoff functions of the APT-DIFT game such that players achieve reward () when their respective aim is achieved. DIFT achieves the aim under two scenarios: (1) when the APT is detected successfully and (2) when APT drops out the attack. We note that, in both cases (1) and (2), DIFT secures the system from the APT attack and hence is rewarded . On the other hand, APT achieves the aim under two scenarios: (i) when APT reach destination and (ii) when a benign flow is concluded as malicious, i.e., false-positive. We note that, when a benign flow is concluded as malicious, DIFT no longer analyzes the flows (as it believes APT is detected) and hence the actual malicious flow evades detection and can achieve the aim. Thus in both cases (i) and (ii) APT achieves the aim and receives a reward of .

Let the payoff of player at an absorbing state be , where . At state DIFT receives a payoff of and APT receives payoff. At state the APT receives a payoff of and DIFT receives payoff. At a state in the set , APT receives a payoff of and DIFT receives payoff. At state DIFT receives a payoff of and APT receives payoff. Then,

| (2) | |||||

| (3) |

At each stage in game, at time , both players simultaneously choose their action and and transition to a next state . This is continued until they reach an absorbing state and receive the payoff defined using Eqs. (2) and (3).

Let denote termination time of the game, i.e., for . At the termination time . Let and denote the payoff functions of the adversary and the defender, respectively. As the initial state of the game is , for a strategy pair the expected payoffs of the players are

| (4) | |||||

| (5) |

where denotes the expectation with respect to and when the game originates at state . The objective of APT and DIFT is to individually maximize their expected total payoff given in Eq. (4) and Eq. (5), respectively. Thus the optimization problem solved by DIFT is

| (6) |

Similarly, the optimization problem of the APT is

| (7) |

To bring out the structure of the payoffs well, we let be the cumulative probability with which the state of the game at the termination time is , when the game originates at . With slight abuse of notation, we use to denote the cumulative probability with which the state of the game at the termination time lies in set , when the game originates at . At the time of termination, i.e., , the state of the game satisfies one of the following: (i) , (ii) , (iii) , and (iv) . Using these definitions Eqs. (4) and (5) can be rewritten as

| (8) | |||||

| (9) |

Using the reformulation of the payoff functions, we present the following property of the APT-DIFT game.

Proposition 4.1.

The APT-DIFT stochastic game is a constant sum game.

Proof.

Recall that APT-DIFT game has absorbing states and . At the termination time of the game, i.e., , the state of the game . This implies

This gives . Thus the game between APT and DIFT is a constant sum game with constant equal to . ∎

5 Solution Concept

The solution concept of the game is defined as follows. Each player is interested in maximizing their individual reward in the minimax sense. In other words, each player is assuming the worst case for an optimal opponent player. Since the APT-DIFT game is a constant-sum game, it is sufficient to view that the opponent player is acting to minimize the reward of the agent. Thus DIFT chooses its actions to find an optimal strategy that achieves the upper value defined as

| (10) |

On the other hand, APT chooses its actions to find an optimal strategy that achieves

| (11) |

This is equivalent to saying that APT aims to find an optimal strategy that achieves the lower value defined as

| (12) |

From Eqs. (10) and (12), the defender tries to maximize and the adversary tries to minimize . Hence the value of the game is defined as

| (13) | |||||

The strategy pair is referred to as the saddle point or Nash equilibrium (NE) of the game.

Definition 5.1.

Let be a Nash equilibrium of the APT-DIFT game. A strategy pair is said to be an Nash equilibrium, for , if

In other words, the value corresponding to the strategy pair denoted as satisfies

Proposition 5.2 proves the existence of NE for the APT-DIFT game.

Proposition 5.2.

There exists a Nash equilibrium for the APT-DIFT stochastic game.

Proof of Proposition 5.2 is presented in the appendix.

In the next section we present our approach to compute an NE of the APT-DIFT game.

6 Solution to the APT-DIFT Game

This section presents our approach to compute an NE of the APT-DIFT game. Firstly, we propose a model-based approach to compute NE using a value iteration algorithm. Later, we present a model-free approach based on a policy iteration algorithm, when the transition probabilities, i.e., the rate of false-positives and false-negatives, are unknown.

6.1 Value Iteration Algorithm

This subsection presents our solution approach for solving the APT-DIFT game when the false-positive and false-negative rates (transition probabilities) are known. By Proposition 5.2, there exists an NE for the APT-DIFT game. Our approach to compute NE of the APT-DIFT game is presented below.

Let be an arbitrary state of the APT-DIFT game. The state value function for a constant-sum game, analogous to the Bellman equation, can be written as

| (14) |

where the -values are defined as

| (15) |

The min in Eq. (14) can also be defined over mixed (stochastic) policies, but, since it is ‘inside’ the max, the minimum is achieved for a deterministic action choice [17]. The strategy selected by Eq. (14) is referred to as the minimax strategy, and given by

| (16) |

The aim here is to compute minimax strategy . Our proposed algorithm and convergence proof is given below.

Let denote the value vector corresponding to a strategy pair . The value for a state , , is the expected payoff under strategy pair if the game originates at state . Then, is the expected payoff corresponding to an NE strategy pair if the game originates at state . Algorithm 6.1 presents the pseudocode to compute the value vector of the APT-DIFT game.

Algorithm 6.1 is a value iteration algorithm defined on a value vector , where is the value of the game starting at the state . Thus , for , and , for . The values of the states is computed recursively, for every iteration , , as follows:

| (17) |

where

The value-iteration algorithm computes a sequence , where for , each valuation associates with each state a lower bound on the value of the game. Algorithm 6.1 need not terminate in finitely many iterations [26]. The parameter , in step 2, denotes the acceptable error bound which is the maximum absolute difference in the values corresponding to two consecutive iterations, i.e., , and serves as a stopping criteria for Algorithm 6.1. Smaller value of in Algorithm 6.1 will return a value vector that is closer to the actual value vector. Below we prove that as approaches , the values approaches the exact values from below, i.e., converges to the value of the game at state . Theorem 6.3 proves the asymptotic convergence of the values.

Lemma 6.1.

Consider the value iteration algorithm in Algorithm 6.1. Let and be the value vectors corresponding to iterations and , respectively. Then, , for all .

Proof.

We first prove that result for a state . From Eq. (17), for every iteration , for , and , for . From the initialization step of Algorithm 6.1 (Step 1), for all . Thus for any state , where , for any arbitrary iteration of Algorithm 6.1.

For an arbitrary state , where , we prove the result using an induction argument. The induction hypothesis is that arbitrary iterations and satisfy , for all .

Base step: Consider as the base step. Initialize for all and set for all . This gives , for all .

Induction step: For the induction step, assume that iteration satisfies for all .

Consider iteration . Then,

| (18) | |||||

| (20) |

Proposition 6.2 (Monotone Convergence Theorem, [26]).

If a sequence is monotone increasing and bounded from above, then it is a convergent sequence.

The value of any state is bounded above by . Using Lemma 6.1 and Proposition 6.2, we state the convergence result of Algorithm 6.1.

Theorem 6.3.

The value of any state is bounded above by . From Lemma 6.1 we know that the sequence is a monotonically increasing sequence. By the monotone convergence theorem [26], a bounded and monotone sequence converges to the supremum, i.e., , for all . Thus the value iteration algorithm converges and the proof follows.

Theorem 6.4.

For any , Algorithm 6.1 returns an -Nash equilibrium of the APT-DIFT game.

The value of trades off the desired accuracy of the value vector and the computational time. For a given value of , the number of iterations of Algorithm 6.1 depends on the size and connectivity structure of the IFG. As the size and connectivity structure of the IFG varies across different datasets, we base the selection of solely on the accuracy desired by the security expert. In our experiments, we set . Theorem 6.5 presents the computational complexity of Algorithm 6.1 for each iteration.

Theorem 6.5.

Proof.

Every iteration of Algorithm 6.1 involves computation of the value vector. This involves solving, for every state , a linear program of the form:

Maximize

Subject to:

, for all

, for all .

We note that . The above linear program has complexity of [5]. Thus solving for all states has a total complexity of . ∎

Algorithm 6.1 is guaranteed to converge to an NE strategy asymptotically. When the state space of the APT-DIFT game has cycles, Algorithm 6.1 updates the value vector recursively and consequently finite time convergence can not be guaranteed. However, when the IFG is acyclic, the state space of the APT-DIFT game is acyclic (Theorem 6.7) and a finite time convergence can be achieved using value iteration.

Henceforth, the following assumption holds.

Assumption 6.6.

The IFG is acyclic.

The IFG obtained from the system log may not be acyclic in general. However, when the IFG is a cyclic graph, one can obtain an acyclic representation of the IFG using the node versioning technique proposed in [10]. Throughout this subsection, we consider directed acyclic IFGs rendered using the approach in [10] and hence Assumption 6.6 is non-restrictive.

Theorem below presents a termination condition of the APT-DIFT game when the IFG is acyclic.

Theorem 6.7.

Consider the APT-DIFT game. Let Assumption 6.6 holds and be the number of nodes in the IFG. Then the state space of the APT-DIFT game is acyclic and the game terminates in at most number of steps.

Proof.

Consider any arbitrary strategy pair . We first prove the acyclic property of the state space of the game under . The state space is constructed by augmenting the IFG with states , , and . We note that a state does not lie in a cycle in as is an absorbing state and hence have no outgoing edge, i.e., . The state does not lie in a cycle in as there are no incoming edges to . This concludes that a state is not part of a cycle in . Thus a cycle can possibly exist in only if there is a cycle which has some states in , since . Recall that states correspond to nodes of . As is acyclic, there are no cycles in . Since is acyclic under any arbitrary strategy pair and the state space has finite cardinality, the game terminates in finite number of steps. Further, since , . ∎

Using Theorem 6.7, we propose a value iteration algorithm with guaranteed finite time convergence. We will use the following definition in our approach.

For a directed acyclic graph, topological ordering of the node set is defined below.

Definition 6.8 ([16]).

A topological ordering of a directed graph is a linear ordering of its vertices such that for every directed edge from vertex to vertex , comes before in the ordering.

For a directed acyclic graph with vertex set and edge set there exists an algorithm of complexity to find the topological order [16]. Using the topological ordering, one can find a hierarchical level partitioning of the nodes of a directed acyclic graph. Let be the topologically ordered set of nodes of . Let the number of hierarchical levels associated with be , say . Then , . Moreover, a state is said to be in hierarchical level if there exists an edge into from a state which is in some level , where , and there is no edge into from a state which is in level , where .

Algorithm 6.2 presents the pseudocode to solve the APT-DIFT game using the topological ordering and the hierarchical levels, when the IFG is acyclic.

Corollary 6.9.

Proof.

Recall that under Assumption 6.6, the state space of the APT-DIFT game is acyclic. Thus the topological ordering and the hierarchical levels, , of can be obtained in polynomial time [16]. We note that, values at the absorbing states, i.e., states at hierarchical level , are for and for all . Using the hierarchical levels, the value vector can be computed in a dynamic programming manner starting from states in the last hierarchical level. Initially, using the values of the states at level , the values of states at level can be obtained. Similarly, using values of states at level and level , the values of states at level can be obtained and so on. By recursive computations we obtain the value vector and the corresponding DIFT strategy .

Finding the topological ordering and the corresponding hierarchical levels of the state space graph has computations. The linear program associated with each state in the value iteration involves computations (See proof of Theorem 6.5). Since we need to compute values corresponding to states, complexity of Algorithm 6.2 is . ∎

6.2 Hierarchical Supervised Learning (HSL) Algorithm

This subsection presents our approach to solve the APT-DIFT game when the game model is not fully known. It is often unrealistic to know the precise values of the false-positive rates and the false-negative rates of DIFT as these values are estimated empirically. More specifically, the transition probabilities of the APT-DIFT game are unknown. The traditional reinforcement learning algorithms (Q-learning) for constant-sum games with unknown transition probabilities [11] assume perfect information, i.e., the players can observe the past actions and rewards of the opponents [11].

On the other hand, dynamic programming-based approaches, including value iteration and policy iteration algorithms, require the transition probabilities in the computations. When and values are unknown, the optimization problem associated with the states in step 6 of Algorithm 6.1 and step 6 of Algorithm 6.2 can not be solved. That is, in the LP

Problem 6.10.

Maximize

Subject to:

, for all

, for all ,

the values are unknown, where

and is defined in Eq. (1). To the best of our knowledge, there are no known algorithms, with guaranteed equilibrium convergence, to solve imperfect and incomplete stochastic game models as that of the APT-DIFT game presented in this paper. To this end, we propose a supervised learning-based approach to solve the APT-DIFT game. Our approach consists of two key steps.

-

(1)

Training a neural network to approximate the value vector of the game for a given strategy pair, and

-

(2)

A policy iteration algorithm to compute an -optimal NE strategy.

Our approach to solve the APT-DIFT game, when the rates of false-positives and false-negatives are unknown, utilizes the topological ordering and the hierarchical levels of the state space graph. We propose a hierarchical supervised learning (HSL)-based approach, that predicts the -values of the APT-DIFT game and then solve the LP for all the states (Problem 6.10) in a hierarchical manner, to approximate an NE strategy of the APT-DIFT game. In HSL, we utilize a neural network to obtain a mapping between the strategies of the players and the value vector. This mapping is an approximation, using which HSL presents an approach to compute an approximate equilibrium and evaluates the performance empirically using real attack datasets.

In order to predict the -values of the game, we train a neural network to learn the value vector of the game and use the trained model to predict the -values. The reasons to train an NN to learn the value vector instead of directly learning the -values are: (a) while the value vector is of dimension the -values have dimension and (b) generation of data samples for value vector is easy. The approach for data generation and training is elaborated below.

We note that, it is possible to simulate the APT-DIFT game and observe the final game outcome, i.e., the payoffs of the players. For a given , the value at state , , is the payoff of the defender if the game originate at state , i.e., . Training the neural network for predicting the value vector of the APT-DIFT game consists of two steps.

-

(i)

Generate random samples of strategy pairs,

-

(ii)

Simulate the APT-DIFT game for each of the randomly generated sample of strategy pair and obtain the values corresponding to all states.

The neural network takes as input the strategy pairs and outputs the value vector. The training is done using a multi-input, multi-output neural network, represented as , where and . The neural network may not compute the exact value vector, however, it can approximate the value vector to arbitrary accuracy. Given a function and , the guarantee is that by using enough hidden neurons it is always possible to find a neural network whose output satisfies , for all inputs [8]. In other words, the approximation will be good to within the desired accuracy for every possible input. However, the training method does not guarantee that the neural network obtained at the end of the training process is one that satisfies the specified level of accuracy. Using the trained neural network we predict the -values of the game.

Lemma 6.11.

Consider the APT-DIFT game with state space . Let a neural network be trained using samples of strategy pairs to predict the value vector of the game such that the mean absolute error is within the desired tolerance of . Then, the trained neural network also yield the -values, , for all , , and .

Proof.

Consider a neural network that is trained using enough samples of strategy pairs to predict the value vector of the game. Thus given a strategy pair , the neural network predicts . Consider an arbitrary state . The -value corresponding to state and action pair for the APT and DIFT, respectively, is given by . For a strategy with such that and such that gives

Hence the -values of the game can be obtained using the neural network that is trained to predict the value vector by inputting a strategy pair with and , for any , , and . ∎

Using the trained neural network we run a policy iteration algorithm. In the policy iteration, we update the strategies of both players, APT and DIFT, by solving the stage games in a dynamic programming manner. The details of the algorithm are presented below.

Algorithm 6.3 presents the pseudocode for our HSL algorithm to solve the APT-DIFT game. Initially we generate random samples of strategy pairs and value vector and train a neural network. Using the trained neural network, we propose a strategy iteration algorithm. As Assumption 6.6 holds, the state space graph is acyclic. Thus one can compute the topological ordering (Step 3) and the hierarchical levels (Step 4) of in polynomial time. The values at the absorbing states are known and are hence set as , , and for all . As the values of the states at level are known, the algorithm begins with level . Initially the strategies of APT and DIFT are randomly set to and , respectively. Then we compute the -values of all the states using the trained neural network, following the hierarchical order. Due to the hierarchical structure of the state space, computation of -value of a state in hierarchical level depends only on the states that are at levels higher than . Consider level and an arbitrary state in level . The -values of are predicted using the trained neural network by selecting suitable deterministic strategy pairs. That is, for predicting , choose strategies such that and as input to the neural network. Using the -values of state , solve the LP and obtain value vector, DIFT strategy, and the optimal action of the APT. The strategies and are updated using the output of the LP such that the and corresponds to an NE strategy. Once the strategies of both players are updated for all the states in level , the process continues for level and so on.

In HSL algorithm, the prediction of -values using the neural network (Step 8-12) corresponding to states in a particular level can be run parallel. To elaborate, let there are number of states in level . Then select an action pair corresponding to each state and run the prediction step of the algorithm. Thus, every run of the neural network can predict number of -values, for different states.

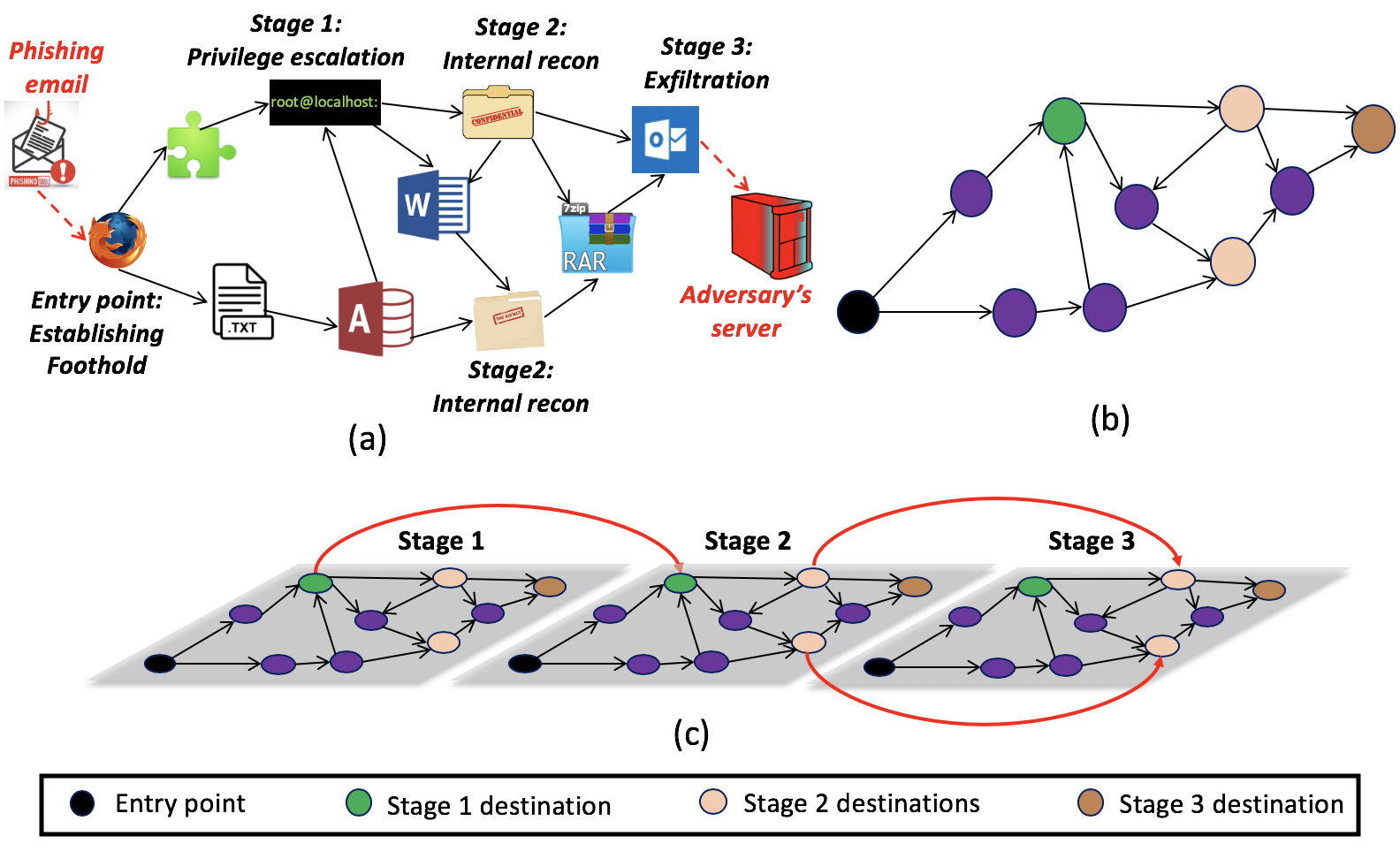

Remark: APT attacks typically consist of multiple stages, e.g., initial compromise, internal reconnaissance, foothold establishment, and data exfiltration, with each stage having a specific set of targets. To capture the multi-stage nature of the attack, we construct a multi-stage IFG, , from the IFG . Consider an attack that consists of attack stages with destinations of stage denoted as . Then we duplicate copies of the IFG such that nodes in in the copy of is connected to respective nodes in in the copy, for . Also, set . The construction of guarantees that any path that originate in set in the first copy and terminate in in the copy is a feasible attack path. Figure 1 shows schematic diagram of a multi-stage IFG. For notational brevity the paper presents the single-stage attack case, i.e., . All the algorithm and results presented in this paper also apply to the case of multi-stage attack using as the IFG.

7 Simulation

In this section we test and validate Algorithms 6.1, 6.2 and HSL algorithm (Algorithm 6.3) on two real world attack datasets. First we provide the details on the attack datasets and explain the construction of IFGs corresponding to each attack from their respective system log data. Then we discuss our experiments and present the results.

7.1 Attack Datasets

We use two attack datasets in our experiments. First attack dataset is corresponding to a nation state attack [6] and the second is related to a ransomware attack [20]. Each attack was executed individually in a computer running Linux operating system and system logs were recorded through RAIN system [15]. System logs contain records related to both malicious and benign information flows.

7.1.1 Nation State Attack

Nation state attack (a state-of-the-art APT attack) is a three day adversarial engagement orchestrated by US DARPA red-team during the evaluation of RAIN system. Attack campaign was designed to steal sensitive proprietary and personal information from the victim system. In our experiments we used the system logs recorded during the day 1 of the attack. The attack consists of four key stages: initial compromise, internal reconnaissance, foothold establishment, and data exfiltration. Our experiments considered the first stage of the attack, i.e., initial compromise stage, where APT used spear-phishing to lead the victim user to a website that was hosting ads from a malicious web server. After navigating to the website, APT exploited a vulnerability in the Firefox browser and compromised the Firefox.

7.1.2 Ransomware Attack

Ransomware attack campaign was designed to block access to the files in directory and demand a payment from the victim in exchange for regranting the access. The attack consists of three stages: privilege escalation, lateral movement of the attack, and encrypting and deleting directory. Upon reaching the final stage of the attack, APT first opened and read all the files in the directory of the victim computer. Then APT wrote the content of the files in into an encrypted file named . Finally, APT deleted the directory.

7.2 Construction of IFG

Direct conversion of the system logs into IFG typically results in coarse graphs with large number of nodes and edges as it includes all the information flows recorded during the system execution. Majority of these information flows are related to the system’s background processes (noise) which are unrelated to the actual attack campaign and it is computationally intensive to run the proposed algorithms on a state space induced by such a coarse graph. Hence, we use the following pruning steps to prune the coarse graph without losing any attack related causal information flow dependencies.

-

1.

When multiple edges with same directed orientation exist between two nodes in the coarse IFG, combine them to a single directed edge. For example, consider a scenario where multiple “read” system calls are recorded between a file and a process. This results in multiple edges between the two nodes of the resulting coarse IFG. Our APT-DIFT game formulation only requires to realize the feasibility of transferring information flows between pairs of processes and files. Hence, in scenarios similar to the above example, we collapse all the multiple edges between the two nodes in the coarse IFG into a single edge.

-

2.

Find all the nodes in coarse IFG that have at least one information flow path from an entry point of the attack to a target of the attack. When attack consists of multiple stages find all the nodes in coarse IFG that have at least one information flow path from a destination of stage to a destination of a stage , for all .

-

3.

From coarse graph, extract the subgraph corresponding to the entry points, destinations, and the set of nodes found in Step .

-

4.

Group all the nodes that correspond to files of a single directory to a single node related to the parent file directory. For example, assume the resulting coarse IFG has three files, , , and , that are uniquely identified by their respective file paths. In this case we will group all three files, , , and under one super-node corresponding to the parent directory . Incoming and outgoing edges associated with each of the three files are then connected to the new node . If any subset of the new edges connected to induce multiple edges with same orientation to another node in the IFG, then follow step 1). It is also possible to group the files into respective sub-directories such as and given in the example. Such groupings will facilitate much finer-grained security analysis at the cost of larger IFGs which require more computation resources to run the proposed APT-DIFT game algorithms presented in this paper.

-

5.

If the resulting graph after Steps - contains any cycles use node versioning techniques [10] to remove cycles while preserving the information flow dependencies in the graph.

Steps and are done using upstream, downstream, and point-to-point stream pruning techniques mentioned in [15]. The resulting information flow graph is called pruned IFG. We tested the proposed algorithms on the state spaces of APT-DIFT games corresponding to these pruned IFGs.

For the nation state attack, initial conversion of the system logs into an IFG resulted in a coarse graph with 299 nodes and 404 edges which is presented in Figure 2(a).. We used steps to explained in Section 7.2 to obtain a pruned IFG with nodes and edges. A network socket connected to an untrusted IP address was identified as an entry point of the attack and the target of the attack is Firefox process. Figure 2(b) shows the pruned IFG of nation state attack. In the IFG , there are 21 information flow paths from the entry point to the target Firefox.

For the ransomware attack, direct conversion of the system logs resulted in a coarse IFG with nodes and edges which is presented in Figure 3(a). We pruned the resulting coarse IFG using the steps given in Section 7.2. The pruned IFG of ransomware attack consists of nodes and edges. Two network sockets that indicate series of communications with external IP addresses in the recorded system logs were identified as the entry points of the attack. Figure 3(b) illustrates the pruned IFG of ransomware attack with annotated targets .

7.3 Experiments and Results

Algorithm 6.1 was implemented on APT-DIFT game model associated with the pruned cyclic IFG of nation state attack given in Figure 2. Figure 4(a) shows the convergence of the value in APT-DIFT game with the iteration number . The threshold value of the maximum absolute error, , in Algorithm 6.1 was set to . We note that where . Figure 4(b) shows that monotonically decreases with . At iteration , .

HSL algorithm (Algorithm 6.3) was tested on APT-DIFT game associated with the pruned acyclic IFG of ransomware attack given in Figure 3 and results are given in Figure 5. We used a sequential neural network with two dense layers to learn the value vector for a given strategy pair of APT and DIFT. Each dense layer consists of neurons and ReLU activation function. Training data consist tuples of APT and DIFT strategy pair and corresponding value vector of APT-DIFT game. We generated training data samples that consist of randomly generated deterministic APT policies. We randomly generated DIFT’s policies such that strategies are stochastic (mixed) and are deterministic. For each randomly generated APT and DIFT strategy pair, the corresponding value vector was computed. A stochastic gradient descent optimizer was used to train the neural network. In each experiment trial the neural network was trained for episodes and validation error was set to .

In Figure 5(a) we analyze the sensitivity of estimated value vector to the variations of payoff parameter, by plotting the mean absolute error between actual value vector and estimated value vector , i.e., , with respect to . In these experiments training data samples were used in HSL algorithm. The results show that values are close and consistent with values when parameter takes smaller values and variations between and is increased when takes larger values. A reason for this behavior can be the numerical unstability associated with the training and estimating tasks done in the neural network model used in HSL algorithm. In order to study the effect of the length of training data samples on the estimated value vector we plot against number of training data samples used in HSL algorithm in Figure 5(b). was used in the experiments. The results show that decreases with the number of training data samples used in HSL algorithm as the increased number of training data samples improves the learning in neural network model.

8 Conclusion

This paper studied detection of Advanced Persistent Threats (APTs) using a Dynamic Information Flow Tracking (DIFT)-based detection mechanism. We modeled the strategic interaction between the APT and DIFT as a non-cooperative stochastic game with total reward structure. The APT-DIFT game has imperfect information as both APT and DIFT are unaware of the actions of the opponent. Also, our game model incorporates the false-positives and false-negatives generated by DIFT. We considered two scenarios of the game (i) when the transition probabilities, i.e., rates of false-positives and false-negatives, are known to both players and (ii) when the transition probabilities are unknown to both players. For case (i), we proposed a value iteration-based algorithm and proved convergence and complexity. For case (ii), we proposed Hierarchical Supervised Learning (HSL), a supervised learning-based algorithm that utilizes the hierarchical structure of the state space of the APT-DIFT game, that integrates a neural network with a policy iteration algorithm to compute an approximate equilibrium of the game. We implemented our algorithms on real attack datasets for nation state and ransomware attacks collected using the RAIN framework and validated our results and performance.

References

- [1] M. Ahmadi, M. Cubuktepe, N. Jansen, S. Junges, J.-P. Katoen, and U. Topcu. The partially observable games we play for cyber deception. arXiv:1810.00092, 2018.

- [2] M. Ahmadi, A. A. Viswanathan, M. D. Ingham, K. Tan, and A. D. Ames. Partially Observable Games for Secure Autonomy. 2020.

- [3] R. Amir. Stochastic games in economics and related fields: An overview. Stochastic Games and Applications, pages 455–470, 2003.

- [4] B. Bencsáth, G. Pék, L. Buttyán, and M. Felegyhazi. The cousins of Stuxnet: Duqu, Flame, and Gauss. Future Internet, 4(4):971–1003, 2012.

- [5] C. Boutilier, T. Dean, and S. Hanks. Decision-theoretic planning: Structural assumptions and computational leverage. Journal of Artificial Intelligence Research, 11:1–94, 1999.

- [6] S. W. Brenner. Cyberthreats: The emerging fault lines of the nation state. Oxford University Press, 2009.

- [7] J. Clause, W. Li, and A. Orso. Dytan: a generic dynamic taint analysis framework. International Symposium on Software Testing and Analysis, pages 196–206, 2007.

- [8] B. C. Csáji et al. Approximation with artificial neural networks. Faculty of Sciences, Etvs Lornd University, Hungary, 24(48):7, 2001.

- [9] W. Enck, P. Gilbert, S. Han, V. Tendulkar, B.-G. Chun, L. P. Cox, J. Jung, P. McDaniel, and A. N. Sheth. Taintdroid: an information-flow tracking system for realtime privacy monitoring on smartphones. ACM Transactions on Computer Systems, 32(2):5, 2014.

- [10] M. N. Hossain, J. Wang, R. Sekar, and S. D. Stoller. Dependence-preserving data compaction for scalable forensic analysis. USENIX Security Symposium, pages 1723–1740, 2018.

- [11] J. Hu and M. P. Wellman. Multiagent reinforcement learning: Theoretical framework and an algorithm. International Conference on Machine Learning, 98:242–250, 1998.

- [12] J. Hu and M. P. Wellman. Nash Q-learning for general-sum stochastic games. Journal of machine learning research, 4:1039–1069, 2003.

- [13] L. Huang and Q. Zhu. Adaptive strategic cyber defense for advanced persistent threats in critical infrastructure networks. ACM SIGMETRICS Performance Evaluation Review, 46(2):52–56, 2019.

- [14] J. Jang-Jaccard and S. Nepal. A survey of emerging threats in cybersecurity. Journal of Computer and System Sciences, 80(5):973–993, 2014.

- [15] Y. Ji, S. Lee, E. Downing, W. Wang, M. Fazzini, T. Kim, A. Orso, and W. Lee. RAIN: Refinable attack investigation with on-demand inter-process information flow tracking. ACM SIGSAC Conference on Computer and Communications Security, pages 377–390, 2017.

- [16] A. B. Kahn. Topological sorting of large networks. Communications of the ACM, 5(11):558–562, 1962.

- [17] M. L. Littman. Value-function reinforcement learning in Markov games. Cognitive Systems Research, 2(1):55–66, 2001.

- [18] K.-w. Lye and J. M. Wing. Game strategies in network security. International Journal of Information Security, 4(1-2):71–86, 2005.

- [19] S. Misra, S. Moothedath, H. Hosseini, J. Allen, L. Bushnell, W. Lee, and R. Poovendran. Learning equilibria in stochastic information flow tracking games with partial knowledge. IEEE Conference on Decision and Control, pages 4053–4060, 2019.

- [20] S. Mohurle and M. Patil. A brief study of wannacry threat: Ransomware attack 2017. International Journal of Advanced Research in Computer Science, 8(5), 2017.

- [21] S. Moothedath, D. Sahabandu, J. Allen, A. Clark, L. Bushnell, W. Lee, and R. Poovendran. Dynamic Information Flow Tracking for Detection of Advanced Persistent Threats: A Stochastic Game Approach. arXiv: 2006.12327, 2020.

- [22] S. Moothedath, D. Sahabandu, J. Allen, A. Clark, L. Bushnell, W. Lee, and R. Poovendran. A game-theoretic approach for dynamic information flow tracking to detect multi-stage advanced persistent threats. IEEE Transactions on Automatic Control, 2020.

- [23] K. Najim, A. S. Poznyak, and E. Gomez. Adaptive policy for two finite Markov chains zero-sum stochastic game with unknown transition matrices and average payoffs. Automatica, 37(7):1007–1018, 2001.

- [24] H. L. Prasad, L. A. Prashanth, and S. Bhatnagar. Two-timescale algorithms for learning Nash equilibria in general-sum stochastic games. International Conference on Autonomous Agents and Multiagent Systems, pages 1371–1379, 2015.

- [25] S. Rass, S. König, and S. Schauer. Defending against advanced persistent threats using game-theory. PLOS ONE, Public Library of Science, 12(1):1–43, 2020.

- [26] H. Royden and P. Fitzpatrick. Real Analysis. Prentice Hall, 2010.

- [27] D. Sahabandu, S. Moothedath, J. Allen, L. Bushnell, W. Lee, and R. Poovendran. Stochastic dynamic information flow tracking game with reinforcement learning. International Conference on Decision and Game Theory for Security, pages 417–438, 2019.

- [28] D. Sahabandu, S. Moothedath, J. Allen, L. Bushnell, W. Lee, and R. Poovendran. A Multi-Agent Reinforcement Learning Approach for Dynamic Information Flow Tracking Games for Advanced Persistent Threats. arXiv: 2007.00076, 2020.

- [29] D. Sahabandu, S. Moothedath, J. Allen, A. Clark, L. Bushnell, W. Lee, and R. Poovendran. Dynamic information flow tracking games for simultaneous detection of multiple attackers. IEEE Conference on Decision and Control, pages 567–574, 2019.

- [30] D. Sahabandu, S. Moothedath, J. Allen, A. Clark, L. Bushnell, W. Lee, and R. Poovendran. A game theoretic approach for dynamic information flow tracking with conditional branching. American Control Conference, pages 2289–2296, 2019.

- [31] L. S. Shapley. Stochastic games. Proceedings of the national academy of sciences, 39(10):1095–1100, 1953.

- [32] G. E. Suh, J. W. Lee, D. Zhang, and S. Devadas. Secure program execution via dynamic information flow tracking. ACM Sigplan Notices, 39(11):85–96, 2004.

- [33] W. Tian, X.-P. Ji, W. Liu, J. Zhai, G. Liu, Y. Dai, and S. Huang. Honeypot game-theoretical model for defending against apt attacks with limited resources in cyber-physical systems. ETRI Journal, 41(5):585–598, 2019.

- [34] B. Watkins. The impact of cyber attacks on the private sector. Briefing Paper, Association for International Affair, 12:1–11, 2014.

- [35] Q. Zhu and T. Başar. Robust and resilient control design for cyber-physical systems with an application to power systems. IEEE Decision and Control and European Control Conference, pages 4066–4071, 2011.

9 Appendix

Proof of Proposition 5.2: It is known that the class of zero-sum, finite, stochastic games with nonzero stopping (termination) probability has Nash equilibrium in stationary strategies [31]. Note that, the APT-DIFT game is a stochastic game with finite state space and finite action spaces for both players. Moreover, the transition probabilities in the game and are such that and . Thus the stopping probabilities of the APT-DIFT game are nonzero, for all strategy pairs except for a deterministic policy in which the defender does not perform security analysis at any state. However, such a policy is trivially irrelevant to the game as the defender is idle and essentially not participating in the game. As the result for zero-sum games also hold for constant-sum games, from [31] it follows that there exists an NE for the APT-DIFT game. ∎