STL2vec: Signal Temporal Logic Embeddings for Control Synthesis With Recurrent Neural Networks

Abstract

In this paper, a method for learning a recurrent neural network (RNN) controller that maximizes the robustness of signal temporal logic (STL) specifications is presented. In contrast to previous methods, we consider synthesizing the RNN controller for which the user is able to select an STL specification arbitrarily from multiple STL specifications. To obtain such a controller, we propose a novel notion called STL2vec, which represents a vector representation of the STL specifications and exhibits their similarities. The construction of the STL2vec is useful since it allows us to enhance the efficiency and performance of the RNN controller. We validate our proposed method through the examples of the path planning problem.

Index Terms:

Signal temporal logic, neural network controller, optimal control.I Introduction

Recently, formal methods have attracted much attention in control to address complex and temporal objectives such as periodic, sequential, or reactive tasks, going beyond the traditional control objectives like stability and tracking. Temporal logics such as linear temporal logic (LTL)[1], metric temporal logic (MTL)[2], and signal temporal logic (STL)[3] allow us to write down formal descriptions for these specifications.

In this paper, we focus on dealing with the STL specifications, which can specify the temporal properties of real-valued signals. One of the notable advantages of using the STL specifications in control is that we have access to the quantitative semantics, called robustness, which measures how much a signal satisfies the STL specification. The robustness has been used for control purposes in many existing works [4, 5, 6, 7, 8, 9, 10]. The authors of [4, 5, 6] proposed a scheme of encoding STL constraints or robustness of the STL specifications by mixed-integer linear constraints, and these are utilized to employ model predictive control (MPC). To alleviate the computational burden of mixed-integer programming, the works [7, 8, 9, 10] proposed the concept of smooth robustness, allowing us to solve the maximization problem of the robustness based on gradient-based algorithms.

Aiming to further improve the scalability and meet real-time requirements, neural network (NN) based feedback controller was employed for STL tasks [11, 12, 13, 14, 15]. Although the learning procedure itself may be time-consuming, once this computation can be done offline, applying the resulting controller online becomes much faster than directly solving the optimization problem at each time step. In [11], the parameters of the feed-forward NN were trained to maximize the robustness of the STL specifications. Reinforcement learning algorithms such as deep Q-learning (DQN) and learning from demonstrations (Lfd) were used for learning the NN controller in [12] and [13] respectively. The works of [14, 15] used a recurrent neural network (RNN) [16] instead of a feed-forward NN, which is more suitable for control with STL specifications since the satisfaction of an STL formula depends on a state trajectory (not just the current state). In [14, 15], RNN parameters were trained using imitation learning and model-based reinforcement, respectively.

However, the afore-cited previous works can deal with only one STL specification (i.e., the NN controller is trained for only one prescribed STL specification), and did not explicitly deal with multiple STL specifications. In particular, it may be more desirable and flexible in practice that the user is able to choose an STL specification freely from multiple STL specifications, such as the case where a surveillance task in a certain region (building, park, etc.) changes from day to day. A naive approach to achieve this would be to learn a set of NN controllers independently for all the candidate STL specifications, although this would be expensive in terms of memory consumption and computational resources if the number of the STL specifications is large (i.e., the number of the parameters to be learned could increase as the number of the candidate STL specifications increases). Hence, we here consider learning a single NN controller, in which not only the states of the system but also a chosen STL specification are regarded as inputs to the NN. We in particular focus on learning an RNN controller since as previously mentioned, it is suitable for dealing with history dependent nature of the STL specifications. The above formulation leads us to the following question to be answered, which has not been investigated in previous works of literature:

How should we encode the STL specifications by vectors, so that they are readable to the RNN?

Naively, we could assign any unique numbers or one-hot vectors to all the STL specifications and use them as the inputs to the RNN. Although these approaches are easy to implement, information about the similarities in the specifications cannot be encoded with these schemes. Thus, the underlying function to be learned can be unreasonably complex as the number of candidate STL specifications increases, which leads to increased computation time for training as well as a critical failure in control execution.

Motivated by the above issue, we propose a novel scheme for constructing a vector representation of STL specifications, called STL2vec, which captures similarities between the STL specifications. The vectors for the specifications will be trained based on Word2vec [17, 18] which is a technique widely known in natural language processing. Then, the parameters for the RNN are trained by taking the vectors obtained by STL2vec and state trajectory as the inputs (to the RNN) and defining a loss function by the average of negative robustness scores for all the candidate STL specifications.

The main contributions of this work are summarized as follows. First, we propose a method for obtaining vector representations of STL specifications that capture their similarities in terms of control policy. Although STL2vec is constructed based on the concept of word2vec, which is a technique that maps a word of the natural language onto the vector space, how to generate a dataset to learn appropriate vector representations for STL specifications in control synthesis is not a trivial problem. We address the details on how to construct dataset to train appropriate STL2vec in Section V. Second, by using the vectors generated by STL2vec, we train the controller that can deal with multiple STL specifications with one RNN model. Naively, if one aims to train the NN controller to deal with multiple specifications by simply applying existing NN-based control synthesis methods such as [11, 12, 13, 14, 15], one needs to ready NN models as many as the number of the STL specifications, which leads to large memory consumption and computational resources (for details, see Section V-C). Moreover, the proposed method has the potential to accelerate the training as will be seen in the case study of Section VI. Although there exist other methods that make the NN controller flexible such as [14] and [19], which can handle (unknown) obstacles by using control barrier function, these methods are restricted to the specification of only collision avoidance and thus are not intended to deal with multiple temporal logic specifications. The proposed method in this paper allows us to overcome such limitations; it allows the user to have a flexibility of selecting an STL specification freely from multiple ones, and this will be achieved by constructing STL2vec.

II Preliminaries

II-A System description and notations

We consider a nonlinear discrete-time system of the form:

| (1) |

where and are the state and the control input at time , is the set of initial states, is the set of control inputs, and is a function capturing the dynamics of the system. We assume that the initial state is randomly chosen from according to the probability distribution , and is given by for given (the inequalities are interpreted element-wise). Given and a sequence of control inputs , we can generate a unique sequence of states according to the dynamics (1), which we call a trajectory: .

II-B Signal Temporal Logic

Signal temporal logic (STL) [3] is a logical formalism that can specify temporal properties of real-valued signals. The syntax of the STL formula is recursively defined as follows:

| (2) |

where is the predicate whose boolean truth value is determined by the sign of a function , , , , and are Boolean true, negation, and, and or operators, respectively, and , , are the temporal eventually, always, and until operators defined on a time interval (, ).

We define the semantics of an STL formula with respect to the trajectory of the system (1) at time as follows:

where . For simplicity, we denote to abbreviate . The above semantics is qualitative, in the sense that it reveals only if the trajectory either satisfies or violates .

The notion of robustness of STL formulas provides quantitative semantics, and it measures how much the trajectory satisfies the STL formula. The robustness is sound in the sense that positive robustness value implies satisfaction of STL formula and negative robustness implies violation of STL formula. The robustness score of the STL formula is defined with respect to a trajectory and a time , which we denote by , and is recursively defined as follows:

Note that the trajectory length should be selected large enough to determine the robustness score (see, e.g., [4]). For simplicity, we denote to abbreviate .

III Problem Statement

Let us now formulate a problem that we seek to solve throughout the paper. First, let denote a set of candidate STL specifications. We assume that the STL specification can be freely chosen from the candidate specifications by the user before the control execution. Once the STL specification is chosen by the user, say , the system (1) is then controlled aiming to satisfy . We also assume for simplicity that the chosen STL specification is fixed during control execution (as detailed below).

In this paper, we aim to learn a feedback controller that maximizes the robustness of the STL specification. Note that the satisfaction and the robustness of an STL specification are defined over the trajectory of the system (1), and that the STL specification is freely chosen from . Hence, we should design a control policy that depends not only on the past and present states, but also on the STL specification, i.e., we need to obtain a control policy of the form , where is the trajectory of the system (1), is the STL specification that is chosen from , and denotes a set of parameters to be learned to characterize the control policy . More formally, the problem considered in this paper is defined as follows:

Problem 1

Given the system (1), horizon length , probability distribution of initial states , and the candidate STL specifications , find a set of parameters that is the solution to the following problem:

| (3) | ||||

| (4) | ||||

where denotes a control policy parameterized by and is the trajectory of (1) along with the policy .

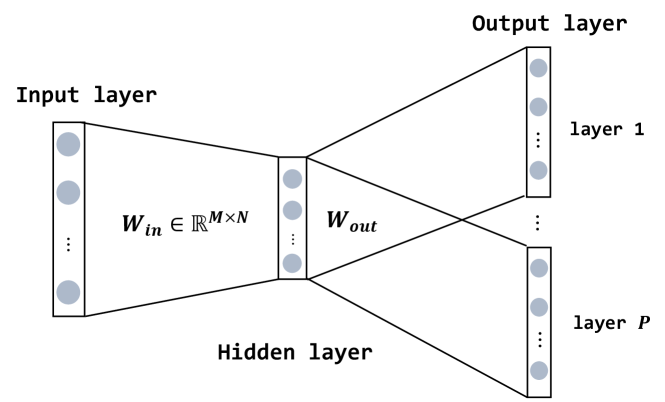

In Problem 1, we look for a set of the parameters of the control policy that maximizes the sum of the expectation of the robustness score with respect to the distribution of initial states over all candidate STL specifications. Since the control policy depends on the past and present states, or it is history dependent, in this paper we use a recurrent neural network (RNN) to learn the control policy (see, [14, 15]). RNN is a type of a neural network that has a feedback architecture. A basic structure of the RNN is illustrated in Fig. 1. As shown in Fig. 1, RNN keeps processing the sequential information via internal hidden state . The update rule of the hidden state and derivation of the output at time are as follows:

| (5) |

where and are the functions parameterized by weights and , respectively, and denotes the input that is fed to the RNN. Hence, the set of the RNN parameters is given by . As discussed in [11], we can restrict the output of the RNN (i.e., the control input ) within the lower bound and the upper bound by defining each element of the function as follows:

| (6) |

where the subscript denote -th element of the vector. Using (6), we can generate control inputs satisfying . A detailed definition of in (5) as well as a concrete procedure to learn are elaborated in Section V.

Indeed, solving Problem 1 is not trivial and challenging in the following sense. Since the control policy depends on an STL specification , we should consider how to transform each STL specification into a corresponding vector, so that it can be fed to the RNN as the inputs together with the state trajectory . Intuitively, it may be desirable that we can provide similar inputs to the RNN if the two STL specifications are close to each other in terms of their control policies. For example, consider a simple case with and the STL candidate specifications

| (7) | |||

| (8) | |||

| (9) |

Intuitively, control policies to satisfy and may be almost the same (as we aim to control the system to the same region with almost the same time intervals), while the control policies to satisfy and may be quite different (as we aim to control the system to different regions). In addition, if we could find a control policy to satisfy , this control policy also leads to the satisfaction of . Hence, it is convenient that we could provide a certain mapping, where and are mapped onto the vector points that are close to each other but far from those corresponding to and . In addition, and are mapped onto the same vector points if a control policy to satisfy could be found.

Motivated by the above intuition, in this paper we propose a novel scheme for constructing a vector representation of the STL specifications, which is referred to as STL2vec. The proposed approach is inspired by the notion of Word2vec [17], a vector representation of words that has been proposed in machine learning literature and widely utilized for natural language processing. A concrete procedure of the proposed approach is elaborated in Section V.

IV Summary of Word2vec (skip-gram)

Before providing the proposed approach, we summarize the basic concept of Word2vec. As we will see in the next section, the concept of Word2vec is a key ingredient to introduce STL2vec and how to train for it. The main objective of Word2vec is to group the vectors of similar or related words (for example, the words ”man” and ”boy” are mapped onto similar points in the vector space). As such, we can let the computer perform mathematical operations on words to detect their similarities. The mapping from each word to the vector is represented via an NN, i.e., the input for the NN is a word, and its output is a corresponding vector. One of the common techniques to train the NN is the skip-gram[17]. The structure of the skip-gram is shown in Fig. 2. As shown in Fig. 2, the skip-gram has a shallow three-layer NN. The input to the NN is an -dimensional one-hot vector, i.e., only one single element is 1 and the other elements are all zero, where indicates a total number of words in the corpus (typically with ). Here, each word is assigned by a unique one-hot vector (for example, ”man” is labeled by , and ”boy” is labeled by , etc.), so that each word in the corpus is readable to the NN. The outputs of the NN are the collection of (-dimensional) vectors whose each element represents the probability of the corresponding word in the corpus (training data for the output layer will be explained later in this section), where is a user-defined parameter. The mapping from the input layer to the hidden layer is given by a matrix , where is a user-defined parameter that indicates the dimension of the word vector. The mapping from the hidden layer to each output layer is given by another matrix . Here, we note that the matrix is the same for all the output layers.

The weight parameters are trained from a large number of sentences, such as those in the news report (see, e.g. [17]). For example, suppose and we have a sentence: ”I want to eat an orange every morning”. Then, we regard the training input as a certain word in this sentence and the training output as the set of words around it. For example, the word ”eat” is regarded as the training input, and the set of words around it, i.e., {to, an} is regarded as the training outputs. Generating the training dataset as above is motivated by the insight that the meaning of a word is established by the surrounding words.

Using the dataset constructed above, we train the skip-gram model. First, we pass the one-hot encoded input data to the input layer and perform the forward computation. As an output layer and loss function, we use softmax and cross-entropy loss, respectively. Then, we update the weights and using the gradients obtained by back-propagation computation (for details, see [17]). After the training, the vector representation of each word in the corpus is given through the projection of the weight matrix (hence, we drop the matrix in the skip-gram model). That is, for each word in the corpus, the corresponding vector representation is given by , where denotes the one-hot vector of the word .

V Proposed Scheme

In this section, we describe the solution approach to Problem 1. The proposed approach consists of the two steps: (i) train a vector representation of the STL specifications, namely STL2vec, whose input is the STL specification and output is the -dimensional vector; (ii) train the RNN, whose inputs are the state trajectory and the vector representation of the STL specification , and the output is the control input . The illustration of the two steps are shown in Fig. 3. Since STL2vec will be trained based on the skip-gram, the parameter to be learned in Step (i) is (recall in Section IV that we neglect ). Also, recall that the parameters to be learned for RNN in Step (ii) is (see Section III). Hence, the overall parameters to be learned for the control policy is .

The proposed approach is beneficial in the following sense. By constructing STL2vec, we can obtain a vector representation that exhibits similarities between the STL specifications. This allows us to accelerate both efficiency and performance of the RNN controller in contrast to some naive approaches, such as integer or one-hot encoding i.e. simply assigning arbitrary numbers or one-hot vectors to the STL specifications (e.g., is assigned by 1, is assigned by 2, and so on) and use these numbers as the inputs to the RNN; for details, see an experimental result in Section VI. Moreover, the proposed approach is more beneficial than the approach of learning RNN controllers one by one for each STL formula in terms of computational memory; for details, see Section V-C and Section VI. The concrete procedures of the above two steps are given in the following subsections.

V-A Training STL2vec

In this subsection, we provide a detailed procedure of Step (i) (train STL2vec). To train STL2vec, we use the skip-gram model as explained in Section IV. First, we encode all the STL candidate specifications by the -dimensional one-hot vectors, i.e. each STL specification is encoded by an -dimensional vector whose -th element is 1, and all other elements are 0 ( is encoded as and is encoded as , and so on), so that they are readable to the NN. For simplicity, the one-hot vector of is denoted by .

A key question regarding the construction of STL2vec is how to learn the weight parameters for the skip gram model, or in other words, how to generate the training dataset to learn . In this paper, we learn these parameters such that similar vector representations can be obtained if the two STL specifications are close to each other in terms of their control policies (for a detailed intuition for this, see Section III). To this end, we generate training dataset by comparing robustness scores among the STL specifications, aiming at measuring their closeness. More specifically, for each , we randomly select an initial state (following the probability distribution ), and solve the following maximization problem:

| (10) |

Let denote the optimal control inputs as the solution to (10), and the corresponding trajectory of the system (1). Thus, the corresponding (maximized) robustness score is . Then, we compute the robustness scores of the obtained trajectory with respect to all the other STL specifications, i.e.,

| (11) |

Then, we sort the robustness scores of (11) in order of their closeness to (i.e., sort by evaluating , ). We denote the ordered specifications by , where denotes the specification which has -th closest robustness value to .

Then, we pick up the first STL specifications, i.e., (recall that is the number of output layers in the skip-gram model), and set the input and output training data as , where represents the input data and represents the output data. This data is then encoded by the one-hot vectors, regarded as the training data to learn the skip-gram:

| (12) |

The data in (12) is then added to the dataset. For each , we repeat the above process times so as to obtain a collection of the training data. The proposed approach presented above is summarized in Algorithm 1. Then, the weight parameters are learned by minimizing the cross entropy loss via back-propagation.

Remark 1

Note that, due to the definition of the robustness given in Section II, the robustness can be nested with max/min functions and thus the optimization problem (10) can be non-differentiable. To deal with such a problem, we adopt a smooth approximation of the min/max operators by the log-sum-exp as follows: and , where is the scaling parameter. It is known that the approximation error goes to as (see, e.g., [7]). This approximation allows the problem (10) to be solved based on gradient-based algorithms.

Remark 2

Since the optimization problem (10) is non-linear, finding the global optimal solution of the problem (10) is difficult and some local optima that leads to undesirable control performance can be obtained. To the best of our knowledge, there are no general, systematic methodologies to obtain the global optimum of (10). However, we believe that there are some heuristic methods that potentially improve the solution. For example, in the case study of Section VI, we have confirmed that we could increase the possibility to obtain the reasonable solution (the solution with the positive robustness) by appropriately setting the parameter in the smooth approximation. Furthermore, we have also confirmed that we could deal with the problem by solving (10) several times with the initial states randomly sampled from the vicinity of , i.e., once is chosen, (10) is solved with the initial state newly sampled satisfying , where is a given small positive constant, which could increase the possibility to obtain positive robustness.

Finally, similarly to Word2vec, the vector representation of the STL specifications is given through the projection of , i.e., the vector representation of is given by .

Example 1

Consider candidate STL specifications and . Suppose that we consider and solve the corresponding optimization problem (10) (i.e., ) to obtain the optimal trajectory . Suppose that the robustness scores of with respect to the STL specifications are obtained as , , , , and . Then, we select the top specifications among whose robustness scores are the closest to the one of . In this case, we select and , since the robustness scores of and have the most and the second closest robustness values to , respectively. Thus, the resulting data is and the encoded training data to be added in the dataset is .

Remark 3

If there exist two or more STL specifications that have the same robustness values in the output data, we add all the combinations to the training data set. For example, in Example 1, if the robustness values are obtained as , , , , and , then we add the training data as and .

V-B Training RNN

Here, we describe a detailed procedure of Step (ii). Recall that in Step (ii), we aim to train RNN, whose inputs are the trajectory and the vector representation of (i.e., ), and the output is the control input . Further, remember that the concrete unfolded structure of the RNN is shown in Fig. 1, and the update rules are given by (5)–(6). Here, the input variable is given by the concatenation of the state and the vector representation of the chosen STL specification, i.e., , where is the vector representation of the chosen STL specification . Now, let denote the control policy (or a mapping) for the RNN, where are the RNN parameters to be learned (for the illustration, see Fig. 3(b)). Since the parameters for STL2vec is fixed, we here focus on learning the RNN parameters to characterize .

To numerically solve the Problem 1, we keep repeating the following procedure until a certain number of epochs is reached. First, in each epoch, we construct mini-batch of the specifications by randomly rearranging the order of the candidate specifications and splitting them up according to the prespecified batch size , i.e., we construct the batches , , , , where (assuming that can be divided by ) 111If is not divisible by , we construct minibatch with ( denotes a floor function) and append the remaining specifications in the last batch. For example, if , and , we construct minibatch e.g., , .. Then, we iterate the following procedures for all the batches to update the parameters and .

V-B1 Forward Computation

For all the pairs of the initial state which are randomly sampled from the initial region and the vectors of the specifications in a batch , we generate the trajectories by iteratively applying for fixed and from the initial state . Then, by using all the trajectories obtained above, we compute the following loss:

| (13) |

which is an approximation of the expectation (3) in Problem 1 with the specifications in a batch (note that we take the negative of the robustness to define a loss).

V-B2 Backward Computation

After the forward computation, we compute the gradients for all the parameters via backpropagation through time (BPTT) [16]. This computation can be easily implemented by combining the auto-differentiation tools designed for NNs like PyTorch [22] and STLCG [20] which is a newly developed python toolbox for computing the STL robustness using computation graph.

V-B3 Weight Update

Lastly, we update all the parameters in the RNN controller by using the weights obtained above. In this paper, we use Adam optimizer [21].

V-C Some comparisons between the proposed approach and the approach of learning the RNNs one by one

As one of the alternative methods, one could consider learning a set of RNN controllers independently one by one for all the STL specifications. This one-by-one approach might eventually provide higher control performance than the proposed method, since it tries to learn a set of RNN controllers independently for all the candidate STL specifications (on the other hand, the proposed method learns the controller by using only one RNN model). However, the proposed approach has the potential to require much less computational memory than the one-by-one approach due to the following reason. In the proposed method, the total number of the parameters to be learned is given by , where , , and are the number of elements in , , and . For instance, when we use the Long-Short-Term-Memory (LSTM) as the RNN model, and are given by and , where is the dimension of the hidden state of RNN and is the number of LSTM layers. The number of parameters for STL embedding is given by . On the other hand, the required number of parameters when we train the controller one-by-one for each candidate specifications is given by , where and are the number of parameters for each RNN model when we train STL specifications one-by-one. From the above discussion, the number of the parameters for the STL embedding and the RNN models in the one-by-one case are both proportional to , and the corresponding coefficients are given by and , respectively. In other words, the number of the required parameters increases with respect to the number of the STL specifications in both of the two approaches. However, it is noted that we typically have 222It is argued that indeed holds for many control problems with STL tasks based on the fact that the previous works regarding the control synthesis with RNN (e.g., in [15]) use the structure of 2 LSTM layers with 32 dimensional hidden states, which leads to , even for simple 2-D path planning problem. On the other hand, in the literature of word2vec [17], vector size for word embedding is typically set from 50 to 200 for very large corpus like (in our case study of Section VI, we have set that is smaller than these typical values, since the total number of the candidate STL specifications considered in the case study is )., i.e., the size of the vector representation of STL is selected much smaller than the number of the RNN parameters, and thus the number of the required parameters by the proposed approach is smaller than the one-by-one approach. For example, in the case study of Section VI, it is shown that the total number of parameters to learn the controller by the proposed approach is 24 times smaller than the one-by-one approach; for details, see Section VI.

VI case study

We show the efficacy of the proposed method through the example of a path planning problem of a vehicle in 2D space. We used PyTorch package [22] for the implementation of RNN, IPOPT in CasADi [23] to solve optimization (10), and STLCG [20] for the computation regarding STL robustness. We used Windows 10 with a 2.80 GHz Core i7 CPU and 32 GB of RAM for all the experiments discussed in this section. Throughout this section, we consider the following nonlinear, discrete-time nonholonomic system: , , , where , represent position of the vehicle, is the heading angle, and are velocity and angular velocity, respectively. The state and control input are defined as and , respectively. As illustrated in Figure 4, we consider 4 regions in a 2D space: , , , , and the set of initial states (blue-edged color region). In addition, we consider 4 sub-regions in each region, which are labeled by indices as shown in Figure 4. In the following, we denote -th sub-region in region as .

The STL candidate specifications are shown in Table I. Here, the specifications are considered for all (, , ) and . The total number of the STL candidate specifications is given by .

VI-A Training STL2vec

Now we train STL2vec based on the proposed approach presented in Section V-A.

| Specifications |

|---|

| Procedure | Run-time |

|---|---|

| Dataset generation (STL2vec) | 1452 |

| Training STL2vec | 87 |

The parameters for the skip-gram are and . in Algorithm 1 and the number of epochs for the training of skip-gram model are set to 1 and 100, respectively. After the training, we evaluate similarities between all the 2 different vector representations () by the cosine similarity, which is defined as and it takes the maximum value of if has the same orientation as . The time required to train STL2vec is summarized in Table II. In Table III, we illustrate STL specifications which have the largest to fourth-largest cosine similarity values for some example specifications.

We summarize some characteristics that we have observed in the obtained embeddings in the followings: (I) Each specification in (a) of Table I is typically embedded close to the corresponding specification in (d). Moreover, each specification in (b) and (e) is embedded close to either (and ) or (and ). Typically, (b) and (e) are embedded close to the specification regarding the region which is closer to init region. For example, as we can partially see in Ex 1 of Table III, we have observed that almost all the specifications and are embedded close to the specification . This is intuitive and desirable result when we train the controller since all of these specifications are satisfied by entering whether or and stay there more than 5 steps, which is possible actions for all of regions in this example. (II) Each specification in (c) of Table I is basically embedded close to the specifications which are satisfied by similar trajectory. Specifically, the properties same as the following examples are observed for all the specifications in (c): (i) specifications () are embedded close to each other (see Ex 2 of Table III); (ii) as we can see in Ex 3 of Table III, the specifications and are mapped onto similar vectors (these specifications are satisfied with the same trajectory since is on the way from the starting point to ). (III) The specification (f) of Table I is embedded close to as we can see from EX 4 in Table III. This is also desirable result result since the specifications (f) require to firstly reach and then reach after that.

| Ex 1 | Ex 2 | ||||

|---|---|---|---|---|---|

| 0.99 | 0.99 | ||||

| 0.99 | 0.99 | ||||

| 0.99 | 0.95 | ||||

| 0.99 | 0.76 | ||||

| Ex 3 | Ex 4 | ||||

| 0.96 | 0.81 | ||||

| 0.95 | 0.73 | ||||

| 0.95 | 0.71 | ||||

| 0.93 | 0.71 |

VI-B Training RNN

Next, we evaluate the control performance of the proposed method. In this experiment, we train the parameters of RNN model for the specifications (a), (b), (c), (f) and in (e) in Table I. As for the specifications in (c), we only consider the specifications with . Thus the total number of specifications is 194. We retrain the STL embeddings (20 dimension) with these specifications and use them as the input to the RNN. Same as the previous work [15], all of the RNN models used in this example consists of 2 LSTM layers with 32-dimensional hidden states.

| Approaches |

|---|

| (A1) Integer encoding scheme |

| (A2) One-hot encoding scheme |

| (A3) Training controllers one-by-one |

As summarized in Table IV, we consider the following 3 alternative approaches for comparison with the proposed method: (A1) Integer encoding scheme: we incrementally assign an integer number for each STL specification (for example, is assigned by , is assigned by , etc), and use it as the input to the RNN (instead of the vectors generated by STL2vec) (A2) One-hot encoding scheme: we generate and assign 194 dimensional one-hot vectors for all the 194 specifications (for example, is assigned by , is assigned by , etc) (A3) Training one-by-one: we ready 194 RNN models and train each specification one-by-one. When we train the controllers for the approaches (A1) and (A2), we used the same training procedure discussed in Section V-B and used integer numbers or one-hot vectors instead of the vectors obtained by STL2vec. In both the proposed approach and (A1), (A2), we set the batch size and the number of initial states sampled for training in each iteration ( and in Section V-B)) to 8 and 3, respectively. In (A3), for each epoch we generate initial states () randomly from and update the RNN parameters assigned for each STL specification () one by one via forward/backward computation (similarly to the procedure of Section V-B) with the following loss: .

| approach \average of robustness | 0.1 | 0.15 | 0.2 | 0.22 |

|---|---|---|---|---|

| Proposed method | 3494 | 4054 | 6722 | 14668 |

| Learning one-by-one | 5686 | 7663 | 10336 | 12168 |

| One-hot encoding | 27238 | 33863 | – | – |

| Integer encoding | – | – | – | – |

Fig. 5 shows the average of robustness values for all the control schemes and Table V summarizes the actual time (in sec) required to reach the robustness values 0.1, 0.15, 0.2, 0.22 for all the proposed and alternative methods (the symbol ”–” indicates that the corresponding average of the robustness value has not been achieved). Furthermore, the resulting few trajectories obtained by applying the RNN controller trained by the proposed method and approach (A3) are plotted in Fig. 6 (both controllers were trained 1100 epochs and the trajectories are plotted for 10 initial states newly sampled from ). The robustness values are collected by testing the controllers once every 10 epochs with 30 initial states newly sampled from the initial region . The values in Fig. 5 for the one-by-one scheme (A3) are plotted by taking the mean of the average of the robustness values for all the 194 RNN controllers at the same epochs. We further note here that the actual times required to run one epoch for all the approaches are different from each other. The averaged times required to run the proposed approach and the approaches (A1)-(A3) were 14.2, 14.0, 32.2, and 14.0 [s], respectively (note that the time for approach (A3) is the total sum of the times required to update the parameters of all the RNN models). The time required for the approach (A2) is larger than the other methods because of the large input dimension (the dimension of the one-hot vector is 194). On the other hand, we have confirmed that the time required to update the parameters is not so much increased for the proposed method when as shown above. As we can see from Fig 5 and Table V, the average of robustness value of the proposed method are improving faster at the beginning of the training procedure than the other methods in terms of both number of epochs and actual time. Especially, from Table V, we can confirm that the average of robustness value of the proposed method reaches 0.1, 0.15, and 0.2 faster than the other approaches. Moreover, as we mentioned in Subsection V-C, we can largely save the memory consumption compared with the scheme (A3). In Table VI, we summarize the total number of the parameters required for each approach in this example.

However, the average of robustness value of the proposed method is subtly overtaken by the approach (A3) around 500 epoch and the averaged robustness value of the approach (A3) reaches 0.22 faster than the proposed approach. Within 1100 epochs, the maximum average of robustness values of the proposed method and approach (A3) were and , respectively. The reason why the average of robustness values of the proposed method is overtaken by that of the approach (A3) may be because of the effect of the other specifications, i.e., since only one RNN controller is trained for many specifications in the proposed method, the control performance for a specification may be affected by the other specifications depending on the obtained embedding. Such effect is observed in (b) of Fig. 6. The resulting trajectories are converged to the ones that are relatively far from the middle of , which leads to the low robustness although the specification itself is satisfied. To remove such behavior, further investigation for obtaining more superior embedding will be one of our future works.

| Number of parameters | |

|---|---|

| Proposed method | 10280 |

| approach (A1) | 1280 |

| approach (A2) | 50432 |

| approach (A3) | 248320 |

VII conclusion and Future work

We proposed a method for mapping STL specifications onto the vector space (STL2vec) based on the word2vec technique. To obtain the STL embeddings that capture the similarities in specifications in terms of control policy, we have provided a method for constructing the dataset by solving the robustness maximization problem for all the candidate specifications. Then, we trained the RNN controller whose inputs are the state trajectory and a vector generated by STL2vec to deal with multiple STL specifications with one RNN model. The example shown in the simulation section shows efficacy of the proposed method in terms of memory consumption and the time required for the training.

In this paper, it is assumed that the chosen STL specification is fixed during control execution and not allowed to change during the execution. Hence, future work should involve investigating the case where the STL specification is changed during control execution so as to provide more flexibility of the RNN controller.

Acknowledgement

This work is supported by JST CREST JPMJCR2012.

References

- [1] A. Pnueli, “The temporal logic of programs,” 18th Annual Symposium on Foundations of Computer Science (SFCF), pp. 46-57, 1977.

- [2] R. Koymans, “Specifying real-time properties with metric temporal logic,” Real-time systems, vol. 2, no. 4, pp. 255–299, 1990.

- [3] O. Maler and D. Nickovic, “Monitoring temporal properties of continuous signals,” in Formal Techniques, Modelling and Analysis of Timed and Fault-Tolerant Systems. Springer, 2004, pp. 152–166.

- [4] V. Raman, A. Donze, M. Maasoumy, R. M. Murray, A. Sangiovanni-Vincentelli, and S. A. Seshia, “Model predictive control with signal temporal logic specifications,” in 53rd IEEE Conference on Decision and Control, Dec 2014, pp. 81–87.

- [5] S. Sadraddini and C. Belta, “Robust temporal logic model predictive control,” in 2015 53rd Annual Allerton Conference on Communication, Control, and Computing (Allerton). IEEE, 2015, pp. 772–779.

- [6] MN. Mehr, D. Sadigh, R. Horowitz, S. S. Sastry and S. A. Seshia, ”Stochastic predictive freeway ramp metering from Signal Temporal Logic specifications,” 2017 American Control Conference (ACC), 2017, pp. 4884-4889.

- [7] Y. V. Pant, H. Abbas, and R. Mangharam, “Smooth operator: Control using the smooth robustness of temporal logic,” in IEEE Conference on Control Technology and Applications (CCTA), 2017, pp. 1235–1240.

- [8] X. Li, Y. Ma, and C. Belta, “A policy search method for temporal logic specified reinforcement learning tasks,” in Annual American Control Conference (ACC). IEEE, 2018, pp. 240–245.

- [9] N. Mehdipour, C. Vasile and C. Belta, ”Arithmetic-Geometric Mean Robustness for Control from Signal Temporal Logic Specifications,” 2019 American Control Conference (ACC), 2019, pp. 1690-1695.

- [10] I. Haghighi, N. Mehdipour, E. Bartocci, and C. Belta, “Control from signal temporal logic specifications with smooth cumulative quantitative semantics,” in 2019 IEEE 58th Conference on Decision and Control (CDC). IEEE, 2019, pp. 4361–4366.

- [11] S. Yaghoubi and G. Fainekos, “Worst-case satisfaction of stl specifications using feedforward neural network controllers: a lagrange multipliers approach,” in 2020 Information Theory and Applications Workshop (ITA). IEEE, 2020, pp. 1–20.

- [12] A. Balakrishnan and J. V. Deshmukh, ”Structured Reward Shaping using Signal Temporal Logic specifications,” 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2019, pp. 3481-3486.

- [13] A. Gopinath Puranic, J. V. Deshmukh and S. Nikolaidis, ”Learning From Demonstrations Using Signal Temporal Logic in Stochastic and Continuous Domains,” in IEEE Robotics and Automation Letters, vol. 6, no. 4, pp. 6250-6257, 2021.

- [14] W. Liu, N. Mehdipour, and C. Belta, “Recurrent neural network controllers for signal temporal logic specifications subject to safety constraints,” IEEE Control Systems Letters, 2021.

- [15] W. Liu and C. Belta, “Model-Based Safe Policy Search from Signal Temporal Logic Specifications Using Recurrent Neural Networks,” arXiv preprint arXiv:2103.15938, 2021.

- [16] S. Hochreiter and J. Schmidhuber, “Long short-term memory,” Neural computation, vol. 9, no. 8, pp. 1735–1780, 1997.

- [17] T. Mikolov, I. Sutskever, K. Chen, G. S. Corrado, and J. Dean, “Distributed representations of words and phrases and their compositionality,” Advances in Neural Information Processing Systems, pp. 3111–3119, 2013.

- [18] X. Rong, “word2vec Parameter Learning Explained,” arXiv:1411.2738, 2016.

- [19] L. Xiao, Z. Serlin, G. Yang, and C. Belta. ”A formal methods approach to interpretable reinforcement learning for robotic planning.” Science Robotics 4, no. 37 2019.

- [20] K. Leung, N. Arechiga, and M. Pavone, “Back-propagation through signal temporal logic specifications: Infusing logical structure into gradient-based methods,” arXiv preprint arXiv:2008.00097, 2020.

- [21] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [22] A. Paszke, S. Gross, S. Chintala, G. Chanan, E. Yang, Z. DeVito, Z. Lin, A. Desmaison, L. Antiga, and A. Lerer, “Automatic differentiation in pytorch,” 2017.

- [23] https://web.casadi.org