STG4Traffic: A Survey and Benchmark of Spatial-Temporal Graph Neural Networks for Traffic Prediction


Abstract

Traffic prediction has been an active research topic in the domain of spatial-temporal data mining. Accurate real-time traffic prediction is essential to improve the safety, stability, and versatility of smart city systems, e.g., traffic control and optimal routing. Because spatial-temporal dependencies are complex and highly dynamic, effective prediction still faces many challenges. Recent studies have shown that spatial-temporal graph neural networks, which combine sequential models with graph convolutional networks to jointly model temporal and spatial correlations, have great potential for traffic prediction. However, a survey of graph learning and spatial-temporal graph models for traffic, as well as a fair comparison of baseline models, are still lacking. In this paper, we first provide a systematic review of graph learning strategies and commonly used graph convolution algorithms. Then we conduct a comprehensive analysis of the strengths and weaknesses of recently proposed spatial-temporal graph network models. Furthermore, we build a study called STG4Traffic using the deep learning framework PyTorch to establish a standardized and scalable benchmark on two types of traffic datasets. The benchmark evaluates the models with uniform metrics while allowing their settings to be customized. Finally, we point out some problems in current studies and discuss future directions. Source codes are available at https://github.com/trainingl/STG4Traffic.

Keywords: Traffic prediction, Urban computing, Graph neural networks, Spatial-temporal data mining, Benchmark

1 Introduction

With the rapid development of the Internet of Things (IoT) and urban computing, the massive deployment of sensors provides a reliable source of data for intelligent transportation systems [1, 2]. To relieve the pressure that growing populations and vehicle numbers place on urbanization, research on data-driven traffic systems has become a hot topic in academia and industry. Traffic prediction, a fundamental task of Intelligent Transportation Systems (ITS), supports many upper-layer applications in traffic scenarios, such as congestion warning, route planning, and location services [3]. Traffic forecasting infers future traffic trends from the statistics, analysis, and summary of historical traffic data [4]. In traffic management and control systems, accurate forecasting helps city managers perceive the health of the road network in real time and adopt timely measures to optimize traffic flow, thus improving road traffic efficiency. In addition, online maps (e.g., Google Maps, Baidu Maps) can improve the quality of urban services by planning routes for travelers in advance and shortening travel time.

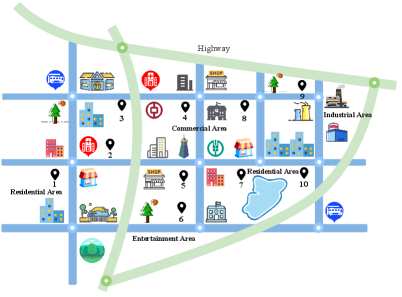

Traffic prediction exhibits typical spatial-temporal correlations. As shown in Fig. 1, traffic variation exhibits intricate and multifaceted patterns of spatial and temporal interdependence. Temporally, traffic measurements (such as flow, speed, and demand) are affected by the daily routines of urban residents and show significant periodicity, e.g., weekday morning and evening peaks and aggregated traffic flow on weekends and holidays [5]. The traffic at an observation point is also closely related to the traffic states in the preceding and following periods, showing a certain closeness and trend. Beyond these temporal properties, traffic changes are also reflected in the information transmitted between nodes of the traffic network. Unlike temporal correlations, the underlying spatial relationships are diverse [6, 7], as illustrated in Fig. 1a: node 7 and node 10 are connected on the same road and have essentially the same traffic patterns; node 7 and node 1 both belong to residential areas and have significant semantic similarities despite their physical distance; and node 7 and node 4 have the same location function (the same POI type, e.g., school or bank), so even though they are not directly connected, they have similar spatial patterns [8]. These complex and changing spatial-temporal properties keep accurate traffic forecasting challenging.

Extensive research has been devoted to modeling spatial-temporal data. The earliest statistical models (e.g., VAR [9], ARIMA [10]) are widely used for time series forecasting because of their simplicity and interpretability. However, with their restricted parameterization, these models struggle to recognize complex patterns, and real traffic data often violate their stationarity assumption. Although machine learning methods (e.g., SVR [11], FNN [12]) are often good at non-linear representation, their performance depends heavily on feature engineering and expert experience. Data-driven deep learning techniques, especially temporal convolution [13], recurrent neural networks (and their variants LSTM [14] and GRU [15]), and the Transformer [16], have made breakthroughs in sequence tasks. However, they treat traffic data as independent signal streams [17], ignoring or barely exploiting spatial dependencies.

One attempt is to divide the spatial region into grids of the same size. ST-ResNet [18] uses deep convolutional networks to implicitly represent the correlations between variables with fixed-size convolution kernels. However, because roads are irregular, grid-based modeling inevitably loses the topological information of the traffic network. Inspired by graph neural networks that model topological graphs, STGCN [19] first proposed stacking gated temporal convolution and graph convolution into Spatial-Temporal Blocks for spatial-temporal prediction. This practice demonstrates that embedding prior knowledge of the traffic graph can greatly improve the model's predictive performance. In later model designs, extensive research efforts have integrated graph neural networks into sequential models to jointly model the latent temporal and spatial dependencies of traffic data [20], achieving state-of-the-art performance. For example, DCRNN [21] integrates diffusion convolution into the GRU, yielding a multi-step prediction architecture that captures graph signals through bidirectional random walks. MTGNN [22] combines adaptive graph learning with dilated convolution to capture spatial-temporal correlations. GMAN [23] uses spatial-temporal attention fusion to enlarge the receptive field of information and reduce errors in long-term prediction.

Over the years, although a considerable number of spatial-temporal graph neural network models have been proposed for traffic prediction, the existing literature lacks comprehensive surveys that focus specifically on graph learning and graph computing. While some studies [24, 25] meticulously organize the datasets and methods for traffic tasks, their scope is too broad to provide precise insight into the core issues and methods of spatial-temporal prediction modeling. Moreover, the field lacks a fair and standardized benchmark. Existing benchmarks such as [26, 27, 8] suffer either from irregular experimental settings and limited scalability or from results that are inconsistent with those in the original papers. As a result, the evaluation of these diverse models remains confusing and poorly organized.

To address the above problems, a focused, clear, and insightful survey will benefit the development of traffic prediction. In this paper, we highlight the graph structure designs and graph computation methods used in spatial modeling, followed by a concrete survey of spatial-temporal graph neural networks. Then we propose a standardized and easily extensible benchmark to evaluate the performance of different models. Lastly, we conclude with a forward-looking analysis of the difficulties and challenges in this field and possible solutions to them.

In summary, the main contributions of our paper are provided as follows:

1. Firstly, we provide a comprehensive overview of graph structure design and graph computation methods employed in spatial-temporal graph modeling. This aspect has received less attention in previous surveys, making our discussion particularly valuable.

2. Secondly, we conduct an in-depth survey of spatial-temporal graph neural networks used for traffic prediction. We categorize these methods into three groups based on their temporal characteristics: CNN-Based, RNN-Based, and Attention-Based. Furthermore, we analyze the technical details and limitations of each specific model.

3. Thirdly, we introduce a benchmark named STG4Traffic, which facilitates a comprehensive evaluation of approximately 18 models on traffic speed datasets (METR-LA, PEMS-BAY) and traffic flow datasets (PEMSD4, PEMSD8). Our benchmark yields results that closely align with those reported in the original papers. It not only offers a common data access interface but also provides a unified model training pipeline for future studies in model design.

4. Lastly, we outline the challenges encountered in traffic modeling from the perspective of data quality, research perspectives, and migration methods. We aim to provide feasible approaches to overcoming the difficulties faced in this field.

2 Problem Statement

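The traffic network can be abstracted as a graph $\mathcal{G}=(\mathcal{V},\mathcal{E},A)$. $\mathcal{V}$ is the set of $N$ nodes, which represent different observation locations (e.g., traffic sensors, roadway monitoring stations) distributed in the road network. $\mathcal{E}$ is the set of edges, and $A\in\mathbb{R}^{N\times N}$ denotes the adjacency matrix depicting the relations between nodes, where each element $A_{ij}$ quantifies the proximity between $v_i$ and $v_j$ from a certain perspective, such as road connectivity, distance proximity, or POI similarity.

The traffic data observed on $\mathcal{G}$ at time $t$ is denoted as the graph signal $X_t\in\mathbb{R}^{N\times C}$, and the signal of the $i$-th node is denoted as $x_t^{i}\in\mathbb{R}^{C}$, where $C$ denotes the feature dimension of each node (e.g., traffic flow, speed, and density). Similarly, the signals of all nodes on $\mathcal{G}$ over a time length $T$ can be represented as a 3D feature tensor $\mathcal{X}\in\mathbb{R}^{T\times N\times C}$. Finally, the traffic prediction problem can be formalized as follows:

$$[X_{t-T+1},\dots,X_{t};\ \mathcal{G}]\ \xrightarrow{\ f(\cdot)\ }\ [X_{t+1},\dots,X_{t+T'}] \tag{1}$$

This formula indicates that, given $T$ steps of historical observations and the graph $\mathcal{G}$, the model predicts the traffic states of the next $T'$ steps. The task is to learn a non-linear function $f$ by minimizing the prediction error with gradient descent. The optimization objective is defined as follows:

$$\theta^{*}=\arg\min_{\theta}\ \mathcal{L}\Big(f_{\theta}\big([X_{t-T+1},\dots,X_{t}];\mathcal{G}\big),\ [X_{t+1},\dots,X_{t+T'}]\Big) \tag{2}$$

where $\theta$ denotes the parameters of $f$ to be optimized and $\mathcal{L}$ is the error function (e.g., the mean absolute error).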
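To make Eq. (1) concrete, the sketch below slices a recorded series into (history, future) pairs with a sliding window. It assumes the data are held in a NumPy array of shape (time, nodes, features). The function name `make_windows` and the toy series are hypothetical, `T` is the history length, and `T_out` stands for the prediction length $T'$. The training loop that minimizes the error in Eq. (2) is not shown.

```python
import numpy as np


def make_windows(data, T=12, T_out=12):
    """Slice a (time, nodes, features) array into input/target pairs.

    X[s] holds steps s .. s+T-1 (history) and Y[s] holds steps
    s+T .. s+T+T_out-1 (future), so each sample matches Eq. (1).
    """
    num_samples = data.shape[0] - T - T_out + 1
    if num_samples <= 0:
        raise ValueError("series too short for the requested window lengths")
    X = np.stack([data[s:s + T] for s in range(num_samples)])
    Y = np.stack([data[s + T:s + T + T_out] for s in range(num_samples)])
    return X, Y


# Toy series: 30 steps, 4 nodes, 1 feature; each value equals its time index.
series = np.arange(30, dtype=float)[:, None, None] * np.ones((1, 4, 1))
X, Y = make_windows(series)
print(X.shape, Y.shape)  # (7, 12, 4, 1) (7, 12, 4, 1)
```

With 30 steps and 12 + 12 steps per sample, only 7 complete windows fit, which is why long raw series are needed for the 12-step setting.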

3 Graph Learning and Computing

Although graph neural networks (GNNs) can aggregate the contexts of node neighborhoods to generate spatial representations [28], task performance depends closely on the quality of the input graph structure and on the computational method used for graph convolution. As shown in Fig. 1a, the spatial relationships among nodes in traffic networks are complex and diverse, and the spatial dependencies behind a traffic system cannot be fully explored with a single graph design and a simple equation [29]. Therefore, this section focuses on the following two issues:

1. Q1: How to design a reasonable graph structure? And how to mine underlying spatial relationships from the time-series data itself without prior knowledge?

2. Q2: How to perform efficient convolution computation on the existing graphs?

3.1 Graph Structure Learning (Q1)

Message passing in GNNs relies on local similarity [30]: nodes that are closer tend to exhibit more similar traffic patterns. Most spatial-temporal graphs used for traffic prediction compute edge weights from road-network distances or from straight-line distances between physical coordinates [8]. The former typically yields a directed graph that reflects the actual layout of the road network, while the latter yields an undirected graph that only measures the spatial distance between pairs of nodes.

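Distance-based Graph. The adjacency matrix of the distance graph is defined with a thresholded Gaussian kernel [19, 31, 32] as follows:

$$A^{\mathrm{dis}}_{ij}=\begin{cases}\exp\!\left(-\dfrac{d_{ij}^{2}}{\sigma^{2}}\right), & i\neq j\ \text{and}\ \exp\!\left(-\dfrac{d_{ij}^{2}}{\sigma^{2}}\right)\geq\epsilon,\\[4pt] 0, & \text{otherwise,}\end{cases} \tag{3}$$

where $d_{ij}$ is the measured distance between $v_i$ and $v_j$. The threshold $\epsilon$ and the variance $\sigma^{2}$ control the sparsity and distribution of $A^{\mathrm{dis}}$.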
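A minimal NumPy sketch of the thresholded Gaussian kernel, which keeps $\exp(-d_{ij}^2/\sigma^2)$ only when it reaches $\epsilon$. The helper name `distance_graph`, the use of `np.inf` to mark unmeasured pairs, and the toy distances are assumptions for illustration, not taken from any specific codebase.

```python
import numpy as np


def distance_graph(dist, sigma, epsilon=0.1):
    """Thresholded Gaussian kernel adjacency matrix (Eq. 3).

    dist: (N, N) pairwise distances, np.inf where two sensors are not linked.
    sigma: kernel width (sigma**2 plays the role of the variance).
    epsilon: edges with weight below this threshold are dropped.
    """
    dist = np.asarray(dist, dtype=float)
    weights = np.zeros_like(dist)
    finite = np.isfinite(dist)
    weights[finite] = np.exp(-dist[finite] ** 2 / sigma ** 2)
    weights[weights < epsilon] = 0.0   # sparsify: remove weak edges
    np.fill_diagonal(weights, 0.0)     # no self-loops (i != j)
    return weights


# Road-network distances are directed, so dist need not be symmetric.
dist = np.array([[0.0, 1.0, 3.0],
                 [1.0, 0.0, 2.0],
                 [np.inf, 2.0, 0.0]])
A = distance_graph(dist, sigma=2.0, epsilon=0.3)
print(np.round(A, 3))
```

Here the pair at distance 3 falls below the threshold and is pruned, illustrating how $\epsilon$ controls sparsity.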

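Connectivity Graph, also called Binary Graph [33, 7, 34]. Similarly, $A^{\mathrm{con}}$ is mathematically defined as follows:

$$A^{\mathrm{con}}_{ij}=\begin{cases}1, & v_i\ \text{and}\ v_j\ \text{are connected},\\ 0, & \text{otherwise.}\end{cases} \tag{4}$$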

Semantic Graph. We observe that certain nodes are geographically distant yet have the same or similar patterns of traffic variation (for example, because they lie in the same type of area, such as a residential or commercial region). This suggests that node pairs can also have significant semantic correlations.

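Generally, the Dynamic Time Warping (DTW) algorithm [35, 36, 37] is used to calculate the similarity of temporal patterns in historical observations. The semantic similarity matrix can be calculated according to the following equation:

$$A^{\mathrm{sem}}_{ij}=\begin{cases}1, & \mathrm{DTW}(X^{i},X^{j})<\epsilon,\\ 0, & \text{otherwise,}\end{cases} \tag{5}$$

where $X^{i}$ is the historical observation data of the $i$-th node and $\epsilon$ is a distance threshold.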
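The following sketch computes DTW with the textbook dynamic program and thresholds it into a binary semantic graph. The absolute-difference cost, the helper names, the threshold value, and the toy series are assumptions. The exact algorithm is quadratic in the series length, so practical implementations often add a warping window or a fast approximation.

```python
import numpy as np


def dtw_distance(a, b):
    """Classic O(len(a) * len(b)) dynamic time warping with |a_i - b_j| cost."""
    n, m = len(a), len(b)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three admissible warping moves.
            cost[i, j] = step + min(cost[i - 1, j], cost[i, j - 1], cost[i - 1, j - 1])
    return cost[n, m]


def semantic_graph(series, epsilon):
    """Binary semantic adjacency (Eq. 5): 1 where DTW(X^i, X^j) < epsilon."""
    num_nodes = series.shape[0]
    adj = np.zeros((num_nodes, num_nodes))
    for i in range(num_nodes):
        for j in range(i, num_nodes):          # DTW is symmetric: fill both halves
            if dtw_distance(series[i], series[j]) < epsilon:
                adj[i, j] = adj[j, i] = 1.0
    return adj


series = np.array([[0, 1, 2, 3, 2, 1],     # node 0
                   [0, 0, 1, 2, 3, 2],     # node 1: node 0 delayed by one step
                   [5, 5, 5, 5, 5, 5]],    # node 2: flat profile
                  dtype=float)
A = semantic_graph(series, epsilon=3.0)
```

Because DTW aligns the delayed copy with the original, nodes 0 and 1 end up linked even though a point-wise distance would treat them as quite different.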

Functionality Graph. The distribution of POIs surrounding a node determines the usage of its district. Studies [38, 7, 6] revealed that this composite spatial dependence and heterogeneity largely influence traffic trends. In practice, we characterize the functionality of a region by the categories and numbers of nearby POIs [7] and define the functional proximity between a node pair as $A^{\mathrm{fun}}_{ij}=\mathrm{sim}(P_i,P_j)$. Cosine similarity [39] is a typical method for calculating the functional similarity matrix, with the following equation:

$$A^{\mathrm{fun}}_{ij}=\frac{P_i\cdot P_j}{\lVert P_i\rVert\,\lVert P_j\rVert} \tag{6}$$

where $P_i=(p_i^{1},\dots,p_i^{m})$ is the POI encoding vector of node $v_i$, whose dimension $m$ equals the number of POI categories, and $p_i^{k}$ is calculated in some way to represent the density of the $k$-th POI category around $v_i$.
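A small sketch of the cosine-similarity functionality graph, assuming POI information has already been aggregated into one count vector per node. The helper name, the category names, and the counts are hypothetical.

```python
import numpy as np


def functional_graph(poi):
    """Cosine-similarity functionality graph (Eq. 6).

    poi: (N, m) array; row i counts (or densities) of m POI categories near node i.
    """
    P = np.asarray(poi, dtype=float)
    norms = np.linalg.norm(P, axis=1, keepdims=True)
    norms[norms == 0] = 1.0        # nodes without POIs get all-zero similarity rows
    P_unit = P / norms
    return P_unit @ P_unit.T


# Hypothetical POI counts for categories (residential, school, bank).
poi = np.array([[20, 0, 10],
                [40, 0, 20],
                [0, 5, 0]])
A = functional_graph(poi)
```

Nodes 0 and 1 receive similarity 1 because their POI mixes have the same proportions, even though node 1 is twice as dense; cosine similarity compares composition rather than volume.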

Distribution Graph. Some metrics (e.g., the Pearson correlation coefficient) can describe the differences in traffic trends between nodes. However, their results are sensitive to the series length: when the series is short, the metric is easily disturbed by noise, and when it is too long, the trend variability it reflects is diminished. In contrast, comparing the feature distributions of nodes from a macroscopic perspective both resists data noise and effectively measures the overall proximity of nodes. KL divergence [40] and JS divergence [41, 42] are often used to evaluate the similarity of two probability distributions. Let $\mathcal{P}_i$ and $\mathcal{P}_j$ denote the distributions of the observed values of nodes $v_i$ and $v_j$. The distribution matrix based on the JS divergence (JSD) [43] can be formulated as follows:

$$\mathrm{JSD}(\mathcal{P}_i\,\Vert\,\mathcal{P}_j)=\tfrac{1}{2}\mathrm{KL}(\mathcal{P}_i\,\Vert\,\mathcal{M})+\tfrac{1}{2}\mathrm{KL}(\mathcal{P}_j\,\Vert\,\mathcal{M}),\qquad \mathcal{M}=\tfrac{1}{2}(\mathcal{P}_i+\mathcal{P}_j) \tag{7}$$

where the KL divergence can be expressed as:

$$\mathrm{KL}(\mathcal{P}\,\Vert\,\mathcal{Q})=\sum_{x}\mathcal{P}(x)\log_{2}\frac{\mathcal{P}(x)}{\mathcal{Q}(x)} \tag{8}$$

With base-2 logarithms, the range of JSD is [0, 1], and smaller values indicate greater distribution similarity. Thus, we define $A^{\mathrm{js}}_{ij}=1-\mathrm{JSD}(\mathcal{P}_i\,\Vert\,\mathcal{P}_j)$.
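The distribution graph can be sketched by histogramming each node's observed values on a shared set of bins and comparing the histograms with base-2 JS divergence, which keeps the result in [0, 1]. The histogram estimator, the bin count, and the helper names are assumptions of this sketch; other density estimates are possible.

```python
import numpy as np


def kl_divergence(p, q):
    """KL(p || q) with base-2 logs; terms with p(x) = 0 contribute nothing."""
    mask = p > 0
    return np.sum(p[mask] * np.log2(p[mask] / q[mask]))


def js_divergence(p, q):
    """Jensen-Shannon divergence (Eq. 7); lies in [0, 1] with base-2 logs."""
    p = np.asarray(p, dtype=float) / np.sum(p)
    q = np.asarray(q, dtype=float) / np.sum(q)
    m = 0.5 * (p + q)                 # mixture distribution M (positive wherever p or q is)
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


def distribution_graph(series, bins=10):
    """A_ij = 1 - JSD between value histograms of nodes i and j."""
    lo, hi = series.min(), series.max()
    # A shared bin range makes the histograms comparable across nodes.
    hists = [np.histogram(x, bins=bins, range=(lo, hi))[0] for x in series]
    n = len(hists)
    adj = np.ones((n, n))
    for i in range(n):
        for j in range(i + 1, n):
            adj[i, j] = adj[j, i] = 1.0 - js_divergence(hists[i], hists[j])
    return adj


series = np.array([[0.0, 0.0, 0.0, 0.0],
                   [0.0, 0.0, 0.0, 0.0],
                   [10.0, 10.0, 10.0, 10.0]])
A = distribution_graph(series, bins=2)
```

Since only value distributions are compared, the ordering of observations in time does not affect the result, which is what makes this measure robust to short-term noise.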

The aforementioned graph construction methods either encode adjacency matrices from prior knowledge or construct similarity matrices from statistical analysis. Used as auxiliary inputs to spatial modeling, they significantly enhance the model's spatial-temporal awareness and compensate for the information bias introduced by any single graph.

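Table 1: Frequently used equations for constructing adaptive graphs.

| Method | Equation |
| --- | --- |
| Direct Parametric A | $A=\mathrm{ReLU}(W)$, $W\in\mathbb{R}^{N\times N}$ |
| Undirected Graph A | $A=\mathrm{ReLU}\big(\tanh(\alpha M_1M_1^{\top})\big)$ |
| Directed Graph A | $A=\mathrm{ReLU}\big(\tanh(\alpha M_1M_2^{\top})\big)$ |
| Uni-Directed Graph A | $A=\mathrm{ReLU}\big(\tanh(\alpha(M_1M_2^{\top}-M_2M_1^{\top}))\big)$ |
| Attention-Based A | $A=\mathrm{SoftMax}\big((EW_Q)(EW_K)^{\top}/\sqrt{d}\big)$ |

Note: $M_1=\tanh(\alpha E_1\Theta_1)$ and $M_2=\tanh(\alpha E_2\Theta_2)$, where $E,E_1,E_2\in\mathbb{R}^{N\times d}$ are learnable node embeddings, $W,\Theta_1,\Theta_2,W_Q,W_K$ are learnable parameters, and $\alpha$ is a saturation hyperparameter.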

However, the connectivity relations of pre-defined graphs are often incomplete and biased. On the one hand, they rely on additional data sources and expert experience; on the other hand, they can hardly depict the full picture of spatial dependencies. This prevents them from extending to general spatial-temporal graph tasks. The Adaptive Graph is instead parameterized by node embeddings [44, 45, 46] that are continuously updated during training to reduce model errors. It corrects biases caused by human definitions and captures hidden spatial dependencies.

The adoption of adaptive graphs has made remarkable progress in traffic prediction. Here we organize the construction equations frequently used in STGNN models [22, 8] into Table 1. These approaches allow graph computation to proceed without relying on prior knowledge.
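As an illustration of these adaptive constructions, the uni-directed graph in the style of MTGNN [22] can be sketched as $A=\mathrm{ReLU}(\tanh(\alpha(M_1M_2^{\top}-M_2M_1^{\top})))$ with $M_1=\tanh(\alpha E_1\Theta_1)$ and $M_2=\tanh(\alpha E_2\Theta_2)$. In a real model, $E_1,E_2,\Theta_1,\Theta_2$ are trainable and updated by backpropagation; here they are random NumPy arrays, and the value $\alpha=3$ and the helper name are assumptions.

```python
import numpy as np


def uni_directed_graph(E1, E2, Theta1, Theta2, alpha=3.0):
    """Adaptive adjacency from node embeddings (MTGNN-style uni-directed form)."""
    M1 = np.tanh(alpha * E1 @ Theta1)
    M2 = np.tanh(alpha * E2 @ Theta2)
    # M1 M2^T - M2 M1^T is antisymmetric, so after ReLU at most one of
    # A_ij and A_ji is positive: each learned edge has a single direction.
    return np.maximum(0.0, np.tanh(alpha * (M1 @ M2.T - M2 @ M1.T)))


rng = np.random.default_rng(0)
N, d = 5, 4                                     # nodes, embedding size
E1, E2 = rng.normal(size=(N, d)), rng.normal(size=(N, d))
Theta1, Theta2 = rng.normal(size=(d, d)), rng.normal(size=(d, d))
A = uni_directed_graph(E1, E2, Theta1, Theta2)
```

The antisymmetric difference is the design choice that distinguishes this form from the plain directed variant, which can learn edges in both directions between the same pair.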

Meanwhile, a graph learning and optimization strategy based on sampling continuous signals has been proposed [47, 48], as illustrated in Fig. 2. It first extracts a spatial embedding for each node from the historical observation sequence, or initializes the embedding parameters directly. Then it computes a pairwise similarity matrix from the dot products of the spatial embeddings. Finally, it uses the Gumbel softmax trick [49] to reparameterize the distribution of the probabilistic graph and remove the noise contained in redundant small values. The Sampled Graph is formulated as follows:

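$$A^{\mathrm{smp}}_{ij}=\mathrm{sigmoid}\!\left(\frac{\log\frac{\pi_{ij}}{1-\pi_{ij}}+g^{1}_{ij}-g^{2}_{ij}}{\tau}\right) \tag{9}$$

where $\Pi$ is a probability matrix and $\pi_{ij}\in\Pi$ represents the probability of retaining the edge between $v_i$ and $v_j$. Here $g^{1}_{ij},g^{2}_{ij}\sim\mathrm{Gumbel}(0,1)$, and $\tau$ is a temperature hyperparameter.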
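A NumPy sketch of the sampling step: the log-odds of each retention probability are perturbed by the difference of two Gumbel(0, 1) samples and squashed by a sigmoid at temperature $\tau$. NumPy has no automatic differentiation, so this only illustrates the forward computation. In a trainable model the same expression would be written in an autodiff framework so that gradients flow back to the probabilities. The helper name and the parameter values are assumptions.

```python
import numpy as np


def sample_graph(pi, tau=0.5, rng=None):
    """Relaxed Bernoulli edge sampling with the Gumbel trick (Eq. 9).

    pi: (N, N) edge-retention probabilities; tau: temperature.
    Smaller tau pushes samples towards hard 0/1 edges.
    """
    rng = np.random.default_rng() if rng is None else rng
    pi = np.clip(pi, 1e-6, 1.0 - 1e-6)            # keep the log-odds finite
    logits = np.log(pi) - np.log1p(-pi)            # log(pi / (1 - pi))
    g1 = rng.gumbel(size=pi.shape)                 # Gumbel(0, 1) noise
    g2 = rng.gumbel(size=pi.shape)
    return 1.0 / (1.0 + np.exp(-(logits + g1 - g2) / tau))


rng = np.random.default_rng(0)
pi = np.full((200, 200), 0.3)
A = sample_graph(pi, tau=0.05, rng=rng)
print(round(A.mean(), 2))   # close to 0.3, the retention probability
```

At low temperature the samples are nearly binary, and the fraction of retained edges approaches the retention probability.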

The sampled graph continuously adapts to the training data in the downstream task, optimizing its structure parameters and learning a similarity matrix that minimizes the training error in an end-to-end way [29]. Additionally, a regularization term on the graph is also added to prevent the learned graph from deviating too far from the prior graph.

Research on graph learning goes beyond these methods. Graphs, whether learned or pre-defined, can serve as additional information that helps models extract better spatial representations. However, apart from prediction accuracy, there is currently no gold-standard measure of the quality of a learned graph.

3.2 Graph Computation Method (Q2)

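The essence of GNNs is to aggregate the features of the target node and its neighbors to generate high-level hidden representations [28]. The spectral method [50] uses Chebyshev polynomials to approximate spectral filters and extract features from the graph signal. The formula is as follows:

$$g_{\theta}\star_{\mathcal{G}}x=Ug_{\theta}(\Lambda)U^{\top}x\approx\sum_{k=0}^{K-1}\theta_{k}T_{k}(\tilde{L})\,x \tag{10}$$

where the graph Fourier basis $U$ is the matrix of eigenvectors of the normalized graph Laplacian $L=I_N-D^{-1/2}AD^{-1/2}=U\Lambda U^{\top}$, $T_k(\cdot)$ is the Chebyshev polynomial of order $k$ with coefficient $\theta_k$, and $\tilde{L}=2L/\lambda_{\max}-I_N$ is the rescaled Laplacian. To balance performance and complexity, GCN (Graph Convolutional Network) [51], the first-order approximation of ChebNet, is most commonly used in practice. Given the graph signal matrix $X$ and the adjacency matrix $A$, the graph convolutional network can be simplified to the following equation:

$$H=\tilde{D}^{-1/2}\tilde{A}\tilde{D}^{-1/2}XW \tag{11}$$

where $\tilde{A}=A+I_N$, $I_N$ is the identity matrix, $\tilde{D}$ is the degree matrix of $\tilde{A}$ with $\tilde{D}_{ii}=\sum_{j}\tilde{A}_{ij}$, and $W$ is a learnable weight matrix. This spatial-domain form of graph convolution is widely used in spatial-temporal graph networks to capture the spatial dependence of undirected graphs.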
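The two filters above can be sketched in NumPy as follows. For brevity, each Chebyshev order uses a single scalar coefficient instead of a full filter matrix, and the GCN layer omits the nonlinearity. Both simplifications, and the helper names, are assumptions of this sketch.

```python
import numpy as np


def normalized_laplacian(A):
    """L = I - D^{-1/2} A D^{-1/2} for a symmetric adjacency matrix."""
    A = np.asarray(A, dtype=float)
    deg = A.sum(axis=1)
    d_inv_sqrt = np.divide(1.0, np.sqrt(deg), out=np.zeros_like(deg), where=deg > 0)
    return np.eye(A.shape[0]) - d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]


def cheb_conv(X, A, thetas):
    """Eq. (10) with one scalar coefficient theta_k per order (single-channel sketch)."""
    L = normalized_laplacian(A)
    lambda_max = np.linalg.eigvalsh(L).max()
    L_t = 2.0 * L / lambda_max - np.eye(L.shape[0])   # rescale the spectrum to [-1, 1]
    T_prev, T_curr = X, L_t @ X                        # T_0(L~) X and T_1(L~) X
    out = thetas[0] * T_prev
    if len(thetas) > 1:
        out = out + thetas[1] * T_curr
    for theta in thetas[2:]:
        # Chebyshev recurrence: T_k(L~) X = 2 L~ T_{k-1}(L~) X - T_{k-2}(L~) X
        T_prev, T_curr = T_curr, 2.0 * L_t @ T_curr - T_prev
        out = out + theta * T_curr
    return out


def gcn_layer(X, A, W):
    """Eq. (11): renormalized first-order GCN, D~^{-1/2} (A + I) D~^{-1/2} X W."""
    A_tilde = np.asarray(A, dtype=float) + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_tilde.sum(axis=1))
    A_norm = d_inv_sqrt[:, None] * A_tilde * d_inv_sqrt[None, :]
    return A_norm @ X @ W


A = np.array([[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]])   # 3-node path graph
X = np.array([[1.0], [2.0], [3.0]])
H_cheb = cheb_conv(X, A, thetas=[0.5, 0.3, 0.2])   # K = 3 orders, i.e. up to 2 hops
H_gcn = gcn_layer(np.ones((3, 1)), A, np.eye(1))
```

The recurrence avoids the eigendecomposition needed to apply $U g_{\theta}(\Lambda)U^{\top}$ directly. Only $\lambda_{\max}$ is computed here, and in practice it is often approximated.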

Diffusion Graph Convolution. Traffic propagation is directional, and the impact on a node may come from more distant nodes, which makes a simple GCN inadequate for complex scenarios. In comparison, diffusion convolution on directed graphs can capture information from bidirectional neighbors up to order $K$, expanding the model's spatial receptive field. The equation [21, 45] is expressed as follows:

$$H=\sum_{k=0}^{K}\Big(\theta_{k,1}\big(D_O^{-1}A\big)^{k}+\theta_{k,2}\big(D_I^{-1}A^{\top}\big)^{k}\Big)X \tag{12}$$

where $D_O$ and $D_I$ are the out-degree and in-degree matrices, respectively, and $\theta_{k,1}$ and $\theta_{k,2}$ are learnable parameters.
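A single-channel NumPy sketch of Eq. (12). The forward and backward transition matrices are obtained by row-normalizing $A$ and $A^{\top}$, and the $k$-step diffusion is applied by repeated multiplication rather than by forming matrix powers. Scalar weights stand in for the learnable parameters, and the helper names are hypothetical.

```python
import numpy as np


def row_normalize(M):
    """Divide each row by its sum; rows that sum to zero stay zero."""
    M = np.asarray(M, dtype=float)
    s = M.sum(axis=1, keepdims=True)
    return np.divide(M, s, out=np.zeros_like(M), where=s > 0)


def diffusion_conv(X, A, theta_fwd, theta_bwd):
    """Eq. (12) with scalar weights theta_{k,1}, theta_{k,2} for k = 0..K."""
    A = np.asarray(A, dtype=float)
    P_fwd = row_normalize(A)        # D_O^{-1} A   (forward random walk)
    P_bwd = row_normalize(A.T)      # D_I^{-1} A^T (backward random walk)
    X_fwd = X_bwd = np.asarray(X, dtype=float)       # k = 0 term: P^0 X = X
    out = np.zeros_like(X_fwd)
    for t1, t2 in zip(theta_fwd, theta_bwd):
        out = out + t1 * X_fwd + t2 * X_bwd
        X_fwd, X_bwd = P_fwd @ X_fwd, P_bwd @ X_bwd  # advance one diffusion step
    return out


# Directed chain 0 -> 1 -> 2.
A = np.array([[0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [0.0, 0.0, 0.0]])
X = np.array([[1.0], [2.0], [3.0]])
fwd_only = diffusion_conv(X, A, theta_fwd=[0.0, 1.0], theta_bwd=[0.0, 0.0])
bwd_only = diffusion_conv(X, A, theta_fwd=[0.0, 0.0], theta_bwd=[0.0, 1.0])
```

With one step, each node reads from its downstream neighbor in the forward walk and from its upstream neighbor in the backward walk. This is the directional information that a symmetric GCN would blend together.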

Multi-Hop Graph Convolution. It is well known that stacking GNN layers leads to over-smoothing of signals [52], i.e., the representations of all nodes converge to the same value. Therefore, graph convolutional networks are typically limited to two layers, but such shallow networks cannot capture richer and deeper spatial features [35]. Residual connections can mitigate this issue. The computation of a uni-directional multi-hop graph convolution [22, 8] can be expressed as Eq. (13) and Eq. (14).

$$H^{(0)}=H_{\mathrm{in}},\qquad \hat{A}=\tilde{D}^{-1}(A+I_N) \tag{13}$$

$$H^{(k)}=\beta H_{\mathrm{in}}+(1-\beta)\,\sigma\big(\hat{A}H^{(k-1)}\big),\qquad k=1,\dots,K \tag{14}$$

where $\tilde{D}$ is the degree matrix of $A+I_N$, $\sigma$ is an activation function, and $\beta$ is a hyperparameter that controls the proportion of the root node's original state that is preserved.

The messages from multi-hop nodes are aggregated into the output of the hidden layer by linear weighting or attention aggregation [53], or by pooling operations such as Max and Avg. Formally,

$$H_{\mathrm{out}}=\mathrm{AGG}\big(H^{(0)},H^{(1)},\dots,H^{(K)}\big),\quad \text{e.g.,}\ H_{\mathrm{out}}=\sum_{k=0}^{K}H^{(k)}W^{(k)} \tag{15}$$

The multi-hop graph convolutional network uses multiple layers of convolutions to effectively extract features of the layered local substructures of nodes.
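The propagation and aggregation steps can be sketched as below. The identity activation, the default values of `K` and `beta`, and the use of average pooling when no weights are given are assumptions of this sketch, not settings taken from MTGNN or DGCRN.

```python
import numpy as np


def mix_hop(H_in, A, K=2, beta=0.05, weights=None, act=lambda z: z):
    """Multi-hop propagation (Eqs. 13-14) followed by aggregation (Eq. 15).

    act plays the role of sigma (identity by default); weights is an optional
    list of K + 1 matrices W^(k); without it the hops are average-pooled.
    """
    A = np.asarray(A, dtype=float)
    A_hat = A + np.eye(A.shape[0])
    A_hat = A_hat / A_hat.sum(axis=1, keepdims=True)      # D~^{-1} (A + I)
    hops = [H_in]                                          # H^(0) = H_in
    H = H_in
    for _ in range(K):
        # Keep a fraction beta of the root state to limit over-smoothing.
        H = beta * H_in + (1.0 - beta) * act(A_hat @ H)
        hops.append(H)
    if weights is None:
        return np.mean(hops, axis=0)                       # Avg pooling over hops
    return sum(h @ W for h, W in zip(hops, weights))       # linear weighting


A = np.array([[0.0, 1.0], [1.0, 0.0]])
H_in = np.array([[1.0], [3.0]])
out = mix_hop(H_in, A, K=1, beta=0.0)
```

With `beta=0` one hop already averages the two nodes to the same value, which is the over-smoothing effect. A positive `beta` keeps part of each node's own state in every hop.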

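Graph Attention Network. Graph attention [54] dynamically computes edge weights between nodes from their feature similarity, which makes it more suitable for traffic scenarios that change in real time. Compared with GCN, GAT is also more flexible. Let the feature vectors of $v_i$ and $v_j$ be $h_i$ and $h_j$, and let $\mathcal{N}_i$ be the set of neighbors of $v_i$. The equations of graph attention are as follows:

$$e_{ij}=\mathrm{LeakyReLU}\big(\mathbf{a}^{\top}[Wh_i\,\Vert\,Wh_j]\big) \tag{16}$$

$$\alpha_{ij}=\frac{\exp(e_{ij})}{\sum_{k\in\mathcal{N}_i}\exp(e_{ik})} \tag{17}$$

where $W$ is a learnable linear transformation, $\Vert$ denotes concatenation, and $\mathbf{a}$ maps the concatenated vector to a scalar. $\alpha_{ij}$ denotes the normalized attention score.

To obtain richer representations, $M$-head attention performs $M$ independent transformations in separate subspaces and concatenates the results:

$$h_i'=\big\Vert_{m=1}^{M}\,\sigma\Big(\sum_{j\in\mathcal{N}_i}\alpha_{ij}^{m}W^{m}h_j\Big) \tag{18}$$

Formally, we formulate the above process in a unified matrix equation:

$$H'=\big\Vert_{m=1}^{M}H^{m},\qquad H^{m}=\sigma\big(S^{m}H(W^{m})^{\top}\big),\qquad S^{m}=\mathrm{RowNorm}\big(\mathbb{M}\odot\exp(E^{m})\big) \tag{19}$$

where $H$ stacks the row vectors $h_i^{\top}$, $E^{m}_{ij}=e^{m}_{ij}$ are the attention logits of the $m$-th head, $\mathbb{M}$ is the binary neighbor mask with $\mathbb{M}_{ij}=1$ if $j\in\mathcal{N}_i$, $\odot$ is the element-wise product, $\mathrm{RowNorm}$ divides each row by its sum, and $S^{m}$ denotes the dynamic attention matrix. $H^{m}$ is the output of the $m$-th graph attention head, and when $M=1$, $H'=H^{1}$.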
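A NumPy sketch of the masked matrix form of multi-head graph attention. Masking is done by setting non-neighbor logits to $-\infty$ before a row-wise softmax. This is equivalent to multiplying $\exp(E)$ by the binary neighbor mask and renormalizing each row. The weights are applied as `H @ W` (i.e., $W$ is stored transposed), and `tanh` is only a placeholder for $\sigma$; the original GAT uses ELU. Each node must have at least one neighbor, e.g., a self-loop. The helper names are hypothetical.

```python
import numpy as np


def leaky_relu(z, slope=0.2):
    return np.where(z > 0, z, slope * z)


def gat_head(H, mask, W, a):
    """One attention head in the masked matrix form of Eqs. (16), (17) and (19).

    H: (N, F) node features (row i is h_i); mask: (N, N) 0/1 neighbour mask with
    self-loops; W: (F, F') weights applied as H @ W; a: (2F',) attention vector.
    Returns the attention matrix S and the aggregated features S @ H @ W.
    """
    Z = H @ W
    f = Z.shape[1]
    # a^T [z_i || z_j] splits into a_1^T z_i + a_2^T z_j.
    e = leaky_relu((Z @ a[:f])[:, None] + (Z @ a[f:])[None, :])
    e = np.where(mask > 0, e, -np.inf)            # non-neighbours get zero weight
    S = np.exp(e - e.max(axis=1, keepdims=True))  # shift by the neighbour max for stability
    S = S / S.sum(axis=1, keepdims=True)          # softmax over N_i (Eq. 17)
    return S, S @ Z


def gat_layer(H, mask, Ws, As, act=np.tanh):
    """Eq. (19): concatenate M heads, each act(S^m H W^m)."""
    return np.concatenate([act(gat_head(H, mask, W, a)[1]) for W, a in zip(Ws, As)], axis=1)


rng = np.random.default_rng(0)
N, F, Fp, heads = 4, 3, 2, 2
H = rng.normal(size=(N, F))
mask = np.eye(N) + np.diag(np.ones(N - 1), 1) + np.diag(np.ones(N - 1), -1)  # path + self-loops
Ws = [rng.normal(size=(F, Fp)) for _ in range(heads)]
As = [rng.normal(size=2 * Fp) for _ in range(heads)]
S, _ = gat_head(H, mask, Ws[0], As[0])
H_out = gat_layer(H, mask, Ws, As)
```

Because $S^{m}$ is recomputed from the current features, the effective graph changes with the input, which is what makes attention-based spatial modeling dynamic.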

Exploring deeper spatial features remains an active area of research. Currently, the graph computation methods above are widely adopted to model spatial dependencies in STGNNs.

4 Spatial-Temporal Graph Neural Networks

Spatial-temporal graph neural networks (STGNNs) have become a popular deep learning approach that integrates graph convolutional layers into sequence models, effectively capturing both the spatial and the temporal characteristics of traffic signals. By considering the entire road network and modeling spatial information, STGNNs overcome the limitation of analyzing each data stream independently and significantly improve prediction accuracy. Over the past five years of rapid development, a large body of work has applied spatial-temporal graphs to traffic prediction. According to how the temporal axis is modeled, these methods can be divided into three categories: CNN-Based, RNN-Based, and Attention-Based. Representative STGNN architectures are shown in Fig. 3. CNN-Based methods (STGCN, Graph WaveNet, MTGNN, etc.) combine 1D CNNs (TCNs) with graph convolutional layers to construct ST-Blocks and learn asynchronous spatial-temporal patterns by cascading these ST-Blocks. 1D CNNs capture longer-range temporal features by stacking convolutional layers or adding dilation factors, and thus enjoy good computational efficiency and stable gradients. However, the implicit temporal connections encoded by fixed convolution kernels limit their flexibility and, more importantly, prevent them from capturing the synchronous information in spatial-temporal signals.
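The receptive-field argument for dilated TCNs can be checked with simple arithmetic: a stack of causal 1D convolutions with kernel size $k$ and dilation factors $d_l$ covers $1+\sum_l (k-1)d_l$ time steps. The helper below is a hypothetical illustration, not code from any surveyed model.

```python
def receptive_field(kernel_size, dilations):
    """Receptive field (in time steps) of stacked causal 1D convolutions."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


# Four layers with kernel size 2: doubling the dilation covers 16 steps,
# enough for a 12-step input window, while no dilation covers only 5.
print(receptive_field(2, [1, 2, 4, 8]))  # 16
print(receptive_field(2, [1, 1, 1, 1]))  # 5
```

The receptive field grows exponentially with depth when dilations double, but only linearly without dilation, which explains why dilated TCNs stay shallow and efficient.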

In contrast, Recurrent Neural Networks (RNNs, e.g., LSTM and GRU) are powerful at modeling sequential dependencies. Many RNN-Based methods (DCRNN, MRA-BGCN, AGCRN, etc.) replace the fully-connected operations in RNN cells with graph convolutions, so that local spatial dependencies are captured in both the input-to-state and the state-to-state transitions. Because every time step is coupled with a graph convolution, this design can learn spatial-temporal signals that change synchronously. However, it suffers from gradient instability and is very time-consuming in both training and inference. Furthermore, the forget-gate mechanism of RNNs constrains their ability to capture long-term temporal dependencies.
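The idea of replacing the dense maps of an RNN cell with graph convolutions can be sketched with a GRU. This NumPy version uses a one-hop convolution $\hat{A}XW$ for every gate, whereas DCRNN, for example, uses diffusion convolution instead. The helper names, the toy normalized adjacency, and the random weights are assumptions of this sketch.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def graph_gru_cell(X_t, H_prev, A_norm, p):
    """GRU cell whose dense layers are replaced by one-hop graph convolutions.

    X_t: (N, C) input at step t; H_prev: (N, D) previous hidden state;
    A_norm: (N, N) normalized adjacency; p: dict of weights (C + D, D) and biases (D,).
    """
    XH = np.concatenate([X_t, H_prev], axis=1)
    r = sigmoid(A_norm @ XH @ p["W_r"] + p["b_r"])            # reset gate
    u = sigmoid(A_norm @ XH @ p["W_u"] + p["b_u"])            # update gate
    XrH = np.concatenate([X_t, r * H_prev], axis=1)
    c = np.tanh(A_norm @ XrH @ p["W_c"] + p["b_c"])           # candidate state
    return u * H_prev + (1.0 - u) * c


rng = np.random.default_rng(0)
N, C, D, T = 4, 2, 8, 12
A_norm = np.ones((N, N)) / N                   # toy fully-connected normalized graph
p = {f"W_{g}": rng.normal(scale=0.1, size=(C + D, D)) for g in "ruc"}
p.update({f"b_{g}": np.zeros(D) for g in "ruc"})
X_seq = rng.normal(size=(T, N, C))
H = np.zeros((N, D))
for t in range(T):                             # every step couples time and space
    H = graph_gru_cell(X_seq[t], H, A_norm, p)
```

The Python loop over time steps is inherently sequential, which is the source of the training and inference cost noted above.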

In theory, the attention mechanism and its variants have a global receptive field, which has led to their widespread use for capturing long-term temporal dependencies in sequence models. Attention-Based methods (such as GMAN, ASTGCN, and DSTAGNN) typically combine temporal attention with spatial attention and excel at handling global contextual features and the spatial-temporal correlations in state evolution. By dynamically computing attention scores, these methods focus the model on critical information and effectively address the limitations of TCN and RNN approaches. However, the attention mechanism introduces additional parameters and complexity, which can make the model overly sensitive to noise or irrelevant information in the training data and impair its generalization. Beyond these methods, there are various other widely used time series processing techniques, including neural controlled differential equations, Fourier-domain transformations, and temporal pattern decomposition. They provide a diverse set of tools for analyzing and modeling time series data with different types and characteristics.
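The global receptive field of temporal attention can be illustrated with scaled dot-product self-attention applied along the time axis of each node's sequence. This is a generic single-head sketch, not the attention design of GMAN, ASTGCN, or DSTAGNN; the projection sizes and helper names are assumptions.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def temporal_attention(X, Wq, Wk, Wv):
    """Scaled dot-product self-attention along time, applied to each node separately.

    X: (N, T, F) per-node sequences; Wq, Wk, Wv: (F, d) projections.
    Returns the (N, T, d) outputs and the (N, T, T) attention weights.
    """
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ np.swapaxes(K, 1, 2) / np.sqrt(K.shape[-1])
    weights = softmax(scores, axis=-1)   # every step attends to all T steps
    return weights @ V, weights


rng = np.random.default_rng(0)
N, T, F, d = 3, 12, 2, 4
X = rng.normal(size=(N, T, F))
Wq, Wk, Wv = (rng.normal(size=(F, d)) for _ in range(3))
out, weights = temporal_attention(X, Wq, Wk, Wv)
```

Each output step mixes all 12 input steps in a single layer, unlike a TCN whose reach grows only with depth. The price is a $T\times T$ score matrix per node, i.e., the extra computation mentioned above.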

In recent studies, “GRU-GCN” is one of the most widely used spatial-temporal graph modeling frameworks. To reduce its high run time and error accumulation, several works adopt curriculum learning to optimize the training phase of the model. Curriculum learning [22, 8] holds that it is unnecessary to compute the error and backpropagate through all time steps early in training. Instead, the prediction length of the model is increased gradually as the number of iterations grows, so that the model converges progressively. For encoder-decoder architectures, this strategy substantially reduces training time and relieves pressure on efficiency and resource usage.
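The curriculum can be expressed as a schedule that maps the training iteration to the number of output steps included in the loss. The sketch below assumes the horizon grows by one step every `step_size` iterations and uses MAE as the loss. Both the step size and the helper names are hypothetical choices for illustration.

```python
import numpy as np


def curriculum_horizon(iteration, horizon=12, step_size=2500):
    """Number of output steps supervised at a given training iteration."""
    return min(horizon, 1 + iteration // step_size)


def curriculum_mae(pred, target, iteration, horizon=12, step_size=2500):
    """MAE restricted to the first `level` prediction steps (axis 1 = horizon)."""
    level = curriculum_horizon(iteration, horizon, step_size)
    return np.abs(pred[:, :level] - target[:, :level]).mean()


for it in (0, 2500, 5000, 40000):
    print(it, curriculum_horizon(it))   # 1, 2, 3, then capped at 12
```

Early iterations thus optimize only the easiest, nearest-term predictions, and errors on distant steps enter the loss only once the short-term ones are reasonably fitted.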

Table 2: Representative spatial-temporal graph neural network models for traffic prediction.

| Venue | Model | Graph Construction | Spatial Components | Temporal Components |
| --- | --- | --- | --- | --- |
| IJCAI 18 | STGCN [19] | Distance-based Graph | ChebNet/GCN | Gated TCN |
| ICLR 18 | DCRNN [21] | Distance-based Graph | DGC | GRU |
| AUAI 18 | GaAN [55] | Distance-based Graph | GAT | GRU |
| IJCAI 19 | GWNET [45] | Distance-based/Adaptive Graph | DGC | Dilated TCN |
| AAAI 19 | ASTGCN [56] | Connectivity Graph | GCN; Attention | TCN; Attention |
| AAAI 19 | ST-MGCN [7] | Multiple Graph | ChebNet | RNN |
| KDD 19 | ST-MetaNet [57] | Distance-based Graph | Meta-GAT | Meta-GRU |
| IJCAI 20 | LSGCN [58] | Distance-based Graph | ChebNet; GAT | Gated TCN |
| AAAI 20 | STSGCN [33] | Connectivity Graph | GCN | GCN |
| AAAI 20 | GMAN [23] | Distance-based Graph | Embedding; Attention | Embedding; Attention |
| KDD 20 | MTGNN [22] | Adaptive Graph | MHGC | Dilated TCN |
| NIPS 20 | AGCRN [44] | Adaptive Graph | GCN | GRU |
| CIKM 20 | STAG-GCN [35] | Multiple Graph | GCN; GAT | TCN; Self-Attention |
| TITS 20 | T-MGCN [38] | Multiple Graph | GCN | GRU |
| AAAI 20 | MRA-BGCN [53] | Distance-based/Edge Graph | MHGC | GRU |
| WWW 20 | STGNN [17] | Distance-based Graph | GAT | GRU; Transformer |
| ICLR 21 | GTS [47] | Sampled Graph | DGC | GRU |
| TKDD 21 | DGCRN [8] | Dynamic Graph | MHGC | GRU |
| TKDE 21 | ASTGNN [59] | Distance-based Graph | GAT | TCN; Transformer |
| AAAI 21 | STFGNN [37] | Semantic Graph | GCN | GCN; Dilated TCN |
| AAAI 21 | CCRNN [60] | Adaptive Graph | GCN | GRU |
| KDD 21 | STGODE [36] | Distance-based/Semantic Graph | GCN; ODE | TCN |
| KDD 21 | DMSTGCN [61] | Dynamic Graph | DGC | Dilated TCN |
| IJCAI 22 | RGSL [48] | Connectivity/Sampled Graph | GCN | GRU |
| KDD 22 | ESG [62] | Dynamic Graph | GRU; DGC | Dilated TCN |
| KDD 22 | STEP [63] | Sampled Graph | DGC | Transformer; Dilated TCN |
| AAAI 22 | STG-NCDE [64] | Adaptive Graph | GCN; NCDE | NCDE |
| ICML 22 | DSTAGNN [65] | Dynamic Graph | ChebNet; Attention | Gated TCN; Attention |

*Note: To simplify the presentation, we use the terms “DGC” to refer to Diffusion Graph Convolution and “MHGC” to refer to Multi-Hop Graph Convolution. We refer to a model with three or more graphs as a “Multiple Graph” model.

As the No-Free-Lunch theorem [66] in machine learning suggests, using STGNNs to model the spatial-temporal correlations of traffic scenes requires selecting appropriate components for specific problems and conditions. Here we summarize some representative research works, as shown in Table 2. Some of these methods achieved state-of-the-art performance on prediction tasks. STGCN [19] first combines gated temporal convolution with the ChebNet operator for spatial-temporal prediction and outperforms traditional time series models on all metrics. DCRNN [21] incorporates diffusion graph convolution into an encoder-decoder recurrent neural network to handle the directionality of propagation on asymmetric traffic graphs. Graph WaveNet [45] proposes an adaptive graph to alleviate the biases caused by human-defined spatial relations, and uses WaveNet-style causal convolution to learn temporal relations. Inspired by ST-ResNet [18], ASTGCN [56] proposes three temporal components called Closeness, Period, and Trend. Structurally, it uses skip connections to link the spatial-temporal attention layer with the convolutional layer, forming one model branch per component, and finally fuses the three branches for prediction. These early spatial-temporal graph neural networks significantly outperform traditional statistical methods and machine learning models in predictive performance.

The complexity of traffic scenarios has also given rise to some novel research perspectives. STSGCN [33] argues that spatial and temporal dependencies often affect traffic volumes synergistically rather than individually. It proposes a localized spatial-temporal graph to capture spatial-temporal heterogeneity and models synchronous spatial-temporal relationships through multiple graph convolutional layers. GMAN [23] proposes gated spatial-temporal attention fusion to capture dynamic nonlinear spatial-temporal correlations and uses transform attention to establish connections between historical and future time steps. STFGNN [37] uses a novel fusion operation to learn hidden dependencies from spatial and temporal graphs, and handles long sequences by stacking fusion graph modules and gated convolutional modules. However, the accuracy of these methods relies heavily on pre-defined graph designs, and their graph convolutions are shallow.

The adaptive graph convolutional recurrent network (AGCRN) [44] designs two adaptive modules based on parameter decomposition. First, the node adaptive parameter learning module decomposes the shared weights and biases to generate node-specific parameters that capture node-specific patterns. Second, the data adaptive graph generation module automatically infers the interdependencies between different traffic series. STGNN [17] first combines an improved GAT with GRU, and then captures temporal patterns at different scales by stacking a Transformer on top. This approach effectively learns the dependencies among spatio-temporal data and provides a design framework that can be readily adapted. ASTGNN [59] adopts a Transformer-like spatio-temporal encoder-decoder architecture. It extends the computation of the query, key, and value matrices with temporal convolutions to form a trend-aware attention layer, and replaces the feed-forward layer with GAT. The method has proven effective on multiple traffic datasets, but its complex parameter training imposes a heavy computational burden. DGCRN [8] observes that node connectivity is not fixed but evolves dynamically over time. It uses a hyper-network to generate a dynamic adjacency matrix before each RNN step to accommodate dynamic changes in the road network. Additionally, the generated dynamic matrix is merged with the original road network matrix to capture more spatial information. DMSTGCN [61] designs a dynamic graph constructor and a dynamic graph convolution method that propagate node hidden states according to dynamic spatial relationships. It also provides a multi-faceted fusion module to merge auxiliary and primary hidden states in both time and space. These works devote considerable effort to graph design and graph computation.

The Spatio-Temporal Multi-Graph Convolution Network (ST-MGCN) [7] and the Temporal Multi-Graph Convolutional Network (T-MGCN) [38] construct multiple attribute graphs to enhance spatial modeling and mine spatial information from multiple perspectives, at the cost of requiring more expert knowledge. STGODE [36] uses ODEs to address the over-smoothing of multi-layer GCNs by expressing residual-connected GCNs as continuous GCNs. It then combines TCN and CGCN branches to solve the spatial-temporal prediction problem. Similarly, STG-NCDE [64] employs neural controlled differential equations to process the temporal and spatial dimensions separately; the neural differential equation restores the temporal continuity lost through interval sampling. Unlike previous work, STEP [63] is motivated by unsupervised learning and proposes a time series pre-training strategy that enhances the graph structure design of STGNNs, with promising results.

According to our survey, studies of spatial-temporal graphs focus on designing graph structures and combining spatial-temporal components. Meanwhile, other related techniques (unsupervised pre-training, generative adversarial networks, graph contrastive learning, reinforcement learning, etc.) are widely applied to traffic prediction; they greatly expand the technical basis of spatial-temporal data mining and achieve substantial performance gains.

5 Benchmark And Evaluation

In this section, we first provide an overview of the datasets, models, experimental setup, and evaluation results of the benchmark. We then analyze the performance and efficiency of selected models through charts. Finally, we briefly introduce the benchmark interface and its extended usage.

Table 3: Statistics of the traffic datasets.

| Traffic Type | Datasets | Nodes | Edges | Time Steps | Missing Ratio |
|---|---|---|---|---|---|
| Speed | METR-LA | 207 | 1515 | 34,272 | 8.109% |
| Speed | PEMS-BAY | 325 | 2369 | 52,116 | 0.003% |
| Flow | PEMSD4 | 307 | 340 | 16,992 | 3.182% |
| Flow | PEMSD8 | 170 | 295 | 17,856 | 0.696% |

5.1 Benchmark Implementation

Given the heterogeneity of traffic data, we build the benchmark on two kinds of datasets: traffic speed (METR-LA, PEMS-BAY) [45, 21] and traffic flow (PEMSD4, PEMSD8) [33, 44]. All four datasets are sampled at 5-minute intervals, and their detailed statistics are shown in Table 3. On these datasets, we compare a set of representative spatial-temporal graph models. Our experiments are conducted on a GPU server with eight GeForce GTX 1080Ti graphics cards, using the unified deep learning framework PyTorch 1.8.0. The raw data are standardized with the Z-score [67]. To remain consistent with previous studies, we split the speed data into training, validation, and test sets in a 7:1:2 ratio, and the flow data in a 6:2:2 ratio. Training stops early if the validation error does not improve for 15 to 20 epochs, and in any case after 100 epochs; the model that performs best on the validation data is saved [68]. In the multi-step prediction task, we set both the length of the historical input window and the prediction horizon in the problem definition (1) to 12 steps. For model-specific settings, including the optimizer, learning rate, loss function, and model hyperparameters, we follow the original papers on the one hand and perform additional parameter tuning to obtain better experimental results on the other.
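The preprocessing steps above (chronological split, Z-score standardization, and 12-step-in/12-step-out windows) can be sketched as follows. This is a minimal NumPy illustration under stated assumptions: the split is done before windowing, and the Z-score statistics are fitted on the training portion only. The function and class names are hypothetical and do not mirror STG4Traffic's own data loaders.

```python
import numpy as np

def chronological_split(data, ratios):
    """Split along the time axis (axis 0) by ratios, e.g. (0.7, 0.1, 0.2); no shuffling."""
    t = data.shape[0]
    n_train = int(round(t * ratios[0]))
    n_val = int(round(t * ratios[1]))
    return data[:n_train], data[n_train:n_train + n_val], data[n_train + n_val:]

class ZScore:
    """Z-score standardization; statistics are fitted on the training split (assumption)."""
    def fit(self, x):
        self.mean = float(x.mean())
        self.std = float(x.std())
        return self

    def transform(self, x):
        return (x - self.mean) / self.std

    def inverse(self, x):
        # Map predictions back to the original scale before computing metrics.
        return x * self.std + self.mean

def sliding_windows(series, in_len=12, out_len=12):
    """Build (X, Y) pairs: X holds in_len past steps, Y the next out_len steps."""
    n = series.shape[0] - in_len - out_len + 1
    if n <= 0:
        raise ValueError("series too short for the requested window lengths")
    x = np.stack([series[i:i + in_len] for i in range(n)])
    y = np.stack([series[i + in_len:i + in_len + out_len] for i in range(n)])
    return x, y
```

Splitting chronologically rather than randomly keeps future observations out of the training set, which matters because neighbouring windows overlap heavily.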

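In our experiments, we evaluate the models with masked versions of the Mean Absolute Error (MAE), Root Mean Square Error (RMSE), and Mean Absolute Percentage Error (MAPE), in which zero values in the ground truth (i.e., missing or noisy readings) are ignored [27]. The unmasked metrics are defined as:

$$
\mathrm{MAE}=\frac{1}{n}\sum_{i=1}^{n}\left|\hat{y}_i-y_i\right|,\qquad
\mathrm{RMSE}=\sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(\hat{y}_i-y_i\right)^2},\qquad
\mathrm{MAPE}=\frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{\hat{y}_i-y_i}{y_i}\right| \tag{20}
$$

where $n$ is the number of values to predict, $\hat{y}_i$ is the prediction, and $y_i$ is the ground truth. In the masked versions, the sums run only over entries with $y_i \neq 0$, and $n$ counts those entries. Smaller values indicate better performance.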
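A minimal NumPy sketch of the masked metrics in Eq. (20): entries whose ground truth equals `null_val` (zero by default) are dropped before averaging. It is an illustration of the definition, not the exact evaluation code of STG4Traffic or [27]; `masked_metrics` is a hypothetical name.

```python
import numpy as np

def masked_metrics(pred, true, null_val=0.0):
    """Return (MAE, RMSE, MAPE in %) over entries whose ground truth != null_val."""
    pred = np.asarray(pred, dtype=float)
    true = np.asarray(true, dtype=float)
    mask = true != null_val                 # zero readings are treated as missing
    if not mask.any():
        raise ValueError("no valid ground-truth entries to evaluate")
    err = pred[mask] - true[mask]
    mae = np.mean(np.abs(err))
    rmse = np.sqrt(np.mean(err ** 2))
    mape = np.mean(np.abs(err / true[mask])) * 100.0  # safe: true[mask] has no zeros
    return mae, rmse, mape
```

For example, with ground truth `[0, 10, 20]` and predictions `[5, 12, 18]`, the first entry is masked out, giving MAE = RMSE = 2 and MAPE = 15%. Masking also removes the division by zero that MAPE would otherwise hit on missing readings.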

Table 4: Multi-step prediction results on the traffic speed datasets (Horizons 3, 6, and 12 correspond to 15 minutes, 30 minutes, and 1 hour).

| Datasets | Models | MAE (H3) | RMSE (H3) | MAPE (H3) | MAE (H6) | RMSE (H6) | MAPE (H6) | MAE (H12) | RMSE (H12) | MAPE (H12) |
|---|---|---|---|---|---|---|---|---|---|---|
| METR-LA | T-GCN | 3.09 | 5.77 | 8.81% | 3.60 | 6.90 | 10.61% | 4.29 | 8.39 | 12.56% |
| METR-LA | GMAN | 2.84 | 5.71 | 7.54% | 3.16 | 6.65 | 8.79% | 3.50 | 7.46 | 10.18% |
| METR-LA | STGCN | 2.79 | 5.34 | 7.29% | 3.21 | 6.48 | 8.81% | 3.74 | 7.72 | 10.71% |
| METR-LA | DCRNN | 2.77 | 5.37 | 7.16% | 3.14 | 6.43 | 8.56% | 3.59 | 7.57 | 10.32% |
| METR-LA | GTS | 2.75 | 5.26 | 7.12% | 3.13 | 6.29 | 8.59% | 3.56 | 7.33 | 10.21% |
| METR-LA | GWNET | 2.69 | 5.14 | 6.94% | 3.06 | 6.15 | 8.25% | 3.52 | 7.30 | 9.80% |
| METR-LA | MTGNN | 2.68 | 5.16 | 6.91% | 3.03 | 6.14 | 8.29% | 3.46 | 7.20 | 9.87% |
| METR-LA | DGCRN | 2.63 | 5.01 | 6.78% | 2.99 | 6.01 | 8.09% | 3.48 | 7.18 | 9.90% |
| PEMS-BAY | T-GCN | 1.49 | 2.98 | 3.13% | 1.86 | 3.99 | 4.18% | 2.29 | 5.01 | 5.46% |
| PEMS-BAY | STGCN | 1.39 | 2.92 | 2.99% | 1.75 | 3.95 | 4.00% | 2.11 | 4.81 | 5.06% |
| PEMS-BAY | GMAN | 1.36 | 2.90 | 2.99% | 1.64 | 3.79 | 3.80% | 1.88 | 4.32 | 4.50% |
| PEMS-BAY | DCRNN | 1.35 | 2.84 | 2.84% | 1.69 | 3.87 | 3.80% | 2.01 | 4.69 | 4.69% |
| PEMS-BAY | GTS | 1.33 | 2.80 | 2.82% | 1.64 | 3.77 | 3.77% | 1.93 | 4.49 | 4.59% |
| PEMS-BAY | GWNET | 1.31 | 2.75 | 2.72% | 1.65 | 3.70 | 3.74% | 1.98 | 4.55 | 4.64% |
| PEMS-BAY | MTGNN | 1.33 | 2.80 | 2.73% | 1.65 | 3.73 | 3.65% | 1.94 | 4.49 | 4.56% |
| PEMS-BAY | DGCRN | 1.29 | 2.70 | 2.66% | 1.62 | 3.65 | 3.53% | 1.92 | 4.42 | 4.40% |

Table 5: Average performance over all prediction horizons on the traffic flow datasets.

| Datasets | Metrics | MSTGCN | ASTGCN | STSGCN | STGODE | GMSDR | AGCRN | RGSL | MTGNN | STG-NCDE | DAAGCN |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PEMSD4 | MAE | 23.58 | 21.83 | 21.16 | 21.04 | 19.79 | 19.67 | 19.29 | 19.22 | 19.21 | 18.78 |
| PEMSD4 | RMSE | 36.86 | 34.36 | 34.13 | 33.46 | 32.04 | 32.31 | 31.48 | 31.28 | 31.09 | 30.79 |
| PEMSD4 | MAPE | 15.85% | 14.13% | 14.39% | 14.14% | 13.34% | 12.91% | 12.65% | 12.64% | 12.79% | 12.23% |
| PEMSD8 | MAE | 18.25 | 18.10 | 17.13 | 16.81 | 16.07 | 15.81 | 15.60 | 15.56 | 15.45 | 15.06 |
| PEMSD8 | RMSE | 28.29 | 28.06 | 26.47 | 26.44 | 25.15 | 25.04 | 25.07 | 24.90 | 24.81 | 24.36 |
| PEMSD8 | MAPE | 11.57% | 11.13% | 11.15% | 10.57% | 10.41% | 10.19% | 10.04% | 9.83% | 9.92% | 9.74% |

Table 4 reports the multi-step prediction results of STGNNs for 15 minutes, 30 minutes, and 1 hour on the traffic speed datasets. Table 5 records the average performance of each model across all time steps on the traffic flow datasets. All our reproduced results are very close to those reported in the original papers. We draw the following conclusions from these results. On both METR-LA and PEMS-BAY, DGCRN achieves state-of-the-art performance at almost all horizons, except for a few metrics. The overall prediction accuracy of GWNET and MTGNN is similar; both use an adaptive graph learning strategy, which outperforms several methods based on distance-based graphs. We also observe that GMAN performs worse at short-term horizons and better at longer ones (Horizon 12), suggesting that spatial-temporal attention helps to improve long-range predictions.

DAAGCN is a dynamic spatial-temporal recurrent network combined with a GAN, and it achieves the best performance on both PEMSD4 and PEMSD8. MTGNN still performs well on traffic flow data, indicating its robustness and versatility across both speed and flow datasets. In our experiments, AGCRN not only achieves higher prediction accuracy than ASTGCN, STSGCN, and STGODE, but also consumes very few system resources during training. RGSL improves on AGCRN by fusing explicit and implicit graphs to model the dependencies between node pairs more effectively. STG-NCDE employs neural controlled differential equations to describe the continuous dynamic evolution of node features in both time and space. Although it outperforms most methods, its complex differential operators increase both the training and testing time of the model. Combining the results of the two tables, we find that over the past five years the performance of STGNN models on traffic prediction tasks has improved by roughly 15% to 20%, indicating significant progress in capturing deeper spatial-temporal patterns. In terms of method design, STGNNs have gradually moved away from relying on prior graphs and instead attempt to establish more universal and effective spatial-temporal prediction models.
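The improvement range can be checked against Table 5. As a worked example, assuming the comparison is between the weakest baseline (MSTGCN) and the best model (DAAGCN), the relative MAE reduction is $(\mathrm{MAE}_{\text{old}} - \mathrm{MAE}_{\text{new}})/\mathrm{MAE}_{\text{old}}$. How the authors computed the exact figure is not stated, so this pairing is our assumption.

```python
def relative_reduction(old, new):
    """Relative error reduction (old - new) / old, in percent."""
    return (old - new) / old * 100.0

# MAE values from Table 5: (MSTGCN, DAAGCN)
mae = {"PEMSD4": (23.58, 18.78), "PEMSD8": (18.25, 15.06)}
for name, (old, new) in mae.items():
    print(f"{name}: {relative_reduction(old, new):.1f}% lower MAE")
# PEMSD4: 20.4% lower MAE
# PEMSD8: 17.5% lower MAE
```

The same calculation on Table 4 (METR-LA, Horizon 3, T-GCN 3.09 versus DGCRN 2.63) gives about 14.9%, consistent with the lower end of the stated range.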

To compare the performance of different methods across prediction horizons more intuitively, we plot the MAE curves for Horizons 1 to 12 in Fig. 4, which clearly show the differences between the models in short-term and long-term forecasting. As the horizon increases, the model’s uncertainty about the future state of the traffic network grows, so the prediction error of all models gradually increases. For autoregressive (e.g., Seq2Seq) models, the effect is compounded: each step of the multi-step prediction depends on the previous prediction, so errors accumulate and amplify. On the PEMS traffic flow data, ASTGCN (blue line), STSGCN (orange line), and GMSDR (red line) differ significantly from Horizon 6 to 12, reflecting their different capabilities for long-term prediction; GMSDR effectively mitigates error propagation in multi-step prediction. On METR-LA and PEMS-BAY, the multi-step prediction errors of the models change relatively less. Therefore, the key to reducing the overall MAE is to improve the model’s ability to handle long-term spatio-temporal dependencies.
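Per-horizon curves like those in Fig. 4 come from evaluating each prediction step separately. A minimal NumPy sketch, assuming predictions and ground truth are arranged as `(samples, horizons, nodes)` and that zeros mark missing values; `horizon_mae` and `horizon_minutes` are hypothetical helpers. With 5-minute sampling, Horizon 3 corresponds to 15 minutes and Horizon 12 to 1 hour, matching Table 4.

```python
import numpy as np

INTERVAL_MIN = 5  # sampling interval shared by all four datasets

def horizon_mae(pred, true, null_val=0.0):
    """Masked MAE for each horizon step; arrays have shape (samples, horizons, nodes)."""
    maes = []
    for h in range(true.shape[1]):
        p, t = pred[:, h], true[:, h]
        mask = t != null_val                 # ignore missing (zero) readings
        maes.append(float(np.mean(np.abs(p[mask] - t[mask]))))
    return maes

def horizon_minutes(h):
    """Map a 1-based horizon index to lead time in minutes (3 -> 15, 12 -> 60)."""
    return h * INTERVAL_MIN
```

Plotting `horizon_mae(...)` against `range(1, 13)` for each model yields one line per model; a horizon with no valid entries would produce NaN and should be handled before plotting.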

5.2 Case Study

Fig. 5 plots the ground truth against model predictions for randomly selected road network sensors from the four datasets on a given day. For clarity, only a few models are compared on each dataset, rather than all models listed in Table 4. We observe that: (1) Node 36 in METR-LA shows a sudden drop in vehicle speed between 15:00 and 18:00, indicating that the highway is jammed, and Node 53 in PEMS-BAY shows successive congestion during 10:00-12:00 and 18:00-21:00. (2) GMAN, GWNET, and DGCRN all capture the stable temporal patterns in the data and fit traffic trends well in non-congested periods, demonstrating the ability of STGNNs to capture temporal and spatial dependencies. (3) Under some congestion conditions, they also learn the valley and peak trends. DGCRN fits these changes noticeably better than GMAN and GWNET, apparently because it is more sensitive to complex changes in traffic state. However, the predictions of STGNNs lag behind the actual values, an issue that future research on modeling temporal correlations needs to address.

Similarly, we compare DAAGCN, AGCRN, and STG-NCDE on PEMSD4 and PEMSD8. Flow data change less smoothly over time than speed data, and the curves contain many fluctuations, which makes traffic flow prediction more challenging. Node 85 in PEMSD8 reaches its traffic peak during hours 5 to 8. DAAGCN learns the shifting spatial-temporal patterns, whereas AGCRN and STG-NCDE clearly deviate from the ground truth. In PEMSD4, Node 111 shows a sharp drop in traffic between hours 11 and 12, which implies a blocked road. Although all three models capture this abrupt change, DAAGCN matches the pattern more robustly, as its prediction curve follows the actual values more closely.

Practical traffic forecasting calls for models with low overhead, low complexity, and high generalization capability, which directly affects whether they can be deployed. It is therefore necessary to systematically study the system resource consumption and computational efficiency of STGNNs. Fig. 6 shows the number of parameters and the training time per epoch of different models on different datasets. Generally, the larger the dataset, the more system resources and training time a model consumes. On the same dataset, training time is not strictly correlated with the number of parameters but depends more on the model architecture and operators. For example, on METR-LA and PEMS-BAY, GWNET and DCRNN have similar parameter counts, yet GWNET trains roughly five times faster than DCRNN. DGCRN and DCRNN share the same Seq2Seq architecture, but DGCRN’s curriculum learning strategy effectively improves its training efficiency. Its rationale is that there is no need to compute the error over all time steps early in training; instead, the prediction length included in the loss grows gradually with the number of iterations, guiding the model to converge step by step.
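The curriculum schedule described above can be sketched in a few lines. This is an illustrative assumption of how such a schedule may work (the number of leading horizons included in the loss grows by one every `step_size` iterations until all horizons are used), not DGCRN's exact code; `horizons_in_loss`, `curriculum_mae`, and `step_size` are hypothetical names.

```python
import numpy as np

def horizons_in_loss(iteration: int, step_size: int, max_horizon: int = 12) -> int:
    """Number of leading horizons that contribute to the loss at this iteration.
    Starts at 1 and grows by one every `step_size` iterations, capped at max_horizon."""
    return min(iteration // step_size + 1, max_horizon)

def curriculum_mae(pred: np.ndarray, true: np.ndarray, iteration: int, step_size: int) -> float:
    """MAE restricted to the first k horizons; arrays have shape (batch, horizons, nodes)."""
    k = horizons_in_loss(iteration, step_size, max_horizon=true.shape[1])
    return float(np.mean(np.abs(pred[:, :k] - true[:, :k])))
```

Early iterations therefore optimize only the easiest, nearest-term steps; the harder long-horizon steps enter the loss once the short-term predictions have stabilized.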

The number of parameters is closely related to a model’s specific hyperparameters; it partly reflects model complexity and affects GPU memory usage. For example, STSGCN constructs local spatio-temporal graphs and cascades multiple levels, which dramatically increases the number of parameters and, correspondingly, the training time. In general, the parameter counts of graph learning methods (such as AGCRN, RGSL, and DAAGCN) depend strongly on the node embedding dimension used for spatial representation learning, especially on datasets with a large number of nodes. Furthermore, although STG-NCDE achieves good prediction performance, its neural controlled differential operators generate a large number of intermediate variables, which significantly increases GPU memory usage and computation time. MTGNN and GWNET perform similarly and share a similar architecture, but MTGNN optimizes the temporal convolution module and the graph learning layer, which effectively shortens training time. Good model design can therefore not only reduce computational complexity but also improve the model’s generalization ability. Continuing to trade model complexity for accuracy gains will only deepen this dilemma in spatio-temporal graph model design.
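A rough parameter count shows why the embedding dimension matters. Assume an AGCRN-style graph convolution in which node-specific weights are generated as node embeddings times a shared weight pool: the embeddings contribute $N \cdot d$ parameters, and the weight and bias pools contribute $d \cdot K \cdot C_{in} \cdot C_{out} + d \cdot C_{out}$, so $d$ scales the entire pool. The function name and the layer sizes below are illustrative assumptions, not settings from Fig. 6.

```python
def napl_gconv_params(num_nodes, embed_dim, cheb_k, c_in, c_out):
    """Parameter count of one node-adaptive graph convolution (sketch).

    node embeddings: N * d
    weight pool:     d * K * C_in * C_out
    bias pool:       d * C_out
    """
    return (num_nodes * embed_dim
            + embed_dim * cheb_k * c_in * c_out
            + embed_dim * c_out)

# Illustrative sizes: 307 nodes (PEMSD4), 2 supports, 65 -> 128 channels
for d in (2, 10):
    print(d, napl_gconv_params(307, d, 2, 65, 128))
# 2 34150
# 10 170750
```

Raising $d$ from 2 to 10 multiplies this layer's parameters by five, while the node count enters only through the $N \cdot d$ embedding term (and through the $N \times N$ learned adjacency, which costs memory rather than parameters).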

5.3 Usability And Practicality

With the growing number of spatial-temporal traffic prediction models, we develop a training pipeline called STG4Traffic that provides a convenient, standardized, and scalable project architecture for subsequent research. Its organization is shown in Fig. 7.

STG4Traffic interacts with users mainly through a unified configuration file and contains two sub-tasks: traffic speed prediction and traffic flow prediction. Both sub-tasks provide common, standardized data and method interfaces for model design: the DATA directory stores raw traffic data and pre-processed data files; LIB is a toolkit of callable data loading methods, evaluation methods, and other basic methods; LOG stores the project run logs and the final trained models; MODEL contains the model design files, decoupling the model from the data and the training process. Researchers can add their own data and define custom models as needed. Taking DCRNN as an example, we first complete the model definition in MODEL and then create a DCRNN directory in the sub-task directory, containing DCRNN_Config.py, DCRNN_Utils.py [optional], DCRNN_Trainer.py, DCRNN_Main.py, and DATANAME_DCRNN.conf [multiple]. The functions of these files are listed below:

1. DATANAME_DCRNN.conf configures the model parameters and experimental setup, and can differ depending on the dataset used.
2. DCRNN_Config.py reads the preset configuration file.
3. DCRNN_Utils.py defines additional methods that are not available in the public interface.
4. DCRNN_Trainer.py is the model’s trainer, handling the training, validation, and testing processes.
5. DCRNN_Main.py is the project’s entry point, managing tasks such as data loading and model and parameter setup.
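The following sketch shows one way a per-dataset configuration file and its reader (the roles of DATANAME_DCRNN.conf and DCRNN_Config.py) might fit together. It assumes an INI-style file parsed with Python's standard `configparser`. The section names, keys, and values (other than the METR-LA node count from Table 3) are hypothetical and are not taken from the STG4Traffic repository.

```python
import configparser

# Hypothetical contents of a file such as METR-LA_DCRNN.conf
EXAMPLE_CONF = """
[data]
num_nodes = 207
input_len = 12
output_len = 12

[model]
rnn_units = 64
num_layers = 2

[train]
batch_size = 64
lr = 0.01
epochs = 100
patience = 20
"""

def load_config(text):
    """Parse an INI-style config and cast values to the types the trainer expects."""
    cfg = configparser.ConfigParser()
    cfg.read_string(text)
    return {
        "num_nodes": cfg.getint("data", "num_nodes"),
        "input_len": cfg.getint("data", "input_len"),
        "output_len": cfg.getint("data", "output_len"),
        "rnn_units": cfg.getint("model", "rnn_units"),
        "num_layers": cfg.getint("model", "num_layers"),
        "batch_size": cfg.getint("train", "batch_size"),
        "lr": cfg.getfloat("train", "lr"),
        "epochs": cfg.getint("train", "epochs"),
        "patience": cfg.getint("train", "patience"),
    }
```

Keeping one file per dataset means that switching from METR-LA to PEMS-BAY changes only the file passed to the entry point, not the model or trainer code.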

In the initial phase of benchmarking, we pre-selected 18 high-impact works on the two types of traffic data as the basis for the design of our framework. These case studies provide good learning examples that help beginners in spatial-temporal forecasting better understand the concepts and techniques. In addition, experienced developers can quickly implement their research ideas by calling the pre-implemented interfaces and comparing against the benchmark's existing baseline results. The source code of this open-source project is available at https://github.com/trainingl/STG4Traffic.

This configuration-file-based training pipeline simplifies the code needed for model training, provides a flexible parameter configuration interface for fine-tuning existing models, and scales well. Users who understand the role of each file in the benchmark project and call the standard input and output interfaces of STG4Traffic can skip setting up data processing and the training process, and instead focus on model design to achieve rapid iteration and effective methodological innovation.
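To illustrate this decoupling, the loop below keeps everything model-specific inside two callables and implements the early-stopping rule described in Section 5.1: stop after `patience` epochs without validation improvement, and run at most `max_epochs` epochs. It is a hypothetical plain-Python sketch, not STG4Traffic's actual trainer; `fit`, `train_epoch`, `validate`, and `on_improve` are illustrative names.

```python
from typing import Callable, Optional, Tuple

def fit(train_epoch: Callable[[], float],
        validate: Callable[[], float],
        max_epochs: int = 100,
        patience: int = 20,
        on_improve: Optional[Callable[[int, float], None]] = None) -> Tuple[int, float]:
    """Generic training loop with early stopping.

    The model-specific work lives in train_epoch/validate; the loop only tracks
    the best validation loss and stops after `patience` epochs without improvement.
    Returns (best_epoch, best_validation_loss).
    """
    best, best_epoch, wait = float("inf"), -1, 0
    for epoch in range(max_epochs):
        train_epoch()
        val = validate()
        if val < best:
            best, best_epoch, wait = val, epoch, 0
            if on_improve is not None:
                on_improve(epoch, val)  # e.g. save a checkpoint of the best model
        else:
            wait += 1
            if wait >= patience:
                break
    return best_epoch, best
```

For instance, with validation losses 5.0, 4.0, 3.0, 3.5, 3.2, 3.1 and `patience=3`, training stops after the sixth epoch and the model from epoch 2 (loss 3.0) is the one kept.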

6 Discussions on Future Directions

Over the years, significant advancements have been achieved in traffic forecasting using STGNNs. However, there are still several challenges that require further attention and resolution. In this section, we highlight some future research directions for addressing the challenges.

Data Quality: Data collected from sensors often contain noise or a certain percentage of missing values (padded with zeros during preprocessing). Although we handle this issue carefully during test evaluation, the training process remains highly susceptible to these anomalous samples. In addition, the short time window of the input data (the historical length in the problem definition) makes it difficult to capture the periodicity of long sequences and makes the learned similarity graphs between node pairs unreliable [63]. Moreover, the datasets used in current studies are limited and lack enriched external information or metadata such as weather, events, and dates [69], and fusing such heterogeneous data remains a persistent challenge. Some studies have explored effective ways to address data quality concerns in traffic forecasting. For instance, graph contrastive learning methods [70, 71] have shown promise in combating training noise and capturing robust spatial-temporal features. Time series pre-training [63] has also proven effective for long time series with periodicity. ST-MetaNet [57] leverages meta-knowledge to parameterize the model while considering heterogeneous information in the road network to model spatial-temporal dependencies, which improves prediction performance. These studies provide valuable insights and strong references for addressing data quality concerns.
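Even without external metadata, the timestamps themselves carry date information that can be attached to each sample. As a minimal sketch, assume a series sampled every 5 minutes (288 steps per day) and a known position of the first step within the day and week. Time-of-day and day-of-week covariates can then be derived as follows; `calendar_features` and its `start_*` parameters are hypothetical names, not part of STG4Traffic.

```python
import numpy as np

STEPS_PER_DAY = 24 * 60 // 5  # 288 five-minute intervals per day

def calendar_features(num_steps, start_step_of_day=0, start_weekday=0):
    """Time-of-day (fraction of the day in [0, 1)) and day-of-week (0-6) for each step.

    start_step_of_day: index of the first sample within its day (0 = midnight)
    start_weekday:     weekday index of the first day of the series
    """
    idx = np.arange(num_steps) + start_step_of_day
    time_of_day = (idx % STEPS_PER_DAY) / STEPS_PER_DAY
    day_of_week = (idx // STEPS_PER_DAY + start_weekday) % 7
    return time_of_day, day_of_week
```

These two arrays can be concatenated to the traffic readings as extra input channels, or used as indices into learnable embedding tables.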

Research Perspectives: Research on traffic forecasting mainly revolves around modeling temporal and spatial correlations. (1) Temporal Heterogeneity: Existing approaches often model the temporal correlation of all nodes in a shared parameter space, ignoring the temporal heterogeneity among nodes. STID [20] attributes this difference in temporal patterns to spatial heterogeneity and resolves the resulting indistinguishability of samples by combining multiple spatial and temporal embeddings. Spatial-Temporal Self-Supervised Learning (ST-SSL) [72] proposes a paradigm that enhances the representation of traffic patterns to capture both spatial and temporal heterogeneity through traffic-level and topology-level data augmentation. (2) Dynamic Graph: Although adaptive graphs can compensate for the knowledge bias of pre-defined graphs, they represent fixed node relations after training and cannot adjust dynamically to the data. Works such as DGCRN [8] and D2STGCN [73] account for the basic fact that location dependence in a road network changes dynamically over time. They propose time-driven dynamic connectivity graphs that infer instantaneous connection patterns from the current traffic state. Despite this related work, designing dynamic graphs remains a significant technical challenge. (3) Long-Range Dependence: Attention mechanisms are very flexible for global spatial-temporal modeling and perform well at capturing long-range temporal dependencies, and time series models based on Transformer variants are widely used in various fields. Sequence pattern decomposition [74] is another potential future solution: long-term dependence is inferred from three structural components of a time series, namely trend, periodicity, and residual, to improve prediction performance.
(4) GNN-Free Method: MLP-based spatial-temporal prediction models [20, 75] have emerged as a new direction for spatial-temporal prediction, offering a good balance between efficiency and performance. Rather than relying on complex STGNNs to model spatial-temporal dependence, they adopt diverse spatial-temporal information encodings combined with simple MLPs to process spatial-temporal data. Nevertheless, MLP-based approaches essentially still draw on graph signal propagation or diffusion to represent correlations between neighboring nodes; this viewpoint revisits the features underlying spatial-temporal data and places more emphasis on the design of the embedding layer.
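The trend/periodic/residual idea in (3) can be illustrated with a classical additive decomposition. The NumPy sketch below uses a centred moving average for the trend and per-phase averaging for the periodic part. It is a generic textbook technique shown under these assumptions, not the specific method of [74]; `decompose` and its parameters are hypothetical.

```python
import numpy as np

def decompose(series, period, window):
    """Additive decomposition: series = trend + seasonal + residual.

    trend:    centred moving average of length `window` (edges padded by repetition)
    seasonal: mean detrended value at each phase of `period`, shifted to zero mean
    residual: whatever remains
    """
    x = np.asarray(series, dtype=float)
    pad = window // 2
    padded = np.concatenate([np.repeat(x[0], pad), x, np.repeat(x[-1], window - 1 - pad)])
    trend = np.convolve(padded, np.ones(window) / window, mode="valid")  # same length as x
    detrended = x - trend
    phase_means = np.array([detrended[p::period].mean() for p in range(period)])
    phase_means -= phase_means.mean()
    seasonal = phase_means[np.arange(len(x)) % period]
    residual = x - trend - seasonal
    return trend, seasonal, residual
```

Setting `window` equal to `period` (e.g. 288 for one day of 5-minute data) averages out the daily cycle, so the trend captures slow drifts while the periodic component captures the recurring daily profile.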

Migration Methods: In the realm of STGNNs, integrating methods from other fields has shown potential to enhance model performance. Generative adversarial networks [76, 77] train a generator (the STGNN) against a discriminator that judges how close the predictions are to real data, pushing the predictions as close to the ground truth as possible. Knowledge graphs [78, 79, 80] have also emerged as a valuable tool for establishing relationships among different transportation entities and concepts. They enable knowledge from diverse fields to be consolidated and shared, facilitating comprehensive analysis and prediction of the entire traffic system. Recent studies such as AutoSTG [81] and AutoCTS [82] use automated machine learning to streamline the construction and optimization of traffic prediction models, efficiently building STGNNs that meet accuracy requirements. Additionally, transfer learning [83, 80] initializes new models with models trained in other cities or regions with similar traffic patterns, which accelerates training and alleviates the problems caused by limited data. These techniques have diversified research approaches to traffic forecasting and offer greater potential for solving problems in this domain. However, methods transferred from other domains have not yet been applied effectively because of differences in data distribution and domain knowledge, which poses challenges to their use.

7 Conclusion

In this paper, we first present a systematic survey of graph design and graph computation techniques for traffic prediction. We then provide a detailed introduction to the key modeling components, technical details, and well-established methods of spatial-temporal graph neural networks. To establish a standardized benchmark, we introduce STG4Traffic and provide a thorough overview of the performance and efficiency of various methods within this framework. Finally, we analyze the challenges encountered in this study and summarize potential solutions for future investigations. We hope that this research makes a positive and lasting contribution to the field of spatial-temporal prediction.

8 Acknowledgments

Chunjiang Zhu is supported by UNCG Start-up Funds and Faculty First Award. Detian Zhang is partially supported by the Collaborative Innovation Center of Novel Software Technology and Industrialization, the Priority Academic Program Development of Jiangsu Higher Education Institutions.

References

- [1] A. M. Nagy, V. Simon, Survey on traffic prediction in smart cities, Pervasive Mob. Comput. 50 (2018) 148–163.

- [2] J. Ye, J. Zhao, K. Ye, C. Xu, How to build a graph-based deep learning architecture in traffic domain: A survey, IEEE Trans. Intell. Transp. Syst. 23 (5) (2022) 3904–3924.

- [3] X. Yin, G. Wu, J. Wei, Y. Shen, H. Qi, B. Yin, Deep learning on traffic prediction: Methods, analysis, and future directions, IEEE Trans. Intell. Transp. Syst. 23 (6) (2022) 4927–4943.

- [4] D. A. Tedjopurnomo, Z. Bao, B. Zheng, F. M. Choudhury, A. K. Qin, A survey on modern deep neural network for traffic prediction: Trends, methods and challenges, IEEE Trans. Knowl. Data Eng. 34 (4) (2020) 1544–1561.

- [5] K. Wang, L. Liu, Y. Liu, G. Li, F. Zhou, L. Lin, Urban regional function guided traffic flow prediction, Inf. Sci. 634 (2023) 308–320.

- [6] W. Shao, Z. Jin, S. Wang, Y. Kang, X. Xiao, H. Menouar, Z. Zhang, J. Zhang, F. D. Salim, Long-term spatio-temporal forecasting via dynamic multiple-graph attention, in: IJCAI, 2022, pp. 2225–2232.

- [7] X. Geng, Y. Li, L. Wang, L. Zhang, Q. Yang, J. Ye, Y. Liu, Spatiotemporal multi-graph convolution network for ride-hailing demand forecasting, in: AAAI, 2019, pp. 3656–3663.

- [8] F. Li, J. Feng, H. Yan, G. Jin, D. Jin, Y. Li, Dynamic graph convolutional recurrent network for traffic prediction: Benchmark and solution, CoRR abs/2104.14917 (2021).

- [9] Z. Lu, C. Zhou, J. Wu, H. Jiang, S. Cui, Integrating Granger causality and vector auto-regression for traffic prediction of large-scale WLANs, KSII Transactions on Internet and Information Systems (TIIS) 10 (1) (2016) 136–151.

- [10] S. V. Kumar, L. Vanajakshi, Short-term traffic flow prediction using seasonal ARIMA model with limited input data, European Transport Research Review 7 (3) (2015) 1–9.

- [11] C.-H. Wu, J.-M. Ho, D.-T. Lee, Travel-time prediction with support vector regression, IEEE Trans. Intell. Transp. Syst. 5 (4) (2004) 276–281.

- [12] D. Park, L. R. Rilett, Forecasting freeway link travel times with a multilayer feedforward neural network, Computer-Aided Civil and Infrastructure Engineering 14 (5) (1999) 357–367.

- [13] S. Bai, J. Z. Kolter, V. Koltun, An empirical evaluation of generic convolutional and recurrent networks for sequence modeling, CoRR abs/1803.01271 (2018).

- [14] S. Hochreiter, J. Schmidhuber, Long short-term memory, Neural Comput. 9 (8) (1997) 1735–1780.

- [15] K. Cho, B. van Merrienboer, Ç. Gülçehre, D. Bahdanau, F. Bougares, H. Schwenk, Y. Bengio, Learning phrase representations using RNN encoder-decoder for statistical machine translation, in: EMNLP 2014, 2014, pp. 1724–1734.

- [16] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser, I. Polosukhin, Attention is all you need, in: NeurIPS 2017, 2017, pp. 5998–6008.

- [17] X. Wang, Y. Ma, Y. Wang, W. Jin, X. Wang, J. Tang, C. Jia, J. Yu, Traffic flow prediction via spatial temporal graph neural network, in: WWW ’20, 2020, pp. 1082–1092.

- [18] J. Zhang, Y. Zheng, D. Qi, Deep spatio-temporal residual networks for citywide crowd flows prediction, in: AAAI, 2017, pp. 1655–1661.

- [19] B. Yu, H. Yin, Z. Zhu, Spatio-temporal graph convolutional networks: A deep learning framework for traffic forecasting, in: IJCAI, 2018, pp. 3634–3640.

- [20] Z. Shao, Z. Zhang, F. Wang, W. Wei, Y. Xu, Spatial-temporal identity: A simple yet effective baseline for multivariate time series forecasting, in: CIKM ’22, 2022, pp. 4454–4458.

- [21] Y. Li, R. Yu, C. Shahabi, Y. Liu, Diffusion convolutional recurrent neural network: Data-driven traffic forecasting, in: ICLR, 2018.

- [22] Z. Wu, S. Pan, G. Long, J. Jiang, X. Chang, C. Zhang, Connecting the dots: Multivariate time series forecasting with graph neural networks, in: KDD ’20, 2020, pp. 753–763.

- [23] C. Zheng, X. Fan, C. Wang, J. Qi, GMAN: A graph multi-attention network for traffic prediction, in: AAAI, 2020, pp. 1234–1241.

- [24] H. Yuan, G. Li, A survey of traffic prediction: from spatio-temporal data to intelligent transportation, Data Sci. Eng. 6 (1) (2021) 63–85.

- [25] W. Jiang, J. Luo, Graph neural network for traffic forecasting: A survey, Expert Syst. Appl. 207 (2022) 117921.

- [26] N. D. K. Quach, C. Yang, V. H. Vu, T. T. Nguyen, Q. V. H. Nguyen, J. Jo, A benchmarking evaluation of graph neural networks on traffic speed prediction, in: ADMA 2022, Vol. 13725, 2022, pp. 472–488.

- [27] R. Jiang, D. Yin, Z. Wang, Y. Wang, J. Deng, H. Liu, Z. Cai, J. Deng, X. Song, R. Shibasaki, DL-Traff: Survey and benchmark of deep learning models for urban traffic prediction, in: CIKM ’21, 2021, pp. 4515–4525.

- [28] Z. Wu, S. Pan, F. Chen, G. Long, C. Zhang, P. S. Yu, A comprehensive survey on graph neural networks, IEEE Trans. Neural Netw. Learn. Syst. 32 (1) (2020) 4–24.

- [29] Y. Zhu, W. Xu, J. Zhang, Y. Du, J. Zhang, Q. Liu, C. Yang, S. Wu, A survey on graph structure learning: Progress and opportunities, arXiv e-prints (2021) arXiv–2103.

- [30] J. Gilmer, S. S. Schoenholz, P. F. Riley, O. Vinyals, G. E. Dahl, Neural message passing for quantum chemistry, in: ICML 2017, Vol. 70, 2017, pp. 1263–1272.

- [31] Y. Chen, L. Zheng, W. Liu, Spatio-temporal attention-based graph convolution networks for traffic prediction, in: IEEE International Conference on Systems, Man, and Cybernetics, SMC 2022, 2022, pp. 642–649.

- [32] W. Zhang, F. Zhu, Y. Lv, C. Tan, W. Liu, X. Zhang, F.-Y. Wang, AdapGL: An adaptive graph learning algorithm for traffic prediction based on spatiotemporal neural networks, Transportation Research Part C: Emerging Technologies 139 (2022) 103659.

- [33] C. Song, Y. Lin, S. Guo, H. Wan, Spatial-temporal synchronous graph convolutional networks: A new framework for spatial-temporal network data forecasting, in: AAAI, 2020, pp. 914–921.

- [34] L. Liu, J. Chen, H. Wu, J. Zhen, G. Li, L. Lin, Physical-virtual collaboration modeling for intra- and inter-station metro ridership prediction, IEEE Trans. Intell. Transp. Syst. 23 (4) (2022) 3377–3391.

- [35] B. Lu, X. Gan, H. Jin, L. Fu, H. Zhang, Spatiotemporal adaptive gated graph convolution network for urban traffic flow forecasting, in: CIKM ’20, 2020, pp. 1025–1034.

- [36] Z. Fang, Q. Long, G. Song, K. Xie, Spatial-temporal graph ODE networks for traffic flow forecasting, in: KDD ’21, 2021, pp. 364–373.

- [37] M. Li, Z. Zhu, Spatial-temporal fusion graph neural networks for traffic flow forecasting, in: AAAI, 2021, pp. 4189–4196.

- [38] M. Lv, Z. Hong, L. Chen, T. Chen, T. Zhu, S. Ji, Temporal multi-graph convolutional network for traffic flow prediction, IEEE Trans. Intell. Transp. Syst. 22 (6) (2021) 3337–3348.

- [39] P. Xia, L. Zhang, F. Li, Learning similarity with cosine similarity ensemble, Inf. Sci. 307 (2015) 39–52.

- [40] T. van Erven, P. Harremoës, Rényi divergence and Kullback-Leibler divergence, IEEE Trans. Inf. Theory 60 (7) (2014) 3797–3820.

- [41] Z. Zhou, Y. Wang, X. Xie, L. Chen, H. Liu, RiskOracle: A minute-level citywide traffic accident forecasting framework, in: AAAI, 2020, pp. 1258–1265.

- [42] B. Wang, Y. Lin, S. Guo, H. Wan, GSNet: Learning spatial-temporal correlations from geographical and semantic aspects for traffic accident risk forecasting, in: AAAI, 2021, pp. 4402–4409.

- [43] P. Li, J. Fang, P. Chao, P. Zhao, A. Liu, L. Zhao, JS-STDGN: A spatial-temporal dynamic graph network using JS-graph for traffic prediction, in: Database Systems for Advanced Applications - 27th International Conference, DASFAA 2022, Vol. 13245, 2022, pp. 191–206.

- [44] L. Bai, L. Yao, C. Li, X. Wang, C. Wang, Adaptive graph convolutional recurrent network for traffic forecasting, in: NeurIPS 2020, 2020.

- [45] Z. Wu, S. Pan, G. Long, J. Jiang, C. Zhang, Graph WaveNet for deep spatial-temporal graph modeling, in: IJCAI, 2019, pp. 1907–1913.

- [46] C. Tian, W. K. Chan, Spatial-temporal attention WaveNet: A deep learning framework for traffic prediction considering spatial-temporal dependencies, IET Intelligent Transport Systems 15 (4) (2021) 549–561.

- [47] C. Shang, J. Chen, J. Bi, Discrete graph structure learning for forecasting multiple time series, in: ICLR, 2021.

- [48] H. Yu, T. Li, W. Yu, J. Li, Y. Huang, L. Wang, A. X. Liu, Regularized graph structure learning with semantic knowledge for multi-variates time-series forecasting, in: IJCAI, 2022, pp. 2362–2368.

- [49] E. Jang, S. Gu, B. Poole, Categorical reparameterization with Gumbel-softmax, in: ICLR, 2017.

- [50] M. Defferrard, X. Bresson, P. Vandergheynst, Convolutional neural networks on graphs with fast localized spectral filtering, in: NeurIPS, Vol. 29, 2016.

- [51] T. N. Kipf, M. Welling, Semi-supervised classification with graph convolutional networks, arXiv preprint arXiv:1609.02907 (2016).

- [52] Q. Li, Z. Han, X. Wu, Deeper insights into graph convolutional networks for semi-supervised learning, in: AAAI, 2018, pp. 3538–3545.

- [53] W. Chen, L. Chen, Y. Xie, W. Cao, Y. Gao, X. Feng, Multi-range attentive bicomponent graph convolutional network for traffic forecasting, in: AAAI, 2020, pp. 3529–3536.

- [54] P. Veličković, G. Cucurull, A. Casanova, A. Romero, P. Liò, Y. Bengio, Graph attention networks, arXiv preprint arXiv:1710.10903 (2017).

- [55] J. Zhang, X. Shi, J. Xie, H. Ma, I. King, D. Yeung, GaAN: Gated attention networks for learning on large and spatiotemporal graphs, in: UAI, 2018, pp. 339–349.

- [56] S. Guo, Y. Lin, N. Feng, C. Song, H. Wan, Attention based spatial-temporal graph convolutional networks for traffic flow forecasting, in: AAAI, 2019, pp. 922–929.

- [57] Z. Pan, Y. Liang, W. Wang, Y. Yu, Y. Zheng, J. Zhang, Urban traffic prediction from spatio-temporal data using deep meta learning, in: KDD ’19, 2019, pp. 1720–1730.

- [58] R. Huang, C. Huang, Y. Liu, G. Dai, W. Kong, LSGCN: long short-term traffic prediction with graph convolutional networks, in: IJCAI, 2020, pp. 2355–2361.

- [59] S. Guo, Y. Lin, H. Wan, X. Li, G. Cong, Learning dynamics and heterogeneity of spatial-temporal graph data for traffic forecasting, IEEE Trans. Knowl. Data Eng. 34 (11) (2022) 5415–5428.

- [60] J. Ye, L. Sun, B. Du, Y. Fu, H. Xiong, Coupled layer-wise graph convolution for transportation demand prediction, in: AAAI, 2021, pp. 4617–4625.

- [61] L. Han, B. Du, L. Sun, Y. Fu, Y. Lv, H. Xiong, Dynamic and multi-faceted spatio-temporal deep learning for traffic speed forecasting, in: KDD ’21, 2021, pp. 547–555.

- [62] J. Ye, Z. Liu, B. Du, L. Sun, W. Li, Y. Fu, H. Xiong, Learning the evolutionary and multi-scale graph structure for multivariate time series forecasting, in: KDD ’22, 2022, pp. 2296–2306.

- [63] Z. Shao, Z. Zhang, F. Wang, Y. Xu, Pre-training enhanced spatial-temporal graph neural network for multivariate time series forecasting, in: KDD ’22, 2022, pp. 1567–1577.

- [64] J. Choi, H. Choi, J. Hwang, N. Park, Graph neural controlled differential equations for traffic forecasting, in: AAAI, 2022, pp. 6367–6374.

- [65] S. Lan, Y. Ma, W. Huang, W. Wang, H. Yang, P. Li, DSTAGNN: dynamic spatial-temporal aware graph neural network for traffic flow forecasting, in: ICML 2022, Vol. 162, PMLR, 2022, pp. 11906–11917.

- [66] D. H. Wolpert, The supervised learning no-free-lunch theorems, in: Soft Computing and Industry: Recent Applications, 2002, pp. 25–42.

- [67] C. Cheadle, M. P. Vawter, W. J. Freed, K. G. Becker, Analysis of microarray data using z score transformation, The Journal of Molecular Diagnostics 5 (2) (2003) 73–81.

- [68] X. Luo, C. Zhu, D. Zhang, Q. Li, Dynamic graph convolutional network with attention fusion for traffic flow prediction, in: ECAI 2023 - 26th European Conference on Artificial Intelligence, Vol. 372 of Frontiers in Artificial Intelligence and Applications, IOS Press, 2023, pp. 1633–1640.

- [69] Y. Bao, J. Liu, Q. Shen, Y. Cao, W. Ding, Q. Shi, PKET-GCN: prior knowledge enhanced time-varying graph convolution network for traffic flow prediction, Inf. Sci. 634 (2023) 359–381.

- [70] Y. Zhu, Y. Xu, F. Yu, Q. Liu, S. Wu, L. Wang, Graph contrastive learning with adaptive augmentation, in: WWW, 2021, pp. 2069–2080.

- [71] X. Liu, Y. Liang, C. Huang, H. Hu, Y. Cao, B. Hooi, R. Zimmermann, Do we really need graph neural networks for traffic forecasting?, CoRR abs/2301.12603 (2023).

- [72] J. Ji, J. Wang, C. Huang, J. Wu, B. Xu, Z. Wu, J. Zhang, Y. Zheng, Spatio-temporal self-supervised learning for traffic flow prediction, CoRR abs/2212.04475 (2022).

- [73] Z. Shao, Z. Zhang, W. Wei, F. Wang, Y. Xu, X. Cao, C. S. Jensen, Decoupled dynamic spatial-temporal graph neural network for traffic forecasting, Proc. VLDB Endow. 15 (11) (2022) 2733–2746.

- [74] H. Wang, J. Peng, F. Huang, J. Wang, J. Chen, Y. Xiao, MICN: Multi-scale local and global context modeling for long-term series forecasting, in: ICLR, 2023.

- [75] V. Ekambaram, A. Jati, N. Nguyen, P. Sinthong, J. Kalagnanam, TSMixer: Lightweight MLP-Mixer model for multivariate time series forecasting, in: KDD ’23, 2023, pp. 459–469.

- [76] Y. Zhang, S. Wang, B. Chen, J. Cao, Z. Huang, TrafficGAN: Network-scale deep traffic prediction with generative adversarial nets, IEEE Trans. Intell. Transp. Syst. 22 (1) (2021) 219–230.

- [77] J. Jiang, B. Wu, L. Chen, S. Kim, Dynamic adaptive and adversarial graph convolutional network for traffic forecasting, CoRR abs/2208.03063 (2022).

- [78] C. Yang, G. Qi, An urban traffic knowledge graph-driven spatial-temporal graph convolutional network for traffic flow prediction, in: IJCKG, 2022, pp. 110–114.

- [79] J. Zhu, X. Han, H. Deng, C. Tao, L. Zhao, P. Wang, T. Lin, H. Li, KST-GCN: A knowledge-driven spatial-temporal graph convolutional network for traffic forecasting, IEEE Trans. Intell. Transp. Syst. 23 (9) (2022) 15055–15065.

- [80] T. Mallick, P. Balaprakash, E. Rask, J. MacFarlane, Transfer learning with graph neural networks for short-term highway traffic forecasting, in: ICPR’20, 2020, pp. 10367–10374.

- [81] Z. Pan, S. Ke, X. Yang, Y. Liang, Y. Yu, J. Zhang, Y. Zheng, AutoSTG: Neural architecture search for predictions of spatio-temporal graph, in: WWW, 2021, pp. 1846–1855.

- [82] X. Wu, D. Zhang, C. Guo, C. He, B. Yang, C. S. Jensen, AutoCTS: Automated correlated time series forecasting, Proc. VLDB Endow. 15 (4) (2021) 971–983.

- [83] Y. Huang, X. Song, S. Zhang, J. J. Q. Yu, Transfer learning in traffic prediction with graph neural networks, in: 24th IEEE International Intelligent Transportation Systems Conference, 2021, pp. 3732–3737.