STDI-Net: Spatial-Temporal Network with Dynamic Interval Mapping for Bike Sharing Demand Prediction

Abstract

As an economical and healthy mode of shared transportation, Bike Sharing System (BSS) develops quickly in many big cities. An accurate prediction method can help BSS schedule resources in advance to meet the demands of users, and definitely improve operating efficiencies of it. However, most of the existing methods for similar tasks just utilize spatial or temporal information independently. Though there are some methods consider both, they only focus on demand prediction in a single location or between location pairs. In this paper, we propose a novel deep learning method called Spatial-Temporal Dynamic Interval Network (STDI-Net). The method predicts the number of renting and returning orders of multiple connected stations in the near future by modeling joint spatial-temporal information. Furthermore, we embed an additional module that generates dynamical learnable mappings for different time intervals, to include the factor that different time intervals have a strong influence on demand prediction in BSS. Extensive experiments are conducted on the NYC Bike dataset, the results demonstrate the superiority of our method over existing methods.

Introduction

With the rapid development of sharing economy around the world, Bike Sharing System (BSS) has become more and more popular in recent years (DeMaio 2009; Shaheen, Guzman, and Zhang 2010). It provides people with a convenient and environment-friendly way of traveling. Users can rent a bike from a BSS station by some apps on their mobile phones and then return the bike to a station after completing their travels.

However, efficiently maintaining these systems is still challenging since the schedule and allocation of these transportation resources vary a lot depending on specific user requirements. For example, the number of rental orders on the morning of a day has an extremely imbalanced distribution between residential areas and commercial places. Therefore, a demand prediction method for adjustments of bikes in advance can improve the efficiency of BSS greatly.

To tackle this problem, there have been several methods proposed in recent years focusing on different prediction tasks. Besides some methods applying hand-crafted features (Chiang, Hoang, and Lim 2015; Moreira-Matias et al. 2013; Shekhar and Williams 2007), one of the first deep learning methods was introduced by Wang et al. (Wang et al. 2017) who concatenated several related factors as inputs to predict the gap between taxi supply and demand via a non-linear MLP network. After that, Zhang et al. (Zhang, Zheng, and Qi 2017) proposed a deep convolutional network named ST-ResNet to predict in-out traffic flow among different areas. However, both of them did not consider the temporal information hidden in the sequential data which is an important factor in transportation issues. Based on that, Yao et al. (Yao et al. 2018) constructed a spatial-temporal model to predict various taxi demands. Moreover, they further created a graph embedding module to pass information among different areas. But their networks only consider a single area with its neighbors as inputs, thus obtains predicted results for different locations separately, which resulted in a serious lack of correlated spatial information on the global level.

Therefore, in our method, we construct a joint spatial-temporal network on a large scale area that contains hundreds of connected BSS stations in a long day hours. The network takes the number of both rental and returning orders of all stations in the past few hours as integrated inputs and predicts all of them in the near future together for once. By this way, the spatial correlation shared by all stations can be captured at the same level and same time, with global transportation information passing through each of them. Besides, the joint consideration of both operations for bikes, renting and returning, helps to maintain the sequential relation at each time interval. For the convolutional part, instead of applying the same filters for all features in different temporal indexes, we assign features in each index with one independent convolutional group. That is, we consider that indexes serve different roles in sequential data, which is far from enough to be captured by the same convolutional kernels. Compared with previous methods, our network can achieve much better performance with measurements of both accuracy and efficiency in demand prediction tasks.

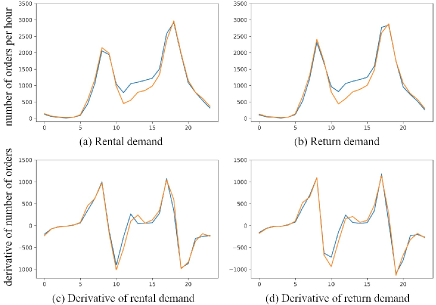

Although all the previous methods have explored temporal information in a wide range, they all ignore an important factor that different time periods influence a lot on the change of demands. Based on that, we analyze the number of orders in BSS for each day and found in some periods, the orders increase or drop dramatically while for other times, no apparent fluctuation can be observed. As shown in Figure 1, two colored lines are representing the change of orders in two single days and also their corresponding derivatives that further demonstrate the variety of demand changes in a continuous way. Therefore, we propose the dynamic interval module that takes different time intervals as inputs to improve the predictions of the main spatial-temporal network. Instead of applying a regular feature fusion for the outputs of the module, we get inspired by some few-shot learning methods (Bertinetto et al. 2016; Wang et al. 2019) and directly assign the generated features as learnable parameters for the top layer that is responsible for final predictions in the main network. In such a learning framework, time intervals participate in the formulation of learning weights in a more straightforward way, which helps the whole model to learn a mapping that is adapted based on different time periods from the extracted spatial-temporal feature to the predicted demands.

In summary, we collect our contribution into the following three folds:

-

•

We propose a joint spatial-temporal network with time-specific convolution layers to predict both renting and returning demand for all the stations in the BSS.

-

•

We further propose a Dynamic Interval module that builds the relationship between different time intervals in a day to the learning representation that is assigned as learnable weights in the top regression layer.

-

•

We conducted large scale experiments on the NYC Bike dataset. The result shows that our approach outperforms all other previous methods and several competitive baselines.

Related Work

Traffic prediction problems include many tasks, such as traffic flow prediction, destination prediction, demand prediction (our task), etc. The methods applied to these tasks are kind of similar. Essentially, they predict the data on future timestamps based on the historical one (Wang et al. 2017; Yao et al. 2018; Zhang, Zheng, and Qi 2017; Zhang et al. 2016). Some traditional methods only rely on information in time series and regress final predictions. For instance, one of the most representative methods is Autoregressive Integrated Moving Average (ARIMA) which is widely used in traffic prediction problems (Moreira-Matias et al. 2013; Shekhar and Williams 2007). It takes continuous temporal information as inputs and regresses desired results. Besides, some other works included external context data, such as weather conditions and event information, to further improve the model’s performance (Pan, Demiryurek, and Shahabi 2012; Wu, Wang, and Li 2016).

Deep learning has been successfully used in a large number of problems, such as computer vision (He et al. 2016; Krizhevsky, Sutskever, and Hinton 2012), which also widely used in traffic prediction. Zhang et al. (Zhang et al. 2016) proposed a DNN-based model for predicting crowd flow. After that, they further introduced the residual connection originated from CNN-based networks (He et al. 2016) for the same task (Zhang, Zheng, and Qi 2017). To utilize context data, Wang et al. (Wang et al. 2017) used a large number of multiple sources as inputs of their network to predict the gap between the supply and demand of taxi in different sub-areas. Besides, some other methods (Yu et al. 2017; Zhao et al. 2018) proposed to use the recurrent neural network, like LSTM and BiLSM, to encode temporal information. With the popularity of a convolutional neural network (CNN), Yao et al. (Yao et al. 2018) jointly modeled spatial-temporal information in a single network, and generated graph embedding additionally to extract the constant feature for each region. Though they achieved a great success in some traffic prediction fields, they neglect the discriminative temporal information hidden in time intervals and encoded sequential data without special consideration, which will both be tackled in our proposed method.

Though deep learning methods have been successful in many areas, most of them require a large amount of annotated data to be optimized. Meta Learning methods (Andrychowicz et al. 2016; Bertinetto et al. 2016; Finn, Abbeel, and Levine 2017; Wang et al. 2019), however, exist to help relieve such a strict requirement by proposing more general training models that can be adjusted well to new tasks with a few new samples. Especially, Bertinetto et al. proposed a siamese-like network to receive image pairs and enforce one sub-network to generate learning weights directly for another one (Bertinetto et al. 2016). Similarly, the TAFE-Net proposed by Wang et al. (Wang et al. 2019) successfully generates weights for both convolutional and fully connected layers to another network. Inspired by such a weight generating strategy, in our work, we also explore the possibility to apply it to the demand prediction tasks, hoping to adjust our model with more adapted parameters captured by external knowledge hidden in our specific sequential data.

Preliminaries

In this section, we first introduce some basic conceptions in BSS and then formulate our demand prediction problem mathematically.

we process stations as matrices according to the geographical regions because the distribution of them is initially matrix-like

Following the definition of(Yao et al. 2018) and(deepstn+), we denote as the set of all stations in which the number of orders needs to be predicted, where is the total number of stations used in our dataset. These stations are further converted into a matrix where , according to the geographical distribution of these stations. For temporal information, suppose each day can be segmented into time intervals and there are days in a dataset, we define as the set of whole time intervals. Given the above definitions, we further formulate the following conceptions.

Rental order: A rental order can be defined as that contains the station where people rent their bikes and the corresponding start time interval. We represent it as with a tuple structure where is the station and denotes the interval.

Return order: Similarly, a return order can be also defined as in which and correspond to the same meaning in .

Rental/Return demand: The rental and return demand in one station and time interval are both defined as the total number of rental/return orders during that time and location, which can be denoted as . Therefore, when dealing with all BSS stations, we set as matrices with each element representing the demand of each station. Furtherly, demand matrices for all time intervals can be defined as and respectively.

Demand: With all definitions above, we finally concatenate two demand matrices, and , together as joints input for our proposed network in time interval . As shown in Figure 2, our demand matrix has two channels representing rental and return demands respectively. Each grid is one station and the corresponding color describes the number of orders.

Demand Prediction: Given the sequential data from the beginning time to the current, demand prediction aims to predict the data in the future one time step or several steps. Especially, for the BSS demand prediction, we denote it as

| (1) |

where is the length of the input sequence, represents some additional information that can help for prediction tasks as prior knowledge, like the spatial connection among stations (Yao et al. 2018) and different time intervals in a day in our method.

Proposed Spatial-Temporal Dynamic Interval Network

In this section, we provide the details of our proposed Spatial-Temporal Dynamic Interval Network (STDI-Net) for the demand prediction task of BSS. We first talk about our spatial-temporal module separately and then introduce the dynamic interval module which generates different parameters for the network based on time intervals in a day. Figure 3 shows the overview architecture of our model.

Spatial Module

The spatial module of the network aims to extract the joint features of all stations in each demand matrix. For each data node in one sequential input, we apply a residual convolutional block to operate on it. Inspired by (He et al. 2016) that proposed the residual link to solve problems brought by very deep networks, like the vanishing gradient problem, we utilize a similar idea in our spatial module. With a concatenation between different levels of layers, the block can not only extract more abstracted representations of the demand matrix in a deep layer but also consider context information connected through different layers from the sparse input as the number of orders to the compact spatial relationships among different stations. More details are shown in Figure 4 and the process can be denoted as

| (2) |

where denotes the input of a ResUnit. and are the outputs of the first and second convolutional layers in the ResUnit respectively. represents the output of the ResUnit. The denotes the non-linear activation function like . , , , and represent the weights and biases of the first and second convolutional layers in the ResUnit separately.

To further consider that matrices in each sequential data serves different roles based on their indexes, we create multiple independent Conv Blocks with the same structure and each of the block is responsible for one corresponding demand matrix. We denote the process as

| (3) |

where is the two-channel demand matrix as original input on time interval and is the output from operated by the Conv Block . represents the index of both sequential inputs and Conv Blocks, and denotes the length of the input sequence. Therefore, the number of different convolutional blocks is equal to the number of intervals in a sequential input. Each block captures the discriminative information hidden in the indexes of the data.

After the convolutional operation, we apply flatten layers to transform that outputs from Conv Block to a feature vector , where is the number of channels of the output matrix. The whole output represents all features extracted from temporal demand matrices separately, which can be denoted as:

| (4) |

Temporal Module

Since the transportation data is a type of time series, we apply the temporal module to capture the temporal dependence of the sequential demand matrices. In the task of sequence learning, Recurrent Neural Networks (RNN) have achieved good results (Sutskever, Vinyals, and Le 2014). The incorporation of Long Short-Term Memory (LSTM) overcomes the shortage of traditional recurrent networks that learning long-term dependencies is difficult (Informatik et al. 2003). Some previous works (Qiu et al. 2019; Yao et al. 2018) have proved the great performance of LSTM in processing traffic sequential data. To follow them, we apply the LSTM network for the BSS sequential data in our temporal module.

Briefly speaking, LSTM maintains a memory cell to accumulate the previous sequence information. Specifically, at time , given an input , the LSTM uses an input gate and a forget gate to update its memory cell , and uses an output gate to control the hidden state . The formulation is defined as follows:

| (5) |

where denotes the Hadamard product, and represents the sigmoid function. are the learnable parameters of the LSTM while and are the memory cell state and the hidden state at time . Please refer to (Hochreiter and Schmidhuber 1997; Informatik et al. 2003) for more details.

In our model, the LSTM net takes as input, which is the output of the spatial module. We use to represent the output of the LSTM net in our temporal module.

Dynamic Interval Module

Though the sequential demand data of BSS holds a kind of trend during the day, their changes will vary according to different time intervals. Therefore, we propose a dynamic interval module that extracts temporal information from each hour and then apply them to influence the learning strategies of the main spatial-temporal network directly.

To encourage such a learning mode, some meta-learning methods (Bertinetto et al. 2016; Wang et al. 2019) have been proposed to create a siamese-like network in which one network is responsible for generating learning weights for another. Inspired by these advanced works, we also apply a similar network structure to map (time) to be directly the learning weights of the top fully connected layer in the main network.

In our module, for the input number of hours ranging from to , we first use (Pennington, Socher, and Manning 2014) to embed the numbers into feature vectors . After that, our Interval Net in the module transforms embedding vectors to features whose dimension is the same as the learnable parameters in the fully connected layer of the main network, including weights and biases. The generated vectors are then directly assigned to be the values in the fully connected layer, and the Dynamic Interval Module participates in the back-propagation process in an end-to-end manner.

However, it is too difficult and too large for parameters in Interval Net to learn, since the parameters space of the Interval Net grows quadratically with the number of the output units. Following (Bertinetto et al. 2016), we construct a factorized representation of the output weights that is decomposed of 2 operating matrices and a diagonal matrix as Figure 5 shows, which is analogous to the Singular Value Decomposition. By this way, the parameters in the Interval Net needed to be learned only grow linearly with the number of output units. The whole process can be formulated as

| (6) |

where is the generated weights for the fully connected layer. represents the output vector of the Linear layer in Interval Net while is the diagonal operating to transform the vector to a diagonal matrix. As a consequence, the net only needs to generate low-dimensional parameters for each time interval. In addition, two matrices and , where , project again to keep the same dimension with the fully connected layer.

Similarly, biases of the fully connected layer are also generated as following:

| (7) |

where represents the generated biases for the fully connected layer. denotes the output vector of the linear layer in Interval Net. After the above operation, we obtain as the parameters in Figure 3 of the fully connected layer (FC).

To get the final results, the fully connected layer takes the output of temporal module as input for the time interval . As we mentioned, consists of the weights and biases where . Therefore, the formulation of the layer can be expressed as follows:

| (8) |

where the denotes the non-linear activation function of prediction layer. represents the predicted demand matrix of the ground truth .

Implementation Details

In the experiments, we set the length of the input sequence to 3. In the spatial module, each Conv Block has 2 ResUnits with the same structure. That is, it contains 2 convolutional layers with each layer followed by a batch normalization (BN) (Ioffe and Szegedy 2015) and a residual link. All the convolutional layers in the Conv Block have 32 filters. The size of each filter is set to with . In the temporal module, the LSTM net has 1 hidden layer with 1024 neurons. The activation functions used in the fully connected layer and Conv Blocks are while is used as the activation function at the linear layers in the dynamic interval module. We optimize our model via Adam (Kingma and Ba 2014) optimization by minimizing the Mean Squared Error (MSE) loss between the predicted result and the ground truth. The learning rate and the weight decay are set to and 5e-5 respectively. For the training data, 90% of it is for training and the remaining 10% is chosen as a validation set for early-stop. We implement our network with Pytorch (Paszke et al. 2019) and train it for 200 epochs on 2 NVIDIA 1080Ti GPUs.

Experiment

Dataset

In the paper, we use the NYC Bike dataset in 2014, from Apr. 1st to Sept. 30th. We treat the data for the last 10 days as the testing data and others as training data. We set one hour as the length of a time interval. The total number of orders and time intervals in the dataset are 5,359,944 and 4,392 respectively. And the number of stations used in the dataset is 128. The dataset can be collected from the website of Citi-Bike system111https://www.citibikenyc.com/system-data.

Evaluation Metric

We use Rooted Mean Square Error (RMSE) and Mean Absolute Error (MAE) as the metrics to evaluate the performance of our model and the baselines, which are defined as:

| (9) |

| (10) |

where and denote the predicted value and ground truth respectively, and is the number of all predicted values.

Baselines

We compare our STDI-Net with the following seven baselines:

-

•

Historical average (HA): Historical Average (HA) predicts the future demand by averaging the historical demands.

-

•

Auto-regressive integrated moving average (ARIMA): Auto-Regression Integrated Moving Average (ARIMA) is a well-known model used for time series prediction.

-

•

Lasso regression (Lasso): Lasso regression is a linear regression method with regularization.

-

•

Ridge regression (Ridge): Ridge regression is a linear regression method with regularization.

-

•

Multiple layer perception (MLP): MLP is a neural network with four hidden layers. The number of hidden units are 256, 256, 128, 128 respectively. The MLP predicts the demand matrix by taking a sequence of the previous demand matrix as input.

-

•

ST-ResNet (Zhang, Zheng, and Qi 2017): ST-ResNet is a CNN-based model with residual blocks for traffic prediction, which used multiple CNN components to extract features from the historical data sequence.

-

•

DMVST-Net (Yao et al. 2018): DMVST-Net is a deep learning model which based on CNN and LSTM for taxi demand prediction. It also contains graph embedding to capture similar demand patterns among regions.

-

•

DeepSTN+ (deepstn+): DeepSTN+ is a deep learning-based convolutional model for crowd flow prediction, which contains long range spatial dependence modeling, POI-based spatial information capturing, and a fusion mechanism for features extracted from different aspects.

| Method | RMSE | MAE |

|---|---|---|

| Historical average | 10.7308 | 5.8374 |

| ARIMA | 10.4773 | 4.7005 |

| Lasso regression | 8.4947 | 3.6799 |

| Ridge regression | 8.4699 | 3.6984 |

| Multiple layer perception | 7.1888 | 3.3388 |

| ST-ResNet | 5.1249 | 2.7206 |

| DMVST-Net | 5.0595 | 2.3423 |

| DeepSTN+ | 4.9060 | 2.4269 |

| STDI-Net | 4.6339 | 2.1946 |

Comparison with Baselines

Table 1 shows the testing results of our proposed model and baselines on the dataset. We can see that our STDI-Net achieves the lowest RMSE and MAE(4.6339 and 2.1946) among all the competing methods. The HA and ARIMA perform poorly, as they only consider the historical demand values for prediction. Because of the consideration of more context relationships among sequence, the linear regression methods (Lasso and Ridge) perform better than the above two methods. However, they do not extract more spatial-temporal information for prediction. The MLP further extracts features from the sequence and performs better than the above methods. However, the MLP does not model spatial or temporal dependency. The ST-ResNet achieves 5.1249 and 2.7206 for RMSE and MAE which is better than MLP due to the extracting of spatial features. Compared with ST-ResNet, DMVST-Net extracts joint spatial-temporal feature and similar demand patterns among regions, which further improve its performance for prediction. Compared with previous methods, DeepSTN+ explores spatial correlations from different aspects to reduce the prediction error. However, it doesn’t consider about the influence of different time intervals. Our model further contributes a dynamic interval module which further improves the performance.

Comparison with Modules Combinations

Our full model consists of three modules for three types of information modeling. To explore the influence of different modules combinations on the task, we combine them and implement the following networks:

-

•

Spatial module + FC: This network contains the spatial module of our proposed model and a fully connected layer. This network only extracts spatial features for prediction.

-

•

Temporal module + FC: This network only uses the temporal module of our proposed model to capture the temporal information, and a fully connected layer is used to output the predicted results.

-

•

Spatial module + Temporal module + FC: This method is the combination of the spatial module, temporal module, and a fully connected layer. In this method, we model joint spatial-temporal information without considering the influence of different time intervals.

-

•

Spatial module + Dynamic Interval module: In this network, we combine the spatial module and the dynamic interval module of our proposed model, to capture spatial information, and the dynamic mappings for different time intervals.

-

•

Temporal module + Dynamic Interval module: For this network, we use the temporal module and the dynamic interval module of our proposed model. This network models the temporal information, and generates the dynamic mappings for different time intervals.

-

•

STDI-Net: Our proposed model, which models joint spatial-temporal information, and generates dynamic mappings for different time intervals.

Table 2 shows the results of the test. The RMSE and MAE of the spatial module + FC are 5.6558 and 2.6218 respectively, while that of the spatial module + dynamic interval module are 4.9077 and 2.3457. The results of the temporal module + FC achieve 5.2614 and 2.3914 while the RMSE and MAE of the temporal module + dynamic interval module are 4.7788 and 2.2582 respectively. We can see that compared with separate spatial or temporal module + fully connected layer, the performance of the combination with the dynamic interval module improves significantly. Furthermore, the spatial module + temporal module + FC achieves the results of 5.0832 and 2.3476, which are worse than that of our complete model. The results show that our dynamic interval module improves the performance significantly.

| Method | RMSE | MAE |

|---|---|---|

| Spatial + FC | 5.6558 | 2.6218 |

| Temporal + FC | 5.2614 | 2.3914 |

| Spatial + Temporal + FC | 5.0832 | 2.3476 |

| Spatial + Dynamic Interval | 4.9077 | 2.3457 |

| Temporal + Dynamic Interval | 4.7788 | 2.2582 |

| STDI-Net | 4.6339 | 2.1946 |

Comparison with Variants of Our Model

The above experiments show that our proposed dynamic interval module achieves a good result in the demand prediction of BSS. However, we have not proved the rationality of the parameters-generated mode in the dynamic interval module. Besides, we also need to evaluate the effectiveness of the time-specific convolutional layers in our spatial module. In addition, the advantage of using need to be proved by comparing with the model that embed time intervals into vectors without the pre-trained . To address these two questions, we construct the following three variants of our proposed model:

-

•

STDI-Net-fusion: In this network, we apply a Linear layer in the Interval Net to transform the interval embedding vector to a feature, and then we concatenate it with the output of the temporal module. After that, a fully connected layer is used to output the predicted results.

-

•

Unified-Spatial Net: This network is the variant of our proposed spatial module, which is used to evaluate the performance of applying the same filters in different temporal indexes. This model Net applies unified filters for each index of the sequence in all convolutional layers, and a fully connected layer is used after convolutional layers. Note that, in the Unified-spatial Net, we use the same Conv Blocks structure as our proposed STDI-Net.

-

•

STDI-Net-embedding: In this model, we apply a learnable embedding layer to embed the hours’ number instead of using the pre-trained to embed them.

| Method | RMSE | MAE |

|---|---|---|

| Unified-Spatial Net | 6.1493 | 2.9533 |

| Spatial module + FC | 5.6558 | 2.6218 |

| STDI-Net-fusion | 4.8149 | 2.2995 |

| STDI-Net-embedding | 4.6154 | 2.1783 |

| STDI-Net | 4.6339 | 2.1946 |

Table 3 shows the results of the above three variants of our model. We can see that our spatial module + FC (5.6558 and 2.6218 for RMSE and MAE) outperforms Unified-Spatial Net (6.1493 and 2.9533 for RMSE and MAE), that means, our proposed time-specific convolution layers perform better than applying same convolutional filters in different temporal indexes. Otherwise, STDI-Net-fusion achieves 4.8149 for RMSE and 2.2995 for MAE, which are worse than our STDI-Net (4.6339 and 2.1946 respectively). Therefore, our parameters-generated mode is better than the fusion way.

Due to applying a trainable embedding layer instead of using a pre-trained model (), the STDI-Net-embedding (4.6154 and 2.1783) has more learnable parameters than STDI-Net (4.6339 and 2.1946). Therefore it can perform better than our STDI-Net. However, its performance has not improved significantly (0.4 and 0.7 for RMSE and MAE respectively) with additional parameters. That means, our STDI-Net can perform almost as well as STDI-Net-embedding with less parameters than it. To reduce the number of learnable weights, we apply to embed hours instead of using an additional embedding layer to embed them.

Conclusion and Discussion

In this paper, we propose a novel deep learning-based method for demand prediction of Bike Sharing System (BSS). Our model considers the extraction of joint spatial-temporal feature and time-specific convolutional layers with residual links. Furthermore, we contribute a dynamic interval module to include the factor that different time intervals have a strong influence on demand prediction in BSS by generating different feature mappings for different time intervals. We evaluate our model on the NYC Bike dataset, and the results show that our model significantly outperforms the competing baselines. In the future, we will consider some other features to further improve the performance of our model, such as meteorology data, holiday data. And we will consider the more dependent relationship of stations, such as use Graph Convolutional Network (GCN) to extract the spatial feature among stations.

References

- Andrychowicz et al. (2016) Andrychowicz, M.; Denil, M.; Gomez, S.; Hoffman, M. W.; Pfau, D.; Schaul, T.; Shillingford, B.; and De Freitas, N. 2016. Learning to learn by gradient descent by gradient descent. In NeurIPS.

- Bertinetto et al. (2016) Bertinetto, L.; Henriques, J. a. F.; Valmadre, J.; Torr, P.; and Vedaldi, A. 2016. Learning feed-forward one-shot learners. In NeurIPS, 523–531.

- Chiang, Hoang, and Lim (2015) Chiang, M.-F.; Hoang, T.-A.; and Lim, E.-P. 2015. Where are the passengers?: A grid-based gaussian mixture model for taxi bookings. In SIGSPATIAL.

- DeMaio (2009) DeMaio, P. 2009. Bike-sharing: History, impacts, models of provision, and future. Journal of Public Transportation 12(4):41–56.

- Finn, Abbeel, and Levine (2017) Finn, C.; Abbeel, P.; and Levine, S. 2017. Model-agnostic meta-learning for fast adaptation of deep networks. In ICML.

- He et al. (2016) He, K.; Zhang, X.; Ren, S.; and Sun, J. 2016. Deep residual learning for image recognition. In CVPR.

- Hochreiter and Schmidhuber (1997) Hochreiter, S., and Schmidhuber, J. 1997. Long short-term memory. Neural computation 9(8):1735–1780.

- Informatik et al. (2003) Informatik, F.; Bengio, Y.; Frasconi, P.; and Schmidhuber, J. 2003. Gradient flow in recurrent nets: the difficulty of learning long-term dependencies. A Field Guide to Dynamical Recurrent Neural Networks.

- Ioffe and Szegedy (2015) Ioffe, S., and Szegedy, C. 2015. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In ICML.

- Kingma and Ba (2014) Kingma, D. P., and Ba, J. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.

- Krizhevsky, Sutskever, and Hinton (2012) Krizhevsky, A.; Sutskever, I.; and Hinton, G. E. 2012. Imagenet classification with deep convolutional neural networks. In NeurIPS, 1097–1105.

- Moreira-Matias et al. (2013) Moreira-Matias, L.; Gama, J.; Ferreira, M.; Mendes-Moreira, J.; and Damas, L. 2013. Predicting taxi–passenger demand using streaming data. IEEE Transactions on Intelligent Transportation Systems 14(3):1393–1402.

- Pan, Demiryurek, and Shahabi (2012) Pan, B.; Demiryurek, U.; and Shahabi, C. 2012. Utilizing real-world transportation data for accurate traffic prediction. In ICDM.

- Paszke et al. (2019) Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. 2019. Pytorch: An imperative style, high-performance deep learning library. In NeurIPS.

- Pennington, Socher, and Manning (2014) Pennington, J.; Socher, R.; and Manning, C. 2014. Glove: Global vectors for word representation. In EMNLP.

- Qiu et al. (2019) Qiu, Z.; Liu, L.; Li, G.; Wang, Q.; Xiao, N.; and Lin, L. 2019. Taxi origin-destination demand prediction with contextualized spatial-temporal network. In ICME, 760–765.

- Shaheen, Guzman, and Zhang (2010) Shaheen, S. A.; Guzman, S.; and Zhang, H. 2010. Bikesharing in europe, the americas, and asia: Past, present, and future. Transportation Research Record 2143(1):159–167.

- Shekhar and Williams (2007) Shekhar, S., and Williams, B. M. 2007. Adaptive seasonal time series models for forecasting short-term traffic flow. Transportation Research Record 2024(1):116–125.

- Sutskever, Vinyals, and Le (2014) Sutskever, I.; Vinyals, O.; and Le, Q. V. 2014. Sequence to sequence learning with neural networks. In NeurIPS, 3104–3112.

- Wang et al. (2017) Wang, D.; Cao, W.; Li, J.; and Ye, J. 2017. Deepsd: Supply-demand prediction for online car-hailing services using deep neural networks. In ICDE, 243–254.

- Wang et al. (2019) Wang, X.; Yu, F.; Wang, R.; Darrell, T.; and Gonzalez, J. E. 2019. Tafe-net: Task-aware feature embeddings for low shot learning. In CVPR.

- Wu, Wang, and Li (2016) Wu, F.; Wang, H.; and Li, Z. 2016. Interpreting traffic dynamics using ubiquitous urban data. In SIGSPACIAL.

- Yao et al. (2018) Yao, H.; Wu, F.; Ke, J.; Tang, X.; Jia, Y.; Lu, S.; Gong, P.; Ye, J.; and Zhenhui, L. 2018. Deep multi-view spatial-temporal network for taxi demand prediction. In AAAI.

- Yu et al. (2017) Yu, R.; Li, Y.; Shahabi, C.; Demiryurek, U.; and Liu, Y. 2017. Deep learning: A generic approach for extreme condition traffic forecasting. In SIAM.

- Zhang et al. (2016) Zhang, J.; Zheng, Y.; Qi, D.; Li, R.; and Yi, X. 2016. Dnn-based prediction model for spatio-temporal data. In SIGSPATIAL.

- Zhang, Zheng, and Qi (2017) Zhang, J.; Zheng, Y.; and Qi, D. 2017. Deep spatio-temporal residual networks for citywide crowd flows prediction. In AAAI.

- Zhao et al. (2018) Zhao, J.; Xu, J.; Zhou, R.; Zhao, P.; Liu, C.; and Zhu, F. 2018. On prediction of user destination by sub-trajectory understanding: A deep learning based approach. In CIKM, CIKM ’18, 1413–1422.