STDCformer: A Transformer-Based Model with a Spatial-Temporal Causal De-Confounding Strategy for Crowd Flow Prediction

Abstract

Crowd Flow Prediction is critical to urban management, with the goal of capturing the arrival and departure characteristics of crowd movements under different spatial and temporal distributions, which is fundamentally a spatial-temporal prediction task. Existing works typically treat spatial-temporal prediction as the task of learning a function to transform historical observations to future observations. We further decompose this cross-time transformation into three processes: (1) Encoding (): learning the intrinsic representation of observations, (2) Cross-Time Mapping (): transforming past representations into future representations, and (3) Decoding (): reconstructing future observations from the future representations. From this perspective, spatial-temporal prediction can be viewed as learning , which includes learning the space transformations between the observation space and the hidden representation space, as well as the spatial-temporal mapping from future states to past states within the representation space. This leads to two key questions: Q1: What kind of representation space allows for mapping the past to the future? Q2: How to achieve mapping the past to the future within the representation space? To address Q1, we propose a Spatial-Temporal Backdoor Adjustment strategy, which learns a Spatial-Temporal De-Confounded (STDC) representation space and estimates the de-confounding causal effect of historical data on future data. This causal relationship we captured serves as the foundation for subsequent spatial-temporal mapping. To address Q2, we design a Spatial-Temporal Embedding (STE) that fuses the information of temporal and spatial confounders, capturing the intrinsic spatial-temporal characteristics of the representations. Additionally, we introduce a Cross-Time Attention mechanism, which queries the attention between the future and the past to guide spatial-temporal mapping. Finally, we integrate the process of learning the STDC representation space and the spatial-temporal mapping into an -- skeleton for spatial-temporal prediction. The skeleton is further instantiated with a Transformer model, building a Transformer model with Spatial-Temporal De-Confounding Strategy (STDCformer). Experiments on two real-world datasets demonstrate that STDCformer achieves state-of-the-art predictive performance and exhibits stronger out-of-distribution generalization capabilities.

keywords:

Crowd Flow Prediction , Causal Inference , Spatial-temporal Transformer , Causal De-Confounding , Cross-Time Mapping1 Introduction

Crowd flow prediction aims to use historical flow data in a region to predict the inflow and outflow of during future time periods. It is a typical spatial-temporal prediction task that plays a crucial role in urban planning, traffic management, public safety, and other fields [1, 2]. The essence of spatial-temporal prediction lies in capturing the mapping from historical data to future data, enabling the inference of future from historical observations. In recent years, a series of spatial-temporal prediction models have emerged with the goal of solving , and the deep learning-based models have become mainstream. Among these, models based on Spatial-Temporal Graph Neural Networks (STGNNs) and Spatial-Temporal Transformers (ST Transformers) have demonstrated exceptional performance in various spatial-temporal prediction tasks, such as traffic flow prediction and crowd flow prediction [3, 4]. These two types of models follow a unified paradigm, where temporal representation modules (e.g., RNN[5, 6, 7, 8, 9, 10, 11], CNN[12, 13, 14, 15, 16], and Transformer[17, 18, 19, 20]) and spatial representation modules (e.g., CNNs [21], GNNs [5, 6, 7, 10, 12, 13, 15, 15, 16], and Transformers [8, 17, 20, 22]) are used to separately capture the temporal and spatial representations from the observed data. A spatial-temporal fusion module then combines these representations to obtain the final representation, which is used to infer unobserved spatial-temporal data.

needs to be realized through three subprocesses. First, the encoder is used to encode historical observations into representations in a latent space. Second, a cross-time mapping is applied to transform the representation of past states into the representation of future states. Third, the decoder projects the future representations back into the observation space to reconstruct the future observations. From this perspective, the objective of learning is to learn , which includes the space transformation between the observation space and the representation space, as well as the spatial-temporal mapping from future states to past states within the representation space. The purpose of space transformation is to identify a representation space that can capture the essential information in the observations, serving as the foundation for ensuring the feasibility of the subsequent spatial-temporal mapping. The purpose of spatial-temporal mapping is to learn the intrinsic, dynamic transformation relationships between the past and future from their essential representations. These two processes lead to two key questions behind spatial-temporal prediction: Q1: What kind of representation space allows for mapping the past to the future? Q2: How to achieve mapping the past to the future within the representation space? The answers to these questions and the corresponding methods are as follows.

A1: The premise for mapping the past to the future is the accurate transmission of causal effects from the past to the future. Therefore, the mapping from the past to the future can be achieved in a Spatial-Temporal De-Confounded (STDC) representation space.

The premise of inferring the future from the past is based on the assumption that there exists some form of ”influence” of the past on the future, which determines the mapping relationship between the past and future. Historical data and future data are not simply related as input and output, but implicitly involve assumptions about the causal relationship between the past and future. Therefore, fusing the representation of causality can capture the essential information within the observational data. Under this assumption, such ”influence” can be characterized through causal effects. Existing methods typically build neural network models to fit the associations between historical and future data distributions from the observational data to learn the representation space. However, when uncontrolled confounding bias [23] is present, the model fails to capture the true causal effect .

As shown in Figure 1, the basic unit in spatial-temporal data is analogous to a token in text, which serves as the trial unit for estimating , referred to as the ST token (STT), represented as . It can be observed that, similar to different participants with varying physical conditions in a drug trial, each STT also has temporal and spatial characteristics. These characteristics reflect the attributes of the spatial region (e.g., functionality, travel cost, travel safety, etc.) and the temporal window (e.g., travel necessity, travel suitability, etc.), which are potential factors influencing human movement. These characteristics simultaneously affect both historical and future crowd flow observations, serving as confounders in the spatial-temporal prediction process. If the distribution bias of these confounding factors in the sample data is ignored, the model will learn incorrect patterns.

Estimating the correct causal effect requires eliminating the distributional bias of confounders, a process known as de-confounding. In this paper, we argue that the ideal spatial-temporal representation space should enable de-confounding, i.e., learn a Spatial-Temporal De-Confounded (STDC) representation space. The challenge of spatial-temporal observational data de-confounding lies in simulating the intervention operation on the observed samples, with the key being sample stratification and confounder control. Sample stratification entails classifying samples into treatment groups based on their differences, while confounder control then re-weight the samples in each stratum to estimate the true causal effect. However, due to the complexity of spatial-temporal activities and the limited nature of observational data, confounders are often unobservable, and the distribution of such hidden confounders in the observational samples is unknown, which makes it difficult to directly measure the differences between samples, consequently, challenging to accurately pre-define the number of sample strata and their weights. In order to obtain the representation of hidden confounders, existing methods often learn the representations confounders from the original observation time series [24, 25]. However, these abstract representations are hard to be aligned to the specific semantics in the real world, and the separability between different strata heavily depends on the sampling and quality of the observation data. Some methods also introduce Point-of-Interest (POI) data to learn the classification of confounding representations [26], but they need to pre-define the number of categories k of confounding and their distribution. The ideal hidden confounding stratification in crowd data should accurately indicate the key influence factors behind crowd movements, and different confounding representations should be naturally distinguishable and assigned appropriate weights. To approximate this ideal hidden confounder stratification and allow for subsequent control, this paper proposes a Spatial-Temporal Backdoor Adjustment strategy, specifically:

(1) To improve the reliability and semantics of confounders’ representations, we collected auxiliary information that can separately characterize the temporal and spatial characteristics of STTs based on the analysis of human movement behavior. This auxiliary information is fused into the prediction model to learn the representations of hidden confounders. Compared to one-dimensional time series data from uncontrollable sampling process, this type of information has more stable quality and clearer semantics.

(2) To reduce the bias caused by assumptions on the distribution of confounding factors, and based on the two most critical attributes of human mobility, ”When”, ”Where”, we naturally categorize the confounders into temporal and spatial confounders. This approach avoids the need to predefine the number of clusters k while ensuring the completeness of the stratification. Additionally, both temporal and spatial confounders are fed into learnable modules to derive the weights, eliminating the need to assume a predefined distribution for the confounders.

A2: The intrinsic relationship between the past and the future depends on the characteristics of their spatial-temporal contexts, which can be portrayed by spatial and temporal confounders. Therefore, the representations of confounders can be used for querying the relationships between the past and future.

Changes in both time and spatial sampling values can lead to shifts in the spatial-temporal mapping relationships. As shown in Figure 2, taking the crowd flow data from Manhattan Island, New York City, as an example, the flow data is divided into different samples for prediction , with a time window size of 6 hours. For the same time sampling, the future trends of both samples and are similar to their historical trends, showing an overall downward trend. In contrast, the future trend of first follows the historical trend and then reverses. For the same region sample, the future trend of is opposite to its past trend, while shows the same future and past trends.

This difference arises from the spatial-temporal characteristics of the observation samples, i.e., the different spatial-temporal contexts, and spatial-temporal confounders can portray this information. For example, due to the different functionalities of Zone 79 compared to the other two zones, even at the same time, their ability to attract crowd flow differs, leading to different trends. While for Zone 75, the travel demand of people varies at different time windows, resulting in different trends in how the spatial region is visited across different time periods.

Therefore, we propose a Cross-Time-Attention-based Past-to-Future Mapping mechanism. To better capture the inherent spatial-temporal characteristics of STT representations, we construct Spatial-Temporal Embeddings (STE) of STTs by fusing time and spatial confounders, which preserve the global positional and structural information of the STTs in both time and space dimensions. To better capture the dynamic transformation relationship between past and future, which is a pair of cross-time entities, we introduce a Cross-Time-Attention (CTA) mechanism to enable querying between future STEs and past STEs. The queried relationships are future used to guide the transformation of past representations to future representations.

By combining A1 and A2, we constructed a prediction skeleton with the structure of STDC Encoder → CTA-based Past-to-Future Mapping → STDC Decoder, and instantiated this skeleton based on the Transformer architecture to obtain the STDCformer, which simultaneously achieves de-confounding and spatial-temporal prediction. Compared to existing ST Transformer architectures (e.g., Figure 3a), STDCformer introduces confounder’s information in both STE encoding and spatial-temporal representation learning (Figure 3b), allowing the model to use confounder’s information to simultaneously guide spatial-temporal mapping and spatial-temporal fusion. This approach maximizes information sharing between de-confounding representation and spatial-temporal prediction, thereby enhancing information utilization efficiency.

Our contributions are as followed:

(1) We propose a new perspective for formulating spatial-temporal prediction, and construct a novel Encoder-Past-to-Future Mapping-Decoder prediction skeleton. Based on this skeleton, a spatial-temporal prediction model, STDCformer, is developed, which achieves de-confounded crowd flow prediction.

(2) We introduce a spatial-temporal backdoor adjustment strategy, partitioning the confounders behind crowd flow prediction into two categories: temporal confounders and spatial confounders, and incorporating auxiliary information to accurately build confounders. The representation of confounders is used to encode spatial-temporal embeddings and guide spatial-temporal fusion, enhancing the utilization of confounders’ information and simultaneously achieving de-confounding.

(3) We conducted prediction experiments under independent and identically distributed (IID) conditions on two real-world datasets. The experimental results show that STDCformer outperforms state-of-the-art (SOTA) models, including STGNNs and ST Transformers. Moreover, STDCformer generalizes better to out-of-distribution (OOD) datasets in a zero-shot manner. We aligned the wights of learned confounders with real-world data and discussed their physical meaning, explaining how the deconfounding strategy removes confounding bias from the data by reweighting.

(4) We build two crowd flow datasets for Manhattan and Brooklyn in New York City, which include auxiliary information required to build confounders for all STTs within the datasets. These datasets provide a foundation for future research on de-confounded crowd flow prediction.

2 Related Works

2.1 Spatial-Temporal Graph Prediction

Crowd Flow Prediction in Urban Areas is a typical spatial-temporal graph prediction task, and existing spatial-temporal graph prediction methods can potentially be applied to crowd flow prediction. Therefore, this paper provides a general review of spatial-temporal graph prediction methods. Spatial-temporal graph prediction aims to forecast the future states of nodes, edges, or graphs in data constructed as graphs. In such graphs, nodes typically represent spatial entities (e.g., regions), while edges represent relationships between spatial entities (e.g., adjacency, interaction). Typical spatial-temporal graph prediction tasks include traffic flow prediction, crowd flow prediction, epidemic prediction, and air quality prediction. Unlike spatial-temporal data constructed as regular grids (e.g., remote sensing images), the spatial regions in graphs are mostly irregular units, and the relationships between them are often more complex. Unlike purely time-series prediction or graph learning tasks, spatial-temporal graph prediction requires the simultaneous modeling of temporal and spatial features, and thus involves modules for capturing both temporal dependencies and spatial dependencies.

Currently, mainstream spatial-temporal graph prediction frameworks can be divided into two categories: Spatial-temporal Graph Neural Networks (STGNNs) [4, 27, 28] and Spatial-temporal Transformers (ST Transformers) [22, 29]. As shown in Figure 4, STGNNs utilize time-series representation models and GNNs to learn temporal representation , and spatial representation , then fuse these two representations to obtain the final spatial-temporal representation , for prediction. In another way, ST Transformers introduce temporal embeddings , and spatial embeddings to preserve the positions and structural information of tokens in the original spatial-temporal sequence, and use temporal self-attention and spatial self-attention modules to derive temporal representations and spatial representations , respectively. These two representations are then fused to generate the final spatial-temporal representation for prediction (as shown in Figure 3a).

2.1.1 Spatial Representation

The main goal of the spatial representation module is to model the relationships between spatial regions and encode these relationships into representations. Based on the fundamental structure of spatial representation models, existing methods can be categorized into CNN-based, GNN-based, and Transformer-based spatial-temporal prediction approaches. CNN-based models partition spatial regions into regular grids and use spatial convolutions to achieve spatial representation. For example, DeepST[21] uses a deep neural network (DNN) to extract the spatial features of the urban grid. However, this approach is not suitable for spatial-temporal prediction in irregular regions. GNN-based spatial representation modules and Transformer-based spatial representation modules are separately the core component of existing STGNNs and ST Transformers.

GNN-based models. DCRNN [6] uses a diffusion convolution to extract graph structure signals by walking randomly on the graph; STGCN[16], T-GCN[10], and KST-GCN[7] use graph convolution networks to represent graph structures; STFGNN[15] uses a simplified graph matrix multiplication to aggregate spatial dependencies; Graph wavenet

citeRN13 generalizes the form of GCN to diffusion convolution to build graph convolution layer; ASTGCRN[5]proposes an adaptive graph convolution to learn node representations; ASTGCN[12]uses graph attention convolution to obtain spatial representations based on spatial attention; HGCN[14] uses spectral clustering and spatial gated graph convolution to obtain micro and macro spatial representations; MTGNN[30] uses a mix-hop propagation layer in the graph convolution module to select and aggregate information from neighboring nodes.

Transformer-based models. ASTTGN[20] leverages node2vec[31] to retain graph structure information, and inputs it as the embedding of the node sequence into the spatial transformer model. Then, a multi-head attention mechanism is used to capture dynamic and implicit spatial dependencies. STTN[17] inputs the representation obtained by graph convolution into the transformer structure to capture dynamic graph signals; GLSTTN [22] constructs a spatial transformer similar to STTN to learn spatial representations; Traffic transformer[8] constructs an encoder-decoder framework based on multi-head attention, and introduces the original graph structure as a mask in the decoder.

2.1.2 Temporal Representation

The primary goal of the temporal representation module is to model temporal features such as trends and periodicity in time series data. According to the fundamental structure of temporal representation models, existing methods can be categorized into RNN-based, CNN-based, and transformer-based spatial-temporal prediction models. RNN-based models primarily utilize Recurrent Neural Networks (RNNs)[32, 33] and their variants[34] to extract temporal features. CNN-based models extract temporal features by applying convolutions over the time dimension. Transformer-based temporal representation models, on the other hand, use self-attention mechanisms to capture dependencies across different time steps.

RNN-based models. Models such as ASTGCRN[5], T-GCN [10], and KST-GCN[7] all employ Gated Recurrent Units (GRU). DCRNN [6] utilizes GRU to construct a Diffusion Convolutional Gated Recurrent Unit for extracting temporal features. ST-MetaNet [11] employs a GRU as the first layer in a Seq2Seq framework. Traffic Transformer[8]uses a simple Long Short-Term Memory (LSTM) to obtain temporal embeddings for extracting temporal features. MASTGN [9]uses LSTM to process features after transformations based on spatial and temporal attention.

CNN-based models. STGCN[16] utilizes 1-D causal convolution and Gated Linear Units (GLU) to construct temporal convolutional layers. Graph Wavenet[13] uses Gated Temporal Causal Convolution to expand the temporal receptive field. STFGNN[15] proposes a new gated CNN module for extracting temporal features. ASTGCN [12] combines temporal attention with standard convolution to extract temporal features. HGCN [14] replaces the original gated temporal convolution with dilation convolution whose dilation factor is 2 to construct temporal gated convolution.

Transformer-based models. ASTTGN[20] employs an adaptive temporal transformer module to capture long-range temporal dependencies. STTN[17] construct a temporal transformer to capture bidirectional, long-range temporal dependencies. LSTTN [19] trains a transformer-based encoder to represent temporal features of subsequences through a self-supervised task of mask reconstruction.

2.1.3 Spatial-Temporal Fusion

Existing methods for spatial-temporal fusion can be divided into two categories: direct fusion and adaptive fusion. The former typically connects the temporal and spatial representation modules sequentially, interleaves them in a block or simply adding the two types of representations. In contrast, the latter involves inputting both representations into a learnable module for fusion.

Direct Fusion. In TGCN[10], the temporal representation module follows the spatial representation module, with the learned spatial representations being fed into the temporal representation module. While Graph Wavenet[13] places the temporal module before the spatial module. G-SWaN[35] first uses a WaveNet to obtain the temporal representation and then feeds it into the spatial graph transformer to learn the spatial representation. Similarly, Traffic Transformer[8] uses LSTM to obtain representations, which are then input into subsequent global and local spatial graph representation modules. STGCN[16] and HGCN [14] build the temporal gated convolutional layer and spatial convolutional layer into a ”sandwich” structure. MTGNN [30] interleaves the temporal and spatial convolution modules for spatial-temporal fusion, while STTN [17] interleaves the spatial transformer and temporal transformer. DCRNN[6] replaces matrix multiplications in the GRU with spatial diffusion convolutions, using spatial representations as recurrent units to learn temporal representations. Similarly, ASTGCRN[5] replaces the multilayer perceptron (MLP) in GRU with adaptive graph convolution. MASTGN [9] concatenates the representations transformed by spatial attention and internal attention, using this concatenated representation as input for the followed layers.

Adaptive Fusion. ASTTGN[20], MVSTT[36] and GMAN[37] use a gated fusion mechanism to adaptively perform weighted fusion of temporal and spatial representations. PDFormer[18] allocates different attention heads to the temporal and spatial representations for attention learning and then uses a multi-head attention mechanism to fuse the temporal and spatial representations.

2.2 Spatial-Temporal De-confounding Representation Learning

Spatial-temporal de-confounding representation learning involves modeling causal variables in spatial-temporal data, and estimating the causal effect of the treatment variable on the outcome variable by removing the influence of confounding variables (confounders). In different data and task scenarios, the confounders within the system vary, and the de-confounding methods differ accordingly. Existing research can further be categorized into methods based on structural causal model (SCM) and potential outcome framework. The underlying ideas of the two types of approaches are unified, differing only in the causal language used.

2.2.1 Structural Causal Model based Method

Methods based on SCM can directly implement interventions on causal graphs, transforming causal problems into statistical language that can represent the data through front-door, backdoor adjustments and other methods. Backdoor adjustment realizes de-confounding by stratifying and balancing confounding factors, being adopted by many studies. Some works categorize confounding factors into pre-defined layers. For instance, STCTN [26] considers regional attributes in the region network as confounders, which may cause existing spatial-temporal prediction models to absorb spurious correlations between the historical and future data. To address this, spectral clustering is used to partition regional attributes, and an unbiased prediction model is constructed in an independent parameter space for each partitions to achieve de-confounded predictions. SEAD[38] aims to eliminate the confounding effects of social environments on pedestrian trajectories. This work assumes that the confounders follow a uniform distribution, and the joint distribution of confounders and causal variables is learned through a cross-attention module. CISTGNN [39] learns representations of confounders from external weather environments and applies backdoor criteria for de-confounding. STEVE[25] focuses on the finiteness of the number of confounders and the completeness of their categories, dividing confounders into invariant and variant layers. CaST [24] discretizes the confounders through a temporal environment codebook, so that the representations of confounder fall into the most appropriate layers. CTSGI[40] leverages images at pedestrian trajectory points as the source of confounder representations, extracting environmental representations through a semantic segmentation model, and then adjusting confounding effects using backdoor criteria. Front-door adjustment, on the other hand, applies to causal graphs where no back-door paths exist, providing a de-confounding strategy different from the backdoor criterion. STNSCM [41] uses GLUs to obtain the distribution of causal variables after intervention from historical observational data and external environment data. In this distribution, causal variables can combine with different confounders obeying an unbiased probability, allowing for de-confounded effect estimation. A similar strategy is used in vehicle trajectory prediction, where the contextual scene is treated as a confounder and eliminated through a counterfactual representation inference module based on front-door adjustment strategies [42]. CASPER [43] uses front-door adjustment to transform time-series completion tasks into summing over subcategories of input data representations, and calculates de-confounded causal effects through learnable prompt vectors.

2.2.2 Potential Outcome Framework based Method

The main idea of methods based on the potential outcome framework is to treat different observational units as samples and simulate interventions by processing these samples comprehensively, thus obtaining de-confounded causal effects. Sample re-weighting is one of the classical methods for achieving sample balance [44], which is used to assign different cities and regions to different treatment groups and calculate the causal effects corresponding to each treatment. For example, it has been used in studies to assess the impact of changes in POI on regional pedestrian flow[45]. With the development of representation learning, balancing confounders based on the obtained unified representations of the samples through representation learning can also ensure that the background attributes distribution across different experimental groups are similar. For instance, CAPE[46] generates representations for different locations from historical event sequences and measures the differences in the representations using the Integral Probability Metric (IPM), minimizing the overall differences between samples in different experimental groups to achieve representation balance. SINet[47] uses the Hilbert-Schmidt Independence Criterion (HSIC) as a regularizer to guide representation balance and remove the effect of hidden confounders. CIDER[48] applies the Wasserstein distance to balance representations across different administrative regions.

In the spatial-temporal prediction module, the proposed method constructs temporal and spatial representations based on transformer models, and employs adaptive spatial-temporal fusion. In the spatial-temporal de-confounding module, the de-confounding strategy proposed in this paper is based on SCM, utilizing the backdoor criterion for de-confounding. The distinctions between this method and the aforementioned related studies are as follows: 1) The strategy employed in this paper innovatively categorizes confounders into two abstract types: temporal confounder and spatial confounder. Furthermore, the probability distributions of the confounders are learned adaptively, which avoids the pre-setting of the number of categories and the distribution of confounders; 2) The constructed spatial-temporal de-confounded representations can simultaneously participate in both spatial-temporal representation learning and spatial-temporal fusion, maximizing information sharing between the two modules.

3 Methodology

3.1 Hypothesis on Spatial-Temporal Prediction

Spatial-temporal prediction aims to infer the future state for steps based on the known previous observations for steps. Taking the prediction of crowd flow as an example, the spatial-temporal prediction task is typically formalized as , where represents the number of spatial regions to be predicted, and represents the number of features to be predicted. For the case of predicting the inflow and outflow, . As shown in Figure 5a, existing methods typically use spatial-temporal prediction model to approximate , where the underlying assumption is that there exists some learnable pattern between past and future observations. However, in this paper, we argue that the nature of this pattern is to describe the transformation relationship between the past and future in different spaces, rather than simply viewing it as a mapping between input and output values. Therefore, as shown in Figure 5b, we further decompose the spatial-temporal prediction task into space transformations between the observation space and the hidden representation space, and the spatial-temporal mapping from future states to past states within the representation space. The past inflow and outflow values (IO) are first mapped into a high-dimensional hidden space through a representation model . Then, in the high-dimensional latent space, they undergo a spatial-temporal transformation to obtain the future IO vector, which is finally transformed back to the observable IO values through another representation model . Therefore, the crowd flow prediction problem can be formalized as Eq. 1:

| (1) |

Based on this decomposition, the spatial-temporal prediction model must address two key questions. Q1: What kind of representation space allows for mapping the past to the future? Q2: How to achieve mapping the past to the future within the representation space? For Q1, we argue that the predictability of spatial-temporal data is ensured by the transmitting the influence of the past on the future, and this influence can be measured through causal effects. Therefore, based on causal assumptions, we propose a spatial-temporal backdoor adjustment strategy to learn a de-confounded representation space (Section 3.1.1), where past representations being encoded and future representations being decoded. For Q2, we believe that the transformation relationship between the past and the future depends on their spatial-temporal. The spatial-temporal embeddings (STE), which fuse the information of both temporal and spatial confounder can model this spatial-temporal context. Therefore, we propose a Past-to-Future Mapping Module based on a Cross-Time-Attention (CTA) mechanism, which facilitates the mapping between past and future through the query of STE from both the future and the past (Section 3.1.2).

3.1.1 A Spatial-Temporal De-Confounded (STDC) Representation Space

The foundation of the ability to infer future states from historical states for spatial-temporal prediction models is that the past has some form of ”influence” on the future. This influence determines the mapping relationship between historical input data and future output data, which is the basis of the predictability of spatial-temporal data. Causal inference can measure the influence of the past on the future using causal effects, aiming to build causal relationships between variables rather than merely correlations, enabling a more accurate and stable representation of the variables[49]. The underlying assumption is that causality is a more reliable form of association, where information flows along the causal links, transmitting the causal effect of the cause on the outcome. Based on this assumption, the spatial-temporal prediction process can be viewed as the process of estimating the causal effect of historical data representations on future data representations, inferring the outcome from the values of causes. However, the hidden confounding bias makes it impracticable to estimate the true causal effect directly from the observational data. This section takes the basic unit of spatial-temporal prediction, STT, as a starting point, to describe the causality underlying crowd flow prediction. We also propose a spatial-temporal backdoor adjustment strategy to learn a de-confounded representation space.

Spatial-Temporal Token (STT). Based on the form of input data, the basic unit in spatial-temporal data is the observation on a spatial entity at a specific time step . In this paper, this smallest unit in spatial-temporal data is analogized to a token in text, referred to as the Spatial-Temporal Token (STT), represented as: . where is the variable to be predicted, and and describe its spatial and temporal characteristics, respectively. These can be considered as background attributes of STTs, and they influence the estimation of the causal effect .

Causal Graph underlying Spatial-Temporal Prediction. The prediction model infers future data representations from historical data representations, where the historical representation serves as the cause for the future representation. However, for each STT, the background attributes influence both the past and future IO representations, making and serve as confounder in the prediction process of . The causal graph underlying spatial-temporal prediction can be illustrated as Fig. 6, which suggests that the causal effect from may vary under different values of confounders. This is similar to how the effect of a drug may differ for patients of different ages in a medical trial. To estimate an accurate and fair causal effect, it is necessary to configure the trial groups in such a way that the distribution of confounders across all groups becomes uniform, i.e., to remove the confounding bias. The intervention operation on the causal graph provides an intuitive representation of de-confounding, where the arrow is removed to make the distribution of independent of . The causal effect after intervention is expressed as .

Spatial-Temporal Backdoor Adjustment. Backdoor adjustment modifies the weights of observational samples with different values of confounders based on their distribution, simulating the balanced sample distribution under an intervention experiment, and is an effective way to de-confounding in observational data. It can be applied when the confounder satisfies the backdoor criterion for . After backdoor adjustment, the causal effect of is expressed as: . where the confounder is discretized into categories. However, in spatial-temporal prediction, confounders are often hidden, making it difficult to predefine the value of and the distribution of each category. To address this issue, we consider the fundamental decision factors of human movement in the real world-namely, ”where” and ”when”-to construct the minimal descriptive set of human movement: ”when”, ”where”. We then categorize the confounders into two abstract types: temporal confounders and spatial confounders . Furthermore, since STTs naturally contain the background variables and , which can serve as information sources for and , respectively. Based on this partition, we propose a spatial-temporal backdoor adjustment method as our de-confounding strategy, which is expressed as Eq. 2:

| (2) |

3.1.2 A Cross-Time-Attention (CTA)-based Past-to-future Mapping

The relationship between past and future states is very abstract, if using a function to approximate this kind of relationship, the parameters of this mapping function will have different value in different temporal periods. Past and future can be viewed as a pair of Cross-Time spatial-temporal entity. Capturing the transformation relationship between these entities requires addressing the following two issues:

(1) Representation of spatial-temporal Entities’ Intrinsic Characteristics. Based on the observations in Fig. 2, we argue that spatial confounder and temporal confounder can jointly characterize the contextual differences between different STTs, which represent the intrinsic characteristics of tokens. Therefore, fusing the representations of temporal confounders with spatial confounder effectively captures the spatial-temporal characteristics of different spatial-temporal entities. For each STT that constitutes the Past and Future, we integrate their temporal and spatial confounder’s representations to obtain a Spatial-Temporal Embedding (STE). This embedding retains the temporal and spatial characteristics of each STT within the entire dataset, thereby representing the contextual features of different spatial-temporal entities.

(2) Querying Relationships Between Cross-Time Entities. The relationships between Cross-Time entities are diverse and dynamically evolving. Therefore, attention mechanism can be effective for capturing their similarities. Since this type of querying spans across time, we refer it to Cross-Time Attention (CTA). We use the STEs of Past and Future as the Key and Query, respectively, and employ the attention mechanism to perform the query. The spatial-temporal mapping is then implemented based on the results of queried attention.

3.2 Overview

Based on the assumptions in Section 3.1, we combine the physical institutions of space transformation and spatial-temporal mapping in the spatial-temporal prediction process. We implement the representation models and the spatial-temporal mapping function through an Encoder-Decoder architecture and the Past-to-Future Mapping module, respectively. The prediction skeleton with architecture of Encoder Past-to-Future Mapping Decoder is built. The encoder and decoder typically share similar model structures and perform predictions in an end-to-end manner. The transformation processes involved in each part are described as follows:

-

1.

Encoder: The encoder projects the past data into a hidden representation space, producing the intermediate representation . This is expressed as: .

-

2.

Past-to-Future mapping: The spatial-temporal mapping function captures the relationship between past and future states in the hidden representation space, transforming the past representation in the hidden space into the corresponding future vector. This is expressed as: .

-

3.

Decoder: The decoder reconstructs the future observations from the latent representation of the future state , which has been transformed by the spatial-temporal mapping. This is expressed as: .

We introduce a self-attention-based transformer model to instantiate the prediction skeleton. To better achieve de-confounding in spatial-temporal prediction, we integrate the confounder information with the prediction skeleton, constructing a Spatial-Temporal De-Confounding prediction model based on Transformer (STDCformer). As shown in Figure 7, in order to maximize the information sharing between the spatial-temporal confounders’ information and the prediction skeleton, while improving the efficiency and information utilization of spatial-temporal prediction, we allow the representation of spatial-temporal confounders to participate in both space transformation and spatial-temporal mapping. This is achieved by integrating it into the prediction skeleton in the following two ways:

-

1.

Space Transformation : Guiding the de-confounded fusion of spatial and temporal representations. In the representation module with structures of Encoder-Decoder, the weights of temporal and spatial confounders are utilized to guide the spatial-temporal fusion process.

-

2.

Spatial-Temporal Mapping : Encoding Spatial-Temporal Embeddings (STE). Temporal and spatial confounders are combined to generate STEs, which are used within the Past-to-Future Mapping module to capture the spatial-temporal mapping relationships.

The STDCformer consists of the following three components, as depicted in Fig. 8:

-

1.

STDC Encoder: Projects historical data into a Spatial-Temporal De-Confounded Representation Space. It includes a Data Embedding Module that integrates the information of the input STTs, and a stack of Spatial-Temporal De-Confounded Attention Blocks to implement temporal representation, spatial representation, and spatial-temporal fusion.

-

2.

CTA-based Past-to-Future Mapping: Explicitly captures the relationship between the past and future, and performs the mapping. It includes Cross-Time Attention Blocks to query the attention between the STEs of future and past, and further projects the past representation into the future representation.

-

3.

STDC Decoder: Reconstructs future observation. It includes a stack of Spatial-Temporal De-Confounded Attention Blocks to implement temporal representation, spatial representation, and spatial-temporal fusion; and a Prediction Head that maps

3.3 STDC Encoder

3.3.1 Data Embedding

For a , the data embedding layer maps it into a representation vector , which is used to effectively integrate both the spatial-temporal characteristics and observational information of the STTs. The representation of the STT is constructed using the following two types of information:

-

1.

Observational Value. This mainly contains the observational feature of the STT. Therefore, temporal representation is used to preserve this part of the information. The flow observation value is mapped into d-dimensional space by a temporal encoder, resulting in as the value representation.

-

2.

Spatial-Temporal Characteristics. This mainly contains the spatial-temporal location and structural information of the STT. Since the temporal and spatial confounders representation can portray the temporal and spatial properties of the STT, a spatial-temporal embedding fusing the information of two types of confounders can preserve this part of information. A confounder encoder is used to map and into a d-dimensional space, yielding and , respectively. is first concatenated with its representation in the Laplacian space of the adjacency graph, resulting in , and then mapped. Since and capture the spatial-temporal attributes of the STT, they play a similar role to the token’s embedding. Therefore, and are fused through a convolution layer to obtain the Spatial-Temporal Embedding (STE), denoted as .

Finally, the value representation and the are concatenated to form the representation . The transformation within the data embedding layer is described by the following formulas in Eq. 3, where denotes the 2D convolution with the ReLU activation function.

| (3) | ||||

3.3.2 Spatial-Temporal De-Confounded Attention Block

For the spatial-temporal representation within the historical time window, the observation time steps are defined as , and the spatial-temporal representation of the region is given by . For the observed spatial region , the spatial-temporal representation at time step is given by . Therefore, the spatial-temporal representation of the data within the prediction window is for all regions on all steps and is given by . It is then fed into the first layer of the Spatial-Temporal De-Confounded Attention Block in the STDC Encoder.

As illustrated in Fig. 9, the Spatial-Temporal De-Confounded Attention Block is composed of three modules: Spatial Attention, Temporal Attention, and Spatial-Temporal De-Confounding Fusion, which are designed to capture spatial representations, temporal representations, and spatial-temporal fusion, respectively. Spatial Attention and Temporal Attention use the self-attention mechanism to capture spatial and temporal dependencies between different STTs. Spatial-Temporal De-Confounding Fusion applies a spatial-temporal backdoor adjustment strategy to control spatial-temporal confounders, ensuring that the predictions are not influenced by confounding biases.

Spatial attention. The spatial attention module is designed to model the spatial dependencies between different regions within a given time window. In this module, the self-attention mechanism is employed to capture the similarities between STTs distributed across different spatial regions, facilitating the fusion of information across space. For each batch of data, is used to learn the spatial attention distribution for time step . are firstly learned for current input representation to learn the representations of Query, Key and Value. The spatial attention coefficient matrix is then computed. Formulas for this process are in Eq. 4:

| (4) | ||||

Where . Finally, for time step , the data representation after the spatial attention transformation is given by . For the entire input time window, the representation is .

Temporal attention. Temporal attention module is designed to model the temporal dependencies of a specific region across different time steps. In this module, the self-attention mechanism is employed to capture the similarities between STTs distributed across different time steps, facilitating the fusion of information across time. For each batch of data, is used to learn the temporal attention distribution for region . are firstly learned for current input representation to learn the representations of Query, Key and Value. The temporal attention coefficient matrix is then computed. Formulas for this process are in Eq. 5:

| (5) | ||||

Where . Finally, for time step , the data representation after the temporal attention transformation is . The representation for the entire input time window is .

Spatial-Temporal De-Confounded Fusion. The Spatial-Temporal De-Confounded Fusion module fuses the spatial and temporal representations obtained from the previous two modules with simultaneously controlling the confounders. According to the spatial-temporal backdoor adjustment strategy , here, represents the joint distribution of spatial confounders and spatial representations, while represents the joint distribution of temporal confounders and temporal representations. Since contains both and , which are involved in the computation of spatial attention and temporal attention, respectively, and can be considered as the representations of and . For each , to obtain and , and are summed and passed through a Sigmoid function, mapping the weight of the two confounding factors to the range from 0 to 1. Finally, after fusion, the final spatial-temporal representation is obtained for all STTs in the input data, as shown by Eq. 6:

| (6) | ||||

3.4 CTA-based Past-to-Future Mapping

This module introduces a Cross-Time-Attention-based Past-to-Future Mapping mechanism, implemented by the Cross-Time Attention block (as shown in Fig. 10), aimed at capturing the mapping relationship between the historical and future states in the representation space. This relationship is not dependent on the specific observational values, but rather determined by the spatial-temporal characteristics of the STTs before and after the mapping. Therefore, the Cross-Time Attention block captures the relationship between the STE of the historical window and the future window , and based on this relationship, performs the transformation from the historical vector to the future vector . To capture this complex and dynamic relationship, we utilize the attention mechanism to compute the similarity between the spatial-temporal characteristics of and . Since attention is used to query the cross-time relationship between the STEs of the past and future representations, this process is referred to as Cross-Time Attention. We use the STE of the STTs to obtain the Query and Key, and use to obtain the Value, resulting in . The formula for this process is in Eq. 7:

| (7) | ||||

Where . Finally, the data representation after the mapping attention transformation is obtained as .

3.5 STDC Decoder

3.5.1 Spatial-Temporal De-Confounded Attention Block

The STDC Decoder and STDC Encoder share the same structure of the Spatial-temporal De-Confounded attention block. The key difference lies in the input: the STDC Encoder takes the historical data’s ST representation as input, while the Decoder takes the future state ST representation after Past-to-Future mapping. Therefore, is fed into the first layer of the Spatial-temporal De-Confounded attention block in the Decoder, where .

3.5.2 Prediction Head

The Prediction Head maps the de-confounded representations of the future state from the Spatial-temporal De-Confounded attention block to the observational feature space. In this paper, a simple convolutional layer is used to map the vector in the high-dimensional representation space to the observation space. It is formulated as . This directly implements multi-step forecasting for steps. The model is trained end-to-end with the Mean Absolute Error (MAE) as the loss function. The loss function is in Eq. 8:

| (8) |

Where represents the ground truth.

4 Experiments Results and Analysis

4.1 Databases

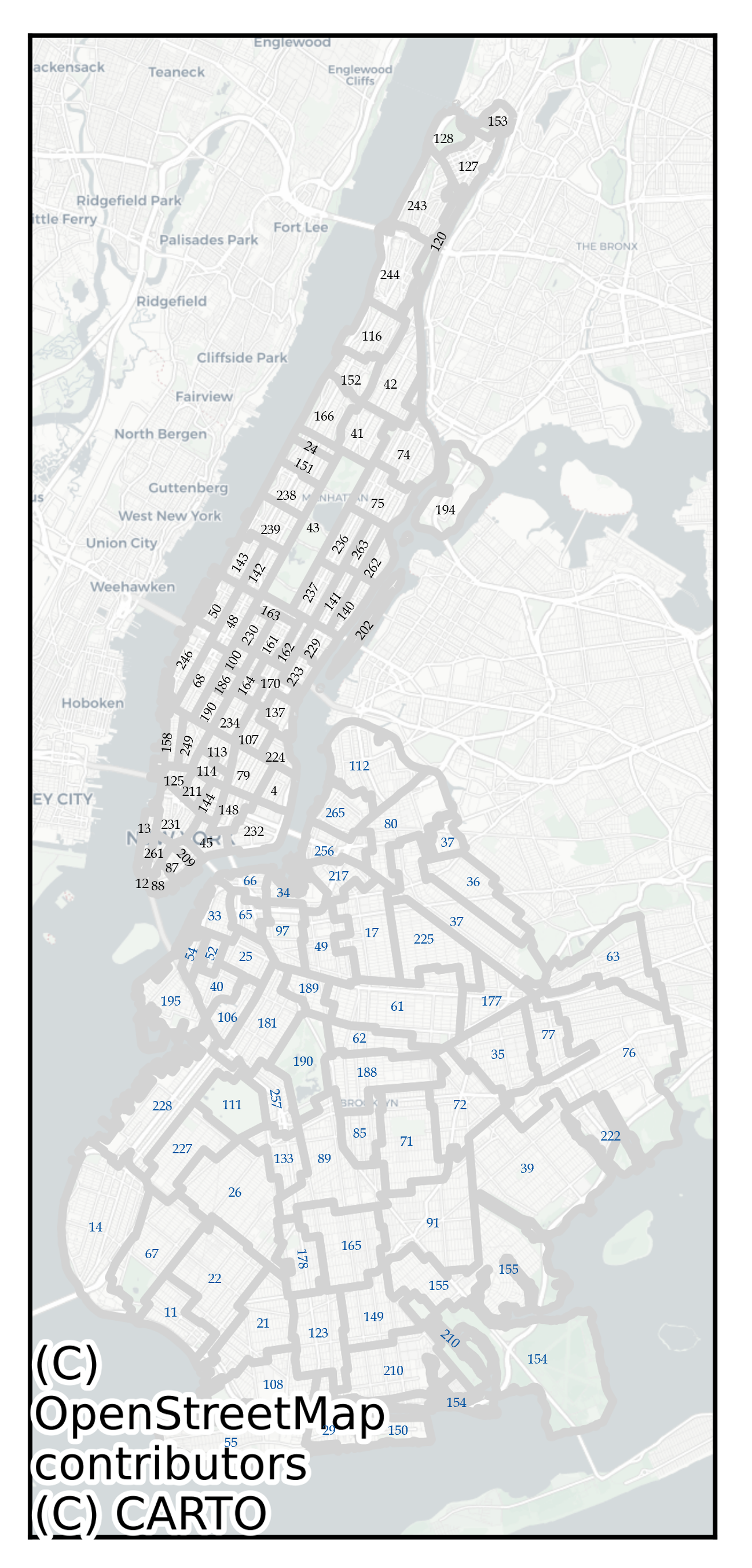

Crowd flow dataset. This study utilizes two real-world crowd flow datasets, respectively for the Manhattan (MHT) and Brooklyn (BKL) areas in New York City. These datasets are derived from the New York City Taxi and Limousine Commission (TLC), which provides data on taxi passengers’ drop-in and drop-off activities. Each dataset is partitioned based on the irregular regions defined by TLC’s taxi zone. For consistency, the ID of regions used in this paper follow TLC’s official zoning system, with taxi zones that are not accessible to taxis being removed. The study areas are shown in Figure 11, with taxi zones in the MHT dataset and BKL dataset marked in black and blue, respectively. In the dataset, for each region at a given time step, inflow data represents the number of passengers dropping off in that region during the specified time window while outflow data represents the number of passengers dropping in in that region during the same time window. These inflow and outflow data are aggregated from three main taxi companies operating in the region: Yellow, Green, and For-Hire Vehicles. The statistical information for these datasets is summarized in Table 1:

| Dataset | Number of Regions | Duration | Interval | Timesteps |

|---|---|---|---|---|

| MHT | 66 | 2023/11/1-2024/6/29 | 1H | 5808 |

| BKL | 61 | 2023/11/1-2024/6/29 | 1H | 5808 |

Auxiliary data. To learn the confounders’ representations of STTs, this study collected auxiliary data to characterize the background characteristics of the STTs. To comprehensively and accurately introduce auxiliary information that captures the key semantic concepts influencing human movement, the information that depicts temporal and spatial confounder was collected for each STT. The auxiliary information introduced and their corresponding semantic concepts are listed in Table 2.

-

1.

Temporal confounders. For each time step, we constructed a temporal confounder vector to characterize attributes such as travel necessity (e.g., whether in the human preferred movement period) and travel suitability at that specific time. The temporal confounder’s information includes: time_in_day, day_in_week, whether it is a holiday, temperature and weather conditions. Weather data were sourced from wunderground.com.

-

2.

Spatial confounders. For each region, we constructed a spatial confounding vector to capture attributes such as geographic location, functionality, travel cost, and travel safety. The spatial confounder’s information includes: taxi zone ID, the number and categories of POI, the number of houses for sale and their average price, the number of reported crime cases. The taxi zone IDs are sourced from the official TLC numbering system. Housing data is obtained from realtor.com. POI data is collected from Google Maps. Crime statistics are sourced from the New York City Police Department (NYPD) official website.

| Category of confounder | Semantic concepts | Information |

| Temporal confounder | travel necessity | time_in_day, day_in_week, is holiday or not |

| travel suitability | weather condition, temperature | |

| Spatial confounder | geographic location | taxi zone ID, Laplacian eigenvalues of adjacency graph |

| functionality | category of POI, number of each POI category | |

| habitability | number of house for sale, average house price | |

| movement safety | shooting cases, complaint cases of citizen during the last year. |

4.2 Baselines and Metrics

Baselines. This paper selects three types of spatial-temporal prediction models as baselines: traditional machine learning models, STGNNs, and ST Transformers. Specifically, the traditional machine learning models include GRU, LSTM, and RNN, which represent fully-connected GRU, LSTM, and RNN, respectively. The STGNNs consist of T-GCN [10], STGCN [16], HGCN[14], Graph WaveNet (GWNET) [13], DCRNN[6], and MTGNN[30]. The ST Transformers include GMAN[37], STTN[17], and PDFormer [18].

Metric. Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE) are used as evaluation metrics to measure the difference between the actual values and the predicted values. These two metrics are commonly used for regression tasks, with MAE providing a straightforward measure of prediction accuracy, and RMSE giving more weight to larger errors, which is helpful for evaluating models where large errors are particularly problematic. All models are trained and inferred on the same device, using the same data partitioning and preprocessing steps to ensure fairness in evaluation.

4.3 Experiments Setup

To evaluate the effectiveness of the proposed model, we assess its IID prediction capability and OOD generalization ability after integrating the de-confounding strategy. The performance experiments are conducted in two aspects: prediction experiments under IID conditions and zero-shot transfer experiments under OOD conditions. The experimental settings are detailed below.

General settings. For task setup, the crowd flow prediction task is set to predict the next 6 time steps (6 hours) based on the previous 6 time steps. The final results are presented as multi-step forecasting. For model optimization, STDCformer is optimized using the Adam optimizer with an initial learning rate of 0.001. The learning rate is automatically adjusted based on the model’s performance on the validation set. The training process is set to 120 epochs, with an early stopping strategy applied after 50 steps. All baseline models follow their original training parameter settings. For hyperparameter settings, for the STDCformer model, the feature dimension of the hidden representation space is set to 64, and the dimension of the Laplacian eigenvalues is set to 8. The number of ST Attention blocks is set to 5 for the MHT dataset and 6 for the BKL dataset. The attention mechanism uses 8 attention heads stacked. For model implementation, all models are trained on an NVIDIA A100 with 1 Core GPU and 64GB of memory.

Prediction experiments under IID conditions. Traditional crowd flow prediction tasks are conducted on the same dataset across training, validation, and testing phases, under the assumption that all samples in the dataset follow an IID distribution. In the IID prediction experiments, the training, validation, and testing data are from the same dataset, with a split ratio of 7:1:2. The training and validation sets are randomly shuffled and standardized using a standard scaler before being fed into the model. The batch size is set to 64. The model is trained on the training set, tuned on the validation set, and the best-performing model is selected to be tested on the test set. Considering that inflow and outflow represent two distinct features, capturing the attraction and repulsion of a region to human crowds during a given time period, they reflect different semantic meanings. Therefore, the model’s predictions are evaluated separately for Inflow, Outflow, and their combined performance (IO).

Zero-shot transfer experiments under OOD conditions. The crowd flow varies significantly across different regions, and the distribution of observations differs both temporally and spatially. Therefore, the transfer of models across datasets from different regions can be viewed as a Spatial OOD problem. Spatial OOD generalization is particularly challenging for spatial-temporal graph predictions because the spatial graphs corresponding to different regions have different sizes. Most spatial dependency modeling modules in existing models cannot directly scale to another graph in a zero-shot manner. In contrast to graph convolution, self-attention in the spatial axes is not constrained by the original graph size and is well-suited to solve this problem. Therefore, we choose to compare the zero-shot spatial OOD transfer capability of two SOTA spatial-temporal transformers that use self-attention for spatial aggregation as baselines. Given that the prediction difficulty of the BKL dataset is lower and more appropriate for the zero-shot test scenario, we directly use the best model trained on the MHT dataset to test on the BKL dataset.

4.4 Comparison experiments

4.4.1 Prediction Experiments Under IID Condition

In order to evaluate the model’s predictive ability for different features and the overall flow, we test the model’s predictions for Inflow, Outflow, and the combined Inflow-Outflow (IO). The results are shown in Table 3.

For different datasets, the model’s performance on the BKL dataset is superior to that on the MHT dataset, with smaller differences in prediction metrics. This is due to the fact that the MHT dataset exhibits an overall larger range of numerical variations. As the most famous and active central district of New York City, the complexity of crowd movement in MHT is higher, making prediction more challenging. For different model architectures, ST Transformers outperform most of the STGNNs and traditional models, indicating that the introduction of self-attention mechanisms is effective in capturing complex spatial-temporal dependencies. However, powerful STGNN models such as DCRNN and MTGNN demonstrate comparable or even stronger predictive performance than some ST Transformers. This suggests that STGNNs still have significant advantages in modeling temporal and spatial dependencies and, in certain cases, can achieve accurate predictions with lower cost.

Since the model is trained based on the overall crowd flow characteristics (IO), IO can be considered the primary evaluation metric. In terms of overall feature prediction, the proposed STDCformer achieves the highest IO prediction accuracy across both datasets, while PDFormer demonstrates the second-best performance. MTGNN, GMAN, and DCRNN show comparable performance to PDFormer. Regarding the prediction accuracy of individual features, STDCformer and PDFormer remain highly competitive. PDFormer outperforms our model in inflow prediction on the MHT dataset, but our model performs better in outflow prediction. This indicates that different models may focus on certain data features during training, influenced by the characteristics of the data. On one hand, it suggests that a key direction for future research is understanding how models balance their learning capacity for different features. On the other hand, it also implies that relevant application departments can select models based on specific strengths, depending on their practical needs.

| Model | MHT | BKL | |||||||||||

| MAE | RMSE | MAE | RMSE | ||||||||||

| In | Out | IO | In | Out | IO | In | Out | IO | In | Out | IO | ||

| Traditional | GRU | 15.21 | 17.07 | 16.12 | 25.02 | 28.52 | 26.83 | 3.64 | 3.11 | 3.38 | 5.20 | 4.63 | 4.92 |

| LSTM | 15.59 | 17.63 | 16.58 | 25.69 | 29.59 | 27.71 | 3.6 | 3.09 | 3.35 | 5.20 | 4.64 | 4.93 | |

| RNN | 15.65 | 17.87 | 16.73 | 25.96 | 29.72 | 27.9 | 3.66 | 3.19 | 3.43 | 5.32 | 4.81 | 5.07 | |

| STGNN | T-GCN | 21.04 | 22.99 | 21.99 | 33.34 | 37.02 | 35.23 | 4.28 | 3.58 | 3.93 | 6.13 | 5.23 | 5.70 |

| STGCN | 16.92 | 19.10 | 17.98 | 27.44 | 31.78 | 29.69 | 3.8 | 3.25 | 3.53 | 5.44 | 4.77 | 5.12 | |

| HGCN | 23.06 | 25.34 | 24.17 | 36.26 | 40.95 | 38.68 | 4.51 | 3.65 | 4.09 | 6.63 | 5.16 | 5.94 | |

| GWNET | 16.38 | 16.98 | 16.67 | 26.4 | 28.29 | 27.36 | 3.76 | 3.10 | 3.39 | 5.28 | 4.59 | 4.94 | |

| DCRNN | 14.97 | 16.56 | 15.75 | 24.16 | 27.49 | 25.88 | 3.67 | 3.10 | 3.39 | 5.28 | 4.59 | 4.94 | |

| MTGNN | 14.86 | 16.26 | 15.54 | 25.20 | 27.98 | 26.63 | 3.63 | 3.09 | 3.37 | 5.17 | 4.52 | 4.85 | |

| ST Transformer | GMAN | 14.92 | 16.52 | 15.69 | 24.56 | 27.60 | 26.14 | 3.59 | 3.10 | 3.35 | 5.18 | 4.65 | 4.92 |

| STTN | 15.14 | 16.78 | 15.94 | 24.72 | 27.60 | 26.14 | 3.81 | 3.27 | 3.54 | 5.47 | 4.80 | 5.15 | |

| PDFormer | 14.64 | 16.05 | 15.33 | 23.70 | 26.61 | 25.20 | 3.54 | 3.07 | 3.31 | 5.09 | 4.53 | 4.81 | |

| Ours | STDCformer | 14.81 | 15.67 | 15.24 | 24.66 | 26.35 | 25.17 | 3.33 | 3.24 | 3.27 | 5.08 | 4.47 | 4.77 |

4.4.2 Zero-Shot Transfer Experiments Under OOD Condition

The evaluation results of the zero-shot experiments under OOD conditions are shown in Table 4. STDCformer outperforms the baseline method in all metrics, indicating that STDCformer has better generalization ability. And it can be observed that on the same dataset, the differences in the metrics between models are more significant on the zero-shot task than on the supervised learning task, which implies that the de-confounded model has a greater advantage in generalization ability. The reason behind may be that although the distribution of spatial-temporal confounders in the ood dataset is shifted, frozen confounder encoder is still able to capture the difference of characteristics between STTs and use it to assign weights, which makes the weights in the de-confounding spatial-temporal fusion module is still able to de-confounding and make prediction.

The ability of the model to transfer to an OOD dataset in a zero-shot manner is of significant practical importance, as it allows for introducing models from other regions with minimal cost to support emergent decision-making while achieving acceptable performance. Although the accuracy of this zero-shot testing is higher compared to training from scratch on the OOD dataset, it is still acceptable for predictive needs in emergency situations, meeting the requirements of relevant planning and management departments.

| Model | MAE | MAPE(%) | MSE | RMSE |

|---|---|---|---|---|

| GMAN | 7.69 | 124.41 | 131.05 | 10.97 |

| PDFormer | 6.94 | 80.70 | 95.16 | 9.23 |

| STDCformer | 6.03 | 65.41 | 95.08 | 9.22 |

4.5 Ablation and Hyperparameters Analysis

4.5.1 Ablation Analysis

To further validate the effectiveness of the components in STDCformer, we conducted ablation experiments. The MAE of the model after ablation is shown in Figure 12. We set up the following five ablated models:

-

1.

’w/o DC’: The original model without the de-confounding module. Ablating this module demonstrates the importance of considering reweighting in the spatial-temporal fusion process.

-

2.

’w/o MAP’: The original model without CTA-based mapping. Ablating this module highlights the importance of learning the transformation relationship between past and future states in the representation space.

-

3.

’w/o SC’: The original model without the spatial confounder. Ablating this module shows the significance of integrating auxiliary information to characterize the spatial characteristics of different regions.

-

4.

’w/o TC’: The original model without the temporal confounder. Ablating this module illustrates the importance of incorporating auxiliary information to represent the temporal characteristics of each time window.

-

5.

’w/o LAP’: The original model without the Laplacian embedding. Ablating this module emphasizes the importance of retaining the global adjacency graph structure when characterizing spatial properties.

Overall, it can be observed that the model’s performance on the BKL dataset does not change significantly, with the original model achieving the best performance. This suggests that the backbone, spatial-temporal transformer, ensures the model’s basic capability on simple datasets, and the additional components we designed enhance performance on top of this foundation. In contrast, on the MHT dataset, which presents a greater prediction challenge, the contribution of each component to prediction accuracy provides a clear and intuitive comparison.

After removing the CTA-based mapping module, the model’s accuracy dropped the most, indicating that the differences between the spatial-temporal characteristics of past and future STTs are significant. The mapping relationship is highly complex and diverse, requiring the design of effective modules to uncover these dynamic relationships. The prediction performance also declined after removing the Laplacian embedding and the De-confounding module, demonstrating the importance of incorporating the region’s position within the global spatial structure and accounting for the weights of the temporal and spatial characteristics of the STTs in improving prediction accuracy. The model’s accuracy also showed an evident decline after removing the spatial confounder and temporal confounder, even falling below that of relatively weaker STGNN models. This demonstrates that modeling the auxiliary information as STT characteristics is beneficial for enhancing the model’s predictive capability.

4.5.2 Hyperparameters Analysis

We further investigate the sensitivity of the model’s predictions to hyperparameter settings. Since our primary goal is to explore the stability of the model’s performance across different parameter ranges and demonstrate that the model’s effectiveness is not benefit by randomness, we selected hyperparameter ranges that vary around the optimal parameter value. We chose values in a reasonable scope, because extreme values were excluded from consideration by any method.

The hyperparameters explored include: (1)Laplacian Dimension: exploring the relationship between spatial structural information and model performance through the dimension of Laplacian eigenvalues; (2) Layers: exploring the relationship between model complexity, feature abstraction, and model performance through the number of STDC attention blocks; (3) Dimension: exploring the relationship between the representation space dimension and model performance through the dimension of the hidden representation space; (4) Attention Head: exploring the relationship between the self-attention mechanism and model performance through the number of attention heads. Taking the MAE of the prediction results as an example, the results are shown in Figure 13. Overall, the model exhibits stable performance across different hyperparameter settings, indicating a strong basic predictive ability. The impacts of different hyperparameters are as follows:

-

1.

The dimension of Laplacian eigenvalues. Since the Laplacian eigenvalues need to be concatenated with other spatial confounders, we selected values close to and lower than the dimension of the hidden representation space with a scope of [6,8,10,12]. The best performance was achieved when the value was set to 8, suggesting that at this point, the spatial position features and spatial semantic features of different regions might have reached an optimal balance.

-

2.

Number of STDC Attention Blocks. While increasing the number of layers theoretically enables the model to learn more abstract representations, excessive layers can make the model over-complex, reducing efficiency or even leading to overfitting. Therefore, the maximum number of layers was set to 6. Overall, the predictive ability of the model improved with more layers, with the MHT and BKL datasets achieving optimal performance at 5 and 6 layers, respectively. The optimal values on these two datasets show little difference, however, for more complex datasets with higher prediction difficulty, more layers are required.

-

3.

Dimension of the hidden representation space. Since the original dimensions of the time-series observations are relatively low, mapping them to excessively high hidden dimensions may lead to information sparsity. Therefore, we chose a range of [32,64,128]. The optimal dimension for the hidden representation space on two datasets was both 64, suggesting that when the original 2-dimensional flow feature is scaled to a 64-dimensional space, an optimal trade-off is achieved between information retention and feature extraction.

-

4.

Number of Attention Heads. Different attention heads can capture attention coefficients with different semantics. Following existing studies, we set the range to [1,2,4,8]. Multi-head attention outperformed single-head attention, with the MHT and BKL datasets achieving optimal performance at 8 and 4 heads, respectively. This indicates that the semantic complexity of the MHT dataset is higher than that of the BKL dataset, which may be strongly correlated with the prediction difficulty.

4.6 Analytical Experiments and Interpretation

To further investigate and explain the reasons behind the model’s strong performance, in addition to the zero-shot transfer experiment for spatial OOD regions (Section 4.4.2), we conducted a series of analytical experiments and provided a physical interpretation aligned with real-world scenes. To validate whether the spatial-temporal de-confounding based fusion approach improves the model’s prediction balance and mitigates the impact of data bias, we conducted a statistical analysis and comparison of prediction balance (Section 4.6.1). To demonstrate the advantages of the proposed model in real-world predictions, we performed an analysis and interpretation based on prediction visualization (Section 4.6.2). To further explore the significance of the two key issues in spatial-temporal prediction proposed in Section 3.1.1 and Section 3.1.2 in real-world tasks, as well as their physical meaning, we also conducted a case study of the research region (Section 4.6.3 and Section 4.6.4).

4.6.1 Prediction Balance

Balance between Inflow and outflow. Crowd flow prediction requires capturing both inflow and outflow characteristics of a region, making it a multi-feature prediction task. Different models perform differently on inflow and outflow predictions and often fail to achieve a balanced performance. This imbalance arises because inflow and outflow can exhibit distinct patterns for the same region. For instance, during working hours, a company may load higher inflow than outflow, while the opposite may occur during after-work hours. Models’ predictive imbalance across features may be raised by biases in the distribution of features within the dataset, causing the model’s favor of certain features. Figure 14 illustrates the relative MAE ratio of predicting inflow and outflow by different models. We observe that STDCformer achieves the most balanced performance between inflow and outflow predictions across both datasets. This balance may be attributed to the incorporation of spatial characteristics, which help in constructing a semantic profile of the region (e.g., functionality). These semantics act as common driving factors behind inflow and outflow variations, enabling the model to capture changes in these features more effectively.

Balance across regions and across timesteps. As shown in Figure 15, using MHT dataset as an example, we examine the distribution of the model’s performance across spatial and temporal dimensions for each model. We gathered the statistic of prediction metrics separately for different regions and time windows, and report the mean and standard deviation. Taking the difference in MAE as an example, it can be observed that STDCformer achieves the smallest standard deviation in prediction across both regions and time steps. This indicates that STDCformer is able to maintain the best balance across spatial and temporal dimensions. The underlying reason for this performance is likely the incorporation of spatial-temporal confounders’ information, followed by a de-confounding process. This approach allows the model to distinguish samples from different spatial and temporal contexts, rather than treating each spatial-temporal sample equally in the parameter fitting process.

4.6.2 Prediction Visualization

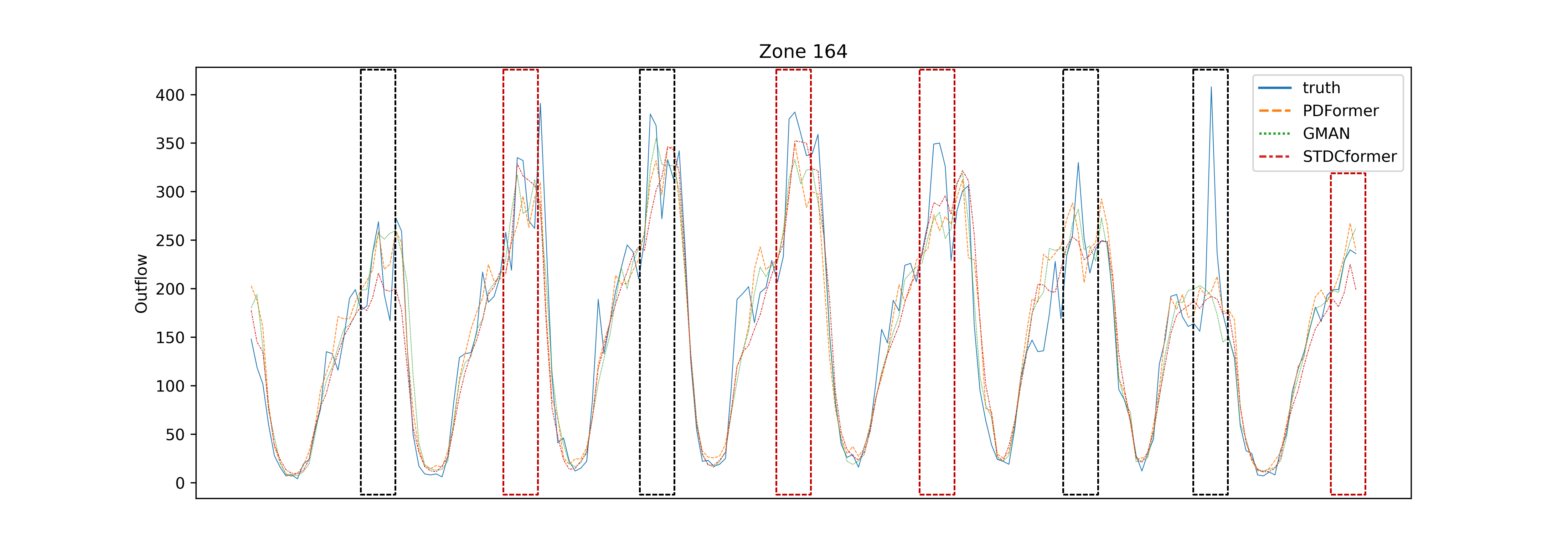

In general, all models perform well in capturing the upward and downward trends of crowd flow. However, STDCformer prefer to make predicted values within a relatively small range, likely due to the inherent restraint between the spatial confounder and temporal confounder . Because STDCformer places significant importance on spatial characteristics, which are considered stable, thus leading to more conservative predictions. For traffic flow peaks that last for over two hours (as indicated by the red dashed boxes in Figures 16 and Figures 17), STDCformer provides relatively accurate predictions. On the other hand, PDFormer and GMAN tend to predict values that exceed the actual peak. However, in the case of sudden increases followed by sharp decreases in crowd flow (as indicated by the black dashed boxes in Figures 16 and Figures 17), STDCformer struggles to make more accurate predictions for the peak. This observation indicates two points, on the one hand, STDCformer is less sensitive to temporal changes, and its ability to predict flow shifts caused by unexpected events is relatively weaker. On the other hand, when the traffic flow data contains errors or noise (such as incorrect passenger counts for taxi orders), STDCformer is better at filtering out the noise.

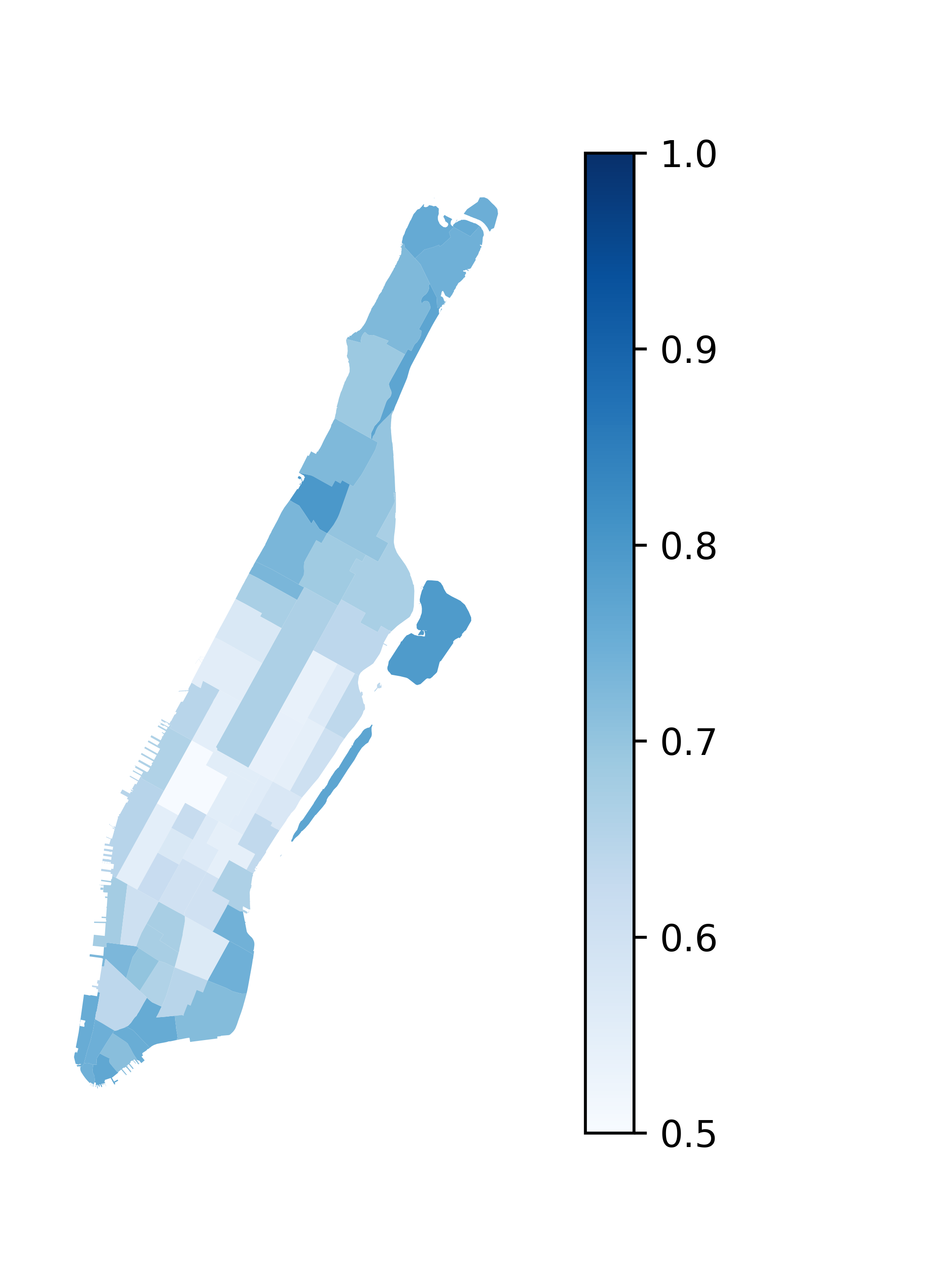

4.6.3 Physical Meaning of Confounders

To explore the physical meaning of the temporal and spatial confounders learned by the model, we analyzed the weights output from the spatial-temporal de-confounding layers. Here, represents the weight of the spatial confounder in the STTs. Based on the observed phenomena, we hypothesize and provide an interpretation of the role and potential implications of the learned confounders. From the perspective of de-confounding, higher weights indicate that these confounders represent a lower proportion of the original data. Therefore, higher weights are needed to model an ideal unbiased distribution. From the perspective of spatial-temporal data fusion, higher weights suggest that, under the original data distribution, the model needs to pay more attention to the information embedded within this type of confounders.

-

1.

Observation 1: For all regions, in the training dataset is no less than 0.5.

Guess: The original data distribution leads the model to be more biased toward capturing temporal representations without a de-confounding strategy. Introducing higher weight of allows the model to emphasize the role of spatial representations during the spatial-temporal fusion process.

Interpretation: When predicting future crowd flow, the model considers the differences between regions and utilizes the position of each region within the global urban context for prediction. The learned in this work not only captures the spatial characteristics of regions but also includes the inter-region connectivity, which preserves the structural relationships between spatial regions from both an attribute and a positional perspective. On one hand, highlights the importance of spatial information in spatial-temporal predictions, which is consistent with previous observations, such as the pre-experiment results from STGC-GNNs[50], which demonstrated the importance of spatial information, and the ablation study results from PDFormer[18], which also emphasizes this. On the other hand, also implies that , indicating that the temporal confounder has a higher weight in the original data distribution. This leads the model without de-confounding to rely more heavily on temporal features. The de-confounding process in this work adjusts this bias and corrects the overemphasis on temporal representations.

-

2.

Observation 2: When the IO value decreases, increases (as shown as Figure 18).

Guess: The enhancement of can serve as an effective buffer when the functional characteristics of a region diminish.