STDAN: Deformable Attention Network for Space-Time Video Super-Resolution

Abstract

The target of space-time video super-resolution (STVSR) is to increase the spatial-temporal resolution of low-resolution (LR) and low frame rate (LFR) videos. Recent approaches based on deep learning have made significant improvements, but most of them only use two adjacent frames, that is, short-term features, to synthesize the missing frame embedding, which cannot fully explore the information flow of consecutive input LR frames. In addition, existing STVSR models hardly exploit the temporal contexts explicitly to assist high-resolution (HR) frame reconstruction. To address these issues, in this paper, we propose a deformable attention network called STDAN for STVSR. First, we devise a long-short term feature interpolation (LSTFI) module, which is capable of excavating abundant content from more neighboring input frames for the interpolation process through a bidirectional RNN structure. Second, we put forward a spatial-temporal deformable feature aggregation (STDFA) module, in which spatial and temporal contexts in dynamic video frames are adaptively captured and aggregated to enhance SR reconstruction. Experimental results on several datasets demonstrate that our approach outperforms state-of-the-art STVSR methods. The code is available at https://github.com/littlewhitesea/STDAN.

Index Terms:

Deformable attention, space-time video super-resolution, feature interpolation, feature aggregation.I Introduction

The goal of space-time video super-resolution (STVSR) is to reconstruct photo-realistic high-resolution (HR) and high frame rate (HFR) videos from corresponding low-resolution (LR) and low frame rate (LFR) ones. STVSR methods have attracted much attention in the computer vision community since HR slow-motion videos provide more visually appealing content for viewers. Many traditional algorithms [8, 19, 3, 2, 4] are proposed to solve the STVSR task. However, due to their strict assumptions in their manually designed regularization, these methods mostly suffer from ubiquitous object and camera motions in videos.

In recent years, deep learning approaches have made great progress in diverse low-level visual tasks [53, 62, 31, 30, 9, 20, 26, 46]. Particularly, video super-resolution (VSR) [53, 38] and video frame interpolation (VFI) [31, 25] networks among these approaches can be combined together to tackle STVSR. Specifically, the VFI model interpolates the missing LR video frames. Then, the VSR model can be adopted to reconstruct HR frames. Nevertheless, the two-stage STVSR approaches usually have large model sizes, and the essential association between the temporal interpolation and spatial super-resolution is not explored.

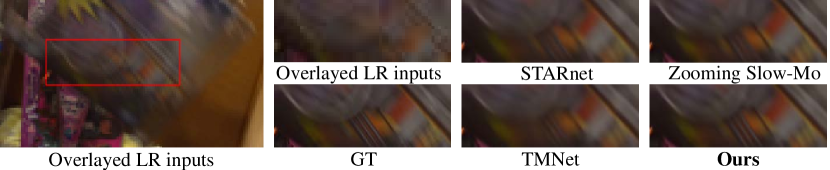

To build an efficient model and explore mutual information between temporal interpolation and spatial super-resolution, several one-stage STVSR networks [13, 36, 64, 65] are proposed. These approaches can simultaneously handle the space and time super-resolution of videos in diverse scenes. Most of them only leverage corresponding two adjacent frames for interpolating the missing frame feature. However, other neighboring input LR frames can also contribute to the interpolation process. In addition, existing one-stage STVSR networks are limited in fully exploiting spatial and temporal contexts among various frames for SR reconstruction. To alleviate these problems, in this paper, we propose a one-stage framework named STDAN for STVSR, which is superior to recent methods, illustrated in Fig. 1. The cores of STDAN are (1) a feature interpolation module known as Long-Short Term Feature Interpolation (LSTFI), and (2) a feature aggregation module known as Spatial-Temporal Deformable Feature Aggregation (STDFA).

The LSTFI module, composed of long-short term cells (LSTCs), utilizes a bidirectional RNN [37] structure to synthesize features for missing intermediate frames. Specifically, to interpolate the intermediate feature, we adopt the forward and backward deformable alignment [13] for dynamically sampling two neighboring frame features. Then, the preliminary intermediate feature in the current LSTC is mingled with the hidden state that contains long-term temporal context from previous LSTCs to obtain the final interpolated features.

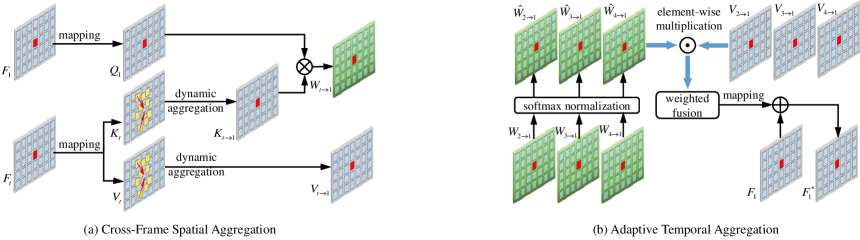

The STDFA module aims to capture spatial-temporal contexts among different frames to enhance SR reconstruction. To dynamically aggregate the spatial-temporal information, we propose to use deformable attention to adaptively discover and leverage relevant spatial and temporal information. The process of STDFA can be divided into two phases: cross-frame spatial aggregation and adaptive temporal aggregation. Through deformable attention, the cross-frame spatial aggregation phase dynamically fuses useful content from different frames. The adaptive temporal aggregation phase mixes the temporal contexts among these fused frame features further to acquire enhanced features.

The contributions of this work are three-fold: (1) We design a deformable attention network (STDAN) to deal with STVSR. Our STDAN with fewer parameters achieves state-of-the-art performance on multiple datasets; (2) We propose a long-short term feature interpolation module, where abundant information from more neighboring frames are explored for the interpolation process of missing frame features; (3) We put forward a spatial-temporal deformable feature aggregation module, which can dynamically capture spatial and temporal contexts among video frames for enhancing features to reconstruct HR frames.

II Related Work

In this section, we discuss some relevant works on video super-resolution, video frame interpolation, and space-time video super-resolution.

Video Super-Resolution. The goal of video super-resolution (VSR) [55, 38, 44, 48] is to generate temporally coherent high-resolution (HR) videos from corresponding low-resolution (LR) ones. Since input LR video frames are consecutive, many researchers focus on how to aggregate the temporal contexts from the neighboring frames for super-resolving the reference frame. Several VSR approaches [32, 24, 23, 51, 52] adopt optical flow to align the reference frame with neighboring video frames. Nevertheless, the estimated optical flow may be inaccurate due to the occlusion and fast motions, leading to poor reconstruction results. To avoid using optical flow, deformable convolution [39, 40] is applied in [53, 54, 38] to perform the temporal alignment in a feature space. In addition, Li et al. [43] established a multi-correspondence aggregation network to exploit similar patches between and within frames. Dynamic filters [49] and non-local [42, 47] modules are also exploited to aggregate the temporal information.

Video Frame Interpolation. Video frame interpolation (VFI) [29, 28, 27, 31, 62] aims to synthesize the missing intermediate frame with two adjacent video frames, which is extensively used in slow-motion video generation. Specifically, for generating the intermediate frame, U-Net structure modules [25] are employed to compute optical flows and visibility maps between two input frames. To cope with occlusion in VFI, contextual features [21] are further introduced into the interpolation process. Furthermore, Bao et al. [31] proposed a depth-aware module to detect occlusions explicitly for VFI. On the other hand, unlike most VFI methods using optical flow, Niklaus et al. [22, 30] adopted the adaptive convolution to predict kernels directly and then leveraged these kernels to estimate pixels of the intermediate video frame. Recently, attention mechanism [31] and deformable convolution [57, 28] are explored.

Space-Time Video Super-Resolution. Compared to video super-resolution, space-time video super-resolution (STVSR) needs to implement super-resolution in time and space dimensions. Due to strict assumptions and manual regularization, conventional STVSR methods [19, 3, 4] cannot effectively process the spatial-temporal super-resolution of sophisticated LR input videos. In recent years, significant advances have been made from the deep neural network (DNN). Through merging VSR and VFI into a joint framework, Kang et al. [64] put forward a DNN model for STVSR. To exploit mutually informative relationships between time and space dimensions, STARnet [66] with an extra optical flow branch is proposed to generate HR slow-motion videos. In addition, Xiang et al. [13] developed a deformable ConvLSTM [58] module, which can achieve sequence-to-sequence (S2S) learning in STVSR. Base on [13], Xu et al. [36] proposed a temporal modulation block to perform controllable STVSR. Recently, Geng et al. [65] proposed a STVSR network based on Swin Transformer. However, most of them only leverage two adjacent frame features to interpolate the intermediate frame feature, and they hardly explore spatial and temporal contexts explicitly among video frames. To address these problems, we propose a spatial-temporal deformable network (1) to use more content from input LR frames for the interpolation process and (2) employ deformable attention to dynamically capture spatial-temporal contexts for HR frame reconstruction.

III Our Method

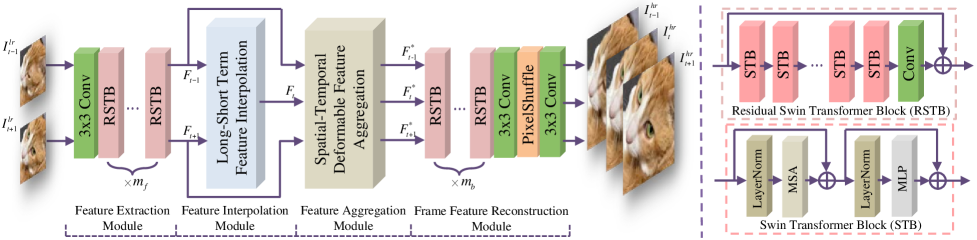

The architecture of our proposed network is illustrated in Fig. 2, which consists of four parts: feature extraction module, long-short term feature interpolation (LSTFI) module, spatial-temporal deformable feature aggregation (STDFA) module and frame feature reconstruction module. Given a low-resolution (LR) and low frame rate (LFR) video with frames: , our STDAN can generate consecutive high-resolution (HR) and high frame rate (HFR) frames: . The structure of each module is described in the following.

III-A Frame Feature Extraction

We first use a convolutional layer in the feature extraction module to get shallow features from the input LR video frames. Considering that these shallow features lack long-range spatial information due to the locality of the naive convolutional layer, which may cause poor quality in the next feature interpolation module. We hope to extract these shallow features further to establish the correlation between two distant locations.

Recently, Transformer-based models have realized good performance in computer vision [5, 7, 6, 10] owing to the strong capacity of Transformer to model long-range dependency. However, the computation cost of self-attention in Transformer is high, which limits its extensive application in video-related tasks. To overcome the drawback, Liu et al. [11] put forward Swin Transformer block (STB) to achieve linear computational complexity with respect to image size. Based on efficient and effective STB [11], Liang et al. [12] proposed residual Swin Transformer block (RSTB) to construct SwinIR for image restoration. Thanks to the powerful ability to model long-range dependency of RSTB, SwinIR [12] obtains state-of-the-art (SOTA) performance compared with CNN-based methods. In this paper, to acquire features that capture long-range spatial information, we also use RSTBs [12] to extract shallow features further, illustrated in Fig. 2. We can see that the RSTB is a residual block with several STBs and one convolutional layer. In addition, given a tensor as input, the detailed process of STB to output is formulated as:

| (1) |

where MSA and denotes multi-head self-attention module and intermediate results, respectively.

III-B Long-Short Term Feature Interpolation

To implement the super-resolution in the time dimension, we also utilize a feature interpolation module to synthesize the intermediate frames in the LR feature space, like [13, 36]. Specifically, given the two extracted features: and , the feature interpolation module can synthesize the feature corresponding to the missing frame . Generally, to obtain the intermediate feature, we should capture the pixel-wise motion information first. Optical flow is usually adopted to estimate the motion between video frames. However, there are several shortcomings in using optical flow for interpolation. The computational cost is high to calculate optical flow precisely, and estimated optical flow may be inaccurate due to the occlusion or motion blur, which causes poor interpolation results.

Considering the drawback of optical flow, Xiang et al. [13] employed multi-level deformable convolution [38] to perform frame feature interpolation. The learned offset used in deformable convolution can implicitly capture forward and backward motion information and achieve good performance. However, the synthesis of intermediate frame feature [13, 36] only utilizes the two neighboring frame features, which cannot fully explore the information from the other input frames to assist in the process. Unlike feature interpolation in previous STVSR algorithms [13, 36], we propose a long-short term feature interpolation (LSTFI) module to realize the intermediate frame in our STDAN, which is capable of exploiting helpful information from more input frames.

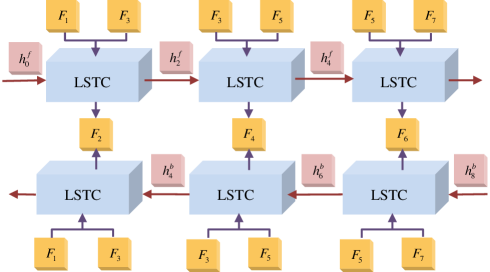

As illustrated in Fig. 3, we adopt a bidirectional recurrent neural network (RNN) [37] to construct the LSTFI module, which consists of two branches in forward and backward directions. Take the forward branch as an example. Two neighboring frame features and the hidden state from the previous long-short term cell (LSTC) are fed into each LSTC, and then the LSTC generates the corresponding intermediate frame feature and current hidden state used for subsequent LSTC. Here, the two neighboring frame features and hidden state serve as short-term and long-term information for the intermediate feature, respectively. However, each branch’s hidden state only considers the unidirectional information flow. To fully mine the information flow of these frame features for the interpolation procedure, we fuse interpolation results from LSTCs in the forward and backward branches to acquire the final intermediate frame feature.

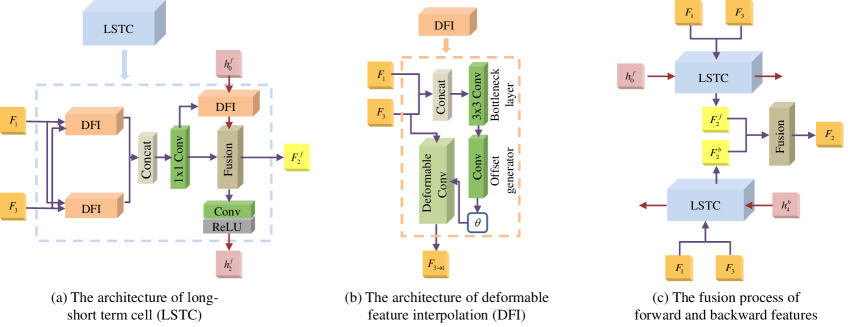

The architecture of LSTC and the fusion process are shown in Fig. 4. Given two neighboring frame features and , we employ deformable feature interpolation (DFI) block [13] to capture the forward and backward motion between the two features implicitly. For simplification, we take the feature that has experienced backward motion compensation as an example. As illustrated in Fig. 4(b), the two frame features are concatenated along channel dimension, and then pass through offset generation function to predict an offset with backward motion information:

| (2) |

where consists of convolutional layers, and denotes the concatenation along channel dimension. With the learned offset, we adopt deformable convolution [40] as motion compensation function to obtain compensated feature:

| (3) |

where denotes the operation of deformable convolution.

To blend the features and that have experienced forward and backward motion compensation respectively, a convolutional layer is applied, which can perform pixel-level linear weighting to achieve preliminary interpolation feature . Note that the acquisition of feature only utilizes the short-term information. In order to combine long-term information , the hidden state from the previous LSTC, we first use the other DFI block to align with the current feature , since there may be some misalignment. The process is expressed as:

| (4) |

where indicates the operation of DFI block. At the end of LSTC, we apply a fusion function into aligned hidden state and preliminary interpolation result to obtain forward intermediate feature:

| (5) |

where refers to the fusion function. Then, the intermediate feature passes through a convolutional layer and an activation layer in sequence to produce hidden state for the subsequent LSTC.

For fully exploring the whole input frame features for interpolation, the bidirectional RNN structure is utilized in our LSTFI module, so we fuse the forward intermediate feature and backward intermediate feature to get the final intermediate frame feature , shown in Fig. 4(c).

III-C Spatial-Temporal Deformable Feature Aggregation

With the assistance of the LSTFI module, we now have frame features, where the generation of intermediate frame features combines their adjacent frame features with hidden states. Although the hidden states can introduce certain temporal information, the whole interpolation procedure hardly explicitly explores the temporal information between various frames. In addition, the input frame features are merely processed independently in the feature extraction module. However, these frame features are consecutive, which means there are abundant temporal content without being exploited among these features.

For a feature vector whose location is on feature , the simplest method to aggregate temporal information is adaptive fusion with the feature vector on the same location from the other frame features. However, the aggregation approach has several drawbacks. Generally, the corresponding point on other frame features may not be on the same location due to inter-frame motion. Furthermore, there are multiple helpful feature vectors for from each of the frame features. Based on the above analysis, we propose a spatial-temporal deformable feature aggregation (STDFA) module to mix cross-frame spatial information adaptively and capture the long-range temporal information.

Specifically, we utilize the STDFA module to learn the residual auxiliary information from the remaining frame features for each frame feature . As presented in Fig. 5, the processing of the STDFA module can be divided into two parts: spatial aggregation and temporal aggregation. To adaptively fuse cross-frame spatial content of frame feature from the other frame features, we perform deformable attention to each pair: and (, ). In detail, frame feature passes through a linear layer to get embedded feature . Similarly, frame feature is fed into two linear layers to obtain embedded features and , respectively.

To implement deformable attention between and , we first predict the offset map:

| (6) |

where indicates offset generation function consisting of several convolutional layers with kernel. The offset map at position is expressed as:

| (7) |

Then the offsets are combined with pre-specified sampling locations to perform deformable sampling. Here, we denote the pre-specified sampling location as , and the value set of of kernel is defined as:

| (8) |

where denotes rounding down function.

With the offsets , the embedded feature vector can attend related points in . Nevertheless, not all the information of these points is helpful for . In addition, each point on embedded feature needs to search points, which inevitably causes a large storage occupation. To avoid irrelevant points and reduce storage occupation, we only choose the first points that are most relevant. To select the points, we calculate the inner product between two embedded feature vectors as the relevance score:

| (9) |

The larger the score, the more relevant the two points are. According to this criterion, we can determine the points. In the following, to distinguish the selected points from original points, we denote the pre-specified sampling location and learned offset of the points as and , respectively.

To adaptively mingle the spatial information from the locations for each embedded feature vector , we first adopt softmax function to calculate the weight of these points:

| (10) |

Then, with the weights and the embedded feature vector , we can obtain corresponding updated embedded feature vector:

| (11) |

Same as , the updated vector can be also achieved with the weight . Finally, we calculate the updated relevant weight map at each position between and for the following temporal aggregation:

| (12) |

To capture the temporal contexts of frame feature vector from the remaining features, we also utilize softmax function to adaptively aggregating feature vectors . Specifically, the normalized temporal weight of each vector (, ) is expressed as:

| (13) |

Then, through fusing embedded feature vector (, ) with the corresponding normalized weight, we can attain the embedded feature that aggregates the spatial and temporal contexts from other embedded features. The weighted fusion process is defined as:

| (14) |

In the tail of STDFA module, the embedded feature is sent into a linear layer to acquire the residual auxiliary feature . Finally, we add frame feature and residual auxiliary feature to get the enhanced feature that aggregates spatial and temporal contexts from the other frame features.

| VFI | (V)SR | Vid4 | SPMC-11 | Vimeo-Slow | Vimeo-Medium | Vimeo-Fast | Speed | Parameters | |||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Method | Method | PSNR | SSIM | PSNR | SSIM | PSNR | SSIM | PSNR | SSIM | PSNR | SSIM | FPS | (Million) |

| SuperSloMo | Bicubic | 22.84 | 0.5772 | 24.91 | 0.6874 | 28.37 | 0.8102 | 29.94 | 0.8477 | 31.88 | 0.8793 | - | 19.8 |

| SuperSloMo | RCAN | 23.78 | 0.6385 | 26.50 | 0.7527 | 30.69 | 0.8624 | 32.50 | 0.8884 | 34.52 | 0.9076 | 2.49 | 19.8+16.0 |

| SuperSloMo | RBPN | 24.00 | 0.6587 | 26.14 | 0.7582 | 30.48 | 0.8584 | 32.79 | 0.8930 | 34.73 | 0.9108 | 2.06 | 19.8+12.7 |

| SuperSloMo | EDVR | 24.22 | 0.6700 | 26.46 | 0.7689 | 30.99 | 0.8673 | 33.85 | 0.8967 | 35.05 | 0.9136 | 6.85 | 19.8+20.7 |

| SepConv | Bicubic | 23.51 | 0.6273 | 25.67 | 0.7261 | 29.04 | 0.8290 | 30.61 | 0.8633 | 32.27 | 0.8890 | - | 21.7 |

| SepConv | RCAN | 24.99 | 0.7259 | 28.16 | 0.8226 | 32.13 | 0.8967 | 33.59 | 0.9125 | 34.97 | 0.9195 | 2.42 | 21.7+16.0 |

| SepConv | RBPN | 25.75 | 0.7829 | 28.65 | 0.8614 | 32.77 | 0.9090 | 34.09 | 0.9229 | 35.07 | 0.9238 | 2.01 | 21.7+12.7 |

| SepConv | EDVR | 25.89 | 0.7876 | 28.86 | 0.8665 | 32.96 | 0.9112 | 34.22 | 0.9240 | 35.23 | 0.9252 | 6.36 | 21.7+20.7 |

| DAIN | Bicubic | 23.55 | 0.6268 | 25.68 | 0.7263 | 29.06 | 0.8289 | 30.67 | 0.8636 | 32.41 | 0.8910 | - | 24.0 |

| DAIN | RCAN | 25.03 | 0.7261 | 28.15 | 0.8224 | 32.26 | 0.8974 | 33.82 | 0.9146 | 35.27 | 0.9242 | 2.23 | 24.0+16.0 |

| DAIN | RBPN | 25.76 | 0.7783 | 28.57 | 0.8598 | 32.92 | 0.9097 | 34.45 | 0.9262 | 35.55 | 0.9300 | 1.88 | 24.0+12.7 |

| DAIN | EDVR | 25.90 | 0.7830 | 28.77 | 0.8649 | 33.11 | 0.9119 | 34.66 | 0.9281 | 35.81 | 0.9323 | 5.20 | 24.0+20.7 |

| STARnet | 25.99 | 0.7819 | 29.04 | 0.8509 | 33.10 | 0.9164 | 34.86 | 0.9356 | 36.19 | 0.9368 | 14.08 | 111.61 | |

| Zooming Slow-Mo | 26.14 | 0.7974 | 28.80 | 0.8635 | 33.36 | 0.9138 | 35.41 | 0.9361 | 36.81 | 0.9415 | 16.50 | 11.10 | |

| RSTT | 26.20 | 0.7991 | 28.86 | 0.8634 | 33.50 | 0.9147 | 35.66 | 0.9381 | 36.80 | 0.9403 | 15.36 | 7.67 | |

| TMNet | 26.23 | 0.8011 | 28.78 | 0.8640 | 33.51 | 0.9159 | 35.60 | 0.9380 | 37.04 | 0.9435 | 14.69 | 12.26 | |

| STDAN (Ours) | 26.28 | 0.8041 | 28.94 | 0.8687 | 33.66 | 0.9176 | 35.70 | 0.9387 | 37.10 | 0.9437 | 13.80 | 8.29 | |

III-D High-Resolution Frame Reconstruction

To reconstruct HR frames from the enhanced features , we first employ RSTBs [12] to map feature to deep feature . Then, these deep features further pass through an upsampling module to realize the HR video frames . Specifically, the upsampling module consists of the PixelShuffle layer [16] and several convolutional layers. For optimizing our proposed network, we adopt the Charbonnier function [17] as the reconstruction loss:

| (15) |

where indicates the ground truth of -th reconstructed video frame , and the value of is empirically set to . With the loss function, our STDAN can be end-to-end trained to generate HR slow-motion videos from corresponding LR and LFR counterparts.

IV Experiments

In this section, we first introduce the datasets and evaluation metrics used in our experiments. Then, the implementation details of our STDAN are elaborated. Next, we compare our proposed network with state-of-the-art methods on public datasets. Finally, we carry out ablation studies to investigate the effect of the modules adopted in our STDAN.

IV-A Datasets and Evaluation Metrics

Datasets We use the Vimeo-90K dataset [32] to train our network. Specifically, the Vimeo-90K dataset consists of more than 60,000 training video sequences, and each video sequence has seven frames. We adopt the raw seven frames as our HR and HFR supervisions. The corresponding four LR and LFR frames are downscaled by a factor of 4 with bicubic sampling from these odd-numbers ones. The Vimeo-90K also provides corresponding testsets that can be divided into Vimeo-Slow, Vimeo-Medium and Vimeo-Fast according to the degree of motion. The three testsets serve as the evaluation datasets in our experiments. Same as STVSR methods [13, 36], six video sequences in Vimeo-Medium testset and three sequences in Vimeo-Slow testset are removed to avoid infinite values on PSNR. In addition, we report the results on Vid4 [33] and SPMC-11 [23] of different approaches.

Evaluation Metrics To compare diverse STVSR networks quantitatively, Peak Signal to Noise Ratio (PSNR) and Structural SIMilarity (SSIM) [34] are adopted in our experiments as evaluation metrics. In this paper, we calculate the PSNR and SSIM metrics on the Y channel of the YCbCr color space. In addition, we also compare the parameters and inference speed of various models.

IV-B Implementation Details

In our STDAN, the number of RSTBs in the feature extraction module and frame feature reconstruction module is 2 and 6, respectively, where each RSTB contains 6 STBs. In addition, the number of feature and embedded feature channels are set to be 64 and 72 separately. In the LSTFI module, we utilize a Pyramid, Cascading, and Deformable (PCD) structure in [38] to achieve DFI. The hidden states in the forward and backward branches are initialized to zeros. In the STDFA module, the value of and are set to 3 and 2, respectively. We augment the training frames by randomly flipping horizontally and rotations during the training process. Then, we crop the input LR frames with a size of at random to the network, and the batch size is set to be 18. Our model is trained by Adam [35] optimizer by setting and . We employ cosine annealing to decay the learning rate [41] from to . We implement the STDAN with PyTorch and train our model on 6 NVIDIA GTX-1080Ti GPUs.

IV-C Comparison with State-of-the-art Methods

We compare our STDAN with existing state-of-the-art (SOTA) one-stage STVSR approaches: STARnet [66], Zooming Slow-Mo [13], RSTT [65] and TMNet [36]. In addition, we also compare the performance of our network with SOTA two-stage STVSR algorithms, like Zooming Slow-Mo [13] and TMNet [36]. Specifically, two-stage STVSR methods are composed of SOTA VFI and SR algorithms. These VFI networks are SuperSloMo [25], SepConv [30] and DAIN [31], respectively, while SOTA SR approaches are RCAN [56], RBPN [52] and EDVR [38].

Quantitative results of various STVSR methods are shown in Table I. From the table, we can see that: (1) Our STDAN with fewer parameters obtains SOTA performance on both Vid4 [33] and Vimeo [32]; (2) For the SPMC-11 [23] dataset, our model is only 0.1dB lower than STARnet [66] in terms of PSNR, but our STDAN acquires better results than it on SSIM [34] index, which demonstrates our network can recover more correct structures. In addition, our model only needs one thirteenth parameters of STARnet.

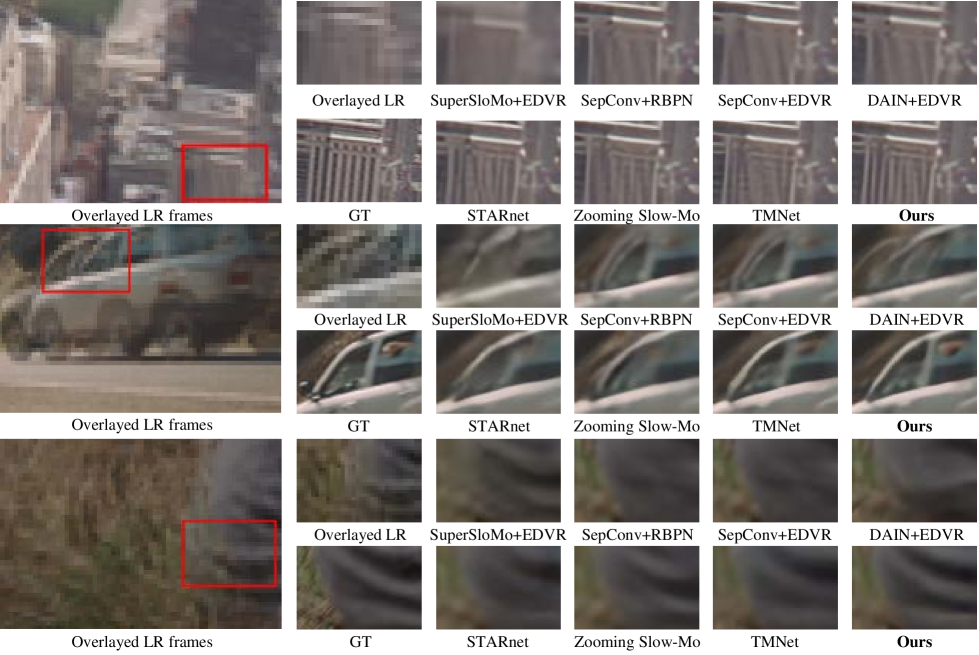

Visual comparison of different models are displayed in Fig. 6. We observe that our STDAN, with the proposed LSTFI and STDFA modules, restores more accurate structures and fewer motion blurs compared with other STVSR approaches, which confirms the higher value on PSNR and SSIM achieved by our model.

IV-D Ablation Study

To investigate the effect of the proposed modules in our STDAN, we conduct comprehensive ablation studies in this section.

| Method | ||||||

| Parameters (M) | 5.44 | 5.54 | 5.54 | 5.82 | 8.29 | |

| Feature Interpolation | Short-term feature interpolation | ✓ | ✓ | ✓ | ✓ | |

| Long-short term feature interpolation | ✓ | |||||

| Feature Aggregation | STFA in a 1x1 fixed window | ✓ | ||||

| STFA in a 3x3 fixed window | ✓ | |||||

| STFA in a deformable window | ✓ | ✓ | ||||

| Vid4 (slow motion) | 25.27 | 25.69 | 25.85 | 25.97 | 26.28 | |

| Vimeo-Fast (fast motion) | 35.88 | 36.22 | 36.41 | 36.63 | 37.10 | |

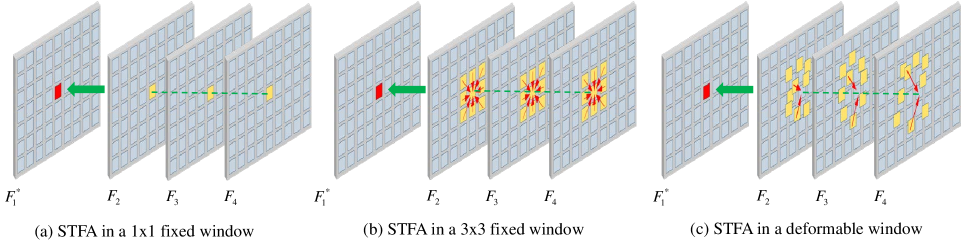

Feature Aggregation To valid the effect of the proposed spatial-temporal deformable feature aggregation (STDFA) module, we establish a baseline: model . It only adopts short-term information to perform interpolation, and then directly reconstructs HR video frames through the frame feature reconstruction module without feature aggregation process. In contrast, we compare three different models: , and with feature aggregation. For the spatial-temporal feature aggregation process in the model , illustrated in Fig. 7(a), each feature vector aggregates the information at the same position of other frame features, that is, the feature vector attends the valuable spatial content in a window. We enlarge the window size of the model to 3. Considering large motions between frames. A deformable window is applied in the model . As shown in Fig. 7(c), model adopts the STDFA module to perform feature aggregation.

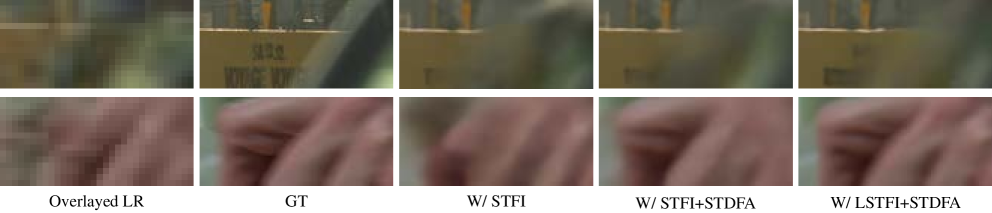

Quantitative results on Vid4 [33] and Vimeo-Fast [32] datasets are shown in Table II. From the table, we know that: (1) Feature aggregation module can improve the reconstruction results; (2) The larger the spatial range of feature aggregation, the more useful information can be captured to enhance recovery quality of HR frames. Qualitative results of the three models are represented in Fig. 8, which confirms the feature aggregation in the deformable window can acquire more helpful content.

Feature Interpolation To investigate the effect of the proposed long-short term feature interpolation (LSTFI) module, we compare two models: and . As shown in Fig. 3, the model with LSTFI can exploit short-term information of two neighboring frames and long-term information of hidden states from other LSTCs. In comparison, model only uses two adjacent frames to interpolate the feature of the intermediate frame. From Table II, combining long-term and short-term information can achieve better feature interpolation results, which leads to high-quality HR frames with more details, as illustrated in Fig. 9.

Efficiency of selecting the first points We also investigate the efficiency of determining the first points in our STDFA module. Specifically, the model’s inference time of each Vimeo sequence without/with the keypoint selection are 0.542s/0.543s, which demonstrates that the utilization of the keypoint selection in our STDFA module cannot lead to a significant increase in the inference time of the model.

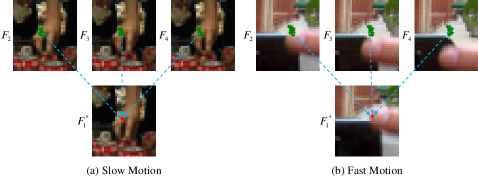

V Failure Analysis

Although our method can outperform existing SOTA methods, it is not perfect especially when handling fast-motion videos. As shown in Fig. 10, we found that our deformable attention might sample wrong locations when video motions are fast. The key reason is that the predicted deformable offsets cannot accurately capture relevant visual contexts due to the large motions.

VI Conclusion

In this paper, we propose a deformable attention network called STDAN for STVSR. Our STDAN can utilize more input video frames for the interpolation process. In addition, the network adopts deformable attention to dynamically capture spatial and temporal contexts among frames to enhance SR reconstruction. Thanks to the LSTFI and STDFA modules, our model demonstrates superior performance to recent SOTA STVSR approaches on public datasets.

References

- [1] W. Yang, X. Zhang, Y. Tian, W. Wang, J.-H. Xue, and Q. Liao, “Deep learning for single image super-resolution: A brief review,” IEEE Transactions on Multimedia, vol. 21, no. 12, pp. 3106–3121, 2019.

- [2] U. Mudenagudi, S. Banerjee, and P. K. Kalra, “Space-time super-resolution using graph-cut optimization,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 33, no. 5, pp. 995–1008, 2010.

- [3] H. Takeda, P. v. Beek, and P. Milanfar, “Spatiotemporal video upscaling using motion-assisted steering kernel (mask) regression,” in High-Quality Visual Experience. Springer, 2010, pp. 245–274.

- [4] O. Shahar, A. Faktor, and M. Irani, Space-time super-resolution from a single video. IEEE, 2011.

- [5] N. Carion, F. Massa, G. Synnaeve, N. Usunier, A. Kirillov, and S. Zagoruyko, “End-to-end object detection with transformers,” in European Conference on Computer Vision. Springer, 2020, pp. 213–229.

- [6] A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, M. Dehghani, M. Minderer, G. Heigold, S. Gelly et al., “An image is worth 16x16 words: Transformers for image recognition at scale,” arXiv preprint arXiv:2010.11929, 2020.

- [7] M. Chen, H. Peng, J. Fu, and H. Ling, “Autoformer: Searching transformers for visual recognition,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 12 270–12 280.

- [8] T. Li, X. He, Q. Teng, Z. Wang, and C. Ren, “Space–time super-resolution with patch group cuts prior,” Signal Processing: Image Communication, vol. 30, pp. 147–165, 2015.

- [9] L. Zhang, J. Nie, W. Wei, Y. Li, and Y. Zhang, “Deep blind hyperspectral image super-resolution,” IEEE Transactions on Neural Networks and Learning Systems, vol. 32, no. 6, pp. 2388–2400, 2020.

- [10] B. Yan, H. Peng, J. Fu, D. Wang, and H. Lu, “Learning spatio-temporal transformer for visual tracking,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 10 448–10 457.

- [11] Z. Liu, Y. Lin, Y. Cao, H. Hu, Y. Wei, Z. Zhang, S. Lin, and B. Guo, “Swin transformer: Hierarchical vision transformer using shifted windows,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 10 012–10 022.

- [12] J. Liang, J. Cao, G. Sun, K. Zhang, L. Van Gool, and R. Timofte, “Swinir: Image restoration using swin transformer,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 1833–1844.

- [13] X. Xiang, Y. Tian, Y. Zhang, Y. Fu, J. P. Allebach, and C. Xu, “Zooming slow-mo: Fast and accurate one-stage space-time video super-resolution,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 3370–3379.

- [14] C. You, L. Han, A. Feng, R. Zhao, H. Tang, and W. Fan, “Megan: Memory enhanced graph attention network for space-time video super-resolution,” in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2022, pp. 1401–1411.

- [15] K. C. Chan, X. Wang, K. Yu, C. Dong, and C. C. Loy, “Understanding deformable alignment in video super-resolution,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35, no. 2, 2021, pp. 973–981.

- [16] W. Shi, J. Caballero, F. Huszár, J. Totz, A. P. Aitken, R. Bishop, D. Rueckert, and Z. Wang, “Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 1874–1883.

- [17] W.-S. Lai, J.-B. Huang, N. Ahuja, and M.-H. Yang, “Deep laplacian pyramid networks for fast and accurate super-resolution,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 624–632.

- [18] C. Dong, C. C. Loy, K. He, and X. Tang, “Image super-resolution using deep convolutional networks,” IEEE transactions on pattern analysis and machine intelligence, vol. 38, no. 2, pp. 295–307, 2015.

- [19] E. Shechtman, Y. Caspi, and M. Irani, “Space-time super-resolution,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 27, no. 4, pp. 531–545, 2005.

- [20] M. Tassano, J. Delon, and T. Veit, “Fastdvdnet: Towards real-time deep video denoising without flow estimation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 1354–1363.

- [21] S. Niklaus and F. Liu, “Context-aware synthesis for video frame interpolation,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 1701–1710.

- [22] S. Niklaus, L. Mai, and F. Liu, “Video frame interpolation via adaptive convolution,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 670–679.

- [23] X. Tao, H. Gao, R. Liao, J. Wang, and J. Jia, “Detail-revealing deep video super-resolution,” in Proceedings of the IEEE International Conference on Computer Vision, 2017, pp. 4472–4480.

- [24] J. Caballero, C. Ledig, A. Aitken, A. Acosta, J. Totz, Z. Wang, and W. Shi, “Real-time video super-resolution with spatio-temporal networks and motion compensation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 4778–4787.

- [25] H. Jiang, D. Sun, V. Jampani, M.-H. Yang, E. Learned-Miller, and J. Kautz, “Super slomo: High quality estimation of multiple intermediate frames for video interpolation,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 9000–9008.

- [26] X. Zhang, R. Jiang, T. Wang, and J. Wang, “Recursive neural network for video deblurring,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 31, no. 8, pp. 3025–3036, 2020.

- [27] J. Park, K. Ko, C. Lee, and C.-S. Kim, “Bmbc: Bilateral motion estimation with bilateral cost volume for video interpolation,” in European Conference on Computer Vision. Springer, 2020, pp. 109–125.

- [28] H. Lee, T. Kim, T.-y. Chung, D. Pak, Y. Ban, and S. Lee, “Adacof: Adaptive collaboration of flows for video frame interpolation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 5316–5325.

- [29] W. Bao, W.-S. Lai, X. Zhang, Z. Gao, and M.-H. Yang, “Memc-net: Motion estimation and motion compensation driven neural network for video interpolation and enhancement,” IEEE transactions on pattern analysis and machine intelligence, vol. 43, no. 3, pp. 933–948, 2019.

- [30] S. Niklaus, L. Mai, and F. Liu, “Video frame interpolation via adaptive separable convolution,” in 2017 IEEE International Conference on Computer Vision (ICCV). Venice, Italy: IEEE, 2017, pp. 261–270.

- [31] W. Bao, W.-S. Lai, C. Ma, X. Zhang, Z. Gao, and M.-H. Yang, “Depth-aware video frame interpolation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Long Beach, CA, USA: IEEE, 2019, pp. 3698–3707.

- [32] T. Xue, B. Chen, J. Wu, D. Wei, and W. T. Freeman, “Video enhancement with task-oriented flow,” International Journal of Computer Vision, vol. 127, no. 8, pp. 1106–1125, 2019.

- [33] C. Liu and D. Sun, “On bayesian adaptive video super resolution,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 36, no. 2, pp. 346–360, 2013.

- [34] Z. Wang, A. Bovik, H. Sheikh, and E. Simoncelli, “Image quality assessment: from error visibility to structural similarity,” IEEE Transactions on Image Processing, vol. 13, no. 4, pp. 600–612, 2004.

- [35] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [36] G. Xu, J. Xu, Z. Li, L. Wang, X. Sun, and M.-M. Cheng, “Temporal modulation network for controllable space-time video super-resolution,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 6388–6397.

- [37] M. Schuster and K. K. Paliwal, “Bidirectional recurrent neural networks,” IEEE transactions on Signal Processing, vol. 45, no. 11, pp. 2673–2681, 1997.

- [38] X. Wang, K. C. Chan, K. Yu, C. Dong, and C. Change Loy, “Edvr: Video restoration with enhanced deformable convolutional networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. Long Beach, CA, USA: IEEE, 2019, pp. 1954–1963.

- [39] J. Dai, H. Qi, Y. Xiong, Y. Li, G. Zhang, H. Hu, and Y. Wei, “Deformable convolutional networks,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 764–773.

- [40] X. Zhu, H. Hu, S. Lin, and J. Dai, “Deformable convnets v2: More deformable, better results,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 9308–9316.

- [41] I. Loshchilov and F. Hutter, “Sgdr: Stochastic gradient descent with warm restarts,” arXiv preprint arXiv:1608.03983, 2016.

- [42] P. Yi, Z. Wang, K. Jiang, J. Jiang, and J. Ma, “Progressive fusion video super-resolution network via exploiting non-local spatio-temporal correlations,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 3106–3115.

- [43] W. Li, X. Tao, T. Guo, L. Qi, J. Lu, and J. Jia, “Mucan: Multi-correspondence aggregation network for video super-resolution,” in European Conference on Computer Vision. Springer, 2020, pp. 335–351.

- [44] A. Kappeler, S. Yoo, Q. Dai, and A. K. Katsaggelos, “Video super-resolution with convolutional neural networks,” IEEE transactions on computational imaging, vol. 2, no. 2, pp. 109–122, 2016.

- [45] B. Bare, B. Yan, C. Ma, and K. Li, “Real-time video super-resolution via motion convolution kernel estimation,” Neurocomputing, vol. 367, pp. 236–245, 2019.

- [46] Y. Zheng, X. Yu, M. Liu, and S. Zhang, “Single-image deraining via recurrent residual multiscale networks,” IEEE transactions on neural networks and learning systems, 2020.

- [47] H. Wang, D. Su, C. Liu, L. Jin, X. Sun, and X. Peng, “Deformable non-local network for video super-resolution,” IEEE Access, vol. 7, pp. 177 734–177 744, 2019.

- [48] K. C. Chan, X. Wang, K. Yu, C. Dong, and C. C. Loy, “Basicvsr: The search for essential components in video super-resolution and beyond,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 4947–4956.

- [49] Y. Jo, S. W. Oh, J. Kang, and S. J. Kim, “Deep video super-resolution network using dynamic upsampling filters without explicit motion compensation,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 3224–3232.

- [50] S. Li, F. He, B. Du, L. Zhang, Y. Xu, and D. Tao, “Fast spatio-temporal residual network for video super-resolution,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 10 522–10 531.

- [51] L. Wang, Y. Guo, Z. Lin, X. Deng, and W. An, “Learning for video super-resolution through hr optical flow estimation,” in Asian Conference on Computer Vision. Springer, 2018, pp. 514–529.

- [52] M. Haris, G. Shakhnarovich, and N. Ukita, “Recurrent back-projection network for video super-resolution,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 3897–3906.

- [53] Y. Tian, Y. Zhang, Y. Fu, and C. Xu, “Tdan: Temporally-deformable alignment network for video super-resolution,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 3360–3369.

- [54] X. Ying, L. Wang, Y. Wang, W. Sheng, W. An, and Y. Guo, “Deformable 3d convolution for video super-resolution,” IEEE Signal Processing Letters, vol. 27, pp. 1500–1504, 2020.

- [55] T. Isobe, S. Li, X. Jia, S. Yuan, G. Slabaugh, C. Xu, Y.-L. Li, S. Wang, and Q. Tian, “Video super-resolution with temporal group attention,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 8008–8017.

- [56] Y. Zhang, K. Li, K. Li, L. Wang, B. Zhong, and Y. Fu, “Image super-resolution using very deep residual channel attention networks,” in Proceedings of the European Conference on Computer Vision (ECCV). Munich, Germany: Springer, 2018, pp. 294–310.

- [57] X. Cheng and Z. Chen, “Multiple video frame interpolation via enhanced deformable separable convolution,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021.

- [58] X. Shi, Z. Chen, H. Wang, D.-Y. Yeung, W.-K. Wong, and W.-c. Woo, “Convolutional lstm network: A machine learning approach for precipitation nowcasting,” Advances in neural information processing systems, vol. 28, 2015.

- [59] M. Choi, H. Kim, B. Han, N. Xu, and K. M. Lee, “Channel attention is all you need for video frame interpolation,” in AAAI. New York City, NY, USA: AAAI Press, 2020, pp. 10 663–10 671.

- [60] E. Shechtman, Y. Caspi, and M. Irani, “Increasing space-time resolution in video,” in European Conference on Computer Vision. Copenhagen, Denmark: Springer, 2002, pp. 753–768.

- [61] O. Shahar, A. Faktor, and M. Irani, Space-time super-resolution from a single video. Colorado Springs, CO, USA: IEEE, 2011.

- [62] B. Zhao and X. Li, “Edge-aware network for flow-based video frame interpolation,” IEEE Transactions on Neural Networks and Learning Systems, 2022.

- [63] T. Li, X. He, Q. Teng, Z. Wang, and C. Ren, “Space–time super-resolution with patch group cuts prior,” Signal Processing: Image Communication, vol. 30, pp. 147–165, 2015.

- [64] J. Kang, Y. Jo, S. W. Oh, P. Vajda, and S. J. Kim, “Deep space-time video upsampling networks,” in European Conference on Computer Vision. Springer, 2020, pp. 701–717.

- [65] Z. Geng, L. Liang, T. Ding, and I. Zharkov, “Rstt: Real-time spatial temporal transformer for space-time video super-resolution,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 17 441–17 451.

- [66] M. Haris, G. Shakhnarovich, and N. Ukita, “Space-time-aware multi-resolution video enhancement,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 2859–2868.