Statistical inference for mean-field queueing systems

Abstract

Mean-field limits are now a standard approximation tool, notably for networks with a large number of nodes. Statistical inference for mean-field models has attracted growing attention recently, mainly due to the rapid emergence of data-driven systems. However, the studies reported in the literature have been largely limited to continuous models. In this paper, we initiate a study of statistical inference for discrete mean-field models (or jump processes) through a well-known and extensively studied model, known as the power-of-$L$ model or the supermarket model, to demonstrate how to deal with the new challenges that arise in discrete models. We focus on estimating the system parameters from observations of the system state at discrete time epochs over a finite period. We show that, by harnessing the weak convergence results developed for the supermarket model in the literature, an asymptotic inference scheme based on approximate least squares estimation can be obtained from the mean-field limiting equation. Moreover, by leveraging the law of large numbers together with the central limit theorem, we establish the consistency and asymptotic normality of the estimator as the number of servers and the number of observations go to infinity. Finally, numerical results for the power-of-two model illustrate the efficiency and accuracy of the proposed estimator.

2020 Mathematics Subject Classification:

Keywords: Mean-field; Queueing systems; Supermarket model; Least squares estimation; Consistency; Asymptotic normality

1 Introduction

The origins of mean-field theory trace back to the pioneering works of Curie [8] and Weiss [36] in magnetism and phase transitions. Since then, this theory has expanded across a wide array of fields to study interacting particle systems, including statistical physics [9, 16, 23, 24], biological systems [10, 30], communication networks [4, 20, 21, 22], and mathematical finance [19, 27].

The application of mean-field theory to queueing systems traces back to the work of Dobrushin and Sukhov [14] and has since proliferated; see, e.g., [11, 12, 31, 35] for further developments. In stochastic service systems, particularly those involving multiple parallel queues, load balancing is commonly applied to enhance performance by shortening queues, reducing wait times, and increasing throughput. This balancing mechanism modifies the input-output dynamics to improve the system’s quality of service. When such systems are viewed as interacting systems, mean-field theory becomes a natural framework for studying their behavior: the performance of a large system can be evaluated by examining its limiting behavior as the system size approaches infinity. In particular, the analysis often reduces to solving a deterministic system, known as the mean-field limit, which corresponds to the solution of a McKean-Vlasov-type stochastic differential equation (SDE). In McKean-Vlasov SDEs, the coefficients depend on both the process itself and its distribution, forming a class of non-linear SDEs. The study of such equations was initiated by McKean [23], inspired by Kac’s work in kinetic theory [25].

Although extensive literature exists on mean-field interacting systems, research on their statistical inference has only gained attention in recent years. The pioneering work in this area is Kasonga’s seminal paper [26], which addressed parameter estimation for interacting particle systems modeled by Itô SDEs through a maximum likelihood approach. After Kasonga’s work, interest in the topic waned for nearly two decades before reemerging with significant contributions, as seen in [1, 3, 5, 17, 29, 32] and references therein. Since then, the field has grown steadily, establishing itself as a crucial area of research. This renewed interest can be attributed to novel applications of mean-field theory and the rise of new technologies enabling access to massive datasets generated by systems of interacting agents.

To date, most statistical inference studies on mean-field models, such as those mentioned above, focus on interacting diffusion systems, with limited research on statistical inference for mean-field systems with jumps. Notable exceptions include [13] and [28], where the authors proposed asymptotic estimation of the Bernoulli interaction parameter in a system of interacting Hawkes processes as both the number of particles and time approach infinity. In particular, as discussed in [37], statistical inference in mean-field queueing models remains largely unexplored, even though statistical inference in queueing systems is an active area of research. For example, [2] provides a comprehensive survey of parameter and state estimation for queueing systems across various estimation paradigms, yet it does not address mean-field queueing systems. To fill this gap, we propose in the current paper a statistical inference scheme for the parameters governing a specific mean-field queueing system, namely the supermarket model, also known as the power of $L$ choices. To the best of our knowledge, this proposal is a novel contribution in this area.

The supermarket model was independently introduced by Vvedenskaya et al. [35] and Mitzenmacher [31]. It represents a system of parallel identical queues, each served by a single server with a service rate and infinite buffer capacity. Tasks arrive at a rate of ; each task is allocated queues chosen uniformly at random among the and joins the shortest one, with ties resolved uniformly. All events in this system are independent. In particular, [35] and [31] studied the asymptotic behavior of the system as the number of servers becomes large, showing that the process associated with queue lengths converges to a deterministic limit represented by an infinite system of ordinary differential equations (ODEs). This model and its extensions have since become widely studied due to its theoretical and practical importance; see, e.g., [20, 6, 7] and the references therein. However, as noted, the statistical inference for this model remains unexplored, which is the focus of this paper.

We propose a statistical inference scheme to estimate the arrival and service rates in a supermarket model based on aggregate data obtained from discrete observations of a moment of the system over a finite period. To this end, we exploit the ODE obtained at the mean-field limit to construct an approximate least squares estimator (LSE). Then, using the law of large numbers and the central limit theorem established in the literature, we show that the proposed estimator is consistent and asymptotically normal as the number of servers and the number of observations grow large. In addition, we test our estimator on synthetic data obtained by simulation, which illustrates the accuracy of our approach.

It is worthwhile to provide the following remark: An intriguing general approach to statistical inference for mean-field systems was proposed in [18]. This approach leverages a “misspecified” or limiting model, created by approximating the system through large-systems asymptotics, incorporating the law of large numbers and central limit theorem. This enables constructing an approximate likelihood function, evaluated against the data generated by the true model. The estimator is then obtained by maximizing this approximate likelihood function. A key advantage of this method is that the approximate likelihood has a conditionally Gaussian structure, due to the central limit theorem, which allows for efficient numerical evaluation of the estimator. Although one might consider using a similar method to estimate parameters for the supermarket model, the complexity of the approximate likelihood for this model complicates the analysis of the estimator’s asymptotic properties. This difficulty motivates the adoption of an alternative approach, specifically, an approximate LSE scheme.

The rest of the paper is organized as follows. In Section 2, we recall the supermarket model and introduce the notation. We also review some well-known asymptotic results about the model and establish a new technical result, Proposition 2.1, that will be used in the sequel. In Section 3, we introduce the inference scheme along with our LSE and prove both the consistency and asymptotic normality of the estimator. To improve readability, all long proofs are deferred to the appendices. Section 4 provides simulations demonstrating that our estimator accurately recovers the system parameters and supporting the asymptotic normality result. Finally, in Section 5, we present conclusions and open questions, followed by the bibliography.

2 Queueing network with selection of the shortest queue among several servers

2.1 The setting

We start by recalling the supermarket model, first introduced separately in [31] and [35]. Consider a network with identical queues, each with a single server of service rate and an infinite buffer. Tasks arrive at rate , and each task is allocated queues with uniform probability among the servers and elects to join the shortest one, ties being resolved uniformly. The selected queues may coincide. All these random events are independent. Let denote the length of the -th queue at time and define the empirical measure process

which takes values in the space of probability measures on identified with the infinite-dimensional simplex

where is the set of all non-negative real numbers. Define the subspace . Thus, for all . Throughout this paper, we fix a constant . Let be the Skorokhod space of càdlàg functions defined on with values in , equipped with the usual Skorokhod topology. Let be the space of continuous functions defined on with values on , equipped with the uniform topology.
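The dynamics described in this subsection can be illustrated with a small event-driven simulation. The following is a minimal sketch, not the authors' code: the function and parameter names (`simulate_supermarket`, `arrival_rate`, `service_rate`, `n_choices`) are hypothetical, and the sketch assumes that tasks arrive to the whole system at a total rate equal to the number of queues times `arrival_rate`, while each busy server works at rate `service_rate`.

```python
import random


def simulate_supermarket(n_queues, arrival_rate, service_rate, n_choices,
                         horizon, seed=0):
    """Simulate the queue lengths up to time `horizon`; return the final state.

    Assumed parametrisation (hypothetical names): total arrival rate is
    n_queues * arrival_rate, and each busy server serves at service_rate.
    """
    rng = random.Random(seed)
    queues = [0] * n_queues
    busy = 0  # number of non-empty queues
    clock = 0.0
    total_arrival = n_queues * arrival_rate
    while True:
        total_rate = total_arrival + busy * service_rate
        clock += rng.expovariate(total_rate)
        if clock > horizon:
            return queues
        if busy == 0 or rng.random() * total_rate < total_arrival:
            # Arrival: sample queues with replacement, so choices may coincide.
            sampled = [rng.randrange(n_queues) for _ in range(n_choices)]
            shortest = min(queues[i] for i in sampled)
            # Ties are broken uniformly among the sampled shortest queues.
            target = rng.choice([i for i in sampled if queues[i] == shortest])
            if queues[target] == 0:
                busy += 1
            queues[target] += 1
        else:
            # Departure: pick a busy server uniformly (rejection sampling).
            while True:
                j = rng.randrange(n_queues)
                if queues[j] > 0:
                    break
            queues[j] -= 1
            if queues[j] == 0:
                busy -= 1


def empirical_measure(queues):
    """Return the fraction of queues of length k, for k = 0, 1, 2, ..."""
    counts = [0] * (max(queues) + 1)
    for length in queues:
        counts[length] += 1
    return [c / len(queues) for c in counts]
```

For instance, `empirical_measure(simulate_supermarket(50, 0.7, 1.0, 2, 5.0))` returns a list of non-negative weights summing to one, which is the empirical measure of the queue lengths at the final time.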

2.2 Law of large numbers and central limit theorem for the empirical process

We recall now some results describing the asymptotic behavior of the supermarket model that will be used to build the statistical inference scheme and the related analysis. For , denote by the space of -th summable sequences, i.e.,

and denote by the norm on it. In particular, let be the space of square summable sequences equipped with the inner product

which makes it a Hilbert space. Moreover, define its subspace

Furthermore, for any , denote by the vector with 1 at the -th coordinate and 0 elsewhere.

We first state the law of large numbers established in [20, Theorem 3.4] and reformulated in [6, Corollary 1].

Theorem 2.1

Suppose that in as . Then in probability in , where is the unique solution in to the ODE:

| (2.1) |

and, for any ,

| (2.2) | |||||
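As an illustration of the deterministic limit, the sketch below integrates the classical tail form of the supermarket mean-field ODE from the literature on this model with a forward-Euler scheme. This is an assumption-laden sketch: it works with the tail fractions $s_k$ (fraction of queues with at least $k$ tasks) rather than with the probability-measure formulation of (2.1), the names `arrival_rate`, `service_rate`, `n_choices` and `depth` are hypothetical, and the infinite system is truncated at level `depth`.

```python
def mean_field_tails(arrival_rate, service_rate, n_choices, horizon, n_steps,
                     depth=30):
    """Forward-Euler solution of the truncated tail ODE, started empty.

    Assumed tail form:
        ds_k/dt = arrival_rate * (s_{k-1}**L - s_k**L)
                  - service_rate * (s_k - s_{k+1}),   k >= 1,  s_0 = 1.
    """
    dt = horizon / n_steps
    # s[0] = 1 always; s[depth + 1] = 0 closes the truncated system.
    s = [1.0] + [0.0] * (depth + 1)
    for _ in range(n_steps):
        new = s[:]
        for k in range(1, depth + 1):
            inflow = arrival_rate * (s[k - 1] ** n_choices - s[k] ** n_choices)
            outflow = service_rate * (s[k] - s[k + 1])
            new[k] = s[k] + dt * (inflow - outflow)
        s = new
    return s
```

When the arrival rate is smaller than the service rate, the trajectory settles at the known fixed point $s_k = \rho^{(L^k-1)/(L-1)}$, where $\rho$ denotes the ratio of the arrival rate to the service rate, which gives a convenient sanity check for the integrator.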

Next, we state results about the fluctuations of the empirical measure process from its law of large numbers limit . To this end, define the process

the operator

| (2.3) | |||||

and the map by

| (2.4) |

Finally, we recall the definition of cylindrical Brownian motion which is a generalization of the scalar Brownian motion to Hilbert spaces.

Definition 2.1

A collection of continuous real-valued stochastic processes defined on a filtered probability space is called an -cylindrical Brownian motion if, for every , is an -Brownian motion with variance and, for all ,

We state now the central limit theorem introduced in [6, Theorem 2].

Theorem 2.2

Suppose that and in as . Also, suppose that in and that

Then converges to in distribution in as , where is the unique weak solution to the following SDE:

| (2.5) |

is defined by , is the symmetric square root of the operator in , i.e., , and is an -cylindrical Brownian motion.

2.3 The power of two choices model

For the sake of simplicity, we focus in this paper on the special case of the supermarket model obtained when $L = 2$. This model, known as the power of two choices, was first introduced and analyzed in [31]. In this case, the operator in (2.3) takes the following explicit form:

| (2.7) |

the map in (2.4) takes the explicit form:

and for any , the operator in becomes

The following result shows that the solution to (2.5) is a Gaussian process. Although the proof is given for the power of two choices model, it can be adapted to cover the case with general $L$.

Proposition 2.1

Suppose that $L = 2$. Then, the solution to the SDE (2.5) is a Gaussian process.

Proof

See Appendix A.

3 Statistical inference of the supermarket model

Suppose the service and arrival rates, and , that govern the system are unknown. Our goal is to estimate these parameters using observations collected over a specific time interval . The complexity of the system’s dynamics makes brute-force Monte Carlo estimation computationally intensive, particularly as the number of nodes, , increases. Therefore, we propose developing an estimator that utilizes the weak convergence results outlined in Section 2, specifically the law of large numbers presented in Theorem 2.1 and the central limit theorem in Theorem 2.2.

In particular, we construct an approximate LSE based on the ODE given in (2.1). We subsequently demonstrate that this estimator is consistent and asymptotically normal as both the system size and the number of observations tend to infinity. For convenience, we denote the vector of unknown parameters as .

3.1 The data

Our objective is to estimate the parameter vector that governs the dynamics of the power-of-two model based on observations collected over a finite time interval. Specifically, we assume that observations are not available for every server in the network; instead, they are gathered as an aggregate measure of the system. Collecting individual data for each server can be prohibitively costly in practice, particularly for large networks, which justifies our approach to data collection.

In this context, we assume that the available data for inference includes observations of the empirical measure of the system, , over the finite time interval at discrete points, defined as :

The observed data is then represented as

Thus, this dataset reflects a realization of the system governed by the true parameters, say .

3.2 Least squares estimator (LSE)

Recall from Theorem 2.1 that the empirical measure converges in probability towards the unique solution to the ODE (2.1) as the number of servers . We propose to take advantage of this result to build an approximate LSE for the parameters based on the dataset .

The least squares function:

Let us first introduce the following functions defined for all and ,:

| (3.1) |

and

| (3.2) |

Therefore, by , one can observe that

| (3.3) |

Moreover, let be the unique solution to the ODE (2.1). To highlight the dependence on the parameter , we will write in the sequel. Furthermore, let us introduce the quadratic function defined for any by

Notice that, by (2.1), one immediately observes that . Thus, the function attains its minimum at , namely

| (3.4) |

and

| (3.5) |

Solving the equations (3.4) and (3.5) leads to

| (3.6) |

where

and

Note that the right-hand side in (3.6) is well posed only if . Nevertheless, a simple application of Hölder’s inequality tells us that . Therefore, one needs to investigate the conditions under which the inequality

| (3.7) |

holds. Below we give as examples two sufficient conditions that ensure the validity of (3.7).

Lemma 3.1

Suppose that one of the following conditions holds:

| (3.8) |

or

| (3.9) |

Then, the inequality (3.7) holds.

Proof

See Appendix B.
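The role of the strict inequality (3.7) can be illustrated on a generic two-parameter least squares problem in which the unknowns enter linearly. The sketch below is only an analogy under stated assumptions: the regressors `x`, `z`, the response `y` and the function name `solve_two_parameter_lse` are hypothetical and are not the quantities appearing in (3.6). The normal equations have a unique solution exactly when the determinant is strictly positive, that is, when the Cauchy-Schwarz (Hölder) inequality between the two regressors is strict.

```python
def solve_two_parameter_lse(x, z, y, tol=1e-12):
    """Minimise sum_i (y_i - a * x_i - b * z_i)**2 over (a, b).

    Raises ValueError when x and z are (numerically) proportional, i.e. when
    the Cauchy-Schwarz inequality sum(x*z)**2 <= sum(x*x) * sum(z*z) is an
    equality and the normal equations are singular.
    """
    sxx = sum(xi * xi for xi in x)
    szz = sum(zi * zi for zi in z)
    sxz = sum(xi * zi for xi, zi in zip(x, z))
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    szy = sum(zi * yi for zi, yi in zip(z, y))
    det = sxx * szz - sxz * sxz  # non-negative by Cauchy-Schwarz
    if det <= tol * sxx * szz:
        raise ValueError("regressors are proportional; estimator undefined")
    a = (szz * sxy - sxz * szy) / det
    b = (sxx * szy - sxz * sxy) / det
    return a, b
```

With `x = [1, 2, 3]`, `z = [1, 0, 1]` and `y` built as `2 * x - 3 * z`, the solver recovers the coefficients 2 and -3, whereas proportional regressors such as `z = [2, 4, 6]` trigger the error, mirroring the need for a sufficient condition such as those in Lemma 3.1.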

The approximate LSE:

Recall that the data are observed on the prelimiting finite -system. Hence, using (3.6), we construct the following approximate LSE defined for any and as

| (3.10) |

where

and

with for . We will show next that the estimator in (3.10) is consistent and asymptotically normal.
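To make the idea of an approximate least squares fit against the limiting ODE concrete, here is a simplified sketch. It is not the estimator (3.10): it assumes the classical tail form of the power-of-two ODE, for which the mean queue length $M(t) = \sum_{k \ge 1} s_k(t)$ satisfies $M'(t) = a - b\, s_1(t)$ with $a$ the arrival rate and $b$ the service rate, and it regresses finite-difference increments of $M$ on the regressors $(1, -s_1)$. All names (`euler_path`, `estimate_rates`) are hypothetical, and the data here are noise-free Euler trajectories rather than observations of a finite system.

```python
def euler_path(arrival_rate, service_rate, horizon, n_steps, depth=30):
    """Forward-Euler path of the truncated power-of-two tail ODE (empty start)."""
    dt = horizon / n_steps
    s = [1.0] + [0.0] * (depth + 1)
    path = [s]
    for _ in range(n_steps):
        s = [1.0] + [
            s[k] + dt * (arrival_rate * (s[k - 1] ** 2 - s[k] ** 2)
                         - service_rate * (s[k] - s[k + 1]))
            for k in range(1, depth + 1)
        ] + [0.0]
        path.append(s)
    return path, dt


def estimate_rates(path, dt):
    """Least squares fit of dM/dt = a * 1 + b * (-s_1) from discrete data."""
    mean_len = [sum(s[1:]) for s in path]
    sxx = szz = sxz = sxy = szy = 0.0
    for i in range(len(path) - 1):
        y = (mean_len[i + 1] - mean_len[i]) / dt  # finite-difference slope
        x, z = 1.0, -path[i][1]                   # regressors for a and b
        sxx += x * x
        szz += z * z
        sxz += x * z
        sxy += x * y
        szy += z * y
    det = sxx * szz - sxz * sxz
    if det <= 1e-12 * sxx * szz:
        raise ValueError("s_1 is constant over the window; rates not identifiable")
    a = (szz * sxy - sxz * szy) / det
    b = (sxx * szy - sxz * sxy) / det
    return a, b
```

Feeding the estimator an Euler path generated with rates 0.7 and 1.0 returns those rates up to rounding error. With data from a finite system, the increments are noisy and the fit is only approximate, which is the regime addressed by the consistency and asymptotic normality results.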

3.3 Consistency of the LSE

We show in this section the consistency of the approximate estimator given in (3.10). To this end, let us first prove the following technical lemma:

Lemma 3.2

Let be a sequence of probability measures on . Then, the following statements are equivalent:

(i) converges weakly to as .

(ii) for all .

(iii) .

Proof. Obviously, (iii) $\Rightarrow$ (i) $\Rightarrow$ (ii). The implication (ii) $\Rightarrow$ (iii) follows from the observation that, for any ,

We are now ready to prove the consistency of the estimator in (3.10).

Theorem 3.1

Suppose that in as and (3.7) holds. Then,

Proof

See Appendix C.

3.4 Asymptotic normality of the LSE

We now analyze the asymptotic distribution of the approximate LSE given in (3.10). In particular, one aims to prove the asymptotic normality of

| (3.11) |

as the network size and the number of observations go to infinity. To do so, we will prove that (3.11) converges towards a linear combination of the process which is itself a Gaussian process by Proposition 2.1.

To ease notation, define the following quantities:

and

One can observe that , , are linear combinations of the Gaussian process that solves the SDE (2.5) (see Proposition 2.1).

The following theorem states the asymptotic normality of the approximate LSE (3.11) under the assumption that .

Proof

See Appendix D.

4 Numerical experiments

In this section, we evaluate the consistency and asymptotic normality of the approximate LSE defined in (3.10) using simulated data. Specifically, we aim to validate the assertions of Theorem 3.1 and Theorem 3.2. To this end, we generate datasets by simulating the power of two choices model, with true parameters , for various network sizes and different numbers of observations . For each combination of , we simulate a total of samples, which are then used to estimate the arrival and service rates with the approximate LSE . The estimated values are plotted in the accompanying figures.

4.1 Consistency of the estimator

We aim to numerically assess the consistency of the estimator . To achieve this, we utilize the estimated values of obtained from simulated datasets for various values of and to calculate the following empirical moments:

- The empirical mean

- The empirical standard deviation

- The empirical mean square error

- The empirical mean error

The results are presented in Table 1. As observed, as and increase, the empirical mean of the estimator converges to the true parameter values, while the corresponding standard deviations decrease. Furthermore, both the mean squared error and the absolute mean error diminish as and grow larger. These findings validate the consistency of the estimator, as stated in Theorem 3.1.

| Parameters moments | MSE | Mean Error | ||

|---|---|---|---|---|

| , | ||||

| , | ||||

| , | ||||

| , | ||||

| , |
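The four empirical summaries listed above can be computed from a collection of estimates with a few lines of code. The following is a minimal sketch under assumptions: the function name `summarize` is hypothetical, the standard deviation uses the population normalization, and the "mean error" is taken to be the mean absolute deviation from the true value, which is one plausible reading of the definition.

```python
import math


def summarize(estimates, true_value):
    """Empirical mean, standard deviation, MSE and mean absolute error."""
    n = len(estimates)
    mean = sum(estimates) / n
    std = math.sqrt(sum((e - mean) ** 2 for e in estimates) / n)
    mse = sum((e - true_value) ** 2 for e in estimates) / n
    mean_abs_error = sum(abs(e - true_value) for e in estimates) / n
    return {"mean": mean, "std": std, "mse": mse, "mean_error": mean_abs_error}
```

Note that the mean square error decomposes as the squared standard deviation plus the squared bias, so a decreasing MSE reflects both a shrinking spread and a vanishing bias of the estimator.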

4.2 Asymptotic normality

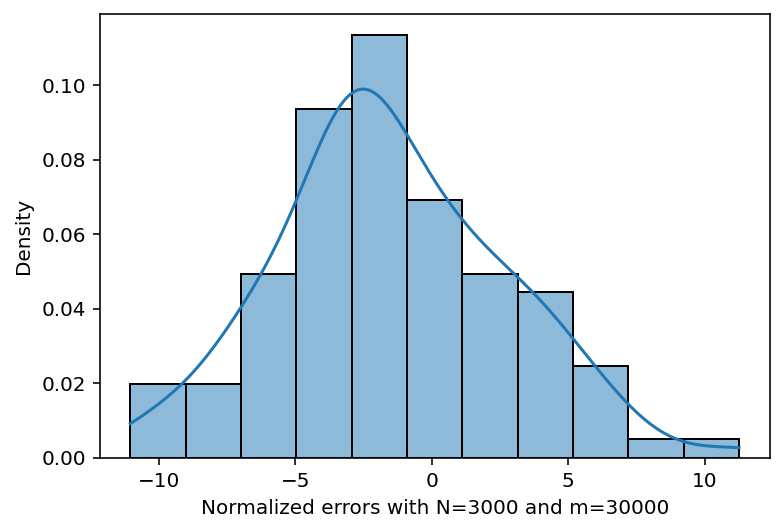

In this section, we assess the asymptotic normality of the approximate LSE as established in Theorem 3.2. Specifically, we numerically verify that the normalized error term converges to a Gaussian distribution as and approach infinity.

We use the 100 simulated samples from the power of two choices model with the true parameter , varying the network size and the number of observations . For each combination of , we compute the first four empirical moments of the normalized error terms : the mean, variance, skewness, and kurtosis. The results are summarized in Table 2. The skewness and kurtosis values are close to those of a normal distribution (0 for skewness and 3 for kurtosis), even for relatively small values of the network size and the number of observations .

| Normalized error moments | Mean | Variance | Skewness | Kurtosis |

|---|---|---|---|---|

| N=100,m=1000 | ||||

| N=500,m=10000 | ||||

| N=1000,m=10000 | ||||

| N=2000, m=20000 | ||||

| N=3000, m=30000 |
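The moment diagnostics reported in Table 2 can be sketched as follows. This is a minimal illustration with a hypothetical function name (`moment_diagnostics`); it uses the plain moment estimators without small-sample bias corrections and the non-excess kurtosis convention, so that a normal distribution corresponds to skewness 0 and kurtosis 3.

```python
def moment_diagnostics(sample):
    """Return (mean, variance, skewness, kurtosis) of a sample.

    Uses central moments m_r = mean((x - mean)**r), with
    skewness = m_3 / m_2**1.5 and kurtosis = m_4 / m_2**2.
    """
    n = len(sample)
    mean = sum(sample) / n
    m2 = sum((x - mean) ** 2 for x in sample) / n
    m3 = sum((x - mean) ** 3 for x in sample) / n
    m4 = sum((x - mean) ** 4 for x in sample) / n
    return mean, m2, m3 / m2 ** 1.5, m4 / m2 ** 2
```

For a symmetric sample the skewness is exactly zero, while kurtosis values well below or above 3 indicate tails lighter or heavier than Gaussian.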

To further substantiate our findings, we test the normality of the normalized error terms using a Kolmogorov-Smirnov test, applied to the simulated datasets for the different values of network size and number of observations . The resulting $p$-values are presented in Table 3. The $p$-values are large, so the null hypothesis that the error terms follow a normal distribution is not rejected, even for the smaller values of and . This suggests that the error terms approach the normal distribution quickly. The $p$-values do not, however, necessarily increase with larger network sizes and higher numbers of observations . A possible explanation is that the true mean and variance of the error terms are unknown and must be replaced by empirical values.

| Network and data sizes | P-value for | P-value for |

|---|---|---|
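A Kolmogorov-Smirnov check of this kind can be sketched with the standard library alone. The sketch below is an illustration under assumptions, not the procedure used to produce Table 3: the function name `ks_normality` is hypothetical, the reference distribution is a normal law with mean and standard deviation estimated from the sample, and the $p$-value comes from the asymptotic Kolmogorov series. Because the parameters are estimated from the same data, this $p$-value is known to be conservative (too large), which is consistent with the caveat stated above.

```python
import math
from statistics import NormalDist, mean, stdev


def ks_normality(sample):
    """Return (D, p): KS distance to a fitted normal and asymptotic p-value."""
    n = len(sample)
    fitted = NormalDist(mean(sample), stdev(sample))
    d = 0.0
    for i, x in enumerate(sorted(sample)):
        f = fitted.cdf(x)
        # Compare the fitted CDF with the empirical CDF just after and before x.
        d = max(d, (i + 1) / n - f, f - i / n)
    lam = math.sqrt(n) * d
    if lam < 0.2:
        return d, 1.0  # the series equals 1 to machine precision here
    tail = 2.0 * sum((-1) ** (j - 1) * math.exp(-2.0 * j * j * lam * lam)
                     for j in range(1, 101))
    return d, min(1.0, max(0.0, tail))
```

A sample made of evenly spaced normal quantiles yields a $p$-value close to one, while a two-point sample is rejected decisively. For a test that accounts for estimated parameters, a Lilliefors-type correction would be needed.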

Finally, we plot the histograms of the normalized error terms and , along with a kernel density estimator. The results are shown in Figure 5 and Figure 6. Once again, we observe that the assertion of normality for the error terms aligns well with the empirical data.

5 Conclusions and perspectives

In this paper, we considered the parameter estimation problem of the supermarket model. Based on an aggregate dataset, we constructed an approximate LSE by exploiting the law of large numbers together with the central limit theorem established for the model in the literature. Moreover, we proved the consistency together with the asymptotic normality of the estimator as both the size of the network and the number of observations go to infinity. Finally, we presented a numerical study where we tested our estimator against synthetic data obtained by simulating the power-of-two model highlighting our theoretical results.

The current work proposes, to the best of our knowledge, the first statistical scheme for mean-field queueing systems and opens new perspectives. A natural next step is to investigate the statistical inference problem for other models. For instance, one can apply the approximate LSE approach to the model proposed in [6] for load balancing mechanisms in cloud storage systems, which generalizes the supermarket model. The law of large numbers and the central limit theorem established there make the approximate LSE approach used in the current work feasible, provided that one can overcome the technical difficulty arising from the more complicated mean-field limiting equation. Another variation of the supermarket model for which one can study the parameter estimation problem is the one introduced in [7], in which the servers communicate with their neighbors and the neighborhood relationships are described by a suitable graph. Again, the limit as the number of servers goes to infinity was identified and can be exploited to build a statistical scheme. However, neither a central limit theorem nor a stationary distribution was established, so the asymptotic normality of the estimator cannot be obtained by the scheme used in the current paper. Another interesting open problem is the nonparametric estimation of the interaction kernel in the general mean-field queueing systems studied in [11]. Indeed, one can consider exploiting the limiting mean-field equation to build an estimator. However, contrary to our current proposal, where the unknown parameters enter linearly in the mean-field limiting equation, nonparametric estimation requires solving an optimization problem in a function space. A potential avenue is to exploit the stationary distribution to build an estimator in the stationary regime and then investigate a justification for the interchange of limits and .

Finally, the problem of statistical inference for general mean-field models on discrete space remains open and, as mentioned in the introduction, very few references exist.

Appendix A Proof of Proposition 2.1

Let be the solution to the following infinite-dimensional ODE:

Define . Then, satisfies the SDE:

By [6, Proposition 2], we know that for all almost surely. By the estimate [6, Page 69, line -1] and Fatou’s lemma (cf. [6, Page 78, Proof of Theorem 2]), we get

Then, for all almost surely. Moreover, for any , we have , and

| (A.1) | |||||

Note that (A.2) can be regarded as an infinite-dimensional non-autonomous linear system of ODEs with random coefficients. Define and by

| (A.3) | |||||

and

| (A.9) |

Then, (A.2) becomes

Denote Therefore, for any and , by (A.3), we get

Then,

For , and , by (A.9), we get

Then,

Hence, by induction, we obtain that

Thus, we have the following explicit expressions:

| (A.10) |

Since is a Gaussian martingale, by (A.3), we deduce that the distributions of and are both Gaussian. The proof is complete.

Appendix B Proof of Lemma 3.1

By Hölder’s inequality, we find that and the equality sign holds if and only if , or , or

for all (see, e.g. [33])

| (B.1) |

Note that for ,

and

Then, by the fact that , we get

and

We have

and

(a) Suppose that (3.8) holds. Then, and . Thus, (B.1) cannot hold and hence (3.7) holds. (b) Suppose that (3.9) holds. Then, and . Thus, (B.1) cannot hold and hence (3.7) holds.

Appendix C Proof of Theorem 3.1

By Theorem 2.1, [15, (5.7), page 117 and Proposition 5.3, page 119] and Lemma 3.2, we obtain that

| (C.1) |

in probability as . Moreover,

By (3.1), we get for all and . Then

Therefore, by (C.1), we get that the right hand side of the last inequality goes to . Similarly, we can show that

Therefore, the proof is complete.

Appendix D Proof of Theorem 3.2

By and , we get

Moreover, simple calculations lead to

and

To simplify notation, let us define

and

We will analyze the convergence of and as . By the Skorokhod representation theorem (cf. [15, Page 102]), we may assume without loss of generality that converges to in probability in . To save space, we prove only the convergence of ; the convergence of the other terms follows by similar arguments. First, we have

with

Moreover,

and

Then, by adding and subtracting terms, we get

| (D.1) |

Below we consider the convergence of each summation term in (D.1). (a) By Theorem 2.2, as , the term

converges in probability to

(c) By the estimate [6, Page 70, line 4], we have that

| (D.2) |

Then, by Fatou’s lemma, we get

| (D.3) |

By (2.1) and (3.1)–(3.3), we get

which together with (C.1) and (D.2) implies that

(d) For and , define a non-negative measure on by

Note that

Then, for , we have

By [15, (5.7), page 117 and Proposition 5.3, page 119], we have that

| (D.5) |

Thus, by (a)–(d), we deduce that as , converges in probability to

| (D.6) |

By adding and subtracting terms, we get

Similar to the above argument, we can show that as , converges in probability to

| (D.7) |

Similarly, as , converges in probability to

| (D.8) |

and converges in probability to

| (D.9) |

Therefore, using (D.6)–(D.9), we deduce that

Similarly, we obtain

References

- [1] C. Amorino, A. Heidari, V. Pilipauskaite, and M. Podolskij. Parameter estimation of discretely observed interacting particle systems. Stochastic Processes and their Applications, 163:350–386, 2023.

- [2] A. Asanjarani, Y. Nazarathy, and P. Taylor. A survey of parameter and state estimation in queues. Queueing Syst, 97:39–80, 2021.

- [3] D. Belomestny, V. Pilipauskaite, and M. Podolskij. Semiparametric estimation of McKean-Vlasov SDEs. Annales de l’Institut Henri Poincaré, Probabilités et Statistiques, 59(1):79–96, 2023.

- [4] M. Benaïm and J.Y. Le Boudec. A class of mean field interaction models for computer and communication systems. Performance Evaluation, 65(11–12):823–838, 2008.

- [5] P.N. Bishwal. Estimation in interacting diffusions: Continuous and discrete sampling. Applied Mathematics-a Journal of Chinese Universities Series B, 02:1154–1158, 2011.

- [6] A. Budhiraja and E. Friedlander. Diffusion approximations for load balancing mechanisms in cloud storage systems. Advances in Applied Probability, 51(1):41–86, 2019.

- [7] A. Budhiraja, D. Mukherjee, and R. Wu. Supermarket model on graphs. The Annals of Applied Probability, 29(3):1740–1777, 2019.

- [8] P. Curie. Magnetic properties of materials at various temperatures. Ann. Chem. Phys, 5(289), 1895.

- [9] D.A. Dawson. Critical dynamics and fluctuations for a mean field model of cooperative behaviour. J. Statist. Phys., 41:29–85, 1983.

- [10] D.A. Dawson. Introductory lectures on stochastic population systems. arXiv:1705.03781 [math.PR], 2017.

- [11] D.A. Dawson, J. Tang, and Y.Q. Zhao. Balancing queues by mean field interactions. Queueing Syst, 49:335–361, 2005.

- [12] D.A. Dawson, J. Tang, and Y.Q. Zhao. Performance analysis of joining the shortest queue model among a large number of queues. Asia-Pacific Journal of Operational Research, 36(4), 2019.

- [13] S. Delattre and N. Fournier. Statistical inference versus mean field limit for Hawkes processes. Electronic Journal of Statistics, 10(1):1223–1295, 2016.

- [14] R.L. Dobrushin and Y.M. Sukhov. Asymptotic investigation of star-shaped message switching networks with a large number of radial rays. Probl. Inf. Trans., 12(1):49–66, 1976.

- [15] S.N. Ethier and T.G. Kurtz. The infinitely-many-alleles model with selection as a measure-valued diffusion. Stochastic Methods in Biology, volume 70. Springer-Verlag, Berlin-Heidelberg-N.Y., 1987.

- [16] J. Gärtner. On the McKean-Vlasov limit for interacting diffusions. Math. Nachr., 137:197–248, 1988.

- [17] V. Genon-Catalot and C. Larédo. Parametric inference for small variance and long time horizon McKean-Vlasov diffusion models. Electronic Journal of Statistics, 15(2):5811–5854, 2021.

- [18] K. Giesecke, G. Schwenkler, and J.A. Sirignano. Inference for large financial systems. Math. Fin., 30:823–838, 2019.

- [19] K. Giesecke, K. Spiliopoulos, R.B. Sowers, and J.A. Sirignano. Large portfolio asymptotics for loss from default. Mathematical Finance, 25(1):77–114, 2015.

- [20] C. Graham. Chaoticity on path space for a queueing network with selection of the shortest queue amongst several. J. Appl. Prob., 37(1):198–211, 2000.

- [21] C. Graham and S. Méléard. Propagation of chaos for a fully connected loss network with alternate routing. Stoch. Processes Appl., 44:159–180, 1993.

- [22] C. Graham and S. Méléard. Dynamic asymptotic results for a generalized star-shaped loss network. Ann. Appl. Prob., 5, 1995.

- [23] H.P. McKean Jr. A class of Markov processes associated with nonlinear parabolic equations. Proc. Natl. Acad. Sci. USA, 56(6):1907–1911, 1966.

- [24] H.P. McKean Jr. Speed of approach to equilibrium for Kac’s caricature of a Maxwellian gas. Arch. Ration. Mech. Anal., 21(5):343–367, 1966.

- [25] M. Kac. Foundations of kinetic theory. In Proceedings of the Third Berkeley Symposium on Mathematical Statistics and Probability, Volume 3: Contributions to Astronomy and Physics, pages 171–197. University of California Press, Berkeley, 1956.

- [26] R.A. Kasonga. Maximum likelihood theory for large interacting systems. SIAM Journal on Applied Mathematics, 50(3):865–875, 1990.

- [27] O. Kley, C. Klüppelberg, and L. Reichel. Systemic risk through contagion in a core-periphery structured banking network. Banach Center publications, 104:133–149, 2015.

- [28] C. Liu. Statistical inference for a partially observed interacting system of Hawkes processes. Stochastic Processes and their Applications, 130(9):5636–5694, 2020.

- [29] L. Della Maestra and M. Hoffmann. Nonparametric estimation for interacting particle systems: McKean-Vlasov models. Probab. Theory Relat. Fields, 182:551–613, 2022.

- [30] S. Méléard and V. Bansaye. Some Stochastic Models for Structured Populations: Scaling Limits and Long Time Behavior. Springer, 2015.

- [31] M. Mitzenmacher. The power of two choices in randomized load balancing. PhD thesis, University of California at Berkeley, 1996.

- [32] L. Sharrock, N. Kantas, P. Parpas, and G.A. Pavliotis. Online parameter estimation for the McKean-Vlasov stochastic differential equation. Stochastic Processes and their Applications, 162:481–546, 2023.

- [33] J.M. Steele. The Cauchy-Schwarz Master Class. Cambridge University Press, 2004.

- [34] A. W. van der Vaart. Asymptotic Statistics. Cambridge Series in Statistical and Probabilistic Mathematics. Cambridge University Press, 1998.

- [35] N.D. Vvedenskaya, R.L. Dobrushin, and F.I. Karpelevich. Queueing system with selection of the shortest of two queues: An asymptotic approach. Problems Inform. Transmission, 32(1):15–27, 1996.

- [36] P. Weiss. L’hypothese du champ moléculaire et la propriété ferromagnetique. J. Phys. Theor. Appl., 6(1):661–690, 1907.

- [37] Y.Q. Zhao. Statistical inference for mean-field queueing models. Queueing Syst, 100:569–571, 2022.