Statistical and Computational Complexities of BFGS Quasi-Newton Method for Generalized Linear Models

Abstract

The gradient descent (GD) method has been used widely to solve parameter estimation in generalized linear models (GLMs), a generalization of linear models when the link function can be non-linear. In GLMs with a polynomial link function, it has been shown that in the high signal-to-noise ratio (SNR) regime, due to the problem’s strong convexity and smoothness, GD converges linearly and reaches the final desired accuracy in a logarithmic number of iterations. In contrast, in the low SNR setting, where the problem becomes locally convex, GD converges at a slower rate and requires a polynomial number of iterations to reach the desired accuracy. Even though Newton’s method can be used to resolve the flat curvature of the loss functions in the low SNR case, its computational cost is prohibitive in high-dimensional settings as it is , where the is the problem dimension. To address the shortcomings of GD and Newton’s method, we propose the use of the BFGS quasi-Newton method to solve parameter estimation of the GLMs, which has a per iteration cost of . When the SNR is low, for GLMs with a polynomial link function of degree , we demonstrate that the iterates of BFGS converge linearly to the optimal solution of the population least-square loss function, and the contraction coefficient of the BFGS algorithm is comparable to that of Newton’s method. Moreover, the contraction factor of the linear rate is independent of problem parameters and only depends on the degree of the link function . Also, for the empirical loss with samples, we prove that in the low SNR setting of GLMs with a polynomial link function of degree , the iterates of BFGS reach a final statistical radius of after at most iterations. This complexity is significantly less than the number required for GD, which scales polynomially with .

1 Introduction

In supervised machine learning, we are given a set of independent samples denoted by with corresponding labels , that are drawn from some unknown distribution and our goal is to train a model that maps the feature vectors to their corresponding labels. We assume that the data is generated according to distribution which is parameterized by a ground truth parameter . Our goal as the learner is to find by solving the empirical risk minimization (ERM) problem defined as

| (1) |

where is the loss function that measures the error between the predicted output of using parameter and its true label . If we define as an optimal solution of the above optimization problem, i.e., , it can be considered as an approximation of the ground-truth solution , where is also a minimizer of the population loss defined as

| (2) |

If one can solve the empirical risk efficiently, the output model could be close to , when is sufficiently large. Several works have studied the complexity of iterative methods for solving ERM or directly the population loss, for the case that the objective function is convex or strongly convex with respect to (Balakrishnan et al., 2017; Ho et al., 2020; Loh and Wainwright, 2015; Agarwal et al., 2012; Yuan and Zhang, 2013; Dwivedi et al., 2020b; Hardt et al., 2016; Candes et al., 2011). However, when we move beyond linear models, the underlying loss becomes non-convex and therefore the behavior of iterative methods could substantially change, and it is not even clear if they can reach a neighborhood of a global minimizer of the ERM problem.

The focus of this paper is on the generalized linear model (GLM) (Carroll et al., 1997; Netrapalli et al., 2015; Fienup, 1982; Shechtman et al., 2015; Feiyan Tian, 2021) where the labels and features are generated according to a polynomial link function and we have , where is an additive noise and is an integer. Due to nonlinear structure of the generative model, even if we select a convex loss function , the ERM problem denoted to the considered GLM could be non-convex with respect to . Interestingly, depending on the norm of , the curvature of the ERM problem and its corresponding population risk minimization problem could change substantially. More precisely, in the case that is sufficiently large, which we refer to this case as the high signal-to-noise ratio (SNR) regime, the underlying population loss of the problem of interest is locally strongly convex and smooth. On the other hand, in the regime that is close to zero, denoted by the low SNR regime, the underlying problem is neither strongly convex nor smooth, and in fact, it is ill-conditioned.

These observations lead to the conclusion that in the high SNR setting, due to strong convexity and smoothness of the underlying problem, gradient descent (GD) reaches the desired accuracy exponentially fast and overall it only requires logarithmic number of iterations. However, in the low SNR case, as the problem becomes locally convex, GD converges at a sublinear rate and thus requires polynomial number of iterations in terms of the sample size.

To address this issue, Ren et al. (2022a) advocated the use of GD with the Polyak step size to improve GD’s convergence in low SNR scenarios. hey demonstrated that the number of iterations could become logarithmic with respect to the sample size, when GD is deployed with the Polyak step size. However, such a method still remains a first-order algorithm and lacks any curvature approximation. Consequently, its overall complexity is directly proportional to the condition number of the problem. This, in turn, depends on both the condition number of the feature vectors’ covariance and the norm . As a result, in low SNR settings, the problem becomes ill-conditioned with a large condition number, and hence GD with the Polyak step size could be very slow. Furthermore, the implementation of the Polyak step size necessitates access to the optimal value of the objective function. As precise estimation of this optimal objective function value may not always be feasible, any inaccuracies could potentially lead to a reduced convergence rate for GD employing the Polyak step size.

Another alternative is to use a different distance metric instead of an adaptive step size to improve the convergence of GD for the low SNR setting. More precisely, Lu et al. (2018) have shown that the mirror descent method with a proper distance-generating function can solve the population loss corresponding to the low SNR setting at a linear rate. However, the linear convergence rate of mirror descent, similar to GD with Polyak step size, also depends on the condition number of the problem, and hence could lead to a slow convergence rate.

A natural approach to handle the ill-conditioning issue in the low SNR case as well as eliminating the need to estimate the optimal function value is the use of Newton’s method. As we show in this paper, this idea indeed addresses the issue of poor curvature of the problem and leads to an exponentially fast rate with contraction factor in the population case, where is the degree of the polynomial link function. Moreover, in the high SNR setting, Newton’s method converges at a quadratic rate as the problem is strongly convex and smooth. Alas, these improvements come at the expense of increasing the computational complexity of each iteration to which is indeed more than the per iteration computational cost of GD that is . These points lead to this question:

Is there a computationally-efficient method that performs well in both high and low SNR settings at a reasonable per iteration computational cost?

Contributions. In this paper, we address this question and show that the BFGS method is capable of achieving these goals. BFGS is a quasi-Newton method that approximates the objective function curvature using gradient information and its per iteration cost is . It is well-known that it enjoys a superlinear convergence rate that is independent of condition number in strongly convex and smooth settings, and hence, in the high SNR setting it outperforms GD. In the low SNR setting, where the Hessian at the optimal solution could be singular, we show that the BFGS method converges linearly and outperforms the sublinear convergence rate of GD. Next, we formally summarize our contributions.

-

•

Infinite sample, low SNR: For the infinite sample case where we minimize the population loss, we show that in the low SNR case the iterates of BFGS converge to the ground truth at an exponentially fast rate that is independent of all problem parameters except the power of link function . We further show that the linear convergence contraction coefficient of BFGS is comparable to that of Newton’s method. This convergence result of BFGS is also of general interest as it provides the first global linear convergence of BFGS without line-search for a setting that is neither strictly nor strongly convex.

-

•

Finite sample, low SNR: By leveraging the results developed for the population loss of the low SNR regime, we show that in the finite sample case, the BFGS iterates converge to the final statistical radius within the true parameter after a logarithmic number of iterations . It is substantially lower than the required number of iterations for fixed-step size GD, which is , to reach a similar statistical radius. This improvement is the direct outcome of the linear convergence of BFGS versus the sublinear convergence rate of GD in the low SNR case. Further, while the iteration complexity of BFGS is comparable to the logarithmic number of iterations of GD with Polyak step size, we show that BFGS removes the dependency of the overall complexity on the condition number of the problem as well as the need to estimate the optimal function value.

-

•

Experiments: We conduct numerical experiments for both infinite and finite sample cases to compare the performance of GD (with constant step size and Polyak step size), Newton’s method and BFGS. The provided empirical results are consistent with our theoretical findings and show the advantages of BFGS in the low SNR regime.

Outline. In Section 2, we discuss the BFGS quasi-Newton method. Section 3 details three scenarios in Generalized Linear Models (GLMs): low, middle, and high SNR regimes, outlining the characteristics of the population loss in each. Section 4 explores BFGS’s convergence in low SNR settings, highlighting its linear convergence rate, a marked improvement over gradient descent’s sublinear rate. This section also compares the convergence rates of BFGS and Newton’s method. Section 5 applies these insights to establish the convergence results of BFGS for the empirical loss in the low SNR regime. Lastly, our numerical experiments are presented in Section 6.

2 BFGS algorithm

In this section, we review the basics of the BFGS quasi-Newton method, which is the main algorithm we analyze. Consider the case that we aim to minimize a differentiable convex function . The BFGS update is given by

| (3) |

where is the step size and is a positive definite matrix that aims to approximate the true Hessian inverse . There are several approaches for approximating leading to different quasi-Newton methods, (Conn et al., 1991; Broyden, 1965; Broyden et al., 1973; Gay, 1979; Davidon, 1959; Fletcher and Powell, 1963; Broyden, 1970; Fletcher, 1970; Goldfarb, 1970; Shanno, 1970; Nocedal, 1980; Liu and Nocedal, 1989), but in this paper, we focus on the celebrated BFGS method, in which is updated as

| (4) |

where the variable variation and gradient displacement are defined as

| (5) |

for any respectively. The logic behind the update in (4) is to ensure that the Hessian inverse approximation satisfies the secant condition , while it stays close to the previous approximation matrix . The update in (4) only requires matrix-vector multiplications, and hence, the computational cost per iteration of BFGS is .

The main advantage of BFGS is its fast superlinear convergence rate under the assumption of strict convexity,

where is the optimal solution. Previous results on the superlinear convergence of quasi-Newton methods were all asymptotic, until the recent advancements on the non-asymptotic analysis of quasi-Newton methods (Rodomanov and Nesterov, 2021a, b, c; Jin and Mokhtari, 2020; Jin et al., 2022; Ye et al., 2021; Lin et al., 2021a, b). For instance, Jin and Mokhtari (2020) established a local superlinear convergence rate of for BFGS. However, all these superlinear convergence analyses require the objective function to be smooth and strictly or strongly convex. Alas, these conditions are not satisfied in the low SNR setting, since the Hessian at the optimal solution could be singular, and hence the loss function is neither strongly convex nor strictly convex; we further discuss this point in Section 3. This observation implies that we need novel convergence analyses to study the behavior of BFGS in the low SNR setting, as we discuss in the upcoming sections.

3 Generalized linear model with polynomial link function

In this section, we formally present the generalized linear model (GLM) setting that we consider in our paper, and discuss the low and high SNR settings and optimization challenges corresponding to these cases. Consider the case that the feature vectors are denoted by and their corresponding labels are denoted by . Suppose that we have access to sample points that are i.i.d. samples from the following generalized linear model with polynomial link function of power (Carroll et al., 1997), i.e.,

| (6) |

where is a true but unknown parameter, is a given power, and are independent Gaussian noises with zero mean and variance . The Gaussian assumption on the noise is for the simplicity of the discussion and similar results hold for the sub-Gaussian i.i.d. noise case. Furthermore, we assume the feature vectors are generated as where is a symmetric positive definite matrix. Here we focus on the settings that and .

The above class of GLMs with polynomial link functions arise in several settings. When , the model in (6) is the standard linear regression model, and for the case that , the above setup corresponds to the phase retrieval model (Fienup, 1982; Shechtman et al., 2015; Candes et al., 2011; Netrapalli et al., 2015), which has found applications in optical imaging, x-ray tomography, and audio signal processing. Moreover, the analysis of GLMs with also serves as the basis of the analysis on other popular statistical models. For example, as shown by Yi and Caramanis (2015); Wang et al. (2015); Xu et al. (2016); Balakrishnan et al. (2017); Daskalakis et al. (2017); Dwivedi et al. (2020a); Kwon et al. (2019); Dwivedi et al. (2020b); Kwon et al. (2021), the local landscape of log-likelihood for Gaussian mixture models and mixture linear regression models are identical to GLMs for .

In the case that the polynomial link function parameter in the GLM model in (6) is , by adapting similar arguments from Kwon et al. (2021) under the symmetric two-component location Gaussian mixture, there are essentially three regimes for estimating the true parameter : Low signal-to-noise ratio (SNR) regime: where is the dimension, is the sample size, and is a universal constant; Middle SNR regime: where is a universal constant; High SNR regime: . The main idea is that with different , the optimization landscape of the parameter estimation problem changes. By generalizing the insights from the case , we define the following regimes for any :

-

•

(i) Low SNR regime: where is the dimension, is the sample size, and is a universal constant;

-

•

(ii) Middle SNR regime: where is a universal constant;

-

•

(iii) High SNR regime: .

Note that, the rate that we use to define the SNR regimes is from the statistical rate of estimating the true parameter when approaches 0. Next, we provide insight into the landscape of the least-square loss function for each regime. In particular, given the GLM in (6), the sample least-square takes the following form:

| (7) |

To obtain insight about the landscape of the empirical loss function , a useful approach is to consider that function by its population version, which we refer to as population least-square loss function:

| (8) |

where the outer expectation is taken with respect to the data.

High SNR regime. In the setting that the ground truth parameter has a relatively large norm, i.e., for some constant that only depends on , the population loss in (8) is locally strongly convex and smooth around . More precisely, when is small, using Taylor’s theorem we have

Hence, in a neighborhood of the optimal solution, the objective in (8) can be approximated as

Indeed, if the above function behaves as a quadratic function that is smooth and strongly convex, assuming that is negligible. As a result, the iterates of gradient descent (GD) converge to the solution at a linear rate and it requires to reach an -accurate solution, where depends on the conditioning of the covariance matrix and the norm of . In this case, BFGS converges superlinearly to and the rate would be independent of , while the cost per iteration would be .

Low SNR regime. As mentioned above, in the high SNR case, GD has a fast linear rate. However, in the low SNR case where is small and , the strong convexity parameter approaches zero when the sample size goes to infinity and the problem becomes ill-conditioned. In this case, we deal with a function that is only convex and its gradient is not Lipschitz continuous. To better elaborate on this point, let us focus on the case that as a special case of the low SNR setting. Considering the underlying distribution of , which is , for such a low SNR case, the population loss can be written as

| (9) |

Since we focus on it can be verified that is not strongly convex in a neighborhood of the solution . For this class of functions, it is well-known that GD with constant step size would converge at a sublinear rate, and hence GD iterates require polynomial number of iterations to reach the final statistical radius. In the next section, we study the behavior of BFGS for solving the low SNR setting and showcase its advantage compared to GD.

Middle SNR regime. Different from the low and high SNR regimes, the middle SNR regime is generally harder to analyze as the landscapes of both the population and sample least-square loss functions are complex. Adapting the insight from middle SNR regime of the symmetric two-component location Gaussian mixtures from Kwon et al. (2021), for the middle SNR setting of the generalized linear model, the eigenvalues of the Hessian matrix of the population least-square loss function approach 0 and their vanishing rates depend on some increasing function of . The optimal statistical rate of the optimization algorithms, such as gradient descent algorithm, for solving depends strictly on the tightness of these vanishing rates in terms of , which are non-trivial to obtain. In fact, to the best of our knowledge, there is no result on the convergence of iterative methods (such as GD or its variants) for GLMs with a polynomial link function in the middle SNR. Hence, we leave the study of BFGS for this setting as a future work.

4 Convergence analysis in the low SNR regime: Population loss

In this section, we focus on the convergence properties of BFGS for the population loss in the low SNR case introduced in (9). This analysis provides an intuition for the analysis of the finite sample case that we discuss in Section 5, as we expect these two loss functions to be close to each other when the number of samples is sufficiently large. In this section, instead of focusing on the population loss within the low SNR regime, as described in (9), we shift our attention to a more encompassing objective function, . Detailed in the following expression, this function encompasses the population loss function with as a specific example. This approach enables us to present our results in the most general setting possible. Specifically, we examine the function , defined as follows:

| (10) |

where is a matrix, is a given vector, and satisfies . We should note that for , the considered objective is not strictly convex because the Hessian is singular when . Indeed, if we set and further let be and choose , then we recover the problem in (9) for . Note that since we focus on the generalized linear model with the polynomial link function, which necessitates that be an integer, the parameter is also an integer.

Notice that the problem in (10), which serves as a surrogate for the finite sample problem that we plan to study in the next section, has the same solution set as the quadratic problem of minimizing with solution when is invertible. Given this point, one might suggest that instead of minimizing (10) we could directly solve the quadratic problem which is indeed much easier to solve. This point is valid, but the goal of this section is not to efficiently solve problem (10) itself. Our goal is to understand the convergence properties of the BFGS method for solving the problem in (10) with the hope that it will provide some intuitions for the convergence analysis of BFGS for the empirical loss (7) in the low SNR regime. As we will discuss in Section 5, the convergence analysis of BFGS on the population loss which is a special case of (10) is closely related to the one for the empirical loss in (7).

Remark 1.

One remark is that population loss in (9) holds for the restrictive assumption of , which is only a special case of the general low SNR regime of . Our ultimate goal is to analyze the convergence behavior of the BFGS method applied to the empirical loss (7) of GLM problems in the low SNR regime. The errors between gradients and Hessians of the population loss with and are upper bounded by the corresponding statistical errors between the population loss (8) and the empirical loss (7) in the low SNR regime, respectively. Therefore, the errors between iterations of applying BFGS to the population loss with and are negligible compared to the statistical errors. Instead of directly analyzing BFGS for the population loss (8) in the general low SNR regime, studying the convergence properties of BFGS for the population loss (9) with can equivalently lay foundations for the convergence analysis of BFGS for the empirical loss (7) in the low SNR regime, which is the main target of this paper. The details can be found in Appendix A.4.

Our results in this section are of general interest from an optimization perspective, since there is no global convergence theory (without line-search) for the BFGS method in the literature when the objective function is not strictly convex. Our analysis provides one of the first results for such general settings. That said, it should be highlighted that our results do not hold for general convex problems, and we do utilize the specific structure of the considered convex problem to establish our results. The following examples illustrate some of the specific structures that we exploit to establish our result.

Assumption 1.

There exists , such that . In other words, is in the range of matrix .

This assumption implies that the problem in (10) is realizable, is an optimal solution, and the optimal function value is zero. This assumption is satisfied in our considered low SNR setting in (9) as which implies .

Assumption 2.

The matrix is invertible. This is equivalent to .

The above assumption is also satisfied for our considered setting as we assume that the covariance matrix for our input features is positive definite. Combining Assumptions 1 and 2, we conclude that is the unique optimal solution of problem (10). Next, we state the convergence rate of BFGS for solving problem (10) under the disclosed assumptions. The proof of the next theorem is available in Appendix A.1.

Theorem 1.

Consider the BFGS method in (3)-(5). Suppose Assumptions 1 and 2 hold, and the initial Hessian inverse approximation matrix is selected as , where is an arbitrary initial point. If the step size is for all , then the iterates of BFGS converge to the optimal solution at a linear rate of

| (11) |

where the contraction factors satisfy

| (12) |

Theorem 1 shows that the iterates of BFGS converge globally at a linear rate to the optimal solution of (10). This result is of interest as it illustrates the iterates generated by BFGS converge globally without any line search scheme and the step size is fixed as for any . Moreover, the initial point is arbitrary and there is no restriction on the distance between and the optimal solution .

We should highlight the above linear convergence result and our convergence analysis both rely heavily on the distinct structure of problem (10) and may not hold for a general convex minimization problem. Specifically, it can be shown that if we had access to the exact Hessian and could perform a Newton’s update to solve the problem in (10), then the error vectors would all be parallel. This property arises due to the fact that for the problem in (10) the Newton direction is . Consequently, the next error vector computed by running one step of Newton would satisfy . Therefore, the error vector at time , denoted by , is parallel to the previous error vector , with the only difference being that its norm is reduced by a factor of . Using an induction argument, it simply follows that all error vectors remain parallel to each other while their norm contracts at a rate of . This is a key point that is used in the result for Newton’s method in Theorem 3. In the convergence analysis of BFGS (stated in the proof of Theorem 1), we use a similar argument. While we cannot guarantee that the Hessian approximation matrix in the BFGS method matches the exact Hessian, we demonstrate that it can inherit this property, maintaining all error vectors as parallel to each other. Additionally, we show that the norm is shrinking at a factor of , which is always larger than , yet remains independent of the problem’s condition number or dimensions. In the following theorem, we show that for , the linear rate contraction factors also converge linearly to a fixed point contraction factor determined by the parameter . The proof is available in Appendix A.2.

Theorem 2.

Based on Theorem 2, the iterates of BFGS eventually converge to the optimal solution at the linear rate of determined by (13). Specifically, the factors converge to the fixed point at a linear rate with the contraction factor of . Further, the linear convergence factors and their limit are all only determined by , and they are independent of the dimension and the condition number of the matrix . Hence, the performance of BFGS is not influenced by high-dimensional or ill-conditioned problems. This result is independently important from an optimization point of view, as it provides the first global linear convergence of BFGS without line-search for a setting that is not strictly or strongly convex, and interestingly the constant of linear convergence is independent of dimension or condition number.

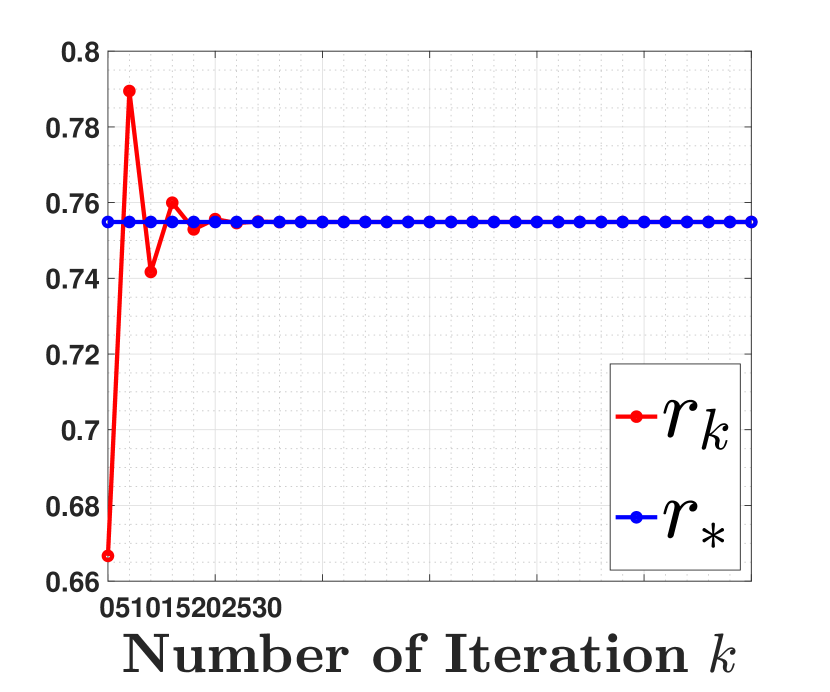

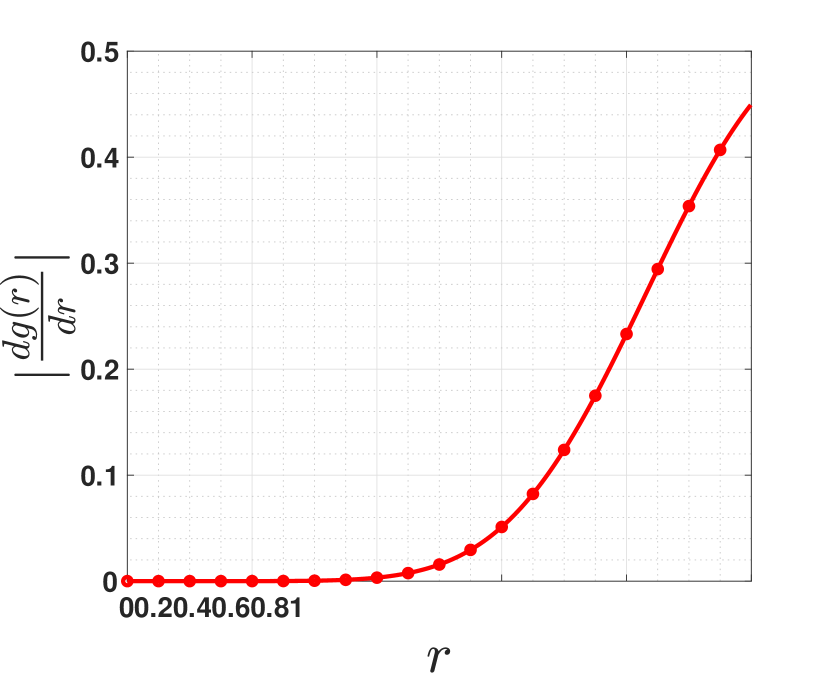

We illustrate the convergence of factors to the fixed point in Figure 1 for and . In plots (a) and (b), we observe that becomes close to after only iterations. Hence, the linear convergence rate of BFGS is approximately after only a few iterations. We further observe in plots (c) and (d) that the factors converge to the fixed point at a linear rate upper bounded by . Note that is the solution of (13). These plots verify our results in Theorem 2.

Remark 2.

The cost of computing the initial Hessian inverse approximation is , but this cost is only required for the first iteration, and it is not required for as for those iterates we update the Hessian inverse approximation matrix based on the update in (4) at a cost of .

Remark 3.

Although the vanilla Gradient Descent (GD) method converges at a sub-linear rate when applied to problem (10), it has been shown that other first-order methods, such as Mirror Descent with a distance-generating function can solve problem (10) at a linear rate (refer to Lu et al. (2018)). However, the linear convergence rate of Mirror Descent is dependent on the condition number of the problem, characterized by the rate . In contrast, as demonstrated by Theorems 1 and 2, the linear convergence rate of BFGS is independent of the problem’s condition number. Consequently, in settings with low SNR, which can be ill-conditioned, we anticipate a faster convergence rate for BFGS compared to Mirror Descent. We illustrate this point in our numerical experiments presented in Figure 2.

4.1 Comparison with Newton’s method

Next, we compare the convergence results of BFGS for solving problem (10) with the one for Newton’s method. The following theorem characterizes the global linear convergence of Newton’s method with unit step size applied to the objective function in (10).

Theorem 3.

Consider applying Newton’s method to optimization problem (10) and suppose Assumptions 1 and 2 hold. Moreover, suppose the step size is for any . Then, the iterates of Newton’s method converge to the optimal solution at a linear rate of

| (15) |

Moreover, this linear convergence rate is smaller than the fixed point defined in (13) of the BFGS quasi-Newton method, i.e., for all .

The proof is available in Appendix A.3. The convergence results of Newton’s method are also global without any line search method, and the linear rate is independent of dimension and condition number . Furthermore, the condition implies that iterates of Newton’s method converge faster than BFGS, but the gap is not substantial as . On the other hand, the computational cost per iteration of Newton’s method is which is worse than the of BFGS.

Moving back to our main problem, one important implication of the above convergence results is that in the low SNR setting the iterates of BFGS converge linearly to the optimal solution of the population loss function, while the contraction coefficient of BFGS is comparable to that of Newton’s method which is . For instance, for , the linear rate contraction factor of Newton’s method are and the approximate linear rate contraction factor of BFGS denoted by are , respectively.

5 Convergence analysis in the low SNR regime: Finite sample setting

Thus far, we have demonstrated that the BFGS iterates converge linearly to the true parameter when minimizing the population loss function of the GLM in (9) in the low SNR regime. In this section, we study the statistical behavior of the BFGS iterates for the finite sample case by leveraging the insights developed in the previous section about the convergence rate of BFGS in the infinite sample case, i.e., population loss. More precisely, we focus on the application of BFGS for solving the least-square loss function defined in (7) for the low SNR setting. The iterates of BFGS in this case follow the update rule

| (16) |

where is updated using the gradient information of the loss by the BFGS rule.

We next show that the BFGS iterates (16) converge to the final statistical radius within a logarithmic number of iterations under the low SNR regime of the GLMs. To prove this claim, we track the difference between the iterates generated based on the empirical loss and the iterates generated according to the population loss. Assuming that they both start from the same initialization , with the concentration of the gradient and the Hessian from Mou et al. (2019); Ren et al. (2022b) we control the deviation between these two sequences. Using this bound and the convergence results of the iterates generated based on the population loss discussed in the previous section, we prove the following result for the finite sample setting. The proof is available in Appendix A.5.

Theorem 4.

Consider the low SNR regime of the GLM in (6) namely, . Apply the BFGS method to the empirical loss (7) with the initial point , where for some , the initial Hessian inverse approximation matrix as , where is positive definite and step size . For any failure probability , if the number of samples is , and the number of iterations satisfies , then with probability , we have

| (17) |

where , , , and are constants independent of and .

Our analysis hinges on linking the gradient and Hessian of the empirical loss to the population loss, subsequently demonstrating a convergence rate for the empirical loss based on the linear convergence established in Section 4 for the population loss. More details can be found in Appendix A.5. Theorem 4 shows that BFGS achieves an estimation accuracy of in iterations, which is substantialy faster than GD that requires iteations to achieve the same guarantee as shown in (Ren et al., 2022a). A few comments about Theorem 4 are in order.

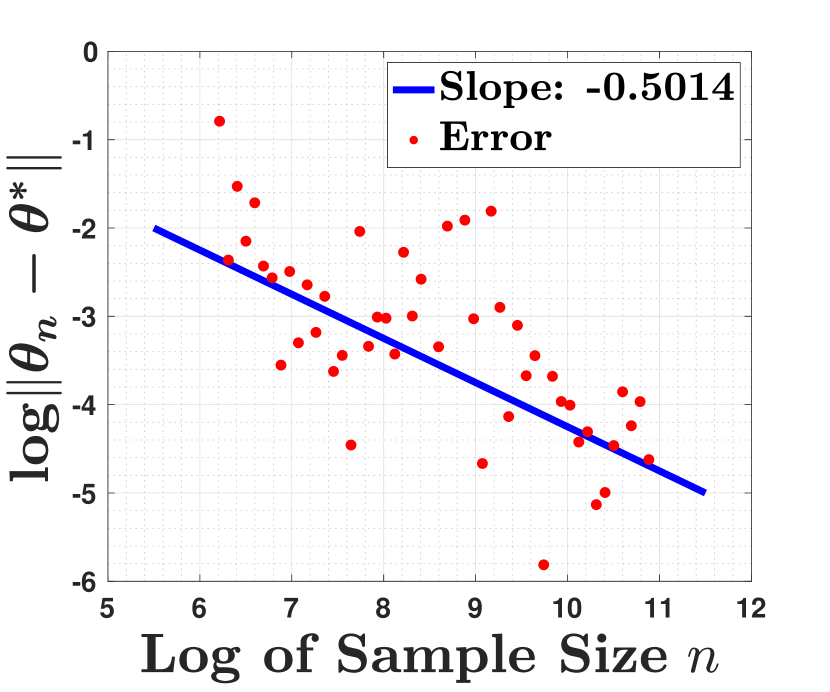

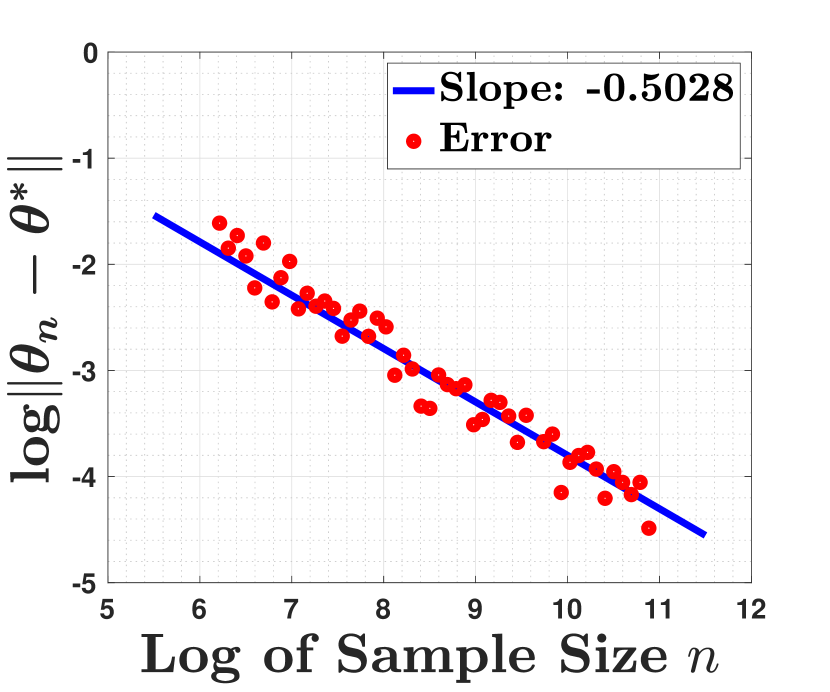

Comparison to GD, GD with Polyak step size, and Newton’s method: Theorem 4 indicates that under the low SNR regime, the BFGS iterates reach the final statistical radius within the true parameter after number of iterations. The statistical radius is slightly worse than the optimal statistical radius . However, we conjecture that this is due to the proof technique and BFGS can still reach the optimal in practice. In our experiments, in the next section, we observe that when and , the statistical radius of BFGS is closer to the optimal radius of instead of suggested by our analysis. We leave an improvement of the statistical analysis as the future work. On the other hand, the overall iteration complexity of BFGS, which is , is indeed better than the polynomial number of iterations of GD, which is at the order of (Corollary 3 in (Ho et al., 2020)).

Moreover, the complexity of BFGS is better than the one for GD with Polyak step size which is iterations (Corollary 1 in (Ren et al., 2022a)), where is the condition number of the covariance matrix . Note that while the iteration complexity of BFGS is comparable to that of GD with Polyak step size in terms of the sample size, the BFGS overcomes the need to approximate the optimal value of the sample least-square loss , which can be unstable in practice, and also removes the dependency on the condition number that appears in the complexity bound of GD with Polyak step size. A similar conclusion also holds for mirror descent with a distance-generating function , as its linear convergence rate has a contraction factor of , leading to an overall iteration complexity of .

Finally, the iteration complexity of BFGS is comparable to the of Newton’s method (Corollary 3 in (Ho et al., 2020)), while per iteration cost of BFGS is substantially lower than Newton’s method.

On the minimum number of iterations: The results in Theorem 4 involve the minimum number of iterations, namely, this result holds for some . It suggests that the BFGS iterates may diverge after they reach the final statistical radius. As highlighted in (Ho et al., 2020), such instability behavior of BFGS is inherent to fast and unstable methods. While it may sound limited, this issue can be handled via an early stopping scheme using the cross-validation approaches. We illustrate such early stopping of the BFGS iterates for the low SNR regime in Figure 3.

6 Numerical experiments

Our experiments are divided into two sections: the first focuses on iterative methods’ behavior on the population loss of GLMs with polynomial link functions, and the second examines the finite sample setting.

Experiments for the population loss function. In this section, we compare the performance of Newton’s method, BFGS, GD with constant step size, GD with Polyak step size, and and mirror descent with constant step size and distance generating function applied to (10) which corresponds to the population loss. We choose different values of parameter , dimension and the exponential parameter in (10). We generate a random matrix and a random vector , and compute the vector . The initial point is also generated randomly. To properly select the steps for GD with a fixed step size , we employed a manual grid search across the following values and selected the value that yielded the best performance for each specific problem. In our plots, we present the logarithm of error versus the number of iteration for different algorithms. All the values of different parameters , , and are mentioned in the caption of the plots.

In Figure 2, we observe that GD with constant step converges slowly due to its sub-linear convergence rate. The performance of GD with Polyak step size is also poor when dimension is large or the parameter is huge. This is due to the fact that as dimension increases the problem becomes more ill-conditioned and hence the linear convergence contraction factor approaches . We observe that both Newton’s method and BFGS generate iterations with linear convergence rates, and their linear convergence rates are only affected by the parameter , i.e., the dimension has no impact over the performance of BFGS and Newton’s method. Although the convergence speed of Newton’s method is faster than BFGS, their gap is not significantly large as we expected from our theoretical results in Section 4. We also observe that mirror descent outperforms GD, but its performance is not as good as the BFGS method.

Experiments for the empirical loss function. We next study the statistical and computational complexities of BFGS on the empirical loss. In our experiments, we first consider the case that and the power of the link function is , namely, we consider the multivariate setting of the phase retrieval problem. The data is generated by first sampling the inputs according to where , and then generating their labels based on where are i.i.d. samples from . In the low SNR regime, we set , and in the high SNR regime we select uniformly at random from the unit sphere. Further, for GD, we set the step size as , while for Newton’s method and BFGS, we use the unit step size .

In plots (a) and (b) of Figure 3, we consider the setting that the sample size is , and we run GD, GD with Polyak step size, BFGS, and Newton’s method to find the optimal solution of the sample least-square loss . Furthermore, for both Newton’s method and the BFGS algorithm, due to their instability, we also perform cross-validation to choose their early stopping. In particular, we split the data into training and the test sets. The training set consists of of the data while the test set has of the data. The yellow points in plots (a) and (b) of Figure 3 show the iterates of BFGS and Newton, respectively, with the minimum validation loss. As we observe, under the low SNR regime, the iterates of GD with Polyak step size, BFGS and Newton’s method converge geometrically fast to the final statistical radius while those of the GD converge slowly to that radius. Under the high SNR regime, the iterates of all of these methods converge geometrically fast to the final statistical radius. The faster convergence of GD with Polyak step size over GD is due to the optimal Polyak step size, while the faster convergence of BFGS and Newton’s method over GD is due to their independence on the problem condition number. Finally, in plots (c) and (d) of Figure 3, we run BFGS when the sample size is ranging from to to empirically verify the statistical radius of these methods. As indicated in the plots of that figure, under the high SNR regime, the BFGS has statistical radius is , while under the low SNR regime, its statistical radius becomes .

To show that BFGS can also be applied to high dimension scenarios, we conduct additional experiments on the generalized linear model with input and the power of link function . The inputs are generated by where , and the remaining setting and hyper-parameters are set identical to the low dimension scenarios. The results are shown in Figure 4 and 5. As the results show, the performance of BFGS in high dimensional scenarios are nearly identical to the low dimensional scenarios.

7 Conclusions

In this paper, we analyzed the convergence rates of BFGS on both population and empirical loss functions of the generalized linear model in the low SNR regime. We showed that in this case, BFGS outperforms GD and performs similar to Newton’s method in terms of iteration complexity, while it requires a lower per iteration computational complexity compared to Newton’s method. We also provided experiments for both infinite and finite sample loss functions and showed that our empirical results are consistent with our theoretical findings. Perhaps one limitation of the BFGS method is that its computational cost is still not linear in the dimension and scales as . One future research direction is to analyze some other iterative methods such as limited memory-BFGS (L-BFGS) which may be able to achieve a fast linear convergence rate in the low SNR setting, while its computational cost per iteration is .

References

- Agarwal et al. (2012) A. Agarwal, S. Negahban, and M. J. Wainwright. Fast global convergence of gradient methods for high-dimensional statistical recovery. Annals of Statistics, 40(5):2452–2482, 2012.

- Balakrishnan et al. (2017) S. Balakrishnan, M. J. Wainwright, and B. Yu. Statistical guarantees for the EM algorithm: From population to sample-based analysis. Annals of Statistics, 45:77–120, 2017.

- Broyden et al. (1973) C. G. Broyden, J. E. Dennis Jr., Broyden, and J. J. More. On the local and superlinear convergence of quasi-Newton methods. IMA J. Appl. Math, 12(3):223–245, June 1973.

- Broyden (1965) Charles G Broyden. A class of methods for solving nonlinear simultaneous equations. Mathematics of computation, 19(92):577–593, 1965.

- Broyden (1970) Charles G Broyden. The convergence of single-rank quasi-Newton methods. Mathematics of Computation, 24(110):365–382, 1970.

- Candes et al. (2011) Emmanuel J. Candes, Yonina Eldar, Thomas Strohmer, and Vlad Voroninski. Phase retrieval via matrix completion, 2011.

- Carroll et al. (1997) R. J. Carroll, J. Fan, I. Gijbels, and M. P. Wand. Generalized partially linear single-index models. Journal of the American Statistical Association, 92:477–489, 1997.

- Conn et al. (1991) Andrew R. Conn, Nicholas I. M. Gould, and Ph L Toint. Convergence of quasi-Newton matrices generated by the symmetric rank one update. Mathematical programming, 50(1-3):177–195, 1991.

- Daskalakis et al. (2017) C. Daskalakis, C. Tzamos, and M. Zampetakis. Ten steps of EM suffice for mixtures of two Gaussians. In Proceedings of the 2017 Conference on Learning Theory, 2017.

- Davidon (1959) WC Davidon. Variable metric method for minimization. Technical report, Argonne National Lab., Lemont, Ill., 1959.

- Dwivedi et al. (2020a) R. Dwivedi, N. Ho, K. Khamaru, M. J. Wainwright, M. I. Jordan, and B. Yu. Sharp analysis of expectation-maximization for weakly identifiable models. AISTATS, 2020a.

- Dwivedi et al. (2020b) R. Dwivedi, N. Ho, K. Khamaru, M. J. Wainwright, M. I. Jordan, and B. Yu. Singularity, misspecification, and the convergence rate of EM. Annals of Statistics, 44:2726–2755, 2020b.

- Feiyan Tian (2021) Xiaoming Chen Feiyan Tian, Lei Liu. Generalized memory approximate message passing. https://arxiv.org/abs/2110.06069, 2021.

- Fienup (1982) J. R. Fienup. Phase retrieval algorithms: a comparison. Appl. Opt., 21(15):2758–2769, Aug 1982. doi: 10.1364/AO.21.002758. URL http://www.osapublishing.org/ao/abstract.cfm?URI=ao-21-15-2758.

- Fletcher (1970) Roger Fletcher. A new approach to variable metric algorithms. The computer journal, 13(3):317–322, 1970.

- Fletcher and Powell (1963) Roger Fletcher and Michael JD Powell. A rapidly convergent descent method for minimization. The computer journal, 6(2):163–168, 1963.

- Gay (1979) David M Gay. Some convergence properties of Broyden’s method. SIAM Journal on Numerical Analysis, 16(4):623–630, 1979.

- Goebel and Kirk (1990) Kazimierz Goebel and W. A. Kirk. Topics in Metric Fixed Point Theory. Cambridge University Press, 1990.

- Goldfarb (1970) Donald Goldfarb. A family of variable-metric methods derived by variational means. Mathematics of computation, 24(109):23–26, 1970.

- Hardt et al. (2016) Moritz Hardt, Ben Recht, and Yoram Singer. Train faster, generalize better: Stability of stochastic gradient descent. In Maria Florina Balcan and Kilian Q. Weinberger, editors, Proceedings of The 33rd International Conference on Machine Learning, volume 48 of Proceedings of Machine Learning Research, pages 1225–1234, New York, New York, USA, 20–22 Jun 2016. PMLR. URL http://proceedings.mlr.press/v48/hardt16.html.

- Ho et al. (2020) N. Ho, K. Khamaru, R. Dwivedi, M. J. Wainwright, M. I. Jordan, and B. Yu. Instability, computational efficiency and statistical accuracy. Arxiv Preprint Arxiv: 2005.11411, 2020.

- Jin and Mokhtari (2020) Qiujiang Jin and Aryan Mokhtari. Non-asymptotic superlinear convergence of standard quasi-newton methods. arXiv preprint arXiv:2003.13607, 2020.

- Jin et al. (2022) Qiujiang Jin, Alec Koppel, Ketan Rajawat, and Aryan Mokhtari. Sharpened quasi-newton methods: Faster superlinear rate and larger local convergence neighborhood. The 39th International Conference on Machine Learning (ICML 2022), 2022.

- Kwon et al. (2019) J. Kwon, W. Qian, C. Caramanis, Y. Chen, , and D. Damek. Global convergence of the EM algorithm for mixtures of two component linear regression. In Conference on Learning Theory (COLT), 2019.

- Kwon et al. (2021) J. Y. Kwon, N. Ho, and C. Caramanis. On the minimax optimality of the EM algorithm for learning two-component mixed linear regression. In AISTATS, 2021.

- Lin et al. (2021a) Dachao Lin, Haishan Ye, and Zhihua Zhang. Explicit superlinear convergence of broyden’s method in nonlinear equations. arXiv preprint arXiv:2109.01974, 2021a.

- Lin et al. (2021b) Dachao Lin, Haishan Ye, and Zhihua Zhang. Greedy and random quasi-newton methods with faster explicit superlinear convergence. Advances in Neural Information Processing Systems 34, 2021b.

- Liu and Nocedal (1989) Dong C Liu and Jorge Nocedal. On the limited memory BFGS method for large scale optimization. Mathematical programming, 45(1-3):503–528, 1989.

- Loh and Wainwright (2015) Po-Ling Loh and Martin J Wainwright. Regularized M-estimators with nonconvexity: Statistical and algorithmic theory for local optima. Journal of Machine Learning Research, 16:559–616, 2015.

- Lu et al. (2018) Haihao Lu, Robert M Freund, and Yurii Nesterov. Relatively smooth convex optimization by first-order methods and applications. In SIAM Journal on Optimization, 28(1):333–354, 2018.

- Mou et al. (2019) Wenlong Mou, Nhat Ho, Martin J Wainwright, Peter Bartlett, and Michael I Jordan. A diffusion process perspective on posterior contraction rates for parameters. arXiv preprint arXiv:1909.00966, 2019.

- Netrapalli et al. (2015) Praneeth Netrapalli, Prateek Jain, and Sujay Sanghavi. Phase retrieval using alternating minimization. IEEE Transactions on Signal Processing, 63(18):4814–4826, 2015. doi: 10.1109/TSP.2015.2448516.

- Nocedal (1980) Jorge Nocedal. Updating quasi-Newton matrices with limited storage. Mathematics of computation, 35(151):773–782, 1980.

- Ren et al. (2022a) Tongzheng Ren, Fuheng Cui, Alexia Atsidakou, Sujay Sanghavi, and Nhat Ho. Towards statistical and computational complexities of Polyak step size gradient descent. Artificial Intelligence and Statistics Conference, 2022a.

- Ren et al. (2022b) Tongzheng Ren, Jiacheng Zhuo, Sujay Sanghavi, and Nhat Ho. Improving computational complexity in statistical models with second-order information. arXiv preprint arXiv:2202.04219, 2022b.

- Rodomanov and Nesterov (2021a) Anton Rodomanov and Yurii Nesterov. Greedy quasi-newton methods with explicit superlinear convergence. SIAM Journal on Optimization, 31(1):785–811, 2021a.

- Rodomanov and Nesterov (2021b) Anton Rodomanov and Yurii Nesterov. Rates of superlinear convergence for classical quasi-newton methods. Mathematical Programming, pages 1–32, 2021b.

- Rodomanov and Nesterov (2021c) Anton Rodomanov and Yurii Nesterov. New results on superlinear convergence of classical quasi-newton methods. Journal of Optimization Theory and Applications, 188(3):744–769, 2021c.

- Shanno (1970) David F Shanno. Conditioning of quasi-Newton methods for function minimization. Mathematics of computation, 24(111):647–656, 1970.

- Shechtman et al. (2015) Yoav Shechtman, Yonina C. Eldar, Oren Cohen, Henry Nicholas Chapman, Jianwei Miao, and Mordechai Segev. Phase retrieval with application to optical imaging: A contemporary overview. IEEE Signal Processing Magazine, 32(3):87–109, 2015. doi: 10.1109/MSP.2014.2352673.

- Wang et al. (2015) Z. Wang, Q. Gu, Y. Ning, and H. Liu. High-dimensional expectation-maximization algorithm: Statistical optimization and asymptotic normality. In Advances in Neural Information Processing Systems 28, 2015.

- Xu et al. (2016) J. Xu, D. Hsu, and A. Maleki. Global analysis of expectation maximization for mixtures of two Gaussians. In Advances in Neural Information Processing Systems 29, 2016.

- Ye et al. (2021) Haishan Ye, Dachao Lin, Zhihua Zhang, and Xiangyu Chang. Explicit superlinear convergence rates of the sr1 algorithm. arXiv preprintarXiv:2105.07162, 2021.

- Yi and Caramanis (2015) X. Yi and C. Caramanis. Regularized EM algorithms: A unified framework and statistical guarantees. In Advances in Neural Information Processing Systems 28, 2015.

- Yuan and Zhang (2013) Xiao-Tong Yuan and Tong Zhang. Truncated power method for sparse eigenvalue problems. Journal of Machine Learning Research, 14(Apr):899–925, 2013.

Appendix

Appendix A Proofs

Lemma 1.

Proof.

Notice that the Hessian of objective function (10) can be expressed as

| (19) |

We use the Sherman–Morrison formula. Suppose that is an invertible matrix and are two vectors satisfying that . Then, the matrix is invertible and

Applying the Sherman–Morrison formula with , and . Notice that is invertible, hence is invertible and

Therefore, we obtain that

| (20) |

As a consequence, we obtain the conclusion of the lemma. ∎

Lemma 2.

Banach’s Fixed-Point Theorem. Consider the differentiable function . Suppose that there exists such that for any . Now let be arbitrary and define the sequence as

| (21) |

Then, the sequence converges to the unique fixed point defined as

| (22) |

with linear convergence rate of

| (23) |

Proof.

Check [Goebel and Kirk, 1990]. ∎

A.1 Proof of Theorem 1

We use induction to prove the convergence results in (11) and (12). Note that by Assumption 1 and the gradient and Hessian of the objective function in (10) are explicitly given by

| (24) |

| (25) |

Applying Lemma 1, we can obtain that

| (26) |

First, we consider the initial iteration

Notice that by Assumption 1 and

| (27) |

Therefore, we obtain that

Condition (11) holds for with . Now we assume that condition (11) holds for where , i.e.,

| (28) |

Considering the condition in Assumption 1 and the condition in (28), we further have

which implies that

| (29) |

We further show that the variable displacement and gradient difference can be written as

and

Considering these expressions, we can show that the rank-1 matrix in the update of BFGS is given by

and the inner product can be written as

These two expressions allow us to simplify the matrix in the update of BFGS as

| (30) |

An important property of the above matrix is that its null space is the set of the vectors that are parallel to . Considering the expression for , any vector that is parallel to is in the null space of the above matrix. We can easily observe that the gradient defined in (29) satisfies this condition and therefore

| (31) |

This important observation shows that if the condition in (28) holds, then the BFGS descent direction can be simplified as

| (32) |

This simplification implies that for the new iterate , we have

| (33) |

where

| (34) |

Therefore, we prove that condition (11) holds for . By induction, we prove the linear convergence results in (11) and (12).

One property of this convergence results is that the error vectors are parallel to each other with the same direction as shown in (11). This indicates that the iterations converge to the optimal solution along the same straight line defined by . Only the length of each vector reduces to zero with the linear convergence rates specified in (12) and the direction remains all the same. Notice that this is not a common property for BFGS and Newton’s method. This property requires that the initial Hessian approximation matrix is and the convex problem must be quadratic problem (10). When the objective function is a general convex function or the initial Hessian approximation matrix is not , there is no guarantee that the error vectors are parallel to each other. To better visualize this property, we have plotted of for both Newton’s method and BFGS in Figures 6 and 7 with population loss function defined in (10). We observed that all values of are one, which indicates that all vectors are parallel to each other.

A.2 Proof of Theorem 2

Recall that we have

| (35) |

Consider that and define the function as

| (36) |

Suppose that satisfying that , which is equivalent to

| (37) |

Notice that

and

Since , we have that

Therefore, we obtain that

and

Our target is to prove that for any ,

First, we present the plots of for with in Figure 8. We observe that for , always holds.

Next, we prove that for and any , we have

which is equivalent to

| (38) |

Define the function as

| (39) |

We obtain that

| (40) |

where

| (41) |

Hence, we have that

| (42) |

where

| (43) |

Therefore, we obtain that

| (44) |

and

| (45) |

where

| (46) |

Now we define the function as

We observe that for , we have and we calculate that and . Hence, we obtain that for all , which indicates that for all ,

Therefore, for all , defined in (46) satisfies that and from (45) we know that . Hence, defined in (44) is decreasing function and . We know that , which implies that defined in (43) is decreasing function. So we have that . From (42) we know that and defined in (41) is increasing function for . Hence, we get that and from (40) we obtain that defined in(39) is decreasing function for . Therefore, we have that and condition (38) holds for all , which is equivalent to .

A.3 Proof of Theorem 3

Notice that the gradient and the Hessian of the objective function (10) can be expressed as

Applying Lemma 1, we can obtain that

Hence, we have that for any ,

Notice that by Assumption 1 and

Therefore, we prove the conclusion that for any ,

We observe that the iterations generated by Newton’s method also satisfy the parallel property, i.e., all vectors are parallel to each other with the same direction.

Notice that the function is strictly increasing and , as well as . Hence, we know that .

A.4 Elaboration of Remark 1

For the ease of presentation, we use to denote any constant that is independent of , , and can be varied case by case to simplify the proof. From Mou et al. [2019] and Lemma 6 of Ren et al. [2022b], we have that, as long as , we have the following two uniform concentration results holding with probability :

| (47) |

Notice that we have

where we use the fact that is independent of and , . Hence, we have that

| (48) |

| (49) |

We denote as the population loss function with respect to the assumption of . Therefore, we have

| (50) |

| (51) |

| (52) |

Hence, we have

Recall that is a Gaussian or sub-Gaussian random variable with . For any , we ahve that and in the low SNR regime, we have . Hence, we have

| (53) |

Similarly, we have that

| (54) |

Leveraging (47), (53) and (54), we obtain that

| (55) |

This explains the Remark 1 of assumption in section 4. The errors between gradients and Hessians of the population loss with and are upper bounded by the corresponding statistical errors between the population loss (8) and the empirical loss (7) in the low SNR regime, respectively. Hence, and can be treated as equivalent.

A.5 Proof of Theorem 4

In this section, we present the proof of Theorem 4. We use and to denote the population objective and empirical objective. Also, as discussed in the previous section, we use to refer to the population loss when the optimal solution is zero . For the ease of presentation, we use to denote any constant that is independent of , , and can be varied case by case to simplify the proof. To prove the result for the iterates generated by the finite sample loss function, we control the gap between the gradient of and . As discussed previously in (55) from A.4, we can show that in the low SNR setting, we have

| (56) | ||||

| (57) |

We further can show that for any . If this does not hold, then we have by the definition of the low SNR regime, which indicates that has already achieved the optimal statistical radius. Therefore, the following bounds hold with probability of at least ,

| (58) |

| (59) |

In the following proof, we assume that all the results hold with probability of at least . Given the fact that the difference between the gradient and Hessian of the finite sample loss, denoted as , and the population loss with the optimal value at zero, denoted by , is of the order of statistical accuracy, and considering that the iterates generated by running BFGS on converge to the optimal solution at a linear rate, we show that the iterates generated by running BFGS on the finite sample loss reach the statistical accuracy in a logarithmic number of iterations of the required statistical accuracy.

To simplify our presentation, we use as the iterates generated by running BFGS on the population loss and as the iterates generated by running BFGS on the finite sample loss . We assume that , i.e., the first iterates are the same for both population loss and empirical loss. Similarly, we denote the inverse Hessian approximation matrix of BFGS applied to the loss by and the inverse Hessian approximation matrix of BFGS applied to the loss by . We have that and . Given the results in Theorems 1 and 2, we know that the iterates generated by BFGS on converge to the optimal solution at a linear rate, i.e.,

| (60) |

where are the linear convergence rates and are the lower and upper bounds of the corresponding linear convergence rates.

More precisely, we show that if the total number of iterations that we run BFGS on is order of log of the inverse of statistical accuracy at most, i.e., , then for any we can show that the difference between the iterates generated by running BFGS on and is controlled and bounded above by

| (61) |

where . Now given the fact that approaches zero at a linear rate, we will use induction to prove the main claim of Theorem 4. We assume that for any , we have that

| (62) |

Otherwise, our results in Theorem 4 simply follow. To prove the claim in (61) we will use an induction argument. Before doing that, in the upcoming sections we prove the following intermediate results that will be used in the induction argument.

Lemma 3.

We denote and as the largest and smallest eigenvalue of the matrix , we have

where and are constants that only depend on and the last inequalities only holds for .

Proof.

Recall that from (51) and (52), we have

Using Cauchy–Schwarz inequality, we get that

where we use the fact that for any . If this does not hold, then we have by the definition of the low SNR regime, which indicates that has already achieved the optimal statistical radius. Similarly, we have that

where we use the same argument that . Using Taylor’s Theorem, we have that

where . Therefore, for any , we have that

Notice that are positive definite for any and is positive definite, hence there exists and such that for any and .

∎

A.5.1 Induction base

Now we use the induction argument to prove the claim in (61). Since the initial iterate for running BFGS on and are the same, we have . Now for the iterates generated after the first step of BFGS, we can show that the error between the iterates of two losses is bounded above by

| (63) | ||||

We observe that for invertible matrices and , we have . Given this observation and the results in Lemma 3 and (59), we can show that

Applying this bound into (63) and using the results in Lemma 3, the bounds in (58), we obtain that

| (64) |

Notice that from (64), we know that (61) holds for . Hence, the base of induction is complete.

A.5.2 Induction hypothesis and step

Now we assume for any , (61) holds, i.e., we have

Consider , we have that

| (65) |

Recall update rules for the Hessian approximation matrices:

Moreover, based on (31) we have

| (66) |

which implies that can be simplified as . Now we proceed to bound the gap between the BFGS descent direction on and , which can be upper bounded by

| (67) |

Now we bound these two terms separately. The first term in (67) can be bounded above by

| (68) |

where we use the fact that . Now using the fact that

we can further upper bound by

| (69) |

Next we proceed to bound the second term in (67) . The second term can be bounded as

| (70) |

Putting together the upper bounds in (68), (69), and (A.5.2) into (67), we obtain that

| (71) |

In the following lemmas, we establish upper bounds for the expressions in the right hand side of (71).

Lemma 4.

The norm of variable variation for the finite sample loss and its difference form the variable variation for the population loss are bounded above by

Moreover, this result implies that

Proof.

The first result simply follows from the fact that

where we used the induction assumption (61) and the linear convergence results (60) for the iterates generated on the population loss. The second claim can be also proved following

where we used the induction assumption (61), linear convergence results (60) and the assumption (62) that with sufficiently large to make the last inequality hold. ∎

Lemma 5.

The gap between the population loss and its finite sample version evaluated at the current iterates are bounded above by

Moreover, this result implies that

Proof.

We have that

where the first inequality is due to (58), the third inequality is due to the induction hypothesis (61) and linear convergence results in (60) and the forth inequality is due to assumption (62) and . We also have

where the first inequality is due to results in Lemma 3, the second inequality is due to the induction hypothesis (61) and , the third inequality is due to the induction hypothesis (61) and the linear convergence results (60) and the forth inequality is due to the assumption (62) and . The first claim follows using the fact that

Given this result, the second claim simply follows from the fact that

where we used results from Lemma 3, the linear convergence rate in (60) and the assumption (62) that again. ∎

Lemma 6.

The norm of gradient variation for the finite sample loss and its difference form the gradient variation for the population loss are bounded above by

Moreover, this result implies that

Proof.

The first claim simply follows from the following bounds,

where we used the results in Lemma 5 and the linear convergence results (60) of BFGS on the population loss. The second claim simply follows from,

where the third inequality is due to results in Lemma 5 and Lemma 3, the forth inequality is due to assumption (62) and the fifth inequality is due to linear convergence results (60). ∎

Lemma 7.

We have the following bounds:

Proof.

The first claim holds since

where the second inequality is due to results in Lemma 4 and Lemma 5, the third inequality is due to linear convergence rates (60) and the forth inequality is due to . The second claim holds since

where the second inequality is due to results in Lemma 4 and Lemma 6, the third inequality is due to linear convergence rates (60). ∎

Lemma 8.

The following bounds hold:

Proof.

The first claim holds since

where we use the linear convergence results (60) and the results in Lemma 3. The second claim holds since

where and we use the Taylor’s Theorem, the linear convergence rates (60) and results in Lemma 3. The last cliam holds since

where we use the second claim, the results from Lemma 7 and the assumption (62). ∎

Lemma 9.

We have the following bounds:

Proof.

Finally, we present an upper bound for the norm of the inverse Hessian approximation matrix .

Lemma 10.

The norm of the inverse Hessian approximation matrix is upper bounded by

Proof.

With all the above lemmas, we can complete the induction hypothesis. Applying results in Lemma 4, 5, 6, 9, and 10 into (71), we have that

Hence, we prove that

| (72) |

Notice that by induction and linear convergence rates (60), we observe that

| (73) | ||||

Therefore, leveraging (65), (72), and (73), we have that

We define that

Then, we have that

With the standard recursion, we know that for large enough. Hence, (61) holds for .

A.5.3 Final conclusion

Therefore, using induction we proved that (61) holds for all :

where . Notice that

The optimal with minimum should satisfy that

for which we obtain for some constant that is independent of and . Therefore, we prove the final conclusion that

where is a constant that is independent of and .

Appendix B Additional experiments for the medium SNR regime

Here we briefly illustrate the behavior of BFGS in Medium SNR regime. We consider the generalized linear model with and . The inputs are still generated by , but now is uniformly sampled from the sphere with radius .

The results are shown in Figure 9. We can see BFGS still converges fast, and the statistical radius of middle SNR regime lies between the High SNR and Low SNR. A rigorous characterization of the statistical radius of middle SNR regime will be left as future work.