State-of-the-art optical-based physical adversarial attacks for deep learning computer vision systems

Abstract

Adversarial attacks can mislead deep learning models into making false predictions by implanting small perturbations in the original input that are imperceptible to the human eye, which poses a huge security threat to computer vision systems based on deep learning. Compared to digital adversarial attacks, physical adversarial attacks are more realistic, as the perturbation is introduced to the input before it is captured and converted to a binary image inside the vision system. In this paper, we focus on physical adversarial attacks and further classify them into invasive and non-invasive. Optical-based physical adversarial attack techniques (e.g. using light irradiation) belong to the non-invasive category. Their perturbations are easily ignored by humans because they closely resemble effects generated by the natural environment in the real world. They are highly invisible and executable, and can pose a significant or even lethal threat to real systems. This paper focuses on optical-based physical adversarial attack techniques for computer vision systems, with emphasis on introducing and discussing these techniques.

Index Terms:

Adversarial attacks, Deep learning, security threat, Optical-based physical adversarial attack.

I Introduction

Computer vision uses computers and related equipment to simulate biological vision, so that computers can recognize three-dimensional environmental information through two-dimensional images. It is an important part of the field of artificial intelligence (AI). In recent years, with the rapid development of deep learning technology, computer vision technology based on deep neural networks (DNNs) has made rapid progress and has found a wide range of applications in face recognition [1], autonomous driving [2], intelligent manufacturing [3], biomedicine [4], etc.

However, DNNs have some inherent problems, in particular the unexplainability caused by the black-box nature of the algorithms, which makes it difficult to identify the causal factors that link the essential characteristics of examples to the predicted results. These problems lead to security loopholes in computer vision technology based on DNNs, which malicious attackers can exploit to launch adversarial attacks. An adversarial attack misleads the classifier into generating false predictions by making small perturbations to the original input. These small perturbations are imperceptible to humans, but can make the classifier generate false predictions with high confidence. As shown in the literature [5], an adversarial attack against a traffic sign recognition system can cause a self-driving car to misclassify traffic signs and thus cause the self-driving system to issue false control commands, which can lead to traffic accidents and even endanger the personal safety of the driver and passengers.

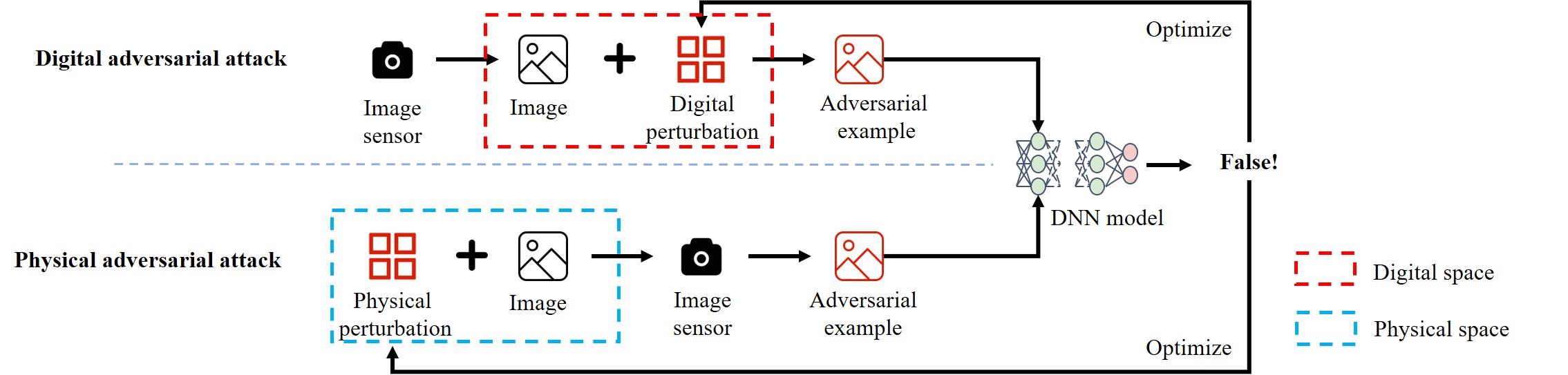

Adversarial attacks pose a huge challenge to the security of computer vision technology based on DNNs, and even to AI technology in general. At present, adversarial attacks are mainly divided into two categories: digital and physical adversarial attacks [6]. Digital adversarial attacks assume that attackers can directly manipulate the input binary images at the pixel level. They require direct access to, and modification of, digital image data, which is far less feasible in the real world, so they rarely pose an effective real threat to computer vision systems. Unlike digital adversarial attacks, physical adversarial attacks physically implant perturbations to modify the input before it is captured by the computer vision system and converted to a binary image (e.g. drawing markers on a real road sign). Physical adversarial attacks may not be as effective as digital adversarial attacks, because the way the perturbation is perceived by the system cannot be controlled as directly as in the digital setting. On the other hand, they can be implemented in the real world and have a significant or even fatal impact on real systems, so they have received more and more attention and research in recent years [7, 8, 9].

Since the receiving device (e.g. a camera) of a computer vision system is essentially a photoelectric sensor, optical interference can often have critical effects on the computer vision system. For example, the first fatality involving an autonomous car occurred when a Tesla Model S collided with a white tractor trailer. Tesla stated the incident occurred because “neither Autopilot nor the driver noticed the white side of the tractor trailer against a brightly lit sky.” [10]. Physical adversarial attack methods based on optical means have become a popular research direction in recent years, and some research results have been published so far [11, 12]. After a brief introduction of some basic concepts of adversarial attacks, this paper classifies physical adversarial attack techniques into invasive and non-invasive attacks, focuses on optical-based physical adversarial attack techniques for computer vision systems, and discusses and analyzes in depth their features, mechanisms, and advantages (e.g., high invisibility, simple deployment).

The analysis of physical adversarial attacks in this paper helps to discover endogenous security loopholes of DNNs in advance and to inspire the design of targeted defense solutions, which in turn reduce the security risks brought by the immaturity of AI and by malicious applications, enhance AI security, and promote the deep application of AI.

II Some basic concepts of adversarial attacks

In this section, we introduce some basic concepts of adversarial attacks. We first present the judgment conditions and general classification of adversarial attacks, then analyze the causes of adversarial perturbations, and finally introduce the two main categories of adversarial attacks: digital adversarial attacks and physical adversarial attacks.

II-A Judgment conditions and classification of adversarial attacks

Adversarial attacks were first proposed by Szegedy et al. [13], who showed that a small perturbation of the original image can trick the target model into making false predictions; the image to which the perturbation has been added is called an adversarial example. With the development of adversarial attack techniques, adversarial examples can now be either generated directly on a binary image (in the digital setting, e.g., by adding noise) or generated by capturing an attacked physical scene via image sensors such as cameras (in the physical setting, e.g., by adding fog or sunlight). In general, an adversarial attack satisfies the following three conditions:

1. The target model can make a correct prediction of the original image before a perturbation is added to it, which is shown by ordinal number 1 in Fig.1.

2. The target model must make a false prediction of the original image after a perturbation has been added to it, which is shown by ordinal number 2 in Fig.1.

3. Before and after adding perturbations to the original image, humans can make correct predictions based on their perceptions, which is shown in Fig.1 for ordinal number 3 (before adding perturbations) and ordinal number 4 (after adding perturbations).
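The three conditions above can be sketched as a simple predicate. This is only an illustrative sketch: the function name `is_adversarial_attack` and its arguments are hypothetical, and the human predictions are assumed to be available as labels (in practice they would come from a user study or from the ground truth).

```python
def is_adversarial_attack(model_clean, model_adv, human_clean, human_adv, true_label):
    """Check the three judgment conditions for one (original, perturbed) image pair.

    All arguments are class labels; the names are hypothetical and used
    for illustration only.
    """
    # Condition 1: the model is correct on the original image.
    cond1 = model_clean == true_label
    # Condition 2: the model is wrong once the perturbation is added.
    cond2 = model_adv != true_label
    # Condition 3: a human is correct both before and after the perturbation.
    cond3 = human_clean == true_label and human_adv == true_label
    return cond1 and cond2 and cond3


if __name__ == "__main__":
    print(is_adversarial_attack("stop", "speed limit", "stop", "stop", "stop"))
```

If the model already misclassifies the clean image, the predicate returns False, since the error cannot then be attributed to the perturbation.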

Adversarial attacks can be divided into two categories based on the predicted outcome: non-targeted attack and targeted attack:

Non-targeted attack

The attacker simply misleads the target model into outputting an arbitrary false prediction through the adversarial examples. It includes two cases. One is confidence reduction: the target model still predicts a "dog" adversarial example as a "dog" image, but with low confidence. The other is misclassification: the target deep model takes a "dog" adversarial example and predicts it as a "non-dog" image.

Targeted attack

The attacker tries to mislead the target model into outputting a specific false prediction through the adversarial examples. It includes two cases. One is targeted misclassification: the target model predicts adversarial examples of any class (e.g., "horse" and "cat" images) as a specific class (e.g., the "dog" class). The other is source/target misclassification: the target deep model takes an adversarial example of a specific class (e.g., "cat" images) and predicts it as another specific class (e.g., the "dog" class).
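As a minimal sketch of how these outcomes differ, the function below labels a single prediction. The name `attack_outcome` and the confidence threshold `conf_threshold` are assumptions made for illustration; the text does not fix a numerical threshold for "low confidence".

```python
def attack_outcome(pred_label, pred_conf, true_label, target_label=None, conf_threshold=0.5):
    """Label the outcome of one adversarial example (illustrative only).

    conf_threshold is a hypothetical cut-off below which a correct
    prediction counts as "confidence reduction".
    """
    if target_label is not None:
        # Targeted attack: success only if the model outputs the chosen wrong class.
        if pred_label == target_label and target_label != true_label:
            return "targeted success"
        return "failure"
    # Non-targeted attack: any wrong class counts as a misclassification.
    if pred_label != true_label:
        return "misclassification"
    # Still the right class, but with low confidence.
    if pred_conf < conf_threshold:
        return "confidence reduction"
    return "failure"


if __name__ == "__main__":
    print(attack_outcome("cat", 0.9, "dog"))
    print(attack_outcome("dog", 0.3, "dog"))
    print(attack_outcome("dog", 0.9, "cat", target_label="dog"))
```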

According to the time of the attack, the adversarial attack can also be divided into two categories: attack in the training stage and attack in the testing stage.

In general, the flow of a deep learning (DL) model includes two phases: the training phase (red dashed box) and the testing phase (blue dashed box), which is shown in Fig.2. Thus, the adversarial attack can also generate perturbations at different stages/times, including: training-phase attack (a.k.a. causative or poisoning attack) and testing-phase attack (a.k.a. exploratory or evasion attack) [14].

Training phase attack

In the training phase, the attacker can modify the training dataset by data injection, modifying data features and labels, etc. The aim is to make the target model learn the false target features, to interfere with and destroy the logic of the target model.

Testing phase attack

In the testing phase, the attacker cannot interfere with or destroy the logic of the target model. Instead, the attacker crafts adversarial examples based on the amount of knowledge obtained about the target model and uses them to force the pre-trained target model to generate false predictions [15]. According to the amount of knowledge of the target model obtained by the attacker, testing-phase attacks can be further classified into white-box attacks, black-box attacks, and gray-box attacks.

- White-box attack: The attacker assumes complete knowledge of the target model, including its parameter values, architecture, training method, and in some cases its training data as well [16]. Therefore, attackers can craft an adversarial example using full knowledge of the target deep model.

- Black-box attack: The attacker cannot access the DNN model, and thus cannot obtain the model structure and parameters. The attacker can only obtain the output of the target model by feeding inputs to it [17].

- Gray-box attack: The attacker assumes partial knowledge of the target model [18]. Therefore, attackers can use the known partial knowledge to infer more unknown knowledge of the target model and reason out its vulnerabilities.

II-B Reasons for adversarial perturbations

Adversarial perturbations mislead the target model by contaminating clean inputs so that the intrinsic structures that DNNs have learned for recognition or classification are changed [19]. In recent years, a large number of researchers have tried to analyze the causes of adversarial perturbations from different perspectives. Although there is no unified explanation, the possible causes can in general be divided into three categories:

(1) Local over-linearization of the DNN structure: minimal perturbations may be amplified as they propagate through the network.

Goodfellow et al. [20] believe that the cause of adversarial examples is linear behavior in a high-dimensional space. In a high-dimensional linear classifier, the minimal perturbations in each dimension are accumulated and amplified by the dot product operation. When the nonlinear sigmoid activation function is then used to calculate the classification probability, the probability of the original image I being classified into class "1" increases from 5% before the perturbation to 88% after it, which is shown in Fig.3. If the DNN uses a linear activation function, the original image is susceptible to an even larger difference in classification probability before and after perturbation.

(2) The training dataset contains insufficient target features, which causes the decision boundary of the DNN to stop evolving prematurely.

Some researchers also argue that the vulnerability of DNN models is caused by incomplete training, which is attributed to insufficient data [19]. Because the diversity of the target features makes the dataset high-dimensional, the decision boundary of the DNN model stops evolving prematurely and generalizes poorly when the training data do not cover enough of these features.

Suppose that the original target dataset has 6 features, namely circles and hexagons in red, blue, and green, but the randomly selected training dataset contains only the red, blue, and green circles, which is shown in Fig.4. During the training process, once the target deep model can distinguish the features contained in the training dataset well, the training may stop and the decision boundary will also stop evolving. For features not covered in the training dataset (e.g., hexagons), the target deep model will not be able to classify them correctly, so more data are needed for the model to learn more target features and improve its robustness.
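The accumulation effect can be sketched numerically with a toy linear classifier. This is a minimal illustration under stated assumptions, not the experiment behind Fig.3: the weights are random values of ±1, the clean logit is fixed at -3 (a probability of about 5%), and each input dimension is shifted by only 0.01 in the direction of the sign of its weight. The function name `linear_attack_demo` is hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def linear_attack_demo(n, eps=0.01, clean_logit=-3.0, seed=0):
    """Return (p_clean, p_adv) for an n-dimensional toy linear classifier."""
    rng = np.random.default_rng(seed)
    w = rng.choice([-1.0, 1.0], size=n)           # hypothetical weights
    x = rng.normal(size=n)                        # hypothetical clean input
    x -= (w @ x - clean_logit) / (w @ w) * w      # shift x so that w.x == clean_logit
    x_adv = x + eps * np.sign(w)                  # tiny step in every dimension
    # The logit moves by eps * ||w||_1 = eps * n, i.e. linearly in the dimension.
    return sigmoid(w @ x), sigmoid(w @ x_adv)


if __name__ == "__main__":
    for n in (10, 100, 500):
        p_clean, p_adv = linear_attack_demo(n)
        print(f"n={n:4d}  p_clean={p_clean:.3f}  p_adv={p_adv:.3f}")
```

With these assumed values the per-dimension change stays at 0.01 throughout, yet the logit shift grows linearly with the dimension. At 500 dimensions the shift is 5, which is enough to move the probability from roughly 5% to roughly 88%.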

(3) The presence of non-robust features in the classifier leads to inaccurate predictions.

Cubuk et al. [22] argue that the “origin of adversarial examples is primarily due to an inherent uncertainty that neural networks have about their predictions”. They argue that the uncertainty in the output results of the target model is independent of the network architecture, training method, and data set.

Suppose that a classifier contains robust features (dark square and circle) and non-robust features (light square and circle), and the red curve is the real decision boundary of the dataset, which is shown in Fig.5. Because of the non-robust features, the decision boundary of the trained classifier (blue curve in Fig.5) does not handle them well. Therefore, if these non-robust features are added to the input image, the target deep model will likely make false predictions.

II-C Digital adversarial attack vs. physical adversarial attack

In response to the large variety of approaches to adversarial attacks in recent years [23, 24, 25, 26, 27, 28, 29, 30], Akhtar et al. [31] try to use a simple/unified mathematical model to express the adversarial attacks as:

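$$\mathcal{M}(\tilde{I}) \rightarrow \tilde{\ell} \quad \text{s.t.} \quad \tilde{\ell} \neq \ell \tag{1}$$

where $\mathcal{M}$ is the target model, i.e., $\mathcal{M}(I) \rightarrow \ell$, $I$ is the original image, and $\ell$ is the output of the target model; $\tilde{I}$ denotes the adversarial example and $\tilde{\ell}$ the output it produces. As shown in Fig.6, if the attacker adds the perturbation to the image after the target is imaged, $\tilde{I}$ is a digital adversarial example; if the attacker attacks before the target is imaged, $\tilde{I}$ is a physical adversarial example. Therefore, $\tilde{I}$ can be understood as belonging to the set of digital and physical adversarial examples, i.e., $\tilde{I} \in \{\tilde{I}_{\text{digital}}, \tilde{I}_{\text{physical}}\}$.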

Because a digital adversarial attack acts on the image after the target is imaged, the attacker can use a program to produce pixel-level perturbations that are imperceptible to the human eye. Physical adversarial attacks require the adversarial images to be captured by sensors (e.g., cameras). Since small perturbations are easily lost when captured by cameras, the perturbations in most physical adversarial examples must be large enough to be detected by the human eye. To humans, however, such perturbations do not appear to affect the overall scene and thus do not raise alarms.

Digital adversarial attack

In a digital adversarial attack, the attacker first adds the perturbation $\rho$ directly to the original image $I$ through a program; the perturbation can even be added precisely to a single pixel of $I$. Then, the size of $\rho$ is usually limited using the $\ell_p$ norm to ensure that the perturbation is imperceptible to the human eye. Finally, the digital adversarial example misleads the target model into making false predictions. Following this idea, researchers have proposed many methods to generate the optimal perturbation $\rho$. For example, Goodfellow et al. [20] proposed the fast gradient sign method using an $\ell_\infty$ norm constraint, Papernot et al. [32] proposed the Jacobian-based saliency map attack using an $\ell_0$ norm constraint, Su et al. [33] proposed the One-Pixel attack using an $\ell_0$ norm constraint, Athalye et al. [34] proposed the backward pass differentiable approximation attack using $\ell_\infty$ and $\ell_2$ norm constraints, and Carlini et al. [35] proposed the C&W attack using $\ell_0$, $\ell_2$, and $\ell_\infty$ norm constraints. Although digital adversarial attacks can exhibit high performance, these methods implant small perturbations by directly manipulating the input image at the pixel level in the digital world. Due to the influence of dynamic physical conditions (e.g., different shooting angles and distances) and optical imaging, it is difficult to perfectly transfer the perturbations in digital adversarial examples to the real world, so digital adversarial attacks have very low executability in the real world.
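To make the role of the norm constraint concrete, the sketch below shows three common ways of forcing a candidate perturbation back into a norm budget. It is a generic illustration rather than the procedure of any cited attack; the function names and the budgets `eps` and `k` are assumptions.

```python
import numpy as np


def project_linf(delta, eps):
    """Limit every pixel change to at most eps (l_inf ball)."""
    return np.clip(delta, -eps, eps)


def project_l2(delta, eps):
    """Rescale the perturbation so its Euclidean norm is at most eps (l_2 ball)."""
    norm = np.linalg.norm(delta)
    return delta if norm <= eps else delta * (eps / norm)


def project_l0(delta, k):
    """Keep only the k largest-magnitude pixel changes (l_0 budget of k pixels)."""
    flat = delta.ravel()
    out = np.zeros_like(flat)
    if k > 0:
        keep = np.argsort(np.abs(flat))[-k:]   # indices of the k largest entries
        out[keep] = flat[keep]
    return out.reshape(delta.shape)


if __name__ == "__main__":
    delta = np.array([[0.5, -0.2], [0.05, -0.9]])
    print(project_linf(delta, 0.1))
    print(project_l2(delta, 0.1))
    print(project_l0(delta, 1))
```

An $\ell_\infty$ budget bounds the change of every pixel, an $\ell_2$ budget bounds the overall energy of the change, and an $\ell_0$ budget bounds how many pixels may change at all, which is why one-pixel style attacks are described with it.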

Physical adversarial attack

In real applications, the camera is the entry point of the AI vision system; that is, the AI vision system captures the original image through the camera as its data input. Therefore, in physical adversarial attacks, the adversarial example images must also be captured through the system camera. However, since the imaging quality of the camera is limited by the physical performance of its photoelectric sensor, small perturbations may not be captured by the camera; in a sense, small perturbations are filtered out in the camera imaging stage. Therefore, in most existing physical adversarial attacks, the size of the implanted perturbation is usually within the perceptible range of the human eye, and the attack scheme often requires some additional means to hide the perturbation so that it is not noticed.

Kurakin et al. [36] first demonstrated the existence of physical adversarial examples, and showed that printed adversarial examples remain harmful to the classifier even under different illumination and orientations. Following this work, a large number of physical adversarial attack techniques have emerged. For example, Eykholt et al. [7] proposed a generic attack algorithm, Robust Physical Perturbations (RP2), which finds the attack region of a traffic road sign using the $\ell_1$ norm, then optimizes the perturbation in that region using the $\ell_2$ norm, and finally manually attaches a black-and-white sticker to the optimal attack location. Although RP2 can produce perturbations that are robust in various environments, black-and-white stickers are not stealthy enough in the real world, which is shown in Fig.7(a), where the stickers are obvious on the traffic road sign. To make the sticker attack less visible, Wei et al. [8] proposed a real sticker attack technique, which finds the optimal attack location by a region-based differential evolution algorithm and 3D position transformation. Because it uses sun stickers that are common in daily life, it will not arouse human suspicion on certain occasions, which is shown in Fig.7(b). To improve the diversity of stickers, Shen et al. [9] proposed FaceAdv, which uses adversarial stickers. They designed an adversarial sticker production architecture consisting of a sticker generator and a converter: the generator can generate stickers of multiple shapes, and the converter digitally pastes the stickers onto the human face and provides feedback to the generator to improve its efficiency. The effect of the attack is shown in Fig.7(c). To improve the invisibility of the perturbation, Yin et al. [37] proposed a generic adversarial makeup attack method that generates imperceptible eye shadows on the human eye region.

To improve the generalization capability of adversarial patches, Liu et al. [38] exploited the perceptual and semantic biases of the model to generate category-independent universal adversarial patches. Huang et al. [39] proposed a universal physical camouflage attack method that generates adversarial patches by jointly fooling the region proposal network and misleading the classifier and the regressor into outputting errors, and that can attack all objects belonging to the same category. To solve the problem of performance degradation of the adversarial patch when the shooting viewpoint shifts, Hu et al. [40] proposed a toroidal-cropping-based expandable generative attack to generate an adversarial texture with a repetitive structure. The adversarial texture is then used to make T-shirts, skirts, dresses, etc., so that a person wearing such clothes achieves an "invisibility" effect against pedestrian detectors from different viewpoints.

III Optical adversarial attack techniques

As shown in Fig.7 (a), (b), and (c), these physical adversarial attacks all use non-transparent perturbations to overlay the target features. Such perturbations are prominent in the adversarial examples and easily attract human attention, so these attacks belong to the invasive category. The main process of an invasive attack is as follows: a) the attacker first trains on the target model to obtain the best attack position and the optimal perturbation, and b) the attacker then sticks the non-transparent perturbation on the best attack position. However, in the real world, the non-transparent perturbations may be destroyed by the natural environment or human factors before the attack occurs.

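Table I

| Category | Method | Year | Physical tools for generating perturbation | Imperceptible |
| --- | --- | --- | --- | --- |
| Light irradiation | BulbAttack [41] | 2021 | Bulb panel | ✘ |
| Light irradiation | Invisible mask [11] | 2018 | LED | ✘ |
| Light irradiation | Pony et al. [42] | 2021 | LED | ✘ |
| Light irradiation | Sun et al. [43] | 2020 | Laser | ✘ |
| Light irradiation | LightAttack [44] | 2018 | Laser spot | ✘ |
| Light irradiation | AdvLB [12] | 2021 | Laser | ✘ |
| Light irradiation | AdvNB [23] | 2022 | Neon beam | ✘ |
| Light irradiation | ShadowAttack [24] | 2022 | Shadow | ✘ |
| Imaging device manipulation | ISPAttack [45] | 2021 | Camera | ✔ |
| Imaging device manipulation | Adversarial camera stickers [25] | 2019 | Camera | ✘ |
| Imaging device manipulation | TranslucentPatch [46] | 2021 | Camera | ✘ |
| Imaging device manipulation | LEDAttack [47] | 2021 | Camera | ✔ |
| Imaging device manipulation | AdvZL [26] | 2022 | Camera | ✘ |
| Imaging device manipulation | ALPA [27] | 2020 | Projector | ✘ |
| Imaging device manipulation | SLAP [48] | 2021 | Projector | ✘ |
| Imaging device manipulation | ProjectorAttack [49] | 2019 | Projector | ✘ |
| Imaging device manipulation | SPAA [50] | 2022 | Projector | ✔ |
| Imaging device manipulation | OPAD [28] | 2021 | Projector | ✔ |
| Imaging device manipulation | Li et al. [29] | 2022 | Structured light | ✘ |
| Imaging device manipulation | She et al. [30] | 2019 | Visible light | ✔ |
| Imaging device manipulation | SLMAttack [51] | 2022 | Phase modulation module | ✔ |
| Imaging device manipulation | Chen et al. [52] | 2021 | LED | ✔ |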

Unlike invasive attacks, non-invasive attacks can hide the perturbation well within the target features without attracting human attention. Optical-based physical adversarial attacks are a good form of non-invasive attack, for example those that generate optical perturbations using visible light or optical devices (e.g., cameras and projectors). Since the effects caused by such perturbations are common in daily life, humans rely on their experience and selectively ignore them. According to the form of perturbation, we divide the existing optical-based adversarial attacks into those based on light irradiation and those based on imaging device manipulation, which is shown in Table I.

III-A Optical-based physical adversarial attacks based on light irradiation

As mentioned earlier, the non-transparent perturbations of invasive attacks are easily destroyed by the natural environment or human factors. To improve the robustness of perturbations against external factors, several methods that generate optical perturbations using light-emitting devices have been proposed [1, 12, 23, 24]. For example, Zhu et al. [41] designed a multi-bulb panel to attack infrared thermal imaging detectors, and Zhou et al. [11] used infrared LEDs to deceive a face recognition system by projecting infrared illumination perturbations onto a human face. Although the infrared perturbation is invisible to the human eye, it is difficult to deploy the infrared LEDs at the optimal position, and an infrared cutoff filter can effectively filter out these perturbations. Pony et al. [42] proposed a method to attack action recognition systems by controlling LED lights. Sun et al. [43] proposed the first black-box spoofing attack against LPS: they manipulate the laser to simulate occlusion patterns and sparse point clouds far from the autonomous vehicle. Nichols et al. [44] project light spots onto specific areas of the target to attack it. Duan et al. [12] proposed the adversarial laser beam (AdvLB), which uses a laser beam as the optical perturbation of the original image; since the perturbation is generated by a laser light source, it alleviates the difficulty of deploying the irradiating device. However, the green laser beam runs through the whole image, so the optical perturbation is also added to the target background, which is shown in Fig.8(a). Hu et al. [23] proposed the adversarial neon beam (AdvNB), which is shown in Fig.8(b). It adds the neon beam only to the target foreground, thus further improving the invisibility of the optical perturbation.

Although beam perturbations alleviate the difficulty of device deployment, they are generated by a human-made light source and differ somewhat from the optical perturbations generated by natural phenomena, so they still attract human attention to some extent. To address this problem, Zhong et al. [24] proposed a physical adversarial attack based on a natural phenomenon, the shadow attack, which is shown in Fig.8(c). The shadow attack uses shadows, a natural by-product of illumination, as the adversarial perturbation. Since shadows are common in the real world and humans tend to selectively ignore shadow regions, this method not only hides the perturbation well in the real world but also reduces human alertness.

III-B Optical-based physical adversarial attacks based on imaging device manipulation

Another novel non-invasive optical-based physical adversarial attack is the physical manipulation of optical imaging devices to generate perturbations. For example, Phan et al. [45] directly modify the RAW image captured by the sensor to deceive the image signal processing (ISP) pipeline hardware of the camera. Li et al. [25] paste a carefully designed translucent sticker on the camera's lens, so that at the moment of camera exposure the perturbation on the sticker is superimposed on the target and a physical adversarial example is generated, which is shown in Fig.9(a). Zolfi et al. [46] successfully attacked the advanced driving assistance system (ADAS) of a Tesla Model X using the same attack method. Sayles et al. [47] used the rolling shutter effect to generate a perturbation that is imperceptible to the human eye but is captured by the camera, which is shown in Fig.9(b). Hu et al. [26] proposed an adversarial zoom lens attack that scales the image by changing the zoom of the camera lens and, for the first time, successfully attacks the target model without adding any perturbation to the original example, which is shown in Fig.9(c). Although these attacks against the camera are simple to deploy, they cause a perceptible change in the texture and size of the original image, which would raise suspicion in the observer.

Another type of optical-based non-invasive physical adversarial attack influences AI vision systems by manipulating projection devices. For example, Nguyen et al. [27] proposed a transformation-invariant perturbation generation method to perform real-time light projection attacks on face recognition systems. Lovisotto et al. [48] use a projector to project the perturbation onto the target. Man et al. [49] use an electromagnetic signal generator, such as a light source, to transmit electromagnetic signals (light) that interfere with the signals (images) captured by the target sensor. Huang et al. [50] projected the perturbation onto the target through a projector and proposed a stealthy projector-based adversarial attack (SPAA) method. Gnanasambandam et al. [28] proposed OPAD, a non-invasive attack with structured illumination, which uses a low-cost projector-camera system to change the features of the target and realizes an effective optical adversarial attack against 3D objects, which is shown in Fig.10. OPAD consists of the radiometric response of the projector (green dashed box) and the spectral response of the camera-projector pair (red dashed box). OPAD first uses the computer to generate perturbations, then uses the nonlinear mapping of the projector to convert the perturbation signal into an optical signal. Finally, the optical perturbation is projected onto the target to change its texture features, and the camera captures the modified scene to generate adversarial examples. Li et al. [29] applied a similar idea, using a fringe projection imaging system and a 3D reconstruction algorithm to interfere with a face recognition system.
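The projector-camera chain described for OPAD can be illustrated with a highly simplified forward model. This is only a sketch under assumptions, not the calibrated model of [28]: the radiometric response is approximated by a per-channel gamma curve, the spectral response by a 3x3 colour-mixing matrix, and the scene by a per-pixel reflectance. The function name and the values of `gamma`, `mix`, and `ambient` are hypothetical.

```python
import numpy as np


def projector_camera_forward(scene, pattern, gamma=2.2, mix=None, ambient=0.3):
    """Simulate what the camera sees when `pattern` is projected onto `scene`.

    scene   : (H, W, 3) reflectance in [0, 1]
    pattern : (H, W, 3) digital projector input in [0, 1]
    gamma, mix and ambient are hypothetical values chosen for illustration.
    """
    if mix is None:
        # Assumed colour cross-talk between projector and camera channels.
        mix = np.array([[0.90, 0.10, 0.00],
                        [0.05, 0.90, 0.05],
                        [0.00, 0.10, 0.90]])
    light = np.clip(pattern, 0.0, 1.0) ** gamma   # nonlinear radiometric response
    light = light @ mix.T                         # spectral response (channel mixing)
    captured = scene * (ambient + light)          # light reflected by the scene
    return np.clip(captured, 0.0, 1.0)


if __name__ == "__main__":
    scene = np.full((2, 2, 3), 0.5)
    print(projector_camera_forward(scene, np.zeros_like(scene))[0, 0])
    print(projector_camera_forward(scene, np.ones_like(scene))[0, 0])
```

A model of this kind is what allows a perturbation computed on the computer to be translated into a projector input: the attacker optimizes `pattern` so that the simulated capture, rather than the pattern itself, fools the target model.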

Although the above attack methods enhance the invisibility of the perturbation in the real world, they have not yet reached a state that is completely imperceptible to the human eye. In response to this problem, She et al. [30] proposed a targeted visible light attack based on the persistence of vision (i.e., when light changes at a rate above 25 Hz, the human brain is unable to process these changes). It irradiates the human face with an alternating light source consisting of a scrambling (perturbation) frame and a hiding (concealing) frame, which is shown in Fig.11. The method achieves true imperceptibility to the human eye, which is shown in Fig.11(c). However, the adversarial example obtained through the camera (Fig.11(d)) severely alters the texture features of the target.
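The persistence-of-vision idea can be sketched with two alternating frames. The model below is an assumption made for illustration and is not taken from [30]: human perception is approximated as the temporal average of the two frames, and the hiding frame is chosen as the complement that makes this average equal to a benign target appearance. A camera with a short exposure, by contrast, may capture a single frame.

```python
import numpy as np


def concealing_frame(perturbation_frame, perceived_target):
    """Frame that, averaged with the perturbation frame, yields the target.

    Assumes the result stays within the displayable range [0, 1].
    """
    return 2.0 * perceived_target - perturbation_frame


def human_perception(frame_a, frame_b):
    """Assumed model: fast alternation is perceived as the average of both frames."""
    return 0.5 * (frame_a + frame_b)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    target = np.full((4, 4), 0.5)                   # uniform, benign-looking light
    perturbation = rng.uniform(0.3, 0.7, (4, 4))    # hypothetical adversarial pattern
    hiding = concealing_frame(perturbation, target)
    print(np.allclose(human_perception(perturbation, hiding), target))
```

Under this assumed model the viewer sees only the uniform target, while any single captured frame still carries the full pattern, which is consistent with the strong texture change reported for the camera image.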

While the visible light attack system generates a perturbation that is imperceptible to the human eye, the adversarial examples obtained through the camera are severely distorted, which is shown in Fig.11(d). To preserve the fidelity of the adversarial examples captured by the camera, Kim et al. [51] introduce a novel approach for non-targeted adversarial attacks that modifies the light field information inside the optical imaging system. The idea is to modulate the phase information of the light in the pupil plane of an optical imaging system, which is shown in Fig.12. The authors designed a phase modulation module (Fig.12(b)) to generate the adversarial examples. The module, consisting of a polarizer (P), relay lens (RL), beam-splitter (BS), and spatial light modulator, is added to the photography system. The method achieves perfect perturbation invisibility, and the camera-captured adversarial examples are not distorted, which is shown in Fig.12(c). However, it is difficult to reproduce a qualified phase modulation module, so the experimental device is very difficult to deploy.

Chen et al. [52] propose a novel attack scheme based on LED illumination modulation, which not only generates perturbation imperceptible to the human eye in the real world but is also simple to deploy in the experimental setup. As can be seen in Fig.13(a), the perturbation generated based on the LED illumination modulation is imperceptible to the human eye, and as can be seen in Fig.13(b), the adversarial example captured by the camera contains a large number of black fringes, which are light perturbations generated by the interaction of the rolling shutter effect and modulated LED illumination in the CMOS camera imaging mechanism. When the LED illumination is on, bright pixels are stored in the active image column pixels; when the LED illumination is off, black edges are stored in the image column pixels due to underexposure of the camera, which is shown in the red dashed box in Fig.13. Because the ”on/off” state of LED lighting changes at short intervals and the flicker frequency is beyond the limit of what the human eye can observe, the rapid flicker and bright /dark fringe perturbations caused by LED illumination modulation are not detectable to the human eye.
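The interaction between a flickering LED and a rolling shutter can be sketched with a toy simulation. It is a simplification under assumptions, not the model of [52]: the sensor is read out one scan line at a time, each line is exposed for an instant, and the LED is a square wave. The names and the values of `line_time_s` and `duty` are hypothetical.

```python
import numpy as np


def led_is_on(t, freq_hz, duty=0.5):
    """Square-wave LED: on during the first `duty` fraction of each period."""
    return (t * freq_hz) % 1.0 < duty


def rolling_shutter_capture(image, freq_hz, line_time_s=1e-5, duty=0.5):
    """Darken the scan lines that are read out while the LED is off.

    image is a 2D array (lines x pixels); line_time_s is the assumed
    readout time per scan line.
    """
    lines = np.arange(image.shape[0])
    on = led_is_on(lines * line_time_s, freq_hz, duty)
    return image * on[:, None]


def dark_fringe_width(freq_hz, line_time_s=1e-5, duty=0.5):
    """Approximate width of one dark fringe, in scan lines."""
    return (1.0 - duty) / (freq_hz * line_time_s)


if __name__ == "__main__":
    scene = np.ones((1000, 8))
    for f in (1000.0, 5000.0):
        captured = rolling_shutter_capture(scene, f)
        dark_fraction = 1.0 - captured[:, 0].mean()
        print(f, dark_fringe_width(f), round(dark_fraction, 2))
```

In this toy model the fringe width is inversely proportional to the flicker frequency, so a slower flicker gives fewer but wider dark fringes and a faster flicker gives many narrow ones.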

On this basis, Chen et al. [52] propose two practical attack methods against face recognition systems: denial-of-service (DoS) attacks and escape attacks, the effects of which are shown in Fig.14.

DoS Attacks

Wide black fringes are generated by reducing the flicker frequency of the LED. Wide fringes may completely cover important facial features (such as the eyes or nose), as shown in Fig.14(b). Because the adversarial example lacks key facial features, the face recognition system may fail in the target detection stage. Fig.15(a) verifies that the shorter the distance of the DoS attack, the higher the percentage of the image occupied by the face. As a result, more wide black fringes fall on the face and cover more of its key features, ultimately lowering the success rate of the face recognition system.

Escape attacks

Narrower black fringes are generated by increasing the flicker frequency of the LED, as shown in Fig.14(c). A larger number of narrow fringes not only adds a large amount of repetitive and useless gradient information but also covers up many fine facial features. This reduces the difference between two different faces and may eventually cause the face recognition system to judge two different faces as the same person. Fig.15(b) verifies that the shorter the distance of the escape attack, the more narrow black fringes cover the face and obscure its fine features, which likewise lowers the success rate of the face recognition system.
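The relation between flicker frequency and fringe width that separates the two attacks can be checked with a small Python sketch. It assumes an idealized rolling shutter that samples each sensor line at one instant and a square-wave LED with a 50% duty cycle; the function name `dark_fringes` and all timing values are hypothetical and are not taken from [52].

```python
def dark_fringes(n_lines, line_time_us, period_us, on_us):
    """Return the widths (in sensor lines) of the dark fringes in one frame.

    A line is dark when the LED is off at the instant the line is sampled.
    All times are integer microseconds.
    """
    widths, current = [], 0
    for line in range(n_lines):
        lit = (line * line_time_us) % period_us < on_us
        if lit:
            if current:
                widths.append(current)
            current = 0
        else:
            current += 1
    if current:  # a dark fringe that reaches the last line
        widths.append(current)
    return widths


# Low flicker frequency (250 Hz): few, wide fringes, as in the DoS attack.
slow = dark_fringes(n_lines=480, line_time_us=50, period_us=4000, on_us=2000)

# High flicker frequency (2.5 kHz): many, narrow fringes, as in the escape attack.
fast = dark_fringes(n_lines=480, line_time_us=50, period_us=400, on_us=200)

print(len(slow), slow[0])  # prints "6 40"
print(len(fast), fast[0])  # prints "60 4"
```

Under these assumptions, the fringe width equals the LED off-time divided by the line readout time, so a tenfold increase in flicker frequency yields fringes that are ten times narrower and ten times more numerous.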

IV Discussion and conclusion

Because of their low feasibility in the real world, digital adversarial attacks cannot easily pose a truly effective threat to computer vision systems. In contrast, physical adversarial attacks are more feasible in the real world and can have a significant or even fatal impact on real systems. In recent years, physical adversarial attacks have received increasing attention, and the literature on them has grown every year. However, physical adversarial attacks face some difficulties: a) when a small perturbation is implanted, it is easily lost because of the physical properties of the camera; b) when a large perturbation is implanted, it is easily noticed by humans; c) it is difficult to simulate the experimental setup that generates the perturbation.

From the above analysis, a perfect physical adversarial example not only causes the target model to make false predictions with high confidence but also keeps the perturbations completely imperceptible to the human eye. From the analysis in Section III of this paper, it can be concluded that using optical devices to launch adversarial attacks currently comes closest to generating a perfect physical adversarial example. However, most experimental setups for such methods are difficult to deploy. Developing a physical adversarial attack whose experimental setup is simple to deploy and whose perturbations are imperceptible to the human eye is therefore a direction worth exploring in the future.

Acknowledgments

This work was partially supported by the National Natural Science Foundation of China (No. 62171202, No. 62272131), National Science and Technology Major Project Carried on by Shenzhen (CJGJZD20200617103000001), Shenzhen Basic Research Project of China (JCYJ20200109113405927), HKU-SCF FinTech Academy, Shenzhen-Hong Kong-Macao Science and Technology Plan Project (Category C Project: SGDX20210823103537030), and Theme-based Research Scheme of RGC, Hong Kong (T35-710/20-R).

References

- [1] Y. Taigman, M. Yang, M. Ranzato, and L. Wolf, “Deepface: Closing the gap to human-level performance in face verification,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2014, pp. 1701–1708.

- [2] W. Zeng, W. Luo, S. Suo, A. Sadat, B. Yang, S. Casas, and R. Urtasun, “End-to-end interpretable neural motion planner,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 8660–8669.

- [3] T. Yang, X. Yi, S. Lu, K. H. Johansson, and T. Chai, “Intelligent manufacturing for the process industry driven by industrial artificial intelligence,” Engineering, vol. 7, no. 9, pp. 1224–1230, 2021.

- [4] O. Ronneberger, P. Fischer, and T. Brox, “U-net: Convolutional networks for biomedical image segmentation,” in International Conference on Medical image computing and computer-assisted intervention. Springer, 2015, pp. 234–241.

- [5] Y. Li, X. Xu, J. Xiao, S. Li, and H. T. Shen, “Adaptive square attack: Fooling autonomous cars with adversarial traffic signs,” IEEE Internet of Things Journal, vol. 8, no. 8, pp. 6337–6347, 2020.

- [6] H. Wei, H. Tang, X. Jia, H. Yu, Z. Li, Z. Wang, S. Satoh, and Z. Wang, “Physical adversarial attack meets computer vision: A decade survey,” arXiv preprint arXiv:2209.15179, 2022.

- [7] K. Eykholt, I. Evtimov, E. Fernandes, B. Li, A. Rahmati, C. Xiao, A. Prakash, T. Kohno, and D. Song, “Robust physical-world attacks on deep learning visual classification,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 1625–1634.

- [8] X. Wei, Y. Guo, and J. Yu, “Adversarial sticker: A stealthy attack method in the physical world,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022.

- [9] M. Shen, H. Yu, L. Zhu, K. Xu, Q. Li, and J. Hu, “Effective and robust physical-world attacks on deep learning face recognition systems,” IEEE Transactions on Information Forensics and Security, vol. 16, pp. 4063–4077, 2021.

- [10] N. Paul and C. Chung, “Application of hdr algorithms to solve direct sunlight problems when autonomous vehicles using machine vision systems are driving into sun,” Computers in Industry, vol. 98, pp. 192–196, 2018.

- [11] Z. Zhou, D. Tang, X. Wang, W. Han, X. Liu, and K. Zhang, “Invisible mask: Practical attacks on face recognition with infrared,” arXiv preprint arXiv:1803.04683, 2018.

- [12] R. Duan, X. Mao, A. K. Qin, Y. Chen, S. Ye, Y. He, and Y. Yang, “Adversarial laser beam: Effective physical-world attack to dnns in a blink,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 16 062–16 071.

- [13] C. Szegedy, W. Zaremba, I. Sutskever, J. Bruna, D. Erhan, I. Goodfellow, and R. Fergus, “Intriguing properties of neural networks,” arXiv preprint arXiv:1312.6199, 2013.

- [14] Y. Deldjoo, T. D. Noia, and F. A. Merra, “A survey on adversarial recommender systems: from attack/defense strategies to generative adversarial networks,” ACM Computing Surveys (CSUR), vol. 54, no. 2, pp. 1–38, 2021.

- [15] A. Chakraborty, M. Alam, V. Dey, A. Chattopadhyay, and D. Mukhopadhyay, “A survey on adversarial attacks and defences,” CAAI Transactions on Intelligence Technology, vol. 6, no. 1, pp. 25–45, 2021.

- [16] N. Akhtar and A. Mian, “Threat of adversarial attacks on deep learning in computer vision: A survey,” IEEE Access, vol. 6, pp. 14 410–14 430, 2018.

- [17] H. Liang, E. He, Y. Zhao, Z. Jia, and H. Li, “Adversarial attack and defense: A survey,” Electronics, vol. 11, no. 8, p. 1283, 2022.

- [18] N. Akhtar, A. Mian, N. Kardan, and M. Shah, “Advances in adversarial attacks and defenses in computer vision: A survey,” IEEE Access, vol. 9, pp. 155 161–155 196, 2021.

- [19] X. Zhang, X. Zheng, and W. Mao, “Adversarial perturbation defense on deep neural networks,” ACM Computing Surveys (CSUR), vol. 54, no. 8, pp. 1–36, 2021.

- [20] I. J. Goodfellow, J. Shlens, and C. Szegedy, “Explaining and harnessing adversarial examples,” arXiv preprint arXiv:1412.6572, 2014.

- [21] J. Zhang and C. Li, “Adversarial examples: Opportunities and challenges,” IEEE transactions on neural networks and learning systems, vol. 31, no. 7, pp. 2578–2593, 2019.

- [22] E. D. Cubuk, B. Zoph, S. S. Schoenholz, and Q. V. Le, “Intriguing properties of adversarial examples,” arXiv preprint arXiv:1711.02846, 2017.

- [23] C. Hu and K. Tiliwalidi, “Adversarial neon beam: Robust physical-world adversarial attack to dnns,” arXiv preprint arXiv:2204.00853, 2022.

- [24] Y. Zhong, X. Liu, D. Zhai, J. Jiang, and X. Ji, “Shadows can be dangerous: Stealthy and effective physical-world adversarial attack by natural phenomenon,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 15 345–15 354.

- [25] J. Li, F. Schmidt, and Z. Kolter, “Adversarial camera stickers: A physical camera-based attack on deep learning systems,” in International Conference on Machine Learning. PMLR, 2019, pp. 3896–3904.

- [26] C. Hu and W. Shi, “Adversarial zoom lens: A novel physical-world attack to dnns,” arXiv preprint arXiv:2206.12251, 2022.

- [27] D.-L. Nguyen, S. S. Arora, Y. Wu, and H. Yang, “Adversarial light projection attacks on face recognition systems: A feasibility study,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition workshops, 2020, pp. 814–815.

- [28] A. Gnanasambandam, A. M. Sherman, and S. H. Chan, “Optical adversarial attack,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 92–101.

- [29] Y. Li, Y. Li, and B. Xiao, “A physical-world adversarial attack against 3d face recognition,” arXiv preprint arXiv:2205.13412, 2022.

- [30] M. Shen, Z. Liao, L. Zhu, K. Xu, and X. Du, “Vla: A practical visible light-based attack on face recognition systems in physical world,” Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, vol. 3, no. 3, pp. 1–19, 2019.

- [31] N. Akhtar, A. Mian, N. Kardan, and M. Shah, “Advances in adversarial attacks and defenses in computer vision: A survey,” IEEE Access, vol. 9, pp. 155 161–155 196, 2021.

- [32] N. Papernot, P. McDaniel, S. Jha, M. Fredrikson, Z. B. Celik, and A. Swami, “The limitations of deep learning in adversarial settings,” in 2016 IEEE European symposium on security and privacy (EuroS&P). IEEE, 2016, pp. 372–387.

- [33] J. Su, D. V. Vargas, and K. Sakurai, “One pixel attack for fooling deep neural networks,” IEEE Transactions on Evolutionary Computation, vol. 23, no. 5, pp. 828–841, 2019.

- [34] A. Athalye, N. Carlini, and D. Wagner, “Obfuscated gradients give a false sense of security: Circumventing defenses to adversarial examples,” in International conference on machine learning. PMLR, 2018, pp. 274–283.

- [35] N. Carlini and D. Wagner, “Towards evaluating the robustness of neural networks,” in 2017 IEEE Symposium on Security and Privacy (SP). IEEE, 2017, pp. 39–57.

- [36] A. Kurakin, I. J. Goodfellow, and S. Bengio, “Adversarial examples in the physical world,” in Artificial intelligence safety and security. Chapman and Hall/CRC, 2018, pp. 99–112.

- [37] B. Yin, W. Wang, T. Yao, J. Guo, Z. Kong, S. Ding, J. Li, and C. Liu, “Adv-makeup: A new imperceptible and transferable attack on face recognition,” arXiv preprint arXiv:2105.03162, 2021.

- [38] A. Liu, J. Wang, X. Liu, B. Cao, C. Zhang, and H. Yu, “Bias-based universal adversarial patch attack for automatic check-out,” in European conference on computer vision. Springer, 2020, pp. 395–410.

- [39] L. Huang, C. Gao, Y. Zhou, C. Xie, A. L. Yuille, C. Zou, and N. Liu, “Universal physical camouflage attacks on object detectors,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 720–729.

- [40] Z. Hu, S. Huang, X. Zhu, F. Sun, B. Zhang, and X. Hu, “Adversarial texture for fooling person detectors in the physical world,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 13 307–13 316.

- [41] X. Zhu, X. Li, J. Li, Z. Wang, and X. Hu, “Fooling thermal infrared pedestrian detectors in real world using small bulbs,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35, no. 4, 2021, pp. 3616–3624.

- [42] R. Pony, I. Naeh, and S. Mannor, “Over-the-air adversarial flickering attacks against video recognition networks,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 515–524.

- [43] J. S. Sun, Y. C. Cao, Q. A. Chen, and Z. M. Mao, “Towards robust lidar-based perception in autonomous driving: General black-box adversarial sensor attack and countermeasures,” in USENIX Security Symposium (Usenix Security’20), 2020.

- [44] N. Nichols and R. Jasper, “Projecting trouble: Light based adversarial attacks on deep learning classifiers,” arXiv preprint arXiv:1810.10337, 2018.

- [45] B. Phan, F. Mannan, and F. Heide, “Adversarial imaging pipelines,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 16 051–16 061.

- [46] A. Zolfi, M. Kravchik, Y. Elovici, and A. Shabtai, “The translucent patch: A physical and universal attack on object detectors,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 15 232–15 241.

- [47] A. Sayles, A. Hooda, M. Gupta, R. Chatterjee, and E. Fernandes, “Invisible perturbations: Physical adversarial examples exploiting the rolling shutter effect,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 14 666–14 675.

- [48] G. Lovisotto, H. Turner, I. Sluganovic, M. Strohmeier, and I. Martinovic, “Slap: Improving physical adversarial examples with short-lived adversarial perturbations.” USENIX, 2021.

- [49] Y. Man, M. Li, and R. Gerdes, “Poster: Perceived adversarial examples,” in IEEE Symposium on Security and Privacy, no. 2019, 2019.

- [50] B. Huang and H. Ling, “Spaa: Stealthy projector-based adversarial attacks on deep image classifiers,” in 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR). IEEE, 2022, pp. 534–542.

- [51] K. Kim, J. Kim, S. Song, J.-H. Choi, C. Joo, and J.-S. Lee, “Engineering pupil function for optical adversarial attacks,” Optics Express, vol. 30, no. 5, pp. 6500–6518, 2022.

- [52] Z. Chen, P. Lin, Z. L. Jiang, Z. Wei, S. Yuan, and J. Fang, “An illumination modulation-based adversarial attack against automated face recognition system,” in International Conference on Information Security and Cryptology. Springer, 2021, pp. 53–69.