IEEE Copyright Notice

© 2023 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works.

STAR-RIS-Aided Mobile Edge Computing: Computation Rate Maximization with Binary Amplitude Coefficients

Abstract

In this paper, simultaneously transmitting and reflecting (STAR) reconfigurable intelligent surface (RIS) is investigated in the multi-user mobile edge computing (MEC) system to improve the computation rate. Compared with traditional RIS-aided MEC, STAR-RIS extends the service coverage from half-space to full-space and provides new flexibility for improving the computation rate for end users. However, the STAR-RIS-aided MEC system design is a challenging problem due to the non-smooth and non-convex binary amplitude coefficients with coupled phase shifters. To fill this gap, this paper formulates a computation rate maximization problem via the joint design of the STAR-RIS phase shifts, reflection and transmission amplitude coefficients, the receive beamforming vectors, and energy partition strategies for local computing and offloading. To tackle the discontinuity caused by binary variables, we propose an efficient smoothing-based method to decrease convergence error, in contrast to the conventional penalty-based method, which brings many undesired stationary points and local optima. Furthermore, a fast iterative algorithm is proposed to obtain a stationary point for the joint optimization problem, with each subproblem solved by a low-complexity algorithm, making the proposed design scalable to a massive number of users and STAR-RIS elements. Simulation results validate the strength of the proposed smoothing-based method and show that the proposed fast iterative algorithm achieves a higher computation rate than the conventional method while saving the computation time by at least an order of magnitude. Moreover, the resultant STAR-RIS-aided MEC system significantly improves the computation rate compared to other baseline schemes with conventional reflect-only/transmit-only RIS.

Index Terms:

Binary optimization problem, computation rate, mobile edge computing (MEC), simultaneously transmitting and reflecting reconfigurable intelligent surface (STAR-RIS), smoothing-based method.I Introduction

In traditional cloud computing systems, the mobile users’ data would be sent to a remote cloud center distant from the end devices[1]. However, this paradigm cannot fit the future Internet of Things era due to the unprecedentedly increasing amount of data generated by a massive number of users and the latency-critical requirements of new applications, such as industrial monitoring multi-sensory communications and mobile virtual reality[2]. Therefore, mobile edge computing (MEC) has emerged and has drawn much attention from both academia and industry. It promotes using computing capabilities at the edge servers attached to the wireless access points (APs), where the users’ data can be offloaded to the nearby APs, and the results are delivered back to the users after the computation[3]. It dramatically reduces latency and communication overhead of the network backhaul, thus overcoming the critical challenges for materializing beyond 5G.

In the MEC paradigm, the system objective shifts from purely maximizing the communication sum rate to maximizing the computation rate. Since the edge server has powerful computation capability and the users’ computation results are in very small sizes, the bottleneck of the computation rate for wireless-aided MEC systems is the uplink offloading performance[4, 5, 6]. However, the uplink offloading performance is severely restricted in practice due to the energy-limited mobile users and the adverse wireless channel condition.

In order to improve the uplink offloading performance in wireless-aided MEC systems, great attention has been drawn to the emerging technology of reconfigurable intelligent surfaces (RIS) due to its advantages of low cost, easy deployment, and directional signal enhancement or interference nulling[7, 8, 9, 10, 11]. However, the traditional RIS can only reflect or transmit the incident wireless signal (conventional reflect-only/transmit-only RISs). In this case, the AP and users must be located on the same side (for reflect-only RIS) or the opposite side (for transmit-only RIS) of the RIS, leading to only a half-space coverage. This geographical restriction may not always be satisfied in practice and severely limits the flexibility of the deployment and effectiveness of the RISs. To overcome this limitation, the simultaneously transmitting and reflecting RIS (STAR-RIS) was proposed[12, 13, 14], where the wireless signals to the STAR-RIS from either side of the surface are divided into two parts [15]. One part (reflected signal) is in the same half-space (i.e., the reflection space), and the other (transmitted signal) is transmitted to the opposite half-space (i.e., the transmission space). By controlling a STAR-RIS element’s electric and magnetic currents, the transmitted and reflected signals can be reconfigured via two generally independent coefficients, known as the transmission and the reflection coefficients [13]. With the recently developed prototypes resembling STAR-RISs[15, 16, 17, 18], a highly flexible full-space coverage will become a reality. Due to the full-space coverage advantage, the STAR-RIS-aided MEC network will break the geographical restriction of the accessed devices to provide a high coverage ratio and support continuous MEC service with better quality.

In this paper, we aim to study the computation rate of a STAR-RIS-aided MEC system. In particular, we formulate the computation rate maximization problem by considering the local and offloaded computation rates under the energy budget. Since the STAR-RIS’s transmission and reflection coefficient matrix couples with the design of receive beamforming vectors and uplink transmission power, the resulting optimization problem is an intertwined design of resource allocation in the STAR-RIS-aided MEC network. Worse still, the reflection and transmission amplitude coefficients of STAR-RIS are discrete binary variables, leading to a challenging mixed-integer non-convex optimization problem.

To address the above challenges, we transform the binary constraints into their equivalent continuous form and then resort to the penalty-based method. However, this equivalent transformation may introduce many undesired stationary points and local optima111These undesired stationary points and local optima have a lower objective value than the global optimum., thus diminishing the solution quality. Therefore, we further adopt the logarithmic smoothing function for binary variables in the penalized objective to eliminate the undesirable stationary points and local optima. With the above ideas, the resultant problem can be solved under the block coordinate descent (BCD) framework. Furthermore, to obtain an overall low complexity algorithm, each subproblem is solved with either closed-forms or first-order algorithms, with complexity orders only scaling linearly with respect to the number of elements in the STAR-RIS or users. Extensive simulation results show that the proposed STAR-RIS-aided MEC system outperforms systems with conventional RIS. At the same time, the proposed smoothing-based method for binary variables optimization leads to better performance than the conventional penalty-based method.

The rest of the paper is organized as follows. The system model and the computation rate maximization problem are formulated in Section II. The binary reflection and transmission amplitude coefficients design problem are handled in Section III. Section IV optimizes the other variables under the BCD framework. Simulation results are presented in Section V. Finally, a conclusion is drawn in Section VI.

Notations: We use boldface lowercase and uppercase letters to represent vectors and matrices, respectively. The transpose, conjugate, conjugate transpose, and diagonal matrix are denoted as and , respectively. The symbols and denote the modulus and the real component of a complex number, respectively. The identity matrix is denoted as and the element of a matrix is denoted by . The complex normal distribution is denoted as . Notations or are used for element-wise comparison. For example, if , is element-wise greater than .

II System Model and Problem Formulation

II-A System Model

We consider a STAR-RIS-aided MEC system as shown in Fig. 1, in which there are an AP with antennas, single-antenna users, and an -element STAR-RIS. The AP is attached to a MEC edge server. Since the STAR-RIS can provide full-space coverage by allowing simultaneous transmission and reflection of the incident signal, it serves both users in the transmission space and users in the reflection space.

Each user has a limited energy budget but has intensive computation tasks to deal with, and the available energy for computing the task in user is denoted as in Joules (J). In practice, if the computation task is too complicated to be completed by a user (possibly due to excessive energy or time involved), part of the task should be delegated to the edge server. Hence, a partial offloading mode is adopted [3]. Partial offloading is designed to cope with computation tasks that can be arbitrarily divided to facilitate parallel operations at users for local computing and the AP for edge computing. Being grid-powered, the MEC server has strong computation and storage capabilities for helping users to compute their offloaded tasks and report the computation results in a negligible time.222In practice, as long as the overall computation workload of users doesn’t exceed the MEC server’s computing capacity, the computation time can be disregarded as the computation at MEC servers is conducted simultaneously with the uplink data transmission[5, 19, 20].

For uplink transmission, let , and denote the index sets of the users in transmission space, the users in reflection space, and all the users, respectively. Let and with zero mean and unit variance denote the information symbols of user and user for the offloading task, and and denote the transmit power of user and user , respectively. Note that all the users with offloading requirements transmit their signals simultaneously, and thus we can express the corresponding received signal at the AP as

| (1) |

where , are respectively the equivalent baseband channels from the user and to the AP, and is the receiver noise at the AP with being the noise power.

With the deployment of a STAR-RIS, the equivalent baseband channels from the user or to the AP consists of both the direct link and transmitted or reflected link from the STAR-RIS. Therefore, can be modeled as

| (2) |

where , and are the narrow-band quasi-static fading channels from user to the AP, from user to the STAR-RIS, and from the STAR-RIS to the AP, respectively. The STAR-RIS phase shift matrix is a diagonal matrix with being the phase shift of the element and . The STAR-RIS transmission amplitude coefficient matrix is a diagonal matrix with being the transmission amplitude coefficient of the element.

Similar to (2), is expressed as

| (3) |

where , denote the narrow-band quasi-static fading channels from the user to the AP, and from the user to the STAR-RIS, respectively. The STAR-RIS reflection amplitude coefficient matrix is a diagonal matrix with being the reflection amplitude coefficient of the element. In this paper, we consider the mode switching (MS) protocol, where and . Such an “on-off” type operating protocol is much easier to implement compared to the energy splitting (ES) protocol and therefore more practical in the application scenarios[12]. Moreover, from the users’ perspective, the MS protocol has lower latency than time switching (TS) protocols, which separates two orthogonal time slots for reflection and transmission users and inevitably incurs higher latency for users assigned to the second time slot.

For the offloading task, we introduce an energy partition parameter for user , and represents the energy used for computation offloading. Correspondingly, the transmit power of user for computation offloading is given as

| (4) |

where is the length of the time slot.

We consider the linear beamforming strategy and denote as the receive beamforming vector of the AP for decoding . Based on (1), the received signal at the AP for user , denoted by , is then given by

| (5) |

The uplink signal-to-interference-plus-noise ratio (SINR) observed at the AP for user is thus given by

| (6) |

where we denote an energy partition vector and a reflection and transmission amplitude coefficient vector . Then the computation rate of user due to offloading is

| (7) |

where is the system bandwidth.

Regarding local computing, the computation rate is , where is the number of required CPU cycles for users to compute 1-bit of input data, and is the user’s CPU frequency. We adopt the dynamic voltage and frequency scaling technique for increasing the computation energy efficiency for users through adaptively controlling the CPU frequency for local computing. Specifically, the energy consumption for the user can be calculated as with being the effective capacitance coefficient of user . Also, as represents the energy for local computing, we have . By expressing in terms of other parameters in this equation, we finally obtain the local computation rate of user as

| (8) |

II-B Problem Formulation

We aim to maximize the computation rate (both due to offloading and local computation) of all the users in a given time slot of duration through jointly optimizing the phase shifts in , the reflection and transmission amplitude coefficients in , the receive beamforming vectors in , and the energy partition parameters in . The corresponding optimization problem is formally written as

| (9a) | ||||

| s.t. | (9b) | |||

| (9c) | ||||

| (9d) | ||||

| (9e) | ||||

However, problem (9) is a non-convex optimization problem without a closed-form solution as , , , and are highly coupled in (9a). Therefore, we apply the BCD framework to so that each block is handled iteratively. Furthermore, due to the binary nature of reflection and transmission amplitude coefficients and , problem (9) is a nonlinear mixed-integer problem, and it usually requires an exponential time complexity to find the optimal solution. To address the above issue, we apply the logarithmic smoothing-based method to solve the binary variables of in the next section.

Remark 1.

For a MIMO channel without the STAR-RIS, the number of data streams cannot exceed the degree of freedom (DoF) the channel provides, which is limited by the number of transceiver antennas [21]. However, with the presence of a STAR-RIS, currently, there is no study on the rule for the number of antennas at AP and the number of STAR-RIS elements to support users. In Appendix A, we have shown that for the system model considered in this paper, assuming , the necessary condition for the number required to serve users simultaneously is , where and are the ranks of the aggregated direct channel between transmission users and the AP and reflection users between the AP , respectively. If there are no direct links between transmission and reflection users and the AP, and equal zero, and the condition becomes .

III Logarithmic Smoothing-Based Method for Handling Binary Variables

In this section, we optimize with other variables in fixed. We first transform the discontinued binary constraints into their equivalent continuous form and then resort to the penalty-based method. However, it is not likely to obtain a solution of good quality with the assistance of penalty terms since many undesired stationary points and local optima could be introduced[22, 23], leading to a poor quality converged solution. To diminish the performance loss, we further propose the logarithmic smoothing-based method that eliminates the undesired stationary points and local optima by suppressing the unsmooth part of the penalized problem.

III-A Penalty-Based Method for Updating

When other variables are fixed, the subproblem for optimizing is given as

| (10a) | ||||

| s.t. | (10b) | |||

| (10c) | ||||

where we replaced (9e) with (10c) since they are equivalent when , and is obtained from (7) by fixing all variables except . The binary constraint (10b) can be equivalently transformed into the following constraints[24]

| (11) | ||||

| (12) |

Based on (12), we always have , where the equality holds if and only if is or , i.e., a binary variable, and the term attains its maximum at . Rather than directly handling the nonlinear constraints (11), we add a penalty term with a penalty parameter . The problem (10) then becomes

| (13a) | ||||

| s.t. | (13b) | |||

| (13c) | ||||

where the penalty function introduced this way is “exact” in the sense that the problem (13) and (10) have the same global optimum for a sufficiently large penalty parameter . In terms of the penalty parameter, we employ an increasing penalty strategy to enforce the exact constraints successively.

The above idea of applying a penalty term to enforce binary constraint generally performs well, as verified by the simulations in [25, 26]. However, the penalty term might introduce many undesired stationary points and local optima. To avoid getting stuck in undesired stationary points or local optima during the optimization process, we further consider a smoothing technique.

III-B Smoothing-Based Optimization Method for Updating

A popular method to eliminate poor local optima is using smoothing methods to suppress the unsmoothness of the objective function [27]. It aims to transform the original problem with many local optima into one with fewer local optima and thus has a higher chance of obtaining the global optimal solution.

The basic idea of smoothing is to add a strictly convex function (or concave, depending on maximizing or minimizing objective function) to the original objective, i.e.,

| (14) |

where is the original objective function, is a strictly convex function, and is the barrier parameter. If is chosen to have a Hessian that is sufficiently positive definite for all , then is strictly convex when is large enough. This is important in smoothing-based methods because the basic idea is to solve the problem (14) iteratively for a decreasing sequence of starting with a large value. With a sufficiently large parameter at the beginning, any local optima of is also the unique global optimum and thus is trivial to optimize.

For a binary vector, a strongly convex function is [28]

| (15) |

This function is well-defined when and attains its maximum at . Based on (14) and (15), we can derive a smoothing-based algorithm for handling (13). Applying (15) to the optimization problem (13), it can be formulated as

| (16a) | ||||

| s.t. | (16b) | |||

| (16c) | ||||

Since (16) has a continuously differentiable objective function and a convex feasible set, the projected-gradient (PG) method can be exploited to tackle this problem. It alternatively performs an unconstrained gradient descent step and computes the projection of the update onto the feasible set of the optimization problem. To be specific, the update of the iteration is given by

| (17) |

where is the objective function in (16), is the Armijo step size to guarantee convergence, and is the gradient of , with its explicit expression shown in (37) of Appendix B.

On the other hand, to project onto the feasible set determined by constraints (16b) and (16c), an update point can be obtained by solving the following optimization problem

| (18a) | ||||

| s.t. | (18c) | |||

| (18d) | ||||

where and we transformed the constraints (16b) into its equivalent compact matrix form (18c). Since the feasible set of is the intersection of a hyperplane and a box, a closed-form solution can be derived based on the Karush-Kuhn-Tucker (KKT) condition and is given by the following Proposition 1, which is proved in Appendix C. Based on (17), (37) and (19), we can iteratively update , where the convergent point is guaranteed to be a stationary point of (16).

Proposition 1.

The optimal solution to (18) is given by

| (19) |

where and . The is a solution to the equation

| (20) |

and can be obtained via the bisection search.

In order to enforce the smoothness induced by the last term of (16a), the problem (16) is solved for a sequence of decreasing values of since the solution to (16), , is a continuously differentiable function [29]. Initially, it starts with a suitably large since it is vital that the iterates move away from an undesired local optimum. After obtaining the solution of (16) with , it is used as the starting point of solving (16) but with where . The iteration goes on until reaches the tolerance for barrier value [30]. In terms of , it can be changed synchronously with by a multiplicative factor since both smoothing-based and penalty-based methods use solution from the previous iteration as the starting point of the next iteration. The overall algorithm for solving under the proposed logarithmic smoothing framework is summarized in Algorithm 1. Since the barrier parameter ends with a value that is close to zero[23], for a sufficiently large value of the penalty parameter , Algorithm 1 will obtain a stationary point of the original problem (10)[25]. The performance improvement of Algorithm 1 compared with the conventional penalty-based method will be illustrated later in Section V-A.

IV Fast Iterative BCD Algorithm for Solving

With the binary transmission and reflection amplitude coefficients solved in Section III, this section derives the details of optimization algorithms for other subproblems under the BCD framework. Specifically, to facilitate a fast iterative algorithm, all the subproblems are solved in closed-form or with a first-order algorithm, with complexity orders only scaling linearly with respect to the number of elements in the STAR-RIS or the number of users.

IV-A Updating

When other variables are fixed, the subproblem for updating is

| (21a) | ||||

| s.t. | (21b) | |||

Conventionally, since in (21a) can be re-expressed as the difference of two concave functions, the DC programming method can be applied to convexify the problem (21) and further solved numerically by the existing convex solvers such as CVX (the second term in (21a) is already concave). However, the above method is a multi-stage iterative optimization algorithm with the outer loop involving DC programming, and the inner loop still requires iterative numerical methods, thus incurring high computational complexity333A common practice is to use the interior-point method, and its complexity order is at least .. To overcome this difficulty, we propose a new approach based on a Lagrangian dual reformulation of the energy partition maximization problem and subsequently apply the quadratic transform method. This leads to an algorithm in which each iteration is performed in closed-forms and bisection search rather than being solved with numerical convex solvers. Thus the proposed method is more desirable and computationally efficient.

First, to establish the legitimacy of applying Lagrangian dual reformulation of (21), we provide the following property, which is proved in Appendix D.

Proposition 2.

The energy partition maximization problem (21) is equivalent to

| (22) | ||||

| s.t. |

where refers to the auxiliary variable, and the new objective is given by

| (23) | ||||

Based on Proposition 2, variables and are updated iteratively. When is fixed, the function is concave with respect to . Therefore, the global optimal is obtained by setting to zero, i.e.,

| (24) |

To optimize when is fixed, we apply the quadratic transform on the fractional term in (23). In particular, when is held fixed, only the last two terms of , which are a sum-of-ratio form and a sum of square roots, are related to the optimization of . In general, if the objective function is a sum of fractional forms with the fraction’s numerator being concave and the denominator being convex (known as the concave-convex form), one can employ the quadratic transform to convert the concave-convex fractional programming into a sequence of convex subproblems[31, 32]. Since the sum of square roots can be considered as a special case of sum-of-ratio form with a denominator equal to 1, by the quadratic transform, we further recast to

| (25) | ||||

where are the auxiliary variables. Then according to the iterative update rules of the quadratic transform, with fixed , the optimal can be directly given as

| (26) |

| (27) |

On the other hand, since is concave with respect to with fixed , the iterative update for is obtained by setting to zero, i.e.,

| (28) |

where

| (29a) | ||||

| (29b) | ||||

| (29c) | ||||

Note that equation (28) is a strictly decreasing function with respect to since its derivative is derived as . Furthermore, if is 0 or 1, the left-hand side of the equation (28) will go to infinity and minus infinity, respectively. The above characteristics imply that equation (28) must have a unique solution, and a bisection search algorithm can be used to find the optimal . The details are omitted here for brevity.

These updating steps amount to an iterative optimization as stated in Algorithm 2. The objective function (23) is bounded above and monotonically nondecreasing after each iteration. The solution of Algorithm 2 arrives at a stationary point of the reformulated problem (22). Since the problem (22) is equivalent to (21), it is a stationary point of the original problem (21)[32].

IV-B Updating

When other variables are fixed, the subproblem of for updating is

| (30) |

Since the uplink SINR of user only contains its own receive beamforming vector , we can optimize in a parallel manner, and the objective function is simply the SINR in (6). This gives the subproblem as where and . It is easy to recognize that this is a generalized eigenvector problem, and its optimal solution should be the eigenvector corresponding to the largest eigenvalue of the matrix .

IV-C Updating

When other variables are fixed, the subproblem for updating STAR-RIS phase shifts is given by

| (31a) | ||||

| s.t. | (31b) | |||

To handle the modulus constraints in (31b), the semi-definite relaxation (SDR) method and manifold method are widely adopted. However, SDR-based methods only yield an approximate solution without an optimality guarantee and incur a heavy computational burden as the variable’s dimension increases. Furthermore, manifold optimization methods slow down the convergence due to their nested loop architecture[33]. Therefore, we propose a gradient descent (GD) method algorithm as follows. In contrast to the SDR and manifold optimization-based methods, this method avoids convex relaxation, does not need to solve high-dimensional SDP problems, and gets rid of the nested loops. Thus, it significantly improves computational efficiency.

Observe that the unknown variable in the feasible set (31b) is in fact . Therefore, problem (31) can be recast into an unconstrained optimization problem as

| (32) |

where we drop the constraints since the objective function is periodic and taking modulus of can recover a . Since (32) is an unconstrained optimization problem with a differentiable objective function, it can be readily solved by the GD method to obtain a stationary point [34]. To be specific, the update of at the iteration is given by

| (33) |

where is the Armijo step size to guarantee convergence and is the gradient of given by

| (34) | ||||

IV-D Summary and Computation Complexity

The proposed four-block BCD optimization algorithm for solving the computation rate maximization problem in (9) is summarized in Algorithm 3, where the converged point is guaranteed to a stationary point [35].

Notice that the iteration with respect to is based on the GD method, which only involves first-order differentiation. Therefore, it has complexity order. On the other hand, the computational complexity of the iteration with respect to is dominated by the gradient step. Hence, the complexity order for updating is also . Furthermore, the complexity to update and in Algorithm 2 is and , respectively [36]. Based on the above discussions, the complexity order of solving (9) is linear in and . This makes the proposed algorithm suitable for massive elements and user networks. The computation complexity of the proposed algorithm is summarised in Table I.

| Updating | Updating | Updating | Updating | |

|---|---|---|---|---|

| Computation complexity |

V Simulation Results

In this section, we present simulation results to verify the effectiveness of the proposed algorithm. As illustrated in Fig. 2, under a three-dimensional Cartesian coordinate system, we consider a system with 4 users in transmission space and 4 users in reflection space, and they are uniformly and randomly located in a square region of centered at the 3-dimensional coordinate and , respectively. The STAR-RIS and AP are located at the 3-dimensional coordinate and , respectively.

Spatially independent Rician fading channel is considered for all channels to account for both the line-of-sight (LoS) and non-LoS (NLoS) components [21]. For example, the AP-RIS channel is expressed as , where is the Rician factor representing the ratio of power between the LoS path and the scattered paths, is the LoS component modeled as the product of the unit spatial signature of the AP-RIS link [21, 37], is the Rayleigh fading components with entries distributed as , and is the distance-dependent path loss of the AP-RIS channel. We consider the following distance-dependent path loss model , where is the constant path loss at the reference distance is the Euclidean distance between the transceivers, and is the path loss exponent[38]. Since the STAR-RIS can be practically deployed in LoS with the AP, we set and [38]. In addition, other channels are similarly generated with and (i.e., Rayleigh fading to account for rich scattering) for the AP-user channel, and for the RIS-user channel[39, 40]. We consider a system with a bandwidth , and the effective noise power density is [41]. Thus the noise power at the AP is . Each antenna at the AP is assumed to have an isotropic radiation pattern, and the antenna gain is [42]. Unless specified otherwise, other parameters are set as follows: , , , and [40].

V-A Performance of the Proposed Algorithms

First, we demonstrate the convergence behavior of Algorithm 1 compared with the penalty-based method in Section III for solving (10). Note that both the smoothing-based and penalty-based methods are solved by the PG method for a fair comparison.

The superiority of the proposed smoothing-based method in terms of the objective values is shown in Fig. 3(a) under a specific channel realization. It is observed that the proposed algorithm achieves a higher computation rate compared with the penalty-based method. This shows the effectiveness of the proposed smoothing-based method in eliminating the poor local optima and therefore achieving a higher computation rate.

Next, in Fig. 3(b), we compare the convergence behavior of Algorithm 2 and the DC programming with CVX for solving (21). It shows that Algorithm 2 and the DC programming reach almost the same objective value at the end. Although DC programming converges in fewer iterations than Algorithm 2, Algorithm 2 is much more efficient than DC programming on a per-iteration basis since Algorithm 2 updates variables in closed-forms or via bisection search, while DC programming requires solving a convex optimization numerically in each iteration. This is reflected by the computation time of Algorithm 2 to reach a computation rate of being only 0.057 s while that of the DC programming is 3.116 s.

In addition, we demonstrate the overall convergence of the proposed algorithm (Algorithm 3) and compare with the following two benchmarks: SDR-DC method for solving via SDR and solving with DC programming, and the ‘Penalty-based Method’ for solving (10) with the penalty-based method as in Section III with exact binary constraints.

The superiority of the proposed algorithm in terms of the computation rate is shown in Fig. 4 under a specific channel realization. It is observed that the proposed algorithm achieves a higher computation rate than the penalty-based method. On the other hand, as the proposed algorithm include an extra smoothing parameters update step, it takes more steps in outer iterations in the BCD algorithm to converge. It is also observed that both the proposed and the penalty-based methods outperform the SDR-DC method in convergence speed and computation rate performance.

In Fig. 5, we show the average performance gap between the proposed and penalty-based methods versus the number of elements in STAR-RIS. We can see that the proposed method outperforms the penalty-based method in terms of the objective values obtained. Moreover, as the number of elements increases, the performance gap widens in general. It illustrates the potential of the proposed smoothing-based method in solving a massive STAR-RIS-aided wireless system.

To further show the low computational complexity of the proposed algorithm, we compare the computation time with SDR-DC and penalty-based methods. As shown in Fig. 6(a) and Fig. 6(b), with the number of elements or users increases, the proposed method and penalty-based method save the computation time to a large extent compared with the SDR-DC method (e.g., more than 10 times differences with 60 elements and 8 times differences with 14 users, in Fig. 6(a) and Fig. 6(b), respectively), and the advantage becomes more prominent as or increases. On the other hand, it shows that the proposed algorithm achieves almost the same computation time compared with the penalty-based method for small numbers of and . As and increase, the computation time of the proposed algorithm is only marginally higher than that of the penalty-based method. Both the proposed and penalty-based methods are suitable for large-scale STAR-RIS optimization.

V-B Performance Comparison with Other Schemes

To verify the effectiveness of the STAR-RIS in the proposed MEC system and the performance of the proposed algorithm, we compare the performance with the following schemes.

V-B1 Conventional RISs

In this case, the full-space coverage facilitated by the STAR-RIS in Fig. 1 is achieved by employing one conventional reflect-only RIS and one transmit-only RIS. The two conventional RISs are deployed adjacent to each other at the same location as the STAR-RIS. For a fair comparison, each conventional reflect-only/transmit-only RIS is assumed to have elements. This baseline scheme can be regarded as a special case of the STAR-RIS in MS mode, where elements can transmit the signal and elements can reflect the signal.

V-B2 Random phase shift

In this case, in are uniformly and randomly distributed in .

V-B3 Performance upper bound provided by energy splitting (ES)

In this case, we assume that all elements of the STAR-RIS are operated in the transmission and reflection mode, where the incident energy on each element is split into the energies of the transmitted and reflected signals with an energy splitting ratio of and . The resulting optimization problem can be obtained by replacing the binary constraints (10b) with the constraints in the problem (10) and can be solved by applying Algorithm 3. Notice that this scheme will theoretically obtain a better computation rate than the MS mode, as the is relaxed from to . However, as the ES mode cannot be implemented in hardware at this moment, this serves as an upper bound for the STAR-RIS.

V-B4 Equal time allocation

In this case, we divide the length of the time slot equally into two parts and let the STAR-RIS transmits the signal half the time and reflects the signal half the time. This baseline scheme can be regarded as mode switching in the time domain.

V-B5 Zero-forcing (ZF) and Equal energy allocation

The equal energy allocation scheme equally allocates the users’ energy budget for local computing and computation offloading, and the ZF scheme leverages ZF receive beamforming at the AP. Note that the ZF receive beamforming cannot effectively deal with the cases when , while our proposed optimal solution in Section IV can perform well even in these cases.

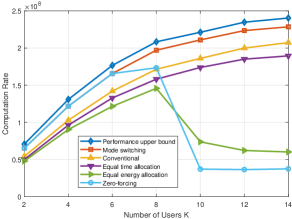

In Fig. 7, we show the computation rate of different schemes with respect to the number of elements of the STAR-RIS. From this figure, we can observe that all schemes’ computation rates increase with the number of elements, which coincides with the intuition that STAR-RISs with more elements have a stronger capability to rectify the channel. It is clear that significant performance improvement can be achieved by the proposed BCD-optimized solution, verifying the great benefits of deploying STAR-RIS with joint optimization of the STAR-RIS’s amplitude coefficients and phase shifts. It is confirmed that the STAR-RIS with MS mode provides a improvement in computation rate over the benchmark of conventional RISs and almost double in computation rate over the benchmark of random phase shift. In addition, the performance upper bound provided by ES mode outperforms MS mode by nearly . We observed that the equal time allocation scheme only performs better than the random phase shift. This is because both users in the transmission or reflection space are served by STAR-RIS only for half the duration of the time length .

The performance in terms of the computation rate versus the number of the AP’s antennas is presented in Fig. 8(a). The system equipped with STAR-RIS always has better performance than the conventional RIS. Furthermore, as the number of antennas decreases, the performance of the ZF and equal energy allocation schemes degrade dramatically. It is because the ZF scheme cannot separate the signal streams when the number of users exceeds the number of antennas at the AP, while optimal beamforming design selects a beamformer between ZF and maximum ratio combining to maximize the SINR. Also, since the equal energy allocation scheme cannot control the uplink transmission power, if the number of antennas is less than the number of users (when the linear beamformer cannot eliminate other users’ interference), it causes severe interference issues, and thus affects the offloading computation rates. In addition, we can observe that all the curves of the computation rate increase as grows, and the performance improvement becomes less prominent with larger .

In Fig. 8(b), we study the effects of the number of users, i.e., , on the system performance of computation rate. It can be observed that the system equipped with STAR-RIS always performs better than the conventional RIS. Although the computation rates increase as the number of users grows, the performance improvement becomes less significant. It is due to the fact that the system is capacity limited when the number of users is small and becomes interference limited as the number of users increases. In addition, similar results can be observed from Fig. 8(a) that instead of performance degradation like ZF and equal energy allocation, the proposed solutions have better performance as becomes larger than through effectively designing the receive beamforming vectors and the users’ energy allocation.

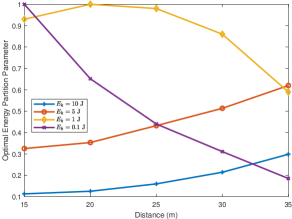

To reveal insight into the energy trade-off between offloading and local computation, we investigate how the optimal energy partition parameter varies with the distance between STAR-RIS and the user. Specifically, in Figure 9, we consider the user with varying energy budgets ranging from to . For users with high energy budgets, i.e., or , we observed that the optimal strategy is to allocate more energy to computation offloading as the distance increases. This is because computation offloading can provide a higher computation rate than local computation, and as the distance increases, the user needs to spend more energy on the data offloading. In contrast, for a user with a low energy budget, i.e., , the optimal energy partition parameter first increases as the distance increases. However, as it reaches the maximum value of , it starts to decline as the distance increases. The reason for the rise of the optimal energy partition parameter at the first segment is the same as that of users with a high energy budget. However, as the distance increases further, a limited energy budget cannot support high-speed data transmission in computation offloading; therefore, computation offloading becomes less economical than local computing. Thus, we observe a decline in the optimal energy partition parameter at the second segment. As for the case of , the optimal energy partition parameter reaches the maximum value of with a lower distance compared with and then followed by a decline as the distance increases. The simulation results demonstrate that the optimal energy allocation strategy for computation offloading depends on the user’s energy budget and the distance between the STAR-RIS and the user. In general, the optimal energy partition parameter first increases as the distance increases before reaching its maximum value of and then decreases as the distance increases. The optimal energy partition parameter curve will shift to the right if the energy budget is abundant, while the curve will shift to the left if the energy budget is small.

VI Conclusion

In this paper, a STAR-RIS-aided MEC system with computation offloading has been investigated. Specifically, the computation rate was maximized via the collaborative design of the STAR-RIS phase shifts, reflection and transmission amplitude coefficients, the AP’s receive beamforming vectors, and the users’ energy partition strategies for local computing and offloading. To handle the binary reflection and transmission amplitude coefficients of the STAR-RIS, the logarithm smoothing-based method has been proposed to eliminate the undesired stationary points and local optima induced by conventional penalty-based methods. Furthermore, a low-complexity iterative algorithm has been proposed to obtain a stationary point of the non-convex joint optimization problem. Due to the linear scaling of the computational complexity with respect to the number of users or STAR-RIS elements, the proposed algorithm is suitable for massive scenarios. Numerical results showed that the proposed low-complexity algorithm could save computation time by at least an order of magnitude compared to the DC programming and SDR-based solution. Besides, the STAR-RIS could significantly improve the computation rate of the system compared to the conventional RIS system.

Appendix A

Degree of Freedom to Support Users with STAR-RIS

We first examine the DoF between transmission users and the AP. The received signal at the AP due to all transmission users is

| (35) |

where denotes the direct link between transmission users and the AP, denotes the link between transmission users and the STAR-RIS elements in the transmission mode, with being the number of STAR-RIS elements in transmission mode, is the phase shift matrix of the STAR-RIS elements in transmission mode, is the channel from the elements in transmission mode to the AP, and is the aggregated information symbols of transmission users. We here assume that the direct link has a rank . The DoF of the equivalent channel is its rank and is upper bounded by [43]. The intuition behind this result is that the direct path channel can be regarded as a separate RIS with elements where the phase shifts are fixed at 0 (or any arbitrary phase).

Similarly, the channel between reflection users to the AP can be written as , where with rank denotes the direct link between reflection users and the AP, is the number of reflection users, denotes the link between reflection users and the STAR-RIS elements in the reflection mode, with being the number of STAR-RIS elements in reflection mode, is the phase shift matrix of the STAR-RIS elements in reflection mode, and is the channel from the STAR-RIS elements in reflection mode to the AP. Correspondingly, the rank of this channel is upper bounded by .

Then the received signal at the AP due to all users can be represented as

| (36) |

where is the aggregated information symbols for all users, , and . Note that the maximum DoF for channel is not exactly but it is related to the DoF of and . To illustrate the above idea, we assume in the following. Since the maximum DoF of is , if , then the DoF of cannot reach , since the rank of is lower than . Similarly, since the maximum DoF of is , if , then the DoF of cannot reach either, since the rank of is lower than . Therefore the necessary condition for to have DoF to support users is the number of elements in transmission mode greater than or equal to and the number of elements in reflection mode greater than or equal to . Since , we obtain .

Appendix B

Derivation of the Gradient of

Firstly, the gradient of (16) for the third term and the fourth term with respect to can be obtained as and , respectively. Then for the first term and second term: and , the gradient with respect to can be obtained based on chain rules for complex-valued variables[44]. The overall results are given below in (37).

| (37a) | ||||

| (37b) | ||||

Appendix C

Proof of Proposition 1

Recall that the orthogonal projection of is the optimal solution of (18), the Lagrangian of the problem is

| (38) |

where refers to a collection of variables and is a vector with its and positions equal to 1 and others equal to 0. Since strong duality holds for the problem (38), it follows that is an optimal solution to the problem (18) if and only if there exists for which

| (39a) | ||||

| (39c) | ||||

Using the expression of the Lagrangian given in (38), the relation (39a) can be equivalently written as

| (40) |

The feasibility condition (39c) can then be rewritten as

| (41) |

Appendix D

Proof of Proposition 2

First, by introducing a new variable to replace each ratio term inside the logarithm, (21) can be rewritten as

| (42a) | ||||

| s.t. | (42b) | |||

| (42c) | ||||

where . The above optimization can be thought of as an outer optimization over and an inner optimization over with fixed . The inner optimization is

| (43a) | ||||

| s.t. | (43b) | |||

Note that (43) is a convex optimization problem, so the strong duality holds. We introduce the dual variable for each inequality constraint in (43b) and form the Lagrangian function

| (44) | ||||

where refers to the collection of variables . Due to strong duality, the optimization (43) is equivalent to the dual problem

| (45) |

Let be the saddle point of the problem (45). It must satisfy the first-order condition :

| (46) |

Given the constraint (43b), the optimal is obtained with the equality of (43b). Hence, the optimal is given by

| (47) |

Note that is automatically satisfied since both the numerator and denominator in (47) are positive. Putting (47) in (45), problem (42) can then be reformulated as

| (48) |

Furthermore, combining with the outer maximization over and after some algebra, we can find (48) to be the same as the maximization of (23) in the Proposition 2.

References

- [1] M. Armbrust, A. Fox, R. Griffith, A. D. Joseph, R. Katz, A. Konwinski, G. Lee, D. Patterson, A. Rabkin, I. Stoica et al., “A view of cloud computing,” Commun. ACM, vol. 53, no. 4, pp. 50–58, 2010.

- [2] A. Al-Fuqaha, M. Guizani, M. Mohammadi, M. Aledhari, and M. Ayyash, “Internet of things: A survey on enabling technologies, protocols, and applications,” IEEE Commun. Surveys Tuts., vol. 17, no. 4, pp. 2347–2376, Quart. 2015.

- [3] Y. Mao, C. You, J. Zhang, K. Huang, and K. B. Letaief, “A survey on mobile edge computing: The communication perspective,” IEEE Commun. Surveys Tuts., vol. 19, no. 4, pp. 2322–2358, Fourth Quart. 2017.

- [4] X. Hu, K.-K. Wong, and K. Yang, “Wireless powered cooperation-assisted mobile edge computing,” IEEE Trans. Commun., vol. 17, no. 4, pp. 2375–2388, Apr. 2018.

- [5] C. You, K. Huang, H. Chae, and B.-H. Kim, “Energy-efficient resource allocation for mobile-edge computation offloading,” IEEE Trans. Wireless Commun., vol. 16, no. 3, pp. 1397–1411, Mar. 2017.

- [6] S. Bi and Y. J. Zhang, “Computation rate maximization for wireless powered mobile-edge computing with binary computation offloading,” IEEE Trans. Wireless Commun., vol. 17, no. 6, pp. 4177–4190, Jun. 2018.

- [7] Q. Wu and R. Zhang, “Towards smart and reconfigurable environment: Intelligent reflecting surface aided wireless network,” IEEE Commun. Mag., vol. 58, no. 1, pp. 106–112, Jan. 2020.

- [8] X. Yuan, Y.-J. A. Zhang, Y. Shi, W. Yan, and H. Liu, “Reconfigurable-intelligent-surface empowered wireless communications: Challenges and opportunities,” IEEE Wireless Commun., vol. 28, no. 2, pp. 136–143, Apr. 2021.

- [9] Z. Li, S. Wang, M. Wen, and Y.-C. Wu, “Secure multicast energy-efficiency maximization with massive RISs and uncertain CSI: First-order algorithms and convergence analysis,” IEEE Trans. Wireless Commun., vol. 21, no. 9, pp. 6818–6833, Sep. 2022.

- [10] Y. Yang, Y. Gong, and Y.-C. Wu, “Intelligent-reflecting-surface-aided mobile edge computing with binary offloading: Energy minimization for IoT devices,” IEEE Internet Things J., vol. 9, no. 15, pp. 12 973–12 983, Aug. 2022.

- [11] Y. Chen, M. Wen, E. Basar, Y.-C. Wu, L. Wang, and W. Liu, “Exploiting reconfigurable intelligent surfaces in edge caching: Joint hybrid beamforming and content placement optimization,” IEEE Trans. Wireless Commun., vol. 20, no. 12, pp. 7799–7812, Dec. 2021.

- [12] Y. Liu, X. Mu, J. Xu, R. Schober, Y. Hao, H. V. Poor, and L. Hanzo, “STAR: Simultaneous transmission and reflection for 360° coverage by intelligent surfaces,” IEEE Wireless Commun., vol. 28, no. 6, pp. 102–109, Dec. 2021.

- [13] J. Xu, Y. Liu, X. Mu, and O. A. Dobre, “STAR-RISs: Simultaneous transmitting and reflecting reconfigurable intelligent surfaces,” IEEE Commun. Lett., vol. 25, no. 9, pp. 3134–3138, Sep. 2021.

- [14] S. Zhang, H. Zhang, B. Di, Y. Tan, M. Di Renzo, Z. Han, H. Vincent Poor, and L. Song, “Intelligent omni-surfaces: Ubiquitous wireless transmission by reflective-refractive metasurfaces,” IEEE Trans. Wireless Commun., vol. 21, no. 1, pp. 219–233, Jan. 2022.

- [15] B. O. Zhu, K. Chen, N. Jia, L. Sun, J. Zhao, T. Jiang, and Y. Feng, “Dynamic control of electromagnetic wave propagation with the equivalent principle inspired tunable metasurface,” Sci. Rep., vol. 4, no. 1, pp. 1–7, 2014.

- [16] “Ntt docomo. docomo conducts world’s first successful trial of transparent dynamic metasurface. [online]. available: https://www.nttdocomo.co.jp/english/info/mediacenter/pr/2020/0117_00.html.”

- [17] H. Zhang, S. Zeng, B. Di, Y. Tan, M. Di Renzo, M. Debbah, Z. Han, H. V. Poor, and L. Song, “Intelligent omni-surfaces for full-dimensional wireless communications: Principles, technology, and implementation,” IEEE Commun. Mag., vol. 60, no. 2, pp. 39–45, Feb. 2022.

- [18] S. Zeng, H. Zhang, B. Di, Y. Liu, M. D. Renzo, Z. Han, H. V. Poor, and L. Song, “Intelligent omni-surfaces: Reflection-refraction circuit model, full-dimensional beamforming, and system implementation,” IEEE Trans. Wireless Commun., vol. 70, no. 11, pp. 7711–7727, Nov. 2022.

- [19] X. Hu, L. Wang, K.-K. Wong, M. Tao, Y. Zhang, and Z. Zheng, “Edge and central cloud computing: A perfect pairing for high energy efficiency and low-latency,” IEEE Trans. Wireless Commun., vol. 19, no. 2, pp. 1070–1083, Feb. 2020.

- [20] X. Pei, W. Duan, M. Wen, Y.-C. Wu, H. Yu, and V. Monteiro, “Socially aware joint resource allocation and computation offloading in NOMA-aided energy-harvesting massive IoT,” IEEE Internet Things J., vol. 8, no. 7, pp. 5240–5249, Apr. 2021.

- [21] D. Tse and P. Viswanath, Fundamentals of Wireless Communication. Cambridge, U.K.: Cambridge Univ. Press, 2005.

- [22] B. Wu and B. Ghanem, “-box ADMM: A versatile framework for integer programming,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 41, no. 7, pp. 1695–1708, Jul. 2019.

- [23] W. Murray and K.-M. Ng, “An algorithm for nonlinear optimization problems with binary variables,” Comput. Optim. Appl., vol. 47, no. 2, pp. 257–288, 2010.

- [24] M. Borchardt, “An exact penalty approach for solving a class of minimization problems with boolean variables,” Optim., vol. 19, no. 6, pp. 829–838, 1988.

- [25] X. Mu, Y. Liu, L. Guo, J. Lin, and R. Schober, “Simultaneously transmitting and reflecting (STAR) RIS aided wireless communications,” IEEE Trans. Wireless Commun., vol. 21, no. 5, pp. 3083–3098, May 2022.

- [26] T. Zhang, S. Wang, Y. Zhuang, C. You, M. Wen, and Y.-C. Wu, “Reconfigurable intelligent surface assisted OFDM relaying: Subcarrier matching with balanced SNR,” IEEE Trans. on Veh. Tech., vol. 72, no. 2, pp. 2216–2230, Feb. 2023.

- [27] R. Horst and H. Tuy, Global Optimization: Deterministic Approaches. Springer Science & Business Media, 2013.

- [28] A. V. Fiacco and G. P. McCormick, Nonlinear Programming: Sequential Unconstrained Minimization Techniques. SIAM, 1990.

- [29] D. P. Bertsekas, “Nonlinear programming,” J. Oper. Res. Soc., vol. 48, no. 3, pp. 334–334, 1997.

- [30] W. Murray and K.-M. Ng, “Algorithms for global optimization and discrete problems based on methods for local optimization,” in Handbook of global optimization. Springer, 2002, pp. 87–113.

- [31] K. Shen and W. Yu, “Fractional programming for communication systems—Part I: Power control and beamforming,” IEEE Trans. Signal Process., vol. 66, no. 10, pp. 2616–2630, May 2018.

- [32] ——, “Fractional programming for communication systems—Part II: Uplink scheduling via matching,” IEEE Trans. Signal Process., vol. 66, no. 10, pp. 2631–2644, May 2018.

- [33] Y. Ma, Y. Shen, X. Yu, J. Zhang, S. Song, and K. B. Letaief, “A low-complexity algorithmic framework for large-scale IRS-assisted wireless systems,” in Proc. IEEE Glob. Commun. Conf. Workshops, Taipei, Taiwan, Dec. 2020, pp. 1–6.

- [34] T. Lipp and S. Boyd, “Variations and extension of the convex-concave procedure,” Optim. Eng., vol. 17, no. 2, pp. 263–287, 2016.

- [35] M. Razaviyayn, M. Hong, and Z.-Q. Luo, “A unified convergence analysis of block successive minimization methods for nonsmooth optimization,” SIAM J. Optim., vol. 23, no. 2, p. 1126, 2013.

- [36] H. Guo, Y.-C. Liang, J. Chen, and E. G. Larsson, “Weighted sum-rate maximization for reconfigurable intelligent surface aided wireless networks,” IEEE Trans. Wireless Commun., vol. 19, no. 5, pp. 3064–3076, May 2020.

- [37] S. Zhang and R. Zhang, “Capacity characterization for intelligent reflecting surface aided MIMO communication,” IEEE J. Sel. Areas Commun., vol. 38, no. 8, pp. 1823–1838, Aug. 2020.

- [38] Q. Wu and R. Zhang, “Intelligent reflecting surface enhanced wireless network via joint active and passive beamforming,” IEEE Trans. Wireless Commun., vol. 18, no. 11, pp. 5394–5409, 2019.

- [39] S. Hua, Y. Zhou, K. Yang, Y. Shi, and K. Wang, “Reconfigurable intelligent surface for green edge inference,” IEEE Trans. Green Commun. Netw., vol. 5, no. 2, pp. 964–979, Jun. 2021.

- [40] X. Hu, C. Masouros, and K.-K. Wong, “Reconfigurable intelligent surface aided mobile edge computing: From optimization-based to location-only learning-based solutions,” IEEE Trans. Commun., vol. 69, no. 6, pp. 3709–3725, Jun. 2021.

- [41] Q. Wu and R. Zhang, “Beamforming optimization for wireless network aided by intelligent reflecting surface with discrete phase shifts,” IEEE Trans. Commun., vol. 68, no. 3, pp. 1838–1851, Mar. 2020.

- [42] ——, “Joint active and passive beamforming optimization for intelligent reflecting surface assisted SWIPT under QoS constraints,” IEEE J. Sel. Areas Commun., vol. 38, no. 8, pp. 1735–1748, Aug. 2020.

- [43] H. V. Cheng and W. Yu, “Degree-of-freedom of modulating information in the phases of reconfigurable intelligent surface,” 2021. [Online]. Available: https://arxiv.org/abs/2112.13787

- [44] K. B. Petersen, M. S. Pedersen et al., “The matrix cookbook,” Technical University of Denmark, vol. 7, no. 15, p. 510, 2008.