Stacked Autoencoder Based Feature Extraction and Superpixel Generation for Multifrequency PolSAR Image Classification

Abstract

In this paper we propose a classification algorithm for multifrequency Polarimetric Synthetic Aperture Radar (PolSAR) images. Using PolSAR decomposition algorithms, 33 features are extracted from each frequency band of the given image. Then, a two-layer autoencoder is used to reduce the dimensionality of the input feature vector while retaining its useful features. This reduced dimensional feature vector is then used to generate superpixels with the simple linear iterative clustering (SLIC) algorithm. Next, a robust feature representation is constructed using both pixel and superpixel information. Finally, a softmax classifier is used to perform the classification task. The advantage of using superpixels is that they preserve spatial information between neighbouring PolSAR pixels and therefore minimize the effect of speckle noise during classification. Experiments have been conducted on the Flevoland dataset, and the proposed method was found to be superior to other methods available in the literature.

Keywords:

polarimetric synthetic aperture radar (PolSAR), multifrequency PolSAR image classification, autoencoder, superpixels, simple linear iterative clustering (SLIC), Optimized Wishart Network (OWN)

1 Introduction

Synthetic Aperture Radar (SAR) has become popular in recent years as a technique for capturing high-resolution microwave images of the earth's surface. Unlike optical sensors, SAR can acquire an image regardless of weather conditions or time of day. Another major reason to use SAR is its operability over multiple frequency bands, from the X band to the P band. The penetrability of the L and P bands allows SAR to capture data even from below ground level. PolSAR, in turn, captures information about the target in four polarization states, which makes it an information-rich technique. These are some of the reasons that establish the superiority of SAR over optical data capturing techniques.

One of the earliest approaches to the classification of multifrequency PolSAR data used a dynamic learning neural network (DNN) [1]. A deep learning based multilayer autoencoder network was also proposed [2]; it uses the Kronecker product of the eigenvalues of the coherency matrix to combine information from multiple bands. Recently, an Optimized Wishart Network (OWN) for the classification of multifrequency PolSAR data was reported [3].

Superpixel algorithms in conjunction with deep neural networks have gained popularity for capturing the spatial information of a PolSAR image. Hou et al. [4] used the Pauli decomposition of a PolSAR image to generate superpixels and an autoencoder to extract features from the coherency matrix of each PolSAR pixel. The predictions of the network were then used to run a k nearest neighbours (KNN) algorithm in each superpixel to determine the class of the complete superpixel. Guo et al. introduced a method that applies a fuzzy clustering algorithm to PolSAR images to generate superpixels [5]. This method considered only those pixels which are similar to their neighbours; pixels which are in all probability badly conditioned were ignored. In another work [6], Cloude decomposition features were used to generate superpixels and a Convolutional Neural Network (CNN) was used to perform the classification. An adaptive nonlocal approach for extracting spatial information was also proposed [7]; it uses a stacked sparse autoencoder to extract robust features.

In this paper we propose a classification algorithm for multifrequency PolSAR images. We extract 33 features from each band of the PolSAR image to construct a feature vector for each pixel, and then use a two-layer stacked autoencoder to reduce the dimensionality of this input vector. The output of the autoencoder, which contains information from all features of all bands, is used to generate superpixels. Next, each pixel and its corresponding superpixel are used to construct a robust feature vector. Merging this information reduces the effect of noise or errors on individual pixels. Finally, a softmax classifier performs the classification task.

This paper is organized as follows: Section 2 discusses the proposed network architecture; Section 3 explains the experiments conducted on the Flevoland dataset; Section 4 concludes with our observations and a discussion of the results.

2 Proposed Methodology

The raw data of a PolSAR image is a 2x2 scattering matrix in which each element denotes the phase and magnitude of the waves received in vertical and horizontal polarization after scattering of vertically and horizontally polarized transmitted waves, giving 4 complex numbers:

$$S = \begin{bmatrix} S_{HH} & S_{HV} \\ S_{VH} & S_{VV} \end{bmatrix}$$

Due to the reciprocity condition, it is assumed that $S_{HV} = S_{VH}$. So we have 3 complex numbers, each with 2 elements: real and imaginary. If a neural network were given these 6 elements as input, it would not be able to compute the phase information, since it treats the real and imaginary components as separate features. This creates the need to give crafted features to the network instead of raw data. We have created a 33 dimensional feature vector extracted from one frequency band of a PolSAR image. It contains 6 features of the coherency matrix [8], 3 features of the Freeman decomposition [9], 4 features of the Krogager decomposition [10], 4 features of the Yamaguchi decomposition [11], 9 features of the Huynen decomposition [12] and 7 features of the Cloude decomposition [13], as shown in Table 1. Hence, combining the information of all three bands, we get a 99 dimensional feature vector corresponding to each PolSAR pixel.

The coherency matrix provides 6 features computed from the elements of the scattering matrix. The Freeman decomposition has 3 features which describe the power of single-bounce (odd-bounce), double-bounce and volume scattering of the transmitted waves. The Huynen decomposition aims to form a single scattering matrix to model the scattering mechanism of the surface. The Cloude decomposition, proposed in 1997, models surface scattering using parameters such as Entropy, Anisotropy and several angles. The Krogager decomposition factorizes the scattering matrix as the combination of a sphere, a diplane and a helix. The Yamaguchi decomposition adds a helix scattering component to the Freeman decomposition to model complicated man-made structures.
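To make the link between the scattering matrix and the coherency matrix concrete, the following is a minimal sketch of the standard Pauli-basis construction for a single pixel under the reciprocity assumption. The function names and the sample scattering coefficients are hypothetical, and the sketch does not reproduce the exact 6 features taken from [8]; it only illustrates that the resulting matrix is Hermitian and that its off-diagonal terms carry the relative phase between channels.

```python
import math

def pauli_vector(s_hh, s_hv, s_vv):
    """Pauli scattering vector for a reciprocal target (S_HV == S_VH)."""
    scale = 1.0 / math.sqrt(2.0)
    return [scale * (s_hh + s_vv), scale * (s_hh - s_vv), scale * 2.0 * s_hv]

def coherency_matrix(k):
    """Single-look coherency matrix T = k k^H (3x3, Hermitian)."""
    return [[k[i] * k[j].conjugate() for j in range(3)] for i in range(3)]

# Hypothetical complex scattering coefficients for one pixel (illustration only).
k = pauli_vector(1.0 + 0.5j, 0.2 - 0.1j, 0.8 - 0.3j)
T = coherency_matrix(k)

# The trace of T is the total scattered power (span):
# |S_HH|^2 + 2 |S_HV|^2 + |S_VV|^2.
span = sum(T[i][i].real for i in range(3))
```

Because T is Hermitian, it is fully described by its three real diagonal entries and three complex off-diagonal entries. In practice the matrix is usually averaged over neighbouring pixels (multilooking) before features are computed, which this single-pixel sketch omits.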

Table 1: Features extracted from each frequency band.

| Feature group | Number of features | Reference |
|---|---|---|
| Coherency matrix | 6 | [8] |
| Freeman decomposition | 3 | [9] |
| Krogager decomposition | 4 | [10] |
| Yamaguchi decomposition | 4 | [11] |
| Huynen decomposition | 9 | [12] |
| Cloude decomposition | 7 (including Entropy and Anisotropy) | [13] |

As shown in Fig. 1, our proposed network architecture contains three modules. The first module is a two-layer autoencoder network. The purpose of this module is to reduce the dimensionality of the input vector by learning an efficient representation of the combined information from the PolSAR frequency bands.

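Let $x$ be the input feature vector of a PolSAR pixel. Let $W_1$, $b_1$, $W_2$ and $b_2$ be the weights and biases of the two encoder layers. A hidden representation of the input feature vector can be calculated as $h = f(W_2 f(W_1 x + b_1) + b_2)$, where $f(\cdot)$ is an activation function. Let $W_3$, $b_3$, $W_4$ and $b_4$ be the weights and biases of the two decoder layers. A reconstructed input vector can be calculated as $\hat{x} = f(W_4 f(W_3 h + b_3) + b_4)$. We have used mean square error to train the autoencoder. The cost function of the first module of the proposed network is given as follows:

$$J_1 = \frac{1}{N}\sum_{i=1}^{N} \lVert x_i - \hat{x}_i \rVert_2^2 + \lambda \sum_{l=1}^{4} \lVert W_l \rVert_F^2 + \beta \sum_{j=1}^{K} \mathrm{KL}(\rho \,\Vert\, \hat{\rho}_j) \tag{1}$$

Here $\lambda$ is a regularization parameter, $\eta$ is the learning rate used when minimizing $J_1$, $\beta$ is the sparsity parameter that weights the sparsity penalty, $N$ is the total number of training samples, $K$ is the size of the reduced dimensional feature vector $h$, $\rho$ is a sparsity parameter, $\hat{\rho}_j$ is the average activation value of the $j$th hidden unit and $\mathrm{KL}(\cdot)$ is the Kullback-Leibler divergence, which encourages sparsity in the hidden representation $h$. For our application we have set the value of . Once the training of the first module is complete we disconnect the decoder layers.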
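The cost described above (mean squared reconstruction error, weight regularization and a Kullback-Leibler sparsity penalty on the average hidden activations) can be sketched in plain Python as follows. This is an illustrative sketch, not the authors' implementation: the sigmoid activation on every layer, inputs scaled to [0, 1], and the names `sparse_ae_cost`, `lam`, `beta` and `rho` are all assumptions.

```python
import math

def sigmoid(v):
    """Element-wise logistic activation (assumed; the activation is not fixed here)."""
    return [1.0 / (1.0 + math.exp(-x)) for x in v]

def dense(W, b, x):
    """Affine map W x + b, with W stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def kl_bernoulli(rho, rho_hat):
    """KL divergence between Bernoulli(rho) and Bernoulli(rho_hat)."""
    return (rho * math.log(rho / rho_hat)
            + (1.0 - rho) * math.log((1.0 - rho) / (1.0 - rho_hat)))

def sparse_ae_cost(X, enc, dec, lam, beta, rho):
    """Mean squared error + L2 weight decay + KL sparsity penalty.

    enc and dec are lists of (W, b) pairs for the encoder and decoder layers.
    """
    n = len(X)
    recon_err = 0.0
    act_sum = None
    for x in X:
        h = x
        for W, b in enc:                      # encoder: input -> hidden code
            h = sigmoid(dense(W, b, h))
        x_hat = h
        for W, b in dec:                      # decoder: hidden code -> reconstruction
            x_hat = sigmoid(dense(W, b, x_hat))
        recon_err += sum((a - r) ** 2 for a, r in zip(x, x_hat))
        act_sum = h if act_sum is None else [s + v for s, v in zip(act_sum, h)]
    rho_hat = [s / n for s in act_sum]        # average activation of each hidden unit
    decay = sum(w * w for W, _ in enc + dec for row in W for w in row)
    sparsity = sum(kl_bernoulli(rho, r) for r in rho_hat)
    return recon_err / n + lam * decay + beta * sparsity

def zeros(rows, cols):
    return [[0.0] * cols for _ in range(rows)]

# Toy 4 -> 3 -> 2 -> 3 -> 4 network with all-zero parameters (illustration only).
enc = [(zeros(3, 4), [0.0] * 3), (zeros(2, 3), [0.0] * 2)]
dec = [(zeros(3, 2), [0.0] * 3), (zeros(4, 3), [0.0] * 4)]
X = [[0.5, 0.5, 0.5, 0.5]]
cost_dense_target = sparse_ae_cost(X, enc, dec, lam=1e-3, beta=3.0, rho=0.5)
cost_sparse_target = sparse_ae_cost(X, enc, dec, lam=1e-3, beta=3.0, rho=0.05)
```

With all-zero parameters every unit outputs 0.5, so the reconstruction of the constant 0.5 input is exact and the penalty vanishes when the target sparsity is 0.5. Lowering the target to 0.05 makes the same activations incur a positive penalty, which is the pressure that drives the hidden code towards sparsity during training.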

Next, we feed the entire PolSAR image as input to this network to obtain the hidden representations of all pixels of the PolSAR image. We use these hidden representations to generate superpixels. This representation has two advantages: i) it contains feature information of all bands and ii) its dimensionality is substantially reduced in comparison to the input feature vector. To generate superpixels we have used an algorithm similar to simple linear iterative clustering (SLIC) [14]. Instead of the RGB input image, we use the hidden representation of the PolSAR image obtained from the first module of the proposed network. Using SLIC we measure the distance between any two pixels, which is given by Eq. 2:

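$$D(i, j) = \sqrt{d_f^2 + \left(\frac{d_s}{S}\right)^2 m^2} \tag{2}$$

where $d_f = \lVert h_i - h_j \rVert_2$ and $d_s = \sqrt{(u_i - u_j)^2 + (v_i - v_j)^2}$. Here $h_i$ and $h_j$ are the hidden representations of the $i$th and $j$th PolSAR pixels, $m$ is a parameter controlling the relative weight between $d_f$ and $d_s$, and $S$ is the size of the search space [14]. $(u_i, v_i)$ and $(u_j, v_j)$ are the positions of the $i$th and $j$th PolSAR pixels on the Euclidean plane. This distance measure was finally used for superpixel generation.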
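As an illustration of the distance measure, the sketch below follows the form of the SLIC distance in [14], with the colour term replaced by the Euclidean distance between hidden representations. The function and argument names are hypothetical, and the numbers in the usage lines are made up to give an easily checked result.

```python
import math

def slic_distance(h_i, h_j, pos_i, pos_j, S, m):
    """SLIC-style distance between two pixels.

    h_i, h_j     : hidden representations (feature vectors) of the two pixels
    pos_i, pos_j : (row, column) positions of the two pixels
    S            : size of the search space (grid interval)
    m            : relative weight of spatial versus feature distance
    """
    d_f = math.sqrt(sum((a - b) ** 2 for a, b in zip(h_i, h_j)))   # feature distance
    d_s = math.hypot(pos_i[0] - pos_j[0], pos_i[1] - pos_j[1])     # spatial distance
    return math.sqrt(d_f ** 2 + (d_s / S) ** 2 * m ** 2)

# Feature distance 5, spatial distance 10 with S = 10 and m = 12.
d = slic_distance([0.0, 0.0], [3.0, 4.0], (0, 0), (0, 10), S=10, m=12)
```

A larger `m` makes spatial proximity dominate and yields more compact superpixels, while a smaller `m` lets superpixels follow boundaries in feature space more closely.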

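The second module of our proposed architecture combines each pixel with its corresponding superpixel information to construct a robust feature vector. Let $P_k$ be the $k$th superpixel and let $h_i \in P_k$ be the hidden representation of a pixel belonging to it. Let $c_k$ be the cluster center of $P_k$. To extract a robust feature using both pixel and superpixel information, the input $z_i$ for the second autoencoder is constructed from $h_i$ and $c_k$ following [7]. Since the dimensionality of the hidden representation is low, a single-layer autoencoder is sufficient for an effective reconstruction. Let $a_i$ be the activation value obtained at the hidden layer of the second autoencoder. Let $\hat{z}_i$ be the reconstructed input. The cost function for the second autoencoder can now readily be described by:

$$J_2 = \frac{1}{N}\sum_{i=1}^{N} \lVert z_i - \hat{z}_i \rVert_2^2 + \lambda \sum_{l} \lVert W_l \rVert_F^2 + \beta \sum_{j=1}^{M} \mathrm{KL}(\rho \,\Vert\, \hat{\rho}_j) \tag{3}$$

Here $M$ is the size of the feature vector $a_i$, the sum over $l$ runs over the layers of the second autoencoder, and the regularization parameter $\lambda$, the sparsity parameters $\beta$ and $\rho$, the average activation $\hat{\rho}_j$ and the Kullback-Leibler divergence play the same roles as in Eq. 1. Once the training of the second module is complete, we again disconnect the decoder layer. The output of the second module contains both pixel and superpixel information. Finally, we use a softmax classifier to obtain the predicted probability distribution.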
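The sketch below illustrates the two ideas in this module: pairing each pixel with information from its superpixel, and turning class scores into a probability distribution with softmax. Two points are assumptions made only for illustration: the cluster center is taken to be the mean of the member pixels' hidden representations, and pixel and center are combined by simple concatenation, whereas the text follows [7] for the actual construction. All function names are hypothetical.

```python
import math

def superpixel_centers(features, labels):
    """Mean feature vector of each superpixel (assumed definition of the center)."""
    sums, counts = {}, {}
    for f, k in zip(features, labels):
        if k not in sums:
            sums[k] = [0.0] * len(f)
            counts[k] = 0
        sums[k] = [s + v for s, v in zip(sums[k], f)]
        counts[k] += 1
    return {k: [s / counts[k] for s in sums[k]] for k in sums}

def pixel_with_superpixel(features, labels):
    """Concatenate each pixel's features with its superpixel center (assumed rule)."""
    centers = superpixel_centers(features, labels)
    return [f + centers[k] for f, k in zip(features, labels)]

def softmax(scores):
    """Numerically stable softmax over a list of class scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Three pixels with 2-dimensional hidden representations in two superpixels.
features = [[1.0, 2.0], [3.0, 4.0], [10.0, 0.0]]
labels = [0, 0, 1]
combined = pixel_with_superpixel(features, labels)
probs = softmax([2.0, 1.0, 0.1])
```

Because every pixel in a superpixel shares the same center, a pixel corrupted by speckle is pulled towards the statistics of its neighbours, which is the intuition behind using superpixel information.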

3 Experiments

With this theoretical model in place, we conduct the following experiments. We start with the details of the dataset chosen for our experiments. After that we analyze the performance of the proposed network for different band combinations. Finally, we analyze the performance for different feature decompositions. The proposed network architecture was implemented in Python 3.6, and all experiments were executed on a 1.60 GHz machine with 8 GB RAM.

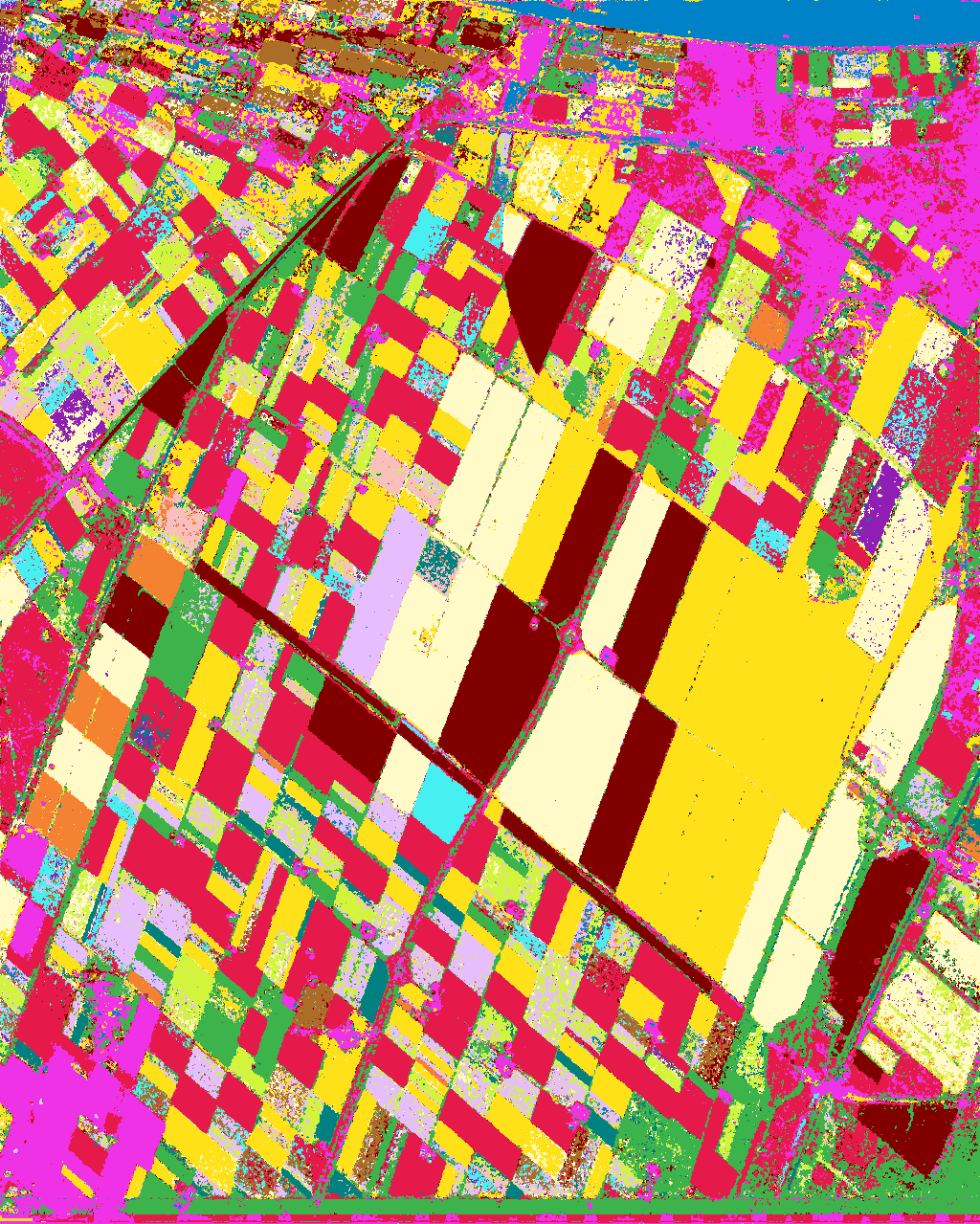

Experiments have been conducted on a dataset of Flevoland [15], an agricultural tract in the Netherlands, whose data was captured by the NASA/Jet Propulsion Laboratory on 15 June, 1991. This dataset has often been viewed as the benchmark dataset for PolSAR applications. The intensities after Pauli decomposition of the dataset have been used to form an RGB image as shown in Fig. 2(a-c). The ground truth of the data set shown in Fig. 2(d) identifies a total of 15 classes of land cover.

Table 2: Per-class accuracy and overall accuracy (OA) for each combination of the L, P and C frequency bands.

| Class/Bands | L | P | C | LP | LC | PC | LPC |
|---|---|---|---|---|---|---|---|
| Potato | 0.9912 | 0.9636 | 0.9890 | 0.9977 | 0.9961 | 0.9931 | 0.9975 |
| Maize | 0.9859 | 0.9745 | 0.9292 | 0.9963 | 0.9932 | 0.9917 | 0.9979 |
| Grass | 0.9339 | 0.8282 | 0.8585 | 0.9741 | 0.9457 | 0.9608 | 0.9773 |
| Barley | 0.9757 | 0.9758 | 0.8848 | 0.9960 | 0.9930 | 0.9857 | 0.9979 |
| Lucerne | 0.9506 | 0.8148 | 0.9573 | 0.9798 | 0.9879 | 0.9638 | 0.9869 |
| Oats | 0.4641 | 0.1771 | 0.0000 | 0.8916 | 0.6275 | 0.7466 | 0.8718 |
| Peas | 0.9642 | 0.0024 | 0.8975 | 0.9952 | 0.9827 | 0.9807 | 0.9952 |
| Beet | 0.8024 | 0.9490 | 0.1667 | 0.9608 | 0.9545 | 0.9627 | 0.9772 |
| Wheat | 0.8756 | 0.6940 | 0.4792 | 0.9375 | 0.9328 | 0.8633 | 0.9538 |
| Fruit | 0.9505 | 0.9819 | 0.7591 | 0.9931 | 0.9674 | 0.9906 | 0.9944 |
| Beans | 0.8954 | 0.4990 | 0.8784 | 0.9410 | 0.9767 | 0.9534 | 0.9850 |
| Flax | 0.9856 | 0.9409 | 0.6749 | 0.9984 | 0.9860 | 0.9718 | 0.9966 |
| Onions | 0.2353 | 0.0000 | 0.8893 | 0.4706 | 0.9273 | 0.2907 | 0.9275 |
| Rapeseed | 0.9952 | 0.9951 | 0.9982 | 0.9986 | 0.9994 | 0.9988 | 0.9994 |
| Water | 0.9979 | 0.9964 | 0.9997 | 0.9975 | 0.9983 | 0.9983 | 0.9995 |
| OA | 0.9784 | 0.9508 | 0.9211 | 0.9938 | 0.9900 | 0.9869 | 0.9959 |

We have evaluated the accuracy of each class for all possible combinations of the data acquired in the three frequency bands. Note that every overall accuracy (OA) value reported in this paper is the precision obtained. From Table 2 it can be observed that while the network with just the C band information recognizes Onions and Lucerne with high accuracy, it fails for classes such as Beet and Oats. With the C band alone, the network also fails to recognize Wheat accurately; this lack of information is compensated by the L band. In the case of the P band, the network fails to identify Onions and Peas but correctly identifies the majority of Rapeseed and Fruit. It can also be observed that the L band performs better individually than the C or the P band.
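The per-class and overall figures in Table 2 can diverge sharply: with the C band alone, Oats scores 0.0000 while the overall accuracy is still 0.9211. The sketch below shows how this happens when classes are imbalanced. It uses the common definitions (fraction of a class's ground-truth pixels labelled correctly, and fraction of all labelled pixels labelled correctly), which are an assumption here, and the labels are toy data rather than values from the dataset.

```python
from collections import Counter

def class_and_overall_accuracy(y_true, y_pred):
    """Return ({class: accuracy}, overall accuracy) for two label sequences."""
    total = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    per_class = {c: correct[c] / total[c] for c in total}
    overall = sum(correct.values()) / len(y_true)
    return per_class, overall

# Toy labels: a large class that is always right and a small class that is always wrong.
y_true = ["Water"] * 9 + ["Oats"]
y_pred = ["Water"] * 10
per_class, overall = class_and_overall_accuracy(y_true, y_pred)
```

Here the small class is missed entirely, yet the overall accuracy remains 0.9, which is why the per-class rows are needed alongside OA when comparing band combinations.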

A total of six PolSAR decomposition methods have been applied to the PolSAR data, and the features of each have been given as input to the proposed network. The significance of each decomposition technique is evident from Table 3. It can be seen that the Krogager and Freeman decompositions fail to provide enough information to recognize Beet and Onions (accuracy 0.0000 in all four cases). On the other hand, the Cloude decomposition provides sufficient information for Beet (0.9244) and the Huynen decomposition for Onions (0.8720).

Table 3: Per-class accuracy and overall accuracy (OA) when the features of a single decomposition are used as input.

| Class | Yamaguchi | Coherency | Krogager | Freeman | Huynen | Cloude |
|---|---|---|---|---|---|---|
| Potato | 0.9820 | 0.9860 | 0.9879 | 0.9721 | 0.9933 | 0.9941 |
| Maize | 0.9672 | 0.9696 | 0.9880 | 0.9465 | 0.9904 | 0.9884 |
| Grass | 0.9243 | 0.8898 | 0.8490 | 0.6757 | 0.9256 | 0.9555 |
| Barley | 0.9653 | 0.9516 | 0.9814 | 0.9554 | 0.9920 | 0.9846 |
| Lucerne | 0.6690 | 0.8568 | 0.4815 | 0.4820 | 0.9695 | 0.9522 |
| Oats | 0.0626 | 0.0031 | 0.2168 | 0.0000 | 0.3420 | 0.7252 |
| Peas | 0.9779 | 0.8686 | 0.7440 | 0.5245 | 0.9546 | 0.9904 |
| Beet | 0.1658 | 0.6794 | 0.0000 | 0.0000 | 0.8834 | 0.9244 |
| Wheat | 0.1460 | 0.7210 | 0.6841 | 0.0000 | 0.8916 | 0.9428 |
| Fruit | 0.9618 | 0.9661 | 0.9827 | 0.9027 | 0.9790 | 0.9874 |
| Beans | 0.7831 | 0.9265 | 0.8758 | 0.0160 | 0.9767 | 0.9167 |
| Flax | 0.9346 | 0.9636 | 0.7162 | 0.7470 | 0.9790 | 0.9688 |
| Onions | 0.0000 | 0.2561 | 0.0000 | 0.0000 | 0.8720 | 0.1246 |
| Rapeseed | 0.9921 | 0.9949 | 0.9976 | 0.9786 | 0.9976 | 0.9986 |
| Water | 0.9889 | 0.9957 | 0.9958 | 0.9926 | 0.9981 | 0.9968 |
| OA | 0.9509 | 0.9609 | 0.9562 | 0.9105 | 0.9859 | 0.9856 |

The proposed method for classification of multifrequency PolSAR images is also compared with other methods available in the literature. The comparison of overall accuracies is reported in Table 4. ANN [2] and OWN [3] used a small subset of the Flevoland dataset containing 7 classes, whereas Stein-SRC [16] used ground truth with 14 classes. For parity in comparison with our results, we have calculated the overall accuracy of the proposed method using ground truth of 7, 14 and 15 classes. We observe from Table 4 that the proposed method outperforms all three methods [2, 3, 16].

4 Conclusion

In this paper a classification network for multifrequency PolSAR data is proposed. The proposed network involves three modules. The first module reduces the dimensionality of the input feature vector, and its output is used to generate superpixels. The second module constructs a robust feature vector using each pixel and its corresponding superpixel information. Finally, the last module performs classification using a softmax classifier. It is observed that combining information from multiple frequency bands improves overall classification accuracy. We validated the proposed network on the Flevoland dataset, where it achieved 99.59% overall classification accuracy. Experimental results show that the proposed network outperforms other reported methods available in the literature.

References

- [1] K. S. Chen, W. P. Huang, D. H. Tsay, and F. Amar, “Classification of multifrequency polarimetric SAR imagery using a dynamic learning neural network,” IEEE Transactions on Geoscience and Remote Sensing, vol. 34, pp. 814–820, May 1996.

- [2] S. De, D. Ratha, D. Ratha, A. Bhattacharya, and S. Chaudhuri, “Tensorization of multifrequency PolSAR data for classification using an autoencoder network,” IEEE Geoscience and Remote Sensing Letters, vol. 15, pp. 542–546, April 2018.

- [3] T. Gadhiya and A. K. Roy, “Optimized Wishart network for an efficient classification of multifrequency PolSAR data,” IEEE Geoscience and Remote Sensing Letters, vol. 15, pp. 1720–1724, Nov 2018.

- [4] B. Hou, H. Kou, and L. Jiao, “Classification of polarimetric SAR images using multilayer autoencoders and superpixels,” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, vol. 9, pp. 3072–3081, July 2016.

- [5] Y. Guo, L. Jiao, S. Wang, S. Wang, F. Liu, and W. Hua, “Fuzzy superpixels for polarimetric SAR images classification,” IEEE Transactions on Fuzzy Systems, vol. 26, pp. 2846–2860, Oct 2018.

- [6] B. Hou, C. Yang, B. Ren, and L. Jiao, “Decomposition-feature-iterative-clustering-based superpixel segmentation for PolSAR image classification,” IEEE Geoscience and Remote Sensing Letters, vol. 15, pp. 1239–1243, Aug 2018.

- [7] Y. Hu, J. Fan, and J. Wang, “Classification of PolSAR images based on adaptive nonlocal stacked sparse autoencoder,” IEEE Geoscience and Remote Sensing Letters, vol. 15, pp. 1050–1054, July 2018.

- [8] Y. Zhou, H. Wang, F. Xu, and Y. Jin, “Polarimetric SAR image classification using deep convolutional neural networks,” IEEE Geoscience and Remote Sensing Letters, vol. 13, pp. 1935–1939, Dec 2016.

- [9] A. Freeman and S. L. Durden, “A three-component scattering model for polarimetric SAR data,” IEEE Transactions on Geoscience and Remote Sensing, vol. 36, pp. 963–973, May 1998.

- [10] E. Krogager, “New decomposition of the radar target scattering matrix,” Electronics Letters, vol. 26, pp. 1525–1527, Aug 1990.

- [11] Y. Yamaguchi, T. Moriyama, M. Ishido, and H. Yamada, “Four-component scattering model for polarimetric SAR image decomposition,” IEEE Transactions on Geoscience and Remote Sensing, vol. 43, pp. 1699–1706, Aug 2005.

- [12] J. R. Huynen, “Phenomenological theory of radar targets,” Electromagnetic Scattering, 1970.

- [13] S. R. Cloude and E. Pottier, “An entropy based classification scheme for land applications of polarimetric SAR,” IEEE Transactions on Geoscience and Remote Sensing, vol. 35, pp. 68–78, Jan 1997.

- [14] R. Achanta, A. Shaji, K. Smith, A. Lucchi, P. Fua, and S. Süsstrunk, “SLIC superpixels compared to state-of-the-art superpixel methods,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 34, pp. 2274–2282, Nov 2012.

- [15] Dataset: AIRSAR, NASA 1991. Retrieved from ASF DAAC on 7 December, 2018.

- [16] F. Yang, W. Gao, B. Xu, and J. Yang, “Multi-frequency polarimetric SAR classification based on Riemannian manifold and simultaneous sparse representation,” Remote Sensing, vol. 7, no. 7, pp. 8469–8488, 2015.