Stability of multiplexed NCS based on an epsilon-greedy algorithm for communication selection

Abstract

In this letter, we study a Networked Control System (NCS) with multiplexed communication and Bernoulli packet drops. Multiplexed communication refers to the constraint that transmission of a control signal and an observation signal cannot occur simultaneously due to the limited bandwidth. First, we propose an -greedy algorithm for the selection of the communication sequence that also ensures Mean Square Stability (MSS). We formulate the system as a Markovian Jump Linear System (MJLS) and provide the necessary conditions for MSS in terms of Linear Matrix Inequalities (LMIs) that need to be satisfied for three corner cases. We prove that the system is MSS for any convex combination of these three corner cases. Furthermore, we propose to use the -greedy algorithm with the that satisfies MSS conditions for training a Deep Network (DQN). The DQN is used to obtain an optimal communication sequence that minimizes a quadratic cost. We validate our approach with a numerical example that shows the efficacy of our method in comparison to the round-robin and a random scheme.

Networked control system, Markovian Jump Linear System, Deep Reinforcement Learning

1 Introduction

A Networked Control System (NCS) is a system consisting of a plant, a controller, and a communication network. NCS plays an important role in various industries such as chemical plants, power systems, and warehouse management [1, 2]. In an NCS, the communication between the plant and the controller, as well as between the controller and the actuator, often occurs over a wireless network. Various challenges exist in such systems, namely, limited resources (bandwidth), delays, packet drops, or adversarial attacks [3, 4, 5, 6]. These uncertainties can affect the plant’s performance, making it important to study these scenarios. Our focus is on an NCS with limited bandwidth, formulated as a multiplexing constraint on transmitting control and observation signals and considering random packet drops. We aim to find a switching policy for selecting a communication direction that ensures Mean Square Stability (MSS) and then find the optimal communication sequence that minimizes a quadratic performance measure.

In an early piece of work, Athans et al. proposed an uncertainty threshold principle [7]. This principle states that optimum long-range control of a dynamical system with uncertainty parameters is feasible if and only if the uncertainty does not exceed a certain threshold. This forms the basis of subsequent work which gives stability conditions in different scenarios. In [8], linear systems controlled over a network face packet drop uncertainties, and are modeled as Bernoulli random variables, with independent drops in both communication channels. The necessary conditions for MSS and optimal control solutions using dynamic programming are then provided. Schenato et al. generalize this work by considering noisy measurements and giving stronger conditions for the existence of the solution [9]. They also show that the separation principle holds for the TCP protocol. Other studies focus on the design of a Kalman filter for wireless networks [10, 11] and stability conditions for systems with packet drops [12]. An alternate approach for random packet drops involves sending multiple copies [13], and event-triggered policies for nonlinear systems with packet drops are developed in [14].

While stability and optimality problems are addressed in the literature, the stability and optimal scheduling problems with multiplexing in control and observation have not been completely investigated. For instance, [9] discusses multiplexing and packet drops but not optimal network selection, while [15] considers a joint strategy for optimal selection and control of an NCS, but only for sensor signals. Major directions of research incorporate bandwidth constraints as i) multiplexing in multiple sensor signals, e.g., [11], ii) multiplexing in sensor and control signals, e.g., [9]. In addition, other communication uncertainties such as packet drops and delays are addressed [16]. The main objective of these attempts is to find an optimal control strategy or to find an optimal policy for the selection of communication channels, or both see, e.g., [17]. Leong et al. address the boundedness of error covariance objective in a multiplexed sensor information system with packet drops [18]. A stability condition is established based on the packet drop probability, and then an optimal sequence of communication is found by training a DQN with a - greedy algorithm.

In this letter, our contributions are as follows:

-

i)

We propose a modified -greedy algorithm for the selection of the direction of communication (transmit or receive.)

-

ii)

We establish the necessary conditions for the MSS of an NCS with multiplexed communication and packet drops.

-

iii)

We provide an optimal switching policy using Deep Learning that utilizes the proposed -greedy algorithm and ensures MSS, not just after training the NN, but also in the training phase.

The rest of the paper is organized as follows. Section 2 introduces the problem setup and communication constraints. In Section 3, we propose a switching strategy and formulate the problem as a Markovian Jump Linear System (MJLS). We provide necessary conditions for MSS in Section 4. Next, we formulate the optimal communication selection problem and provide a solution based on deep reinforcement learning in Section 5. Finally in Section 6, we validate our results on a numerical example.

2 Problem Setup

2.1 Plant and Controller Model

Consider a closed-loop discrete-time linear system

| (1) | ||||

for all , where is the state, is the control input and is the output at instant. We make the following assumptions regarding the original closed-loop system.

Assumption 1.

The pair is controllable and the pair is observable.

Assumption 2.

2.2 Networked System Model

In this letter, we are interested in an application where the plant and the controller are remotely located. The communication between the plant and the controller occurs over a wireless communication network. The networked system is illustrated in Fig. 1. The networked system dynamics can be written as

| (3) | ||||

where denotes the networked version of the control signal. We proceed by emulation of the controller (2) and use the controller as

| (4) |

where denotes the estimates of the state at the controller end. A more detailed explanation of these quantities is presented later in this section.

Motivated by real-world communication constraints in the form of bandwidth limitations, we consider a communication constraint as described below. At any time instant, the network scheduler has three choices:

-

i)

transmit the control input from the controller to the plant or,

-

ii)

transmit the measured signal from the plant to the predictor or,

-

iii)

not communicate at all.

These three choices are encapsulated in the form of a switch variable that takes values in a discrete set where,

| (5) |

We also consider lossy communication in the sense of a packet drop scenario. Consider to denote the packet drop event, modeled as independent Bernoulli random variables with probabilities:

| (6) |

where indicates a successful packet transmission and indicates failure.

Based on the switching and the packet drop assumptions, the transmitted control information through the network is written as

| (7) |

The coarse estimate of the state at the controlled end is

| (8) |

Define the concatenated state as

| (9) |

with and respectively. The overall model of the system is written as

| (10) |

with

| (11) |

| (12) |

and

| (13) |

3 Switching Strategy

In this section, we first present the -greedy strategy for switching that ensures MSS. Next, we present the generalized switching probabilities using the -greedy algorithm. Lastly, we formulate the system as a Markov Jump Linear System (MJLS), discuss modes of operation in the MJLS, and state our objective.

3.1 - Greedy Algorithm for Switching

We employ the -greedy switching strategy for the decision-making process [19]. The algorithm consists of two parts: exploration and exploitation. Exploration addresses finding new possible solutions, whereas exploitation addresses utilizing the already known optimal solution. The variable acts as a parameter that weighs the two. Let the per-stage cost be defined by

| (14) |

The switching strategy is defined mathematically as follows:

| (15) |

where and is a discount factor that discounts the cost at future time stages.

When is less than the switching variable is chosen uniformly randomly from . This random selection represents exploration by allowing the system to consider other strategies that might not be optimal for that instance but could provide a better solution over a longer horizon. When , the switch variable is determined by the exploitation part, i.e., minimizing the expected cost function. Where the cost function has the following terms:

-

i)

, represents a penalty on the state, where is the state at time instant , and .

-

ii)

represents the penalty on the control effort, where is the control at time instant , and .

-

iii)

introduces a penalty for transmission of a packet, with being a weighing parameter that controls the trade-off between state and control cost versus the transmission cost.

Thus the -greedy strategy creates a balance between exploration and exploitation, ensuring that new strategies are explored while utilizing the accumulated information.

3.2 Switching Probabilities with - Greedy Algorithm

In this subsection, we discuss in detail the generalized switching probability under the - greedy algorithm and analyze the effect of the -greedy switching strategy on the MSS of the system. The switching probability distribution function is given by

| (16) |

with some switching algorithm . Given the switching strategy defined in (15), the probabilities of switching states are influenced by the choice of . The choice of , in turn, decides the balance between exploration and exploitation. With the switching strategy (15) the switching probability distribution (denoted as ) can be written as

| (17) | ||||

Here,

-

i)

the first term, , represents the probability distribution in the exploration phase because of the uniform switching between and . With probability , the switch positions are chosen randomly, with an equal probability () of switching to or and zero probability of remaining in position , and

-

ii)

the second term, , represents the probability distribution in exploitation phase. With probability , the switching follows a policy based on the probabilities and , where:

-

•

is the probability of switching to position 1,

-

•

is the probability of switching to position ,

-

•

is the probability of remaining in position .

-

•

Here, and to ensure that all probabilities sum to 1.

Remark 1.

The choice of impacts the MSS of the system which is one of the main interests of this article.

3.3 Formulation as a Markovian Jump Linear System

In this section, we elaborate on the framework of the Markovian Jump Linear System (MJLS) that relates to the system described in (10). An MJLS is a linear system that goes through random transitions between a finite number of modes, each governed by linear dynamics. The random transitions are governed by Markovian probabilities associated with switching from one mode to another mode. In the problem, the randomness takes place at two levels: the first is at the switching under - greedy policy and the second is the random packet drop. With this backdrop, we introduce modes of operation under varying circumstances.

System Modes: The system can operate in one of several modes at any instance, which is determined by the switch position and the status of packet transmission. These modes represent different scenarios of switching and packet drop. We define the modes of the Markovian switching system as follows:

| (18) | ||||

where,

-

•

the first entry in each tuple indicates the position of the switch, which can be 1,-1, or 0;

-

•

the second entry denotes whether the packet transmission was successful () or failed (), and

-

•

the third entry corresponds to the system matrix that governs the dynamics in that particular mode.

For example, represents the case where the switch is in position 1, the packet transmission is successful, and the system dynamics are described by the matrix . On the other hand, represents the case where the switch is in position 1 but the packet transmission has failed, thus the system dynamics revert to .

The mode transition probability, , represents the likelihood of the system switching from mode to mode in the next time step.

Definition 1 (Mode Transition Probability).

Define the mode transition probability of switching from to as,

with where , and denotes the total number of modes.

Definition 2 (Mean Square Stability).

The system (10) is mean square stable if and only if for some , and for every ,

| (19) |

4 Mean Square Stability of MJLS

In this section, we propose the methodology used to address the problem, which involves identifying corner cases relevant to switching probabilities. The goal is to determine a value , given a fixed , which ensures that certain Linear Matrix Inequalities (LMIs) are satisfied for each identified corner case [20]. Note that is not a bound but a value that satisfies the LMIs. Furthermore, we demonstrate that any convex combination of these corner cases also satisfies the MSS conditions derived from the LMIs. The convex combination relates to different switching scenarios in the exploitation phase, implying that the system is MSS irrespective of any switching policy implemented during the exploitation phase.

4.1 Corner Cases

We study this through specific corner cases:

-

C1.

and : This case represents the switch being in position throughout the entire exploitation phase, indicating that only observations are transmitted.

-

C2.

and : This case represents the switch being in position throughout the entire exploitation phase, indicating that only control signals are transmitted.

-

C3.

and : This case depicts the switch remaining in position for the duration of the exploitation phase, signifying that no transmissions occur.

The probability of switching in each of the corner cases is tabulated in TABLE 1.

| Case | Switch probabilities |

|---|---|

| General | |

| C1 | |

| C2 | |

| C3 |

4.2 Mode Transition Probabilities for Corner Cases

Let denote the mode transition probability matrix associated with the corner case , where . Here, the superscript is the variable representing each corner case. Each denotes the probability of transitioning from each node to the mode in the corner case , i.e., for all . The mode transition probabilities, for each case, are described in Table 2 and depicted in Fig. 2. With the given packet transmission success probability , we provide the necessary conditions for the MSS of the system using the LMIs given in [20].

| C1 | |||||

| C2 | |||||

| C3 | |||||

| General |

Theorem 1.

Proof.

First, the MSS conditions given in [20, Corollary 3.26] are tailored to the specific problem to obtain the LMI (20). Suppose there exists an and such that LMI (20) hold, then the origin of the system is stable for these corner cases. To prove the origin of the system (10) is MSS under (15) with , we prove that the general case can be written as a convex combination of the three corner cases and then prove the LMI holds for the general case. Let and . Taking the convex combination of mode transition probabilities for all corner cases (see TABLE 2),

| (21) | ||||

Comparing (21) with the mode transition probability of the general case in TABLE 2, we have and .

To prove that (10) is MSS for any and , let be the general mode transition probability matrix. Then

for all .

The inequality (a) is from (20) and the fact that all quantities on both sides of (20) are non-negative. Hence, if satisfies LMI (20) for three corner cases then, (20) is satisfied for any general switching strategy (15). ∎

To determine the value of that satisfies the LMIs required for MSS, we utilize a method involving Semi-Definite Programming (SDP) solvers and the bisection method. Based on Theorem 1, we set up the necessary LMIs involving symmetric positive definite matrix . These LMIs establish the conditions for MSS that the system must satisfy. We employ an SDP solver to numerically solve the formulated LMIs. Given the dependence of matrix on , we apply the bisection method to determine the value of that satisfies all LMIs. Starting with an initial range for , the bisection method iteratively narrows down to by checking the existence of solutions of LMIs at midpoints within the range.

5 Optimal Switching Strategy

We intend to find an optimal switching policy that satisfies the necessary conditions for MSS established earlier and minimizes the average cost involving penalties on state, control, and communication. That is, to find

| (22) |

which can be written as a discounted cost problem

| (23) |

using [21, Lemma 5.3.1]. This transformation allows the application of reinforcement learning techniques for solutions.

5.1 -Learning

The sequential decision-making problem is treated as a Markov Decision Process (MDP). The MDP has three components: i) state, ii) action, and iii) reward. The state space consists of and the action space is . Define per-stage reward as

| (24) |

The system transitions from state to based on action and receives reward . To evaluate the performance of a given policy, we use the action value function, denoted as . For a policy , which maps states to probabilities of selecting actions, the action value function is defined as:

| (25) |

The optimal value (denoted as ) satisfies Bellman’s principle of optimality:

| (26) |

The optimal policy can be found iteratively in the case of discrete sets of states and actions by the value iteration method [19]. In this problem, the state space is continuous, and this method cannot be directly applied. Hence, we use an advanced deep reinforcement learning technique using a neural network.

5.2 Deep -Learning

We approach the solution of the problem (23) using deep reinforcement learning [22]. A Deep Q Network (DQN) estimates the -values for each state and action. Thereby approximates the function , where the function is parameterized by . The state acts as the input to the DQN, and the output corresponding to each action is the approximated -value. The loss is derived using Bellman’s principle as

| (27) |

Here, two forward passes are made through the network to get the -values for the current and next states. To avoid this, Mnih et al. introduced the replay memory framework and another target network replicating the actual network but updated only after a few iterations [22]. Algorithm 1 presents the deep reinforcement learning technique used.

6 Numerical Experiments

In this section, we validate our approach on system (10) with the following data:

where the controller gain stabilizes as per Assumption 2.

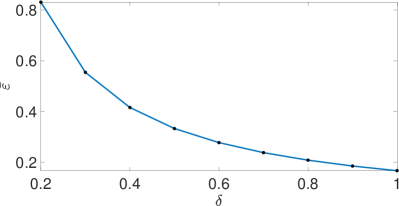

First, we fix the packet transmission success probability , which is typically determined by the communication system. For a given , we determine the corresponding value of () that ensures MSS for all corner cases. The relationship between the packet transmission success probability and the required for ensuring MSS is depicted in Fig. 3.

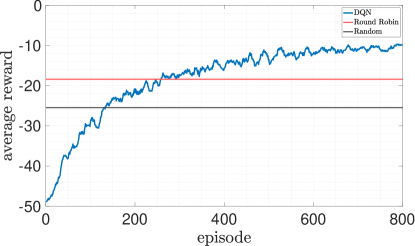

Next, for a fixed packet transmission success probability , we determine the corresponding value of () that ensures the MSS of the system. We then illustrate the associated corner cases and the convex region formed by these corner cases. We implement the Deep Q-Network according to Algorithm 1 with the following specifications. The initial state conditions are randomly sampled from the uniform distribution within the range. A replay memory size of is utilized to store experiences. The policy network architecture consists of two hidden layers, with and neurons, respectively. Since there are three possible actions, the output layer consists of three neurons. Mini-batches of size are used for training. We fix and the exploration parameter is set to . The learning rate is fixed at . The network undergoes training for episodes. The moving average of reward in the training phase shows the DQN’s learning over several episodes and then the learning settles (see Fig. 4).

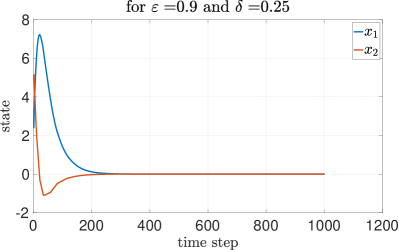

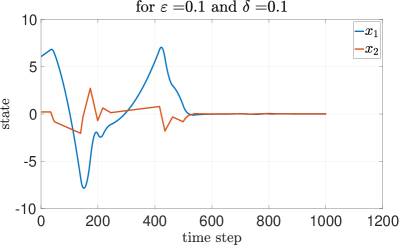

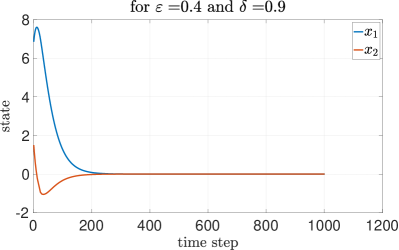

To assess the efficacy of our approach, we compare our results with two other methods: round-robin and random selection. Each method undergoes testing under identical initial conditions and parameters. The DQN method performs better than the other two methods. The round-robin method yields an average reward of , while the random method results in an average reward of . These comparisons underscore the effectiveness of the DQN after about episodes of training. In this subsection, we analyze the MSS property with the proposed - greedy algorithm, under different packet transmission success probability and corresponding found using Theorem 1. Figure 5 represents the case with low transmission success probability that requires a high value of to be MSS. Conversely, with low transmission success probability and low value of , we can observe in Figure 6 that the system is not MSS. As depicted in Figure 7, with high transmission success probability, a low value of is sufficient for achieving MSS.

7 Conclusion

In this letter, we have proposed a modified -greedy algorithm for selecting communication direction in a multiplexed NCS. We have established the necessary conditions for the mean square stability (MSS) of an NCS with multiplexed communication and packet drops. We provided an optimal switching policy using Deep Q-Learning that utilizes the proposed -greedy algorithm and ensures MSS for training the neural network.

References

- [1] A. Bemporad, M. Heemels, M. Johansson, et al., Networked control systems, vol. 406. Springer, 2010.

- [2] X. Ge, F. Yang, and Q.-L. Han, “Distributed networked control systems: A brief overview,” Information Sciences, vol. 380, pp. 117–131, 2017.

- [3] N. Elia, “When bode meets shannon: Control-oriented feedback communication schemes,” IEEE transactions on Automatic Control, vol. 49, no. 9, pp. 1477–1488, 2004.

- [4] W. M. H. Heemels, A. R. Teel, N. Van de Wouw, and D. Nešić, “Networked control systems with communication constraints: Tradeoffs between transmission intervals, delays and performance,” IEEE Transactions on Automatic control, vol. 55, no. 8, pp. 1781–1796, 2010.

- [5] V. Dolk and M. Heemels, “Event-triggered control systems under packet losses,” Automatica, vol. 80, pp. 143–155, 2017.

- [6] H. Sandberg, V. Gupta, and K. H. Johansson, “Secure networked control systems,” Annual Review of Control, Robotics, and Autonomous Systems, vol. 5, pp. 445–464, 2022.

- [7] M. Athans, R. Ku, and S. Gershwin, “The uncertainty threshold principle: Some fundamental limitations of optimal decision making under dynamic uncertainty,” IEEE Transactions on Automatic Control, vol. 22, no. 3, pp. 491–495, 1977.

- [8] O. C. Imer, S. Yuksel, and T. Başar, “Optimal control of dynamical systems over unreliable communication links,” IFAC Proceedings Volumes, vol. 37, no. 13, pp. 991–996, 2004.

- [9] L. Schenato, B. Sinopoli, M. Franceschetti, K. Poolla, and S. S. Sastry, “Foundations of control and estimation over lossy networks,” Proceedings of the IEEE, vol. 95, no. 1, pp. 163–187, 2007.

- [10] X. Liu and A. Goldsmith, “Kalman filtering with partial observation losses,” in 2004 43rd IEEE Conference on Decision and Control (CDC)(IEEE Cat. No. 04CH37601), vol. 4, pp. 4180–4186, IEEE, 2004.

- [11] B. Sinopoli, L. Schenato, M. Franceschetti, K. Poolla, M. I. Jordan, and S. S. Sastry, “Kalman filtering with intermittent observations,” IEEE transactions on Automatic Control, vol. 49, no. 9, pp. 1453–1464, 2004.

- [12] K. You, M. Fu, and L. Xie, “Mean square stability for kalman filtering with markovian packet losses,” Automatica, vol. 47, no. 12, pp. 2647–2657, 2011.

- [13] A. R. Mesquita, J. P. Hespanha, and G. N. Nair, “Redundant data transmission in control/estimation over lossy networks,” Automatica, vol. 48, no. 8, pp. 1612–1620, 2012.

- [14] V. S. Varma, R. Postoyan, D. E. Quevedo, and I.-C. Morărescu, “Event-triggered transmission policies for nonlinear control systems over erasure channels,” IEEE Control Systems Letters, vol. 7, pp. 2113–2118, 2023.

- [15] D. Maity, M. H. Mamduhi, S. Hirche, and K. H. Johansson, “Optimal lqg control of networked systems under traffic-correlated delay and dropout,” IEEE Control Systems Letters, vol. 6, pp. 1280–1285, 2021.

- [16] L. Zhang, H. Gao, and O. Kaynak, “Network-induced constraints in networked control systems—a survey,” IEEE transactions on industrial informatics, vol. 9, no. 1, pp. 403–416, 2012.

- [17] A. Molin and S. Hirche, “On lqg joint optimal scheduling and control under communication constraints,” in Proceedings of the 48h IEEE Conference on Decision and Control (CDC) held jointly with 2009 28th Chinese Control Conference, pp. 5832–5838, IEEE, 2009.

- [18] A. S. Leong, A. Ramaswamy, D. E. Quevedo, H. Karl, and L. Shi, “Deep reinforcement learning for wireless sensor scheduling in cyber–physical systems,” Automatica, vol. 113, p. 108759, 2020.

- [19] R. S. Sutton and A. G. Barto, Reinforcement learning: An introduction. MIT press, 2018.

- [20] O. L. V. Costa, M. D. Fragoso, and R. P. Marques, Discrete-time Markov jump linear systems. Springer Science & Business Media, 2005.

- [21] O. Hernández-Lerma and J. B. Lasserre, Discrete-time Markov control processes: basic optimality criteria, vol. 30. Springer Science & Business Media, 2012.

- [22] V. Mnih, K. Kavukcuoglu, D. Silver, A. A. Rusu, J. Veness, M. G. Bellemare, A. Graves, M. Riedmiller, A. K. Fidjeland, G. Ostrovski, et al., “Human-level control through deep reinforcement learning,” nature, vol. 518, no. 7540, pp. 529–533, 2015.