Speed limit, dissipation bound and dissipation-time trade-off in thermal relaxation processes

Abstract

We investigate bounds on speed and non-adiabatic entropy production, and the trade-off relation between them, for classical stochastic processes with time-independent transition rates. Our results show that the time required to evolve from an initial to a desired target state is bounded from below by the information-theoretic $\infty$-Rényi divergence between these states, divided by the total rate. Furthermore, we conjecture and provide extensive numerical evidence for an information-theoretical bound on the non-adiabatic entropy production and a novel dissipation-time trade-off relation that outperforms previous bounds in some cases.

Introduction.— Optimal control of a system’s evolution from an initial to a desired target state is a crucial task [1, 2, 3, 4], with close ties to the optimal transport problem [5, 6]. The definition of “optimal” varies depending on the specific cost function employed, which may include time, energy consumption, dissipation, error, robustness, or trade-offs between them. In addition to optimal control protocols, non-model-specific fundamental bounds on the cost functions are of significant interest [7]. For instance, in quantum systems, rapid state transformations are usually desirable, thereby motivating extensive investigations of the so-called “quantum speed limit” [8, 9, 10]. For a quantum system with a time-independent Hamiltonian , the time it needs to evolve from the initial state to the final state is bounded from below by ()

| (1) |

where is the Bures angle that quantifies the distance between the endpoints, and and are variance and average of the Hamiltonian, respectively [11, 12].

Recent developments have extended the concept of speed limits to classical stochastic processes, where entropy production plays a crucial role [13, 14, 15, 16, 17] [18]. For Markovian stochastic processes with given initial and final probability distributions $p(0)$ and $p(\tau)$, speed limits can be expressed as a trade-off between entropy production and time duration given by ()

| (2) |

where is a monotonically decreasing function of . The subscript denotes the dependence of the function on the endpoints, and quantifies the system’s timescale. This inequality encompasses the following three important cases in the literature.

In generic processes entropy production can be split into adiabatic and non-adiabatic (Hatano-Sasa [19]) contributions [20]. The former persists even when the probability distribution equals the instantaneous steady state, while the latter arises from deviations from this state. It was reported that non-adiabatic entropy production is lower-bounded by (referred to as activity bound) [15, 16]

| (3) |

where represents the total-variation distance between endpoints and denotes time-averaged dynamical activity [21].

In the quasi-static limit with , the time-averaged dynamical activity becomes time-independent, resulting in an inequality . This observation bears resemblance to the result for slow but finite-time Markovian stochastic processes [22, 23, 24, 25, 26], where the entropy production bound is given by . Here denotes the thermodynamic length [27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44], which already encodes the information about the time scale derived from the transition rates.

For relaxation processes with a time-independent transition rate matrix satisfying the detailed balance condition, the general dissipation-time trade-off relation Eq. (2) is superseded by a simpler $\tau$-independent bound [45]

$$\Sigma \ge D\bigl(p(0)\,\|\,p(\tau)\bigr), \tag{4}$$

where $\Sigma$ is the entropy production and $D(p\|q) = \sum_i p_i \ln (p_i/q_i)$ is the $1$-Rényi divergence [46, 47], also known as the Kullback-Leibler divergence or relative entropy [48]. Since the bound is independent of the time duration, the trade-off between dissipation and evolution time is, in fact, concealed. For this simple but important class of processes, i.e., relaxation processes with time-independent transition rates where the detailed balance condition is not necessarily met [49], three natural questions arise. First, given the similarity between a quantum process with a time-independent Hamiltonian and a thermodynamic process with a time-independent transition matrix, one may wonder whether there exists a speed limit analogous to Eq. (1), where the bound is given by the distance between the endpoints divided by a timescale constant [50]. Second, it is tempting to ask whether Eq. (4) also holds for the non-adiabatic entropy production. Third, one may inquire about the trade-off relation in the form of Eq. (2), if any, between dissipation and time in these relaxation processes. Specifically, it is desirable to obtain a suitable timescale constant.

In this Letter, we answer these three questions and show that the relevant timescale constant is the total rate, i.e., the sum of all positive transition rates. We also demonstrate with examples that our new dissipation-time trade-off relation outperforms previous bounds.

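Speed limit.— Consider a Markov jump process with a finite number $N$ of states. The dynamics of the probability distribution is described by a Pauli master equation [49]

$$\frac{d p_i(t)}{dt} = \sum_{j} W_{ij}\, p_j(t), \tag{5}$$

where $p_i(t)$ is the probability of state $i$ at time $t$, $W_{ij}$ ($i \neq j$) is the time-independent transition rate from state $j$ to $i$, and $W_{jj} = -\sum_{i \neq j} W_{ij}$. For later use, we define the total rate as

$$\mathcal{W} := \sum_{i \neq j} W_{ij} = -\operatorname{tr} W. \tag{6}$$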
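As a small illustration of the total rate, the sketch below builds a rate matrix whose columns sum to zero and checks that the sum of all off-diagonal (positive) rates equals minus the trace. The helper names `make_generator` and `total_rate`, the convention that entry `[i][j]` is the rate from state `j` to state `i`, and the numerical rates are assumptions made for this example only.

```python
def make_generator(rates):
    """Build a rate matrix from off-diagonal rates.

    rates[i][j] (i != j) is taken to be the rate of jumping from j to i
    (an assumed convention); each diagonal entry is set so that every
    column sums to zero, which conserves total probability.
    """
    n = len(rates)
    W = [[0.0] * n for _ in range(n)]
    for j in range(n):
        for i in range(n):
            if i != j:
                if rates[i][j] < 0:
                    raise ValueError("transition rates must be non-negative")
                W[i][j] = float(rates[i][j])
        W[j][j] = -sum(W[i][j] for i in range(n) if i != j)
    return W


def total_rate(W):
    """Sum of all off-diagonal rates, which equals minus the trace of W."""
    n = len(W)
    return sum(W[i][j] for i in range(n) for j in range(n) if i != j)


# Hypothetical three-state example (arbitrary units).
rates = [[0.0, 1.0, 0.5],
         [2.0, 0.0, 0.3],
         [0.1, 0.4, 0.0]]
W = make_generator(rates)
trace = sum(W[i][i] for i in range(3))
print(total_rate(W), -trace)
```

Because only the trace is needed, the total rate can be read off from the diagonal of an inferred rate matrix without inspecting individual transitions.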

In experiments, the transition rate matrix and its trace can be inferred from trajectory data [51]. Provided that the Markov chain is ergodic, a steady state distribution $p^{\mathrm{ss}}$ is expected, satisfying $\sum_j W_{ij}\, p^{\mathrm{ss}}_j = 0$. Steady states can be divided into two categories depending on whether they meet the detailed balance condition, $W_{ij}\, p^{\mathrm{ss}}_j = W_{ji}\, p^{\mathrm{ss}}_i$, which is not assumed throughout this Letter. The total entropy production $\Sigma$ consists of an adiabatic contribution and a non-adiabatic one, both of which are non-negative. Given an initial state $p(0)$ and a target state $p(\tau)$, the non-adiabatic entropy production is given by [52, 15]

$$\Sigma_{\mathrm{na}} = D\bigl(p(0)\,\|\,p^{\mathrm{ss}}\bigr) - D\bigl(p(\tau)\,\|\,p^{\mathrm{ss}}\bigr), \tag{7}$$

where $D(p\|q) = \sum_i p_i \ln (p_i/q_i)$ is the $1$-Rényi divergence between the two probability distributions [46, 47, 48]. For arbitrary dynamics, $\Sigma \ge \Sigma_{\mathrm{na}}$, and the equality is attained when the detailed balance condition is satisfied. Notably, the detailed balance condition is always fulfilled in two-state systems.
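The following sketch evaluates the non-adiabatic entropy production for one three-state example, taking it to be the difference of Kullback-Leibler divergences to the steady state at the two endpoints, as in the Supplemental code. The rate matrix, initial distribution and duration are hypothetical, and the helpers `propagate`, `steady_state` and `kl` are simple stand-ins written for this illustration (a truncated Taylor series and a power iteration), not the authors' implementation.

```python
import math


def propagate(W, p0, tau, terms=80):
    """Return exp(W * tau) applied to p0 via a truncated Taylor series.

    Adequate for the small values of W * tau used here.
    """
    n = len(p0)
    result = list(p0)
    term = list(p0)
    for k in range(1, terms + 1):
        term = [tau / k * sum(W[i][j] * term[j] for j in range(n))
                for i in range(n)]
        result = [r + t for r, t in zip(result, term)]
    return result


def steady_state(W, sweeps=5000):
    """Steady state as the fixed point of the stochastic matrix I + W / rate."""
    n = len(W)
    rate = 2.0 * max(-W[j][j] for j in range(n))
    p = [1.0 / n] * n
    for _ in range(sweeps):
        p = [p[i] + sum(W[i][j] * p[j] for j in range(n)) / rate
             for i in range(n)]
    return p


def kl(p, q):
    """Kullback-Leibler divergence D(p || q) in nats."""
    return sum(a * math.log(a / b) for a, b in zip(p, q) if a > 0)


# Hypothetical rate matrix; W[i][j] is the rate from j to i, columns sum to zero.
W = [[-2.1, 1.0, 0.5],
     [2.0, -1.4, 0.3],
     [0.1, 0.4, -0.8]]
p0 = [0.7, 0.2, 0.1]
tau = 0.5

p_tau = propagate(W, p0, tau)
p_ss = steady_state(W)
sigma_na = kl(p0, p_ss) - kl(p_tau, p_ss)
print(p_tau, p_ss, sigma_na)
```

This example does not satisfy detailed balance (the products of rates around the two orientations of the cycle differ), so the value printed is the non-adiabatic part only, not the total entropy production.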

The fixed endpoints impose constraints on the transition rate matrix $W$, but generally do not uniquely determine it. The choice of $W$ affects the entropy productions $\Sigma$ and $\Sigma_{\mathrm{na}}$ generated by the stochastic process connecting the same endpoints over time $\tau$. For two-state systems, the steady state is uniquely determined, with $p^{\mathrm{ss}}$ expressible in terms of the total rate $\mathcal{W}$ as [53]

$$p^{\mathrm{ss}}_i = p_i(0) + \frac{p_i(\tau) - p_i(0)}{1 - e^{-\mathcal{W}\tau}}, \tag{8}$$

with $i = 1, 2$.
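The inversion behind Eq. (8) can be checked numerically. The sketch below evolves a two-state system with its closed-form solution and then recovers the steady state from the two endpoints, the duration and the total rate alone, using the same expression as the Supplemental code. The function names and the numerical values are assumptions for this example.

```python
import math


def two_state_evolve(p1_0, rate_to_1, rate_to_2, tau):
    """Closed-form relaxation of the population of state 1.

    rate_to_1 is the rate of jumping 2 -> 1 and rate_to_2 the rate 1 -> 2;
    the population relaxes exponentially with the total rate.
    """
    total = rate_to_1 + rate_to_2
    p1_ss = rate_to_1 / total
    p1_tau = p1_ss + (p1_0 - p1_ss) * math.exp(-total * tau)
    return p1_tau, p1_ss, total


def steady_state_from_endpoints(p1_0, p1_tau, total, tau):
    """Recover the steady-state population from endpoints, total rate and time."""
    return p1_0 + (p1_tau - p1_0) / (1.0 - math.exp(-total * tau))


# Hypothetical parameters (arbitrary units).
p1_tau, p1_ss, total = two_state_evolve(0.9, rate_to_1=0.3, rate_to_2=1.2, tau=0.8)
recovered = steady_state_from_endpoints(0.9, p1_tau, total, tau=0.8)
print(p1_ss, recovered)
```

Only the product of total rate and duration enters the recovered steady state, which is why a two-state process with fixed endpoints is characterized by that single dimensionless number.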

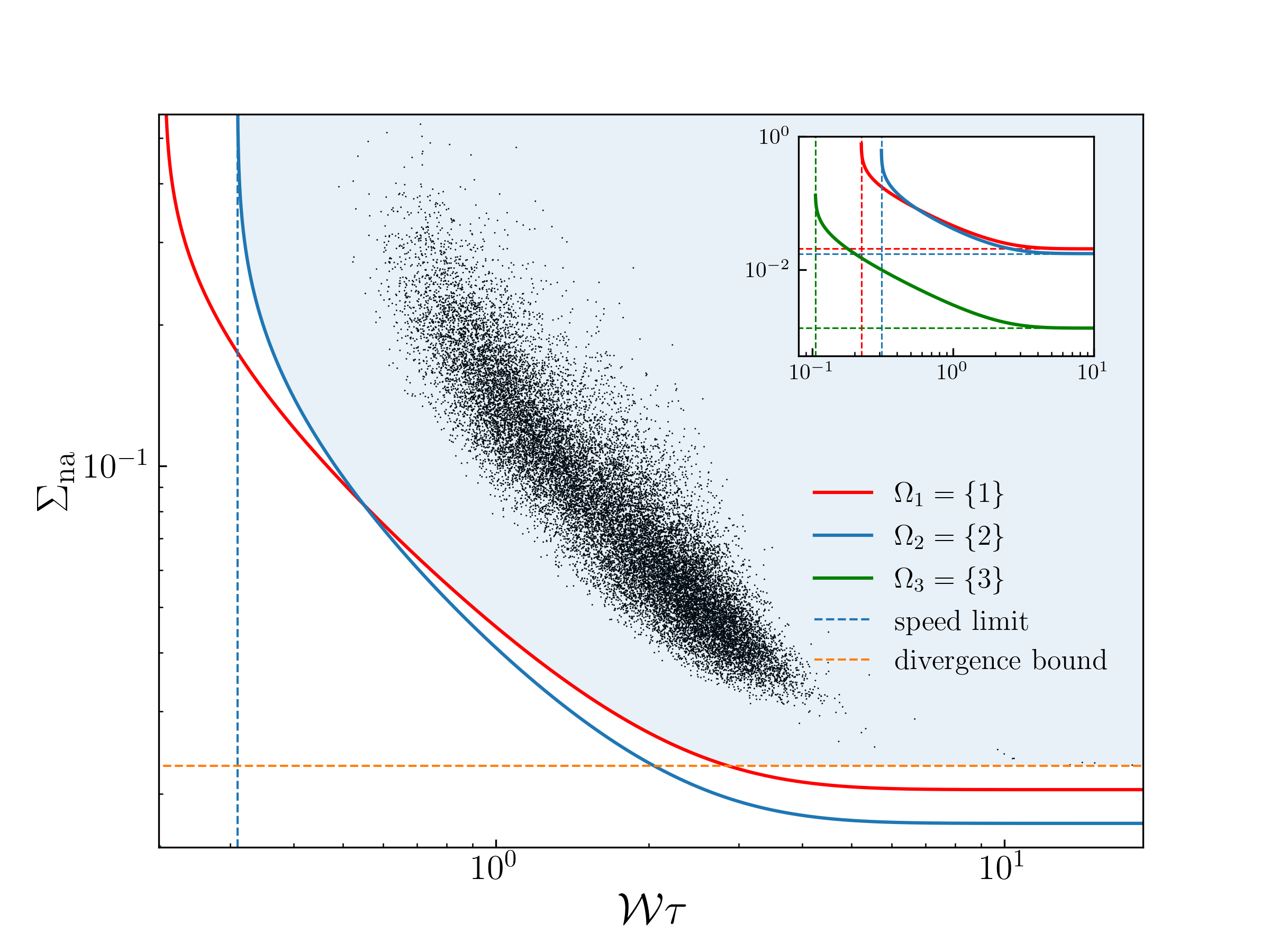

By substituting Eq. (8) into (7), it can be observed that, given the endpoints, the non-adiabatic entropy production $\Sigma_{\mathrm{na}}$ in a two-state system is a monotonically decreasing function of $\mathcal{W}\tau$, the product of the total rate and the duration; see the inset of Fig. 1(c). In other words, the $\Sigma_{\mathrm{na}}$ versus $\mathcal{W}\tau$ curve indicates a trade-off between entropy production and time duration, and is bounded by a vertical and a horizontal asymptote. The horizontal asymptote signifies that the minimum of the entropy production is reached when $\mathcal{W}\tau \to \infty$. In this limit, the steady state $p^{\mathrm{ss}}$ approaches the final state $p(\tau)$, so Eq. (7) implies that the minimum entropy production is given by the right-hand side of (4).

It is unphysical for the steady-state population $p^{\mathrm{ss}}_i$ to be $0$ or $1$, as this would require one of the transition rates to vanish. Combining Eq. (8) with this constraint ($0 < p^{\mathrm{ss}}_i < 1$), we obtain a speed limit represented by the vertical asymptote, i.e., a lower bound for the evolution time $\tau$:

$$\tau \ge \frac{1}{\mathcal{W}} \ln \max_i \frac{p_i(0)}{p_i(\tau)}. \tag{9}$$

The numerator coincides with the $\infty$-Rényi divergence, defined as $D_\infty(p\|q) = \ln \max_i (p_i/q_i)$ [46, 47]. Specifically, the lower bound on the evolution time is given by the quotient of the $\infty$-Rényi divergence between the two endpoints and the total rate of transitions $\mathcal{W}$.

This lower bound reflects an information-theoretical limit on speed in two-state systems; hence, it is relevant to examine whether this bound can be extended to generic $N$-state systems. The answer is affirmative. We state our main result here while deferring the proof to the end of the Letter: the time $\tau$ needed to evolve from the initial state $p(0)$ to the final state $p(\tau)$ is bounded from below by

$$\tau \ge \frac{D_\infty\bigl(p(0)\,\|\,p(\tau)\bigr)}{\mathcal{W}}, \tag{10}$$

where $D_\infty(p(0)\|p(\tau)) = \ln \max_i [p_i(0)/p_i(\tau)]$ is the $\infty$-Rényi divergence between the distributions $p(0)$ and $p(\tau)$, and $\mathcal{W}$ is the total rate. Remarkably, this lower bound on the evolution time resembles the distance-based formulation in Eq. (1), where a constant rate characterizes the timescale. It is noteworthy that the $\infty$-Rényi divergence plays a significant role in the resource theory of thermodynamics [54, 55, 47], and its application to speed limits highlights its versatility and significance in various physical contexts. If the final state is the steady state, the time needed to reach it is infinite, so this bound is trivially satisfied. When the final state is not of full rank, both the $\infty$-Rényi divergence and the bound on the time diverge. This is a manifestation of the third law of thermodynamics, which states that non-full-rank states cannot be attained in finite time [56].
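The speed limit can be probed numerically on randomly drawn three-state dynamics. The sketch below compares the $\infty$-Rényi divergence between the endpoints, $\ln \max_i [p_i(0)/p_i(\tau)]$, with the product of total rate and duration. The sampling ranges, the seed and the helper names are assumptions chosen for this illustration; the propagator is a plain truncated Taylor series rather than a library routine.

```python
import math
import random


def propagate(W, p0, tau, terms=80):
    """Return exp(W * tau) applied to p0 via a truncated Taylor series."""
    n = len(p0)
    result = list(p0)
    term = list(p0)
    for k in range(1, terms + 1):
        term = [tau / k * sum(W[i][j] * term[j] for j in range(n))
                for i in range(n)]
        result = [r + t for r, t in zip(result, term)]
    return result


def renyi_inf(p, q):
    """Infinity-Renyi divergence: log of the largest ratio p_i / q_i."""
    return math.log(max(a / b for a, b in zip(p, q)))


def random_case(n, rng):
    """Draw a random rate matrix (columns sum to zero), initial state and duration."""
    W = [[0.0] * n for _ in range(n)]
    for j in range(n):
        for i in range(n):
            if i != j:
                W[i][j] = 0.05 + rng.random()
        W[j][j] = -sum(W[i][j] for i in range(n) if i != j)
    raw = [0.05 + rng.random() for _ in range(n)]
    p0 = [x / sum(raw) for x in raw]
    tau = 0.1 + rng.random()
    return W, p0, tau


rng = random.Random(0)
slacks = []
for _ in range(200):
    W, p0, tau = random_case(3, rng)
    total = -sum(W[i][i] for i in range(3))
    p_tau = propagate(W, p0, tau)
    # Slack of the speed limit: total_rate * tau minus the divergence.
    slacks.append(total * tau - renyi_inf(p0, p_tau))
print(min(slacks))
```

A non-negative minimum slack over all samples is what the bound predicts; how close the slack comes to zero indicates how tight the bound is for the sampled dynamics.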

Information-theoretical bound on non-adiabatic entropy production.— Building upon the information-theoretical bound on entropy production described in Ref. [45] for systems with detailed balance, we extend our findings to scenarios without detailed balance. Our second result is a conjecture (referred to as the divergence bound):

$$\Sigma_{\mathrm{na}} \ge D\bigl(p(0)\,\|\,p(\tau)\bigr). \tag{11}$$

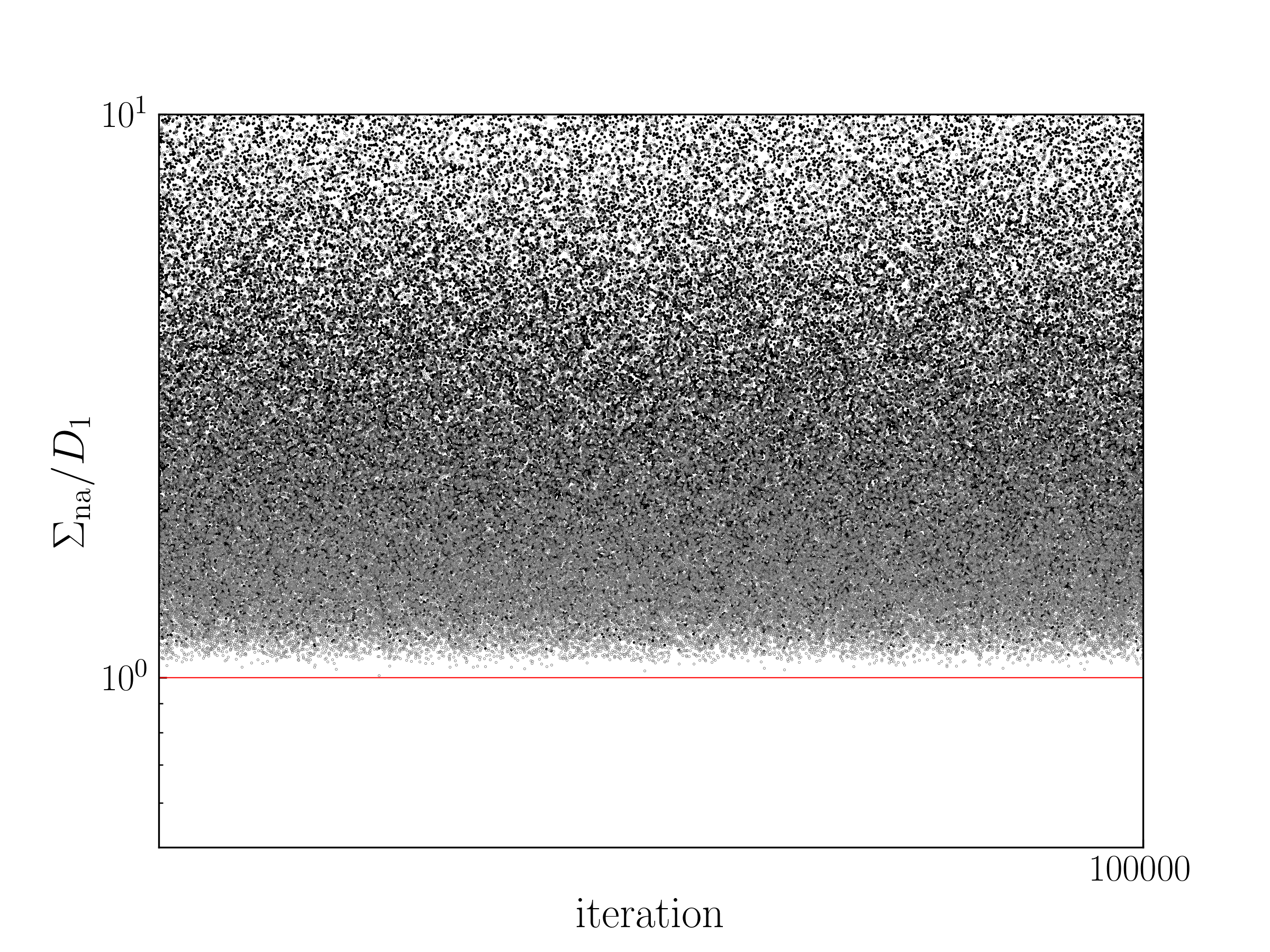

This conjecture, presented as an extension of Eq. (4), suggests a lower bound on the non-adiabatic entropy production given by the -Rényi divergence between the endpoints. Fig. 1(a) provides numerical evidence for three-state systems to support it, and the code used to generate numerical verification for other numbers of states can be found in the Supplemental Material [53]. In fact we confirm a tighter bound, , where represents the non-adiabatic entropy production during the time interval , which strengthens our conjecture. Our findings have implications for the excess entropy production , which is defined by a variational principle and is always greater than [57, 58]. As a consequence, we observe the following relationships:
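A check of the divergence bound in the spirit of the Supplemental code can be sketched as follows: draw random three-state dynamics, compute the non-adiabatic entropy production as a difference of Kullback-Leibler divergences to the steady state, and record its excess over the divergence between the endpoints. The sampling ranges, the seed and the helper functions are assumptions for this example, and a scan of this kind can only support the conjecture, not prove it.

```python
import math
import random


def propagate(W, p0, tau, terms=80):
    """Return exp(W * tau) applied to p0 via a truncated Taylor series."""
    n = len(p0)
    result = list(p0)
    term = list(p0)
    for k in range(1, terms + 1):
        term = [tau / k * sum(W[i][j] * term[j] for j in range(n))
                for i in range(n)]
        result = [r + t for r, t in zip(result, term)]
    return result


def steady_state(W, sweeps=2000):
    """Steady state as the fixed point of the stochastic matrix I + W / rate."""
    n = len(W)
    rate = 2.0 * max(-W[j][j] for j in range(n))
    p = [1.0 / n] * n
    for _ in range(sweeps):
        p = [p[i] + sum(W[i][j] * p[j] for j in range(n)) / rate
             for i in range(n)]
    return p


def kl(p, q):
    """Kullback-Leibler divergence D(p || q) in nats."""
    return sum(a * math.log(a / b) for a, b in zip(p, q) if a > 0)


def random_case(n, rng):
    """Draw a random rate matrix (columns sum to zero), initial state and duration."""
    W = [[0.0] * n for _ in range(n)]
    for j in range(n):
        for i in range(n):
            if i != j:
                W[i][j] = 0.05 + rng.random()
        W[j][j] = -sum(W[i][j] for i in range(n) if i != j)
    raw = [0.05 + rng.random() for _ in range(n)]
    p0 = [x / sum(raw) for x in raw]
    return W, p0, 0.1 + rng.random()


rng = random.Random(1)
sigmas, slacks = [], []
for _ in range(50):
    W, p0, tau = random_case(3, rng)
    p_tau = propagate(W, p0, tau)
    p_ss = steady_state(W)
    sigma_na = kl(p0, p_ss) - kl(p_tau, p_ss)
    sigmas.append(sigma_na)
    # Excess of the non-adiabatic entropy production over the divergence bound.
    slacks.append(sigma_na - kl(p0, p_tau))
print(min(sigmas), min(slacks))
```

If the conjecture holds for the sampled cases, the second printed number is non-negative; a negative value beyond numerical error would be a counterexample worth examining.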

| (12) |

Trade-off between dissipation and time.— Let us state the third main result: for $N$-state dynamics evolving from $p(0)$ to $p(\tau)$ during $\tau$ with a total rate $\mathcal{W}$, we conjecture that the non-adiabatic entropy production is bounded from below by (referred to as the trade-off bound)

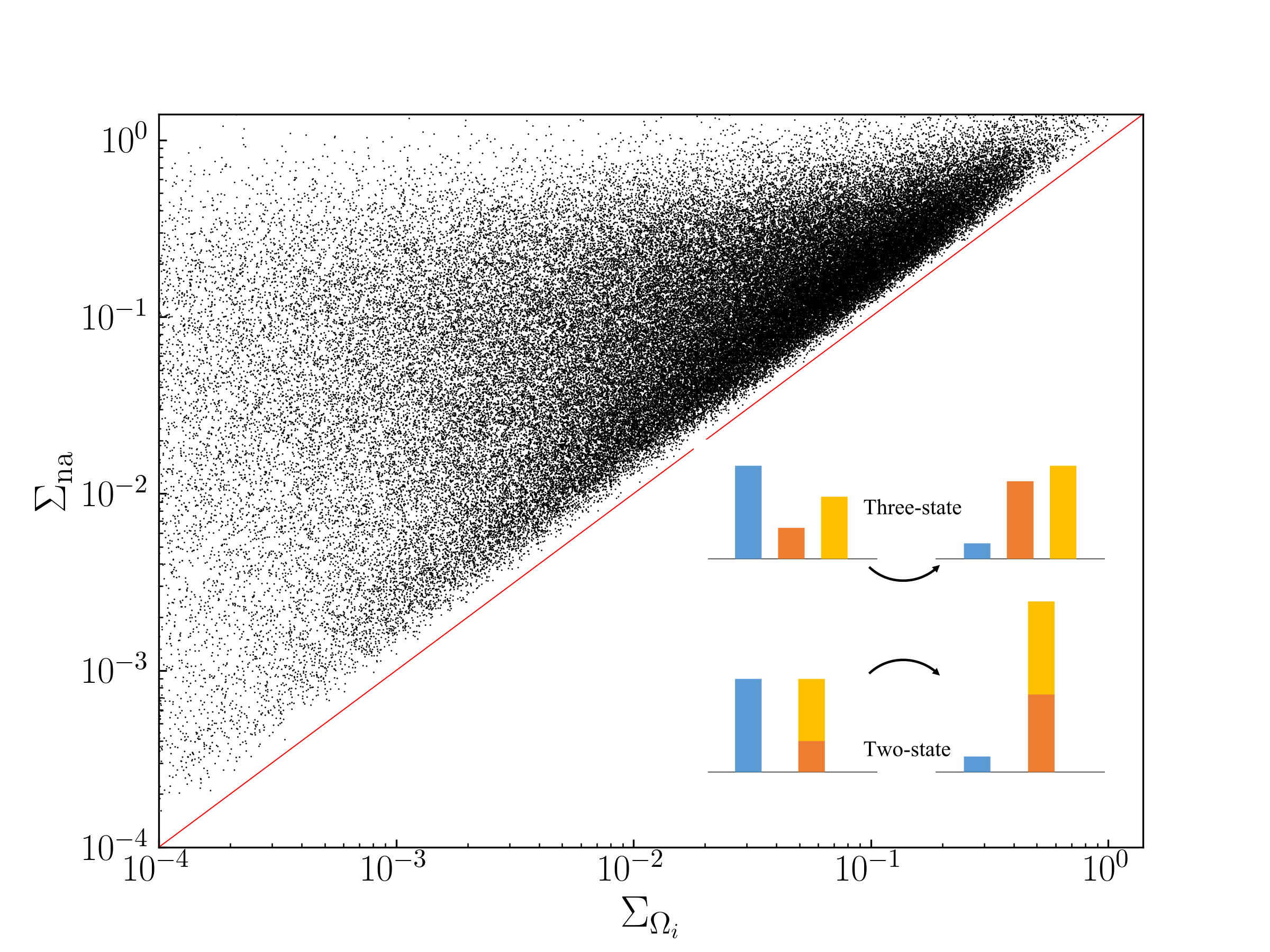

| (13) |

Here the quantity on the right-hand side is the entropy production of a two-state dynamics obtained from a pseudo-coarse-graining procedure as follows: partition the set of states into two nonempty sets, where the first set is denoted by $A$; the two endpoints then become $\bigl(\sum_{i \in A} p_i(0),\, 1 - \sum_{i \in A} p_i(0)\bigr)$ and $\bigl(\sum_{i \in A} p_i(\tau),\, 1 - \sum_{i \in A} p_i(\tau)\bigr)$. We then use Eq. (8) to find the steady state distribution, where the duration $\tau$ and the total rate $\mathcal{W}$ of the original $N$-state dynamics are used. The entropy production of the two-state dynamics is obtained by substituting the steady state distribution into (7). The inset of Fig. 1(b) gives a schematic of the pseudo-coarse-graining procedure for three states. As shown in the upper panel, the system evolves from $p(0)$ to $p(\tau)$, visually depicted by bars of varying colors and lengths. There are three partitioning schemes, and the lower panel shows the one in which the second and third states are grouped together, resulting in $\bigl(p_1(0),\, p_2(0) + p_3(0)\bigr)$ and $\bigl(p_1(\tau),\, p_2(\tau) + p_3(\tau)\bigr)$. Given $N$ states, we have $S(N,2)$ different ways of partitioning the states into two nonempty sets, where $S(N,2)$ is the Stirling number of the second kind [59]. Explicitly, $S(N,2) = 2^{N-1} - 1$. For example, four states can be partitioned in $7$ ways, for instance with $A \in \{\{1\}, \{2\}, \{3\}, \{4\}, \{1,2\}, \{1,3\}, \{1,4\}\}$, the elements of each set being the indices of states. Thus, there are $2^{N-1} - 1$ different two-state bounds in total, jointly bounding $\Sigma_{\mathrm{na}}$. As discussed above, these two-state bounds are monotonically decreasing functions of $\mathcal{W}\tau$, which has the form of Eq. (2), with $1/\mathcal{W}$ playing the role of the timescale constant. We stress that the two-state dynamics cannot be obtained from the standard coarse-graining procedure [60, 61]: the two-state population is in general not equal to $\sum_{i \in A} p_i(t)$ except at the two endpoints; the transition rate matrix for each two-state dynamics is time-independent, and its entries are not simple linear combinations of the original transition rates. In the following, we consider the three-state case as an example; numerical evidence to support Eq. (13) for other numbers of states can be generated using the code in the Supplemental Material [53].
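The pseudo-coarse-graining procedure can be sketched in a few lines: enumerate the $2^{N-1}-1$ ways of splitting the states into two nonempty sets, lump the endpoint populations, recover a two-state steady state from the lumped endpoints with the original duration and total rate, and evaluate the two-state entropy production. The function names, the example rate matrix and the choice to always place state 0 in the first set (so that each split is counted once) are assumptions for this illustration.

```python
import math
from itertools import combinations


def bipartitions(n):
    """Yield the first set of every split of range(n) into two nonempty sets.

    The first set always contains state 0, so each unordered split appears once.
    """
    for size in range(0, n - 1):
        for extra in combinations(range(1, n), size):
            yield (0,) + extra


def binary_kl(a, b):
    """Kullback-Leibler divergence between (a, 1 - a) and (b, 1 - b)."""
    return a * math.log(a / b) + (1 - a) * math.log((1 - a) / (1 - b))


def two_state_bound(p0, p_tau, subset, total, tau):
    """Entropy production of the pseudo-coarse-grained two-state dynamics."""
    q0 = sum(p0[i] for i in subset)
    q_tau = sum(p_tau[i] for i in subset)
    q_ss = q0 + (q_tau - q0) / (1.0 - math.exp(-total * tau))
    return binary_kl(q0, q_ss) - binary_kl(q_tau, q_ss)


def propagate(W, p0, tau, terms=80):
    """Return exp(W * tau) applied to p0 via a truncated Taylor series."""
    n = len(p0)
    result, term = list(p0), list(p0)
    for k in range(1, terms + 1):
        term = [tau / k * sum(W[i][j] * term[j] for j in range(n))
                for i in range(n)]
        result = [r + t for r, t in zip(result, term)]
    return result


# Hypothetical three-state example; W[i][j] is the rate from j to i.
W = [[-2.1, 1.0, 0.5],
     [2.0, -1.4, 0.3],
     [0.1, 0.4, -0.8]]
p0, tau = [0.7, 0.2, 0.1], 0.5
total = -sum(W[i][i] for i in range(3))
p_tau = propagate(W, p0, tau)

bounds = {A: two_state_bound(p0, p_tau, A, total, tau) for A in bipartitions(3)}
print(bounds, max(bounds.values()))
```

Taking the largest of the two-state values gives the tightest of the jointly valid bounds for the chosen endpoints, which is how the trade-off bound would be compared against the divergence bound.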

As a direct verification of the inequality (13), Fig. 1(b) shows a plot of the non-adiabatic entropy production , versus the corresponding two-state . All data points lie above the diagonal, confirming the new bound given by (13). In the long- limit, the entropy production of the two-state dynamics is . By applying the theorem that refinement cannot decrease divergence [62], which is essentially the log-sum inequality, this quantity is not greater than and also . This leads to two questions: (1) Can the bound ever be saturated, as it is not evident from Fig. 1(a)? (2) Is the new bound ever tighter than the divergence bound and the activity bound, or is it otherwise redundant?

There are at least two simple cases in which the bound is (nearly) saturated. By examining the condition for equality in the log-sum inequality, it can be observed that as long as a subset of the states such that are equal for all , the bound is saturated in the long- limit. Another scenario in which the bound is nearly saturated throughout the entire process is when all the states’ initial populations and the transition rates between them are vanishingly small, effectively reducing the dynamics to only two states. This case also partially addresses the second question, as the divergence bound is not generally saturated. The superiority of the new bound over the previous bounds is also evident in nontrivial cases, as will be seen in Figs. 1(c) and Fig. 2. We will also prove that there always exists a parameter range in which the trade-off bound outperforms the divergence bound for any given pair of endpoints.

Fig. 1 (c) displays a representative scenario where the endpoints are given and fixed, while also demonstrating universal behavior. Eqs. (4), (11) and (13) jointly bound , as represented by the shaded area. The trade-off between dissipation and evolution time quantified by Eq. (13) is clearly exemplified in this figure. It demonstrates that, when the endpoints are fixed, faster state transformations are accompanied by larger amounts of dissipation. As shown in the inset, each pseudo-coarse-grained two-state dynamics also has a speed limit, and the log-sum inequality implies that it is not greater than the speed limit of the -state dynamics. The vertical asymptote of the two-state curve for is exactly the speed limit for three states, and this is not a coincidence. For any given pair of endpoints, there must be at least one two-state curve whose vertical asymptote coincides with the genuine speed limit for states. Thus, there must exist a parameter range in which the trade-off bound outperforms the divergence bound.

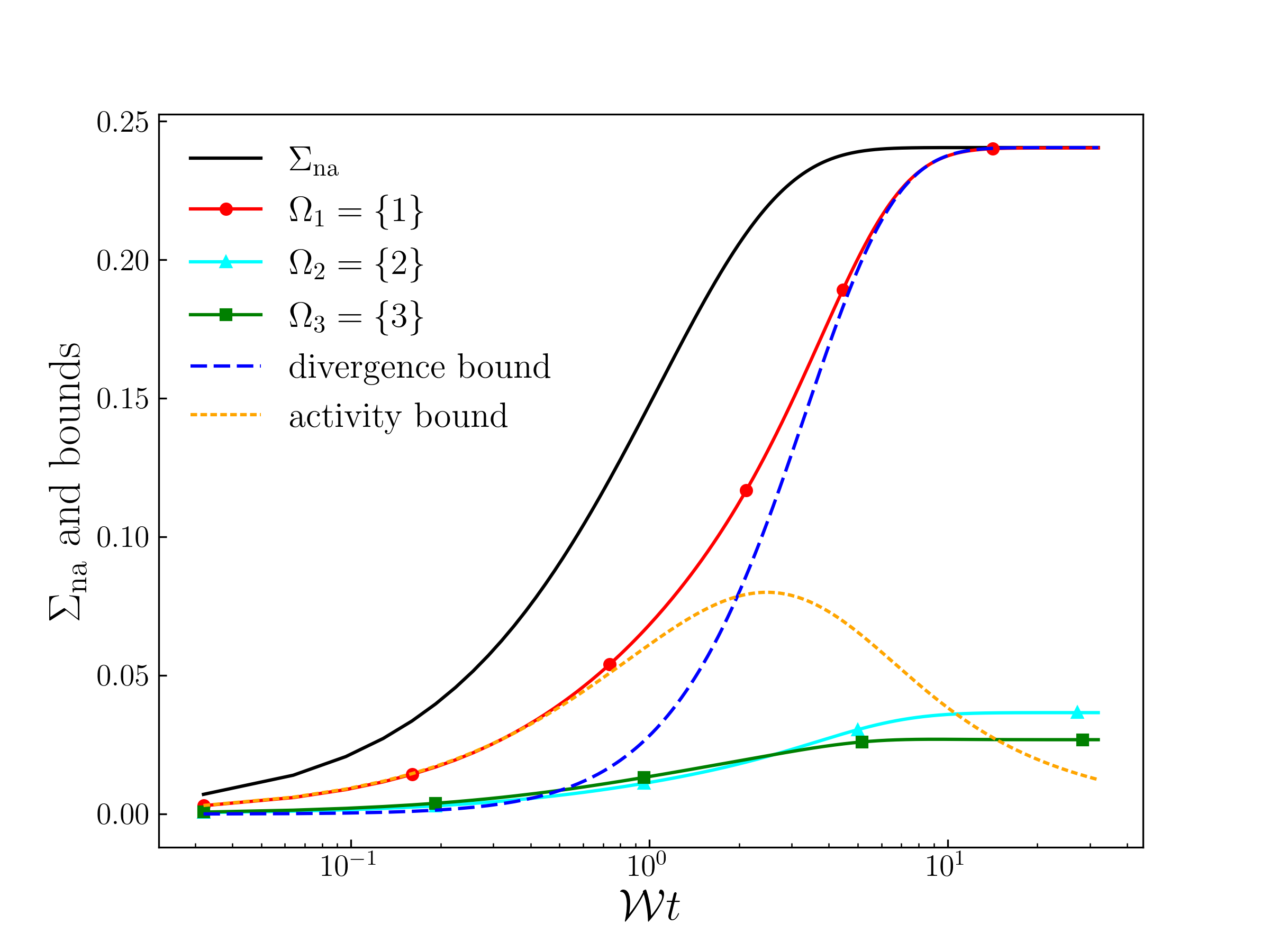

Fig. 2 shows the entropy production and the corresponding divergence bound, activity bound and the new bound, as a function of the dimensionless time for a representative case, whose initial distribution and transition rate matrix (in arbitrary units) are given by

| (14) |

At each time instant , we calculate the instantaneous distribution , and calculate the divergence bound and the new bound using corresponding equations by replacing therein with . The time-averaged dynamical activity is calculated using Eq. (8) in Ref. [15], with the upper bound of the integral set to . Hence, the time derivative of the divergence bound, the activity bound and the new bound cannot be regarded as entropy production rate, and are not guaranteed to be non-negative. The divergence bound is initially loose but saturates as as expected. The activity bound has a much better performance than the divergence bound in the beginning but gradually loses its advantage. The activity bound even decreases as the steady state is approached, showing the expected asymptotic behavior as previously mentioned. The new bound with has a performance that is almost all the time better than both the divergence bound and the activity bound. As discussed above, in the long time limit the divergence bound’s performance should be the best as it saturates. For this special case, the trade-off bound is almost as good, as can be numerically verified. The steady state distribution is , and the divergence bound is . The trade-off bound in the long-time limit is given by the divergence between and , which is .

Proof of the speed limit.—Let us prove that Eq. (10) gives a speed limit for relaxation processes in generic -state systems, where the denominator is still the total rate , i.e., the sum of all the positive rates. First of all, let us divide into intervals so a time sequence is obtained. Consider an arbitrarily selected infinitesimal interval , then the master equation (5) gives

| (15) |

where . Without loss of generality, we assume the state satisfies . Therefore,

| (16) |

If the sum of all positive rates is fixed, it is not hard to see that if with all the other transition rates vanishing, reaches the minimum,

| (17) |

where is exact to first order in . Technically speaking, the equality is not achievable in physical processes because is impractical.

Summing over results in

| (18) |

The inequality that the maximum of sum is at most the sum of maxima gives

| (19) |

Combining with (18) completes the proof.
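The chain of inequalities described in the proof can be written out compactly. The following is a sketch in notation assumed here ($W_{ij}$ is the rate from state $j$ to $i$, $\mathcal{W}$ is the sum of all off-diagonal rates, $t_n = n\,\delta t$ with $\delta t = \tau/K$); it is not a transcription of Eqs. (15)-(19), and the first step is integrated exactly rather than expanded to first order in $\delta t$.

```latex
\begin{align}
\frac{d p_i}{dt} &= \sum_j W_{ij}\, p_j \;\ge\; W_{ii}\, p_i
   = -\Bigl(\sum_{k \neq i} W_{ki}\Bigr) p_i \;\ge\; -\mathcal{W}\, p_i
   \quad\Longrightarrow\quad
   \ln \frac{p_i(t_n)}{p_i(t_{n+1})} \le \mathcal{W}\,\delta t , \\
D_\infty\bigl(p(0)\,\|\,p(\tau)\bigr)
   &= \max_i \sum_{n=0}^{K-1} \ln \frac{p_i(t_n)}{p_i(t_{n+1})}
   \;\le\; \sum_{n=0}^{K-1} \max_i \ln \frac{p_i(t_n)}{p_i(t_{n+1})}
   \;\le\; K\,\mathcal{W}\,\delta t = \mathcal{W}\,\tau .
\end{align}
```

The first line uses only the non-negativity of the off-diagonal rates and of the probabilities; the second uses the fact that the maximum of a sum is at most the sum of the maxima. Dividing by $\mathcal{W}$ gives the lower bound on $\tau$.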

Conclusion.— We present a speed limit, a bound on the non-adiabatic entropy production and a trade-off relation between dissipation and evolution time for time-independent relaxation processes. Our result in Eq. (10) resembles the quantum speed limit (1) and indicates that the minimum transition time from an initial to a target state is constrained by the ratio of their $\infty$-Rényi divergence to the total rate. Eq. (11) gives a lower bound on the non-adiabatic entropy production in terms of the $1$-Rényi divergence. Furthermore, Eq. (13), implicitly in the form of (2), reveals a new trade-off between dissipation and time that surpasses the divergence bound for certain parameters. Given successful quantum extensions of both divergence and activity bounds [63, 64, 65, 6], we anticipate that our results can also be generalized to quantum settings.

Acknowledgment.— We thank Naoto Shiraishi and anonymous referees for valuable comments and discussions.

References

- Glaser et al. [2015] S. J. Glaser, U. Boscain, T. Calarco, C. P. Koch, W. Köckenberger, R. Kosloff, I. Kuprov, B. Luy, S. Schirmer, T. Schulte-Herbrüggen, D. Sugny, and F. K. Wilhelm, Training Schrödinger’s cat: quantum optimal control, Eur. Phys. J. D 69, 279 (2015).

- Deffner and Bonança [2020] S. Deffner and M. V. S. Bonança, Thermodynamic control —An old paradigm with new applications, Europhys. Lett. 131, 20001 (2020).

- Koch et al. [2022] C. P. Koch, U. Boscain, T. Calarco, G. Dirr, S. Filipp, S. J. Glaser, R. Kosloff, S. Montangero, T. Schulte-Herbrüggen, D. Sugny, and F. K. Wilhelm, Quantum optimal control in quantum technologies. Strategic report on current status, visions and goals for research in Europe, EPJ Quantum Technol. 9, 19 (2022).

- Blaber and Sivak [2023] S. Blaber and D. A. Sivak, Optimal control in stochastic thermodynamics, J. Phys. Commun. 7, 033001 (2023).

- Villani [2009] C. Villani, Optimal transport: old and new, Vol. 338 (Springer, 2009).

- Van Vu and Saito [2023] T. Van Vu and K. Saito, Thermodynamic Unification of Optimal Transport: Thermodynamic Uncertainty Relation, Minimum Dissipation, and Thermodynamic Speed Limits, Phys. Rev. X 13, 011013 (2023).

- Gong and Hamazaki [2022] Z. Gong and R. Hamazaki, Bounds in Nonequilibrium Quantum Dynamics, Int. J. Mod. Phys. B 36, 2230007 (2022).

- Mandelstam and Tamm [1945] L. Mandelstam and I. Tamm, The uncertainty relation between energy and time in nonrelativistic quantum mechanics, J. Phys. (Moscow) 9, 249 (1945).

- Margolus and Levitin [1998] N. Margolus and L. B. Levitin, The maximum speed of dynamical evolution, Physica D 120, 188 (1998).

- Deffner and Campbell [2017] S. Deffner and S. Campbell, Quantum speed limits: from Heisenberg’s uncertainty principle to optimal quantum control, J. Phys. A: Math. Theor. 50, 453001 (2017).

- Giovannetti et al. [2003] V. Giovannetti, S. Lloyd, and L. Maccone, Quantum limits to dynamical evolution, Phys. Rev. A 67, 052109 (2003).

- Giovannetti et al. [2004] V. Giovannetti, S. Lloyd, and L. Maccone, The speed limit of quantum unitary evolution, J. Opt. B: Quantum Semiclass. Opt. 6, S807 (2004).

- Shanahan et al. [2018] B. Shanahan, A. Chenu, N. Margolus, and A. del Campo, Quantum Speed Limits across the Quantum-to-Classical Transition, Phys. Rev. Lett. 120, 070401 (2018).

- Okuyama and Ohzeki [2018] M. Okuyama and M. Ohzeki, Quantum Speed Limit is Not Quantum, Phys. Rev. Lett. 120, 070402 (2018).

- Shiraishi et al. [2018] N. Shiraishi, K. Funo, and K. Saito, Speed Limit for Classical Stochastic Processes, Phys. Rev. Lett. 121, 070601 (2018).

- Vo et al. [2020] V. T. Vo, T. Van Vu, and Y. Hasegawa, Unified approach to classical speed limit and thermodynamic uncertainty relation, Phys. Rev. E 102, 062132 (2020).

- Lee et al. [2022] J. S. Lee, S. Lee, H. Kwon, and H. Park, Speed Limit for a Highly Irreversible Process and Tight Finite-Time Landauer’s Bound, Phys. Rev. Lett. 129, 120603 (2022).

- Note [1] There are other speed limits that are not expressed in terms of entropy production. For example, the relevant quantity could be the rate of change in the information content [66, 67], the entropy flux [68], or the dynamical activity [69].

- Hatano and Sasa [2001] T. Hatano and S.-i. Sasa, Steady-State Thermodynamics of Langevin Systems, Phys. Rev. Lett. 86, 3463 (2001).

- Esposito and Van den Broeck [2010] M. Esposito and C. Van den Broeck, Three Detailed Fluctuation Theorems, Phys. Rev. Lett. 104, 090601 (2010).

- Maes [2020] C. Maes, Frenesy: Time-symmetric dynamical activity in nonequilibria, Phys. Rep. 850, 1 (2020).

- Sinitsyn and Nemenman [2007] N. A. Sinitsyn and I. Nemenman, The Berry phase and the pump flux in stochastic chemical kinetics, Europhys. Lett. 77, 58001 (2007).

- Sinitsyn [2009] N. A. Sinitsyn, The stochastic pump effect and geometric phases in dissipative and stochastic systems, J. Phys. A: Math. Theor. 42, 193001 (2009).

- Ren et al. [2010] J. Ren, P. Hänggi, and B. Li, Berry-Phase-Induced Heat Pumping and Its Impact on the Fluctuation Theorem, Phys. Rev. Lett. 104, 170601 (2010).

- Sinitsyn et al. [2011] N. A. Sinitsyn, A. Akimov, and V. Y. Chernyak, Supersymmetry and fluctuation relations for currents in closed networks, Phys. Rev. E 83, 021107 (2011).

- Gu et al. [2018] J. Gu, X.-G. Li, H.-P. Cheng, and X.-G. Zhang, Adiabatic Spin Pump through a Molecular Antiferromagnet Ce 3 Mn 8 III, J. Phys. Chem. C 122, 1422 (2018).

- Kirkwood [1946] J. G. Kirkwood, The Statistical Mechanical Theory of Transport Processes I. General Theory, J. Chem. Phys. 14, 180 (1946).

- Weinhold [1975] F. Weinhold, Metric geometry of equilibrium thermodynamics, J. Chem. Phys. 63, 2479 (1975).

- Ruppeiner [1979] G. Ruppeiner, Thermodynamics: A Riemannian geometric model, Phys. Rev. A 20, 1608 (1979).

- Salamon and Berry [1983] P. Salamon and R. S. Berry, Thermodynamic length and dissipated availability, Phys. Rev. Lett. 51, 1127 (1983).

- Janyszek and Mrugała [1989] H. Janyszek and R. Mrugała, Riemannian geometry and the thermodynamics of model magnetic systems, Phys. Rev. A 39, 6515 (1989).

- Brody and Rivier [1995] D. Brody and N. Rivier, Geometrical aspects of statistical mechanics, Phys. Rev. E 51, 1006 (1995).

- Ruppeiner [1995] G. Ruppeiner, Riemannian geometry in thermodynamic fluctuation theory, Rev. Mod. Phys. 67, 605 (1995).

- Sivak and Crooks [2012] D. A. Sivak and G. E. Crooks, Thermodynamic Metrics and Optimal Paths, Phys. Rev. Lett. 108, 190602 (2012).

- Zulkowski et al. [2012] P. R. Zulkowski, D. A. Sivak, G. E. Crooks, and M. R. DeWeese, Geometry of thermodynamic control, Phys. Rev. E 86, 041148 (2012).

- Machta [2015] B. B. Machta, Dissipation Bound for Thermodynamic Control, Phys. Rev. Lett. 115, 260603 (2015).

- Mandal and Jarzynski [2016] D. Mandal and C. Jarzynski, Analysis of slow transitions between nonequilibrium steady states, J. Stat. Mech: Theory Exp. 2016, 063204 (2016).

- Miller et al. [2019] H. J. Miller, M. Scandi, J. Anders, and M. Perarnau-Llobet, Work Fluctuations in Slow Processes: Quantum Signatures and Optimal Control, Phys. Rev. Lett. 123, 230603 (2019).

- Miller and Mehboudi [2020] H. J. Miller and M. Mehboudi, Geometry of Work Fluctuations versus Efficiency in Microscopic Thermal Machines, Phys. Rev. Lett. 125, 260602 (2020).

- Scandi and Perarnau-Llobet [2019] M. Scandi and M. Perarnau-Llobet, Thermodynamic length in open quantum systems, Quantum 3, 197 (2019).

- Scandi et al. [2020] M. Scandi, H. J. D. Miller, J. Anders, and M. Perarnau-Llobet, Quantum work statistics close to equilibrium, Phys. Rev. Res. 2, 023377 (2020).

- Abiuso et al. [2020] P. Abiuso, H. J. D. Miller, M. Perarnau-Llobet, and M. Scandi, Geometric Optimisation of Quantum Thermodynamic Processes, Entropy 22, 1076 (2020).

- Frim and DeWeese [2022] A. G. Frim and M. R. DeWeese, Geometric Bound on the Efficiency of Irreversible Thermodynamic Cycles, Phys. Rev. Lett. 128, 230601 (2022).

- Gu [2023] J. Gu, Work statistics in slow thermodynamic processes, J. Chem. Phys. 158, 074104 (2023).

- Shiraishi and Saito [2019] N. Shiraishi and K. Saito, Information-Theoretical Bound of the Irreversibility in Thermal Relaxation Processes, Phys. Rev. Lett. 123, 110603 (2019).

- van Erven and Harremoes [2014] T. van Erven and P. Harremoes, Rényi Divergence and Kullback-Leibler Divergence, IEEE Trans. Inf. Theory 60, 3797 (2014).

- Sagawa [2022] T. Sagawa, Entropy, Divergence, and Majorization in Classical and Quantum Thermodynamics, Vol. 16 (Springer Nature, 2022).

- Vedral [2002] V. Vedral, The role of relative entropy in quantum information theory, Rev. Mod. Phys. 74, 197 (2002).

- Van Kampen [1992] N. G. Van Kampen, Stochastic processes in physics and chemistry, Vol. 1 (Elsevier, 1992).

- Note [2] There exists a relevant speed limit for open quantum systems governed by a Markovian quantum master equation [70]. Surprisingly, the bound therein only involves the initial state and the dynamical map.

- Bladt and Sørensen [2005] M. Bladt and M. Sørensen, Statistical Inference for Discretely Observed Markov Jump Processes, J. R. Stat. Soc., B: Stat. 67, 395 (2005).

- Van den Broeck C. [2013] Van den Broeck C., Stochastic thermodynamics: A brief introduction, ENFI 184, 155 (2013).

- Note [3] See the Supplemental Material at [url] for derivation of Eq. (8) and the code to verify Eqs. (11) and (13).

- Horodecki and Oppenheim [2013] M. Horodecki and J. Oppenheim, Fundamental limitations for quantum and nanoscale thermodynamics, Nat. Commun. 4, 2059 (2013).

- Åberg [2013] J. Åberg, Truly work-like work extraction via a single-shot analysis, Nat. Commun. 4, 1925 (2013).

- Masanes and Oppenheim [2017] L. Masanes and J. Oppenheim, A general derivation and quantification of the third law of thermodynamics, Nat. Commun. 8, 14538 (2017).

- Kolchinsky et al. [2022] A. Kolchinsky, A. Dechant, K. Yoshimura, and S. Ito, Information geometry of excess and housekeeping entropy production (2022).

- Shiraishi [2023] N. Shiraishi, An Introduction to Stochastic Thermodynamics: From Basic to Advanced (Springer Nature Singapore, Singapore, 2023) Chap. 19.

- Abramowitz and Stegun [1965] M. Abramowitz and I. A. Stegun, eds., Handbook of mathematical functions, Dover Books on Mathematics (Dover Publications, Mineola, NY, 1965).

- Esposito [2012] M. Esposito, Stochastic thermodynamics under coarse-graining, Phys. Rev. E 85, 041125 (2012).

- Zhen et al. [2021] Y.-Z. Zhen, D. Egloff, K. Modi, and O. Dahlsten, Universal Bound on Energy Cost of Bit Reset in Finite Time, Phys. Rev. Lett. 127, 190602 (2021).

- Alajaji et al. [2018] F. Alajaji, P.-N. Chen, et al., An Introduction to Single-User Information Theory (Springer, 2018).

- Van Vu and Hasegawa [2021a] T. Van Vu and Y. Hasegawa, Geometrical Bounds of the Irreversibility in Markovian Systems, Phys. Rev. Lett. 126, 010601 (2021a).

- Van Vu and Hasegawa [2021b] T. Van Vu and Y. Hasegawa, Lower Bound on Irreversibility in Thermal Relaxation of Open Quantum Systems, Phys. Rev. Lett. 127, 190601 (2021b).

- Van Vu and Saito [2022] T. Van Vu and K. Saito, Finite-Time Quantum Landauer Principle and Quantum Coherence, Phys. Rev. Lett. 128, 010602 (2022).

- Nicholson et al. [2020] S. B. Nicholson, L. P. García-Pintos, A. del Campo, and J. R. Green, Time–information uncertainty relations in thermodynamics, Nat. Phys. 16, 1211 (2020).

- García-Pintos et al. [2022] L. P. García-Pintos, S. B. Nicholson, J. R. Green, A. del Campo, and A. V. Gorshkov, Unifying Quantum and Classical Speed Limits on Observables, Phys. Rev. X 12, 011038 (2022).

- Falasco and Esposito [2020] G. Falasco and M. Esposito, Dissipation-Time Uncertainty Relation, Phys. Rev. Lett. 125, 120604 (2020).

- Dechant [2022] A. Dechant, Minimum entropy production, detailed balance and Wasserstein distance for continuous-time Markov processes, J. Phys. A: Math. Theor. 55, 094001 (2022).

- del Campo et al. [2013] A. del Campo, I. L. Egusquiza, M. B. Plenio, and S. F. Huelga, Quantum Speed Limits in Open System Dynamics, Phys. Rev. Lett. 110, 050403 (2013).

Appendix A Supplemental Materials: Speed limit, dissipation bound and dissipation-time trade-off in thermal relaxation processes

Appendix B Derivation of Eq. (8)

Consider a two-state system, where for simplicity we define and . The transition rate matrix can then be represented as:

| (S1) |

The two eigenvalues and right-eigenvectors () are, respectively,

| (S2) |

and

| (S3) |

By utilizing the eigenvalue method for solving systems of ordinary differential equations, we obtain

| (S4) |

Given the two endpoints, solving the linear equations gives the steady states expressed in terms of ,

| (S5) |

with .

Appendix C Code for verifying Eqs. (11) and (13)

```python
import numpy as np
from scipy.linalg import expm, eig
from scipy import special, stats
import matplotlib.pyplot as plt

plt.rcParams['font.family'] = 'Times New Roman'
plt.rcParams['mathtext.fontset'] = 'cm'
plt.rc('text', usetex=True)


def compute_steady_state_distribution(transition_matrix):
    """
    Computes the steady state distribution.

    Args:
        transition_matrix (numpy.ndarray): The transition matrix of the Markov chain.

    Returns:
        numpy.ndarray: The steady state distribution of the Markov chain.
    """
    eigen_values, eigen_vectors = eig(transition_matrix)
    # Sort eigenvalues in descending order; the zero eigenvalue comes first.
    idx = eigen_values.argsort()[::-1]
    eigen_values = eigen_values[idx]
    eigen_vectors = eigen_vectors[:, idx]
    steady_state_distribution = eigen_vectors[:, 0].real
    steady_state_distribution = steady_state_distribution / steady_state_distribution.sum()
    return steady_state_distribution


def compute_entropy_production(dimension):
    """
    Computes the non-adiabatic entropy production and bounds.

    Args:
        dimension (int): number of states (dimension of transition rate matrix).

    Returns:
        list: A list containing two entropy productions.
    """
    initial_distribution = np.random.rand(dimension)
    initial_distribution = initial_distribution / initial_distribution.sum()
    tau = 1.0 * np.random.rand()
    # Random rate matrix whose columns sum to zero.
    transition_matrix = np.zeros((dimension, dimension))
    for j in range(dimension):
        for i in range(dimension):
            if i != j:
                transition_matrix[i][j] = np.random.rand()
        transition_matrix[j][j] = -transition_matrix[:, j].sum()
    final_distribution = np.matmul(expm(transition_matrix * tau), initial_distribution)
    mid_distribution = np.matmul(expm(transition_matrix * tau/2.0), initial_distribution)
    steady_state_distribution = compute_steady_state_distribution(transition_matrix)
    entropy_production = stats.entropy(initial_distribution, steady_state_distribution) - stats.entropy(final_distribution, steady_state_distribution)
    entropy_production_mid = stats.entropy(initial_distribution, steady_state_distribution) - stats.entropy(mid_distribution, steady_state_distribution)
    KL = stats.entropy(initial_distribution, final_distribution)
    # pseudo-coarse-grained (PCG) dynamics
    num = int(1 + (dimension - 1) * np.random.rand())
    PCG_initial_distribution = initial_distribution[:num].sum()
    PCG_initial_distribution = np.array([PCG_initial_distribution, 1 - PCG_initial_distribution])
    PCG_final_distribution = final_distribution[:num].sum()
    PCG_final_distribution = np.array([PCG_final_distribution, 1 - PCG_final_distribution])
    PCG_steady_state_distribution_1 = PCG_initial_distribution[0] + (PCG_final_distribution[0] - PCG_initial_distribution[0]) / (1 - np.exp(transition_matrix.trace() * tau))
    PCG_steady_state_distribution_2 = 1.0 - PCG_steady
```