Specular reflections removal in colposcopic images based on neural networks: Supervised training with no ground truth previous knowledge

Abstract

Cervical cancer is a malignant tumor that seriously threatens women’s health, and is one of the most common that affects women worldwide. For its early detection, colposcopic images of the cervix are used for searching for possible injuries or abnormalities. An inherent characteristic of these images is the presence of specular reflections (brightness) that make it difficult to observe some regions, which might imply misdiagnosis. In this paper, a new strategy based on neural networks is introduced for eliminating specular reflections and estimating the unobserved anatomical cervix portion under the bright zones. For overcoming the fact that the ground truth corresponding to the specular reflection regions is always unknown, the new strategy proposes the supervised training of a neural network to learn how to restore any hidden regions of colposcopic images. Once the specular reflections are identified, they are removed from the image, and the previously trained network is used to fulfill these deleted areas. The quality of the processed images was evaluated quantitatively and qualitatively. In 21 of the 22 evaluated images, the detected specular reflections were eliminated, whereas, in the remaining one, these reflections were almost completely eliminated. The distribution of the colors and the content of the restored images are similar to those of the originals. The evaluation carried out by a specialist in Cervix Pathology concluded that, after eliminating the specular reflections, the anatomical and physiological elements of the cervix are observable in the restored images, which facilitates the medical diagnosis of cervical pathologies. Our method has the potential to improve the early detection of cervical cancer.

keywords:

cervical cancer, colposcopic image, specular reflection, inpainting, supervised learning, ground truth1 Introduction

Cancer is a serious health problem due to its high incidence and mortality rates in the world. In particular, cervical cancer is one of the most common that affects women and is the fourth leading cause of female mortality from cancer worldwide [1]. To increase the probability of successful treatment, early detection of the disease is necessary [20]. Before the appearance of cervical cancer, abnormal growth of squamous cells occurs in the cervical epithelium called cervical intraepithelial neoplasia. These precancerous and cancerous cells can be detected through a colposcopy, a visual inspection of the cervix by a clinical examination [16]. This test is performed by using a colposcope that captures color images outside the cervix. Once regions suggestive of intraepithelial lesion or cervical cancer have been identified, a targeted biopsy is performed to confirm the diagnosis, which contributes to developing treatment strategies in correspondence with the size and location of the lesions.

The cervix is a humid area and, when the light of the colposcope falls on it, specular reflections (SRs) may appear in the image. Specular reflections raise challenging problems in medical image analysis, as it degrades (partially or entirely) the information in the affected pixels [11], which can lead to misdiagnosis. Therefore, it is imperative to find effective methods for eliminating the SRs and estimating the missing anatomical region under the bright zones. On this need, the present work is focused.

1.1 Related work

Previous studies on colposcopic image processing have allowed the detection of SR regions [5, 14, 19]. Once these regions are identified, SRs removal can be treated as an inpainting problem, which consists of filling in the missing regions based on the remaining image data [22]. The restored region must be consistent with the cervix anatomy. Different approaches have been taken to deal with SRs removal by inpainting methods in colposcopic images. The authors of [11] proposed filling in the affected regions by interpolating the RGB (Red, Green, Blue) color components individually from the surrounding regions based on Laplace’s equation and modifying the intensity component of the HSI (Hue, Saturation, Intensity) color space transformed image. In [26], it was assumed that the highlights formed on the moist surface of the cervix are very small and the color underneath each highlight is nearly constant and similar to the color of the pixels in the immediate surroundings. So, it was proposed to fill in the SR regions by propagating the surrounding color information. After the color value of the detected SR pixels is set to zero, an iterative process replaces each pixel value inside the SR region by the mean color of its non-zero neighbors. A similar idea was followed in [24], but the pixels inside the SR region were replaced by the weighted color values of their neighboring pixels based on the average gradient direction of the SR region. All these methods are based on the gradual propagation of colors from edges toward the specular reflection center, and they provide satisfactory results when applying to small areas. In contrast, [14] argues that SR regions are typically large, so reconstructing missing information by only considering neighboring pixel value is not realistic. They suggest applying the multi-resolution inpainting technique proposed in [21] to restore the SRs by blocks with different brightness levels and applying histogram equalization to homogenize each restored block and reduce the effect of dividing the SRs into several blocks. By considering the colposcopic image with SRs as a matrix with unknown entries, [6] proposes estimating SR regions employing Non-Negative Matrix Factorization. However, the quality of the reconstruction strongly depends on the initial parameters of the algorithm. The authors of [23] proposed inpainting of SRs in colposcopic images with an exemplar-based method.

In recent years, there has been an increasing amount of literature on Convolutional Neural Networks (CNNs) to perform image inpainting tasks. CNNs are used as a feature extraction method through the process of convolution. Particularly in medical image restoration, the use of neural networks to solve inpainting problems has been increasing due to the good performance they have shown on images from other domains. The authors of [2] chose to incorporate YOLOv3 with spatial pyramid pooling (YOLOv3-spp) for robust detection and improved inference time for endoscopic artifacts, which may affect the physician’s visual assessment, and propose the use of a Conditional Generative Adversarial Network (CGAN) to restore the affected areas in the image. The use of CNN combined with adversarial training [7] has produced excellent results on inpainting tasks, with perceptual similarity to the original image. The authors of [15] propose a new architecture based on generative adversarial networks, Reconstruction Global-Local GAN (Recon-GLGAN), for magnetic resonance image reconstruction. However, despite the good performance of the deep learning algorithms in these inpainting tasks, they have not been applied to specular reflection removal in colposcopic images.

In this paper, we present a new approach to eliminate specular reflections based on training a network to learn how to restore any hidden region of colposcopic images. Once the SRs are identified, they are removed from the image, and the previously trained network is used to fulfill these deleted areas. We use a convolutional denoising autoencoder trained for the completion task using a small database, contrary to the belief that, for optimal performance, large training datasets are needed for models based on deep architectures.

This article is organized as follows. The next section describes the neural network architecture selected to eliminate specular reflections in colposcopic images, and details the strategy followed for its training. The fourth section presents the experimental process carried out as well as a qualitative and quantitative analysis of the obtained results.

2 Material and methods

This section deals with the specular reflections removal in colposcopic images using deep learning techniques. Figure 1 presents two typical colposcopic images from different patients showing large differences in color and shape of the cervices and the presence of scattered brightness (SRs). After considering the main limitation of the standard supervised learning for eliminating SRs in a colposcopic image, a new strategy for applying a supervised learning algorithm is introduced despite not knowing the ground truth of the problem to be solved.

2.1 Characterization and detection of specular reflections

Pixels belonging to regions with SRs are characterized by high intensity (Int) and low color saturation (Sat) [12]. These characteristics allow a preliminary identification of such regions by applying a threshold criterion to these image values [26]. The authors of [19] studied different approaches to detect SR regions on Cuban colposcopic images. They proposed an algorithm based on the application of thresholds to the maximum intensity of the image regardless of color saturation. The pixels with intensity higher than the thresholds were classified as SR. This algorithm was chosen to detect the SR region in this work.

2.2 Peculiarities of the problem to be solved and feasibility of supervised training

Formulation of the problem to be solved. Let us denoted by

-

1.

the area of the cervix focused with the colposcope

-

2.

the image of A captured by the colposcope, i.e., image with SR regions.

-

3.

the ideal image showing the complete content of A, i.e., image without the SR regions.

Target: From image , obtain image using supervised learning.

The supervised training of a neural network depends on knowing in advance the training set consisting of pairs (, ) of inputs and outputs, . Adjusting this to the problem to be solved, the training set would have as input a colposcopic image and as output (ground truth) the image .

However, the presence of SRs is an inherent characteristic of colposcopic images produced by the reflection of the colposcope light on the wet areas of the cervix. Since the areas and the humidity level are different for each patient, the distribution of SRs in the images is heterogeneous (see Figure 1). Moreover, for a patient, the incidence of the colposcope light on an area at different angles may result in images I with SRs located on different regions, but it would never result in an image Ie showing the complete content of A, as seen in Figure 2. Therefore, for image I of the area A, the corresponding ground truth is unknown. For this reason, applying supervised training of a neural network directly to solve this problem is not feasible.

2.3 Strategy for applying a supervised learning algorithm

Considering the above-mentioned peculiarities of the problem to solve, in this section, the problem of SRs removal in colposcopic images using supervised learning is reformulated, the architecture and training of the network defined, and the use of the network to solve the problem described.

Reformulation of the problem to solve. Let us denoted by

-

1.

the area of the cervix focused with the colposcope

-

2.

the image of A captured by the colposcope, i.e., image with SR regions.

-

3.

and modifications of image such that there are hidden regions in the image that are known in the image .

Targets:

-

1.

Train a network for learning to complete the hidden content in by trying to obtain .

-

2.

Use the trained network to complete the regions with SRs in a colposcopic image , which solves the original problem.

Since the network is trained to restore any hidden region of colposcopic images, it is expected that the network will be able to reconstruct any unobserved anatomical cervix portion under the SR regions.

Image pre-processing. The modified images and for constructing the data set of the above-reformulated problem are constructed as follows:

-

1.

The regions with SRs are identified using the algorithm mentioned in Section 2.1, and a binary mask (real mask) was associated with them. The entry of the real mask of a colposcopic image is defined as

where ; . The image is constructed, where the symbol denotes the Hadamard product for two matrices. See Figure 3 center.

-

2.

From the regions without SRs of , regions of interest will be selected as hidden regions (HR), and a new mask (hidden mask) will be associated with them. That is,

where ; . Then, is constructed. See Figure 3 right.

Figure 3: Example of the modified images and of . The black regions marked inside the red circles in are the hidden regions (). -

3.

Applying steps 1 and 2 on a set of colposcopic images , we conform the tuple for the data set, where .

2.3.1 Architecture of the neural network

The authors of [9] propose a Generative Adversarial Network (GAN) model that improves the results obtained by [4, 8, 18] for solving image inpainting problems arisen in different (non-medical) domains such as faces and landscapes. The model architecture by [9] comprises three networks: a generator, a global context discriminator, and a local context discriminator. The generative network is fully convolutional and is used to fill in the missing regions of the image, while the global and local context discriminators are auxiliary networks used exclusively for training. Unlike other approaches focused on image generation, their method does not generate images from noise.

To solve the reformulated problem specified in Section 2.3, we will use a network architecture based on the generative network architecture of [9]. This network follows an encoder-decoder structure that initially decreases the image resolution before its further processing, reducing memory usage and computation time. Subsequently, the network output is restored to the original resolution using deconvolution layers (the opposite process of a convolution filter). The resolution is decreased twice using convolutions of stride 1/4 of the original size, which is important to generate a non-blurred texture in the missing regions [9]. Using dilated convolutions at lower resolutions, the model can effectively process larger input image areas when computing each output pixel than with standard convolutions [9]. The model also has batch normalization layers after all convolutional layers except for the last one.

| Type | Kernel | Dilation | Stride | Filters |

|---|---|---|---|---|

| convolution | 55 | 1 | 11 | 32 |

| convolution | 33 | 1 | 22 | 64 |

| convolution | 33 | 1 | 11 | 64 |

| convolution | 33 | 1 | 22 | 128 |

| convolution | 33 | 1 | 11 | 128 |

| convolution | 33 | 1 | 11 | 128 |

| dilated convolution | 33 | 2 | 11 | 128 |

| dilated convolution | 33 | 4 | 11 | 128 |

| dilated convolution | 33 | 8 | 11 | 128 |

| dilated convolution | 33 | 16 | 11 | 128 |

| convolution | 33 | 1 | 11 | 128 |

| convolution | 33 | 1 | 11 | 128 |

| deconvolution | 44 | 1 | 1/21/2 | 64 |

| convolution | 33 | 1 | 11 | 64 |

| deconvolution | 44 | 1 | 1/21/2 | 32 |

| convolution | 33 | 1 | 11 | 16 |

| output | 33 | 1 | 11 | 3 |

Specifically, we use the above-described network architecture with the following modifications. The number of filters used in each layer is reduced by half to decrease the number of operations to be performed and the computation time. Table 1 shows the final architecture of the network. Instead of an RGB image with a mask representing the region to be filled, the model input is an RGB image with two masks. The first mask identifies the black pixels of the input image that should be retained in the output image. The second mask identifies the black pixels of the input image that must be restored. Both masks are binary arrays of the image size, where the positions with value 0 will be those representing the black pixels, and 1 the rest of the image. It is intended that, from these patterns, the network learns which areas to copy and which ones to restore. A general representation of this model can be seen in Figure 4.

To restore hidden regions of a colposcopic image the input image is defined in Section 2.3. The first mask is the real of , and the second is the hidden . On the other hand, to restore anatomical cervix portion under the SR regions in a colposcopic image , the input image is , the first mask is composed of a matrix full of 1, and the second mask is the real of representing the pixels to be restored.

The optimizer used in training is the Adadelta algorithm [25], which automatically sets a learning rate for each weight in the network. Adadelta optimization is a stochastic gradient descent method that is based on adaptive learning rate per dimension to address two drawbacks:

-

1.

The continual decay of learning rates throughout training, implying an incorrect update of the weights. In the worst case, this may prevent the neural network from continuing its training [17].

-

2.

The need for a manually selected global learning rate [25].

This algorithm adapts learning rates based on a moving window of gradient updates, rather than accumulating all previous gradients. In this way, Adadelta continues to learn even when many updates have been made.

The loss function used for training the network is the Mean Squared Error (MSE)

defined by the Euclidean norm , the obtained output of the network for the input , and the expected output . Since in our case we intend to compute the distance between RGB images, we use the objective function

| (1) |

2.3.2 Image selection and hidden regions

We use a colposcopic imaging database from the University Gynecobstetric Hospital ’Diez de Octubre’ and the University Gynecobstetric Hospital ’Ramón González Coro’. This database contains several images (from different angles) of each patient. The images were partitioned into three sets, training, validation, and test so that they did not share images of the same patient. This partition guarantees the independence between the data of the different sets.

Frequently, colposcopic images may contain part of the speculum222Medical instrument that holds open the entrance orifices of different body cavities such as the vagina to perform examinations. (see Figure 5) or areas outside the cervix that are not subject to clinical studies. For delimiting the cervical area in this type of image, several segmentation methods have been proposed [10, 13, 3]. However, they assume that the SR regions have been previously eliminated, so it is not realistic to use them in this research. Since hidden regions must be inside the gynecological interest areas, they were selected manually by visual inspection.

The manual selection was carried out with the help of a program that displays the images and allows selecting the hidden pixels. The program also automatically generates the hidden mask corresponding to the previously selected HRs.

As mentioned above, the bright pixels do not have a fixed distribution and concentration in the images (see Figures 1 and 2). Therefore, the shape and concentration of the hidden regions were created heterogeneously. To ensure a varied representation of different features of the cervix during the network training process, the pixels of the hidden regions were chosen from distinctive regions of the cervix displayed in the images (as those with lesions, blood vessels, distinct textures, and clean areas) as well as from other areas of the images randomly selected.

2.4 Implementational and computational issues

The strategy proposed in Section 2.3 was implemented in Python 3.7 with the use of the deep learning package Keras on top of the machine learning platform TensorFlow. In addition, Google Colaboratory (also known as Colab) was used to accelerate the training process. The types of GPUs available in Colab often include Nvidia K80s, T4s, P4s, and P100s, but they vary over time.

3 Experimentation, Results and Discussion

When initial values of the weights of a neural network are taken randomly, two networks trained with the same data set and the same number of epochs may result in networks with different final weights, thus with different generalization errors. For this reason, 16 networks were trained with the architecture proposed in Section 2.3.1, the same data set, and 240 epochs. With the purpose of restoring hidden regions, 120 images from the colposcopic image database were selected to construct the training set, 20 for the validation set and 22 for the test set. The last 22 images were also used to create the real test set for evaluating the performance of the trained network to restore SR regions. These sets were arranged in the way explained in Section 2.3.

3.1 Performance of the trained networks to restore hidden regions of colposcopic images

An identifier Rx was assigned to each neural network, where is the number associated with the network. Table 2 shows, in increasing order, the validation errors corresponding to each network. Taking into account that the trained network R3 presents the lowest validation error, it was selected to restore the hidden regions. Figure 6 shows R3 learning curves. It can be appreciated that there is no overfitting as training and validation curves behave similarly. To evaluate the performance of the selected network regarding the others, a series of qualitative and quantitative comparisons are carried out.

ID R3 R14 R4 R2 R6 R10 R1 R7 R5 R12 R15 R11 R8 R0 R13 R9 VE 4.12 4.34 4.41 4.52 4.59 4.60 4.66 4.68 4.76 4.96 5.16 5.37 5.44 5.46 5.50 5.77

By visual inspection of the colposcopic images restored by the different networks, certain qualitative differences can be observed, for example, in the color tonality.

Figure 7 shows a comparison of the restored images obtained by the networks with the lowest R3 and highest R9 validation errors

(see Table 2).

The color of a pixel in a colposcopic image depends on the combination of the intensities of its three channels (RGB). To analyze the restoration performed on each channel, the histograms of their pixel intensities were compared independently. Figure 8, top row, shows the results obtained when restoring the same image with the networks R3, R5, and R9 (extreme and central values of Table 2). In the remaining rows of the Figure, histograms of the pixel intensities corresponding to expected output and obtained output images for each network, separated by channels, are superimposed. It can be seen that there is a considerable variation in the estimated pixel values of each channel among the three networks. The distribution of intensities estimated in each channel by the R3 network is the closest to the expected distribution. By considering the whole test set of images, the test errors of the networks R3, R5, and R9 are 0.0037 0.0007, 0.0045 0.0008, and 0.0058 0.0009, respectively, at the 95 of significance level. Observe that the confidence intervals [0.0030,0.0044] and [0.0049,0.0067] for the error of the networks R3 and R9 on the test set have null intercept, meaning that there is a significant difference in their capacity of generalization, i.e., of restoration of hidden regions of the colposcopic images.

In what follows, the performance of the trained network R3 to restore the hidden regions in images of the test set is analyzed in detail.

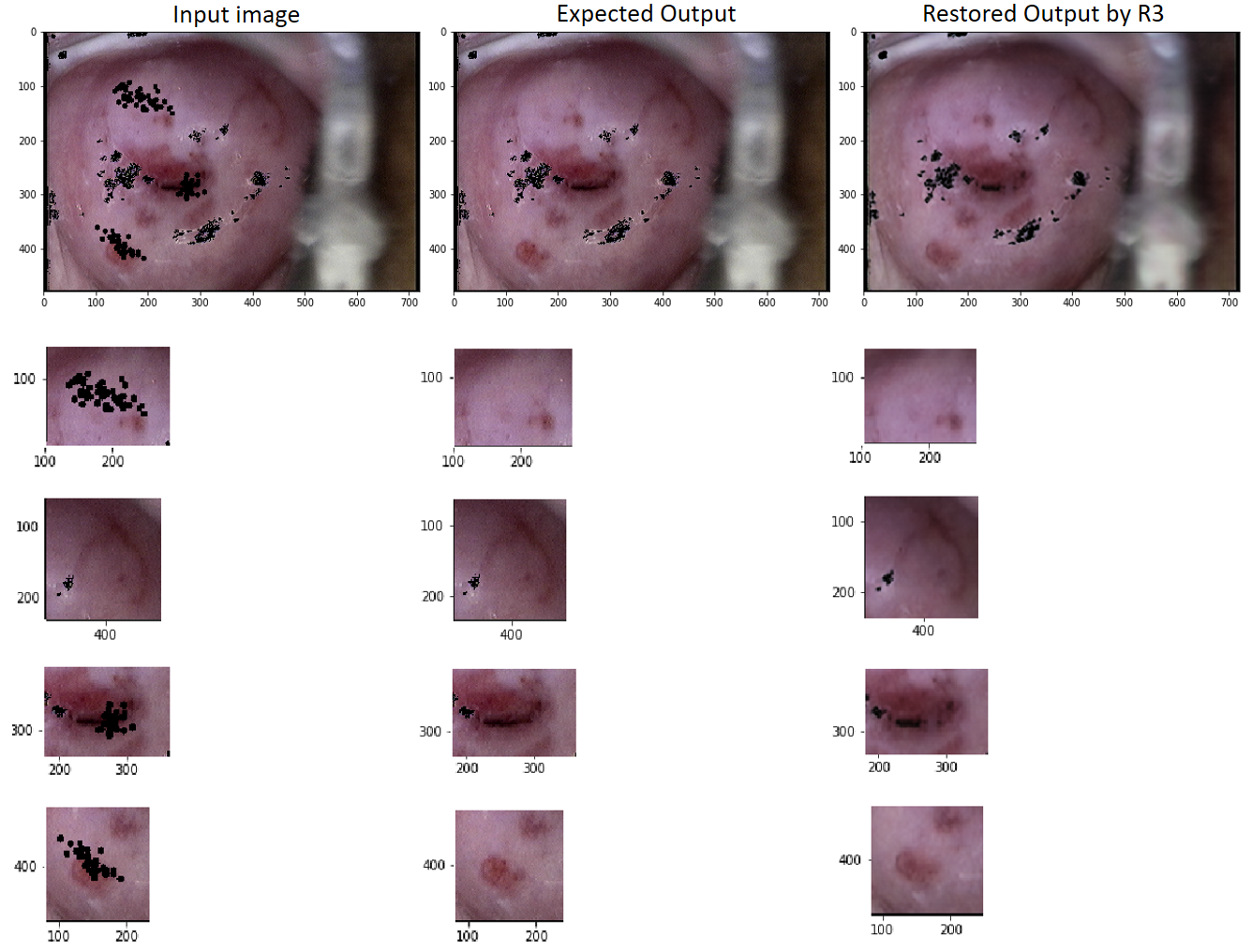

Figure 9 shows fine details in the estimation of various distinctive regions of a restored image. The top row presents the input image to the network (left), the expected output of the network (center), and the output obtained from the network (right). The remaining rows show areas of interest within the images as mentioned above, emphasizing some of their characteristics. These areas are shown in the column corresponding to their image. Rows 2, 4, and 5 contain hidden regions and their respective restorations. Row 3 enhances how the tissue features outside the unknown regions are maintained during the restoration.

Figure 10, top row, presents a restored image by the network R3 and its corresponding expected image, whereas their histograms of the pixel intensity per channel are shown in the remaining rows. Observe the suitable reproduction of the expected intensities by the network R3 for all the intensity values higher than 0. Note also that there is a higher error when restoring the pixels with intensity 0 in the three channels of the images. Looking in detail at the black areas in the expected output image (EO) and comparing them with the corresponding areas in the restored output image (RO), some scattered pixels can be seen around the black areas in EO, but not in RO. This reveals that black pixels (intensity 0 in the three channels) take color (intensities between 0 and 255), and vice versa, pixels with colors become black. This is a predicted result that reveals certain impressions of the network to restore the color of the pixels in the abrupt border between the black and the colored regions. However, as seen from the images on the top row of the Figure, these inaccuracies do not produce some appreciable visual distortion in the restored image.

As mentioned in Section 2.3.1, the trained networks use as loss function the mean square error (1), which quantifies the average of the errors between the three channels of the obtained image and the expected output image. However, this does not imply that the performed optimization minimizes the errors of each channel independently. Indeed, if there are pixels in a channel with a very high error and pixels in another channel with a very small error, it might result in an average error for the three channels lower than that which would be obtained from a restored image where all pixels of each channel have a similar error. In this context, it is important to note that, for a pixel of the output obtained from a network having only one of the three RGB values correct and the others with a large error, the pixel’s color that would be appreciated is different from the expected one. Therefore, the mean square error (1) does not provide a good measure of the quality of the restored image.

To further analyze the existing inaccuracy in the image restoration, we use the supremum norm to measure the difference between each channel of the expected and restored output images. That is, for the expected and restored output images and , the error between the -th channels and , respectively, is computed as

The range of these errors for each channel is presented in Table 3 for the 22 restored images of the test set. This reveals that, for each image in the test set restored by the network R3, there is at least one pixel whose restoration error is greater than 184, 165, and 189 in the red, green, and blue channels respectively.

In order to know how frequently these large errors in the restored images appear, the distribution of the absolute errors

of each pixel in each channel of a restored image is analyzed. Figure 11 plots the histogram of frequency of these errors for each channel of a restored image. It shows that the most frequent error value is 0, and the highest concentration of points is at the beginning of the graph.

Since the largest absolute error among channels obtained from calculating the supremum norm was 251 for the images of the test set (see Table 3), the range of possible errors among pixels was divided into three intervals, from 0 to 25, from 25 to 50, and from 50 to 251. Table 4 reports the percentage of pixels with absolute errors in these ranges, for each channel, in the 22 restored images of the test set. On average, for each channel, at least 95 of the pixels in the images restored by R3 have an absolute error lower than 25. The green channel tends to be, on average, the channel with the highest percentage of pixels having errors greater than 25. This result demonstrates the good performance of the network R3 for restoring the original colors of the test set images.

3.2 Performance of the selected network to reconstruct unobserved anatomical cervix portion under the SR regions.

Once the trained network R3 was selected for having the lowest validation error, and after evaluating its efficiency to restore hidden regions of colposcopic images, it was used to restore the cervix portion under the SR regions. Its performance in this problem was analyzed by a series of experiments on the real test set.

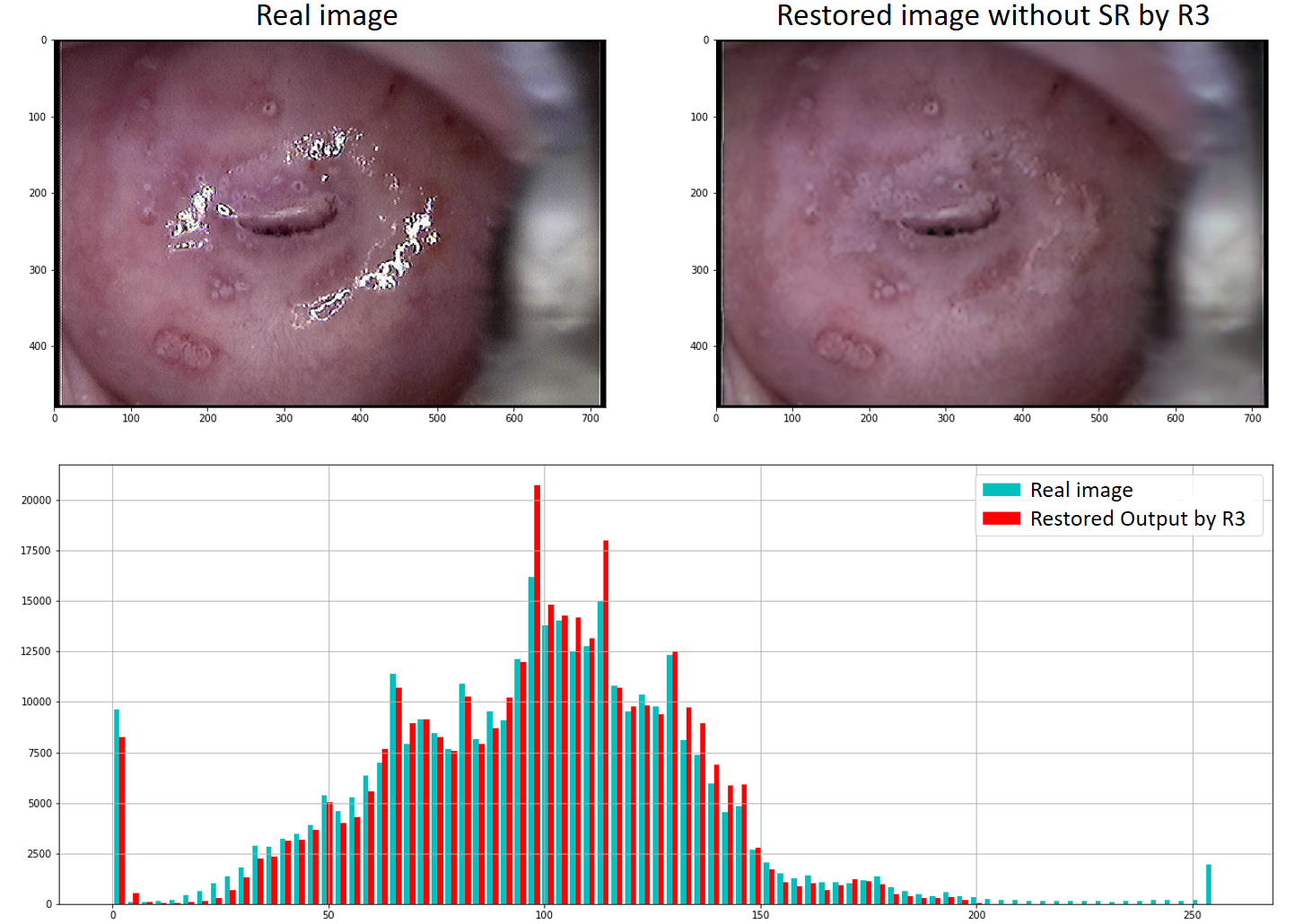

Figure 12 shows, in the upper part, the image and the corresponding restoration obtained from R3.

The lower part shows the histogram of the color intensities of both images. With color cyan, the real image is represented, and with red color, the network’s output.

The histograms illustrate how the pixels with high intensity of the original image disappear in the restored image. It also shows that the distribution of the remaining intensities is reproduced, observing the similarity in both histograms. This behavior was maintained in the 22 analyzed images of the real test set.

| Channel Red | Channel Green | Channel Blue | |

| Image 1 | 208 | 186 | 210 |

| Image 2 | 225 | 198 | 229 |

| Image 3 | 186 | 182 | 208 |

| Image 4 | 224 | 193 | 229 |

| Image 5 | 231 | 187 | 209 |

| Image 6 | 208 | 196 | 218 |

| Image 7 | 194 | 237 | 208 |

| Image 8 | 184 | 165 | 193 |

| Image 9 | 188 | 173 | 199 |

| Image 10 | 202 | 213 | 213 |

| Image 11 | 186 | 175 | 192 |

| Image 12 | 196 | 187 | 226 |

| Image 13 | 198 | 182 | 235 |

| Image 14 | 216 | 214 | 227 |

| Image 15 | 192 | 210 | 211 |

| Image 16 | 238 | 228 | 251 |

| Image 17 | 207 | 203 | 219 |

| Image 18 | 203 | 200 | 214 |

| Image 19 | 199 | 192 | 196 |

| Image 20 | 186 | 182 | 189 |

| Image 21 | 224 | 245 | 245 |

| Image 22 | 190 | 198 | 206 |

| Average | 203.8 | 197.0 | 214.8 |

| Minimum | 184 | 165 | 189 |

| Maximum | 238 | 245 | 251 |

Denote by , and the maximum intensity corresponding to the original image , to the modified image , and to the restored image obtained by R3. corresponds to the highest intensity that the algorithm mentioned in Section 2.1 does not classify as SR in that image. Therefore, if , then all the SRs detected in does not appear in , and it can be argued that the SRs were removed. These three values computed over the images in the real test set are shown in Table 5. Clearly, in 21 of the 22 images, the SRs were successfully removed. It is important to note that this is a criterion for removing SRs, but not for how well the anatomical content under them was estimated.

For evaluating the restoration of the missing anatomical regions, the expert criterion of a medical specialist was considered. After excluding the three images for which the SRs detection algorithm mentioned in Section 2.1 does not correctly select the SRs (compromising the quality of the image restoration as shown in Figure 13), the expert analysis of the remainder 19 restored images yields the following conclusion: except for the image of Figure 14, for which appears some noise in the restored area, the brightness in the rest of the reconstructed images was satisfactorily eliminated, allowing the physician to observe the characteristics of the glandular and squamous epithelia of the cervix. In such images, the anatomical elements of the cervix such as glandular orifices (eggs) or Nabothian cyst and physiological characteristics of the cervical mucus are preserved allowing an evaluation of its quality. An example is shown in Figure 15.

Conclusions

In the present work, a neural network-based strategy for specular reflection elimination in colposcopic images was proposed to restore them successfully. A reformulation of the initial problem was done to perform supervised training since the ground truth corresponding to the SR regions is always unknown. The proposed SRs elimination strategy includes the use of an algorithm for SRs identification in colposcopic images, the training of a set of networks to restore any hidden region of colposcopic images, and the use of the network with the lower validation error to restore any unobserved anatomical cervix portion under the SR regions. A detailed qualitative and quantitative analysis of the performance of the trained networks shown their capability to restore different hidden regions of the colposcopic images. The networks with the lowest validation error restore, on average, the 95 of the pixels in each channel of the images with an error lower than 25 (of a possible maximum of 255).

When using the selected network to reconstruct the cervix portion under the SR regions, the brightness was eliminated in 21 of the 22 evaluated images, whereas the distribution of the color intensities of each channel was reproduced, being similar to the expected. The restorations of the missing anatomical regions under the SRs were evaluated by a medical expert concluding that -qualitatively- the SRs were satisfactorily eliminated and the gynecological elements of interest were conserved, which facilitates the correct clinical evaluation of the patients.

| Error range 0-25 | Error range 25-50 | Error range 50-251 | |||||||

| Red | Green | Blue | Red | Green | Blue | Red | Green | Blue | |

| Image 1 | 95.6 | 96.1 | 95.9 | 1.8 | 1.7 | 1.7 | 2.5 | 2.0 | 2.3 |

| Image 2 | 93.6 | 94.3 | 94.1 | 2.6 | 2.4 | 2.4 | 3.6 | 3.1 | 3.4 |

| Image 3 | 96.3 | 96.5 | 96.1 | 1.7 | 1.9 | 1.8 | 1.8 | 1.5 | 1.9 |

| Image 4 | 96.5 | 97.2 | 97.1 | 1.6 | 1.3 | 1.3 | 1.8 | 1.3 | 1.5 |

| Image 5 | 96.8 | 97.4 | 97.3 | 1.4 | 1.3 | 1.2 | 1.6 | 1.2 | 1.4 |

| Image 6 | 96.4 | 96.7 | 96.5 | 1.8 | 1.8 | 1.8 | 1.6 | 1.4 | 1.5 |

| Image 7 | 96.5 | 93.9 | 95.5 | 2.3 | 4.7 | 3.4 | 1.0 | 1.2 | 1.0 |

| Image 8 | 97.8 | 98.0 | 97.8 | 1.1 | 1.1 | 1.2 | 1.0 | 0.8 | 0.9 |

| Image 9 | 96.6 | 97.0 | 96.8 | 1.7 | 1.7 | 1.7 | 1.6 | 1.2 | 1.4 |

| Image 10 | 98.4 | 98.6 | 98.5 | 0.8 | 0.7 | 0.7 | 0.6 | 0.6 | 0.7 |

| Image 11 | 98.3 | 98.3 | 98.3 | 0.6 | 0.7 | 0.7 | 0.9 | 0.8 | 0.9 |

| Image 12 | 96.9 | 96.9 | 96.7 | 1.3 | 1.4 | 1.3 | 1.7 | 1.6 | 1.8 |

| Image 13 | 98.3 | 98.5 | 95.8 | 0.9 | 0.8 | 2.8 | 0.7 | 0.6 | 1.3 |

| Image 14 | 93.6 | 94.1 | 94.2 | 2.8 | 3.0 | 2.8 | 3.5 | 2.8 | 2.9 |

| Image 15 | 96.1 | 95.6 | 95.7 | 1.9 | 2.4 | 2.6 | 1.9 | 1.8 | 1.6 |

| Image 16 | 87.9 | 85.3 | 90.3 | 8.0 | 10.5 | 6.4 | 4.0 | 4.1 | 3.2 |

| Image 17 | 92.8 | 90.8 | 91.6 | 3.1 | 5.6 | 5.3 | 4.0 | 3.5 | 3.0 |

| Image 18 | 97.1 | 97.2 | 97.2 | 1.2 | 1.3 | 1.2 | 1.4 | 1.4 | 1.5 |

| Image 19 | 96.9 | 97.0 | 97.1 | 1.6 | 1.5 | 1.5 | 1.4 | 1.3 | 1.2 |

| Image 20 | 96.7 | 96.6 | 96.8 | 1.5 | 1.7 | 1.6 | 1.6 | 1.6 | 1.5 |

| Image 21 | 89.6 | 85.6 | 91.0 | 6.6 | 10.7 | 5.6 | 3.6 | 3.6 | 3.2 |

| Image 22 | 97.2 | 97.2 | 97.0 | 1.4 | 1.5 | 1.4 | 1.2 | 1.2 | 1.5 |

| Average | 95.7 | 95.4 | 95.8 | 2.2 | 2.7 | 2.3 | 2.0 | 1.8 | 1.8 |

| Minimum | 87.9 | 85.3 | 90.3 | 0.6 | 0.7 | 0.7 | 0.6 | 0.6 | 0.7 |

| Maximum | 98.4 | 98.6 | 98.5 | 8.0 | 10.7 | 6.4 | 4.0 | 4.1 | 3.4 |

| Median | 96.6 | 96.8 | 96.6 | 1.6 | 1.7 | 1.7 | 1.6 | 1.4 | 1.5 |

| Image 1 | 255.0 | 216.7 | 200.0 | Yes |

| Image 2 | 255.0 | 216.7 | 206.3 | Yes |

| Image 3 | 255.0 | 216.7 | 189.0 | Yes |

| Image 4 | 255.0 | 216.3 | 205.3 | Yes |

| Image 5 | 255.0 | 216.0 | 208.7 | Yes |

| Image 6 | 255.0 | 216.0 | 198.3 | Yes |

| Image 7 | 255.0 | 216.0 | 207.0 | Yes |

| Image 8 | 255.0 | 215.3 | 199.7 | Yes |

| Image 9 | 255.0 | 216.0 | 196.0 | Yes |

| Image 10 | 248.0 | 210.3 | 197.3 | Yes |

| Image 11 | 253.0 | 213.3 | 186.0 | Yes |

| Image 12 | 255.0 | 216.7 | 202.7 | Yes |

| Image 13 | 255.0 | 216.7 | 197.7 | Yes |

| Image 14 | 255.0 | 216.3 | 204.0 | Yes |

| Image 15 | 255.0 | 216.3 | 192.7 | Yes |

| Image 16 | 255.0 | 216.7 | 208.3 | Yes |

| Image 17 | 254.0 | 215.7 | 198.3 | Yes |

| Image 18 | 255.0 | 216.3 | 204.0 | Yes |

| Image 19 | 255.0 | 216.7 | 197.0 | Yes |

| Image 20 | 255.0 | 216.7 | 179.3 | Yes |

| Image 21 | 255.0 | 216.7 | 209.7 | Yes |

| Image 22 | 239.7 | 203.7 | 212.0 | No |

References

- [1] Cuba. Centro Nacional de Información de Ciencias Médicas. Biblioteca Médica Nacional Cáncer Cervicouterino. Estadísticas Mundiales.Factográfico salud. 2019 Dic. Citado 22 junio 2020; 5(12). Disponible en: http://files.sld.cu/bmn/files/2019/12/factografico-de-salud-diciembre-2019.pdf.

- [2] Sharib Ali, Felix Zhou, Adam Bailey, Barbara Braden, James East, Xin Lu, and Jens Rittscher. A deep learning framework for quality assessment and restoration in video endoscopy. arXiv preprint arXiv:1904.07073, 2019.

- [3] Bing Bai, Pei-Zhong Liu, Yong-Zhao Du, and Yan-Ming Luo. Automatic segmentation of cervical region in colposcopic images using k-means. Australasian physical & engineering sciences in medicine, 41(4):1077–1085, 2018.

- [4] Connelly Barnes, Eli Shechtman, Adam Finkelstein, and Dan B Goldman. Patchmatch: A randomized correspondence algorithm for structural image editing. ACM Trans. Graph., 28(3):24, 2009.

- [5] Abhishek Das, Avijit Kar, and Debasis Bhattacharyya. Elimination of specular reflection and identification of roi: The first step in automated detection of cervical cancer using digital colposcopy. In 2011 IEEE International Conference on Imaging Systems and Techniques, pages 237–241. IEEE, 2011.

- [6] Danilo Gómez Gómez. Eliminación de zonas especulares en imágenes de colposcopía utilizando factorizaciones matriciales no-negativas. Tesis de grado, Universidad de La Habana, Cuba, 2018.

- [7] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial nets. In Advances in neural information processing systems, pages 2672–2680, 2014.

- [8] Jia-Bin Huang, Sing Bing Kang, Narendra Ahuja, and Johannes Kopf. Image completion using planar structure guidance. ACM Transactions on graphics (TOG), 33(4):1–10, 2014.

- [9] Satoshi Iizuka, Edgar Simo-Serra, and Hiroshi Ishikawa. Globally and locally consistent image completion. ACM Transactions on Graphics (ToG), 36(4):1–14, 2017.

- [10] Holger Lange. Automatic detection of multi-level acetowhite regions in rgb color images of the uterine cervix. In Medical Imaging 2005: Image Processing, volume 5747, pages 1004–1017. International Society for Optics and Photonics, 2005.

- [11] Holger Lange. Automatic glare removal in reflectance imagery of the uterine cervix. In Medical Imaging 2005: Image Processing, volume 5747, pages 2183–2192. International Society for Optics and Photonics, 2005.

- [12] Thomas M Lehmann and Christoph Palm. Color line search for illuminant estimation in real-world scenes. JOSA A, 18(11):2679–2691, 2001.

- [13] Wenjing Li, Jia Gu, Daron Ferris, and Allen Poirson. Automated image analysis of uterine cervical images. In Medical Imaging 2007: Computer-Aided Diagnosis, volume 6514, page 65142P. International Society for Optics and Photonics, 2007.

- [14] Othmane Meslouhi, Mustapha Kardouchi, Hakim Allali, Taoufiq Gadi, and Yassir Benkaddour. Automatic detection and inpainting of specular reflections for colposcopic images. Open Computer Science, 1(3):341–354, 2011.

- [15] Balamurali Murugesan, S Vijaya Raghavan, Kaushik Sarveswaran, Keerthi Ram, and Mohanasankar Sivaprakasam. Recon-glgan: A global-local context based generative adversarial network for mri reconstruction. In International Workshop on Machine Learning for Medical Image Reconstruction, pages 3–15. Springer, 2019.

- [16] Saloney Nazeer and Mahmood I Shafi. Objective perspective in colposcopy. Best Practice & Research Clinical Obstetrics & Gynaecology, 25(5):631–640, 2011.

- [17] Razvan Pascanu, Tomas Mikolov, and Yoshua Bengio. On the difficulty of training recurrent neural networks. In International conference on machine learning, pages 1310–1318, 2013.

- [18] Deepak Pathak, Philipp Krahenbuhl, Jeff Donahue, Trevor Darrell, and Alexei A Efros. Context encoders: Feature learning by inpainting. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2536–2544, 2016.

- [19] Alejandro Palmer San Pedro. Eliminación de regiones especulares en imágenes colposcópicas de cuello de útero. Tesis de grado, Universidad de La Habana, Cuba, 2015.

- [20] Sylvia C Robles. Introduction to the special issue: Timely detection of cervical cancer. Bulletin of the Pan American Health Organization (PAHO); 30 (4), dec. 1996, 1996.

- [21] Timothy K Shih and Rong-Chi Chang. Digital inpainting-survey and multilayer image inpainting algorithms. In Third International Conference on Information Technology and Applications (ICITA’05), volume 1, pages 15–24. IEEE, 2005.

- [22] Panagiotis-Rikarnto Siavelis, Nefeli Lamprinou, and Emmanouil Z Psarakis. An improved gan semantic image inpainting. In International Conference on Advanced Concepts for Intelligent Vision Systems, pages 443–454. Springer, 2020.

- [23] Xiaoxia Wang, Ping Li, DU Yongzhao, Yuchun Lv, and Yinglu Chen. Detection and inpainting of specular reflection in colposcopic images with exemplar-based method. In 2019 IEEE 13th International Conference on Anti-counterfeiting, Security, and Identification (ASID), pages 90–94. IEEE, 2019.

- [24] Zhiyun Xue, Sameer Antani, L Rodney Long, Jose Jeronimo, and George R Thoma. Comparative performance analysis of cervix roi extraction and specular reflection removal algorithms for uterine cervix image analysis. In Medical Imaging 2007: Image Processing, volume 6512, page 65124I. International Society for Optics and Photonics, 2007.

- [25] Matthew D Zeiler. Adadelta: an adaptive learning rate method. arXiv preprint arXiv:1212.5701, 2012.

- [26] Gali Zimmerman-Moreno and Hayit Greenspan. Automatic detection of specular reflections in uterine cervix images. In Medical Imaging 2006: Image Processing, volume 6144, page 61446E. International Society for Optics and Photonics, 2006.