Spectral-Spatial Global Graph Reasoning for Hyperspectral Image Classification

Abstract

Convolutional neural networks have been widely applied to hyperspectral image classification. However, traditional convolutions can not effectively extract features for objects with irregular distributions. Recent methods attempt to address this issue by performing graph convolutions on spatial topologies, but fixed graph structures and local perceptions limit their performances. To tackle these problems, in this paper, different from previous approaches, we perform the superpixel generation on intermediate features during network training to adaptively produce homogeneous regions, obtain graph structures, and further generate spatial descriptors, which are served as graph nodes. Besides spatial objects, we also explore the graph relationships between channels by reasonably aggregating channels to generate spectral descriptors. The adjacent matrices in these graph convolutions are obtained by considering the relationships among all descriptors to realize global perceptions. By combining the extracted spatial and spectral graph features, we finally obtain a spectral-spatial graph reasoning network (SSGRN). The spatial and spectral parts of SSGRN are separately called spatial and spectral graph reasoning subnetworks. Comprehensive experiments on four public datasets demonstrate the competitiveness of the proposed methods compared with other state-of-the-art graph convolution-based approaches.

Index Terms:

Adaptively, graph convolution, global perception, spectral-spatial, hyperspectral image classification.I Introduction

Relying on excellent characteristics that intrinsic properties of targets can be identified by automatically extracting effective features in an end-to-end manner. Deep learning technologies are being extensively employed in the processing of hyperspectral images (HSIs). HSI includes abundant spectral information which is carried by hundreds of bands and vision representations presented by high spatial resolution, effectively serving precision agriculture [1], environmental monitoring [2], anomaly detection [3] and so on. Among these fields, hyperspectral image classification (HSIC) is always a fundamental and hot topic, where each pixel in the whole scene is needed to be assigned a unique semantic category.

Among existing deep learning technologies, convolutional neural network (CNN) is the most commonly used framework in the HSIC community [4, 5, 6, 7, 8, 9]. However, CNN can only aggregate the contexts in regular regions, while the objects in HSI usually have irregular distributions. Recently, many graph convolution network (GCN) [10] based methods are developed [11, 12, 13, 14, 15, 16, 17, 18, 19] to address this issue by treating objects as graph nodes. The graph structures are usually obtained through superpixel segmentation on the original image. However, the obtained fixed graph topologies still limit the performance.

Furthermore, conventional convolutions are local operators. It is difficult to model the dependencies between long-range positions, causing networks cannot fully leverage contextual information. For this problem, [20] introduces a self-attention mechanism to capture non-local contexts. However, since the similarities between each pixel and all the other positions need to be computed, high computational costs are required.

To tackle the above problems, in this paper, different from previous approaches, our method can produce dynamic graph structures through more flexible homogeneous areas. These areas are generated by conducting superpixel segmentation on intermediate features inside networks based on spectral-spatial similarities between pixels. Then, the graph nodes are produced by these areas. These nodes are also called descriptors since each vector is obtained by aggregating the pixel representations of an area. Compared to the nodes of previous approaches, our descriptors are more discriminative since they are adaptively obtained from constantly changed homogeneous areas with network learning. In addition, the number of descriptors is usually much less than the pixels. Therefore, our method requires lower complexities compared with the aforementioned non-local modules. What’s more, for the issue of local perception, the graph convolution in the proposed method is implemented in a global view to acquire more effective graph contexts. This can be implemented with the help of the self-attention mechanism. After the graph convolution, we obtain pixel-level results used for final classification by reasonably combining these descriptors.

It should be noticed that different from rich and complex natural image databases that have certain channels, HSI scene usually involves a single image with hundreds of bands that are determined by the sensor type. Thus, one of the key parts in HSI processing that can be distinguished from the natural image operation is to explore how to better exploit the spectral information. Existing literatures [21, 22] show that the adjacent bands of HSI also contain contextual information. Therefore, besides the graph convolution in the spatial aspect, we adopt a similar idea in the spectral aspect, i.e., reasonably aggregating these channels to generate spectral descriptors, and additionally employing graph convolution onto them to capture the relationships between different bands. This graph convolution is also implemented in a global perception manner.

Since our graph convolutions are both conducted globally, this is different from the general practice used in previous methods, where the convolution operation is only implemented on adjacent nodes. We call our method spectral-spatial graph reasoning network (SSGRN), which includes spatial and spectral two parts that are separately named spatial graph reasoning subnetwork (SAGRN) and spectral graph reasoning subnetwork (SEGRN). The main contributions of this paper can be summarized as follows:

-

1)

We propose an end-to-end spectral-spatial graph reasoning network named SSGRN. Compared with existing spectral-spatial joint networks, our model can adaptively capture the contexts lying in different objects or channels, despite they are in irregular distributions.

-

2)

We design a spatial subnetwork called SAGRN, where the superpixel segmentation is trainable, adaptively generating flexible homogeneous areas to produce effective descriptors, and we perceive the relationships between any descriptors by adopting global graph reasoning.

-

3)

A spectral subnetwork SEGRN is proposed to capture the contextual information lying in different bands using graph reasoning. As far as we know, it is the first time the relationships of spectral channels are explored from a graph perspective for HSIC.

-

4)

Benefitting from the proposed graph reasoning modules. Our networks achieve promising results on four HSIC benchmarks including Indian Pines, Pavia University, Salinas Valley, and University of Houston, compared with other GCN-based advanced methods.

The remainder of this paper is organized as follows. Section II gives an introduction of related works. Section III describes the proposed networks. Experiments and related comprehensive analyses are presented in section IV. Finally, Section V concludes the paper.

II Related Work

In this section, we first introduce the history of deep learning-based methods for HSIC. Then, since the proposed method is a segmentation network involving graph convolution, we review the deep learning-related segmentation approaches. Finally, we present the approaches related to graph convolution in the HSIC field.

II-A Deep Learning Method for HSIC

Early HSIC community extracts deep features by fully connected networks [23, 24, 25]. However, these networks require sufficient computations since each neuron needs to connect with all units in the next layer. To tackle these problems, many researchers use CNNs for pixel-level classification. In addition, compared with the DNNs receiving 1-D spectral vectors, CNNs process the clipped spatial patches around target pixels and have larger vision fields. In the past years, regarding abundant channels, mainstream deep learning-based methods are classification networks that receive spatial patches or spectral vectors of target pixels [4, 5, 6, 7, 8, 9]. In addition to being directly used as an extractor for single-scale features, CNNs can be modified to generate enhanced features for further accuracy improvement [26, 21, 22, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37]. However, as networks deepen, their abilities are soon saturated, because of the limited sizes of input patches despite theoretical receptive field may extraordinarily large. To this end, many methods adopt the fully convolutional network (FCN) — a special family of CNNs, where the whole image can be directly input into the network and all pixels are simultaneously classified [38, 39, 40, 41]. Although CNN has made promising achievements in HSIC, traditional convolutions still cannot meet the requirements of obtaining the relationships between objects with irregular distributions. Therefore, GCN-based methods are being developed. We will introduce the related technologies later.

II-B Semantic Segmentation

Most existing deep learning-based segmentation methods adopt FCN [42]. For example, UNet [43] and RefineNet [44] use encoder-decoder architecture to carefully recover details for upsampling. However, simple stacking ordinary convolution causes limited perceptions. To expand the vision field, GCN (global convolution network) [45] uses larger kernels, BiSeNet [46] adopts global pooling, while an effective encoding layer is introduced in EncNet [47]. Multiscale features are employed to improve segmentation performance, such as Deeplab [48] and PSPNet [49], which separately benefit from ASPP or PPM modules that adopt multiple dilated convolutions or spatial poolings in different scales. With flexible long-distance perception, self-attention mechanisms have also been introduced into the segmentation community. For instance, DANet [50] utilizes position and channel attention to separately obtain spatial and channel contexts. Pyramid-OCNet [51] restricts the spatial context capturing in grids generated by the PPM module. While in CCNet [52], only pixels lying in a criss-cross area can communicate with each other. Recently, descriptor-based methods are gradually being valued by researchers. To obtain descriptors, ACFNet [53] aggregates features of the same category in the label map, OCRNet [54] adopts the generated soft regions with the help of an auxiliary loss branch, while EMANet [55] directly defines and learns descriptors by Expectation-Maximization algorithm. In addition, the descriptors can interact with each other in various ways, and a typical implementation is with graph convolution [56], whose applications in the HSIC field will be detailed later.

In the HSIC domain, the development history of using segmentation networks is similar to the natural image. Nevertheless, there are still some differences because of the distinct characteristics of HSI. The earliest SSFCN [38] applies dilated convolutions into spatial and spectral feature extractions for image-level classification. Although FPGA [39] also adopts an encoder-decoder structure, it extra designs a stochastic stratified sampler to promote network convergence. ENL-FCN [57] and FullyContNet [58] employ self-attention mechanisms, too, and the latter captures sufficient contextual information by simultaneously introducing spatial, channel, and scale attentions. Unlike them, the proposed methods follow the pattern of employing descriptors to save computational overhead. Concretely, our SAGRN obtains the descriptors by gathering features in homogeneous areas that are generated by superpixel segmentation inside networks, while the counterparts in SEGRN are obtained by aggregating closely related adjacent channels.

II-C HSIC Using GCN

According to our literature survey, GCN is being widely used in the HSIC community, and many approaches [11, 12, 13, 14, 15, 16, 17, 18, 19] are constructed based on superpixel segmentation, since it can provide natural graph structure. For example, MDGCN [11] generates descriptors by directly superpixel segmenting the original image, and then performs the superpixel-level labeling. [12] initializes graph nodes with superpixel centers, and then the nodes are adjusted by modeling the relationships between the center and the pixels within a superpixel, the final classification map is obtained through a region-pixel transformation. Besides using a predefined graph. DGCN [59] separately generates point and distribution graphs with deep features to optimize the relationships between training samples, while EMS-GCN [16] conducts the superpixel segmentation procedure inside the network to generate an adaptive graph, too. Similar to multiple conventional convolutions, DIGCN [15] and XGPN [60] adopt parallel graph convolutions in different scales. While CEGCN [14] and LA-DG-GCN [61] further combine the features from GCN and CNN, respectively. Besides the spatial aspect, spectral information is also drawing researchers’ attention, and it can be exemplified by DASGCN [13]. In addition, some approaches aim to simplify the calculation of the original GCN for efficient HSIC. For instance, [62] operates on smaller graphs using mini-batch samples and the extracted sub-adjacent matrices. Some other schemes, such as spatial pooling [63], novel distance metric [64] and position coordinates [65] are being involved in the graph convolution procedure to achieve HSIC.

Compared with the above attempts, we must argue that the proposed methods are different from them. Firstly, in SEGRN, a spectral graph is adaptively generated since the nodes are obtained by aggregating adjacent channels of changing intermediate features in the network, while the corresponding graph in DASGCN is predefined. What’s more, although the descriptors in SAGRN are also built by superpixel segmentation on the intermediate feature, our spatial graph is dense, since our adjacent matrix is obtained by modeling the relationships between any descriptor with all other partners. Thus, our descriptors can capture global contexts, which are completely different from EMS-GCN, whose adjacent matrix is still produced through traditional similarity computation between neighboring nodes. At last, the proposed SSGRN can adaptively obtain spectral and spatial global graph contextual information.

III Proposed Methods

In this section, we first briefly introduce the definition of GCN. Then, the proposed networks including SAGRN, SEGRN, and SSGRN will be successively presented.

III-A Graph Convolutional Network

The original GCN [10] is defined as follows

| (1) |

Here, is the input feature, , where . is the adjacency matrix of the current graph that has nodes, and is the identity matrix, and are separately the output and trainable parameter matrices of the th layer, is the ReLU activation function.

From the above formula, we can see that the critical parts for graph reasoning are to effectively obtain and , while the proposed networks realize similar ideas.

III-B Spatial Graph Reasoning Subnetwork

Hundreds of bands and high spatial resolution in HSI provide a close spectral-spatial relationship between pixels, and this contextual information is easily utilized by superpixel segmentation, which can be implemented by adopting the SLIC algorithm [66] to generate a series of compact superpixels. However, the original SLIC algorithm is difficult to be directly placed into the network for end-to-end training because of undifferentiable min-max operations. [67] addresses this problem by transforming these operations to differentiable weighted addition. With this technique, we successfully obtain superpixel segments inside the network and generate effective descriptors. In addition, it should be noticed that the generated superpixels bring graph structure and the number of clustered districts is significantly less than the original pixels, benefitting conducting graph reasoning with high efficiency.

In SAGRN, the obtained superpixels and descriptors are actually homogeneous regions and a group of vectors . Concretely, each descriptor is computed by taking the average of the features in the corresponding area. Then, the input of the GCN is obtained from using linear mapping. This process is shown as follows

| (2) |

where is the input feature, and represent the superpixel generating procedure and the corresponding segmentation result map. There are a total of descriptors and is the index, , and are separately the number of channels, height, and width of . is a binary indicator that judges whether the value of the th pixel in is equal to . represents a 1 1 convolutional layer, which is used to conduct information integration for different channels.

To obtain , different from the conventional adjacent matrix that only considers neighbor nodes, we treat it as a dense graph where each node possesses a relationship with all the other nodes to better capture graph contexts. Specifically, the relationship in each pair of nodes is measured by computing their similarity in a mapped latent space

| (3) |

where and are mapping functions, both of which can be implemented with a 1 1 convolution. Then, we normalize the similarity matrix using the softmax function.

After obtaining and , spatial graph reasoning is achieved by directly adopting the GCN formula

| (4) |

where is trainable parameters and ReLU is used as the activation function . It can be seen that each district-level feature is enhanced since it has a global view that captures the contextual information lying in all the other nodes.

At last, these enhanced nodes need to be reprojected for recovering the shape of pixel-level features. The construction of pixel-level features depends on a reasonable combination of descriptors since they possess specific connotations that are various from each other. In this paper, the node vectors after reasoning are considered as a group of bases that can form an effective feature space, where the information at any point can be inferred based on a linear aggregation of these vectors for more complex semantic understanding.

To this end, assume . The affinities between feature and node set are firstly measured in a newly transformed space

| (5) |

Then the target pixel-level feature is subsequently obtained by linearly combining these graph nodes, where the affinity matrix is served as corresponding weights. Thus, , and we subsequently reshape to . Here, , , and are all implemented with a 1 1 convolution, and the subscript main means the main branch to distinguish the later introduced auxiliary path in the network. In the above procedure, for convenience. After obtaining the , through a series of layers, denoted as , including a 3 3 convolutional layer followed by a group normalization (GN) layer, a ReLU function, a 1 1 convolutional layer, and a bilinear upsampling function, the probability matrix is acquired for computing loss, where is the number of categories. This procedure is symbolized as follows.

| (6) |

However, in the early stage of training, disorganized high-level features may be unfavorable to the homogeneous region generation, and classification quality is unavoidably suffered with the affected descriptors. To produce more stable superpixels, we add an auxiliary branch (AB) to achieve fast convergence and obtain probability matrix like the main branch.

| (7) |

In the proposed methods, the loss function is defined to , where is the ground truth and is implemented with the cross-entropy loss, thus the total loss of SAGRN is

| (8) |

The diagram of SAGRN is presented in Figure 1 (A)-(a).

III-C Spectral Graph Reasoning Subnetwork

In SEGRN, we adopt a similar idea as SAGRN. Since the homogeneous areas in SAGRN are regarded as clusters of pixels. Thus, it is natural to consider reasonably obtaining channel clusters. For this purpose, inspired by [21, 22], we directly take the mean value of adjacent channels as spectral descriptors. This can be realized by grouping the feature maps in the channel direction.

Concretely, for a input feature with bands, assume they are separately assigned to groups , then the th group is

| (9) |

Thus the th spectral descriptor can be obtained through

| (10) |

The remaining steps are similar to SAGRN. It should be noticed that is downsampled from by average pooling before conducting graph reasoning to save computational resources. Therefore, , , and we group the downsampled to generate spectral descriptors . The pixel-level feature is reconstructed after a reasonable descriptor linear combination. Then, a bilinear interpolation is employed to ensure the feature sizes are consistent in subsequent aggregations. Through SEGRN, we successfully perform graph reasoning in spectral direction since the contextual information lying in different channels is perceived, and we obtain an enhanced feature, where each channel is improved by capturing the contexts of other bands.

At last, the loss of SEGRN is computed by

| (11) |

The diagram of SEGRN is depicted in Figure 1 (A)-(b).

III-D Spectral-Spatial Graph Reasoning Network

The feature is obtained through a backbone network containing three blocks. Each block includes a convolutional layer followed by a GN layer and a ReLU function. There is a 2x downsampling after the first block to reduce memory consumption. The whole network is trained from scratch and does not need any pre-trained parameters of existing popular models.

After passing through SAGRN and SEGRN, we obtain the corresponding enhanced features and . To preserve the original information of the input feature , we adopt a residual skip connection, thus the spectral-spatial fused feature is defined as

| (12) |

And the corresponding loss is also obtained by

| (13) |

At last, the total loss of the proposed SSGRN is computed through

| (14) |

The whole diagram of SSGRN is shown in Figure 1 (A).

IV Experiments

In this section, we first introduce the used datasets and implementation details, then we conduct a series of comprehensive assessments of the proposed methods, including hyperparameter selection, module ablation study, and model complexity analysis. The performance and stability comparisons between our methods with other state-of-the-art approaches are subsequently presented. In the end, we visualize some internal variables to further assist in understanding the mechanisms inside the network.

IV-A Dataset

| Class ID | Category | Color | Training | Validation | Testing | Total |

| 1 | Alfalfa | 26 | 7 | 13 | 46 | |

| 2 | Corn-notill | 80 | 20 | 1328 | 1428 | |

| 3 | Corn-mintill | 80 | 20 | 730 | 830 | |

| 4 | Corn | 80 | 20 | 137 | 237 | |

| 5 | Grass-pasture | 80 | 20 | 383 | 483 | |

| 6 | Grass-trees | 80 | 20 | 630 | 730 | |

| 7 | Grass-pasture-mowed | 16 | 4 | 8 | 28 | |

| 8 | Hay-windrowed | 80 | 20 | 378 | 478 | |

| 9 | Oats | 11 | 3 | 6 | 20 | |

| 10 | Soybean-notill | 80 | 20 | 872 | 972 | |

| 11 | Soybean-mintill | 80 | 20 | 2355 | 2455 | |

| 12 | Soybean-clean | 80 | 20 | 493 | 593 | |

| 13 | Wheat | 80 | 20 | 105 | 205 | |

| 14 | Woods | 80 | 20 | 1165 | 1265 | |

| 15 | Buildings-Grass-Trees-Drives | 80 | 20 | 286 | 386 | |

| 16 | Stone-Steel-Towers | 60 | 15 | 18 | 93 | |

| Total | 1073 | 269 | 8907 | 10249 |

| Class ID | Category | Color | Training | Validation | Testing | Total |

| 1 | Asphalt | 80 | 20 | 6531 | 6631 | |

| 2 | Meadows | 80 | 20 | 18549 | 18649 | |

| 3 | Gravel | 80 | 20 | 1999 | 2099 | |

| 4 | Trees | 80 | 20 | 2964 | 3064 | |

| 5 | Metal sheets | 80 | 20 | 1245 | 1345 | |

| 6 | Bare soil | 80 | 20 | 4929 | 5029 | |

| 7 | Bitumen | 80 | 20 | 1230 | 1330 | |

| 8 | Bricks | 80 | 20 | 3582 | 3682 | |

| 9 | Shadows | 80 | 20 | 847 | 947 | |

| Total | 720 | 180 | 41876 | 42776 |

| Class ID | Category | Color | Training | Validation | Testing | Total |

| 1 | Brocoli green weeds 1 | 80 | 20 | 1909 | 2009 | |

| 2 | Brocoli green weeds 2 | 80 | 20 | 3626 | 3726 | |

| 3 | Fallow | 80 | 20 | 1876 | 1976 | |

| 4 | Fallow rough plow | 80 | 20 | 1294 | 1394 | |

| 5 | Fallow smooth | 80 | 20 | 2578 | 2678 | |

| 6 | Stubble | 80 | 20 | 3859 | 3959 | |

| 7 | Celery | 80 | 20 | 3479 | 3579 | |

| 8 | Grapes untrained | 80 | 20 | 11171 | 11271 | |

| 9 | Soil vinyard develop | 80 | 20 | 6103 | 6203 | |

| 10 | Corn senesced green weeds | 80 | 20 | 3178 | 3278 | |

| 11 | Lettuce romaine 4wk | 80 | 20 | 968 | 1068 | |

| 12 | Lettuce romaine 5wk | 80 | 20 | 1827 | 1927 | |

| 13 | Lettuce romaine 6wk | 80 | 20 | 816 | 916 | |

| 14 | Lettuce romaine 7wk | 80 | 20 | 970 | 1070 | |

| 15 | Vinyard untrained | 80 | 20 | 7168 | 7268 | |

| 16 | Vinyard vertical trellis | 80 | 20 | 1707 | 1807 | |

| Total | 1280 | 320 | 52529 | 54129 |

| Class ID | Category | Color | Training | Validation | Testing | Total |

| 1 | Grass healthy | 80 | 20 | 1151 | 1251 | |

| 2 | Grass stressed | 80 | 20 | 1154 | 1254 | |

| 3 | Grass synthetic | 80 | 20 | 597 | 697 | |

| 4 | Trees | 80 | 20 | 1144 | 1244 | |

| 5 | Soil | 80 | 20 | 1142 | 1242 | |

| 6 | Water | 80 | 20 | 225 | 325 | |

| 7 | Residential | 80 | 20 | 1168 | 1268 | |

| 8 | Commercial | 80 | 20 | 1144 | 1244 | |

| 9 | Road | 80 | 20 | 1152 | 1252 | |

| 10 | Highway | 80 | 20 | 1127 | 1227 | |

| 11 | Railway | 80 | 20 | 1135 | 1235 | |

| 12 | Parking lot1 | 80 | 20 | 1134 | 1234 | |

| 13 | Parking lot2 | 80 | 20 | 369 | 469 | |

| 14 | Tennis court | 80 | 20 | 328 | 428 | |

| 15 | Running track | 80 | 20 | 560 | 660 | |

| Total | 1200 | 300 | 13511 | 15011 |

-

1)

Indian Pines: This scene was gathered in North-western Indiana by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor in 1992, consisting 200 bands with the size of 145 145 pixels that are in 20m spatial resolution after water absorption bands were removed and in the wavelength range of 0.4-2.5m. 16 vegetation classes are involved in this scene. The settings of category color, training, validation, and testing samples have been presented in Table I.

-

2)

Pavia University: This scene was obtained over Pavia University in Northern Italy by Reflective Optics System Imaging Spectrometer (ROSIS) in 2001, consisting 103 bands with the size of 610 340 pixels that are in 1.3m spatial resolution and the wavelength range of 0.43-0.86m. 9 categories are included in this data. The experimental settings are shown in Table II.

-

3)

Salinas Valley: This scene was collected over Salinas Valley, California by the AVIRIS sensor, consisting 204 bands with the size of 512 217 pixels in 3.7m spatial resolution. Same as the Indian Pines scene, 20 water absorption bands were discarded. 16 categories including vegetables, bare soils, and vineyard fields are involved, see Table III for experimental settings.

-

4)

University of Houston: This scene was acquired over the University of Houston campus and its neighboring regions by ITRES-CASI 1500 sensor in 2012, containing 144 bands with the size of 349 1905 pixels that in 2.5m spatial resolution and in the wavelength range of 0.4-1.0m. 15 categories are included in this data. Table IV lists the experimental configurations. This scene was also used in the 2013 IEEE GRSS Data Fusion Contest.

IV-B Implementation Details and Experimental Settings

| Part | Layer | Parameter | GN & ReLU | |||

| Backbone | Block1 | 64, 3, 1, 1, 1 | ✔ | |||

| Max Pool | 64, 2, 2, 0, 1 | |||||

| Block2 | 128, 3, 1, 1, 1 | ✔ | ||||

| Block3 | 256, 3, 1, 1, 1 | ✔ | ||||

| SAGRN | 1 | 64, 1, 1, 0, 1 | ||||

| 64, 1, 1, 0, 1 | ||||||

| 16, 1, 1, 0, 1 | ||||||

| 64, 1, 1, 0, 1 | ||||||

| 64, 1, 1, 0, 1 | ||||||

| 16, 1, 1, 0, 1 | ||||||

|

|

|||||

|

|

|||||

| SEGRN | 3 , 1, 1, 0, 1 | |||||

| , 1, 1, 0, 1 | ||||||

| , 1, 1, 0, 1 | ||||||

| , 1, 1, 0, 1 | ||||||

| , 1, 1, 0, 1 | ||||||

| , 1, 1, 0, 1 | ||||||

|

|

|||||

| SSGRN |

|

|

-

1

Sub scripts are omitted to align with Figure 1.

-

2

Category number.

-

3

and are height and width of the feature .

We employ Pytorch to implement the proposed methods. The base learning rate is set to 1e-3, which is adaptively adjusted with the poly scheduling strategy and multiplied by . The SGD with momentum optimization algorithm is used for training, where the momentum is set to 0.9 and the weight decay is configured as 0.0001. During training, iteration and batch size are always 1 in each epoch since the whole image is directly input into the network, and the total training iteration number is set to 1K. The structure details of the proposed networks are shown in Table V and the values of “Parameter” separately represent “channel number”, “kernel size”, “stride”, “padding size” and “dilation rate” of the corresponding layer. In addition, this table also indicates whether GN and ReLU are used after the convolutional layer. Besides standardization, we do not adopt any data augmentations on the proposed methods.

In our implementations, training samples are obtained by randomly choosing. The remainder consists of a validation set and a testing set. Accuracies are evaluated on the testing set, while the validation set is used for monitoring model status during training. All experiments are repeated 10 times. Three commonly used evaluation criteria in the HSIC community are applied in the experiments, including overall accuracy (OA), average accuracy (AA), and Kappa coefficient (Kappa). OA is the most popular evaluation criterion, which is calculated by dividing the number of correctly classified pixels by the number of pixels that need to be judged. However, OA is usually affected by the phenomenon of unbalance categories. To tackle this problem, AA and Kappa are separately computed based on the confusion matrix. The recall values of all categories are averaged to get AA, while the Kappa is used for measuring classification consistency to penalize the model possessing category preference. All experiments are conducted using an NVIDIA Tesla V100 GPU.

| Method | SAGR | SEGR |

|

|

OA(%) | AA(%) | Kappa(%) | ||||

| FCN | / | 91.06 | 96.35 | 89.71 | |||||||

| FCN+SAGR | ✔ | / | / | 89.87 | 91.81 | 88.31 | |||||

| SAGRN (FCN+SAGR+AB) | ✔ | / | ✔ | / | 94.04 | 95.66 | 93.11 | ||||

| SEGRN (FCN+SEGR) | / | ✔ | / | / | 95.77 | 98.12 | 95.12 | ||||

| FCN+SAGR+SEGR | ✔ | ✔ | 97.06 | 98.75 | 96.60 | ||||||

| FCN+SAGR+SEGR | ✔ | ✔ | ✔ | 97.37 | 98.74 | 96.95 | |||||

| SSGRN (FCN+SAGR+SEGR+AB) | ✔ | ✔ | ✔ | ✔ | 97.87 | 98.77 | 97.52 |

| Method | Params(M) | FLOPs(G) | OA(%) | AA(%) | Kappa(%) |

| FCN | 0.49 | 8.70 | 91.06 | 96.35 | 89.71 |

| FCN+PAM | 0.86 | 20.31 | 93.95 | 97.06 | 93.01 |

| FCN+RCCA() | 0.86 | 16.00 | 93.40 | 95.38 | 92.38 |

| FCN+SAGR | 0.98 | 12.46 | 89.87 | 91.81 | 88.31 |

| SAGRN (FCN+SAGR+AB) | 1.28 | 15.54 | 94.04 | 95.66 | 93.11 |

| FCN+CAM | 0.78 | 12.42 | 94.34 | 97.52 | 93.47 |

| SEGRN (FCN+SEGR) | 1.09 | 12.00 | 95.77 | 98.12 | 95.12 |

IV-C Parameter Analysis

In the proposed methods, only the descriptor numbers in SAGRN and SEGRN need to be configured manually. They are exactly the number of nodes in spatial and spectral graphs. They also implicitly determine the average size of homogeneous regions or channels, which affect the scale of context aggregations when generating descriptors. Because of this, we analyze the influences of different descriptor numbers on the network performance, and the corresponding accuracies including OA, AA, and Kappa have been displayed in Figure 2. It can be observed that the OA keeps increasing as the descriptors grow. Concretely, when increasing descriptors, accuracies are improved quickly in the early stage, but growth rates gradually slow down, and the turning points happen on 16 and 32 in SAGRN and SEGRN, respectively. However, when the descriptor number of SAGRN is larger than 256, accuracies have a slight decline. In our consideration, too manly homogeneous areas fragment the feature, and these small areas narrow the scope of region aggregation, which may weaken the representative ability of the generated descriptors. When there are few descriptors, accuracies may decrease instead even if the descriptor numbers are improved, especially in SEGRN. Moreover, in SEGRN, we also notice that accuracies keep at a low level when the descriptor number is less than 16 because spectral information is lost when a large number of bands are aggregated at once. In our experiments, to obtain relatively high accuracies while reducing the computational complexity as much as possible, we set the descriptor number in SAGRN and SEGRN both to 256.

IV-D Ablation Study

We evaluate different parts of the proposed methods on the Indian Pines dataset, including the branches of spatial or spectral graph reasoning (SAGR or SEGR) and the AB in SAGRN. In addition, the influence of multiple loss functions (we mainly consider the additional and , while is not included) are also considered, and the results are shown in Table VI. It can be seen that the proposed methods improve FCN a lot with the help of graph reasoning. However, without the AB, the accuracies of SAGRN degrade seriously since a rough feature cannot produce high-quality homogeneous areas and effective descriptors. The extra-added AB directly supervises the corresponding feature rather than the reprojected feature like the SAGR path, shortening the distance from feature to the classifier end, overcoming the difficult learning phenomenon that is caused by weak gradient propagation, and further promoting the network convergence. Thus, the abilities of SAGR are helped to be more fully exploited with the high-quality feature generated under the aid of AB, obtaining better results. Compared with FCN, SEGRN performs better since the contexts lying in different channels are well extracted by implementing SEGR, showing the importance of spectral information in HSI interpretation. It is also a critical characteristic to distinguish HSI from natural images. By jointly taking the advantage of SAGR and SEGR, SSGRN achieves the best performance, especially when equipped with and , since the gradients gain better propagations in the corresponding SAGRN and SEGRN.

IV-E Model Complexity

To more comprehensively analyze the proposed methods, we assess the parameter number (Params) and computational complexity of SAGRN and SEGRN, while the latter is shown in the form of floating-point operations per second (FLOPs), and the results are shown in Table VII. Based on the backbone network of FCN, we simultaneously compare performances of the proposed SAGR and SEGR with other existing commonly employed non-local context capturing modules that utilize self-attention mechanisms, including PAM[50], RCCA[52] and CAM[50], where the recurrent number of RCCA is set to 2 to keep consistent with the original literature. It can be seen that our methods perform better than other modules since they achieve competitive accuracies with fewer computations. Concretely, on the square Indian Pines dataset, the inner product operations in PAM and RCCA are separately implemented and times, while the proposed SAGR only needs times, where is the pixel number of input features, is the number of spatial descriptors, in practice . In SEGRN, the complexity of SEGR is less than CAM since the feature is downsampled before implementing graph reasoning. Although the accuracies of FCN+PAM on the Indian Pines dataset are close to that of FCN+SAGR+AB, due to memory issues, it may not be able to handle some large scenes at once, such as the University of Houston dataset, which unavoidably affects practical applications. Actually, in our experiments, the combination of FCN, SAGR, and AB equals SAGRN, while FCN+SEGR equals SEGRN.

IV-F Performance Comparison

| Method | OA | AA | Kappa |

| 1-DCNN | 73.193.61 | 83.292.12 | 69.523.74 |

| 2-DCNN | 82.941.84 | 92.880.83 | 80.552.06 |

| SSFCN | 89.621.45 | 94.250.90 | 88.121.65 |

| GCN | 70.221.51 | 79.001.20 | 66.271.53 |

| Mini-GCN | 76.152.94 | 75.961.79 | 73.113.09 |

| MDGCN | 94.370.67 | 92.911.42 | 93.500.77 |

| CADGCN | 89.360.82 | 93.241.01 | 87.710.93 |

| DASGCN | 74.841.87 | 76.283.25 | 71.122.19 |

| EMS-GCN | 67.723.29 | 68.373.40 | 63.353.27 |

| SAGRN | 94.041.63 | 95.661.99 | 93.111.88 |

| SEGRN | 95.770.74 | 98.120.36 | 95.120.85 |

| SSGRN | 97.870.55 | 98.770.19 | 97.520.63 |

| Method | OA | AA | Kappa |

| 1-DCNN | 75.392.49 | 83.131.10 | 68.732.60 |

| 2-DCNN | 88.191.01 | 89.980.53 | 84.491.27 |

| SSFCN | 85.991.76 | 90.320.95 | 81.832.21 |

| GCN | 81.832.26 | 87.600.48 | 76.752.62 |

| Mini-GCN | 85.222.44 | 87.151.23 | 80.742.96 |

| MDGCN | 95.701.02 | 93.430.44 | 94.301.33 |

| CADGCN | 92.082.66 | 90.433.36 | 89.643.27 |

| DASGCN | 89.771.26 | 84.132.15 | 86.301.67 |

| EMS-GCN | 77.693.50 | 83.773.06 | 71.874.29 |

| SAGRN | 95.381.33 | 94.441.97 | 93.861.77 |

| SEGRN | 86.132.48 | 87.491.44 | 81.933.09 |

| SSGRN | 98.700.42 | 98.840.31 | 98.270.56 |

| Method | OA | AA | Kappa |

| 1-DCNN | 86.923.82 | 93.631.09 | 85.494.12 |

| 2-DCNN | 87.582.33 | 92.871.08 | 86.212.55 |

| SSFCN | 92.990.35 | 96.490.37 | 92.200.40 |

| GCN | 88.211.07 | 94.140.46 | 86.861.18 |

| Mini-GCN | 90.120.14 | 93.920.37 | 89.000.14 |

| MDGCN | 98.370.31 | 98.460.16 | 98.180.35 |

| CADGCN | 97.930.29 | 98.110.38 | 97.690.32 |

| DASGCN | 92.641.27 | 92.401.71 | 91.811.42 |

| EMS-GCN | 93.311.17 | 92.901.56 | 92.551.29 |

| SAGRN | 96.910.58 | 98.480.33 | 96.550.65 |

| SEGRN | 92.222.77 | 93.682.24 | 91.363.06 |

| SSGRN | 99.340.46 | 99.600.21 | 99.260.51 |

| Method | OA | AA | Kappa |

| 1-DCNN | 80.230.84 | 82.090.89 | 78.610.91 |

| 2-DCNN | 84.081.18 | 87.010.93 | 82.771.28 |

| SSFCN | 84.143.26 | 85.842.98 | 82.863.51 |

| GCN | 85.730.95 | 86.410.79 | 84.541.03 |

| Mini-GCN | 83.511.86 | 84.461.66 | 82.152.01 |

| MDGCN | 92.130.41 | 93.250.33 | 91.480.45 |

| CADGCN | 93.050.68 | 93.270.54 | 92.480.74 |

| DASGCN | 67.654.14 | 70.514.34 | 65.024.49 |

| EMS-GCN | 61.871.47 | 65.221.56 | 58.891.60 |

| SAGRN | 92.381.94 | 92.201.83 | 91.752.10 |

| SEGRN | 89.871.52 | 91.801.24 | 89.041.63 |

| SSGRN | 95.591.95 | 96.521.56 | 95.232.11 |

We conduct a comparison between the proposed methods with other classical or state-of-the-art approaches, including typical CNN-based networks: 1-DCNN [6] for spectral classification with spectral vector, 2-DCNN [6] for spatial classification using spatial patches, and an FCN-based algorithm SSFCN [38] for spectral-spatial joint classification. As for approaches on the foundation of graph convolution, we choose classical GCN [10] and Mini-GCN [62], while MDGCN[11], CADGCN [12], DASGCN [13] and EMS-GCN [16] are selected as advanced GCN relevant techniques. The implementation of GCN is in the pattern of transductive learning, where training and testing sets are simultaneously fed into the model since each pixel sample is regarded as a graph node, and it unavoidably consumes too many computational resources. Mini-GCN simplifies this problem by using a shrunk adjacency matrix that is obtained through only computing on the nodes in the current mini-batch, while the graph nodes in MDGCN are obtained from the segmented superpixels and the classification is performed at the superpixel-level, too. CADGCN also uses superpixels to generate graph nodes and performs a pixel-region-pixel transformation. By using predefined superpixels, DASGCN simultaneously obtains spatial and spectral information and samples different pixels as nodes for performing graph convolutions. While EMS-GCN captures spatial graph contexts by dynamic superpixel maps that are generated by networks, they additionally employ channel attention to enhance spectral features.

Table VIII-XI lists the classification accuracies of each algorithm, where the mean value and standard deviation are reported at the same time. It can be seen that the FCN-based SSFCN usually performs better than the CNN-based 1-DCNN and 2-DCNN, showing the importance of spectral-spatial combination in HSIC. However, the accuracies of SSFCN are still limited because SSFCN only perceives local information since its convolutions are local operators, and regular context capturing can not align with irregular object distributions. For graph convolution-based methods, redundant calculations of adjacent pixels in GCN affect the classification while Mini-GCN attempts to use a simplified adjacency matrix to accelerate this procedure with a mini-batch training strategy. However, in GCN and Mini-GCN, pixels without representation of various contexts are directly set as descriptors, degrading the classification. Some effective descriptors are obtained in MDGCN, CADGCN, and DASGCN with the help of superpixel segmentation on the original image. Thus, they get higher accuracies than GCN and Mini-GCN, especially on Pavia University and Salinas Valley datasets. What needs to be noticed is that the adjacency matrices in MDGCN, CADGCN, and DASGCN are both calculated by only considering the relationships between neighbor nodes, which may be limited in the long strip University of Houston scene. Among these methods, DASGCN performs the worst since it conducts the pixel-level graph convolution that has shorter context ranges. We also notice EMS-GCN does not perform well in almost all datasets. It is probably because the obtained dynamic superpixel map during network training is not stable. At this time, the produced graph features by local context aggregations may be not effective. Compared with the above methods, although homogeneous areas are produced by implementing superpixel segmentation on intermediate features in the network, our SAGRN performs better since we adopt a global view where the relationships between each node and all the other nodes are measured, and more effective descriptors can be flexibly and adaptively generated. By implementing spectral reasoning, SEGRN successfully captures the relationships lying in different channels. At last, combining the spatial and spectral graph contexts that are separately obtained by SAGRN and SEGRN, the proposed SSGRN achieves the best overall accuracies of 97.87%, 98.70%, 99.34%, and 95.59%, respectively, exceeding the second place by 3.50%, 3.00%, 1.41%, and 2.54% on Indian Pines, Pavia University, Salinas Valley and University of Houston datasets.

The classification maps of the above methods have been depicted in Figure 3-6. On the Indian Pines dataset, it can be seen that the spectral vector-based methods 1-DCNN, GCN, and Mini-GCN have serious point noises. The introduced spatial information alleviates this issue, making the maps of 2-DCNN and SSFCN cleaner. However, their conventional convolutional filters can not capture irregular contexts, especially in areas where objects are densely distributed, such as red and pink boxes. In GCN-based methods, MDGCN and CADGCN partly solve this problem by adopting convolutions on graph structures. Compared with them, the proposed methods have longer graph context perception distances, and we also consider spectral information. Therefore, SSGRN can simultaneously obtain discriminative results where the objects possess continuous surfaces, well-maintained edges, and are close to the ground truth in the box displayed areas. On the Pavia University dataset, our method produces more smooth surfaces inside objects, see red and pink boxes. In the region of the red box at the Salinas Valley scene, the proposed method generates a preferable visual result with fewer misclassifications compared to other approaches. On the challenging University of Houston dataset, whose classification maps are difficult to be distinguished, since a large number of small objects are distributed dispersedly. Thus, we select and enlarge two typical regions for comparison, which have been presented in red and blue boxes. We can find that MDGCN and the proposed methods perform well on relatively large objects, so we further strengthen restrictions with pink and purple circles, respectively. It can be seen that benefitting from the global graph context capturing, only our SAGRN and SSGRN correctly classify all small objects in these areas. In addition, in our intuition, SEGRN is probably not as good as SAGRN since it only exploits spectral contexts. Surprisingly, SEGRN performs better than SAGRN on the Indian Pines dataset. It may be because the Indian Pines image possesses more channels. This result implies the importance of channel information in HSIC.

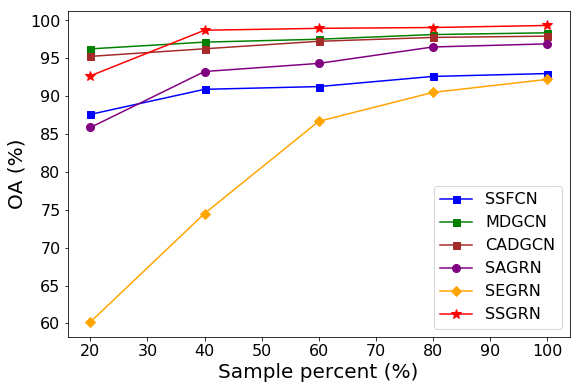

We also evaluate the stability of different methods. Specifically, we adopt different percentages for training samples in Table I-IV. To highlight comparison, we only evaluate the methods that have relatively high accuracies in previous performance comparison experiments, including SSFCN, MDGCN, CADGCN, SAGRN, SEGRN, and SSGRN. The corresponding overall accuracies are shown in Figure 7. In an overall view, SSFCN performs the worst and has no advantage in almost all cases since its conventional convolutions cannot perceive irregularly distributed objects. By addressing this problem using graph convolution with the help of superpixel segmentation, MDGCN and CADGCN perform better than SSFCN. In addition, since their superpixels are always generated on the original image, the number of superpixel regions is far less than the pixel number. Thus, the performances of MDGCN and CADGCN are still stable when using fewer samples, especially for MDGCN that adopts superpixel-level prediction. Owing to the proposed graph reasonings are based on high-level features, whose qualities heavily depend on the learnable knowledge of the training data. Thus, our methods may not be as effective as MDGCN and CADGCN when training samples are extremely scarce (e.g. 20%). Nonetheless, the proposed SAGRN and SEGRN are rapidly improved once the number of training samples is increased. Notice that SEGRN is more preferring the Indian Pines scene, while SAGRN does the opposite. By combining spatial and spectral graph information, SSGRN achieves promising results in most sample scenarios and performs to be comparable even if in cases of few samples.

IV-G Visualization

To more intuitively understand our methods, we separately visualize the affinity matrices in SAGRN and SEGRN in Figure 8-9, which separately indicates the similarity of the selected descriptors in different spatial positions or channels of the feature , and the responsibility intensity is represented by different colors. In Figure 8, the red color means high affinities. It can be seen that different descriptors highlight different areas, demonstrating that they separately possess closer relationships with corresponding regions. In other words, the connotations of these descriptors are certainly the meaning of emphasized areas. These concepts serve as basic components that can be organized by linearly aggregating the transformed graph nodes in to generate more complex semantic information for understanding other positions. In Figure 9, each row represents the weights on different channels for a specific descriptor, while the columns represent different channels. If one descriptor pays more attention to some channels, then the colors of these columns will be closer to white. To our surprise, most descriptors present similar characteristics. For example, almost all descriptors show high responsibilities on channels in the red box. Nevertheless, there are also some descriptors behaving differently and bringing diverse spectral information, which can be exemplified by the descriptor in the orange box. Although only a few descriptors perform in special, SEGRN still achieves comparable accuracies. The visualization of spectral descriptors also indicates spectral features still have great potentials to be mined for HSIC.

We also assess the distinguishability of extracted features in the proposed SSGRN at a three-dimensional space by utilizing t-SNE dimension reduction [68]. The distributions for reference samples at the corresponding input image, the feature generated by the backbone network, the probability matrix in AB, the of SAGRN, the of SEGRN, and the fused feature are separately shown in Figure 10. It can be observed that the samples on the original image space are mixed-up and difficult to be identified, while after backbone network encoding, the obtained starts to be separable, and AB further strengthens the distinction. Benefitting from graph reasoning modules, the pixel representations of , and possess high separability with larger inter-class distances, and the points of each category constitute a unique manifold, indicating that the latent patterns of corresponding categories have been perceived, demonstrating the effectiveness of the proposed SSGRN.

V Conclusion

In this paper, we propose a network called SSGRN to classify the HSI. Considering the irregular distributions of land objects and the various relationships among different spectral bands, the corresponding contextual information is more suitable to be extracted from a graph perspective. Concretely, this network contains two subnetworks that separately extract spatial and spectral graph contexts. In spatial subnetwork SAGRN, to generate more effective descriptors for graph reasoning, different from previous approaches implementing superpixel segmentation on the original image, we move this procedure to intermediate features inside the network. Based on pixel spectral-spatial similarities, we can flexibly and adaptively produce homogeneous regions. Then, descriptors are acquired by separately aggregating these regions. In addition, we conduct a similar operation on channels to obtain spectral graph contexts in a spectral subnetwork named SEGRN, where the spectral descriptors are gained by reasonably grouping different channels. These graph reasoning procedures in spectral and spatial subnetworks are all achieved with graph convolution, where the adjacent matrices are obtained by computing the similarities among all nodes to ensure global perceptions. SSGRN is finally produced by combining SAGRN and SEGRN to further improve the classification. It needs to be noticed that auxiliary branch and multiple loss strategy are separately applied to SAGRN and SSGRN to accelerate network convergence. A series of extensive experiments show that the proposed methods not only maintain high accuracy but also reduce computational resource consumption. Quantitative and qualitative performance comparisons show the competitiveness of the proposed methods compared with other state-of-the-art approaches, even if in the case of fewer samples. The final visualizations make the proposed methods more convincing.

Since the descriptors are obtained by directly aggregating the surrounding areas or channels of the target pixel or band, which may contain irrelevant information and bring noises. Thus, in future work, we will improve the descriptor generation procedure to obtain more serviceable representations.

References

- [1] X. Zhang, Y. Sun, K. Shang, L. Zhang, and S. Wang, “Crop classification based on feature band set construction and object-oriented approach using hyperspectral images,” IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens., vol. 9, no. 9, pp. 4117–4128, Sep. 2016.

- [2] X. Yang and Y. Yu, “Estimating soil salinity under various moisture conditions: An experimental study,” IEEE Trans. Geosci. Remote Sens., vol. 55, no. 5, pp. 2525–2533, May 2017.

- [3] S. Matteoli, M. Diani, and G. Corsini, “A tutorial overview of anomaly detection in hyperspectral images,” IEEE Aerosp. Electron. Syst. Mag., vol. 25, no. 7, pp. 5–28, 2010.

- [4] W. Hu, Y. Huang, L. Wei, F. Zhang, and H. Li, “Deep convolutional neural networks for hyperspectral image classification,” J. Sensors, vol. 2015, 2015.

- [5] W. Zhao and S. Du, “Spectral-spatial feature extraction for hyperspectral image classification: A dimension reduction and deep learning approach,” IEEE Trans. Geosci. Remote Sens., vol. 54, no. 8, pp. 4544–4554, Aug 2016.

- [6] Y. Chen, H. Jiang, C. Li, X. Jia, and P. Ghamisi, “Deep feature extraction and classification of hyperspectral images based on convolutional neural networks,” IEEE Trans. Geosci. Remote Sens., vol. 54, no. 10, pp. 6232–6251, Oct 2016.

- [7] Z. Zhong, J. Li, Z. Luo, and M. Chapman, “Spectral-spatial residual network for hyperspectral image classification: A 3-d deep learning framework,” IEEE Trans. Geosci. Remote Sens., vol. 56, no. 2, pp. 847–858, Feb 2018.

- [8] L. Zhang, Q. Zhang, B. Du, X. Huang, Y. Y. Tang, and D. Tao, “Simultaneous spectral-spatial feature selection and extraction for hyperspectral images,” IEEE Trans. Cybern., vol. 48, no. 1, pp. 16–28, 2018.

- [9] Z. Feng, S. Yang, M. Wang, and L. Jiao, “Learning dual geometric low-rank structure for semisupervised hyperspectral image classification,” IEEE Trans. Cybern., vol. 51, no. 1, pp. 346–358, 2021.

- [10] T. N. Kipf and M. Welling, “Semi-supervised classification with graph convolutional networks,” in ICLR, 2017.

- [11] S. Wan, C. Gong, P. Zhong, B. Du, L. Zhang, and J. Yang, “Multiscale dynamic graph convolutional network for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., vol. 58, no. 5, pp. 3162–3177, 2020.

- [12] S. Wan, C. Gong, P. Zhong, S. Pan, G. Li, and J. Yang, “Hyperspectral image classification with context-aware dynamic graph convolutional network,” IEEE Trans. Geosci. Remote Sens., pp. 1–16, 2020.

- [13] Y. Ding, J. Feng, Y. Chong, S. Pan, and X. Sun, “Adaptive sampling toward a dynamic graph convolutional network for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., 2021.

- [14] Q. Liu, L. Xiao, J. Yang, and Z. Wei, “Cnn-enhanced graph convolutional network with pixel-and superpixel-level feature fusion for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., vol. 59, no. 10, pp. 8657–8671, 2020.

- [15] S. Wan, S. Pan, P. Zhong, X. Chang, J. Yang, and C. Gong, “Dual interactive graph convolutional networks for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., vol. 60, pp. 1–14, 2021.

- [16] H. Zhang, J. Zou, and L. Zhang, “EMS-GCN: An end-to-end mixhop superpixel-based graph convolutional network for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., vol. 60, pp. 1–16, 2022.

- [17] Y. Ding, Y. Guo, Y. Chong, S. Pan, and J. Feng, “Global consistent graph convolutional network for hyperspectral image classification,” IEEE Trans. Instrum. Meas., vol. 70, pp. 1–16, 2021.

- [18] Y. Yang, X. Tang, X. Zhang, J. Ma, F. Liu, X. Jia, and L. Jiao, “Semi-supervised multiscale dynamic graph convolution network for hyperspectral image classification,” IEEE Trans. Neural Netw. Learn. Syst., pp. 1–15, 2022.

- [19] Z. Gong, L. Tong, J. Zhou, B. Qian, L. Duan, and C. Xiao, “Superpixel spectral-spatial feature fusion graph convolution network for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., vol. 60, pp. 1–16, 2022.

- [20] X. Wang, R. Girshick, A. Gupta, and K. He, “Non-local neural networks,” in CVPR, 2018.

- [21] Y. Xu, L. Zhang, B. Du, and F. Zhang, “Spectral-spatial unified networks for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., vol. 56, no. 10, pp. 5893–5909, Oct 2018.

- [22] D. Wang, B. Du, L. Zhang, and Y. Xu, “Adaptive spectral-spatial multiscale contextual feature extraction for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., vol. 59, no. 3, pp. 2461–2477, 2021.

- [23] Y. Chen, Z. Lin, X. Zhao, G. Wang, and Y. Gu, “Deep learning-based classification of hyperspectral data,” IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens., vol. 7, no. 6, pp. 2094–2107, June 2014.

- [24] T. Li, J. Zhang, and Y. Zhang, “Classification of hyperspectral image based on deep belief networks,” in ICIP, 2014, pp. 5132–5136.

- [25] P. Zhou, J. Han, G. Cheng, and B. Zhang, “Learning compact and discriminative stacked autoencoder for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., vol. 57, no. 7, pp. 4823–4833, 2019.

- [26] Y. Xu, B. Du, F. Zhang, and L. Zhang, “Hyperspectral image classification via a random patches network,” ISPRS-J. Photogramm. Remote Sens., vol. 142, pp. 344–357, 2018.

- [27] H. Sun, X. Zheng, X. Lu, and S. Wu, “Spectral-spatial attention network for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., vol. 58, no. 5, pp. 3232–3245, 2020.

- [28] Q. Shi, M. Liu, S. Li, X. Liu, F. Wang, and L. Zhang, “A deeply supervised attention metric-based network and an open aerial image dataset for remote sensing change detection,” IEEE Trans. Geosci. Remote Sens., vol. 60, pp. 1–16, 2022.

- [29] L. Mou and X. X. Zhu, “Learning to pay attention on spectral domain: A spectral attention module-based convolutional network for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., vol. 58, no. 1, pp. 110–122, 2020.

- [30] Q. Shi, X. Tang, T. Yang, R. Liu, and L. Zhang, “Hyperspectral image denoising using a 3-d attention denoising network,” IEEE Trans. Geosci. Remote Sens., vol. 59, no. 12, pp. 10 348–10 363, 2021.

- [31] R. Hang, Z. Li, Q. Liu, P. Ghamisi, and S. S. Bhattacharyya, “Hyperspectral image classification with attention-aided cnns,” IEEE Trans. Geosci. Remote Sens., pp. 1–13, 2020.

- [32] X. Tang, F. Meng, X. Zhang, Y. Cheung, J. Ma, F. Liu, and L. Jiao, “Hyperspectral image classification based on 3-d octave convolution with spatial-spectral attention network,” IEEE Trans. Geosci. Remote Sens., pp. 1–18, 2020.

- [33] D. He, Q. Shi, X. Liu, Y. Zhong, and L. Zhang, “Generating 2m fine-scale urban tree cover product over 34 metropolises in china based on deep context-aware sub-pixel mapping network,” Int. J. Appl. Earth Observ. Geoinform., vol. 106, p. 102667, 2022.

- [34] M. Zhu, L. Jiao, F. Liu, S. Yang, and J. Wang, “Residual spectral-spatial attention network for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., pp. 1–14, 2020.

- [35] H. Lee and H. Kwon, “Going deeper with contextual cnn for hyperspectral image classification,” IEEE Trans. Image Process., vol. 26, no. 10, pp. 4843–4855, Oct 2017.

- [36] M. Zhang, W. Li, and Q. Du, “Diverse region-based cnn for hyperspectral image classification,” IEEE Trans. Image Process., vol. 27, no. 6, pp. 2623–2634, 2018.

- [37] G. Cheng, Z. Li, J. Han, X. Yao, and L. Guo, “Exploring hierarchical convolutional features for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., vol. 56, no. 11, pp. 6712–6722, 2018.

- [38] Y. Xu, B. Du, and L. Zhang, “Beyond the patchwise classification: Spectral-spatial fully convolutional networks for hyperpsectral image classificaiton,” IEEE Trans. Big Data., pp. 1–1, 2019.

- [39] Z. Zheng, Y. Zhong, A. Ma, and L. Zhang, “FPGA: Fast patch-free global learning framework for fully end-to-end hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., vol. 58, no. 8, pp. 5612–5626, 2020.

- [40] D. Wang, B. Du, and L. Zhang, “Fully contextual network for hyperspectral scene parsing,” IEEE Trans. Geosci. Remote Sens., vol. 60, pp. 1–16, 2022.

- [41] M. Zhang, W. Li, Q. Du, L. Gao, and B. Zhang, “Feature extraction for classification of hyperspectral and lidar data using patch-to-patch cnn,” IEEE Trans. Cybern., vol. 50, no. 1, pp. 100–111, 2020.

- [42] J. Long, E. Shelhamer, and T. Darrell, “Fully convolutional networks for semantic segmentation,” in CVPR, 2015, pp. 3431–3440.

- [43] O. Ronneberger, P. Fischer, and T. Brox, “U-net: Convolutional networks for biomedical image segmentation,” in MICCAI, 2015, pp. 234–241.

- [44] G. Lin, A. Milan, C. Shen, and I. Reid, “RefineNet: Multi-path refinement networks for high-resolution semantic segmentation,” in CVPR, 2017, pp. 5168–5177.

- [45] C. Peng, X. Zhang, G. Yu, G. Luo, and J. Sun, “Large kernel matters – improve semantic segmentation by global convolutional network,” in CVPR, 2017.

- [46] C. Yu, J. Wang, C. Peng, C. Gao, G. Yu, and N. Sang, “BiSeNet: Bilateral segmentation network for real-time semantic segmentation,” in ECCV, 2018, pp. 334–349.

- [47] H. Zhang, K. Dana, J. Shi, Z. Zhang, X. Wang, A. Tyagi, and A. Agrawal, “Context encoding for semantic segmentation,” in CVPR, 2018, pp. 7151–7160.

- [48] L.-C. Chen, G. Papandreou, F. Schroff, and H. Adam, “Rethinking atrous convolution for semantic image segmentation,” arXiv preprint arXiv:1706.05587, 2017.

- [49] H. Zhao, J. Shi, X. Qi, X. Wang, and J. Jia, “Pyramid scene parsing network,” in CVPR, 2017, pp. 6230–6239.

- [50] J. Fu, J. Liu, H. Tian, Y. Li, Y. Bao, Z. Fang, and H. Lu, “Dual attention network for scene segmentation,” in CVPR, 2019, pp. 3141–3149.

- [51] Y. Yuan, L. Huang, J. Guo, C. Zhang, X. Chen, and J. Wang, “OCNet: Object context network for scene parsing,” arXiv preprint arXiv:1809.00916, 2018.

- [52] Z. Huang, X. Wang, L. Huang, C. Huang, Y. Wei, and W. Liu, “CCNet: Criss-cross attention for semantic segmentation,” in ICCV, 2019, pp. 603–612.

- [53] F. Zhang, Y. Chen, Z. Li, Z. Hong, J. Liu, F. Ma, J. Han, and E. Ding, “ACFNet: Attentional class feature network for semantic segmentation,” in ICCV, 2019, pp. 6797–6806.

- [54] Y. Yuan, X. Chen, and J. Wang, “Object-contextual representations for semantic segmentation,” in ECCV, 2020, pp. 173–190.

- [55] X. Li, Z. Zhong, J. Wu, Y. Yang, Z. Lin, and H. Liu, “Expectation-maximization attention networks for semantic segmentation,” in ICCV, 2019, pp. 9166–9175.

- [56] Y. Chen, M. Rohrbach, Z. Yan, Y. Shuicheng, J. Feng, and Y. Kalantidis, “Graph-based global reasoning networks,” in CVPR, 2019, pp. 433–442.

- [57] Y. Shen, S. Zhu, C. Chen, Q. Du, L. Xiao, J. Chen, and D. Pan, “Efficient deep learning of nonlocal features for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., pp. 1–15, 2020.

- [58] D. Wang, B. Du, and L. Zhang, “Fully contextual network for hyperspectral scene parsing,” IEEE Trans. Geosci. Remote Sens., vol. 60, pp. 1–16, 2022.

- [59] X. He, Y. Chen, and P. Ghamisi, “Dual graph convolutional network for hyperspectral image classification with limited training samples,” IEEE Trans. Geosci. Remote Sens., vol. 60, pp. 1–18, 2021.

- [60] B. Xi, J. Li, Y. Li, R. Song, Y. Xiao, Q. Du, and J. Chanussot, “Semisupervised cross-scale graph prototypical network for hyperspectral image classification,” IEEE Trans. Neural Netw. Learn. Syst., 2022.

- [61] J. Chen, L. Jiao, X. Liu, L. Li, F. Liu, and S. Yang, “Automatic graph learning convolutional networks for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., 2021.

- [62] D. Hong, L. Gao, J. Yao, B. Zhang, A. Plaza, and J. Chanussot, “Graph convolutional networks for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., pp. 1–13, 2020.

- [63] X. Zhang, S. Chen, P. Zhu, X. Tang, J. Feng, and L. Jiao, “Spatial pooling graph convolutional network for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., 2022.

- [64] J. Bai, B. Ding, Z. Xiao, L. Jiao, H. Chen, and A. C. Regan, “Hyperspectral image classification based on deep attention graph convolutional network,” IEEE Trans. Geosci. Remote Sens., vol. 60, pp. 1–16, 2021.

- [65] Y. Ding, Y. Chong, S. Pan, Y. Wang, and C. Nie, “Spatial-spectral unified adaptive probability graph convolutional networks for hyperspectral image classification,” IEEE Trans. Neural Netw. Learn. Syst., 2021.

- [66] R. Achanta, A. Shaji, K. Smith, A. Lucchi, P. Fua, and S. Süsstrunk, “Slic superpixels compared to state-of-the-art superpixel methods,” IEEE Trans. Pattern Anal.Mach. Intell., vol. 34, no. 11, pp. 2274–2282, 2012.

- [67] V. Jampani, D. Sun, M.-Y. Liu, M.-H. Yang, and J. Kautz, “Superpixel sampling networks,” in ECCV, 2018.

- [68] L. van der Maaten and G. Hinton, “Visualizing data using t-sne,” J. Mach Learn. Res., vol. 9, no. 86, pp. 2579–2605, 2008.