Spatiotemporal implicit neural representation for unsupervised dynamic MRI reconstruction

Abstract

Supervised Deep-Learning (DL)-based reconstruction algorithms have shown state-of-the-art results for highly-undersampled dynamic Magnetic Resonance Imaging (MRI) reconstruction. However, the requirement of excessive high-quality ground-truth data hinders their applications due to the generalization problem. Recently, Implicit Neural Representation (INR) has appeared as a powerful DL-based tool for solving the inverse problem by characterizing the attributes of a signal as a continuous function of corresponding coordinates in an unsupervised manner. In this work, we proposed an INR-based method to improve dynamic MRI reconstruction from highly undersampled k-space data, which only takes spatiotemporal coordinates as inputs. Specifically, the proposed INR represents the dynamic MRI images as an implicit function and encodes them into neural networks. The weights of the network are learned from sparsely-acquired (k, t)-space data itself only, without external training datasets or prior images. Benefiting from the strong implicit continuity regularization of INR together with explicit regularization for low-rankness and sparsity, our proposed method outperforms the compared scan-specific methods at various acceleration factors. E.g., experiments on retrospective cardiac cine datasets show an improvement of 5.5 7.1 dB in PSNR for extremely high accelerations (up to 41.6 ). The high-quality and inner continuity of the images provided by INR has great potential to further improve the spatiotemporal resolution of dynamic MRI, without the need of any training data.

keywords:

Dynamic MR imaging, Implicit Neural Representation, Unsupervised learning1 Introduction

Dynamic Magnetic Resonance Imaging (MRI) is one of the most popular MRI technologies, which can preserve not only excellent tissue contrast but also dynamic temporal changes of tissue. Dynamic MRI requires rapid data collection for the study of moving organs with severe physiological motion, such as the heart [1] and abdomen [2]. Dynamic Contrast-Enhanced (DCE) MRI has also made tremendous contributions to the study of microvascular structure and function of in vivo organs [3].

However, the limitations of MRI hardware on gradient encoding performance and long acquisition time slow down our pace for higher spatiotemporal resolutions in dynamic MRI[4]. Spatial and temporal resolution are always inversely related. High spatial resolution images can only be acquired with low temporal resolution and vice versa. Thus, a trade-off has to be made between spatial and temporal resolution in practical dynamic MRI. This conflict can be potentially resolved by developing advanced MRI reconstruction methods from highly-undersampled k-space data, including the traditional Compressed-Sensing (CS)-based methods and the Deep-Learning (DL)-based methods.

CS methods exploit spatial and temporal correlations of dynamic MRI by using irregular k-space undersampling patterns to create incoherent artifacts in a suitable transform domain where the medical images are compressible, such as in the k-t domain [5], temporal-gradient domain (temporal total variation regularizer) [6, 7] and many others. Image reconstruction is performed by exploiting the sparsity in the solution, subject to data consistency constraints. The further development of sparsity extended to the usage of low-rank prior: the Low-rank and Sparsity (LS) strategy enforced a both sparse and low-rank output solution [8, 9], and the Low-rank plus Sparsity (L+S) strategy decomposed the solution images into a low-rank and a sparsity component for background and the dynamic foreground, respectively [10]. Recently, the subspace-modeling strategy enforced a combination of a temporal sparsity constraint and a low-rank spatial subspace constraint to improve DCE-MRI reconstruction [11, 12].

Recent advances in DL techniques have shown potential for further accelerating dynamic MRI data acquisition. By adopting the supervised-learning strategy with large quantities of undersampled and fully-sampled image pairs, DL-based methods showed superior performance compared to CS-based methods [13]. DL-based methods applied in dynamic MRI reconstruction can be separated into two categories, i.e., end-to-end and unrolled methods. The end-to-end methods [14, 15] enable the networks to directly learn the mapping from undersampled images with artifacts to fully sampled high-quality images. In contrast, the unrolled strategy is inspired by unrolling the iterative optimization process of CS, using networks to learn the auxiliary parameters or regularizers [16, 17, 18, 19] during the iterations. Especially, L+S-Net [19] combined the L+S strategy of CS-based methods with the unrolled DL methods, demonstrating the availability of low-rank and sparsity in DL methods. However, the excessive demand for high-quality ground-truth labels in supervised learning hinders its applications in practice due to the generalization issue [13]. For example, the performance of the trained networks would degrade when the data is acquired with different scan parameters or pathological conditions. While in the case of DCE MRI, the ground-truth data are not available [20]. Alternatively, the unsupervised-learning strategy was introduced to the DL-based dynamic MRI reconstruction without involving external data in the training process. For example, Ke et al. [21] used a time-interleaved acquisition scheme, where the fully-sampled images were generated by merging adjacent frames. However, a large dataset is still needed for training the neural net. Yoo et al. [22] and Ahmed et al. [23] both adopted the Deep Image Prior (DIP) approach [24], which leveraged the tendency of untrained Convolutional Neural Networks (CNN) to generate natural-structured images as an implicit regularizer and then optimized the CNN parameters for scan-specific reconstruction. However, DIP-based methods suffer from a heavy computational burden and are still limited for application [22].

Implicit Neural Representation (INR) is a new way which parameterizes signals by a multi-layer perceptron (MLP) [25]. Unlike traditional explicit representation that uses discrete elements such as pixels (2D images) or voxels (3D volumes), INR represents the desired object itself as a continuous representation function of the spatial coordinates. In other words, the values at any spatial location of the object can be retrieved by querying the trained MLP with the corresponding coordinate. It provides a general solution for various applications of object reconstruction. With the application of MLP and proper encoding function mapping the input coordinates to a high-dimensional space [26], INR has achieved superior performance in multiple computer vision tasks [27, 26, 28]. Previous research also showed the INR’s capability to solve the inverse problem in medical imaging fields, e.g., CT image reconstruction [29, 30, 31] and undersampled MRI [32] in an unsupervised manner. For example, implicit Neural Representation learning with Prior embedding (NeRP) [32] was proposed to perform the static MRI reconstruction from the sparsely-sampled k-space data. However, NeRP requires a fully-sampled prior image with the same modality for the reconstruction of longitudinal MRI images of follow-up scans. Additionally, the INR for object reconstruction usually takes hours or even days to converge on one single data. Recently, parametric encoding functions with extra learnable parameters [33, 28, 34, 35] were proposed to significantly shorten the convergence time. For example, the hash encoding [28] function has shown promising results for accelerating the computational processes of INR in seconds for many graphics applications.

In this paper, we aim to present a new unsupervised method for highly accelerated dynamic MRI reconstruction. Inspired by the insight of INR, the proposed method treated the dynamic MR image sequence as a continuous function mapping the spatiotemporal coordinates to the corresponding image intensities. The function was parameterized by a hash encoding function and an MLP and served as an implicit continuity regularizer for dynamic MRI reconstruction. The MLP weights were directly learned from the imaging-model-based (k, t)-space data consistency loss combined with the explicit regularizers, without training databases or any ground-truth data. When inferring, the reconstructed images can simply be querying the optimized network with the same or denser spatiotemporal coordinates, which would allow for sampling and interpolating the dynamic MRI at an arbitrary frame rate. Experiments on retrospective cardiac cine data and prospective untriggered DCE liver MRI data showed that the proposed method outperformed the compared scan-specific methods. Our results showed an improvement of 5.5 dB 7.1 dB in PSNR at an extremely high acceleration factor (41.6-fold). A temporal super-resolution test (4) was conducted without retraining the network to demonstrate the strong continuity of the optimized representation function as an implicit regularizer for dynamic MRI reconstruction. The main contributions of this study are as follows:

-

1.

INR is first introduced to dynamic MRI reconstruction as an implicit continuity regularizer, achieving an improvement of 5.5 dB 7.1 dB in PSNR at an extremely high acceleration rate (41.6 ) compared to other scan-specific methods.

-

2.

The INR-based method is an unsupervised-learning strategy, meaning that it does not require external datasets or prior images for training. Thus, the proposed method generalizes on the data acquired with different scan parameters and imaging areas.

-

3.

The proposed method achieved a reasonable 4 temporal super-resolution for dynamic MRI reconstruction without network retraining, suggesting its strong implicit continuity to achieve higher temporal resolutions.

2 Method

2.1 Dynamic MRI with regularizers

In dynamic MRI, the relationship between measured (k, t)-space data and the reconstructed image matrix can be expressed by a linear model. Given the discretized image matrix and the measured (k, t)-space data of the th coil (), where is the image size, denotes the total temporal frames of the image, () is the number of acquired readout lines for each frame and is the total number of coil channels. The relationship between and can be formulated as:

| (1) |

Here, denotes the Fourier operator with the undersampling mask, which simulates the undersampled acquisition process of dynamic MRI, and is a diagonal matrix representing the th coil sensitivity map.

Reconstructing image from the undersampled (k, t)-space data is actually solving an ill-posed inverse problem, and the optimization process is formulated as:

| (2) |

where is the prior regularizer, helping target reach optimal results at ill-posed conditions.

It has been shown that using sparsity and low-rank regularizers as prior knowledge in CS-based [8, 9, 10] and DL-based methods [19] is able to reach state-of-the-art results for dynamic MRI reconstruction. An example can be formulated as:

| (3) |

where is the temporal TV operator as the sparsity regularizer. is the nuclear norm (sum of singular values) of image matrix , representing the low-rank regularizer. and are the sparsity and low-rank regularization hyperparameters, respectively. Previous works [8] have proved that the target in Eq. 3 can be optimized iteratively for a good dynamic MRI performance without Ground Truth (GT).

2.2 INR in dynamic MRI

Inspired by INR, the internal continuity of the image can be a powerful regularizer for solving the ill-posed inverse problem of dynamic MRI reconstruction from sparsely-acquired (k, t)-space data. The INR-based method can be implemented by applying a learnable continuous mapping function between spatiotemporal coordinates and desired image intensities to be reconstructed. We introduce be the continuous function parameterized by learnable parameters , mapping the spatiotemporal coordinates into corresponding image intensities, where represent the 2D spatial coordinates and represents the temporal coordinate . Thus, the image is rewritten to by feeding all the spatiotemporal coordinates of the dynamic images into and the Casorati matrix is:

| (4) |

2.3 Continuous mapping function with MLP and hash encoding

In INR, the continuous representation function is based on MLP. A better high-frequency fitting performance can be achieved by mapping the input coordinates to a higher dimensional space using an encoding function before passing them to MLP [26, 36]:

| (6) |

In this work, we adopted hash encoding [37] as the coordinate encoding function , which enables the use of smaller MLPs and significantly a faster convergence time. Specifically, hash encoding uses a total of independent hash grids with the size of as learnable feature storages. These hash grids represent a set of resolutions in the form of a geometric series, i.e., , where and b are the first term and the ratio of the geometric series, respectively. Trilinear interpolation is applied in each queried hash grid entry to keep continuity. Each hash grid outputs an -dim feature vector and then these interpolated feature vectors are concatenated as the final encoded input vector. As pointed out by Müller et al. [37], the five hyperparameters mentioned above can be tuned to fit large quantities of tasks better: and decide how the resolution among different hash grids increases, and , , are important tuners for the tradeoff between performance, memory and quality.

2.4 Loss functions

Eq. 5 is rewritten to the form of the following loss functions for the implementation with gradient-descent-based algorithms:

| (7) |

Here , and stand for data consistency (DC) loss in (k, t)-space, temporal TV loss and low-rank loss, corresponding to the three terms of the optimization objective in Eq. 5, respectively.

Considering that the magnitudes of the k-space low-frequency elements are several orders greater than those of the high-frequency elements, a relative L2 loss [38, 28] is used as the DC loss. Compared with normal L2 loss, the relative L2 loss is normalized by the square of predicted output, helping balance the gradients across k-space for better high-frequency performance. Let be one element of the multi-coil predicted k-space data and is the corresponding element of the multi-coil acquired k-space data , then DC loss is written as:

| (8) |

The parameter with a value of is added to the denominator to prevent the zero-division problem.

Therefore, the parameters of hash grids and MLP are optimized to minimize the total loss:

| (9) |

2.5 Implementation details

We used a tiny MLP containing 5 hidden layers and each hidden layer consisted of 64 neurons followed by a ReLU activation function. The MLP output 2 channels, representing the real and imaginary components of the complex-valued MRI images. No activation function was adopted for the last layer.

During the optimization process, all the spatiotemporal coordinates were gathered in one batch and the batch size was set to 1. All the coordinates were isotropically normalized to for fast convergence. The number of optimization epochs was set to 500. The Adam optimizer [39] was used with a constant learning rate of 0.001, , , and .

Once the optimization process was done, the continuous function was considered a good representation of the underlying image sequences. Then the same coordinate batch or a denser coordinate batch can be fed into the INR network to output the image sequences.

The whole pipeline is illustrated in Fig.1, and was conducted on a system equipped with an Intel i7-9700 processor, 64G RAM, and an NVIDIA RTX 2080Ti 11G GPU. The networks were implemented with PyTorch 1.11.0 and tiny-cuda-nn 111https://github.com/nvlabs/tiny-cuda-nn. The non-cartesian Fourier undersampling operation was implemented with the Non-Uniform Fast Fourier Transform (NUFFT) and was deployed with torchkbnufft 1.3.0 [40] for fast calculation and gradient backpropagation on GPU.

3 Experiments and results

3.1 Setup

3.1.1 Datasets

The proposed method was tested on a simulated retrospective cardiac cine dataset and a perspective untriggered DCE liver dataset to prove its effectiveness and generalization.

(1) Retrospective cardiac cine dataset:

The fully sampled cardiac cine data from the OCMR dataset [41] were acquired from healthy volunteers on a 1.5T scanner (MAGNETOM Avanto, Siemens Healthineers, Erlangen, Germany) using a bSSFP sequence with the following parameters: FOV = mm2, imaging matrix = , slice thickness = 8 mm, TR/TE = 2.79 ms/1.33 ms, number of frame = 18. The data acquisition was collected with prospective ECG-gating and breath-holding. The number of receiver coils is 18. A simulation undersampling pattern of 2D golden-angle radial acquisition scheme is adopted, where the readout lines are repetitively through the center of k-space and rotated with a step of 111.25°. The simulation process includes cropping original data to in the image domain and then converting to the frequency domain by multi-coil NUFFT with golden-angle trajectories of Fibonacci numbers [42]. The coil sensitivity maps were calculated by the ESPIRiT algorithm [43].

(2) Untriggered DCE liver dataset:

The DCE liver data were acquired continuously with the golden-angle acquisition scheme. The 3D stack-of-stars Fast Low Angle SHot (FLASH) sequence was acquired on a breath-holding healthy volunteer using a 3T Siemens MAGNETOM Verio scanner with the following parameters: FOV = mm2, TR/TE = 3.83 ms/1.71 ms, imaging matrix = , slice thickness = 3 mm, total spoke number of each slice = 600. A total of 12 receiver coils were used during the scan. The data including coil sensitivity maps were from Feng et al. [7]’s demo and details about intravenous contrast enhancement can be found in the paper. Each 34 acquired spokes were grouped to reconstruct one frame, which corresponds to an Acceleration Factor (AF) and 17 frames in total.

3.1.2 Performance evaluation

In this work, we chose NUFFT, L+S [10] and GRASP [7] as the baselines for comparison. NUFFT gives the results obtained by directly zero-filling the frequency domain. L+S and GRASP two of the CS-based reconstruction methods which use a similar optimization pipeline as Eq. 2. The difference between them is that GRASP adopted a temporal TV regularizer, while L+S decomposed the solution images into a background component with low-rank regularizer and a dynamic foreground with temporal TV regularizer. We did not compare the proposed INR-based method to the supervised DL methods for dynamic MRI reconstruction since the datasets used in this work are insufficient for supervised network training. In addition, the ground truth is not available for the untriggered DCE liver dataset, which also limits the training process of previous supervised methods.

We tested the performance of the proposed method with 21, 13, 8 and 5 spokes per frame (AF ) on the cardiac cine dataset, and with 34 spokes per frame (AF ) on the DCE liver dataset. For a fair comparison, the hyperparameters of all the methods are tuned to get the best performance and fit the GPU storage in different datasets and AFs, respectively.

Quantitative visual comparison and quantitative comparison were used for evaluation. For the cardiac cine dataset, quantitative metrics including peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM) were calculated frame-by-frame as follows:

| (10) |

| (11) |

where and represent ground truth and reconstructed image, respectively, and are the mean intensity of and , and are the variance of and , is the covariance of and , the constant and were set to and . and were both normalized to according to the image sequence maximum and minimum.

The k-space data were calculated from the reconstructed complex-valued MR images with the 2D Fast Fourier Transform. For quantitative comparison, the normalized root mean square error (NRMSE) against GT k-space data was calculated coil-by-coil:

| (12) |

where and represents the predicted and acquired k-space data, respectively.

For the DCE liver dataset, only visual comparison and temporal ROI intensity assessment were conducted due to the lack of the GT image. The ROIs of the aorta (AO) and portal vein (PV) were manually drawn for signal intensity-time curves. For temporal fidelity comparison, NUFFT was used as the reference since no temporal regularization was involved in the reconstructed images. Although contaminated by the streaking artifacts, the average signal intensity from NUFFT results across large ROI was still able to preserve contrast evolution for fidelity analysis.

3.2 Reconstruction performance of the proposed method

3.2.1 Cardiac cine dataset

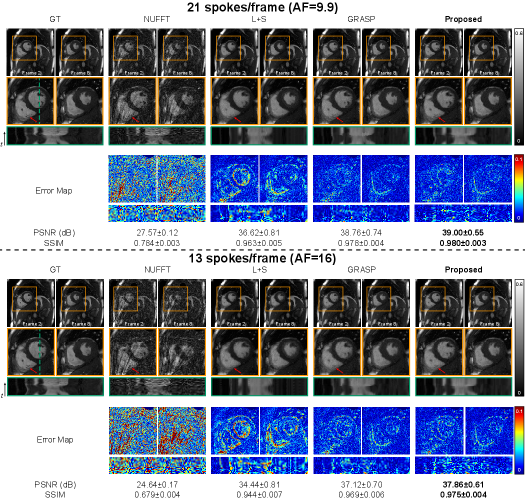

Fig.2 compares the reconstruction performance of different methods on the cardiac cine dataset with 21 and 13 spokes per frame (AF=9.9, 16, respectively). Visually, the images reconstructed by the proposed method appear to have better anatomical details and provide a more accurate temporal fidelity than the baselines at both acceleration conditions of 21 and 13 spokes. NUFFT, L+S and GRASP all suffer from artifacts and noises in the cardiac chamber area. While the reconstructed images by the proposed method show highly similar anatomical details as the ground truth, as pointed out by red arrows. In the y-t view, the L+S results are over smooth and the GRASP results suffer from noticeable streaking artifacts along the temporal axis. The proposed method provides the highest temporal fidelity of the dynamic images between frames. The error map between the reconstruction and the ground truth further supports our observation. It is noted that the reconstructed errors observed on the error maps at the edge of the cardiac ventricles were potentially blurred by cardiac motion. The proposed INR-based method shows the smallest error at the edge of the ventricles, and is consistent with the observation from the y-t view. Quantitatively, the proposed method achieves the best performance with a PSNR of 39.00±0.55 dB (21 spokes) / 37.86±0.61 dB (13 spokes) and an SSIM of 0.980±0.003 (21 spokes) / 0.975±0.004 (13 spokes) than the compared methods.

We further tested the ability of the proposed method for dynamic MRI reconstruction at extremely high acceleration rates (8 and 5 per frame, AF=26 and 41.6, respectively), as shown in Fig.3. The proposed method exhibits comparable performance between AF=26 and 41.6, with the PSNR/SSIM of 36.88 ± 0.63 dB/0.968 ± 0.005 (8 spokes) and 35.41 ± 0.56 dB/0.957 ± 0.006 (5 spokes). The proposed method has the best image quality with minimal noise and artifacts. Contrarily, L+S and GRASP suffer from temporal smoothness and noticeable streaking artifacts with increased acceleration rates. From the y-t view, the dynamic information on the reconstructed images is well captured by the proposed method, even with 5 spokes per frame. Additionally, the proposed INR-based method results in a higher PSNR than GRASP ( 5.5 dB) and L+S ( 7.1 dB), respectively.

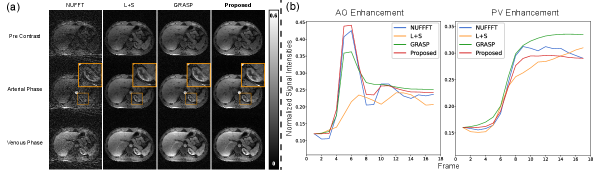

3.2.2 DCE liver dataset

For DCE liver dataset with 34 spokes per frame (AF=11.3), the visual comparisons at different temporal phases are demonstrated in Fig. 4(a). As can be seen from the zoomed-in images, the anatomical details of the kidney can be well visible on the reconstructed images by the proposed method. Severe streaking and noise can be observed on the reconstructed images by NUFFT, L+S, and GRASP. While the proposed method provides high-quality images with less noise than other methods. The signal intensity-time curves in Fig. 4(b) suggest that the proposed method yields the best temporal fidelity, which is consistent with the results of NUFFT in AO and PV. For example, the intensity fluctuation of the AO curve between Frame 5 and Frame 11 can be well captured by the proposed INR-based method.

3.3 Results of the temporal super-resolution

To demonstrate the internal continuity of the optimized representation of the dynamic MRI, we use a denser coordinate along the temporal axis as input to conduct upsampling on the reconstructed dynamic MR image sequence, named temporal super-resolution. The pipeline is shown in Fig.5(a). The GT frames with temporal linear interpolation between Frame 10 and 11 are used as the reference for comparison, as shown in Fig.5(b). Qualitatively, there is no significant structural difference between the super-resolution images and the interpolated images, indicating the strong implicit continuity representation of the optimized INR function.

4 Discussion

In this study, we proposed a novel unsupervised INR-based deep learning method for highly accelerated dynamic MRI reconstruction, which modeled the dynamic MR image sequence as a continuous mapping function. We validated the proposed method on retrospective cardiac cine data and perspective DCE liver data with various acceleration rates. The results showed the effectiveness and generalization of the proposed method on artifact suppression and motion fidelity preservation, especially at extremely high accelerations of 26-fold or 41.6-fold. The proposed method outperforms the compared CS-based methods such as L+S and GRASP. The results indicated that the proposed reconstruction method holds promise for high temporal resolution 2D MRI acquisitions.

The superiority of the proposed method over the baseline methods is believed from the implicit regularization from the internal continuity of INR, which is validated by the results of the temporal super-resolution , as shown in Fig.5. In addition, the super-resolution performance allows us to further speed up the data acquisition along the temporal axis for dynamic MRI. Unlike the existing super-resolution methods, the INR-based method does not require extra modeling or training, but simply gives the denser coordinates, which reduces the computational burden and the reconstruction time usage during deployment.

The proposed method has a few limitations. First, the low-rank regularization adopted in this work is the nuclear norm and is optimized with gradient-descent-based algorithms. However, as discussed by Lingala et al. [8]’s work, naive nuclear norm minimization may not be stable for fast convergence. In future works, INR combined with different low-rank regularization substitutes and optimization methods will be explored. Second, the temporal super-resolution test indicates a comparable 4 times upsampling result with INR, but the smoothness witnessed at the edge of heart chambers demonstrated its limitation for higher or even arbitrary super-resolution results. Third, although the reconstruction time is faster than the other unsupervised methods, it is still challenging for real-time imaging.

5 Conclusion

In this work, we proposed an INR-based unsupervised deep learning method for highly accelerated dynamic MRI reconstruction. The proposed method learns an implicit continuous representation function to represent the desired spatiotemporal image sequence, mapping spatiotemporal coordinates to the corresponding image intensities. The proposed method is training database-free and does not require prior information for the reconstruction. Several tests on retrospective cardiac and perspective DCE liver data proved that the proposed method could robustly produce a high-quality dynamic MR image sequence even at an extremely high acceleration rate . Additionally, benefiting from the internal continuity of the optimized INR network, the proposed method demonstrates an impressive performance of temporal super-resolution to upsample the desired dynamic images at higher temporal rates than the physical acquisitions. We thus believe that the INR-based method has the potential to further accelerate dynamic MRI acquisition in the future.

References

- Marcu et al. [2006] C. B. Marcu, A. M. Beek, A. C. van Rossum, Clinical applications of cardiovascular magnetic resonance imaging, CMAJ 175 (2006) 911–917.

- Low [2007] R. N. Low, Abdominal mri advances in the detection of liver tumours and characterisation, The Lancet Oncology 8 (2007) 525–535.

- Cuenod and Balvay [2013] C. Cuenod, D. Balvay, Perfusion and vascular permeability: Basic concepts and measurement in dce-ct and dce-mri, Diagnostic and Interventional Imaging 94 (2013) 1187–1204.

- Wright and McDougall [2009] S. M. Wright, M. P. McDougall, Single echo acquisition mri using rf encoding, NMR in Biomedicine 22 (2009) 982–993.

- Jung et al. [2009] H. Jung, K. Sung, K. S. Nayak, E. Y. Kim, J. C. Ye, k-t focuss: A general compressed sensing framework for high resolution dynamic mri, Magnetic Resonance in Medicine 61 (2009) 103–116.

- Feng et al. [2013] L. Feng, M. B. Srichai, R. P. Lim, A. Harrison, W. King, G. Adluru, E. V. R. Dibella, D. K. Sodickson, R. Otazo, D. Kim, Highly accelerated real-time cardiac cine mri using k–t sparse-sense, Magnetic Resonance in Medicine 70 (2013) 64–74.

- Feng et al. [2014] L. Feng, R. Grimm, K. T. Block, H. Chandarana, S. Kim, J. Xu, L. Axel, D. K. Sodickson, R. Otazo, Golden-angle radial sparse parallel mri: Combination of compressed sensing, parallel imaging, and golden-angle radial sampling for fast and flexible dynamic volumetric mri, Magnetic Resonance in Medicine 72 (2014) 707–717.

- Lingala et al. [2011] S. G. Lingala, Y. Hu, E. DiBella, M. Jacob, Accelerated dynamic mri exploiting sparsity and low-rank structure: k-t slr, IEEE Transactions on Medical Imaging 30 (2011) 1042–1054.

- Zhao et al. [2012] B. Zhao, J. P. Haldar, A. G. Christodoulou, Z.-P. Liang, Image reconstruction from highly undersampled (k, t)-space data with joint partial separability and sparsity constraints, IEEE Transactions on Medical Imaging 31 (2012) 1809–1820.

- Otazo et al. [2015] R. Otazo, E. Candès, D. K. Sodickson, Low-rank plus sparse matrix decomposition for accelerated dynamic mri with separation of background and dynamic components, Magnetic Resonance in Medicine 73 (2015) 1125–1136.

- Feng et al. [2020] L. Feng, Q. Wen, C. Huang, A. Tong, F. Liu, H. Chandarana, Grasp-pro: improving grasp dce-mri through self-calibrating subspace-modeling and contrast phase automation, Magnetic Resonance in Medicine 83 (2020) 94–108.

- Feng [2022] L. Feng, 4d golden-angle radial mri at subsecond temporal resolution, NMR in Biomedicine (2022) e4844.

- Bustin et al. [2020] A. Bustin, N. Fuin, R. M. Botnar, C. Prieto, From compressed-sensing to artificial intelligence-based cardiac mri reconstruction, Frontiers in cardiovascular medicine 7 (2020) 17.

- Wang et al. [2016] S. Wang, Z. Su, L. Ying, X. Peng, S. Zhu, F. Liang, D. Feng, D. Liang, Accelerating magnetic resonance imaging via deep learning, in: IEEE 13th International Symposium on Biomedical Imaging (ISBI), 2016, pp. 514–517.

- Han et al. [2018] Y. Han, J. Yoo, H. H. Kim, H. J. Shin, K. Sung, J. C. Ye, Deep learning with domain adaptation for accelerated projection-reconstruction mr, Magnetic Resonance in Medicine 80 (2018) 1189–1205.

- Schlemper et al. [2017] J. Schlemper, J. Caballero, J. V. Hajnal, A. Price, D. Rueckert, A deep cascade of convolutional neural networks for mr image reconstruction, in: International Conference on Information Processing in Medical Imaging, 2017, pp. 647–658.

- Qin et al. [2019] C. Qin, J. Schlemper, J. Caballero, A. N. Price, J. V. Hajnal, D. Rueckert, Convolutional recurrent neural networks for dynamic mr image reconstruction, IEEE Transactions on Medical Imaging 38 (2019) 280–290.

- Sandino et al. [2021] C. M. Sandino, P. Lai, S. S. Vasanawala, J. Y. Cheng, Accelerating cardiac cine mri using a deep learning-based espirit reconstruction, Magnetic Resonance in Medicine 85 (2021) 152–167.

- Huang et al. [2021a] W. Huang, Z. Ke, Z.-X. Cui, J. Cheng, Z. Qiu, S. Jia, L. Ying, Y. Zhu, D. Liang, Deep low-rank plus sparse network for dynamic mr imaging, Medical Image Analysis 73 (2021a) 102190.

- Huang et al. [2021b] Z. Huang, J. Bae, P. M. Johnson, T. Sood, L. Heacock, J. Fogarty, L. Moy, S. G. Kim, F. Knoll, A simulation pipeline to generate realistic breast images for learning dce-mri reconstruction, in: Machine Learning for Medical Image Reconstruction, 2021b, pp. 45–53.

- Ke et al. [2020] Z. Ke, J. Cheng, L. Ying, H. Zheng, Y. Zhu, D. Liang, An unsupervised deep learning method for multi-coil cine mri, Physics in Medicine & Biology 65 (2020) 235041.

- Yoo et al. [2021] J. Yoo, K. H. Jin, H. Gupta, J. Yerly, M. Stuber, M. Unser, Time-dependent deep image prior for dynamic mri, IEEE Transactions on Medical Imaging 40 (2021) 3337–3348.

- Ahmed et al. [2022] A. H. Ahmed, Q. Zou, P. Nagpal, M. Jacob, Dynamic imaging using deep bi-linear unsupervised representation (deblur), IEEE Transactions on Medical Imaging 41 (2022) 2693–2703.

- Ulyanov et al. [2018] D. Ulyanov, A. Vedaldi, V. Lempitsky, Deep image prior, in: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2018, pp. 9446–9454.

- Sitzmann et al. [2020] V. Sitzmann, J. N. P. Martel, A. W. Bergman, D. B. Lindell, G. Wetzstein, Implicit neural representations with periodic activation functions, in: Proceedings of the 34th International Conference on Neural Information Processing Systems (NIPS), 2020, pp. 7462–7473.

- Mildenhall et al. [2020] B. Mildenhall, P. P. Srinivasan, M. Tancik, J. T. Barron, R. Ramamoorthi, R. Ng, Nerf: Representing scenes as neural radiance fields for view synthesis, in: European Conference on Computer Vision (ECCV), 2020, pp. 405–421.

- Park et al. [2019] J. J. Park, P. Florence, J. Straub, R. Newcombe, S. Lovegrove, Deepsdf: Learning continuous signed distance functions for shape representation, in: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 165–174.

- Müller et al. [2021] T. Müller, F. Rousselle, J. Novák, A. Keller, Real-time neural radiance caching for path tracing, arXiv preprint arXiv:2106.12372 (2021).

- Zang et al. [2021] G. Zang, R. Idoughi, R. Li, P. Wonka, W. Heidrich, Intratomo: self-supervised learning-based tomography via sinogram synthesis and prediction, in: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2021, pp. 1960–1970.

- Reed et al. [2021] A. W. Reed, H. Kim, R. Anirudh, K. A. Mohan, K. Champley, J. Kang, S. Jayasuriya, Dynamic ct reconstruction from limited views with implicit neural representations and parametric motion fields, in: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2021, pp. 2258–2268.

- Sun et al. [2021] Y. Sun, J. Liu, M. Xie, B. Wohlberg, U. S. Kamilov, Coil: Coordinate-based internal learning for tomographic imaging, IEEE Transactions on Computational Imaging 7 (2021) 1400–1412.

- Shen et al. [2022] L. Shen, J. Pauly, L. Xing, Nerp: Implicit neural representation learning with prior embedding for sparsely sampled image reconstruction, IEEE Transactions on Neural Networks and Learning Systems (2022) 1–13.

- Liu et al. [2020] L. Liu, J. Gu, K. Z. Lin, T.-S. Chua, C. Theobalt, Neural sparse voxel fields, in: Proceedings of the 34th International Conference on Neural Information Processing Systems (NIPS), NIPS’20, 2020, pp. 15651–15663.

- Sun et al. [2022] C. Sun, M. Sun, H. Chen, Direct voxel grid optimization: Super-fast convergence for radiance fields reconstruction, in: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 5459–5469.

- Sara Fridovich-Keil and Alex Yu et al. [2022] Sara Fridovich-Keil and Alex Yu, M. Tancik, Q. Chen, B. Recht, A. Kanazawa, Plenoxels: Radiance fields without neural networks, in: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 5491–5500.

- Tancik et al. [2020] M. Tancik, P. P. Srinivasan, B. Mildenhall, S. Fridovich-Keil, N. Raghavan, U. Singhal, R. Ramamoorthi, J. T. Barron, R. Ng, Fourier features let networks learn high frequency functions in low dimensional domains, in: Proceedings of the 34th International Conference on Neural Information Processing Systems (NIPS), 2020, pp. 7537–7547.

- Müller et al. [2022] T. Müller, A. Evans, C. Schied, A. Keller, Instant neural graphics primitives with a multiresolution hash encoding, ACM Transactions on Graphics 41 (2022) 102:1–102:15.

- Lehtinen et al. [2018] J. Lehtinen, J. Munkberg, J. Hasselgren, S. Laine, T. Karras, M. Aittala, T. Aila, Noise2noise: Learning image restoration without clean data, in: International Conference on Machine Learning (ICML), volume 80, 2018, pp. 2965–2974.

- Kingma and Ba [2014] D. P. Kingma, J. Ba, Adam: A method for stochastic optimization, arXiv preprint arXiv:1412.6980 (2014).

- Muckley et al. [2020] M. J. Muckley, R. Stern, T. Murrell, F. Knoll, TorchKbNufft: A high-level, hardware-agnostic non-uniform fast Fourier transform, in: ISMRM Workshop on Data Sampling & Image Reconstruction, 2020.

- Chen et al. [2020] C. Chen, Y. Liu, P. Schniter, M. Tong, K. Zareba, O. Simonetti, L. Potter, R. Ahmad, Ocmr (v1. 0)–open-access multi-coil k-space dataset for cardiovascular magnetic resonance imaging, arXiv preprint arXiv:2008.03410 (2020).

- Chandarana et al. [2013] H. Chandarana, L. Feng, T. K. Block, A. B. Rosenkrantz, R. P. Lim, J. S. Babb, D. K. Sodickson, R. Otazo, Free-breathing contrast-enhanced multiphase mri of the liver using a combination of compressed sensing, parallel imaging, and golden-angle radial sampling, Investigative radiology 48 (2013) 10–16.

- Uecker et al. [2014] M. Uecker, P. Lai, M. J. Murphy, P. Virtue, M. Elad, J. M. Pauly, S. S. Vasanawala, M. Lustig, Espirit—an eigenvalue approach to autocalibrating parallel mri: Where sense meets grappa, Magnetic Resonance in Medicine 71 (2014) 990–1001.