Spatio-temporal Neural Structural Causal Models for Bike Flow Prediction

Abstract

Bike flow prediction is a fundamental issue in managing bike-sharing systems, a representative form of public transportation. Recent methods overemphasize the spatio-temporal correlations in the data while ignoring the effects of contextual conditions on the transportation system and the time-varying causality between regions. In addition, because observations are incomplete, random contextual conditions induce spurious correlations between the data and the features, which makes model predictions ineffective in special scenarios. To overcome this issue, we propose a Spatio-temporal Neural Structural Causal Model (STNSCM) from the perspective of causality. First, we build a causal graph to describe traffic prediction and analyze the causal relationships among the input data, contextual conditions, spatio-temporal states, and prediction results. Second, we apply the frontdoor criterion to eliminate confounding biases in the feature extraction process. Finally, we propose a counterfactual representation reasoning module that extrapolates the spatio-temporal state under the factual scenario to future counterfactual scenarios to improve prediction performance. Experiments on real-world datasets demonstrate the superior performance of our model, especially its resistance to fluctuations caused by the external environment. The source code and data will be released.

Introduction

Bike-sharing systems have been widely deployed in urban public transportation in recent years because of their convenience and environmental friendliness. For bike-sharing, a representative Intelligent Transportation System (ITS) application, one of the key concerns is allocating bikes effectively to enhance the quality of service. However, because bike usage throughout the city is frequent and random, stations often become imbalanced over time: the system always has some congested and hungry stations, leaving a significant number of customers unsatisfied. It is therefore necessary to accurately predict the bike flow in each commuting area. Based on the predicted bike flow, bikes can be repositioned in advance to prevent excessive demand at stations, which is an urgent need for bike-sharing system operators.

Bike usage patterns and spatio-temporal dependencies between regions are affected by external conditions (e.g., time factors and weather information). As shown in Fig. 1(b), time factors and weather may greatly restrict bike usage in each region, and weekdays and weekends may induce completely different spatio-temporal dependencies. Traditional traffic flow prediction models based on multi-source data usually integrate time and weather into the model simply through a fully connected layer (Li et al. 2019; Liang et al. 2021; Sun et al. 2020). However, more input information does not necessarily improve the prediction ability of the model; instead, it can introduce many confounding factors and lead the model to extract spurious correlations from observations (Liu et al. 2021), degrading model performance.

We build a causal graph to describe bike flow prediction and analyze the problem from a causal perspective. We formulate the causal relationships among the bike flow, the contextual condition, the spatio-temporal states, and the predicted target. As shown in Fig. 1(a), directed edges denote causal relationships between nodes. The spatio-temporal state represents the intrinsic trend of bike demand under the current contextual conditions. The contextual condition, as a common cause, affects both the bike flow and the spatio-temporal state. Because bike-sharing systems are susceptible to external contextual conditions, bike flow prediction must take these conditions into account. However, owing to the limitations of the datasets, most data are collected under normal conditions, so a neural network learns only the general spatio-temporal patterns of normal conditions and struggles to capture the spatio-temporal state under special conditions. Once a special contextual condition occurs during testing, the predictive performance of the model drops significantly. As shown in Fig. 1(e), on a day with a strong snowstorm, the flow is generally low and the morning and evening rush hours are barely visible; this special environment is unseen in the training set. Existing methods overestimate the flow on this day because they learn an averaged spatio-temporal pattern that cannot cope with fluctuations caused by the external environment. These methods emphasize the average performance over the entire dataset while ignoring the prediction performance in specific scenarios. However, bike flow predictions in specific scenarios are often more helpful for managers who need to formulate emergency measures in advance.
Therefore, it is necessary to eliminate the influence of latent contextual conditions on feature extraction through causal intervention, so that the extracted spatio-temporal state is less biased and effectively captures the spatio-temporal patterns of the data itself.

In addition, recent works overemphasize the spatio-temporal correlations of bike flow. These graph-based spatio-temporal prediction models generally leverage GCNs to model spatial correlations and GRUs (Li et al. 2018; Liu et al. 2020; Bai et al. 2020; Ye et al. 2021; Li et al. 2021) or CNNs (Wu et al. 2019; Guo et al. 2021a; Fang et al. 2021; Han et al. 2021) to model temporal correlations separately. Although these methods achieve satisfactory results, their ability to model complex, nonlinear, dynamic spatio-temporal causality remains limited. As shown in Fig. 1(b), bike usage patterns in region 1 are similar to those in regions 2 and 3 during the morning rush hour but closer to those in region 0 during the evening rush hour. Bike flow data thus exhibit strong dynamic dependencies in the spatial and temporal dimensions and complex nonlinear causality across them. In most of these models, however, the adjacency matrices are fixed and generated heuristically from spatial distance (Liu et al. 2020) or time-series similarity (Chai, Wang, and Yang 2018), so they cannot capture time-varying spatio-temporal causality.

To address the aforementioned challenges, we propose a Spatio-Temporal Neural Structural Causal Model (STNSCM), whose causal graphs are shown in Fig. 1(c) and (d). Its core idea is to build on structural causal model theory to remove confounders from the feature extraction process and to follow the counterfactual reasoning framework to predict future bike flow. First, we apply the frontdoor criterion based on causal intervention, cutting off the link from the contextual condition to the input bike flow, which gives each contextual condition a fair opportunity to be incorporated into the spatio-temporal state. Second, we view future scenarios in a "what if" way: if the current environment changes, how will the future state change, and thus how will the future flow change? The key to answering this counterfactual question is making full use of future external conditions. The main contributions of this work are as follows:

- We provide a novel causality-based interpretation of bike flow prediction and apply the frontdoor criterion based on causal intervention to remove confounding biases in the feature extraction process. To the best of our knowledge, our work is the first to successfully apply the structural causal model to traffic prediction problems.
- We propose a counterfactual representation reasoning module that extrapolates the spatio-temporal state under the factual scenario to the future counterfactual scenario, which enhances the features' understanding of future states and thereby improves prediction performance.
- Extensive experiments on two real-world bike-sharing datasets show that STNSCM outperforms state-of-the-art methods for region-level bike flow prediction on nearly all metrics.

Related Work

Spatio-temporal Traffic Data Prediction

Traffic prediction is a fundamental problem in Intelligent Transportation Systems, including the more recent bike-sharing systems, and has attracted significant attention. Since real-world traffic networks are non-Euclidean, most methods build GCN models to study spatio-temporal data prediction. Some works use GCNs with predefined graphs to model spatial correlations. To enrich spatial information, (Liu et al. 2020; Fang et al. 2021; Chai, Wang, and Yang 2018) establish multiple static topologies to capture complex patterns. DCRNN (Li et al. 2018) replaces the linear transformations in GRU with diffusion graph convolution, which boosts the spatio-temporal representation ability of GRU. HGCN (Guo et al. 2021a) constructs interactions between the micro and macro layers of GCN, integrating features of road segments and regions at different scales.

Recently, some researchers have paid attention to the strong dynamic correlations of traffic data in the spatial and temporal dimensions, so modeling dynamic, nonlinear spatio-temporal correlations has become crucial for accurate traffic prediction. GWNet (Wu et al. 2019) and AGCRN (Bai et al. 2020) randomly initialize node embedding vectors and then learn an adaptive matrix through gradient descent. Once training finishes, however, the adaptive matrix is fixed, so it cannot effectively extract dynamics from real data. ASTGNN (Guo et al. 2021b) and GMAN (Zheng et al. 2020) dynamically extract node correlations through spatial attention. However, because they rely on predefined graphs, spatial attention can only change the weights of the predefined graphs rather than their structure. CCRNN (Ye et al. 2021) proposes a coupled graph convolution with self-learned adjacency matrices that vary from layer to layer, but the graph structure changes across convolutional layers rather than across time steps. DGCRN (Li et al. 2021) handles dynamic relations by learning an adjacency matrix at each recurrent step, but it depends heavily on a node embedding layer initialized with random parameters. DMSTGCN (Han et al. 2021) learns time-specific spatial dependencies of road segments to construct dynamic graphs, but ignores fluctuations caused by the external environment. More generally, these methods do not take external conditions into account when generating dynamic graphs, which greatly limits the benefit of the dynamic graphs.
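To make the limitation of adaptive matrices concrete, the self-adaptive adjacency of GWNet can be written as a row-wise $\mathrm{SoftMax}(\mathrm{ReLU}(E_1 E_2^\top))$, where $E_1$ and $E_2$ are learnable node embeddings. The pure-Python sketch below uses random stand-in embeddings (not trained values) to show why such a graph is static after training: no traffic observation enters the computation.

```python
import math
import random


def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def row_softmax(m):
    """Softmax over each row, shifted by the row maximum for numerical stability."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def adaptive_adjacency(e1, e2):
    """GWNet-style self-adaptive adjacency: row-wise SoftMax(ReLU(E1 @ E2^T)).

    Only the node embeddings appear here, so once e1 and e2 are trained the
    graph is identical for every time step and every input sample.
    """
    e2_t = [list(col) for col in zip(*e2)]
    scores = matmul(e1, e2_t)
    return row_softmax([[max(0.0, v) for v in row] for row in scores])


rng = random.Random(0)
num_nodes, emb_dim = 4, 3
e1 = [[rng.gauss(0.0, 1.0) for _ in range(emb_dim)] for _ in range(num_nodes)]  # stand-in embeddings
e2 = [[rng.gauss(0.0, 1.0) for _ in range(emb_dim)] for _ in range(num_nodes)]
adj = adaptive_adjacency(e1, e2)
for row in adj:
    print([round(v, 3) for v in row])
```

By contrast, a dynamic graph recomputes its edges from the current input features at each step.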

Causal learning

The purpose of causal learning is to empower models with the ability to pursue causal effects (Zhang et al. 2020). Recent works combine SCMs (Pearl 2009; Schölkopf 2022) with deep learning models, an approach called causal learning. Causal learning is widely used for causal representation learning. CausalVAE (Yang et al. 2021) proposes a model with a causal layer that transforms exogenous factors into causal endogenous ones corresponding to causally related concepts in the data. Shen et al. (2020) use an SCM as the prior of a bidirectional generative model that can generate data from any desired interventional distribution of the latent factors. (Zhang et al. 2020; Lin et al. 2022; Liu et al. 2022; Yue et al. 2020) use the backdoor criterion to eliminate contextual confounding biases. Unlike these works, in traffic prediction the input data and the extracted features do not satisfy the backdoor criterion, because the confounding contextual conditions cannot be fully enumerated. As shown in Fig. 1(a), we therefore apply the frontdoor formula and use neural networks to model its sub-terms; hence we call our model a neural structural causal model.

Methodology

The overall framework of STNSCM is shown in Fig.2.

Structural Causal Model

We formulate the causal relationships among the bike flow, the contextual condition, the spatial neighborhood features, the temporal dynamic features, the spatio-temporal states, and the predicted target with a structural causal model (SCM). As shown in Fig. 1(a), directed edges denote causal relationships between nodes. We assume that spatial neighborhood features and temporal dynamic features can be decoupled from the spatio-temporal data and then integrated to form spatio-temporal states, which describe the dynamic spatio-temporal patterns in the data.

Causal Intervention via Frontdoor Criterion

The contextual condition acts as a common cause of the input bike flow and the spatio-temporal state. Because the dataset is limited, this confounding may push the spatio-temporal state toward the general state and ignore the specific environment, biasing the spatio-temporal state. However, contextual conditions have infinitely many possible values and cannot be completely covered, so we cannot apply the backdoor adjustment along the backdoor path through the contextual condition.

Fortunately, we can apply the frontdoor criterion along the path from the input data through the spatio-temporal features to the spatio-temporal state, cutting off the link from the contextual condition to the input data, which gives each contextual condition a fair opportunity to be incorporated into the spatio-temporal state. Formally, we have:

(1)

In Eq. 1, the prior distribution of the input data is fitted by an Input Gate; the process of extracting spatio-temporal features from the data and noise is modeled by a Dynamic Causality Generator, which embeds spatio-temporal causality into a dynamic causal graph; and the generation of time-varying spatio-temporal states from these features, which describe the spatio-temporal patterns inherent in the data, is modeled by a Spatio-temporal Evolutionary Graph Convolution.
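The frontdoor adjustment that Eq. 1 builds on can be checked numerically. In its textbook form (Pearl 2009), with treatment $X$, mediator $F$, and outcome $S$, it reads $P(S \mid do(X=x)) = \sum_f P(f \mid x) \sum_{x'} P(S \mid f, x') P(x')$. The sketch below is a hypothetical toy SCM with binary variables: $C$ stands in for the unobserved contextual condition, $X$ for the input, $F$ for the extracted features, and $S$ for the spatio-temporal state. All probabilities and names are made up for illustration; STNSCM models the corresponding terms with neural networks rather than probability tables.

```python
from itertools import product

# Hypothetical toy SCM with binary variables (numbers chosen only for illustration):
#   C -> X and C -> S : C plays the unobserved contextual condition (confounder)
#   X -> F -> S       : F plays the mediating spatio-temporal features
P_C = {0: 0.7, 1: 0.3}                         # P(C=c)
P_X1_GIVEN_C = {0: 0.2, 1: 0.8}                # P(X=1 | C=c)
P_F1_GIVEN_X = {0: 0.1, 1: 0.9}                # P(F=1 | X=x)
P_S1_GIVEN_FC = {(0, 0): 0.2, (0, 1): 0.6, (1, 0): 0.5, (1, 1): 0.9}  # P(S=1 | F=f, C=c)


def bern(p1, value):
    """Probability of a binary value under a Bernoulli(p1) distribution."""
    return p1 if value == 1 else 1.0 - p1


# Observational joint P(X, F, S): C is summed out because it is never observed.
joint = {}
for c, x, f, s in product((0, 1), repeat=4):
    p = (P_C[c] * bern(P_X1_GIVEN_C[c], x)
         * bern(P_F1_GIVEN_X[x], f) * bern(P_S1_GIVEN_FC[(f, c)], s))
    joint[(x, f, s)] = joint.get((x, f, s), 0.0) + p


def prob(**fixed):
    """Observational probability that the named variables (x, f, s) take the given values."""
    names = ("x", "f", "s")
    return sum(p for key, p in joint.items()
               if all(key[names.index(name)] == value for name, value in fixed.items()))


def naive(x):
    """P(S=1 | X=x): contaminated by the backdoor path X <- C -> S."""
    return prob(x=x, s=1) / prob(x=x)


def frontdoor(x):
    """P(S=1 | do(X=x)) = sum_f P(f | x) * sum_x' P(S=1 | f, x') * P(x'), using observational data only."""
    total = 0.0
    for f in (0, 1):
        inner = sum(prob(x=xp, f=f, s=1) / prob(x=xp, f=f) * prob(x=xp) for xp in (0, 1))
        total += prob(x=x, f=f) / prob(x=x) * inner
    return total


def ground_truth(x):
    """Interventional probability computed directly from the SCM, which requires knowing C."""
    return sum(P_C[c] * bern(P_F1_GIVEN_X[x], f) * P_S1_GIVEN_FC[(f, c)]
               for c in (0, 1) for f in (0, 1))


for x in (0, 1):
    print(f"x={x}: naive={naive(x):.4f} frontdoor={frontdoor(x):.4f} truth={ground_truth(x):.4f}")
```

The frontdoor estimate matches the interventional ground truth even though $C$ is never used, while the naive conditional probability is biased by the confounder.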

Input Gate

The periodic flow data consist of slices of the bike flow tensor from the previous week, the previous day, and the previous few time steps, each containing a fixed number of flow features. For example, assuming that the time step is one hour and that we use the previous four hours to predict the bike flow of the next two hours (18:00-20:00 on Monday), the historical periodic input includes 16:00-20:00 of the previous week, 16:00-20:00 of the previous day, and 14:00-18:00 of the current day (the previous four hours). Analogously, we collect the external conditions (e.g., time and weather information) at the same time steps for the week, day, and hour slices.
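The window arithmetic in this example can be sketched as follows. The helper name `periodic_windows` and the calendar date are hypothetical; we assume, as the example suggests, that the week and day windows have the same length as the recent window and end where the prediction horizon ends, one week and one day earlier respectively.

```python
from datetime import datetime, timedelta


def periodic_windows(target_start, history, horizon, step=timedelta(hours=1)):
    """Return half-open [start, end) windows for the week, day, and recent-hour slices.

    history: number of input steps per slice; horizon: number of steps to predict.
    """
    target_end = target_start + horizon * step
    periodic_start = target_end - history * step  # `history` steps ending at the target's clock-time end
    return {
        "week": (periodic_start - timedelta(days=7), target_end - timedelta(days=7)),
        "day": (periodic_start - timedelta(days=1), target_end - timedelta(days=1)),
        "hour": (target_start - history * step, target_start),
    }


# Predict 18:00-20:00 on a Monday from four hourly steps (2024-01-08 is a Monday).
windows = periodic_windows(datetime(2024, 1, 8, 18, 0), history=4, horizon=2)
for name, (start, end) in windows.items():
    print(name, start.strftime("%a %H:%M"), "->", end.strftime("%a %H:%M"))
```

The week and day windows overlap the clock time of the prediction target, which is what lets the periodic slices carry the daily and weekly patterns of the hours being predicted.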

As shown in Fig. 2, the input gate takes the concatenation of the periodic flow data and the external conditions within the same time step (e.g., the previous-week flow slice concatenated with the previous-week external conditions). Fully connected layers process the week, day, and hour input features separately, and their outputs are concatenated to obtain $\mathbf{Z}$. Finally, a gated linear unit outputs the context-conditioned features $\mathbf{U}$. Given the concatenated input features $\mathbf{Z}$, the gated linear unit is defined as follows:

$$\mathbf{U} = \tanh(\mathbf{Z}\mathbf{W}_1 + \mathbf{b}_1) \odot \sigma(\mathbf{Z}\mathbf{W}_2 + \mathbf{b}_2) \tag{2}$$

where $\mathbf{W}_1$, $\mathbf{W}_2$, $\mathbf{b}_1$, and $\mathbf{b}_2$ are model parameters, $\odot$ is the element-wise product, $\tanh$ is the tanh function, and $\sigma$ is the sigmoid function.
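A minimal pure-Python sketch of the input gate follows, assuming the gated linear unit has the common form $\tanh(\mathbf{Z}\mathbf{W}_1 + \mathbf{b}_1) \odot \sigma(\mathbf{Z}\mathbf{W}_2 + \mathbf{b}_2)$, consistent with the tanh, sigmoid, and element-wise product listed for Eq. 2. The slice values, the two external-condition features, the hidden size, and the random initialization are all hypothetical, and each slice is reduced to a single time step for brevity.

```python
import math
import random


def linear(x, weight, bias):
    """Compute x @ W + b for a vector x and a weight matrix stored as rows (in_dim x out_dim)."""
    return [sum(xi * w for xi, w in zip(x, col)) + b for col, b in zip(zip(*weight), bias)]


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def gated_linear_unit(z, w1, b1, w2, b2):
    """tanh(z W1 + b1) * sigmoid(z W2 + b2), element-wise."""
    content = [math.tanh(v) for v in linear(z, w1, b1)]
    gate = [sigmoid(v) for v in linear(z, w2, b2)]
    return [c * g for c, g in zip(content, gate)]


def init(in_dim, out_dim, rng):
    """Hypothetical uniform initialization of a weight matrix and a zero bias."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(out_dim)] for _ in range(in_dim)], [0.0] * out_dim


rng = random.Random(42)
# Each slice: normalized [inflow, outflow] concatenated with [weekday flag, rain level] (made-up values).
slices = {
    "week": [0.6, 0.45, 1.0, 0.2],
    "day": [0.5, 0.4, 0.0, 0.0],
    "hour": [0.75, 0.55, 1.0, 0.8],
}
hidden = 3
fc = {name: init(len(v), hidden, rng) for name, v in slices.items()}  # one FC layer per slice
z = [h for name, v in slices.items() for h in linear(v, *fc[name])]    # concatenate the FC outputs
w1, b1 = init(len(z), hidden, rng)
w2, b2 = init(len(z), hidden, rng)
features = gated_linear_unit(z, w1, b1, w2, b2)
print([round(v, 4) for v in features])
```

The sigmoid branch acts as a soft switch on the tanh branch, so each context-conditioned feature can be suppressed when the current flow and external conditions make it uninformative.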

Dynamic Causality Generator

The conditions of bike-sharing systems are complex, and inter-regional relationships are affected not only by spatio-temporal causality but also by diverse external conditions. However, most dynamic-graph methods focus on the spatial correlations between nodes while ignoring the influence of time-varying contextual conditions and causality. As shown in Fig. 2, we propose a Dynamic Causality Generator that tightly couples spatio-temporal states with context-conditioned features.

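At each time step, the output of the input gate $\mathbf{U}_t$ and the spatio-temporal state of the previous time step $\mathbf{S}_{t-1}$ are concatenated as the input of the dynamic causality generator:

$$\mathbf{H}_t = [\,\mathbf{U}_t \,\|\, \mathbf{S}_{t-1}\,]\,\mathbf{W} + \mathbf{b} \tag{3}$$

where $\|$ denotes concatenation, $\mathbf{H}_t \in \mathbb{R}^{N \times C}$, $\mathbf{W}$ and $\mathbf{b}$ are model parameters, $N$ is the number of regions, and $C$ is the number of feature channels. We apply the squeeze-excitation method along the node dimension of $\mathbf{H}_t$ to learn an importance-aware vectorized representation of each node. By weighting nodes according to their importance, it promotes useful features and suppresses features that contribute little to the current task, so that each node is expressed differentially, which benefits the subsequent similarity calculation. First, the global channel information is squeezed into a node descriptor $\mathbf{z}_t \in \mathbb{R}^{N}$ by global average pooling:

$$z_{t,n} = \frac{1}{C}\sum_{c=1}^{C} \mathbf{H}_t[n, c] \tag{4}$$

Second, the specificity of each node is excited by a self-gating mechanism based on node dependence:

$$\mathbf{s}_t = \sigma\big(\mathbf{W}_2\,\delta(\mathbf{W}_1 \mathbf{z}_t)\big) \tag{5}$$

where $\mathbf{W}_1$ and $\mathbf{W}_2$ are model parameters, $\sigma$ is the sigmoid function, and $\delta$ is the ReLU function. Then, $\mathbf{H}_t$ is reweighted by $\mathbf{s}_t$ to generate the dynamic latent representation of each node:

$$\tilde{\mathbf{H}}_t = \mathrm{diag}(\mathbf{s}_t)\,\mathbf{H}_t \tag{6}$$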
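The squeeze-excitation step along the node dimension can be sketched in pure Python as below. It follows the standard squeeze-and-excitation recipe (average pooling, a ReLU bottleneck, a sigmoid gate) transposed from channels to nodes; the reduction ratio, the weight values, and the toy feature matrix are hypothetical.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(m, v):
    """Matrix (list of rows) times vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def node_squeeze_excitation(h, w1, w2):
    """Reweight each node's features by a learned importance score.

    h:  N x C node features (list of N rows)
    w1: (N // r) x N bottleneck weights; w2: N x (N // r) expansion weights
    Returns (reweighted features, per-node scores).
    """
    z = [sum(row) / len(row) for row in h]              # squeeze: average over channels, one value per node
    hidden = [max(0.0, v) for v in matvec(w1, z)]        # ReLU bottleneck mixes information across nodes
    scores = [sigmoid(v) for v in matvec(w2, hidden)]    # excitation: one gate in (0, 1) per node
    return [[s * x for x in row] for s, row in zip(scores, h)], scores


# Toy example: N = 4 nodes, C = 3 channels, reduction ratio r = 2 (all values made up).
h = [[1.0, 2.0, 3.0], [0.5, 0.5, 0.5], [4.0, 0.0, 2.0], [0.0, 1.0, 0.0]]
w1 = [[0.5, -0.2, 0.1, 0.0], [0.0, 0.3, -0.4, 0.2]]
w2 = [[1.0, -1.0], [0.5, 0.5], [-0.5, 1.0], [0.2, 0.0]]
h_tilde, scores = node_squeeze_excitation(h, w1, w2)
print([round(s, 3) for s in scores])
```

Each node keeps its feature direction; only its overall magnitude is rescaled, which is what makes the nodes more distinguishable in the similarity computation that follows.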

Finally, we follow the idea of self-attention to calculate the inter-node similarity and embed the dynamic causality into the causal graph:

(7)

where $\tanh(\cdot)$ is the hyperbolic tangent activation applied to the inter-node similarity scores.
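The exact form of Eq. 7 is not spelled out in the surrounding text, so the following is only a hypothetical stand-in in the spirit of self-attention: project the reweighted node features into queries and keys, take scaled dot products, and squash them with tanh, the activation the text names. The projection matrices and inputs are made up, and the actual STNSCM formulation may normalize or sparsify the scores differently.

```python
import math


def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def dynamic_causal_graph(h, wq, wk):
    """Hypothetical Eq. 7 stand-in: A[i][j] = tanh(q_i . k_j / sqrt(d)), with Q = H Wq and K = H Wk."""
    q, k = matmul(h, wq), matmul(h, wk)
    d = len(q[0])
    return [[math.tanh(sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d)) for kj in k] for qi in q]


# Reweighted node features for N = 3 nodes with C = 2 channels (made-up values).
h = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.7]]
wq = [[1.0, 0.0], [0.0, 1.0]]
wk = [[1.0, 0.0], [0.0, 1.0]]
adj = dynamic_causal_graph(h, wq, wk)
for row in adj:
    print([round(v, 3) for v in row])
```

Because the scores are recomputed from the current node representations at every step, the resulting graph changes with the input over time; nodes 0 and 1, whose features are similar, receive a stronger edge than nodes 0 and 2.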

Spatio-temporal Evolutionary Graph Convolution

The static topological graphs of the bike-sharing system and the dynamic causal graphs reflect inter-node relationships from different perspectives. We therefore propose a Spatio-temporal Evolutionary Graph Convolution Network (STEGCN) to implement Eq. 1, as shown in Fig. 2.

For the static topological graphs, we establish the geographical distance graph and the transition probability graph based on the historical trip records of the regions. Their weighted adjacency matrices are defined as follows:

$$A^{geo}_{ij} = \begin{cases} \exp\left(-\dfrac{d_{ij}^{2}}{\sigma^{2}}\right), & d_{ij} \le \epsilon \\ 0, & \text{otherwise} \end{cases} \qquad A^{tran}_{ij} = \frac{T_{ij}}{\sum_{k} T_{ik}} \tag{8}$$

where $d_{ij}$ is the distance between region $i$ and region $j$, calculated from the latitude and longitude of the regional centers; $\epsilon$ is the distance threshold, set to 2 km according to the actual situation; $\sigma^{2}$ is the variance of the distance matrix, which controls the distribution and sparsity of the matrix; and $T_{ij}$ is the transition flow from region $i$ to region $j$.
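A pure-Python sketch of both static graphs follows, under stated assumptions: the geographical graph uses a thresholded Gaussian kernel $\exp(-d_{ij}^2/\sigma^2)$ with $\sigma^2$ taken as the variance of all entries of the distance matrix, distances come from the haversine formula on region-center coordinates, and the transition graph row-normalizes trip counts into probabilities. The coordinates and trip counts are made up.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two (latitude, longitude) points."""
    earth_radius_km = 6371.0
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def distance_graph(centers, threshold_km=2.0):
    """Thresholded Gaussian kernel over pairwise distances between region centers."""
    n = len(centers)
    d = [[haversine_km(*centers[i], *centers[j]) for j in range(n)] for i in range(n)]
    flat = [v for row in d for v in row]
    mean = sum(flat) / len(flat)
    var = sum((v - mean) ** 2 for v in flat) / len(flat)  # variance of the distance matrix
    return [[math.exp(-d[i][j] ** 2 / var) if d[i][j] <= threshold_km else 0.0
             for j in range(n)] for i in range(n)]


def transition_graph(trips):
    """Row-normalize trip counts T[i][j] (from region i to region j) into probabilities."""
    out = []
    for row in trips:
        total = sum(row)
        out.append([v / total if total else 0.0 for v in row])
    return out


# Hypothetical region centers (lat, lon) and trip counts.
centers = [(40.7500, -73.9900), (40.7580, -73.9855), (40.7300, -73.9950), (40.7800, -73.9600)]
trips = [[5, 20, 3, 2], [18, 4, 6, 2], [2, 5, 10, 3], [1, 2, 4, 8]]
geo = distance_graph(centers)
tran = transition_graph(trips)
print([[round(v, 3) for v in row] for row in geo])
print([[round(v, 3) for v in row] for row in tran])
```

The threshold keeps the geographical graph sparse (only regions within 2 km are linked), while the transition graph can connect distant regions that exchange many trips.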

The STEGCN can be defined as follows:

(9)

where the normalized adjacency matrices of the geographical distance graph, the transition probability graph, and the dynamic causal graph are used for propagation; the weight matrices are model parameters; the contribution coefficients are learnable; and the propagation depth controls how many hops are aggregated. Training these coefficients lets the model learn the contribution of each graph to bike flow prediction. Eq. 9 is the concrete implementation of Eq. 1. The second sum in Eq. 1 corresponds to the second formula in Eq. 9: spatio-temporal features are extracted from different aspects (geographic, transition, and causality) by graph convolution and combined in a weighted sum. The first sum in Eq. 1 corresponds to the last formula of Eq. 9, which combines all of the above information.
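Eq. 9 is not reproduced here, but its description (normalized adjacency matrices, a learnable contribution coefficient for the input features and for each graph, and a propagation depth) suggests a mix-hop style propagation. The sketch below is a hypothetical reading of that description, not the paper's exact formula: at each hop, $H^{(k)} = \alpha_{in} X + \sum_g \alpha_g \hat{A}_g H^{(k-1)}$, with row normalization $\hat{A} = D^{-1}A$ assumed and the final linear projection omitted. All coefficients and graphs are made up.

```python
def row_normalize(adj):
    """D^-1 A: divide each row by its sum (rows summing to zero are left as zeros)."""
    out = []
    for row in adj:
        total = sum(row)
        out.append([v / total if total else 0.0 for v in row])
    return out


def propagate(adj, h):
    """Compute adj @ h for matrices stored as lists of rows."""
    return [[sum(a * x for a, x in zip(arow, col)) for col in zip(*h)] for arow in adj]


def evolutionary_graph_conv(x, graphs, coeffs, depth):
    """Hypothetical mix-hop propagation over several graphs with learnable contributions.

    x: N x C input features; graphs: name -> normalized N x N matrix;
    coeffs: contribution coefficient for "input" and for every graph name.
    """
    h = x
    for _ in range(depth):
        nxt = [[coeffs["input"] * v for v in row] for row in x]  # keep part of the raw input at every hop
        for name, adj in graphs.items():
            msg = propagate(adj, h)
            nxt = [[a + coeffs[name] * b for a, b in zip(r1, r2)] for r1, r2 in zip(nxt, msg)]
        h = nxt
    return h


x = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
graphs = {
    "geo": row_normalize([[1, 1, 0], [1, 1, 0], [0, 0, 1]]),
    "tran": row_normalize([[0, 3, 1], [2, 0, 2], [1, 1, 0]]),
    "causal": row_normalize([[1, 0, 1], [0, 1, 1], [1, 1, 1]]),
}
coeffs = {"input": 0.1, "geo": 0.3, "tran": 0.3, "causal": 0.3}
out = evolutionary_graph_conv(x, graphs, coeffs, depth=2)
for row in out:
    print([round(v, 4) for v in row])
```

A large input coefficient keeps the output close to the raw features, while large graph coefficients emphasize aggregation over neighbors; this is the trade-off the learned coefficients visualized in Fig. 3 capture.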

Spatio-temporal Neural Structural Causal Unit

We integrate the input gate, the dynamic causality generator, and the spatio-temporal evolutionary graph convolution derived from the frontdoor criterion into the Spatio-temporal Neural Structural Causal Unit (STNSCU), which represents the complete causal intervention process. As shown in Fig. 2, STNSCU models complex nonlinear spatio-temporal causality and is defined as follows:

(11)

where the weight and bias terms are the parameters of the graph convolutions, the graph convolution operator is defined by Eq. 10, and the output is the spatio-temporal state of the STNSCU at the current time step.
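Eq. 11 is not reproduced in this text; its description (graph-convolution weights and biases, a graph convolution operator, and a spatio-temporal state per time step) matches a recurrent cell whose GRU gates are computed by graph convolution, as in DCRNN. The sketch below assumes that standard form with a one-hop convolution $\hat{A}[\,X_t \| S_{t-1}\,]W + b$; the shapes, the single adjacency matrix, and the zero initialization are hypothetical, and in STNSCM the convolution would be the multi-graph STEGCN instead.

```python
import math


def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def concat(a, b):
    """Concatenate two N-row matrices along the feature axis."""
    return [ra + rb for ra, rb in zip(a, b)]


def graph_conv(adj, x, weight, bias):
    """One-hop graph convolution: (A @ X) @ W + b."""
    return [[v + b for v, b in zip(row, bias)] for row in matmul(matmul(adj, x), weight)]


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def gc_gru_cell(adj, x_t, s_prev, p):
    """One recurrent step; p holds hypothetical weights W_r, W_u, W_c and biases b_r, b_u, b_c."""
    xs = concat(x_t, s_prev)
    r = [[sigmoid(v) for v in row] for row in graph_conv(adj, xs, p["W_r"], p["b_r"])]  # reset gate
    u = [[sigmoid(v) for v in row] for row in graph_conv(adj, xs, p["W_u"], p["b_u"])]  # update gate
    gated = [[ri * si for ri, si in zip(rr, sr)] for rr, sr in zip(r, s_prev)]
    c = [[math.tanh(v) for v in row] for row in graph_conv(adj, concat(x_t, gated), p["W_c"], p["b_c"])]
    # New state: interpolate between the previous state and the candidate state.
    return [[ui * si + (1.0 - ui) * ci for ui, si, ci in zip(ur, sr, cr)]
            for ur, sr, cr in zip(u, s_prev, c)]


def zeros(rows, cols):
    return [[0.0] * cols for _ in range(rows)]


n_in, n_state = 2, 3
params = {name: zeros(n_in + n_state, n_state) for name in ("W_r", "W_u", "W_c")}
params.update({name: [0.0] * n_state for name in ("b_r", "b_u", "b_c")})
adj = [[0.5, 0.5], [0.5, 0.5]]               # two regions, row-normalized (made up)
x_t = [[1.0, 0.0], [0.0, 1.0]]               # context-conditioned features at step t
s_prev = [[0.2, 0.4, 0.6], [1.0, 0.0, -1.0]]
s_t = gc_gru_cell(adj, x_t, s_prev, params)
print(s_t)
```

With all-zero parameters both gates equal 0.5 and the candidate is zero, so the new state is exactly half the previous one; this makes the cell easy to sanity-check before training.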

For multi-step forecasting, STNSCM adopts an encoder-decoder structure composed of STNSCUs. Historical periodic flow data and external conditions are fed into the encoder, and predictions are output by the decoder. The spatio-temporal states of the encoder are transformed by a counterfactual representation reasoning module to initialize the decoder. Since future external conditions are accessible, they are concatenated with the previous prediction as part of the decoder input.

Counterfactual Representation Reasoning

Given the contextual condition of the factual (historical) scenario, we set the contextual condition to its future value to infer the spatio-temporal state in the counterfactual scenario, and then predict the future bike flow from this counterfactual state. Formally, we have:

(12)

Eq. 12 consists of a counterfactual representation reasoning process, which infers the counterfactual spatio-temporal state, and a prediction process based on this counterfactual representation.

Our goal is to infer the spatio-temporal state under the future contextual condition by focusing on the parts of the history whose external conditions are similar to those of the future. The counterfactual representation reasoning module employs scaled dot-product attention to dynamically compute the relationship between each future and historical time step, and converts the encoded historical spatio-temporal states into future representations. As shown in Fig. 2, a fully connected layer generates future external features and historical external features, which serve as the query and key of the attention mechanism, while the historical spatio-temporal states serve as the value. The counterfactual representation reasoning module is formulated as:

(13)

where the number of feature channels sets the scaling of the dot products and the remaining weights are learnable parameters. Intuitively, the module attends more strongly to the historical spatio-temporal states whose external conditions are similar to those of the future. The resulting future representations are passed through a fully connected layer and then used to initialize the decoder.
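A minimal sketch of the attention in the counterfactual representation reasoning module follows: future external features act as queries, historical external features as keys, and historical spatio-temporal states as values. For readability the fully connected projections are replaced by the identity, and the external-condition encodings ([rain flag, weekday flag]) and state values are hypothetical.

```python
import math


def scaled_dot_product_attention(queries, keys, values):
    """softmax(Q K^T / sqrt(d)) V for matrices stored as lists of rows; also returns the weights."""
    d = len(queries[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]  # shift by the maximum for numerical stability
        total = sum(exps)
        weights = [e / total for e in exps]
        outputs.append([sum(w * v[c] for w, v in zip(weights, values)) for c in range(len(values[0]))])
        all_weights.append(weights)
    return outputs, all_weights


# Historical steps: [rain flag, weekday flag]; step 2 was rainy (made-up encodings).
hist_external = [[0.0, 1.0], [0.0, 1.0], [1.0, 1.0], [0.0, 1.0]]
hist_states = [[1.0], [1.1], [0.2], [0.9]]       # toy 1-D spatio-temporal states per step
future_external = [[1.0, 1.0], [0.0, 1.0]]       # the first future step is forecast to be rainy
future_repr, attn = scaled_dot_product_attention(future_external, hist_external, hist_states)
print([[round(w, 3) for w in row] for row in attn])
print(future_repr)
```

The rainy future step places its largest weight on the rainy historical step and therefore inherits a lower-demand representation, while the dry future step averages the history uniformly.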

Experiments

In this section, we evaluate the effectiveness of STNSCM through experiments on real-world datasets (see https://github.com/EternityZY/STNSCM).

Experimental Settings

Datasets: We collect two real-world datasets, NYC-Bike and BJ-Bike, each of which contains the corresponding weather and time information. We aggregate the data into 30-minute intervals and split each dataset chronologically, using the first 60% of the data as the training set, the next 20% as the validation set, and the last 20% as the test set.
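The preprocessing described above can be sketched as follows. Trip records are assumed to be (timestamp, region) pairs counted per 30-minute slot; the record format, the helper names, and the integer-percentage split are illustrative choices, not details given in the text.

```python
from collections import Counter
from datetime import datetime, timedelta


def slot_index(ts, origin, minutes=30):
    """Index of the fixed-length slot (default 30 minutes) that contains timestamp ts."""
    return (ts - origin) // timedelta(minutes=minutes)


def count_by_slot(records, origin, minutes=30):
    """Count (timestamp, region) records per (slot, region) pair."""
    return Counter((slot_index(ts, origin, minutes), region) for ts, region in records)


def chronological_split(num_slots, train_pct=60, val_pct=20):
    """Split slot indices in time order: first 60% train, next 20% validation, rest test."""
    n_train = num_slots * train_pct // 100
    n_val = num_slots * val_pct // 100
    return range(0, n_train), range(n_train, n_train + n_val), range(n_train + n_val, num_slots)


origin = datetime(2024, 1, 1)
records = [(datetime(2024, 1, 1, 0, 10), "A"), (datetime(2024, 1, 1, 0, 29), "A"),
           (datetime(2024, 1, 1, 0, 45), "B"), (datetime(2024, 1, 1, 1, 5), "A")]
print(count_by_slot(records, origin))
train, val, test = chronological_split(48 * 7)  # one week of 30-minute slots
print(len(train), len(val), len(test))
```

Splitting by time rather than at random keeps future slots out of the training set, which matches the forecasting setting.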

Baselines: We compare STNSCM with recent state-of-the-art baselines, which we group into five categories: deep learning methods, predefined graph methods, adaptive graph methods, attention graph methods, and dynamic graph methods. The main difference between adaptive graph and dynamic graph methods is whether changes in the graph structure depend on the input features.

Evaluation Metrics: We evaluate the performance of methods with Root Mean Square Error (RMSE), Mean Absolute Error (MAE) and Mean Absolute Percentage Error (MAPE).
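For reference, the three metrics can be computed as below. How zero-valued targets are handled in MAPE is not stated here; skipping them, as this sketch does, is a common convention but an assumption.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mape(y_true, y_pred):
    """Mean absolute percentage error in percent, skipping zero targets (assumed convention)."""
    pairs = [(t, p) for t, p in zip(y_true, y_pred) if t != 0]
    return 100.0 * sum(abs(t - p) / abs(t) for t, p in pairs) / len(pairs)


y_true = [10.0, 0.0, 4.0, 8.0]
y_pred = [12.0, 1.0, 3.0, 8.0]
print(mae(y_true, y_pred), rmse(y_true, y_pred), mape(y_true, y_pred))
```

RMSE penalizes large errors more heavily than MAE, while MAPE normalizes by the true flow, so it is sensitive to errors at low-flow regions and time steps.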

Comparison with Baselines

Table 1: Comparison with baselines on BJ-Bike and NYC-Bike (lower is better for all metrics).

| Dataset | Category | Model | 30 min MAE | 30 min RMSE | 30 min MAPE | 60 min MAE | 60 min RMSE | 60 min MAPE | Avg MAE | Avg RMSE | Avg MAPE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| BJ-Bike | Deep Learning | LSTM | 14.2031 | 28.2169 | 19.5308% | 19.8906 | 41.4004 | 26.1706% | 17.378 | 35.3137 | 22.9824% |
| | | GRU | 14.5063 | 30.5848 | 20.1777% | 20.2897 | 42.8662 | 27.1207% | 17.7293 | 37.0763 | 23.7914% |
| | Predefined Graph | STGCN | 11.5225 | 32.3062 | 15.4271% | 14.1583 | 36.1548 | 18.3616% | 13.2019 | 33.4340 | 16.9863% |
| | | STGODE | 13.0722 | 25.5082 | 17.6931% | 18.2863 | 38.2222 | 23.7129% | 15.9745 | 32.0672 | 20.8288% |
| | Adaptive Graph | GWNet | 11.3790 | 23.4735 | 15.7683% | 14.4496 | 30.3216 | 20.0026% | 13.0956 | 25.7935 | 17.9991% |
| | | HGCN | 12.3808 | 23.7278 | 16.5783% | 14.4550 | 29.5130 | 19.1782% | 13.6700 | 25.9349 | 18.0185% |
| | | CCRNN | 13.0028 | 40.7625 | 16.2663% | 15.1600 | 43.8774 | 19.0467% | 14.4291 | 40.7677 | 17.7535% |
| | | DMSTGCN | 11.3967 | 23.1091 | 15.6620% | 14.1286 | 29.5651 | 18.9005% | 12.9921 | 25.6640 | 17.3526% |
| | Attention Graph | GMAN | 14.2979 | 32.5408 | 19.4858% | 19.5415 | 43.8546 | 25.7455% | 17.3069 | 38.4888 | 22.7454% |
| | | ASTGNN | 13.0494 | 26.2419 | 17.4269% | 17.8318 | 40.4308 | 23.1511% | 15.8104 | 33.4645 | 20.4270% |
| | Dynamic Graph | DGCRN | 11.4163 | 27.7059 | 16.0872% | 14.0468 | 33.2321 | 19.2002% | 12.9966 | 29.8171 | 17.7582% |
| | | STNSCM | 11.2833 | 23.2897 | 15.2978% | 13.3415 | 28.1254 | 17.4721% | 12.5180 | 24.4383 | 16.4879% |
| NYC-Bike | Deep Learning | LSTM | 3.1259 | 6.0591 | 24.8426% | 3.8342 | 7.9119 | 30.9824% | 3.4809 | 6.9045 | 28.5997% |
| | | GRU | 3.1359 | 6.0629 | 24.8762% | 3.8442 | 7.8694 | 30.9406% | 3.4908 | 6.8827 | 28.6149% |
| | Predefined Graph | STGCN | 2.6015 | 4.9071 | 20.5065% | 2.9732 | 6.1072 | 23.3678% | 2.7879 | 5.4049 | 22.4650% |
| | | STGODE | 2.7222 | 5.2398 | 21.4485% | 3.2079 | 6.7118 | 25.4689% | 2.9649 | 5.8408 | 23.8943% |
| | Adaptive Graph | GWNet | 2.5686 | 4.8377 | 20.5743% | 2.9589 | 6.1023 | 23.7937% | 2.7644 | 5.3748 | 22.6851% |
| | | HGCN | 2.5865 | 4.9755 | 20.2884% | 2.9728 | 6.4410 | 23.4383% | 2.7803 | 5.5742 | 22.3060% |
| | | CCRNN | 2.5945 | 4.8986 | 20.4141% | 2.9670 | 6.2435 | 23.4624% | 2.7814 | 5.4886 | 22.4958% |
| | | DMSTGCN | 2.5539 | 4.7400 | 20.1860% | 2.9159 | 6.0370 | 23.2154% | 2.7395 | 5.3201 | 22.1471% |
| | Attention Graph | GMAN | 3.1153 | 6.2649 | 23.6753% | 3.1816 | 6.4593 | 24.7447% | 3.1469 | 6.1648 | 24.7131% |
| | | ASTGNN | 2.9774 | 5.6568 | 23.3848% | 3.3349 | 6.8282 | 26.2812% | 3.1576 | 6.0232 | 25.3607% |
| | Dynamic Graph | DGCRN | 2.6175 | 4.9426 | 20.5837% | 2.9653 | 6.1419 | 23.3420% | 2.7918 | 5.4210 | 22.4856% |
| | | STNSCM | 2.5289 | 4.6835 | 19.8475% | 2.8099 | 5.6129 | 22.4450% | 2.6701 | 5.0397 | 21.5300% |

For fairness, we deploy the same environment, loss function, periodic flow data, and external conditions for all models. We compare STNSCM with baselines for traffic prediction and the final average results are shown in Table 1.

We classify these methods according to whether the graph structure varies with the input features. STNSCM achieves the best results on all metrics except the 30-min RMSE on BJ-Bike, where DMSTGCN is slightly better (23.1091 vs. 23.2897). Among deep learning methods, the poor performance of LSTM and GRU indicates the limitation of ignoring spatial correlations. STGODE (Fang et al. 2021) deepens the network to extract higher-order features through a tensor-based ordinary differential equation, but, limited by the amount of data, it performs worse. Moreover, predefined-graph methods rely only on fixed graph structures and ignore dynamic characteristics.

Adaptive graphs (Wu et al. 2019; Guo et al. 2021a; Ye et al. 2021; Han et al. 2021) help short-term (30 min) prediction, but they remain static over time and fail to capture time-varying spatio-temporal dependencies, so their long-term (60 min) performance drops markedly. Attention graphs (Zheng et al. 2020; Guo et al. 2021b) can only change the weights of predefined graphs rather than their structure. DGCRN (Li et al. 2021) uses the predefined adjacency matrix to conduct message passing for dynamic node states. Because connections in the data are incomplete, the predefined graph itself may contain noise, which hinders the generation of dynamic graphs.

Moreover, these baselines have no dedicated modules for handling contextual conditions; we merely concatenate the conditions with the periodic flow data, so the external information is not fully exploited. Qualitative prediction results are shown in Fig. 1(e). The main strength of our model is its stability and resistance to random fluctuations, which are among the most important capabilities for bike flow forecasting. In particular, the bike flow distribution of the NYC-Bike test set fluctuates greatly due to external conditions, and on this dataset our method outperforms all baselines on every metric.

Component Analysis

Table 2: Ablation results on NYC-Bike (averaged over prediction horizons).

| Category | Model | MAE | RMSE | MAPE |
|---|---|---|---|---|
| Graph | w/o distance graph | 2.7101 | 5.2185 | 21.8458% |
| | w/o transition graph | 2.7124 | 5.2515 | 22.0781% |
| | w/o causal graph | 2.7411 | 5.3144 | 22.3316% |
| Dynamic Causality Generator | EGG w/o SE | 2.7074 | 5.1928 | 21.8821% |
| | EGG w/o previous state | 2.7300 | 5.3132 | 22.2931% |
| | EGG w/o IG features | 2.7105 | 5.2612 | 22.0250% |
| Input Gate | IG w/ FC | 2.7266 | 5.3050 | 22.2241% |
| | w/o IG | 2.7239 | 5.3355 | 22.3176% |
| | w/o CR | 2.7041 | 5.2094 | 21.8430% |
| | STNSCM | 2.6701 | 5.0397 | 21.5300% |

To verify the effectiveness of the key components of STNSCM, we conduct ablation experiments on the NYC-Bike dataset with the following variants:

- w/o distance graph: removes the geographical distance graph.
- w/o transition graph: removes the transition probability graph.
- w/o causal graph: removes the dynamic causal graph.
- EGG w/o SE: removes the squeeze-excitation method from the dynamic causality generator.
- EGG w/o previous state: removes the spatio-temporal state of the previous time step from the dynamic causality generator.
- EGG w/o IG features: removes the features output by the input gate from the dynamic causality generator.
- w/o CR: removes the counterfactual representation reasoning module from STNSCM; the last spatio-temporal state of the encoder is copied to initialize the decoder.
- IG w/ FC: concatenates the periodic flow data and external conditions and extracts context-conditioned features only through fully connected layers.
- w/o IG: removes the input gate; the model takes only the bike flow tensor of the previous time steps as input.

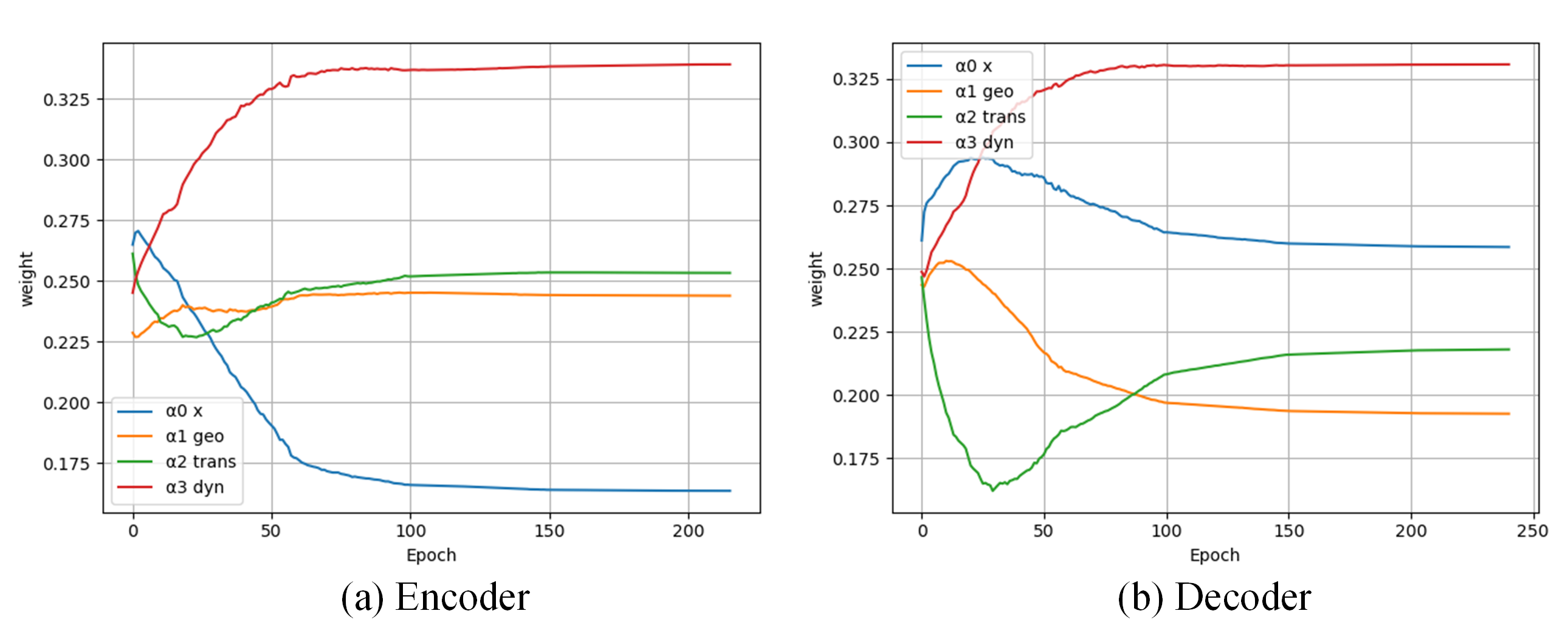

The performance of all variants is summarized in Table 2. Among the graphs, the dynamic causal graph plays the most prominent role: removing it yields the highest average MAE and MAPE of all variants (MAE 2.7411 vs. 2.6701 for the full model). The causal graph improves performance because it provides implicit causality that cannot be extracted from static topological graphs. Meanwhile, the geographical distance graph and the transition probability graph are also necessary, since removing either of them degrades performance; the dynamic causal graph collaborates with the static topological graphs to better model complex transportation systems. We further visualize how the contribution coefficients in the spatio-temporal evolutionary graph convolution change during training, as shown in Fig. 3. In the encoder, the geographical distance graph and the transition probability graph have the same importance, while the input features of the graph convolution receive a smaller coefficient. This suggests that the encoder needs to extract latent spatio-temporal dependencies in the transportation system by propagating and aggregating node features over multi-view graphs. In the decoder, by contrast, the input features of the graph convolution account for a large proportion, which suggests that the decoder needs to restore high-level spatio-temporal features to predict future flow.

For the dynamic causality generator, the spatio-temporal state of the previous time step is crucial for embedding dynamic spatio-temporal causality into the causal graph: removing it gives the worst results among the generator variants on all three metrics. As visualized in Fig. 4(c), the dynamic causal graph, which integrates periodic features and external conditions, directly models the interactive evolution across regions.

External conditions also strongly affect model performance; without an effective way to use them, the model cannot handle the fluctuations they cause. On the one hand, IG w/ FC and w/o IG perform almost the same (average MAE 2.7266 vs. 2.7239), so merely concatenating external conditions through fully connected layers brings little or no benefit, whereas the full model with the input gate clearly improves on both (2.6701). This indicates that the features extracted by our input gate are more effective. On the other hand, the model's resistance to random fluctuations is reflected in MAPE: removing the input gate increases MAPE from 21.5300% to 22.3176%. The input gate effectively fuses periodic flow data and external conditions to extract context-conditioned features, which is also the basis of STNSCM's resistance to fluctuations caused by the external environment.

The counterfactual representation reasoning module uses the attention mechanism to convert the encoded historical spatio-temporal states into future representations, which reduces information loss and improves the overall performance of the model: without it (w/o CR), the average MAE rises from 2.6701 to 2.7041.

Conclusion

In this work, we build a causal graph to describe the traffic prediction problem from a causal perspective. It shows that, owing to the disturbance of incomplete observations, spurious correlations arise in the feature extraction process, so the model performs well only in general scenarios and fails in special ones. We propose a novel spatio-temporal neural structural causal model that decomposes the frontdoor criterion into multiple sub-terms and designs dedicated modules to model them. Among these modules, the dynamic causality generator is the most important: it embeds the time-varying inter-regional causal relationships into the dynamic causal graph, which enables the model to capture dynamic patterns. In addition, we propose a counterfactual representation reasoning module that gives the spatio-temporal states of the current factual scenario the ability to represent counterfactual scenarios. Detailed experiments and analyses demonstrate the superiority of STNSCM over both classical and state-of-the-art prediction methods.

References

- Bai et al. (2020) Bai, L.; Yao, L.; Li, C.; Wang, X.; and Wang, C. 2020. Adaptive Graph Convolutional Recurrent Network for Traffic Forecasting. In 34th Conference on Neural Information Processing Systems.

- Chai, Wang, and Yang (2018) Chai, D.; Wang, L.; and Yang, Q. 2018. Bike Flow Prediction with Multi-Graph Convolutional Networks. In Proceedings of the 26th ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems, 397–400. New York, NY, USA: Association for Computing Machinery. ISBN 9781450358897.

- Fang et al. (2021) Fang, Z.; Long, Q.; Song, G.; and Xie, K. 2021. Spatial-temporal graph ODE networks for traffic flow forecasting. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, 364–373.

- Guo et al. (2021a) Guo, K.; Hu, Y.; Sun, Y.; Qian, S.; Gao, J.; and Yin, B. 2021a. Hierarchical Graph Convolution Networks for Traffic Forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 35, 151–159.

- Guo et al. (2021b) Guo, S.; Lin, Y.; Wan, H.; Li, X.; and Cong, G. 2021b. Learning dynamics and heterogeneity of spatial-temporal graph data for traffic forecasting. IEEE Transactions on Knowledge and Data Engineering.

- Han et al. (2021) Han, L.; Du, B.; Sun, L.; Fu, Y.; Lv, Y.; and Xiong, H. 2021. Dynamic and Multi-faceted Spatio-temporal Deep Learning for Traffic Speed Forecasting. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, 547–555.

- Li et al. (2021) Li, F.; Feng, J.; Yan, H.; Jin, G.; Jin, D.; and Li, Y. 2021. Dynamic Graph Convolutional Recurrent Network for Traffic Prediction: Benchmark and Solution. arXiv preprint arXiv:2104.14917.

- Li et al. (2018) Li, Y.; Yu, R.; Shahabi, C.; and Liu, Y. 2018. Diffusion Convolutional Recurrent Neural Network: Data-Driven Traffic Forecasting. In International Conference on Learning Representations (ICLR ’18).

- Li et al. (2019) Li, Y.; Zhu, Z.; Kong, D.; Xu, M.; and Zhao, Y. 2019. Learning heterogeneous spatial-temporal representation for bike-sharing demand prediction. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 33, 1004–1011.

- Liang et al. (2021) Liang, Y.; Ouyang, K.; Sun, J.; Wang, Y.; Zhang, J.; Zheng, Y.; Rosenblum, D.; and Zimmermann, R. 2021. Fine-Grained Urban Flow Prediction. In Proceedings of the Web Conference 2021, 1833–1845.

- Lin et al. (2022) Lin, X.; Chen, Y.; Li, G.; and Yu, Y. 2022. A Causal Inference Look at Unsupervised Video Anomaly Detection. Proceedings of the AAAI Conference on Artificial Intelligence, 36(2): 1620–1629.

- Liu et al. (2021) Liu, C.; Sun, X.; Wang, J.; Tang, H.; Li, T.; Qin, T.; Chen, W.; and Liu, T.-Y. 2021. Learning causal semantic representation for out-of-distribution prediction. Advances in Neural Information Processing Systems, 34.

- Liu et al. (2020) Liu, L.; Chen, J.; Wu, H.; Zhen, J.; Li, G.; and Lin, L. 2020. Physical-virtual collaboration modeling for intra- and inter-station metro ridership prediction. IEEE Transactions on Intelligent Transportation Systems.

- Liu et al. (2022) Liu, Y.; Cadei, R.; Schweizer, J.; Bahmani, S.; and Alahi, A. 2022. Towards Robust and Adaptive Motion Forecasting: A Causal Representation Perspective. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 17081–17092.

- Pearl (2009) Pearl, J. 2009. Causality. Cambridge University Press.

- Schölkopf (2022) Schölkopf, B. 2022. Causality for machine learning. In Probabilistic and Causal Inference: The Works of Judea Pearl, 765–804.

- Shen et al. (2020) Shen, X.; Liu, F.; Dong, H.; Lian, Q.; Chen, Z.; and Zhang, T. 2020. Disentangled generative causal representation learning. arXiv preprint arXiv:2010.02637.

- Sun et al. (2020) Sun, J.; Zhang, J.; Li, Q.; Yi, X.; Liang, Y.; and Zheng, Y. 2020. Predicting citywide crowd flows in irregular regions using multi-view graph convolutional networks. IEEE Transactions on Knowledge and Data Engineering.

- Wu et al. (2019) Wu, Z.; Pan, S.; Long, G.; Jiang, J.; and Zhang, C. 2019. Graph WaveNet for Deep Spatial-Temporal Graph Modeling. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, IJCAI 2019, Macao, China, August 10-16, 2019, 1907–1913.

- Yang et al. (2021) Yang, M.; Liu, F.; Chen, Z.; Shen, X.; Hao, J.; and Wang, J. 2021. CausalVAE: Disentangled representation learning via neural structural causal models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 9593–9602.

- Ye et al. (2021) Ye, J.; Sun, L.; Du, B.; Fu, Y.; and Xiong, H. 2021. Coupled Layer-wise Graph Convolution for Transportation Demand Prediction. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 35, 4617–4625.

- Yue et al. (2020) Yue, Z.; Zhang, H.; Sun, Q.; and Hua, X.-S. 2020. Interventional few-shot learning. Advances in Neural Information Processing Systems, 33: 2734–2746.

- Zhang et al. (2020) Zhang, D.; Zhang, H.; Tang, J.; Hua, X.-S.; and Sun, Q. 2020. Causal intervention for weakly-supervised semantic segmentation. Advances in Neural Information Processing Systems, 33: 655–666.

- Zheng et al. (2020) Zheng, C.; Fan, X.; Wang, C.; and Qi, J. 2020. GMAN: A graph multi-attention network for traffic prediction. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 34, 1234–1241.