Spatial-temporal traffic modeling with a fusion graph reconstructed by tensor decomposition

Abstract

Accurate spatial-temporal traffic flow forecasting is essential for helping traffic managers to take control measures and drivers to choose the optimal travel routes. Recently, graph convolutional networks (GCNs) have been widely used in traffic flow prediction owing to their powerful ability to capture spatial-temporal dependencies. The design of the spatial-temporal graph adjacency matrix is a key to the success of GCNs, and it is still an open question. This paper proposes reconstructing the binary adjacency matrix via tensor decomposition, and a traffic flow forecasting method is proposed. First, we reformulate the spatial-temporal fusion graph adjacency matrix into a three-way adjacency tensor. Then, we reconstructed the adjacency tensor via Tucker decomposition, wherein more informative and global spatial-temporal dependencies are encoded. Finally, a Spatial-temporal Synchronous Graph Convolutional module for localized spatial-temporal correlations learning and a Dilated Convolution module for global correlations learning are assembled to aggregate and learn the comprehensive spatial-temporal dependencies of the road network. Experimental results on four open-access datasets demonstrate that the proposed model outperforms state-of-the-art approaches in terms of the prediction performance and computational cost.

Index Terms:

traffic modeling, traffic flow forecasting, graph convolutional network, graph adjacency matrix, tensor decomposition.I Introduction

With the rapid development of economy and the rising number of vehicles, numerous worldwide cities are gradually plagued by traffic congestion and parking difficulties. Many countries have been striving to develop the intelligent transportation system (ITS) to increase the traffic management efficiency, enhance the travel security and improve the increasingly serious traffic conditions [1]. Traffic forecasting is regarded as an important part of the intelligent transportation system, and its researches have gained continuous attention during the past dedicates [2, 3]. Generally, traffic flow forecasting methods are roughly classified as the statistical models like the historical average (HA) [4] and the autoregressive integrated moving average (ARIMA) [5], and the machine learning based models [6]. In the past decades, owing to the increasement on the quantity of the traffic flow, its forecasting evolves into a large-scale network traffic forecasting problem which is challenging for statistical models since it is easy for them to fall into dimensionality disasters [7]. Hence, machine learning models, e.g. support vector regression (SVR) [8], gradient boosting regression tree (GBRT) [9] and artificial neural networks (ANN) [10], based traffic flow forecasting methods become the research focus.

Although the above traditional machine learning methods improved the traffic forecasting accuracy to some extent, they are still poor at capturing and analyzing the complex nonlinear correlations of the large-scale network traffic data [11]. Fortunately, deep learning methods, emerging in recent years, make up for this deficiency through an end-to-end multi-layer learning framework. Early deep learning models applied to traffic flow prediction are the recurrent neural networks (RNN) and its variants, such as long short-term memory (LSTM) [12] and gated recurrent unit (GRU) [13] networks, since they can capture and utilize the temporal correlations of traffic data fully to improve the forecasting accuracy. Subsequently, some researches tried to employ convolutional neural networks (CNN) [14] to capture spatial correlations among the traffic network to further enhance the forecasting accuracy. But CNN works in the Euclidean space, which depicts the correlations of the data through Euclidean distances. However, the structure of the real-world large-scale traffic network is topological and irregular, whose spatial correlations cannot be fully characterised by CNN.

Recently, the graph convolutional neural network (GCN) [15], which can characterize and utilize the spatial topological structure of road networks, has been widely used in traffic flow forecasting [16] and has achieved superior performances [2, 17, 18]. Existing studies show that constructing the proper adjacency graph instead of adopting sophisticated mechanisms for modeling spatial and temporal correlations is a key to enhancing the graph convolutional networks, and many research efforts have been made. Early efforts mainly concentrated on improving the given spatial adjacency matrix, e.g., [19] changed the 0-1 adjacency matrix into a distance-based weighted adjacency matrix whose entries are usually correlated to the given physical spatial distances between adjacent nodes. However, only utilizing the given spatial adjacency matrix to model the graph is not informative enough, since limited representations of the given spatial matrix fail to depict the temporal similarity between nodes to obtain the complete adjacent connections. Some works already made several attempts to improve the temporal representation of the graph. Self-adaptive matrices [20, 21] are employed to adjust the existed spatial adjacency matrix and learn the hidden spatial dependencies, but these learnable matrices are lack of correlations representation ability for complicated spatial-temporal dependencies and the acquisition for them is time consuming. Recently, Song [22] introduced a localized spatial-temporal graph construction method through connecting the nodes at different time steps into a graph and incorporating it with the given spatial graph into a fusion graph, and Li [23] improved this fusion graph model by deploying the Dynamic Time Warping algorithm to measure the similarity between time series and fusing three types of sub-graphs including the given spatial graph,the temporal graph, and the temporal connectivity graph. Both of them captured the spatial-temporal correlations directly trough a fusion graph model instead of using different types of neural network modules separately, hence made the learning model with less computational cost and more efficient. However, they still need a mask matrix learning process to update the graph adjacency matrix during prediction, which increased the model learning parameters to some extent. Besides, they only constructed the graph through a block matrix with each block representing a sub-graph and update the weights of entries in each block separately, which ignored the possible potential temporal-spatial correlations among the blocks.

This paper aims to offline reconstruct the fusion graph through a information maximization mapping method that can extract the potential temporal-spatial correlations among the blocks of the original fusion graph to provide a more informative graph and meanwhile avoid the time-consuming online learning process on the graph weights updating. Since the fusion graph is a block matrix that is usually naturally expressed as a tensor model[24].We attempt to deploy tensor decomposition, which has been proved to be capable of extracting the multi-mode spatial-temporal correlations of traffic data efficiently and widely used in traffic data modeling[25, 26], to reconstruct the graph and enhance the graph convolutional neural network based traffic data forecasting model. The main contributions of our work are as follows:

-

•

We present a novel tensor decomposition based spatial-temporal graph reconstruction method that can maximally utilize the spatial-temporal information and extract the possible potential correlations among the sub-graphs to offline reconstruct and provide a more informative fusion graph that helps to contribute to the prediction accuracy and yet the computational cost.

-

•

We assemble the spatial-temporal graph convolutional module (STTGCM) [22] and the Dilated Convolution module into a new spatial-temporal graph convolutional network model (STTGCN) to learn the localized and global spatial-temporal correlations simultaneously for traffic data forecasting.

-

•

Extensive experiments are conducted on four real-world datasets and the experimental results show that our model consistently outperforms state-of-the-art approaches.

II Related Works

II-A Graph Convolution Network

Traditional convolution has a strong ability to explore local spatial correlations, but it is mainly qualified for handling standard grid data rather than non-Euclidean data such as the rode-network traffic data. Recently, graph convolution networks, which can remedy this limitation and capture the structural characteristics of networks, have been developed rapidly. There are mainly two categories of graph convolution neural networks: Spectral GCN and Spatial GCN. Spectral GCN transforms the convolution networks from the spatial domain into the spectral domain for convenience of leveraging the spectral theory to conduct convolution operation on the graph directly. The SCNN model proposed by [27] adopted a learnable diagonal matrix to replace the convolution kernel of the spectral domain and realizes the graph convolution operation. However, it is time-consuming to calculate the eigenvalue decomposition of the Laplacian matrix and the model parameters are complex. In order to solve the above problems, ChebNet model was proposed by [28], which used Chebyshev polynomials instead of convolution kernels in the spectral domain, and saves time without decomposition. To further simplify the model, [29] proposed the first-order ChebNet model, in which each convolution kernel has only one parameter. Spatial GCN generalizes the traditional convolutional network from the Euclidean space to the vertice domain directly. The GNN model [30] uses the random walk method to transform the graph structure data, but it tried to force a graph to be a rule structure. GraphSAGE [31] sampled the neighbor vertices of each vertex in the graph, and then aggregated the information contained in the neighbor vertices according to the aggregation function. GAT [32], a powerful GCN variant, aggregated the features of neighboring vertices to the center vertex through using attention layers to adjust the importance of neighbor nodes dynamically.

II-B Spatial-temporal Traffic flow Forecasting with Deep Learning Models

Traffic flow forecasting belongs to correlated time series analysis (or multivariate time series analysis) and has been studied for decades. Owing to the strong learning and nonlinear representation abilities, deep learning based traffic flow forecasting methods have been extensively researched and gained outstanding achievements. Most previous studies of this kind [33, 34, 35] have relied on LSTM or GRU to model the temporal dynamics of the sequential traffic data. Some studies use temporal convolutional networks [36] to enable the model to process extremely long-time sequences with less time. Although employing temporal correlations of traffic data fully to improve the prediction accuracy, these models neglects the spatial correlation among the traffic series from different sources (spaces/regions/sensors) which may have a great influence on the prediction results. Then, CNN that is apt at learning the spatial correlation was introduced to capture temporal and spatial correlations simultaneously with RNN to improve the prediction accuracy[37, 38, 39, 40].

Although the hybrid model of CNN and RNN improved the prediction performances significantly, it can only deal with data in the Euclidean space, hence fail to fully represent and dig the comprehensive structural information of the real-world large-scale road network that is spatially irregular and topological. Subsequently, GCN-based models that process the road network as a graph directly, avoiding to destroy the topological structure are utilized for more practical traffic data forecasting. Some works[21, 41] deployed a spectral-domain GCN to capture the spatial correlations among traffic series and used RNN to model and aggregate the temporal correlations to improve the prediction accuracy. Many recent studies [42, 43] further use complex spatial-temporal attention mechanisms to help a spectral-domain GCN to capture dynamic spatial-temporal correlations. However, the spectral-based method, in which the graph structure is fixed, is computationally complex. Recently, researches gradually pay attention to the Spatial GCN based traffic data forecasting models[16, 44, 20, 22, 23], and demonstrated the main concerns to improve the prediction accuracy are to construct a adjacency graph as informative as enough and to implement effective convolution on the graph to aggregate the spatial-temporal features.

II-C Deep Learning Models Optimization through Tensor Decomposition

Tensor decomposition is the higher-order generalization of matrix decomposition [45]. It decomposes high-dimensional tensors into a sum of products of lower dimensional factors. Due to its strong capability of uncovering underlying the hidden low-dimensional structure of high-dimensional data, tensor decomposition has been widely used for data compression, dimension reduction, missing data recovery, and etc [46].

One of the features and benefits of deep learning models is the deep structure, which whereas leads to numerous model training parameters and low training efficiency. Motivated by the data compression methods, some researchers tried to utilize tensor decomposition to compress the neural model for deep learning to make a trade-off between the precision and computational efficiency. There are mainly three kinds of tensor decomposition based neural model compression methods. The first kind is to compress the whole deep learning architectures through constructing the corresponding tensor network representation, which has been successfully applied to Restricted Boltzmann Machines and convolutional architectures[47]. The second kind leverages tensor decomposition on the single layers of the network. For instance, [48] introduced the Tucker Tensor Layer as an alternative to the dense weight-matrices of neural networks. Besides, [49] introduced a multitask representation learning framework leveraging tensor factorisation to share knowledge across tasks in fully connected and convolutional DNN layers.

The use of tensor decomposition methods in graph data processing appears a lively research field recently. Tensor decomposition extracts the hidden information or main components in the original higher-order data. Graph neural networks perform end-to-end calculations on graph data. However, these graph data contain plenty of potential information. Hence, tensor decomposition is adopted to mine the hidden information of the graph data for improving the performances of the graph neural networks. For instances, to reduce the computational burden, Xu [50] utilized Tucker decomposition to perform separate filtering in small-scale space, time, and feature modes, while Zhao [51] used tensor decomposition to extract the graph structure features of each view in the common feature space.Such kinds of models have also been adopted to traffic data forecasting[52, 53].

It can been seen that the cooperation of tensor decomposition helps improve the efficiency and accuracy of deep learning including the graph neural networks models.

III Preliminaries

Definition 1: This study uses to define spatial networks: is the vertices set, in which represents the number of all nodes (each node represents the position of the corresponding sensor), and is the set of edges, which reflects the connection relationship between adjacent nodes. represents the adjacency matrix and stores the connection information, each of which represents the connection between the corresponding sections. represents the relationship between nodes in the spatial dimension.

Definition 2: Graph signal matrix : represents the number of features, represents the time step, and represents the observed value of step in the figure.

Therefore, the time and space dependencies for traffic prediction modeling can be regarded as: learning the mapping function based on the road network and road network characteristic matrix . We use to express the length of the historical spatial-temporal network sequence, represents the length of the target spatial-temporal network sequence to be predicted, which can be expressed as follows:

| (1) |

IV Spatial-Temporal Tensor Graph Neural Network

IV-A Construction of the Spatial-Temporal Tensor Graph

Inspired by [22], as shown in Fig. 1, we unify the relationship of a node with its spatially neighboring nodes and the relationship of the node with itself in neighboring time steps into a graph as input.

As shown in Fig. 2(a), we use to represent the adjacent matrix of the given spatial graph in the time step and to represent the adjacent matrix of the temporal graph between the adjacent time steps and , which equals to the connectivity denoted by the blue arrows in Fig. 1. , a block matrix, is made up of and , which represents the adjacent matrix of the localized spatial-temporal graph constructed from Fig. 1. or is the single block of . For each node in the spatial graph, we recalculate its new index in the local spatial-temporal graph by , where represents the time step number in the local spatial-temporal graph. The entries of are denoted as:

| (2) |

where denotes the node in localized spatial-temporal graph.

However, the 0-1 binary entries can only depict whether there is connectivity between two adjacent nodes, but fail to show the magnitude of the connectivity (i.e. the weight of each entry). To solve this problem, many existing researches[22, 23]update the weights of each block (sub-graph) separately though learning models, which leads to increment in the volume of model learning parameters and ignores the possible potential correlations among the blocks, e.g, the spatial connectivity in the time step may be correlated with that in the time step . Instead, this paper tries to utilize an information maximization mapping method that attempts to minimize the model parameters and consider the spatial-temporal correlations among the blocks fully to update the weights of all the entries of simultaneously, i.e. reconstruct the graph. First, for more general and natural spatial-temporal correlations expression [24], we construct the block matrix into a tensor model as through a reshaping operation, as shown in Fig. 2(b), in which N represent the number of nodes in the spatial graph, and represents the number of blocks in . The block in the block matrix is arranged as the lateral slice in the tensor . Then, Tucker decomposition, a kind of multiple linear mapping, is deployed to reconstruct . It decomposes into a core tensor multiplied by the factor matrix along each mode as follows:

| (3) |

where, denotes the n-mode product of with , is the dimension of the i-th mode of ; P, Q and R are the number of components (i.e., columns) in the factor matrices; represents the vector outer product and is the p-th column vector of . Finally, the core tensor , which can capture the hidden information and interaction characteristics among each mode of , is used to reconstruct the fusion graph as off-line though an unfolding-like operation, as shown in Fig. 2(c). Specifically, in the unfolding-like operation, every three slices are arranged in the m-th row of the new block matrix . The objective function of Tucker decomposition for is compactly formulated as:

| (4) |

where is the Stiefel manifold containing all rank-d orthonormal bases in and denotes the L2 (or Frobenius) norm returning the summation of the squared entries of its tensor argument. If is solution to 4, then the core tensor is expressed by:

| (5) |

Specifically, the L1-Tucker decomposition [54], an improved Tucker decomposition model which replaces the L2 norm in the objective function of Tucker decomposition into L1 norm and has shown high efficiency, is adopted in this paper. The objective function of the L1-Tucker decomposition for is as follows:

| (6) |

It is notable that, to keep the size of the graph unchangeable, during the tensor decomposition, the size of the core tensor is fixed as the as same as the original tensor.

.

IV-B Spatial-Temporal Tensor Graph Convolutional Network

The Spatial-Temporal Synchronous Graph Convolutional Module (STSGCM) [22] is deployed in this paper to capture the localized spatial-temporal heterogeneous correlations in the graph reconstructed in Section 4.1. Furthermore, to learn the global spatial-temporal homogeneous correlations, we assemble a Dilated Convolution Module [16] with STSGCM into a spatial- temporal tensor graph convolution layer (STTGCL). Fig 3 shows the specific structure of the model.

IV-B1 Spatial-Temporal Tensor Graph Convolution Module

Since the spatial-temporal graph constructed in Section 4.1 puts the nodes at different time steps into a same environment without distinguishing them, it obscures the time attribute of each node. To solve this problem, we equip position embedding [55] to the spatial-temporal network series as Song [22] did, so that the model can take the spatial and temporal information into account, which can enhance the ability to model the spatial-temporal correlations. In STTGCM, GLU is selected as the activation function of graph convolution, so the graph convolution operation can be described as follows:

| (7) |

is the graph adjacency matrix constructed in Section 4.1. belongs to the l-th input of the graph convolution layer. , , , are the weight parameters and bias parameters that can be obtained by learning. represents the activation function and represents the element product. Gated linear units control which nodes’ information can be passed to the next layer.

Then, we can aggregate the complex spatial relationships by stacking layers. Here, we select the maximum pool as the aggregation operation. Finally, we cut the information of the previous and next time steps, because the information of these steps has been aggregated during the graph convolution operation, which would cause information redundancy if not deleted. The cutting form is shown in Fig 1. There are T time steps, and every three neighbouring time steps are in a group. Then the outputs of STTGCM for the T-2 time step are the final outputs. These outputs are connected in series into a matrix as the output of STTGCL layer. That can be formulated as:

| (8) |

where represents the outputs of the -th STTGCM.

IV-B2 Dilated Convolution Module

STTGCM can capture the heterogeneity in the local spatial-temporal graph effectively through multiple modules in different time periods, but ignored the global spatial-temporal homogeneity which also has a great influence in the prediction accuracy [23]. Hence, we introduce the dilated convolution, like many works [16, 20], to capture the global spatial-temporal homogeneity, as shown in Fig 4. Dilated convolution allows the input of convolution to be sampled at intervals, and the dilated rate is controlled by .

IV-B3 Loss Function

Huber Loss is a parametric Loss function used in regression problems, it has the advantage of enhancing the robustness of mean square error (MSE) to outliers. When the prediction deviation is less than , it uses the squared error. When the prediction deviation is greater than , the linear error is adopted. Hence, we choose Huber loss as the loss function.

| (9) |

represents the basic truth value, represents the prediction of the model, and is the threshold parameter that controls the square error loss range.

V Experiments

In this section, we conducted a series of experiments on four real-world datasets, and evaluated the performances of the proposed model.

V-A Datasets

We verified the effectiveness of our proposed model on four highway traffic datasets (http://pems.dot.ca.gov/). These data are collected from the Caltrans Performance Measurement System (PeMS). The flow data is aggregated to 5 minutes, which means there are 12 points in the traffic flow data for one hour and 288 points for one day. Table I provides the detailed information of the four datasets. One hour 12 continuous time steps historical data is used to predict next hour’s 12 continuous time steps data.

| Datasets | Number of sensors | Time Steps | Time range |

|---|---|---|---|

| PEMS03 | 358 | 26208 | 2018/9/1-2018/11/30 |

| PEMS04 | 307 | 16992 | 2018/1/1-2018/2/28 |

| PEMS07 | 883 | 28224 | 2017/5/1-2017/8/31 |

| PEMS08 | 170 | 17856 | 2016/7/1-2016/8/31 |

V-B Models for Comparison

Our proposed model will be compared with the following models:

-

•

SVR [56]: Support Vector Regression is a classical traditional time series analysis model.

-

•

LSTM [12]: Long-Short Term Memory belongs to a typical kind of Recurrent Neural Networks.

-

•

DCRNN [44]: Diffusion Convolution Recurrent Neural Network is a model that uses diffusion recurrent neural networks to capture spatial-temporal dependencies.

-

•

STGCN [16]: Spatial-temporal Convolution Network integrates Chebnet and 2D convolution to obtain spatial-temporal correlations.

-

•

ASTGCN(r) [42]: Attention Based Spatial Temporal Graph Convolutional Networks introduces spatial-temporal attention mechanisms into the graph convolution network model. Only recent components of modeling periodicity is taken to keep fair comparison

-

•

Graph WaveNet [20]: A framework combines adaptive adjacency matrix into graph convolution with 1D dilated convolution.

-

•

STSGCN [20]: Spatial-temporal Synchronous Graph Convolution Network uses local spatiotemporal sub-graph modules to independently model local correlations.

-

•

STFGNN [23]: Spatial-Temporal Fusion Graph Neural Networks integrates the fusion graph module and a new gate convolution module into a unified layer, which can learn the temporal and spatial dependencies to deal with long sequences.

V-C Experiment Settings

To keep fair comparison with the previous works, all the data is split with the ratio 6:2:2 into training sets, verification sets and test sets. All experiments are repeated on all datasets for ten times.

The number of time steps in a local temporal-spational network is selected as 3 and the model consists of 4 STTGCLs. Each STTGCM includes 3 graph convolutional operations with 64, 64, 64 filters respectively. The dilated coefficient of the dilated convolution module is 2. In the training process, we set the batch size to be 32, learning rate to be 0.003 and epoch equals to 5000, and we used an early-stop strategy. The threshold parameter of loss function is 1. All experiments were trained and tested under the computational environment with one Intel(R) Core(TM) i9-12900KF CPU @ 5.20Ghz and NVIDIA GeForce RTX 3090 Ti. Besides, in this work, we deploy the core tensor obtained by the tensor decomposition to reconstruct the graph adjacency matrix. Since the diagonal entries of the core tensor represent the connection of a node with itself at the same time step, which should be the largest theoretically. So we adjust them to be the max value 1 manually during the decomposition.

The mean absolute error(MAE), the mean absolute percentage error (MAPE) and the root mean square error (RMSE) are used to evaluate the prediction performances of the proposed model:

| (10) |

| (11) |

| (12) |

where n is the total number of 5-min traffic flow data for prediction in test datasets. and are the true and predicted values respectively.

V-D Experimental Results ans Analysis

V-D1 Prediction accuracy comparisons

Table II shows the prediction results comparison of different methods. It is obvious that our proposed model is always superior to others on all the four datasets.

| Baseline methonds | SVR | LSTM | DCRNN | STGCN | ASTGCN | Graph WaveNet | STSGCN | STFGNN | STTGCN | |

| Datasets | Metrics | |||||||||

| PEMS03 | MAE | 21.07±0.00 | 21.33±0.24 | 18.18±0.15 | 17.49±0.46 | 17.69±1.43 | 19.85±0.03 | 17.48±0.15 | 16.77±0.09 | 15.20±0.17 |

| MAPE(%) | 21.51±0.46 | 23.33±4.23 | 18.91±0.82 | 17.15±0.45 | 19.40±2.24 | 19.31±0.49 | 16.78±0.20 | 16.30±0.09 | 14.95±0.56 | |

| RMSE | 35.29±0.02 | 35.11±0.50 | 30.31±0.25 | 30.12±0.70 | 29.66±1.68 | 32.94±0.18 | 29.21±0.56 | 28.34±0.46 | 26.60±0.58 | |

| PEMS04 | MAE | 28.70±0.01 | 27.14±0.20 | 24.70±0.22 | 22.70±0.64 | 22.93±1.29 | 25.45±0.03 | 21.19±0.10 | 19.83±0.06 | 19.08±0.20 |

| MAPE(%) | 19.20±0.01 | 18.20±0.40 | 17.12±0.37 | 14.59±0.21 | 16.56±1.36 | 17.29±0.24 | 13.90±0.05 | 13.02±0.05 | 12.61±0.11 | |

| RMSE | 44.56±0.01 | 41.59±0.21 | 38.12±0.26 | 35.55±0.75 | 35.22±1.90 | 39.70±0.04 | 33.65±0.20 | 31.88±0.14 | 30.96±0.41 | |

| PEMS07 | MAE | 32.49±0.00 | 29.98±0.42 | 25.30±0.52 | 25.38±0.49 | 28.05±2.34 | 26.85±0.05 | 24.26±0.14 | 22.07±0.11 | 20.36±0.13 |

| MAPE(%) | 14.26±0.03 | 13.20±0.53 | 11.66±0.33 | 11.08±0.18 | 13.92±1.65 | 12.12±0.41 | 10.21±1.65 | 9.21±0.07 | 8.62±0.10 | |

| RMSE | 50.22±0.01 | 45.84±0.57 | 38.58±0.70 | 38.78±0.58 | 42.57±3.31 | 42.78±0.07 | 39.03±0.27 | 35.80±0.18 | 33.92±0.23 | |

| PEMS08 | MAE | 23.25±0.01 | 22.20±0.18 | 17.86±0.03 | 18.02±0.14 | 18.61±0.40 | 19.13±0.08 | 17.13±0.09 | 16.64±0.09 | 15.09±0.09 |

| MAPE(%) | 14.64±0.11 | 14.20±0.59 | 11.45±0.03 | 11.40±0.10 | 13.08±1.00 | 12.68±0.57 | 10.96±0.07 | 10.60±0.06 | 9.77±0.14 | |

| RMSE | 36.16±0.02 | 34.06±0.32 | 27.83±0.05 | 27.83±0.20 | 28.16±0.48 | 31.05±0.07 | 26.80±0.18 | 26.22±0.15 | 24.37±0.16 | |

The proposed STTGCN model works best on the PEMS08 datasets, which outperforms the second performer STFGNN with improvements of 1.55 and 1.85 on MAE and RMSE. The STTGCN achieves improved average performances on MAE, MAPE, and RMSE,compared to other methods, which outperforms the second performer STFGNN with improvements of 1.395, 0.795, and 1.598 on MAE, MAPE and RMSE, and outperforms the STSGCN with improvement of 2.43 on RMSE. The traditional forecasting methods (SVR and LSTM) that only considered the temporal correlations while ignored the spatial correlations perform the worst. The experimental results confirm that our approach can construct more informative graph for the network and learn richer spatial-temporal correlations of traffic flow data to provide more accurate forecasting. STSGCN and STFGNN achieve better performances than DCRNN, STGCN, ASTGCN and Graph WaveNet, because they can capture the spatial-temporal correlations directly through a fusion graph model instead of using different types of neural network modules and sophisticated mechanisms for modeling spatial and temporal correlations separately. STFGNN outperforms STSGCN mainly because that it assembled a gated convolution to learn the global homogeneous correlations except for the localized spatial-temporal dependencies learned by STSGCN.

Fig 5 shows the prediction details of STTGCN and STSGCN for a certain road segment on PEMS04. It is interesting to find that STTGCN outperforms STSGCN prominently in the morning and evening peaks. This means our method may be more robust when dealing with the large-scale traffic flow.

V-D2 Comparison of the model parameters quantities

Table III presents the model parameters quantities of STTGCN, STFGNN and STSGCN. It can be seen that the necessary model parameters of STTGCN are much less than the other two methods especially for the large-scale dataset like PEMS07, which means STTGCN is superior in the computational cost for higher training efficiency. This is mainly because that STSGCN and STFGNN involves the mask matrix learning process which produces too many parameters. STTGCN deploys a tensor decomposition operation to reconstruct and update the graph adjacency matrix offline, which helps to avoid such a learning process and hence reduce the model computational cost as increasing the prediction accuracy.

| PEMS03 | PEMS04 | PEMS07 | PEMS08 | |

|---|---|---|---|---|

| STSGCN | 3496212 | 2872686 | 15358062 | 1661332 |

| STFGNN | 4968652 | 3873580 | 25918252 | 1756108 |

| STTGCN | 1255308 | 1242252 | 1389708 | 1207108 |

V-D3 Comparison of adopting different tensor decomposition models

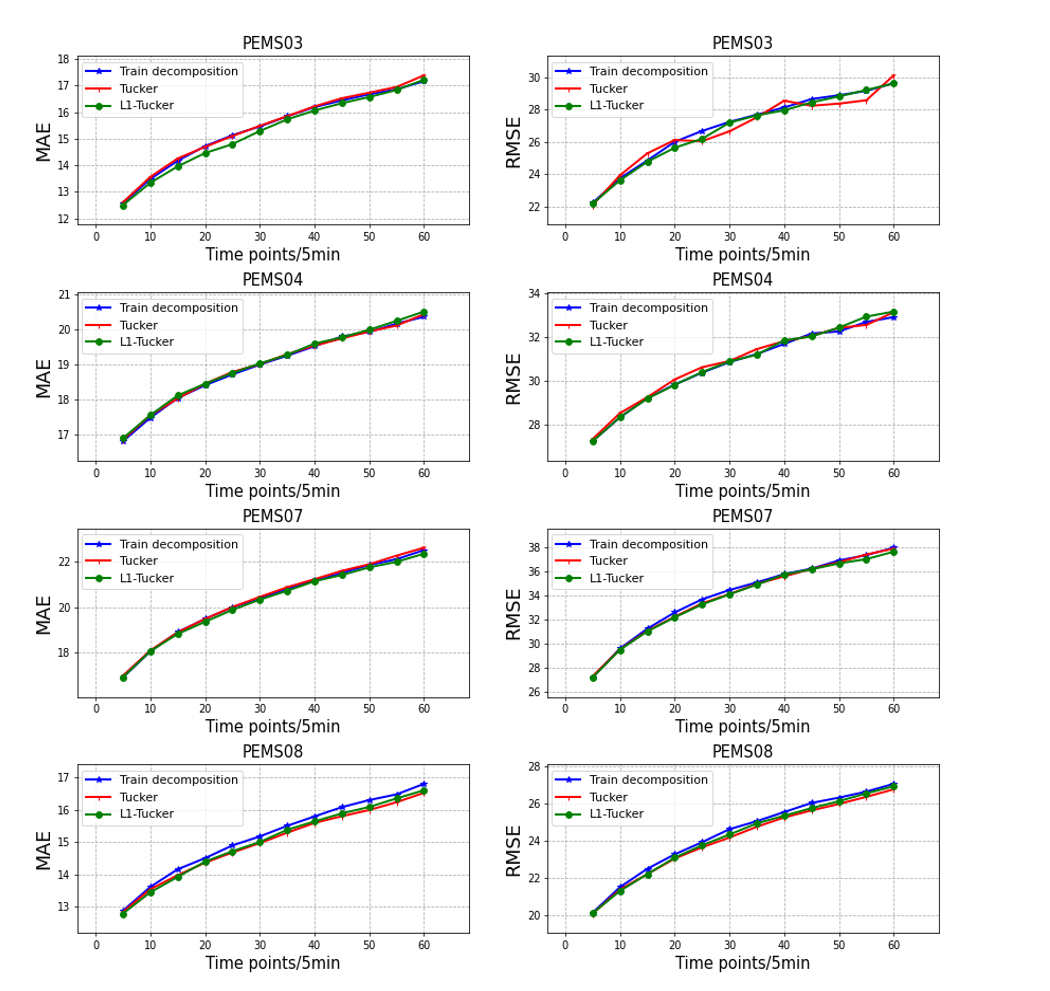

Adopting the tensor decomposition to reconstruct the adjacency graph is a main innovation point and highlight of this work. Hence, choosing a proper decomposition model is of great significance. Fig 6 presents the performances of using the following three different tensor factorization models on the four datasets.

We tested the above three tensor decomposition methods for graph reconstructing on predicting a random one-hour period of traffic flow on all the four datasets. Fig 6 shows the MAE and RMSE in each time point. In the PEMS08 dataset, we can see that the Train decomposition based method, whose prediction error rises rapidly after the second time points, is inferior to the Tucker decomposition based methods. This may be because that the Train decomposition is mainly suitable for processing super high-order() tensors but not the 3-order tensor constructed in this paper. The Tucker and the L1-Tucker methods show little differences in the performance of prediction accuracy on all the datasets, but L1-Tucker trends to be more robust in the PEMS03 and PEMS04 datasets, especially in PEMS03.

For further comparison, we tested the computational consumption of the three models. As shown in Fig 7, to achieve the same prediction accuracy, L1-Tucker is of the highest efficiency in the large-scale PEMS03 and PEMS07 datasets. Hence, leveraging the effectiveness and efficiency for prediction, L1-Tucker is deployed in this paper to reconstruct and improve the graph model.

V-D4 The node relationships in the reconstructed graph

In order to investigate the role of tensor decomposition in our model intuitively, we shown a hotmap (Fig 8) which presents the relationships of 12 random detectors in the reconstructed adjacency graph for the PEMS08 dataset. Fig 8(a) shows the real-world physical distances between neighboring detectors, which is used to construct the given spatial graph. Darker colors indicate farther distances, except for that the yellow grids, whose values equal to 0, represent there is no connection between the corresponding two nodes. Fig 8(b) shows the reconstructed adjacency graph by our model, the i-th row represents the correlation magnitudes between each detector and the i-th detector. For example, the relationship between the 0th and 7th detectors is weaker than that between the 1th and 11th detectors. This is mainly because the distances between the 0th and 7th detectors are much further as shown in Fig 8(a). Besides, there are also relatively strong relationships demonstrated between some detectors that are not connective in the physical space (e.g. the 4th and 10th detectors) and the relationships between two closely connected detectors may be not always that strong(e.g. the 0th and 11th detectors). This means the reconstructed adjacency graph learns more informative relationships not only based on the physical connects but also considering some other factors such as the road capacity,traffic control, artificial preferences, etc.

VI Conclusion

In this paper, we proposed a new graph convolutional network model for spatial-temporal traffic flow forecasting. It can not only capture the underlying spatial-temporal dependencies effectively through an informative graph, but also learn the localized spatial-temporal heterogeneity and the global spatial-temporal homogeneity simultaneously.

The main findings of this paper are: (1) the graph reconstructed by the core tensor obtained through tensor decomposition, especially the L1-Tucker decomposition, is much more informative than the original given spatial graph; (2) deploying the graph reconstructed and updated by tensor decomposition is beneficial for enhancing both the effectiveness and efficiency of traffic data forecasting; (3) learning the localized heterogeneous and global homogeneous spatial-temporal correlations simultaneously through an assembling model can improve traffic data forecasting accuracy, which is consistent with the conclusions of previous works.

Above all, this paper suggests a new way of utilizing the tensor decomposition method to improve the graph convolutional network model for traffic data forecasting. However, the sub-graph information constituting the fusion graph is also important, so we will explore how to construct more informative sub-graphs according to the spatial and temporal properties of traffic data in the future (e.g. the functional similarity graph / the transportation connectivity graph). In addition, how to aggregate the spatial-temporal information is of great significance in the graph convolutional network model, hence we will continuously devote to investigating more efficient and effective learning frameworks.

References

- [1] J. Zhang, F.-Y. Wang, K. Wang, W.-H. Lin, X. Xu, and C. Chen, “Data-driven intelligent transportation systems: A survey,” IEEE Transactions on Intelligent Transportation Systems, vol. 12, no. 4, pp. 1624–1639, 2011.

- [2] L. Zhao, Y. Song, C. Zhang, Y. Liu, P. Wang, T. Lin, M. Deng, and H. Li, “T-gcn: A temporal graph convolutional network for traffic prediction,” IEEE Transactions on Intelligent Transportation Systems, vol. 21, no. 9, pp. 3848–3858, 2019.

- [3] Q. Zhang, J. Chang, G. Meng, S. Xiang, and C. Pan, “Spatio-temporal graph structure learning for traffic forecasting,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 01, 2020, pp. 1177–1185.

- [4] B. L. Smith and M. J. Demetsky, “Traffic flow forecasting: comparison of modeling approaches,” Journal of transportation engineering, vol. 123, no. 4, pp. 261–266, 1997.

- [5] M. Lippi, M. Bertini, and P. Frasconi, “Short-term traffic flow forecasting: An experimental comparison of time-series analysis and supervised learning,” IEEE Transactions on Intelligent Transportation Systems, vol. 14, no. 2, pp. 871–882, 2013.

- [6] J. Van Lint and C. Van Hinsbergen, “Short-term traffic and travel time prediction models,” Artificial Intelligence Applications to Critical Transportation Issues, vol. 22, no. 1, pp. 22–41, 2012.

- [7] M. G. Karlaftis and E. I. Vlahogianni, “Statistical methods versus neural networks in transportation research: Differences, similarities and some insights,” Transportation Research Part C: Emerging Technologies, vol. 19, no. 3, pp. 387–399, 2011.

- [8] C.-H. Wu, J.-M. Ho, and D.-T. Lee, “Travel-time prediction with support vector regression,” IEEE transactions on intelligent transportation systems, vol. 5, no. 4, pp. 276–281, 2004.

- [9] X. Zhan, S. Zhang, W. Y. Szeto, and X. M. Chen, “Multi-step-ahead traffic flow forecasting using multi-output gradient boosting regression tree,” Tech. Rep., 2018.

- [10] W. Huang, G. Song, H. Hong, and K. Xie, “Deep architecture for traffic flow prediction: deep belief networks with multitask learning,” IEEE Transactions on Intelligent Transportation Systems, vol. 15, no. 5, pp. 2191–2201, 2014.

- [11] Z. Cui, K. Henrickson, R. Ke, and Y. Wang, “Traffic graph convolutional recurrent neural network: A deep learning framework for network-scale traffic learning and forecasting,” IEEE Transactions on Intelligent Transportation Systems, vol. 21, no. 11, pp. 4883–4894, 2019.

- [12] S. Hochreiter and J. Schmidhuber, “Long short-term memory,” Neural computation, vol. 9, no. 8, pp. 1735–1780, 1997.

- [13] D. Zhang and M. R. Kabuka, “Combining weather condition data to predict traffic flow: a gru-based deep learning approach,” IET Intelligent Transport Systems, vol. 12, no. 7, pp. 578–585, 2018.

- [14] J. Zhang, Y. Zheng, and D. Qi, “Deep spatio-temporal residual networks for citywide crowd flows prediction,” in Thirty-first AAAI conference on artificial intelligence, 2017.

- [15] B. Yu, M. Li, J. Zhang, and Z. Zhu, “3d graph convolutional networks with temporal graphs: A spatial information free framework for traffic forecasting,” arXiv preprint arXiv:1903.00919, 2019.

- [16] B. Yu, H. Yin, and Z. Zhu, “Spatio-temporal graph convolutional networks: A deep learning framework for traffic forecasting,” arXiv preprint arXiv:1709.04875, 2017.

- [17] X. Kong, J. Zhang, X. Wei, W. Xing, and W. Lu, “Adaptive spatial-temporal graph attention networks for traffic flow forecasting,” Applied Intelligence, vol. 52, no. 4, pp. 4300–4316, 2022.

- [18] X. Zhang, C. Huang, Y. Xu, L. Xia, P. Dai, L. Bo, J. Zhang, and Y. Zheng, “Traffic flow forecasting with spatial-temporal graph diffusion network,” in Proceedings of the AAAI conference on artificial intelligence, vol. 35, no. 17, 2021, pp. 15 008–15 015.

- [19] M. Lv, Z. Hong, L. Chen, T. Chen, T. Zhu, and S. Ji, “Temporal multi-graph convolutional network for traffic flow prediction,” IEEE Transactions on Intelligent Transportation Systems, vol. 22, no. 6, pp. 3337–3348, 2021.

- [20] Z. Wu, S. Pan, G. Long, J. Jiang, and C. Zhang, “Graph wavenet for deep spatial-temporal graph modeling,” arXiv preprint arXiv:1906.00121, 2019.

- [21] L. Bai, L. Yao, C. Li, X. Wang, and C. Wang, “Adaptive graph convolutional recurrent network for traffic forecasting,” Advances in neural information processing systems, vol. 33, pp. 17 804–17 815, 2020.

- [22] C. Song, Y. Lin, S. Guo, and H. Wan, “Spatial-temporal synchronous graph convolutional networks: A new framework for spatial-temporal network data forecasting,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 01, 2020, pp. 914–921.

- [23] M. Li and Z. Zhu, “Spatial-temporal fusion graph neural networks for traffic flow forecasting,” in Proceedings of the AAAI conference on artificial intelligence, vol. 35, no. 5, 2021, pp. 4189–4196.

- [24] A. Cichocki, N. Lee, I. Oseledets, A.-H. Phan, Q. Zhao, D. P. Mandic et al., “Tensor networks for dimensionality reduction and large-scale optimization: Part 1 low-rank tensor decompositions,” Foundations and Trends® in Machine Learning, vol. 9, no. 4-5, pp. 249–429, 2016.

- [25] X. Chen, Z. He, and J. Wang, “Spatial-temporal traffic speed patterns discovery and incomplete data recovery via svd-combined tensor decomposition,” Transportation research part C: emerging technologies, vol. 86, pp. 59–77, 2018.

- [26] H. Tan, Y. Wu, B. Shen, P. J. Jin, and B. Ran, “Short-term traffic prediction based on dynamic tensor completion,” IEEE Transactions on Intelligent Transportation Systems, vol. 17, no. 8, pp. 2123–2133, 2016.

- [27] J. B. Estrach, W. Zaremba, A. Szlam, and Y. LeCun, “Spectral networks and deep locally connected networks on graphs,” in 2nd International conference on learning representations, ICLR, vol. 2014, 2014.

- [28] M. Defferrard, X. Bresson, and P. Vandergheynst, “Convolutional neural networks on graphs with fast localized spectral filtering,” Advances in neural information processing systems, vol. 29, 2016.

- [29] T. N. Kipf and M. Welling, “Semi-supervised classification with graph convolutional networks,” arXiv preprint arXiv:1609.02907, 2016.

- [30] Y. Hechtlinger, P. Chakravarti, and J. Qin, “A generalization of convolutional neural networks to graph-structured data,” arXiv preprint arXiv:1704.08165, 2017.

- [31] W. Hamilton, Z. Ying, and J. Leskovec, “Inductive representation learning on large graphs,” Advances in neural information processing systems, vol. 30, 2017.

- [32] P. Velickovic, G. Cucurull, A. Casanova, A. Romero, P. Lio, and Y. Bengio, “Graph attention networks,” stat, vol. 1050, p. 20, 2017.

- [33] X. Ma, Z. Tao, Y. Wang, H. Yu, and Y. Wang, “Long short-term memory neural network for traffic speed prediction using remote microwave sensor data,” Transportation Research Part C: Emerging Technologies, vol. 54, pp. 187–197, 2015.

- [34] J. Li, L. Gao, W. Song, L. Wei, and Y. Shi, “Short term traffic flow prediction based on lstm,” in 2018 Ninth International Conference on Intelligent Control and Information Processing (ICICIP). IEEE, 2018, pp. 251–255.

- [35] Y.-H. Chan, A. K.-F. Lui, and S.-C. Ng, “Short term traffic flow prediction with neighbor selecting gated recurrent unit,” in 2021 IEEE International Conference on Systems, Man, and Cybernetics (SMC). IEEE, 2021, pp. 857–862.

- [36] W. Zhao, Y. Gao, T. Ji, X. Wan, F. Ye, and G. Bai, “Deep temporal convolutional networks for short-term traffic flow forecasting,” IEEE Access, vol. 7, pp. 114 496–114 507, 2019.

- [37] R. Jiang, X. Song, D. Huang, X. Song, T. Xia, Z. Cai, Z. Wang, K.-S. Kim, and R. Shibasaki, “Deepurbanevent: A system for predicting citywide crowd dynamics at big events,” in Proceedings of the 25th ACM SIGKDD international conference on knowledge discovery & data mining, 2019, pp. 2114–2122.

- [38] L. Wang, X. Geng, X. Ma, F. Liu, and Q. Yang, “Cross-city transfer learning for deep spatio-temporal prediction,” arXiv preprint arXiv:1802.00386, 2018.

- [39] Z. Zheng, Y. Yang, J. Liu, H.-N. Dai, and Y. Zhang, “Deep and embedded learning approach for traffic flow prediction in urban informatics,” IEEE Transactions on Intelligent Transportation Systems, vol. 20, no. 10, pp. 3927–3939, 2019.

- [40] Z. Lv, J. Xu, K. Zheng, H. Yin, P. Zhao, and X. Zhou, “Lc-rnn: A deep learning model for traffic speed prediction.” in IJCAI, vol. 2018, 2018, p. 27th.

- [41] K. Guo, Y. Hu, Z. Qian, H. Liu, K. Zhang, Y. Sun, J. Gao, and B. Yin, “Optimized graph convolution recurrent neural network for traffic prediction,” IEEE Transactions on Intelligent Transportation Systems, vol. 22, no. 2, pp. 1138–1149, 2021.

- [42] S. Guo, Y. Lin, N. Feng, C. Song, and H. Wan, “Attention based spatial-temporal graph convolutional networks for traffic flow forecasting,” in Proceedings of the AAAI conference on artificial intelligence, vol. 33, no. 01, 2019, pp. 922–929.

- [43] C. Zheng, X. Fan, C. Wang, and J. Qi, “Gman: A graph multi-attention network for traffic prediction,” in Proceedings of the AAAI conference on artificial intelligence, vol. 34, no. 01, 2020, pp. 1234–1241.

- [44] Y. Li, R. Yu, C. Shahabi, and Y. Liu, “Diffusion convolutional recurrent neural network: Data-driven traffic forecasting,” arXiv preprint arXiv:1707.01926, 2017.

- [45] T. G. Kolda and B. W. Bader, “Tensor decompositions and applications,” SIAM review, vol. 51, no. 3, pp. 455–500, 2009.

- [46] H. Tan, G. Feng, J. Feng, W. Wang, Y.-J. Zhang, and F. Li, “A tensor-based method for missing traffic data completion,” Transportation Research Part C: Emerging Technologies, vol. 28, pp. 15–27, 2013.

- [47] N. Cohen and A. Shashua, “Convolutional rectifier networks as generalized tensor decompositions,” in International Conference on Machine Learning. PMLR, 2016, pp. 955–963.

- [48] G. G. Calvi, A. Moniri, M. Mahfouz, Z. Yu, Q. Zhao, and D. P. Mandic, “Tucker tensor layer in fully connected neural networks,” arXiv preprint arXiv:1903.06133, 2019.

- [49] Y. Yang and T. Hospedales, “Deep multi-task representation learning: A tensor factorisation approach,” 2016.

- [50] X. Xu, T. Zhang, C. Xu, Z. Cui, and J. Yang, “Spatial-temporal tensor graph convolutional network for traffic prediction,” arXiv preprint arXiv:2103.06126, 2021.

- [51] X. Zhao, Q. Dai, J. Wu, H. Peng, M. Liu, X. Bai, J. Tan, S. Wang, and P. Yu, “Multi-view tensor graph neural networks through reinforced aggregation,” IEEE Transactions on Knowledge and Data Engineering, 2022.

- [52] Z. Diao, X. Wang, D. Zhang, Y. Liu, K. Xie, and S. He, “Dynamic spatial-temporal graph convolutional neural networks for traffic forecasting,” in Proceedings of the AAAI conference on artificial intelligence, vol. 33, no. 01, 2019, pp. 890–897.

- [53] Z. Li, L. Li, Y. Peng, and X. Tao, “A two-stream graph convolutional neural network for dynamic traffic flow forecasting,” in 2020 IEEE 32nd International Conference on Tools with Artificial Intelligence (ICTAI). IEEE, 2020, pp. 355–362.

- [54] D. G. Chachlakis, A. Prater-Bennette, and P. P. Markopoulos, “L1-norm tucker tensor decomposition,” IEEE Access, vol. 7, pp. 178 454–178 465, 2019.

- [55] J. Gehring, M. Auli, D. Grangier, D. Yarats, and Y. N. Dauphin, “Convolutional sequence to sequence learning,” in International conference on machine learning. PMLR, 2017, pp. 1243–1252.

- [56] C.-C. Chang and C.-J. Lin, “Libsvm: a library for support vector machines,” ACM transactions on intelligent systems and technology (TIST), vol. 2, no. 3, pp. 1–27, 2011.

- [57] D. Bigoni, A. P. Engsig-Karup, and Y. M. Marzouk, “Spectral tensor-train decomposition,” SIAM Journal on Scientific Computing, vol. 38, no. 4, pp. A2405–A2439, 2016.