Spatial-Angular Attention Network for

Light Field Reconstruction

Abstract

Typical learning-based light field reconstruction methods demand in constructing a large receptive field by deepening their networks to capture correspondences between input views. In this paper, we propose a spatial-angular attention network to perceive non-local correspondences in the light field, and reconstruct high angular resolution light field in an end-to-end manner. Motivated by the non-local attention mechanism [1, 2], a spatial-angular attention module specifically for the high-dimensional light field data is introduced to compute the response of each query pixel from all the positions on the epipolar plane, and generate an attention map that captures correspondences along the angular dimension. Then a multi-scale reconstruction structure is proposed to efficiently implement the non-local attention in the low resolution feature space, while also preserving the high frequency components in the high-resolution feature space. Extensive experiments demonstrate the superior performance of the proposed spatial-angular attention network for reconstructing sparsely-sampled light fields with non-Lambertian effects.

Index Terms:

Light field reconstruction, deep learning, attention mechanism.I Introduction

Through capturing both intensities and directions from sampled light rays, light field enables high-quality view synthesis without the need of complex and heterogeneous information such as geometry and texture. More importantly, benefiting from the light field rendering technology [3], photorealistic views can be rendered in real-time regardless of the scene complexity or non-Lambertian effect. This high quality rendering technology usually requires a densely-sampled light field (DSLF), where the disparity between adjacent views should be less than one pixel. However, typical DSLF capture either suffers from a long period of acquisition time (e.g., DSLF gantry system [3]) or falls into the well-known resolution trade-off problem. That is, due to the limitation of the sensor resolution [4], the light field is sparsely sampled either in the angular domain [5] or the spatial domain [6].

Recently, a more promising way is the fast capturing of a sparsely-sampled (angular domain) light field followed by direct reconstruction or depth-based view synthesis methods [7, 8] by using advanced deep learning techniques. On the one hand, typical learning-based reconstruction methods [9, 10, 11] employ multiple convolutional layers to map the low angular resolution light field to the DSLF. But due to the limited perceptive range of convolutional filters [12], these networks fail to collect enough information (i.e., the spatial-angular correspondences) when dealing with large disparities, leading to aliasing effects in the reconstructed light field. On the other hand, depth-based view synthesis methods [7, 13, 14] address the large disparity problem through plane sweep (depth estimation), and then synthesize novel views using learning-based prediction. However, these methods require depth consistency along the angular dimension, and thus, often fail to handle the depth ambiguity caused by the non-Lambertian effect.

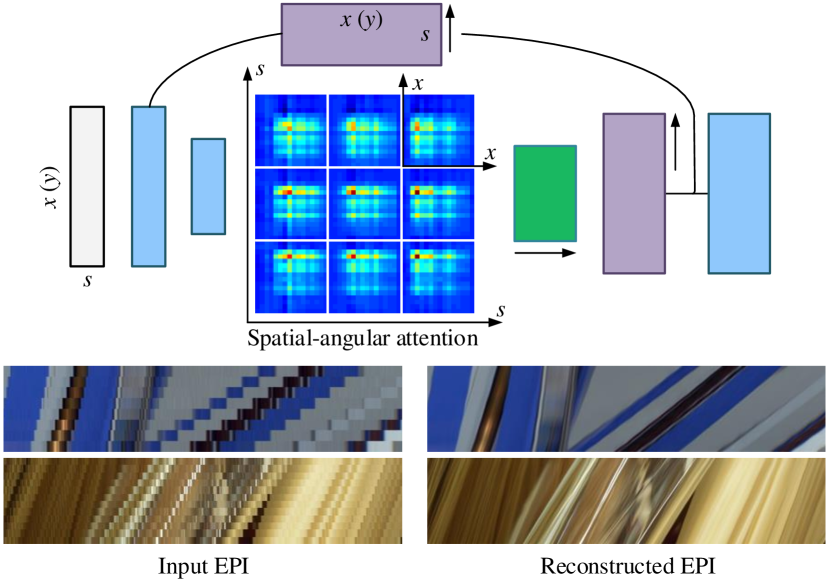

In this paper, we propose a Spatial-Angular Attention Network, termed as SAA-Net, to achieve DSLF reconstruction from a sparse input. The proposed SAA-Net perceives correspondences on the Epipolar Plane Image (EPI) in a non-local fashion, addressing the aforementioned non-Lambertian issue and large disparity issue in a unified framework. Specifically, the SAA-Net consists of two parts, including a spatial-angular attention module (Sec. IV-A) and a U-net backbone (Sec. IV-B). Motivated by the non-local attention mechanism in [1, 2], for each pixel in the input light field, the Spatial-Angular Attention Module (termed as SAAM for short) computes the responses of pixels from all the positions on the epipolar plane, and generates an attention map that records the correspondences along the angular dimension, as shown in Fig. 1 (top). This correspondence information in the attention map is then applied to guide the reconstruction in the angular dimension via matrix multiplication and channel-to-angular pixel shuffling.

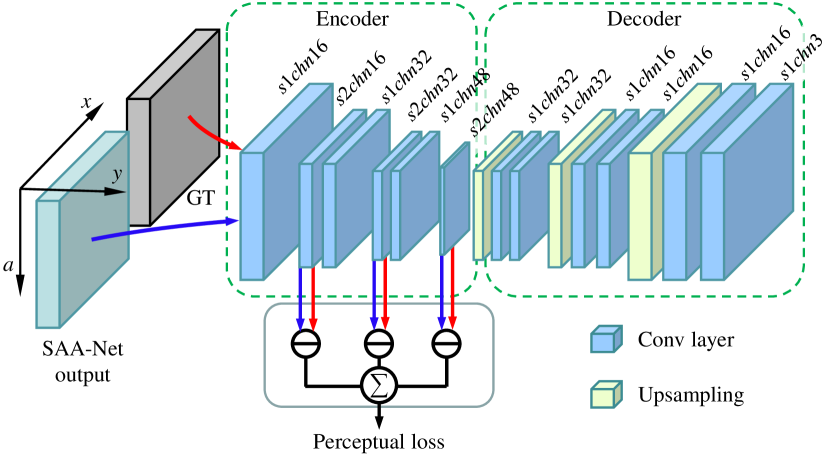

To efficiently perform the non-local attention, we propose a convolutional neural network with multi-scale reconstruction structure. The network follows the basic architecture of the U-net, i.e., an encoder-decoder structure with skip connections. The encoder compresses the input light field in the spatial dimensions and removes redundancy information for the SAAM. Rather than simply reconstruct the light field at the end of the network, we propose a multi-scale reconstruction structure by performing deconvolution along the angular dimension in each skip connection branch, as shown in Fig. 1 (top). The proposed multi-scale reconstruction structure maintains the view consistency in the low spatial resolution feature space while preserving fine details in the high spatial resolution feature space.

For network training, we propose a spatial-angular perceptual loss that is specifically designed for the high-dimensional light field data (Sec. V). Rather than computing the high-level feature loss [17, 18] by feeding each view in the light field into a 2D CNN (e.g., the commonly-used VGG [19]), we pre-train a 3D auto-encoder that considers the consistency in both the spatial and angular dimensions of the light field. In summary, we make the following contributions111The source code is available at https://github.com/GaochangWu/SAAN.:

-

•

A spatial-angular attention module that perceives correspondences non-locally on the epipolar plane;

-

•

A multi-scale reconstruction structure for efficiently performing the non-local attention in the low spatial resolution feature space while also preserving high frequencies;

-

•

A spatial-angular perceptual loss specifically designed for high-dimensional light field data.

We demonstrate the superiority of the SAA-Net by performing extensive evaluations on various light field datasets. The proposed network presents high-quality DSLF on challenging cases with both non-Lambertian effects and large disparities, as illustrated in Fig. 1 (bottom).

II Related Work

II-A Light Lield Reconstruction

First, we briefly review the major works on light field view synthesis (or view synthesis) depending on whether the depth information is explicitly used.

Depth image-based view synthesis. Typically, these kind of approaches first estimate the depth of a scene, then warp and blend the input views to synthesize a novel view [20, 8]. Conventional light field depth estimation approaches follow the pipeline of stereo matching [21], i.e., cost computation, cost aggregation (or cost volume filtering), disparity regression and post refinement. The main difference is that light field converts disparity from discrete space into a continuous space [22], delivering various depth cues that enable light field depth estimation with different strategies, such as structure tensor-based local direction estimation [22], depth from correspondence [23], depth from defocus [24, 25] and depth from parallelogram cues [26]. Moreover, some learning-based approaches incorporate the aforementioned depth estimation pipeline with 2D convolution-based feature extraction, 3D convolution-based cost volume aggregation and depth regression [27]. For novel view synthesis, input views are warped to the novel viewpoints with sub-pixel accuracy using bilinear interpolation and blended in different manners, e.g., total variation optimization [22], soft blending [20] and learning-based synthesis [28].

Recently, researchers mainly focus on the studies for maximizing the quality of synthesized views based on the deep learning technique. Flynn et al. [13] proposed a learning-based method to synthesize novel views using the predicted probabilities and colors for each depth plane. Kalantari et al. [7] further employed a sequential network setting to infer depth (disparity) and color, and optimized the model via end-to-end training. Following the sequential network setting, Meng et al. [29] developed a confidence estimation network between depth and color networks to infer pixel-wise blending weights. Shi et al. [8] proposed to blend the warped views in both pixel level and feature level. Jin et al. proposed to use a regular sampling pattern (four corner views) [30] and a flexible sampling pattern [31] for light field depth estimation, and then perform the reconstruction using spatial-angular alternating refinement. Ko et al. [32] introduced a dynamic blending filter to generate the filter coefficients adaptively according to the warped views. Different from the sequential network settings mentioned above, Zhou et al. [33] proposed a novel learning-based Multi-Plane Image (MPI) representation that infers a novel view by alpha blending of different images. Mildenhall et al. [14] further proposed to use multiple MPIs to synthesize a local light field.

Depth image-based view synthesis approaches solve the problem of large correspondence gap in the sparsely-sampled light field by using depth estimation and warping. But the scene depth are based on the Lambertian assumption, and thus, these approaches will suffer from depth ambiguity when addressing the non-Lambertian effect, as demonstrated in Fig. 8 (second case). In this paper, we address the problem of large correspondence gap with a non-local attention mechanism to capture large gap correspondences. Since we do not rely on depth information, the proposed method shows higher reconstruction quality on the non-Lambertian cases.

Reconstruction without explicit depth. These kind of approaches treat light field reconstruction as the approximation of plenoptic function. In the Fourier domain, the sparse sampling in the angular dimension produces overlaps between the original spectrum and its replicas, leading to aliasing effect [10]. Classical approaches [34, 35] consider a reconstruction filter (usually in a wedge shape) to extract the original signal while filtering the aliasing high-frequency components. For instance, Vagharshakyan et al. [36] utilized an adapted discrete shearlet transform in the Fourier domain to remove the high-frequency spectra that introduce aliasing effects. Shi et al. [37] performed DSLF reconstruction as an optimization for sparsity in the continuous Fourier domain.

Inspired by the success of deep learning in computer vision, depth-independent plenoptic reconstruction approaches [9, 10] were widely investigated by using deep convolution networks. Specifically, Zhu et al. [38] proposed an auto-encoder that combines convolutional layers and convLSTM layers [39]. For explicitly addressing the aliasing effects, Wu et al. [10] took advantage of the clear texture structure of the EPI and proposed a “blur-restoration-deblur” framework. However, when applying a large blur kernel for large disparities, this approach fails to recover the high-frequency details, and thus leading to blur effect. Liu et al. [40] further applied a multi-stream network that takes 3D EPIs in different directions as input. In addition to extracting slices from the plenoptic function, Yeung et al. [11] directly fed the entire 4D light field into a pseudo 4D convolutional network, and proposed a novel spatial-angular alternating convolution to iteratively refine the angular dimensions of the light field. Jin et al. [41] further extended the spatial-angular alternating convolution to the problem of compressive light field reconstruction. Wang et al. [42] applied pseudo 4D convolution to reconstruct the two angular dimensions of the input light field sequentially. Wu et al. [43] proposed an evaluation network for EPIs with different shear amount, termed as sheared EPI structure, and further boosted its performance with an end-to-end optimization framework [44]. With this structure, the networks implicitly use depth information to select a well reconstructed EPI. However, the performances of these networks are limited by the finite perceptive field of the convolutional neurons, especially when handling the large disparity problem.

II-B Attention Mechanism

Attention was first built to imitate the mechanism of human perception that mainly focuses on the salient part [45, 46, 47]. Vaswani et al. [48] indicated that the attention mechanism is able to solve the long term dependency problem even without using convolution operation or recurrent neural cell. Attention mechanism is typically embedded within a conventional network backbone, such as a VGG-net [19], a ResNet [49, 50] or even a simple multi-layer perceptron (MLP) [51]. It encourages the network to focus on the salient parts by assigning an adaptive weight (attention map) to the extracted features.

Hu et al. [52] and Woo et al. [50] proposed to use a global pooling (max-pooling or average-pooling) followed by an MLP to aggregate the entire information in the spatial dimension. This attention mechanism enables the network to focus on certain channels in the feature maps. However, the global pooling operation will decimate the high-frequencies in the feature maps [53], which could be unacceptable, especially for a reconstruction task. Alternatively, Vaswani et al. [48] proposed to use a weighted average of the responses from all the positions with respect to a certain position for Natural Language Processing (NLP), which is called self-attention. Wang et al. [1] further bridged the self-attention for NLP to more general tasks in computer vision, such as video classification. More concretely, a high-dimensional feature map, e.g., a 3D tensor with spatial-temporal dimensions, is reshaped into its 2D form for the operation of matrix multiplication. Different from the convolution, the self-attention allows the element in the feature map to interact with any elements regardless of their distance (range), and thus, is also termed as non-local attention.

Rather than using the non-local attention mechanism, Wang et al. [54, 55] proposed a parallax attention module to calculate the correspondence between two stereo images along the epipolar line. Tsai et al. [56] introduced an attention module in the angular dimension to weight the contribution of each view in a light field. Guo et al. [57] applied a pixel-wise attention map using convolution layers for adaptively blending feature maps from different frequency components in a light field.

Compared with the attention modules in [54, 55, 56, 57], the major difference in this paper is that the proposed attention is calculated non-locally in the 2D epipolar plane for each pixel, enabling the network to capture spatial-angular correspondences. That also makes us different from existing non-local attention mechanisms [1, 2, 48], which perform non-local attention across the entire data dimensions. Another significant difference is that we embed a channel-to-angular pixel shuffling operation into the non-local attention mechanism to explicitly achieve the light field reconstruction task. To the best of our knowledge, our method is the first to apply non-local attention to the light field reconstruction task.

III Problem Analysis and Motivation

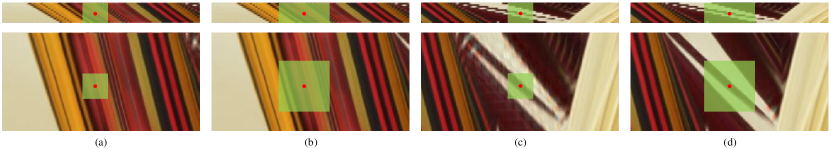

In this section, we empirically show that the performance of a learning-based light field reconstruction method is closely related to the perception range of its neurons (or convolutional filters) on the epipolar plane, especially when handling a light field with large disparity problem.

Deep neural network is proved to be a powerful technique in solving ill-posed inverse problems [58]. For the light field reconstruction task, both network structure and the disparity range of the scene are significant to the performance of light field reconstruction. Since the disparity range of the scene is unalterable once the light field is acquired, most deep learning-based light field reconstruction methods [9, 7, 10, 11] pursue a more appropriate architecture for better performance. Specifically, the depth-based view synthesis methods convert the feature maps into a physically meaningful depth map, while the depth-independent methods directly convert feature maps to novel views. Essentially, both two kinds of approaches adopt convolution operation to generate responses (feature maps) among corresponding pixels.

To quantitatively measure the capability of correspondence capturing, we apply the concept of receptive field introduced in [12, 59]. The receptive field measures the number of pixels that are connected to a particular filter in the CNN, i.e., the number of correspondence pixels perceived by a certain convolutional filter.

We analyse the reconstruction qualities of two networks with the same structure (U-net) and the same number of parameters (around 120K) but different receptive fields, as illustrated in Fig. 2. For a scene with small disparity (about 3 pixels in the demonstrated example), networks with either small receptive field ( pixels) or large receptive field ( pixels) can reconstruct high angular resolution light fields (EPIs) with view consistency, as shown in Fig. 2(a) and Fig. 2(b). However, for a scene with large disparity (about 9 pixels), the network with small receptive field is not able to collect enough information from corresponding pixels on the epipolar plane, as shown clearly at the top of Fig. 2(c). Since the actual size of the receptive field can be smaller than its theoretical size [59], the actual receptive field might not be able to cover the disparity range of the input light field, leading to severe aliasing effects in the reconstructed result, as shown at the bottom of Fig. 2(c). In contrast, the network with a large receptive field can produce high quality result (Fig. 2(d)).

Due to the limitation of parameter amount, it is intractable to expand the receptive field by pursuing a deeper network or a larger filter size. The fundamental idea of our proposed light field reconstruction method is to capture the correspondence non-locally across the spatial and angular dimensions of the light field. We achieve this with two features: 1) a spatial-angular attention module that captures non-local correspondence between any two pixels on the epipolar plane; and 2) an encoder-decoder network that reduces the redundancies in the light field to efficiently achieve the non-local perception.

IV Spatial-Angular Attention Network

In this section, we first introduce the overall architecture of the proposed SAA-Net for light field reconstruction, and then introduce the proposed spatial-angular attention module in details. The input of the SAA-Net is a 3D light field slice with two spatial dimensions and one angular dimension, i.e., or . By splitting light fields into 3D slices, the proposed network can be applied to both 3D light fields from a single-degree-of-freedom gantry system and 4D light fields from plenoptic camera and camera array system.

For a 4D light field , we adopt a hierarchical reconstruction strategy similar with that in [10]. The strategy first reconstruct 3D light fields using slices and , then use the synthesized 3D light fields to reconstruct the final 4D light field.

IV-A Network Architecture

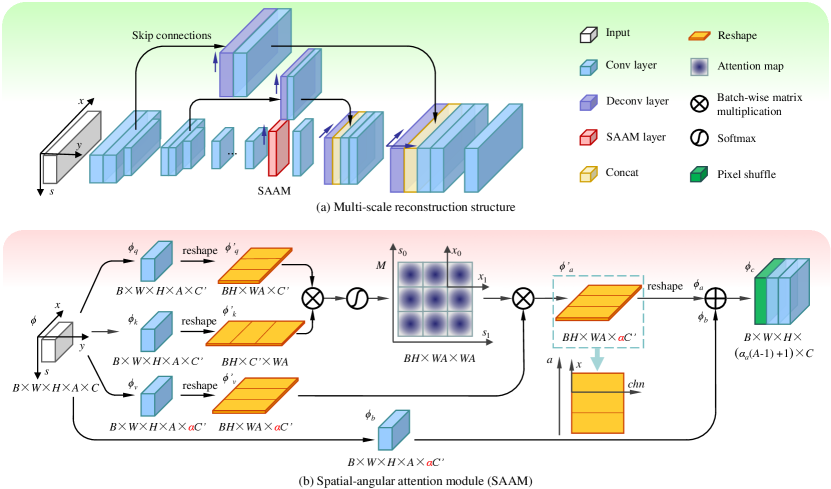

We propose a network with Multi-Scale Reconstruction (MSR) structure to maintain view consistency (i.e., continuity in the angular dimension) in the low spatial resolution feature space while preserving fine details in the high spatial resolution feature space. As shown in Fig. 3(a), the backbone of the proposed SAA-Net follows the encoder-decoder structure with skip connections (also known as U-net). But the proposed SAA-Net has two particular differences: 1) In each skip connection, we use a deconvolution layer along the angular dimension before feeding the feature maps to the decoder part; 2) In the encoder part, we use strided convolution with stride only in the spatial dimensions of the light field. Table I provides the detailed configuration of the proposed SAA-Net.

The encoder part of the SAA-Net generates multi-scale light field features and reduces the redundant information in the spatial dimension to save the computational and GPU memory costs for the non-local perception. We use two convolutional layers (3D) with stride and to downsample the spatial resolution of the light field feature with ratio 4 and 2 along the width and height dimension, respectively. Before each downsampling, two 3D convolutional layers with filter sizes and (width, height and angular) are employed to take place of a single convolutional layer with filter size , reducing parameters without performance degradation [11].

The skip connections copy the feature layers before each downsampling layer in the encoder, as shown in Fig. 3(a). For each skip connection, a deconvolution layer (also known as transposed convolution layer) is applied to upsample the feature map in the angular dimension, followed by a convolution. Since the angular information mainly concentrates on the 2D EPI for reconstructing a 3D light field , the filter size in each deconvolution layer in the skip connection is set to .

The decoder part of the SAA-Net upsamples the feature map from the spatial-angular attention module (Sec. IV-B) by using two deconvolution layer with stride and in the spatial dimensions (width and height), respectively. The decoder also receives information from the skip connections by concatenating the features from corresponding levels along the channel dimension [60], as shown in Fig. 3(a). We then use two 3D convolutional layers with filter sizes and to compress the channel numbers in each level of the decoder. This can be considered as the blending of the light field features from different reconstruction scale. Note that all the reconstructions (upsampling operations) in the angular dimension are implemented in the skip connections and the spatial-angular attention module, where the latter will be introduced in the following subsection.

IV-B Spatial-Angular Attention Module

Inspired by the non-local attention mechanism in [1, 2], we propose a Spatial-Angular Attention Module (SAAM) to disentangle the disparity information in light field. The main differences between the proposed SAAM and the previous non-local attention [1, 2] are as follows: 1) Since the disparity information is encoded in the EPI, the non-local attention mechanism is performed in the 2D epipolar plane rather than the entire 3D space; 2) We model the light field reconstruction with pixel shuffling [61] that disentangles the reconstructed angular information from the channel dimension.

A straightforward choice to perform spatial-angular attention is to embed the attention module in each resolution scale of the U-net. However, implementing non-local perception in the full resolution light field (feature map) is intractable due to the high computation complexity and GPU memory cost. Alternatively, we insert the proposed SAAM between the encoder and decoder, i.e., to perform non-local operation in the low-resolution feature space, as shown in Fig. 3.

Since features in a 3D CNN will be a 5D tensor (i.e., batch, width, hight, angular and channel), we first apply two convolution layers with kernel size to produce two feature layers and with size of . The channel number is set to be (i.e., in our implementation) for computation efficiency. Then the feature layers and are reshaped into 3D tensors and of size and , respectively. In this way, we merge the angular and width dimensions ( and or and in a light field) together to implement the non-local perception on the epipolar plane.

We apply batch-wise matrix multiplication between and and use a softmax function to produce an attention map as illustrated in Fig. 3(b). The attention map is composed of matrices with shape . Each matrix can be considered as a 2D expansion map of a 4D tensor (the batch and height dimensions are neglected). The point indicates the response of light field position to position in the latent space. In other words, the attention map is able to capture correspondence among all the views in the input 3D light field.

| Layer | Input | |||

| Encoder | ||||

| Conv1 | 1/24 | |||

| Conv1 | 24/24 | Conv1 | ||

| Conv1 | 24/48 | Conv1 | ||

| Conv2 | 48/48 | Conv1 | ||

| Conv2 | 48/48 | Conv2 | ||

| Conv2 | 48/96 | Conv2 | ||

| Conv3 | 96/48 | Conv2 | ||

| Conv3 | 48/48 | Conv3 | ||

| Conv3 | 48/48 | Conv3 | ||

| Conv3 | 48/48 | Conv3 | ||

| Conv3 | 48/48 | Conv3 | ||

| Skip connection | ||||

| Deconv4 | 24/24 | Conv1 | ||

| Conv4 | 24/24 | Deconv4 | ||

| Deconv5 | 48/48 | Conv2 | ||

| Conv5 | 48/48 | Deconv5 | ||

| SAAM | ||||

| Decoder | ||||

| Conv6 | 48/96 | SAAM | ||

| Deconv6 | 96/48 | Conv6 | ||

| Concat1 | - | - | - | Conv6; Conv4 |

| Conv6 | 48/48 | Concat1 | ||

| Conv6 | 48/48 | Conv6 | ||

| Deconv7 | 48/24 | Conv6 | ||

| Concat2 | - | - | - | Conv7; Conv5 |

| Conv7 | 24/24 | Concat2 | ||

| Conv7 | 24/24 | Conv7 | ||

| Conv8 | 24/1 | Conv7 | ||

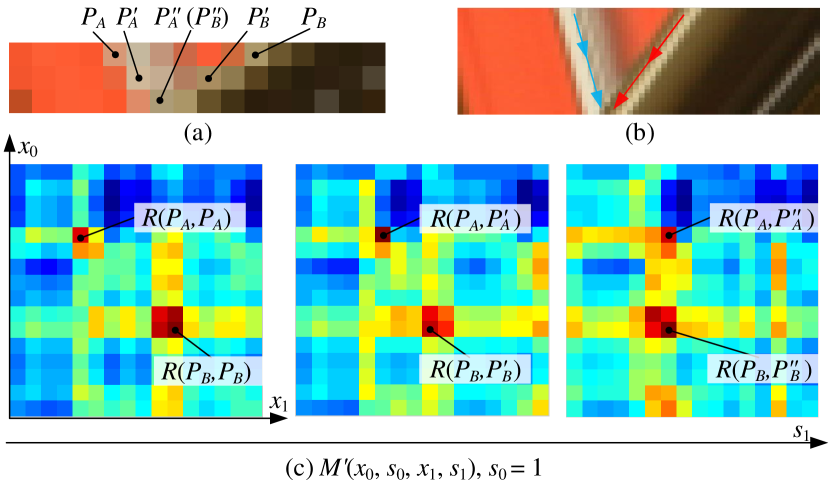

We demonstrate the non-local perception of the proposed SAAM by visualizing a part of the attention map before the softmax function as shown in Fig. 4. In this example, there are two points and with remarkable visual features as shown in Fig. 4(a), and their corresponding points in other views are marked as () and (). As the viewpoint changes along the angular dimension, the background point will be occluded by the foreground point , which is demonstrated more obviously in Fig. 4(b). Fig. 4(c) shows three sub-maps extracted from the attention map with and , respectively. It can be clearly seen that a point will have the highest response at the location of its correspondence in the attention map. For instance, the response at the location for the corresponding patch (the middle sub-figure of Fig. 4(c)), and the response at the location for the corresponding patch (the right sub-figure of Fig. 4(c)). For the occluded point , the location of the maximum response changes from to . In this case, the attention module is able to locate the occluded point through its surrounding pixels. More demonstrations of spatial-angular attention map can be found in Sec. VII-A.

Feature and are obtained by another two convolutions in a similar manner as that for and . The main difference is that the channel numbers of these two feature layers are , where, denotes the reconstruction factor (upsampling scale in the angular dimension) of the network. Another batch-wise matrix multiplication is applied between the attention map and (reshaped from ), resulting a 3D tensor . We then reshape into a 5D tensor .

| Layer | Input | ||

|---|---|---|---|

| Conv1 | / | Encoder | |

| Conv2 | / | Encoder | |

| Conv3 | / | Encoder | |

| Conv4 | / | Encoder | |

| Reshape1 | - | /- | Conv1 |

| Reshape2 | - | /- | Conv2 |

| Reshape3 | - | /- | Conv3 |

| MatMul1 | - | - | Reshape1; Reshape2 |

| Softmax | - | - | MatMul1 |

| MatMul2 | - | - | Softmax; Reshape3 |

| Reshape4 | - | -/ | MatMul2 |

| Add | - | / | Reshape4; Conv4 |

| Pixel shuffle | / | Add | |

| Conv5 | / | Pixel shuffle | |

| Conv6 | / | Conv5 |

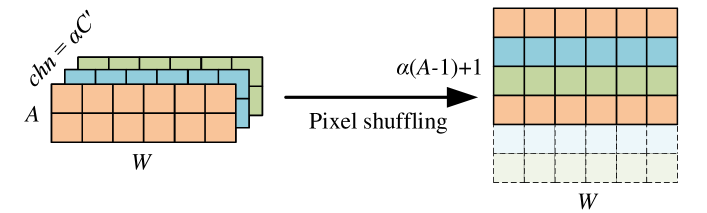

Using the aforementioned SAAM, the reconstructed angular information can be well encoded in the channel dimension of the 5D tensor. By disentangling it with channel-to-angular pixel shuffling, we can reconstruct a high angular resolution light field (feature map) in a non-local manner. Specifically, we first multiply the feature layer by a trainable scale parameter (initialized as 0) and add back to the feature layer . We then apply the channel-to-angular pixel shuffling and reconstruct a 5D tensor . As illustrated in Fig. 5, the channel-to-angular pixel shuffling rearranges elements from channel dimension to angular dimension222Different from typical spatial super-resolution, upsampling a light field by generates a light field of views. So we simply remove the last views from the reconstructed light field.. The final output of the SAAM is generated by two convolutional layers with kernel sizes of and , respectively.

By combining the proposed SAAM with the feature maps in the skip connections, the network is able to reconstruct light field with view consistency while also preserving the high frequency components. Detailed parameter setting of the SAAM is listed in Table II.

V Network Training

V-A Spatial-Angular Perceptual Loss

Typical learning-based light field reconstruction or view synthesis methods optimize the network parameters by formulating a pixel-wise loss between the inferred image and the desired view (or EPI [10]). Recently, researches [33, 62, 63, 14] show that formulating the loss function in the high-level feature space will motivate the restoration of high-frequency components. This high-level feature loss, also known as perceptual loss, can be computed from part of the feature layers in the autologous network [17] or other pre-trained networks [18], such as the commonly-used VGG network [19].

In this paper, we propose a spatial-angular perceptual loss that is specifically designed for the high-dimensional light field data. Existing approaches [14, 63, 29] for light field reconstruction apply perceptual loss between 2D sub-aperture images, neglecting the view consistency constraint in the angular dimension. Alternatively, we propose to use a 3D light field encoder to map the 3D light fields into high-dimensional feature tensors (width, height, angular and channel).

To achieve this, we design another 3D encoder-decoder network (auto-encoder) that learns to extract the high-level features for the proposed spatial-angular perceptual loss333The architecture of the 3D auto-encoder for the perceptual loss is different with that of the SAA-Net.. Note that the auto-encoder can be also generalized to a 4D form. But given that some light field datasets have only one angular dimension (e.g., light fields from gantry system in [15]) and the proposed SAA-Net also takes 3D light field as its input, we only adopts 3D convolution in the encoder and decoder.

The proposed auto-encoder for the spatial-angular perceptual loss uses the output of the SAA-Net or the desired 3D light field (ground truth) as input, as shown in Fig. 6. It has 12 convolutional layers () with kernel size and 3 bilinear upsampling layers. The encoder part includes the first 6 convolutional layers, where 3 convolutional layers () with stride 2 in each dimension are used to compress the light field from low-level pixel space into high-level feature space. The decoder part includes the last 6 convolutional layers and 3 upsampling layers to restore the light field from the latent representations. Detailed configuration of each layer (stride and number of channels) is shown in Fig. 6.

The auto-encoder learns how to extract high-level features through unsupervised learning, i.e., the network learns to predict the input 3D light field. The objective for training the auto-encoder is , where denotes the auto-encoder. We use the same training dataset (Sec. V-B), learning rate and optimizer (Sec. V-C) as those for the SAA-Net.

To compute the final spatial-angular perceptual loss, we feed the output of the SAA-Net as well as the ground truth light field to the auto-encoder, as shown in Fig. 6. And the spatial-angular perceptual loss is defined as follows

where denotes the feature maps obtained from the th layer in the encoder, and is a set of hyperparameters for the proposed spatial-angular perceptual loss.

To prevent the potential possibility that different light field patches are mapped to the same feature vector [17], our loss function also contains a pixel-wise term using Mean Absolute Error (MAE) between and , i.e.,

Then the final loss function for training the SAA-Net is defined as

The two terms are weighted by the set of hyperparameters in the perceptual loss.

V-B Training Data

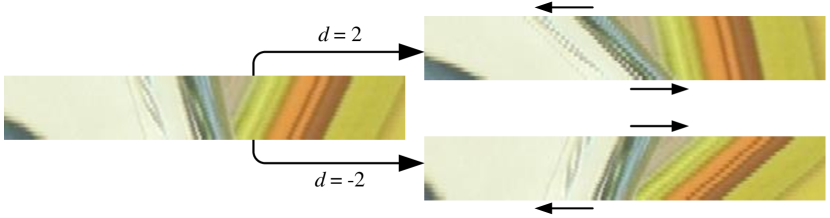

We use light fields from the Stanford (New) Light Field Archive [64] as the training dataset, which contains 12 light fields444The light field Lego Gantry Self Portrait is excluded from the training dataset since the moving camera may influence the reconstruction performance. with views. Since the network input is 3D light fields, we can extract 17 and 17 in each 4D light field set. Similar with the data augmentation strategy proposed in [38], we augment the extracted 3D light fields using shearing operation [65]

where is the angular resolution of the 3D light field , and is the resulting 3D light field with shear amount . can be obtained following a similar manner. In practice, we use two shear amounts . The shearing-based data augmentation increases the number of training examples by 2 times. More importantly, the disparity effects in the augmented light field will be more obvious as shown in Fig. 7, enabling the network to address the large disparity problem.

To accelerate the training procedure, the extracted 3D light fields are cropped into sub-light fields with a spatial resolution of (width and height for or height and width for ) and a stride of 40 pixels. About examples can be extracted from the 3D light fields (original and sheared).

V-C Implementation Details

We train two models with reconstruction factors . The input/output angular resolution of the training samples for these two models are 6/16 and 5/17, respectively. Although the reconstruction factor of the network is fixed, we can achieve a flexible upsampling rate through network cascade. The training is performed on the Y channel (i.e., the luminance channel) of the YCbCr color space. We initialize the weights of both convolution and deconvolution layers by drawing randomly from a Gaussian distribution with a zero mean and a standard deviation of , and the biases by zero. The network is optimized by using ADAM solver [66] with learning rate of (, ) and mini-batch size of 28. The training model is implemented in the Tensorflow framework [67]. The network converges after steps of backpropagation, taking about 35 hours on a NVIDIA Quadro GV100.

VI Evaluations

In this section, we evaluate the proposed SAA-Net on various kinds of light fields, including those from both gantry systems and from plenoptic camera (Lytro Illum [4]). We mainly compare our method with six state-of-the-arts learning-based methods including Kalantari et al. [7] (depth-based), LLFF [14] (MPI representation), Wu et al. [10], Yeung et al. [11], HDDRNet [63] and DA2N [44] (without explicit depth). To fully demonstrate the effectiveness of our design choices, we also perform ablation studies by training our network without the SAAM, without the MSR structure and without the spatial-angular perceptual loss, respectively. The quantitative evaluations is reported by measuring the average PSNR and SSIM [68] values over the synthesized views of the luminance channel in the YCbCr space. Please refer to the submitted video for more qualitative results.

| Scale | Bikes | FairyCollection | LivingRoom | Mannequin | WorkShop | Average | |

|---|---|---|---|---|---|---|---|

| Kalantari et al. [7] | 34.83 / 0.969 | 36.66 / 0.977 | 46.35 / 0.991 | 40.62 / 0.983 | 38.66 / 0.986 | 39.42 / 0.981 | |

| Wu et al. [10] | 38.39 / 0.990 | 40.32 / 0.992 | 45.48 / 0.996 | 43.26 / 0.995 | 41.55 / 0.995 | 41.80 / 0.994 | |

| Yeung et al. [11] | 39.55 / 0.993 | 40.25 / 0.993 | 47.32 / 0.997 | 44.49 / 0.996 | 43.17 / 0.996 | 42.96 / 0.995 | |

| LLFF [14] | 36.84 / 0.985 | 40.14 / 0.989 | 46.85 / 0.990 | 43.23 / 0.989 | 41.79 / 0.991 | 41.77 / 0.989 | |

| HDDRNet [63] | 38.97 / 0.994 | 40.20 / 0.994 | 43.65 / 0.997 | 42.21 / 0.996 | 42.01 / 0.997 | 41.41 / 0.996 | |

| DA2N [44] | 39.14 / 0.992 | 41.00 / 0.993 | 46.30 / 0.996 | 44.17 / 0.996 | 43.09 / 0.996 | 42.74 / 0.994 | |

| Our proposed | 40.35 / 0.994 | 42.22 / 0.995 | 48.01 / 0.997 | 44.99 / 0.996 | 45.29 / 0.997 | 44.17 / 0.996 | |

| Kalantari et al. [7] | 30.67 / 0.935 | 32.39 / 0.952 | 41.62 / 0.973 | 37.15 / 0.970 | 33.94 / 0.971 | 35.15 / 0.960 | |

| Wu et al. [10] | 31.22 / 0.951 | 30.33 / 0.942 | 42.43 / 0.991 | 39.53 / 0.989 | 33.49 / 0.977 | 35.40 / 0.970 | |

| Yeung et al. [11] | 32.67 / 0.967 | 31.82 / 0.969 | 43.54 / 0.993 | 40.82 / 0.992 | 37.21 / 0.988 | 37.21 / 0.982 | |

| LLFF [14] | 34.95 / 0.963 | 34.01 / 0.966 | 44.73 / 0.987 | 39.92 / 0.985 | 37.61 / 0.985 | 38.24 / 0.977 | |

| HDDRNet [63] | 33.97 / 0.976 | 35.08 / 0.979 | 44.83 / 0.997 | 40.60 / 0.993 | 38.54 / 0.992 | 38.60 / 0.987 | |

| DA2N [44] | 35.79 / 0.984 | 36.23 / 0.981 | 45.91 / 0.996 | 40.83 / 0.992 | 40.11 / 0.994 | 39.77 / 0.990 | |

| Our proposed | 36.54 / 0.987 | 37.62 / 0.987 | 47.25 / 0.997 | 42.08 / 0.994 | 40.55 / 0.994 | 40.81 / 0.992 | |

| MSR structure-2 | 34.13 / 0.972 | 35.72 / 0.984 | 46.21 / 0.996 | 40.97 / 0.993 | 38.66 / 0.991 | 39.14 / 0.987 | |

| MSR structure-3 | 34.20 / 0.973 | 35.53 / 0.984 | 46.79 / 0.996 | 41.04 / 0.993 | 38.85 / 0.991 | 39.28 / 0.987 | |

| MSR structure-4 | 34.45 / 0.977 | 35.05 / 0.977 | 47.00 / 0.995 | 41.01 / 0.993 | 38.26 / 0.991 | 39.15 / 0.987 | |

| w/o MSR structure | 33.76 / 0.975 | 34.35 / 0.976 | 46.07 / 0.995 | 40.19 / 0.991 | 38.05 / 0.992 | 38.48 / 0.986 | |

| w/o SAP loss | 36.43 / 0.987 | 37.29 / 0.986 | 47.10 / 0.997 | 41.81 / 0.993 | 40.18 / 0.993 | 40.56 / 0.991 |

-

“MSR structure-X” denotes the proposed network that has series of the MSR structure without using the SAAM.

VI-A Evaluations on Light Fields from Gantry Systems

In this experiment, the comparisons are performed on light fields from the MPI Light Field Archive [16] ( views of resolution , views for evaluation) and the CIVIT Dataset [15] ( views of resolution ) with upsampling scales and . The performances with respect to both angular sparsity and non-Lambertian are taken into consideration. Since the vanilla version of the network by Yeung et al. [11]555In the modified implementation, every 8 (6) views are applied to reconstruct (synthesize) a 3D light field of 22 (21) views for the networks of reconstruction factor (). and Meng et al. [63] (HDDRNet) were specifically designed for 4D light fields, we modify their convolutional layers to fit the 3D input while keeping its network architecture unchanged. The networks by Kalantari et al. [7], Yeung et al. [11], Mildenhall et al. [14] (LLFF) and Meng et al. [63] (HDDRNet) are re-trained using the same training dataset as our SAA-Net. Due to the particularity of the training datasets in [44], we do not retrain the DA2N. We perform network cascade to achieve different upsampling scales, i.e., two cascades for () upsampling using a network of reconstruction factor ().

Fig. 8 shows the reconstruction results on three light fields, Bikes, FairyCollection and WorkShop, from the MPI Light Field Archive [16] with upsampling scale (disparity range up to 33.5px). The first and the third cases have complex occlusion structures, as shown in the top and the bottom row in Fig. 8. The baseline methods [7, 10, 11, 14] fail to reconstruct the complex structures. Among them, the depth and learning-based approach [7] and the MPI-based approach [14] fail to estimate proper occlusion relations between the foregrounds and the backgrounds. The second scene is a non-Lambertian case, i.e., a refractive glass before the toys. The approach by Kalantari et al. [7] cannot reconstruct the refractive object. And the EPIs reconstructed by the baseline methods [10, 11] appear severe aliasing effects due to the limited receptive field. The MPI-based approach LLFF [14] is able to reconstruct the non-Lambertian effects in this case, but produces ghosting artifacts as marked by the red arrow in Fig. 8.

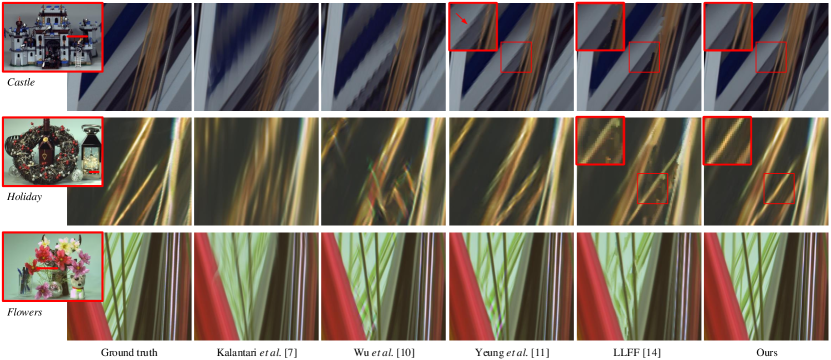

Fig. 9 shows the reconstruction results on three light fields, Castle, Holiday and Flowers, from the CIVIT Dataset [15] with upsampling scale (disparity range about 14px). The first case and the third case have thin structures with complex occlusions. The depth and learning-based approach by Kalantari et al. [7] fails to estimate depth maps accurate enough to warp the input images, and the color CNN cannot correct the misaligned views, producing ghosting artifacts as shown in Fig. 9. For the second case, we demonstrate reconstructed EPIs in a highly non-Lambertian region. Caused by the depth ambiguity, the approach by Kalantari et al. [7] produces choppiness artifacts along the angular dimension. Due to the limited receptive field of the networks, the results by Wu et al. [10] and Yeung et al. [11] show aliasing effects in various degrees. In the demonstrated cases, LLFF [14] assigns wrong planes to the tiny structures, leading to ghosting and tearing artifacts.

Table III and Table IV list the quantitative measurements ( and upsampling scales) on the light fields from the MPI Light Field Archive [16] and the CIVIT Dataset [15], respectively. Compared with the baseline approaches, the proposed SAA-Net shows superior performance on both light field datasets.

| Scale | Seal & Balls | Castle | Holiday | Dragon | Flowers | Average | |

|---|---|---|---|---|---|---|---|

| Kalantari et al. [7] | 46.83 / 0.990 | 39.14 / 0.973 | 36.03 / 0.979 | 43.97 / 0.989 | 39.00 / 0.989 | 40.99 / 0.984 | |

| Wu et al. [10] | 49.01 / 0.997 | 37.67 / 0.984 | 40.46 / 0.995 | 48.38 / 0.997 | 45.85 / 0.998 | 44.27 / 0.994 | |

| Yeung et al. [11] | 49.83 / 0.997 | 40.84 / 0.993 | 41.16 / 0.996 | 48.61 / 0.997 | 47.83 / 0.997 | 45.65 / 0.996 | |

| LLFF [14] | 47.03 / 0.990 | 40.25 / 0.988 | 39.69 / 0.987 | 47.38 / 0.990 | 44.71 / 0.991 | 43.81 / 0.989 | |

| HDDRNet [63] | 46.79 / 0.997 | 40.43 / 0.993 | 39.97 / 0.996 | 47.72 / 0.997 | 43.76 / 0.997 | 43.73 / 0.996 | |

| DA2N [44] | 48.23 / 0.997 | 42.65 / 0.993 | 41.38 / 0.996 | 48.75 / 0.998 | 46.40 / 0.998 | 45.48 / 0.996 | |

| Our proposed | 50.99 / 0.998 | 43.20 / 0.994 | 42.29 / 0.997 | 50.12 / 0.998 | 48.49 / 0.998 | 47.02 / 0.997 | |

| Kalantari et al. [7] | 43.13 / 0.985 | 36.03 / 0.965 | 32.44 / 0.961 | 39.50 / 0.985 | 35.21 / 0.973 | 37.26 / 0.974 | |

| Wu et al. [10] | 45.21 / 0.994 | 35.20 / 0.977 | 35.58 / 0.987 | 46.39 / 0.997 | 41.60 / 0.995 | 40.80 / 0.990 | |

| Yeung et al. [11] | 44.38 / 0.992 | 37.86 / 0.989 | 36.06 / 0.988 | 45.52 / 0.997 | 42.30 / 0.994 | 41.22 / 0.992 | |

| LLFF [14] | 45.50 / 0.990 | 38.60 / 0.971 | 36.69 / 0.984 | 44.80 / 0.992 | 41.19 / 0.989 | 41.36 / 0.985 | |

| HDDRNet [63] | 44.24 / 0.997 | 39.88 / 0.991 | 38.09 / 0.992 | 44.26 / 0.997 | 42.04 / 0.996 | 41.70 / 0.995 | |

| DA2N [44] | 46.19 / 0.996 | 40.77 / 0.992 | 37.99 / 0.992 | 47.19 / 0.998 | 41.95 / 0.996 | 42.82 / 0.995 | |

| Our proposed | 49.19 / 0.998 | 41.32 / 0.992 | 38.88 / 0.993 | 48.39 / 0.998 | 44.05 / 0.997 | 44.37 / 0.996 | |

| MSR structure-2 | 46.85 / 0.995 | 37.78 / 0.989 | 36.17 / 0.988 | 47.10 / 0.998 | 42.98 / 0.996 | 42.18 / 0.993 | |

| MSR structure-3 | 48.50 / 0.997 | 40.66 / 0.991 | 38.23 / 0.992 | 46.94 / 0.997 | 42.92 / 0.996 | 43.45 / 0.995 | |

| MSR structure-4 | 47.15 / 0.997 | 40.86 / 0.992 | 38.43 / 0.993 | 46.69 / 0.997 | 43.18 / 0.997 | 43.26 / 0.995 | |

| w/o MSR structure | 46.41 / 0.994 | 38.65 / 0.990 | 36.78 / 0.988 | 46.83 / 0.997 | 42.77 / 0.996 | 42.29 / 0.993 | |

| w/o SAP loss | 48.83 / 0.996 | 41.05 / 0.992 | 38.62 / 0.992 | 48.00 / 0.997 | 43.85 / 0.997 | 44.07 / 0.995 |

VI-B Evaluations on Light Fields from Lytro Illum

We evaluate the proposed approach using three Lytro light field datasets (113 light fields in total), the 30 Scenes dataset by Kalantari et al. [7], and the Reflective and Occlusions categories from the Stanford Lytro Light Field Archive [69]. In this experiment, we reconstruct a light field from views ( upsampling) and a light field from views ( upsampling). We compare our SAA-Net with 8 learning-based approaches, including 3 depth-based approaches (Kalantari et al. [7], LLFF [14] and Meng et al. [29]) and 5 approaches without explicit depth (Wu et al. [10], Wang et al. [42], Yeung et al. [11], HDDRNet [63] and DA2N [44]). Since the vanilla versions of the networks in [7, 11, 42, 63, 29] are trained on Lytro light fields, we use their open-source model (parameters) without re-training. The networks by Wu et al. [10] and Mildenhall et al. [14] (LLFF) are re-trained using the same dataset introduced in Sec. V-B. Note that the proposed network is not fine-tuned on any Lytro light field datasets, and the results are produced by the same set of network parameters for both and upsampling scales.

Table V lists the quantitative results on the evaluated Lytro light fields. The proposed SAA-Net shows competitive performance compared with the state-of-the-art light field reconstruction approach by Yeung et al. [11]. For the evaluated 113 light fields, the average PSNR / SSIM values of the proposed network are 42.55 / 0.984 for upsampling and 36.55 / 0.969 for upsampling. In comparison, the average PSNR / SSIM values of the baseline approaches with the highest performance are 41.82 / 0.984 (DA2N [44]) for upsampling and 35.81 / 0.938 (Meng et al. [29]) for upsampling. Since our network is not re-trained or fine-tuned on any Lytro light field dataset, these experimental results clearly demonstrate that our network can generalize well on light fields captured by different acquisition geometries.

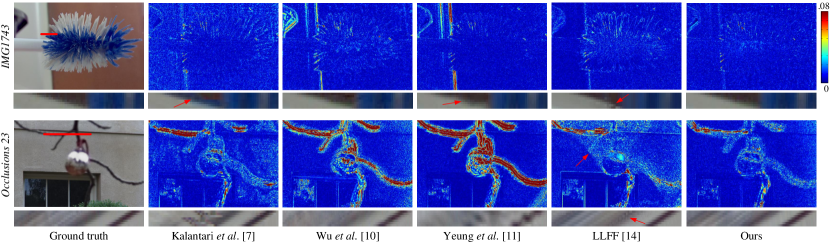

We demonstrate two cases with relatively large disparities (maximum disparity up to 13px), IMG1743 from the 30 Scenes [7] and Occlusions 23 from the Occlusions category [69], as shown in Fig. 10. In both cases, the reconstruction results by Wu et al. [10] and Yeung et al. [11] show ghosting artifacts around the region with large disparity (background in the IMG1743 case, and foreground in the Occlusions 23 case) due to their limited receptive fields. The depth and learning-based approaches by Kalantari et al. [7] and Mildenhall et al. [14] (LLFF) produce promising results in the first case, but appear tearing artifacts near the occlusion boundaries as marked by the red arrows in the EPIs. In the second case, the approach by Kalantari et al. [7] fails to estimate proper depth information, introducing misalignment as shown by the EPI. LLFF [14] produces ghosting effects due to the incorrect plane assignments around the background region, as shown by the red arrows in the figure. In comparison, the proposed SAA-Net provides reconstructed light fields with higher view consistency (as shown in the demonstrated EPIs).

| Scale | 30 Scenes | Reflective | Occlusions | |

|---|---|---|---|---|

| Kalantari et al. [7] | 39.62/0.978 | 37.78/0.971 | 34.02/0.955 | |

| Wu et al. [10] | 41.02/0.988 | 41.71/0.989 | 38.11/0.944 | |

| Wang et al. [42] | 43.82/0.993 | 39.93/0.959 | 34.69/0.923 | |

| Yeung et al. [11] | 44.53/0.990 | 42.56/0.975 | 39.27/0.945 | |

| LLFF [14] | 39.92/0.977 | 39.52/0.969 | 35.64/0.929 | |

| HDDRNet [63] | 43.02/0.989 | 40.72/0.979 | 36.13/0.958 | |

| DA2N [44] | 43.69/0.995 | 43.25/0.991 | 39.82/0.971 | |

| Our proposed | 44.75/0.996 | 44.04/0.992 | 40.32/0.972 | |

| Kalantari et al. [7] | 38.21/0.974 | 35.84/0.942 | 31.81/0.895 | |

| Wu et al. [10] | 36.28/0.965 | 36.48/0.962 | 32.19/0.907 | |

| Yeung et al. [11] | 39.22/0.977 | 36.47/0.947 | 32.68/0.906 | |

| LLFF [14] | 38.17/0.974 | 36.40/0.948 | 31.96/0.901 | |

| HDDRNet [63] | 38.33/0.967 | 36.77/0.931 | 32.78/0.909 | |

| Meng et al. [29] | 39.14/0.970 | 37.01/0.950 | 33.10/0.912 | |

| DA2N [44] | 38.99/0.986 | 36.72/0.975 | 33.14/0.950 | |

| Our proposed | 39.98/0.988 | 37.77/0.978 | 33.77/0.952 | |

| MSR structure-2 | 37.17/0.978 | 36.29/0.974 | 32.02/0.944 | |

| MSR structure-3 | 37.62/0.980 | 36.93/0.975 | 32.53/0.948 | |

| MSR structure-4 | 37.20/0.981 | 36.67/0.975 | 32.58/0.947 | |

| w/o MSR structure | 37.08/0.978 | 36.37/0.975 | 32.37/0.946 | |

| w/o SAP loss | 39.71/0.986 | 37.39/0.977 | 33.52/0.950 |

VI-C Ablation studies

In this experiment, we empirically validate the modules and losses in our SAA-Net by performing the following ablation studies on different datasets. The results are listed in the last five rows in Table IV, III and V. First, we evaluate the network with increasing series of the MSR structure while removing the SAAM. We use “MSR structure-” to represent the network with series of MSR structure. By increasing the series, the network will have a larger receptive field, e.g., the theoretical receptive field size of the MSR structure-4 reaches 247 pixels in the spatial dimension. However, since it is intractable to increase the actual size of the receptive field simply by using a deeper network [59], purely increasing the series of MSR structure has limitations in improving performance. This point is also verified by the ablation study. In comparison, the non-local attention is more effective than simply increasing the receptive field of the network.

In the second ablation study, we use a typical 3D U-net with the same convolutional layers as the backbone and remove the deconvolution layer in the skip connections, denoted as “w/o MSR structure” for short. The angular reconstruction is simply achieved by using deconvolution at the end of the network. The network parameters are kept comparable to the SAA-Net by adjusting the channel dimension of the network. For light fields from gantry systems [16, 15], the performance of the network decreases more than 2dB in terms of PSNR, as shown in Table IV and III. For light fields from Lytro Illum, the performance of the network decreases more than 1.3dB, as shown in Table V.

In the last ablation study, we train the proposed SAA-Net simply by using the pixel-wise term (MAE loss) without the proposed spatial-angular perceptual loss, denoted as “w/o SAP loss” for short. The performance (PSNR) decreases around 0.3dB as shown in Table IV, III and V.

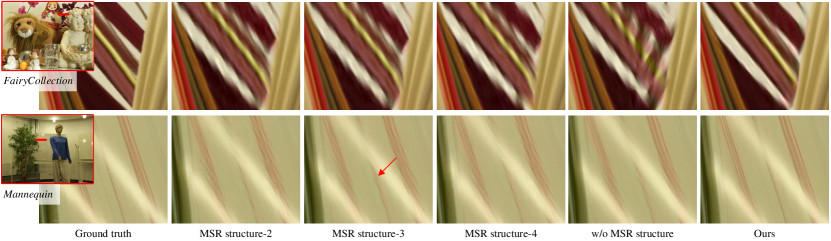

In Fig. 11, we also visualize the comparison ( upsampling) between the proposed SAA-Net, the network with different series of the MSR structure while removing the SAAM and the network without the MSR structure. The evaluated light fields are from the MPI Light Field Archive [16]. The results show that without using the proposed modules, especially the MSR structure, the SAA-Net appears severe aliasing effects around region with large disparity, e.g., the occluded leaves in the FairyCollection case and the hand writing on the reflective board in the Mannequin case.

VII Further Analysis

VII-A Spatial-Angular Attention Map

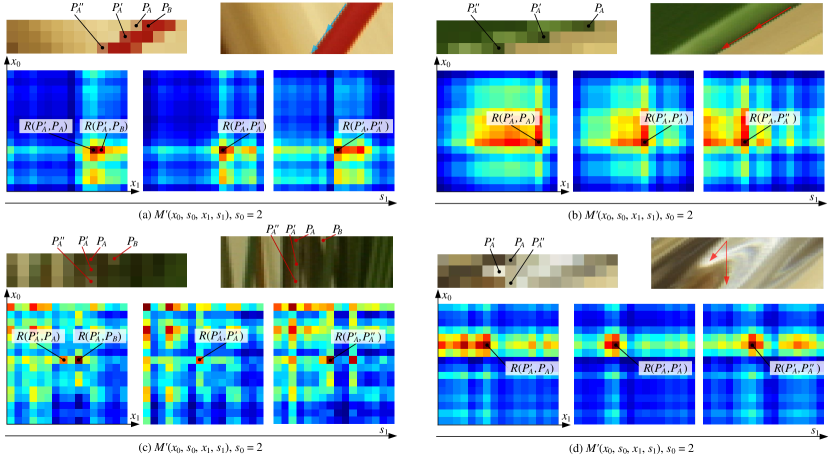

We visualize four additional attention maps (before the softmax function) on scenes with small disparity, large disparity, occlusion and non-Lambertian effect, as shown in Fig. 12. The first case (Fig. 12(a)) shows a scene with a relatively small disparity (about 7 pixels). Due to the spatial downsampling operation in the MSR structure, the disparity of the light field features in the SAAM is about 1.75 pixels, as shown in the top left figure in Fig. 12(a). Three sub-maps are shown in the bottom of Fig. 12(a), which visualize the correspondence captured by the attention mechanism. As we can see, the response with the highest value moves from at position to at position along the angular dimension. In addition, the attention map also shows a high response value at position due to the fractional disparity. This indicates that the proposed SAAM has potential to capture a correspondence with sub-pixel accuracy.

In the second case (Fig. 12(b)), we demonstrate the spatial-angular attention on a scene with a large disparity (about 16 pixels). The spatial downsampling operation in the MSR structure reduces the disparity to 4 pixels. The response in the attention map moves from at position to at position along the angular dimension. Although the SAAM only applies convolutions before the attention, it is capable to capture correspondence with large displacement.

Fig. 12(c) visualize the attention map of a scene with a complex occlusion structure (Mannequin in the MPI Light Field Archive [16]). The background white board is occluded by the foreground leaves (please refer to the sub-aperture image of the second row in Fig. 11). As shown by the demonstrated attention map in Fig. 12(c), the SAAM fails to capture a correct correspondence because of the occlusion. The highest response value is at position , which is also a background point like . However, the SAAM also generate a considerable response at position , which we speculate is inferred from other non-occluded background points.

The fourth case in Fig. 12(d) demonstrates the spatial-angular attention on a scene with non-Lambertian effect. In this case, the positional relation between the corresponding points , and does not follow a clear depth cue, as clearly shown in the top right figure of Fig. 12(d). Analogously, the responses do not follow a regular disparity pattern along the angular dimension, as visualized by the at the bottom of Fig. 12(d). This result shows that the proposed SAAM is able to catch the correspondences even for regions with non-Lambertian effects.

VII-B Tensor Decomposition for Spatial-Angular Attention

Although we propose a multi-scale reconstruction structure to save GPU memory cost, the SAAM will still occupy a large GPU memory when dealing with an input light field with high spatial-angular resolution. For example, when reconstructing light fields from the MPI Light Field Archive [16] (spatial resolution ), we have to disassemble the 3D data into sub-light fields of resolution (width height angular). Our investigation shows that the disassembling will cause quality degradation on the reconstructed results.

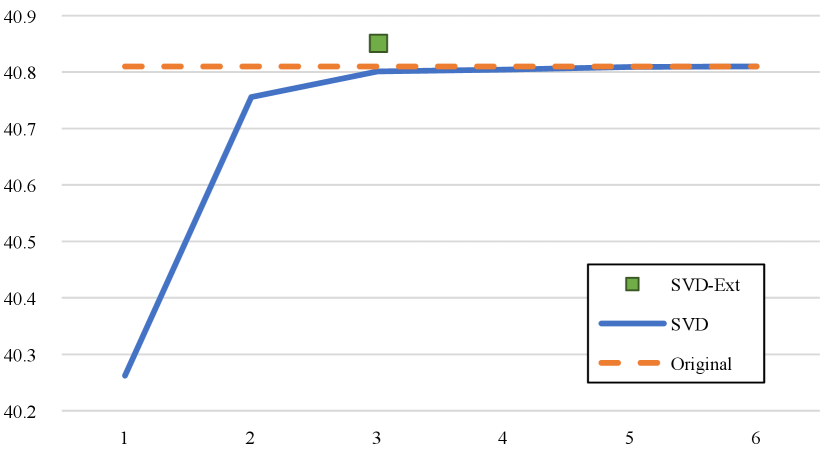

We therefore apply the truncated Singular Value Decomposition (SVD) [70] to compact the 3D tensor and before computing the attention map

where denotes or , and are two orthogonal matrices (ignoring the batch dimension), and is a diagonal matrix with singular values along its diagonal. By truncating the diagonal matrix with the largest singular values, we can get a good approximation and also compress the 3D tensors. Since the rank of the matrices are , the parameter of the truncated SVD .

Fig. 13 shows the performance on PSNR in function of the SVD decomposition using the largest singular values. As we can see from the “SVD” curve, with no less than three singular values, the SVD decomposition will maintain the network performance without using fine-tuning. Moreover, since the decomposition enables us to feed the network with higher spatial resolution input, e.g., from to , we can obtain a reconstruction result with even higher quality (0.04dB higher) when employing truncated SVD decomposition, as shown by the “SVD-Ext” (green dot) in the figure.

VII-C Limitations

The non-local attention involves outer product of large scale matrices, especially for the high-dimensional light field data. For this reason, the proposed network takes almost 15% of its inference time on the SAAM. For the 3D light field from the MPI Light Field Archive [16], the network takes about 51 seconds to reconstruct a light field from views of spatial resolution ( upsampling), i.e., 0.53s per view. For the 3D light field from the CIVIT Dataset [15], the network takes about 126 seconds to reconstruct a light field from views of spatial resolution ( upsampling), i.e., 0.65s per view. For a 4D light field from Lytro Illum, it takes about 17 seconds to reconstruct a light field from views of spatial resolution ( upsampling), i.e., about 0.35s per view. And the reconstruction of a Lytro light field from views ( upsampling) takes about 30 seconds, i.e., about 0.5s per view. The parameter number of our SAA-Net is about 338K. The above evaluations are performed on an Intel Xeon Gold 6130 CPU @ 2.10GHz with an NVIDIA TITAN Xp.

Although we apply a simple SVD decomposition to accerlate the network and compact the 3D tensor, the compression rate is limited by the rank of the matrices. Decomposing the attention map into the combination of small tensors [71] might solve this problem in a more essential way.

The another limitation of our proposed method is that repetitive patterns in the input light field can cause multiple plausible responses in the attention map, leading to misalignments in the reconstructed light fields. A possible solution is to introduce a smooth term in the attention map as in [54] to penalize multiple responses.

VIII Conclusions

We have proposed a spatial-angular attention module in a 3D U-net backbone to capture correspondence information non-locally for light field reconstruction. The introduced Spatial-Angular Attention Module (termed as SAAM) is designed to compute the responses from all the positions on the epipolar plane for each pixel in the light field and produce a spatial-angular attention map that records the correspondences. The attention map is then applied to guide light field reconstruction via channel-to-angular pixel shuffling. We further propose a multi-scale reconstruction structure based on the 3D U-net backbone that implements the SAAM efficiently in the low spatial resolution feature space, while also preserving fine details in the high spatial resolution feature space. For the network training, a spatial-angular perceptual loss is designed specifically for the high-dimensional light field data by pre-training a 3D auto-encoder. The evaluations on light fields with challenging non-Lambertian effects and large disparities have demonstrated the superiority of the proposed spatial-angular attention network.

References

- [1] X. Wang, R. Girshick, A. Gupta, and K. He, “Non-local neural networks,” in IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 7794–7803.

- [2] H. Zhang, I. Goodfellow, D. Metaxas, and A. Odena, “Self-attention generative adversarial networks,” in ICML, 2019, pp. 7354–7363.

- [3] M. Levoy and P. Hanrahan, “Light field rendering,” in SIGGRAPH, 1996, pp. 31–42.

- [4] “Lytro.” https://www.lytro.com/.

- [5] R. S. Overbeck, D. Erickson, D. Evangelakos, M. Pharr, and P. Debevec, “A system for acquiring, processing, and rendering panoramic light field stills for virtual reality,” in SIGGRAPH, 2019.

- [6] Y. Wang, L. Wang, J. Yang, W. An, J. Yu, and Y. Guo, “Spatial-angular interaction for light field image super-resolution,” in European Conference on Computer Vision (ECCV), 2020.

- [7] N. K. Kalantari, T.-C. Wang, and R. Ramamoorthi, “Learning-based view synthesis for light field cameras,” ACM TOG, vol. 35, no. 6, 2016.

- [8] J. Shi, X. Jiang, and C. Guillemot, “Learning fused pixel and feature-based view reconstructions for light fields,” in IEEE Conference on Computer Vision and Pattern Recognition, 2020.

- [9] Y. Yoon, H.-G. Jeon, D. Yoo, J.-Y. Lee, and I. So Kweon, “Learning a deep convolutional network for light-field image super-resolution,” in CVPRW, 2015, pp. 24–32.

- [10] G. Wu, Y. Liu, L. Fang, Q. Dai, and T. Chai, “Light field reconstruction using convolutional network on epi and extended applications,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 41, no. 7, pp. 1681–1694, 2019.

- [11] H. W. F. Yeung, J. Hou, J. Chen, Y. Y. Chung, and X. Chen, “Fast light field reconstruction with deep coarse-to-fine modeling of spatial-angular clues,” in ECCV, 2018, pp. 138–154.

- [12] J. Long, Z. Ning, and T. Darrell, “Do convnets learn correspondence?” Advances in Neural Information Processing Systems, vol. 2, pp. 1601–1609, 2014.

- [13] J. Flynn, I. Neulander, J. Philbin, and N. Snavely, “Deepstereo: Learning to predict new views from the world’s imagery,” in CVPR, 2015.

- [14] B. Mildenhall, P. P. Srinivasan, R. Ortiz-Cayon, N. K. Kalantari, R. Ramamoorthi, R. Ng, and A. Kar, “Local light field fusion: Practical view synthesis with prescriptive sampling guidelines,” ACM Transactions on Graphics (TOG), vol. 38, no. 4, pp. 1–14, 2019.

- [15] S. Moreschini, F. Gama, R. Bregovic, and A. Gotchev, “Civit dataset: Horizontal-parallax-only densely-sampled light-fields,” https://civit.fi/densely-sampled-light-field-datasets/.

- [16] V. K. Adhikarla, M. Vinkler, D. Sumin, R. K. Mantiuk, K. Myszkowski, H.-P. Seidel, and P. Didyk, “Towards a quality metric for dense light fields,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 58–67.

- [17] A. Dosovitskiy and T. Brox, “Generating images with perceptual similarity metrics based on deep networks,” in NIPS, 2016, pp. 658–666.

- [18] J. Johnson, A. Alahi, and L. Fei-Fei, “Perceptual losses for real-time style transfer and super-resolution,” in European Conference on Computer Vision, 2016.

- [19] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” International conference on learning representations, 2015.

- [20] E. Penner and L. Zhang, “Soft 3D reconstruction for view synthesis,” ACM TOG, vol. 36, no. 6, p. 235, 2017.

- [21] D. Scharstein and R. Szeliski, “A taxonomy and evaluation of dense two-frame stereo correspondence algorithms,” International journal of computer vision, vol. 47, no. 1-3, pp. 7–42, 2002.

- [22] S. Wanner and B. Goldluecke, “Variational light field analysis for disparity estimation and super-resolution,” IEEE TPAMI, vol. 36, no. 3, pp. 606–619, 2014.

- [23] C.-T. Huang, “Robust pseudo random fields for light-field stereo matching,” in Proceedings of the IEEE International Conference on Computer Vision, 2017, pp. 11–19.

- [24] M. W. Tao, S. Hadap, J. Malik, and R. Ramamoorthi, “Depth from combining defocus and correspondence using light-field cameras,” in ICCV, 2013, pp. 673–680.

- [25] T.-C. Wang, A. A. Efros, and R. Ramamoorthi, “Occlusion-aware depth estimation using light-field cameras,” in ICCV, 2015, pp. 3487–3495.

- [26] S. Zhang, H. Sheng, C. Li, J. Zhang, and Z. Xiong, “Robust depth estimation for light field via spinning parallelogram operator,” Computer Vision and Image Understanding, vol. 145, pp. 148–159, 2016.

- [27] A. Kendall, H. Martirosyan, S. Dasgupta, P. Henry, R. Kennedy, A. Bachrach, and A. Bry, “End-to-end learning of geometry and context for deep stereo regression,” in Proceedings of the IEEE International Conference on Computer Vision, 2017, pp. 66–75.

- [28] H. Zheng, M. Ji, H. Wang, Y. Liu, and L. Fang, “Crossnet: An end-to-end reference-based super resolution network using cross-scale warping,” in ECCV, 2018.

- [29] N. Meng, K. Li, J. Liu, and E. Y. Lam, “Light field view synthesis via aperture disparity and warping confidence map,” IEEE Transactions on Image Processing, vol. 30, pp. 3908–3921, 2021.

- [30] J. Jin, J. Hou, H. Yuan, and S. Kwong, “Learning light field angular super-resolution via a geometry-aware network.” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 7, 2020, pp. 11 141–11 148.

- [31] J. Jin, J. Hou, J. Chen, H. Zeng, S. Kwong, and J. Yu, “Deep coarse-to-fine dense light field reconstruction with flexible sampling and geometry-aware fusion.” IEEE Transactions on Pattern Analysis and Machine Intelligence, no. 1, pp. 1–1, 2020.

- [32] K. Ko, Y. J. Koh, S. Chang, and C.-S. Kim, “Light field super-resolution via adaptive feature remixing,” IEEE Transactions on Image Processing, vol. 30, pp. 4114–4128, 2021.

- [33] T. Zhou, R. Tucker, J. Flynn, G. Fyffe, and N. Snavely, “Stereo magnification: Learning view synthesis using multiplane images,” in SIGGRAPH, 2018.

- [34] J.-X. Chai, X. Tong, S.-C. Chan, and H.-Y. Shum, “Plenoptic sampling,” in Proceedings of the 27th annual conference on Computer graphics and interactive techniques. ACM Press/Addison-Wesley Publishing Co., 2000, pp. 307–318.

- [35] C. Zhang and T. Chen, “Spectral analysis for sampling image-based rendering data,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 13, no. 11, pp. 1038–1050, 2003.

- [36] S. Vagharshakyan, R. Bregovic, and A. Gotchev, “Light field reconstruction using shearlet transform,” IEEE TPAMI, vol. 40, no. 1, pp. 133–147, 2018.

- [37] L. Shi, H. Hassanieh, A. Davis, D. Katabi, and F. Durand, “Light field reconstruction using sparsity in the continuous fourier domain,” ACM TOG, vol. 34, no. 1, p. 12, 2014.

- [38] H. Zhu, M. Guo, H. Li, Q. Wang, and A. Robleskelly, “Revisiting spatio-angular trade-off in light field cameras and extended applications in super-resolution,” IEEE Transactions on Visualization and Computer Graphics, pp. 1–15, 2019.

- [39] X. Shi, Z. Chen, H. Wang, D. Yeung, W. Wong, and W. Woo, “Convolutional lstm network: a machine learning approach for precipitation nowcasting,” in NIPS, 2015, pp. 802–810.

- [40] D. Liu, Y. Huang, Q. Wu, R. Ma, and P. An, “Multi-angular epipolar geometry based light field angular reconstruction network,” IEEE Transactions on Computational Imaging, vol. 6, pp. 1507–1522, 2020.

- [41] J. Jin, J. Hou, J. Chen, S. Kwong, and J. Yu, “Light field super-resolution via attention-guided fusion of hybrid lenses,” in Proceedings of the 28th ACM International Conference on Multimedia, 2020, pp. 193–201.

- [42] Y. Wang, F. Liu, K. Zhang, Z. Wang, Z. Sun, and T. Tan, “High-fidelity view synthesis for light field imaging with extended pseudo 4DCNN,” IEEE Transactions on Computational Imaging, vol. 6, pp. 830–842, 2020.

- [43] G. Wu, Y. Liu, Q. Dai, and T. Chai, “Learning sheared epi structure for light field reconstruction,” IEEE Transactions on Image Processing, vol. 28, no. 7, pp. 3261–3273, 2019.

- [44] G. Wu, Y. Liu, L. Fang, and T. Chai, “Revisiting light field rendering with deep anti-aliasing neural network.” IEEE Transactions on Pattern Analysis and Machine Intelligence, pp. 1–1, 2021.

- [45] L. Itti, C. Koch, and E. Niebur, “A model of saliency-based visual attention for rapid scene analysis,” IEEE Transactions on pattern analysis and machine intelligence, vol. 20, no. 11, pp. 1254–1259, 1998.

- [46] R. A. Rensink, “The dynamic representation of scenes,” Visual cognition, vol. 7, no. 1-3, pp. 17–42, 2000.

- [47] M. Corbetta and G. L. Shulman, “Control of goal-directed and stimulus-driven attention in the brain,” Nature reviews neuroscience, vol. 3, no. 3, pp. 201–215, 2002.

- [48] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser, and I. Polosukhin, “Attention is all you need,” in NIPS, 2017, pp. 5998–6008.

- [49] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, pp. 770–778.

- [50] S. Woo, J. Park, J. Lee, and I. S. Kweon, “Cbam: Convolutional block attention module,” in ECCV, 2018, pp. 3–19.

- [51] Y. Jiang, S. Chang, and Z. Wang, “Transgan: Two transformers can make one strong gan,” arXiv: Computer Vision and Pattern Recognition, 2021.

- [52] J. Hu, L. Shen, S. Albanie, G. Sun, and E. Wu, “Squeeze-and-excitation networks,” IEEE Transactions on Pattern Analysis and Machine Intelligence, pp. 1–1, 2019.

- [53] Z. Qin, P. Zhang, F. Wu, and X. Li, “Fcanet: Frequency channel attention networks.” arXiv preprint arXiv:2012.11879, 2020.

- [54] L. Wang, Y. Wang, Z. Liang, Z. Lin, J. Yang, W. An, and Y. Guo, “Learning parallax attention for stereo image super-resolution,” in IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 12 250–12 259.

- [55] L. Wang, Y. Guo, Y. Wang, Z. Liang, Z. Lin, J. Yang, and W. An, “Parallax attention for unsupervised stereo correspondence learning,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020.

- [56] Y.-J. Tsai, Y.-L. Liu, M. Ouhyoung, and Y.-Y. Chuang, “Attention-based view selection networks for light-field disparity estimation,” in Proceedings of AAAI Conference on Artificial Intelligence (AAAI), 2020, p. 1.

- [57] M. Guo, J. Hou, J. Jin, J. Chen, and L.-P. Chau, “Deep spatial-angular regularization for compressive light field reconstruction over coded apertures.” in European Conference on Computer Vision, 2020, pp. 278–294.

- [58] K. H. Jin, M. T. Mccann, E. Froustey, and M. Unser, “Deep convolutional neural network for inverse problems in imaging,” IEEE Transactions on Image Processing, vol. 26, no. 9, pp. 4509–4522, 2017.

- [59] B. Zhou, A. Khosla, A. Lapedriza, A. Oliva, and A. Torralba, “Object detectors emerge in deep scene cnns,” in International Conference on Learning Representations, 2015.

- [60] G. Eilertsen, J. Kronander, G. Denes, R. K. Mantiuk, and J. Unger, “Hdr image reconstruction from a single exposure using deep cnns,” Acm Transactions on Graphics, 2017.

- [61] W. Shi, J. Caballero, F. Huszar, J. Totz, A. P. Aitken, R. Bishop, D. Rueckert, and Z. Wang, “Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network,” in IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 1874–1883.

- [62] Z. Zhang, Z. Wang, Z. Lin, and H. Qi, “Image super-resolution by neural texture transfer,” in IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 7982–7991.

- [63] N. Meng, H. K.-H. So, X. Sun, and E. Y. Lam, “High-dimensional dense residual convolutional neural network for light field reconstruction,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 43, no. 3, pp. 873–886, 2021.

- [64] “Stanford (New) Light Field Archive.” http://lightfield.stanford.edu/lfs.html.

- [65] Ng and Ren, “Fourier slice photography,” Acm Transactions on Graphics, vol. 24, no. 3, p. 735, 2005.

- [66] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” Computer Science, 2014.

- [67] M. Abadi, P. Barham, J. Chen, Z. Chen, A. Davis, J. Dean, M. Devin, S. Ghemawat, G. Irving, M. Isard et al., “Tensorflow: Large-scale machine learning on heterogeneous systems.” http://tensorflow.org/.

- [68] Z. Wang, A. C. Bovik, H. R. Sheikh, and E. P. Simoncelli, “Image quality assessment: from error visibility to structural similarity,” IEEE TIP, vol. 13, no. 4, pp. 600–612, 2004.

- [69] “Stanford Lytro Light Field Archive.” http://lightfields.stanford.edu/.

- [70] R. Girshick, “Fast r-cnn,” in 2015 IEEE International Conference on Computer Vision (ICCV), 2015, pp. 1440–1448.

- [71] T. G. Kolda and B. W. Bader, “Tensor decompositions and applications,” Siam Review, vol. 51, no. 3, pp. 455–500, 2009.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2edefaf4-1e08-4907-88c8-43726c9c3ec7/GCWu.jpg) |

Gaochang Wu received the BE and MS degrees in mechanical engineering in Northeastern University, Shenyang, China, in 2013 and 2015, respectively, and Ph.D. degree in control theory and control engineering in Northeastern University, Shenyang, China in 2020. He is currently an associate professor in the State Key Laboratory of Synthetical Automation for Process Industries, Northeastern University. His current research interests include image processing, light field processing and deep learning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2edefaf4-1e08-4907-88c8-43726c9c3ec7/YQWang.jpg) |

Yingqian Wang received the B.E. degree in electrical engineering from Shandong University (SDU), Jinan, China, in 2016, and the M.E. degree in information and communication engineering from National University of Defense Technology (NUDT), Changsha, China, in 2018. He is currently pursuing the Ph.D. degree with the College of Electronic Science and Technology, NUDT. His research interests focus on low-level vision, particularly on light field imaging and image super-resolution. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2edefaf4-1e08-4907-88c8-43726c9c3ec7/YBLiu.jpg) |

Yebin Liu received the BE degree from Beijing University of Posts and Telecommunications, China, in 2002, and the PhD degree from the Automation Department, Tsinghua University, Beijing, China, in 2009. He has been working as a research fellow at the computer graphics group of the Max Planck Institute for Informatik, Germany, in 2010. He is currently an associate professor in Tsinghua University. His research areas include computer vision and computer graphics. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2edefaf4-1e08-4907-88c8-43726c9c3ec7/LFang.jpg) |

Lu FANG is currently an Associate Professor at Tsinghua University. She received her Ph.D in Electronic and Computer Engineering from HKUST in 2011, and B.E. from USTC in 2007, respectively. Dr. Fang’s research interests include image / video processing, vision for intelligent robot, and computational photography. Dr. Fang serves as TC member in Multimedia Signal Processing Technical Committee (MMSP-TC) in IEEE Signal Processing Society. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2edefaf4-1e08-4907-88c8-43726c9c3ec7/TYChai.jpg) |

Tianyou Chai received the Ph.D. degree in control theory and engineering from Northeastern University, Shenyang, China, in 1985. He has been with the Research Center of Automation, Northeastern University, Shenyang, China, since 1985, where he became a Professor in 1988 and a Chair Professor in 2004. His current research interests include adaptive control, intelligent decoupling control, integrated plant control and systems, and the development of control technologies with applications to various industrial processes. Prof. Chai is a member of the Chinese Academy of Engineering, an academician of International Eurasian Academy of Sciences, IEEE Fellow and IFAC Fellow. He is a distinguished visiting fellow of The Royal Academy of Engineering (UK) and an Invitation Fellow of Japan Society for the Promotion of Science (JSPS). |