Sparse Symmetric Linear Arrays with Low Redundancy and a Contiguous Sum Co-Array

Abstract

Sparse arrays can resolve significantly more scatterers or sources than sensor by utilizing the co-array — a virtual array structure consisting of pairwise differences or sums of sensor positions. Although several sparse array configurations have been developed for passive sensing applications, far fewer active array designs exist. In active sensing, the sum co-array is typically more relevant than the difference co-array, especially when the scatterers are fully coherent. This paper proposes a general symmetric array configuration suitable for both active and passive sensing. We first derive necessary and sufficient conditions for the sum and difference co-array of this array to be contiguous. We then study two specific instances based on the Nested array and the Kløve-Mossige basis, respectively. In particular, we establish the relationship between the minimum-redundancy solutions of the two resulting symmetric array configurations, and the previously proposed Concatenated Nested Array (CNA) and Kløve Array (KA). Both the CNA and KA have closed-form expressions for the sensor positions, which means that they can be easily generated for any desired array size. The two array structures also achieve low redundancy, and a contiguous sum and difference co-array, which allows resolving vastly more scatterers or sources than sensors.

Index Terms:

Active sensing, sparse array configuration, symmetry, sum co-array, difference co-array, minimum redundancy.I Introduction

Sensor arrays are a key technology in for example, radar, wireless communication, medical imaging, radio astronomy, sonar, and seismology [1]. The key advantages of arrays include spatial selectivity and the capability to mitigate interference. However, conventional uniform array configurations may become prohibitively expensive, when a high spatial resolution facilitated by a large electrical aperture, and consequently a large number of sensors is required.

Sparse arrays allow for significantly reducing the number of sensors and costly RF-IF chains, whilst resolving vastly more scatterers or signal sources than sensors. This is facilitated by a virtual array model called the co-array [2, 3], which is commonly defined in terms of the pairwise differences or sums of the physical sensor positions [3]. Uniform arrays have a co-array with redundant virtual sensors, which allows the number of physical sensors to be reduced without affecting the number of unique co-array elements. This enables sparse arrays to identify up to signal sources using only sensors [4, 5, 6]. A contiguous co-array is often desired, since it maximizes the number of virtual sensors for a given array aperture. The properties of the resulting uniform virtual array model, such as the Vandermonde structure of the virtual steering matrix, can also be leveraged in array processing [7, 8].

Typical sparse array designs, such as the Minimum-Redundancy Array (MRA) [9, 10], seek to maximize the number of contiguous co-array elements for a given number of physical sensors . The minimum-redundancy property means that no physical array configuration can achieve a larger contiguous co-array. Although optimal, the MRA lacks a closed-form expression for its sensor positions, and quickly becomes impractical to compute, as the search space of the combinatorial optimization problem that needs to be solved grows exponentially with . Consequently, large sparse arrays have to be constructed by sub-optimal means, yielding low-redundancy, rather than provably minimum-redundancy, array configurations. For instance, smaller (but computable) MRAs can be extended to larger apertures by repeating regular substructures in the array [10], or by recursively nesting them in a fractal manner [11]. Recent research into such recursive or fractal arrays [12, 13, 14, 15] has also revived interest in symmetric array configurations. Symmetry in either the physical or co-array domain can further simplify array design [16] and calibration [17], or be leveraged in detection [18], source localization [19, 20, 21, 22], and adaptive beamforming [1, p. 721]. Parametric arrays are also of great interest, as their sensor positions can be expressed in closed form. This facilitates the simple design and optimization of the array geometry. For example, the redundancy of the array may be minimized to utilize the co-array as efficiently as possible. Notable parametric arrays include the Wichmann [23, 24, 25], Co-prime [26], Nested [7], and Super Nested Array [27, 28].

Sparse array configurations have been developed mainly for passive sensing, where the difference co-array can be exploited if the source signals are incoherent or weakly correlated. Far fewer works consider the sum co-array, which is more relevant in active sensing applications, such as radar or microwave and ultrasound imaging, where scatterers may (but need not) be fully coherent [3, 29, 30]. In particular, the design of low-redundancy sparse arrays with overlapping transmitting and receiving elements has not been extensively studied. Some of our recent works have addressed this sum co-array based array design problem by proposing symmetric extensions to existing parametric array configurations that were originally designed to only have a contiguous difference co-array [31, 32, 33]. Symmetry can thus provide a simple means of achieving a contiguous sum co-array. However, a unifying analysis and understanding of such symmetric sparse arrays is yet lacking from the literature. The current work attempts to fill this gap.

I-A Contributions and organization

This paper focuses on the design of sparse linear active arrays with a contiguous sum co-array. The main contributions of the paper are twofold. Firstly, we propose a general symmetric sparse linear array design. We establish necessary and sufficient conditions under which the sum and difference co-array of this array are contiguous. We also determine sufficient conditions that greatly simplify array design by allowing for array configurations with a contiguous difference co-array to be leveraged, thanks to the symmetry of the proposed array design. This connects our work to the abundant literature on mostly asymmetric sparse arrays with a contiguous difference co-array [25, 7, 34, 35]. Moreover, it provides a unifying framework for symmetric configurations relevant to both active and passive sensing [31, 32, 33].

The second main contribution is a detailed study of two specific instances of this symmetric array — one based on the Nested Array (NA) [7], and the other on the Kløve-Mossige basis from additive combinatorics [36]. In particular, we clarify the connection between these symmetric arrays and the recently studied Concatenated Nested Array (CNA) [37, 31] and Kløve Array (KA) [38, 33]. We also derive the minimum redundancy parameters for both the CNA and KA. Additionally, we show that the minimum-redundancy symmetric NA reduces to the CNA. Both the CNA and KA can be generated for practically any number of sensors, as their positions have closed-form expressions.

The paper is organized as follows. Section II introduces the signal model and the considered array figures of merit. Section III briefly reviews the MRA and some of its characteristics. Section IV then presents the general definition of the proposed symmetric array, and outlines both necessary and sufficient conditions for its sum co-array to be contiguous. In Section V, we study two special cases of this array, and derive their minimum-redundancy parameters. Finally, we compare the discussed array configurations numerically in Section VI, before concluding the paper and discussing future work in Section VII.

I-B Notation

We denote matrices by bold uppercase, vectors by bold lowercase, and scalars by unbolded letters. Sets are denoted by calligraphic letters. The set of integers from to in steps of is denoted . Shorthand denotes . The sum of two sets is defined as the set of pairwise sums of elements, i.e., . The sum of a set and a scalar is a special case, where either summand is a set with a single element. Similar definitions hold for the difference set. The cardinality of a set is denoted by . The rounding operator quantizes the scalar real argument to the closest integer. Similarly, the ceil operator yields the smallest integer larger than the argument, and the floor operator yields the largest integer smaller than the argument.

II Preliminaries

In this section, we briefly review the active sensing and sum co-array models. We then define the considered array figure of merit, which are summarized in Table I.

II-A Signal model

Consider a linear array of transmitting and receiving sensors, whose normalized positions are given by the set of non-negative integers . The first sensor of the array is located at , and the last sensor at , where is the (normalized) array aperture. This array is used to actively sense far field scatterers with reflectivities in azimuth directions . Each transmitter illuminates the scattering scene using narrowband radiation in a sequential or simultaneous (orthogonal MIMO) manner [29, 39]. The reflectivities are assumed fixed during the coherence time of the scene, which may consist of one or several snapshots (pulses). The received signal after a single snapshot and matched filtering is then [40]

| (1) |

where denotes the Khatri-Rao (columnwise Kronecker) product, is the array steering matrix, is the scattering coefficient vector, and is a receiver noise vector following a zero-mean white complex circularly symmetric normal distribution. A typical array processing task is to estimate parameters , or some functions thereof, from the measurements .

II-B Sum co-array

The effective steering matrix in (1) is given by . Assuming ideal omnidirectional sensors, we have

where is the unit inter-sensor spacing in carrier wavelengths (typically ). Consequently, the entries of are supported on a virtual array, known as the sum co-array, which consists of the pairwise sums of the physical element locations.

Definition 1 (Sum co-array).

The virtual element positions of the sum co-array of physical array are given by the set

| (2) |

The relevance of the sum co-array is that it may have up to unique elements, which is vastly more than the number of physical sensors . This implies that coherent scatterers can be identified from (1), provided the set of physical sensor positions is judiciously designed.

The sum co-array is uniform or contiguous, if it equals a virtual Uniform linear array (ULA) of aperture .

Definition 2 (Contiguous sum co-array).

The sum co-array of is contiguous if .

A contiguous co-array is desirable for two main reasons. Firstly, it maximizes the number of unique co-array elements for a given physical aperture. Second, it facilitates the use of many array processing algorithms designed for uniform arrays. For example, co-array MUSIC [7, 8] leverages the resulting Vandermonde structure to resolve more sources than sensors unambiguously in the infinite snapshot regime.

A closely related concept to the sum co-array is that of the difference co-array [2, 3]. Defined as the set of pairwise element position differences, the difference co-array mainly emerges in passive sensing applications, where the incoherence of the source signals is leveraged. Other assumptions give rise to more exotic co-arrays models, such as the difference of the sum co-array111Up to incoherent scatterers can be resolved by utilizing the second-order statistics of (1) and the difference of the sum co-array [40]. [41, 42], and the union of the sum and difference co-array [43, 44], which are not considered herein.

II-C Array figures of merit

| Symbol | Explanation | Value range |

|---|---|---|

| Number of total DoFs | ||

| Number of contiguous DoFs | ||

| Redundancy | ||

| -spacing multiplicity | ||

| Asymptotic redundancy | ||

| Asymptotic co-array filling ratio |

II-C1 Degrees of freedom (DoF).

The number of unique elements in the sum co-array is often referred to as the total number of DoFs. Similarly, the number of virtual elements in the largest contiguous subarray contained in the sum co-array is called the number of contiguous DoFs.

Definition 3 (Number of contiguous DoFs).

The number of contiguous DoFs in the sum co-array of is

| (3) |

If the offset is zero, then equals the position of the first hole in the sum co-array. Moreover, if the sum co-array is contiguous, then , where is the array aperture.

II-C2 Redundancy,

, quantifies the multiplicity of the co-array elements. A non-redundant array achieves , whereas holds for a redundant array.

Definition 4 (Redundancy).

The redundancy of an sensor array with contiguous sum co-array elements is

| (4) |

The numerator of is the maximum number of unique pairwise sums generated by numbers. The denominator is given by (3). Definition 4 is essentially Moffet’s definition of (difference co-array) redundancy [9] adapted to the sum co-array. It also coincides with Hoctor and Kassam’s definition [10], when the sum co-array is contiguous, i.e., , where is the aperture of the sum co-array.

II-C3 The -spacing multiplicity,

, enumerates the number of inter-element spacings of a displacement in the array [45]. For linear arrays, simplifies to the weight or multiplicity function [7, 46] of the difference co-array (when ).

Definition 5 (-spacing multiplicity [45]).

The multiplicity of inter-sensor displacement in a linear array is

| (5) |

It is easy to verify that , where . If the difference co-array is contiguous, then for .

Typically, a low value for is desired for small , as sensors that are closer to each other tend to interact more strongly and exhibit coupling [17]. Consequently, the severity of mutual coupling effects deteriorating the array performance may be reduced by decreasing [47, 27, 48, 35]. This simplifies array design, but has its limitations. Specifically does not take into account important factors, such as the individual element gain patterns and the mounting platform, as well as the scan angle and the uniformity of the array [49, Ch. 8]. Since treating such effects in a mathematically tractable way is challenging, proxies like the number of unit spacings are sometimes considered instead for simplicity.

II-C4 Asymptotic and relative quantities.

The discussed figures of merit are generally functions of , , or , i.e., they depend on the size of the array. A convenient quantity that holds in the limit of large arrays is the asymptotic redundancy

| (6) |

Another one is the asymptotic sum co-array filling ratio

| (7) |

which satisfies , as . If the sum co-array is contiguous, then . Note that the limits in (6) and (7) may equivalently be taken with respect to or .

In many cases, we wish to evaluate the relative performance of a given array with respect to a reference array configuration of choice – henceforth denoted by superscript “ref”. Of particular interest are the three ratios

or their asymptotic values, when either or is equal for both arrays and approaches infinity. Table II shows that these asymptotic ratios can be expressed using (6) and (7), provided the respective limits exist. For example, the second row of the first column in Table II is interpreted as

where the right-hand-side follows by simple manipulations from (4). The expressions in Table II simplify greatly if both arrays have a contiguous sum co-array, since .

III Minimum-Redundancy Array

In this section, we present the sparse array design problem solved by the Minimum-redundancy array (MRA). We then review some properties of the MRA, and briefly discuss an extension that is computationally easier to find.

The MRA is defined as the solution to either of two slightly different optimization problems, depending on if the sum co-array is constrained to be contiguous or not [9].

Definition 6 (Minimum-redundancy array (MRA)).

The general Minimum-redundancy array (MRA) solves

| subject to | (P1) |

The restricted MRA (R-MRA) is given by the solution to (P1) with the extra constraint .

The general MRA minimizes the redundancy , subject to a given number of sensors and offset in (3). By Definition 4, this is equivalent to maximizing , which by Definition 3 reduces to (P1). In contrast, the R-MRA constrains the sum co-array to be contiguous, and therefore maximizes the array aperture. A more generic definition of the general MRA is possible by including offset as an optimization variable in (P1). Note that the R-MRA implies that , regardless of the definition of the MRA. Finding general MRAs with is an open problem that is left for future work.

MRAs can, but need not, be restricted [50, 51]. For example, two of the three MRAs with sensors in Table III are restricted. On the other hand, none of the general MRAs with sensors are restricted ( in the general case, respectively, in the restricted case) [50, 51]. The “sum MRAs” in Definition 6 are equivalent to extremal additive 2-bases, which have been extensively studied in number theory [37, 52, 36, 38, 51], similarly to difference bases [53, 23] corresponding “difference MRAs” [9]. Solving (P1) is nevertheless challenging, since the size of the search space grows exponentially with the number of elements . Consequently, MRAs are currently only known for [50] and R-MRAs for [54].

The MRA can also be defined for a fixed aperture. E.g., the restricted MRA would minimize subject to a contiguous co-array of length . The difference MRA following this definition is referred to as the sparse ruler [55]. For a given , several such rulers of different length may exist. However, we exclusively consider the MRA of Definition 6 henceforth.

III-A Key properties

The lack of a closed-form expression for the sensor positions of the MRA make its properties difficult to analyze precisely. Nevertheless, it is straightforward to see that a linear array with a contiguous sum co-array necessarily contains two sensors in each end of the array, as shown by the following lemma.

Lemma 1 (Necessary sensors).

Let . If has a contiguous sum co-array, then . If has a contiguous difference co-array, then .

Proof.

Clearly, if and only if . Similarly, if and only if or . We may write without loss of generality, since any can be mirrored to satisfy . ∎

Lemma 1 implies that any array with a contiguous sum co-array and sensors has at least two sensor pairs separated by a unit inter-element spacing, i.e., . Lemma 1 also suggests that the R-MRA achieves redundancy in only two cases. These arrays are called perfect arrays.

Corollary 1 (Perfect arrays).

The only two unit redundancy R-MRAs are and . All other R-MRAs are redundant.

Proof.

By Lemma 1, the element at position of the sum co-array can be represented in at least two ways, namely . Consequently, if , then must hold. The R-MRAs for are , and . Only the first two of these satisfy . ∎

Generally, the redundancy of the MRA is unknown. However, the asymptotic redundancy can be bounded as follows.

Theorem 1 (Asymptotic redundancy of MRA [56, 57, 38, 58]).

The asymptotic redundancy of the MRA satisfies

| General MRA: | |||

| Restricted MRA: |

Proof.

Theorem 1 suggests that the R-MRA may be more redundant than the general MRA that does not constrain the sum co-array to be contiguous. The restricted definition of the MRA is nevertheless more widely adopted than the general one for the reasons listed in Section II-B. We note that difference bases or MRAs can also be of the general or restricted type [53, 9]. Difference MRAs are typically less redundant than sum MRAs due to the commutativity of the sum, i.e., , but .

III-B Unique solution with fewest closely spaced sensors

Problem (P1) may have several solutions for a given , which means that the MRA is not necessarily unique [50, 51]. In order to guarantee uniqueness, we introduce a secondary optimization criterion. In particular, we consider the MRA with the fewest closely spaced sensors. This MRA is found by minimizing a weighted sum of -spacing multiplicities (see Definition 5) among the solutions to (P1), which is equivalent to subtracting a regularizing term from the objective function. This regularizer can be defined as, for example,

| (8) |

where is the largest aperture of the considered solutions. Consequently, any two solutions to the unregularized problem, say and , satisfy , if and only if and for all . In words: (8) promotes large sensor displacements by prioritizing the value of , then , then , etc. For example, Table III shows two R-MRAs with equal and , but different . The R-MRA with the smaller , and therefore lower value of , is preferred.

| Configuration | Restricted | ||||

|---|---|---|---|---|---|

| ✓ | |||||

| ✓ | |||||

| ✗ |

III-C Symmetry and the Reduced Redundancy Array

Many of the currently known sum MRAs are actually symmetric [50, 51]. In fact, there exist at least one symmetric R-MRA for each . Moreover, the R-MRA with the fewest closely spaced sensors (lowest ) turns out to be symmetric for . Indeed, symmetry seems to arise naturally from the additive problem structure (cf. [59, Section 4.5.3]). Note that difference MRAs are generally asymmetric [9, 11].

Imposing symmetry on the array design problem has the main advantage of reducing the size of the search space [36, 16]. In case of the MRA, this can be achieved by adding constraint to (P1). Unfortunately, the search space of this symmetric MRA still scales exponentially with . Fortunately, another characteristic of the MRA may readily be exploited. Namely, MRAs tend to have a sparse mid section consisting of uniformly spaced elements [51]. The reduced redundancy array (RRA) extends the aperture of the MRA by adding extra elements to this uniform mid section [11, 10].

Definition 7 (Reduced redundancy array (RRA) [10]).

The sensor positions of the RRA for a given MRA are given by

where is the prefix and the suffix of the MRA, and

is the mid subarray, with inter-element spacing .

The prefix , suffix , and the inter-element spacing of are determined by the generator MRA, i.e., the MRA that is extended. For example, an MRA with sensors is

Note that holds, if the MRAs is symmetric as above. The RRA also has a contiguous sum co-array, if the MRA is restricted.

The RRA has a low redundancy when . However, the redundancy of the RRA approaches infinity as grows, since the aperture of the RRA only increases linearly with the number of sensors. Consequently, we will next consider a general class of symmetric arrays which scale better and admit a solution in polynomial time, provided the design space is constrained judiciously. In particular, we will show that this class of symmetric arrays naturally extends many of the established array configurations – designed for passive sensing – to the active sensing setting.

IV Symmetric array — general design and conditions for contiguous co-array

In this section, we establish a general framework for symmetric arrays with a contiguous sum (and difference) co-array. The proposed symmetric array with generator (S-) is constructed by taking the union of a generator array222This terminology is adopted from the literature on fractal arrays [60, 14]. and its mirror image shifted by a non-negative integer . The generator, which is the basic construction block of the symmetric array, can be any array configuration of choice.

Definition 8 (Symmetric array with generator (S-)).

The sensor positions of the S- are given by

| (9) |

where is a generator array and is a shift parameter333Note that is equivalent to considering the mirrored generator for . Therefore, we may set without loss of generality..

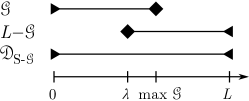

Fig. 1(a) shows a schematic of the S-. Note that the number of sensors satisfies , depending on the overlap between and . The aperture of the S- is

| (10) |

The exact properties of the S- are determined by the particular choice of and . Specifically, the generator array influences how easily the symmetrized array S- can be optimized to yield a large contiguous sum co-array. For example, a generator with a simple nested structure makes a convenient choice in this regard, as we will demonstrate in Section V. Next, however, we establish necessary and sufficient conditions that any and must fulfill for S- to have a contiguous sum co-array. These general conditions are later utilized when considering specific choices for in Section V.

IV-A Necessary and sufficient conditions for contiguous co-array

Fig. 1(b) illustrates the difference co-array of the S-, which is composed of the difference co-array and shifted sum co-arrays of the generator . By symmetry, the sum and difference co-array of the S- are equivalent up to a shift. This fact, along with (9), allows us to express the necessary and sufficient condition for the contiguity of both co-arrays in terms of the generator and shift parameter . Moreover, we may conveniently decompose the condition into two simpler subconditions, as shown by the following theorem.

Theorem 2 (Conditions for contiguous co-array).

The sum (and difference) co-array of the S- is contiguous if and only if

-

(C1)

-

(C2)

where is the array aperture given by (10).

IV-B Sufficient conditions for contiguous co-array

It is also instructive to consider some simple sufficient conditions for satisfying (C1) and (C2), as outlined in the following two corollaries to Theorem 2.

Corollary 2 (Sufficient conditions for (C1)).

Condition (C1) is satisfied if either of the following holds:

-

(i)

has a contiguous difference co-array.

-

(ii)

has a contiguous sum co-array and .

Proof.

Corollary 3 (Sufficient conditions for (C2)).

Condition (C2) is satisfied if any of the following hold:

-

(i)

-

(ii)

and

-

(iii)

and has a contiguous sum co-array.

Proof.

We note that if , then is actually sufficient in Item (ii) of Corollary 2 (cf. Lemma 1). This is also sufficient for satisfying (C2) by Item (iii) of Corollary 3. Item (i) of Corollary 2 is of special interest, since it states that any with a contiguous difference co-array satisfies (C1). This greatly simplifies array design, as shown in the next section, where we develop two arrays leveraging this property.

V Low-redundancy symmetric array designs using parametric generators

Similarly to the R-MRA in (P1), we wish to find the S- with maximal aperture satisfying the conditions in Theorem 2. Given a number of elements , and a class of generator arrays , such that , this minimum-redundancy S- design is found by solving the following optimization problem:

| subject to | ||||

| (P2) |

In general, (P2) is a non-convex problem, whose difficulty depends on the choice of . Solving (P2) may therefore require a grid search over and the elements of , which can have exponential complexity at worst. At best, however, a solution can be found in polynomial time, or even in closed form.

We will now focus on a family of choices for , such that each has the following two convenient properties:

-

(a)

has a contiguous difference co-array

-

(b)

has a closed-form expression for its aperture.

Item (a) greatly simplifies (P2) by directly satisfying condition (C1), whereas Item (b) enables the straightforward optimization of the array parameters. Condition (C2) is typically easy to satisfy for an array with these two properties. This is the case with many sparse array configurations in the literature, such as the Nested [7] and Wichmann Array [23, 24, 25]. Constructing a symmetric array using a generator with a contiguous difference co-array is thus a practical way to synthesize a contiguous sum co-array.

V-A Symmetric Nested Array (S-NA)

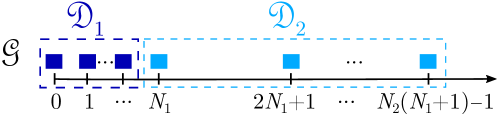

The Nested Array (NA) [7] is a natural choice for a generator satisfying the previously outlined properties (a) and (b). The NA has a simple structure consisting of the union of a dense ULA, , and sparse ULA sub-array, , as shown in Fig. 2(a). When used as the generator in (9), the NA yields the Symmetric Nested Array (S-NA), defined as follows:

Definition 9 (Symmetric Nested Array (S-NA)).

Special cases of the S-NA have also been previously proposed. For example, [61, Definition 2] considered the case for improving the robustness of the NA to sensor failures. Next we briefly discuss a special case that is more relevant from the minimum-redundancy point of view.

V-A1 Concatenated Nested Array (CNA)

The S-NA coincides with a restricted additive 2-basis studied by Rohrbach in the 1930’s [37, Satz 2], when the shift parameter satisfies

| (11) |

where . Unaware of Rohrbach’s early result, we later derived the same configuration based on the NA and called it the Concatenated Nested Array (CNA) [31].

Definition 10 (Concatenated Nested Array (CNA) [31]).

The CNA is illustrated in Fig. 2(c). When or , the array degenerates to the ULA. In the interesting case when , the aperture of the CNA is[31]

| (12) |

Furthermore, the number of sensors is [31]

| (13) |

and the number of unit spacings evaluates to

| (14) |

V-A2 Minimum-redundancy solution

The S-NA solving (P2) is actually a CNA. This follows from the fact that any S-NA that has a contiguous sum co-array, but is not a CNA, has redundant sensors, due to the reduced number of overlapping sensors between and its shifted mirror image.

Proposition 1.

The S-NA solving (P2) is a CNA.

Proof.

We write (C1) and (C2) as a constraint on , which we then show implies the proposition under reparametrization.

Firstly, (C1) holds by Item (i) of Corollary 2, since the NA has a contiguous difference co-array [7]. Secondly, the location of the first hole in the sum co-array of the NA yields that (C2) is satisfied if and only if

| (15) |

If , then a CNA with the same number of sensors (), but a larger aperture () is achieved by instead setting . Otherwise, it is straightforward to verify that the S-NA either reduces to the CNA, or a CNA can be constructed with the same but a larger , by satisfying (15) with equality. ∎

By Proposition 1, problem (P2) simplifies to maximizing the aperture of the CNA for a given number of sensors:

| (P3) |

Problem (P3) actually admits a closed-form solution [31]. In fact, we may state the following more general result for any two-variable integer program similar to (P3).

Lemma 2.

Let be a concave function. The solution to

| (P4) |

is given by and , where solves

and is the smallest non-negative integer satisfying , where .

Proof.

Lemma 2 is useful for solving two-variable integer programs similar to (P3) in closed-form. Such optimization problems often arise in, e.g., sparse array design [32, 33]. In our case, Lemma 2 allows expressing the minimum-redundancy parameters of the CNA directly in terms of as follows444An equivalent result is given in [37, Eq. (7) and (8)] and [31, Eq. (13)], but in a more inconvenient format due to the use of the rounding operator..

Theorem 3 (Minimum-redundancy parameters of CNA).

Proof.

By Theorem 3, the properties of the minimum-redundancy CNA can also be written compactly as follows.

Corollary 4 (Properties of minimum-redundancy CNA).

V-B Symmetric Kløve-Mossige array (S-KMA)

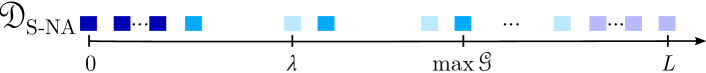

In the 1980’s, Kløve and Mossige proposed an additive 2-basis with some interesting properties [36]. In particular, the basis has a contiguous difference co-array (see Appendix A) and a low asymptotic redundancy (), despite having a non-contiguous sum co-array (see Appendix B). We call this construction the Kløve-Mossige Array (KMA). As shown in Fig. 3(a), the KMA contains a CNA, and can therefore be interpreted as another extension of the NA. However, selecting the KMA as the generator in (9) yields the novel Symmetric Kløve-Mossige Array (S-KMA), shown in Fig. 3(b).

Definition 11 (Symmetric Kløve-Mossige Array (S-KMA)).

The sensor positions of the S-KMA are given by (9), where

follows Definition 10, and parameters555The S-KMA is undefined for , as . Consequently, we will not consider this case further. However, we note that Definition 11 is easily modified so that the S-KMA degenerates to a ULA even in this case. .

V-B1 Kløve array

The structure of the S-KMA simplifies substantially when the shift parameter is of the form

| (19) |

where . In fact, this S-KMA coincides with the Kløve array (KA), which is based on a class of restricted additive 2-bases proposed by Kløve in the context of additive combinatorics [38] (see also [33]).

Definition 12 (Kløve array (KA) [38]).

The sensor positions of the KA with parameters are given by

where follows Definition 10, and Definition 11.

Fig. 3(c) illustrates the KA, which consists of two CNAs connected by a sparse mid-section consisting of widely separated and sub-sampled ULAs with elements each. The KA reduces to the CNA when or . When , the aperture , and the number of sensors of the KA evaluate to [38, Lemma 3]:

| (20) | ||||

| (21) |

Furthermore, the number of unit spacings is [33]

| (22) |

V-B2 Minimum-redundancy solution

The simple structure of the KMA suggests that the minimum redundancy S-KMA is also a KA. Intuitively, any S-KMA that is not a KA is unnecessarily redundant, since increasing to its maximum value yields a KA (cf. Appendix B). However, proving that the S-KMA solving (P2) is a KA is not as simple as in the case of the S-NA and CNA (see Proposition 1 in Section V-A2). In particular, the S-KMA cannot easily be modified into a KA with an equal or larger aperture without affecting the number of sensors . A formal proof is therefore still an open problem.

Nevertheless, we may derive the minimum redundancy parameters of the KA. The minimum-redundancy Kløve array (KAR) maximizes the aperture for a given , which is equivalent to solving the following optimization problem:

| subject to | (P5) |

Problem (P5) is an integer program with three unknowns. This problem is challenging to solve in closed form for any , in contrast to (P3), which only has two integer unknowns (cf. Theorem 3). Nevertheless, some readily yield a closed-form solution to (P5), as shown by the following theorem.

Theorem 4 (Minimum-redundancy parameters of KA).

Proof.

Under certain conditions, we may obtain a solution to (P5) by considering a relaxed problem. Specifically, solving (21) for , substituting the result into (20), and relaxing leads to the following concave quadratic program:

At the critical point of the objective function we have

Solving these equations for and yields (23) and (24), which when substituted into (21) yields (25). These are also solutions to (P5) if:

-

(i)

, which holds when , i.e.,

-

(ii)

, which holds when , as

-

(iii)

, which holds when and , i.e., .

The only integer-valued satisfying all three conditions are , as stated.

The KA in Theorem 4 also has the following properties:

Corollary 5 (Properties of minimum-redundancy KA).

The minimum-redundancy KA achieves the asymptotic redundancy , as shown in the following proposition, which is a reformulation of [38, Theorem, p. 177].

Proposition 2 (Asymptotic redundancy of KA [38]).

The asymptotic redundancy of the solution to (P5) is

Proof.

Let , where . A feasible KA that is equivalent to the minimum-redundancy KA when is then given by the choice of parameters , , and . Substitution of these parameters into (20) yields , i.e., . ∎

V-B3 Polynomial time grid search

Although solving (P5) in closed form for any is challenging, we can nevertheless obtain the solution in at most objective function evaluations. This follows from the fact that the feasible set of (P5) only has or fewer elements.

Proposition 3 (Cardinality of feasible set in (P5)).

The cardinality of the feasible set in (P5) is at most .

Proof.

We may verify from (21) that and . Consequently, the number of grid points that need to be checked is

The upper bound follows from ignoring the floor operations, and substituting , where , to account for the rectangular integration implied by the sum. Finally,

follows from integration by parts.

Algorithm 1 summarizes a simple grid search that finds the solution to (P5) for any in steps, as implied by Proposition 3. We iterate over and , because this choice yields the least upper bound on the number of grid points that need to be checked666Selecting and , or and , yields points.. Since the solution to (P5) is not necessarily unique, we select the KAR with the fewest closely spaced sensors, similarly to the MRA in Section III-B. Note that computing the regularizer requires floating point operations (flops), whereas evaluating the aperture only requires flops. Consequently, the time complexity of finding the KAR with the fewest closely spaced sensors is777Actually, only needs to be computed when in Algorithm 1. , whereas finding any KAR, that is, solving (P5) in general, has a worst case complexity of .

In a recent related work, we also developed a KA with a constraint on the number of unit spacings [33]. This constant unit spacing Kløve Array (KAS) achieves for any at the expense of a slight increase in redundancy (asymptotic redundancy ). The minimum-redundancy parameters of the KAS are found in closed form by application of Lemma 2, similarly to the CNA (cf. [33, Theorem 1]).

V-C Other generator choices

Naturally, other choices for the generator may yield alternative low-redundancy symmetric array configurations. For example, the Wichmann generator with yields the Interleaved Wichmann Array (IWA) [32]. This array satisfies (C1) by Item (i) of Corollary 2 and (C2) by Item (i) of Corollary 3. The IWA has the same asymptotic redundancy as the CNA, but fewer unit spacings. Although finding the minimum-redundancy parameters of the more general Symmetric Wichmann Array (S-WA) in closed-form is cumbersome, numerical optimization of and the other array parameters can slightly improve the non-asymptotic redundancy of the S-WA compared to the IWA. Nevertheless, neither the IWA nor S-WA are considered further in this work, since the KA achieves both lower and .

Numerical experiments suggest that some prominent array configurations, such as the Super Nested Array [27] or Co-prime Array [26], are not as well suited as generators from the redundancy point of view (mirroring does not help either). However, several other generators, both with and without contiguous difference co-arrays, remain to be explored in future work. For example, the difference MRA [9] and minimum-hole array [62, 63] are interesting candidates.

VI Performance evaluation of array designs

In this section, we compare the sparse array configurations presented in Sections III and V in terms of the array figures of merit in Section II-C. We also demonstrate the proposed sparse array configurations’ capability of resolving more scatterers than sensors in an active sensing scenario. Further numerical results can be found in the companion paper [33].

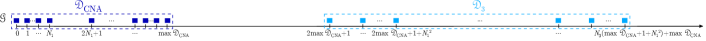

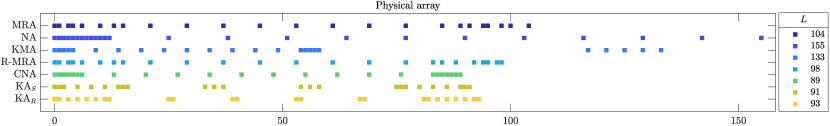

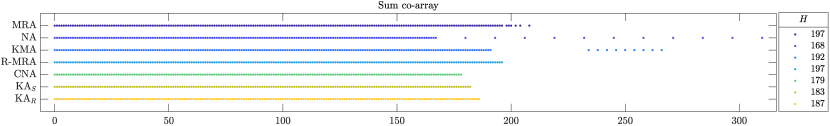

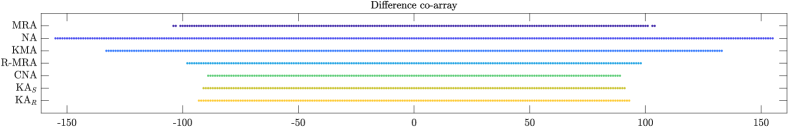

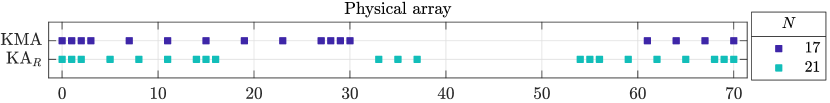

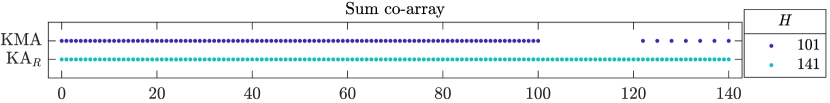

VI-A Comparison of array figures of merit

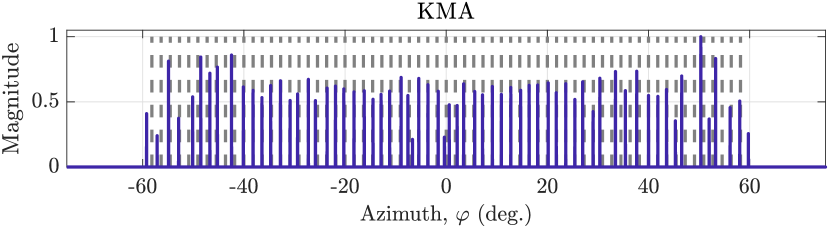

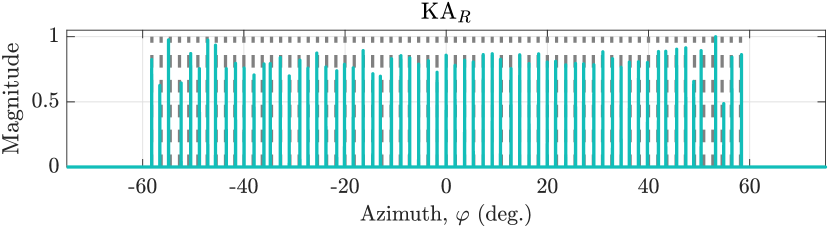

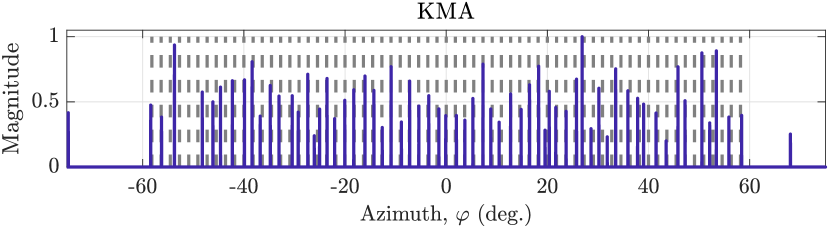

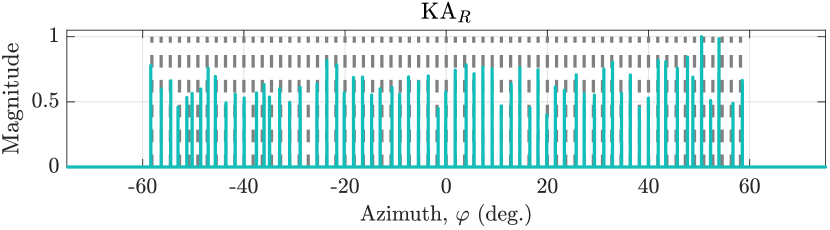

Tables IV and V summarize the key properties and figures of merit of the discussed sparse array configurations. The parameters of each configuration is chosen such that they maximize the number of contiguous DoFs in (3). The optimal parameters of the NA and KMA are found similarly to those of the CNA and KA (cf. Theorems 3 and 4)888Derivations are straightforward and details are omitted for brevity.. Fig. 4 illustrates the array configurations for sensors. The RRA is omitted since the MRA is known in this case. Note that the sum and difference co-arrays of the symmetric arrays are contiguous and merely shifted copies of each other.

[t] Array configuration Symmetric Contiguous DoFsa, Total DoFs, Aperture, No. of sensors, No. of unit spacings, General Minimum-Redundancy Array (MRA) no n/a n/a and n/a Nested Array (NA) [7] no Kløve-Mossige Array (KMA) [36] no \hdashlineRestricted Minimum-Redundancy Array (R-MRA) nob n/a n/a Reduced-Redundancy Array (RRA) [10] yes Concatenated Nested Array (CNA) [31] yes Constant unit spacing Kløve Array (KAS) [33] yes Minimum-Redundancy Kløve Array (KAR) yes

-

a

The tabulated results are representative of the scaling with or for the parameters maximizing . The expressions only hold exactly for specific values of and , which may vary between configurations.

-

b

The R-MRA has both asymmetric and symmetric solutions. A symmetric solution exists at least for all .

| Array | ||||||||

|---|---|---|---|---|---|---|---|---|

| MRA | ||||||||

| NA | ||||||||

| KMA | ||||||||

| \hdashlineR-MRA | ||||||||

| RRA | ||||||||

| CNA | ||||||||

| KAS | ||||||||

| KAR | ||||||||

VI-A1 Non-contiguous sum co-array

We first briefly consider the configurations with a non-contiguous sum co-array before studying the arrays with a contiguous sum co-array in more detail. Note from Table IV that even when the sum co-array is non-contiguous, the number of total DoFs is only slightly larger than the number of contiguous DoFs . Specifically, the difference between the two is proportional to the number of physical sensors , which is much smaller than , especially when or grow large. Table V shows that for the same number of contiguous DoFs , the general MRA has at most fewer sensors than the R-MRA by the most conservative estimate. For a fixed aperture , the corresponding number is at most , mainly due to the uncertainty related to , and thus the asymptotic co-array filling ratio , of the general MRA. In particular, any configuration seeking to maximize , such as the MRA, must satisfy . Moreover, holds by definition.

Among the considered configurations with closed-form sensor positions, the KMA achieves the largest number of contiguous DoFs , and therefore the lowest redundancy999The construction in [57] achieves an approximately lower . for a fixed number of sensors . However, its sum co-array contains holes, since . For equal aperture , the KAR has a larger than the KMA, but only more physical sensors . Similarly, the CNA has a two times larger than the NA and larger . Conversely, for equal , the CNA has a smaller physical aperture than the NA, while still achieving the same . The KAR has a smaller than the KMA, but only a smaller .

VI-A2 Contiguous sum co-array

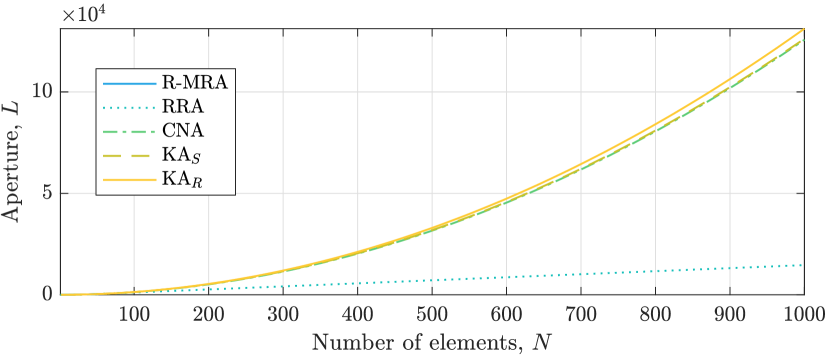

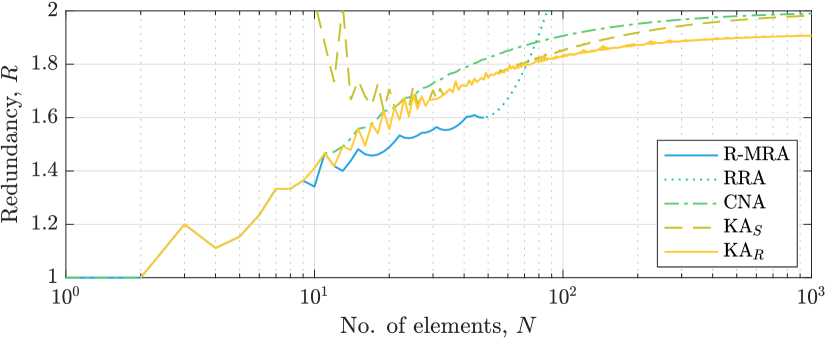

Next, we turn our attention to the configurations with a contiguous sum co-array. Fig. 5 illustrates the array aperture as a function of the number of sensors . The aperture scales quadratically with for all configurations except the RRA with . For reference, recall that the ULA satisfies . The KAR achieves a slightly larger aperture than the rest of the considered parametric arrays. For the same number of sensors (approaching infinity), the KAR has a larger aperture than the KAS and CNA. Conversely, for a fixed aperture, the KAR has fewer sensors. The differences between the configurations is clear in Fig. 6, which shows the redundancy as a function of . By definition, the R-MRA is the least redundant array with a contiguous sum co-array. However, it is also computationally prohibitively expensive to find for large , and therefore only known for [51, 54]. For , one currently has to resort to alternative configurations that are cheaper to generate, such as the KAR. The KAR achieves the lowest redundancy when . When , the RRA is the least redundant configuration. However, the redundancy of the RRA goes to to infinity with increasing . The KAR has between and more sensors than then R-MRA, which is less than any other currently known parametric sparse array configuration with a contiguous sum co-array.

Fig. 7 shows the number of unit spacings as a function of . In general, increases linearly with , and the KAR has the smallest rate of growth. The two exceptions are the KAS and RRA, which have a constant . However, unlike the RRA, the redundancy of the KAS is bounded (cf. Fig. 6). As discussed in Section II-C3, the number of unit spacings may be used as a simplistic indicator of the robustness of the array to mutual coupling. Assessing the effects of coupling ultimately requires actual measurements of the array response, or simulations using an electromagnetic model for the antenna and mounting platform [64, 65, 66, 67]. A detailed study of mutual coupling is therefore beyond the scope of this paper.

Obviously, many other important figures of merit are omitted here for brevity of presentation. For example, fragility and the achievable beampattern are natural criteria for array design or performance evaluation. Fragility quantifies the sensitivity of the co-array to physical sensor failures [68]. The array configurations studied in this paper only have essential sensors, and therefore high fragility, since the difference (and sum) co-array ceases to be contiguous if a sensor is removed. This is the cost of low redundancy. The beampattern is of interest in applications employing linear processing101010For exceptions where the beampattern is also relevant when employing non-linear processing, see, e.g., [7, 69].. For example, in adaptive beamforming, the one-way (transmit or receive) beampattern is critical, whereas in active imaging, the two-way (combined transmit and receive) beampattern is more relevant. Although the one-way beampattern of a sparse array generally exhibits high sidelobes, a wide range of two-way beampatterns may be achieved using one [15] or several [3, 70] transmissions and receptions. The arrays discussed in this paper can achieve the same effective beampattern as the ULA of equivalent aperture by employing multiple transmissions and receptions.

VI-B Active sensing performance

The estimation of the angles and scattering coefficients from (1) can be formulated as an on-grid sparse support recovery problem, similarly to the passive sensing case described in [71]. In particular, let denote a set of discretized angles. The task then becomes to solve

| (P6) |

where is the known steering matrix sampled at the angles, and the unknown sparse scattering coefficient vector. The sparsity of is enforced by the pseudonorm , which enumerates the number of non-zero entries. Although (P6) is a non-convex optimization problem due to the pseudonorm, it can be approximately solved using Orthogonal Matching Pursuit (OMP). OMP is a greedy algorithm that iteratively finds the columns in the dictionary matrix whose linear combination best describes the data vector in an sense. For details on the OMP algorithm, see [72], [73, p. 65].

We now compare the active sensing performance of the KMA and KAR using OMP. As demonstrated in Section VI-A, the KMA and KAR achieve the lowest redundancy of the considered configurations with closed-form sensor positions. We consider scatterers with angles uniformly spaced between and . The scattering coefficients are spherically distributed complex-valued random variables, i.e., , where . We assume that the arrays consist of ideal omnidirectional sensors with unit inter-sensor spacing , such that the th entry of the sampled steering matrix is . Angles form a uniform grid of points between and .

Fig. 8 shows that for a fixed aperture , the KAR has more contiguous DoFs than the KMA, at the expense of more sensors111111For reference, the R-MRA with aperture has sensors.. The KAR can therefore resolve more scatterers than the KMA of equivalent aperture at little extra cost. This is illustrated in Fig. 9, which shows the noiseless spatial spectrum estimate produced by the OMP algorithm. Both arrays resolve more scatterers than sensors, although the KMA misses some scatterers and displays spurious peaks instead. The KAR approximately resolves all scatterers due to its larger co-array. Fig. 9 shows the noisy OMP spectra when the SNR of the scene, defined as , is dB. The spectrum degrades more severely in case of the KMA, partly because it has fewer physical sensors than the KAR. These conclusions are validated by the empirical root-mean square error (RMSE) of the angle estimates, where random realizations of the noise and scattering coefficients were used. In the noiseless case, the RMSE of the KMA is , whereas the KAR achieves . In the noisy case the corresponding figures are (KMA) and (KAR).

VII Conclusions and future work

This paper proposed a general symmetric sparse linear array design suitable for both active and passive sensing. We established a necessary and sufficient condition for the sum and difference co-array to be contiguous, and identified sufficient conditions that substantially simplify the array design. We studied two special cases in detail, the CNA and KA, both of which achieve a low redundancy and can be generated for any number of sensors . The KA achieves the lowest asymptotic redundancy among the considered array configurations. This also yields an upper bound on the redundancy of the R-MRA, whose exact value remains an open question. The upper bound may perhaps be tightened by novel sparse array designs suggested by the proposed array design methodology.

In future work, it would be of interest to characterize the redundancy of other symmetric arrays, including recursive/fractal arrays [13, 14, 15] that have a contiguous sum co-array. Another direction is investigating the advantages of symmetric arrays over co-array equivalent asymmetric arrays in more detail. This could further increase the relevance of the symmetric sparse array configurations studied in this paper.

Acknowledgment

The authors would like to thank Dr. Jukka Kohonen for bringing the Kløve basis to their attention and for the feedback on this manuscript, as well as for the many stimulating discussions regarding additive bases.

Appendix A Contiguous difference co-array of the KMA

We now show that the Kløve-Mossige array in Definition 11 has a contiguous difference co-array. By symmetry of the difference co-array, it suffices to show that . We may easily verify that holds for

where the second line follows from the fact that the CNA is symmetric and has a contiguous difference co-array. Consequently, holds if and only if

Due to the periodicity of , this condition simplifies to

where , and by Definition 10 we have

As , it suffices to show that

By Definitions 10 and 11, we have

which implies that the difference co-array of is contiguous.

Appendix B First hole in the sum co-array of the KMA

Let denote the KMA in Definition 11. Furthermore, let , as defined in (3), be the first hole in . In the following, we show that

where the non-negative integer is

| (26) |

The first case, which we only briefly mention here, follows trivially from the fact that degenerates to the ULA when either and , or and . We prove the latter two cases by contradiction, i.e., by showing that , respectively , leads to an impossibility. Verifying that indeed , respectively , is left as an exercise for the interested reader.

We start by explicitly writing the sum co-array of as

Note that the CNA has a contiguous sum co-array, that is,

Furthermore, it was shown in Appendix A that

Consequently, holds if and only if

By Definition 11, there must therefore exist non-negative integers and such that

| (27) |

Substituting (26) into (27) and rearranging the terms yields

Since , the following inequality must hold:

This reduces to , or more conveniently, , since is an integer. Consequently, we have

| (28) |

where leads to . Substituting in (12) into (28) yields

| (29) |

Combined with , this implies that

since . We identify the following two cases:

- i)

-

ii)

If , then follows from (29), since must be an integer. This leads to a contradiction, since both and cannot hold simultaneously. Consequently, holds.

Finally, also holds when and , or and , since degenerates into the NA in this case. This covers all of the possible values of .

References

- [1] H. L. Van Trees, Optimum Array Processing, Part IV of Detection, Estimation, and Modulation Theory. Wiley-Interscience, 2002.

- [2] R. A. Haubrich, “Array design,” Bulletin of the Seismological Society of America, vol. 58, no. 3, pp. 977–991, 1968.

- [3] R. T. Hoctor and S. A. Kassam, “The unifying role of the coarray in aperture synthesis for coherent and incoherent imaging,” Proceedings of the IEEE, vol. 78, no. 4, pp. 735–752, Apr 1990.

- [4] A. Koochakzadeh and P. Pal, “Cramér-Rao bounds for underdetermined source localization,” IEEE Signal Processing Letters, vol. 23, no. 7, pp. 919–923, July 2016.

- [5] M. Wang and A. Nehorai, “Coarrays, MUSIC, and the Cramér-Rao bound,” IEEE Transactions on Signal Processing, vol. 65, no. 4, pp. 933–946, Feb 2017.

- [6] C. L. Liu and P. P. Vaidyanathan, “Cramér-Rao bounds for coprime and other sparse arrays, which find more sources than sensors,” Digital Signal Processing, vol. 61, pp. 43 – 61, Feb 2017.

- [7] P. Pal and P. P. Vaidyanathan, “Nested arrays: A novel approach to array processing with enhanced degrees of freedom,” IEEE Transactions on Signal Processing, vol. 58, no. 8, pp. 4167–4181, Aug 2010.

- [8] C. L. Liu and P. P. Vaidyanathan, “Remarks on the spatial smoothing step in coarray MUSIC,” IEEE Signal Processing Letters, vol. 22, no. 9, pp. 1438–1442, Sep. 2015.

- [9] A. Moffet, “Minimum-redundancy linear arrays,” IEEE Transactions on Antennas and Propagation, vol. 16, no. 2, pp. 172–175, Mar 1968.

- [10] R. T. Hoctor and S. A. Kassam, “Array redundancy for active line arrays,” IEEE Transactions on Image Processing, vol. 5, no. 7, pp. 1179–1183, Jul 1996.

- [11] M. Ishiguro, “Minimum redundancy linear arrays for a large number of antennas,” Radio Science, vol. 15, no. 6, pp. 1163–1170, 1980.

- [12] C. L. Liu and P. P. Vaidyanathan, “Maximally economic sparse arrays and Cantor arrays,” in Seventh IEEE International Workshop on Computational Advances in Multi-Sensor Adaptive Processing, CAMSAP, Dec 2017, pp. 1–5.

- [13] M. Yang, A. M. Haimovich, X. Yuan, L. Sun, and B. Chen, “A unified array geometry composed of multiple identical subarrays with hole-free difference coarrays for underdetermined doa estimation,” IEEE Access, vol. 6, pp. 14 238–14 254, 2018.

- [14] R. Cohen and Y. C. Eldar, “Sparse fractal array design with increased degrees of freedom,” in IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2019, May 2019, pp. 4195–4199.

- [15] ——, “Sparse array design via fractal geometries,” IEEE Transactions on Signal Processing, vol. 68, pp. 4797–4812, 2020.

- [16] R. L. Haupt, “Thinned arrays using genetic algorithms,” IEEE Transactions on Antennas and Propagation, vol. 42, no. 7, pp. 993–999, 1994.

- [17] B. Friedlander and A. J. Weiss, “Direction finding in the presence of mutual coupling,” IEEE Transactions on Antennas and Propagation, vol. 39, no. 3, pp. 273–284, Mar 1991.

- [18] G. Xu, R. H. I. Roy, and T. Kailath, “Detection of number of sources via exploitation of centro-symmetry property,” IEEE Transactions on Signal processing, vol. 42, no. 1, pp. 102–112, 1994.

- [19] R. Roy and T. Kailath, “ESPRIT — Estimation of signal parameters via rotational invariance techniques,” IEEE Transactions on Acoustics, Speech, and Signal Processing, vol. 37, no. 7, pp. 984–995, Jul 1989.

- [20] A. L. Swindlehurst, B. Ottersten, R. Roy, and T. Kailath, “Multiple invariance ESPRIT,” IEEE Transactions on Signal Processing, vol. 40, no. 4, pp. 867–881, 1992.

- [21] F. Gao and A. B. Gershman, “A generalized ESPRIT approach to direction-of-arrival estimation,” IEEE Signal Processing Letters, vol. 12, no. 3, pp. 254–257, 2005.

- [22] B. Wang, Y. Zhao, and J. Liu, “Mixed-order music algorithm for localization of far-field and near-field sources,” IEEE Signal Processing Letters, vol. 20, no. 4, pp. 311–314, 2013.

- [23] B. Wichmann, “A note on restricted difference bases,” Journal of the London Mathematical Society, vol. s1-38, no. 1, pp. 465–466, 1963.

- [24] D. Pearson, S. U. Pillai, and Y. Lee, “An algorithm for near-optimal placement of sensor elements,” IEEE Transactions on Information Theory, vol. 36, no. 6, pp. 1280–1284, Nov 1990.

- [25] D. A. Linebarger, I. H. Sudborough, and I. G. Tollis, “Difference bases and sparse sensor arrays,” IEEE Transactions on Information Theory, vol. 39, no. 2, pp. 716–721, Mar 1993.

- [26] P. P. Vaidyanathan and P. Pal, “Sparse sensing with co-prime samplers and arrays,” IEEE Transactions on Signal Processing, vol. 59, no. 2, pp. 573–586, Feb 2011.

- [27] C. L. Liu and P. P. Vaidyanathan, “Super nested arrays: Linear sparse arrays with reduced mutual coupling – Part I: Fundamentals,” IEEE Transactions on Signal Processing, vol. 64, no. 15, pp. 3997–4012, Aug 2016.

- [28] C. Liu and P. P. Vaidyanathan, “Super nested arrays: Linear sparse arrays with reduced mutual coupling – Part II: High-order extensions,” IEEE Transactions on Signal Processing, vol. 64, no. 16, pp. 4203–4217, 2016.

- [29] R. T. Hoctor and S. A. Kassam, “High resolution coherent source location using transmit/receive arrays,” IEEE Transactions on Image Processing, vol. 1, no. 1, pp. 88–100, Jan 1992.

- [30] F. Ahmad, G. J. Frazer, S. A. Kassam, and M. G. Amin, “Design and implementation of near-field, wideband synthetic aperture beamformers,” IEEE Transactions on Aerospace and Electronic Systems, vol. 40, no. 1, pp. 206–220, Jan 2004.

- [31] R. Rajamäki and V. Koivunen, “Sparse linear nested array for active sensing,” in 25th European Signal Processing Conference (EUSIPCO 2017), Kos, Greece, August 2017.

- [32] ——, “Symmetric sparse linear array for active imaging,” in Tenth IEEE Sensor Array and Multichannel Signal Processing Workshop (SAM), Sheffield, UK, July 2018, pp. 46–50.

- [33] ——, “Sparse low-redundancy linear array with uniform sum co-array,” in IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2020, Barcelona, Spain, May 2020, pp. 4592–4596.

- [34] C. L. Liu and P. P. Vaidyanathan, “Super nested arrays: sparse arrays with less mutual coupling than nested arrays,” in IEEE International Conference on Acoustics, Speech, and Signal Processing, ICASSP 2016., Mar 2016.

- [35] Z. Zheng, W. Wang, Y. Kong, and Y. D. Zhang, “MISC array: A new sparse array design achieving increased degrees of freedom and reduced mutual coupling effect,” IEEE Transactions on Signal Processing, vol. 67, no. 7, pp. 1728–1741, April 2019.

- [36] S. Mossige, “Algorithms for computing the h-range of the postage stamp problem,” Mathematics of Computation, vol. 36, no. 154, pp. 575–582, 1981.

- [37] H. Rohrbach, “Ein Beitrag zur additiven Zahlentheorie,” Mathematische Zeitschrift, vol. 42, no. 1, pp. 1–30, 1937.

- [38] T. Kløve, “A class of symmetric 2-bases,” Mathematica Scandinavica, vol. 47, no. 2, pp. 177–180, 1981.

- [39] J. Li and P. Stoica, “MIMO radar with colocated antennas,” IEEE Signal Processing Magazine, vol. 24, no. 5, pp. 106–114, Sep. 2007.

- [40] E. BouDaher, F. Ahmad, and M. G. Amin, “Sparsity-based direction finding of coherent and uncorrelated targets using active nonuniform arrays,” IEEE Signal Processing Letters, vol. 22, no. 10, pp. 1628–1632, Oct 2015.

- [41] C.-Y. Chen and P. P. Vaidyanathan, “Minimum redundancy MIMO radars,” in IEEE International Symposium on Circuits and Systems (ISCAS 2008), May 2008, pp. 45–48.

- [42] C. C. Weng and P. P. Vaidyanathan, “Nonuniform sparse array design for active sensing,” in 45th Asilomar Conference on Signals, Systems and Computers, Nov 2011, pp. 1062–1066.

- [43] X. Wang, Z. Chen, S. Ren, and S. Cao, “Doa estimation based on the difference and sum coarray for coprime arrays,” Digital Signal Processing, vol. 69, pp. 22 – 31, 2017.

- [44] W. Si, F. Zeng, C. Zhang, and Z. Peng, “Improved coprime arrays with reduced mutual coupling based on the concept of difference and sum coarray,” IEEE Access, vol. 7, pp. 66 251–66 262, 2019.

- [45] R. Rajamäki and V. Koivunen, “Sparse active rectangular array with few closely spaced elements,” IEEE Signal Processing Letters, vol. 25, no. 12, pp. 1820–1824, Dec 2018.

- [46] ——, “Comparison of sparse sensor array configurations with constrained aperture for passive sensing,” in IEEE Radar Conference (RadarConf 2017), May 2017, pp. 0797–0802.

- [47] E. BouDaher, F. Ahmad, M. G. Amin, and A. Hoorfar, “Mutual coupling effect and compensation in non-uniform arrays for direction-of-arrival estimation,” Digital Signal Processing, vol. 61, pp. 3–14, 2017.

- [48] C. L. Liu and P. P. Vaidyanathan, “Hourglass arrays and other novel 2-d sparse arrays with reduced mutual coupling,” IEEE Transactions on Signal Processing, vol. 65, no. 13, pp. 3369–3383, July 2017.

- [49] C. A. Balanis, Antenna theory: analysis and design, 4th ed. John Wiley & sons, 2016.

- [50] J. Kohonen and J. Corander, “Addition chains meet postage stamps: reducing the number of multiplications,” Journal of Integer Sequences, vol. 17, no. 3, pp. 14–3, 2014.

- [51] J. Kohonen, “A meet-in-the-middle algorithm for finding extremal restricted additive 2-bases,” Journal of Integer Sequences, vol. 17, no. 6, 2014.

- [52] J. Riddell and C. Chan, “Some extremal 2-bases,” Mathematics of Computation, vol. 32, no. 142, pp. 630–634, 1978.

- [53] J. Leech, “On the representation of by differences,” Journal of the London Mathematical Society, vol. s1-31, no. 2, pp. 160–169, 1956.

- [54] J. Kohonen, “Early pruning in the restricted postage stamp problem,” arXiv preprint arXiv:1503.03416, 2015.

- [55] S. Shakeri, D. D. Ariananda, and G. Leus, “Direction of arrival estimation using sparse ruler array design,” in IEEE 13th International Workshop on Signal Processing Advances in Wireless Communications, SPAWC 2012, June 2012, pp. 525–529.

- [56] G. Yu, “A new upper bound for finite additive h-bases,” Journal of Number Theory, vol. 156, pp. 95 – 104, 2015.

- [57] J. Kohonen, “An improved lower bound for finite additive 2-bases,” Journal of Number Theory, vol. 174, pp. 518 – 524, 2017.

- [58] G. Yu, “Upper bounds for finite additive 2-bases,” Proceedings of the American Mathematical Society, vol. 137, no. 1, pp. 11–18, 2009.

- [59] J. Kohonen, “Exact inference algorithms and their optimization in bayesian clustering,” Ph.D. dissertation, University of Helsinki, 2015.

- [60] D. H. Werner and S. Ganguly, “An overview of fractal antenna engineering research,” IEEE Antennas and Propagation Magazine, vol. 45, no. 1, pp. 38–57, 2003.

- [61] C. Liu and P. P. Vaidyanathan, “Optimizing minimum redundancy arrays for robustness,” in 52nd Asilomar Conference on Signals, Systems, and Computers, 2018, pp. 79–83.

- [62] G. S. Bloom and S. W. Golomb, “Applications of numbered undirected graphs,” Proceedings of the IEEE, vol. 65, no. 4, pp. 562–570, April 1977.

- [63] E. Vertatschitsch and S. Haykin, “Nonredundant arrays,” Proceedings of the IEEE, vol. 74, no. 1, pp. 217–217, 1986.

- [64] J. L. Allen and B. L. Diamond, “Mutual coupling in array antennas,” Massachusetts Institute Of Technology Lexington Lincoln Lab, Tech. Rep. 424, 1966.

- [65] J. Rubio, J. F. Izquierdo, and J. Córcoles, “Mutual coupling compensation matrices for transmitting and receiving arrays,” IEEE Transactions on Antennas and Propagation, vol. 63, no. 2, pp. 839–843, Feb 2015.

- [66] C. Craeye and D. González-Ovejero, “A review on array mutual coupling analysis,” Radio Science, vol. 46, no. 2, 2011.

- [67] B. Friedlander, “The extended manifold for antenna arrays,” IEEE Transactions on Signal Processing, vol. 68, pp. 493–502, 2020.

- [68] C. Liu and P. P. Vaidyanathan, “Robustness of difference coarrays of sparse arrays to sensor failures—part I: A theory motivated by coarray MUSIC,” IEEE Transactions on Signal Processing, vol. 67, no. 12, pp. 3213–3226, 2019.

- [69] R. Cohen and Y. C. Eldar, “Sparse convolutional beamforming for ultrasound imaging,” IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, vol. 65, no. 12, pp. 2390–2406, Dec 2018.

- [70] R. J. Kozick and S. A. Kassam, “Linear imaging with sensor arrays on convex polygonal boundaries,” IEEE Transactions on Systems, Man, and Cybernetics, vol. 21, no. 5, pp. 1155–1166, Sep 1991.

- [71] P. Pal and P. P. Vaidyanathan, “Pushing the limits of sparse support recovery using correlation information,” IEEE Transactions on Signal Processing, vol. 63, no. 3, pp. 711–726, Feb 2015.

- [72] J. A. Tropp, “Greed is good: algorithmic results for sparse approximation,” IEEE Transactions on Information Theory, vol. 50, no. 10, pp. 2231–2242, 2004.

- [73] S. Foucart and H. Rauhut, A Mathematical Introduction to Compressive Sensing. Birkhäuser, 2013.