11email: [email protected] 22institutetext: Video Processing and Understanding Lab, Univ. Autónoma de Madrid, 28049, Madrid, Spain

22email: [email protected]

22email: {alvaro.garcia, j.bescos}@uam.es

Spacecraft Pose Estimation Based on Unsupervised Domain Adaptation and on a 3D-Guided Loss Combination

Abstract

Spacecraft pose estimation is a key task to enable space missions in which two spacecrafts must navigate around each other. Current state-of-the-art algorithms for pose estimation employ data-driven techniques. However, there is an absence of real training data for spacecraft imaged in space conditions due to the costs and difficulties associated with the space environment. This has motivated the introduction of 3D data simulators, solving the issue of data availability but introducing a large gap between the training (source) and test (target) domains. We explore a method that incorporates 3D structure into the spacecraft pose estimation pipeline to provide robustness to intensity domain shift and we present an algorithm for unsupervised domain adaptation with robust pseudo-labelling. Our solution has ranked second in the two categories of the 2021 Pose Estimation Challenge organised by the European Space Agency and the Stanford University, achieving the lowest average error over the two categories111Code is available at: https://github.com/JotaBravo/spacecraft-uda.

Keywords:

Spacecraft, Pose Estimation, Unsupervised Domain Adaptation, 3D Loss.1 Introduction

Relative navigation between a chaser and a target spacecraft is a key capability for future space missions, due to the relevance of close-proximity operations within the realm of active debris removal or in-orbit servicing. Camera sensors are a growing suitable choice to aid in the relative navigation around non-cooperative targets due to their reduced cost, low-mass and low-power requirements compared to active sensors. However, data acquired in real operational scenarios is often scarce or not available, limiting the deployment of data-driven algorithms. This limitation is nowadays overcome by using data simulators, which solve the issue of data scarcity but introduce the problem of domain shift between the training and test datasets.

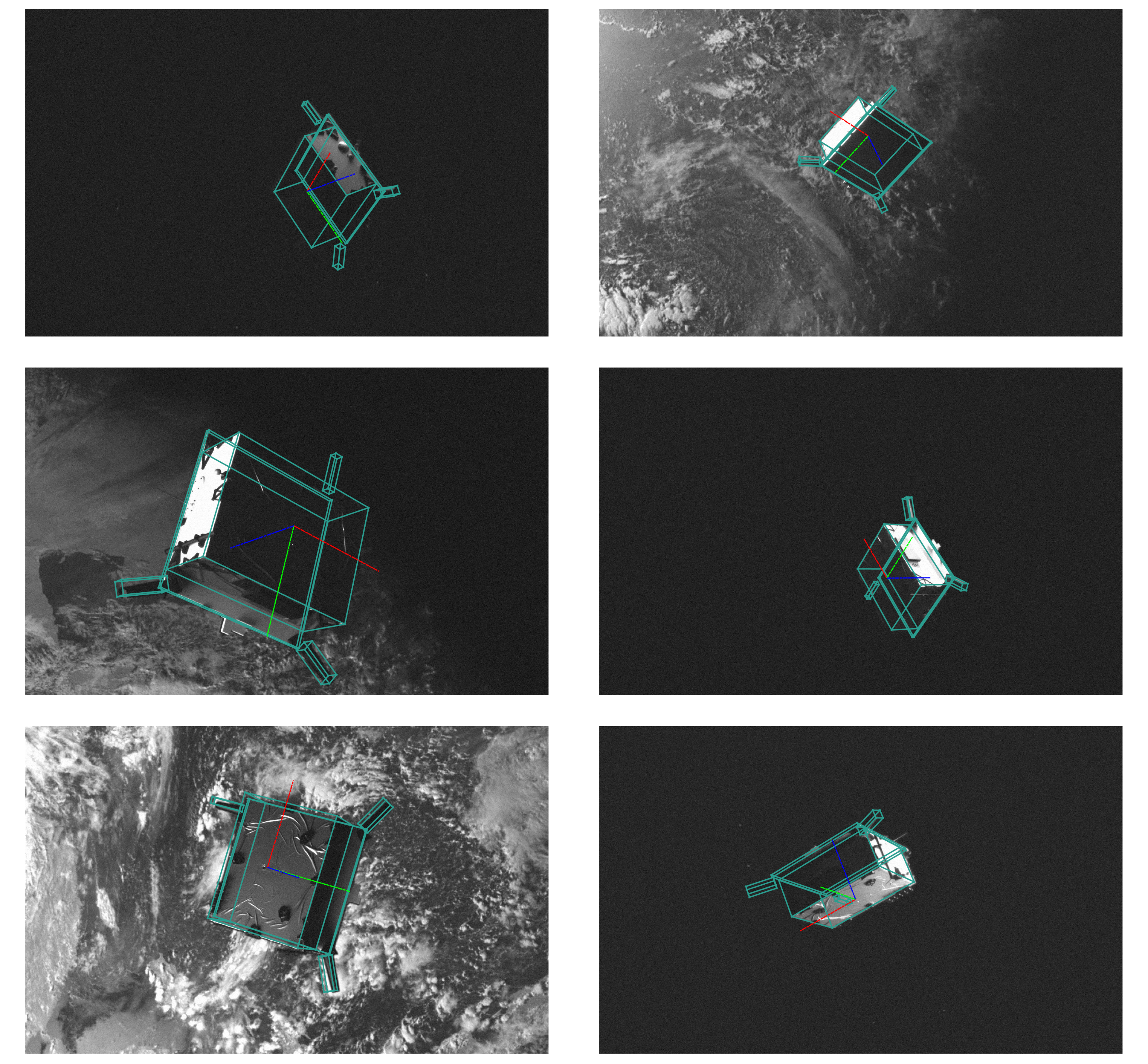

The 2021 Spacecraft Pose Estimation Challenge (SPEC2021) [7], organised by the European Space Agency and the Stanford University, was designed to bridge the performance gap between computer-simulated and operational data for the task of non-cooperative spacecraft pose estimation. SPEC2021 builds around the SPEED+ dataset [25] containing 60,000 computer-generated images of the Tango spacecraft with associated pose labels and 9531 unlabelled hardware-in-the-loop test images of a half-scale mock-up model. Test images are grouped into two subsets: Sunlamp, consisting of 2791 images featuring strong illumination and reflections over a black background; and Lightbox, consisting of 6740 images with softer illumination but increased noise levels and the presence of the Earth in the background (see sample images in Fig. 1). SPEC2021 evaluates the accuracy in pose estimation for each test subset.

Under this Challenge, we have developed a single algorithm that has ranked second in both Sunlamp and Lightbox categories, with the best total average error over the two datasets. Our main contributions are:

-

•

A spacecraft pose estimation algorithm that incorporates 3D structure information during training, providing robustness to intensity based domain-shift.

-

•

An unsupervised domain adaptation scheme based on robust pseudo-label generation and self-training.

2 Related Work

This section briefly presents the related work on pose estimation and unsupervised domain adaptation.

2.1 Pose Estimation

Pose estimation is the process of estimating the rigid transform that aligns a model frame with a sensor, body, or world reference frame [13]. Similarly to [27] we categorise these methods into: end-to-end, two-stage, and dense.

End-to-end methods map the two-dimensional images or their feature representations into the six-dimensional output space without employing geometrical solvers. Handcrafted approaches compare image regions with a database of templates to return the 6 Degree-of-freedom (DoF) pose associated with the template [10, 32]. Learning-based approaches employ CNNs to either learn the direct regression of the 6DoF output space [15, 20, 14, 28] or classify the input into a discrete pose [34]. End-to-end methods have been explored in [30], where an AlexNet was used to classify input images with labels associated to a discrete pose. The authors in [28] employed a network to regress the position and do continuous orientation estimation of the spacecraft with soft assignment coding.

Two-stage pose estimation methods first estimate the 2D image projection of the 3D object key-points and then apply a Perspective-n-Point (PnP) algorithm over the set of 2D-3D correspondences to retrieve the pose. Traditionally, this has been solved via key-point detection, description and matching between the images and a 3D model representation based on handcrafted [19, 2, 1] or learnt [36, 21] key-point detectors, descriptors and matchers. Other approaches directly employ CNNs designed for 2D key-point localisation that detect the vertices of the 3D object bounding-box or regress the key-point position by employing heatmaps, hence unifying the detection, description and matching into a single network [22, 39]. These approaches are the current state-of-the-art in satellite pose estimation. The authors from [4] employed an HRNet and non-linear pose refinement which obtained the first position in the Spacecraft Pose Estimation Challenge of 2019. In [11] a multi-scale fusion scheme with a 3D loss independent of the depth was employed to improve the pose estimation accuracy under different scale distributions of the spacecraft.

Dense pose estimation methods establish dense correspondences, at pixel or patch level, between a 2D image and a 3D surface object representation. These approaches can be based on depth data, RGB images or image pairs [27, 12, 6].

2.1.1 Discussion:

Non-cooperative spacecraft pose estimation presents specific conditions that can be incorporated as prior knowledge into the algorithms: 1) the target will not appear occluded in the image; 2) and the targets have highly reflective materials that coupled with small orbital periods frequently lead to heterogeneous illumination conditions during rendezvous; and 3) the estimation should provide some degree of interpretability so the navigation filter can handle failure cases. End-to-end pose estimation methods, that confront the problem as a classification task, are limited by the error associated with the discretisation of the pose-space and require a correct sampling of the attitude space to obtain uniform representations [16]. In addition, the methods that directly regress the pose fail to generalise, as the formulation might be closer to the image retrieval problem rather than to pose estimation one [29]. Two-stage pose estimation methods do not provide robustness to occlusions and can be affected by key-points falling outside the image space. Finally, dense methods provide robustness to occlusions by producing a prediction for every pixel or patch in the image, but increase the difficulty of the learning task due to the larger output space [27]. These also impose additional constraints on the input data as methods may need depth or surface normals, which were not available for the Challenge.

These considerations motivated our choice of a two-stage approach. Key-points provide a certain degree of interpretability, as the output is easily understandable and can be directly related with a pose. These methods also allow for continuous output estimation without compromising generalisation and do not require the additional ground-truth data, usually depth, that dense methods do. In addition, two-stage methods have shown better performance compared to end-to-end methods in 6DoF pose estimation [31] and are commonly used into the state-of-the-art methods for the spacecraft domain [11, 4].

2.2 Domain Adaptation

In visual-based navigation for space applications the distribution of the data to be acquired during the mission is often unknown, as no or little data is available prior the mission. This entails the introduction of computer graphic simulators [26] to generate the data required to design and test the algorithms. Although computer-graphic simulated images have greatly improved their quality and fidelity, there still exists a noticeable domain gap between the simulated (source) data and the real (target) data. Methods to overcome the reduction in algorithms performance for the same task under data with different distributions fall under the category of Domain Adaptation [23]. More specifically, when labels are only provided in the source domain is referred as Unsupervised Domain Adaptation (UDA). UDA methods can be categorised into: 1) domain-invariant feature learning; 2) aligning input data; and 3) self-training via pseudo-labelling.

Domain-invariant feature learning aims to align the source and target domain at feature level. It is based on the assumption that exists a feature representation that does not vary across domains, i.e. the information captured by the feature extractor is the one that does not depend on the domain. This family of methods try to learn the representation either by minimising the distribution shift under some divergence metric [35], enforcing that both feature representations can be used to reconstruct the target or source domain data [9], or employing adversarial training [8].

Input data alignment. Instead of aligning the domains at the feature level, these approaches aim to align the source domain to the target domain at input level, a mapping usually confronted via generative adversarial networks [42, 33].

Self-training approaches employ generated pseudo-labels to iteratively improve the model. However, these labels generation process is inevitably noisy. Some works have tried to mitigate the effect of the label noise by designing robust losses [41] but they often fail to handle real-world noisy data [40]. Other approaches have used self-label correction that relies on the agreement between different learners [17] or stages of the pipeline [40] to correct the noisy labels. Online domain adaptation is employed in [24] for satellite pose estimation across different domains. A segmentation-based loss is employed to update the feature extractor of a network, adapting the features to the new input-domain.

2.2.1 Discussion:

Feature learning methods rely on the existence of feature representations that do not vary across domains, and assume that similar semantic objects are shared between domains during training. However, in pose estimation the semantic objects (key-points) are pose dependent and thus, the same semantic objects at training might not appear in both domains, hence affecting the alignment performance. Input alignment methods are able to transfer general illumination and contrast properties. However, they might introduce artefacts, and reflectances due to the object materials and sensor specific noise between train and test are often not captured. Our proposal is based on pseudo-labelling, which allows to learn from the target domain providing directly interpretable results. In addition, pseudo-labelling provides state-of-the-art results in UDA for the semantic segmentation task [40].

3 Pose Estimation

This section introduces the proposed method for pose estimation. In our setting, the target frame is the geometrical centre of the target spacecraft, represented by a set of 11 3D key-points. The sensor frame is expressed at the centre of the image. At test time, we use a Perspective-n-Point solver over the 3D spacecraft key-points and their estimated 2D image projections to retrieve the pose.

In this challenging scenario where, apart from a noticeable domain gap, surfaces reflectivity and illumination conditions further generate great change, objects in the source and target domains keep the same 3D structure. This motivates the use of an architecture and learning losses that not only rely on image textures, but make explicit use of the 3D key-point structure.

At architecture level (see Fig. 2 - Model), we propose the use of a stacked hourglass network with two heads to jointly regress the key-point position and their associated depth, enabling 3D-aware feature learning. At loss level (see Fig. 2 - Losses), we formulate a triple learning objective: a) in the 2D-2D loss we compare the mean squared error between the key-point head output with a 2D Gaussian blob centred on the ground-truth key-point location; b) in the 3D-2D loss we incorporate pose information by comparing the coordinates of the 3D key-points projected onto the image using the estimated pose via PnP with the corresponding ground-truth coordinates in the image; c) in the 3D-3D loss we incorporate 3D key-point structure: the estimated depth along the camera intriniscs is used to lift the estimated key-point positions, and these lifted key-points are aligned in 3D with the ground-truth key-points using a least square estimator. Then, the retrieved pose from the alignment is compared to the ground-truth pose.

3.1 2D-2D: Key-point Heatmap Loss

The ground-truth heatmaps are obtained by representing a circular Gaussian centred at the ground-truth image key-point coordinates . Given an image of the target spacecraft, represented by the 3D key-points and acquired with camera intrinsics in a ground-truth pose with rotation matrix and translation vector , the ground-truth key-point coordinates can obtained via the projection expression:

| (1) |

The heatmaps predicted by each head are learnt by comparing the output of the key-point prediction head vs the ground-truth heat-map via mean squared error. To keep a fixed range for the key-point heatmap loss, we normalise each heatmap by its maximum value and weight them by a parameter , ensuring a large difference between the minimum and maximum value of the heatmap. The key-point heatmap loss is then computed via:

| (2) |

3.2 3D-2D: PnP Loss

Pose information is here considered by comparing the ground-truth 2D key-point image coordinates with the projection of the 3D key-points onto the image, obtained using the rigid transform estimated via PnP (see Fig. 2 - Model b)). The predicted key-point locations used to estimate such rigid transform are originally expressed as heatmaps. The application of the arg-max function over to retrieve the predicted key-point pixel positions is a non-differentiable operation which cannot be used for training. We instead use the integral pose regression method [37] and apply an expectation operation over the soft-max normalized heatmaps to retrieve the pixel coordinates . Then, we employ the backpropagatable PnP algorithm from [5] to retrieve the estimated rotation matrix and translation vector .

The PnP loss evaluates the mean squared error between the ground-truth key-point pixel locations and the key-point pixel locations obtained by projecting onto the image with and as in Eq. 1. The loss has an additional regularisation term, comparing with , to ensure convergence of the estimated key-point coordinates the desired positions [5]. The final PnP loss term is computed via:

| (3) |

3.3 3D-3D: Structure Loss

We incorporate 3D structure by comparing the rotation and translation that align the predicted key-points lifted to the 3D space with the key-points representing the spacecraft with the ground-truth pose (see Fig. 2 - Model c)). Following the approach described in Section 3.1, we retrieve the estimated key-point coordinates . Then we lift them to the 3D space by applying the camera intrinsics and the estimated depth . Instead of providing direct supervision to the depth, we choose to sample the depth prediction head at the position of the ground-truth key-point positions , and supervise the output with the 3D alignment loss. Sampling at the ground-truth key-point positions instead of the estimated positions helps convergence, as the number of combinations of key-point locations and depth values that satisfy a given rotation and translation is not unique. and are found by minimising the sum of square distances between the target points and the lifted 3d points :

| (4) |

The algorithm that solves this expression is referred as the Umeyama [38] (or Kabsch) algorithm and has a closed form solution. We employ the Procrustes solver from [3] to obtain the arguments that minimise the above expression. The loss is then computed by:

| (5) |

The final learning objective is then defined by a weighted sum of the three losses. The values of are selected so the contributions of the 2D-3D and 3D-3D losses represent a 10% of the total learning objective.

| (6) |

4 Domain Adaptation

The losses defined in Section 3 to consider texture, pose and structure improve the performance in the test set for the task of spacecraft pose estimation (see Section 5.3). However, we still observe a large performance gap between the source domain (validation) and the two target domains (test). This gap motivates the introduction of unsupervised domain adaptation techniques. Given a dataset of images in the source domain and the associated ground-truth rotation matrix and translation vector that define the pose, we aim to train a model such that when evaluated on a target dataset produces correct ground-truth key-point positions, without using the ground-truth pose. Our proposal relies on self-training with robust pseudo-labelling generation. As discussed before, pseudo-labels provide a strong supervision signal on the target domain but are intrinsically noisy. If not handled properly, this noise can degrade the overall performance. In our setting, we reduce the noise during label generation by enforcing consistent pose estimates across the network head.

The proposed approach is summarised in Algorithm 1. We first pre-train a model (Fig. 2 - Model) on the source dataset. Then, we evaluate the images from a given target dataset on the model . The model will generate a prediction on the key-point locations, expressed as heatmaps at each head of the network. We consider the pixel coordinates of the maximum value of the heatmap to be the predicted key-point pixel coordinates . Differently to the optimisation of the losses and , here we do not require this step to be differentiable, and thus a standard argmax operation suffices: . Next, we perform key-point filtering based on the heatmap response. First, we remove the key-points falling close to the edges of the image. The remaining key-points are sorted based on the total sum of the heatmap , and the seven key-points with strongest response are kept. The remaining key-points are noted as . We employ the the EPnP (Efficient Perspective-n-Point) algorithm [18] under a RANSAC loop to estimate the pose with the filtered key-points at each head .

The pose at each level is estimated with the prediction of each head by applying the EPnP (Efficient Perspective-n-Point) algorithm [18] under a RANSAC loop. A new pose pseudo-label is introduced into the test set if the RANSAC-EPnP routine converges for all the heads . The solution corresponding to the largest total key-point response is chosen as the resulting pose. The convergence of the RANSAC routine is controlled by two parameters: the confidence parameter that controls the probability of the method to provide a useful result, and the reprojection error that defines the maximum allowed distance between a point and its reprojection with the estimated pose to be considered an inlier. Modifying the convergence criteria (i.e. and ) allows to control the amount of label noise. In our case, we choose and .

5 Evaluation

5.1 Metrics

The evaluation metric used in the Challenge was the pose score . The pose score is based on the combination of the position score and the orientation score . The position score for an image is defined as the absolute difference between the estimated and ground-truth translation vectors, normalised by the module of the ground-truth position vector. The position score accounts for the precision in position of the robotic platform, which is of millimetre per metre of ground truth distance. Values of lower than are zeroed.

| (7) |

The orientation score is defined as the angle that aligns the estimated and ground-truth quaternion orientations. This metric also accounts for the precision of the robotic arm which is 0.169º, zeroing than the precision.

| (8) |

The total score is the average of the sum of the orientation and position scores corresponding the images of the test set:

| (9) |

5.2 Challenge results

The ground-truth labels of the two test sets (Lightbox and Sunlamp) from the SPEED+ dataset have not been made publicly available. Instead, the Challenge evaluation was performed by submitting the estimated poses to a evaluation server; the results were then published on public leaderboards only if the obtained score improved a previous result from the same participant. We provide a summary of the results by showing the error achieved by the 10 first teams on the Lightbox and Sunlamp datasets on Table 1 and Table 2 respectively. Teams are represented in decreasing order based on the achieved rank in the leaderbord, corresponding the first row to the winner of the category. The winning teams were TangoUnchained for the Lightbox dataset and lava1302 for the Sunlamp dataset. Our solution VPU ranked 2nd on both datasets, and achieved the best total average error as shown in Table 3, where we represent the averaged scores of the two datasets. This shows that our solution is robust to different domain changes and can be applied without fine-tuning on different settings with different illumination and noise conditions.

| Team Name | |||

|---|---|---|---|

| TangoUnchained | 0.0179 | 0.0556 | 0.0734 |

| VPU (Ours) | 0.0215 | 0.0799 | 0.1014 |

| lava1302 | 0.0483 | 0.1163 | 0.1646 |

| haoranhuang_njust | 0.0315 | 0.1419 | 0.1734 |

| u3s_lab | 0.0548 | 0.1692 | 0.2240 |

| chusunhao | 0.0328 | 0.2859 | 0.3186 |

| for graduate | 0.0753 | 0.4130 | 0.4883 |

| Pivot SDA AI & Autonomy Sandbox | 0.0721 | 0.4175 | 0.4895 |

| bbnc | 0.0940 | 0.4344 | 0.5312 |

| ItTakesTwoToTango | 0.0822 | 0.5427 | 0.6248 |

| Team Name | |||

|---|---|---|---|

| lava1302 | 0.0113 | 0.0476 | 0.0588 |

| VPU (Ours) | 0.0118 | 0.0493 | 0.0611 |

| TangoUnchained | 0.0150 | 0.0750 | 0.0899 |

| u3s_lab | 0.0320 | 0.1089 | 0.1409 |

| haoranhuang_njust | 0.0284 | 0.1467 | 0.1751 |

| bbnc | 0.0819 | 0.3832 | 0.4650 |

| for graduate | 0.0858 | 0.4009 | 0.4866 |

| Pivot SDA AI & Autonomy Sandbox | 0.1299 | 0.6361 | 0.7659 |

| ItTakesTwoToTango | 0.0800 | 0.6922 | 0.7721 |

| chusunhao | 0.0584 | 0.0584 | 0.8151 |

| Team Name | |||

|---|---|---|---|

| VPU (Ours) | 0.0166 | 0.0646 | 0.0812 |

| TangoUnchained | 0.0164 | 0.0653 | 0.0816 |

| lava1302 | 0.0298 | 0.0820 | 0.1117 |

| haoranhuang_njust | 0.0456 | 0.1443 | 0.1742 |

| u3s_lab | 0.0434 | 0.1391 | 0.1824 |

| for graduate | 0.0805 | 0.4070 | 0.4874 |

| bbnc | 0.0879 | 0.4088 | 0.4981 |

| chusunhao | 0.0456 | 0.17215 | 0.56685 |

| Pivot SDA AI & Autonomy Sandbox | 0.0999 | 0.5268 | 0.6266 |

| ItTakesTwoToTango | 0.0811 | 0.61745 | 0.69845 |

For the Challenge, we trained the model described in Section 3 during 35 epochs on the Synthetic dataset at a resolution of 640x640 pixels. Then, we trained individual models following Algorithm 1 for the Sunlamp and Lightbox target datasets during 50 iterations. Fig. 3 and Fig. 4 provide visual results of the estimated poses for the Sunlamp and Lightbox datasets. The estimated poses are represented with the 3-axis plot and the wireframe model projected over the image. They show that the proposed method can handle strong reflections, presence of other elements on the image, complex background and close views of the object.

5.3 Additional Experiments

We show in Fig. 5 the effect of iterating over Algorithm 1 on the number of generated pseudo-labels. The left plot corresponds to the strategy followed over the Sunlamp dataset, where the reprojection error that controls the convergence criteria of RANSAC-EPnP remains fixed during all iterations with a value of 2.0. It can be observed that the percentage of pseudo-labels reaches 90% after 10 iterations and slowly increases from there, suggesting a possible overfit to wrongly estimated labels. The right plot corresponds to the Ligthbox dataset, where a different approach was followed. Motivated by the fact that the Lightbox test set is more visually similar to the training set than the Sunlamp test set, we hypothesize that restricting the label generation by reducing the reprojection error would lead to better pseudo-labels and hence better perfromance. To test this, the reprojection error was decreased after 10 iterations (red dashed line) to 1.0. It can be observed that the percentage of pseudo-labels quickly drops and then grows at a slower rate. However, based on the final results we cannot conclude that this strategy improved the results compared to using a fixed reprojection error, as the achieved errors for both test sets are in a similar range.

To show the effectiveness of the proposed method on the target test sets, we evaluated the proposed pose estimation algorithm against a baseline considering only the 2D heatmap loss. The models were trained on the source synthetic dataset during 10 epochs and tested over a pseudo-test dataset. The pseudo-test set is obtained by running Algorithm 1 until approximately 30 % of the target dataset is labelled. This number is chosen as a compromise between the necessary number of pseudo-labels to obtain a representative analysis and the quality of the labels obtained. The pseudo-test set approach is followed as the real labels of the test set are not made publicy available in the challenge. The results are shown in Table 4 with the validation set from the source dataset as reference. It can be observed that the proposed method, despite obtaining similar values on the validation (Source set) is able to improve the results on the target (test) sets.

| Method | Sunlamp | Lightbox | Validation | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Baseline | 0.3095 | 0.9229 | 1.2324 | 0.2888 | 0.8935 | 1.1823 | 0.0086 | 0.02627 | 0.03490 |

| Proposed | 0.2138 | 0.8554 | 1.0692 | 0.2837 | 0.7709 | 1.0546 | 0.0088 | 0.0256 | 0.0355 |

6 Conclusions

We have presented a method for spacecraft pose estimation that integrates structure information in the learning process by incorporating pose and 3D alignment information in a combined loss function. We argue that incorporating such information provides robustness to domain shifts when the imaged objects keep the same structure although they are captured under different illumination conditions. In addition we have introduced a method for pseudo-labelling in pose estimation that exploits the consensus within a network and provides robustness to label noise. Our solution has ranked second on both datasets for the SPEC2021 Challenge, achieving the best average score.

6.0.1 Acknowledgements.

This work is supported by Comunidad Autónoma de Madrid (Spain) under the Grant IND2020/TIC-17515.

References

- [1] Alcantarilla, P.F., Bartoli, A., Davison, A.J.: Kaze features. In: European conference on computer vision. pp. 214–227. Springer (2012)

- [2] Bay, H., Ess, A., Tuytelaars, T., Van Gool, L.: Speeded-up robust features (surf). Computer vision and image understanding 110(3), 346–359 (2008)

- [3] Brégier, R.: Deep regression on manifolds: a 3D rotation case study (2021)

- [4] Chen, B., Cao, J., Parra, A., Chin, T.J.: Satellite pose estimation with deep landmark regression and nonlinear pose refinement. In: Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops. pp. 0–0 (2019)

- [5] Chen, B., Parra, A., Cao, J., Li, N., Chin, T.J.: End-to-end learnable geometric vision by backpropagating pnp optimization. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 8100–8109 (2020)

- [6] Doumanoglou, A., Kouskouridas, R., Malassiotis, S., Kim, T.K.: Recovering 6d object pose and predicting next-best-view in the crowd. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 3583–3592 (2016)

- [7] European Space Agency: Spacecraft pose estimation challenge 2021 (2021), https://kelvins.esa.int/pose-estimation-2021/

- [8] Ganin, Y., Lempitsky, V.: Unsupervised domain adaptation by backpropagation. In: International conference on machine learning. pp. 1180–1189. PMLR (2015)

- [9] Ghifary, M., Kleijn, W.B., Zhang, M., Balduzzi, D., Li, W.: Deep reconstruction-classification networks for unsupervised domain adaptation. In: European conference on computer vision. pp. 597–613. Springer (2016)

- [10] Hinterstoisser, S., Lepetit, V., Ilic, S., Fua, P., Navab, N.: Dominant orientation templates for real-time detection of texture-less objects. In: 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. pp. 2257–2264. IEEE (2010)

- [11] Hu, Y., Speierer, S., Jakob, W., Fua, P., Salzmann, M.: Wide-depth-range 6d object pose estimation in space. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 15870–15879 (2021)

- [12] Kehl, W., Milletari, F., Tombari, F., Ilic, S., Navab, N.: Deep learning of local rgb-d patches for 3d object detection and 6d pose estimation. In: European conference on computer vision. pp. 205–220. Springer (2016)

- [13] Kelsey, J.M., Byrne, J., Cosgrove, M., Seereeram, S., Mehra, R.K.: Vision-based relative pose estimation for autonomous rendezvous and docking. In: 2006 IEEE aerospace conference. pp. 20–pp. IEEE (2006)

- [14] Kendall, A., Cipolla, R.: Geometric loss functions for camera pose regression with deep learning. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 5974–5983 (2017)

- [15] Kendall, A., Grimes, M., Cipolla, R.: Posenet: A convolutional network for real-time 6-dof camera relocalization. In: Proceedings of the IEEE international conference on computer vision. pp. 2938–2946 (2015)

- [16] Kuffner, J.J.: Effective sampling and distance metrics for 3d rigid body path planning. In: IEEE International Conference on Robotics and Automation, 2004. Proceedings. ICRA’04. 2004. vol. 4, pp. 3993–3998. IEEE (2004)

- [17] Lee, K.H., He, X., Zhang, L., Yang, L.: Cleannet: Transfer learning for scalable image classifier training with label noise. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 5447–5456 (2018)

- [18] Lepetit, V., Moreno-Noguer, F., Fua, P.: Epnp: An accurate o (n) solution to the pnp problem. International journal of computer vision 81(2), 155–166 (2009)

- [19] Lowe, D.G.: Distinctive image features from scale-invariant keypoints. International journal of computer vision 60(2), 91–110 (2004)

- [20] Mahendran, S., Ali, H., Vidal, R.: 3d pose regression using convolutional neural networks. In: Proceedings of the IEEE International Conference on Computer Vision Workshops. pp. 2174–2182 (2017)

- [21] Mishchuk, A., Mishkin, D., Radenovic, F., Matas, J.: Working hard to know your neighbor’s margins: Local descriptor learning loss. Advances in neural information processing systems 30 (2017)

- [22] Newell, A., Yang, K., Deng, J.: Stacked hourglass networks for human pose estimation. In: European conference on computer vision. pp. 483–499. Springer (2016)

- [23] Pan, S.J., Yang, Q.: A survey on transfer learning. IEEE Transactions on knowledge and data engineering 22(10), 1345–1359 (2009)

- [24] Park, T.H., D’Amico, S.: Robust multi-task learning and online refinement for spacecraft pose estimation across domain gap. arXiv preprint arXiv:2203.04275 (2022)

- [25] Park, T.H., Märtens, M., Lecuyer, G., Izzo, D., D’Amico, S.: SPEED+: Next-generation dataset for spacecraft pose estimation across domain gap. arXiv preprint arXiv:2110.03101 (2021)

- [26] Parkes, S., Martin, I., Dunstan, M., Matthews, D.: Planet surface simulation with pangu. In: Space ops 2004 conference. p. 389 (2004)

- [27] Peng, S., Liu, Y., Huang, Q., Zhou, X., Bao, H.: Pvnet: Pixel-wise voting network for 6dof pose estimation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 4561–4570 (2019)

- [28] Proença, P.F., Gao, Y.: Deep learning for spacecraft pose estimation from photorealistic rendering. In: 2020 IEEE International Conference on Robotics and Automation (ICRA). pp. 6007–6013. IEEE (2020)

- [29] Sattler, T., Zhou, Q., Pollefeys, M., Leal-Taixe, L.: Understanding the limitations of cnn-based absolute camera pose regression. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 3302–3312 (2019)

- [30] Sharma, S., Beierle, C., D’Amico, S.: Pose estimation for non-cooperative spacecraft rendezvous using convolutional neural networks. In: 2018 IEEE Aerospace Conference. pp. 1–12. IEEE (2018)

- [31] Shavit, Y., Ferens, R.: Introduction to camera pose estimation with deep learning. arXiv preprint arXiv:1907.05272 (2019)

- [32] Shi, J.F., Ulrich, S., Ruel, S.: Spacecraft pose estimation using principal component analysis and a monocular camera. In: AIAA Guidance, Navigation, and Control Conference. p. 1034 (2017)

- [33] Shrivastava, A., Pfister, T., Tuzel, O., Susskind, J., Wang, W., Webb, R.: Learning from simulated and unsupervised images through adversarial training. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 2107–2116 (2017)

- [34] Su, H., Qi, C.R., Li, Y., Guibas, L.J.: Render for cnn: Viewpoint estimation in images using cnns trained with rendered 3d model views. In: Proceedings of the IEEE international conference on computer vision. pp. 2686–2694 (2015)

- [35] Sun, B., Saenko, K.: Deep coral: Correlation alignment for deep domain adaptation. In: European conference on computer vision. pp. 443–450. Springer (2016)

- [36] Sun, J., Shen, Z., Wang, Y., Bao, H., Zhou, X.: Loftr: Detector-free local feature matching with transformers. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 8922–8931 (2021)

- [37] Sun, X., Xiao, B., Wei, F., Liang, S., Wei, Y.: Integral human pose regression. In: Proceedings of the European conference on computer vision (ECCV). pp. 529–545 (2018)

- [38] Umeyama, S.: Least-squares estimation of transformation parameters between two point patterns. IEEE Transactions on Pattern Analysis & Machine Intelligence 13(04), 376–380 (1991)

- [39] Wang, J., Sun, K., Cheng, T., Jiang, B., Deng, C., Zhao, Y., Liu, D., Mu, Y., Tan, M., Wang, X., et al.: Deep high-resolution representation learning for visual recognition. IEEE transactions on pattern analysis and machine intelligence 43(10), 3349–3364 (2020)

- [40] Zhang, P., Zhang, B., Zhang, T., Chen, D., Wang, Y., Wen, F.: Prototypical pseudo label denoising and target structure learning for domain adaptive semantic segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 12414–12424 (2021)

- [41] Zhang, Z., Sabuncu, M.: Generalized cross entropy loss for training deep neural networks with noisy labels. Advances in neural information processing systems 31 (2018)

- [42] Zhu, J.Y., Park, T., Isola, P., Efros, A.A.: Unpaired image-to-image translation using cycle-consistent adversarial networks. In: Proceedings of the IEEE international conference on computer vision. pp. 2223–2232 (2017)