Source-Aware Neural Speech Coding for Noisy Speech Compression

Abstract

This paper introduces a novel neural network-based speech coding system that can process noisy speech effectively. The proposed source-aware neural audio coding (SANAC) system harmonizes a deep autoencoder-based source separation model and a neural coding system, so that it can explicitly perform source separation and coding in the latent space. An added benefit of this system is that the codec can allocate a different amount of bits to the underlying sources, so that the more important source sounds better in the decoded signal. We target a new use case where the user on the receiver side cares about the quality of the non-speech components in the speech communication, while the speech source still carries the most important information. Both objective and subjective evaluation tests show that SANAC can recover the original noisy speech better than the baseline neural audio coding system, which is with no source-aware coding mechanism, and two conventional codecs.

Index Terms— Speech enhancement, speech coding, source separation

1 Introduction

Breakthroughs made in deep learning for the past decade have shown phenomenal performance improvements in various pattern recognition tasks, including media compression and coding. Seminal works are proposed in the lossy image compression domain, where autoencoders are a natural choice. With an autoencoder, the encoder part converts the input signal into a latent feature vector, followed by the decoder that recovers the original input [1, 2]. Compression is achieved when the number of bits used to represent the latent vector (or code) is smaller than that of the raw input signal. With increased computational complexity, the deep autoencoders have shown superior compression performance to traditional technology.

Neural speech coding is an emerging research area, too. Autoregressive models, such as WaveNet [3], have shown a transparent perceptual performance at a very low bitrate [4, 5], surpassing that of traditional coders. Another branch of neural speech coding systems takes a frame-by-frame approach, feeding time-domain waveform signals to an end-to-end autoencoding network. Kankanahalli proposes a simpler model that consists of fully convolutional layers to integrate dimension reduction, quantization, and entropy control tasks [6]. Cross-module residual learning (CMRL) inherits the convolutional pipeline and proposes a cascading structure, where multiple autoencoders are concatenated to work on the residual signal produced by the preceding ones [7]. In [8], CMRL is coupled with a trainable linear predictive coding (LPC) module as a pre-processor. It further improves the performance and lowered the model complexity down to 0.45 million parameters, eventually outperforming AMR-WB [9].

In this work, we widen the scope of speech coding applications by taking into account noisy speech as the input. Additional sound sources often accompany real-world speech. However, traditional speech codecs are mostly based on the speech production models [10, 9], thus lacking the ability to model the non-speech components mixed in the input signal. Efforts to address the problem have been partly reflected in the MPEG unified speech and audio coding (USAC) standard [11, 12]. USAC tackles speech signals in the mixture condition by switching between different tools defined for different kinds of signals, such as speech and music. However, the switching decision does not consider the mixed nature within the frame, which requires explicit source separation. Meanwhile, AMR-WB’s discontinuous transmission (DTX) mode also considers the mixed nature of input speech by deactivating the coding process for the non-speech periods [9]. Lombard et al. improved DTX by generating artificial comfort noise that smooths out the discontinuity [13]. However, for the frames where both speech and non-speech sources co-exist, it is difficult to effectively control the bitrate using DTX. Similar ideas have been used in transform coders for audio compression, where the dynamic bit allocation algorithm based on psychoacoustic models can create a spectral hole in low bitrate cases. Intelligent noise gap filling can alleviate the musical noise generated from this quantization process [14, 15], while it is to reduce the artifact generated from the coding algorithm, rather than to model the non-stationary noise source separately from the main source.

To that end, we propose source-aware neural audio coding (SANAC) to control the bit allocation to multiple sources differently. SANAC does not seek a speech-only reconstruction, e.g., by denoising the noisy input while coding it simultaneously [16]. Instead, we target the use case where the user still wants the code to convey the non-speech components to better understand the transmitter’s acoustic environment. We empirically show that the sources in the mixture can be assigned with unbalanced bitrates depending on their perceptual or applicational importance and entropy in the latent space, leading to a better objective and subjective quality.

2 Model Description

The proposed SANAC system harmonizes a source separation module into the neural coding system. Our model performs explicit source separation in the feature space to produce source-specific codes, which are subsequently quantized and decoded to recover the respective sources. The source-specific code vectors can be learned using a masking-based approaches as in TasNets [17, 18], while we propose to utilize the orthogonality assumption between the source-specific code vectors to drop the separator module in the TasNet architecture and reduce the encoder complexity. Soft-to-hard quantization [2] quantizes the real-valued source-specific codes. Bitrate control works on individual sources independently as well.

2.1 Orthogonal code vectors for separation

As a codec system, the model consists of an encoder that converts time-domain mixture frame into code vector : . To marry the source separation concept, we assume mask vectors that can decompose the code vector into components: . Note that is the probability of -th code value belonging to -th source. In addition, we further assume that this probabilistic code assignments to the sources are actually determined by one-hot vectors, so that the masking process assigns each code value to only one source, i.e.,

| (1) |

The TasNet models estimate similar masking vectors via a separate neural network module, which led to the state-of-the-art separation performance. In there, the estimated mask values are indeed somewhat drastically distributed to either near zero or one, making the masked code vectors nearly orthogonal from each other. However, the sigmoid-activated masks in the TasNet architecture and our probabilistic masks do not specifically assume a hard assignment.

From now on, we assume orthogonal code vectors per source as a result of hard masking, i.e., , where , -th source’s code vector defined by the Hadamard product between the mask and the mixture code.

The proposed orthogonality leads us to a meaningful structural innovation. Instead of estimating the mask vector for every input frame, we can use structured masking vectors that force the code values to be grouped into exclusive and consecutive subsets. For example, for a two-source case with , . Hence, we can safely discard the masked-out elements (the latter four elements), by defining its truncated version as . The concatenation of the truncated code vectors determines the final code vector: .

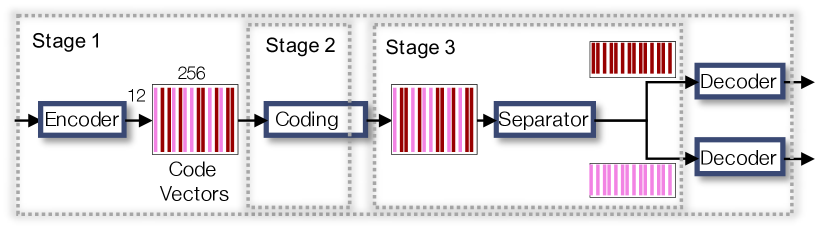

In practice, we implement the encoder as a 1-d convolutional neural network (CNN) whose output is a matrix, where is the number of output channels (see Figure 1, where , ). We collect the first channels of this feature map (dark red bars) as our codes for speech, i.e., corresponds to the vectorized version of the upper half of the feature map of size , or , where should be an integer multiple of . The other half is for the noise source. Since the decoders are learned to predict individual sources, this implicit masking process can still work for source separation.

2.2 Soft-to-hard quantization

Quantization is a mapping process that replaces a continuous variable into its closest discrete representative. Since it is not a differentiable process, incorporating it into a neural network requires careful consideration. Soft-to-hard quantization showed successful performance both in image and speech compression models [2, 6, 7]. The idea is to formulate this cluster assignment process as a softmax classification during the feedforward process, which finds the nearest one among total representatives for the given code vector as follows:

| (2) | ||||

where the algorithm first computes the Euclidean distance vector against all the representatives (i.e., the cluster means), whose negative value works like a similarity score for the softmax function. Using the softmax result, the probability vector of the cluster membership, we can construct the quantized code vector : during test time, simply choosing the closest one will do a proper quantization. However, since the operation is not differentiable, for training, we do a convex combination of the cluster centroids to represent the quantized code, as a differentiable surrogate of the hard assignment process. The discrepancy between training and testing is reduced by controlling the scaling hyperparameter , which makes the softmax probabilities more drastic once it is large enough (e.g., a one-hot vector in the extreme case). Note that it also learns the cluster centroids as a part of the learnable network parameters rather than employing a separate clustering process to define them.

In previous work the quantization has been on scalar variables, i.e., [6, 7, 8]. In this work, the soft-to-hard quantization performs vector quantization (VQ). We denote the CNN encoder output by , which consists of code blocks: . Then, each code vector for quantization is defined by the -th feature spanning over channels: , having as the VQ dimension in our case.

2.3 Source-wise entropy control

The theoretical lower bound of the bitrate, as a result of Huffman coding, can be defined by the entropy of the quantized codes. The frequency of the cluster means defines the entropy of the source-specific codes: , where denotes the frequency of -th mean for the -th source. Meanwhile, the entropy for the code of the mixture signal is smaller than or equal to the sum of the entropy of all sources: , where is the set of quantization vector centroids learned in a source-agnostic way, i.e., directly from the mixture signals. Therefore, in theory, SANAC cannot achieve a better coding gain than a codec that works directly on the mixture.

However, SANAC can still benefit from the source-wise coding, especially by exploiting the perceptual factors. Our main assumption in this work is that the perceptual importance differs from source-by-source, leading to a coding system that can assign different bitrates to different sources. For noisy speech, for example, we will try to assign more bits to the speech source. Consequently, although the user eventually listens to the recovered mixture of speech and noise (a) the perceptual quality of the speech component is relatively higher (b) the codec can achieve a better coding gain if the noise source’ statistical characteristics is robust to low bitrates.

Our argument is based on the codec’s ability to control the entropy of the source-specific codes. In SANAC, we adopt the entropy control mechanism proposed in [2], but by setting up a per-source loss between the target and the actual entropy values: . While this loss does not guarantee the exact bitrate during the test time, in practice, we observe that the actual bitrate is not significantly different from the target.

2.4 Decoding and the final loss

The source-specific truncated codes, after the quantization, , are fed to the decoder part of the network. The decoder function works similarly to Conv-TasNet [18] in that the decoder runs times to predict individual source reconstructions from sourse-specific feature maps as the input. However, SANAC’s decoding is different from Conv-TasNet’s as the decoder input is the quantized codes. In addition, our model cares about the quality of the recovered mixture, not only the separation quality.

Our training loss considers all these goals, consisting of the main mean squared error (MSE)-based reconstruction term and the entropy control terms. More specifically, for the noisy speech case ( for speech and for noise), the MSE loss is for the speech source reconstruction and the mixture reconstruction , while the noise source reconstruction is implied in there. We regularize the total entropy as well as the ratio between the two source-wise entropy values:

| (3) |

where and are the target total entropy and the target ratio, respectively.

3 Experiment

3.1 Dataset

500 and 50 utterances are randomly selected from training and test set of TIMIT corpus [19]. We generate 10 contaminated samples out of each clean utterance by adding 10 different non-stationary background sources, {bird singing, casino, cicadas, typing, chip eating, frogs, jungle, machine gun, motorcycle, ocean} used in [20]. Every contaminated speech waveform was segmented into frames of 512 samples (32ms), with overlap of 64 samples. We apply a Hann window of size 128 samples only to the overlapping regions. Since there are frames per second and each frame produces code vectors for VQ, for the entropy of a source-specific codebook , the bitrate is , e.g., 9.14kbps when and .

3.2 Training process

Adam optimizer with an initial learning rate 0.0001 trains the models [21]. Both SANAC and the baseline are trained in three stages. Every jump to next stage is triggered when the validation loss stops improving in 3 consecutive epochs. We stop the training updates after validation loss does not improve for 20 epochs.

-

•

Stage 1: For the first three epochs, the model trains the encoder to separate the input into the speech and background sources, that are represented by the two orthogonal code vectors. No quantization is involved in yet, but this stage better initializes the parameters for the quantization process. The encoder consists of a few bottleneck blocks that are commonly used in ResNet [22]. With the bottleneck structure, the input and output feature maps can be connected via an identity shortcut with less filters to learn in between. The encoder module also employs a 1-d convolution layer to downsample the feature map from 512 to 256, followed by another bottleneck block and a channel changing layer to yield two sets of code maps of each. The learned source-specific feature maps are fed directly to the channel changer with no quantization, followed by an upsampler and the final decoder. In terms of upsampling, we interlace two adjacent channels into one, doubling the number of features up to 512 while halving the number of channels as introduced in [23], and then adopted for neural speech coding [6, 7].

-

•

Stage 2: In this stage, the model starts to quantize the encoder output using the soft-to-hard VQ mechanism. The VQ is done with centroids. We set the scale of the softmax function , and increase it exponentially until it reaches 500 to gradually introduce more hardness to the softmax function. Meanwhile, the other modules in the network are also updated accordingly to absorb the quantization error. As the model stabilizes, we introduce entropy control terms into the loss function by setting up the regularization weights and . We set to be 1, 2, and 3, which correspond to three bitrates 9.14, 18.29, and 27.43kbps. The target ratio between speech and noise bitrates is trainable, which we set to be at the beginning.

-

•

Stage 3: Finally, the feature maps go through a few more ResNet blocks that strengthen the separation (the “Separator” module in Figure 1), followed by the decoder that runs twice for both sources. The “Separator” and “Decoder” consist of two bottleneck blocks with different input channels, 60 and 30, respectively. “Decoder” needs an additional channel changer.

-

•

The Baseline: Our baseline system shown in Fig. 2 is similar to the proposed architecture, except that it discards the first separation before coding, meaning that the codec system is not aware of the different sources. Hence, the baseline only generates one type of codes representing the mixture signal without any control of the entropy ratio in the loss function. We still use the two-headed decoder architecture to benefit from the two reconstruction loss terms.

| 0dB | Comparing systems | |||

|---|---|---|---|---|

| Bitrate | Baseline | Proposed | AMR-WB | Opus |

| 5dB | ||||

| Bitrates | kbps | kbps | kbps |

|---|---|---|---|

| Preference rate | |||

| Standard deviation |

3.3 Results and analysis

We first show the average MOS-LQO (PESQ) score for SANAC, baseline and two standard codecs (AMR-WB [9] and Opus [24]), at 3 different target bitrates in Table 1. We evaluate the reconstruction of the input noisy speech. For each decoded sample, both the uncompressed mixture input and its corresponding clean speech source are considered as the reference, yielding the left and right hand scores, respectively. Hence, the right hand score measures the speech enhancement performance, while the left hand score indicates the level of overall reconstruction quality. Since our neural codecs are not designed to degrade noise source during compression, the right hand scores are lower than those from standard codecs where the added noise is somewhat suppressed. In terms of the left hand score, neural codecs outperform those counterparts in most cases. It is known, however, that PESQ is not well defined for the perceptual evaluation between noisy speech signals, which to a certain degree explains the very similar left hand scores from the baseline and SANAC.

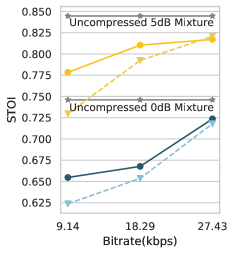

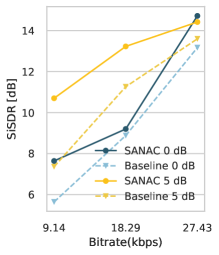

Additionally, the baseline and proposed SANAC are evaluated based on the scale-invariant signal-to-distortion ratio (SiSDR) [25] and short-time objective intelligibility (STOI) [26]. The clean speech is used as the reference when computing STOI. Similar comparison results are observed for both STOI (Fig.3(a)) and SiSDR (Fig.3(b)): overall, SANAC achieves higher scores in most cases. For STOI, we find that the performance gap becomes more prominent in the lower bitrates, thanks to the more speech-oriented setup we used for SANAC. With respect to SiSDR, SANAC shows better mixture reconstruction performance, too. The margin against the baseline is more noticeable when the SNR level of the input mixture is higher.

Finally, we conduct a subjective listening test with eight audio experts participated. The A/B tests are designed to compare our own baseline and SANAC; as a controlled experiment, where all other system aspects are fixed, the test focuses on the impact of the source-aware coding scheme. The test consists of three sessions with different bitrates (, , and kbps). With the uncompressed mixture signal as the reference, listeners are asked to designate the sample from 2 competing systems that sounds more similar to the reference. Results are presented in Table 2, where the preference rate denotes how likely an average listener prefers SANAC. We also report the standard deviation to measure the variation of the preference rate. In all cases, SANAC is favored by most listeners. As the bitrate gets lower, the listener tends to have a higher and more determined preference rate for SANAC over the baseline.

4 Conclusion

In this work, we proposed SANAC for source-aware neural speech coding. In the model, we harmonized a Conv-TasNet-like masking-based separation approach with an end-to-end neural speech coding network, so that the system can produce source-specific codes. SANAC showcased superior performance in both objective and subjective tests to the baseline source-agnostic model with a similar architecture. We believe that SANAC opens a new possibility of widening audio coding on mixture signals by being able to control individual sources differently. The sound examples and source code are available online111https://saige.sice.indiana.edu/research-projects/sanac.

References

- [1] L. Theis, W. Shi, A. Cunningham, and F. Huszar, “Lossy image compression with compressive autoencoders,” 2017.

- [2] E. Agustsson, F. Mentzer, M. Tschannen, L. Cavigelli, R. Timofte, L. Benini, and L. V. Gool, “Soft-to-hard vector quantization for end-to-end learning compressible representations,” in Advances in Neural Information Processing Systems (NIPS), 2017, pp. 1141–1151.

- [3] A. van den Oord, S. Dieleman, H. Zen, K. Simonyan, O. Vinyals, A. Graves, N. Kalchbrenner, A. Senior, and K. Kavukcuoglu, “Wavenet: A generative model for raw audio,” arXiv preprint arXiv:1609.03499, 2016.

- [4] W. B. Kleijn, F. S. C. Lim, A. Luebs, J. Skoglund, F. Stimberg, Q. Wang, and T. C. Walters, “WaveNet based low rate speech coding,” in Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2018, pp. 676–680.

- [5] J.-M. Valin and J. Skoglund, “A real-time wideband neural vocoder at 1.6 kb/s using LPCNet,” in Proceedings of the Annual Conference of the International Speech Communication Association (Interspeech), 2019.

- [6] S. Kankanahalli, “End-to-end optimized speech coding with deep neural networks,” in Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2018.

- [7] K. Zhen, J. Sung, M. S. Lee, S. Beack, and M. Kim, “Cascaded cross-module residual learning towards lightweight end-to-end speech coding,” in Proceedings of the Annual Conference of the International Speech Communication Association (Interspeech), 2019.

- [8] K. Zhen, M. S. Lee, J. Sung, S. Beack, and M Kim, “Efficient and scalable neural residual waveform coding with collaborative quantization,” in Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2020.

- [9] B. Bessette, R. Salami, R. Lefebvre, M. Jelinek, J. Rotola-Pukkila, J. Vainio, H. Mikkola, and K. Jarvinen, “The adaptive multirate wideband speech codec (AMR-WB),” IEEE Transactions on Speech and Audio Processing, vol. 10, no. 8, pp. 620–636, 2002.

- [10] M. Schroeder and B. Atal, “Code-excited linear prediction (CELP): High-quality speech at very low bit rates,” in Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 1985, vol. 10, pp. 937–940.

- [11] ISO/IEC DIS 23003-3, “Information technology – mpeg audio technologies – part 3: Unified speech and audio coding,” 2011.

- [12] ISO/IEC 14496-3:2009/PDAM 3, “Transport of unified speech and audio coding (USAC),” 2011.

- [13] A. Lombard, S. Wilde, E. Ravelli, S. Döhla, G. Fuchs, and M. Dietz, “Frequency-domain comfort noise generation for discontinuous transmission in evs,” in Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2015, pp. 5893–5897.

- [14] J. Herre, J. Hilpert, A. Kuntz, and J. Plogsties, “Mpeg-h audio–the new standard for universal spatial/3D audio coding,” Journal of Audio Engineering Society, vol. 62, no. 12, pp. 821–830, 2015.

- [15] S. Disch, A. Niedermeier, C. R. Helmrich, C. Neukam, K. Schmidt, R. Geiger, J. Lecomte, F¿ Ghido, F. Nagel, and B. Edler, “Intelligent gap filling in perceptual transform coding of audio,” in Audio Engineering Society Convention 141, Sep. 2016.

- [16] F. S. C. Lim, W. Bastiaan Kleijn, M. Chinen, and J. Skoglund, “Robust low rate speech coding based on cloned networks and wavenet,” in Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2020, pp. 6769–6773.

- [17] Y. Luo and N. Mesgarani, “Tasnet: time-domain audio separation network for real-time, single-channel speech separation,” in Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2018.

- [18] Y. Luo and N. Mesgarani, “Conv-TasNet: Surpassing ideal time–frequency magnitude masking for speech separation,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 27, no. 8, pp. 1256–1266, 2019.

- [19] J. S. Garofolo, L. F. Lamel, W. M. Fisher, J. G. Fiscus, D. S. Pallett, N. L. Dahlgren, and V. Zue, “TIMIT acoustic-phonetic continuous speech corpus,” Linguistic Data Consortium, Philadelphia, 1993.

- [20] Z. Duan, G. J. Mysore, and P. Smaragdis, “Online PLCA for real-time semi-supervised source separation,” in Proceedings of the International Conference on Latent Variable Analysis and Signal Separation (LVA/ICA), 2012, pp. 34–41.

- [21] Diederik P. Kingma and Jimmy Ba, “Adam: A method for stochastic optimization,” 2014.

- [22] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), 2016, pp. 770–778.

- [23] W. Shi, J. Caballero, F. Huszár, J. Totz, A. P. Aitken, R. Bishop, D. Rueckert, and Z. Wang, “Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network,” in Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), 2016, pp. 1874–1883.

- [24] J. M. Valin, K. Vos, and T. Terriberry, “Definition of the opus audio codec,” IETF, September, 2012.

- [25] J. Le Roux, S. Wisdom, H. Erdogan, and J. R. Hershey, “SDR - half-baked or well done?,” arXiv preprint arXiv:1811.02508, 2018.

- [26] C. H. Taal, R. C. Hendriks, R. Heusdens, and J. Jensen, “A short-time objective intelligibility measure for time-frequency weighted noisy speech,” in Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2010.