∎

22email: [email protected] 33institutetext: Arda Senocak 44institutetext: School of Electrical Engineering, KAIST, Daejeon, Republic of Korea

44email: [email protected] 55institutetext: Hyunwoo Ha 66institutetext: Department of Electrical Engineering, POSTECH, Pohang, Republic of Korea

66email: [email protected] 77institutetext: Tae-Hyun Oh 88institutetext: Department of Electrical Engineering and Graduate School of Artificial Intelligence, POSTECH, Pohang, Republic of Korea

Institute for Convergence Research and Education in Advanced Technology, Yonsei University, Seoul, Republic of Korea

88email: [email protected]

Sound2Vision: Generating Diverse Visuals from Audio through Cross-Modal Latent Alignment

Abstract

How does audio describe the world around us? In this work, we propose a method for generating images of visual scenes from diverse in-the-wild sounds. This cross-modal generation task is challenging due to the significant information gap between auditory and visual signals. We address this challenge by designing a model that aligns audio-visual modalities by enriching audio features with visual information and translating them into the visual latent space. These features are then fed into the pre-trained image generator to produce images. To enhance image quality, we use sound source localization to select audio-visual pairs with strong cross-modal correlations. Our method achieves substantially better results on the VEGAS and VGGSound datasets compared to previous work and demonstrates control over the generation process through simple manipulations to the input waveform or latent space. Furthermore, we analyze the geometric properties of the learned embedding space and demonstrate that our learning approach effectively aligns audio-visual signals for cross-modal generation. Based on this analysis, we show that our method is agnostic to specific design choices, showing its generalizability by integrating various model architectures and different types of audio-visual data.

Keywords:

Audio-visual learning Multimodal Learning Cross-modal Translation Cross-modal Transferability Generative Model1 Introduction

Humans possess the unique ability to link sounds to specific visual scenes. For instance, the sounds of birds chirping and branches rustling evoke images of a dense forest, while the sound of water flowing brings to mind a river. These associations between sound and visual scenes also enable humans to infer information, such as the size and distance of sound sources, as well as the presence of objects that are not immediately visible.

Recent efforts have been focused on developing multimodal learning systems capable of making such cross-modal predictions by generating visual content based on audio inputs (Lee et al, 2022; Li et al, 2022; Wan et al, 2019; Shim et al, 2021; Chen et al, 2017; Hao et al, 2018; Fanzeres and Nadeu, 2021). Nonetheless, these existing approaches face several challenges, including their restriction to simple datasets where there is a strong correlation between images and sounds (Wan et al, 2019; Shim et al, 2021), dependence on vision-and-language supervision (Lee et al, 2022), and limitations to only manipulating the style of existing images rather than generating new ones (Li et al, 2022).

Overcoming these limitations involves addressing several challenges. Firstly, there is a considerable gap between the modalities of vision and sound, as sound often lacks crucial visual details, such as the shape, color, or spatial positioning of objects. Secondly, the correlation between these modalities can often be inconsistent, e.g., highly contingent or off-sync in timing. Cows, for example, only rarely moo, so associating images of cows with “moo” sounds requires capturing training examples with the rare moments when on-screen cows vocalize.

In this work, we introduce Sound2Vision, a novel sound-to-image generation model and training method that overcomes existing limitations and can be trained using diverse, unlabeled in-the-wild videos. Initially, we utilize a self-supervised pre-trained image encoder and develop an image generation model that synthesizes images based on the visual features from the encoder. Next, we design an audio encoder that converts input sounds into their corresponding visual features, by training to align the audio with the visual domain. This enables us to synthesize diverse images from sound by converting audio into visual features and then generating an image. To effectively learn such cross-modal generation from complex real-world videos, we employ a sound source localization technique (Senocak et al, 2022) to select moments with strong cross-modal correlations in the videos and use those moments for training the model.

Our proposed model, Sound2Vision, is evaluated using the VEGAS (Zhou et al, 2018) and VGGSound (Chen et al, 2020a) datasets. Sound2Vision is capable of synthesizing diverse visual scenes from a wide range of audio inputs, compared to existing models. Additionally, it offers an intuitive method for controlling the image generation process through manipulations at both the input and latent space levels, such as mixing multiple input waveforms, modifying their volumes, and interpolating the features in the latent space, as shown in Fig. 1.

We further analyze the geometric properties of the learned audio-visual aligned space to understand the effectiveness of our training approach. Through an analysis of the modality gap in multi-modal contrastive representation, we empirically demonstrate that our method achieves cross-modal transferability (Zhang et al, 2023), which is the core motivation of our proposed approach, i.e., enabling cross-modal generation from audio to sound. Building on this analysis, we show that our approach is not dependent on specific design choices and has broad applicability. We demonstrate that our training method can adapt to various architectural choices, ranging from GANs to the Latent Diffusion Model (LDM) (Rombach et al, 2022). Additionally, it can be applied to different types of audio-visual datasets, including generic audio-visual data as well as speech and face data (Zhu et al, 2022).

Our main contributions are summarized as follows:

-

•

Proposing a sound-to-image generation method that can generate visually rich images from diverse categories of in-the-wild audio in a self-supervised way.

-

•

Demonstrating that the samples generated by our model can be controlled by intuitive manipulations in the waveform space in addition to latent space.

-

•

Showing the effectiveness of training sound-to-image generation using highly correlated audio-visual pairs.

-

•

Providing an analysis of the geometric properties of the learned space that enables cross-modal generation.

-

•

Demonstrating the generalizability of the training method by successfully incorporating different model architectures and types of audio-visual data.

2 Related work

Audio-visual generation

The field of audio-visual cross-modal generation is explored through two main directions: vision-to-sound generation and sound-to-vision generation. The former, vision-to-sound, has received significant attention, particularly in creating music from images or videos of instruments playing (Su et al, 2020; Chen et al, 2017; Hao et al, 2018), as well as generating more general sounds, such as impact noises from silent videos (Owens et al, 2016a) and open-domain sounds from images and videos (Iashin and Rahtu, 2021; Chen et al, 2020b; Zhou et al, 2018). Recent work (Luo et al, 2024; Xing et al, 2024; Zhang et al, 2024) has also shown success in generating temporally synchronized audio from generic open-domain silent videos.

Conversely, early works in sound-to-vision primarily focused on specific audio categories, such as musical instruments (Chen et al, 2017; Hao et al, 2018; Narasimhan et al, 2022; Chatterjee and Cherian, 2020), bird sounds (Shim et al, 2021), and human speech (Oh et al, 2019). More recent efforts by Wan et al. (Wan et al, 2019) and Fanzeres et al. (Fanzeres and Nadeu, 2021) have expanded the scope, aiming to generate images from audio, including 9 categories from SoundNet (Aytar et al, 2016) and 5 categories from the VEGAS (Zhou et al, 2018) datasets, respectively. Building on these foundations, Sound2Scene (Sung-Bin et al, 2023) has made substantial progress in addressing sound-to-image generation problem with enhanced audio-visual latent alignment. They show the model’s capabilities in handling more categories, surpassing prior art by generating more realistic images, and demonstrating control over the generation process. Subsequent works (Yariv et al, 2023; Qin et al, 2023) have utilized the generative capabilities of the Latent Diffusion Model (Rombach et al, 2022) for the sound-to-image generation task.

This work extends Sound2Scene (Sung-Bin et al, 2023) by providing further analysis on the multimodal gap of aligned audio-visual latent space and conducting additional experiments to demonstrate the proposed method’s generalizability in terms of architectural design and training dataset type.

Audio-driven image manipulation

Recent work has demonstrated the ability to edit a reference image using input sound, rather than directly generating a new one. Lee et al. (Lee et al, 2022) adapt a text-based image manipulation model (Patashnik et al, 2021), extending its embedding space to include audio-visual modalities alongside text. Similarly, Li et al. (Li et al, 2022) employ conditional generative adversarial networks (GANs) (Goodfellow et al, 2014) to manipulate the visual style of an image to match specific input sounds, showing that these modifications can be controlled by varying the sound’s volume or by blending multiple sounds. Unlike these previous works, our approach focuses on generating images based on sound inputs, with the editing capability emerging as a byproduct of our method.

Cross-modal generation

Translating one modality into another, i.e., cross-modal generation, remains an interesting yet open research challenge. Various tasks across diverse modalities have been explored, including text-to-image/video (Ramesh et al, 2021, 2022; Patashnik et al, 2021; Ding et al, 2021; Singer et al, 2022; Ho et al, 2022; Villegas et al, 2022; Rombach et al, 2022), touch-to-image (Yang et al, 2023, 2024), speech-to-motion (Ginosar et al, 2019; Sung-Bin et al, 2024a, b), and image/audio-to-caption (Kim et al, 2023; Mokady et al, 2021; Alayrac et al, 2022), among others. To bridge the gap between heterogeneous modalities in cross-modal generations, several studies (Oh et al, 2019; Poole et al, 2022) have leveraged existing pre-trained models or extended the pre-trained CLIP (Radford et al, 2021) embedding space, which is anchored in the text-visual modality, to meet their specific requirements (Patashnik et al, 2021; Lee et al, 2022; Ramesh et al, 2022; Youwang et al, 2022). In this context, our work aims to generate images from sound by leveraging only freely acquired audio-visual signals from videos.

Audio-visual learning

The natural co-occurrence of audio and visual cues is frequently used as a self-supervision signal to learn the associations between the two modalities, thereby enhancing representation learning. These learned representations are then utilized across various applications including cross-modal retrieval (Arandjelovic and Zisserman, 2018; Owens et al, 2018; Senocak et al, 2024), video recognition (Chen et al, 2021b; Morgado et al, 2021b), and sound source localization (Senocak et al, 2022, 2018, 2019; Chen et al, 2021a; Park et al, 2023, 2024; Senocak et al, 2023b). One approach to constructing an audio-visual embedding space involves jointly training separate neural networks for each modality to determine whether corresponding frames and audio match (Arandjelovic and Zisserman, 2017; Owens and Efros, 2018). Recent efforts have incorporated clustering (Hu et al, 2019; Alwassel et al, 2020) or contrastive learning (Morgado et al, 2021a, b; Akbari et al, 2021) to learn this joint audio-visual embedding space more effectively. While many of the aforementioned methods develop audio-visual representations jointly from scratch, another stream of research builds a joint embedding space by leveraging pre-existing expert models. This knowledge transfer may occur from audio to visual representations (Owens et al, 2016b), from visual to audio representations (Aytar et al, 2016; Gan et al, 2019), or be distilled from both audio-visual representations to video-specific representations (Chen et al, 2021b). Our research aligns with this latter approach, where the visual expert model is initially trained to construct an anchored space. This visual expert model is used to distill detailed visual information from extensive Internet videos into the audio modality.

3 Method

In this section, we provide a concise overview of our proposed method for the sound-to-image generation task, followed by details on the training method. We then discuss the network architecture of our proposed model. Finally, we introduce a novel method for constructing highly correlated audio-visual data pairs from in-the-wild videos for this task.

Overview

Our objective is to develop a method for translating input sounds into visual scenes. Existing methods (Wan et al, 2019; Chen et al, 2017; Fanzeres and Nadeu, 2021; Hao et al, 2018) typically train generative models to synthesize images directly from raw audio or processed audio features without enforcing audio-visual alignment. However, these approaches face significant challenges in producing high-quality, recognizable images due to the substantial gap between modalities and the inherent complexity of visual scenes.

To tackle these issues, we approach this task by breaking it down into sub-problems. The overall framework of our proposed model, Sound2Vision, is shown in Fig. 2. This framework consists of three main components: an audio encoder, an image encoder, and an image generator. Initially, we separately pre-train a strong image encoder and an image generator conditioned by the embeddings of the image encoder with a large-scale image-only dataset. Given the inherent correlation between co-ocurring audio-visual signals, we leverage this natural alignment to infuse the audio features with rich visual information extracted by the image encoder. This process enables the construction of an aligned audio-visual latent space, trained through self-supervised learning on in-the-wild videos. This alignment gives cross-modal transferability (Zhang et al, 2023). Consequently, the enriched audio features from this aligned latent space are fed into the image generator, allowing it to produce visual scenes that accurately reflect the given sounds.

3.1 Learning to generate images from sound

Given the audio and image data pairs , where represents a video frame and its corresponding audio, the learning objective of Sound2Vision is to train the audio encoder to extract audio features that align with anchored visual features . Specifically, the unlabeled data pairs are fed into the audio encoder and the image encoder to respectively extract audio features and visual features , where . Since the image encoder is well pre-trained on an image-only dataset, the visual feature from the image encoder serves as the self-supervision signal for the audio encoder. This facilitates the alignment of the audio feature with the visual feature through feature-based knowledge distillation (Hinton et al, 2015; Gou et al, 2021). These feature alignments across modalities construct the shared audio-visual embedding space on which the image generator G(·) is separately trained compatibly.

To align features from heterogeneous modalities, a metric learning approach is commonly employed. This approach assumes that features are aligned if they are close to each other under some distance metric. One straightforward method is to minimize the distance to align the features of and . However, we discover that relying solely on loss can capture the relationship between two different modalities within each pair without considering unpaired samples. This limitation may lead to constructing an aligned space that is not sufficiently rich, resulting in the generation of lower-quality images. Therefore, we utilize InfoNCE (Oord et al, 2018), a type of contrastive learning that has proven effective in learning audio-visual representation (Afouras et al, 2020; Chen et al, 2021a; Senocak et al, 2023a; Chen et al, 2021b; Wu et al, 2021):

| (1) |

where and denotes arbitrary features with the same dimension, and . By applying this loss, we aim to maximize feature similarity between an image and its corresponding audio segment (positive) while minimizing similarity with randomly selected, unrelated audio samples (negatives). More specifically, for the -th visual and audio feature pair, we define our audio feature-centric loss as , where and represent the unit-norm features. To make a symmetric learning objective, we also compute the visual feature-centric loss term as . The overall learning objective is to minimize the sum of loss terms across all audio-visual pairs in mini-batch :

| (2) |

After training the audio encoder using Eq. (2), our model successfully learns to extract the audio features that are visually enriched and aligned with corresponding visual features. Thus, in the inference stage, we can directly feed the learned audio feature along with a noise vector to the frozen image generator, , to generate a visual scene from the input sound. This is possible because our training objective enables cross-modal transferability, allowing audio features to replace image features.

3.2 Architecture details

All the following modules are trained separately according to the proposed steps. The modules presented here are the default design choices. However, our generic pipeline is versatile and can be applied to different architectural choices. This will be discussed in Sec. 6.1.

Image encoder

Image generator

We utilize BigGAN (Brock et al, 2018) architecture to generate images with diverse visual scene contents. We adapt the input structure based on modifications from ICGAN (Casanova et al, 2021) to make BigGAN as a conditional generator. This setup allows us to train the generator to produce photo-realistic images at a resolution of using the conditional visual features obtained from the image encoder. The generator is trained on ImageNet in a self-supervised manner without labels, while the image encoder is pre-trained and remains fixed.

Audio encoder

We use ResNet-18, which takes the audio spectrogram as input. Following the final convolutional layer, adaptive average pooling aggregates the temporal-frequency information into a single vector. This pooled feature is then fed into a linear layer to produce the audio feature . The audio encoder is trained using either the VGGSound (Chen et al, 2020a) or VEGAS (Zhou et al, 2018) datasets, applying the loss defined in Eq. (2), according to each target benchmark.

3.3 Audio-Visual pair selection module

Learning the relationship between images and sounds accurately requires highly correlated data pairs from both modalities. Identifying the most informative frame or segment in a video for audio-visual correspondence is not a straightforward task. One simple way to collect audio-visual pairs is to extract the mid-frame of a video along with the corresponding audio segment (Lee et al, 2022; Chen et al, 2021a). However, the mid-frame does not always guarantee the presence of informative audio-visual signals (Senocak et al, 2022), as in Fig. 3. To this end, we leverage a pre-trained sound source localization model (Senocak et al, 2022) to extract highly correlated audio and visual pairs. The backbone networks of (Senocak et al, 2022) enable us to have fine-grained audio-visual features of temporal time steps, and , respectively. The correlation scores are computed for each time step as , then sorted by top-k(). Using this method of selecting correlated pairs, we annotate the top-1 moment frames for each video in the training splits and use them for training. Figure 3 demonstrates a comparison between selected frames and mid-frames. Although selected automatically, they consistently contain distinct or salient objects that accurately correspond to the audio.

4 Experiment

We evaluate our proposed Sound2Vision through a series of qualitative and quantitative assessments. First, we provide qualitative analysis of the images generated from various categories of sound. Then, we quantitatively assess the quality, diversity, and correspondence between the audio and the generated images. Importantly, we do not use any class labels during training or inference.

4.1 Experiment setup

Datasets

We utilize the VGGSound (Chen et al, 2020a) and VEGAS (Zhou et al, 2018) datasets for training and testing our method. VGGSound contains approximately 200K videos, from which we chose 50 classes and follow the provided training and testing splits. VEGAS, on the other hand, includes about 2.8K videos with 10 classes. To maintain data balance, we use 800 videos for training and 50 videos for testing from each class. We use the test splits from both datasets for subsequent qualitative and quantitative evaluations.

Evaluation metrics

We use both objective and subjective metrics to evaluate our method.

-

•

CLIP (Radford et al, 2021) retrieval : Inspired by the CLIP R-Precision metric (Park et al, 2021), we evaluate the generated images by conducting an image-to-text retrieval test, measuring recall at (). We input the generated images along with audio category names (text) into CLIP, then measure the similarity between the image and text features to rank the text descriptions for each query image.

-

•

Fréchet Inception Distance (FID) (Heusel et al, 2017) and Inception Score (IS) (Salimans et al, 2016) : We use FID to measure the Fréchet distance between features obtained from real and synthesized images using a pre-trained Inception-V3 model (Szegedy et al, 2016). This same model is also used IS, computing the KL-divergence between the conditional and marginal class distributions.

-

•

Human evaluations : We recruit 70 participants to analyze the performance of our method from a human perception perspective. Participants are asked to compare our model with an image-conditioned generation model (Casanova et al, 2021) and to assess whether the images generated by our model accurately correspond to the input sounds. Further details are available in Sec. 4.3.

Implementation details

The audio encoder takes a -dimensional log-spectrogram, which is converted from 10 seconds of audio, to extract audio features. Simultaneously, the video frame is resized to and fed into the image encoder to extract visual features. We train our model on a single GeForce RTX 3090 for 50 epochs with early stopping. The Adam optimizer is used, with a batch size set at 64, a learning rate of , and a weight decay of .

,

,  ,

,  , and

, and  denote wind blowing, elk bugling, skiing, and human talking sounds, respectively.

denote wind blowing, elk bugling, skiing, and human talking sounds, respectively.4.2 Qualitative analysis

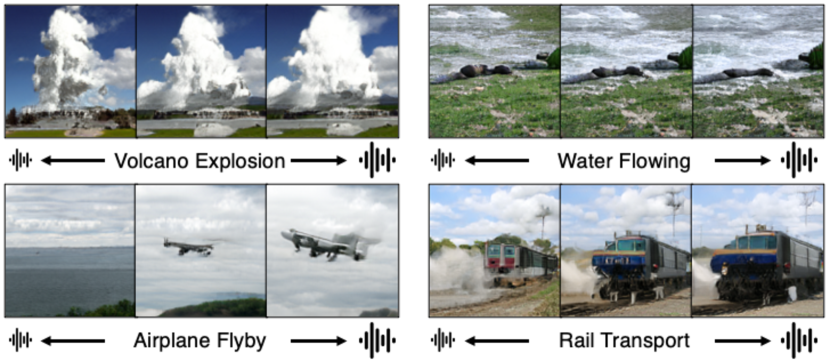

Image generation from sound

Sound2Vision generates visually plausible images from a single input waveform, as shown in Fig.1 and 4. Unlike previous work (Fanzeres and Nadeu, 2021; Wan et al, 2019), it is not limited to a small number of categories but instead handles a diverse range, including animals, vehicles, sceneries, etc. We highlight that our model can even distinguish subtle differences in similar sound categories, such as “Waterfall Burbling” and “Sea Waves” sounds, and produces accurate and distinct images.

Audio event visualization

After presenting the results of Sound2Vision in generating a wide variety of images, we analyze which parts of the audio the model uses to generate images and whether it is attending to the true “semantic” context in the audio. With the trained Sound2Vision, we visualize a coarse localization map on the spectrogram, indicating areas the model highlights for image generation. Using the same technique of Gradient-weighted Class Activation Mapping (Grad-CAM) (Selvaraju et al, 2017), we compute the gradient of the generated image with respect to the feature map activation of the audio encoder’s last convolutional layer to produce the coarse localization map (Fig. 5). As shown, each highlighted moment is visualized in a heatmap, with the most emphasized regions transitioning from blue to red. For example, (a) shows that the model focuses on a certain region of the sound that is dominant throughout the duration, e.g., elk bulging, for generating an image. On the other hand, (b) demonstrates that the model focuses on both regions of skiing sound (wind blowing and footsteps on snow) and human sound, to produce a composite image.

4.3 Quantitative analysis

| Method | Encoder (/) | Generator (/) | VGGSound (50 classes) | VEGAS | |||||

|---|---|---|---|---|---|---|---|---|---|

| R@1 | R@5 | FID () | IS () | R@1 | R@5 | ||||

| (A) | ICGAN (Casanova et al, 2021) | 30.06 | 62.59 | 16.11 | 12.61 | 46.60 | 82.48 | ||

| (B) | Ours | 40.71 | 77.36 | 17.97 | 19.46 | 57.44 | 84.08 | ||

| (C) | Retrieval | 51.28 | 80.37 | - | - | 67.20 | 85.00 | ||

| (D) | Upper bound | - | - | 57.82 | 85.79 | - | - | 73.60 | 88.2 |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/3b59061e-5fb2-479b-9653-13cb8efef854/x14.png) |

(a) Comparison to baselines (b) User study

Comparison with baselines

We conduct a series of experiments to validate our proposed method on VGGSound and VEGAS, compared with several closely related baselines, as detailed in Table 1 (a). First, our model (B) is compared with an image-to-image generation model identical to ICGAN(Casanova et al, 2021) (A). Although (B) shares the same image generator as (A), it utilizes a different encoder type and input modality. Results show that (B) performs favorably against (A) across all metrics. We attribute this to the noisy nature of video datasets, which may disturb (A) from extracting informative visual features for image generation, whereas audio input proves to be more robust to these disturbances, thus resulting in more plausible images. Additionally, we evaluate our model (B) against a retrieval system (C) that serves as a strong baseline. The retrieval system uses the same audio encoder as (B), while the image generator is replaced with the same memory-sized database of images from the training data. This system finds the closest image from the database, given the input audio feature. Compared to (D)—an upper bound where video frames are directly used— (C) shows a significantly smaller performance gap with (D) compared to the gap between (B) and (D), validating the effectiveness of our audio encoder in mapping audio to the shared embedding space. While (C) surpasses (B) in for both datasets, (B) performs comparably with (C) in , demonstrating that our method approaches the performance of this strong baseline.

User study

The user study results are summarized in Table 1 (b) across two experiments: (i) a comparison with ICGAN, and (ii) an evaluation of the plausibility of image generation from given audio. Each experiment consists of 20 questions where participants are presented with audio and multiple images. In (i), participants select images they believe best represent the audio, with two images generated by both our model and ICGAN, and the remaining images randomly chosen from either method. We measure preference by comparing the recall probability of ICGAN with our model. In (ii), all four provided images are generated by our model, but only one corresponds to the given audio. Participants then select the image they find most related to input audio. In (i), our model is preferred. Additionally, (ii) demonstrates that our method achieves a precision rate of 83.8%, supporting that our model generates images highly correlated with the provided audio.

| Loss | Duration | VGGSound (50 classes) | |||||

|---|---|---|---|---|---|---|---|

| R@1 | R@5 | FID () | IS () | ||||

| (A) | ✓ | 10 sec. | 18.21 | 46.69 | 24.05 | 9.97 | |

| (B) | ✓ | 10 sec. | 31.63 | 66.04 | 27.05 | 12.92 | |

| (C) | 10 sec. | 37.20 | 73.13 | 21.20 | 17.51 | ||

| (D) | ✓ | 1 sec. | 35.85 | 72.02 | 19.05 | 17.87 | |

| (E) | 5 sec. | 38.24 | 75.76 | 20.43 | 18.81 | ||

| (F) | ✓ | 10 sec. | 40.71 | 77.36 | 17.97 | 19.46 | |

Ablation study

The ablation studies conducted to assess various design choices are summarized in Table 2. We evaluate the performance of using different distillation losses: a straightforward loss between the visual and audio features, and an InfoNCE loss (Oord et al, 2018) with cosine similarity distance measurement, , rather than using distance as in Eq. (2). The results of experiments (A), (B), and (F) indicate that our chosen loss (F) leads the model to produce more diverse and improved image quality. Furthermore, the comparison between (C) and (F) demonstrates that our audio-visual pair selection method, as discussed in Sec. 3.3, contributes to performance improvements. Lastly, we examine the effect of audio duration by training models with 1, 5, and 10 seconds of audio while keeping other experimental settings the same. The results from (D), (E), and (F) show that longer audio durations consistently enhance performance, likely because longer ones may capture more comprehensive semantics, whereas shorter durations may miss critical details.

4.4 Multimodal gap analysis

The key motivation of our work is to enable cross-modal transferability, allowing our audio embeddings to be used directly with visually pre-trained image generators. Our contrastive learning-based training objective facilitates cross-modal generation from sound to image, even though contrastive learning is known to result in a multimodal gap in the shared space (Liang et al, 2022). We analyze the properties of this multimodal gap that enable cross-modal generation and discuss how closing the gap could further enhance model performance.

Cross-modal transferability

As discussed in (Zhang et al, 2023), the cross-modal transferability is a phenomenon that allows the learned shared embedding space to make different modalities interchangeable for cross-modal tasks. According to Zhang et al., this intriguing phenomenon is enabled by the unique geometry of the modality gap:

-

•

The modality gap approximates a constant vector. This is verified by computing distributions over (magnitude), where is the modality gap between the paired visual and audio features.

-

•

The modality gap is orthogonal to the span of the features, and features have zero mean in the subspace orthogonal to the modality gap. We verify this by computing distributions over (orthogonality) and (center), where and denoting -th dimension of each feature.

As demonstrated in Fig. 6 (a), we find that optimizing InfoNCE loss (Oord et al, 2018) with cosine similarity distance measurement, denoted as , results in a constant magnitude of the modality gap, while the orthogonality and centering values are near zero. This result supports the idea that the objective of our model preserves geometric properties that facilitate cross-modal transferability.

Closing the modality gap

Although objective has proven to be effective in learning aligned audio-visual features, we further explore how closing the multimodal gap affects sound-to-image generation performance. We compare different configurations of the loss function, from to our final objective, . Figure 6 (b) illustrates the geometry of the modality gap learned from . The magnitude of the multimodal gap is significantly reduced compared to that using , while other properties, such as orthogonality and centering, remain close to zero.

Building on this, we analyze the relationship between the modality gap and sound-to-image generation performance. Figure 7 shows the t-SNE (Van der Maaten and Hinton, 2008) visualization of audio and visual features learned by different loss functions. While the features in Figure 7 (a), learned with , exhibit a noticeable gap in the latent space, the features in Figure 7 (b), learned with , show less of a gap, with the two modalities overlapping. We observe significant improvements in overall metrics as we reduce the modality gap between audio-visual features, including quantitative metrics and the multimodal alignment measurement introduced in (Goel et al, 2022). This analysis suggests that learning to closely align audio features with visual features, particularly by reducing the gap between them, is essential for cross-modal generative tasks to produce diverse and visually plausible images.

(a) (b)

Method

R@1

FID ()

IS ()

Magnitude ()

Alignment ()

(a)

31.6

27.0

12.9

0.93

0.51

(b)

40.7

17.9

19.4

0.68

0.71

5 Controllability of the model

Sound2Vision captures the natural correspondence between audio-visual signals through an aligned shared embedding space. Thus, we intuitively ask whether manipulations to the input can lead to corresponding changes in the generated images. Interestingly, we observe that our model supports controllable outputs through straightforward manipulations, either in the waveform space or in the learned latent space, even without a specific learning objective for such control. This opens up interesting experiments, which we explore in the following.

5.1 Waveform manipulation for image generation

Changing the volume

Humans are capable of estimating the rough distance or size of an object based on the volume of its associated sound. To verify whether our model also has a similar ability to understand volume differences, we experiment by both reducing and increasing the volume of the reference audio. Each modified audio waveform input is fed into our model with the same noise vector. As shown in Fig. 8, the objects in the generated images appear larger as the volume increases. Notably, in the “Water Flowing” example, volume adjustments result in images that depict varying strengths of water flow, while in the “Rail Transport” example, they illustrate a train appearing progressively closer in the scene. These observations indicate our model’s ability to not only recognize class-specific features but also understand the relationship between audio volume and visual changes. This suggests that visual supervision, rather than class labels, enables the model to capture such dynamic and meaningful audio-visual relationships.

Mixing waveforms

We explore whether our model can reflect the presence of multiple sounds in a single generated image. To test this, we combine two distinct waveforms into one and input the mixed waveform into our model. As illustrated in Fig. 9, our model successfully generates images that capture the composite audio semantics. For example, mixing the “Skiing” sound with others results in images where elements such as a railroad or a bird emerge within a snowy scene. Similarly, when the “Hail” sound is mixed, the generated images show both a train and a bird appearing in a misty scene. The task of detecting multiple distinct sounds from a single combined audio input, known as audio source separation (Gao and Grauman, 2019), and visually representing them accurately within a single context is not trivial. Nevertheless, our findings indicate that our model is capable of achieving this to a certain extent.

Mixing waveforms and changing the volume

In this experiment, we manipulate the input waveform by combining multiple waveforms and simultaneously adjusting the volume of each waveform. In Fig. 10, we combine the “Wind” sound with either the “Bird” or “Dog” sounds, adjusting their volumes. As the volume of the “wind” sound increases and the “bird” sound decreases, the bird appears smaller in the image, eventually getting covered by bushes. Similarly, as the “dog” sound grows louder, the generated image transitions to a close-up of a dog indoors as the “wind” sound fades. However, when the “wind” sound becomes dominant again, the scene shifts to a wider shot of the dog outdoors. These results demonstrate that our model can detect subtle changes in the audio and accurately reflect these variations in the generated images.

5.2 Latent manipulation for image generation

As we build an aligned audio-visual embedding space, our model can take both image and audio as inputs to generate images. We present two different approaches for audio-visual conditioned image generation, where both methods manipulate the features of the inputs in the latent space.

(a) Inputs (b) Generated images

Image and audio conditioned image generation

Given an image and a semantic condition from audio, our model can generate target images in a compositional manner. This requires understanding the semantic coupling between the given image content and the audio condition. This task is similar to the recent trend of compositional image retrieval. However, here, we aim to demonstrate the compositional image generation ability of our model using audio conditions. We use simple multimodal embedding space arithmetic for image and audio-conditioned image generation. Given an image and audio, we extract visual features and audio features . We then interpolate these features in the latent space to obtain a new feature: , where varies across examples. This new feature is fed into the image generator to synthesize an image. As shown in Fig. 11, this simple approach effectively incorporates the sound context into the given scene, such as adding parade-looking people with marching sounds, stylizing the given building image with hail or skiing sound, and adding various vehicles on the road with respective sounds.

We further use this approach to compare our method with specifically designed the sound-guided image manipulation approach (Lee et al, 2022) in Fig. 12. Although this task is not explicitly targeted by our model, it emerges as a natural outcome of our design. While the results from Lee et al. (Lee et al, 2022) maintain the overall content of the original image, they fail to insert objects that correspond to the sound. In contrast, our method successfully generates an image (nearly similar to the given one) by conditioning on both modalities, for instance, it adds an explosion and a tractor to the scene or makes the ocean view appear cloudy in response to hail sounds.

Image editing with the modifications of paired sound

Here, we explore sound-guided image editing from a different perspective by manipulating inputs in the latent space. Utilizing GAN inversion techniques (Abdal et al, 2019; Richardson et al, 2021), we extract a visual feature and a corresponding noise vector for the reference image. We also change the volume of the associated audio to extract two distinct audio features in the embedding space, and . We then adjust the visual feature by moving it in the direction of the difference between these two audio features, resulting in a new feature: , thereby guiding the visual manipulation with audio cues. This new feature is fed into the image generator , enabling us to edit the original image based on corresponding sounds. As shown in Fig. 13, simply by adjusting the volume and navigating through the latent space, we can modify visual elements such as the size of an explosion, the flow of a waterfall, or the intensity of ocean waves.

6 Generalization on design choices

As we discuss in Sec. 4.4, cross-modal transferability, which aligns audio and visual features, is the key idea behind the effectiveness of Sound2Vision. To further reiterate this and to demonstrate that our method is not dependent on any specific design choice of the model, we present several generalization results with different setups of Sound2Vision. We conduct experiments mainly from two different generalization perspectives: the architectural choice of the model and the type of audio-visual dataset used for training the model.

6.1 Different architectural choice

Different from our original architectural choices outlined in Sec. 3.2, we switch the image generator from GAN (Brock et al, 2018) to the Latent Diffusion Model (LDM) (Rombach et al, 2022) and replace the image encoder from ResNet-50 (He et al, 2016) to the Vision Transformer (ViT) (Dosovitskiy et al, 2020). We identically follow our proposed training method as described in Sec. 3.1 using the VGGSound and VEGAS dataset as detailed in Sec. 4.1. By adopting these more recent and strong components, we observe two key improvements: (1) the model produces higher quality and more realistic images, and (2) it demonstrates an ability to generate images from a broader range of sound categories than before, thereby highlighting the enhanced generative capabilities of the image generator.

Preliminary of Latent Diffusion Model (LDM)

The family of diffusion models (Ho et al, 2020; Dhariwal and Nichol, 2021; Nichol and Dhariwal, 2021) aims to learn the underlying probabilistic model of the image data distribution . Building on these successes, LDM (Rombach et al, 2022) learns such a distribution within the latent space of the variational autoencoder, . This involves learning the reverse Markov process over a sequence of timesteps in the latent space. For each timestep , the denoising function , where is the dimension of the latent, is trained to predict a denoised version of the perturbed at timestep , as . The corresponding objective can be simplify written as follows:

| (3) |

The variant of LDM that accepts conditional inputs formulates the conditional distribution as , where serves as the conditioning input for generation. This model integrates a cross-attention mechanism, allowing it to be conditioned with different modalities. This is done by modifying the objective function to integrate this conditioning as:

| (4) |

In our case, the LDM is initially trained to use the visual features extracted by the Vision Transformer (ViT) (Dosovitskiy et al, 2020) as the conditioning vector . Subsequently, we train the audio encoder to learn to align with these visual features, which are then used as the conditioning for sound-to-image generation.

| Image Generator | VGGSound (50 classes) | VEGAS | |||||

|---|---|---|---|---|---|---|---|

| R@1 | R@5 | FID () | IS () | R@1 | R@5 | ||

| (A) | GAN | 40.71 | 77.36 | 17.97 | 19.46 | 57.44 | 84.08 |

| (B) | LDM | 50.37 | 81.61 | 17.23 | 19.55 | 64.40 | 85.40 |

| (C) | AudioToken | 43.11 | 78.24 | 17.12 | 16.92 | - | - |

Improved image quality

Figure 14 shows the qualitative comparison between images generated by GAN image generator (denoted as GAN) and those produced using the Latent Diffusion Model image generator (denoted as LDM). With the same input audio from various categories, the results from LDM clearly demonstrate improved image quality with more fine-grained details. This enhancement is further supported by the quantitative evaluations summarized in Table 3. Using the LDM as an image generator shows enhanced performance across all datasets and evaluation metrics. Furthermore, compared to AudioToken (Yariv et al, 2023), which are also based on LDM and trained with VGGSound, our model shows favorable results in overall metrics.

We also compare both of our models with other prior arts, S2I (Fanzeres and Nadeu, 2021)111https://github.com/leofanzeres/s2i and Pedersoli et al. (Pedersoli et al, 2022)222https://github.com/ubc-vision/audio_manifold. Note that Pedersoli et al., although not targeted for sound-to-image generation task, utilizes a VQVAE-based model (Van Den Oord et al, 2017) for generating sound-to-depth or segmentation images. Despite our models’ ability to handle a broader range of in-the-wild audio, we adopt the same training setup as S2I by training our models and Pedersoli et al. with five categories from the VEGAS dataset for a fair comparison. As shown in Fig. 15, both of our models surpass all other methods. Additionally, our proposed models generate visually plausible images, whereas previous methods often fail to produce recognizable results. These outcomes highlights the effectiveness of our training approach, where learning visually enriched audio features combined with a robust image generator leads to superior performance.

Handling more categories

The model with the LDM image generator can generate images from a broader range of sound categories that are unattainable with the GAN-based model. As described in (Sung-Bin et al, 2023), while VGGSound contains 310 categories, 50 categories among these are selected for training the model. The choice to use a subset of categories is because of the limitations of the GAN-based model’s generative power, which struggles to produce plausible images across all categories. Training on all categories results in degraded image quality and lower CLIP retrieval performance. However, with the LDM model, we demonstrate that the model can handle more diverse sound categories while maintaining the image quality and reasonable range of CLIP retrieval scores.

Figure 16 (a) shows the CLIP retrieval R@5 results for both the GAN and LDM models, demonstrating how performance varies as the number of training sound classes increases from 50 to 200. As shown, GAN shows a drastic performance drop in R@5, whereas the degradation in LDM follows a more gentle slope. Interestingly, the performance of training 100 classes in GAN is similar to that of training 150-200 classes in LDM. One of the reasons for this degradation in GAN is due to its weaker generative power, especially in human-related classes, a limitation also discussed in (Sung-Bin et al, 2023). As the number of sound classes increases, the likelihood of including audio-visual pairs related to human actions also increases, resulting in poorer CLIP R@5 on GAN.

In Fig. 16 (b), we also provide a qualitative comparison between GAN and LDM along with CLIP R@5 results for each category, where both models are trained with 200 classes. In classes related to animals, both GAN and LDM produce plausible images, resulting in similar R@5 scores. For instance, the “Dog Baying” and “Donkey Braying” classes show approximately a 16% degradation in CLIP R@5 from LDM to GAN, while the “Cuckoo Bird Calling” class shows comparable results. However, in human action-related classes, GAN generates unrecognizable images while LDM consistently produces high-quality images with identifiable human actions. Moreover, LDM outperforms on CLIP R@5, showing approximately a 50% drop from LDM to GAN.

Figure 17 presents additional results across new categories that the GAN-based model could not handle. LDM can generate images from various transportations and environmental sounds, as well as human-related sounds, accurately reflecting the gender or age range.

(a) Inputs (b) Generated images

Controllability

Similar to Sec. 5, the LDM version of our model also supports the capability of generating controllable outputs by manipulating inputs in both waveform and latent spaces to some extent. As demonstrated in Fig. 18, the model can respond to variations in the volume of the same audio, i.e., manipulation in the waveform space. For instance, increasing the volume results in a more forceful waterfall and a larger depiction of thunder. Figure 19 illustrates the results of image and audio conditioned image generation, which involves manipulation in the latent space. We specifically refer to (Liu et al, 2022) and employ compositional generation techniques to incorporate multiple concepts from both image and audio. As shown, the model successfully manipulates the given car image with several environmental sounds, such as a burbling stream and skiing sound. In other examples, the model successfully inserts sound sources into the given images. One clear observation, as also presented above, is that the quality of the generated images is higher than that of the GAN version (see Fig. 11). Additionally, it maintains the integrity of the given image better than the GAN version while manipulating it with the sound. However, this is primarily due to the superior image generation ability of the LDM. As all the examples and results in this section show, regardless of the image generator used, the alignment of the cross-modal signal is the key factor in achieving these capabilities, and our learning objective facilitates this.

6.2 Different dataset type

While the audio-visual pairs constructed from the VGGSound (Chen et al, 2020a) and VEGAS (Zhou et al, 2018) dataset mostly contain environmental and in-the-wild events, i.e., generic sounds, we demonstrate that our training method can be effectively applied to different types of audio-visual datasets, including speech and faces. Although there is no direct one-to-one mapping from speech to face, Speech2Face (Oh et al, 2019) has shown that models can learn to extract facial information from speech and generate faces that resemble the original speaker. Specifically, we utilize the CelebV-HQ (Zhu et al, 2022) dataset, which includes 35,666 video segments featuring a diverse range of talking faces from various identities, ages, genders, and appearances. We arbitrarily select 20,000 videos from which we extract speech and image frames to construct our training dataset, while the remaining videos are used for testing the model. Using this dataset, the training scheme identically follows Sec. 6.1. The audio encoder is trained to align with the visual features extracted by a Vision Transformer (ViT), which is compatible with the LDM. After training, the speech inputs are processed by the audio encoder and then fed into the LDM to generate facial images from the speech.

,

,  ,

,  ,

,  , and

, and  denote speech, tornado roaring, leaves rustling, footsteps on snow, and splashing water sound, respectively.

denote speech, tornado roaring, leaves rustling, footsteps on snow, and splashing water sound, respectively.Results on CelebV-HQ test samples

Figure 20 shows faces generated from diverse types of input speech. Interestingly, without using explicit complex modeling to learn the relationship between speech and facial attributes, the model effectively distills rich visual information into audio features. It successfully extracts facial attributes from the input speech and accurately reflects them in the generated images, including ethnicity (first row), gender (second row), and age range (third row). Moreover, the model captures subtle cues from the speech, such as a vintage audio effect from old black-and-white films, and incorporates this cue by generating faces with grayish colors, mimicking the style of old black-and-white movies, as shown in the last row.

Since the original and generated faces have diverse head poses and are captured from different camera viewpoints, we facilitate comparison by reconstructing these into canonical 3D meshes, displayed in the third and fourth columns. Specifically, we feed both the original and generated images into MICA (Zielonka et al, 2022) to reconstruct 3D faces and use FFHQ-UV (Bai et al, 2023) to apply textures to the reconstructed faces. This visualization method allows us to clearly observe that the model can effectively generate faces from speech, resembling the appearances similar to the original faces.

Combining speech and environment sound

Having trained one audio encoder on environmental in-the-wild sounds as described in Sec. 6.1 and another on a speech dataset as in Sec. 6.2, we are able to generate images that reflect both environmental and speech sounds in a single image. Given an audio feature from environmental sounds, , and another from speech, , we can interpolate between these features to define a composite audio feature, , where varies across examples. This new audio feature is then fed into the LDM to generate images that incorporate both types of content. Figure 21 shows the images that integrate both signals into one image. The leftmost image is generated solely from environmental sound, the rightmost image from speech, and the center image reflects both interpolated signals. Interestingly, this simple interpolation in the latent space enables the placement of humans in diverse environments, such as snowy or cloudy scenes, while preserving their identity.

7 Conclusion

In this paper, we propose Sound2Vision, a model designed to generate images relevant to given audio inputs. This task presents inherent challenges due to the significant information gap between audio and visual signals as audio lacks visual information, and audio-visual pairs do not always correspond directly. Previous approaches have been constrained by the limited number of sound categories they can generate and the low quality of the images produced. Our method addresses these challenges by enriching audio features with visual knowledge and selecting well-correlated audio-visual pairs for training, thereby successfully producing richly detailed images with diverse characteristics. Moreover, our model offers controllability over inputs, allowing for more creative outcomes compared to previous methods. Additionally, we analyze the geometric properties of the multimodal embeddings, demonstrating how our learning approach effectively aligns audio-visual signals for cross-modal generation, which is the key concept behind our work. This analysis shows that our method is agnostic to specific design choices, highlighting its generalizability through successful integration with various model architecture choices and types of audio-visual data.

Data availability statements. All data supporting the findings of this study are available online. The VGGSound dataset can be downloaded from https://www.robots.ox.ac.uk/~vgg/data/vggsound/. The VEGAS dataset can be downloaded from https://github.com/postech-ami/Sound2Scene. The CelebV-HQ dataset can be downloaded from https://github.com/CelebV-HQ/CelebV-HQ.

References

- Abdal et al (2019) Abdal R, Qin Y, Wonka P (2019) Image2stylegan: How to embed images into the stylegan latent space? In: IEEE International Conference on Computer Vision (ICCV)

- Afouras et al (2020) Afouras T, Owens A, Chung JS, Zisserman A (2020) Self-supervised learning of audio-visual objects from video. In: European Conference on Computer Vision (ECCV)

- Akbari et al (2021) Akbari H, Yuan L, Qian R, Chuang WH, Chang SF, Cui Y, Gong B (2021) Vatt: Transformers for multimodal self-supervised learning from raw video, audio and text. In: Advances in Neural Information Processing Systems (NeurIPS)

- Alayrac et al (2022) Alayrac JB, Donahue J, Luc P, Miech A, Barr I, Hasson Y, Lenc K, Mensch A, Millican K, Reynolds M, et al (2022) Flamingo: a visual language model for few-shot learning. In: Advances in Neural Information Processing Systems (NeurIPS)

- Alwassel et al (2020) Alwassel H, Mahajan D, Korbar B, Torresani L, Ghanem B, Tran D (2020) Self-supervised learning by cross-modal audio-video clustering. In: Advances in Neural Information Processing Systems (NeurIPS)

- Arandjelovic and Zisserman (2017) Arandjelovic R, Zisserman A (2017) Look, listen and learn. In: IEEE International Conference on Computer Vision (ICCV)

- Arandjelovic and Zisserman (2018) Arandjelovic R, Zisserman A (2018) Objects that sound. In: European Conference on Computer Vision (ECCV)

- Aytar et al (2016) Aytar Y, Vondrick C, Torralba A (2016) Soundnet: Learning sound representations from unlabeled video. In: Advances in Neural Information Processing Systems (NeurIPS)

- Bai et al (2023) Bai H, Kang D, Zhang H, Pan J, Bao L (2023) Ffhq-uv: Normalized facial uv-texture dataset for 3d face reconstruction. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Brock et al (2018) Brock A, Donahue J, Simonyan K (2018) Large scale gan training for high fidelity natural image synthesis. In: International Conference on Learning Representations (ICLR)

- Caron et al (2020) Caron M, Misra I, Mairal J, Goyal P, Bojanowski P, Joulin A (2020) Unsupervised learning of visual features by contrasting cluster assignments. In: Advances in Neural Information Processing Systems (NeurIPS)

- Casanova et al (2021) Casanova A, Careil M, Verbeek J, Drozdzal M, Romero Soriano A (2021) Instance-conditioned gan. In: Advances in Neural Information Processing Systems (NeurIPS)

- Chatterjee and Cherian (2020) Chatterjee M, Cherian A (2020) Sound2sight: Generating visual dynamics from sound and context. In: European Conference on Computer Vision (ECCV)

- Chen et al (2020a) Chen H, Xie W, Vedaldi A, Zisserman A (2020a) Vggsound: A large-scale audio-visual dataset. In: IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP)

- Chen et al (2021a) Chen H, Xie W, Afouras T, Nagrani A, Vedaldi A, Zisserman A (2021a) Localizing visual sounds the hard way. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Chen et al (2017) Chen L, Srivastava S, Duan Z, Xu C (2017) Deep cross-modal audio-visual generation. In: Proceedings of the on Thematic Workshops of ACM Multimedia 2017

- Chen et al (2020b) Chen P, Zhang Y, Tan M, Xiao H, Huang D, Gan C (2020b) Generating visually aligned sound from videos. IEEE Transactions on Image Processing (TIP)

- Chen et al (2021b) Chen Y, Xian Y, Koepke A, Shan Y, Akata Z (2021b) Distilling audio-visual knowledge by compositional contrastive learning. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Deng et al (2009) Deng J, Dong W, Socher R, Li LJ, Li K, Fei-Fei L (2009) Imagenet: A large-scale hierarchical image database. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Dhariwal and Nichol (2021) Dhariwal P, Nichol A (2021) Diffusion models beat gans on image synthesis. In: Advances in Neural Information Processing Systems (NeurIPS)

- Ding et al (2021) Ding M, Yang Z, Hong W, Zheng W, Zhou C, Yin D, Lin J, Zou X, Shao Z, Yang H, Tang J (2021) Cogview: Mastering text-to-image generation via transformers. In: Advances in Neural Information Processing Systems (NeurIPS)

- Dosovitskiy et al (2020) Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X, Unterthiner T, Dehghani M, Minderer M, Heigold G, Gelly S, et al (2020) An image is worth 16x16 words: Transformers for image recognition at scale. In: International Conference on Learning Representations (ICLR)

- Fanzeres and Nadeu (2021) Fanzeres LA, Nadeu C (2021) Sound-to-imagination: Unsupervised crossmodal translation using deep dense network architecture. arXiv preprint arXiv:210601266

- Gan et al (2019) Gan C, Zhao H, Chen P, Cox D, Torralba A (2019) Self-supervised moving vehicle tracking with stereo sound. In: IEEE International Conference on Computer Vision (ICCV)

- Gao and Grauman (2019) Gao R, Grauman K (2019) Co-separating sounds of visual objects. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Ginosar et al (2019) Ginosar S, Bar A, Kohavi G, Chan C, Owens A, Malik J (2019) Learning individual styles of conversational gesture. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Goel et al (2022) Goel S, Bansal H, Bhatia S, Rossi R, Vinay V, Grover A (2022) Cyclip: Cyclic contrastive language-image pretraining. In: Advances in Neural Information Processing Systems (NeurIPS)

- Goodfellow et al (2014) Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y (2014) Generative adversarial nets. In: Advances in Neural Information Processing Systems (NeurIPS)

- Gou et al (2021) Gou J, Yu B, Maybank SJ, Tao D (2021) Knowledge distillation: A survey. International Journal of Computer Vision (IJCV)

- Hao et al (2018) Hao W, Zhang Z, Guan H (2018) Cmcgan: A uniform framework for cross-modal visual-audio mutual generation. In: AAAI Conference on Artificial Intelligence (AAAI)

- He et al (2016) He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Heusel et al (2017) Heusel M, Ramsauer H, Unterthiner T, Nessler B, Hochreiter S (2017) Gans trained by a two time-scale update rule converge to a local nash equilibrium. In: Advances in Neural Information Processing Systems (NeurIPS)

- Hinton et al (2015) Hinton G, Vinyals O, Dean J, et al (2015) Distilling the knowledge in a neural network. arXiv preprint arXiv:150302531

- Ho et al (2020) Ho J, Jain A, Abbeel P (2020) Denoising diffusion probabilistic models. In: Advances in Neural Information Processing Systems (NeurIPS)

- Ho et al (2022) Ho J, Chan W, Saharia C, Whang J, Gao R, Gritsenko A, Kingma DP, Poole B, Norouzi M, Fleet DJ, et al (2022) Imagen video: High definition video generation with diffusion models. arXiv preprint arXiv:221002303

- Hu et al (2019) Hu D, Nie F, Li X (2019) Deep multimodal clustering for unsupervised audiovisual learning. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Iashin and Rahtu (2021) Iashin V, Rahtu E (2021) Taming visually guided sound generation. In: British Machine Vision Conference (BMVC)

- Kim et al (2023) Kim M, Sung-Bin K, Oh TH (2023) Prefix tuning for automated audio captioning. In: IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP)

- Lee et al (2022) Lee SH, Roh W, Byeon W, Yoon SH, Kim C, Kim J, Kim S (2022) Sound-guided semantic image manipulation. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Li et al (2022) Li T, Liu Y, Owens A, Zhao H (2022) Learning visual styles from audio-visual associations. In: European Conference on Computer Vision (ECCV)

- Liang et al (2022) Liang W, Zhang Y, Kwon Y, Yeung S, Zou J (2022) Mind the gap: Understanding the modality gap in multi-modal contrastive representation learning. In: Advances in Neural Information Processing Systems (NeurIPS)

- Liu et al (2022) Liu N, Li S, Du Y, Torralba A, Tenenbaum JB (2022) Compositional visual generation with composable diffusion models. In: European Conference on Computer Vision (ECCV)

- Luo et al (2024) Luo S, Yan C, Hu C, Zhao H (2024) Diff-foley: Synchronized video-to-audio synthesis with latent diffusion models. In: Advances in Neural Information Processing Systems (NeurIPS)

- Van der Maaten and Hinton (2008) Van der Maaten L, Hinton G (2008) Visualizing data using t-sne. Journal of Machine Learning Research (JMLR)

- Mokady et al (2021) Mokady R, Hertz A, Bermano AH (2021) Clipcap: Clip prefix for image captioning. arXiv preprint arXiv:211109734

- Morgado et al (2021a) Morgado P, Misra I, Vasconcelos N (2021a) Robust audio-visual instance discrimination. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Morgado et al (2021b) Morgado P, Vasconcelos N, Misra I (2021b) Audio-visual instance discrimination with cross-modal agreement. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Narasimhan et al (2022) Narasimhan M, Ginosar S, Owens A, Efros AA, Darrell T (2022) Strumming to the beat: Audio-conditioned contrastive video textures. In: IEEE Winter Conference on Applications of Computer Vision (WACV)

- Nichol and Dhariwal (2021) Nichol AQ, Dhariwal P (2021) Improved denoising diffusion probabilistic models. In: International Conference on Machine Learning (ICML)

- Oh et al (2019) Oh TH, Dekel T, Kim C, Mosseri I, Freeman WT, Rubinstein M, Matusik W (2019) Speech2face: Learning the face behind a voice. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Oord et al (2018) Oord Avd, Li Y, Vinyals O (2018) Representation learning with contrastive predictive coding. arXiv preprint arXiv:180703748

- Owens and Efros (2018) Owens A, Efros AA (2018) Audio-visual scene analysis with self-supervised multisensory features. In: European Conference on Computer Vision (ECCV)

- Owens et al (2016a) Owens A, Isola P, McDermott J, Torralba A, Adelson EH, Freeman WT (2016a) Visually indicated sounds. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Owens et al (2016b) Owens A, Wu J, McDermott JH, Freeman WT, Torralba A (2016b) Ambient sound provides supervision for visual learning. In: European Conference on Computer Vision (ECCV)

- Owens et al (2018) Owens A, Wu J, McDermott JH, Freeman WT, Torralba A (2018) Learning sight from sound: Ambient sound provides supervision for visual learning. International Journal of Computer Vision (IJCV)

- Park et al (2021) Park DH, Azadi S, Liu X, Darrell T, Rohrbach A (2021) Benchmark for compositional text-to-image synthesis. In: Thirty-fifth Conference on Neural Information Processing Systems Datasets and Benchmarks Track (Round 1)

- Park et al (2023) Park S, Senocak A, Chung JS (2023) Marginnce: Robust sound localization with a negative margin. In: IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP)

- Park et al (2024) Park S, Senocak A, Chung JS (2024) Can clip help sound source localization? In: IEEE Winter Conference on Applications of Computer Vision (WACV)

- Patashnik et al (2021) Patashnik O, Wu Z, Shechtman E, Cohen-Or D, Lischinski D (2021) Styleclip: Text-driven manipulation of stylegan imagery. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Pedersoli et al (2022) Pedersoli F, Wiebe D, Banitalebi A, Zhang Y, Yi KM (2022) Estimating visual information from audio through manifold learning. arXiv preprint arXiv:220802337

- Poole et al (2022) Poole B, Jain A, Barron JT, Mildenhall B (2022) Dreamfusion: Text-to-3d using 2d diffusion. arXiv preprint arXiv:220914988

- Qin et al (2023) Qin C, Yu N, Xing C, Zhang S, Chen Z, Ermon S, Fu Y, Xiong C, Xu R (2023) Gluegen: Plug and play multi-modal encoders for x-to-image generation. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Radford et al (2021) Radford A, Kim JW, Hallacy C, Ramesh A, Goh G, Agarwal S, Sastry G, Askell A, Mishkin P, Clark J, et al (2021) Learning transferable visual models from natural language supervision. In: International Conference on Machine Learning (ICML)

- Ramesh et al (2021) Ramesh A, Pavlov M, Goh G, Gray S, Voss C, Radford A, Chen M, Sutskever I (2021) Zero-shot text-to-image generation. In: International Conference on Machine Learning (ICML)

- Ramesh et al (2022) Ramesh A, Dhariwal P, Nichol A, Chu C, Chen M (2022) Hierarchical text-conditional image generation with clip latents. arXiv preprint arXiv:220406125

- Richardson et al (2021) Richardson E, Alaluf Y, Patashnik O, Nitzan Y, Azar Y, Shapiro S, Cohen-Or D (2021) Encoding in style: a stylegan encoder for image-to-image translation. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Rombach et al (2022) Rombach R, Blattmann A, Lorenz D, Esser P, Ommer B (2022) High-resolution image synthesis with latent diffusion models. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Salimans et al (2016) Salimans T, Goodfellow I, Zaremba W, Cheung V, Radford A, Chen X (2016) Improved techniques for training gans. In: Advances in Neural Information Processing Systems (NeurIPS)

- Selvaraju et al (2017) Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D (2017) Grad-cam: Visual explanations from deep networks via gradient-based localization. In: IEEE International Conference on Computer Vision (ICCV)

- Senocak et al (2018) Senocak A, Oh TH, Kim J, Yang MH, Kweon IS (2018) Learning to localize sound source in visual scenes. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Senocak et al (2019) Senocak A, Oh TH, Kim J, Yang MH, Kweon IS (2019) Learning to localize sound sources in visual scenes: Analysis and applications. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)

- Senocak et al (2022) Senocak A, Ryu H, Kim J, Kweon IS (2022) Less can be more: Sound source localization with a classification model. In: IEEE Winter Conference on Applications of Computer Vision (WACV)

- Senocak et al (2023a) Senocak A, Kim J, Oh TH, Ryu H, Li D, Kweon IS (2023a) Event-specific audio-visual fusion layers: A simple and new perspective on video understanding. IEEE Winter Conference on Applications of Computer Vision (WACV)

- Senocak et al (2023b) Senocak A, Ryu H, Kim J, Oh TH, Pfister H, Chung JS (2023b) Sound source localization is all about cross-modal alignment. In: IEEE International Conference on Computer Vision (ICCV)

- Senocak et al (2024) Senocak A, Ryu H, Kim J, Oh TH, Pfister H, Chung JS (2024) Aligning sight and sound: Advanced sound source localization through audio-visual alignment. arXiv preprint arXiv:240713676

- Shim et al (2021) Shim JY, Kim J, Kim JK (2021) S2i-bird: Sound-to-image generation of bird species using generative adversarial networks. In: International Conference on Pattern Recognition (ICPR)

- Singer et al (2022) Singer U, Polyak A, Hayes T, Yin X, An J, Zhang S, Hu Q, Yang H, Ashual O, Gafni O, et al (2022) Make-a-video: Text-to-video generation without text-video data. arXiv preprint arXiv:220914792

- Su et al (2020) Su K, Liu X, Shlizerman E (2020) Audeo: Audio generation for a silent performance video. In: Advances in Neural Information Processing Systems (NeurIPS)

- Sung-Bin et al (2023) Sung-Bin K, Senocak A, Ha H, Owens A, Oh TH (2023) Sound to visual scene generation by audio-to-visual latent alignment. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Sung-Bin et al (2024a) Sung-Bin K, Chae-Yeon L, Son G, Hyun-Bin O, Ju J, Nam S, Oh TH (2024a) Multitalk: Enhancing 3d talking head generation across languages with multilingual video dataset. arXiv preprint arXiv:240614272

- Sung-Bin et al (2024b) Sung-Bin K, Hyun L, Hong DH, Nam S, Ju J, Oh TH (2024b) Laughtalk: Expressive 3d talking head generation with laughter. In: IEEE Winter Conference on Applications of Computer Vision (WACV)

- Szegedy et al (2016) Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z (2016) Rethinking the inception architecture for computer vision. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Van Den Oord et al (2017) Van Den Oord A, Vinyals O, et al (2017) Neural discrete representation learning. In: Advances in Neural Information Processing Systems (NeurIPS)

- Villegas et al (2022) Villegas R, Babaeizadeh M, Kindermans PJ, Moraldo H, Zhang H, Saffar MT, Castro S, Kunze J, Erhan D (2022) Phenaki: Variable length video generation from open domain textual description. arXiv preprint arXiv:221002399

- Wan et al (2019) Wan CH, Chuang SP, Lee HY (2019) Towards audio to scene image synthesis using generative adversarial network. In: IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP)

- Wu et al (2021) Wu HH, Seetharaman P, Kumar K, Bello JP (2021) Wav2clip: Learning robust audio representations from clip. In: IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP)

- Xing et al (2024) Xing Y, He Y, Tian Z, Wang X, Chen Q (2024) Seeing and hearing: Open-domain visual-audio generation with diffusion latent aligners. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Yang et al (2023) Yang F, Zhang J, Owens A (2023) Generating visual scenes from touch. In: IEEE International Conference on Computer Vision (ICCV)

- Yang et al (2024) Yang F, Feng C, Chen Z, Park H, Wang D, Dou Y, Zeng Z, Chen X, Gangopadhyay R, Owens A, Wong A (2024) Binding touch to everything: Learning unified multimodal tactile representations. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Yariv et al (2023) Yariv G, Gat I, Wolf L, Adi Y, Schwartz I (2023) Audiotoken: Adaptation of text-conditioned diffusion models for audio-to-image generation. In: Conference of the International Speech Communication Association (INTERSPEECH)

- Youwang et al (2022) Youwang K, Ji-Yeon K, Oh TH (2022) Clip-actor: Text-driven recommendation and stylization for animating human meshes. In: European Conference on Computer Vision (ECCV)

- Zhang et al (2023) Zhang Y, HaoChen JZ, Huang SC, Wang KC, Zou J, Yeung S (2023) Diagnosing and rectifying vision models using language. In: International Conference on Learning Representations (ICLR)

- Zhang et al (2024) Zhang Y, Gu Y, Zeng Y, Xing Z, Wang Y, Wu Z, Chen K (2024) Foleycrafter: Bring silent videos to life with lifelike and synchronized sounds. arXiv preprint arXiv:240701494

- Zhou et al (2018) Zhou Y, Wang Z, Fang C, Bui T, Berg TL (2018) Visual to sound: Generating natural sound for videos in the wild. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Zhu et al (2022) Zhu H, Wu W, Zhu W, Jiang L, Tang S, Zhang L, Liu Z, Loy CC (2022) Celebv-hq: A large-scale video facial attributes dataset. In: European Conference on Computer Vision (ECCV)

- Zielonka et al (2022) Zielonka W, Bolkart T, Thies J (2022) Towards metrical reconstruction of human faces. In: European Conference on Computer Vision (ECCV)