Some Insights into Lifelong Reinforcement Learning Systems

Abstract

A lifelong reinforcement learning system is a learning system that has the ability to learn through trial-and-error interaction with the environment over its lifetime. In this paper, I give several arguments showing that the traditional reinforcement learning paradigm fails to model this type of learning system. Some insights into lifelong reinforcement learning are provided, along with a simple prototype lifelong reinforcement learning system.

1 Introduction

An agent is an abstraction of a decision-maker. At each time instance $t$, it receives an observation $o_t \in \mathcal{O}$, and outputs an action $a_t \in \mathcal{A}$ to be carried out in the environment it lives in. Here, $\mathcal{O}$ is the (finite) set of possible observations the agent can receive, and $\mathcal{A}$ is the (finite) set of actions the agent can choose from. An agent’s observation depends on the current environment state $s_t$ through an agent observation function $\sigma: \mathcal{S} \to \mathcal{O}$, i.e., $o_t = \sigma(s_t)$, where $\mathcal{S}$ is the set of possible environment states. The observation history $h_t = (o_1, o_2, \dots, o_t)$ is the sequence of observations the agent has received up to time $t$. Letting $\mathcal{H}_t$ be the set of possible observation histories of length $t$, the policy at time $t$ is defined as the mapping $\pi_t: \mathcal{H}_t \to \mathcal{A}$ from an observation history of length $t$ to the action the agent will take. Throughout the paper, it is assumed that an agent has a finite lifespan $T$, so an agent’s behavior can be fully specified by its policies $\pi_1, \dots, \pi_T$ across all timesteps.

1.1 Scalar Reward Reinforcement Learning System

We are interested in agents that can achieve some goal. In reinforcement learning, a goal is expressed by a scalar signal called the reward. The reward $r_t = R(h_t) \in \mathbb{R}$ depends on the agent’s observation history, and is assumed to be available to the agent at each timestep $t$ in addition to the observation $o_t$. Our aim is to find policies $\pi_1, \dots, \pi_T$ that maximize the expected cumulative reward an agent receives over its lifetime:

$$\max_{\pi_1, \dots, \pi_T} \; \mathbb{E}\left[\sum_{t=1}^{T} r_t\right] \qquad (1)$$
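As a concrete reading of the definitions above, the sketch below simulates one lifetime and estimates the objective in Eq. 1 by Monte Carlo. It is a minimal illustration under stated assumptions, not the paper’s code: the integer observations, the line-shaped toy environment, and the names `run_lifetime`, `estimate_objective`, `line_step` and `goal_reward` are all hypothetical.

```python
import random
from typing import Callable, List, Sequence

Obs = int
Action = int
History = Sequence[Obs]
Policy = Callable[[History], Action]          # pi_t : H_t -> A
RewardFn = Callable[[History], float]         # r_t = R(h_t)
StepFn = Callable[[Obs, Action, random.Random], Obs]


def run_lifetime(policies: List[Policy], reward_fn: RewardFn, step: StepFn,
                 o1: Obs, rng: random.Random) -> float:
    """One lifetime of T = len(policies) timesteps; returns sum_t R(h_t)."""
    history: List[Obs] = [o1]
    total = reward_fn(history)                # r_1 = R(h_1)
    for t in range(1, len(policies)):
        a = policies[t - 1](history)          # a_t = pi_t(h_t)
        history.append(step(history[-1], a, rng))
        total += reward_fn(history)           # r_{t+1} = R(h_{t+1})
    return total                              # a_T would only act after the last reward


def estimate_objective(policies: List[Policy], reward_fn: RewardFn, step: StepFn,
                       o1: Obs, n_lifetimes: int = 1000, seed: int = 0) -> float:
    """Monte Carlo estimate of the expectation in Eq. 1 for fixed policies."""
    rng = random.Random(seed)
    return sum(run_lifetime(policies, reward_fn, step, o1, rng)
               for _ in range(n_lifetimes)) / n_lifetimes


# Hypothetical toy: positions 0..4 on a line, reward 1 whenever the agent is at 4.
def line_step(o: Obs, a: Action, rng: random.Random) -> Obs:
    return min(max(o + a, 0), 4)              # deterministic; rng is unused here


def goal_reward(h: History) -> float:
    return 1.0 if h[-1] == 4 else 0.0


always_right: List[Policy] = [lambda h: 1] * 6    # lifespan T = 6
print(estimate_objective(always_right, goal_reward, line_step, o1=0))
```

With deterministic dynamics every lifetime returns the same sum; a stochastic `step` would make the expectation in Eq. 1 non-trivial.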

Using the maximization of expected cumulative scalar reward to formulate the general notion of goal is a design choice in reinforcement learning, based on what is commonly known as the reward hypothesis (Sutton & Barto, 2018). In Sutton’s own words:

That all of what we mean by goals and purposes can be well thought of as the maximization of the expected value of the cumulative sum of a received scalar signal (called reward).

This design choice, however, is somewhat arbitrary. Among other things, the reward need not be a scalar (e.g., multi-objective reinforcement learning (White, 1982)), nor does it have to be a quantity whose cumulative sum is to be maximized (which we will come to shortly). Leaving aside the question of whether all goals can be formulated by Eq. 1, I intend to show in this paper that the problem of lifelong reinforcement learning probably should not be formulated as such.

Note that in Eq. 1, I defined the reward in terms of the observation history, instead of the history of environment states as in most reinforcement learning literature. This reflects the view that reward signals are internal to the agent, as pointed out by Singh et al. (2004) in their work on intrinsic motivation. Since the observations are all that the agent has access to from the external environment, the intrinsic reward should depend on the environment state only through the agent’s observation history.

Although the above reinforcement learning formulation recognizes the reward as a signal intrinsic to an agent, it focuses on learning across different generations of agents, as opposed to learning within an agent’s lifespan. (The word ‘generation’ is used here only to emphasize that learning cannot be achieved within an agent’s lifespan; it does not imply that evolutionary algorithms need to be used.) From an agent’s point of view, the cumulative reward is known only when it reaches the end of its life, by which time no learning can be done by the ‘dying’ agent itself. The individual reward received at each timestep does not really matter, since the optimization objective is the cumulative sum of rewards. The information gathered by the agent, however, can be used to improve the policy of the next generation. In other words, with the conventional reinforcement learning formulation, learning can only happen at a level higher than the lives of individual agents (Figure 1), with the goal that an optimal agent can eventually be found; the lifetime behavior of a particular agent is not of concern.

1.2 Towards Lifelong Reinforcement Learning

In lifelong reinforcement learning, on the other hand, the focus is the agent’s ability to learn and adapt to the environment throughout its lifetime. Intuitively, this implies that the learning component of the learning system should reside within the agent.

To shed some light on lifelong reinforcement learning, consider the Q-learning algorithm (Watkins & Dayan, 1992) for the standard reinforcement learning problem formulated by Eq. 1. For the purpose of this example only, it is further assumed that:

- The reward depends only on the current observation, i.e., $R(h_t) = R(o_t)$.
- Observations are Markov with respect to past observations and actions, i.e., $P(o_{t+1} \mid o_1, a_1, \dots, o_t, a_t) = P(o_{t+1} \mid o_t, a_t)$.

These assumptions are only made so that Q-learning will find the solution to Eq. 1, and are not essential for the general discussion. The (non-lifelong) learning system works as follows:

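1. The agent receives its initial Q estimate $Q_0$ from the past generation.
2. At each timestep $t$, the agent takes an $\epsilon$-greedy action $a_t$ based on the current Q estimate, then performs a Bellman update on the Q estimate:

   $$Q(o_t, a_t) \leftarrow Q(o_t, a_t) + \alpha \left[ r_{t+1} + \max_{a \in \mathcal{A}} Q(o_{t+1}, a) - Q(o_t, a_t) \right] \qquad (2)$$

   where $\alpha$ is the learning rate.
3. When the agent dies, the updated Q estimate is passed to the next generation.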
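The three steps above can be sketched in Python as follows. This is a hypothetical toy, not the paper’s experiment: a four-cell chain with the goal at the right end, a lifespan of T = 5, and time-augmented observations (position, t). A learning rate of α = 1 is assumed only because the toy dynamics are deterministic.

```python
import random
from collections import defaultdict

N_POS, GOAL, T = 4, 3, 5        # hypothetical chain: positions 0..3, lifespan T = 5
ACTIONS = (0, 1)                # 0 = LEFT, 1 = RIGHT


def step(pos: int, a: int) -> int:
    """Deterministic chain dynamics; moves are clipped at both ends."""
    return min(max(pos + (1 if a == 1 else -1), 0), N_POS - 1)


def reward(pos: int) -> float:
    """Reward depends only on the current raw observation (first assumption)."""
    return 1.0 if pos == GOAL else 0.0


def live(Q, rng, epsilon=0.3, alpha=1.0) -> float:
    """One lifetime: epsilon-greedy actions and an Eq. 2 update at every step.
    Observations are time-augmented, o_t = (pos, t); returns the lifetime reward."""
    pos = 0
    ret = reward(pos)                                   # r_1
    for t in range(1, T):                               # actions a_1 .. a_{T-1}
        o = (pos, t)
        if rng.random() < epsilon:
            a = rng.choice(ACTIONS)
        else:
            a = max(ACTIONS, key=lambda b: Q[(o, b)])
        pos = step(pos, a)
        r = reward(pos)                                 # r_{t+1}
        ret += r
        # Nothing is bootstrapped after the final observation o_T.
        future = 0.0 if t + 1 == T else max(Q[((pos, t + 1), b)] for b in ACTIONS)
        Q[(o, a)] += alpha * (r + future - Q[(o, a)])
    return ret


def run_generations(n_generations=5000, seed=0):
    rng = random.Random(seed)
    Q = defaultdict(float)        # step 1: the first generation inherits Q = 0
    for _ in range(n_generations):
        live(Q, rng)              # step 2: one lifetime of updates
    return Q                      # step 3: the estimate handed to the next generation


Q = run_generations()
print(live(Q, random.Random(1), epsilon=0.0))          # a purely greedy descendant
```

Because every (position, t) pair occurs at most once per lifetime, each agent’s behavior is determined by the Q estimate it inherits (plus exploration noise); improvement shows up only across generations.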

At first sight, the fact that the Q estimate is updated every timestep seems to contradict my argument that learning only happens across generations. However, for Eq. 2 to be a valid update, the timestep $t$ needs to be part of the observation, because with a finite lifespan $T$ the value of an action depends on how much of the lifetime remains. The observation here is in fact the raw observation $\bar{o}_t$ augmented by time $t$, i.e., $o_t = (\bar{o}_t, t)$. Since the timestep is part of the observation, no observation will be experienced more than once throughout the agent’s lifetime, and it makes no difference to the agent whether the Q estimate is updated every timestep or after its life ends. (This statement does not strictly hold if function approximation is used: an update to $Q(o_t, a_t)$ can potentially affect the Q estimate of all other observations. However, this is more a side effect than a desired property.)
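The claim that per-step updates and end-of-life updates are interchangeable can be checked numerically. The sketch below is a hypothetical illustration with random data and a tabular Q: it applies Eq. 2 to one lifetime in two ways, online (reading the estimate as it changes) and as a batch computed from the inherited estimate alone. Since every key $(o_t, a_t)$ has a distinct $t$ and each target reads only keys at time $t + 1$, the two results coincide exactly.

```python
import random

ACTIONS = (0, 1)


def target(Q, r_next, o_next, is_last):
    """Right-hand side of Eq. 2: r_{t+1} + max_a Q(o_{t+1}, a), no bootstrap at o_T."""
    future = 0.0 if is_last else max(Q.get((o_next, b), 0.0) for b in ACTIONS)
    return r_next + future


def update_every_step(Q0, lifetime, alpha=0.5):
    """Apply Eq. 2 online, reading the estimate while it is being modified."""
    Q = dict(Q0)
    for i, (o, a, r_next, o_next) in enumerate(lifetime):
        q = Q.get((o, a), 0.0)
        Q[(o, a)] = q + alpha * (target(Q, r_next, o_next, i == len(lifetime) - 1) - q)
    return Q


def update_after_death(Q0, lifetime, alpha=0.5):
    """Compute every update from the inherited estimate Q0 only."""
    Q = dict(Q0)
    for i, (o, a, r_next, o_next) in enumerate(lifetime):
        q = Q0.get((o, a), 0.0)
        Q[(o, a)] = q + alpha * (target(Q0, r_next, o_next, i == len(lifetime) - 1) - q)
    return Q


# A random lifetime with time-augmented observations o_t = (raw_t, t).
rng = random.Random(0)
T = 50
obs = [(rng.randrange(3), t) for t in range(1, T + 1)]
lifetime = [(obs[i], rng.choice(ACTIONS), rng.random(), obs[i + 1]) for i in range(T - 1)]
Q0 = {((x, t), a): rng.random() for x in range(3) for t in range(1, T + 1) for a in ACTIONS}
print(update_every_step(Q0, lifetime) == update_after_death(Q0, lifetime))
```

If $t$ is dropped from the observation, raw observations can repeat within a lifetime, and the two procedures can then give different estimates.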

Clearly, for an agent to exhibit any sensible behavior, the initial Q estimate $Q_0$ it inherits from the past generation is vital. If the agent receives a random initial Q estimate, then its lifelong behavior is bound to be random and meaningless. At the other end of the spectrum, if the agent receives the true Q function, then it will behave optimally. This suggests that if we care about the lifetime behavior (which includes lifelong learning behavior) of a Q-learning agent, then $Q_0$ is a fundamental signal the agent needs to receive in addition to the scalar reward. In a sense, if the signal represented by the scalar reward is a specification of what the goal is, then the signal represented by the Q estimate is the knowledge past generations have collected about what the goal means for this type of agent. As an analogy, the pain associated with falling to the ground could be the former signal, while the innate fear of heights could be the latter.

From a computational perspective, the separation of these two signals may not be necessary. Both signals can be considered as ‘annotations’ of the observation history that the agent receives along with its observations, and both can be incorporated into the concept of reward. The reward signals are then no longer restricted to scalars, nor are they necessarily quantities whose cumulative sum is to be maximized. They are simply messages in some reward language that ‘encode’ the knowledge pertaining to an agent’s observation history, knowledge that enables the agent to learn continuously throughout its life. Such knowledge may include the goals of the agent, the subgoals that constitute these goals, the heuristics for achieving them, and so on. The reward is then ‘decoded’ by the learning algorithm, which defines how the agent responds to the reward given the observation history. The learning system should be designed such that, by responding to the reward in its intended way, the agent will learn to achieve the goals implied by the reward before the end of its life (Figure 2).

To be precise, the reward $r_t$ now belongs to some reward space $\mathcal{R}$. The learning algorithm is a mapping from reward histories to policies. Denoting the set of possible reward histories of length $t$ as $\mathcal{R}^t$, and the set of all possible policies at time $t$ as $\Pi_t$, the learning algorithm can be represented by $\mathcal{L} = (\mathcal{L}_1, \dots, \mathcal{L}_T)$, where $\mathcal{L}_t: \mathcal{R}^t \to \Pi_t$. The formulation is general, and a learning system formulated as such is not automatically a lifelong learning system. In fact, it subsumes traditional reinforcement learning: the reward space is set to the real numbers ($\mathcal{R} = \mathbb{R}$), and the learning algorithm can be set to any algorithm that converges to a policy that maximizes the expected cumulative reward. Unfortunately, the reward in traditional reinforcement learning does not contain enough information for an agent to learn within its lifetime.

Viewing the reward as a general language and the learning algorithm as the response to the reward opens up possibilities for principled ways to embed learning biases, such as guidance and intrinsic motivation, into the learning system, instead of relying solely on ad-hoc manipulation of the scalar reward. In the rest of the paper, my focus remains on lifelong reinforcement learning; more specifically, on what lifelong reinforcement learning requires of the reward language and the corresponding learning algorithm.

1.3 Reward as Formal Language

Although the term ‘language’ used above can be understood in its colloquial sense, it can also be understood as the formal term from automata theory. To see this, consider the deterministic finite automaton $M = (Q, \Sigma, \delta, q_0, F)$, where:

- $\Sigma$ is the alphabet of the automaton, and is set to the reward space $\mathcal{R}$ of the learning system. In other words, the alphabet of this automaton consists of all possible rewards the agent can receive at any single timestep. A string is a sequence of symbols chosen from some alphabet. For this particular automaton, a string is a sequence of rewards, so the reward-history notation $r_{1:t} = (r_1, \dots, r_t)$ is also used to denote a string of length $t$. The set of all strings of length $t$ over $\Sigma$ is denoted $\Sigma^t$, and the set of all strings (of any length) is denoted $\Sigma^*$.
- $Q$ is the set of states of the automaton. Each state is a possible pair consisting of a reward history and the policies up to some timestep $t$. For example, members of $Q$ include

  $$(r_1, \pi_1), \quad ((r_1, r_2), (\pi_1, \pi_2)), \quad \dots, \quad ((r_1, \dots, r_T), (\pi_1, \dots, \pi_T))$$

  for any $r_1, r_2, \dots, r_T \in \mathcal{R}$ and $\pi_1 \in \Pi_1, \pi_2 \in \Pi_2, \dots, \pi_T \in \Pi_T$. In addition, $Q$ has a special ‘empty’ member $q_0$, which corresponds to the initial state before any reward is received.
- $\delta: Q \times \Sigma \to Q$ is the transition function. It corresponds to the learning algorithm of the learning system, so we have $\delta((r_{1:t}, \pi_{1:t}), r_{t+1}) = (r_{1:t+1}, \pi_{1:t+1})$, where $\pi_{t+1} = \mathcal{L}_{t+1}(r_{1:t+1})$.
- $q_0$ is the initial state of the automaton, as explained above.
- $F \subseteq Q$ is the set of accepting states, which are the desired states of the automaton.

It is not hard to see that this automaton is a model of the learning system described in Section 1.2, with its desired property specified by the accepting states $F$. In this paper, the desired property is that the system be a lifelong learning system, so the accepting states are the pairs $(r_{1:T}, \pi_{1:T})$ that correspond to a lifelong learner. (Recall that an agent’s behavior is fully determined by its policies $\pi_{1:T}$. Therefore, given a reward history $r_{1:T}$, the policies $\pi_{1:T}$ are sufficient for us to tell whether the agent is a successful lifelong learner.)

To specify learning objectives, each possible reward is assigned some semantics. These semantics implicitly define the set of valid reward sequences $\mathcal{V}$. Since $\mathcal{V}$ is a subset of $\Sigma^*$, it is a language over $\Sigma$. We want to make sure that $\mathcal{V} \subseteq L(M)$, where $L(M)$ is the language accepted by $M$; that is, for all reward sequences in $\mathcal{V}$, lifelong learning can be achieved by the learning system abstracted by this automaton, or equivalently, all reward sequences in $\mathcal{V}$ lead to accepting states in $F$.
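The automaton can be sketched directly, with a state holding the reward and policy histories and the transition function calling the learning algorithm. Everything concrete here is hypothetical: the two-symbol ‘advice’ reward language, the representation of a policy by an action label, and the accepting condition standing in for ‘is a lifelong learner’. With a finite reward space and a finite lifespan the state set is finite, so the machine is a genuine DFA; the sketch checks $\mathcal{V} \subseteq L(M)$ for a finite $\mathcal{V}$ of strings of length at most 3.

```python
from itertools import product
from typing import Callable, Hashable, Iterable, Tuple

Reward = Hashable
Policy = Hashable
State = Tuple[Tuple[Reward, ...], Tuple[Policy, ...]]        # (r_{1:t}, pi_{1:t})
q0: State = ((), ())                                           # the 'empty' initial state
LearningAlgorithm = Callable[[Tuple[Reward, ...]], Policy]     # r_{1:t} -> pi_t


def make_delta(learn: LearningAlgorithm) -> Callable[[State, Reward], State]:
    """delta((r_{1:t}, pi_{1:t}), r_{t+1}) = (r_{1:t+1}, pi_{1:t+1})."""
    def delta(q: State, r: Reward) -> State:
        rewards, policies = q
        rewards = rewards + (r,)
        return rewards, policies + (learn(rewards),)
    return delta


def run(delta, word: Iterable[Reward]) -> State:
    """Extended transition function: read a reward string starting from q0."""
    q = q0
    for r in word:
        q = delta(q, r)
    return q


def accepts_all(delta, accepting: Callable[[State], bool], V) -> bool:
    """True iff every valid reward string in the finite set V reaches F."""
    return all(accepting(run(delta, w)) for w in V)


# Hypothetical reward language: each reward is an 'advice' symbol, and a
# policy is represented by the action label it always takes.
SIGMA = ("go_left", "go_right")
V = [w for n in range(1, 4) for w in product(SIGMA, repeat=n)]


def follows_last_advice(q: State) -> bool:         # hypothetical accepting set F
    rewards, policies = q
    return bool(rewards) and policies[-1] == rewards[-1]


obedient = make_delta(lambda rs: rs[-1])            # responds to the latest reward
stubborn = make_delta(lambda rs: "go_left")         # ignores the reward entirely
print(accepts_all(obedient, follows_last_advice, V),
      accepts_all(stubborn, follows_last_advice, V))
```

The learning algorithm that ignores the reward fails the containment check, which illustrates why the reward language and the learning algorithm have to be designed together.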

2 A Prototype Lifelong Reinforcement Learning System

Designing a lifelong reinforcement learning system involves designing the reward language and the learning algorithm holistically. Intuitively, the reward needs to contain enough information to control the relevant aspects of the learning algorithm, and the learning algorithm in turn needs to ‘interpret’ the reward signal in its intended way. In this section, I aim to provide some insights into the design process with a prototype lifelong reinforcement learning system.

2.1 Reward Language

The main reason lifelong learning is impossible in conventional reinforcement learning is that the learning objective in conventional reinforcement learning is global, in the sense that the goal of the agent is defined in terms of the observation history of its entire life. For a lifelong reinforcement learning agent, the learning objectives should instead be local, meaning that the goals should be defined only for smaller tasks that the agent can encounter multiple times during its lifetime. Once a local goal expires, whether because the goal has been achieved or because the agent has failed to achieve it within a certain time limit, a new local goal (potentially another instantiation of the same goal) ensues. This way, the agent has the opportunity to gather knowledge for each of the goals and improve upon them, all within one life. Local goals like this are ubiquitous for humans. For example, when a person is hungry, his main concern is probably not the global goal of being happy for the rest of his life; his goal is to have food. After the person is full, he might feel like taking a nap, which is another local goal. In fact, the local goals and the transitions between them seem to embody what we mean by intrinsic motivation.

To be able to specify a series of local goals, the reward in this prototype learning system has two parts: the reward state $u_t \in U$ and the reward value $v_t$, where $U$ is the set of local goals the agent may have. This form of reward is inspired by the reward machine (Icarte et al., 2018), a Mealy machine for specifying history-dependent reward, but the semantics we assign to the reward will be different. Also note that this Mealy machine bears no relation to the automaton discussed in Section 1.3: the reward machine models the reward, while the automaton in Section 1.3 models the learning system and takes the reward as input. Each reward state corresponds to a local goal. When a local goal (or equivalently, a reward state) expires, the agent receives a numerical reward value $v_t \in \mathbb{R}$. At all other timesteps, the reward value can be considered to take a special NULL value, meaning that no reward value is received. The reward value is an evaluation of the agent’s performance in an episode of a reward state, where an episode of a reward state is defined as the time period between the expiration of the previous reward state (exclusive) and the expiration of the reward state itself (inclusive). The reward state can potentially depend on the entire observation history, while the reward value can only depend on the observation history of the episode it is assessing. Overall, the reward is specified by $r_t = (u_t, v_t)$, with $v_t \in \mathbb{R} \cup \{\mathrm{NULL}\}$.
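The episode definition above can be made concrete with a short sketch that splits a reward history $r_t = (u_t, v_t)$ into episodes. It assumes (our reading, not stated in the text) that at an expiration timestep $u_t$ is still the expiring reward state, and it uses Python’s `None` for NULL; the reward-state labels are hypothetical.

```python
from typing import Hashable, List, NamedTuple, Optional, Sequence, Tuple

NULL = None                       # stands in for the special NULL reward value


class Episode(NamedTuple):
    state: Hashable               # reward state u, i.e. the local goal
    start: int                    # first timestep after the previous expiration
    end: int                      # timestep at which u expires (inclusive)
    value: float                  # reward value v received at expiration


def split_episodes(rewards: Sequence[Tuple[Hashable, Optional[float]]]) -> List[Episode]:
    """Split a reward history r_1, ..., r_t (each r = (u, v)) into episodes."""
    episodes: List[Episode] = []
    start, current = 1, None
    for t, (u, v) in enumerate(rewards, start=1):
        if current is not None and u != current:
            raise ValueError(f"reward state changed at t={t} without a reward value")
        current = u
        if v is not NULL:         # the local goal u expires at timestep t
            episodes.append(Episode(u, start, t, v))
            start, current = t + 1, None
    return episodes


history = [("food", NULL), ("food", NULL), ("food", 1.0),
           ("home", NULL), ("home", 0.0)]
for ep in split_episodes(history):
    print(ep)
```

A trailing episode whose reward state has not yet expired is not reported.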

The local goals described here are technically similar to subgoals in hierarchical reinforcement learning (Dietterich, 2000; Sutton et al., 1999; Parr & Russell, 1997). However, the term ‘subgoal’ suggests that there is some higher-level goal that the agent needs to achieve, and that the higher-level goal is the true objective the agent needs to optimize. That is not the case here — although it is totally possible that the local goals are designed in such a way that some global goal can be achieved, the agent only needs to optimize the local goals.

The reward language in this prototype system makes two assumptions about the learning algorithm. As long as the two assumptions are met, the learning algorithm is considered to ‘interpret’ the reward correctly. The first assumption is that the learning algorithm only generates policies that are episode-wise stationary, meaning that for any timesteps $t$ and $t'$ in the same episode of a reward state, and any histories $h_t$ and $h_{t'}$ that end in the same observation ($o_t = o_{t'}$), we have $\pi_t(h_t) = \pi_{t'}(h_{t'})$. This assumption is not particularly restrictive, because in cases where a local goal requires a more complex policy, we can always split the goal into multiple goals (by modifying the reward function) for which the policies are episode-wise stationary. With this assumption, we can use a single policy $\pi_u: \mathcal{O} \to \mathcal{A}$ to represent the policies at all timesteps within an episode of reward state $u$. The second assumption is that the learning algorithm keeps a pool of ‘elite’ policies for each reward state: a policy that led to a high reward value in some episode has the opportunity to enter the pool, and a policy that consistently leads to higher reward values eventually dominates the policy pool. The exact criterion for selection into the pool (e.g., using the expected reward value, or the probability of the reward value exceeding a certain threshold) is not enforced and is left up to the learning algorithm.

2.2 Learning Algorithm

The learning algorithm in this prototype lifelong learning system is an evolutionary algorithm, adjusted to meet the assumptions made by the reward. The algorithm maintains a policy pool $P_u$ of maximum size $N$ for each reward state $u$. Each item in the pool is a two-tuple $(\pi, v)$, where $\pi$ is a policy and $v$ is the reward value of the last episode in which $\pi$ was executed. Conceptually, the algorithm consists of three steps: policy generation, policy execution, and (policy) pool update, which are described below.

Policy Generation

When an episode of reward state $u$ starts, a policy is generated by one of the following methods, with probabilities $p_1$, $p_2$ and $p_3$, respectively:

1. Randomly sample a policy from the policy pool $P_u$, and mutate the policy.
2. Randomly sample a policy from $P_u$ and keep it as is. Remove the sampled policy from $P_u$. This is to re-evaluate a policy in the pool: since the transition of observations might be stochastic, the same policy does not necessarily always result in the same reward value.
3. Randomly generate a new policy from scratch. This is to maintain the diversity of the policy pool.

$p_1$, $p_2$ and $p_3$ should sum to 1 and are hyper-parameters of the algorithm.

Policy Execution

Execute the generated policy $\pi$ until a numerical reward value $v$ is received.

Pool Update

If the policy pool $P_u$ is not full, insert $(\pi, v)$ into the pool. Otherwise, compare $v$ with the minimum reward value in the pool. If $v$ is greater than or equal to the minimum reward value, replace the policy and reward value pair that has the minimum reward value with $(\pi, v)$.
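The three steps can be combined into a compact sketch of the per-reward-state policy pools. Assumptions not fixed by the text: a policy is a tuple of actions indexed by a finite set of observation ids (episode-wise stationary), mutation resamples the action of one observation, and policy execution is replaced by a hypothetical scoring function that returns the episode’s reward value directly.

```python
import random
from typing import Dict, Hashable, List, Tuple

Policy = Tuple[int, ...]                  # episode-wise stationary: policy[o] = action


class PolicyPools:
    """One bounded pool of (policy, reward value) pairs per reward state u."""

    def __init__(self, n_obs: int, n_actions: int, max_size: int = 10,
                 p1: float = 0.6, p2: float = 0.2, p3: float = 0.2, seed: int = 0):
        assert abs(p1 + p2 + p3 - 1.0) < 1e-9, "p1, p2, p3 must sum to 1"
        self.n_obs, self.n_actions, self.max_size = n_obs, n_actions, max_size
        self.weights = (p1, p2, p3)
        self.pools: Dict[Hashable, List[Tuple[Policy, float]]] = {}
        self.rng = random.Random(seed)

    def _random_policy(self) -> Policy:
        return tuple(self.rng.randrange(self.n_actions) for _ in range(self.n_obs))

    def _mutate(self, policy: Policy) -> Policy:
        # Hypothetical mutation operator: resample the action of one observation.
        p = list(policy)
        p[self.rng.randrange(self.n_obs)] = self.rng.randrange(self.n_actions)
        return tuple(p)

    def generate(self, u: Hashable) -> Policy:
        """Policy generation at the start of an episode of reward state u."""
        pool = self.pools.setdefault(u, [])
        if not pool:                      # nothing to sample from yet
            return self._random_policy()
        method = self.rng.choices((1, 2, 3), weights=self.weights)[0]
        if method == 1:                   # sample and mutate
            return self._mutate(self.rng.choice(pool)[0])
        if method == 2:                   # sample, remove, and re-evaluate as is
            return pool.pop(self.rng.randrange(len(pool)))[0]
        return self._random_policy()      # fresh policy for diversity

    def update(self, u: Hashable, policy: Policy, v: float) -> None:
        """Pool update after the episode ends with reward value v."""
        pool = self.pools.setdefault(u, [])
        if len(pool) < self.max_size:
            pool.append((policy, v))
            return
        i_min = min(range(len(pool)), key=lambda i: pool[i][1])
        if v >= pool[i_min][1]:
            pool[i_min] = (policy, v)


# Hypothetical stand-in for policy execution: the episode's reward value is the
# number of observations on which the policy picks a fixed 'correct' action.
TARGET = (1, 0, 1, 1, 0)
learner = PolicyPools(n_obs=len(TARGET), n_actions=2)
for _ in range(5000):
    pi = learner.generate("u")
    v = float(sum(a == b for a, b in zip(pi, TARGET)))
    learner.update("u", pi, v)
print(max(v for _, v in learner.pools["u"]))
```

A policy drawn for re-evaluation re-enters the pool with its latest reward value, so a policy that only occasionally scores well tends to become the pool minimum and be replaced.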

2.3 Embedding Learning Bias

Learning bias in reinforcement learning systems refers to the explicit or implicit assumptions made by the learning algorithm about the policy. Our assumption that the policy is episode-wise stationary is an example of learning bias. Arguably, a good learning bias is as important as a good learning algorithm; it is therefore important that mechanisms are provided to embed learning bias into the learning system.

A straightforward way to embed learning bias into the above lifelong learning system is through the policy generation process. This includes how existing policies are mutated, and what distribution new policies are sampled from. Learning bias provided this way does not depend on the agent’s observation and reward history, and is sometimes implicit (e.g., the learning bias introduced by using a neural network with a particular architecture).

Another type of learning bias common in reinforcement learning is guidance, the essence of which is illustrated in Figure 3. Suppose that in some reward state, the agent starts from observation $o_s$ and the goal is to reach observation $o_g$. (For the sake of terminological convenience, we pretend that the observations here are environment states.) Prior knowledge indicates that to reach $o_g$, visiting $o_h$ is a good heuristic, but reaching $o_h$ itself has little or no merit. In other words, we would like to encourage the agent to visit and explore around $o_h$ more frequently (than other parts of the observation space) until a reliable policy to reach $o_g$ is found.

To provide guidance to the agent in the prototype lifelong learning system, we can utilize the property of the learning algorithm that policies leading to high reward values will enter the policy pool. Once a policy enters the pool, it has the opportunity to be sampled (possibly with mutation) and executed. Therefore, we just need to assign a higher reward value for reaching $o_h$ (before the expiration of the reward state) than for reaching neither $o_h$ nor $o_g$. Also important is the ability to control the extent to which the region around $o_h$ is explored. To achieve this, recall that the learning algorithm occasionally re-evaluates policies in the policy pool. If we assign a lower reward value for reaching $o_h$ with some probability, we can prevent the policy pool from being filled exclusively with policies that lead to $o_h$. In other words, the reward value for reaching $o_h$ should have multiple candidates. Let $v_0$ denote the reward value for an episode where the agent reaches neither $o_h$ nor $o_g$, and $v_g$ denote the reward value for reaching $o_g$. We can set the reward value for reaching $o_h$ as:

$$v_h = \begin{cases} v_1 & \text{with probability } p, \\ v_0 & \text{with probability } 1 - p, \end{cases}$$

where $v_0 < v_1 < v_g$. The probability $p$ controls how frequently the region around $o_h$ is explored compared to the other parts of the observation space. (Note that the word ‘probability’ here should be interpreted as ‘long-run proportion’, and therefore the reward value need not be truly stochastic; e.g., we can imagine that the reward has a third component, which is the state of a pseudo-random generator.)
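The guidance scheme above amounts to a small reward-value function. The sketch is hypothetical in its numbers ($v_0 = 0$, $v_1 = 0.5$, $v_g = 1$, $p = 0.3$) and uses a seeded generator as the extra reward component mentioned in the note, so the long-run proportion of $v_1$ is $p$ while each individual value is reproducible.

```python
import random


class GuidedRewardValue:
    """Reward value issued when a reward state expires: v_g for reaching o_g,
    v_0 for reaching neither o_h nor o_g, and for reaching o_h, v_1 with
    long-run proportion p and v_0 otherwise."""

    def __init__(self, v0: float = 0.0, v1: float = 0.5, vg: float = 1.0,
                 p: float = 0.3, seed: int = 0):
        assert v0 < v1 < vg and 0.0 <= p <= 1.0
        self.v0, self.v1, self.vg, self.p = v0, v1, vg, p
        # The generator state plays the role of the extra reward component,
        # so the value is deterministic given that state.
        self.rng = random.Random(seed)

    def __call__(self, reached_goal: bool, reached_heuristic: bool) -> float:
        if reached_goal:
            return self.vg
        if reached_heuristic:
            return self.v1 if self.rng.random() < self.p else self.v0
        return self.v0


reward_value = GuidedRewardValue()
n = 10_000
share = sum(reward_value(False, True) == 0.5 for _ in range(n)) / n
print(round(share, 3))            # should be close to p = 0.3
```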

3 Experiment

Now we evaluate the behavior of the prototype lifelong reinforcement learning system. The source code of the experiments can be found at https://gitlab.com/lifelong-rl/lifelongRL_gridworld

3.1 Environment

Consider a gridworld agent whose life revolves around getting food and taking the food back home for consumption. The agent lives in a by gridworld shown in Figure 4. The shaded areas are barriers that the agent cannot go through. Some potential positions of interest are marked with letters: F is the food source and is assumed to have an infinite supply of food; H is the agent’s home. To get to the food source from home, and to carry the food home, the agent must pass through one of the two tunnels: the tunnel on the left is marked with L and the tunnel on the right is marked with R. At each timestep, the agent observes its position in the gridworld as well as a signal indicating whether it is in one of the four positions of interest (and if so, which one), and chooses one of the four actions: UP, RIGHT, DOWN and LEFT. Each action deterministically takes the agent to the adjacent cell in the corresponding direction, unless the destination is a barrier, in which case the agent remains in its original position. The agent starts from home at the beginning of its life, and needs to go to the food source to get food. Once it reaches the food source, it needs to carry the food back home. This process repeats until the agent dies. The lifespan of the agent is assumed to be million timesteps. The agent is supposed to learn to reliably achieve these two local goals within its lifetime.
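The environment dynamics described above can be sketched as follows. The layout is a small hypothetical grid, not the one in Figure 4, and treating a move off the edge of the grid like a move into a barrier is an added assumption.

```python
from typing import Optional, Tuple

Pos = Tuple[int, int]

# Hypothetical layout (not Figure 4): '#' = barrier, F = food source,
# H = home, L / R = left / right tunnel.
LAYOUT = [
    "....F..",
    ".......",
    "#L###R#",
    ".......",
    "....H..",
]
MOVES = {"UP": (-1, 0), "RIGHT": (0, 1), "DOWN": (1, 0), "LEFT": (0, -1)}


def find(ch: str) -> Pos:
    for r, row in enumerate(LAYOUT):
        if ch in row:
            return r, row.index(ch)
    raise ValueError(ch)


def step(pos: Pos, action: str) -> Pos:
    """Deterministic move; stay put if the destination is a barrier or
    (by assumption) outside the grid."""
    r, c = pos[0] + MOVES[action][0], pos[1] + MOVES[action][1]
    if 0 <= r < len(LAYOUT) and 0 <= c < len(LAYOUT[0]) and LAYOUT[r][c] != "#":
        return r, c
    return pos


def observe(pos: Pos) -> Tuple[Pos, Optional[str]]:
    """Observation: the position plus which point of interest (if any) it is."""
    ch = LAYOUT[pos[0]][pos[1]]
    return pos, (ch if ch in "FHLR" else None)


route = ["RIGHT", "UP", "UP", "UP", "UP", "LEFT"]    # home -> right tunnel -> food
pos = find("H")
for a in route:
    pos = step(pos, a)
print(observe(pos))
```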

3.2 Learning System Setup

The reward state in this experiment is represented by a conjunction of Boolean variables. For example, if three Boolean variables $x$, $y$ and $z$ are defined, then the reward state would be of the form $x \wedge y \wedge z$ or $x \wedge \neg y \wedge z$, etc. At the bare minimum, one Boolean variable $f$ needs to be defined for this agent, where $f$ being true corresponds to the local goal of going to the food source, and $\neg f$ corresponds to the local goal of carrying the food home. The agent receives a reward value of if $f$ is true and the agent reaches F, in which case the Boolean variable $f$ transitions to false. Similarly, the agent receives a reward value of if $\neg f$ is true and the agent reaches H, in which case $f$ transitions to true. On top of $f$, we define another Boolean variable $e$, which indicates whether the agent has exceeded a certain time limit for trying to get to the food source, or for trying to carry the food home. If the reward state is $f \wedge \neg e$, and the agent fails to reach F within the time limit, it receives a reward value of , and the reward state transitions to $f \wedge e$. From $f \wedge e$, if the agent still fails to get to F within the time limit, it receives a reward value of . The agent remains in $f \wedge e$ until it reaches F, when the reward state transitions to $\neg f \wedge \neg e$ (and it receives a reward value as already mentioned). For the case when $f$ is false, the reward transition is defined similarly. Throughout the experiments, the time limit is set to , which is enough for the agent to accomplish any of the local goals. We refer to this reward design as the base case.
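The base-case transitions can be sketched as a small machine over the Boolean pair $(f, e)$. The three reward constants are placeholders, not the values used in the experiments, and returning the expiring reward state together with its reward value is an assumption about timing. Reaching the target is checked before the time limit.

```python
from typing import Optional, Tuple

RewardState = Tuple[bool, bool]          # (f, e)

# Placeholder reward values (hypothetical, not the experimental settings).
V_SUCCESS, V_FIRST_TIMEOUT, V_LATER_TIMEOUT = 1.0, -0.5, -1.0


class BaseCaseRewardMachine:
    def __init__(self, time_limit: int):
        self.time_limit = time_limit
        self.state: RewardState = (True, False)   # f: go for food; e: not timed out
        self.elapsed = 0                           # timesteps in the current episode

    def step(self, marker: Optional[str]) -> Tuple[RewardState, Optional[float]]:
        """Consume the point-of-interest marker of the new observation and
        return (u_t, v_t); v_t is None (NULL) unless the local goal expires."""
        u = self.state
        f, e = u
        self.elapsed += 1
        if marker == ("F" if f else "H"):          # local goal achieved
            value = V_SUCCESS
            self.state = (not f, False)
        elif self.elapsed >= self.time_limit:      # local goal timed out
            value = V_LATER_TIMEOUT if e else V_FIRST_TIMEOUT
            self.state = (f, True)
        else:
            return u, None
        self.elapsed = 0
        return u, value


m = BaseCaseRewardMachine(time_limit=3)
log = [m.step(None) for _ in range(6)] + [m.step("F")]
for entry in log:
    print(entry)
```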

Unfortunately, even for a toy problem like this, learning can be difficult if no proper learning bias is provided. Since there are 4 actions and $n$ possible positions, the number of possible episode-wise stationary policies is $4^n$ for each reward state. Among those policies, very few can achieve the local goals. If policy generation and mutation are purely random, it will take a long time for the agent to find a good policy.

Biased Policy

The first learning bias we consider is biased policy, in contrast to the unbiased policy case where policy generation and mutation are purely random. More specifically, we make the policy generation process biased towards policies that take the same action for similar observations. This encourages policies that head consistently in one direction, and discourages those that roam indefinitely between adjacent positions.
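One way to realize a generation bias toward ‘same action for similar observations’ is sketched below. It is a hypothetical scheme, not necessarily the one used in the experiments: similarity is taken to be grid proximity, and each cell copies the action of the nearest of a few randomly placed seed cells. The `agreement` score (the fraction of adjacent cell pairs sharing an action) is about 1/4 in expectation for unbiased random policies and much higher for the biased ones.

```python
import random
from typing import Dict, List, Tuple

Pos = Tuple[int, int]
ACTIONS = ("UP", "RIGHT", "DOWN", "LEFT")


def unbiased_policy(cells: List[Pos], rng: random.Random) -> Dict[Pos, str]:
    return {c: rng.choice(ACTIONS) for c in cells}


def biased_policy(cells: List[Pos], rng: random.Random, n_seeds: int = 4) -> Dict[Pos, str]:
    """Hypothetical bias: a few seed cells get random actions, and every cell
    takes the action of its nearest seed (Manhattan distance)."""
    seeds = [(c, rng.choice(ACTIONS)) for c in rng.sample(cells, n_seeds)]

    def nearest_action(c: Pos) -> str:
        return min(seeds, key=lambda s: abs(s[0][0] - c[0]) + abs(s[0][1] - c[1]))[1]

    return {c: nearest_action(c) for c in cells}


def agreement(policy: Dict[Pos, str]) -> float:
    """Fraction of horizontally/vertically adjacent cell pairs with the same action."""
    same = total = 0
    for (r, c), a in policy.items():
        for nb in ((r + 1, c), (r, c + 1)):
            if nb in policy:
                total += 1
                same += policy[nb] == a
    return same / total


rng = random.Random(0)
cells = [(r, c) for r in range(10) for c in range(10)]
a_unbiased = agreement(unbiased_policy(cells, rng))
a_biased = agreement(biased_policy(cells, rng))
print(round(a_unbiased, 2), round(a_biased, 2))
```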

Progress-Based Guidance

The second learning bias we consider is guidance based on the agent’s progress. Unlike the base case, where the agent always receives one of the fixed timeout reward values described above when it fails to achieve the local goal within the time limit, the agent now has some probability of receiving a reward value proportional to the Manhattan distance it has traveled since the beginning of the episode. To be precise:

This way, policies leading to more progress (albeit not necessarily towards the local goal) will be encouraged.
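The following is a hypothetical form consistent with the description above, not the paper’s exact expression: on a timeout, with probability q the value is proportional to the Manhattan distance between the episode’s start and end positions (our interpretation of ‘distance traveled’), and otherwise it is the base-case timeout value. The parameters `q`, `scale` and `base_value` are illustrative.

```python
import random
from typing import Tuple

Pos = Tuple[int, int]


def manhattan(a: Pos, b: Pos) -> int:
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def timeout_value(start: Pos, end: Pos, base_value: float, rng: random.Random,
                  q: float = 0.5, scale: float = 1.0) -> float:
    """Hypothetical progress-based timeout value: with probability q, a value
    proportional to the start-to-end Manhattan distance; otherwise the
    base-case timeout value."""
    if rng.random() < q:
        return scale * manhattan(start, end)
    return base_value


rng = random.Random(0)
# An episode that ended 5 cells from its start versus one that ended where it began.
far = [timeout_value((4, 4), (0, 3), -1.0, rng) for _ in range(1000)]
stuck = [timeout_value((4, 4), (4, 4), -1.0, rng) for _ in range(1000)]
print(sum(far) / len(far) > sum(stuck) / len(stuck))
```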

Sub-Optimal Guidance

Finally, we consider a case of sub-optimal guidance that encourages the agent to explore a sub-optimal trajectory. As we have mentioned, both reaching the food source from home and carrying the food home require the agent to go through one of the two tunnels. However, if the agent goes through the left tunnel, it has to travel a longer distance. Suppose that we prefer the agent to take the shorter route, but we only know the route that goes through the left tunnel; as a result, we sub-optimally encourage the agent to explore the left tunnel. To guide the agent to take the left tunnel, a Boolean variable $l$ is introduced as an indicator of whether L has been visited since the last visit to F or H. Now we have elements in the reward space, corresponding to possible local goals. The reward transition differs from the base case in that if the agent has already visited L when the local goal $f$ or $\neg f$ times out, $l$ becomes true, and the agent will receive a reward value of with probability, and with probability. To express our preference for the shorter route, the agent receives a reward value of (instead of ) when it reaches F (when $f$ is true) or H (when $f$ is false) through the left tunnel.

3.3 Results

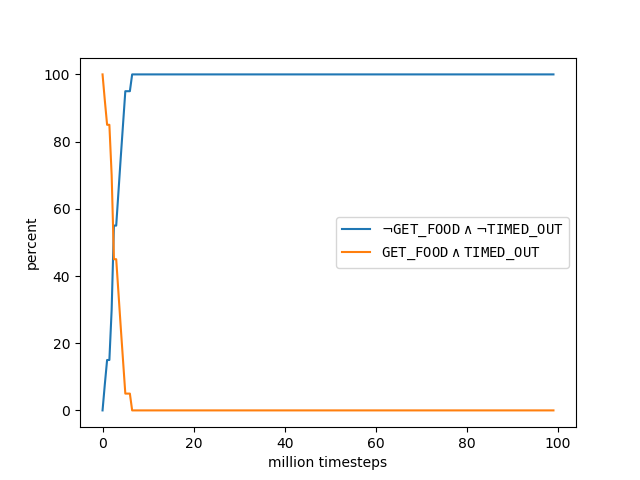

Figure 5 shows the learning curves for reward state $f \wedge \neg e$ with progress-based guidance. The $x$-axis is the timesteps (in millions), and the $y$-axis is the percentage of times the agent transitions into a particular next reward state starting from $f \wedge \neg e$. A next reward state of $\neg f \wedge \neg e$ means that the agent successfully reached F within the time limit, and a next reward state of $f \wedge e$ means that the agent failed to do so. As we can see, with unbiased policy, it took the agent around million timesteps to achieve success rate, while with biased policy, this only took around million timesteps.

Figure 6 shows the learning curves for reward state $f \wedge \neg e \wedge \neg l$ with the sub-optimal guidance described in Section 3.2. As in Figure 5, the $x$-axis is the timesteps (in millions), and the $y$-axis is the percentage of times the agent transitioned into a particular next reward state starting from $f \wedge \neg e \wedge \neg l$. A next reward state of $\neg f \wedge \neg e \wedge \neg l$ means that the agent successfully reached F within the time limit; a next reward state of $f \wedge e \wedge l$ means that the agent failed to reach the food source but was able to find a way to the left tunnel; and a next reward state of $f \wedge e \wedge \neg l$ means that the agent reached neither the left tunnel nor the food source within the time limit. As we can see, for both unbiased and biased policy, learning is much slower than with progress-based guidance. This is likely due to the much sparser guidance signal: the agent receives guidance only when it reaches the left tunnel. For the unbiased policy case, success rate was not achieved within million timesteps, but we can clearly see that exploration around the left tunnel was encouraged as intended. For the biased policy case, the agent was able to reach success rate after million timesteps. But was the agent able to figure out the optimal route, or did it only learn to take the sub-optimal route as guided? Recall that the agent receives a reward value of if it takes the optimal route, and a reward value of if it takes the sub-optimal route. As shown in Figure 7, although the agent was taking the sub-optimal route by million timesteps, when it had just learned to reach the food source reliably, it was eventually able to figure out the optimal route by million timesteps.

4 Conclusions

Lifelong reinforcement learning is sometimes viewed as a multi-task reinforcement learning problem (Abel et al., 2018), where the agent must learn to solve tasks sampled from some distribution $\mathcal{D}$. The agent is expected to (explicitly or implicitly) discover the relation between tasks, and generalize its policy to unseen tasks from $\mathcal{D}$. The focus is therefore on the transfer learning (Taylor & Stone, 2009) and continual learning (Ring, 1998) aspects of lifelong reinforcement learning.

In this paper, I provided a systems view on lifelong reinforcement learning. In particular, I showed that the reward in a lifelong reinforcement learning system can be a general language, and that the language needs to be designed holistically with the learning algorithm. A prototype lifelong reinforcement learning system was given, with an emphasis on how learning bias can be embedded into the learning system through the synergy of the reward language and the learning algorithm.

Acknowledgements

The author would like to thank Gaurav Sharma (Borealis AI) for his comments on a draft of the paper.

References

- Abel et al. (2018) Abel, D., Arumugam, D., Lehnert, L., and Littman, M. L. State abstractions for lifelong reinforcement learning. In Proceedings of the 35th International Conference on Machine Learning, ICML 2018, Stockholmsmässan, Stockholm, Sweden, July 10-15, 2018, pp. 10–19, 2018.

- Dietterich (2000) Dietterich, T. G. Hierarchical reinforcement learning with the MAXQ value function decomposition. J. Artif. Intell. Res., 13:227–303, 2000.

- Icarte et al. (2018) Icarte, R. T., Klassen, T. Q., Valenzano, R. A., and McIlraith, S. A. Using reward machines for high-level task specification and decomposition in reinforcement learning. In Proceedings of the 35th International Conference on Machine Learning, ICML 2018, Stockholmsmässan, Stockholm, Sweden, July 10-15, 2018, pp. 2112–2121, 2018.

- Parr & Russell (1997) Parr, R. and Russell, S. J. Reinforcement learning with hierarchies of machines. In Advances in Neural Information Processing Systems 10, [NIPS Conference, Denver, Colorado, USA, 1997], pp. 1043–1049, 1997.

- Ring (1998) Ring, M. B. Child: A first step towards continual learning. In Learning to Learn, pp. 261–292. 1998. doi: 10.1007/978-1-4615-5529-2_11. URL https://doi.org/10.1007/978-1-4615-5529-2_11.

- Singh et al. (2004) Singh, S. P., Barto, A. G., and Chentanez, N. Intrinsically motivated reinforcement learning. In Advances in Neural Information Processing Systems 17 [Neural Information Processing Systems, NIPS 2004, December 13-18, 2004, Vancouver, British Columbia, Canada], pp. 1281–1288, 2004.

- Sutton & Barto (2018) Sutton, R. S. and Barto, A. G. Reinforcement Learning: An Introduction. The MIT Press, second edition, 2018.

- Sutton et al. (1999) Sutton, R. S., Precup, D., and Singh, S. P. Between MDPs and semi-MDPs: A framework for temporal abstraction in reinforcement learning. Artif. Intell., 112(1-2):181–211, 1999.

- Taylor & Stone (2009) Taylor, M. E. and Stone, P. Transfer learning for reinforcement learning domains: A survey. J. Mach. Learn. Res., 10:1633–1685, 2009.

- Watkins & Dayan (1992) Watkins, C. J. C. H. and Dayan, P. Technical note: Q-learning. Machine Learning, 8:279–292, 1992.

- White (1982) White, D. Multi-objective infinite-horizon discounted Markov decision processes. Journal of Mathematical Analysis and Applications, 89(2):639–647, 1982. ISSN 0022-247X.