Solving Partial Differential Equations on Evolving Surfaces via the Constrained Least-Squares and Grid-Based Particle Method

Abstract

We present a framework for solving partial different equations on evolving surfaces. Based on the grid-based particle method (GBPM) [18], the method can naturally resample the surface even under large deformation from the motion law. We introduce a new component in the local reconstruction step of the algorithm and demonstrate numerically that the modification can improve computational accuracy when a large curvature region is developed during evolution. The method also incorporates a recently developed constrained least-squares ghost sample points (CLS-GSP) formulation [37, 38], which can lead to a better-conditioned discretized matrix for computing some surface differential operators. The proposed framework can incorporate many methods and link various approaches to the same problem. Several numerical experiments are carried out to show the accuracy and effectiveness of the proposed method.

1 Introduction

This paper proposes a new numerical method to solve a class of parabolic partial differential equations (PDEs) on evolving surfaces. In particular, we consider the following equation

| (1) |

where is a surface evolving under the velocity flow , is the material derivative given by , the vector represents a flux and is a source term. This equation can also be rewritten as

where the quantity is the mean curvature of the surface, and are the normal velocity of the interface motion and the velocity tangential to the interface, respectively, satisfying the decomposition . For motions in the normal direction, the equation can be further reduced to

We can use various choices of the flux function . Here we only consider two cases with the diffusive flux given by where is a function depending on the quantity defined on the interface and also a diffusion parameter . In particular, if we choose , equation (1) leads to the advection-diffusion equation with a source term given by

| (2) |

Another widely used choice of the flux is where is the so-called double well potential function and represents physically a chemical potential in modeling multiphase fluid flows. This leads to the Cahn-Hilliard equation on moving surfaces

These equations have many applications and are especially important in biological, physical, and engineering sciences. For example, the growth of a solid tumor has been modeled by a coupled system of a reaction-diffusion system with a curvature-dependent evolution law [5, 3, 9]. The Cahn-Hilliard has been used for simulating pattern formation and selection [24, 1]. The advection-diffusion equation can model the multi-phase interfacial flows with a surfactant as discussed in [36, 35].

There are various difficulties in developing a numerical strategy for the problems. The representation of the interface itself already plays an essential role since the topology of the surface might change with evolution. A typical Lagrangian method based on a triangulation of the interface might require remeshing when the dynamic involves significant deformation. This might lead to some computational challenges in the implementation. Mesh surgery might also be needed to carefully reconnect the triangulation of the surface when there is any topological change in the evolution. Implicit methods based on the Eulerian formulation have recently become more attractive. One of the most widely used representations is based on the level set method [27, 26]. The evolution of the interface is modeled by the numerical solution of a Hamilton-Jacobi equation, which is a nonlinear first-order PDE. More recently, we have developed a grid-based particle method (GBPM) and a cell-based particle method (CBPM) in [18, 13] to represent and model the interface dynamics. These grid-based or cell-based representations sample the surface by meshless Lagrangian particles according to an underlying uniform Eulerian mesh. This automatically provides a set of quasi-uniform sampling points of the interface. As the interface evolves according to the motion law, these unconnected non-parametrized sampling points will be continuously updated and can naturally capture changes in the interface topology.

The second phase in the development is to have a numerical approach for solving PDEs on a static surface given by a specific interface representation. For example, based on the implicit Eulerian representation, some projection operators have been introduced to extend a PDE off the interface to a small neighborhood [2, 11]. Without the projection operator, the extension idea has also been used in [34] for solving the surface eikonal equation using the fast sweeping method [32, 39]. Based on the level set representation, a so-called closest point method (CPM) has been developed in [30, 22, 23, 21] by replacing the surface PDE by an evolution equation approach. Some methods have been proposed for general surfaces represented by point clouds. For example, there are methods based on the radial basis functions formulation [29, 31], a local mesh method [14], a virtual grid difference method [33], etc.

A more challenging task is an approach to couple the above two tasks together based on techniques like operator-splitting so that one can develop a numerical method for solving PDEs on evolving surfaces. Some fully explicit Lagrangian methods have been developed in [7, 1, 6] based on a finite element formulation on the triangulated surface. A fully implicit Eulerian method based on the level set method has been proposed in [36]. A numerical approach based on the GBPM has been discussed in [19, 16]. Coupling the GBPM, the CPM can also solve PDEs on moving surfaces [28].

In this paper, we develop a general framework for solving PDEs on evolving surfaces represented by the GBPM representation. We closely follow the original GBPM in [18, 19, 16] for both the interface representation and the evolution. Yet, we discuss an improvement in the implementation of the local reconstruction. We must collect a small set of neighbors in the original algorithm for the local interface reconstruction. These neighboring sample points must be chosen carefully for an accurate approximation. One constraint is that we must sample the same interface segment. When the curvature of the interface is too large compared to the underlying mesh size, one might collect footpoints from different segments of the interface, and it will result in a wrong local reconstruction of the surface. In the original work, we proposed checking if the associated normal vectors from these footpoints were pointing in a similar direction. When the interface developed a high curvature region compared to the size of the underlying mesh, however, this check does not provide enough penalty on far away sampling points. This paper introduces a simple weighting strategy that can naturally reduce the contributions from those footpoints from regions close to a different segment.

The second main contribution of the paper is to incorporate a CLS-GSP approach for approximating local derivatives [37, 38]. In the original GBPM implementation in [18], the surface differential operator is approximated simply by the polynomial least squares fitting. Although the local approximation accuracy is relatively easy to achieve in local polynomial reconstruction, in some applications, it is more important to construct a discretization on the sampling points so that the resulting linear system can be efficiently inverted stably. For example, when applied to the Laplace-Beltrami operator, one can obtain an M-matrix system using a constrained quadratic programming optimization technique to enforce consistency and diagonal dominance after the discretization [20]. In a recent work [33], we have developed a modified virtual grid difference (MVGD) discretization. It has led to a more diagonal dominant and better conditioned linear system, which can be efficiently and robustly solved by any existing fast solver for elliptic PDEs. In this work, we propose to follow the CLS approach for approximating local derivatives as developed in [37, 38]. Numerically, the method can give a better-conditioned discretization of the Laplace-Beltramni operator.

The framework that we are proposing integrates a large class of methods. This allows us to link various current approaches to the same problem. For example, we will also demonstrate that the so-called local tangential lifting (LTL) method [4] and a recently developed CLS method with RBF [37, 38] can be easily incorporated with our framework.

This paper is organized as follows. We first summarize the GBPM as introduced in [18, 17, 16] in Section 2. We will then introduce a new modification step in the local reconstruction at the end of the Section. In Section 3, we introduce our proposed framework for various local reconstructions and explain how the method couples with the original GBPM. In Section 4, we show various numerical experiments to demonstrate the proposed approach’s effectiveness and accuracy. Finally, we summarize our proposed method and discuss some possible future works.

2 Grid Based Particle Method (GBPM)

2.1 The original formulation

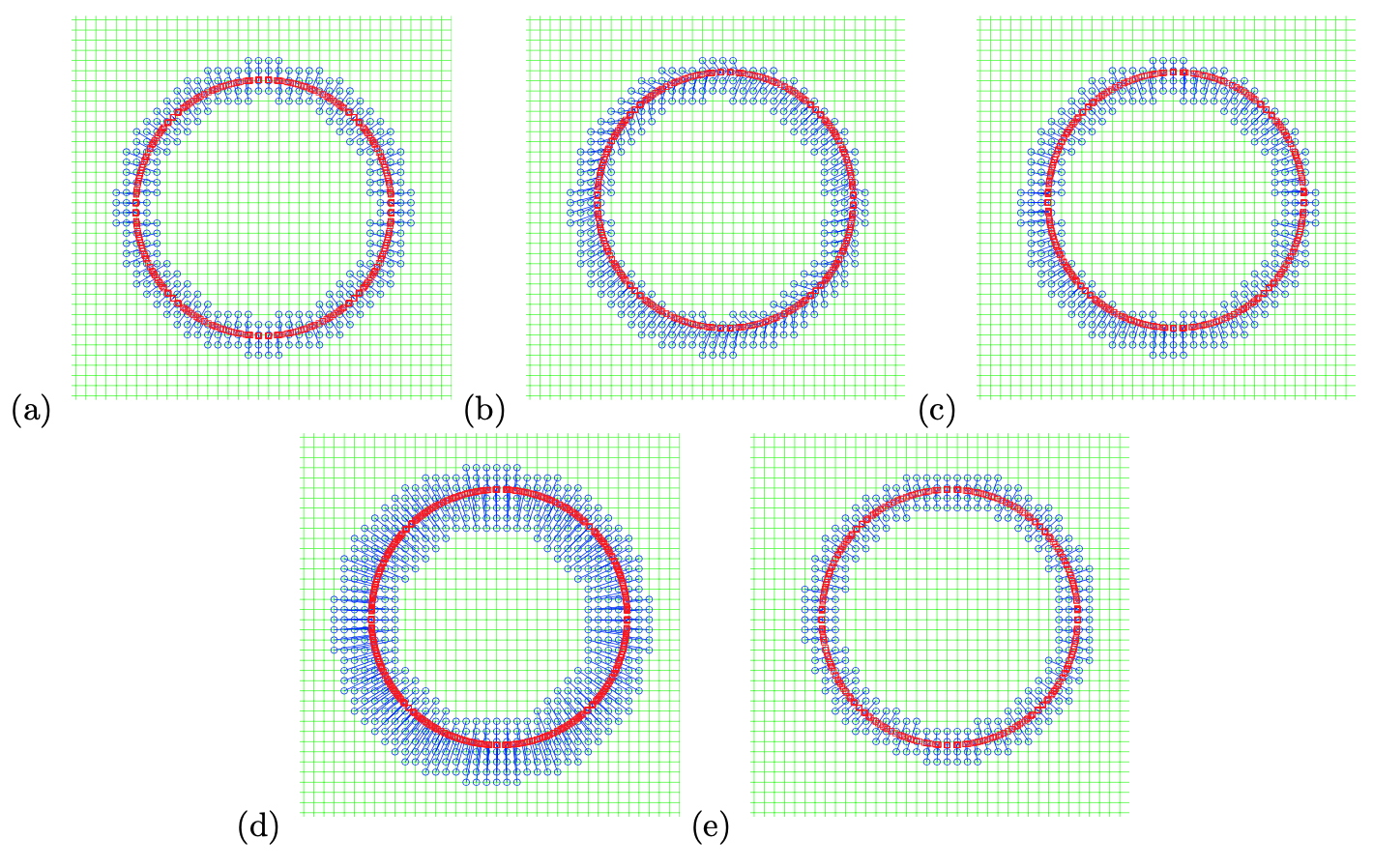

This section briefly summarizes the Grid Based Particle Method (GBPM) proposed and developed in a series of studies [18, 17, 16]. We refer the interested readers to the reference and thereafter. The overall algorithm of the GBPM is given as follows, and a graphical illustration is given in Figure 1.

Algorithm:

-

1.

Initialization [Figure 1 (a)]. Collect all grid points in a small neighborhood (computational tube) of the interface. From each of these grid points, compute the closest point on the interface. We call these grid points active and their corresponding particles on the interface footpoint. The interface is represented by these meshless footpoints (particles).

-

2.

Motion [Figure 1 (b)]. Move all footpoints according to a given motion law.

-

3.

Re-Sampling [Figure 1 (c)]. For each active grid point, re-compute the closest point to the interface reconstructed locally by those particles after the motion in step 2.

-

4.

Updating the computational tube [Figure 1 (d-e)]. Activate any grid point with an active neighbor and find their corresponding footpoints. Then, inactivate grid points that are far away from the interface.

-

5.

Adaptation (Optional). Locally refine the underlying grid cell if necessary.

-

6.

Iteration. Repeat steps 2-5 until the final computation time.

In the GBPM, we represent the interface by meshless particles associated with an underlying Eulerian mesh. Each sampling particle on the interface is chosen to be the closest point from each underlying grid point in a small neighborhood of the interface. This one-to-one correspondence gives each particle an Eulerian reference during the evolution.

In the first step, we define an initial computational tube for active grid points and use their corresponding closest points as the sampling particles for the interface. A grid point is called active if its distance to the interface is smaller than a given tube radius, and we label the set containing all active grids . We associate the corresponding closest point on the interface to each active grid point. This particle is called the footpoint associated with this active grid point. Furthermore, we can also compute and store certain Lagrangian information of the interface at the footpoints, including normal, curvature, and parametrization, which will be helpful in various applications.

This representation is illustrated in Figure 1(a) using a circular manifold as an example. We plot the underlying mesh in a solid line, all active grids using small circles, and their associated footpoints on the manifold using squares. To each grid point near the interface (blue circles), we associate a footpoint on the interface (red squares). The relationship of each of these pairs is shown by a solid line link. To track the motion of the interface, we move all the sampling particles according to a given motion law. This motion law can be very general. Suppose the interface is moved under an external velocity field. Since we have a collection of particles on the interface, we move these points just like all other particle-based methods, which is simple and computationally efficient. We can solve a set of ordinary differential equations using a high-order scheme, which gives a very accurate interface location. For more complicated motions, the velocity may depend on the geometry of the interface. This can be done through local interface reconstruction.

It should be noted that a footpoint after motion may not be the closest point on the interface to its associated active grid point anymore. For example, Figure 1 (b) shows the location of all particles on the interface after the constant motion with a small time step. As we can see, these particles on the interface are no longer the closest point from these active grid points to the interface. More importantly, the motion may cause those original footpoints to become unevenly distributed along the interface. This may introduce stiffness when particles are moving toward each other and a large error when particles are moving apart. To maintain a quasi-uniform distribution of particles, we need to resample the interface by recomputing the footpoints and updating the set of active grid points () during the evolution. During this resampling process, we locally reconstruct the interface, which involves communications among different particles on the interface. This local reconstruction also provides geometric and Lagrangian information at the recomputed footpoints on the interface. The key step in the method is a least squares approximation of the interface using polynomials at each particle in a local coordinate system, , with the associated particle as the origin. Using this local reconstruction, we find the closest point from this active grid point to the local approximation of the interface. This gives the new footpoint location. Further, we also compute and update any necessary geometric and Lagrangian information, such as the normal vector, curvature, and possibly an updated interface parametrization at this new footpoint.

2.2 A new weighting strategy in the local reconstruction

We approximate the surface locally using a polynomial from each grid point . For example, in the three dimensions, we could use a second-order polynomial given by

where are coordinates in the local coordinate system, . The original idea in [18] fits the polynomial using a collection of -footpoints, with , in the neighborhood of . With these points, we determine the least squares solution of the linear system:

This set of footpoints in the local reconstruction must be chosen carefully. As discussed in the original GBPM formulation, this set of points has to satisfy the following conditions. The first one is that these points have to be distinct. In the previous formulation, we have conditioned that these footpoints must be separated by at least a certain distance on the local tangent plane. This constraint avoids putting an extensive amount of weight on these sampling points. The second constraint is that we would like to sample the same segment on the interface. When the curvature of the interface is too large compared to the underlying mesh size, one might collect footpoints from different interface segments, which will result in a wrong local reconstruction of the surface. In the original work, we proposed checking if the associated normal vectors from these footpoints were pointing in a similar direction. In particular, for each of these footpoints, has an associated vector corresponding to the surface normal before the motion. We have checked if for some angle .

The above strategies are in some sense imposing some hard constraints in the selection phase. We either use the neighboring sampling point or eliminate it when choosing the subset of footpoints in the local reconstruction step. This paper proposes relaxing this by imposing some soft constraints. The first condition on the well-separation can be partly handled by the moving least squares (MLS) method [15], which introduces a weighting to the footpoints based on the distance between points on the tangent plane. We will not concentrate on this part. To avoid information from different segments on the surface, instead of simply getting rid of those points pointing at an angle larger than , we propose to add a weighting in the least squares further to emphasize the contribution from footpoints points with similar normal directions. Mathematically, we let be the closest footpoint to the grid point and denote those normal vectors at the footpoints before motion by . We now introduce a weight to each collected footpoints given by

| (3) |

Once we have this set of weights, the -th equation in the least squares system will be modified to

3 Our proposed framework

This section discusses our proposed framework for solving PDEs on an evolving surface. We will first discuss the mathematical background of the problem and discuss all the necessary quantities we have to compute. Then, we introduce our CLS method approach for local approximation.

3.1 Background

Let be a regular surface in parametrized by . Mathematically, the surface gradient of a smooth function is given by

where and are the coefficients of the first fundamental form of the regular surface , the quantities and . For any local vector field , we have the surface divergence defined as

The surface Laplacian (Laplace-Beltrami) operator is defined as . In the local representation, it can be expressed as

Therefore, to design a numerical method for computing any differential operator on the surface, we have to approximate the first fundamental form of the surface and also provide a way to approximate the derivatives in the local coordinate systems.

3.2 The CLS-GSP reconstruction

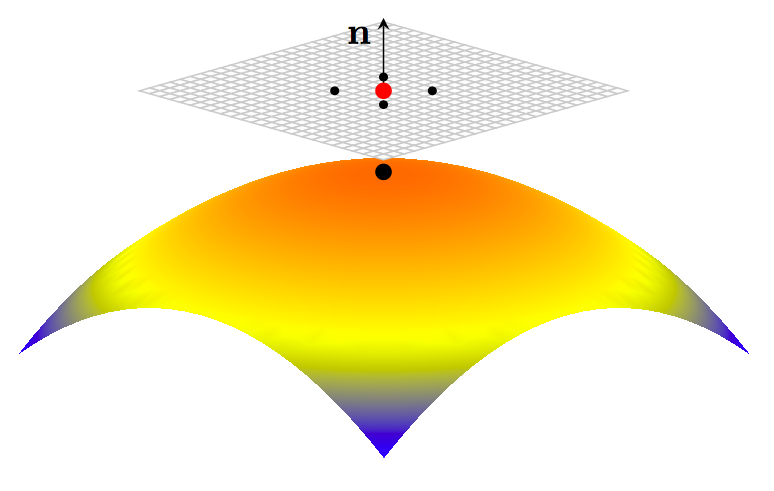

The first issue is to design a good local representation of surface . One natural representation is the local tangential projection as shown in Figure 2. We assume that a set of sampling points represents the interface. These sampling points can be obtained from various approaches. For example, it can be the GBPM representation we summarized above, a typical point cloud dataset, or an interface represented by triangulation. The representation itself does not play a crucial role in the following discussions. Assume further that for any individual sampling point , we can collect a set of -neighboring sampling points denoted by . It is certainly easier to have the interface represented by a triangulation since we have the connectivities of all vertices (the sampling points) on the interface. For the GBPM representation, we can collect these neighboring points through the underlying mesh. Some KNN algorithms can also be applied to point cloud datasets.

For the sampling point , we let be the corresponding (approximated) normal vector of the surface. With and its tangent plane, we introduce a new local coordinate system with the -direction representing the component in the -direction and becomes in the new coordinate system. In this local coordinate system, a function can locally approximate the surface, and we denote it as . Once we have this local approximation , we can approximate the derivatives and for the first fundamental form in the surface differential operators.

Numerically, this leads to one of the following problems. The first one is an interpolation problem: Given for , determine an interpolant which passes through all data points such that . One idea is to use the polynomial interpolation. For example, when the neighbors are carefully chosen such that , we can use a second-order polynomial to interpolate these data points. This approach better estimates the local error if the interpolation uses the polynomial basis. However, we generally have no control over the distribution of from the projection of the sampling points onto the local tangent plane. In particular, some of these projection points could be very close to each other or could collide on the tangent plane. Designing a good subset of points might be challenging, which could lead to a good interpolation problem. Therefore, we do not consider this class of approach further.

The second is the least squares approximation problem: Given for , determine a function that best explains the data points. Mathematically, we obtain the set of parameters to minimize the mismatch between the data points and where is the space for the basis, i.e. we solve

The choice of the basis is clearly not unique. For example, the original GBPM developed in [18, 17, 16] chose the standard polynomial basis for . Of course, one could also replace the standard least squares approximation with a weighted least squares approach so that those points far away from do not contribute equally like the point itself.

In a recent work [37, 38], we have developed a general strategy to impose a hard constraint in the least squares reconstruction by enforcing the least squares reconstruction passing through exactly. We call this a CLS approach. Under this constraint, the least squares method can be modified to fit the function value with functions in the form of . This only requires a simple modification of the standard least squares approximation method. We will give out more information below. Let be some linear differential operator. We can also follow a similar idea to obtain an approximation based on the CLS, i.e.

with the coefficient .

The choice of the basis is not unique. In this paper, we present two different sets of bases and give the technical implementation details. The first is the simple polynomial basis, which leads to an approximation result similar to the original GBPM method for PDEs but with an extra hard constraint at the target location during reconstruction. The second basis follows our previous development in [37, 38], which uses the radial basis functions. A different basis will give slightly different results. We are not trying to conclude which of the following two basis sets will yield better numerical results in all applications. Still, we will only show some examples to explain better the framework that we are proposing.

3.2.1 Standard polynomial basis

The first set of basis in consideration is the standard polynomial basis. The weights in the numerical approximation satisfy

where depends on the order of those polynomials in the basis , the matrix is the corresponding polynomial basis coefficients, and is the pseudo-inverse of the matrix , which can be easily computed using the SVD or the QR algorithm. We only use the second-order least squares polynomial fitting at . A similar formulation can be easily extended to any higher-order polynomial fitting. Set

where . Then we have

This numerical scheme is the so-called local tangential lifting (LTL) developed in [4]. Some nice properties can be proved under the standard polynomial basis. We do not give the full numerical details of this approach but refer the interested readers to the reference and after that.

3.2.2 Radial basis functions

An interesting set of basis is the radial basis functions (RBF). Since the projection of the sampling points from the local neighborhood could collide on the tangent plane, this could sometimes create numerical instability in the standard RBF interpolation. In this paper, we follow [37, 38] and apply the CLS-GSP method to approximate the local surface and any function defined on the evolving manifold.

We propose to convert the interpolation problem in the standard RBF method to a regression problem on a set of prescribed sample locations. A simple two-dimensional demonstration is shown in Figure 3. Because we do not require the interpolating values at all sampling points to match the function values, we relax the constraint by minimizing the mismatch at a different set of sampling locations in the least squares sense. We call this set of new sampling locations the ghost sample points (as shown using the red triangles) and denote them by . The choice of these ghost sample points is not unique. Here, for any target point , we propose to pick ghost sample points uniformly surrounding it. In this paper, we collect 16 local neighbors for each sampling point in various local reconstructions (i.e., ). In Figure 3, the projection of these sampling points onto the local tangent plane is plotted using blue squares and the black circle (representing the target location itself). Then we apply 8 ghost sample points (i.e. ) distributed uniformly at a distance from each individual sampling point (plotted by the red triangles).

Following the idea of the CLS, we enforce the least squares reconstruction passing through the function value exactly. Mathematically, this constraint implies

where is the polynomial basis. If we define

we find that this function for any choice of and . In other words, any function in the form of

passes through exactly for any and . Then, we follow an idea similar to the LS-RBF to determine the coefficients and in the least squares sense. We redefine a new kernel function by

Then, the coefficients can be determined by obtaining the least squares solution to the following system of linear equations

| (4) |

where for and .

Further, to obtain an approximation to the differential operator, we first let be the coefficient matrix on the left hand side of the equation (4). Then we have

| (5) |

where denotes the pseudo-inverse of the matrix . Note that

for any differential operator. With the weights obtained, we have

In particular, if we use the Gaussian kernel given by and set

we can approximate the coefficients by

Omitting the last columns of coefficients, we have

Finally, the partial derivatives , , , and can be easily constructed using the same set of coefficients. Further, with both and approximated, we can determine the coefficients in the first fundamental form using

Note that the coefficients depend only on the distribution of the projected points on the local tangent place but not on the function values of . We can apply the same set of coefficients to compute and at . In particular, we have

Remark 1.

Indeed, to get a more accurate approximation of the surface differential operator, we need to collect more neighboring points for each sample point. If the sampling density of the interface cannot grow with accuracy, we have to go further away to collect enough neighbors in the least squares approximation. However, this will lower the accuracy of the local approximation of the interface. Therefore, we need to assume that the number of sampling points has to be large enough, and the sampling density must be uniform.

Remark 2.

In the discussions above, we only require the fitting function to pass through the target point exactly. Except for the target point, the remaining samples are treated equally with the same weighting in the least squares fitting. However, as discussed in Section 2.2, we introduce an extra weighting to emphasize the contributions from sample points based on the angle of its normal direction compared to the target one.

3.3 The overall algorithm for solving PDEs on evolving surfaces

To end this section, we summarize our overall approach to solving PDEs on evolving surfaces. The original GBPM handles both the interface sampling and the interface motion. At the same time, the proposed CLS methods help to approximate the surface, the local reconstructions of the function value on the interface, and the coefficients in the first fundamental form for the surface differential operator.

Algorithm:

-

1.

Initialization.

-

2.

Generate the surface operators.

-

(a)

Determine neighboring grids (inside the computational band) and collect their corresponding footpoints. Denote the number of points by .

-

(b)

Construct the local coordinate system at each sampling point.

-

(c)

Apply the CLS method to construct the weights for .

-

(d)

Generate the surface differential operators.

-

(a)

-

3.

Update the function value by solving the time-dependent surface PDEs.

-

4.

Update the location of foot points according to the motion law.

-

5.

Follow the GBPM local reconstruction, including resampling new footpoints and updating the corresponding function value.

-

6.

Back to Step 2, repeat until the stopping time.

4 Numerical experiments

In this section, we present several numerical tests to demonstrate the effectiveness of the proposed method. We will first solve some PDEs on static surfaces represented by the GBPM to show the performance of the CLS. Then, we add different motion laws to the interface for various applications. The examples in this section will be arranged in the following way. We will first look at the effect on the new weightings in the local reconstruction in Section 4.1 without consideration of any PDE on the evolving surface. Then, we concentrate on solving PDEs on a static surface sampled by the GBPM representation in Section 4.2. This section tests the ability and effectiveness of the newly proposed CLS-GSP method. We consider in Section 4.3 a simple example for solving an advection-diffusion equation on an evolving surface where the exact solution to the problem can be found. We will then present some simulations of various equations in Section 4.4 to Section 4.6 to demonstrate the effectiveness of the proposed approach.

4.1 The modified GBPM

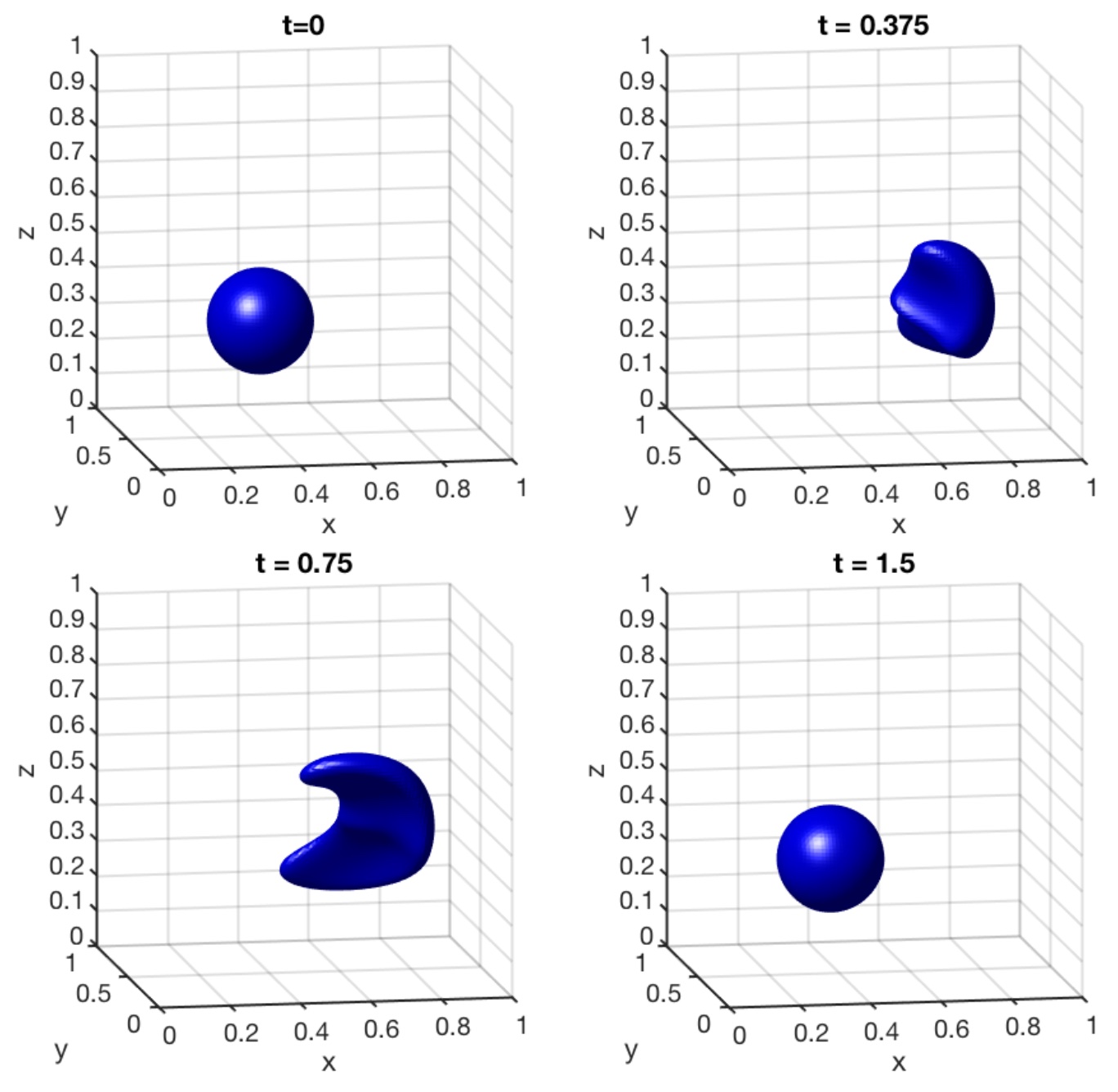

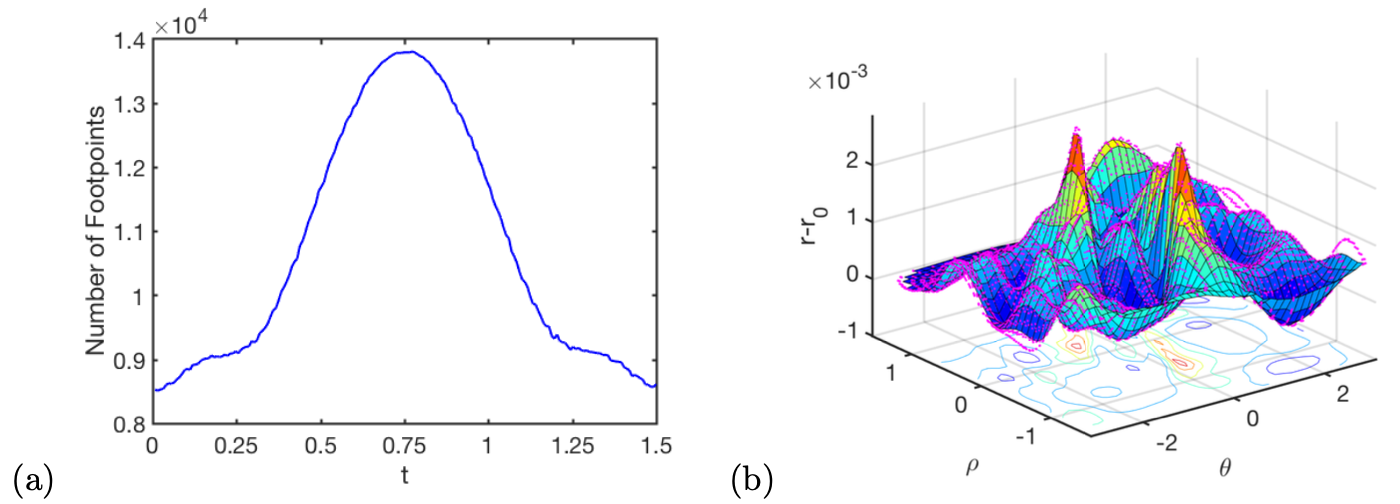

Our first example is the motion of a sphere under a single vortex flow [10, 12, 25, 18]. This is a challenging test case that can test the stability of our GBPM with the modified local reconstruction. The velocity field is given by

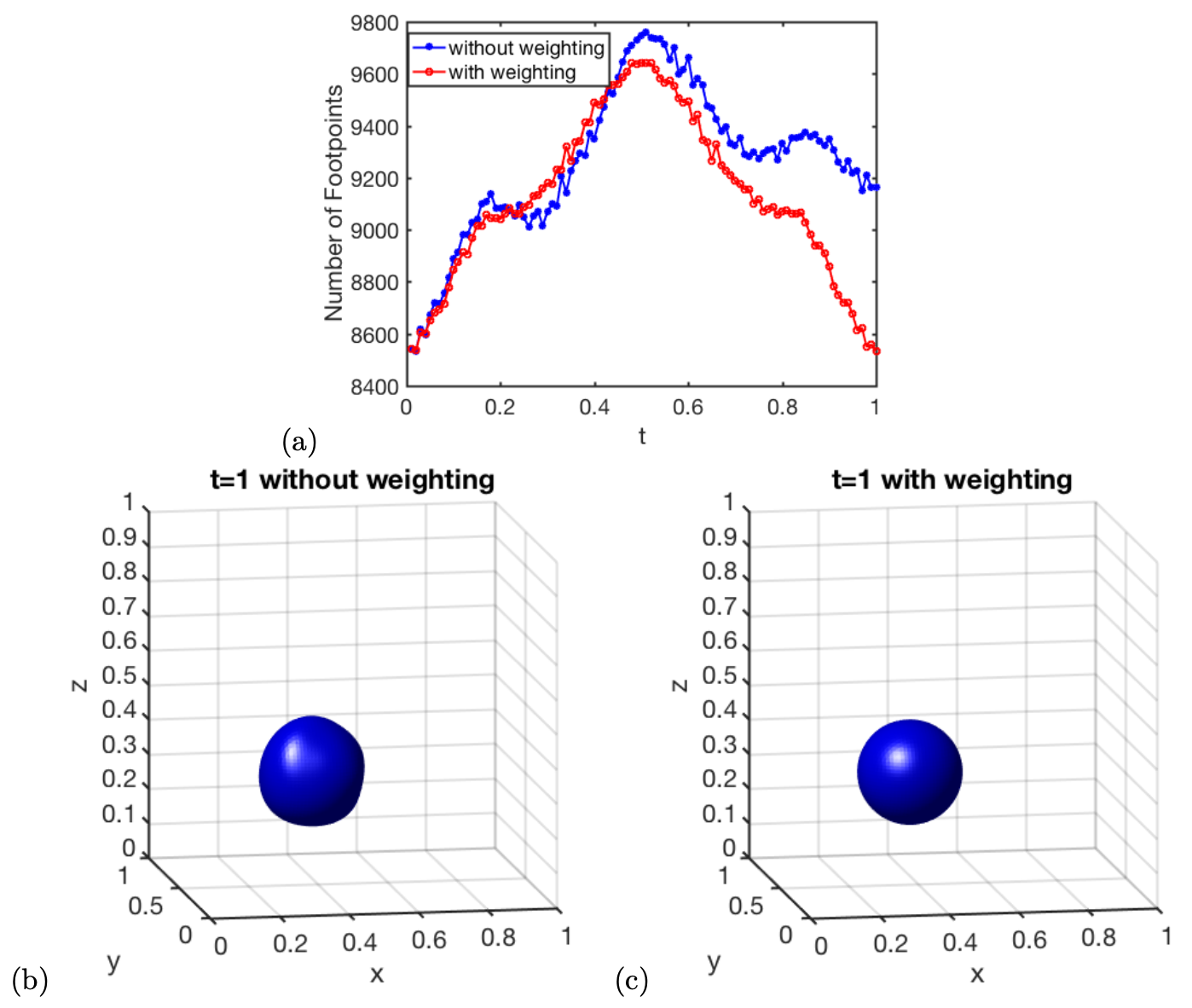

for some given final time . The initial shape is a sphere centered at with radius . In Figure 4, we compute the solution at and with using . The mean distance from the final footpoints to the point at the final time is with the standard deviation . The largest absolute error in the location is . There is a total of initially at . The number gradually increases to the maximum value when , finally dropping to at the final time. Figure 5 shows the detailed evolution of footpoints and the error in the sphere’s radius at the final time.

To see the effect of the new weighting (3) introduced in the local reconstruction, we compare two numerical solutions in Figure 6. In this example, we repeat the similar setup but choose a slightly smaller final time with . Figure 6 shows the changes in the number of footpoints and the solution at the final time . For the original scheme without any weighting in the local reconstruction, we found that the mean distance from the final footpoints to the center at the final time is with the standard deviation . The modified local reconstruction gives a more accurate solution with the corresponding mean distance with the standard deviation .

4.2 The CLS method for solving PDEs on static surfaces represented by the GBPM

In this section, we give two numerical examples to show that the proposed CLS method works well in solving PDEs on surfaces sampled by the GBPM representations. The first example is the Cahn-Hilliard equation on the unit sphere. The surface Cahn-Hilliard equation has the form

| (6) |

where is the Cahn-Hilliard number, is the Peclet number and is a diffusion parameter. In this example, we solve the equation on a unit sphere with the parameters in the equation given by , , and the double well potential function . Our numerical solution at different times is shown in Figure 7. The initial condition is a uniform random perturbation with a magnitude of 0.01, with an average concentration of of 0.5.

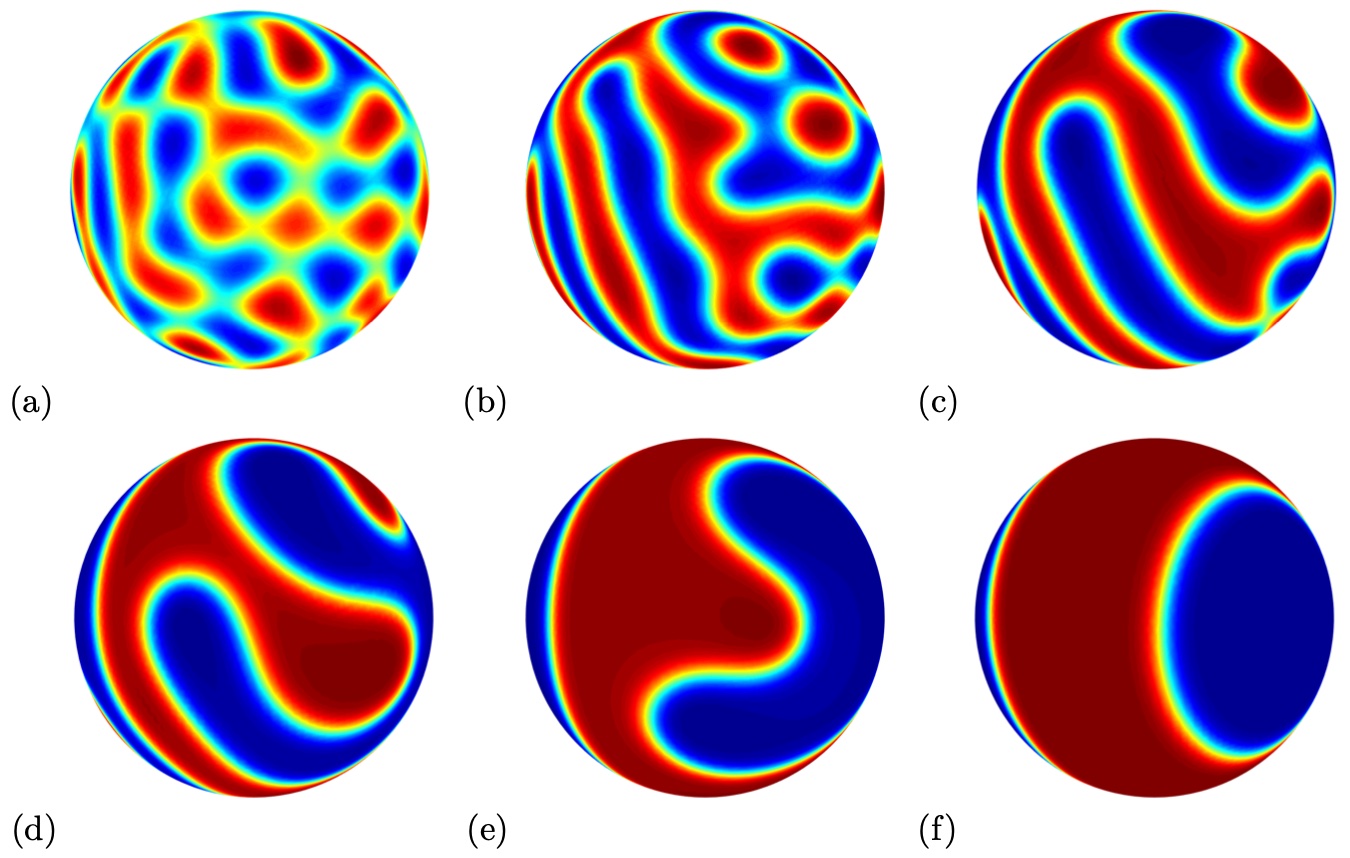

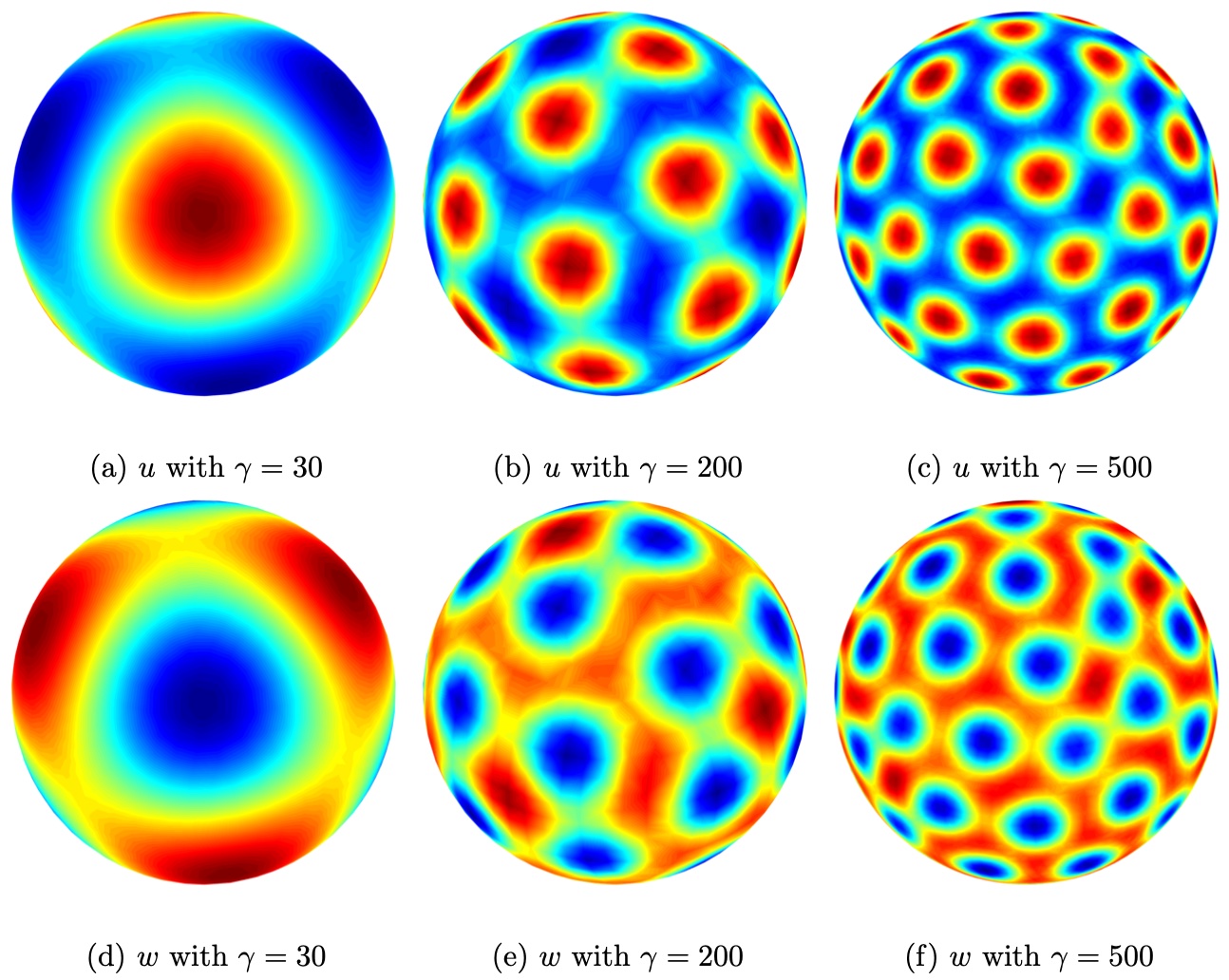

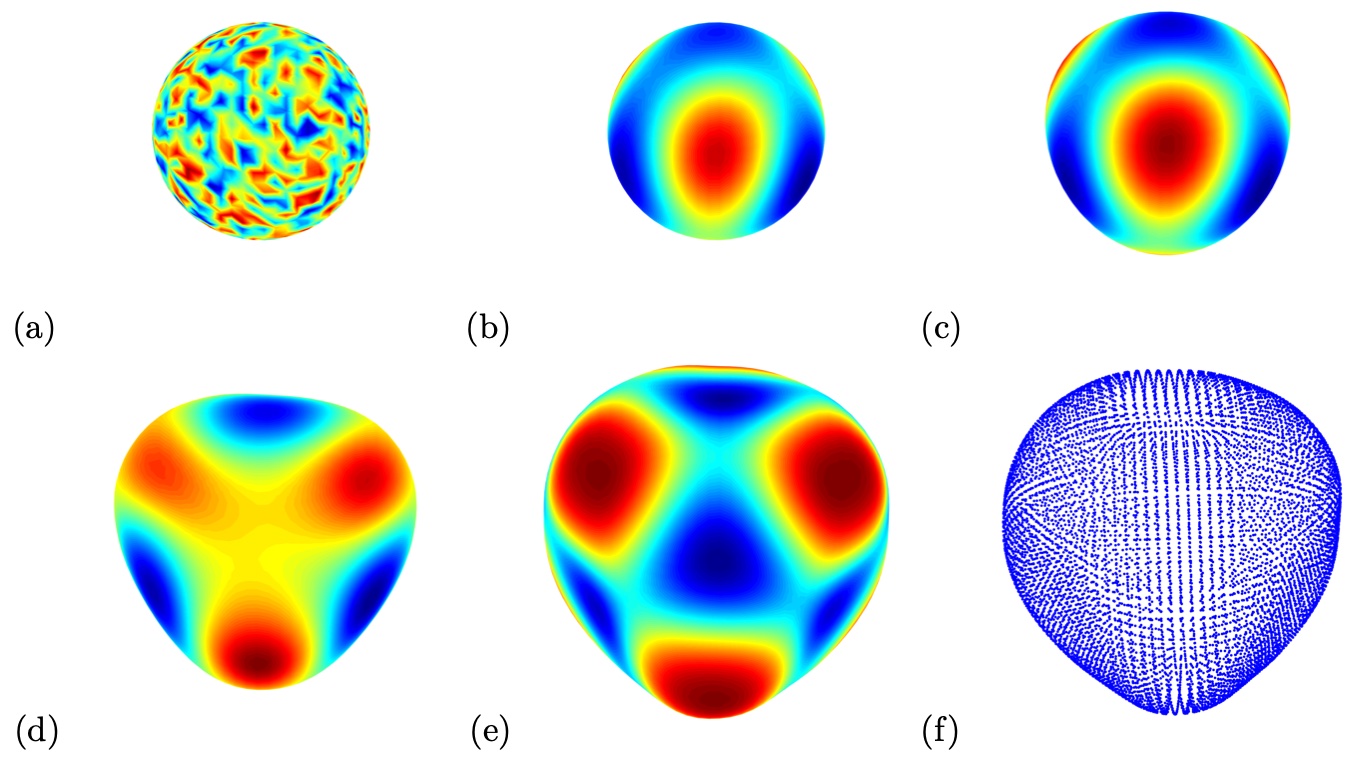

In the second example, we simulate the Turing patterns on a static surface. Consider the following coupled system of equation (2) given by

| (7) |

with

| (8) |

The parameters , , and are all positive constants. In this example, we follow [1] and use the following parameters for the kinetics function in (8): , , and . All three examples use the same initial condition given by with a uniform random perturbation of magnitude 0.01. The parameter is given in different scales. The grid size in the GBPM representation is , and the stopping time is .

Figure 8 shows our numerical solutions corresponding to various at the fixed final time . Even though the exact solution to these examples is not available, these results are similar to those obtained in various articles, including [3, 24, 1]. Numerically, the CLS method is flexible and matches the GBPM well in solving PDEs on a static surface.

| order | order | order | order | |||||

|---|---|---|---|---|---|---|---|---|

| - | - | - | - | |||||

| order | order | order | order | |||||

|---|---|---|---|---|---|---|---|---|

| - | - | - | - | |||||

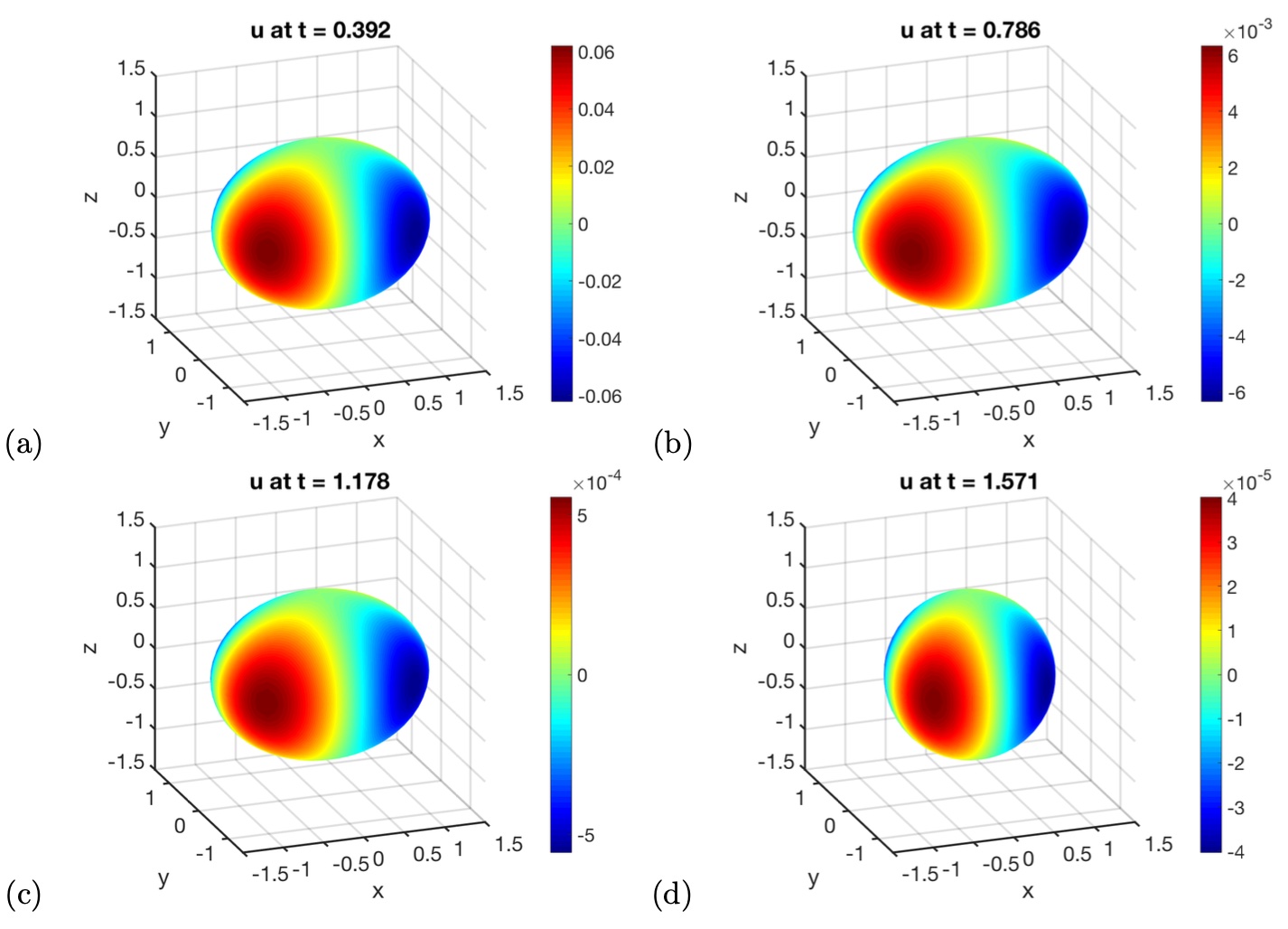

4.3 The advection-diffusion equation with a source term on an oscillating ellipsoid

This three-dimensional example is taken from [7, 8, 28] to test the accuracy of a PDE solver on moving surfaces. The time-dependent oscillating ellipsoid has the following exact solution

where . The surface can be explicitly parametrized as

The associated velocity field is given by . Therefore, the equation involves both the advection along the surface and the deformation by the motion in the normal direction. We choose the exact continuous solution so that the corresponding source term on the right-hand side of the equation is given by

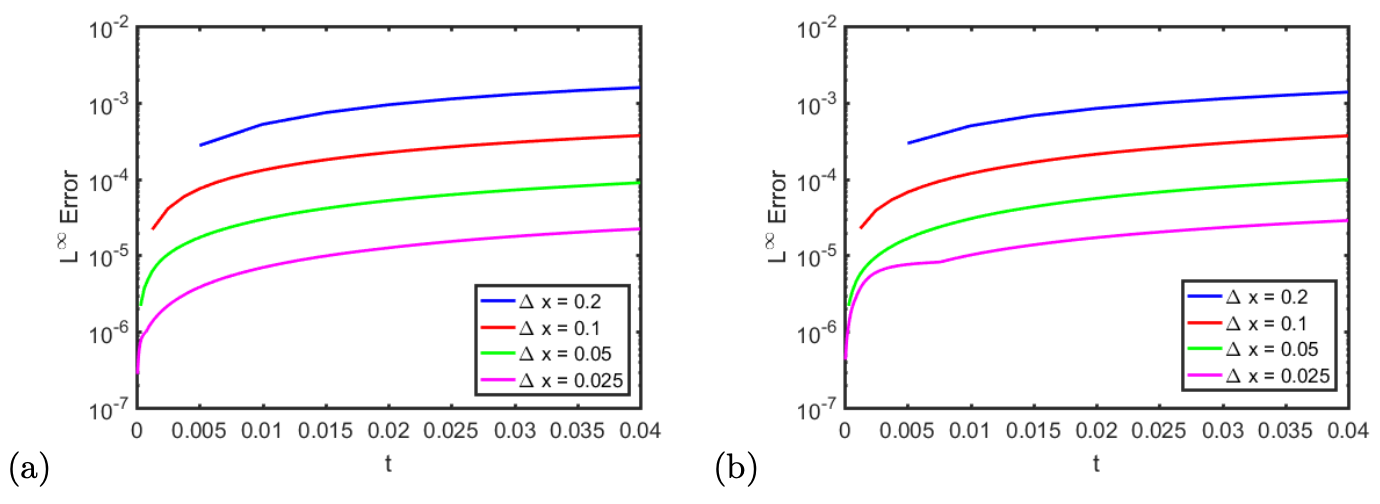

with . In this simulation, we choose the mesh size and a fixed time step size . We plot our solution at different time and in Figure 9. Figure 10 gives the -error in the numerical solution computed using the CLS with different mesh sizes at different times. Table 1 shows the errors in the computed solution at different times and computed on a different mesh. We see that our numerical approach is roughly second-order accurate.

Concerning the computational efficiency, the CPU time for one marching step on the mesh with is seconds, including seconds spent on the CLS reconstruction and seconds on the GBPM computations. This computational time is recorded under a 4-core laptop in MATLAB with a parallel modulo implemented.

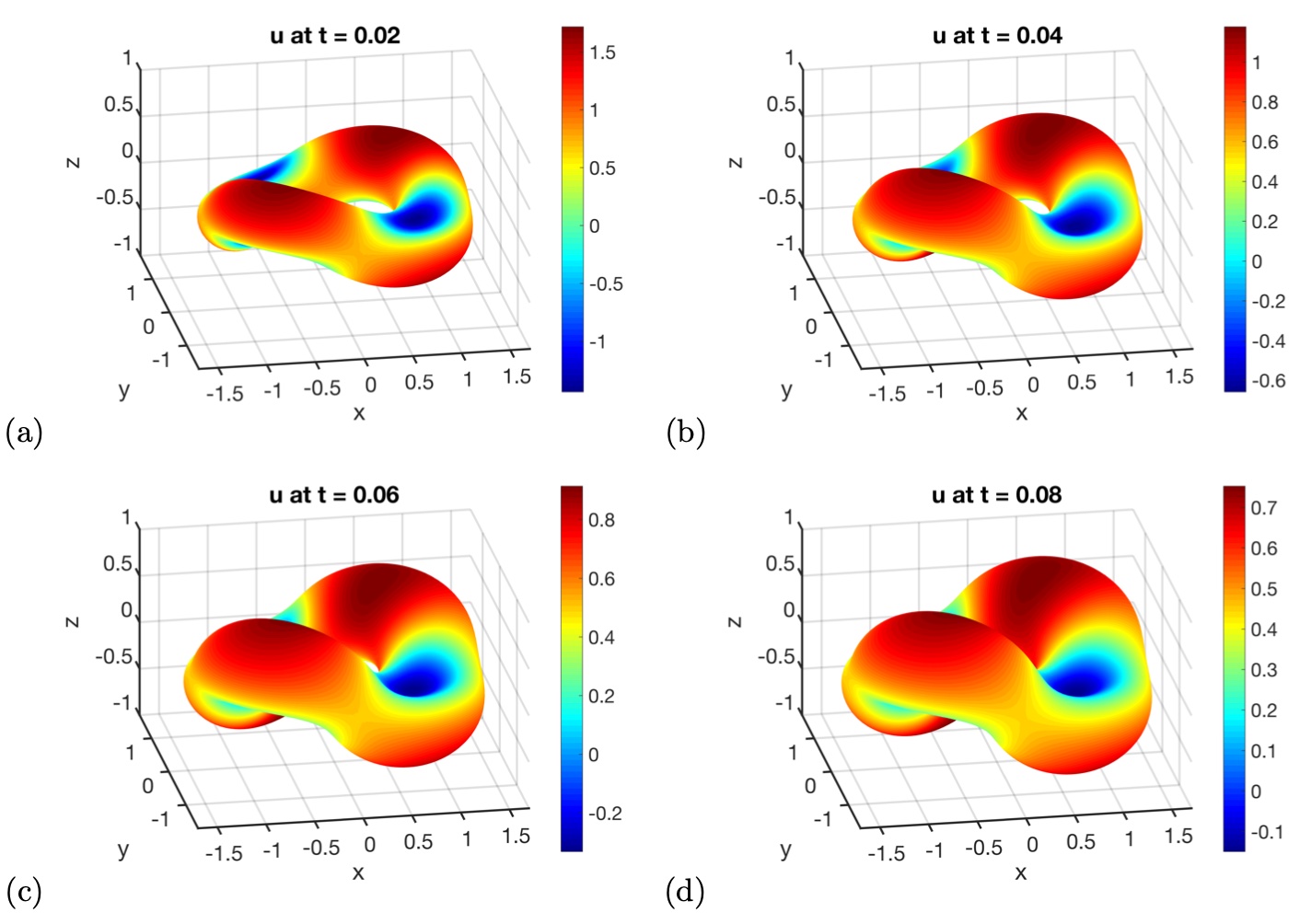

4.4 A strongly coupled flow on an evolving torus

In this case, the interface velocity is strongly coupled with the solution of the PDE. This example is taken from [28], where we evolve an initial torus

according to the given velocity where is the mean curvature, is the unit normal vector, and is the solution to the PDE

The initial value of is given by . Figure 11 shows our computed solution of on the evolving surface at different times from to in an increment of . Even though the exact solution to this problem is unavailable, these numerical solutions are similar to those presented in [28].

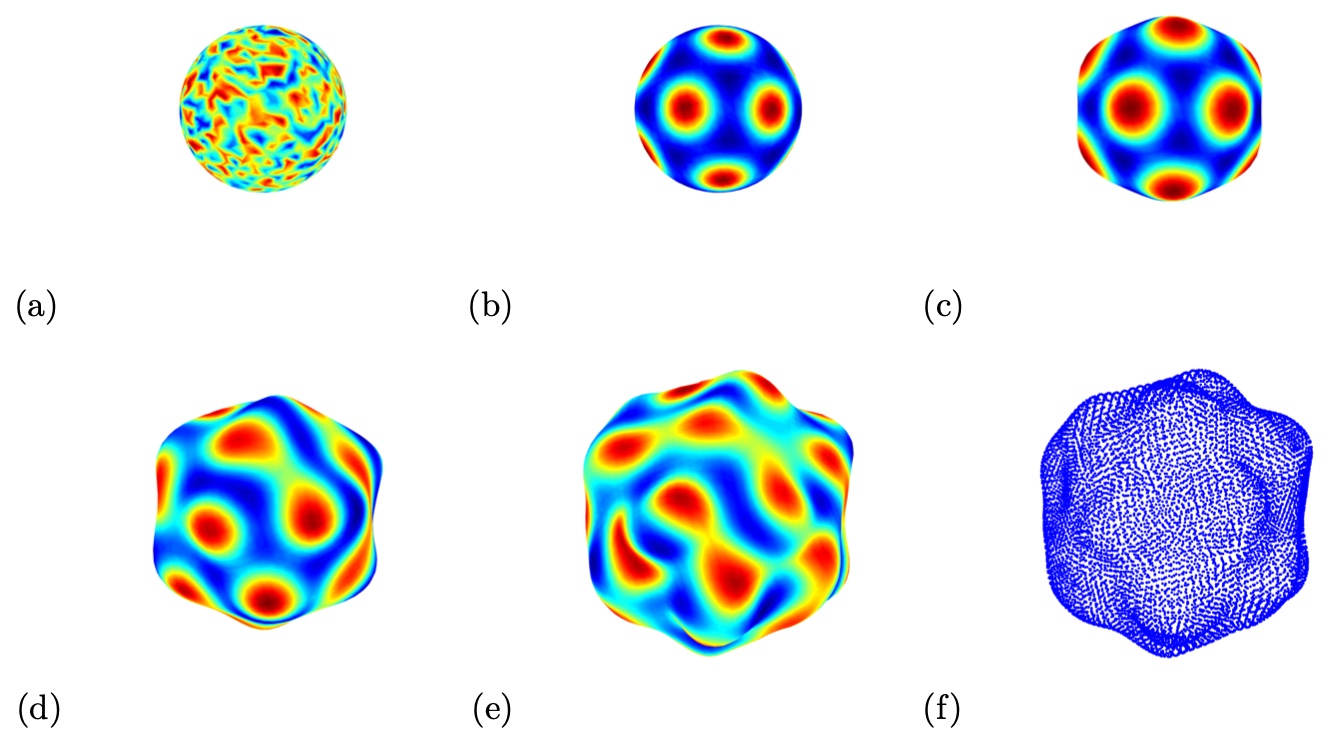

4.5 Solid tumors growth

In this example, we compute the reaction-diffusion system (7) with an activator-depleted kinetics in the form of (8). The surface growth law is assumed to be in the form

where is the growth rate and the parameter models the surface tension of the tumor surface. In this system, the solution of denotes the growth-promoting factor, and is the corresponding growth-inhibiting factor [5]. We follow the discussions in [3, 1, 9] by first starting the numerical simulations from a stationary sphere in a pre-patterning stage (controlled by the parameter ). After the pre-patterning stage, the surface grows under the given motion law.

Figures 12 and 13 show the evolution of the tumor surface together with the growth factor concentration with two different values of ’s. In the pre-pattern stage , the behavior of the solution is the same as when we simulate the Turing patterns in the previous section. After the pre-pattern stage, the surface evolves in the normal direction. Those regions with a larger growth concentration evolve much faster than others. This leads to some interesting configurations of the shape.

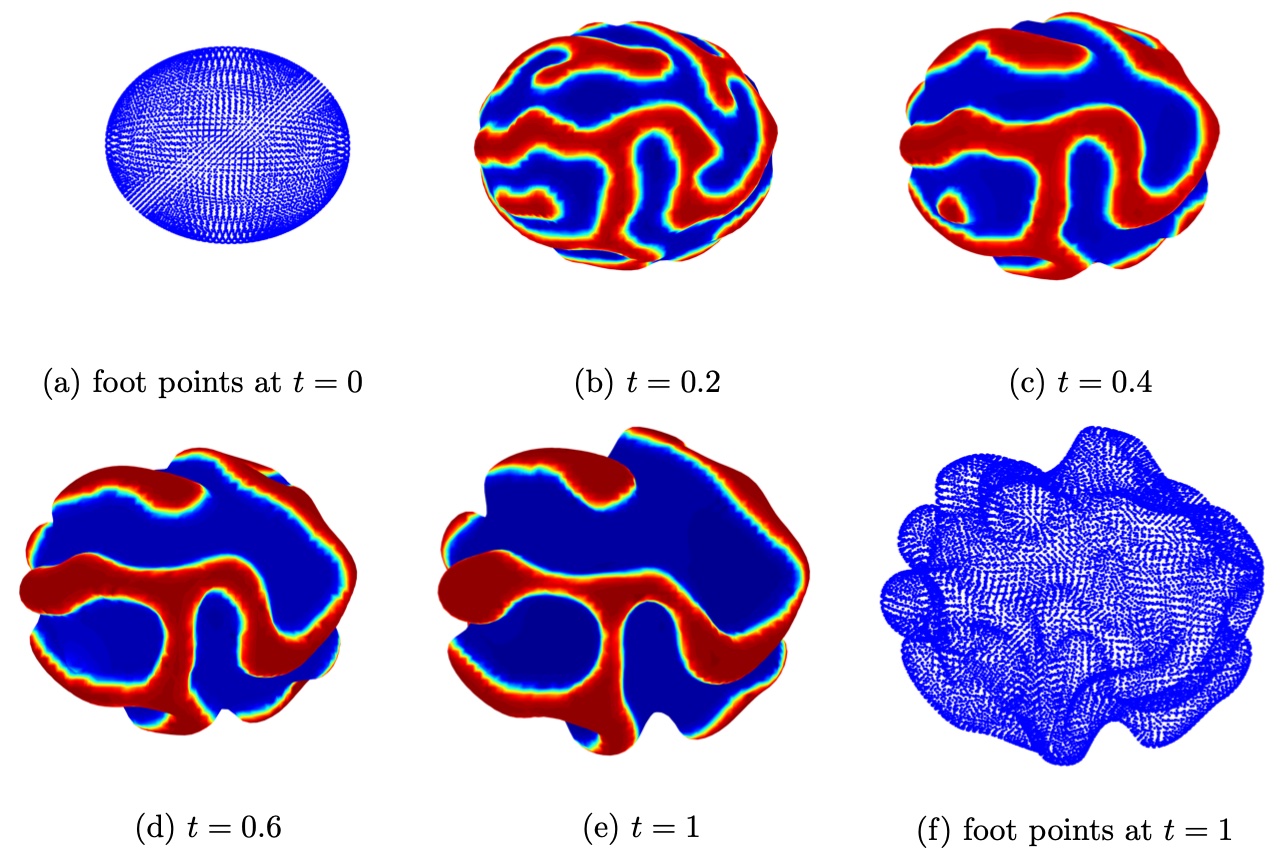

4.6 The Cahn-Hilliard equation on an ellipsoid

This example considers the homogenous Cahn-Hilliard equation (6) on an evolving ellipsoid. An initial ellipsoid centered at the origin with axis and is evolved in the normal direction according to the velocity , where is the mean curvature and is the unit normal direction. We choose the Peclet number , the diffusion parameter , and the double well potential function . The initial value of the function is defined as a Gaussian noise around a constant number . Figure 14 shows the numerical results computed on the mesh with and the time marching step .

5 Conclusion

This paper proposes a new CLS method for solving PDEs on evolving surfaces. The method modifies the GBPM developed in [18, 17, 16] by enforcing an extra constraint in the local least squares approximation. Unlike a recent approach in [33] where we introduce extra weighting to the target point through a virtual grid, the additional condition in the current approach requires the least squares reconstruction passing through the data point at the target location. This significantly improves the computational stability and accuracy. We have shown that this CLS approach fits extremely well with the GBPM for interface representation. The coupling of these two methods provides a computationally efficient and robust method for solving PDEs on evolving surfaces.

Acknowledgment

Leung’s work was partly supported by the Hong Kong RGC grants 16303114 and 16309316.

References

- [1] R. Barreira, C.M. Elliott, and A. Madzvamuse. The surface finite element method for pattern formation on evolving biological surfaces. J. Math. Biol., 63:1095–1119, 2011.

- [2] M. Bertalmio, L.-T. Cheng, S. Osher, and G. Sapiro. Variational problems and partial differential equations on implicit surfaces. J. Comput. Phys., 174:759–780, 2001.

- [3] M.A.J. Chaplain, M. Ganesh, and I.G. Graham. Spatio-temporal pattern formation on spherical surfaces: numerical simulation and application to solid tumour growth. J. Math. Biol., 42:387–423, 2001.

- [4] S. G. Chen, M. H. Chi, and J. Y. Wu. High-Order Algorithms for Laplace–Beltrami Operators and Geometric Invariants over Curved Surfaces. J. Sci. Comput., 65:839–865, 2015.

- [5] E.J. Crampin, E.A. Gaffney, and P.K. Maini. Reaction and diffusion on growing domains: scenarios for robust pattern formation. Bull. Math. Biol., 61:1093–1120, 1999.

- [6] G. Dziuk and C. M. Elliott. Finite element methods for surface PDEs. Acta. Numer., 22:289–396, 2013.

- [7] G. Dziuk and C.M. Elliott. An Eulerian level set method for partial differential equations on evolving surfaces. Comput. Vis. Sci., 13:17–28, 2008.

- [8] C.M. Elliott, B. Stinner, V. Styles, and R. Welford. Numerical computation of advection and diffusion on evolving diffuse interfaces. IMA J. Numer. Anal., 31:786–812, 2010.

- [9] C.M. Elliott and V. Styles. An ale esfem for solving pdes on evolving surfaces. Milan J. Math., pages 1–33, 2012.

- [10] D. Enright, R. Fedkiw, J. Ferziger, and I. Mitchell. A hybrid particle level set method for improved interface capturing. J. Comput. Phys., 183:83–116, 2002.

- [11] J.B. Greer. An improvement of a recent Eulerian method for solving PDEs on general geometries. J. Sci. Comp., 29(3):321–352, 2005.

- [12] S. Hieber and P. Koumoutsakos. A Lagrangian particle level set method. J. Comput. Phys., 210:342–367, 2005.

- [13] S. Hon, S. Leung, and H.-K. Zhao. A cell based particle method for modeling dynamic interfaces. J. Comp. Phys., 272:279–306, 2014.

- [14] R. Lai, J. Liang, and H. Zhao. A local mesh method for solving pdes on point clouds. Inverse Problems and Imaging, 7(3):737–755, 2013.

- [15] P. Lancaster and K. Salkauskas. Surfaces generated by moving least squares methods. Math. Comp., 87:141–158, 1981.

- [16] S. Leung, J. Lowengrub, and H.K. Zhao. A grid based particle method for high order geometrical motions and local inextensible flows. J. Comput. Phys., 230:2540–2561, 2011.

- [17] S. Leung and H.K. Zhao. A grid-based particle method for evolution of open curves and surfaces. J. Comput. Phys., 228:7706–7728, 2009.

- [18] S. Leung and H.K. Zhao. A grid based particle method for moving interface problems. J. Comput. Phys., 228:2993–3024, 2009.

- [19] S. Leung and H.K. Zhao. Gaussian beam summation for diffraction in inhomogeneous media based on the grid based particle method. Communications in Computational Physics, 8:758–796, 2010.

- [20] J. Liang and H. Zhao. Solving partial differential equations on point clouds. SIAM J. on Scientific Computing, 35(3):A1461–A1486, 2013.

- [21] C.B. Macdonald, J. Brandman, and S. Ruuth. Solving eigenvalue problems on curved surfaces using the closest point method. J. Comp. Phys., 230:7944–7956, 2011.

- [22] C.B. Macdonald and S.J. Ruuth. Level set equations on surfaces via the closest point method. J. Sci. Comput., 35:219–240, 2008.

- [23] C.B. Macdonald and S.J. Ruuth. The implicit closest point method for the numerical solution of partial differential equations on surfaces. SIAM J. Sci. Comput., 31:4330–4350, 2009.

- [24] A. Madzvamuse and P. Maini. Velocity-induced numerical solutions of reaction-diffusion systems on continuously growing domains. J. Comput. Phys., 225:100–119, 2007.

- [25] C. Min and F. Gibou. A second order accurate level set method on non-graded adaptive cartesian grids. J. Comput. Phys., 225:300–321, 2007.

- [26] S. J. Osher and R. P. Fedkiw. Level Set Methods and Dynamic Implicit Surfaces. Springer-Verlag, New York, 2003.

- [27] S. J. Osher and J. A. Sethian. Fronts propagating with curvature dependent speed: algorithms based on Hamilton-Jacobi formulations. J. Comput. Phys., 79:12–49, 1988.

- [28] A. Petras and S.J. Ruuth. PDEs on moving surfaces via the closest point method and a modified grid based particle method. J. Comput. Phys., 312:139–156, 2016.

- [29] C. Piret. The orthogonal gradients method: A radial basis functions method for solving partial differential equations on arbitrary surfaces. J. Comput. Phys., 231:4662–4675, 2012.

- [30] S.J. Ruuth and B. Merriman. A simple embedding method for solving partial differential equations on surfaces. J. Comput. Phys., 227:1943–1961, 2008.

- [31] V. Shankar, G. B. Wright, R. M. Kirby, and A. L. Fogelson. A radial basis function (rbf)-finite difference (fd) method for diffusion and reaction–diffusion equations on surfaces. J. Sci. Comput., 63(3):745–768, 2015.

- [32] R. Tsai, L.T. Cheng, S. Osher, and H. K. Zhao. Fast sweeping method for a class of Hamilton-Jacobi equations. SIAM J. Numer. Anal., 41:673–694, 2003.

- [33] M. Wang, S. Leung, and H. Zhao. Modified Virtual Grid Difference (MVGD) for Discretizing the Laplace-Beltrami Operator on Point Clouds. Submitted.

- [34] T. Wong and S. Leung. A fast sweeping method for eikonal equations on implicit surfaces. Accepted by J. Sci. Comput., 2015.

- [35] J. Xu, Z. Li, J. Lowengrub, and H. Zhao. A level set method for interfacial flows with surfactant. J. Comput. Phys., 212:590–616, 2006.

- [36] J. Xu and H.K. Zhao. An Eulerian formulation for solving partial differential equations along a moving interface. J. Sci. Comput., 19:573–594, 2003.

- [37] N. Ying. Numerical Methods for Partial Differential Equations from Interface Problems. HKUST PhD Thesis, 2017.

- [38] N. Ying, K.L. Chu, and S. Leung. A Constrained Least-Squares Ghost Sample Points (CLS-GSP) Method for Differential Operators on Point Clouds. arXiv:2407.06467, 2024.

- [39] H. K. Zhao. Fast sweeping method for eikonal equations. Math. Comp., 74:603–627, 2005.