Softmax Tempering for Training Neural Machine Translation Models

Abstract

Neural machine translation (NMT) models are typically trained using a softmax cross-entropy loss where the softmax distribution is compared against smoothed gold labels. In low-resource scenarios, NMT models tend to over-fit because the softmax distribution quickly approaches the gold label distribution. To address this issue, we propose to divide the logits by a temperature coefficient, prior to applying softmax, during training. In our experiments on 11 language pairs in the Asian Language Treebank dataset and the WMT 2019 English-to-German translation task, we observed significant improvements in translation quality by up to 3.9 BLEU points. Furthermore, softmax tempering makes the greedy search to be as good as beam search decoding in terms of translation quality, enabling 1.5 to 3.5 times speed-up. We also study the impact of softmax tempering on multilingual NMT and recurrently stacked NMT, both of which aim to reduce the NMT model size by parameter sharing thereby verifying the utility of temperature in developing compact NMT models. Finally, an analysis of softmax entropies and gradients reveal the impact of our method on the internal behavior of NMT models.

1 Introduction

Neural machine translation (NMT) (Sutskever et al., 2014; Bahdanau et al., 2015) enables end-to-end training of translation models and is known to give state-of-the-art results for a large variety of language pairs. NMT for high-resource language pairs is straightforward: choose an NMT architecture and implementation, and train a model on all existing data. In contrast, for low-resource language pairs, this does not work well due to the inability of neural networks to generalize from small amounts of data. One reason for this is the strong over-fitting potential of neural models (Zoph et al., 2016; Koehn and Knowles, 2017).

There are several solutions that address this issue of which the two most effective ones are transfer learning and model regularization. Transfer learning can sometimes be considered as data regularization and comes in the form of monolingual or cross-lingual (multilingual) transfer learning (Zoph et al., 2016; Song et al., 2019), pseudo-parallel data generation (back-translation) (Sennrich et al., 2016), or multi-task learning (Eriguchi et al., 2017). On the other hand, model regularization techniques place constraints on the learning of model parameters in order to aid the model to learn robust representations that positively impact model performance. Among existing model regularization methods, dropout (Srivastava et al., 2014) is most commonly used and is known to be effective regardless of the size of data. We thus focus on designing a technique that can complement dropout especially in an extremely low-resource situation.

The most common way to train NMT models is to minimize a softmax cross-entropy loss, i.e., cross-entropy between the softmax distribution and the smoothed label distribution typically represented with a one-hot vector. In other words, the NMT model is trained to produce a softmax distribution that is similar to the label. In high-resource settings, this may never happen due to the diversity of label sequences. However, in low-resource settings, due to lack of the diversity, there is a high chance of this occurring and over-fitting is said to take place. We consider that a simple manipulation of the softmax distribution may help prevent it.

This paper presents our investigation into softmax tempering (Hinton et al., 2015) during training NMT models in order to address the over-fitting issue. Softmax tempering is realized by simply dividing the pre-softmax logits with a positive real number greater than 1.0. This leads to a smoother softmax probability distribution, which is then used to compute the cross-entropy loss. Softmax tempering has been devised and used regularly in knowledge distillation (Hinton et al., 2015; Kim and Rush, 2016), albeit for different purposes. We regard softmax tempering as a means of deliberately making the softmax distribution noisy during training with the expectation that this will have a positive impact on the final translation quality.

We primarily evaluate the utility of softmax tempering on extremely low-resource settings involving English and 11 languages in the Asian Languages Treebank (ALT) (Riza et al., 2016). Our experiments reveal that softmax tempering with a reasonably high temperature improves the translation quality. Furthermore, it makes the greedy search performance of the models trained with softmax tempering comparable to or better than the performance of the beam search using the models that are trained without softmax tempering, enabling faster decoding.

We then expand the scope of our study to high-resource settings, taking the WMT 2019 English-to-German translation task, as well as multilingual settings using the ALT data. We also show that softmax tempering improves the performance of NMT models using recurrently stacked layers that heavily share parameters. Furthermore, we clarify the relationship between softmax tempering and dropout, i.e., the most widely used and effective regularization mechanism. Finally, we analyze the impact of softmax tempering on the softmax distributions and on the gradient flows during training.

2 Related Work

The method presented in this paper is a training technique aimed to improve the quality of NMT models with a special focus on the performance of low-resource scenarios.

Work on knowledge distillation (Hinton et al., 2015) for training compact models is highly related to our application of softmax tempering. However, the purpose of softmax tempering for knowledge distillation is to smooth the student and teacher distributions which is known to have a positive impact on the quality of student models. In our case, we use softmax tempering to make softmax distributions noisy during training a model from scratch to avoid over-fitting. In the context of NMT, Kim and Rush (2016) did experiment with softmax tempering. However, their focus was on model compression and they did not experiment with low-resource settings. In contrast, our application of softmax tempering does have a strong positive impact on decoding speed because we observed that greedy search performs as well as beam search, similarly to Kim and Rush (2016). We refer readers to orthogonal methods for speeding up NMT, such as weight pruning (See et al., 2016), quantization (Lin et al., 2016), and binarization (Courbariaux et al., 2017; Oda et al., 2017).

We regard softmax tempering as a regularization technique, since it adds noise to the NMT model. Thus, it is related to techniques, such as regularization Ng (2004), dropout Srivastava et al. (2014), and tuneout Miceli Barone et al. (2017). The most important aspect of our method is that it is only applied at the softmax layer whereas other regularization techniques add noise to several parts of the entire model. Furthermore, our method is intented to complement the most popular technique, i.e., dropout, and not necessarily replace it.

We primarily focus on low-resource language pairs and in this context data augmentation via back-translating additional monolingual data (Sennrich et al., 2016) to generate pseudo-parallel corpora is one of the most effective approaches. In contrast, our method does not need any additional data on top of the given parallel data. In the literature, multilingualism has been successfully leveraged for improving translation quality (Firat et al., 2016; Zoph et al., 2016; Dabre et al., 2019), and our method could possibly complement the impact of multilingualism due to its data and language independent nature. Recently, pre-training on monolingual data (Devlin et al., 2019; Song et al., 2019; Mao et al., 2020) has been proven to strongly improve the performance of extremely low-resource language pairs. However, this requires enormous time and resources to pre-train large models. In contrast, our method can help improve performance if one does not possess the resources to perform massive pre-training, even though it could also be used on top of pre-training based methods.

3 Softmax Tempering

Softmax tempering (Hinton et al., 2015) consists of two tiny changes in the implementation of the training phase of any neural model used for classification.

Assume that is the output of the decoder for the -th word in the target language sentence, , where stands for the target vocabulary size, and that represents the softmax function producing the probability distribution, where and indicate the given source sentence and the past decoder output, respectively. Let be the one-hot reference label for the -th prediction. Then, the cross-entropy loss for the prediction is computed as , where is the inner product of two vectors.

Let be the temperature hyper-parameter. Then, the prediction with softmax tempering () and the corresponding cross-entropy loss () are formalized as follows.

| (1) | ||||

| (2) |

By referring to Equation (1), when is greater than 1.0, the logits, , are down-scaled which leads to a smoother probability distribution before loss is computed. The smoother the distribution becomes, the higher its entropy is and hence the more uncertain the prediction is. Because loss is to be minimized, back-propagation will force the model to generate logits to counter the smoothing effect of temperature. During decoding with a model trained in this way, the temperature coefficient is not used and the logits will be such that they yield a sharper softmax distribution compared to those of a model trained without softmax tempering.

The gradients are altered by tempering, and we thus re-scale the loss by the temperature as shown in Equation (2). This is inspired by the loss scaling method used in knowledge distillation (Hinton et al., 2015), where both the student and teacher’s softmaxes are tempered and the loss is multiplied by the square of the temperature.

4 Experiment

To evaluate the effectiveness of softmax tempering, we conducted experiments on both low-resource and high-resource settings.

4.1 Datasets

We experimented with the Asian Languages Treebank (ALT),111http://www2.nict.go.jp/astrec-att/member/mutiyama/ALT/ALT-Parallel-Corpus-20190531.zip comprising English (En) news articles consisting of 18,088 training, 1,000 development, and 1,018 test sentences manually translated into 11 Asian languages: Bengali (Bn), Filipino (Fil), Indonesian (Id), Japanese (Ja), Khmer (Km), Lao (Lo), Malay (Ms), Burmese (My), Thai (Th), Vietnamese (Vi), and Chinese (Zh). We focused on translation to and from English to each of these 11 languages. As a high-resource setting, we also experimented with the WMT 2019 English-to-German (EnDe) translation task.222http://www.statmt.org/wmt19/translation-task.html For training, we used the Europarl and the ParaCrawl corpora containing 1.8M and 37M sentence pairs, respectively. For evaluation, we used the WMT 2019 development and test sets consisting of 2,998 and 1,997 lines, respectively.

4.2 Implementation Details

We evaluated softmax tempering on top of the Transformer model (Vaswani et al., 2017), because it gives the state-of-the-art results for NMT. More specifically, we employed the following models.

-

•

EnXX and XXEn “Transformer Base” models where XX is an Asian language in the ALT dataset.

-

•

EnDe “Transformer Base” and “Transformer Big” models.

We modified the code of the Transformer model in the tensor2tensor v1.14. For “Transformer Base” and “Transformer Big” models, we used the hyper-parameter settings in transformer_base_single_gpu and transformer_big_single_gpu, respectively where label smoothing of 0.1 is used by default. We used the internal sub-word tokenization mechanism of tensor2tensor with separate source and target language vocabularies of size 8,192 and 32,768 for low-resource and high-resource settings, respectively. We trained our models for each of the softmax temperature values, 1.0 (default softmax), 1.2, 1.4, 1.6, 1.8, 2.0, 3.0, 4.0, 5.0, and 10.0. We used early-stopping on the BLEU score (Papineni et al., 2002) for the development set which was evaluated every after 1k iterations. Our early-stopping mechanism halts training when the BLEU score does not vary by over 0.1 BLEU over 10 consecutive evaluation steps. For decoding, we averaged the final 10 checkpoints, and evaluated beam search with beam sizes (2, 4, 6 ,8, 10, and 12) and length penalties (0.6, 0.7, 0.8, 0.9, 1.0, 1.1, 1.2, 1.3, and 1.4) and greedy search.

| Decoding | En-to-XX | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Bn | Fil | Id | Ja | Km | Lo | Ms | My | Th | Vi | Zh | ||

| 1.0 | Greedy | 3.5 | 24.3 | 27.4 | 13.4 | 19.3 | 11.5 | 31.5 | 8.3 | 13.7 | 24.0 | 10.4 |

| 1.0 | Beam | 4.2 | 25.9 | 28.7 | 15.1 | 21.5 | 13.1 | 32.8 | 9.1 | 16.0 | 26.6 | 12.1 |

| Greedy | 4.5 | 25.7 | 29.5† | 15.5 | 20.7 | 12.2 | 33.7† | 9.3 | 15.6 | 25.7 | 12.8† | |

| Beam | 4.6† | 25.9 | 29.6† | 15.9† | 21.2 | 12.2 | 33.8† | 9.7 | 15.8 | 26.1 | 13.0† | |

| Value for | 5.0 | 3.0 | 4.0 | 4.0 | 3.0 | 10.0 | 4.0 | 5.0 | 5.0 | 5.0 | 4.0 | |

| Decoding | XX-to-En | |||||||||||

| Bn | Fil | Id | Ja | Km | Lo | Ms | My | Th | Vi | Zh | ||

| 1.0 | Greedy | 7.1 | 22.2 | 25.1 | 8.7 | 14.9 | 9.8 | 27.4 | 7.8 | 10.5 | 19.4 | 9.4 |

| 1.0 | Beam | 8.5 | 24.1 | 26.5 | 10.1 | 16.5 | 11.9 | 28.6 | 9.4 | 12.5 | 21.1 | 11.0 |

| Greedy | 9.1 | 24.7 | 27.5† | 11.0† | 16.8 | 11.4 | 29.7† | 11.7† | 12.2 | 21.3 | 11.5 | |

| Beam | 9.3† | 25.0† | 27.6† | 11.3† | 17.1 | 11.7 | 29.9† | 12.0† | 12.6 | 21.5 | 12.1† | |

| Value for | 5.0 | 4.0 | 3.0 | 5.0 | 4.0 | 5.0 | 4.0 | 4.0 | 4.0 | 4.0 | 4.0 | |

4.3 Evaluation Criteria

We evaluated translation quality of each model using BLEU (Papineni et al., 2002) provided by SacreBleu Post (2018). The optimal temperature () for the tempered model was determined based on greedy search BLEU score on the development set. On the other hand, two hyper-parameters for beam search, i.e., beam size and length penalty, are not searched. The best beam search results reported in this paper are determined after computing BLEU scores on the test set, i.e., oracle. We used statistical significance testing333https://github.com/moses-smt/mosesdecoder/blob/master/scripts/analysis/bootstrap-hypothesis-difference-significance.pl to determine if differences in BLEU are significant.

4.4 Results in Low-Resource Settings

Table 1 shows the greedy and beam search BLEU scores along with the optimal temperature () for translation to and from Asian languages and compare them against those obtained by non-tempered models. Except for a few language pairs, the greedy search BLEU scores of the best performing tempered models are higher than the beam search BLEU scores of non-tempered models.

Figure 1 shows how the greedy and beam search results vary with the temperature, taking EnMs translation as an example.444We show only one translation direction due to lack of space. Kindly, refer to our supplementary material for all translation directions. As the temperature is raised, the greedy search BLEU score increases and begins approaching the beam search score. At temperature values between 2.0 and 5.0, not only does the greedy search score exceed that of a non-tempered model, but that the greedy and beam search scores are barely any different. However, increasing the temperature beyond 10.0 always has a negative effect on the translation quality, because it leads to a highly smoothed probability distribution, quantified by high entropy, that does not seem to be useful for NMT training.

Consequently, we conclude that training with reasonably high temperature (between 2.0 and 5.0), softmax tempering has a positive impact on translation quality for extremely low-resource settings.

We also computed the similarity between the greedy and beam search (beam size 4, length penalty 1.0) translations by computing the BLEU score between them, regarding the greedy search results as references. Table 2 reports on the greedy-beam search similarities for 4 translation directions for several different values of temperature. For non-tempered models, the BLEU scores were around 30 to 60, and as the temperature increases, so does the BLEU score. This indicates that greedy and beam search results grow to be similar and the most likely reason is that training with softmax tempering forces the model to be extremely precise.

| ViEn | EnVi | JaEn | EnJa | |

|---|---|---|---|---|

| 1.0 | 53.4 | 61.5 | 32.7 | 40.5 |

| 2.0 | 72.6 | 79.2 | 53.2 | 59.6 |

| 3.0 | 81.9 | 84.1 | 67.2 | 69.7 |

| 5.0 | 86.7 | 89.4 | 75.4 | 80.0 |

| Model | Training | BLEU | ||

| Greedy | Beam | |||

| Base | EP | 1.0 | 23.6 | 25.8 |

| 1.4 | 25.5 | 27.2† | ||

| EP+PC | 1.0 | 28.2 | 29.2 | |

| 1.2 | 29.1 | 30.1† | ||

| Big | EP | 1.0 | 26.8 | 29.4 |

| 1.2 | 29.1 | 30.2† | ||

| EP+PC | 1.0 | 32.7 | 33.7 | |

| 1.2 | 33.6 | 34.5† | ||

| Direction | Model | Decoding | Bn | Fil | Id | Ja | Km | Lo | Ms | My | Th | Vi | Zh | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Unidirectional | non-RS | 1.0 | Greedy | 3.5 | 24.3 | 27.4 | 13.4 | 19.3 | 11.5 | 31.5 | 8.3 | 13.7 | 24.0 | 10.4 |

| 1.0 | Beam | 4.2 | 25.9 | 28.7 | 15.1 | 21.5 | 13.1 | 32.8 | 9.1 | 16.0 | 26.6 | 12.1 | ||

| Greedy | 4.5 | 25.7 | 29.5† | 15.5 | 20.7 | 12.2 | 33.7† | 9.3 | 15.6 | 25.7 | 12.8 | |||

| Beam | 4.6 | 25.9 | 29.6† | 15.9† | 21.2 | 12.2 | 33.8† | 9.7 | 15.8 | 26.1 | 13.0 | |||

| RS | 1.0 | Greedy | 3.2 | 21.5 | 24.3 | 11.8 | 17.4 | 10.1 | 28.6 | 7.0 | 11.5 | 22.4 | 8.9 | |

| 1.0 | Beam | 3.7 | 23.7 | 25.8 | 13.6 | 19.8 | 11.8 | 30.4 | 8.2 | 13.8 | 24.9 | 10.7 | ||

| Greedy | 3.6 | 23.0 | 25.8 | 13.1 | 18.7 | 10.4 | 30.7 | 8.3 | 13.5 | 23.1 | 10.6 | |||

| Beam | 3.9 | 23.4 | 26.2 | 13.6 | 19.1 | 11.4 | 30.9 | 8.4 | 13.8 | 23.5 | 11.2 | |||

| One-to-many | non-RS | 1.0 | Greedy | 6.2 | 24.9 | 27.0 | 18.9 | 23.0 | 14.7 | 30.9 | 12.9 | 19.0 | 27.4 | 14.3 |

| 1.0 | Beam | 7.1 | 26.5 | 28.6 | 21.1 | 24.7 | 15.5 | 32.1 | 13.8 | 20.6 | 29.5 | 16.2 | ||

| Greedy | 7.0 | 27.6† | 30.0† | 21.2 | 24.4 | 15.5 | 34.2† | 14.2 | 20.5 | 30.1 | 16.3 | |||

| Beam | 7.5 | 28.6† | 30.3† | 22.7† | 25.4 | 16.2† | 34.9† | 15.1† | 21.9† | 31.6† | 17.5† | |||

| RS | 1.0 | Greedy | 6.6 | 26.4 | 28.4 | 19.6 | 23.5 | 14.8 | 32.3 | 13.2 | 19.9 | 28.8 | 14.9 | |

| 1.0 | Beam | 7.5 | 28.4 | 30.0 | 21.3 | 25.2 | 16.1 | 33.9 | 14.3 | 21.7 | 30.7 | 17.2 | ||

| Greedy | 6.6 | 26.6 | 28.8 | 19.7 | 23.7 | 14.6 | 32.5 | 13.4 | 20.0 | 28.7 | 15.1 | |||

| Beam | 7.1 | 27.0 | 29.5 | 20.9 | 24.6 | 16.3 | 33.1 | 14.6 | 21.2 | 30.4 | 16.7 |

4.5 Results in High-Resource Settings

Table 3 gives the BLEU scores for the high-resource EnDe translation task. The results indicate that compared to the low-resource settings, relatively lower temperature values are effective for improving translation quality. While the improvements in translation quality are not as large as those in the low-resource settings, greedy and beam search improve by 0.8 to 2.3 BLEU points for temperature values around 1.2 to 1.6. We noticed that higher temperature values do bridge the gap between greedy and beam search performances. However, since they also reduce translation quality, we do not recommend using high temperature values in high-resource settings.

For the models trained only on the Europarl corpus (EP), the greedy and beam search performances of the Transformer Base model starts approaching those of the Transformer Big model.

4.6 Impact on Training and Decoding Speed

Although training with softmax tempering makes it difficult for a model to over-fit the label distributions, we did not notice any impact on the training time. This indicates that the improvements are unrelated to longer training times.

With regard to decoding, in low-latency settings, we can safely use greedy search with tempered models given that it is as good as, if not better than, beam search using non-tempered models. Thus, by comparing the greedy and beam search decoding speeds, we can determine the benefits that softmax tempering brings in low-latency settings. Greedy search decoding of the ViEn555For ALT tasks, decoding times are very similar when translating into English due to it being a multi-parallel corpus. test set requires 37.6s on average, whereas beam search with beam sizes of 4 and 10 require 56.4s and 138.2s, respectively. For non-tempered models, where beam search scores are higher than greedy search scores by over 2.0 BLEU points, the best BLEU scores are obtained using beam sizes between 4 and 10. Given the improved performance with greedy search, we can decode anywhere from 1.5 to 3.5 times faster. Subjecting softmax tempering to model compression methods, such as weight pruning, might further reduce decoding time.

5 Analysis and Further Exploration

We now focus on an intrinsic and extrinsic analyses of our method by studying its impact on extreme parameter sharing and multilingualism, its relationship to dropout, and finally on the internal working of the models during training.

5.1 Impact on Parameter Sharing Models

Why can regularization improve translation quality? One possible explanation is that the constraints imposed by regularization techniques force the model to more effectively utilize its existing capacity, indicated by the model parameters. This is especially important in low-resource settings. It is thus worth verifying the impact of softmax tempering, a regularization technique, on models with significantly reduced capacity.

In particular, we experimented with models that share parameters between the stacked layers, so-called recurrently stacked (RS) models (Dabre and Fujita, 2019), known to suffer from reduced translation quality for NMT. While we could have trained shallower models and/or models with small hidden sizes, they inevitably require experiments with combinations of hyper-parameters, i.e., number of layers and hidden size. In contrast, RS models are relatively recent and different from non-RS ones with regards to parameter sharing and thereby simplifying exploration.

Compare the first and second blocks of Table 4 for non-RS models and their RS counterparts. It is clear that RS models are always weaker than non-RS models, but the greedy search with RS models are largely improved by the tempered training. However, unlike in the case of a non-RS model, the beam search quality either remains the same or degrades in most cases. The major difference between RS and non-RS models is in the number of parameters and we can safely say that RS models simply lack the capacity to strongly benefit from softmax tempering. Nevertheless, the greedy search performance of an RS model with an appropriate temperature setting becomes comparable to a non-RS model trained without softmax tempering.

RS models tend to be 50-60% smaller than non-RS models (Dabre and Fujita, 2019). Thus, when one wants RS models for low-memory settings, we recommend to train them with softmax tempering.

5.2 Impact on Multilingualism

By training a multilingual model, we share parameters between multiple language pairs thereby reducing the amount of model capacity available for individual language pairs. Additionally, using multilingual data is a way to simulate a high-resource situation. While softmax tempering is not always useful in unidirectional high-resource translation settings (see Section 4.5), it may benefit simulated high-resource settings realized though multilingual models for extremely low-resource language pairs.

We trained a one-to-many NMT model (Dong et al., 2015) for English to 11 Asian languages by concatenating the training data of the ALT corpus after prepending each source sentence with an artificial token indicating the target language as in Johnson et al. (2017). The ALT corpus is 12-way parallel, and thus the English side contains the same sentences 11 times. We used a vocabulary with 8,192 sub-words666In our preliminary experiment with a 32,768 sub-word vocabulary for English, we obtained lower BLEU scores than those of unidirectional models. We realized that the reason was that increasing the number of language pairs did not increase the English side vocabulary and thus these sub-words resulted in a word-level vocabulary which negatively affects translation quality. for English and another one with 32,768 sub-words for all target languages combined. As in Section 4, we trained Transformer Base models with different values for temperature.

Comparing the non-tempered models () in the first and third blocks of Table 4, it is clear that multilingual models already outperform corresponding unidirectional models by up to 6.0 BLEU points (EnJa), even though EnId and EnMs, which originally have the highest BLEU scores, suffer slightly. This highlights the utility of multilingualism in itself, since our training data is multi-parallel and introducing a new language pair does not introduce new contents. With softmax tempering, temperature values between 1.2 and 2.0 brought improvements in translation quality by up to 6.8 BLEU points over the best unidirectional models (marked bold in the first block). Note that the optimal temperature values, , are similar to those in the case of high-resource EnDe translation task. Although multilingualism simulates a high-resource setting, we observed that softmax tempering is more effective compared to a unidirectional modeling in the real high-resource setting. We thus recommend training multilingual models with softmax tempering, especially when the individual language pairs are low-resource.

The bottom block of Table 4 show results when we push parameter sharing to its extreme limits combining both RS and multilingualism. Comparing them with the third block, multilingual RS models without softmax tempering always outperform its non-RS counterpart by up to 1.9 BLEU points. However, multilingual RS models are negatively impacted by softmax tempering: the greedy search translation quality increases slightly (if at all), while the beam search translation quality degrades. Multilingual models already share parameters for 11 translation directions, significantly lowering the capacity per translation direction than unidirectional models. RS of layers further increases the burden on the multilingual model. Thus, temperature puts further burden on the model’s learning which has a negative impact on performance.

Ultimately, multilingual tempered models give the best possible translations. We thus recommend to train (a) multilingual RS models without softmax tempering aiming at extreme compactness or (b) multilingual non-RS models with softmax tempering for higher translation quality and faster decoding with greedy search.

5.3 Dropout

Softmax tempering is a kind of regularization, since it makes the model predictions noisy during training, aiming to enable better learning. We have so far experimented with softmax tempering in combination with dropout. To this end, we experimented with both low-resource and high-resource settings using softmax tempering with and without dropout to explicitly examine their complementarity.

For low-resource settings, we randomly chose BnEn and ViEn translation directions, and additionally trained unidirectional Transformer Base models using softmax tempering in various temperature settings but without dropout. We also trained Transformer Base and Big models for the EnDe task without dropout. To be precise, to disable dropout, we set attention, embedding, and layer dropouts in the model to zero during training.

The results are shown in Table 5. It is known that disabling dropout significantly deteriorates the translation quality. However, in our ViEn and BnEn settings, higher temperature values are able to compensate for the drops. This shows that dropout and softmax tempering are complementary and that the latter acts as a regularizer similarly to the former. Note that softmax tempering alters the softmax layer, whereas dropout is applied throughout the model. This can explain why dropout has a larger impact than softmax tempering.

In the high-resource setting, in contrast, dropout does not positively impact on the translation quality. In the context of high-resource NMT, the impact of dropout on the Transformer was never explicitly investigated in detail. In such a situation, the large quantities of data might enable enough regularization. However, in the case of Transformer Base, temperature values of 1.2 to 1.6 tend to improve the greedy search performance when dropout is not used, bridging the performance gap between the Transformer Base and Transformer Big models. This shows that softmax tempering can be an alternative to dropout depending on the setting. This warrants further exploration of the relationship between data regularization and model regularization.

| Dropout | Decoding | BnEn | ViEn | EnDe | ||

|---|---|---|---|---|---|---|

| (Base) | (Big) | |||||

| Yes | 1.0 | Greedy | 7.1 | 19.4 | 28.2 | 32.7 |

| 1.0 | Beam | 8.5 | 21.1 | 29.2 | 33.7 | |

| Greedy | 9.1 | 21.3 | 29.1 | 33.6 | ||

| Beam | 9.3† | 21.5 | 30.1† | 34.5† | ||

| No | 1.0 | Greedy | 2.6 | 17.4 | 29.5 | 33.3 |

| 1.0 | Beam | 3.1 | 19.9 | 30.7 | 34.5 | |

| Greedy | 3.5 | 20.9† | 30.6 | 33.3 | ||

| Beam | 3.5 | 21.1† | 31.7† | 34.1 | ||

5.4 Temperature and Model Learning

We expected that tempering leads to a smoother softmax and loss minimization using such a softmax makes it sharper as training progresses. With softmax tempering, the model will continue to receive strong gradient updates even during later training stages due to the deliberate perturbation of the softmax distribution. Thus, we confirm whether our model truly behaves this way through visualizing the softmax entropies and gradient values.

Figure 2 visualizes the variation of softmax entropy averaged over all tokens in a batch during training. The left-hand side shows the entropy of tempered softmax distribution in Equation (1), where there is no visible differences between charts with different values for temperature, i.e., . Considering that the distribution is tempered with , this indicates that the distribution of logits, , is sharper when tempered with a higher . The right-hand side plots the entropy of softmax distribution derived from the logits without dividing them by . The lower entropy confirm that the distribution of logits is indeed sharper with higher and that division by as in Equation (1) counters the effect of sharpening. This means that the distribution of logits is forced to become sharper and thus produce exactly one word that the model believes the best to generate the rest of the sequence. Given the fact that translation quality is improved by softmax tempering, designing training methods that lead to sharper distribution might be useful.

Figure 3 shows the gradient norms during training with softmax tempering. This revealed that, similarly to ordinary non-tempered training, gradient norms in softmax tempering first increase during the warm-up phase of training and then gradually decrease. However, the major difference is that the norm values significantly decrease for the non-tempered training, whereas they are much higher for training with softmax tempering. Note that we re-scaled the loss for softmax tempering as in Equation (2), which is one reason why the gradient norms are higher. Larger gradient norms indicate that strong learning signals are being back-propagated and this will continue as long as the softmax is forced to make erroneous decisions because of higher temperature values.

We can thus conclude that the noise introduced by softmax tempering and subsequent loss re-scaling strongly affect the behavior of NMT models which eventually have an impact on translation quality.

6 Conclusion

In this paper, we explored the utility of softmax tempering for training NMT models. Our experiments in low-resource and high-resource settings revealed that not only does softmax tempering lead to an improvement in the decoding quality but also bridges the gap between greedy and beam search performance. Consequently, we can use greedy search while achieving better translation quality than non-tempered models leading to 1.5 to 3.5 times faster decoding. We also explored the compatibility of softmax tempering with multilingualism and extreme parameter sharing, and explicitly investigated the complementarity of softmax tempering and dropout, where we show that softmax tempering can be an alternative to dropout in high-resource settings, while it is complementary to dropout in low-resource settings. Our analysis of the softmax entropies and gradients during training confirms that tempering gives precise softmaxes while enabling the model to learn with strong gradient signals even during late training stages.

In the future, we will explore the effectiveness of softmax tempering in other natural language processing tasks.

Acknowledgments

A part of this work was conducted under the commissioned research program “Research and Development of Advanced Multilingual Translation Technology” in the “R&D Project for Information and Communications Technology (JPMI00316)” of the Ministry of Internal Affairs and Communications (MIC), Japan. Atsushi Fujita was partly supported by JSPS KAKENHI Grant Number 19H05660.

References

- Bahdanau et al. (2015) Dzmitry Bahdanau, Kyunghyun Cho, and Yoshua Bengio. 2015. Neural machine translation by jointly learning to align and translate. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, USA. International Conference on Learning Representations.

- Courbariaux et al. (2017) Matthieu Courbariaux, Itay Hubara, Daniel Soudry, Ran El-Yaniv, and Yoshua Bengio. 2017. Binarized neural networks: Training deep neural networks with weights and activations constrained to +1 or -1. CoRR, abs/1602.02830.

- Dabre and Fujita (2019) Raj Dabre and Atsushi Fujita. 2019. Recurrent stacking of layers for compact neural machine translation models. In Proceedings of the AAAI Conference on Artificial Intelligence, pages 6292–6299, Honolulu, USA. Association for the Advancement of Artificial Intelligence.

- Dabre et al. (2019) Raj Dabre, Atsushi Fujita, and Chenhui Chu. 2019. Exploiting multilingualism through multistage fine-tuning for low-resource neural machine translation. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), pages 1410–1416, Hong Kong, China. Association for Computational Linguistics.

- Devlin et al. (2019) Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 4171–4186, Minneapolis, USA. Association for Computational Linguistics.

- Dong et al. (2015) Daxiang Dong, Hua Wu, Wei He, Dianhai Yu, and Haifeng Wang. 2015. Multi-task learning for multiple language translation. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing of the Asian Federation of Natural Language Processing (ACL-IJCNLP), Volume 1: Long Papers, pages 1723–1732, Beijing, China. Association for Computational Linguistics.

- Eriguchi et al. (2017) Akiko Eriguchi, Yoshimasa Tsuruoka, and Kyunghyun Cho. 2017. Learning to parse and translate improves neural machine translation. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), pages 72–78, Vancouver, Canada. Association for Computational Linguistics.

- Firat et al. (2016) Orhan Firat, Kyunghyun Cho, and Yoshua Bengio. 2016. Multi-way, multilingual neural machine translation with a shared attention mechanism. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 866–875, San Diego, USA. Association for Computational Linguistics.

- Hinton et al. (2015) Geoffrey Hinton, Oriol Vinyals, and Jeffrey Dean. 2015. Distilling the knowledge in a neural network. CoRR, abs/1503.02531.

- Johnson et al. (2017) Melvin Johnson, Mike Schuster, Quoc Le, Maxim Krikun, Yonghui Wu, Zhifeng Chen, Nikhil Thorat, Fernand a Viégas, Martin Wattenberg, Greg Corrado, Macduff Hughes, and Jeffrey Dean. 2017. Google’s multilingual neural machine translation system: Enabling zero-shot translation. Transactions of the Association for Computational Linguistics, 5:339–351.

- Kim and Rush (2016) Yoon Kim and Alexander M. Rush. 2016. Sequence-level knowledge distillation. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, pages 1317–1327, Austin, USA. Association for Computational Linguistics.

- Koehn and Knowles (2017) Philipp Koehn and Rebecca Knowles. 2017. Six challenges for neural machine translation. In Proceedings of the First Workshop on Neural Machine Translation, pages 28–39, Vancouver, Canada. Association for Computational Linguistics.

- Lin et al. (2016) Darryl D. Lin, Sachin S. Talathi, and V. Sreekanth Annapureddy. 2016. Fixed point quantization of deep convolutional networks. In Proceedings of the 33rd International Conference on International Conference on Machine Learning, pages 2849–2858, New York, USA.

- Mao et al. (2020) Zhuoyuan Mao, Fabien Cromieres, Raj Dabre, Haiyue Song, and Sadao Kurohashi. 2020. JASS: Japanese-specific sequence to sequence pre-training for neural machine translation. In Proceedings of the 12th Language Resources and Evaluation Conference, pages 3683–3691, Marseille, France. European Language Resources Association.

- Miceli Barone et al. (2017) Antonio Valerio Miceli Barone, Barry Haddow, Ulrich Germann, and Rico Sennrich. 2017. Regularization techniques for fine-tuning in neural machine translation. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, pages 1489–1494, Copenhagen, Denmark. Association for Computational Linguistics.

- Ng (2004) Andrew Y. Ng. 2004. Feature selection, L1 vs. L2 regularization, and rotational invariance. In Proceedings of the 21st International Conference on Machine Learning, Banff, Canada.

- Oda et al. (2017) Yusuke Oda, Philip Arthur, Graham Neubig, Koichiro Yoshino, and Satoshi Nakamura. 2017. Neural machine translation via binary code prediction. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 850–860, Vancouver, Canada. Association for Computational Linguistics.

- Papineni et al. (2002) Kishore Papineni, Salim Roukos, Todd Ward, and Wei-Jing Zhu. 2002. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting on Association for Computational Linguistics (ACL), pages 311–318, Philadelphia, USA. Association for Computational Linguistics.

- Post (2018) Matt Post. 2018. A call for clarity in reporting BLEU scores. In Proceedings of the Third Conference on Machine Translation: Research Papers, pages 186–191, Belgium, Brussels. Association for Computational Linguistics.

- Riza et al. (2016) Hammam Riza, Michael Purwoadi, Gunarso, Teduh Uliniansyah, Aw Ai Ti, Sharifah Mahani Aljunied, Luong Chi Mai, Vu Tat Thang, Nguyen Phuong Thai, Vichet Chea, Rapid Sun, Sethserey Sam, Sopheap Seng, Khin Mar Soe, Khin Thandar Nwet, Masao Utiyama, and Chenche n Ding. 2016. Introduction of the Asian Language Treebank. In Proceedings of the 2016 Conference of the Oriental Chapter of International Committee for Coordination and Standardization of Speech Database s and Assessment Technique (O-COCOSDA), pages 1–6, Bali, Indonesia.

- See et al. (2016) Abigail See, Minh-Thang Luong, and Christopher D. Manning. 2016. Compression of neural machine translation models via pruning. In Proceedings of the 20th SIGNLL Conference on Computational Natural Language Learning, pages 291–301, Berlin, Germany. Association for Computational Linguistics.

- Sennrich et al. (2016) Rico Sennrich, Barry Haddow, and Alexandra Birch. 2016. Improving neural machine translation models with monolingual data. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (ACL), Volume 1: Long Papers, pages 86–96, Berlin, Germany. Association for Computational Linguistics.

- Song et al. (2019) Kaitao Song, Xu Tan, Tao Qin, Jianfeng Lu, and Tie-Yan Liu. 2019. MASS: masked sequence to sequence pre-training for language generation. In Proceedings of the 36th International Conference on Machine Learning, pages 5926–5936, Long Beach, USA.

- Srivastava et al. (2014) Nitish Srivastava, Geoffrey Hinton, Alex Krizhevsky, Ilya Sutskever, and Ruslan Salakhutdinov. 2014. Dropout: A simple way to prevent neural networks from overfitting. Journal of Machine Learning Research, 15(56):1929–1958.

- Sutskever et al. (2014) Ilya Sutskever, Oriol Vinyals, and Quoc V. Le. 2014. Sequence to sequence learning with neural networks. In Proceedings of the 27th Neural Information Processing Systems Conference (NIPS), pages 3104–3112, Montréal, Canada. Curran Associates, Inc.

- Vaswani et al. (2017) Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. In Proceedings of the 30th Neural Information Processing Systems Conference (NIPS), pages 5998–6008, Long Beach, USA. Curran Associates, Inc.

- Zoph et al. (2016) Barret Zoph, Deniz Yuret, Jonathan May, and Kevin Knight. 2016. Transfer learning for low-resource neural machine translation. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 1568–1575, Austin, USA. Association for Computational Linguistics.

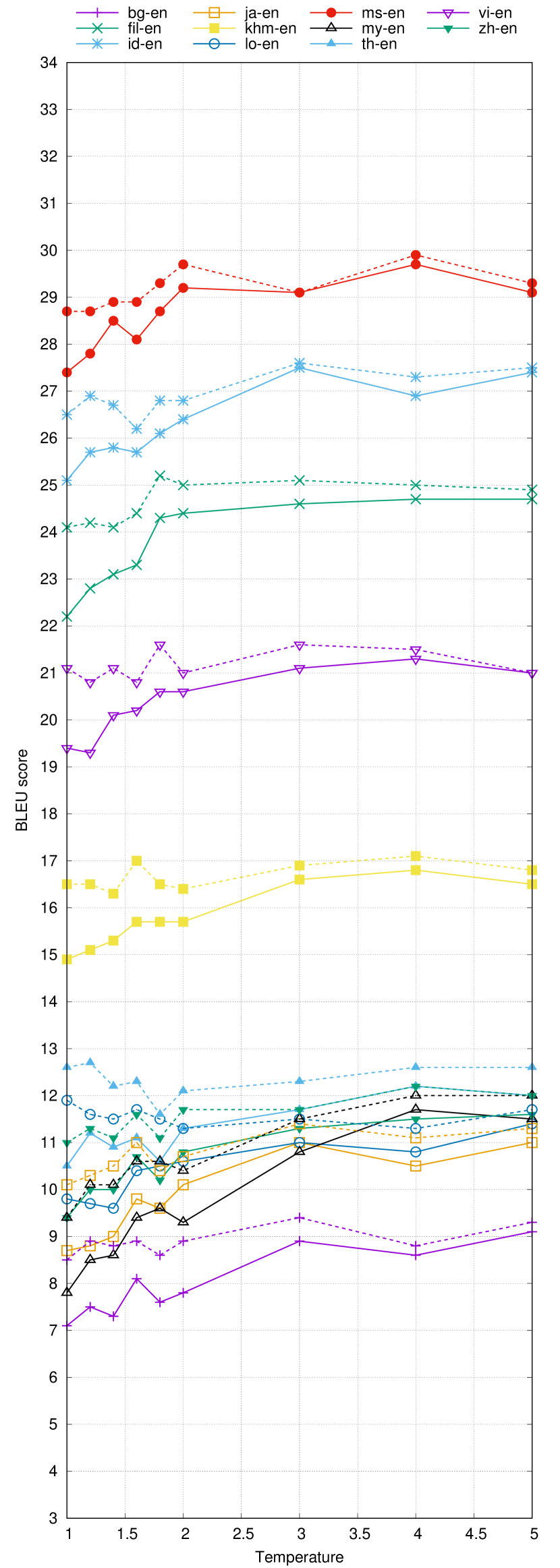

Appendix A Variation of Greedy and Beam Search Results with Temperature

Figure 4 shows the BLEU scores for all the 22 translation directions in the ALT translation tasks. Solid lines in the figure show the results obtained by greedy search. On the other hand, dotted lines are the oracle beam search result, i.e., at each temperature, the best score among 54 different combinations of beam sizes and length penalties is determined after computing BLEU score.