example \AtEndEnvironmentexample∎

Soft Demapping of Spherical Codes from

Cartesian Powers of PAM Constellations

Abstract

For applications in concatenated coding for optical communications systems, we examine soft-demapping of short spherical codes constructed as constant-energy shells of the Cartesian power of pulse amplitude modulation constellations. These are unions of permutation codes having the same average power. We construct a list decoder for permutation codes by adapting Murty’s algorithm, which is then used to determine mutual information curves for these permutation codes. In the process, we discover a straightforward expression for determining the likelihood of large subcodes of permutation codes. We refer to these subcodes, obtained by all possible sign flips of a given permutation codeword, as orbits. We introduce a simple process, which we call orbit demapping with frozen symbols, that allows us to extract soft information from noisy permutation codewords. In a sample communication system with probabilistic amplitude shaping protected by a standard low-density parity-check code that employs short permutation codes, we demonstrate that orbit demapping with frozen symbols provides a gain of about dB in signal-to-noise ratio compared to the traditional symbol-by-symbol demapping. By using spherical codes composed of unions of permutation codes, we can increase the input entropy compared to using permutation codes alone. In one scheme, we consider a union of a small number of permutation codes. In this case, orbit demapping with frozen symbols provides about dB gain compared to the traditional method. In another scheme, we use all possible permutations to form a spherical code that exhibits a computationally feasible trellis representation. The soft information obtained using the BCJR algorithm outperforms the traditional symbol-by-symbol method by dB. Overall, using the spherical codes containing all possible permutation codes of the same average power and the BCJR algorithm, a gain of dB is observed compared with the case of using one permutation code with the symbol-by-symbol demapping. Comparison of the achievable information rates of bit-metric decoding verifies the observed gains.

Index Terms:

Probabilistic amplitude shaping, spherical codes, permutation codes, trellis, soft-out demapping.I Introduction

Recent advancements in optical fiber communication have highlighted the potential of employing short spherical codes for modulation, leading to promising nonlinear gains [1]. These spherical codes, constructed from Cartesian powers of pulse amplitude modulation (PAM) constellations, are essentially unions of permutation codes [2]. Permutation codes have already found their application in probabilistic amplitude shaping (PAS) within the realm of fiber optic communication [3]. This paper aims to delve deeper into the study of such unions of permutation codes, exploring their potential benefits and implications in the context of optical fiber communications.

Permutation codes manifest three intriguing properties. Firstly, all codewords possess the same energy. This characteristic has been identified as the cause for their reduced cross-phase modulation (XPM) and enhanced received signal-to-noise ratio (SNR) when deployed over a nonlinear optical fiber [1], serving as the primary motivation for this work.

Secondly, all codewords, when considering the amplitude of their elements, exhibit the same type. Consequently, they can be utilized to approximate a probability mass function on their constituent constellation (i.e., the alphabet from which the elements of the initial vector are selected). This capability enables their use in distribution matching [4], a key property implicitly fundamental to the development of PAS [3].

Thirdly, minimum Euclidean distance decoding of permutation codes is notably straightforward [2], with a decoding complexity not worse than that of sorting.

The performance of permutation codes has been extensively studied in terms of their distance properties and error probability under maximum likelihood detection when transmitted over an additive white Gaussian noise (AWGN) channel (see [5] and references therein). The performance of permutation codes in terms of mutual information in an AWGN channel has remained largely elusive until recent advancements. In particular, the work of [3] suggests that, given reasonable protection with a forward error-correcting code, subsets of permutation codes with large blocklength can operate remarkably close to the Shannon limit.

To circumvent the inherent rate loss at short blocklengths [6], permutation codes of sufficiently large blocklength should be employed for PAS [7]. Conversely, to leverage their enhanced SNR in nonlinear fiber, a smaller blocklength is preferred [1]. This presents a trade-off between the blocklength and the linear/nonlinear gains, which is an area of focus in this work. Our primary interest lies in the application of permutation codes, and generalizations thereof, as inner multi-dimensional modulation formats in a concatenated scheme with an outer error-correcting code111In the reverse concatenation scheme used in PAS, the meanings of “inner” and “outer” should be interpreted from the point of view of the receiver.. We consider the PAS [3] setup to demonstrate our findings, as detailed in Section II. The novelty of our work lies in our choice of signal set for the inner modulation and in the soft demapping of the inner modulation.

We will focus on permutation codes with a PAM constituent constellation. This maintains standard transmitter designs, making our codes compatible with existing systems. As mentioned, the performance of permutation codes in terms of mutual information has not been extensively studied in the literature. This is chiefly due to the very large size of these codes, which makes conventional computation of mutual information practically impossible. In Sections III and IV, different types of achievable rates of permutation codes are discussed. In particular, using a novel list decoder for permutation codes constructed as a special case of Murty’s algorithm [8], we develop a methodology to obtain mutual information curves of permutation codes for the AWGN channel—a task that was otherwise infeasible using conventional methods. These curves, which are counterparts of the mutual information curves of [9] for PAM constellations, characterize the mutual information as a function of SNR for permutation codes when, at the transmitter, codewords are chosen uniformly at random. Using these curves, we study the shaping performance of permutation codes as a function of their blocklength and find the range of blocklengths for which a reasonable shaping gain can be expected.

The soft-demapping of permutation codes (or the more general spherical codes we will study) has received little attention in the literature. One common approach to obtaining soft information is to assume that the elements of each codeword are independent [3]. This significantly reduces the computational complexity of calculating the soft information, as it can be computed in a symbol-by-symbol fashion. The type of the permutation code contributes to the calculation of the soft information through the prior distribution. While the soft information obtained using this assumption becomes more accurate as the blocklength goes to infinity, for the small blocklengths of interest in nonlinear fiber, the symbol-by-symbol soft-demapping approach of [3] is far from accurate [10, 11]. One key result of this work is a simple and computationally efficient method, which is described in Section IV, to obtain soft information from received permutation codewords.

Finding the largest permutation code for a given average energy and a fixed constituent constellation is a finite-dimensional counterpart of finding the maximizer of input entropy with an average power constraint [12]. By removing the constraint on having a permutation code (i.e., having only one type), the problem of finding the largest spherical code for a given average energy and a fixed constituent constellation is more relevant at finite blocklengths. This will allow us to further increase the input entropy at shorter blocklengths of interest in communication over nonlinear optical fiber while preserving the constant-energy property. Most importantly, in Section V, we show that such codes exhibit a trellis structure with reasonable trellis complexity, allowing us to obtain soft information from the received spherical codewords. Moreover, the achievable rates of permutation codes under bit-metric decoding are numerically evaluated using various methods for estimating soft information. The soft-in soft-out structure of our scheme makes it an appealing choice for fiber-optic communications systems, as it allows for short constant-energy spherical codes with reasonable shaping gain and tolerance to nonlinear impairments caused by XPM.

Throughout this paper, we adopt the following notational conventions to ensure clarity and consistency. The set of real numbers is denoted as . A normal lowercase italicized font is used for variables, samples of a random variable and functions with one-letter names, as in or . A bold lowercase italicized font is used for vectors, which are always treated as column vectors, as in . A normal capital italicized font is used for random variables and graphs, as in and . The only exception to this rule is which is used as Landau’s big-O symbol. A bold capital italicized font is used for random vectors, as in . A sans-serif capital font is used for matrices, as in . A capital calligraphy font is used for sets and groups, as in and . A monospace font is used for energy (squared euclidean norm) of vectors as in or .

II Background

This section contains an overview of some of the most important concepts used in this paper including a brief description of permutation codes and a general overview of PAS. We also review the assignment problem, a fundamental combinatorial optimization problem which will play a central role in the development of list decoders for permutation codes.

II-A Permutation Codes

We denote the set of the first positive integers by . A permutation of length is a bijection from to itself. The set of all permutations of length forms a group under function composition. This group is called the symmetric group on and is denoted by .

There are different ways to represent a permutation . In the two-line form, the permutation is given as

The matrix form of is given as

where denotes a column vector of length with in the position and in every other position. The set of permutation matrices forms a group under matrix multiplication and is usually denoted as . This group is, of course, isomorphic to . A signed permutation matrix is a generalized permutation matrix whose nonzero entries are . The set of all signed permutation matrices forms a group under matrix multiplication and is usually denoted as .

A Variant I permutation code of length [2] is characterized as the set of points in real Euclidean -space that are generated by the group acting on a vector :

This means that the code is the set of all distinct vectors that can be formed by permuting the order of the elements of a vector . Each vector in is called a codeword. Likewise, a Variant II permutation code of length is characterized as the set of points in that are generated by the group acting on a vector :

Here, the code is the set of all distinct vectors that can be formed by permuting the order or changing the sign of the elements of a vector .

The initial vector of a Variant I or II permutation code is the codeword

that satisfies . The left most inequality is strict to be consistent with the use of a PAM constituent constellation.

II-B Probabilistic Amplitude Shaping

We consider the communication system of PAS as described in [3]. As shown in Fig. 1, at the first stage in the transmitter, a sequence of binary information inputs is mapped to a Variant I permutation code whose initial vector has an empirical distribution that resembles a desired input distribution. This first step is called permutation encoding and is described further in Section IV. Each symbol in the constituent constellation is labeled according to some Gray labeling of the used PAM. Therefore, corresponding to each Variant I codeword, there is a binary label obtained by concatenating the Gray label of each of the elements in the codeword. If the constituent constellation222We assume that the constituent constellation has a power-of-two size. is , the length of the label of each Variant I codeword of blocklength is exactly . The binary label of a codeword should not be confused with the information bits at the input of the permutation encoder that produced the codeword. Each Variant I permutation codeword is the output of the permutation encoder applied to a sequence of typically less than input information bits.

To protect the labels, a soft-decodable channel code is used. We emphasize that instead of encoding the input information bits that was used by the permutation encoder, the codeword labels are encoded by the channel code. This is fundamental in the development of PAS that uses bit-interleaved coded modulation as it allows the permutation codewords to have a semi-Gray labeling: if codewords are close in Euclidean distance, their labels are also close in the Hamming distance. If the input information bits are used to label the codewords, a semi-Gray labeling, even for small blocklengths, appears to be challenging to find. The channel encoder produces a sequence of parity bits which is used to assign signs to the elements of the Variant I permutation codeword333Sometimes, some binary inputs are directly used as sign bits of some of the elements of the permutation codeword. This is done when the ratio of the blocklength of the permutation code to the length of its binary label, , is larger than the overhead of the channel code. See [3] for details.. This will produce a Variant II permutation codeword which will be transmitted over an AWGN channel with noise variance .

A noisy version of each transmitted Variant II permutation codeword is received at the receiver. By demapping, we mean the detection of the binary label and sign bits of a permutation codeword from its received noisy version. The first block at the receiver estimates the log-likelihood ratio (LLR) for each bit in the label of the received word—a process we refer to as soft demapping. The estimated LLRs are then passed to the decoder of the channel code to approximate the label of the transmitted Variant I permutation codeword at the receiver. If an acceptable Variant I codeword is obtained, the inverse of permutation encoder produces the corresponding binary input sequence. Conversely, if the reproduced symbols do not form a valid Variant I permutation codeword, a block error is declared.

II-C The Assignment Problem

A bipartite graph is a triple consisting of two disjoint set of vertices and and a set of edges . Each edge in connects a vertex in to a vertex in . The graph is called balanced if . The bipartite graph is complete if . All bipartite graphs that we consider are assumed complete and balanced. The graph is weighted if there exist a weight function . A matching is a set of edges without common vertices. The weight of a matching is the sum of the weight of the edges in that matching. A perfect matching is a matching that matches all vertices of the graph. The maximum weight perfect matching problem is the problem of finding a perfect matching with maximum weight. This problem is usually called the assignment problem and is stated as follows.

Problem 1 (Assignment Problem).

Find a bijection such that the reward function

is maximized.

The assignment problem is typically solved using the Hungarian method [13, 14]. The time complexity of the Hungarian algorithm is . However, the weight function may have additional structure that can be exploited to reduce the complexity of solving the assignment problem.

A weight function is said to be multiplicative if there exist two functions

such that

If the weight function is multiplicative the assignment problem can be solved using sorting as follows: Assume

and that there are two permutations and on such that

In this case, the assignment problem can be immediately solved using the upper bound in the rearrangement inequality [15]. The bijection that solves the assignment problem is defined by

The solution can be found by sorting in time.

III Permutation Codes as Multidimensional Constellations

In this section, we discuss how permutation codes can be used as multidimensional constellations. List decoding of permutation codes is formulated as an assignment problem and proper weight functions for permutation codes are defined. In particular, a novel weight function for Variant II permutation codes is introduced that allows for efficient orbit list decoding of Variant II permutation codes. Orbit decoding is used in the rest of the paper in order to obtain soft information when demapping Variant II permutation codes. In the Appendix, using the definitions of this section, we present a methodology to obtain mutual information vs. SNR curves for permutation codes. Essential to the development of our method is a specialized version of Murty’s algorithm which is described in the Appendix. This section concludes with example mutual information curves which show that considerable shaping gain is obtained by using permutation codes with blocklengths as small as .

III-A List Decoding of Variant I Permutation Codes

We consider a vector Gaussian noise channel

where the input is a random vector uniformly distributed over a permutation code , the noise term is a standard normal random vector and is the standard deviation of the additive white Gaussian noise. The output is a noisy version of . The joint distribution444Instead of distinguishing between discrete, continuous or mixed random vectors, we always refer to as a distribution for convenience. of the input and the output is denoted by . Similarly, is the conditional distribution of the output conditional on the input and is the output distribution. List decoding of a Variant I permutation code is to find a set of codewords with the highest likelihoods so that

where is the list size.

To be able to find such a list of candidate codewords, we need to define a weight function for the maximum likelihood decoding of permutation codes. Because the noise is Gaussian, a codeword has a higher likelihood than a codeword if is closer to the received word than . That is,

Using the constant-energy property of permutation codes, we can simplify further to get

Therefore, list decoding with maximum likelihood reward is equivalent to list decoding with maximum correlation reward. For Variant I permutation codes, we can define the following assignment problem:

Problem 2 (Maximum Correlation Decoding).

Find a bijection such that the reward function is maximized.

This is an assignment problem with

and weight function

The weight function is clearly multiplicative with and . The list decoding problem, then, can be solved by a direct application of Murty’s algorithm as described in the Appendix.

The assignment problem defined for Variant I permutation codes is not suitable for Variant II permutation codes as in a Variant II code, each symbol can have a plus or minus sign. It is not possible to accommodate such arbitrary sign flips in the assignment problem defined for Variant I permutation codes. In what follows, we introduce maximum orbit-likelihood decoding of Variant II permutation codes that allows us to reformulate the decoding problem in the framework of an assignment problem.

III-B Orbit Decoding of Variant II Permutation Codes

A trivial subcode of a Variant II permutation code corresponding to a codeword is the subcode containing and all codewords obtained by changing the sign of elements in in all possible ways. We denote the group of -dimensional diagonal unitary matrices (i.e., diagonal integer matrices with diagonal entries) as . A trivial subcode corresponding to is the orbit of under the group action defined by

Similar to finding the codeword in a Variant I permutation code with the maximum likelihood, we can think about finding the orbit in a Variant II permutation code with the maximum orbit likelihood—a process that we call maximum orbit-likelihood decoding. Also, similar to a list of most likely codewords for a Variant I permutation code, we can think about finding a list of most likely orbits for a Variant II permutation code—a process that we refer to as orbit list-decoding. In order to use Murty’s algorithm, a weight function for the orbits needs to be defined. We denote the likelihood of the orbit of by

The key result of this section is the following theorem.

Theorem 1.

The likelihood of the orbit of under the action of is given by

| (1) |

where is a constant that depends on the energy of the received word , the energy of each permutation codeword, the noise standard deviation and the blocklength .

Proof.

∎

Theorem 1 suggests the weight function

for Murty’s algorithm in orbit list-decoding of Variant II permutation codes. This weight function, however, is not multiplicative. Using a generalized version of the rearrangement inequality [16], it turns out that this weight function has all the required properties of a multiplicative weight function that we used to simplify Murty’s algorithm in the Appendix.

Mutual information of Variant II permutation codes can be computed using the orbit likelihoods by the method discussed in the Appendix. Note that the contribution of the likelihood of one orbit in in (7) is equivalent to the contribution of all codewords in the orbit.

Example 1.

As an example, we find the mutual information vs. SNR for five codes with blocklength with the following initial vectors:

-

•

for Code 1, ,

-

•

for Code 2, ,

-

•

for Code 3, ,

-

•

for Code 4, ,

-

•

for Code 5, .

The average symbol power of each of the codes is , , , and , respectively, and the initial vectors have been chosen to maximize the code size given these power constraints. Note that the constituent constellation is an -PAM. The average power of this constituent constellation with uniform input distribution is . In Fig. 2, we illustrate the mutual information curves for these five codes as well as the constituent constellation under uniform input distribution. Also shown is the mutual information of the constituent constellation with Maxwell–Boltzman (MB) distributions which are known to be entropy maximizers [12] and close to optimal, in terms of mutual information, for the AWGN channel [17]. The channel capacity is also depicted. It is obvious that none of the five codes show any shaping gain as all mutual information curves are below that of the -PAM with uniform distribution.

Example 2.

As another example, we find the mutual information vs. SNR for five codes with blocklength . The initial vectors are chosen so that the largest Variant II codes with the average power of , , , and , are chosen. The list size for Murty’s algorithm was set to . The number of Monte Carlo samples for each SNR is . However, no numerically significant difference was observed even with samples. In Fig. 3, we illustrate the mutual information curves for these five codes as well as the constituent constellation under uniform and MB input distribution. It is evident that Code 1, Code 2 and Code 3 show considerable shaping gains as their curves cross that of the -PAM with uniform distribution. For lower SNR values, one needs to have larger list sizes to get an accurate estimate for . As a result, the computation of curves for lower SNRs is slower. We did not generate the curves for lower SNRs as we were mostly interested in the crossing of the permutation codes’ mutual information curves and the mutual information curve of the -PAM with uniform distribution.

Remark 1.

As shown in Fig. 3, a short blocklength of is enough to have considerable shaping gain over the AWGN channel. In practice, we are usually interested in using binary channel codes and, therefore, the so-called bit-metric decoding (BMD) of permutation codes, in which the mutual information induced via the labels of permutation codewords is considered. In the next section, we present BMD rates for Code 2 of Example 2 and its generalizations for various methods of soft demapping.

IV Soft Demapping of Permutation Codes

IV-A Variant I Encoder Function and its Inverse

A Variant I permutation code is uniquely identified by its initial vector . Note that when the length of the code is , the number of distinct elements in is at most . That is,

We denote the number of unique elements of by . We define as the vector whose elements are the distinct elements of in ascending order. That is, if

then . The number of times each occurs in is denoted as . This means that

The size of this code is

and its rate is .

The problem of encoding permutation codes has been considered by many [19, 4, 20, 18]. Here, we adapt the encoder provided in [18]. A pseudocode for this algorithm is provided in Algorithm 1. A pseudocode for the inverse encoder is given in Algorithm 2. The computational complexity of both algorithms is arithmetic operations.

IV-B Variant II Encoder Function and its Inverse

A Variant II permutation code , similar to a Variant I permutation code, is uniquely identified by its initial vector . Note that all elements of the initial vector are positive. We use the same notation to denote the number of distinct elements of . Similarly, the vector is defined as the vector whose elements are the distinct elements of in ascending order. The number of times each occurs in is denoted as . The rate of this code is

Let . Encoding an integer in the range to can be done using an encoder for its constituent Variant I permutation code. The least significant bits are mapped to a codeword in the constituent Variant I permutation code using an encoder as in Section IV-A. The signs of the symbols in the resulting codeword are chosen using the most significant bits. If the encoder for the constituent Variant I permutation code is , the encoder for the Variant II permutation code will be the function

such that

where, base- representation of is . Similarly, an inverse encoder can be defined using the inverse encoder for the constituent Variant I permutation code.

IV-C Permutation Encoding for PAS

We are now ready to explicitly describe the first block in the PAS system of Fig. 1. Assume we want to use a Variant I permutation code with initial vector and blocklength whose rate is . The first step for us is to expurgate the corresponding Variant I code so that its size is a power of . Let . To identify a sequence of amplitudes of length , i.e., a Variant I codeword of blocklength , one can use the encoder defined in Section IV-A. The input is an integer in the range . Therefore, each codeword in this Variant I permutation code can be indexed by a binary vector of length . This way, if is not an integer, the Variant I permutation code is expurgated so that only the first codewords, based on the lexicographical ordering, are utilized. This can potentially introduce a considerable correlation between the elements of codewords and breaks the geometric uniformity of the permutation code [21].

In order to properly randomize so that each codeword is surrounded by approximately equal number of codewords at any given distance, we first try to randomly spread the set of integers in the range of on the integers in the range of . One way to achieve this is to take the sampled integer from and multiply it by a randomly chosen integer coprime with and add another randomly chosen integer in modulo . The coprime factor and the dither can be generated based on some shared randomness so that the receiver can also reproduce them synchronously. Consequently, the first block in Fig. 1 takes in an integer from at the input and produces a Variant I codeword by

The codeword serves as the set of amplitudes in PAS and is labeled based on the Gray labeling of the constituent PAM constellation. The labels are then encoded using a systematic soft-decodable channel code. The resulting parity bits are used to set the signs of each of the amplitudes and as a result, a Variant II permutation code is produced.

Example 3.

To better understand the significance of randomization, consider the case of using a permutation code and choosing the first codewords according to the lexicographical ordering of the codewords. For example, assume that the initial vector is

With this choice, . If we only consider the first codewords according to the lexicographical ordering, the last codeword that we use is

Note, for instance, that in none of the codewords is the first symbol or . Also note that the second symbol is never . As is evident, the correlation between symbols is very strong. With the proposed randomization, such strong correlations are avoided to a great extend.

IV-D Soft-in Soft-out Demapping

The input to the first block at the receiver in Fig. 1 contains noisy version of Variant II permutation codewords. Assume that the received word is and that we want to estimate the LLR for the bit, , which is a part of the label in the symbol, , of the Variant II codeword . Four different estimators are discussed in what follows.

Exact Method: To get the exact LLR, one should use

| (2) |

where, in the numerator, runs over all Variant II codewords that their bit is , and that

The condition for codewords in the denominator is similar except bit is . The total number of terms in both summations is which is at least half of the size of the Variant II code and is typically very large. Note that there are label bits in each codeword and, as a result, the computational complexity of this method is operations per permutation codeword. The exact method, therefore, is only useful for very short permutation codes.

Symbol-by-Symbol: In the symbol-by-symbol method of [3], the LLR is estimated using

| (3) |

where runs over all symbols in the constituent constellation and, with slight abuse of notation, is the number of occurrences of in the initial vector. From the point of view of the receiver, any word from is a possible codeword and in the computation of the LLRs all words in can contribute. This allows for a computationally efficient method of estimating LLRs. For each LLR, computation of (3) requires operations. Therefore, the computational complexity of the symbol-by-symbol method is operations per permutation codeword. However, this method is inaccurate at short blocklengths [10, 11].

Orbit Decoding with Frozen Symbols: One key contribution of this paper is this new and simple method of soft demapping using orbit decoding of permutation codes. If the bit is an amplitude bit (i.e., it is not a sign bit), to estimate we use

| (4) |

where runs over all Variant I codewords in the following list: For any amplitude , we only include the codeword with the most likely orbit such that on the list. That is, the most likely orbits for every element of the codeword frozen to any possible amplitude will contribute in the calculation of .

If the bit is a sign bit, we only take into account the contribution of the half of the codewords in each orbit that have positive sign in the numerator and the other half in the denominator. Using a technique similar to the proof of Theorem 1, one can show that for a sign bit

| (5) |

To find the corresponding orbit of each codeword on the list, the assignment problem needs to be solved with the frozen symbol as an inclusion constraint (See the Appendix). Once the received word is sorted by the magnitude of its elements, the orbit likelihood of each item on the list can be found using operations (see Theorem 1). There are a total of orbits to consider. Therefore, the orbit likelihoods can be computed with complexity. Once the orbit likelihoods are computed, each of the LLRs can be found with complexity. Therefore, the computational complexity of this method is operations per permutation codeword.

Example 4.

Consider a permutation code with the initial vector

The corresponding Variant I code has size , and since is an integer, there is no need for random spreading. Assume that we send the codeword

and the noise vector, sampled from a standard normal random distribution and rounded to one significant digit, is

Assume that the constituent constellation is labeled such that the amplitude of is labeled with bit and the amplitude of is labeled with bit . The LLR for the first amplitude bit using the exact method is . The estimate for this LLR using the symbol-by-symbol method is . The estimate for the LLR using orbit decoding is . Note that the sign of the LLR using the symbol-by-symbol method is more in favor of an amplitude of , while the other two values are in favor of an amplitude of in the first position. This shows that in this example the quality of the LLR estimated using the orbit decoding with frozen symbols is superior to the estimation using the symbol-by-symbol method.

Soft Decoding Using the BCJR Algorithm: For typical permutation code parameters of interest such as Code 2 of Example 2, the natural trellis representation of permutation codes has a prohibitively large number of states and edges [22, 10]. However, the trellis representation of the more general spherical codes that we study in Section V, which include permutation codes as subcodes, is much more tractable. Similar to the symbol-by-symbol method, in which the receiver presumes a codebook encompassing the used permutation code, we can consider the larger, more general spherical codes and estimate the likelihood of each symbol in the codeword by running the Bahl–Cocke–Jelinek–Raviv (BCJR) algorithm [23].

IV-E Numerical Evaluation

Consider the abstract channel for which the channel input is the label of the transmitted permutation codeword and its output is the noisy permutation codeword . The bit-metric decoding (BMD) rate555Here, is the same as . [7]

is an achievable rate [24] and can be used to compare different methods of soft demapping. This is particularly useful as it provides an achievable rate when a binary soft-decodable channel code is intended to be used, without specifically restricting the system to a particular implementation of the channel code. The BMD rate of permutations codes, as well as generalizations discussed in the next section, for different methods of estimating LLRs are shown in Fig. 4.

To better compare the symbol-by-symbol method with our proposed method using orbit decoding as well as decoding using the BCJR algorithm, we use a permutation code in a PAS scheme. We compare the permutation block error rate (BLER) for Code 2 from Example 2 for these three estimation methods. By each block, we mean a permutation codeword (or, in general, a spherical codeword) and BLER is the ratio of blocks received in error after the channel decoder to the total number of blocks sent. For the channel code, we use the low density parity check (LDPC) code from the 5G NR standard [25]. Each LDPC code frame contains permutation codewords. Among the sign bits, are used for systematic information bits [3]. In Fig. 5, we show the BLER for these three LLR estimation methods. Note that we think of the case of using a permutation code with symbol-by-symbol decoding [3] as the baseline for comparison with other spherical codes and decoding methods that we study. For instance, it is clear that at BLER , about dB improvement is achieved by using the orbit decoding with frozen symbols compared with the symbol-by-symbol method. Also, about dB improvement is achieved compared with the BCJR method. In Fig. 5, similar results are shown for more general spherical codes as well. The structure of these codes will be explained in Section V.

V Structure of Constant-Energy Shells of PAM Constellations

In this section, we explore a generalization of permutation codes that consists of codewords from a union of permutation codes, each corresponding to a distinct initial vector or “type”. If each of these initial vectors has the same energy, the resulting code is termed a “spherical code”. In comparison to permutation codes, this construction can deliver an increase in code rate. At the same time the codes we now describe are also spherical codes and so, as mentioned in the introduction, can reduce the XPM in the optical fiber channel. We are particularly interested in constructions of spherical codes that abide by the constraints enforced by standard (PAM) modulation formats. Given an underlying constellation and a desired codeword energy (squared sphere radius), the largest code can be designed is the intersection of a sphere of the given radius with the -fold product of the underlying constellation.

Larger spherical codes, while often superior in rate, can have high complexity for storage, encoding, and decoding. For this reason, for a given PAM constellation, we also investigate the structure of these codes so as to describe intermediate subcodes that can increase code performance without drastically increasing complexity.

V-A Complete Shell Codes

We design codes with blocklength that are shells of the product of -PAM constellations, constrained to have total energy in each block. This is formalized as follows.

Definition 1.

Given a symbol set , a blocklength- code consisting of all codewords of the form , where the s satisfy and is referred to as a complete shell code over .

We refer to the complete shell code of length and squared radius over the -PAM constellation as the code. Complete shell code automatically deliver a shaping gain, as the symmetry of the construction induces an approximate Boltzmann distribution over the symbols. That is, if codewords are selected uniformly, the probability distribution over the symbols is , where is a function of the code parameters and . Note that this is a discretized version of a Gaussian distribution.

Complete shell codes can be decomposed into a union of permutation subcodes as , where each is a permutation code, and the number of distinct permutation subcodes is a function of the code parameters. We will also write when is the code. We refer to each subcode as a type class following the terminology of, e.g., [26, Ch. 11.1]. Describing the code in this union form is useful when describing and analyzing the code performance.

We call the initial vector in a type class the type class representative. For instance, the code has two type classes, with representatives and . The first class contains codewords, and the second . In general, the number of type classes of a given energy is a complicated function, which we usually compute numerically. A loose bound for the maximum value of when varying and is . This becomes intractable quickly for larger values of .

V-B Encoding and Decoding Complete Codes

V-B1 Encoding

It is natural to extend the encoding and decoding methods described in Section IV to the complete shell codes. For encoding, if the type-class subcodes are , we can assign the first codeword indices to the first type class, the next to the second, and so forth. This is formalized in Algorithm 3. The index of the corresponding permutation subcode can be found using binary search on the vector cumulative sums . Since the complexity of Algorithm 1 is (as for the PAM case), our new algorithm has complexity . The randomization method of Section IV-C can be used here as well. The index input to this algorithm is obtained similarly to Section IV-C, with the exception that the code rate is the rate of the complete shell code.

V-B2 Orbit decoding

For decoding, we again consider our code as a union of permutation codes (type classes). When forming the list of most likely orbits for each frozen symbol, we find the most likely orbit for each type class and pick the one with the highest likelihood to put on our list. Once the received word is sorted by the magnitude of its elements, the orbit likelihood of each item on the list can be found using operations. There are a total of orbits to consider. Once the list of orbits with the highest likelihoods is formed, each of the LLRs can be computed with complexity. Therefore, the total computational complexity per spherical codeword is .

V-B3 BCJR Decoding

A natural alternative decoding procedure to consider in this context is BCJR decoding, as described in [23]. The complete shell code has a simple trellis structure, similar to that discussed in [27]. An example trellis for the code is shown in Fig. 6. Here, each path from left to right through the trellis represents an unsigned codeword, with states labeled by the energy accumulated up to that point in the code. It can be shown that at depths between 3 and , the trellis will have at most states. Since each state has a maximum of outgoing edges, the number of edges in the trellis is , which is the complexity of the algorithm.

V-B4 Results

If we accept our heuristic for , we see that orbit decoding has a much worse complexity than BCJR decoding, meaning that BCJR decoding is preferable. In Fig. 5 we see the results of decoding the complete shell code, using both symbol-by-symbol decoding and BCJR decoding. Results are presented in terms of the rate-normalized SNR which is defined as follows. The channel coding theorem for the real AWGN channel indicates that a coding scheme with rate can achieve an arbitrarily small probability of error if and only if

Equivalently, a coding scheme with rate can achieve an arbitrarily small probability of error if and only if

The quantity is defined as the rate-normalized SNR [28].

Symbol-by-symbol decoding is not optimal. Furthermore, the complete code outperforms the permutation code.

V-C Partial Shell Spherical Codes

For large , orbit decoding is impractical. Even BCJR decoding becomes unwieldy as grows. Here we define a new family of codes that has competitive rate and shaping properties, with vastly improved encoding and decoding complexities. In particular, keeping only a small number of the largest type classes in a complete shell code is often sufficient to create a high-performing code. We formalize this as follows.

Definition 2.

Take a complete shell code , where each is a distinct type class with symbols drawn from a modulation format , and . The subcode of defined by is referred to as the maximal -class partial shell code over .

The maximal -class partial shell code consists of the largest type classes. In many cases, relatively small values of result in codes whose rates are only marginally smaller than that of the complete shell code, with the benefit that the encoding and decoding algorithms now have a constant number of type classes, i.e., we have replaced the function with the constant . Note that corresponds to a permutation code.

Example 5.

Consider the , and codes. While these codes have different block-lengths, was chosen to give similar numbers of bits per dimension. In each case, we consider the subcodes for different values of , comparing the rate of the partial shell code to the rate of the complete code. In Fig. 7 the fractional rate loss is very small even when is small. These codes have 34, 113, and 369 type classes respectively, but values of below 10 already achieve 99% of the rate.

The acceptable reduction in rate will depend on the use-case for the code, but we already see the complexity benefits of using selective codes instead of complete codes. Conversely, if we want to create a selective code from a union of permutation codes, we see that the rate increases for adding each subsequent code to the union decrease quickly.

As previously noted, one of the appealing features of complete codes over PAM constellations is that they induce an approximate marginal Boltzmann distribution. Partial shell codes also have this property. This is a result of the fact that they are constructed from the largest type classes available, whose symbols are distributed proportionally to , a Boltzmann distribution, where parameter depends on . This result (more specifically its dual) is shown in [29].

The exact Boltzmann distribution does not give an integer solution in general, but the non-integer solution is often a near approximation for solutions. In fact, since we are often interested not only in the largest type class, but in the largest type classes, an alternative method for constructing -class partial shell codes is to start with the non-integer Boltzmann solution and search for several nearby solutions, e.g., by iterating over all combinations of integer values within some neighborhood of each non-integer entry, and keeping the resulting vectors that are the best solutions.

Example 6.

As an example, for the code, whose largest type class is Code 2 in Example 2, we have the following exact Boltzmann distribution:

| (6) |

with . The largest type classes in the integer-constrained case are given in Table I. The distributions are similar to the non-integer solution, and can be found by searching for integer solutions in its neighborhood. Each type class in this table contains approximately codewords, not counting sign flips (i.e., in the Variant I version). Most of the balance of the 113 type classes in the complete shell code are much smaller and contribute little, as seen in Example 5.

| Bits | |

|---|---|

| (24, 15, 7, 4) | 79.87 |

| (21, 18, 8, 3) | 79.40 |

| (23, 15, 9, 3) | 78.45 |

V-D Encoding and Decoding Partial Codes

The primary benefit of partial codes over complete codes is their improved complexity of decoding. Encoding can be done using Algorithm 3 with using type-classes.

V-D1 Encoding

V-D2 Type-class decoding

The orbit decoding algorithm is also the same as the algorithm for the complete case. Again, is replaced with , which gives a complexity of .

V-D3 BCJR decoding

Finally, we can still use BCJR decoding in the partial shell case. The trellis for the partial code is complicated, but we can decode more efficiently by using the trellis for the complete code, and reporting an error if the output is not a codeword. This still has complexity .

Comparing complexities, we see that orbit decoding is now preferable when , which happens as becomes large for fixed and .

V-E Results

Fig. 5 also shows the SNR values for the 4-maximal partial shell code. We see that they are intermediate between the results for the permutation and complete codes. It is important to note that, compared with orbit decoding, the complete shell code with symbol-by-symbol decoding performs very well and is less than 0.15 dB worse than decoding with the BCJR algorithm. In terms of computational complexity, this is much cheaper than the BCJR algorithm and, therefore, the complete shell code with symbol-by-symbol decoding is still practically competitive.

VI Conclusions

In this paper, we have studied the use of more general spherical codes in place of permutation codes for probabilistic amplitude shaping at short blocklengths, which are of particular relevance to optical communications. A key result is the development of a novel soft-demapping technique for these short spherical codes. When used in a PAS framework with a standard LDPC channel code, our findings suggest that a gain of about dB can be achieved compared to the conventional case of using one permutation code with the symbol-by-symbol decoding. In terms of BMD rate, the total gain is calculated to be more than dB for a particular spherical codes at blocklength .

However, while our results are promising, it remains to be seen how well our proposed methods work for nonlinear optical fiber. Future work will focus on further exploring this aspect, while studying possible improvements and general performance versus complexity trade-offs for our proposed methods.

[Computation of Mutual Information] One can calculate the mutual information between input and output of the channel by Monte Carlo evaluation of the mutual information integral given by

To find for each trial, one may use the total law of probability

The code is typically very large, meaning that this summation cannot be computed exactly. Instead, we use a list-decoding approximation as follows:

| (7) |

where is a list of codewords with the highest likelihoods so that

If is large enough, can be approximated accurately. Because of the exponential decay of a Gaussian distribution, usually a computationally feasible list size is enough to get an accurate estimate of . Note that higher SNRs require smaller list sizes.

Murty’s algorithm finds solutions to the assignment problem with the largest rewards [8]. We describe a specialized version of Murty’s algorithm pertinent to the list decoding problem of this paper using a running example. The first step is to find the maximizing solution .

Example 7.

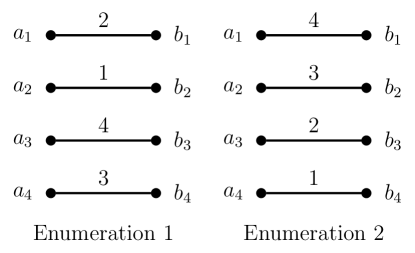

The next step in Murty’s algorithm is to choose an arbitrary enumeration666An enumeration of a non-empty finite set is a bijection . In other words, an enumeration is an ordered indexing of all the items in the set with numbers . of the edges in the matching . Then we partition the set of remaining matchings into subsets which we refer to as cells. Each cell is specified by a set of inclusion and exclusion constraints. The first cell contains all matchings that exclude edge in . The second cell contains all matchings that include edge but exclude edge . The next cell contains all matchings that include both edges and but exclude edge . The algorithm goes on to partition the set of matchings similarly according to the enumeration of the edges in . A weight function is defined for each cell by modifying the original weight function based on the inclusion and exclusion constraints. For an excluded edge, the corresponding weight is set to . For an included edge, the two vertices incident with that edge are removed from the vertex sets and and the corresponding row and column of the weight function are removed. The assignment problem is then solved for each cell with respect to the modified weight function.

Example 8.

We consider two different enumerations for the edges in . These are shown in Fig. 9. The cells obtained by partitioning according to both enumerations are shown in Fig. 10 and Fig. 11, respectively. For each cell, the inclusion constrains are shown with blue dotted lines and the exclusion constraints are shown with red dashed lines.

The algorithm then proceeds by solving the assignment problem for each cell. Due to the introduction of into the range of weight function, it should be clear that the weight function of the resulting assignment problems are not necessarily multiplicative. Therefore, solving the assignment problem for each cell may not be as easy as sorting. However, if the enumeration of the edges in is chosen carefully, solving the resulting assignment problem may still be easy. In particular, if we can start off by matching the vertices in that correspond to columns in the weight function with entries, we can reduce the problem again to an assignment problem with a multiplicative weight function. To formalize this intuition, the following definition will be useful.

Definition 3.

Let be a perfect matching of the balanced bipartite graph . Let be a weight function for the vertices in . An enumeration of is said to be ordered with respect to if

In other words, an enumeration is ordered with respect to the weight function of , if it sorts the edges based on the weight of the vertices in that are incident with the edges in descending order.

Definition 4.

A weight function is almost multiplicative if there exist two functions

such that assumes its maximum at ,

and

If the weight function of an assignment problem is multiplicative and an ordered enumeration with respect to the weight function of is used, the weight function of each cell after the first partitioning in Murty’s algorithm is almost multiplicative. Solving the assignment problem with an almost multiplicative weight function is straightforward. One can show that in a maximum weight matching, the edge incident on with the largest weight is included in the matching. After removing the corresponding row and column from the weight function, the problem then reduces to an assignment problem with a multiplicative weight function and can be solved by sorting.

Example 9.

Enumeration in our running example is ordered with respect to . In what follows, we only use this ordered enumeration. In each of the cells, the edge connected to vertex in with the largest vertex weight is first selected. This is shown by the thick black edge in Fig. 12. The weight function is correspondingly adjusted by removing one row and one column. After choosing the first edge, the rest of the edges in the maximum weight matching are found by sorting and are shown using thin gray lines.

A sorted list of potential candidates , sorted by their reward, is formed and all the solutions obtained for each cell are placed on this list. Recall that the solution with the largest reward is denoted . The solution with the second largest reward, , is the first item in , i.e., the potential candidate in with the largest reward. The algorithm removes this solution from and partitions the set of remaining matchings in its cell according to an enumeration of . Again, by choosing an ordered enumeration with respect to , the reduced problems can be solved by sorting.

Example 10.

We insert the solutions for each cell into the sorted list . The reward of the solutions of Cell , Cell and Cell in Fig. 12 are all the same and equal to . Note that in calculating the reward of each solution, the edges coming from the inclusion constraints (the blue dotted edges) are also counted. These three matchings are

The list is

We remove the first matching from this list and set the second solution with the largest reward to be the codeword corresponding to the matching .

We then partition the set of remaining matchings in Cell by considering the ordered enumeration for the edges in . The resulting cells, together with their inclusion and exclusion constraints as well as their weight functions are shown in Fig. 13. The inclusion and exclusion constraints of Cell are carried forward in forming Cell , Cell and Cell .

The algorithm then solves the assignment problem in the newly partitioned cells. The solution of each cell is then added to the list of candidates . The next matching with the largest weight will then be the first item in the sorted list . The algorithm proceeds by partitioning the corresponding cell with respect to the ordered enumeration of .

Example 11.

The solutions of the assignment problem in Cell , Cell and Cell are shown in Fig. 14. Again, the edge connected to vertex in with the largest vertex weight is selected first. The weight function is correspondingly adjusted by removing one row and one column. After choosing the first edge, the rest of the edges in the maximum-weight matching are found by sorting (shown using thin gray lines). The solution in Cell is referred to as and has a weight of . The solution in Cell is called and has a weight of . The solution in Cell , , has a weight of . The sorted list of candidate solutions is updated to

The next solution in Murty’s algorithm is then the codeword corresponding to the matching . The next step is to partition Cell with respect to the ordered enumeration of . Continuing this, one can see that is the codeword corresponding to the matching and is the codeword corresponding to the matching .

This specialized Murty’s algorithm continues until the solutions with the largest rewards are found.

Remark 2.

In practice, we may have some extra constraints on the perfect matchings that disallow some matchings. That is, it is common to have an allowed set of perfect matchings that is a proper subset of all possible perfect matchings. If one wishes to find the solutions to the assignment problem with the largest rewards subject to the extra constraint that the corresponding matchings should be in , once a candidate solution is chosen from the top of the list , the candidate solution should be tested for inclusion in the set of allowed solutions and only then be considered as a member in the final list of solutions. If such a solution is disallowed because of non-membership of , it still must be kept on the sorted list as the descendant cells may lead to a viable solution included in . We often expurgate permutation codes to have a subset with a power-of-two size, meaning that not all permutation codewords are allowed. This translates into a restriction on the set of allowed perfect matchings that we can have on the list of most likely codewords.

Acknowledgment

The authors wish to thank the reviewers of this paper for their insightful comments. Their constructive suggestions have significantly contributed to the improvement of this work. This work was supported in part by Huawei Technologies, Canada.

References

- [1] R. Rafie Borujeny and F. R. Kschischang, “Why constant-composition codes reduce nonlinear interference noise,” J. Lightw. Technol., vol. 41, pp. 4691–4698, July 2023.

- [2] D. Slepian, “Permutation modulation,” Proceedings of the IEEE, vol. 53, pp. 228–236, Mar. 1965.

- [3] G. Böcherer, F. Steiner, and P. Schulte, “Bandwidth efficient and rate-matched low-density parity-check coded modulation,” IEEE Trans. Commun., vol. 63, pp. 4651–4665, Dec. 2015.

- [4] P. Schulte and G. Böcherer, “Constant composition distribution matching,” IEEE Trans. Inf. Theory, vol. 62, pp. 430–434, Jan. 2016.

- [5] I. Ingemarsson, Group Codes for the Gaussian Channel, pp. 73–108. Berlin, Heidelberg: Springer Berlin Heidelberg, 1989.

- [6] Y. C. Gültekin, T. Fehenberger, A. Alvarado, and F. M. Willems, “Probabilistic shaping for finite blocklengths: Distribution matching and sphere shaping,” Entropy, vol. 22, p. 581, May 2020.

- [7] G. Böcherer, “Probabilistic amplitude shaping,” Foundations and Trends in Communications and Information Theory, vol. 20, pp. 390–511, 2023.

- [8] K. G. Murty, “An algorithm for ranking all the assignments in order of increasing cost,” Operations Research, vol. 16, pp. 682–687, June 1968.

- [9] G. Ungerboeck, “Channel coding with multilevel/phase signals,” IEEE Trans. Inf. Theory, vol. 28, pp. 55–67, Jan. 1982.

- [10] P. Schulte, W. Labidi, and G. Kramer, “Joint decoding of distribution matching and error control codes,” in International Zurich Seminar on Information and Communication, pp. 53–57, ETH Zurich, 2020.

- [11] Y. Luo and Q. Huang, “Joint message passing decoding for LDPC codes with CCDMs,” Optics Letters, vol. 48, pp. 4933–4936, Oct. 2023.

- [12] F. R. Kschischang and S. Pasupathy, “Optimal nonuniform signaling for Gaussian channels,” IEEE Trans. Inf. Theory, vol. 39, pp. 913–929, May 1993.

- [13] R. Burkard, M. Dell’Amico, and S. Martello, Assignment Problems. SIAM, 2012.

- [14] H. W. Kuhn, “The Hungarian method for the assignment problem,” Naval Research Logistics Quarterly, vol. 2, pp. 83–97, Mar. 1955.

- [15] G. H. Hardy, J. E. Littlewood, and G. Pólya, Inequalities. Cambridge, England: Cambridge University Press, Feb. 1988.

- [16] J. Holstermann, “A generalization of the rearrangement inequality,” Mathematical Reflections, vol. 5, no. 4, 2017.

- [17] S. Delsad, “Probabilistic shaping for the AWGN channel,” 2023.

- [18] A. Nordio and E. Viterbo, “Permutation modulation for fading channels,” in 10th International Conference on Telecommunications (ICT), vol. 2, pp. 1177–1183 vol.2, 2003.

- [19] T. Berger, F. Jelinek, and J. Wolf, “Permutation codes for sources,” IEEE Trans. Inf. Theory, vol. 18, pp. 160–169, Jan. 1972.

- [20] W. Myrvold and F. Ruskey, “Ranking and unranking permutations in linear time,” Information Processing Letters, vol. 79, pp. 281–284, Sept. 2001.

- [21] G. D. Forney, “Geometrically uniform codes,” IEEE Trans. Inf. Theory, vol. 37, pp. 1241–1260, Sept. 1991.

- [22] F. R. Kschischang, “The trellis structure of maximal fixed-cost codes,” IEEE Trans. Inf. Theory, vol. 42, pp. 1828–1838, Nov. 1996.

- [23] L. Bahl, J. Cocke, F. Jelinek, and J. Raviv, “Optimal decoding of linear codes for minimizing symbol error rate,” IEEE Trans. Inf. Theory, vol. 20, pp. 284–287, Mar. 1974.

- [24] G. Böcherer, “Achievable rates for shaped bit-metric decoding,” CoRR, vol. abs/1410.8075, 2014.

- [25] 3GPP, “5G NR multiplexing and channel coding,” Tech. Rep. TS 138.212 V15.2.0 (2018-07), 3GPP, July 2018.

- [26] T. M. Cover and J. A. Thomas, Elements of information theory. John Wiley & Sons, 2005.

- [27] Y. C. Gültekin, W. J. van Houtum, A. G. C. Koppelaar, and F. M. J. Willems, “Enumerative sphere shaping for wireless communications with short packets,” IEEE Trans. Wireless Commun., vol. 19, pp. 1098–1112, Feb. 2020.

- [28] G. D. Forney and G. Ungerboeck, “Modulation and coding for linear Gaussian channels,” IEEE Trans. Inf. Theory, vol. 44, pp. 2384–2415, Oct. 1998.

- [29] I. Ingemarsson, “Optimized permutation modulation,” IEEE Trans. Inf. Theory, vol. 36, no. 5, pp. 1098–1100, 1990.

| Reza Rafie Borujeny (Member, IEEE) received the B.Sc. degree from the University of Tehran in 2012, the M.Sc. degree from the University of Alberta in 2014 and the Ph.D. degree from the University of Toronto in 2022 all in electrical and computer engineering. His research interests include applications of information theory and coding theory. |

| Susanna E. Rumsey (Graduate Student Member, IEEE) was born in Toronto, ON, Canada in 1993. She received the B.A.Sc. degree with honours in engineering science (major in engineering physics), and the M.Eng and M.A.Sc. degrees in electrical and computer engineering from the University of Toronto, Toronto, ON, Canada, in 2015, 2016, and 2019 respectively. Since 2019, she has been a Ph.D. student in electrical and computer engineering at the University of Toronto, Toronto, ON, Canada. |

| Stark C. Draper (Senior Member, IEEE) received the B.S. degree in Electrical Engineering and the B.A. degree in History from Stanford University, and the M.S. and Ph.D. degrees in Electrical Engineering and Computer Science from the Massachusetts Institute of Technology (MIT). He completed postdocs at the University of Toronto (UofT) and at the University of California, Berkeley. He is a Professor in the Department of Electrical and Computer Engineering at the University of Toronto and was an Associate Professor at the University of Wisconsin, Madison. As a Research Scientist he has worked at the Mitsubishi Electric Research Labs (MERL), Disney’s Boston Research Lab, Arraycomm Inc., the C. S. Draper Laboratory, and Ktaadn Inc. His research interests include information theory, optimization, error-correction coding, security, and the application of tools and perspectives from these fields in communications, computing, learning, and astronomy. He has been the recipient of the NSERC Discovery Award, the NSF CAREER Award, the 2010 MERL President’s Award, and teaching awards from UofT, the University of Wisconsin, and MIT. He received an Intel Graduate Fellowship, Stanford’s Frederick E. Terman Engineering Scholastic Award, and a U.S. State Department Fulbright Fellowship. He spent the 2019–2020 academic year on sabbatical visiting the Chinese University of Hong Kong, Shenzhen, and the Canada-France-Hawaii Telescope (CFHT), Hawaii, USA. Among his service roles, he was the founding chair of the Machine Intelligence major at UofT, was the Faculty of Applied Science and Engineering (FASE) representative on the UofT Governing Council, is the FASE Vice-Dean of Research, and is the President of the IEEE Information Theory Society for 2024. |

| Frank R. Kschischang (Fellow, IEEE) received the B.A.Sc. degree (with honors) from the University of British Columbia, Vancouver, BC, Canada, in 1985 and the M.A.Sc. and Ph.D. degrees from the University of Toronto, Toronto, ON, Canada, in 1988 and 1991, respectively, all in electrical engineering. Since 1991 he has been a faculty member in Electrical and Computer Engineering at the University of Toronto, where he presently holds the title of Distinguished Professor of Digital Communication. His research interests are focused primarily on the area of channel coding techniques, applied to wireline, wireless and optical communication systems and networks. He has received several awards for teaching and research, including the 2010 Communications Society and Information Theory Society Joint Paper Award, and the 2018 IEEE Information Theory Society Paper Award. He is a Fellow of IEEE, of the Engineering Institute of Canada, of the Canadian Academy of Engineering, and of the Royal Society of Canada. During 1997–2000, he served as an Associate Editor for Coding Theory for the IEEE Transactions on Information Theory, and from 2014–16, he served as this journal’s Editor-in-Chief. He served as general co-chair for the 2008 IEEE International Symposium on Information Theory, and he served as the 2010 President of the IEEE Information Theory Society. He received the Society’s Aaron D. Wyner Distinguished Service Award in 2016. He was awarded the IEEE Richard W. Hamming Medal for contributions to the theory and practice of error correcting codes and optical communications in 2023. |