SocialGuard: An Adversarial Example Based Privacy-Preserving Technique for Social Images

Abstract

The popularity of various social platforms has prompted more people to share their routine photos online. However, undesirable privacy leakages occur due to such online photo sharing behaviors. Advanced deep neural network (DNN) based object detectors can easily steal users’ personal information exposed in shared photos. In this paper, we propose a novel adversarial example based privacy-preserving technique for social images against object detectors based privacy stealing. Specifically, we develop an Object Disappearance Algorithm to craft two kinds of adversarial social images. One can hide all objects in the social images from being detected by an object detector, and the other can make the customized sensitive objects be incorrectly classified by the object detector. The Object Disappearance Algorithm constructs perturbation on a clean social image. After being injected with the perturbation, the social image can easily fool the object detector, while its visual quality will not be degraded. We use two metrics, privacy-preserving success rate and privacy leakage rate, to evaluate the effectiveness of the proposed method. Experimental results show that, the proposed method can effectively protect the privacy of social images. The privacy-preserving success rates of the proposed method on MS-COCO and PASCAL VOC 2007 datasets are high up to 96.1% and 99.3%, respectively, and the privacy leakage rates on these two datasets are as low as 0.57% and 0.07%, respectively. In addition, compared with existing image processing methods (low brightness, noise, blur, mosaic and JPEG compression), the proposed method can achieve much better performance in privacy protection and image visual quality maintenance.

keywords:

Artificial intelligence security, Privacy protection, Social photos, Adversarial examples, Object detectors1 Introduction

In recent years, artificial intelligence (AI) techniques have been widely applied in a variety of tasks. Although bringing many conveniences, the abuse of AI techniques will also cause a series of security problems, such as social privacy disclosure. With the popularity of social platforms, people are keen to share their photos online. However, malicious hackers or entities can leverage the deep neural network (DNN) based object detectors (or image classifiers) to extract valuable private information from those uploaded social photos. In this way, they can analyze and explore users’ preferences, and push the accurate and targeted commercial advertisements [1]. Moreover, the leakage of sensitive information (such as identity) can even cause the users to suffer from property losses. For example, Echizen [2] indicated that the fingerprint might be stolen from the shared photos that contain the “V” gesture.

So far, there are several defense strategies to protect the privacy of the shared social photos. The most direct and common countermeasure is to set user access control [3, 4, 5], where the strangers are not permitted to browse private personal photos. In addition, the image encryption based techniques [6, 7] are also effective for protecting sensitive data on shared photos. However, the above two methods will either reduce the users’ social experience (access control), or will require huge computation overhead (image encryption). Therefore, the image processing methods, such as blur, noise and occlusion, have been widely used to protect the privacy contents in images. However, these image processing methods will greatly affect the visual qualities of the photos. Moreover, they are ineffective to resist the detection of those advanced DNN based object detectors [8, 9].

In this work, we propose a novel adversarial example based method to protect social privacy from being stolen. The key idea is that, the DNN based object detectors are sensitive to small perturbations [8, 10, 11], i.e., adversarial examples. By constructing adversarial version of these uploaded social photos, i.e., adding some visual invisible perturbations into the photos, the DNN based object detectors can be successfully fooled. Consequently, the object detectors will make incorrect predictions, or even completely unaware of the existence of the objects in a photo, thus the private information in the social photos can be protected.

The main contributions of this paper are as follows:

-

1.

Protect the social privacy effectively without affecting the visual qualities of the photos. We propose a method based on adversarial examples to protect the privacy in social photos, which can make Faster R-CNN [12] detector fails to predict any bounding box in a social image. Experimental evaluations on two datasets (MS-COCO [13] and PASCAL VOC 2007 [14]) show that, the privacy-preserving success rate of the proposed method is high up to 96.1% and 99.3%, respectively. Compared with existing social privacy protection methods, the proposed method can effectively protect image contents by fooling DNN based object detectors, without affecting the visual qualities of the social photos.

-

2.

Supporting customized privacy setting. Some objects (such as person or commercialized objects) in social images contain personal information and thus can be considered as sensitive objects. The proposed method can not only hide all the objects from being detected, but can also make the customized sensitive objects in a social image be incorrectly predicted. The user can customize the sensitive objects that he wants to keep private. After the social image is processed by the proposed method, Faster R-CNN will identify the customized sensitive objects incorrectly.

-

3.

Use the attack method as a defense. Adversarial example used to be a malicious attack method against DNN models. In this paper, we are doing the opposite by using the attack as a defense technique for resisting the detection of DNN based object detectors, so as to protect the privacy information in shared social photos from being disclosed.

The rest of this paper is organized as follows. Section 2 describes the related work on adversarial example attacks, the workflow of Faster R-CNN, and the existing social privacy protection works against DNN based privacy stealing. Section 3 elaborates the proposed method. Section 4 presents the experimental results on two standard image datasets. This paper is concluded in Section 5.

2 Related work

In this section, the adversarial example attacks, the workflow of Faster R-CNN, and the existing four social privacy protection works against DNN based privacy stealing, are reviewed.

2.1 Adversarial example attacks

Some researches have indicated that DNN models are vulnerable to adversarial examples, and a range of adversarial example generation methods have been proposed. Szegedy et al. [15] first proposed adversarial example attacks against the DNN models, and developed the L-BFGS algorithm to construct the adversarial perturbations. By adding the generated perturbations into a clean image, the target DNN model will output the incorrect classification result. Then, Goodfellow et al. [16] proposed the Fast Gradient Sign Method (FGSM) to explain the linear behavior of adversarial perturbations, which is effective to accelerate the generation of adversarial examples. Carlini and Wagner [17] constructed the high-confidence adversarial examples with three different distance metrics (i.e., distance, distance, and distance), and their generated adversarial examples could successfully fool the distilled neural networks. Chen et al. [18] developed the ShapeShifter attack to craft physical adversarial examples to fool road sign object detectors. By applying the Expectation over Transformation technique [19, 20], the ShapeShifter is robust to the changes of different distances and angles.

2.2 Workflow of Faster R-CNN

The workflow of the Faster R-CNN detector includes two stages [12]: region proposal and box classification. In the first stage, the features in an image are extracted, and the region proposal network (RPN) is used to determine where an object may exist and mark the region proposal. In the second stage, the corresponding objects in the marked region proposals are classified by DNN based classifier. Meanwhile, the bounding box regression [21] is performed to obtain the accurate location of each object in the image.

2.3 Social privacy protection against DNN based privacy stealing

We proposed the idea of this paper in 2019 and applied for a Chinese patent [22]. Besides, few exploratory studies have been conducted on the protection of image privacy against DNN based privacy stealing. Li et al. [23] presented the Private Fast Gradient Sign Method (P-FGSM) to prevent the scene (e.g., hospital, church) in social images from being detected by DNN model. They added imperceptible perturbation to the social images to generate the adversarial social images, in which the scene will be incorrectly classified by DNN classifier. Shen et al. [24] proposed an algorithm to protect the sensitive information in social images against DNN based privacy stealing and keep the changes of the social images imperceptible. They also conducted a human subjective evaluation experiment to evaluate the factors that influence the visual sensitivity of human [24]. The difference between our work and [24] is that, [24] focuses on preventing sensitive information from being detected by DNN based classifier, while our work focuses on preventing sensitive objects from being detected by object detector. Shan et al. [25] proposed a Fawkes system to protect personal privacy against unauthorized face recognition models. Specifically, they add pixel-level perturbations to user’s photos before uploading them to the Internet [25]. The functionality of unauthorized facial recognition models trained on these photos with perturbations will be deteriorated seriously. The differences between our work and [25] are that: (i) The work [25] protects social privacy against facial recognition models, while our method protect social privacy against object detectors. (ii) The work [25] adds adversarial images to the training set of unauthorized facial recognition models to influence the training phase of these models (which is a strong assumption), while our work focuses on the testing phase of DNN detectors.

The above three related works focus on privacy protection against the image classifiers. Compared with image classifiers, attacking or deceiving object detectors is more challenging [26, 27, 8]. The reasons are as follows. First, an object detector is more complex, which can detect multiple objects at once. Second, the object detector can infer the true objects by the obtained information from the image background. To the best of the authors’ knowledge, the only social privacy protection work against object detectors is Liu et al. [8]. Liu et al. [8] constructed the adversarial examples with a stealth algorithm, which made all objects in the image invisible to the object detectors, thus protecting the image privacy from being detected. Compared to the work [8], our work has the following differences: (i) The mechanisms are different. The work [8] focuses on the region proposal stage (the first stage) of Faster R-CNN, while our method focuses on the classification stage (the second stage) of Faster R-CNN. Moreover, our method leverages the classification boundary of object detector to craft perturbations. Specifically, the position of the feature point of each bounding box is moved and crossed the classification boundary, which make object detector cannot find any sensitive object and abandon the corresponding bounding boxes, while the stealth algorithm in [8] performs a backpropagation on the loss function to create perturbations and suppress the generation of bounding boxes. (ii) The effects are different. The work [8] can hide all the objects in a social image from being detected, while our method can not only achieve this, but can also make the customized sensitive objects in a social image be misclassified as other classes.

3 Proposed adversarial example based privacy-preserving technique

In this section, the proposed adversarial example based social privacy-preserving technique is elaborated. First, the privacy protection goals for social photos are described. Second, the overall flow of the proposed method is presented. Third, the Object Disappearance Algorithm is elaborated. The proposed method can generate two kinds of adversarial examples. One can make all the objects in a social image invisible, and the other can make the customized sensitive objects in a social image be incorrectly predicted.

3.1 Privacy goal

DNN based object detectors perform excellently in various computer vision tasks. The deep learning based object detectors can be divided into two categories: (i) one-stage object detectors, such as You Only Look Once (YOLO) [28], and Single Shot Multibox Detector (SSD) [29]; (ii) two-stage object detectors, such as Faster R-CNN [12], and Region-based Fully Convolutional Networks (R-FCN) [30]. Existing research [31] has indicated that, compared to SSD and R-FCN, the Faster R-CNN can achieve more superior performance on the trade-off between speed and detection accuracy. Besides, generally, Faster R-CNN has higher detection accuracy than that of YOLO detector. Therefore, in this paper, the proposed method is targeting at protecting image privacy from being detected by Faster R-CNN detectors.

The privacy goal of the proposed method includes two aspects: one is that Faster R-CNN fails to predict any bounding box in an image, which will be discussed in Section 3.3, and the other is that Faster R-CNN can predict some bounding boxes in an image but classifies the sensitive objects incorrectly, which will be discussed in Section 3.4. If one of these two aspects is met, it is considered that the social privacy is successfully protected. To achieve the first aspect, we need to make sure that for each bounding box, the score of each bounding box is lower than the threshold, which can be formalized as:

| (1) |

where is an adversarial social image. is the number of classes that an object detector can recognize ( in the experiment), and denotes the k-th category. is the score of class of the bounding box. is the detection threshold of the system.

For an object detector, it outputs bounding boxes with high scores (higher than the threshold), and discards the bounding boxes with low scores (lower than the threshold). Equation (1) indicates that the scores predicted by Faster R-CNN for all the bounding boxes are all lower than the threshold. Therefore, the system will abandon all bounding boxes and detect nothing in the adversarial social image.

The proposed adversarial example based method can make all objects in a social image undetectable by Faster R-CNN (the first aspect), which will be discussed in Section 3.3. In addition, the proposed method can also make the customized sensitive objects incorrectly classified as another object (the second aspect), which will be discussed in Section 3.4.

3.2 Overall flow

The overall flow of the proposed method is shown in Fig. 1. The method takes users’ photos as the inputs, and outputs the adversarial examples to deceive the Faster R-CNN object detector. First, pre-detection is performed on the users’ photos to obtain the detected objects. Then, the classes that do not belong to those correctly detected classes are selected as non-sensitive classes (defined in Section 3.3). Second, the region proposals are obtained in the classification stage of Faster R-CNN. Third, the adversarial attack is implemented at the second stage (i.e., the classification stage) of Faster R-CNN by iteratively constructing the perturbation in the image. The perturbation is iteratively constructed until that, for every bounding box, the score of each object is lower than the threshold, which will cause Faster R-CNN to discard all the bounding boxes. In this way, the generated adversarial social image can successfully deceive the Faster R-CNN detector when it is uploaded to the social platforms.

3.3 Object Disappearance Algorithm

We propose an Object Disappearance Algorithm which is presented in Algorithm 1, to generate adversarial examples and prevent Faster R-CNN from predicting any bounding box (the first aspect as discussed in Section 3.1). The proposed method focuses on attacking the classification network at the second stage (i.e., the classification stage) of Faster R-CNN. The adversarial attack includes two steps. First, the pre-detection on the original image is performed to obtain the predicted classes, including the correct and incorrect prediction results. We define the correctly predicted classes as the sensitive classes that need to be protected. In this paper, for an unprocessed social image, the sensitive objects are the objects that appear in the image and can be detected by object detector, and the non-sensitive objects are the objects that do not appear in the image. For different social images, the sensitive objects and the non-sensitive objects are different. Then, the non-sensitive class set is selected, where is the number of classes that an object detector can recognize, and is the number of classes that are detected in the original image. do not belong to these correctly detected classes and are the classes of objects that do not appear in the original image . Second, the region proposals are obtained in the classification stage of Faster R-CNN, and the perturbations are constructed iteratively in the original image with the following formula:

| (2) |

where is an adversarial example generated in the -th iteration. represents the j-th predicted bounding box, and the total number of bounding boxes is . is the label of the non-sensitive class and . is not a fixed class label and is sequentially selected from the set in each iteration. represents the classification network of Faster R-CNN, and is the loss function that computes the difference between the output of the classification network and the non-sensitive class label .

In each iteration, we sequentially select the non-sensitive class from the set . Then, we construct the adversarial perturbation with formula (2) and utilize the constraint to make the perturbation visually invisible, where restricts the intensity of the generated perturbations. After the adversarial example for the -th iteration is generated, the classification network is used to predict the classes of objects for all the bounding boxes . Subsequently, the scores of each class for all the bounding boxes will be obtained. can be considered as a matrix as follows:

| (3) |

where is the score of class for the -th bounding box (, ). The maximum score is then calculated, where represents the maximum score of all classes for all the bounding boxes in the generated adversarial example . We denote the maximum number of iterations as . The iteration process is terminated when the iteration number reaches or is lower than the threshold, which means that there is no bounding box that can be detected by Faster R-CNN.

Here, we explain the reason why the above algorithm can cause all objects in the generated adversarial example invisible. For sensitive classes in an image, adding perturbation with Equation (2) can change the classification boundary of sensitive classes and reduce the scores of them. Thus, the scores of sensitive objects are below the detection threshold. For non-sensitive classes, the multi-classes perturbing method restraints the increase of non-sensitive objects’ scores with insufficient iterations. As a result, the scores of non-sensitive objects are also below the detection threshold. As a result, the score of each class for any bounding boxes will be lower than the threshold, which makes Faster R-CNN discard all the bounding boxes and detect nothing in the adversarial social image.

3.4 Customized privacy setting

In this section, we describe another method (denoted as ) of generating adversarial examples which can protect the customized sensitive objects in the social images. In a social photo, some objects are usually sensitive and private, such as: (i) Person. The person in a social photo may involve users’ personal information. For example, a common V-shaped gesture photo may contain the fingerprint information of an user. (ii) Commercialized objects. Some commercial objects (such as handbags, sports balls, and books) may reveal users’ interests. The leakage of these commercialized objects may bring unnecessary advertising to users. To avoid the disclosure of the sensitive objects in the shared social photos, the proposed method can also provide users with privacy settings, where users can customize the sensitive objects that he wants to protect and the specific class that he wants the sensitive objects to be misclassified as. The proposed method can achieve this purpose by iteratively constructing perturbations on social images with the following formula:

| (4) |

The perturbation is iteratively constructed until that, for every bounding box, the scores of the customized sensitive objects are lower than the threshold. can cause the score of the class to be higher than the threshold, which will make Faster R-CNN classify the customized sensitive objects as the class . As a result, Faster R-CNN cannot detect the customized sensitive object and will present the bounding boxes with wrong class (the non-sensitive class ).

We denote the method introduced in Section 3.3 as , and the method in this section as . We use and to represent the adversarial examples generated by and , respectively. The difference between the workflows of and is shown in Fig. 2. performs pre-detection on the original social image to obtain the non-sensitive classes and utilizes multiple non-sensitive classes to generate adversarial example, while does not perform pre-detection and utilizes a fixed non-sensitive class to generate adversarial example.

In a word, this paper provides two adversarial example based method ( and ) to protect the privacy of social photos. hides all objects in a social image from being detected by Faster R-CNN, while is used to protect the sensitive objects customized by the users.

4 Experimental results

In this section, we evaluate the proposed method. First, the experimental settings, the experimental datasets, and the two evaluation metrics, are described in Section 4.1. Second, we demonstrate the effectiveness of the proposed method in terms of two metrics: the privacy-preserving success rate (Section 4.2) and the privacy leakage rate (Section 4.3). Then, we explore the impacts of two parameters (perturbation constraint and detection threshold ) on the proposed method in Section 4.4. Finally, we compare the proposed method with the existing image processing methods in Section 4.5.

4.1 Experimental setup

In our experiments, we evaluate the performance of the proposed method on the Faster R-CNN [12] Inception v2 [32] model. The model has been pre-trained on the Microsoft Common Objects in Context (MS-COCO) dataset [13] with Tensorflow [33] platform. We conduct the experiment on MS-COCO dataset [13] and PASCAL VOC 2007 dataset [14]. The MS-COCO dataset has more than 200,000 images for object detection. It contains 80 object categories including people, vehicles, animals, sports goods, and other common items [13]. The PASCAL VOC 2007 dataset [14] has 20 categories, most of which are the same as the categories in MS-COCO dataset. We randomly select 1,000 images from MS-COCO dataset [13], and 1,000 images from PASCAL VOC 2007 dataset [14], respectively, to evaluate the proposed method.

We use two metrics, named privacy-preserving success rate and privacy leakage rate, to evaluate the proposed method on protecting the privacy of social images. As mentioned in Section 3.3 and Section 3.4, the proposed social privacy-preserving method can generate two kinds of adversarial examples. As discussed in Section 3.4, we use to represent the adversarial examples that aim to make all objects invisible, and to represent the adversarial examples that aim to hide the customized sensitive object. The privacy-preserving success rate () for adversarial examples can be formalized as:

| (5) |

where is the number of adversarial social images in which Faster R-CNN cannot present any bounding boxes. is the number of all the adversarial social images. The privacy-preserving success rate () for adversarial examples can be formalized as:

| (6) |

where is the number of adversarial examples in which Faster R-CNN classifies the customized sensitive class incorrectly.

To further explore the effectiveness of the proposed method on preventing the privacy leakage, we use a metric named privacy leakage rate. The privacy leakage rate represents how many sensitive contents are detected by Faster R-CNN in social images. The privacy leakage rate for the adversarial social images that aim to hide all the objects () can be calculated as follows:

| (7) |

where is the number of bounding boxes that are detected in all the adversarial social images , including the correct and incorrect detection results. is the number of bounding boxes that are detected in all the original social images, including the correct and incorrect detection results. The privacy leakage rate for the adversarial social images that aim to hide the customized sensitive objects () can be expressed as:

| (8) |

where is the number of bounding boxes of the customized sensitive classes that are correctly detected in the adversarial social images . is the number of bounding boxes of customized sensitive classes that are correctly detected in the original social images.

4.2 Privacy-preserving success rate

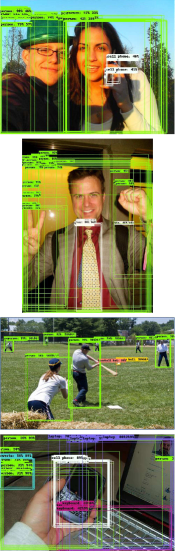

In this section, we use the privacy-preserving success rate to evaluate the proposed method. Fig. 3 shows four examples to illustrate the effectiveness of the proposed method. The four examples consist of the original images, the detection results of the original images, the detection results of adversarial examples , and the detection results of adversarial examples , respectively. Faster R-CNN can easily recognize all the sensitive objects if the shared images are unprocessed, as shown in Fig. 3 and Fig. 3. Specifically, all the persons are accurately detected in Fig. 3. However, Faster R-CNN detects nothing in the adversarial examples , as shown in Fig. 3. Meanwhile, it is hard for humans to observe the differences between adversarial examples and the original images. In Fig. 3, all the persons in adversarial examples are misclassified as other objects. The above results indicate that, the proposed method can protect the social privacy effectively without affecting the visual effect of social images.

The privacy-preserving success rates of the proposed method are shown in TABLE 1. In the experiment, we select 1,000 images in each dataset and test the privacy-preserving success rates for the adversarial social image and , respectively. The success rates of on MS-COCO and PASCAL VOC 2007 datasets are 96.1% and 99.3%, respectively. The success rates of on the two datasets are 96.5% and 99.4%, respectively. The privacy-preserving success rates of and are very close and both reach a very high level. The results indicate that the proposed method performs well on protecting the privacy of social photos.

| Dataset | Privacy-preserving success rate | |

| MS-COCO [13] | 96.1% | 96.5% |

| PASCAL VOC 2007 [14] | 99.3% | 99.4% |

4.3 Privacy leakage rate

In this section, we use privacy leakage rate to evaluate the proposed method. We calculate the privacy leakage rates for and on MS-COCO and PASCAL VOC 2007 datasets, respectively. As shown in TABLE 2, the privacy leakage rates for adversarial social images on the two datasets are 0.57% and 0.07%, respectively, and the privacy leakage rates for adversarial social images on the two datasets are 2.23% and 0.18%, respectively. The privacy leakage rates of and both reach a very low level. The results indicate that, our method can protect the sensitive objects from being detected by Faster R-CNN.

| Dataset | Privacy leakage rate | |

| MS-COCO [13] | 0.57% | 2.23% |

| PASCAL VOC 2007 [14] | 0.07% | 0.18% |

Considering that our method may have different privacy protection effects for different sensitive objects, we select 9 different sensitive objects and calculate the privacy leakage rates of these objects, respectively. The 9 classes are person, elephant, airplane, laptop, traffic light, book, sports ball, parking meter, and cell phone. In the experiment, we select 300 images from MS-COCO dataset [13] for each class. Fig. 4 shows the privacy leakage rates of the nine sensitive objects in the adversarial social images . The result indicates that, the proposed method can effectively prevent the privacy information in the social image from being detected. The privacy leakage rates of the nine sensitive classes are all in a very low level (lower than 2%). Moreover, the privacy leakage rate of the elephant class is as low as 0.26%.

4.4 Parameter discussion

In the proposed method, there are two parameters that may affect the performance: the perturbation constraint and the detection threshold . The perturbation constraint constrains the intensity of the perturbation, which makes the difference between the original image and the adversarial example difficult to be noticed. The detection threshold determines the number of bounding boxes that are presented by Faster R-CNN.

We set the values of the perturbation constraint to be because these values of are small enough and can make the generated perturbations difficult to be noticed. The privacy-preserving success rate and the privacy leakage rate of adversarial social images under different settings are shown in Fig. 5. It can be seen that when , both the privacy-preserving success rate and the privacy leakage rate change drastically as increases, and and tend to stabilize when . Moreover, when , the value of already reaches a high level (96.5%) and the value of is only 2.52%. Note that, the larger the value of is, the larger the perturbation strength to an image. A large perturbation strength may affect the visual quality of the perturbed image. Therefore, has the optimal performance when considering the trade-off between privacy preserving effect and perturbation strength.

We also study the relationship between and the image quality. Structural Similarity (SSIM) [34] and Peak Signal to Noise Ratio (PSNR) are two quantitative metrics to reflect the visual quality of the processed images. PSNR describes the pixel level difference between two images, while SSIM [34] shows structural changes of a processed image. The higher the value of PSNR and SSIM [34], the more similar between the original image and the modified image. Fig. 6 presents the PSNR and SSIM of the modified images under different settings of perturbation constraint . Both PSNR and SSIM show downward trends with the increase of . In other words, the smaller the value of , the better the visual quality of the image. Therefore, the value of should be as small as possible to maintain a good visual quality for the adversarial example. In conclusion, is supposed to be the optimal one when considering the trade-off between image quality and privacy leakage rate.

We further explore the impact of the detection threshold on the privacy-preserving success rate and privacy leakage rate. The privacy-preserving success rates of and under different are shown in Fig. 7. It can be seen that the success rate of increases rapidly as increases when is in , and the success rate of is always in a high level. When reaches , the privacy-preserving success rates for and are 96.1% and 96.5%, respectively. When , the privacy-preserving success rates for and are 99.5% and 99.6%, respectively. Generally, for most object detectors, the detection threshold is set to be higher than in order to achieve high detection accuracy. This indicates that, the proposed method has a good performance in terms of privacy preserving when detection threshold is set to be greater or equal to .

In addition, we also calculate the privacy leakage rate of the proposed method under different settings of the detection threshold , as shown in TABLE 3. It is shown that the privacy leakage rate decreases with the increase of . When , the privacy leakage rates of and are 0.06% and 0.42%, respectively, which indicate that the proposed method has a good performance in preventing privacy leakage. Generally, for most object detectors, the detection threshold is set to be higher than 0.5 in order to achieve high detection accuracy. The result indicates that, the proposed method can effectively reduce the scores of most bounding boxes to below 0.3, which will lead these bounding boxes to be discarded.

| Detection threshold | 0.2 | 0.24 | 0.28 | 0.32 | 0.36 | 0.4 | |

| Privacy leakage rate | 8.56% | 3.84% | 0.86% | 0.27% | 0.12% | 0.06% | |

| 4.53% | 3.36% | 2.61% | 1.03% | 0.50% | 0.42% | ||

4.5 Comparison with traditional image processing methods

In this section, we compare the proposed method with the traditional image processing methods. The traditional image processing methods includes low brightness, noise, mosaic, blur, and JPEG (Joint Photographic Experts Group) compression [9, 8].

We select 500 images from MS-COCO dataset to conduct the experiment. Fig. 8 shows an example image processed by different methods and the detection results. As shown in Fig. 8(a), if the image is unprocessed, Faster R-CNN is able to accurately identify all the objects. As shown in Fig. 8(b), although low-brightness method seriously degrades the visual quality of the social image, Faster R-CNN can still identify all the objects correctly. As shown in Fig. 8(c)Fig. 8(f), the persons are all correctly detected in the images processed by blur, mosaic, noise and JPEG compression method, which indicate that these methods are all ineffective to protect the sensitive objects. As shown in Fig. 8(g) and Fig. 8(h), Faster R-CNN detects nothing in and recognizes person as dining table in , which indicate that the proposed adversarial example based method can successfully deceive Faster R-CNN. The reason why the proposed method can resist the detection is that, the feature of a bounding box extracted by Faster R-CNN can be considered as a point in the hyperplane. The proposed method can change the position of the feature point and makes it cross the classification boundary, which will cause the bounding box to be misclassified or be discarded [8].

TABLE 4 presents the comparison between the proposed method and the above five image processing methods (low brightness, noise, mosaic, blur, and JPEG compression [9, 8]) in terms of privacy-preserving success rate, privacy leakage rate, PSNR, and SSIM. The results indicate that the proposed method can effectively protect the privacy of social images as the privacy-preserving success rates of and are 96.2% and 96.6%, respectively, and the privacy leakage rates of and are 0.54% and 2.20%, respectively. The five traditional image processing methods all fail to protect the social privacy as the privacy-preserving success rates of the five methods are all lower than 5.4%. Compared with the five traditional image processing methods, the proposed method has almost no influence on the visual quality of social images as the values of PSNR and SSIM of the proposed method are significantly higher than that of the five traditional image processing methods. Especially, the values of PSNR and SSIM of the proposed are 46.91 and 0.992, which are much higher than that of other methods.

| Methods | Privacy-preserving success rate | Privacy leakage rate | PSNR | SSIM |

| Low brightness | 0.6% | 100% | 8.46 | 0.252 |

| Blur (Gaussian) | 0.4% | 100% | 24.08 | 0.676 |

| Mosaic | 2.2% | 78.19% | 23.87 | 0.683 |

| Noise | 0.8% | 95.55% | 22.68 | 0.437 |

| JPEG compression | 5.4% | 57.32% | 24.96 | 0.687 |

| The proposed | 96.2% | 0.54% | 27.16 | 0.751 |

| The proposed | 96.6% | 2.20% | 46.91 | 0.992 |

5 Conclusion

The malicious attackers can obtain private and sensitive information from uploaded social photos using object detectors. In this paper, we propose an Object Disappearance Algorithm to generate adversarial social images and prevent the object detectors from detecting privacy information. The proposed method injects imperceptible perturbations to the social images before they are uploaded online. Specifically, the proposed method generates two kinds of adversarial social images. One can hide all the objects from being detected by Faster R-CNN, and the other can lead Faster R-CNN to misclassify the customized objects. We use two metrics, named privacy-preserving success rate and privacy leakage rate, to evaluate the effectiveness of the proposed method, and use PSNR and SSIM to evaluate the impact of the proposed method on the visual quality of social images. Experimental results show that, compared with traditional image processing methods (low brightness, blur, mosaic, noise, and JPEG compression), the proposed method can effectively resist the detection of Faster R-CNN, while has the minimal influence on the visual quality of social images. The proposed method iteratively constructs perturbation to generate adversarial social images. In the future work, we will study the acceleration method for adversarial examples generation to reduce the overhead.

References

- Hardt and Nath [2012] Hardt, M., Nath, S.. Privacy-aware personalization for mobile advertising. In: Proceedings of the ACM Conference on Computer and Communications Security, 2012, pp. 662–673.

- onl [2017] Japan researchers warn of fingerprint theft from ‘peace’ sign. 2017. URL: https://phys.org/news/2017-01-japan-fingerprint-theft-peace.html.

- Klemperer et al. [2012] Klemperer, P.F., Liang, Y., Mazurek, M.L., Sleeper, M., Ur, B., Bauer, L., et al. Tag, you can see it! Using tags for access control in photo sharing. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 2012, pp. 377–386.

- Vishwamitra et al. [2017] Vishwamitra, N., Li, Y., Wang, K., Hu, H., Caine, K., Ahn, G.. Towards PII-based multiparty access control for photo sharing in online social networks. In: Proceedings of the 22nd ACM on Symposium on Access Control Models and Technologies, 2017, pp. 155–166.

- Li et al. [2019a] Li, F., Sun, Z., Li, A., Niu, B., Li, H., Cao, G.. Hideme: Privacy-preserving photo sharing on social networks. In: Proceedings of the IEEE Conference on Computer Communications, 2019a, pp. 154–162.

- Sun et al. [2016] Sun, W., Zhou, J., Lyu, R., Zhu, S.. Processing-aware privacy-preserving photo sharing over online social networks. In: Proceedings of the 24th ACM Conference on Multimedia Conference, 2016, pp. 581–585.

- Abdulla and Bakiras [2019] Abdulla, A.K., Bakiras, S.. HITC: Data privacy in online social networks with fine-grained access control. In: Proceedings of the 24th ACM Symposium on Access Control Models and Technologies, 2019, pp. 123–134.

- Liu et al. [2017] Liu, Y., Zhang, W., Yu, N.. Protecting privacy in shared photos via adversarial examples based stealth. Security and Communication Networks 2017. 2017:1–15.

- Wilber et al. [2016] Wilber, M.J., Shmatikov, V., Belongie, S.. Can we still avoid automatic face detection. In: Proceedings of the IEEE Winter Conference on Applications of Computer Vision, 2016, pp. 1–9.

- Papernot et al. [2018] Papernot, N., McDaniel, P., Sinha, A., Wellman, M.. SoK: Security and privacy in machine learning. In: Proceedings of the IEEE European Symposium on Security and Privacy, 2018, pp. 399–414.

- Akhtar and Mian [2018] Akhtar, N., Mian, A.. Threat of adversarial attacks on deep learning in computer vision: A survey. IEEE Access 2018. 6:14410–14430.

- Ren et al. [2017] Ren, S., He, K., Girshick, R.B., Sun, J.. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Transactions on Pattern Analysis and Machine Intelligence 2017. 39(6):1137–1149.

- Lin et al. [2014] Lin, T., Maire, M., Belongie, S.J., Hays, J., Perona, P., Ramanan, D., et al. Microsoft COCO: Common objects in context. In: Proceedings of the European Conference on Computer Vision, 2014, pp. 740–755.

- Everingham et al. [2010] Everingham, M., Gool, L.V., Williams, C.K.I., Winn, J.M., Zisserman, A.. The pascal visual object classes (VOC) challenge. International Journal of Computer Vision 2010. 88(2):303–338.

- Szegedy et al. [2014] Szegedy, C., Zaremba, W., Sutskever, I., Bruna, J., Erhan, D., Goodfellow, I.J., et al. Intriguing properties of neural networks. In: Proceedings of the 2nd International Conference on Learning Representations, 2014, pp. 1–10.

- Goodfellow et al. [2015] Goodfellow, I.J., Shlens, J., Szegedy, C.. Explaining and harnessing adversarial examples. In: Proceedings of the 3rd International Conference on Learning Representations, 2015, pp. 1–11.

- Carlini and Wagner [2017] Carlini, N., Wagner, D.. Towards evaluating the robustness of neural networks. In: Proceedings of the IEEE Symposium on Security and Privacy, 2017, pp. 39–57.

- Chen et al. [2018] Chen, S., Cornelius, C., Martin, J., Chau, D.H.P.. Shapeshifter: Robust physical adversarial attack on faster R-CNN object detector. In: Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, 2018, pp. 52–68.

- Brown et al. [2017] Brown, T.B., Mané, D., Roy, A., Abadi, M., Gilmer, J.. Adversarial patch. arXiv:1712.09665 2017.

- Athalye et al. [2018] Athalye, A., Engstrom, L., Ilyas, A., Kwok, K.. Synthesizing robust adversarial examples. In: Proceedings of the 35th International Conference on Machine Learning, 2018, pp. 284–293.

- Girshick [2015] Girshick, R.. Fast R-CNN. In: Proceedings of the IEEE International Conference on Computer Vision, 2015, pp. 1440–1448.

- Xue et al. [2019] Xue, M., Yuan, C., Sun, S., Wu, Z.. A privacy protection method and device for social photos against yolo object detector. 2019. Chinese Patent, Patent No. 201911346202.0, Filed December, 2019.

- Li et al. [2019b] Li, C.Y., Shamsabadi, A.S., Sanchez-Matilla, R., Mazzon, R., Cavallaro, A.. Scene privacy protection. In: Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, 2019b, pp. 2502–2506.

- Shen et al. [2019] Shen, Z., Fan, S., Wong, Y., Ng, T., Kankanhalli, M.S.. Human-imperceptible privacy protection against machines. In: Proceedings of the 27th ACM International Conference on Multimedia, 2019, pp. 1119–1128.

- Shan et al. [2020] Shan, S., Wenger, E., Zhang, J., Li, H., Zheng, H., Zhao, B.Y.. Fawkes: Protecting personal privacy against unauthorized deep learning models. arXiv:2002.08327 2020.

- Zhao et al. [2018] Zhao, Y., Zhu, H., Liang, R., Shen, Q., Zhang, S., Chen, K.. Seeing isn’t believing: Practical adversarial attack against object detectors. arXiv:1812.10217 2018.

- Song et al. [2018] Song, D., Eykholt, K., Evtimov, I., Fernandes, E., Li, B., Rahmati, A., et al. Physical adversarial examples for object detectors. In: Proceedings of the 12th USENIX Workshop on Offensive Technologies, 2018, pp. 1–10.

- Redmon et al. [2016] Redmon, J., Divvala, S.K., Girshick, R., Farhadi, A.. You only look once: Unified, real-time object detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 779–788.

- Liu et al. [2016] Liu, W., Anguelov, D., Erhan, D., Szegedy, C., Reed, S.E., Fu, C., et al. SSD: Single shot multibox detector. In: Proceedings of the European Conference on Computer Vision, 2016, pp. 21–37.

- Dai et al. [2016] Dai, J., Li, Y., He, K., Sun, J.. R-FCN: Object detection via region-based fully convolutional networks. In: Proceedings of the 30th International Conference on Neural Information Processing Systems, 2016, pp. 379–387.

- Huang et al. [2017] Huang, J., Rathod, V., Sun, C., Zhu, M., Korattikara, A., Fathi, A., et al. Speed/accuracy trade-offs for modern convolutional object detectors. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 3296–3297.

- Szegedy et al. [2016] Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., Wojna, Z.. Rethinking the inception architecture for computer vision. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 2818–2826.

- Abadi et al. [2016] Abadi, M., Agarwal, A., Barham, P., Brevdo, E., Chen, Z., Citro, C., et al. Tensorflow: Large-scale machine learning on heterogeneous distributed systems. arXiv:1603.04467 2016.

- Wang et al. [2004] Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.. Image quality assessment: From error visibility to structural similarity. IEEE Transactions on Image Processing 2004. 13(4):600–612.