1 Email: [email protected], [email protected], [email protected], [email protected]

2 Ngee Ann Polytechnic, Singapore
Email: {s10163148,s10177638}@connect.np.edu.sg

3 National College for Education, Vietnam
Email: [email protected]

4 National University Health System, Singapore
Email: [email protected]

5 National University of Singapore, Singapore
Email: [email protected]

Social Behaviour Understanding using Deep Neural Networks: Development of Social Intelligence Systems

Abstract

With the rapid development of artificial intelligence, social computing has evolved beyond social informatics toward social intelligence systems. This paper therefore proposes a social behaviour understanding framework that uses deep neural networks for social and behavioural analysis. The framework integrates information fusion, person and object detection, social signal understanding, behaviour understanding, and context understanding to elicit social behaviours. Three systems, for depression detection, activity recognition and cognitive impairment screening, are developed to demonstrate the importance of social intelligence. The study contributes to the cumulative development of social computing and health informatics, and provides a number of implications for academic bodies, healthcare practitioners, and developers of socially intelligent agents.

Keywords:

Artificial Intelligence (AI) · Social Intelligence · Deep Neural Networks · Social Behaviours

1 Introduction

The landscape of social computing has evolved, with the proliferation of smaller, more powerful devices, such as mobile phones, tablets and wearable devices. Having all these advanced technologies available at our fingertips, people are now using them in everyday life, introducing new habits and generating new forms of data.

The social computing paradigm has been moving beyond capturing information toward focusing on social intelligence [29]. As a vital facet of human intelligence, social intelligence is the capability to understand oneself and to understand others. The development of social intelligence systems entails recognising social and behavioural patterns in the new types of data and providing in-depth analysis of social signals to better support humans. This paper aims to address major limitations of current social computing capabilities and social signal processing by introducing a social behaviour understanding platform that uses deep neural networks.

With the rapid advancement of artificial intelligence (AI), deep learning utilises complex networks of artificial neurons to provide new ways to investigate human interactions in various contexts. We propose a deep learning framework for understanding social signals and behaviours from an individual or a group of people.

The niche nature of previous generations of approaches and devices restricted the types and possibilities of social behaviour analysis. With today's state-of-the-art technologies, we introduce the design and implementation of social intelligence systems for activity recognition, behavioural analysis, and health assessment. The paper demonstrates three use cases of social intelligence:

- Depression detection aims to develop a social intelligence system that uses machine learning techniques to classify vocal features characteristic of depression. Speech is captured with smartphone microphones so that a mobile application can determine whether an individual suffers from depression.

- Activity recognition aims to use smartphones, activity trackers and smartwatches to collect accelerometer data for the classification of human activities. Machine learning and deep learning techniques are then applied to predict patient activities for a fall prevention mobile application.

- Cognitive impairment screening aims to build a tool that assesses cognitive disorders from an individual's handwriting and pen movements using convolutional neural networks (CNNs).

Based on academic foundations, the study contributes to the cumulative development of social intelligence and mobile health. It draws out many implications for academic theorists and healthcare practitioners.

The structure of the paper is as follows. Sect. 2 reviews the literature background of our study. Sect. 3 presents our social behaviour understanding architecture and its design concepts, and Sect. 4 describes three use cases of social intelligence systems. Finally, Sect. 5 concludes the paper with findings and contributions.

2 Literature Background

2.1 Social Intelligence

Social intelligence is the ability to detect, interpret and react to human social and behavioural cues. These cues have many facets, ranging from physical appearance to vocal and facial features and gestures. Innately present in humans, social intelligence is a vital skill for understanding human behaviour, and it has a significant impact on people's lives [1].

With the advance of Internet technologies, social computing has been moving beyond social informatics towards an emphasis on social intelligence [29]. The field of implementing social intelligence in computers is called social signal processing (SSP) [27]. In most current applications, computers are largely unable to comprehend the aforementioned social signals. Existing computing capabilities handle context-independent tasks, such as arithmetic and retrieval operations, without issue; however, computing currently struggles with context-dependent tasks, such as virtual-reality applications. It is therefore unable to realise the full potential of Internet-of-Things (IoT) networks, because it cannot use the data they generate to predict actions or needs [27].

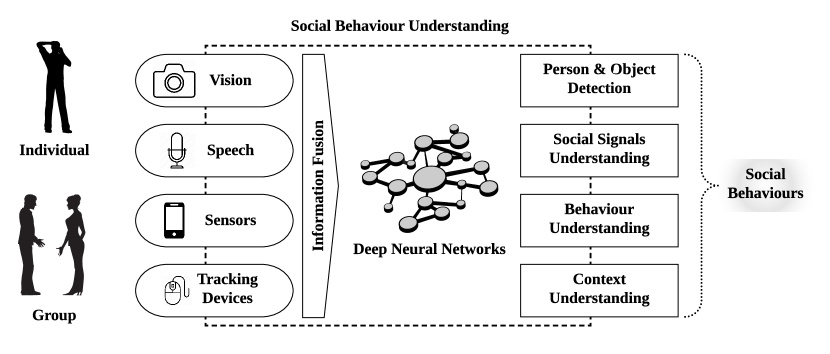

On the other hand, humans tend to show fluctuating performance in observing social signals, whereas computers perform more consistently. This suggests that humans do not fully utilise the available social and behavioural cues and rely more on scenario-oriented contextual cues, while machines are able to utilise these cues more extensively [20, 5]. Vinciarelli et al. (2009) proposed a popular framework for machine analysis of social signals and behaviours [27], as shown in Fig. 1.

Achieving social intelligence will open up a myriad of new applications. The development of social intelligence systems is therefore essential to stimulate greater availability of approaches and methodologies. To enable researchers and administrators to select the optimal approach, a guideline with clear objectives and procedures will be invaluable.

2.2 Machine Learning for Cognitive and Social Behavioural Detection

The ever-growing popularity of artificial intelligence has led to its application in numerous fields of study, enabling the discovery of novel applications and thoroughly testing its limits. This trend has drawn attention to the utility of machine learning in health care [18].

Many researchers have investigated the accuracy and viability of incorporating machine learning into existing methodologies. Their results have demonstrated the capability of artificial intelligence to diagnose various ailments and disorders with high levels of accuracy, with opportunities for further improvement [21]. Wall et al. (2012) demonstrated an opportunity to improve healthcare methodology for diagnosing mental disorders and to decrease healthcare costs [28]. The use of AI in computer games has also been explored to evaluate human behaviour [9]; such AI was bound to specific behavioural preferences but could simulate human behaviours to a certain degree.

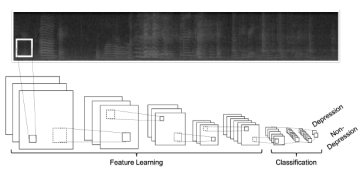

With recent breakthroughs in AI, deep learning has been widely recognised as the next wave of machine learning suited to social and behavioural analysis. Deep learning uses multi-layered neural networks to progressively extract higher-level features from the input, with each layer transforming the data into an increasingly abstract representation. A common deep learning architecture of interest is the convolutional neural network (CNN), often used for image and video analysis [13], as shown in Fig. 2. A CNN consists of thousands to millions of artificial neurons arranged in multiple layers, including convolutional layers, pooling layers, and fully connected layers with activation functions. CNNs have been shown to perform well on vision and audio classification tasks [10, 8].
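The layer types listed above can be illustrated with a minimal, dependency-free sketch in Python. It applies one hand-set convolution kernel, a ReLU activation, 2×2 max pooling and a fully connected layer to a toy 5×5 input. The kernel, weights and input are illustrative assumptions; a real CNN learns many kernels per layer, typically using a framework such as TensorFlow or PyTorch. As in most deep learning libraries, the "convolution" is computed as a cross-correlation (the kernel is not flipped).

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation (stride 1, no padding), as in a CNN convolutional layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]


def relu(fmap: Matrix) -> Matrix:
    """Element-wise non-linear activation: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling; rows/columns that do not fill a whole window are dropped."""
    return [
        [max(fmap[i + a][j + b] for a in range(size) for b in range(size))
         for j in range(0, len(fmap[0]) - size + 1, size)]
        for i in range(0, len(fmap) - size + 1, size)
    ]


def dense(features: List[float], weights: Matrix, bias: List[float]) -> List[float]:
    """Fully connected layer: one output per weight row."""
    return [sum(w * x for w, x in zip(row, features)) + b for row, b in zip(weights, bias)]


# Toy 5x5 input containing a vertical edge, and a hand-set vertical-edge kernel.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
kernel = [[-1, 0, 1] for _ in range(3)]

fmap = relu(conv2d(image, kernel))   # 3x3 feature map
pooled = max_pool(fmap)              # 1x1 after 2x2 pooling
flat = [v for row in pooled for v in row]
scores = dense(flat, weights=[[1.0], [-1.0]], bias=[0.0, 0.0])
```

The kernel responds strongly where the input changes from 0 to 1 and not at all in the flat region; stacking such layers is what lets a CNN combine local features into increasingly abstract representations.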

3 Social Behaviour Understanding using Deep Neural Networks

In recent research, artificial intelligence has been widely used to analyse behavioural and social cues in social interactions [28, 9]. This trend has led to a better understanding of human beings, as new knowledge and patterns of behavioural, social, and contextual cues are constantly discovered. Building on these new computing capabilities, we propose a framework for social behaviour understanding using state-of-the-art deep learning, as shown in Fig. 3. Multiple constructs are adapted from the original framework for machine analysis of social and behavioural signals by Vinciarelli, Pantic and Bourlard (2009) [27].

In our framework, the role of socially intelligent agents has evolved to emulate humans more closely, thereby narrowing the gap between machines and humans. We emphasise fully realising the capabilities of artificial intelligence for robust and versatile detection of social and behavioural signals. Deep neural networks, which loosely simulate cerebral activity, create new ways of understanding data and making inferences. The proposed framework consists of five key components: (i) Information Fusion, (ii) Person and Object Detection, (iii) Social Signal Understanding, (iv) Behavioural Understanding, and (v) Context Understanding.

- Information Fusion. With the increasing ubiquity of Internet-of-Things (IoT) technology, new types of sensors, tracking devices, and mobile equipment have been widely introduced [17]. These devices allow the capture of multimodal inputs, including visual, audio and movement data. Information fusion strategies are required to reduce the uncertainty and reliability issues in such data. Information fusion integrates multiple data sources into a robust, accurate and consistent input for deep learning. Lee et al. (2008) suggested a hierarchical decomposition method that handles the data at three levels: raw sensor data fusion, feature-level fusion, and decision-level fusion [14].

- Person and Object Detection. Social intelligence entails interactions among multiple agents, including humans and objects. Traditional person and object detection methods are typically built on limited feature extraction and shallow learning models [31]. Recent breakthroughs in deep learning have opened new ground for detecting objects with high confidence in audio, images and videos. Convolutional neural network models perform strongly across a variety of network architectures and training and optimisation strategies. It is also important to note that detection models can be integrated within a single multimodal neural network architecture.

- Social Signal Understanding. Social signals occur in everyday situations and include many social cues, such as attention, empathy, politeness, or agreement. Social signal processing has drawn substantial research effort to understand human interactions in an automated and continuous manner [22, 16, 7]. Deep evolutional spatial-temporal networks have been proposed to extract both temporal and spatial features of facial expressions, outperforming traditional approaches by a large margin [30]. Similarly, deep learning has been used to learn social signals from appearance, gesture and posture [3].

- Behavioural Understanding. Human behaviours play a vital role in shaping the perception of human interactions. Investigating behavioural cues therefore allows intelligent agents to elicit social signals with a higher degree of support. Because social behaviours have temporal dynamics, this also applies to individual behaviours captured with or without social interaction. Our framework thus treats behavioural understanding as a valuable supplement for developing social intelligence.

- Context Understanding. Social and behavioural signals cannot be fully understood without contextual information such as location, time, or situation. Contexts are tightly associated with communicative intention; thus, it is critical to consider their dynamics in social behaviour analysis. With new mobile and sensor capabilities, context data can be embedded into multimodal deep neural networks in various ways [26].

With recent developments in deep neural networks, the fusion of multimodal understanding units opens new pathways to analyse and recognise social behaviours. The social, behavioural, and contextual dimensions of the data frequently contain both unique and overlapping signals; thus, training deep neural networks on such data is a viable option for the development of intelligent agents. Cross-modality transformers are increasingly explored to address the challenge of multifaceted representation learning and pattern recognition [25], as required for social intelligence.
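To make cross-modality attention concrete, the following sketch implements single-head scaled dot-product attention in plain Python, where feature vectors from one modality (the queries) attend over feature vectors from another (the keys and values). It omits the learned query/key/value projections, multiple heads, residual connections and layer normalisation of a full cross-modality transformer such as LXMERT [25], and the "audio" and "video" vectors are toy values.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax: subtracting the maximum does not change the result."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Single-head scaled dot-product attention from modality A (queries) to modality B."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # one weight per key/value of modality B, summing to 1
        outputs.append([sum(w * v[c] for w, v in zip(weights, values))
                        for c in range(len(values[0]))])
    return outputs


# Toy example: one audio feature vector attends over two video frames.
audio = [[1.0, 0.0]]
video_keys = [[1.0, 0.0], [0.0, 1.0]]
video_values = [[10.0], [0.0]]
attended = cross_attention(audio, video_keys, video_values)
```

Each output row is a weighted mixture of the other modality's values, so the audio query here draws more from the first video frame, whose key it matches, than from the second.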

4 Development of Social Intelligence Systems

Based on the Social Behaviour Understanding framework, we take an important step by developing three social intelligence systems for health assessment. These systems use deep neural networks to detect social and behavioural cues from real-time data, enabling timely interventions.

The paper aims to bring collaborative care to the next level, where social behaviours are recognised and exchanged with social support agents.

4.1 Use Case 1: Depression Detection

Current methods of diagnosing depression are time-consuming and outdated, with only minor improvements made to the process [2]. Psychiatrists initially screen patients with questionnaires based on scales including, but not limited to, the Center for Epidemiologic Studies Depression Scale (CES-D) [15], the Beck Depression Inventory (BDI) [2], or the PRIME-MD Patient Health Questionnaire [24, 12, 11].

After screening, individuals suspected of suffering from depression are contacted to arrange an additional appointment to confirm the diagnosis [2]. This process can entail a minimum waiting period of two weeks before patients are diagnosed and can begin treatment, as depicted in Fig. 4. Much of this waiting period is currently devoted to processing questionnaires and scheduling appointments. Each of the patient's responses must be evaluated by a psychologist, and even then the result only indicates whether the patient suffers from depression.

Few studies have evaluated the effectiveness of speech-based depression detection. Two of them, Depression Speaks [23] and Depression Detect [4], used the Distress Analysis Interview Corpus - Wizard of Oz (DAIC-WOZ) database [6] to experiment with speech-based depression detection, using machine learning and deep learning, respectively, to extract features and classify depression from speech audio. However, no mobile application is currently able to detect symptoms of depression from a person's voice. Exploring such novel means of improving medical practice could advance methods of mental health diagnosis in the future.

This system explores the feasibility of using a mobile application to detect depression from patients' vocal features, predicting in real time whether an individual displays symptoms of depression.

4.1.1 Data Collection.

Data are collected from the built-in microphone of a smartphone, in this case a Google Pixel 2 XL. The output is a Pulse Code Modulation (PCM) file, i.e. an uncompressed digital representation of the analog audio signal. The sampling rate is 44,100 Hz, meaning that the amplitude of the signal is sampled 44,100 times per second.
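The arithmetic behind the 44,100 Hz sampling rate, and a first processing step on the recorded PCM data, can be sketched in Python. The sketch assumes the PCM stream is 16-bit signed, little-endian and mono (a common Android recording configuration, but the bit depth and channel count are not stated in the text); the frame and hop lengths in the example are arbitrary illustrative values.

```python
import struct
from typing import List

SAMPLE_RATE_HZ = 44_100   # stated in the text
BYTES_PER_SAMPLE = 2      # assumption: 16-bit signed, little-endian, mono PCM
# Under these assumptions, one second of audio is 44,100 samples, i.e. 88,200 bytes.


def pcm16_to_samples(raw: bytes) -> List[float]:
    """Decode raw 16-bit little-endian PCM bytes into floats in [-1.0, 1.0)."""
    n = len(raw) // BYTES_PER_SAMPLE          # an incomplete trailing byte is ignored
    ints = struct.unpack(f"<{n}h", raw[: n * BYTES_PER_SAMPLE])
    return [s / 32768.0 for s in ints]


def duration_seconds(num_bytes: int, sample_rate: int = SAMPLE_RATE_HZ) -> float:
    """Recording length implied by a PCM byte count."""
    return (num_bytes // BYTES_PER_SAMPLE) / sample_rate


def frame(samples: List[float], frame_len: int, hop: int) -> List[List[float]]:
    """Split a signal into (possibly overlapping) frames; the incomplete tail is dropped."""
    return [samples[i:i + frame_len] for i in range(0, len(samples) - frame_len + 1, hop)]


# Example: decode three samples and split a short signal into overlapping frames.
decoded = pcm16_to_samples(struct.pack("<3h", 0, 16384, -32768))
frames = frame(list(range(10)), frame_len=4, hop=2)
```

Framing with overlap is the usual precursor to computing per-frame spectral or prosodic features from speech.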

The training dataset for this project is the Distress Analysis Interview Corpus - Wizard of Oz (DAIC-WOZ) database from the University of Southern California (USC) [6]. It contains 189 clinical interview sessions designed to support the diagnosis of psychological distress conditions. Each session comprises a transcript of the interview, an audio recording and the facial features of the participant.

4.1.2 Development.

We converted the audio data into visual representations and analysed them with convolutional neural networks, as shown in Fig. 5. A prototype mobile application was then developed on the Android platform. Upon opening the application, the user is prompted to enter the name and ID of the patient before beginning the session. Once a session has started, the user can begin recording to detect the possibility of depression, as shown in Fig. 6.
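The text does not specify which visual representation was fed to the CNN. Assuming it is a log-magnitude spectrogram, a common input for CNN-based speech analysis, the sketch below computes one in plain Python with a Hann window and a naive discrete Fourier transform. The frame and hop sizes are hypothetical, and in practice `numpy.fft.rfft` or a library such as librosa would replace the O(N²) DFT used here only to keep the sketch dependency-free.

```python
import cmath
import math
from typing import List


def hann(n: int) -> List[float]:
    """Symmetric Hann window of length n (n >= 2), used to reduce spectral leakage."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def magnitude_spectrum(frame: List[float]) -> List[float]:
    """Magnitudes of DFT bins 0..n/2 of a windowed real-valued frame (naive O(n^2) DFT)."""
    n = len(frame)
    x = [s * w for s, w in zip(frame, hann(n))]
    return [
        abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]


def log_spectrogram(samples: List[float], frame_len: int = 64,
                    hop: int = 32) -> List[List[float]]:
    """Rows are time frames, columns are frequency bins, values are in decibels."""
    frames = [samples[i:i + frame_len]
              for i in range(0, len(samples) - frame_len + 1, hop)]
    return [[20 * math.log10(m + 1e-10) for m in magnitude_spectrum(f)] for f in frames]


# Toy signal: a pure tone completing exactly 8 cycles per 64-sample frame.
tone = [math.sin(2 * math.pi * 8 * t / 64) for t in range(256)]
spec = log_spectrogram(tone)
```

The resulting time × frequency array can be treated by a CNN as a single-channel image; the toy tone appears as a horizontal line at frequency bin 8 in every frame.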

4.2 Use Case 2: Activity Recognition for Fall Detection and Prevention

Patient accidents in hospitals are of significant concern, especially among the elderly. Globally, a third of adults over 65 years old fall at least once a year. Such accidents can lead to further harm, such as additional injury, complications and loss of mobility. This social intelligence system therefore aims to recognise patients' activities for fall detection and prevention. High-risk patients are given green wrist tags, as shown in Fig. 7, and a green label is placed on the panel of their beds; these patients must be monitored continuously in the system.

4.2.1 Data Collection.

Low-cost smartphones equipped with a variety of sensors have become commonplace. This project explores the use of mobile technologies to further enhance fall detection and prevention strategies. We used the UniMiB-SHAR dataset for training because it is openly available online. The dataset covers 17 kinds of activities: nine types of activities of daily living, such as walking and running, and eight types of falls, such as falling forward and falling left. In total, it contains 7,579 samples of daily activities and 4,192 samples of falls.

Activity recognition is performed in real time with a smartphone's built-in accelerometer. The patient carries a smartphone with the mobile application deployed on it while performing different activities. Accelerometer data logged over 1-second intervals are sent through an API call to the server for processing.
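The 1-second windowing and the API payload described above can be sketched as follows. The sketch assumes readings arrive as (timestamp in seconds since the start of recording, x, y, z) tuples; the 50 Hz rate in the example, the JSON field names and the patient identifier are hypothetical, since the text specifies neither the accelerometer sampling rate nor the API schema.

```python
import json
import math
from typing import Dict, List, Tuple

# (seconds since recording started, x, y, z acceleration): an assumed reading format.
Reading = Tuple[float, float, float, float]


def one_second_windows(readings: List[Reading]) -> List[List[Reading]]:
    """Group readings into consecutive, non-overlapping 1-second windows."""
    windows: Dict[int, List[Reading]] = {}
    for r in readings:
        windows.setdefault(math.floor(r[0]), []).append(r)
    return [windows[second] for second in sorted(windows)]


def to_payload(patient_id: str, window: List[Reading]) -> str:
    """Serialise one window as a JSON request body (hypothetical field names)."""
    return json.dumps({
        "patient_id": patient_id,
        "window_start_s": window[0][0],
        "x": [r[1] for r in window],
        "y": [r[2] for r in window],
        "z": [r[3] for r in window],
    })


# Example: 2.5 s of a phone lying still (gravity on the z axis) at an assumed 50 Hz.
readings = [(i / 50, 0.0, 0.0, 9.81) for i in range(125)]
windows = one_second_windows(readings)
body = to_payload("patient-001", windows[0])
```

The trailing partial window (25 readings in the example) would typically be held back until it fills rather than sent; the HTTP call itself is omitted because the server endpoint is not described.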

4.2.2 Development.

We employed 3D convolutional neural networks to analyse the behavioural data, as shown in Fig. 8. The input data, arranged as a 4D tensor, are passed through a series of convolutional, pooling, batch normalisation, flatten, and multilayer perceptron layers, ending in an output activation layer. Training yields the 3D CNN model and reports its training accuracy. Firebase was used as a backend service providing a real-time database that stores and returns the patient's name, activity and time of activity for display in the clinician and patient applications.
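The final activation layer and the record written to the real-time database can be illustrated with a small Python sketch. It assumes a softmax output layer, uses a hypothetical four-label subset of the 17 UniMiB-SHAR classes, and builds a plain dictionary with the name, activity and time fields mentioned above, plus a hypothetical `confidence` field. The actual Firebase write (for example via the Firebase Admin SDK) is not shown.

```python
import math
from datetime import datetime, timezone
from typing import Dict, List

# Hypothetical label subset; the deployed model would have one output per UniMiB-SHAR class.
LABELS = ["walking", "running", "fall forward", "fall left"]


def softmax(logits: List[float]) -> List[float]:
    """Convert the last layer's raw outputs into class probabilities."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def to_record(patient_name: str, logits: List[float], when: datetime) -> Dict[str, object]:
    """Build the record a clinician/patient app could read back (field names assumed)."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return {
        "name": patient_name,
        "activity": LABELS[best],
        "confidence": round(probs[best], 3),  # hypothetical extra field
        "time": when.isoformat(),
    }


record = to_record("Patient A", [0.1, 0.2, 3.0, 0.5],
                   datetime(2020, 1, 1, tzinfo=timezone.utc))
```

Keeping the stored record small and flat suits a real-time database whose listeners push every change to the clinician and patient applications.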

4.3 Use Case 3: Cognitive Impairment Screening

An estimated 75 million people will be affected by dementia by 2030 [19], with individuals older than 65 at much greater risk of developing a form of cognitive impairment. Hospitals employ a battery of cognitive tests to detect cognitive impairments. These tests commonly take the form of writing and drawing examinations, requiring the completion of tasks ranging from simple instructional writing to complex memory-based drawings.

This social intelligence system aims to predict the risk of cognitive impairment from handwriting and pen movements.

4.3.1 Data Collection.

In this study, participants undergo a series of manual handwriting tests for cognitive assessment; an electronic tablet and pen were used to capture both the writing trajectory and the in-air (hover) trajectory.
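As an illustration of what the captured on-surface and in-air trajectories make available, the sketch below computes three simple kinematic descriptors from a list of pen samples. The sample format (time, x, y, on-surface flag) and the chosen descriptors are hypothetical; the text does not list any hand-crafted features, and the study itself feeds the data to 3D deep neural networks.

```python
import math
from typing import Dict, List, NamedTuple


class PenSample(NamedTuple):
    """Assumed tablet sample format (hypothetical)."""
    t: float          # seconds
    x: float
    y: float
    on_surface: bool  # False while the pen hovers in the air


def trajectory_features(samples: List[PenSample]) -> Dict[str, float]:
    """Path length and time split between on-surface writing and in-air movement."""
    on_len = air_len = on_time = air_time = 0.0
    for prev, cur in zip(samples, samples[1:]):
        step = math.hypot(cur.x - prev.x, cur.y - prev.y)
        dt = cur.t - prev.t
        if prev.on_surface:  # each segment is attributed to the pen state at its start
            on_len += step
            on_time += dt
        else:
            air_len += step
            air_time += dt
    total_time = on_time + air_time
    return {
        "on_surface_length": on_len,
        "in_air_length": air_len,
        "in_air_time_ratio": air_time / total_time if total_time else 0.0,
    }


# Example: a short stroke, a pen lift, then an in-air movement.
strokes = [PenSample(0.0, 0.0, 0.0, True), PenSample(0.1, 3.0, 4.0, True),
           PenSample(0.2, 3.0, 4.0, False), PenSample(0.4, 6.0, 8.0, False)]
features = trajectory_features(strokes)
```

Hover behaviour is captured here because a tablet can report pen position before contact; time spent in the air between strokes is one signal such trajectories expose.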

4.3.2 Development.

We applied feature scaling to the training and test data and converted them into 3D images, each represented by a 4D tensor. The tensors are then passed through 3D deep neural networks for cognitive impairment detection. In our development, the Angular 8 JavaScript framework was used for the frontend, Flask and Python for the backend, and MongoDB for the database. Finally, after configuration, a 3D model is created from the selected variables, with the option to filter the X, Y and Z axes for inspection, as shown in Fig. 9.
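One plausible reading of the scaling-and-tensor step is sketched below: min-max feature scaling fitted on the training data, followed by rasterising a scaled (x, y, t) pen trajectory into a binary voxel grid of shape (depth, height, width, channels), i.e. a 4D tensor. The choice of (x, y, t) as the three axes, the grid size and the binary occupancy encoding are assumptions; the text does not state which variables form the 3D images.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, t): assumed axes of the 3D image


def fit_min_max(train: Sequence[Point]) -> Tuple[List[float], List[float]]:
    """Per-axis minimum and maximum computed on the training data only."""
    lo = [min(p[i] for p in train) for i in range(3)]
    hi = [max(p[i] for p in train) for i in range(3)]
    return lo, hi


def scale(points: Sequence[Point], lo: List[float], hi: List[float]) -> List[Point]:
    """Min-max scale to [0, 1] with training statistics; out-of-range values are clipped."""
    return [
        tuple(min(1.0, max(0.0, (v - l) / (h - l) if h > l else 0.0))
              for v, l, h in zip(p, lo, hi))
        for p in points
    ]


def voxelise(points: Sequence[Point], size: int = 8) -> List[List[List[List[float]]]]:
    """Nested list of shape (size, size, size, 1): a 4D tensor (depth, height, width, channel)."""
    grid = [[[[0.0] for _ in range(size)] for _ in range(size)] for _ in range(size)]
    for x, y, t in points:
        i = min(int(t * size), size - 1)  # depth axis: time
        j = min(int(y * size), size - 1)  # height axis: y
        k = min(int(x * size), size - 1)  # width axis: x
        grid[i][j][k][0] = 1.0
    return grid


train = [(0.0, 0.0, 0.0), (10.0, 20.0, 2.0)]
lo, hi = fit_min_max(train)
test_points = scale([(5.0, 10.0, 1.0), (20.0, -5.0, 1.0)], lo, hi)
grid = voxelise(test_points, size=4)
```

Fitting the scaler on the training set only and then applying it to the test set avoids leaking test-set statistics into training; stacking several such grids along a new leading axis gives the 5D batch that 3D convolution layers in common frameworks expect.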

5 Conclusion

Social intelligence systems promise to revolutionise people's everyday lives. Our study proposes a social behaviour understanding framework that recognises social and behavioural signals for the development of socially intelligent systems. The framework consists of five key components: (i) Information Fusion, (ii) Person and Object Detection, (iii) Social Signal Understanding, (iv) Behavioural Understanding, and (v) Context Understanding. Cross-modality analysis of social, behavioural, and contextual information using deep neural networks is suggested to bring social intelligence to the next level. Moreover, we developed three social intelligence systems for depression detection, activity recognition, and cognitive impairment screening.

Our study contributes to the cumulative theoretical development of social computing and artificial intelligence. The three systems demonstrate how social intelligence can open up new applications. We hope our social behaviour understanding framework provides meaningful guidelines for the development of new types of social computing systems. This paper is not an end but rather a beginning: we are looking into ways of further refining and evaluating our social intelligence systems.

References

- [1] Albrecht, K.: Social intelligence: The new science of success. John Wiley & Sons (2006)

- [2] Beck, A., Ward, C., Mendelson, M., Mock, J., Erbaugh, J.: An inventory for measuring depression. Archives of General Psychiatry 4(6), 561–571 (1961)

- [3] Chen, H., Liu, X., Li, X., Shi, H., Zhao, G.: Analyze spontaneous gestures for emotional stress state recognition: A micro-gesture dataset and analysis with deep learning. In: 2019 14th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2019). pp. 1–8. IEEE (2019)

- [4] Eyben, F., Weninger, F., Wöllmer, M., Schuller, B.: Open-source media interpretation by large feature-space extraction. TU München, MMK (2016)

- [5] Gold, J.M., Tadin, D., Cook, S.C., Blake, R.: The efficiency of biological motion perception. Perception & Psychophysics 70(1), 88–95 (2008)

- [6] Gratch, J., Artstein, R., Lucas, G.M., Stratou, G., Scherer, S., Nazarian, A., Wood, R., Boberg, J., DeVault, D., Marsella, S., et al.: The distress analysis interview corpus of human and computer interviews. In: LREC. pp. 3123–3128. Citeseer (2014)

- [7] Gunes, H., Pantic, M.: Automatic, dimensional and continuous emotion recognition. International Journal of Synthetic Emotions (IJSE) 1(1), 68–99 (2010)

- [8] Hershey, S., Chaudhuri, S., Ellis, D.P., Gemmeke, J.F., Jansen, A., Moore, R.C., Plakal, M., Platt, D., Saurous, R.A., Seybold, B., et al.: CNN architectures for large-scale audio classification. In: 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). pp. 131–135. IEEE (2017)

- [9] Hildmann, H.: Designing behavioural artificial intelligence to record, assess and evaluate human behaviour. Multimodal Technologies and Interaction 2(4), 63 (2018)

- [10] Krizhevsky, A., Sutskever, I., Hinton, G.E.: ImageNet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems. pp. 1097–1105 (2012)

- [11] Kroenke, K., Spitzer, R.L.: The PHQ-9: a new depression diagnostic and severity measure. Psychiatric Annals 32(9), 509–515 (2002)

- [12] Kroenke, K., Spitzer, R.L., Williams, J.B.: The PHQ-9: validity of a brief depression severity measure. Journal of General Internal Medicine 16(9), 606–613 (2001)

- [13] LeCun, Y., Bengio, Y., Hinton, G.: Deep learning. Nature 521(7553), 436–444 (2015)

- [14] Lee, H., Park, K., Lee, B., Choi, J., Elmasri, R.: Issues in data fusion for healthcare monitoring. In: Proceedings of the 1st international conference on PErvasive Technologies Related to Assistive Environments. pp. 1–8 (2008)

- [15] Lewinsohn, P.M., Seeley, J.R., Roberts, R.E., Allen, N.B.: Center for Epidemiologic Studies Depression Scale (CES-D) as a screening instrument for depression among community-residing older adults. Psychology and Aging 12(2), 277 (1997)

- [16] LiKamWa, R., Liu, Y., Lane, N.D., Zhong, L.: Moodscope: Building a mood sensor from smartphone usage patterns. In: Proceedings of the 11th Annual International Conference on Mobile Systems, Applications, and Services. pp. 389–402 (2013)

- [17] Nguyen, H.D., Jiang, Y., Eiring, Ø., Poo, D.C.C., Wang, W.: Gamification design framework for mobile health: designing a home-based self-management programme for patients with chronic heart failure. In: International Conference on Social Computing and Social Media. pp. 81–98. Springer (2018)

- [18] Nguyen, H.D., Poo, D.C.C.: Automated mobile health: Designing a social reasoning platform for remote health management. In: International Conference on Social Computing and Social Media. pp. 34–46. Springer (2016)

- [19] World Health Organization: Global action plan on the public health response to dementia 2017–2025 (2017)

- [20] Pollick, F.E., Lestou, V., Ryu, J., Cho, S.B.: Estimating the efficiency of recognizing gender and affect from biological motion. Vision Research 42(20), 2345–2355 (2002)

- [21] Rutkowski, T.M., Abe, M.S., Koculak, M., Otake-Matsuura, M.: Cognitive assessment estimation from behavioral responses in emotional faces evaluation task: AI regression approach for dementia onset prediction in aging societies. arXiv preprint arXiv:1911.12135 (2019)

- [22] Schuller, B., Steidl, S., Batliner, A., Vinciarelli, A., Scherer, K., Ringeval, F., Chetouani, M., Weninger, F., Eyben, F., Marchi, E., et al.: The interspeech 2013 computational paralinguistics challenge: Social signals, conflict, emotion, autism. In: Proceedings INTERSPEECH 2013, 14th Annual Conference of the International Speech Communication Association, Lyon, France (2013)

- [23] Scibelli, F., Roffo, G., Tayarani, M., Bartoli, L., De Mattia, G., Esposito, A., Vinciarelli, A.: Depression speaks: Automatic discrimination between depressed and non-depressed speakers based on nonverbal speech features. In: 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). pp. 6842–6846. IEEE (2018)

- [24] Spitzer, R.L., Kroenke, K., Williams, J.B., Patient Health Questionnaire Primary Care Study Group: Validation and utility of a self-report version of PRIME-MD: the PHQ primary care study. JAMA 282(18), 1737–1744 (1999)

- [25] Tan, H., Bansal, M.: LXMERT: Learning cross-modality encoder representations from transformers. arXiv preprint arXiv:1908.07490 (2019)

- [26] Vahora, S., Chauhan, N.: Group activity recognition using deep autoencoder with temporal context descriptor. International Journal of Next-Generation Computing 9(3) (2018)

- [27] Vinciarelli, A., Pantic, M., Bourlard, H.: Social signal processing: Survey of an emerging domain. Image and Vision Computing 27(12), 1743–1759 (2009)

- [28] Wall, D.P., Dally, R., Luyster, R., Jung, J.Y., DeLuca, T.F.: Use of artificial intelligence to shorten the behavioral diagnosis of autism. PLoS ONE 7(8) (2012)

- [29] Wang, F.Y., Carley, K.M., Zeng, D., Mao, W.: Social computing: From social informatics to social intelligence. IEEE Intelligent Systems 22(2), 79–83 (2007)

- [30] Zhang, K., Huang, Y., Du, Y., Wang, L.: Facial expression recognition based on deep evolutional spatial-temporal networks. IEEE Transactions on Image Processing 26(9), 4193–4203 (2017)

- [31] Zhao, Z.Q., Zheng, P., Xu, S.T., Wu, X.: Object detection with deep learning: A review. IEEE Transactions on Neural Networks and Learning Systems 30(11), 3212–3232 (2019)