\ul

Smooth Robustness Measures for Symbolic Control Via Signal Temporal Logic

Abstract

Symbolic control problems aim to synthesize control policies for dynamical systems under complex temporal specifications. For such problems, Signal Temporal Logic (STL) is increasingly used as the formal specification language due to its rich expressiveness. Moreover, the degree of satisfaction of STL specifications can be evaluated using STL robust semantics as a scalar robustness measure. This capability of STL enables transforming a symbolic control problem into an optimization problem that optimizes the corresponding robustness measure. However, since these robustness measures are non-smooth and non-convex, exact solutions can only be computed using computationally inefficient mixed-integer programming techniques that do not scale well. Therefore, recent literature has focused on using smooth approximations of these robustness measures to apply scalable and computationally efficient gradient-based methods to find local optima solutions. In this paper, we first generalize two recently established smooth robustness measures (SRMs) and two new ones and discuss their strengths and weaknesses. Next, we propose STL error semantics to characterize the approximation errors associated with different SRMs under different parameter configurations. This allows one to sensibly select an SRM (to optimize) along with its parameter values. We then propose STL gradient semantics to derive explicit gradients of SRMs leading to improve computational efficiency as well as accuracy compared to when using numerically estimated gradients. Finally, these contributions are highlighted using extensive simulation results.

I Introduction

A symbolic control problem arises when a dynamical system needs to be controlled such that its trajectory satisfies some complex temporal specifications. For example, such specifications may include requirements expected from the dynamical system, like achieving time-sensitive goals, avoiding undesirable behaviors and ensuring desirable behaviors over certain periods of time. To precisely express such temporal specifications, temporal logics like Linear Temporal Logic [1], Computation Tree Logic [2], Interval Temporal Logic [3], Metric Temporal logic [4], Time Window Temporal Logic [5] and Signal Temporal Logic (STL) [6] can be used.

Among these temporal logics, STL has the advantage of having STL robust semantics that can systematically transform the given temporal specifications and a system trajectory (i.e., a “signal”) into a scalar robustness measure - which is indicative of both the satisfaction/violation and the degree of satisfaction/violation of the specification via its sign and magnitude, respectively. This unique property enables STL to transform a symbolic control problem into an optimization problem that finds the control sequence (signal) that maximizes the corresponding robustness measure. Due to these capabilities of STL, in recent years, it has been increasingly used in control systems related application domains such as in planning [7], robotics [8], multi-agent systems [9], automotive systems [10], cyber-physical systems [11] and biological systems [12, 13].

The conventional robustness measure is non-smooth and non-convex due to the involved min and max operators in its definition. Therefore, optimizing such a robustness measure is an extremely challenging task. The work in [14] formulates this optimization problem as a Mixed-Integer Program (MIP) so as to obtain an exact solution. However, this MIP formulation scales poorly with respect to many aspects of the original optimization problem (e.g., the complexity of the specification). Moreover, MIPs are inherently computationally inefficient to solve due to their complexity properties. With regard to this optimization problem, prior work have also explored alternative MIP formulations [15, 11] as well as heuristic [16, 17] and non-smooth [18, 19] optimization approaches, but with no solution to the aforementioned scalability and computational efficiency concerns.

To address these concerns, several recent works such as [7, 8, 20] pioneered by [21] propose to use smooth approximations of the conventional robustness measure so as to exploit the scalable, computationally efficient and well-established gradient-based optimization methods. It is worth noting that even though gradient-based optimization methods are susceptible to the issue of local optima, there are several efficient and practical ways to overcome this issue [22, 23, 24]. Moreover, the consensus in the literature [21, 9, 7, 8, 20] seems to indicate that the scalability and the computational efficiency offered by adopting gradient-based optimization methods outweigh the effect of any such drawbacks.

Typically, an approximate smooth robustness measure (SRM) is obtained by replacing (approximating) the min and max operators in the conventional robustness measure with some smooth operators. Therefore, depending on the nature of the used smooth operators, the resulting SRM will have different characteristics. For example, [21] proposes to use quasi-min and quasi-max [25] operators as the smooth operators. However, even though the resulting SRM is globally smooth, it is not sound, i.e., achieving a positive value for the SRM does not imply the satisfaction of the specification [21]. To recover this soundness property, [7] proposes to use smooth operators based on arithmetic and geometric means (partly motivated by [8]). Even though the resulting SRM ensures soundness and also leads to more conservative results (i.e., more uniformly robust to external disturbances), it is not globally smooth [7]. Motivated by this predicament, the subsequent work [20] (which also is the predecessor to this work) proposes to use quasi-min and soft-max [25] operators as smooth operators. The resulting SRM is sound, globally smooth, and also offers a tunable level of conservativeness [20] (via selecting the parameters of the used smooth operators).

Despite these successive recent improvements, there still are many contributions to be made in this research. For example, even though the proposed SRMs in [7] and [20] are sound, they are not reverse sound, i.e., achieving a negative value for the SRM does not imply the violation of the specification. In fact, in some applications (e.g., in falsification problems [16]), the reverse soundness property is far more crucial than the soundness property. To address this need, inspired by [21, 20], in this paper, we propose a new SRM that is reverse sound and also globally smooth. Along the same lines, we propose another SRM that aims to provide more flexibility when tuning the level of conservativeness (still, via selecting the parameters of the used smooth operators).

A natural question to ask at this point is: “How one should select these smooth operator parameters?” Typically, smaller parameter values lead to more conservative results, while larger parameter values lead to more accurate SRM (i.e., closer to the actual robustness measure). Authors in [21] motivate the latter accuracy argument as it leads to ensure properties like soundness and reverse soundness (together called completeness) asymptotically. However, authors in [20] point out the prior conservativeness argument. Nevertheless, both works do not provide a systematic approach to select these parameters. To answer this question, in this paper, we propose STL error semantics to determine a bound for the approximation error associated with the used SRM in terms of the used operator parameters. Note that, since the width of such an error bound is a measure of the accuracy of the used SRM in terms of the used operator parameters, it can easily be used to tune the operator parameters and compare different (existing and newly proposed) SRMs.

As mentioned before, the primary motivation behind using an SRM (as opposed to using the non-smooth conventional robustness measure) is to enable the use of efficient and scalable gradient-based optimization methods [21, 9, 8, 7, 20]. Therefore, intuitively, to best reap these benefits of gradient-based optimization, having an accurate as well as efficient way to evaluate the required “gradients” is of prime importance (here, the “gradients” refer to the gradients of the used SRM with respect to the system controls). Clearly, the use of numerically evaluated gradients is both computationally inefficient as well as inaccurate [21]. However, to the best of the authors’ knowledge, experimental results reported in existing literature [21, 9, 8, 7, 20] use numerically evaluated gradients defined via finite differences [21] or software packages like AutoGrad [26]. Motivated by this, we propose STL gradient semantics to determine the explicit gradient of any used SRM. Such derived explicit gradients are accurate (by definition) and significantly efficient compared to numerically evaluated gradients, and thus, allows us to reap the benefits of gradient-based optimization methods to the fullest.

In all, our contributions can be summarized as follows:

-

1.

Two new SRMs are proposed. One is guaranteed to be reverse sound, while the other is intended to provide more flexibility when tuning the level of conservativeness.

-

2.

We propose STL error semantics to determine a bound for the approximation error of the used SRM in terms of the used operator parameters, enabling one to sensibly select an SRM along with its smooth parameter values.

-

3.

We propose STL gradient semantics to derive the explicit gradient of the used SRM, leading to improve the accuracy and the efficiency (of both gradients and results) compared to when using numerically estimated gradients.

-

4.

An extensive collection of simulation results has been reported along with source codes for reproduction and reuse purposes.

This paper is organized as follows. Section II introduces the considered class of symbolic control problems and the STL robust semantics. Section III provides the details of existing and new SRMs and introduces the smooth STL robust semantics. The proposed STL error semantics that can be used to characterize the approximation errors associated with different SRMs are discussed in Section IV. The proposed STL gradient semantics that can be used to derive explicit gradients of SRMs are discussed in Section V. Several observed simulation results, along with few interesting future research directions, are provided in Section VI before concluding the paper in Section VII.

II Problem Formulation

We consider a non-linear discrete-time system of the form:

| (1) | ||||

where is the system state, is the control input, and is the output signal. It is also assumed that and in (1) are continuous and differentiable. Given a finite horizon , an initial condition and a bounded-time STL specification , our goal is to synthesize a control input sequence such that the resulting output signal satisfies (here, denotes the discrete interval ).

II-A STL Robust Semantics

STL was first introduced in [6] as a variant of temporal logic tailored for specifying desired temporal properties of real-valued signals. In particular, an STL specification is built recursively from the predicates using the STL syntax:

| (2) |

where is a predicate defined by the function . While some STL synthesis methods require to be linear, in our approach, we only require to be differentiable. The conjunction () and negation () operators can be used to derive other Boolean operators such as disjunction () and implication (). Similarly, the until () operator can be used to derive other temporal operators such as eventually () and always (). Here represents a discrete interval starting from a time point to a time point where . Since we restrict ourselves to bounded-time STL specifications, .

For example, the STL specification states that “ must be True at all time points in and must be True at some time point in .” Here, each is an STL specification itself, which incidentally can be a predicate . In the latter case, notice the dependence of on the time point .

We use the STL robust semantics (defined below) to assign a scalar value (called the robustness measure) for a signal with respect to a given specification. This particular robustness measure is positive if and only if the signal satisfies the given specification. In other words, , where is the robustness measure of the signal with respect to the specification . Let us use the notation to denote the suffix of the signal starting from time point (note that, ).

Definition 1.

(STL Robust Semantics)

-

•

-

•

-

•

-

•

-

•

-

•

-

•

-

•

-

•

II-B Synthesis Problem as an Optimization Problem

Finding a control input sequence for the system (1) so that the resulting output signal satisfies the given specification is formally known as the synthesis problem. Using STL robust semantics discussed above, this synthesis problem can be stated as an optimization problem of the form:

| (3) | ||||||

| Subject to: | ||||||

Note that the three feasibility constraints in the last line of (3) can be included in the robustness measure (i.e., in the objective function of (3)) by considering them as STL specifications, say , on respective signals (similar to and , ). For example, if , then, .

Therefore, (3) can be re-stated as

| (4) | ||||||

| Subject to: | ||||||

where , and hence, . With a slight abuse of notation, we can replace the STL specification simply by . As a result, the robustness measure objective in (4) can be written of as

| (5) |

It is worth pointing out that the state signal and the output signal are both fully determined by the control signal through the agent dynamics (1) and the given initial condition . Exploiting this dependence, we can define a composite control dependent signal where . Hence, in (5) can be written as . Therefore, (4) can be re-stated as an unconstrained optimization problem:

| (6) |

where the objective function is simply the robustness measure of the signal with respect to the specification .

According to the STL robust semantics, if the determined optimal control signal in (6) is such that , the signal is guaranteed to satisfy the specification . In other words, denoting the corresponding output and state signals respectively as and , is guaranteed to satisfy the given specification in (3), and, signals , and will always remain within their respective feasible regions stated in (3).

Remark 1.

In this paper, we focus on solving the optimization problem (6) (same as (3) and (4)) to determine the optimal controls for the synthesis problem. An alternative energy-aware approach would be to solve an optimization problem of the form

| (7) |

where is a scaling factor. Similarly, an alternative receding horizon control approach (preferred if is large) can also be facilitated - by slightly modifying the form of (7).

III Smooth Robustness Measures (SRMs)

The robustness measure objective of the optimization problem (6) (i.e., the interested synthesis problem) is non-smooth and non-convex due to the involved non-smooth and operators in the STL robust semantics. In the special case where the system (1) is linear and all the predicates required to define the STL specification are linear, the problem (6) can be encoded as a Mixed Integer Program (MIP) [14]. However, the complexity of this MIP’s solution process grows exponentially with the number of predicates and . Therefore, even for such a special (linear) case, solving (6) is extremely challenging.

To address this challenge, the recent literature [21, 8, 7, 20] has focused on replacing the non-smooth robustness measure objective in (6) with a smooth approximation of it. Such a smooth approximation is denoted as and is referred to as a smooth robustness measure (SRM). This modification enables the use of computationally efficient and scalable gradient-based optimization techniques to solve (6).

Typically, an SRM is obtained by replacing (approximating) the non-smooth and operators involved in by respective smooth-min and smooth-max operators (denoted as and ). In particular, to evaluate such an SRM , we use the smooth STL robust semantics defined below.

Definition 2.

(Smooth STL Robust Semantics)

-

•

-

•

-

•

-

•

-

•

-

•

-

•

In writing the above semantics, for notational simplicity, we have omitted representing the control () dependence of the composite signal (). Moreover, without loss of generality, we have assumed that the STL specification is given in disjunctive normal form, i.e., the negation operator is only applied to predicates . It is worth noting that any STL specification can be written in disjunctive normal form [20].

| Smooth Operator |

|

|

|

|||||

| -Quasi- | ||||||||

| -Quasi- | ||||||||

| -Soft- | ||||||||

| -Soft- |

Table I summarizes possible two smooth-min operators and two smooth-max operators that can be used in Def. 2. Based on the configuration of the used smooth-min and smooth-max operators, as outlined Tab. II, we can define four different SRMs (labeled SRM1-4). In the following four subsections, we discuss each of these different SRMs.

Notation

Unless stated otherwise, is used to represent an arbitrary finite set of real numbers (without loss of generality, is also assumed to be in descending order). Further, are used to represent two user defined parameters. We also point out that, we have slightly abused the notation by using it to represent closed intervals in both and . However, we believe its meaning would be clear from the context.

III-A SRM1: Proposed in [21]

The work in [21] has proposed to use -Quasi- and -Quasi- operators (see Tab. I and [25]):

| (8) | ||||

as smooth-min and smooth-max operators, respectively. The operators in (8) are smooth everywhere, and thus, their respective gradients can be derived easily (provided in Tab. I). The following lemma establishes bounds on the approximation errors associated with the operators in (8).

Lemma 1.

The smooth-min and smooth-max operators in (8) satisfy the following respective approximation error bounds:

| (9) | ||||

Proof.

Recall that the set is in descending order. Therefore, the approximation error associated with the smooth-min operator in (8) is

| (10) | ||||

| (11) |

Both inequalities used in the above derivation exploit the monotonicity property of the function. Using the same property, we can also write

| (12) |

The relationships in (10), (11) and (12) establish the first result in (9). Following the same steps, the second result in (9) can also be established. This completes the proof. ∎

From the above lemma, it is clear that the approximation errors associated with the smooth-min and smooth-max operators in (8) are bounded and also vanishes as . Since these operators are used when determining the SRM1 (via Def. 2), we can use (9) to bound the approximation error associated with the SRM1 as

| (13) |

where and are two real numbers dependent on the STL specification and the parameters . In other words, the actual (non-smooth) robustness measure with respect to the SRM1 is placed such that

| (14) |

The exact details on how and can be computed are provided in Section IV. Regardless, at this point, it should be clear from (9) that neither nor in (13) is necessarily zero. This implies that, in this scenario (SRM1), achieving for some composite signal does not guarantee the satisfaction of the specification , i.e.,

Similarly, in this scenario (SRM1), achieving for some composite signal does not guarantee the violation of the specification either, i.e.,

The above two characteristics indicate that the SRM1 is neither sound, revers-soundness nor complete. The formal definitions of these concepts: soundness, reverse-soundness and completeness considered in this paper are given in the following definition.

Definition 3.

A smooth robustness measure is called:

-

1.

“sound” if , for any signal and specification ,

-

2.

“reverse-sound” if , for any signal and specification ,

-

3.

“complete” if it is both sound and reverse-sound.

Since the approximation errors associated with the smooth-min and smooth-max operators in (8) vanish as the parameters (see Lm. 1), the same can be expected from the approximation error associated with the SRM1, i.e., as , the SRM1 . Therefore, as , SRM1 becomes sound, reverse-sound and complete. Hence, the SRM1 is called asymptotically sound, asymptotically reverse-sound and asymptotically complete.

III-B SRM2: Recovering the Soundness Property [20]

Even though the aforementioned asymptotic properties of SRM1 are theoretically reassuring, when it comes to implementations, allowing the parameters is not practical (see (8)). This limitation is partially addressed in [20] by proposing a new SRM (labeled as SRM2) that is sound - irrespective of the parameters . Moreover, this SRM2 is asymptotically reversed-sound and asymptotically complete. In all, SRM2 [20] recovers the lacking soundness property in SRM1 [21].

In particular, SRM2 is defined using -Quasi- and -Soft- operators (see Tab. I):

| (15) | ||||

as smooth-min and smooth-max operators, respectively. Similar to before, the above operators are smooth everywhere, and their respective gradients are provided in Tab I. The following lemma establishes the bounds on the approximation errors associated with the operators in (15).

Lemma 2.

The smooth-min and smooth-max operators in (15) satisfy the following respective approximation error bounds:

| (16) | ||||

Proof.

The first result in (16) has already been proven in Lm. 1. Hence, here we only need to prove the second result in (16). The approximation error associated with the smooth-max operator in (15) is

| (17) | ||||

| (18) |

An alternative lower bound can be obtained by continuing from (17) as

| (19) |

All the inequalities used in the above derivations exploit the monotonicity property the exponential function. Using the same property, we can also write

| (20) |

The relationships in (18), (19) and (20) prove the second result in (16), and thus, the proof is complete. ∎

Recall that the operators in (15) define the SRM2 via Def. 2. Now, the following lemma can be established regarding the approximation error associated with the SRM2.

Lemma 3.

The approximation error associated with the SRM2 is bounded such that

| (21) |

where is some positive real number dependent on the specification and the parameters , and as .

Proof.

Lemma 2 implies that for any set of real numbers , the smooth operators in (15) satisfy and . Note also that SRM2 is computed recursively using the semantics in Def. 2 while is computed recursively using the semantics in Def. 1. Then, from comparing the respective semantics in Defs. 1 and 2, it is easy to see that as

-

•

-

•

-

•

-

•

-

•

-

•

-

•

.

Moreover, according to Lm. 2, the approximation errors associated with the operators in (15) are bounded and also vanishes as . Therefore, the approximation error associated with the SRM2 should follow (21). ∎

The above lemma implies that the actual (non-smooth) robustness measure with respect to the SRM2 is placed such that

Therefore, in this scenario (SRM2), achieving guarantees the satisfaction of the specification, i.e.,

This indeed is the soundness property promised earlier. Hence, it is clear that the SRM2 proposed in [20] is sound and also asymptotically reverse-sound and asymptotically complete.

III-C SRM3: Recovering the reverse-soundness

Even though having a sound SRM (e.g., SRM2) is important in verification type problems, in falsification type problems (where the aim is to find a control that violates a given specification), it is critical to have a reverse-sound SRM. Further, since a reverse-sound SRM will, by definition, over-approximate the actual robustness measure (as opposed to a sound SRM that under-approximates the actual robustness measure), one can expect to use a sound SRM together with a reverse-sound SRM (e.g., use one as a “boosting” objective function for the other [23]) to achieve better and more decisive results. Motivated by these prospects, in the same spirit of the SRM2, here we propose a new SRM (labeled as SRM3) that recovers the lacking reverse-soundness property in SRM1 [21], while also preserving the asymptotic soundness and asymptotic completeness properties in SRM1 [21].

The proposed SRM3 is defined using -Soft- and -Quasi- operators (see Tab. I):

| (22) | ||||

as smooth-min and smooth-max operators, respectively. Similar to before, the above operators are smooth everywhere, and their respective gradients can be found in Tab. I. The following lemma establishes the bounds on the approximation error associates with the operators in (22).

Lemma 4.

The smooth-min and smooth-max operators in (22) satisfy the following respective approximation error bounds:

| (23) | ||||

Proof.

In parallel to Lm. 3, the following lemma can be established regarding the approximation error associated with the SRM3 defined by the operators in (22).

Lemma 5.

The approximation error associated with the SRM3 is bounded such that

| (24) |

where is some negative real number dependent on the specification and the parameters , and as .

Proof.

The proof follows the same steps as that of Lm. 3, and is, therefore, omitted. ∎

The above lemma implies that the actual (non-smooth) robustness measure lies in the range:

Hence, in this scenario (SRM3), achieving implies a violation of the specification (i.e., ). i.e.,

This indeed is the reverse-soundness property promised earlier. Therefore, it is clear that the proposed SRM3 is reverse sound and also asymptotically sound and asymptotically complete.

III-D SRM4: Lifting the uniform robustness

As a complementary approach for the SRM1 [21], we now propose a new SRM (labeled SRM4). In particular, this SRM4 is defined using the -Soft- and -Soft- operators (see Tab I):

| (25) | ||||

as smooth-min and smooth-max operators, respectively.

Compared to the smooth-min and smooth-max operators used in SRM1 (given in (8)), the operators in (25) have a special property: when , both operators converge to the arithmetic mean operator. According to the findings in [7], optimizing a robustness measure computed based on mean-like (e.g., arithmetic mean) operators rather than min/max-like (e.g., smooth-min/max) operators can lead to more uniformly robust solutions. This is because, while the latter (min/max-based) approach optimizes the minimum margin of satisfaction of all the specification components (e.g., predicates), the former (mean-based) approach optimizes the mean margin of satisfaction of all the specification components. Hence, one can expect to use the SRM1 (with moderate values) together with the SRM4 (with low values) to reach more uniformly robust solutions (e.g., use SRM4 as a “boosting” objective function for the SRM1 [23]). Even when used alone, intuitively, the SRM4 (with moderate values) can be expected to provide more uniformly robust solutions.

Similar to previous SRMs, the above operators are smooth everywhere, and their respective gradients are provided in Tab I. The following lemma establishes the bounds on the approximation errors associated with the operators in (25).

Lemma 6.

The smooth-min and smooth-max operators in (25) satisfy the following respective approximation error bounds:

| (26) | ||||

From the above lemma, it is clear that the approximation errors associated with the smooth-min and smooth-max operators in (25) are bounded and also vanishes as . Therefore, similar to the case with the SRM1, the approximation error associated with the SRM4 can be bounded as

| (27) |

i.e., the actual robustness measure is such that

| (28) |

where and are two real numbers dependent on the STL specification and the parameters . The exact details on how and can be computed are provided in Section IV. Regardless, it should be clear that . Therefore, similar to the SRM1, the SRM4 is asymptotically sound, asymptotically reverse-sound and asymptotically complete.

IV Approximation Errors of Smooth Robustness Measures

In the previous section, we discussed four candidate smooth robustness measures (SRM1-4) that can be used to approximate the actual non-smooth robustness measure when solving the synthesis problem (6) via a gradient-based optimization method. For notational convenience, let us define the corresponding approximation error as

| (29) |

As shown in (13), (21), (24) and (27) (obtained via Lms. 1, 2, 4 and 6, respectively), this approximation error will always be bounded inside some finite interval (henceforth called an error bound) of the form:

| (30) |

irrespective of the signal . Here, and are two real numbers where can be thought of as a collection of all the used smooth-min and smooth-max operator parameters (e.g., ). Intuitively, this error bound will depend on: 1) the specification , 2) the SRM (i.e., the used smooth-min and smooth-max operator configuration: (8), (15), (22) or (25)), and 3) the smooth-min and smooth-max operator parameters .

In this section, we develop a set of semantics (parallel to the ones in Def. 2) to explicitly derive the error bound in (30) for a given specification and an SRM - in terms of the operator parameters . Having this knowledge is critical as it enables one to sensibly select: 1) the SRM to optimize (in (6)), and 2) the operator parameters . For example, one can select an SRM and its operator parameters that minimizes the width of the error bound, i.e., .

IV-A Some Preliminaries

In the following analysis, we use the notation

| (31) | ||||

to commonly represent the approximation error bounds associated with different possible respective smooth-min and smooth-max operators proven in Lms. 1, 2, 4, 6 (also outlined in Tab. I). Note also that, in (31), is the cardinality of the set and are operator parameters that a user needs to select. For example, if the SRM1 is to be used,

We also require the following minor theoretical result.

Lemma 7.

If are such that for all , then

| (32) | |||

Proof.

Finally, we improve the generality of the proposing set of semantics (that computes the error bound in (30)) by introducing a small modification to the smooth STL robust semantics given in Def. 2. In particular, we replace the first smooth STL robust semantic: by a noise affected version of it:

| (33) |

where the noise term is such that with known functions and . For example, where is a known constant, is a possibility. Intuitively, this modification causes the error bound in (30) to become dependent on the signal , i.e., now,

| (34) |

Therefore, this modification complicates the task at hand: computing the error bound . However, as we will see in the sequel, it allows us to see how the uncertainties in the mission space can affect this error bound .

Remark 2.

If there are no uncertainties in the mission space, one can simply set in (33) for all and for all predicates . Moreover, if such uncertainties are independent of the agent trajectory , one can simply set and where and are known constants. In both of these occasions, the resulting error bound will be independent of the signal . Evaluating such a signal independent error bound is a reasonable thing to do, especially in an off-line stage where the signal is unknown and the focus is on sensibly selecting an SRM along with its operator parameters .

IV-B STL error semantics

Consider the set of semantics (we named the STL error semantics) defined in the following definition.

Definition 4.

(STL Error Semantics)

-

•

-

•

-

•

-

-

•

-

-

•

-

-

•

-

-

•

-

-

where

-

-

-

-

.

Theorem 1.

Proof.

Semantic 1

Semantic 2

Can be proved using the same steps as above.

Semantic 3

According to the corresponding semantics in Defs. 1 and 2, and , respectively. Therefore, the corresponding approximation error is .

To bound this error term , what we have at our disposal are the error bounds: , for Applying these two error bounds in Lm 7, we get

| (35) | |||

Further, using (31), we can write

| (36) | |||

Semantic 4

Can be proved using the same steps as above.

Semantic 5

Similar to before, by subtracting the corresponding semantics in Defs. 1, 2, we get the error term

| (37) | |||

To bound this error term , what we have at our disposal are the bounds: , for all . Applying these error bounds in Lm 7, we get

| (38) | |||

Further, using (31), we can write

| (39) | |||

Semantic 6

Can be proved by combining the techniques used to prove Semantics 3, 5 and 6. Due to space constraints, details are omitted here. This completes the proof of Th. 1. ∎

Remark 3.

Note that we are not constrained to use the same parameter values across all the smooth-min, smooth-max operators required when defining an SRM for a specification. In other words, at different levels of the specification, different values can be used. Having this flexibility is especially useful when the components of the specification are not properly normalized. One such example, identified using the proposed STL error semantics, is discussed in Section VI.

To conclude this section, we propose the following set of semantics (we named the Supplementary STL error semantics) applicable for a situation: 1) where there are no mission space uncertainties (i.e., when in (33)), or, 2) where mission space uncertainties are independent of the agent trajectory (i.e., when and where and are known constants). As pointed out in Remark 2, in such scenarios, the resulting error bounds will be independent of the signal . This property is more evident in the semantics defined below (compared to the semantics defined in Def. 4).

Definition 5.

(Supplementary STL Error Semantics)

-

•

-

•

-

•

-

-

•

-

-

•

-

•

-

•

-

where

-

-

-

.

Corollary 1.

Proof.

The first two semantics in Def. 5 can be obtained by applying the relationships and in respective semantics in Def. 4. As a result of these first two semantics being independent of the signal suffix , all the remaining semantics in Def. 5 will also be independent of the signal suffice . These remaining semantics in Def. 5 can be proved by following the same steps used to establish their respective semantics in Def. 4. ∎

V The Gradients of Smooth Robustness Measures

Recall that, in Section II, we formulated the interested synthesis problem (3) as an unconstrained optimization problem (6). Then, since the involved robustness measure objective in (6) is non-smooth, in Section III, we proposed to replace it with a smooth approximation (an SRM) inspired by the recent literature [21, 8, 7, 20]. The main motivation behind this replacement is to enable the use of efficient gradient-based optimization techniques to solve (6). Hence, the importance of knowing the gradient of the used SRM (with respect to ) is clear. However, to the best of the authors’ knowledge, experimental results reported in existing literature [21, 8, 7, 20] relies on numerically evaluated gradients rather than explicitly derived gradients (of SRMs). Intuitively, it is worth investigating the accuracy and the efficiency of such numerically evaluated gradients compared to explicitly derived gradients. Motivated by this need, in this section, we propose an efficient systematic approach to explicitly derive the gradient of the used SRM , i.e., .

First, notice that we can use the chain rule to write

| (41) |

At this point, we remind that: 1) the SRM , 2) the composite signal where with , and 3) the control where (all vectors are column vectors). For notational simplicity (also without any ambiguity), let us denote the three gradient terms in (41) as

where , and (consistent with the previously mentioned vector dimensions). Note that, the composition of these three gradient terms are as follows:

| (42) |

where each for all ,

| (43) |

where each for all and

| (44) |

where each for all .

To obtain the interested gradient term , we need to compute the gradient terms and . For this purpose, in the following two subsections, we respectively propose a novel set of semantics and exploit the agent dynamics (1).

V-A The gradient term:

In the following analysis, we use the notation to represent the zero vector in . Note also that, as shown in Tab. I, we already have derived the gradients of smooth-min and smooth-max operators (i.e., , , for all ) used in different SRMs: SRM1-4. Now, consider the set of semantics (we named the STL gradient semantics) defined below.

Definition 6.

(STL gradient semantics)

-

•

-

•

-

•

-

where and

-

•

-

where and

-

•

-

where for any

-

•

-

where for any

-

•

,

-

where

-

and

-

with , ,

-

and .

Theorem 2.

For a known signal suffix , a specification and a smooth robustness measure (SRM1-4), the STL gradient semantics in Def. 6 can be used to determine the gradient (in the form: ).

Proof.

In Semantics 3-7 of Def. 6, note that all the involved gradient terms of smooth operator values are scalars (e.g., in Semantic 3, see also Tab. I). Hence, in those semantics, such scalar gradients act only as scaling factors on the respective vector-valued constituent gradients (e.g., on in Semantic 3). Therefore, the dimension of the resulting gradient upon using a Semantic 3-7 will be the same as that of the corresponding constituent gradients used. Now, since each such constituent gradient has its root in Semantic 1 - that always assigns a vector in as the gradient value - it is clear that the semantics in Def. 6 will always produce a vector in as the gradient .

In fact, each of the Semantics 3-7 in Def. 6 can be established easily by differentiating the respective semantic in Def. 2 (with respect to ) using the chain rule. For example, the Semantic 3 in Def. 6 is proved by differentiating the Semantic 3 in Def. 2 as

where and . Similarly, even without applying the chain rule, the Semantics 1-2 in Def. 6 can be proved by simply differentiating the respective semantics in Def. 2. Therefore, in parallel to the way that the semantics in Def. 2 computes the SRM , the semantics in Def. 6 computes the gradient of the SRM: . Finally, note that, by definition, and . Hence, is independent of the signal values , and thus, the first terms in will always be zeros. This completes the proof. ∎

V-B The gradient term:

In the following analysis, with regard to the agent dynamics model (1), we use the notation to represent the evaluated at where . The same convention applies for the notations , and . Since the functions and in (1) are assumed as a given and differentiable, their partial derivatives, i.e., , , and are also considered as a given. Therefore, for a given control signal (which also determines the generated state signal through (1)), terms like , , and can be evaluated efficiently for any .

In order to determine the the required gradient term: , we first need to establish the following two lemmas.

Lemma 8.

Under the agent dynamics model (1), the control input signal and the output signal are related such that, for all ,

| (45) |

Proof.

Lemma 9.

Under the agent dynamics model (1), the control input signal and the state signal are related such that, for all ,

| (47) |

Proof.

Using these two lemmas, we now propose a theorem that can be used to determine the required gradient term: .

Theorem 3.

VI Simulation Results

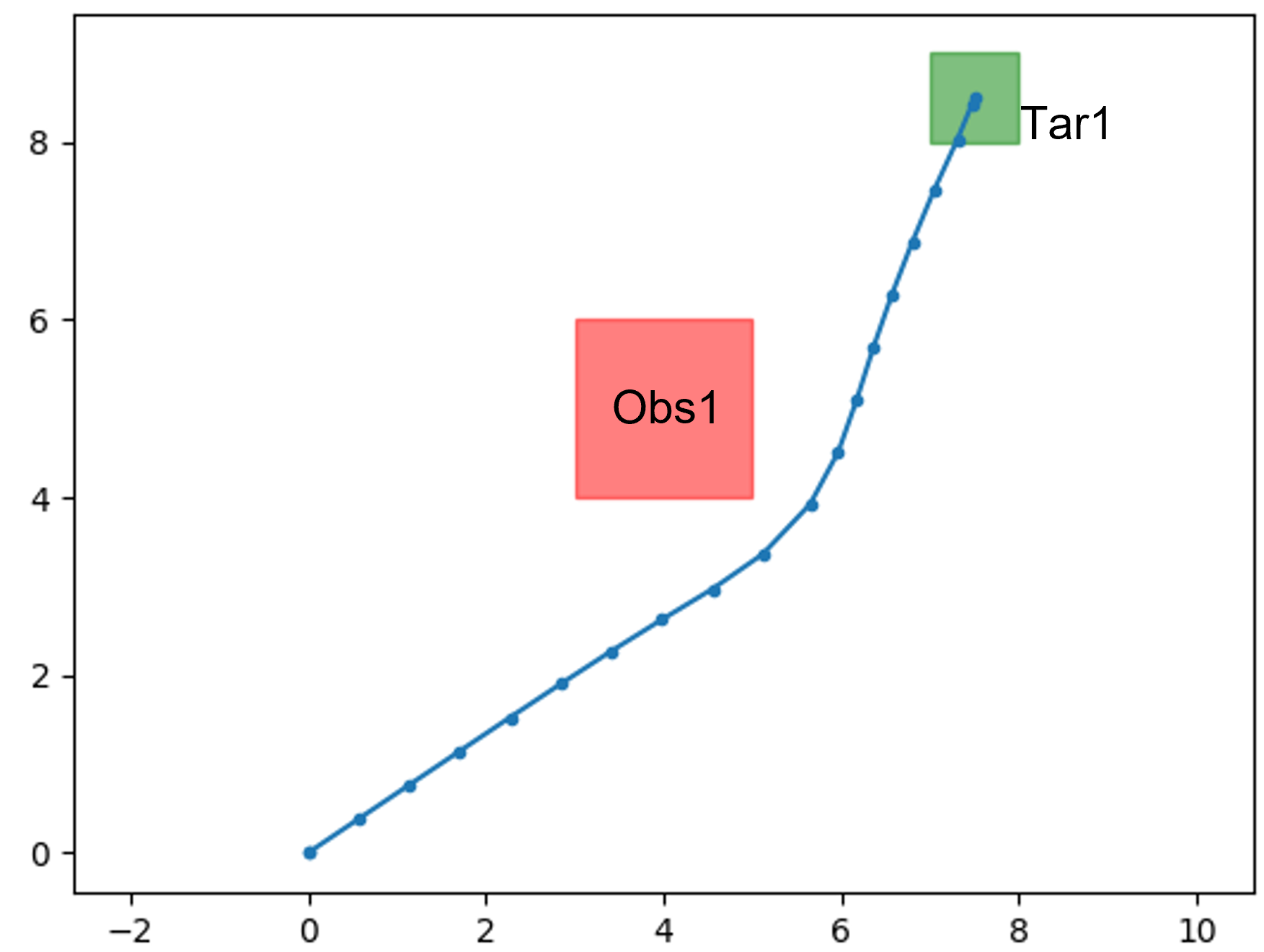

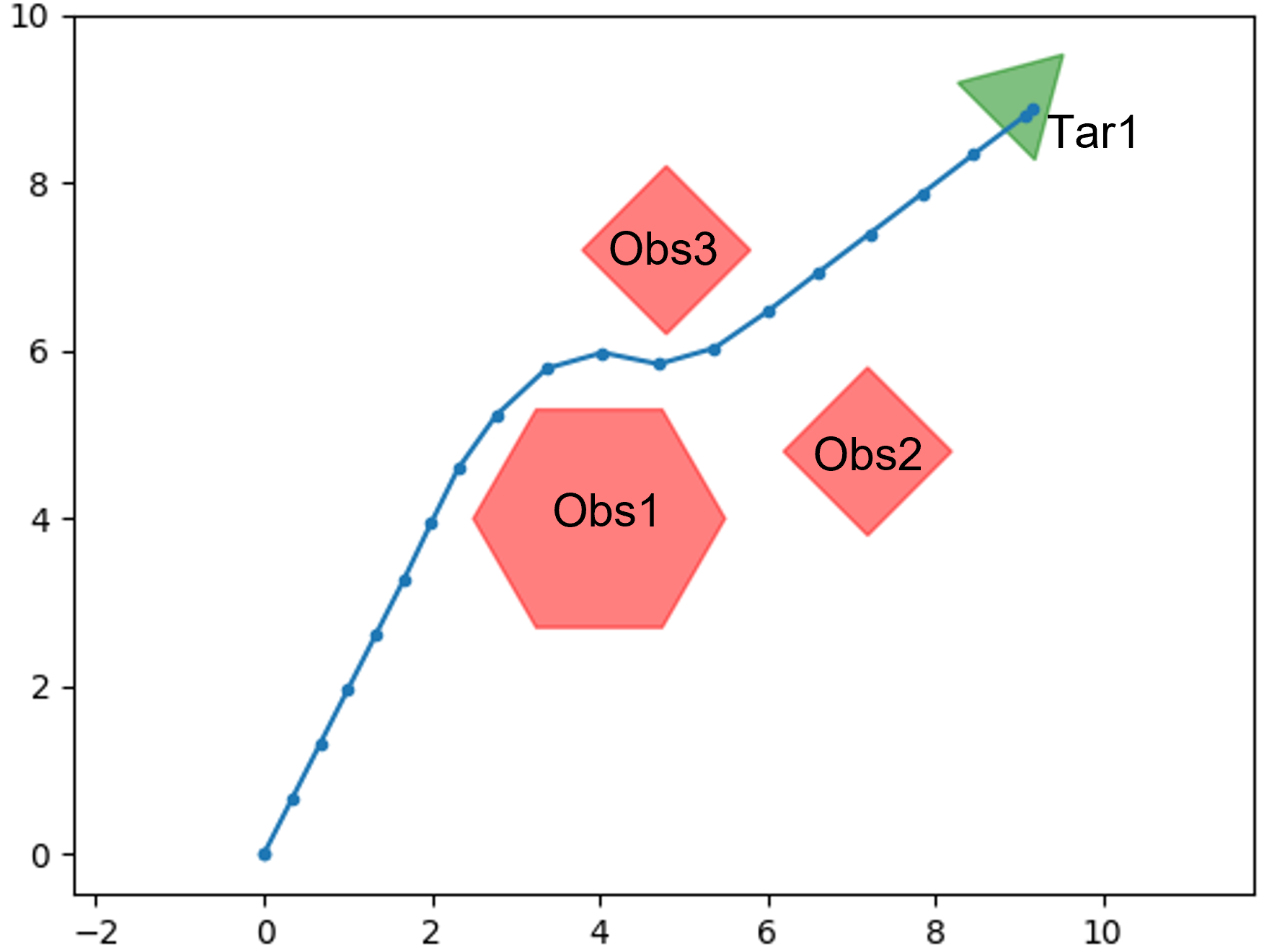

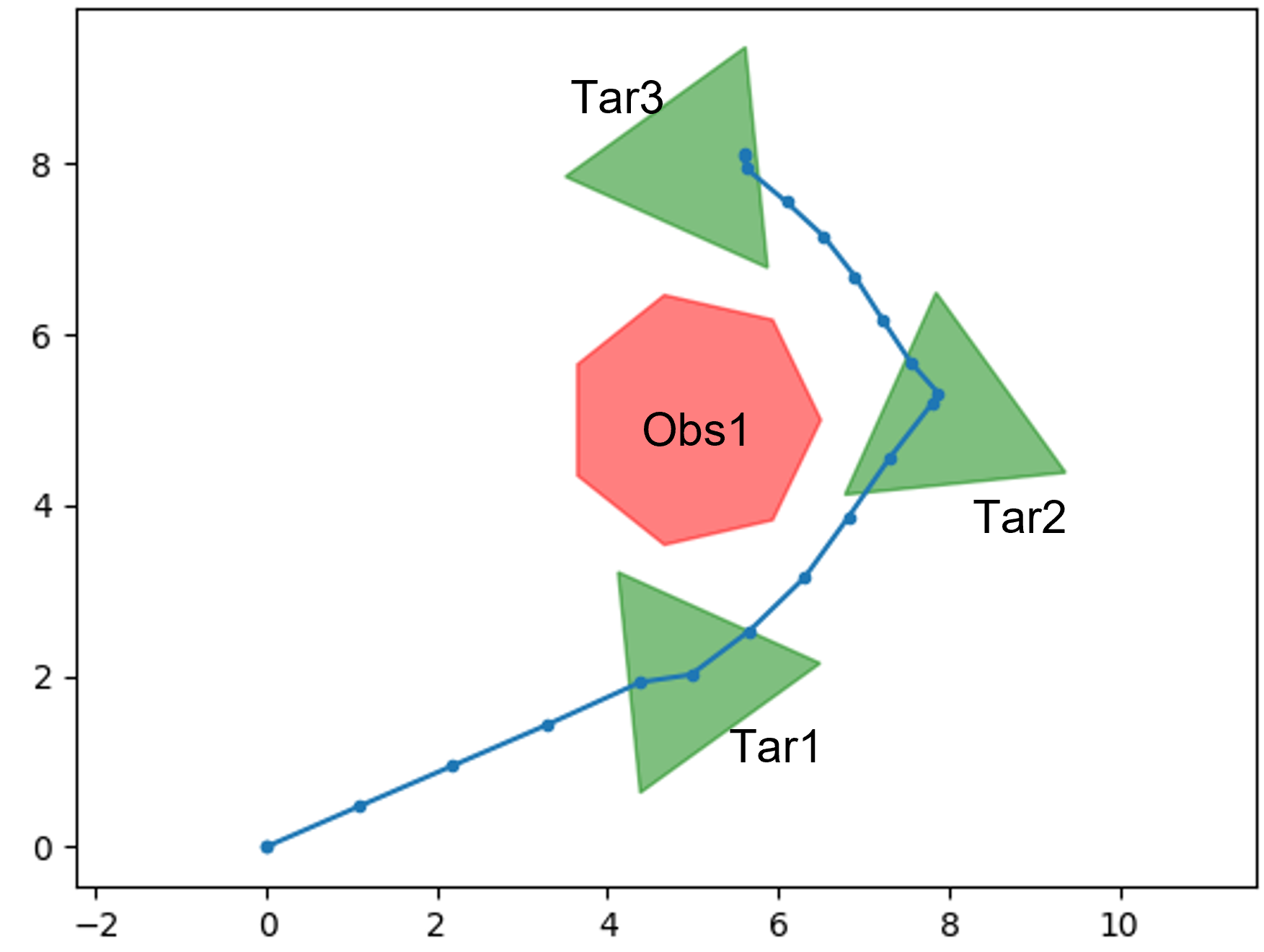

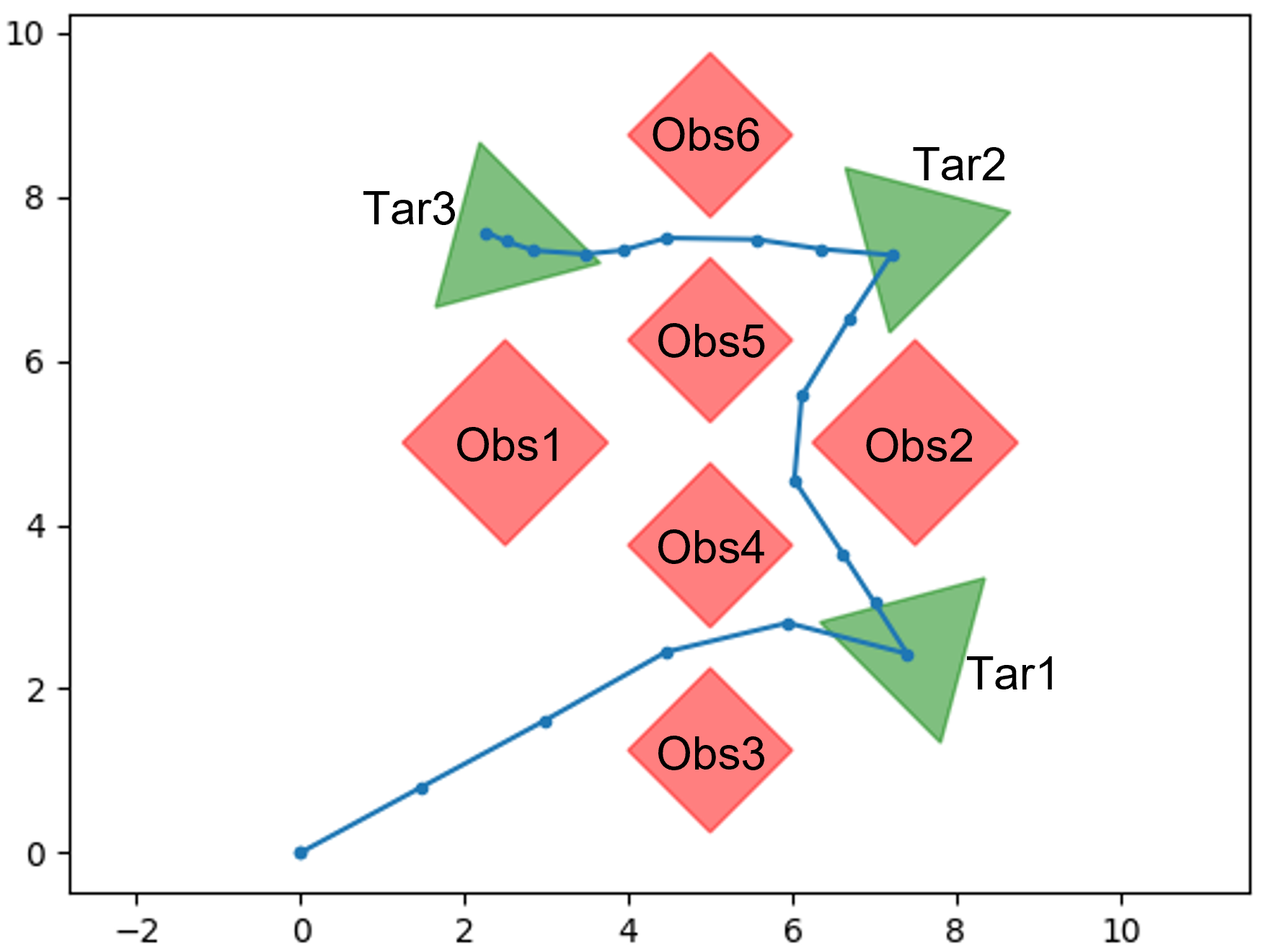

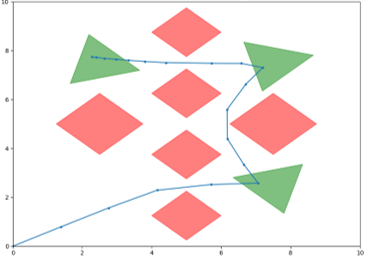

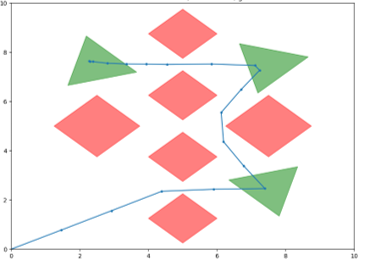

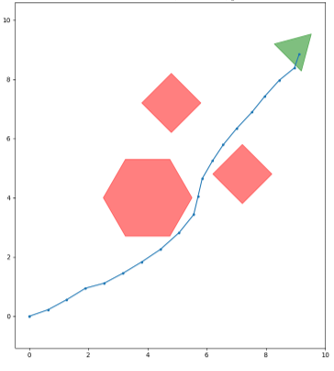

In this section, to highlight our contributions, we consider the four symbolic control problems (SCPs) shown in Fig. 1. In each SCP, the objective is to synthesize a control signal for a simple simulated robot that follows 2-D integrator dynamics [20] such that it satisfies a given specification (indicated in Fig. 1). We have implemented: 1) the SCPs shown in Fig. 1, 2) the SRMs discussed in Section III, 3) the STL error semantics proposed in Section IV and 4) the STL gradient semantics proposed in V, in a Python environment that has been made available at https://github.com/shiran27/Symbolic-Control-via-STL for reproduction and reuse purposes.

In order to solve the aforementioned synthesis problem (i.e., (6)) in an energy-aware manner, we follow the approach used in [20] where a smooth cost function

| (50) |

is optimized (as an alternative to optimizing its non-smooth version: , see also (7)), using the SciPy’s SQP method [27].

VI-A Effectiveness of the explicit gradients

We start with illustrating the efficiency and the accuracy of the explicit gradient computation technique proposed in Section V. For this purpose, we compare such explicit gradients with the gradients provided by the symbolic AutoGrad software package [26] (that was used in [20]) - in terms of the execution times and the absolute component differences (errors). It is also worth noting that the said AutoGrad based approach is much more efficient and accurate compared to numerical methods where the gradient is computed using finite differences [21].

In our experiments, for each SCP shown in Fig. 1 and for each SRM, the explicit gradient and the AutoGrad based gradient (of the cost function) were evaluated at 500 randomly selected control signals. The observed mean execution times and the () mean absolute component errors are reported in Tab. III. According to the observed execution times, the proposed explicit gradient evaluation technique is significantly efficient compared to the AutoGrad based technique as it has improved the mean execution time on average by 57.7% irrespective of the considered SCP or the SRM. Moreover, the observed error values (in log scale) reveal that both gradient computing techniques essentially provide the same gradient values.

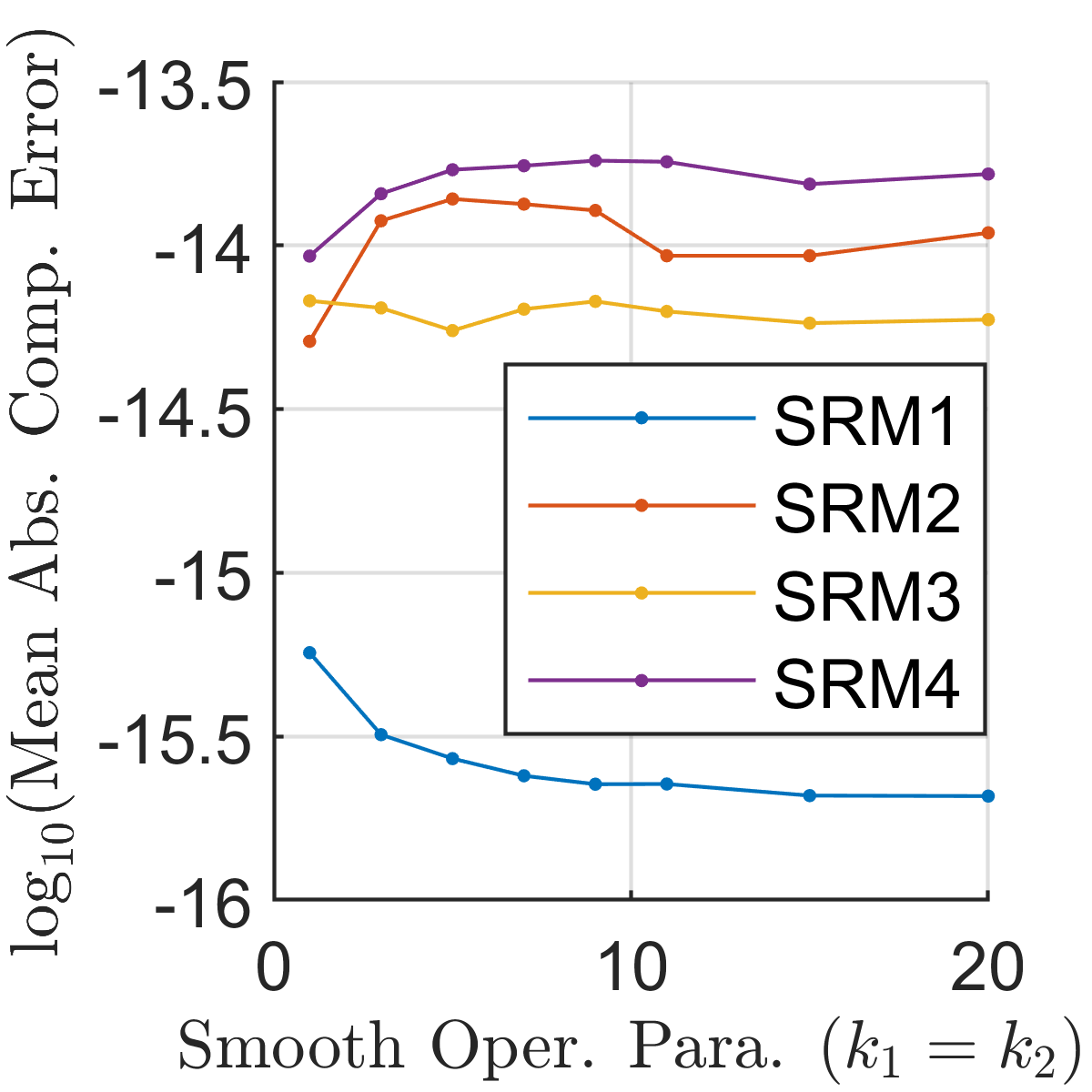

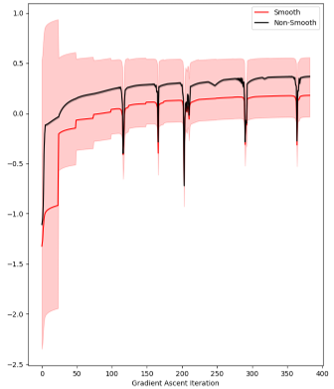

However, notice a considerable difference in the scale (albeit small in the magnitude) in the observed error values across different SRMs. Therefore, this behavior was further investigated under varying smooth operator parameters , for the SCP3. The observed results are illustrated in Fig. 2. Intuitively, with increasing , values, an SRM becomes approximately close to the actual non-smooth robustness measure, and thus, the corresponding gradients evaluated using the AutoGrad method can be expected to deviate from the actual explicit gradients. However, according to the observations shown in Fig. 2, such a behavior can only be seen for SRM2 and SRM4. Moreover, error magnitudes associated with SRM3 and SRM1 are lower (mush significantly in the latter case) than those of SRM2 and SRM4. Hence, we can conclude that SRMs like SRM1 and SRM3 can be used even with the AutoGrad package (to compute gradients) without running into significant numerical inaccuracies irrespective of the used , values.

| SCP | SRM | Mean Execution Time / (ms) | Absolute Component Error | ||||

|

|

|

|||||

| SRM1 | 23.3 | 54.9 | 57.6 | -16.6 | -16.4 | ||

| SRM2 | 23.9 | 56.0 | 57.2 | -16.1 | -15.8 | ||

| SRM3 | 24.8 | 56.6 | 56.3 | -16.0 | -15.8 | ||

| SCP1 | SRM4 | 25.6 | 57.0 | 55.2 | -15.8 | -15.5 | |

| SRM1 | 46.8 | 115.0 | 59.3 | -16.2 | -15.9 | ||

| SRM2 | 44.1 | 105.8 | 58.3 | -15.7 | -15.3 | ||

| SRM3 | 43.8 | 104.2 | 58.0 | -15.6 | -15.3 | ||

| SCP2 | SRM4 | 49.8 | 114.5 | 56.5 | -15.4 | -15.1 | |

| SRM1 | 24.0 | 58.9 | 59.3 | -15.5 | -15.1 | ||

| SRM2 | 24.9 | 60.9 | 59.0 | -13.9 | -13.5 | ||

| SRM3 | 26.3 | 63.0 | 58.3 | -14.2 | -13.7 | ||

| SCP3 | SRM4 | 27.3 | 64.9 | 57.9 | -13.8 | -13.5 | |

| SRM1 | 62.8 | 150.9 | 58.4 | -15.7 | -15.4 | ||

| SRM2 | 66.9 | 156.1 | 57.2 | -14.7 | -14.3 | ||

| SRM3 | 64.4 | 150.7 | 57.2 | -14.5 | -14.2 | ||

| SCP4 | SRM4 | 72.3 | 168.3 | 57.0 | -14.4 | -14.1 | |

VI-B Effectiveness of different SRMs

We now compare different control solutions obtained for each SCP shown in Fig. 1 under different SRMs discussed in Section III. Here we also provide the details of respective approximation error bounds revealed by the STL error semantics proposed in Section IV. As mentioned before, in each scenario, similar to [20], we obtain the control solution by optimizing the smooth cost function (50) using the SciPy’s SQP method [27]. However, one key difference compared to [20] is that we now provide explicitly computed gradients (i.e., ) to this SQP solver.

For the purpose of comparing different control solutions obtained for a SCP, we can directly use the performance metrics: 1) the approximation error bound width , 2) the control cost , 3) the non-smooth (actual) robustness measure and 4) the non-smooth (total) cost function . These performance metrics, along with a few other metrics (that cannot be used for such comparisons, e.g., the smooth robustness measure ) that were observed are summarized in Tab. IV. It is important to point out that all the values provided in Tab. IV are average values computed over 50 realizations, where in each realization, the SQP solver was initialized with a randomly selected control solution.

According to the simulation results reported in Tab. IV, in each SCP, the control solution found using the SRM3 provides the best total cost function value and the best actual robustness value, while the control solution found using the SRM1 provides the best control cost and the best approximation error bound width. This behavior implies that: 1) using an SRM with a moderate-sized approximation error bound (like the SRM3, as opposed to the SRM1) can lead to control solutions with better actual robustness measures, and 2) different SRMs can favor different aspects of the composite cost function (e.g., SRM1 favors reducing the control cost while the SRM2 favors improving robustness measure). Moreover, based on the performance metrics considered in Tab. IV, notice that both SRM2 and SRM4 do not show noticeable results compared to SRM1 or SRM3. In fact, for the SCP4, on average, SRM2 and SRM4 fail to find a feasible solution (as ).

To further investigate the properties of different SRMs, we executed the same set of experiments (that generated the results reported in Tab. IV) with different smooth operator parameter values: . The observed results (omitting some indecisive cases for simplicity) are summarized in Tab. V. Similar to before, these observations show that across different values and SCPs, the SRM1 provides the best control cost and the best approximation error bound width (if it finds a feasible solution). Also, in terms of the actual robustness measure and the total cost function value, the SRM3 performs better than the SRM1 in 65% of the cases considered. Furthermore, the results in Tab. V show that: 1) the overall best robustness measure value achieved for each SCP has been achieved when using the SRM3, 2) the SRM3 is more effective (compared to the SRM1) with low values, 3) the SRM3 finds a feasible solution irrespective of the values used, 4) the SRM1 is more effective (compared to the SRM3) with high values, and, 5) the SRM1 can fail to find a feasible solution when the used values are small.

Naturally, the aforementioned conflicting properties among different SRMs encourage one to jointly use two (or more) SRMs to achieve better performance metrics. For example, a control solution obtained using the SRM3 can be applied to initialize a subsequent stage where the SRM1 will be used. In fact, when this exact strategy was applied to the considered four SCPs (with , as in Tab. IV), the percentage improvements achieved in terms of the average actual robustness measure were: 0.17%, 1.80%, 45.9% and 0.83%, respectively.

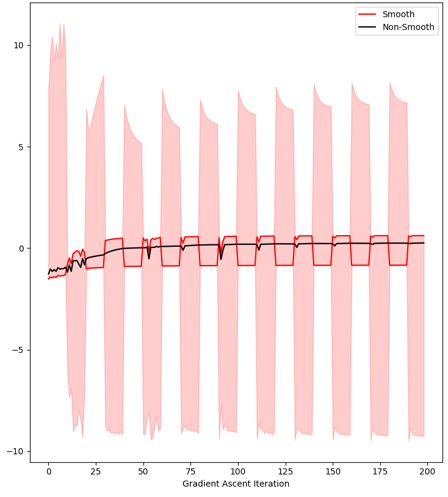

Another example approach (to jointly use two SRMs) is to constantly switch between using the SRM2 and the SRM3 while following a gradient ascent scheme that optimizes the smooth cost function (50). Recall that the SRM2 and the SRM3 are essentially under- and over-approximations to the actual robustness measure. Hence, as illustrated in Fig. 3, with a properly selected switching strategy, this approach will guarantee an approximation error bound width , where and are the converged SRM2 and SRM3 values, respectively. For example, for the four considered SCPs (with ), if the reported SRM2 and SRM3 values in Tab. IV were assumed to be the converged respective SRM2 and SRM3 values under a switching-gradient-ascent approach (as in Fig. 3), the percentage improvements in the approximation error bound widths would be: 63.3%, 71.7%, 77.7% and 44.1%, respectively.

| Smooth | Error Bound Information | Non-Smooth | |||||||||||||||||||

| SCP | SRM |

|

|

|

|

|

Width |

|

|

||||||||||||

| SCP1 | SRM1 | 0.072 | -0.810 | -0.739 | -1.025 | 1.853 | 2.878 | 0.182 | 0.110 | ||||||||||||

| SRM2 | 0.074 | -0.847 | -0.772 | -0.010 | 4.245 | 4.255 | 0.338 | 0.264 | |||||||||||||

| SRM3 | 0.079 | 0.620 | 0.698 | -3.982 | 0.010 | 3.992 | 0.401 | 0.323 | |||||||||||||

| SRM4 | 0.096 | 0.395 | 0.491 | -3.373 | 4.419 | 7.792 | 0.152 | 0.057 | |||||||||||||

| SCP2 | SRM1 | 0.093 | -0.892 | -0.799 | -1.025 | 1.853 | 2.878 | 0.264 | 0.170 | ||||||||||||

| SRM2 | 0.100 | -0.942 | -0.843 | -0.010 | 5.184 | 5.194 | 0.150 | 0.050 | |||||||||||||

| SRM3 | 0.102 | 0.528 | 0.630 | -10.037 | 0.010 | 10.047 | 0.321 | 0.220 | |||||||||||||

| SRM4 | 0.116 | 0.352 | 0.468 | -9.543 | 4.110 | 13.653 | 0.155 | 0.040 | |||||||||||||

| SCP3 | SRM1 | 0.214 | -0.416 | -0.202 | -0.703 | 1.853 | 2.556 | 0.524 | 0.310 | ||||||||||||

| SRM2 | 0.245 | -0.522 | -0.276 | -0.010 | 6.266 | 6.276 | 0.523 | 0.278 | |||||||||||||

| SRM3 | 0.236 | 0.856 | 1.091 | -6.176 | 0.010 | 6.186 | 0.620 | 0.385 | |||||||||||||

| SRM4 | 0.276 | 0.625 | 0.901 | -5.359 | 3.823 | 9.182 | 0.463 | 0.187 | |||||||||||||

| SCP4 | SRM1 | 0.231 | -0.673 | -0.442 | -0.703 | 1.988 | 2.691 | 0.410 | 0.179 | ||||||||||||

| SRM2 | 0.167 | -1.896 | -1.729 | -0.010 | 4.409 | 4.419 | -1.345 | -1.512 | |||||||||||||

| SRM3 | 0.242 | 0.574 | 0.816 | -7.107 | 0.010 | 7.117 | 0.506 | 0.264 | |||||||||||||

| SRM4 | 0.147 | -1.450 | -1.303 | -9.295 | 4.526 | 13.822 | -1.535 | -1.681 | |||||||||||||

| SCP | SRM | Error Bound width: | Control Cost: | Robustness Measure: | Cost: | ||||||||||||||||

| k_1=k_2 | k_1=k_2 | k_1 = k_2 | k_1 = k_2 | ||||||||||||||||||

| 1 | 3 | 5 | 7 | 9 | 1 | 3 | 5 | 7 | 9 | 1 | 3 | 5 | 7 | 9 | 1 | 3 | 5 | 7 | 9 | ||

| SCP1 | SRM1 | 8.594 | 2.878 | 1.735 | 1.245 | \ul0.973 | 0.068 | \ul0.072 | 0.072 | 0.073 | 0.073 | -1.213 | 0.182 | 0.349 | 0.400 | 0.416 | -1.281 | 0.110 | 0.277 | 0.327 | \ul0.343 |

| SRM3 | 8.635 | 3.992 | 3.420 | 2.766 | 2.946 | 0.080 | 0.079 | 0.079 | 0.079 | 0.078 | 0.192 | 0.401 | \ul0.416 | 0.401 | 0.399 | 0.112 | 0.323 | 0.338 | 0.323 | 0.320 | |

| SCP2 | SRM1 | 8.594 | 2.878 | 1.735 | 1.245 | \ul0.973 | 0.097 | \ul0.093 | 0.094 | 0.094 | 0.093 | -0.896 | 0.264 | 0.335 | 0.360 | 0.372 | -0.993 | 0.170 | 0.241 | 0.266 | \ul0.278 |

| SRM3 | 11.648 | 10.047 | 9.456 | 8.982 | 8.741 | 0.102 | 0.102 | 0.098 | 0.100 | 0.098 | 0.185 | 0.321 | \ul0.372 | 0.360 | 0.346 | 0.083 | 0.220 | 0.274 | 0.260 | 0.248 | |

| SCP3 | SRM1 | 7.629 | 2.556 | 1.542 | 1.107 | \ul0.865 | \ul0.118 | 0.214 | 0.225 | 0.223 | 0.219 | 0.187 | 0.524 | 0.602 | 0.596 | 0.590 | 0.069 | 0.310 | 0.376 | 0.374 | 0.371 |

| SRM3 | 7.251 | 6.186 | 5.498 | 5.105 | 5.179 | 0.165 | 0.236 | 0.248 | 0.235 | 0.226 | 0.542 | \ul0.620 | 0.568 | 0.590 | 0.574 | 0.376 | \ul0.385 | 0.320 | 0.355 | 0.348 | |

| SCP4 | SRM1 | 8.034 | 2.691 | 1.623 | 1.165 | \ul0.910 | 0.227 | 0.231 | 0.229 | 0.222 | 0.219 | 0.061 | 0.410 | 0.506 | 0.512 | 0.524 | -0.166 | 0.179 | 0.276 | 0.290 | 0.304 |

| SRM3 | 8.866 | 7.117 | 6.451 | 6.348 | 6.021 | 0.231 | 0.242 | 0.235 | \ul0.217 | 0.220 | 0.151 | 0.506 | 0.494 | 0.532 | \ul0.538 | -0.080 | 0.264 | 0.258 | 0.315 | \ul0.317 | |

VI-C The use of approximation error bounds

We finally highlight three possible interesting uses of approximation error bounds determined by the STL error semantics proposed in Section IV. Before getting into the details, recall the notation that we used to represent an approximation error bound: and the fact that we optimize a SRM using a gradient-based optimization process.

The first use of knowing the error bound is that it allows one to terminate the said optimization process prematurely if a given minimum level of actual robustness is guaranteed to be achieved. For example, if we need to ensure for some known value, we can use the simple (also efficient and smooth) termination condition: in the gradient-based optimization process. As a numerical example, consider the (average) case for the SCP1 with the SRM2 reported in Tab. IV and assume we need to ensure . By simply looking at the achieved and values, one can directly conclude that as .

The next use of error bounds is that it allows one to see how uncertainties in the mission space translate into uncertainties in the actual robustness measure. For example, in Tab. IV, under any SCP, notice that in SRM2 and in SRM3 (even though, they both should be zero according to Lms. 3 and 5, respectively). This is because, in our experiments, we have assumed that each predicate value (i.e., ) can be deviated by from its true value due to some source of noise. In other words, we set and for all and , in the experiments (see also (33)). In applications, since these and values can take different values depending on the predicate , it is far from obvious that how such terms will determine an accuracy error bound: , where is the computed/estimated actual robustness measure and is the true actual robustness measure. However, note that we now can address this challenge by simply using the supplementary STL error semantics proposed in Def. 5 with to compute the said accuracy error bound .

Finally, we elaborate on the main intended use of the proposed error bound analysis, i.e., to select (tune) the smooth operator parameters . Intuitively, for this purpose, one can solve an optimization problem of the form:

| (51) |

where is a scaling factor and the second term in the RHS is a regulatory term that ensures the parameters in will not grow indefinitely. Note that this latter term is required because the error bound width term in (51) converges to zero as the parameters in grow (irrespective of the used SRM). Clearly, with respect to the optimization process of the cost function (50), this parameter tuning stage (51) can be executed either off-line or on-line. A situation where off-line parameter tuning has lead to an improved solution is shown in Fig. LABEL:Fig:OfflineTuning. Further, Fig. 5 shows a case where on-line parameter tuning has been used - while the cost function (50) is being optimized using a gradient ascent process. From these motivating results, the importance of the proposed error bound analysis is evident. The future work is directed towards studying efficient and accurate ways to solve this parameter tuning problem (51) and investigating effective ways to combine it with the main optimization problem where the cost function (50) is optimized.

VII Conclusion

In this paper, we motivated the use of smooth robustness measures and gradient-based optimization methods to efficiently solve complex symbolic control problems where the specifications are given using Signal Temporal Logic. First, two existing and two new smooth robustness measures were extensively discussed while establishing their fundamental properties. Then, to characterize the approximation errors associated with different SRMs, we proposed a set of semantic rules that we named STL error semantics. This error analysis, among its other uses, enables one to assess different SRMs and sensibly select SRM parameters. Finally, to efficiently and accurately compute the gradients of different SRMs, we proposed another set of semantic rules that we named STL gradient semantics. Simulation results are provided to highlight the improvements achieved due to these contributions. Ongoing work aims to determine effective ways to use multiple SRMs in gradient-based optimization schemes simultaneously.

References

- [1] A. Pnueli, “The Temporal Logic of Programs,” in Proc of Annual IEEE Symposium on Foundations of Computer Science, vol. 1977-October, 1977, pp. 46–57.

- [2] E. M. Clarke and E. A. Emerson, “Design and Synthesis of Synchronization Skeletons Using Branching Time Temporal Logic,” in Logics of Programs, 1982, pp. 52–71.

- [3] R. L. Schwartz, P. M. Melliar-Smith, and F. H. Vogt, “An Interval Logic for Higher-Level Temporal Reasoning,” in Proc. of 2nd Annual ACM Symposium on Principles of Distributed Computing. New York, New York, USA: ACM Press, 1983, pp. 173–186.

- [4] R. Koymans, “Specifying Real-Time Properties with Metric Temporal Logic,” Real-Time Systems, vol. 2, no. 4, pp. 255–299, 1990.

- [5] C. I. Vasile, D. Aksaray, and C. Belta, “Time Window Temporal Logic,” Theoretical Computer Science, vol. 691, pp. 27–54, 2017.

- [6] O. Maler and D. Nickovic, “Monitoring Temporal Properties of Continuous Signals,” Lecture Notes in Computer Science, vol. 3253, pp. 152–166, 2004.

- [7] N. Mehdipour, C. I. Vasile, and C. Belta, “Arithmetic-Geometric Mean Robustness for Control From Signal Temporal Logic Specifications,” in Proc. of American Control Conf., vol. 2019-July, 2019, pp. 1690–1695.

- [8] I. Haghighi, N. Mehdipour, E. Bartocci, and C. Belta, “Control from Signal Temporal Logic Specifications with Smooth Cumulative Quantitative Semantics,” in Proc. of 58th IEEE Conf. on Decision and Control, vol. 2019-Decem, 2019, pp. 4361–4366.

- [9] Y. V. Pant, H. Abbas, R. A. Quaye, and R. Mangharam, “Fly-by-Logic: Control of Multi-Drone Fleets with Temporal Logic Objectives,” in Proc. of 9th ACM/IEEE Intl. Conf. on Cyber-Physical Systems, 2018, pp. 186–197.

- [10] G. E. Fainekos, S. Sankaranarayanan, K. Ueda, and H. Yazarel, “Verification of Automotive Control Applications Using S-TaLiRo,” in Proc. of American Control Conf., 2012, pp. 3567–3572.

- [11] V. Raman, A. Donzé, D. Sadigh, R. M. Murray, and S. A. Seshia, “Reactive Synthesis from Signal Temporal Logic Specifications,” in Proc. of 18th Intl. Conf. on Hybrid Systems: Computation and Control, 2015, pp. 239–248.

- [12] S. Sankaranarayanan and G. Fainekos, “Simulating Insulin Infusion Pump Risks by In-Silico Modeling of the Insulin-Glucose Regulatory System,” in Proc. of Intl. Conf. on Computational Methods in Systems Biology, 2012, pp. 322–341.

- [13] N. Mehdipour, D. Briers, I. Haghighi, C. M. Glen, M. L. Kemp, and C. Belta, “Spatial-Temporal Pattern Synthesis in a Network of Locally Interacting Cells,” in Proc. of 58th IEEE Conf. on Decision and Control, vol. 2018-Decem, 2019, pp. 3516–3521.

- [14] C. Belta and S. Sadraddini, “Formal Methods for Control Synthesis: An Optimization Perspective,” Annual Review of Control, Robotics, and Autonomous Systems, vol. 2, no. 1, pp. 115–140, 2019.

- [15] S. Saha and A. A. Julius, “An MILP Approach for Real-Time Optimal Controller Synthesis with Metric Temporal Logic Specifications,” in Proc. of the American Control Conf., vol. 2016-July, 2016, pp. 1105–1110.

- [16] H. Abbas and G. Fainekos, “Convergence Proofs for Simulated Annealing Falsification of Safety Properties,” in Proc. of 50th Annual Allerton Conference on Communication, Control, and Computing, 2012, pp. 1594–1601.

- [17] S. M. LaValle and J. James J. Kuffner, “Randomized Kinodynamic Planning,” The International Journal of Robotics Research, vol. 20, no. 5, pp. 378–400, 2001.

- [18] H. Abbas and G. Fainekos, “Computing Descent Direction of MTL Robustness for Non-Linear Systems,” in Proc. of American Control Conf., 2013, pp. 4405–4410.

- [19] H. Abbas, A. Winn, G. Fainekos, and A. A. Julius, “Functional Gradient Descent Method for Metric Temporal Logic Specifications,” in Proc. of American Control Conference, 2014, pp. 2312–2317.

- [20] Y. Gilpin, V. Kurtz, and H. Lin, “A Smooth Robustness Measure of Signal Temporal Logic for Symbolic Control,” IEEE Control Systems Letters, vol. 5, no. 1, pp. 241–246, 2021.

- [21] Y. V. Pant, H. Abbas, and R. Mangharam, “Smooth Operator: Control Using the Smooth Robustness of Temporal Logic,” in Proc. of 1st IEEE Conf. on Control Technology and Applications, vol. 2017-Janua, 2017, pp. 1235–1240.

- [22] L. Lasdon and J. C. Plummer, “Multistart algorithms for seeking feasibility,” Computers and Operations Research, vol. 35, no. 5, pp. 1379–1393, may 2008.

- [23] S. Welikala and C. G. Cassandras, “Distributed Non-Convex Optimization of Multi-Agent Systems Using Boosting Functions to Escape Local Optima,” IEEE Trans. on Automatic Control, 2020.

- [24] H. H. Hoos and T. Stutzle, “Praise for Stochastic Local Search: Foundations and Applications,” in Stochastic Local Search. Morgan Kaufmann Publishers, 2005, pp. i–ii.

- [25] M. Lange, D. Zühlke, O. Holz, and T. Villmann, “Applications of lp-Norms and Their Smooth Approximations for Gradient Based Learning Vector Quantization,” in Proc. of European Symposium on Artificial Neural Networks, 2014, pp. 271–276.

- [26] D. Maclaurin, D. Duvenaud, and R. P. Adams, “Autograd: Effortless Gradients in numpy,” in Proc. of ICML AutoML Workshop, 2015, pp. 1–3.

- [27] P. Virtanen, R. Gommers, T. E. Oliphant, M. Haberland, T. Reddy, D. Cournapeau, E. Burovski, P. Peterson, W. Weckesser, J. Bright, S. J. van der Walt, M. Brett, J. Wilson, K. J. Millman, N. Mayorov, A. R. J. Nelson, E. Jones, R. Kern, E. Larson, C. J. Carey, I. Polat, Y. Feng, E. W. Moore, J. VanderPlas, D. Laxalde, J. Perktold, R. Cimrman, I. Henriksen, E. A. Quintero, C. R. Harris, A. M. Archibald, A. H. Ribeiro, F. Pedregosa, and P. van Mulbregt, “SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python,” Nature Methods, vol. 17, no. 3, pp. 261–272, 2020. [Online]. Available: https://www.nature.com/articles/s41592-019-0686-2