mO Revanth[#1] \NewDocumentCommand\daniel mO Daniel[#1] \NewDocumentCommand\heng mO Heng[#1]

SmartBook: AI-Assisted Situation Report Generation for Intelligence Analysts

Abstract.

Timely and comprehensive understanding of emerging events is crucial for effective decision-making; automating situation report generation can significantly reduce the time, effort, and cost for intelligence analysts. In this work, we identify intelligence analysts’ practices and preferences for AI assistance in situation report generation to guide the design strategies for an effective, trust-building interface that aligns with their thought processes and needs. Next, we introduce SmartBook, an automated framework designed to generate situation reports from large volumes of news data, creating structured reports by automatically discovering event-related strategic questions. These reports include multiple hypotheses (claims), summarized and grounded to sources with factual evidence, to promote in-depth situation understanding. Our comprehensive evaluation of SmartBook, encompassing a user study alongside a content review with an editing study, reveals SmartBook’s effectiveness in generating accurate and relevant situation reports. Qualitative evaluations indicate over 80% of questions probe for strategic information, and over 90% of summaries produce tactically useful content, being consistently favored over summaries from a large language model integrated with web search. The editing study reveals that minimal information is removed from the generated text (under 2.5%), suggesting that SmartBook provides analysts with a valuable foundation for situation reports.

1. Introduction

In today’s rapidly changing world, intelligence analysts face the constant challenge of staying informed amidst an overwhelming influx of news, rumors, and evolving narratives. To understand unfolding events, it is essential to extract common truths from heterogeneous data sources. Currently, intelligence analysts prepare situation reports that provide an overview of the state of affairs, potential risks or threats, and perspectives, along with recommended actions to guide action planning and strategic development (Organization et al., 2020, 2022). Situation reports are expected to present salient information about key events and trends with a clear logical structure to facilitate understanding, tracking, and easy access to information. The downstream consumers of these reports (general public, decision-makers, and stakeholders) are not just looking for raw information; they are seeking clarity, context, and actionable insights. However, these reports, being manually crafted, come with limitations: they are time-consuming to produce (National Academies of Sciences et al., 2019), might exhibit biases (Castonguay, 2013; Belton and Dhami, 2020), factual errors (Sun et al., 2024), and may be restricted due to the large volume of information they must process (Doggette et al., 2020; Young, 2019). Intelligence analysts spend a lot of time sifting through vast and complex data sources (Golson and Ferraro, 2018; Council et al., 2011) (e.g., social media rumor propagation, news outbreak, background statistics, domain expert opinions, etc.), and readers often grapple with information that’s either too dense or not sufficiently comprehensive. Given the sheer volume of information, it is challenging for analysts to do deep analysis and critical thinking, to be able to formulate strategic questions and generate insights. We hypothesize that automatic situation report generation can bridge the gap between reading and writing intelligence information. However, existing summarization approaches (Fabbri et al., 2019; Abdi et al., 2017; Li et al., 2014) generate plain-form text and do not yield the details, structures, and high-level strategic information to support decision-making for the downstream consumer. In particular, current large language model-based approaches such as GPT-4/ChatGPT (OpenAI, 2021) may return no answers or incorrect answers to user questions as they do not update information in real-time and lack fact-checking mechanisms (e.g., Table 1).

To establish a basis for the automated generation of situation reports, we carry out a formative study (see §2.1.1) to grasp intelligence analysts’ expectations from AI-driven systems. Our findings reveal that analysts are open to AI assistance, seek clarity in AI operations, and vary in their desire to control the AI system. To comprehend the composition process of situation reports, we augmented the initial study’s findings with collaborative design sessions (see §2.1.2), aiming to define practical design strategies and recommendations, particularly on how human intelligence analysts navigate, research, and write their reports. On a high level, we infer that the system interface must visually align with analysts’ sequential thought processes, provide transparency of information flow to build trust, and enable analysts to have some level of control over the AI tool’s processes. These conclusions consequently guided the design strategies for automating the generation of situation reports.

| Question: Is Russian positioning forces for possible follow-on actions into Ukraine after the initial invasion? |

|---|

| GPT-4: As an AI language model, I cannot provide real-time information on current events. However, it is essential for intelligence analysts to monitor the activities of various countries, including troop movements and military exercises,to assess potential threats and anticipate future actions. To determine if Russia is positioning forces for possible actions into Ukraine, one must analyze the latest intelligence reports, satellite imagery, and open-source information. Additionally, understanding the political and historical context of the region can provide valuable insight into the intentions of the countries involved. |

| SmartBook: The arrival of Russian forces in Slavutych came after several days of shelling against the city, which is strategically located close to the Dnieper River, about 95 miles (150 kilometers) north of Kyiv and close to the border with Belarus. Russia has been using Belarus as a springboard for many of its air operations in Ukraine, according to intelligence collected by NATO surveillance planes flying over the Polish-Ukrainian border and radar seen by CNN. So far, however, US officials have not seen Belarusian troops “being readied to move into Ukraine” or “that they are moving or are in Ukraine”, a senior US defense official said Monday, adding that the forces inside Ukraine are Russian. |

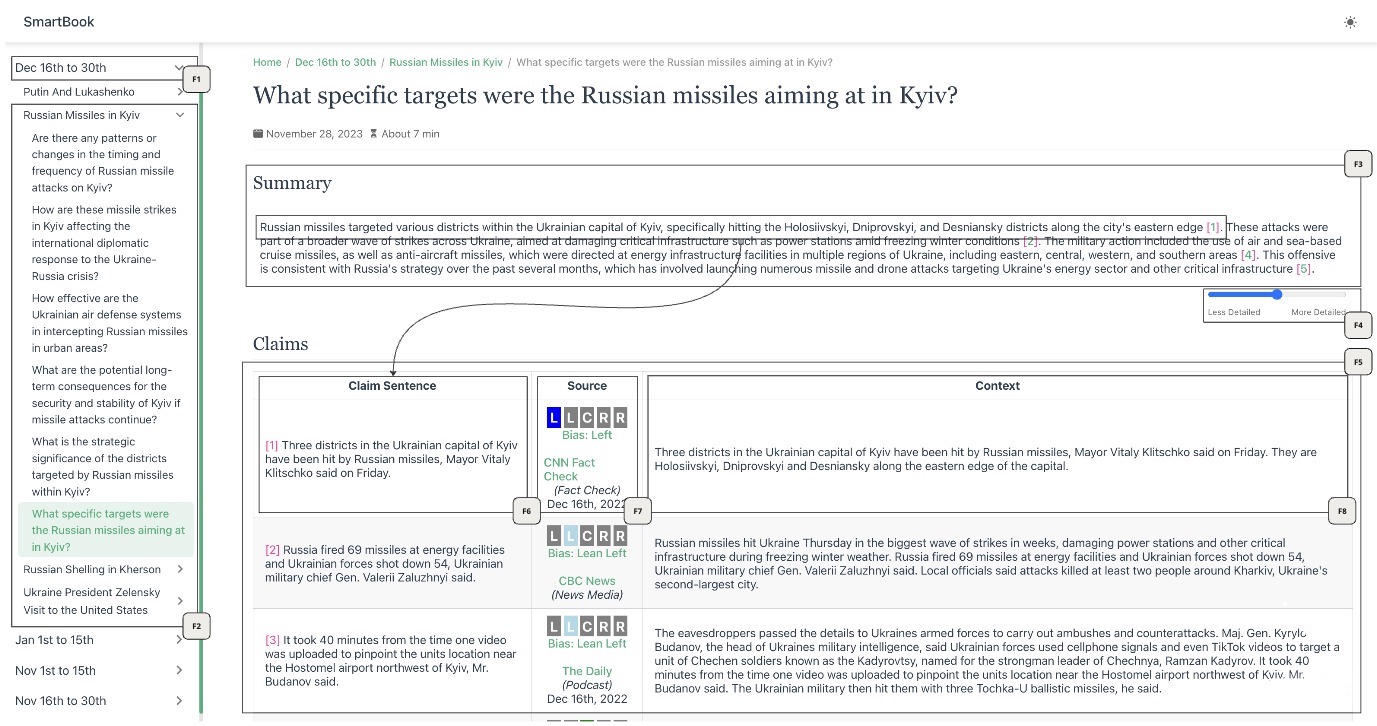

Building on the foundation set by the formative study and collaborative design, we present SmartBook, a framework designed to assist human analysts who author situation reports. SmartBook ingests data from multiple sources to generate a comprehensive report with information updated regularly. Human analysts typically source information by asking questions based on their own understanding of the situation. However, these questions can be static or too vague, and become outdated as the situation evolves rapidly. In contrast, SmartBook automatically discovers questions important for situation analysis and gathers salient information for generating the report. For all questions about a major event, the report contains summaries with tactical information coming from relevant claims, presented with local context and links to source news articles. SmartBook structures data in a manner that mirrors current intelligence analyst workflows–breaking down events into timelines, chapters, and question-based sections. Each section contains a grounded query-focused summary with its relevant claims. This intuitive structure facilitates easier assimilation of information for both reading and writing. Designed with a human-centered approach, our goal is to augment the capabilities of human analysts rather than replace them. Figure 1 shows an example from SmartBook for the Ukraine-Russia crisis, with the structured hierarchy of timespans, chapters, and corresponding sections.

In our comprehensive evaluation of SmartBook, we conducted two complementary studies: a utility study (in §3.1) for assessing usability and interaction, and a content review (in §3.2) for examining the quality of text summaries. The user study, involving intelligence analysts and decision-makers, focused on key research questions to explore SmartBook’s usability, intuitiveness, and effectiveness in situation report generation. Participants engaged in semi-structured interviews and post-study questionnaires, reflecting on their experience with the system. The content review complemented this by assessing the readability, coherence, and relevance of SmartBook-generated reports, including an editing study where an expert analyst revised the summaries to meet their standards of acceptability. The primary goal of this process was to evaluate the viability of using SmartBook as a tool for creating preliminary drafts of situation reports. The findings indicate that the content generated by SmartBook was mostly accurate, requiring minimal edits to correct a few factual errors. However, most of the effort in the editing process was focused on adding evidence to support the summaries. The results of the editing study suggest that while SmartBook provides a solid foundation, it significantly benefits from human refinement. The contributions of this work are as follows:

-

•

A comprehensive formative study and collaborative design process for identifying the design strategies to guide the automated generation of situation reports.

-

•

SmartBook, an automated framework that generates comprehensive, up-to-date situation reports from various sources and presents them in an intuitive and user-friendly manner. It identifies critical strategic questions, ensuring that downstream readers receive targeted, relevant, and evidence-grounded information to aid their decision-making processes.

-

•

A thorough utility evaluation involving intelligence analysts and decision-makers investigating the usability of the system.

-

•

A content review to grade the quality of the information generated, along with an editing study to understand how viable SmartBook is for producing preliminary first drafts of situation reports.

2. SmartBook Framework

2.1. System Design

The development of SmartBook, an AI-driven system for generating situation reports, followed an iterative human-centered design approach. The initial phase focused on designing and developing the backend workflow, along with a preliminary frontend interface, and included multiple evaluation stages. Throughout this process, numerous presentations were made to stakeholders in both government and the private sector, which provided valuable feedback. The second phase involved engaging intelligence analysts and decision-makers through formative and collaborative design studies to better understand their needs and expectations. The formative study phase (§2.1.1) involves semi-structured interviews to gather insights from users, involving detailed analysis of expectations from AI systems. Subsequently, the collaborative design phase (§2.1.2) brought users and developers together to refine and validate the initial design concepts. Through interactive sessions, participants provided real-time feedback on prototype functionalities to obtain precise requirements for AI assistance in report generation. The culmination of these efforts is a set of design strategies that ensure SmartBook incorporates a user-centric design to serve the practical needs of intelligence analysts.

2.1.1. Formative Study

The formative study aims to gather information on the general needs and expectations from intelligence analysts for AI-driven systems. The study was conducted on ten intelligence analysts with experience in government and military roles (details on recruitment in Supplementary §4.1). Over a two-week period, semi-structured interviews were conducted with these analysts to examine their understanding, perspectives, and recommendations regarding AI use in professional settings. The study highlighted emerging themes related to analysts’ perceptions and expectations of AI-assisted authoring tools, which we briefly describe below:

-

•

Viewing technology as a means to enhance human capability: An overwhelming majority (9 out of 10) emphasized the crucial role of AI in enhancing their capabilities, with these tools regarded not as mere process accelerators, but as essential elements that enrich their work by improving research efficiency, idea generation, and clarity of information. This perspective contrasts with the simplistic media depiction of these technologies as mere replacements for human effort.

-

•

Trusting and relying on machines, as with humans: The majority of participants (8 out of 10) exhibited a tendency to attribute human-like qualities of trust and reliability to AI systems. The criteria for trusting AI closely resembled those for human interactions: the ability to provide dependable information, transparency in reasoning, and a foundation in verifiable facts. Interestingly, analysts did not set higher standards for AI than for human colleague. This parity in trust and reliability criteria suggests that participants viewed AI as an equal collaborative partner, assessing its competence and trustworthiness on the same grounds as a human team member.

-

•

Training and guiding AI: Our study identified a split in intelligence analysts’ perspectives on their role in training and guiding AI systems. Four out of ten participants advocated for substantial control over AI, emphasizing the need for an interactive system that allows them to influence everything from information source selection to narrative shaping in reports. In contrast, the majority (six out of ten) favored a more hands-off approach, highlighting that situation report creation follows well-established, standardized procedures suitable for AI implementation. They perceived AI involvement as an extension of routine oversight, akin to reviewing a junior colleague’s work.

2.1.2. Collaborative Design

To gain an operational understanding of intelligence analysis process and generating situation reports, we expanded the design opportunities identified in the formative study (§2.1.1) with subsequent collaborative design sessions with the ten analysts. The goal was to capture tangible design strategies and recommendations from users about how they, as intelligence analysts, navigate, research, and author their situation reports.

We conducted study sessions with the analysts, with each session consisting a workflow review using storyboards and hands-on training with a simulation report exercise. In our study, participants engaged with a low-fidelity storyboard (shown in Figure 2), where each panel depicted a distinct phase in situation report creation. Participants were tasked with providing detailed descriptions of each storyboard panel to ensure comprehension of the depicted scenario and workflow. Participants simulated each storyboard step using sample situations to gain practical workflow experience, and were advised to utilize diverse resources, including web search engines like Google and Bing, and Large Language Models (LLMs) such as ChatGPT (Achiam et al., 2023), for task completion.

Data analysis from the collaborative design sessions showed three main themes: enhancing analytical efficiency, transparency in AI systems, and customization flexibility. Participants highlighted the need for interfaces that reflect their mental models, reducing cognitive load and allowing them to focus on strategic aspects. There was a significant emphasis on understanding AI systems’ underlying logic for trust, with a preference for transparent methods and traceable data sources to verify the credibility of automated outputs. Additionally, users expressed a desire for tools that support varying analytical styles and complexities, and that can integrate information from diverse sources to provide a comprehensive analysis.

From the findings of the formative study and the collaborative design above, we identified the following design strategies:

-

•

DS1: Given the emphasis on reducing cognitive load and enhancing analytical efficiency, the system will be designed with an interface, that mirrors intelligence analysts’ natural processes of data analysis and report generation.

-

•

DS2: To increase efficiency, the system will integrate features to automate time-intensive tasks such as question curation and preliminary research, thereby reducing analysts’ manual workload and enabling greater focus on strategic analysis and decision-making.

-

•

DS3: The design, addressing the need for trust and reliability, will convey clear explanations of the system’s data processing algorithms and criteria. This includes transparent data sourcing, providing references within reports, and tools for users to easily understand and verify the system’s conclusions. The design will also facilitate incremental trust-building through consistent and validated performance over time.

-

•

DS4: Addressing the themes of customization and flexibility, the system will offer a high degree of adaptability to accommodate various analytical styles and levels of detail in reporting. It will include features for adjusting the depth of analysis, focusing on specific data sets, and seamlessly integrating various data sources.

2.2. System Architecture

The above four design strategies helped shape SmartBook, an AI-assisted system for situation report generation that provides analysts with a first-draft report to work from as they respond to time-critical information requirements on emerging events. SmartBook consists of: 1) An intuitive user interface (shown in Figure 3) with design strategies from §2.1.2, and 2) a back-end framework (shown in Figure 4) that, when given a collection of documents from a variety of news sources, automatically generates a situation report.

Our automatic situation report is organized into coherent, chronological timelines spanning two weeks each, enhancing the tracking and comprehension of events’ developments. Within these timelines, major events are identified by clustering news articles, forming the basis for subsequent chapters (see §2.2.1). To guide detailed chapter analysis, we incorporate a logical structure with automatically generated section headings in the form of strategic questions covering various aspects of each major event (details in §2.2.2). SmartBook generates content addressing these questions from a strategic perspective, by pinpointing relevant claims in news articles (see §2.2.3). Each section contains query-focused summaries that answer the strategic questions, providing a comprehensive view of the event’s contexts and implications (see §2.2.4). These summaries include citation links that allow for factual verification and cross-checking by experts.

SmartBook is designed for efficiency, utilizing smaller models (Liu et al., 2019; Lewis et al., 2020) for tasks that have training data available, such as event headline generation, duplicate question detection, and claim extraction. Conversely, for more complex tasks—such as generating long-form summaries with citations or identifying strategic questions, we employ large language models (OpenAI, 2021; Achiam et al., 2023). In this section, we describe the various components within SmartBook, along with emphasizing the advantages of each aspect of SmartBook’s design for users (i.e., intelligence analysts) and for recipients of the final SmartBook report (i.e., decision-makers), who both initiate information requirements and are downstream readers.

2.2.1. Major Events within Timespans as Chapters

Situation reports cover event progressions over considerably long periods. Hence, it is beneficial to organize such reports in the form of timelines (F1 in Fig. 3), which enables seamless report updates (Ma et al., 2023) with new events and helps facilitate (Singh et al., 2016) users tracking and understanding of situation context (informed by DS1). Timelines aid intelligence analysts in understanding event progression and predicting future trends by organizing events chronologically and highlighting cause-and-effect relationships. For readers, especially those less familiar with the subject, timelines provide a visual guide to easily grasp the sequence and significance of events in a scenario. Our automatic situation report has timelines to provide a coherent, chronological representation of event developments (DS1, DS2).

In each timespan, we first identify major events by clustering daily news summaries from the period into major event groups using an agglomerative hierarchical clustering algorithm (Jain and Dubes, 1988) based on term frequency-inverse document frequency (TF-IDF) scores (Sparck Jones, 1972). Finally, we are left with clusters of news snippets, each providing a focused view of a major event. However, since news summary snippets are condensed in detail, we improve the comprehensiveness of each event cluster by expanding the news corpus, as described next. First, to create a chapter name for SmartBook and also use it for additional news article retrieval, we generate a concise headline for each event cluster. To achieve this, we utilize a sequence-to-sequence transformer-based (Vaswani et al., 2017) language model, BART (Lewis et al., 2020), that takes the concatenated title and text from all the news snippets within the event cluster as input and generates a short event heading. We made use of the model trained on the NewsHead dataset (Gu et al., 2020) for generating multi-document headlines. In that dataset, each cluster contained up to five news articles, and a crowd-sourced headline of up to 35 characters described the major information in that cluster. We then use these chapter names as the query to retrieve additional relevant news articles via Google News.

2.2.2. Strategic Questions as Section Headings

A situation report should have a logical structure and descriptive section titles (F2 in Fig. 3) for clarity and easy access to information for intelligence analysts (DS2). SmartBook not only describes event details in each chapter but also aims to present strategic insights that support decision-making and policy planning. To guide such detailed analysis, we incorporate a logical structure by automatically generating section headings in the form of strategic questions relating to each major event. These questions cover insightful details such as the motivations behind actions in an event and its potential future consequences.

Recent work (Sharma, 2021; Wang et al., 2022a) has shown that LLMs are capable of generating comprehensive, natural questions that require long-form and informative answers, in comparison to existing approaches (Murakhovs’ka et al., 2022; Du et al., 2017) that mainly generate questions designed for short and specific answers. In this work, we direct the LLM (GPT-4) to generate strategic questions about specific events, using news articles from the event cluster to anchor the context and reduce model-generated hallucinations (Ji et al., [n. d.]; Maynez et al., 2020). To ensure diversity in the generated questions, we sample multiple question sets using nucleus sampling (Holtzman et al., 2019). Our analysis reveals that questions may occasionally be repeated across different sets, as depicted in Figure 4 where duplicates are highlighted in blue. To address this, we perform question de-duplication using a RoBERTa-large (Liu et al., 2019) model trained on the Quora Duplicate Question Pairs dataset, thereby consolidating the sets into a singular, diverse collection of strategic questions relevant to the event.

2.2.3. Extraction of Claims and Hypotheses

Intelligence analysts, given the high stakes nature of their work but limited time, need systems that quickly identify key information in documents (DS2). This enables them to focus on urgent matters without sorting through irrelevant data. Hence, automated situation report generation should be able to identify and extract the most scenario-relevant and crucial information across multiple documents (F5 in Fig. 3). Readers of the situation report benefit from information salience because they are presented with a concise, relevant overview of a situation. Essential points need to be highlighted, to enhance readability and clarity. Moreover, we also present the bias of each news source (taken from AllSides) to help analysts consider information presented from different perspectives.

Providing readers with a comprehensive understanding of event context requires foraging for different claims and hypotheses from the source documents (i.e., news articles) that help explain a situation (Toniolo et al., 2023). We adopt a Question Answering (QA) formulation to identify claims relevant to a given strategic question, driven by the ability of directed queries to systematically extract relevant claims from news articles, as demonstrated in recent research (Reddy et al., 2022b, a). Our QA pipeline utilizes a transformer-based RoBERTa-large encoder model (Liu et al., 2019) that has been trained on SQuAD 2.0 (Rajpurkar et al., 2018) and Natural Questions (Kwiatkowski et al., 2019). The model takes as input the news corpus split into snippets along with the strategic question, and outputs answer extractions to these questions. The corresponding sentences that contain these answers are considered as the extracted claims. However, the risk of high-confidence false positives (Chakravarti and Sil, 2021) necessitates validation (Reddy et al., 2020; Zhang et al., 2021) of these answers. To address this, we utilize an answer sentence selection model (Garg et al., 2020) that verifies each context against the strategic question. The model is a binary classifier with a RoBERTa-large backbone trained on datasets such as Natural Questions (Kwiatkowski et al., 2019) and WikiQA (Yang et al., 2015), and outputs a validation score ranging from 0 (incorrect) to 1 (correct), which is used to select the top-5 relevant contexts for summarization.

2.2.4. Grounded Summaries as Section Content

Considering the issue of hallucination in LLM-based summarization (Ji et al., 2023; Li et al., 2023a; Bang et al., 2023), factuality is far more important than creativity for situation report generation. A reliable situation report must be anchored in verifiable sources to ensure credibility (DS3). This supports analysts in drawing robust, evidence-based conclusions, and the embedded links act as a springboard to more extensive research for readers wanting to dive deeper (F6, F7, F8 in Fig. 3). Additionally, we offer summaries with varied detail levels—brief (2-3 sentences), standard (4-6 sentences), and extended (2 paragraphs)—to cater to different reader preferences (F4 in Fig. 3) (DS4).

Using strategic questions obtained for each chapter as section headings, we incorporate query-focused summarization to generate each section’s content. A concise summary is generated for each section in SmartBook based on the relevant claim contexts (F3 in Fig 3). Recent work (Goyal et al., 2022; Bhaskar et al., 2022; Reddy et al., 2022c) has shown that humans overwhelmingly prefer summaries from prompt-based LLMs (Brown et al., 2020; Chowdhery et al., 2022) over models fine-tuned on article-summary pairs (Lewis et al., 2020; Zhang et al., 2020; Liu et al., 2022), due to better controllability and easier extension to novel scenarios. For summary generation, we feed the LLM (GPT-4) with the top-5 most relevant contexts (from §2.2.3) and instructions to summarize with respect to the given strategic question and include citations. This approach not only aids in maintaining accuracy by grounding on claim contexts but also enhances the trustworthiness of the summaries by allowing verification against the cited sources.

3. Results

To comprehensively evaluate SmartBook and its efficacy in aiding intelligence analysis, we performed three distinct but interrelated studies: a utility evaluation, a content review and an editing study. The utility evaluation (in (§3.1) aimed to answer three research questions concerning SmartBook’s usability, focusing on its effectiveness for intelligence analysts and decision-makers in generating and interacting with situation reports. Complementing this, the content review (in §3.2) assessed the quality of SmartBook’s automatically generated questions and summaries, focusing on readability, coherence, and relevance. Finally, the editing study (in §3.3) involved an expert analyst editing the generated reports to assess their value as a preliminary first draft.

3.1. Utility Study: Evaluating the System

During this user study, we conducted an interview with semi-structured questions and a post-study questionnaire on the usability of the SmartBook. Each user study session was structured in four segments: (i) an introductory overview, (ii) a free-form investigation, (iii) a guided exploration, and (iv) a concluding reflective discussion. The participants were ten intelligence analysts (from §2.1.1) and two decision-makers from Canadian government boards. These decision-makers engaged in the initial qualitative study but did not answer subsequent post-study questionnaires, due to time constraints. The study investigated the following research questions:

-

•

RQ1: How do intelligence analysts interact and leverage the features within SmartBook?

-

•

RQ2: Do intelligence analysts find SmartBook intuitive, usable, trustable and useful?

-

•

RQ3: How do decision-makers interact, perceive and use SmartBook?

Upon commencement of the study, (i) participants received a concise introduction to SmartBook, outlining its core premise. This orientation was designed to acquaint them with the system without biasing their exploration. Subsequently, (ii) participants were invited to freely investigate SmartBook, with the specific task of exploring a minimum of three questions across five chapters of their choosing. This approach afforded substantial freedom, enabling interactions with the user interface that reflected their natural inclinations and interests. Following the free-form investigation, participants were systematically introduced (iii) to the SmartBook framework. This process involved tracing the journey from a chosen question related to a specific event within a designated timespan, to the corresponding claims, contexts, and sources, resulting in a summarized answer. Subsequently, a semi-structured interview (iv) was conducted to gather reflective feedback on the participants’ experience with SmartBook, focusing on its efficacy and potential areas of enhancement. Finally, to conclude the session, participants were asked to complete a post-study questionnaire. This questionnaire was focused on assessing the usability of SmartBook, gathering quantitative data to complement the qualitative insights gained from the semi-structured interviews. Upon collecting, discussing and iterating on the data, behaviors and insights were merged into the following themes:

3.1.1. UI Understandability and Interaction

The structured layout of the user interface (UI) was notably effective, as seen in the free-form investigation (ii) with a 100% feature discovery rate (10 out of 10 participants). All participants successfully engaged with key features, underscoring the UI’s design intuitiveness. This high feature discovery rate indicates that the UI aligns with users’ cognitive patterns, facilitating intuitive interaction without extensive training, providing us with a positive response to RQ1. This sentiment was further emphasized in the post-study questionnaire. As seen in the results in Figure 5, 70% of participants strongly agreed that most intelligence analysts would learn to use the system very quickly, while 80% strongly disagreed that the system was difficult to navigate and use. However, we observed mixed views on UI flexibility, specifically in personalization and customization. While most found the tool easily integrateable into their workflow, a minority (30%) remained neutral. When further prompted, two participants described the UI as a “one size fits all” solution, lacking personalization.

3.1.2. Building Trust on SmartBook

In the study, trust in the SmartBook system developed progressively, resembling the formation of trust in a human analyst. Initially, users exhibited skepticism, thoroughly scrutinizing the system’s sources and assertions. This included validating the authenticity of sources, the accuracy and pertinence of ratings, and the correct representation of context. As users became more acquainted with SmartBook and evaluated its reliability, their dependence on extensive source verification lessened. As can be seen in Figure 5, three out of ten participants did not feel the need to conduct additional research beyond the presented information to trust the tool, while another three felt additional research was necessary. This trust was found to be context-dependent, with two participants noting that their trust varied based on the “impact severity” or the potential negative consequences of disseminating incorrect information. Nonetheless, a majority (eight out of ten) concurred that the information provided was accurate and reliable, a positive answer to RQ2.

3.1.3. Perceived Benefits for Intelligence Analysts

The primary benefit identified was the substantial reduction in time and effort required for compiling and analyzing complex data. SmartBook’s automation of initial report generation processes, such as data collection and summarization, was highly valued by analysts as it allowed more focus on thorough analysis and strategic planning. As seen in Figure 5, all participants agreed on the tool’s utility in assisting intelligence analysts in creating situation reports, expressing satisfaction with the system-generated reports, thereby providing a positive response to RQ2. Regarding the necessity of significant edits to the system-generated reports, half participants suggested that this need was not inherently due to report deficiencies but varied based on the report’s intended purpose or audience. We later conducted a study (described in §3.3) to understand the extent of edits needed.

3.1.4. SmartBook as a Learning Tool for Decision-Makers

Decision-makers highly valued SmartBook’s ability to rapidly deliver accurate and easily digestible information. Their primary engagement with the interface centered around utilizing the summaries, to learn about different topics in various degrees. The system’s effectiveness in simplifying complex data into structured, clear formats was particularly appreciated, to help aid in swift understanding and decision-making processes. Although data lineage and source transparency were recognized as important, these were considered secondary to the primary need for timely and format-specific information delivery. While SmartBook’s target users are intelligence analysts, the decision-makers’ view highlights SmartBook’s dual functionality as both an analytical tool and a decision-support system, providing a key capability for the high-paced and information-intensive needs of decision-makers, addressing RQ3.

3.2. Content Review: Evaluating the Generated Content

Our content review study primarily involves evaluation of the quality of automatically generated strategic questions and summaries within SmartBook. The studies involved two participant groups: one senior expert intelligence analyst and six text evaluators. The expert analyst, affiliated with a US national defense and security firm, possessed a decade of experience in intelligence analysis, training, and AI-based tool assessment. The text evaluators, all fluent in English and US-based, involved undergraduate and graduate students with experience in Natural Language Processing.

3.2.1. Evaluation of Strategic Questions

Evaluation of the quality of strategic questions, which are used as section headings in SmartBook, is done by drawing parallels to the traditional construction process of a situation report, which involves the participation of both senior and junior analysts. A senior analyst’s role is usually to come up with strategic questions, which are then passed onto a junior analyst. The junior analyst then gathers tactical information that can help answer or provide more background to the strategic questions. Our human evaluation of the questions within SmartBook measures the following aspects, based on guidelines defined by the expert intelligence analyst:

-

•

Strategic Importance: Evaluating the strategic importance of a question requires introspection on whether the question provided within SmartBook probes for an insightful aspect of the event. The scoring rubric involves marking the question as one of three categories, namely, ‘Not Strategic’, ‘Some Strategic Value’, and ‘Definitely Strategic’. This metric is based on the level of speculative nature, such as questioning the background/motives/reasons behind actions taken by actors (governments/military, etc) involved in the event.

-

•

Tactical Information: Another dimension of evaluating the quality of a question is how much relevant tactical information can be gathered using that question. In this case, the question is evaluated based on its corresponding SmartBook summary, in terms of whether the question-relevant tactical information in the summary is helpful for an analyst to gain deeper insights into the situation. Tactical information is defined as content that is neither obvious nor trivially obtained (e.g. cannot be obtained from a news header). The scoring rubric is categorized as ‘No information is tactical’, ‘Some information is tactical’, and ‘Most information is tactical’. Table 6 in supplementary shows examples for summaries with corresponding tactical information.

We randomly selected 25 chapters from SmartBook, comprising 125 strategic questions, with each question evaluated by three annotators. Results show that most questions are strategic (detailed split in Figure 7 in supplementary), with at least 82% having some strategic value. Further, we see that these questions can help gather relevant tactical information in roughly 93% of the cases, as judged by the annotators that most summaries to these questions have tactically relevant information. When assessing the diversity of strategic questions within each chapter, evaluators noted that 64% of chapters contained questions addressing different aspects, 28% had up to two questions on similar aspects, and 8% included more than two questions on similar aspects.

3.2.2. Evaluation of SmartBook Summaries

Next, we assess the quality of text summaries generated by SmartBook, which uses question-driven claim extraction to identify and summarize relevant information. For baseline comparisons, we utilize two methods: firstly, a query-focused summarization baseline that processes entire news articles directly with an LLM to generate summaries for the strategic question as the query. This approach uses the same LLM and prompt as in SmartBook, except the entire news article texts are passed as input context without an explicit claim extraction step. Secondly, we compare against a web search + LLM baseline, where relevant web pages sourced from the internet serve as the input context, which are then summarized by an LLM. This method simulates using an LLM-enabled web search engine (like perplexity.ai), incorporating the strategic question as the query along with a phrase “concerning the Ukraine-Russia crisis between timeline”. Since the LLM-enabled web search was using GPT-3.5 (OpenAI, 2021) at the time of experiments, we used GPT-3.5 as the LLM in SmartBook and other baselines for a fair comparison. We randomly select summaries corresponding to 50 strategic questions from SmartBook to perform the evaluation.

| Summary-Type | Background | Coherence | Relevance | Usefulness |

|---|---|---|---|---|

| (1) Query-Focused | News | 4.39 | 3.85 | 3.77 |

| (2) Web Search + LLM | Web | 4.44 | 4.07 | 3.83 |

| (3) SmartBook | News | 4.56 | 4.23 | 4.17 |

| Question: How have kamikaze drones changed the nature of the Russia-Ukraine conflict? | ||

| Query-Focused | Web Search + LLM | SmartBook |

| Kamikaze drones, or suicide drones, have been used by Russia in its attacks on Ukrainian cities such as Kyiv, Vinnytsia and Odesa. They are known as a “loitering munition” because they are capable of waiting in an area identified as a potential target and only strike once an enemy asset is identified. Russia is using Iranian-made Shahed-136 drones, which have a payload of approximately 50 kilograms and are hard to detect due to their small size. Ukraine has responded by developing an app that civilians can use to report sightings of incoming drones and missiles, and increasing the proportion shot down before they hit the ground. | Russia and Ukraine have been using Iranian-made Shahed-136 drones in the conflict. These drones are known as ‘kamikaze’ drones due to their ability to self-destruct on impact. The use of these drones has been highlighted by Russia’s growing reliance on them and their use as both military weapons and psychological weapons. On October 13, 2022, kamikaze drones and missiles hit north and south Ukraine, though the Kremlin denied any involvement from President Putin in talks between Russia and the West. | The Russia-Ukraine conflict has seen a paradigm shift in the nature of Russian tactics, with the increased reliance of kamikaze drones over artillery fire. These drones, which are designed to crash into a target and explode, can spray potentially deadly debris and are being used to plug gaps in the deployment of Russian forces along the front line. Russia is reportedly using Iranian-made Shahed series drones, which are showcased at Kashan Airfield south of Tehran. This shift in tactics has seen an escalation in the aerial bombardment of densely populated urban areas and an attack on critical infrastructure and power sources. |

For each generated summary, three evaluators are asked to assess the coherence, relevance, and usefulness with scores ranging from 1 (worst) to 5 (best). Simply, coherence measures the quality of all summary sentences collectively regardless of the question. Relevance quantifies whether the summary contains key points that answer the given question. We define usefulness as an indication of whether the summary provides non-trivial and insightful information for analysts, and suggests the breadth and depth of the provided key points. Table 2 shows results from the evaluation study. We observe that SmartBook outperforms alternative competitive strategies in coherence, relevance, and usefulness. The advantage of the question-driven claim extraction in SmartBook is evident, yielding significantly more relevant summaries compared to the direct query-focused summarization without such a step (row (3) vs (1)). Additionally, summaries generated using information sourced from the Web are less useful than those generated through SmartBook’s news-driven approach (row (2) vs (3)). Table 3 displays an example showing the difference in outputs. Overall, the evaluation demonstrates that across the metrics, SmartBook excels over the baselines in providing high-quality summaries.

We also evaluated the citation quality in SmartBook’s summaries using metrics for citation precision and recall as defined by Gao et al. (2023b). Citation recall assesses whether the output sentence is fully supported by the cited context, whereas citation precision identifies any irrelevant citations. These metrics were calculated using the 11B-parameter TRUE model (Honovich et al., 2022), which is trained on a collection of natural language inference datasets, and is frequently employed to evaluate attribution accuracy (Bohnet et al., 2022; Gao et al., 2023a). Overall, 97% of the sentences in SmartBook’s summaries included citations, while 29.5% had multiple citations. We observed a citation precision and recall of 64.7% and 69.2%, respectively.

3.3. Editing Study: Evaluation as a Preliminary Draft

SmartBook serves as an initial draft for intelligence analysts to refine or adapt to their specific needs. We evaluate SmartBook’s effectiveness in producing situation reports through an editing study with an expert analyst, by measuring how much of the content the analyst directly accepts or further edits. The analyst actively explored SmartBook’s features and subsequently edited 94 randomly selected summaries from SmartBook-generated situation reports, until they met professional intelligence reporting standards.

Based on the revised summaries by the expert analyst, we assessed the alterations made to the original content. We quantified these changes using token-overlap metrics like BLEU (Papineni et al., 2002) and ROUGE (Lin, 2004) scores, and Levenshtein edit distance, which calculates character-level modifications needed to transform the original into the revised summary. Empirical results show high token overlap between the generated and post-edited texts, with BLEU and ROUGE-L scores respectively at 59.0% and 74.1%, indicating that the SmartBook-generated reports are of sufficiently good quality and extensive human expert revision may not be necessary. However, we acknowledge that a gap still exists between the automatically generated summaries and human expert curation, as the Levenshtein edit distance computed at the character level is 34.4%. Notably, 15% of the generated summaries had no edits made by the expert analyst.

Further analysis revealed that the analyst predominantly added rather than removed content, with insertions at 49.6% and deletions at 2.3%. This suggests that automated summary generation may generally need to be more detailed.

| SmartBook Summary: Russia has reportedly stepped up its use of kamikaze drones in its assault against Ukraine. The increased reliance on kamikaze drones over artillery fire likely signals a paradigm shift in Russian tactics a shift introduced to counter high mobility offensive probing by Ukrainian forces. |

| Analyst-edited Summary: Russia has reportedly stepped up its use of kamikaze drones in its assault against Ukraine. The aircraft are called kamikaze drones because they attack once and don’t come back. The increased reliance on kamikaze drones over artillery fire likely signals a paradigm shift in Russian tactics - a shift introduced to counter high mobility offensive probing by Ukrainian forces. Their low price means the drones can be deployed in large numbers and they hover before they strike, so they have a psychological effect on civilians as they watch and wait for them to strike. These drones allow Russia to target Ukrainians far away from the front line, away from the primary battle space. The emergence of swarms of drones in Ukraine is part of a shift in the nature of the Russian offensive, which some speculate indicates that Moscow may be running low on long-range missiles. |

Table 4 shows an example of edits (in color) made by an expert analyst for a machine-generated summary in SmartBook. We can see here that the human analyst added additional tactical information (in blue) to elaborate on certain aspects (e.g. what is special about the “kamikaze” type of drone). Further, the analystalso added some interesting insights (in green) based on the information in the summary. Overall, this shows that SmartBook provides a good starting point for analysts to expand upon for the generation of situation reports.

It is noteworthy that 15% of summaries produced by SmartBook needed no modifications, highlighting its proficiency in creating acceptable reports in some scenarios without human intervention. This suggests that as technology advances and iterative refinements are applied, this rate will likely improve, reducing the workload for analysts in the future. To gain a better understanding of the different types of errors in the remaining summaries, we asked the expert analyst to categorize the errors within them. The analyst was also shown the strategic question and the corresponding extracted contexts that were used to automatically generate the summary. The summary errors were categorized as follows:

-

•

No relevant contexts: None of the extracted contexts are relevant to the question (and thereby the summary is expected to be irrelevant too).

-

•

Inaccurate information in summary: Summary has incorrect information, that is not reflective of the underlying input contexts.

-

•

Incoherent summary: Summary is incomprehensible and unclear.

-

•

Incomplete summary: Important information in the input contexts is missing in the summary.

-

•

Irrelevant information in summary: Summary has material that is not relevant to the question, despite some extracted contexts being relevant.

Figure 6 shows the distribution of error categories for the summaries. It can be seen that incompleteness of summaries is a predominant error, with more than 50% of the summaries missing important information or not being sufficiently complete. While the predominance of incomplete summaries could be a concern, this can also be framed positively: it represents a conservative approach ensuring that SmartBook does not over-extend based on limited data, and instead offers a foundational understanding. Other errors corresponded to summaries with inaccurate (18.6%) or irrelevant (14.6%) information. Directing the LLMs to reference input documents while generating summaries does improve factuality, aligning with recent findings (Gao et al., 2023c). Nevertheless, LLMs’ tendency to hallucinate (Ji et al., [n. d.]; Tam et al., 2022), calls for validation and cross-referencing techniques (discussed in §3.4.2) to address these issues. Finally, we see that very few summaries were judged incoherent, as expected given that large language models have been shown (Goyal et al., 2022; Zhang et al., 2023) to generate fluent and easy-to-read output.

3.4. Discussion

Amid advancements in AI-assisted writing tools (Wang et al., 2019, 2020, 2023; Cardon et al., 2023) tailored for diverse end-users, including academics (Gero et al., 2022), screenwriters (Mirowski et al., 2023) and developers (Chen et al., 2021), SmartBook introduces a specialized automated framework for generating situation reports for intelligence analysts. Unlike previous systems such as CoAuthor (Lee et al., 2022), Creative Help (Roemmele and Gordon, 2015), Writing Buddy (Samuel et al., 2016) and WordCraft (Yuan et al., 2022), which support collaborative and creative tasks, SmartBook focuses on factual accuracy, analytical depth, and efficiency in structured, data-driven tasks. This distinct approach emphasizes less creative interaction and more rigorous information processing, aligning SmartBook closely with the needs of intelligence analysis. However, the design also stands to benefit from iterative refinement (discussed in §3.4.2), exploring deeper integration into analysts’ workflows.

SmartBook, with its modular design, automates the generation of situation reports efficiently across various contexts, including geopolitical, environmental, or humanitarian situations. Unlike traditional approaches that require substantial research and domain-specific expertise, SmartBook can adapt to new domains with minimal configuration, thereby delivering accurate reports in diverse and rapidly changing global contexts. SmartBook’s efficacy was primarily evaluated on news from the Ukraine-Russia military conflict. Here, we briefly explore SmartBook’s applicability in a humanitarian scenario–the Turkey-Syria earthquake. By modifying the input data to identify key events in the specified time window (February 6-13, 2023), SmartBook produced relevant reports detailing the earthquake’s aftermath (link), international aid (link), rising casualties (link), and notable incidents like the disappearance of a Ghanaian soccer star (link). This adaptability from military to humanitarian crises highlights SmartBook’s robustness and versatility. It also emphasizes the importance of the source articles and suggests that, with mission-relevant data input, SmartBook has the potential to be an invaluable tool for analysts across diverse scenarios.

3.4.1. Outlook

We have developed SmartBook, an innovative framework that generates situation reports with comprehensive, current, and factually-grounded information extracted from diverse sources. SmartBook goes beyond mere information aggregation; it presents chronologically ordered sequences of topically summarized events extracted from news sources and placed into a UI layout structure that aligns with the workflow of intelligence analysis. Throughout the development of SmartBook, from its conceptual design to its evaluation, we engaged analysts to ensure the tool meets their practical needs and enhances their workflow. Our formative study and collaborative design efforts have been guided by the needs of intelligence analysts, particularly in addressing the challenge of data overload. The findings from these studies indicate that analysts are cautiously optimistic about integrating AI assistance into their processes, expressing a clear desire to shape a future in which AI tools can both adapt to the evolving data landscape and personalize to their individual analytical techniques.

SmartBook establishes a foundation for situation understanding in a variety of scenarios. However, history repeats itself, sometimes in a bad way. Historical patterns, particularly those leading to natural or man-made disasters, can be a useful signal to trigger proactive measures for crisis mitigation. Building on prior research in event prediction (Li et al., 2021; Wang et al., 2022b; Li et al., 2023b), SmartBook can guide the development of a news simulator that forecasts event outcomes in crisis situations. This tool would be invaluable for humanitarian workers and policymakers to exercise reality checks, enhancing their capacity to prevent and respond to disasters effectively.

3.4.2. Limitations and Future Extensions

While SmartBook represents a significant advancement in the automated generation of situation reports, it is essential to acknowledge certain limitations that stem from both the technical aspects of the system and the scope of its application. These include (i) unverified news source credibility, (ii) potential inaccuracies in reflecting source material despite citations, and (iii) user studies focused mainly on military intelligence analysts, which may not represent the needs of a wider analytical audience. Recognizing these limitations is crucial for guiding future improvements and ensuring the framework’s applicability across diverse intelligence analysis sectors. We elaborate on the limitations in more detail in the supplementary material. Here, we provide key future extensions designed to elevate SmartBook’s utility as a comprehensive, unbiased, and reliable tool for situation report generation in intelligence analysis:

-

•

Incorporating Multimodal, Multilingual Information: Intelligence analysis increasingly relies on integrating diverse data types such as text, images, videos, and audio to understand global events comprehensively. We aim to enhance SmartBook’s situation reports by correlating textual claims with corresponding multimedia, utilizing advanced systems like GAIA (Li et al., 2020) for multimedia knowledge extraction. Furthermore, global events’ reach necessitates multilingual intelligence analysis to capture local nuances missed by solely using English. Employing cross-lingual techniques (Du et al., 2022) allows for the inclusion of multiple languages, thereby enhancing understanding of local dynamics and cultural subtleties that influence global situations. Thereby, integrating multilingual sources democratizes intelligence analysis, shifting away from a predominantly English-centric perspective. Ultimately, SmartBook aims to set new standards in intelligence reporting, fostering a more inclusive and globally informed approach.

-

•

Controlling the Bias of News Sources: News sources carry inherent biases influenced by editorial policies, audience demographics, and geographical locations. Dominance of a single perspective may obscure crucial details or alternative viewpoints. In developing the next version of SmartBook, we seek to mitigate these biases by incorporating a diverse spectrum of news outlets representing various political stances. This approach aims to diminish informational blind spots and expand the scope of scenarios available to intelligence analysts, thereby enhancing SmartBook’s utility.

-

•

Co-Authoring with Iterative Refinement: Authoring situation reports is an iterative process, where analysts continuously refine and update the reports. In the next iteration, we aim to provide a dynamic multi-turn editing process with SmartBook, by leveraging analyst feedback for self-improvement. Techniques like Reinforcement Learning with Human Feedback (Stiennon et al., 2020; Bai et al., 2022) and other personalization algorithms (Wu et al., 2019; Monzer et al., 2020) will further enhance the system’s capability to integrate human feedback, and progressively adapt to the preferences and decision-making styles of analysts. This strategy will align SmartBook more closely with human analysts, leading to tailored situation reports that meet the specific requirements of intelligence analysis workflows. Ultimately, SmartBook will evolve from a preliminary drafting tool to a comprehensive AI co-author for generating situation reports.

-

•

Improving Reliability of the Generated Reports: The integrity of data and claims in situation reports is crucial for strategic decision-making. A key challenge in automating these reports is guaranteeing the accuracy and reliability of data from diverse sources. To address this, we propose introducing a ‘verification score’ for each claim, which evaluates the reliability of information based on source credibility, corroborative data, and historical accuracy of similar claims. This mechanism provides intelligence analysts with a confidence metric to quickly assess data reliability. Higher scores indicate greater confidence and facilitate rapid integration into reports, whereas lower scores necessitate comprehensive review and possibly further verification.

4. Supplementary Material

4.1. Recruitment Details

For our studies, we targeted individuals with experience in government and military roles. We distributed a pre-screening survey to ascertain their background in creating situation reports. Participants qualifying for the study were either experienced intelligence analysts or had a minimum of one year of equivalent experience. The final group comprised 10 military personnel from different branches, as shown in Table 5. Their experience in intelligence report writing varied between 1 and 10 years. Compensation ranged from $25 to $35 per hour, reflecting participants’ levels of experience.

We implemented a targeted outreach strategy to recruit decision-makers for our study. These participants, identified through email addresses sourced from government boards and social media profiles (e.g., LinkedIn, Twitter), were self-identified professionals who make decisions based on prepared reports in their official capacity. Our group comprised of individuals with current or past experience on Canadian government boards. Unlike intelligence analysts, these participants did not receive compensation due to their government affiliation.

| PID | Age | Education | Intelligence Exp. | AI Knowledge | LLM Usage |

|---|---|---|---|---|---|

| IA1 | 25 - 34 | Bachelor’s | 5 - 10 years | 2 - Intermediate | Rarely |

| IA2 | 25 - 34 | High School Diploma | 2 - 5 years | 3 - Proficient | Rarely |

| IA3 | 18 - 24 | High School Diploma | 1 - 2 years | 2 - Intermediate | Daily |

| IA4 | 25 - 34 | Bachelor’s | 2 - 5 years | 2 - Intermediate | Rarely |

| IA5 | 45 - 54 | Bachelor’s | 2 - 5 years | 1 - Basic | Rarely |

| IA6 | 45 - 54 | Master’s | 1 - 2 years | 1 - Basic | Rarely |

| IA7 | 35 - 44 | High School Diploma | 5 - 10 years | 1 - Basic | Weekly |

| IA8 | 45 - 54 | Master’s | 1 - 2 years | 2 - Intermediate | Rarely |

| IA9 | 35 - 44 | Bachelor’s | 2 - 5 years | 2 - Intermediate | Rarely |

| IA10 | 25 - 34 | Bachelor | 2 - 5 years | 4 - Advanced | Weekly |

| Question: What were the motives behind the attack on Russian missile in Dnipro? | Question: What is the capability of Ukraine’s air defense systems in repelling the missile attacks from Russian missiles |

| Summary: The Russian missile attack on the city of Dnipro on Saturday was part of the Kremlin’s efforts to limit Ukraine’s ability to heat and power itself in the middle of winter. The attack was reportedly carried out with a Kh-22 missile, an older and less accurate weapon than most modern missiles. It is believed that the attack was part of the Kremlin’s attempts to retake a town, Soledar, and the nearby city of Bakhmut, as a way to grind down the best Ukrainian forces and prevent them from launching counterattacks elsewhere. Nine people were killed, including a 15-year old girl, Kyrylo Tymoshenko, and Russia denied responsibility for the attack, claiming it was caused by Ukrainian air defences. | Summary: Ukraine’s air defense systems have demonstrated their capability in repelling the missile attacks from Russian forces in Kyiv. [1] reported that Ukrainian air defense forces shot down 37 of ”about 40” rockets that entered the city’s airspace, with one person injured. [2] revealed that a commander, who asked to be identified only by the call sign ”Hera” for security reasons, stated that a cruise missile was shot down by a machine gun from the city’s territorial defense mobile group. [3] mentioned that U.S. President Joe Biden had pledged to deliver one Patriot surface-to-air missile battery system to Ukraine, which is one of the most advanced U.S. air defense systems and is capable of intercepting threats such as aircraft and ballistic missiles. |

4.2. SmartBook Limitations

-

•

The generation of situation reports within SmartBook leverages news articles aggregated from Google News. Nonetheless, the process does not involve a rigorous assessment of the news sources’ credibility, nor does it incorporate an explicit verification of the factual accuracy of the claims used in the summary generation model. Considering the extensive exploration of these aspects within computational social science (Lee et al., 2023) and natural language processing (Gong et al., 2023), we deemed them beyond the scope of SmartBook’s framework in this work.

-

•

SmartBook enhances the reliability and credibility of the generated situation reports by incorporating citations. However, it does not rigorously ensure the accuracy of these reports in reflecting the content of the source documents. In contrast, LLMs have been shown (Mallen et al., 2022; Baek et al., 2023) to tend to produce content that may not directly correlate to the source materials. While this is still an active area of research, recent studies (Tian et al., 2023) have shown that LLMs can be effectively optimized to enhance factual accuracy and attribution capabilities (Gao et al., 2023b). Consequently, we propose that future advancements could involve substituting the current summary-generating language model with one that is specifically refined for improved attribution (Gao et al., 2023b).

-

•

The selection of analysts for the user studies in SmartBook was primarily comprised of individuals from military intelligence. This limitation arose due to the challenges encountered in recruiting analysts who could participate without breaching confidentiality agreements. Consequently, our recruitment efforts were confined to analysts within our existing networks. It is important to recognize that our studies may not fully represent the needs and perspectives of analysts in other fields, such as political, corporate, or criminal analysis. Acknowledging this gap, future work can aim to extend and adapt SmartBook’s capabilities for generating situation reports, tailoring them to meet the distinct requirements of each of these domains.

Acknowledgement

We would like to acknowledge the invaluable contributions of Paul Sullivan, who passed away before the publication of this paper. His enduring commitment to knowledge is greatly missed and deeply appreciated. We are grateful to Lisa Ferro and Brad Goodman from MITRE for their valuable comments and help with expert evaluation. This research is based upon work supported by U.S. DARPA AIDA Program No. FA8750-18-2-0014, DARPA KAIROS Program No. FA8750-19-2-1004, DARPA SemaFor Program No. HR001120C0123, DARPA INCAS Program No. HR001121C0165 and DARPA MIPS Program No. HR00112290105. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies, either expressed or implied, of DARPA, or the U.S. Government. The U.S. Government is authorized to reproduce and distribute reprints for governmental purposes notwithstanding any copyright annotation therein.

References

- (1)

- Abdi et al. (2017) Asad Abdi, Norisma Idris, Rasim M Alguliyev, and Ramiz M Aliguliyev. 2017. Query-based multi-documents summarization using linguistic knowledge and content word expansion. Soft Computing 21, 7 (2017), 1785–1801.

- Achiam et al. (2023) Josh Achiam, Steven Adler, Sandhini Agarwal, Lama Ahmad, Ilge Akkaya, Florencia Leoni Aleman, Diogo Almeida, Janko Altenschmidt, Sam Altman, Shyamal Anadkat, et al. 2023. Gpt-4 technical report. arXiv preprint arXiv:2303.08774 (2023).

- Baek et al. (2023) Jinheon Baek, Soyeong Jeong, Minki Kang, Jong C Park, and Sung Ju Hwang. 2023. Knowledge-Augmented Language Model Verification. arXiv preprint arXiv:2310.12836 (2023).

- Bai et al. (2022) Yuntao Bai, Andy Jones, Kamal Ndousse, Amanda Askell, Anna Chen, Nova DasSarma, Dawn Drain, Stanislav Fort, Deep Ganguli, Tom Henighan, et al. 2022. Training a helpful and harmless assistant with reinforcement learning from human feedback. arXiv preprint arXiv:2204.05862 (2022).

- Bang et al. (2023) Yejin Bang, Samuel Cahyawijaya, Nayeon Lee, Wenliang Dai, Dan Su, Bryan Wilie, Holy Lovenia, Ziwei Ji, Tiezheng Yu, Willy Chung, et al. 2023. A Multitask, Multilingual, Multimodal Evaluation of ChatGPT on Reasoning, Hallucination, and Interactivity. In Proceedings of the 13th International Joint Conference on Natural Language Processing and the 3rd Conference of the Asia-Pacific Chapter of the Association for Computational Linguistics (Volume 1: Long Papers). 675–718.

- Belton and Dhami (2020) Ian K Belton and Mandeep K Dhami. 2020. Cognitive biases and debiasing in intelligence analysis. In Routledge Handbook of Bounded Rationality. Routledge, 548–560.

- Bhaskar et al. (2022) Adithya Bhaskar, Alexander R Fabbri, and Greg Durrett. 2022. Zero-Shot Opinion Summarization with GPT-3. arXiv preprint arXiv:2211.15914 (2022).

- Bohnet et al. (2022) Bernd Bohnet, Vinh Q Tran, Pat Verga, Roee Aharoni, Daniel Andor, Livio Baldini Soares, Massimiliano Ciaramita, Jacob Eisenstein, Kuzman Ganchev, Jonathan Herzig, et al. 2022. Attributed question answering: Evaluation and modeling for attributed large language models. arXiv preprint arXiv:2212.08037 (2022).

- Brown et al. (2020) Tom Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared D Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, et al. 2020. Language models are few-shot learners. Advances in neural information processing systems 33 (2020), 1877–1901.

- Cardon et al. (2023) Peter Cardon, Carolin Fleischmann, Jolanta Aritz, Minna Logemann, and Jeanette Heidewald. 2023. The Challenges and Opportunities of AI-Assisted Writing: Developing AI Literacy for the AI Age. Business and Professional Communication Quarterly (2023), 23294906231176517.

- Castonguay (2013) Major J.R.P. Castonguay. 2013. INTELLIGENCE ANALYSIS: FLAWED WITH BIASES. CANADIAN FORCES COLLEGE. https://www.cfc.forces.gc.ca/259/290/301/305/castonguay.pdf.

- Chakravarti and Sil (2021) Rishav Chakravarti and Avirup Sil. 2021. Towards confident machine reading comprehension. arXiv preprint arXiv:2101.07942 (2021).

- Chen et al. (2021) Mark Chen, Jerry Tworek, Heewoo Jun, Qiming Yuan, Henrique Ponde de Oliveira Pinto, Jared Kaplan, Harri Edwards, Yuri Burda, Nicholas Joseph, Greg Brockman, et al. 2021. Evaluating large language models trained on code. arXiv preprint arXiv:2107.03374 (2021).

- Chowdhery et al. (2022) Aakanksha Chowdhery, Sharan Narang, Jacob Devlin, Maarten Bosma, Gaurav Mishra, Adam Roberts, Paul Barham, Hyung Won Chung, Charles Sutton, Sebastian Gehrmann, et al. 2022. Palm: Scaling language modeling with pathways. arXiv preprint arXiv:2204.02311 (2022).

- Council et al. (2011) National Research Council et al. 2011. Intelligence analysis for tomorrow: Advances from the behavioral and social sciences. National Academies Press.

- Doggette et al. (2020) Brandon D Doggette, US Army Command, and General Staff College. 2020. Information overload: impacts on brigade combat team S-2 current operations intelligence analysts. Ph. D. Dissertation. Fort Leavenworth, KS: US Army Command and General Staff College.

- Du et al. (2017) Xinya Du, Junru Shao, and Claire Cardie. 2017. Learning to Ask: Neural Question Generation for Reading Comprehension. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Association for Computational Linguistics, Vancouver, Canada, 1342–1352. https://doi.org/10.18653/v1/P17-1123

- Du et al. (2022) Xinya Du, Zixuan Zhang, Sha Li, Pengfei Yu, Hongwei Wang, Tuan Lai, Xudong Lin, Ziqi Wang, Iris Liu, Ben Zhou, et al. 2022. RESIN-11: Schema-guided event prediction for 11 newsworthy scenarios. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies: System Demonstrations. 54–63.

- Fabbri et al. (2019) Alexander Fabbri, Irene Li, Tianwei She, Suyi Li, and Dragomir Radev. 2019. Multi-News: A Large-Scale Multi-Document Summarization Dataset and Abstractive Hierarchical Model. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics. Association for Computational Linguistics, Florence, Italy, 1074–1084. https://doi.org/10.18653/v1/P19-1102

- Gao et al. (2023a) Luyu Gao, Zhuyun Dai, Panupong Pasupat, Anthony Chen, Arun Tejasvi Chaganty, Yicheng Fan, Vincent Zhao, Ni Lao, Hongrae Lee, Da-Cheng Juan, et al. 2023a. RARR: Researching and Revising What Language Models Say, Using Language Models. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). 16477–16508.

- Gao et al. (2023b) Tianyu Gao, Howard Yen, Jiatong Yu, and Danqi Chen. 2023b. Enabling Large Language Models to Generate Text with Citations. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing. 6465–6488.

- Gao et al. (2023c) Tianyu Gao, Howard Yen, Jiatong Yu, and Danqi Chen. 2023c. Enabling Large Language Models to Generate Text with Citations. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Houda Bouamor, Juan Pino, and Kalika Bali (Eds.). Association for Computational Linguistics, Singapore, 6465–6488. https://aclanthology.org/2023.emnlp-main.398

- Garg et al. (2020) Siddhant Garg, Thuy Vu, and Alessandro Moschitti. 2020. TANDA: Transfer and Adapt Pre-Trained Transformer Models for Answer Sentence Selection. Proceedings of the AAAI Conference on Artificial Intelligence 34, 05 (Apr 2020), 7780–7788. https://doi.org/10.1609/aaai.v34i05.6282

- Gero et al. (2022) Katy Ilonka Gero, Vivian Liu, and Lydia Chilton. 2022. Sparks: Inspiration for science writing using language models. In Designing interactive systems conference. 1002–1019.

- Golson and Ferraro (2018) Preston Golson and Matthew F Ferraro. 2018. To Resist Disinformation, Learn to Think Like an Intelligence Analyst. CIA Studies in Intelligence 62, 1 (2018), 31–32.

- Gong et al. (2023) Shuzhi Gong, Richard O Sinnott, Jianzhong Qi, and Cecile Paris. 2023. Fake news detection through graph-based neural networks: A survey. arXiv preprint arXiv:2307.12639 (2023).

- Goyal et al. (2022) Tanya Goyal, Junyi Jessy Li, and Greg Durrett. 2022. News Summarization and Evaluation in the Era of GPT-3. arXiv preprint arXiv:2209.12356 (2022).

- Gu et al. (2020) Xiaotao Gu, Yuning Mao, Jiawei Han, Jialu Liu, Hongkun Yu, You Wu, Cong Yu, Daniel Finnie, Jiaqi Zhai, and Nicholas Zukoski. 2020. Generating Representative Headlines for News Stories. In Proc. of the the Web Conf. 2020.

- Holtzman et al. (2019) Ari Holtzman, Jan Buys, Li Du, Maxwell Forbes, and Yejin Choi. 2019. The Curious Case of Neural Text Degeneration. In International Conference on Learning Representations.

- Honovich et al. (2022) Or Honovich, Roee Aharoni, Jonathan Herzig, Hagai Taitelbaum, Doron Kukliansy, Vered Cohen, Thomas Scialom, Idan Szpektor, Avinatan Hassidim, and Yossi Matias. 2022. TRUE: Re-evaluating Factual Consistency Evaluation. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Marine Carpuat, Marie-Catherine de Marneffe, and Ivan Vladimir Meza Ruiz (Eds.). Association for Computational Linguistics, Seattle, United States, 3905–3920. https://doi.org/10.18653/v1/2022.naacl-main.287

- Jain and Dubes (1988) Anil K Jain and Richard C Dubes. 1988. Algorithms for clustering data. (1988).

- Ji et al. ([n. d.]) Ziwei Ji, Nayeon Lee, Rita Frieske, Tiezheng Yu, Dan Su, Yan Xu, Etsuko Ishii, Yejin Bang, Andrea Madotto, and Pascale Fung. [n. d.]. Survey of hallucination in natural language generation. Comput. Surveys ([n. d.]).

- Ji et al. (2023) Ziwei Ji, Nayeon Lee, Rita Frieske, Tiezheng Yu, Dan Su, Yan Xu, Etsuko Ishii, Ye Jin Bang, Andrea Madotto, and Pascale Fung. 2023. Survey of hallucination in natural language generation. Comput. Surveys 55, 12 (2023), 1–38.

- Kwiatkowski et al. (2019) Tom Kwiatkowski, Jennimaria Palomaki, Olivia Redfield, Michael Collins, Ankur Parikh, Chris Alberti, Danielle Epstein, Illia Polosukhin, Jacob Devlin, Kenton Lee, et al. 2019. Natural Questions: A Benchmark for Question Answering Research. Transactions of the Association for Computational Linguistics 7 (2019), 452–466.

- Lee et al. (2023) Edmund WJ Lee, Huanyu Bao, Yixi Wang, and Yi Torng Lim. 2023. From pandemic to Plandemic: Examining the amplification and attenuation of COVID-19 misinformation on social media. Social Science & Medicine 328 (2023), 115979.

- Lee et al. (2022) Mina Lee, Percy Liang, and Qian Yang. 2022. Coauthor: Designing a human-ai collaborative writing dataset for exploring language model capabilities. In Proceedings of the 2022 CHI conference on human factors in computing systems. 1–19.

- Lewis et al. (2020) Mike Lewis, Yinhan Liu, Naman Goyal, Marjan Ghazvininejad, Abdelrahman Mohamed, Omer Levy, Veselin Stoyanov, and Luke Zettlemoyer. 2020. BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics. 7871–7880.

- Li et al. (2014) Chen Li, Yang Liu, Fei Liu, Lin Zhao, and Fuliang Weng. 2014. Improving multi-documents summarization by sentence compression based on expanded constituent parse trees. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP). 691–701.

- Li et al. (2023a) Junyi Li, Xiaoxue Cheng, Wayne Xin Zhao, Jian-Yun Nie, and Ji-Rong Wen. 2023a. Halueval: A large-scale hallucination evaluation benchmark for large language models. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing. 6449–6464.

- Li et al. (2021) Manling Li, Sha Li, Zhenhailong Wang, Lifu Huang, Kyunghyun Cho, Heng Ji, Jiawei Han, and Clare Voss. 2021. The Future is not One-dimensional: Complex Event Schema Induction by Graph Modeling for Event Prediction. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing. 5203–5215.

- Li et al. (2020) Manling Li, Alireza Zareian, Ying Lin, Xiaoman Pan, Spencer Whitehead, Brian Chen, Bo Wu, Heng Ji, Shih-Fu Chang, Clare Voss, et al. 2020. Gaia: A fine-grained multimedia knowledge extraction system. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics: System Demonstrations. 77–86.

- Li et al. (2023b) Sha Li, Ruining Zhao, Manling Li, Heng Ji, Chris Callison-Burch, and Jiawei Han. 2023b. Open-Domain Hierarchical Event Schema Induction by Incremental Prompting and Verification. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). 5677–5697.

- Lin (2004) Chin-Yew Lin. 2004. Rouge: A package for automatic evaluation of summaries. In Text summarization branches out. 74–81.

- Liu et al. (2022) Yixin Liu, Pengfei Liu, Dragomir Radev, and Graham Neubig. 2022. BRIO: Bringing Order to Abstractive Summarization. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). 2890–2903.

- Liu et al. (2019) Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin Stoyanov. 2019. Roberta: A robustly optimized bert pretraining approach. arXiv preprint arXiv:1907.11692 (2019).

- Ma et al. (2023) Yunshan Ma, Chenchen Ye, Zijian Wu, Xiang Wang, Yixin Cao, Liang Pang, and Tat-Seng Chua. 2023. Structured, Complex and Time-complete Temporal Event Forecasting. arXiv preprint arXiv:2312.01052 (2023).

- Mallen et al. (2022) Alex Mallen, Akari Asai, Victor Zhong, Rajarshi Das, Hannaneh Hajishirzi, and Daniel Khashabi. 2022. When not to trust language models: Investigating effectiveness and limitations of parametric and non-parametric memories. arXiv preprint arXiv:2212.10511 (2022).

- Maynez et al. (2020) Joshua Maynez, Shashi Narayan, Bernd Bohnet, and Ryan McDonald. 2020. On Faithfulness and Factuality in Abstractive Summarization. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics. 1906–1919.