Sleep Posture One-Shot Learning Framework Using Kinematic Data Augmentation: In-Silico and In-Vivo Case Studies

Abstract

Sleep posture is linked to several health conditions, such as nocturnal cramps and more serious musculoskeletal issues. However, in-clinic sleep assessments are often limited to vital signs (e.g. brain waves). Wearable sensors with embedded inertial measurement units have been used for sleep posture classification; nonetheless, previous works consider only a few (commonly four) postures, which are inadequate for advanced clinical assessments. Moreover, posture learning algorithms typically require longitudinal data collection to function reliably, and often operate on raw inertial sensor readings unfamiliar to clinicians. This paper proposes a new framework for sleep posture classification based on a minimal set of joint angle measurements. The proposed framework is validated on a rich set of twelve postures in two experimental pipelines: computer animation to obtain synthetic postural data, and a human participant pilot study using custom-made miniature wearable sensors. By fusing raw geo-inertial sensor measurements to compute a filtered estimate of relative segment orientations across the wrist and ankle joints, the body posture can be characterised in a way comprehensible to medical experts. The proposed sleep posture learning framework offers plug-and-play posture classification by capitalising on a novel kinematic data augmentation method that requires only one training example per posture. Additionally, a new metric together with data visualisations is employed to extract meaningful insights from the postures dataset, demonstrate the added value of the data augmentation method, and explain the classification performance. The proposed framework attained promising overall accuracy as high as on synthetic data and on real data, on par with state-of-the-art data-hungry algorithms available in the literature.

Keywords:

Wearable sensors, Sensor fusion, Data augmentation, One-shot learning, Multi-classifier system, Human posture

1 Introduction

A recent comprehensive epidemiological study revealed that nearly of the global population suffer from musculoskeletal disorders, with most cases being in high-income countries [1]. For example, in the United Kingdom, musculoskeletal conditions affect 1 in every 4 adults. One-third of medical consultations [2] and over of all surgical interventions [3] are attributable to musculoskeletal conditions. Another study projects that these conditions will rise more rapidly in low- and middle-income countries [4].

The study of human posture allows for understanding the musculoskeletal system and opens the door to supporting musculoskeletal health and well-being over the whole lifespan. In recent years, human sleep behaviour studies have gained more traction among the research community [5]. Traditionally, sleep has been considered a natural mechanism to recover from the exhaustion of daily activities, but recent sleep studies have reported observations that contradict this view. In fact, it was found that certain sleep behaviours could bring about health complications, such as pressure ulcers [6], or uncover underlying disorders [7], including restless leg syndrome and periodic leg movements. Interestingly, some studies linked musculoskeletal morbidity to postural cues; for example, the supine position has been correlated more strongly with apnoea than lateral positions [8]. Other evidence shows that prolonged joint immobilisation could lead to muscular contractions [9], which could potentially develop into chronic pain episodes. Moreover, muscle cramps and painful spasms can also occur during wake or sleep states due to sustained abnormal body postures, lack of exercise or pregnancy [10].

Motivated by the evidence above, clinicians and biomedical engineers are keen to investigate whether a significant statistical link exists between the development of musculoskeletal diseases and specific sleep postures. To this end, sleep postures need to be monitored using motion capture technologies, which are generally categorised into optical and non-optical techniques; both have been employed to monitor sleep postures.

Optical methods are categorised according to whether or not they involve the use of on-body retroreflective markers: marker-based versus markerless techniques. The first category tends to be impractical for sleep analysis due to the cost of the equipment involved, the requirement for a controlled lab setting, and marker occlusions. Markerless motion capture capitalises on recent advances in computer vision and deep neural architectures to regress body-surface coordinates from a set of image pixels [11, 12, 13]. Markerless techniques also struggle with occlusions due to body covering and are often criticised over privacy concerns, which limits their adoption.

Non-optical motion capture methods in sleep-related applications comprise two main categories: bed-embodied sensors and wearable sensors. Within the former category, force-sensitive resistor grids embedded into mattresses [14] and load cells attached to bed frame supports [15] are by far the most common techniques. However, bed-embodied sensors only provide measurements of the body weight distribution, and consequently require an indirect pose inference framework that is not guaranteed to be entirely reliable. Wearable inertial sensing is minimally intrusive, does not require an optical line of sight and preserves privacy, thereby addressing the aforementioned limitations of both optical and bed-embodied non-optical techniques. Moreover, processing low-dimensional timeseries from on-body sensors generally has a low computational cost. Overall, wearable inertial sensing is therefore better suited for sleep monitoring applications.

There are a number of open research questions that hinder the large-scale deployment of wearable inertial sensors for tracking sleep postures. The challenges lie primarily in the sensing and intelligent perception aspects of these systems. Measurement errors [16], sensor misalignment with respect to body segments [17], and soft tissue artefacts [18] are amongst the most prominent sensing errors. Regarding intelligent perception, we focus here on three main challenges. First, wearable inertial sleep trackers have so far been exploited mostly for standard posture sensing (supine, prone and lateral positions), which has little to offer clinicians studying posture-dependent musculoskeletal pathologies such as leg and calf cramps. Second, current works typically employ machine learning (ML) models that operate directly on raw sensor data, an incomprehensible black-box framework for clinicians who view artificial intelligence from an outsider's perspective. Third, for these models to function reliably, extended data collection and expensive manual labelling are often prerequisites.

This paper proposes a human sleep posture learning framework (illustrated in Fig. 1 and detailed in Section 3) to overcome the aforementioned challenges. The framework capitalises on data augmentation to facilitate sleep posture modelling from a single postural observation (hereafter “shot”). The experimental pipelines have been developed and validated both in silico and on real world data. The main contributions of the presented work can be summarised as follows:

1. Our approach is the first study directed at wearable-based classification of twelve sleep postures, whereas previous work has mostly been limited to four "standard" postures. The twelve postures cover a much wider range of postures common in sleep, making the proposed framework better suited for clinical use.

2. To the best of the authors' knowledge, we are the first to use inertial sensor fusion in sleep postural analysis. Unlike the commonly used raw sensor data, our framework provides access to joint orientations, which are more human-interpretable and better serve medical diagnosis.

3. We show that approximate segment-to-segment orientations are sufficient to characterise sleep postures, without the need for exhaustive sensor-to-segment calibration procedures that hinder the deployment of wearable sensors in clinical or home settings.

4. We propose the use of three-dimensional (3D) computer graphics software to accelerate development and tune algorithms by performing an in silico sleep experiment before validating the methodology on human participants. Previous works often rely solely on real data, which may be hard to collect during the development phase.

5. We propose a novel one-shot learning scheme that accelerates the learning of arbitrary human sleep postures with augmented observations. This eliminates the need for longitudinal data collection and labelling, which often hinders the use of wearables.

6. We built four non-invasive wearable sensor modules using low-cost off-the-shelf components. Each module comprises two inertial measurement units (IMUs) to offer dual-segment tracking across the distal joint of each extremity limb.

7. We propose a metric-based approach, coupled with data visualisation, to extract quantitative and qualitative insights into posture data trends, data augmentation, and the sleep posture classification problem as a whole.

The structure of the rest of this paper is as follows. Section 2 discusses the literature relevant to the problem of human posture analysis using wearable sensors, with particular emphasis on the knowledge gap and clinical needs. In Section 3, we explain our methodology with reference to the proposed framework depicted in Fig. 1. Section 4 presents the experimental design and setup, together with a description of the framework implementation. Section 5 presents the evaluation results obtained and discusses the main findings. In Section 6, the paper highlights are summarised along with suggestions for future research directions.

2 Related Work

In the clinical landscape, polysomnography (PSG) is regarded as the gold-standard tool for diagnosing sleep disorders. It involves the simultaneous recording of several parameters to evaluate two major aspects: sleep staging and physiology [19]. Sleep stages are essential as they allow for the recovery and development of the body and the brain. Sleep staging is typically evaluated based on brain neural activity from electroencephalogram signals, complemented by electrooculogram and electromyography signals, which are particularly helpful for identifying the rapid eye movement stage [20]. Sleep physiology is needed to assess respiratory health, blood circulation and other functions, such as those of the renal and endocrine systems [21]. Hence, PSG is a key tool for the diagnosis of various cardiovascular, neurologic and neuromuscular conditions, and for the evaluation of other sleep disorders such as insomnia and abnormal body movements.

Nevertheless, PSG faces sensing, interpretation and diagnosis challenges. To begin with, it requires overnight hospitalisation of the patient with intrusive on-body sensors, which adversely affect the patient's sleep quality. Human posture, the focus of this work, remains an underdeveloped and only partially exploited aspect of PSG. Although some PSG implementations evaluate the positional component of sleep-disordered breathing, they often come with a basic thoracic sensor that is limited to recognising only a few body postures [22, 23]. An enhanced postural analysis tool would allow clinicians to gain a more holistic understanding of how sleep is linked to other conditions such as musculoskeletal morbidities.

Clinicians often welcome the rise of wearable sleep trackers [20]. However, these trackers remain in the early validation phase, as it is unclear what information the additional data can provide beyond general wellness and sleep/wake detection [24]. For wearable trackers, each manufacturer integrates its own proprietary algorithm and there is no widely accepted standard, unlike PSG, whose standard was set by the American Academy of Sleep Medicine.

From a motion capture perspective, the human motion analysis literature is divided into movement quantification and classification [25]. Most of the available literature belongs to the former category and is concerned with estimating the position and/or orientation of one or more body segments during various human activities, which is useful in sport science and film making. In contrast, classification targets high-level interpretation or labelling of the underlying human motion/posture. Within the field of sleep posture classification, only works on IMU-based wearables with a geo-inertial sensing modality are reviewed here, as they are the most relevant to the work presented in this paper.

The available literature can be grouped according to the number of postures considered. Most works consider only four standard sleep postures: supine, prone, and right and left lateral positions. Interestingly, a single chest-mounted sensor feeding data to a Linear Discriminant Analysis (LDA) classifier was sufficient to classify four sleep postures with an accuracy of [26]. Another study [27] evaluated four classifier architectures (Naïve Bayes, Bayesian Network, Decision Tree (DT) and Random Forest) on their performance in recognising the four postures based on statistical features extracted from an accelerometer embedded in a smartwatch. Their accuracies were found to vary between and , with Random Forest being the best performer. In [28], spectral features extracted frame by frame from a single upper-arm sensor were used to train a Long Short-Term Memory (LSTM) network, achieving in a four-posture classification problem. A recent study [29] investigated two aspects of single-sensor sleep posture classification: (1) optimal body locations for sensor placement, and (2) the performance of feature-based pattern recognition compared with deep learning models. Given a quad-posture dataset, the comparative analysis identified the chest and either thigh as optimal body locations, and revealed comparable performance between handcrafted feature-based classifiers and deep learning models. A different approach to sleep quad-posture classification was proposed in [30], where a probabilistic state transition from one posture to another is conditioned on the inertial profile of the pose change motion. The authors defined the transition motion profile by extracting 66 different time- and frequency-domain features from raw data channels sourced from three sensors attached to the chest and wrists.

Fewer works have included more sleep postures in their case studies. For a care home application [31], three classifier models were evaluated in a six-posture classification problem: (1) k-nearest neighbours (k-NN), (2) Decision Tree (DT), and (3) Support Vector Machines (SVM). Using three sensors embedded into garments (socks and T-shirt), this work adopted a pure pattern recognition approach in which the authors preprocessed and extracted features from the sensory timeseries for classifier training. These pose classifications were then fed into a knowledge-based fuzzy model to automatically determine the priority level of postural changes for the prevention of pressure ulcers. The SVM was identified as the best-performing classifier during pilot experiments, with an accuracy of .

A clinical study investigated the recognition of eight sleep postures using three wearable sensors placed on the forearms and chest [32]. The eight postures represent minor variations of the four standard sleep postures. Using statistical features manually extracted from raw sensory data, the average four-posture classification accuracy was . Notably, this figure dropped to when considering the eight minor posture variations, with the worst model accuracy hitting as low as . The authors also identified battery life and large sensor size as two limitations of wearable-based sleep trackers, and provided recommendations on sensor design, packaging and data capture/transmission optimisation.

With three sensors attached to the chest and ankles, a case study explored the feasibility of classifying six to eight minor variations of the four standard sleep postures [33]. Under different test settings, the generalised matrix learning vector quantisation (GMLVQ) technique was found to perform variably on 7-hour individual participant data from up to . Multi-subject models were examined too and achieved a mean accuracy between and .

More recently, we reported the classification of twelve simulated benchmark sleep postures using sparse postural cues from the four extremity limbs [34]. The posture dataset encompasses different limb configurations common in sleep, making it better suited for clinical use. The proposed data augmentation technique allowed for synthetically generating more postural samples and was shown to enhance the overall posture classification performance. Given a scarce dataset, the reported average classification accuracy was as high as using an SVM-based classifier. To emulate sensing artefacts commonly encountered in off-the-shelf sensors, mild to extreme levels of noise-based jamming were added to the testing postural samples, with the classifier showing high robustness (above ).

Some case studies investigated additional aspects of sleep as well. In [35], a smartwatch embedded with an accelerometer, microphone and illumination sensor was used to capture sleep information on body posture, hand position and acoustic events (e.g. snores and coughs). Based on the tilt of the hand, the authors employed a 1-NN classifier to recognise the four standard sleep postures, using the Euclidean distance as the similarity criterion. With a 6-hour data recording per participant, the system achieved over accuracy in the quad-posture classification task.
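The 1-NN rule described for [35] fits in a few lines. The sketch below is a minimal NumPy illustration, not the implementation of [35]: the three-component "hand tilt" feature (taken here, hypothetically, as the unit gravity direction in the watch frame) and the template values are assumptions made for the example.

```python
import numpy as np

def one_nn_predict(train_X, train_y, query_X):
    """Assign each query the label of its nearest training sample (Euclidean distance)."""
    train_X = np.asarray(train_X, dtype=float)
    query_X = np.atleast_2d(np.asarray(query_X, dtype=float))
    # Squared Euclidean distance between every query and every template: (n_query, n_train)
    d2 = ((query_X[:, None, :] - train_X[None, :, :]) ** 2).sum(axis=-1)
    return np.asarray(train_y)[d2.argmin(axis=1)]

# Hypothetical templates: unit gravity direction in the watch frame for each posture
templates = np.array([[0.0, 0.0, 1.0],    # supine
                      [0.0, 0.0, -1.0],   # prone
                      [1.0, 0.0, 0.0],    # right lateral
                      [-1.0, 0.0, 0.0]])  # left lateral
labels = np.array(["supine", "prone", "right", "left"])

queries = [[0.1, 0.2, 0.9], [-0.8, 0.1, 0.3]]
print(one_nn_predict(templates, labels, queries))
```

Because 1-NN stores one template per class and needs no training, it is a natural baseline when only one recording per posture is available.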

Although some works do not originate from the domain of sleep tracking, they remain relevant to intelligent wearable sensing and the analysis of human posture and movement. A smart jumpsuit with four inertial sensors on the upper arms and thighs was used for the early detection of neurodevelopmental disorders among infants through the analysis of their body postures and movements [36]. The authors investigated (1) feature-based ML and (2) end-to-end deep learning, both of which performed comparably at around . It was also shown that the quadruple sensor configuration improved the system's classification accuracy by up to compared to partial sensor deployment.

Another study employed a dense sensor network composed of 31 wearable sensors to classify 22 (non-sleep) body postures common in human daily activities [37]. Despite the large number of postures considered and the high throughput of sensor data, a 1-NN classifier attained an average classification accuracy of using simple weighted posture attributes.

The framework proposed in this paper sits at a sweet spot between the quantification and classification branches of human motion analysis. Instead of operating on raw sensor signals, we map the sleep posture labels to the kinematic orientation space of the body's extremity limbs. Specifically, the extremity segment-to-segment relative orientations (joint angles of the wrists and ankles) are regarded as primal indicators of body posture. This translates to a more explainable posture recognition algorithm and equips clinicians with better diagnostic tools. With twelve sleep postures, our work goes beyond the four standard poses commonly considered in the literature. Clinically speaking, our work advances sleep posture sensing, which has been a main shortcoming of today's PSG systems. The literature indicates that different classification models, from naïve k-NN classifiers to deep learning models, perform comparably. This notable conclusion should draw more attention to the data collection and treatment stages. Therefore, we leverage a noise-injection-based data augmentation technique to (1) mitigate the effect of biases present in our postures dataset, and (2) achieve performance similar to state-of-the-art models with a fraction of the training data. We also use additional performance interpretation techniques to showcase the added value brought by data augmentation to the one-shot learning problem, while lending explainability to the reasoning behind the model.

3 Methods

This section describes the methods adopted in each stage of the proposed framework sketched in Fig. 1. An overview of the framework is presented in Section 3.1. The acquisition of postural cues defining the sleep posture was first formulated virtually through in silico simulations, as explained in Sections 3.2 and 3.3, and then performed similarly using real-world data collected by the wearable sensors described in Sections 3.4 and 3.5. The proposed postural data augmentation is described in Section 3.6. Lastly, the model behind the posture classification is outlined in Section 3.7.

3.1 Posture Learning Framework

The proposed human posture learning framework is designed to serve as a plug-and-play system that recognises any arbitrary body posture given a single training shot for that posture. The system comprises four wearable sensor modules and a server for sensor data acquisition, storage and analysis. In real life, the system would be used as follows. Following an instruction manual or video, a subject will attach the wearable sensor modules to their wrists and ankles before sleep, then replicate a defined set of sleep postures in bed, and a snapshot of sensor data is recorded at each posture. All transmitted sensor data are preprocessed to extract segment-to-segment orientations (joint angles) to be used as postural cues defining each posture (see Section 3.3). To avoid the need for longitudinal data collection and labelling, the single shots of preprocessed data are subsequently augmented with many modified copies (i.e. synthetic data samples), which accelerates effective modelling of each posture. The resulting augmented posture dataset is sufficiently diversified, and therefore suitable for training a multi-class classifier for this particular sleep session. The patient would then sleep while the sensor data continue to be streamed to the server for sleep posture analysis. From the sequence and duration of sleep postures overnight, a clinician may be able to extract useful clinical insights. Because the wearable sensor modules are not taken off between the collection of the training data and the real sleep data (testing data), the sensor-to-segment misalignment remains fixed throughout the recording session, so no calibration is required.

Unlike the vast majority of literature that considers only four standard sleep postures, we showcase the scalability of the framework with twelve wide-ranging postures common in sleep. The framework has been validated in two experimental pipelines, in silico sleep simulation and human participant study as depicted in Fig. 1.

The mechanics of the proposed framework are best explained by analogy with the flow of information in a standard pattern recognition system: data collection, preprocessing, classifier training and testing. The data collection stage involves a local server acquiring body segment orientations either from an exported sleep simulation file (see Section 3.2) or from IMU data transmitted by the wearable sensor modules (see Section 3.5).

The data preprocessing stage starts with the body pose characterisation step (described in Section 3.3) to extract segment-to-segment orientations from each extremity limb to monitor joints perceived relevant from a clinical perspective. These four relative orientations serve as a simplified and human-interpretable representation of the overall body posture. The segment-to-segment orientation data are then augmented as described in Section 3.6 to accelerate sleep posture modelling.

The use of the data augmentation step in the in silico sleep simulation differs slightly from that in the in vivo case. The sleep simulation provides only one observation for each posture; therefore, data augmentation is used twice, to generate the training and testing posture datasets respectively, so that the framework can be validated virtually. In contrast, the in vivo session provides recordings of body postures. Therefore, data augmentation is only used to diversify the training dataset of postures, whereas the real test-labelled recordings are readily available for validating the framework in the real world. Since real timeseries are used for testing, the relative orientation data channels from the four wearable sensor modules were synchronised.
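The augmentation method itself is detailed in Section 3.6. Purely as an illustration of the noise-injection idea, the sketch below perturbs every joint quaternion of a single shot by a small random rotation. The perturbation model (uniformly random axis, Gaussian angle with a hypothetical 5° standard deviation), the [w, x, y, z] layout and the function names are assumptions, not the paper's method. For the in silico pipeline, the same function is called twice with different seeds to obtain separate training and testing sets.

```python
import numpy as np

def quat_mul(a, b):
    """Hamilton product of quaternions stored as [w, x, y, z] along the last axis."""
    w1, x1, y1, z1 = np.moveaxis(a, -1, 0)
    w2, x2, y2, z2 = np.moveaxis(b, -1, 0)
    return np.stack([w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
                     w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
                     w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
                     w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2], axis=-1)

def augment_shot(shot, n_samples, sigma_rad=np.deg2rad(5.0), seed=None):
    """Return n_samples noisy copies of one posture shot, flattened to (n_samples, 4 * n_joints).

    shot: (n_joints, 4) array of unit joint quaternions [w, x, y, z].
    Each joint is rotated by an angle ~ N(0, sigma_rad) about a uniformly random axis.
    """
    rng = np.random.default_rng(seed)
    shot = np.asarray(shot, dtype=float)
    n_joints = shot.shape[0]
    axes = rng.normal(size=(n_samples, n_joints, 3))
    axes /= np.linalg.norm(axes, axis=-1, keepdims=True)          # random unit axes
    angles = rng.normal(0.0, sigma_rad, size=(n_samples, n_joints, 1))
    noise = np.concatenate([np.cos(angles / 2), np.sin(angles / 2) * axes], axis=-1)
    out = quat_mul(noise, shot[None, :, :])                        # apply the perturbation
    out *= np.where(out[..., :1] < 0, -1.0, 1.0)                   # canonical sign: w >= 0
    return out.reshape(n_samples, -1)

# One hypothetical shot: all four joints (wrists, ankles) at the identity orientation
shot = np.tile([1.0, 0.0, 0.0, 0.0], (4, 1))
train = augment_shot(shot, 200, seed=1)   # training set
test = augment_shot(shot, 50, seed=2)     # separate test set (in silico pipeline only)
print(train.shape, test.shape)
```

Perturbing on the rotation group, rather than adding noise to the raw quaternion components, keeps every synthetic sample a valid unit quaternion.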

The multi-class classification model comprises an ensemble of binary SVM classifiers. The classifier training and testing procedures are the same for both the in silico and in vivo pipelines. Using the augmented posture dataset for training, the classifier model is trained to recognise the underlying sleep posture. Then, the test-labelled posture dataset is used to evaluate the performance of the pre-trained model against groundtruth labels.
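This section does not state how the binary SVM decisions are combined. The sketch below assumes a one-vs-one scheme with majority voting, linear kernels, and a plain Pegasos sub-gradient solver so that it depends only on NumPy. All class and function names are hypothetical, and the 2-D clusters stand in for posture descriptors. In practice, a library implementation such as scikit-learn's `SVC`, which trains one-vs-one binary classifiers internally, would be a more robust choice.

```python
import itertools
import numpy as np

def train_linear_svm(X, y, lam=0.01, epochs=100, seed=0):
    """Pegasos sub-gradient training of a linear SVM; y must contain +1/-1 labels.

    A constant feature is appended so that the bias is learned (and regularised) with w.
    """
    rng = np.random.default_rng(seed)
    Xb = np.hstack([X, np.ones((len(X), 1))])
    w = np.zeros(Xb.shape[1])
    t = 0
    for _ in range(epochs):
        for i in rng.permutation(len(Xb)):
            t += 1
            eta = 1.0 / (lam * t)
            violates = y[i] * (Xb[i] @ w) < 1.0   # hinge margin checked before the step
            w *= 1.0 - eta * lam
            if violates:
                w += eta * y[i] * Xb[i]
    return w

def decision(w, X):
    """Signed SVM score for each row of X."""
    return np.hstack([X, np.ones((len(X), 1))]) @ w

class OneVsOneSVM:
    """Multi-class ensemble: one binary linear SVM per pair of classes, majority voting."""

    def fit(self, X, y):
        X, y = np.asarray(X, dtype=float), np.asarray(y)
        self.classes_ = np.unique(y)
        self.models_ = {}
        for a, b in itertools.combinations(self.classes_, 2):
            mask = (y == a) | (y == b)
            target = np.where(y[mask] == a, 1.0, -1.0)
            self.models_[(a, b)] = train_linear_svm(X[mask], target)
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=float)
        column = {c: k for k, c in enumerate(self.classes_)}
        votes = np.zeros((len(X), len(self.classes_)), dtype=int)
        rows = np.arange(len(X))
        for (a, b), w in self.models_.items():
            a_wins = decision(w, X) >= 0
            votes[rows[a_wins], column[a]] += 1
            votes[rows[~a_wins], column[b]] += 1
        return self.classes_[votes.argmax(axis=1)]

# Toy usage: three well-separated hypothetical "posture" clusters in 2-D
rng = np.random.default_rng(0)
centres = np.array([[0.0, 0.0], [4.0, 4.0], [8.0, 0.0]])
X = np.vstack([c + 0.3 * rng.normal(size=(30, 2)) for c in centres])
y = np.repeat(["A", "B", "C"], 30)
clf = OneVsOneSVM().fit(X, y)
print(clf.predict(centres))
```

With C postures, a one-vs-one ensemble trains C(C-1)/2 binary models, i.e. 66 for the twelve postures considered here.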

3.2 In Silico Sleep Simulation

The virtual sleep simulation is built around Blender© (The Blender Foundation, Amsterdam, NL), an open-source computer graphics software for 3D modelling, animation and video rendering. A 3D human-like character model (https://cloud.blender.org/training/animation-fundamentals/5d69ab4dea6789db11ee65d1/) is virtually animated to replicate the twelve sleep postures shown in Fig. 2(a). Each body posture was captured in a keyframe, and transitions between keyframes were interpolated to create a motion sequence simulating sleep. We followed the standard pipeline used by digital artists for character animation. An anthropomorphic rig (acting as a skeleton, see Fig. 2(b)) is carefully aligned and bound to the character model to allow for full-body animation by posing the rig alone.

Structurally, the rig segments have root-parent-child relationships. The root is a fully unconstrained segment with six degrees of freedom (DOF) representing the translation and rotation of the rig as a whole. The root segment sits at the top of the rig's hierarchy and was chosen to be the lower spine segment. Branching off from the root segment are the kinematic chains forming the remainder of the rig (e.g. lower limbs, upper back segments, etc.). The 26 segments forming these kinematic chains follow a parent-child transformation hierarchy, in the sense that a rotation or translation of a parent segment affects the pose of all its child segments, but not vice versa.

The complete definition of the body posture, $\mathcal{P}$, is given by two components: (1) the combined position and orientation of the root segment $r$, and (2) the rotations vector $\boldsymbol{\theta}$ of the remaining 26 rig segments. The vector $\boldsymbol{\theta}$ contains the angular displacements about the active rotational axes of all body segments, depending on the joint definitions (ball, saddle, or hinge joints). To allow for body posture tracking, Blender© automatically assigns right-handed 3D coordinate systems to all segments, anchored at their respective parent joints' active centres of rotation. Given an arbitrary segment $k$, its pose can be referred to a reference coordinate system, $\{0\}$, using the shape forward kinematics map

$${}^{0}\mathbf{T}_{k}(\boldsymbol{\theta}) = \prod_{i \in \mathcal{C}(k)} {}^{p(i)}\mathbf{M}_{i}\,\mathbf{T}_{i}(\theta_{i}) \tag{1}$$

where $\mathcal{C}(k)$ is the kinematic chain from the root segment $r$ to segment $k$, ordered from left to right, $p(i)$ is the parent of segment $i$ (with $p(r) = 0$), $\mathbf{T}_{i}(\theta_{i})$ denotes the homogeneous transformation matrix describing the rotations and translations of segment $i$, and ${}^{p(i)}\mathbf{M}_{i}$ represents the initial postural offset between coordinate systems $\{p(i)\}$ and $\{i\}$ after the rig binding process is completed. This map allows for the calculation of the net transformation of a child segment by combining all hierarchical transformations from its parent segments. In Blender©, $\{0\}$ is often the global coordinate system of the 3D viewport, and thus the offset term for each segment is known a priori.

The terms $\mathbf{T}_{i}(\theta_{i})$ can be exported with the rendered animation video as a BioVision Hierarchy (BVH) file containing these hierarchical transformations, quantified by the angular and translational displacements of each segment at each frame, and the fixed segment-to-segment positional offsets.
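The hierarchical composition behind Eq. 1 can be illustrated with a toy three-segment chain. In this minimal NumPy sketch, the segment names, offsets and angles are hypothetical, and each segment's world transform is assumed to be the ordered product, from the root down, of a bind-time offset and the segment's animated local transform. It also reflects the parent-child property described above: rotating the hand changes neither the forearm nor the root.

```python
import numpy as np

def rot_z(angle):
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def homogeneous(R=None, p=None):
    """4x4 homogeneous transform from a rotation R (3x3) and a translation p (3,)."""
    T = np.eye(4)
    if R is not None:
        T[:3, :3] = R
    if p is not None:
        T[:3, 3] = p
    return T

def world_transform(segment, parent, offset, local):
    """Compose offset @ local for every segment from the root down to `segment`."""
    chain = []
    while segment is not None:           # walk up the hierarchy to the root
        chain.append(segment)
        segment = parent[segment]
    T = np.eye(4)
    for name in reversed(chain):         # then multiply from the root downwards
        T = T @ offset[name] @ local[name]
    return T

# Hypothetical rig: root -> forearm -> hand
parent = {"root": None, "forearm": "root", "hand": "forearm"}
offset = {"root": homogeneous(),
          "forearm": homogeneous(p=[1.0, 0.0, 0.0]),
          "hand": homogeneous(p=[0.3, 0.0, 0.0])}
local = {"root": homogeneous(R=rot_z(np.pi / 2)),   # whole rig turned by 90 degrees
         "forearm": homogeneous(),
         "hand": homogeneous()}

T_forearm = world_transform("forearm", parent, offset, local)
T_hand = world_transform("hand", parent, offset, local)
print(np.round(T_hand[:3, 3], 6))   # hand origin expressed in the world frame
```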

3.3 Characterisation of Body Posture

In principle, a posture is defined by the complete set of joint angles of all body segments, which is an unrealistic measurement and computing challenge for wearable sensing. Therefore, what we refer to as “body pose characterisation” is the selection of relatively few joint angles that are practically measurable and, at the same time, allow sleeping postures to be classified. The definition of the sleep posture is important since the outcome of this step will have a strong impact on the selection of effective techniques for collecting and analysing data.

The challenge with body posture is that its study has a dual nature, with both parametric and subjective aspects. It is parametric in the sense that measurements of some modality are required for algorithms to use in decision making. This is the mainstream direction of the available literature, as covered in Section 2. Subjectivity relates more to the human perception of the sleep posture, which varies from one person to another. For example, in [36], multiple human annotators were found to disagree in labelling postures captured in video. That said, subjectivity is not completely disjoint from measurement; humans need high-level information in some form (e.g. images or numbers), but not raw sensor readings. The subjective element in posture classification is useful, since posture measurement variability within some constraints should be permissible. In this paper, we exploit this parametric-subjective nature for posture characterisation (this section) and augmentation (see Section 3.6).

To reach a good compromise between the numerical and perceptual reasoning behind postural analysis, we propose the use of segment-to-segment relative orientations at the four extremity limbs (wrists and ankles) as primal indicators of the sleep posture. This gives clinicians access to a simplified, human-interpretable posture definition alongside the output pose labels. The choice of the ankle and wrist joints stems from their strong connection with various sleep-related pathologies, such as ankle osteoarthritis [38] and carpal tunnel syndrome [39]. Such intuitive postural information is envisaged to make the posture classification algorithm more comprehensible to clinicians, and thus a better fit as a future medical diagnostic tool.

Segment-to-segment relative orientations represent the rotational component of the local joint transformation linking a child segment to its parent. For illustration, Fig. 3 shows the right wrist joint and the coordinate systems $\{p\}$ and $\{c\}$ of, respectively, the forearm (parent segment) and the hand (child segment). The wrist is a condyloid synovial (or saddle) joint allowing only two motions: flexion/extension and ulnar/radial deviation. Based on this definition, the hand-to-forearm rotation matrix, ${}^{p}\mathbf{R}_{c}$, can be formulated as

$${}^{p}\mathbf{R}_{c} = \mathbf{R}_{\mathrm{fe}}(\alpha)\,\mathbf{R}_{\mathrm{ur}}(\beta)\,\mathbf{R}_{\mathrm{ps}}(\gamma) \tag{2}$$

where, in the case of the wrist joint, $\mathbf{R}_{\mathrm{fe}}(\alpha)$ and $\mathbf{R}_{\mathrm{ur}}(\beta)$ represent the rotations of flexion/extension and ulnar/radial deviation, respectively. The wrist pronation/supination originates from the elbow joint; hence $\mathbf{R}_{\mathrm{ps}}(\gamma)$ is ideally an identity matrix.
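A minimal NumPy sketch of Eq. 2 and its inverse is given below. The axis assignment (flexion/extension about x, ulnar/radial deviation about y, pronation/supination about z) and the function names are hypothetical; the actual axes depend on the segment coordinate systems in Fig. 3. Recovering the anatomical angles from a measured rotation matrix is what keeps the posture descriptor readable in clinical terms.

```python
import numpy as np

def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])

def rot_y(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def wrist_rotation(flex_ext, uln_rad, pro_sup=0.0):
    """Hand-to-forearm rotation R = Rx(fe) @ Ry(ur) @ Rz(ps) (hypothetical axis mapping)."""
    return rot_x(flex_ext) @ rot_y(uln_rad) @ rot_z(pro_sup)

def wrist_angles(R):
    """Invert wrist_rotation: return (fe, ur, ps) in radians, valid for |ur| < 90 degrees."""
    uln_rad = np.arcsin(np.clip(R[0, 2], -1.0, 1.0))
    flex_ext = np.arctan2(-R[1, 2], R[2, 2])
    pro_sup = np.arctan2(-R[0, 1], R[0, 0])
    return flex_ext, uln_rad, pro_sup

R = wrist_rotation(np.deg2rad(30.0), np.deg2rad(-10.0))   # 30 deg flexion, -10 deg deviation
print(np.round(np.degrees(wrist_angles(R)), 3))
```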

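For the in silico sleep simulation, segment-to-segment orientations can be derived from the skeleton hierarchical transformations provided in the BVH file exported from Blender©. Indeed, using Eq. 1, the relative transformation between the parent and child segments can be obtained as

$${}^{p}\mathbf{T}_{c} = \left({}^{0}\mathbf{T}_{p}\right)^{-1}\,{}^{0}\mathbf{T}_{c} \tag{3}$$

The rotational component of the local transformation across the distal joint of the extremity limb can then be extracted as

$${}^{p}\mathbf{R}_{c} = \mathbf{S}\,{}^{p}\mathbf{T}_{c}\,\mathbf{S}^{\top} \tag{4}$$

where $\mathbf{S} = \begin{bmatrix}\mathbf{I}_{3} & \mathbf{0}_{3\times 1}\end{bmatrix}$ selects the upper-left $3 \times 3$ block of the homogeneous transformation.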

Similar kinematic definitions are made for the lower extremity limbs. In this case, the local transformation of the ankle joint can be monitored by tracking both the shin and foot segments, with the allowable ankle motions being the inversion/eversion and plantar/dorsi-flexion.
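Eqs. 3 and 4 translate directly into a few lines of NumPy. The sketch below is illustrative: the helper names are hypothetical, and the test poses are random rigid transforms rather than BVH output. It also checks that applying the same whole-body transform to both segments leaves the extracted joint rotation unchanged, which is why only the relative orientation across the joint is needed.

```python
import numpy as np

def relative_transform(T_parent, T_child):
    """Child pose in the parent frame: inv(T_parent) @ T_child (Eq. 3)."""
    return np.linalg.solve(T_parent, T_child)

def rotation_part(T):
    """Rotation block S @ T @ S.T with S = [I3 | 0] (Eq. 4)."""
    S = np.hstack([np.eye(3), np.zeros((3, 1))])
    return S @ T @ S.T

def random_pose(rng):
    """Hypothetical helper: random rigid transform (rotation via QR, det forced to +1)."""
    Q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
    if np.linalg.det(Q) < 0:
        Q[:, 0] = -Q[:, 0]
    T = np.eye(4)
    T[:3, :3] = Q
    T[:3, 3] = rng.normal(size=3)
    return T

rng = np.random.default_rng(0)
T_forearm = random_pose(rng)        # parent pose in {0}
T_joint = random_pose(rng)          # ground-truth wrist transform
T_hand = T_forearm @ T_joint        # child pose in {0}

R_wrist = rotation_part(relative_transform(T_forearm, T_hand))
print(np.allclose(R_wrist, T_joint[:3, :3]))
```

Using `np.linalg.solve` avoids forming the explicit inverse of the parent transform.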

The BVH articulated body representation is typically expressed in terms of Euler angle-based rotation matrices, as defined in Eq. 2. In this study, however, each such rotation matrix was converted to its equivalent quaternion form to obtain a more concise and numerically stable representation. Thus, the pose characterisation vector for the virtual character model is defined as

| (5) |

where denotes the set of distal joints of the four extremity limbs.
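A minimal sketch of the rotation-matrix-to-quaternion conversion and of assembling a sixteen-element pose characterisation vector (four unit quaternions, one per distal joint) is given below. It uses the standard branch-based (Shepperd-style) conversion, which avoids dividing by a small trace; the joint names and their ordering are hypothetical, since Eq. 5 only fixes the joint set.

```python
import math

Matrix = list[list[float]]
Quat = list[float]  # [w, x, y, z]

def rotm_to_quat(R: Matrix) -> Quat:
    """Convert a 3x3 rotation matrix to a unit quaternion [w, x, y, z]."""
    trace = R[0][0] + R[1][1] + R[2][2]
    if trace > 0.0:
        s = 2.0 * math.sqrt(trace + 1.0)  # s = 4w
        q = [0.25 * s, (R[2][1] - R[1][2]) / s, (R[0][2] - R[2][0]) / s, (R[1][0] - R[0][1]) / s]
    elif R[0][0] > R[1][1] and R[0][0] > R[2][2]:
        s = 2.0 * math.sqrt(1.0 + R[0][0] - R[1][1] - R[2][2])  # s = 4x
        q = [(R[2][1] - R[1][2]) / s, 0.25 * s, (R[0][1] + R[1][0]) / s, (R[0][2] + R[2][0]) / s]
    elif R[1][1] > R[2][2]:
        s = 2.0 * math.sqrt(1.0 + R[1][1] - R[0][0] - R[2][2])  # s = 4y
        q = [(R[0][2] - R[2][0]) / s, (R[0][1] + R[1][0]) / s, 0.25 * s, (R[1][2] + R[2][1]) / s]
    else:
        s = 2.0 * math.sqrt(1.0 + R[2][2] - R[0][0] - R[1][1])  # s = 4z
        q = [(R[1][0] - R[0][1]) / s, (R[0][2] + R[2][0]) / s, (R[1][2] + R[2][1]) / s, 0.25 * s]
    # q and -q encode the same rotation; keep the scalar part non-negative
    return [-c for c in q] if q[0] < 0.0 else q

# Hypothetical joint order for the pose characterisation vector
JOINTS = ("left_wrist", "right_wrist", "left_ankle", "right_ankle")

def pose_vector(joint_rotations: dict[str, Matrix]) -> list[float]:
    """Concatenate the four joint quaternions into one 16-element vector."""
    return [c for joint in JOINTS for c in rotm_to_quat(joint_rotations[joint])]
```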

It is worth noting that the pose characterisation framework proposed in this paper requires no calibration pose and relies on segment-to-segment orientations, which makes the approach more clinically meaningful. This goes beyond the authors' previous approach [34], which required a reference calibration T-pose and tracked only the child segment.

3.4 Wearable Posture Sensors

Some form of wearable technology is required to track the segment-to-segment orientations across the four distal joints. The main requirements for such wearable technology include: (1) multi-segment orientation tracking, (2) a compact size that does not compromise sleep quality, and (3) a low cost that makes it affordable to the public health sector. Most reported works on human motion analysis involving several body segments simply employ multiple standalone wearable sensors, one per segment. In this work, we opt to design a custom-made sensor module (shown in Fig. 4(b)) with dual-segment tracking capability, built around two embedded BNO055 IMU sensors from Bosch Sensortec© (Bosch Sensortec GmbH, Reutlingen, DE). Both IMU sensors are managed by a single ESP32-WROOM-32D microcontroller from Espressif Systems© (Espressif Systems Shanghai Co Ltd, Shanghai, CN), which features Bluetooth connectivity for wireless data transmission. At about in volume for each IMU case, the sensor module is sufficiently slim and small to be worn during sleep. Moreover, all the electronic components used in this design are commercially available, at a total cost of approximately GBP 100. Fig. 4(a) illustrates the on-body placement of these sensor modules, with the parent and child IMU sensors mounted on the last two segments of each extremity limb.

3.5 Intra- and Inter-Sensor Fusion

A sensor fusion algorithm is needed to estimate the attitude of each IMU sensor (intra-sensor fusion), which reflects the orientation of the body segment it is mounted on. Afterwards, an inter-sensor fusion step fuses the two absolute IMU orientations of each wearable sensor module into one segment-to-segment orientation, so that pose characterisation can proceed in a similar way to that described in Section 3.3.

To compensate for the drift inherent to the IMU heading estimates, readings from the magnetometer embedded in the IMU were exploited to provide a stable estimate of the orientation. Herein, the Madgwick filter [40] is employed to fuse the geo-inertial measurements from the IMU sensor, owing to its robust orientation tracking and successful deployment in human motion analysis research [41]. Furthermore, the filter's optimisation procedure has a low computational cost and takes place in the quaternion space, allowing for online and singularity-free attitude estimation. As formulated in Eq. 6, the filter first carries out a vector observation step, which involves iteratively searching for an optimal orientation estimate, defined from the IMU frame to the Earth frame . The validity criterion for the orientation estimate depends on how well it aligns a sensor-measured field vector with some Earth-referenced geophysical quantity .

| (6) |

such that

where the operator denotes quaternion multiplication.
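To make the vector observation objective of Eq. 6 concrete, the sketch below implements the Hamilton product and evaluates the residual between an Earth-referenced field, rotated into the sensor frame in the conjugate-first form of Madgwick's formulation, and the corresponding sensor measurement. Quaternions are stored as [w, x, y, z]; the gravity reference [0, 0, 0, 1] and the function names are assumptions for illustration.

```python
Quat = list[float]  # [w, x, y, z]

def quat_mul(p: Quat, q: Quat) -> Quat:
    """Hamilton product p ⊗ q."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return [pw * qw - px * qx - py * qy - pz * qz,
            pw * qx + px * qw + py * qz - pz * qy,
            pw * qy - px * qz + py * qw + pz * qx,
            pw * qz + px * qy - py * qx + pz * qw]

def quat_conj(q: Quat) -> Quat:
    """Quaternion conjugate q*."""
    return [q[0], -q[1], -q[2], -q[3]]

def observation_residual(q: Quat, d_earth: Quat, s_sensor: list[float]) -> list[float]:
    """Vector part of q* ⊗ d ⊗ q minus the measured field vector s.

    d_earth is the Earth-referenced field as a pure quaternion [0, dx, dy, dz];
    s_sensor is the normalised measurement [sx, sy, sz] in the IMU frame.
    """
    rotated = quat_mul(quat_mul(quat_conj(q), d_earth), q)
    return [rotated[i + 1] - s_sensor[i] for i in range(3)]
```

A zero residual means the candidate orientation explains the measurement exactly; the filter minimises the squared norm of this vector.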

The filter then uses the Jacobian matrix of the vector objective function to determine its gradient , which is later used to define the normalised quaternion estimation error at time index

| (7) |

Geophysical vector observation alone provides a sluggish orientation estimate since it is a memoryless framework and is highly susceptible to sensor noise. As shown in Eqs. 8 and 9, the Madgwick filter produces a smoother orientation estimate through numerically integrating a reliable orientation rate estimate at each descent update step. The orientation rate is the outcome of fusing , weighted by a hyperparameter , with the rate of orientation change derived from the gyroscope measurement vector .
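A compact sketch of one filter update in the spirit of Eqs. 7 to 9 follows. For brevity it is the gyroscope-plus-accelerometer variant only; the magnetometer term that this work relies on for heading is omitted, which is a simplification of this sketch. The closed-form objective and Jacobian are those of the gravity-only case in Madgwick's report, `beta` plays the role of the weighting hyperparameter and `dt` is the sampling period.

```python
import math

Quat = list[float]  # [w, x, y, z]

def quat_mul(p: Quat, q: Quat) -> Quat:
    """Hamilton product p ⊗ q."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return [pw * qw - px * qx - py * qy - pz * qz,
            pw * qx + px * qw + py * qz - pz * qy,
            pw * qy - px * qz + py * qw + pz * qx,
            pw * qz + px * qy - py * qx + pz * qw]

def madgwick_imu_step(q: Quat, gyro, accel, beta: float, dt: float) -> Quat:
    """One gradient-descent update fusing gyroscope (rad/s) and accelerometer data."""
    q0, q1, q2, q3 = q
    # Orientation rate from the gyroscope: 0.5 * q ⊗ [0, wx, wy, wz]
    q_dot = [0.5 * c for c in quat_mul(q, [0.0, *gyro])]
    norm_a = math.sqrt(sum(c * c for c in accel))
    if norm_a > 0.0:
        ax, ay, az = (c / norm_a for c in accel)
        # Objective f = q* ⊗ [0, 0, 0, 1] ⊗ q - a (gravity along Earth z)
        f = [2.0 * (q1 * q3 - q0 * q2) - ax,
             2.0 * (q0 * q1 + q2 * q3) - ay,
             2.0 * (0.5 - q1 * q1 - q2 * q2) - az]
        # Jacobian of f with respect to (q0, q1, q2, q3)
        J = [[-2.0 * q2, 2.0 * q3, -2.0 * q0, 2.0 * q1],
             [2.0 * q1, 2.0 * q0, 2.0 * q3, 2.0 * q2],
             [0.0, -4.0 * q1, -4.0 * q2, 0.0]]
        grad = [sum(J[r][c] * f[r] for r in range(3)) for c in range(4)]
        norm_g = math.sqrt(sum(g * g for g in grad))
        if norm_g > 0.0:
            # Subtract the normalised gradient, weighted by beta
            q_dot = [qd - beta * g / norm_g for qd, g in zip(q_dot, grad)]
    # Numerical integration followed by re-normalisation
    q_new = [qc + qd * dt for qc, qd in zip(q, q_dot)]
    n = math.sqrt(sum(c * c for c in q_new))
    return [c / n for c in q_new]
```

Because the gradient is normalised, each update moves the estimate by at most `beta * dt` towards the accelerometer solution, which is what smooths out the memoryless vector-observation estimate.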

| (8) |

| (9) |

where

As depicted in Fig. 5, the two IMUs and built into each wearable sensor module are attached to the two most distal segments of each limb, respectively. Using the sensor fusion algorithm outlined above, the absolute orientations of both IMUs are first estimated and then fused to determine the IMU-to-IMU orientation as

| (10) |

This quaternion is computed for each extremity limb to approximate the underlying segment-to-segment orientation. Unlike works that require IMU-to-segment misalignment calibration [42, 43], the proposed framework instead aims at fast posture classification using approximate segment orientations. This also benefits the practicality of the proposed system, since calibrating eight IMUs before each use would be impractical.
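The inter-sensor fusion of Eq. 10 amounts to a single quaternion product. The sketch below assumes each absolute estimate describes the IMU frame relative to the Earth frame; if a filter reports the Earth frame relative to the IMU frame instead, both inputs should be conjugated first. The sign canonicalisation is an added convenience rather than something stated in this work.

```python
Quat = list[float]  # [w, x, y, z]

def quat_mul(p: Quat, q: Quat) -> Quat:
    """Hamilton product p ⊗ q."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return [pw * qw - px * qx - py * qy - pz * qz,
            pw * qx + px * qw + py * qz - pz * qy,
            pw * qy - px * qz + py * qw + pz * qx,
            pw * qz + px * qy - py * qx + pz * qw]

def quat_conj(q: Quat) -> Quat:
    """Quaternion conjugate q*."""
    return [q[0], -q[1], -q[2], -q[3]]

def imu_to_imu(q_parent: Quat, q_child: Quat) -> Quat:
    """Child-IMU orientation expressed in the parent-IMU frame: q_parent* ⊗ q_child."""
    q_rel = quat_mul(quat_conj(q_parent), q_child)
    # q and -q are the same rotation; keep a non-negative scalar part
    return [-c for c in q_rel] if q_rel[0] < 0.0 else q_rel
```

With a common Earth reference, the heading of both IMUs cancels in this product, leaving only their mutual orientation.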

Similar to Eq. 5, the pose characterisation vector based on wearable sensor data is defined as

| (11) |

3.6 Postural Data Augmentation

This section describes the data preprocessing stage, in which segment-to-segment orientations are augmented to create a larger dataset better suited to the ML algorithm used for pose classification (see Section 3.7). To begin with, let a generic variable be defined as either or , depending on whether posture tracking takes place in silico or in the real world. Next, we define a collective pose characterisation vector that brings together all twelve sleep postures

| (12) |

where corresponds to the sleep posture.

Based on this definition, serves as a reference dictionary containing the postural cues of the sleep postures included in the presented case study. With only a single observation per posture, however, any classifier, regardless of its type, is prone to over-fitting and poor generalisation. As covered in Section 2, related works record extended sensor data timeseries for each posture, sometimes amounting to several hours or nights' worth of training data. In turn, extended data collection translates into a higher cost of manual data labelling. Moreover, each subject may adopt slightly different sleep postures that are of interest to clinicians, in which case training data collection and labelling must be repeated for each subject. This is an obstacle to the clinical use of wearable-based sleep monitoring solutions.

In this work, data augmentation is a key preprocessing step for balancing the cost of data collection against timeseries classification performance. It is particularly needed in applications where only scarce [44] or class-imbalanced [45] datasets are available. Data augmentation can also yield a more capable ML model by deliberately introducing synthetic samples that enhance the quantity and quality of the training data. When assigned correct labels, synthetic data allow the ML model to explore regions of the input space absent from the real training dataset. This expands the decision boundary of the model, thus lowering the risk of over-fitting [46].

Several families of data augmentation techniques are extensively reviewed in [47, 48], including, but not limited to, pattern mixing, signal decomposition and generative neural networks. However, these techniques require medium-to-large timeseries datasets, so applying any of them directly to our single “snapshots” of postures is not viable. To address this one-shot learning problem, we propose a noise injection-based data augmentation approach that generates timeseries from only a single observation of each posture. The addition of artificial noise helps to overcome the scarcity and bias issues present in the training data, and provides a good compromise between the parametric and subjective aspects of the human pose definition. Another advantage of noise injection is that the noise generation process can be easily modelled, which means that the data augmentation is both editable and invertible. Artificially noised datasets have reportedly led to increased robustness to sensor noise and improved classification performance in real-world applications, including construction equipment activity recognition [49] and meteorological sensor data processing [50].

Nonetheless, simply adding noise to a quaternion leads to a chaotic data augmentation process with nonsensical synthetic samples, as illustrated in Figs. 6(a) and 6(b). Therefore, to generate near-realistic postural data, the quaternion-based pose descriptor is first converted into its corresponding axis-angle representation . As shown in Fig. 7, the axis of rotation is defined in the singularity-free Cartesian space, while the augmentation step is performed in an intermediate spherical coordinate system to obtain a more homogeneous augmented dataset. In particular, is defined as

| (13) |

such that and denote a parametric matrix and vector, respectively, used to transform the axis-angle representation from the spherical space to the Cartesian space, and a generic is defined as

| (14) |

where:

1. Subscript and superscript stand for the child and parent frames, respectively, anchored to either a body segment or an IMU .

2. for all represents a standard-basis vector.

3. and denote the polar and azimuthal angles, respectively, defining a unit axis of rotation in a spherical coordinate system.

4. is the angle of rotation about the defined axis.
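One way to realise the spherical axis-angle parameterisation of Eq. 14 in code is sketched below: a unit quaternion is mapped to the polar angle, azimuthal angle and rotation angle of its axis-angle form, and back. The convention (polar angle measured from the z-axis, azimuth from the x-axis via `atan2`) is a standard choice assumed here; the paper's parametric matrix and vector may encode it differently.

```python
import math

Quat = list[float]  # [w, x, y, z]

def quat_to_spherical(q: Quat) -> tuple[float, float, float]:
    """Unit quaternion -> (polar, azimuth, angle) of its axis-angle form."""
    w, x, y, z = q if q[0] >= 0.0 else [-c for c in q]  # keeps angle in [0, pi]
    angle = 2.0 * math.acos(min(1.0, w))
    s = math.sqrt(max(0.0, 1.0 - w * w))  # sin(angle / 2)
    if s < 1e-12:
        return 0.0, 0.0, 0.0  # null rotation: axis undefined, default to +z
    ex, ey, ez = x / s, y / s, z / s
    polar = math.acos(max(-1.0, min(1.0, ez)))
    azimuth = math.atan2(ey, ex)
    return polar, azimuth, angle

def spherical_to_quat(polar: float, azimuth: float, angle: float) -> Quat:
    """(polar, azimuth, angle) -> unit quaternion via the Cartesian unit axis."""
    axis = (math.sin(polar) * math.cos(azimuth),
            math.sin(polar) * math.sin(azimuth),
            math.cos(polar))
    half = 0.5 * angle
    return [math.cos(half)] + [math.sin(half) * a for a in axis]
```

Because the axis is rebuilt from two angles, any perturbation of the polar and azimuthal angles still yields a unit axis, which is what keeps augmented samples valid rotations.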

A vectorisation of is performed such that for convenience of notation. Then, an augmented dictionary variable, is defined as the collective pose characterisation vector timeseries obtained through the augmentation of

| (15) |

where represents the time index vector for the augmented timeseries.

For each arbitrary time index , we sample two Gaussian-distributed noise terms, and , to augment the axis-angle representation outlined in Eq. 14 as follows

| (16) |

where is used to augment and . The symmetric covariance matrix, is parameterised by a variance

| (17) |

and is used to augment and is parameterised by a variance .
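The noise-injection step of Eqs. 15 to 17 might be sketched as follows. The sketch assumes additive noise, an isotropic covariance (the axis variance on the diagonal for both the polar and azimuthal angles), and pose inputs already expressed as (polar, azimuth, angle) triples for the four joints; the function names and the `seed` argument are illustrative. The real observation is kept as the first sample, with the noisy copies appended after it.

```python
import math
import random

Triple = tuple[float, float, float]  # (polar, azimuth, angle) for one joint

def augment_pose(pose: list[Triple], n_new: int, var_axis: float,
                 var_angle: float, seed: int = 0) -> list[list[Triple]]:
    """Return the real observation followed by n_new noisy copies.

    var_axis: variance of the (assumed isotropic) Gaussian noise on the polar
    and azimuthal angles; var_angle: variance of the noise on the rotation angle.
    """
    rng = random.Random(seed)
    sd_axis, sd_angle = math.sqrt(var_axis), math.sqrt(var_angle)
    series = [list(pose)]
    for _ in range(n_new):
        series.append([(polar + rng.gauss(0.0, sd_axis),
                        azimuth + rng.gauss(0.0, sd_axis),
                        angle + rng.gauss(0.0, sd_angle))
                       for polar, azimuth, angle in pose])
    return series

def triple_to_quat(polar: float, azimuth: float, angle: float) -> list[float]:
    """Map an augmented spherical axis-angle triple back to a unit quaternion."""
    half = 0.5 * angle
    axis = (math.sin(polar) * math.cos(azimuth),
            math.sin(polar) * math.sin(azimuth),
            math.cos(polar))
    return [math.cos(half)] + [math.sin(half) * a for a in axis]
```

Setting both variances to zero reproduces the single observation, which makes the two hyperparameters easy to sweep independently.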

The strength of the proposed data augmentation technique is that it has a single controllable hyperparameter for each of the two main elements defining a static orientation: and for the axis and angle of rotation, respectively. Assigning different values to both hyperparameters provides varying data augmentation characteristics, as per the application requirements. Figs. 6(c) and 6(d) illustrate one possible augmentation result using the proposed method. The carefully noised augmented timeseries resembles the output signals of the microelectromechanical systems (MEMS) sensors found in many of today’s commercial IMUs. Moreover, the added noise can be intentionally exaggerated to boost the robustness of trained classifiers.

3.7 Sleep Posture Classification

The definition of the collective pose characterisation vector timeseries is context-dependent; it can be either or to denote training and testing timeseries, respectively. Herein, refers to the time index vector or to indicate real or augmented timeseries, respectively. By definition, a classifier is required such that denotes the posture label. For clarity of notation, a generic could either be a training or testing , which corresponds to and respectively regardless of whether a real or augmented time index is considered.

We leverage an error-correcting output codes (ECOC) model [51, 52] to achieve multi-class classification by aggregating binary classifiers, . The ECOC framework begins with an encoding step in which an encoding matrix, , dictates the class memberships for each such that these values denote negative, ignored and positive classes, respectively. The elements of are denoted by , corresponding to an arbitrary class and binary classifier . Depending on the adopted encoding strategy, the number of employed binary classifiers and their collective generalisation capability may vary. Herein, we use the one-against-one encoding technique, which explores all possible pairs of classes , as this was found to have good generalisation capability without compromising computational efficiency [53].
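The one-against-one encoding can be sketched as below: for K classes it yields a ternary matrix with K(K-1)/2 columns, each pitting one positive class (+1) against one negative class (-1) while all other classes are ignored (0). Which class of a pair is labelled positive is an arbitrary convention assumed here; for the twelve postures the matrix has 66 columns, i.e. 66 binary learners.

```python
from itertools import combinations

def one_vs_one_coding(n_classes: int) -> list[list[int]]:
    """Ternary ECOC coding matrix (rows: classes, columns: binary learners)."""
    pairs = list(combinations(range(n_classes), 2))
    coding = [[0] * len(pairs) for _ in range(n_classes)]
    for learner, (pos, neg) in enumerate(pairs):
        coding[pos][learner] = 1   # positive class for this learner
        coding[neg][learner] = -1  # negative class; all other classes stay 0 (ignored)
    return coding
```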

Once all binary classifiers are fully trained, the ECOC model relies on a decoding step to map the output of to the corresponding class label. To accomplish this, a base of reference codewords is created to define the aggregate outputs from all classifiers for each class. The ECOC model then compares a given test codeword against each of the reference codewords to determine the most likely class. Different decoding strategies have been proposed in the literature, the most popular being (1) distance-based, (2) probabilistic-based and (3) pattern space transformation techniques [54]. In this work, the pairwise Hamming distance is used as the loss measure to estimate the most likely class label for , i.e.

| (18) |

where . In this context, serves as the groundtruth binary label for given . A similar expression can be formulated for and too.
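A minimal sketch of loss-based decoding with a Hamming-type binary loss follows. Each learner contributes (1 - sign(m * s)) / 2 when the class takes part in it (m ≠ 0) and nothing otherwise, and the predicted class minimises the summed loss. This is one common way to instantiate Eq. 18; its exact normalisation may differ. A three-class coding matrix is rebuilt inline so that the snippet runs on its own.

```python
from itertools import combinations

def one_vs_one_coding(n_classes: int) -> list[list[int]]:
    """Ternary one-against-one coding matrix (+1 positive, -1 negative, 0 ignored)."""
    pairs = list(combinations(range(n_classes), 2))
    coding = [[0] * len(pairs) for _ in range(n_classes)]
    for learner, (pos, neg) in enumerate(pairs):
        coding[pos][learner], coding[neg][learner] = 1, -1
    return coding

def sign(v: float) -> int:
    return (v > 0) - (v < 0)

def hamming_losses(coding: list[list[int]], scores: list[float]) -> list[float]:
    """Summed Hamming loss of each class codeword against the learners' scores."""
    return [sum(abs(m) * (1 - sign(m * s)) / 2 for m, s in zip(row, scores)) for row in coding]

def ecoc_predict(coding: list[list[int]], scores: list[float]) -> int:
    """Index of the class whose codeword incurs the smallest loss."""
    losses = hamming_losses(coding, scores)
    return min(range(len(losses)), key=losses.__getitem__)
```

For three classes with learner scores [-1.0, -0.5, 2.0], the losses are [2.0, 0.0, 1.0], so class 1 is predicted.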

Regarding the binary classifiers, we apply an ensemble of soft margin SVM algorithms to obtained from the one-shot learning phase. The framework of this algorithm uses two hyperparameters: (1) a slack variable to tolerate minimal misclassifications owing to outliers in the training dataset, and (2) a scalar to control the smoothness of the classifier’s decision boundary. The standard optimisation problem for each is outlined in Eq. 19, where only one positive and one negative class are selected according to the one-against-one encoding defined by . While searching for a solution hyperplane, parameterised by a weight vector , a Gaussian kernel of hyperparameter spread is applied to the support vectors to enhance the separability of classes [55]. Finally, a Bayesian optimisation algorithm [56] is used to find the optimal values of the aforementioned hyperparameters:

| (19) |

| s.t. | |||
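Training the soft margin SVM of Eq. 19 and tuning it by Bayesian optimisation are best left to an established solver, but the decision function that a trained binary learner evaluates can be sketched in a few lines. The snippet assumes the dual form f(x) = sum_i alpha_i y_i K(x_i, x) + b and a Gaussian kernel written with a kernel scale, K(x, z) = exp(-||(x - z) / scale||^2); other spread parameterisations (e.g. with 2 sigma^2 in the denominator) are equally common. The support vectors and coefficients in the check are toy values, not results from this study.

```python
import math

Vector = list[float]

def gaussian_kernel(x: Vector, z: Vector, scale: float) -> float:
    """Gaussian (RBF) kernel with a kernel-scale parameter."""
    return math.exp(-sum(((a - b) / scale) ** 2 for a, b in zip(x, z)))

def svm_decision(x: Vector, support_vectors: list[Vector], labels: list[int],
                 alphas: list[float], bias: float, scale: float) -> float:
    """Dual-form decision value: sum_i alpha_i * y_i * K(sv_i, x) + b."""
    return sum(a * y * gaussian_kernel(sv, x, scale)
               for sv, y, a in zip(support_vectors, labels, alphas)) + bias

def svm_predict(x: Vector, *model) -> int:
    """Binary label (+1 or -1) from the sign of the decision value."""
    return 1 if svm_decision(x, *model) >= 0.0 else -1
```

A larger kernel scale widens each support vector's influence and smooths the decision boundary, which is why it is tuned jointly with the misclassification penalty.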

4 Experimental Setup

This section describes the experimental design and setup for implementing the posture learning framework reported in Section 3, for both the virtual and the human participant pipelines, from data collection through to performance evaluation and interpretation.

4.1 Virtual Sleep Pipeline

As mentioned in Section 3.2, the in silico sleep simulation is built around a motion sequence animated in Blender© by manually keyframing each sleeping pose, as depicted in Fig. 2(a). The motion sequence holds each pose for ten consecutive frames before making a ten-frame transition to the next pose, making the whole animation 230 frames long in total (twelve ten-frame holds plus eleven ten-frame transitions). The animation relies on linear interpolation to fill in the gaps between consecutive keyframes.
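The frame budget above can be checked with a short sketch that lays out hold and transition segments and linearly interpolates a keyframed channel between two keyframes. Placing each pose's keyframes at the first and last frames of its hold is an assumption about how the timeline was keyed.

```python
def keyframe_schedule(n_poses: int, hold: int = 10, transition: int = 10):
    """Return the (first, last) frame of each held pose and the total frame count."""
    holds, frame = [], 0
    for k in range(n_poses):
        holds.append((frame, frame + hold - 1))
        frame += hold
        if k < n_poses - 1:  # no transition after the final pose
            frame += transition
    return holds, frame

def lerp_channel(frame: int, f0: int, v0: float, f1: int, v1: float) -> float:
    """Linearly interpolate a keyframed channel value between frames f0 and f1."""
    return v0 + (v1 - v0) * (frame - f0) / (f1 - f0)
```

For twelve poses, the last pose is held over frames 220 to 229, giving 230 frames in total.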

The motion sequence is then exported from Blender© in the BVH file format and imported into the MATLAB© (The MathWorks, Massachusetts, US) environment via a bespoke parser script. The parser creates a data structure to allow for the reconstruction of throughout the motion sequence as shown in Fig. 8. Another pose characterisation script then extracts at each keyframed sleep posture, forming the pose characterisation vector to be used in the one-shot learning scheme explained in Section 4.3.

4.2 Participant Study Pipeline

An experimental setup was built at an outdoor university facility to provide ideal data collection conditions, avoiding measurement anomalies due to, for example, magnetometer interference from the building environment. Prior to the pilot experiment, all IMU sensors were calibrated as described in [57, 58] to estimate and reduce errors owing to constant bias, scale factors, cross-axis sensitivity and response nonlinearity. The protocol was approved by The University of Liverpool Research Ethics Committee (review reference: 9850).

The microcontroller built into each wearable sensor samples both IMUs uniformly at a rate of Hz. For optimal multi-sensor data transmission, dual-IMU data packets are simultaneously sent over Bluetooth from all four wearable sensor modules (clients) to the localhost server running a Python script. All data packets are timestamped using a monotonic digital clock with microsecond resolution. After the data collection session and sensor fusion, these timestamps are used to synchronise the quad-sensor relative orientations under one unified time vector via linear interpolation, as illustrated in Fig. 1.
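The timestamp-based synchronisation can be sketched as per-channel linear interpolation onto a common time vector, returning NaN outside each stream's recorded span (the same convention as the default linear mode of MATLAB's `interp1`). The stream layout and function names are assumptions, and timestamps are taken to be strictly increasing, as a monotonic clock provides. Component-wise interpolation of quaternion channels generally leaves them slightly off unit norm, so a re-normalisation step (not shown) may be needed.

```python
import bisect
import math

def interp_linear(t_src: list[float], v_src: list[float], t_query: list[float]) -> list[float]:
    """Linear interpolation of one channel; NaN outside [t_src[0], t_src[-1]]."""
    out = []
    for t in t_query:
        if t < t_src[0] or t > t_src[-1]:
            out.append(math.nan)
            continue
        i = bisect.bisect_right(t_src, t) - 1
        if i >= len(t_src) - 1:  # query equals the last timestamp
            out.append(float(v_src[-1]))
            continue
        w = (t - t_src[i]) / (t_src[i + 1] - t_src[i])
        out.append(v_src[i] + w * (v_src[i + 1] - v_src[i]))
    return out

def synchronise(streams: dict, t_unified: list[float]) -> dict:
    """streams maps a sensor id to (timestamps_us, [channel_values, ...])."""
    return {sid: [interp_linear(ts, ch, t_unified) for ch in channels]
            for sid, (ts, channels) in streams.items()}
```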

Both IMU sensors were placed on each extremity limb such that their axes were approximately aligned with the distal joint axes, so as to gauge nearly accurate segment-to-segment orientations. As depicted in Fig. 5, the -axis and -axis of both IMUs were aligned as closely as possible with the flexion/extension and ulnar/radial deviation axes of the wrist joint when the hand is parallel to the forearm. Both IMUs were positioned as close as possible to the wrist joint to reduce artefacts from muscle contractions and skin movements, and to avoid interference from elbow rotation. Similar considerations were taken into account for the placement of the lower limb sensor modules.

A leaflet containing pictures of the sleep postures was given to the participant to assist them in replicating the desired poses before each sample was recorded. As portrayed in Fig. 9, each sleep posture is recorded twice, once in each of two trial sets. To ensure that the postural data resemble those of a realistic sleep scenario, we collect statistically independent posture samples using a random pose shuffling technique throughout each trial set. In addition, to account for the participant gaining familiarity with pose replication over the course of the experiment, we adopt a randomised train/test trial assignment strategy.

At the server back end, all received sensor data are immediately logged into a comma-separated values (CSV) file for subsequent import into MATLAB©. Therein, a sensor fusion script applies Madgwick filtering to each IMU's data channels to estimate its orientation, given a unit quaternion as the initial orientation estimate and learning rate . All IMU orientations are then collectively fed into a pose characterisation script, which extracts belonging to each train-labelled posture recording, and from test-labelled trials. For each training posture trial, only one randomly selected sample from the quad-sensor relative orientation timeseries is used to identify for that pose. The resultant is later used in the one-shot learning described in Section 4.3. When constructing the timeseries, the time vector has a length , where is the timeseries length of any test-labelled posture recording. Thus, can be mathematically written as follows:

| (20) |

such that is the relative complement of in :

and denotes a sixteen-column matrix of Not a Number (NaN) elements to account for any test-labelled posture recording with .
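A minimal sketch of the padding described around Eq. 20 follows: test recordings of unequal length are brought to a common length by appending rows of NaN, each row holding the sixteen quaternion components of the four joints. It also shows the random selection of the single training sample from a train-labelled recording. Taking the common length to be that of the longest test recording is an assumption consistent with the NaN padding, and the function names are hypothetical.

```python
import math
import random

Row = list[float]  # 16 values: four joint quaternions

def pad_recordings(recordings: list[list[Row]], n_cols: int = 16) -> list[list[Row]]:
    """Pad every recording with NaN rows up to the longest recording's length."""
    n_max = max(len(rec) for rec in recordings)
    return [[list(row) for row in rec] + [[math.nan] * n_cols for _ in range(n_max - len(rec))]
            for rec in recordings]

def pick_training_sample(recording: list[Row], seed: int = 0) -> Row:
    """Pick one random sample of a train-labelled recording as the one-shot observation."""
    return list(random.Random(seed).choice(recording))
```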

4.3 One-Shot Learning

In scenarios where only scarce data are available, data augmentation is necessary. As outlined in Section 3.6, we propose a one-shot learning method for modelling human sleep postures given a single observation per pose. The usage of data augmentation varies slightly depending on whether the virtual or human participant pipeline is considered.

For the in silico sleep simulation, the motion sequence only provides , meaning that separate training and testing timeseries are unavailable for ML. In this case, data augmentation is employed twice to generate the training and testing timeseries, and , respectively. In addition to the single posture observation, new augmented samples are appended to the timeseries, giving a total of training samples per sleep posture. Additional augmented samples are designated for .

With regard to the human participant experiment, since is obtained from the test-labelled trial recordings, only one timeseries, , needs to be generated for classifier training. Hence, for each posture, augmented samples are appended to the single observation, giving a total of training samples per sleep posture.

The timeseries augmentation step described in Section 3.6 used the same range of hyperparameter settings, , for both the virtual and human participant experiments. A grid of discrete points in the hyperparameter space was constructed where and , yielding 36 different data augmentation settings. For each pair of hyperparameters, the respective training and testing timeseries datasets are used to train and test the posture classification algorithm outlined in Section 3.7. For the soft margin SVM problem, the Bayesian optimisation algorithm carries out an iterative search ( iterations) for the optimal values of the two hyperparameters and over the range .

4.4 Performance Evaluation

Two main metrics are used to evaluate posture classification performance: the accuracy and the F1 score . The accuracy is the ratio of correct classifications to the total number of testing samples, and is reliable when the testing dataset is evenly distributed, as is the case in the virtual sleep experiment. For class-imbalanced datasets, such as , the F1 score offers a less biased assessment of model performance by taking the harmonic mean of precision and recall [59]. To mitigate the effect of a skewed class distribution, we employ the macro-averaged F1 score expressed in Eq. 21, which computes all class-specific scores independently and then takes their unweighted arithmetic mean.

| (21) |

such that

and , and correspond to the true positives, false positives and false negatives, respectively, of a given arbitrary posture class.
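The accuracy and the macro-averaged F1 score of Eq. 21 can be computed as sketched below. Each class score uses the equivalent form 2TP / (2TP + FP + FN); when an explicit class list is passed (e.g. all twelve postures) and a class has neither support nor predictions, it is scored 0 here by convention, which is an assumption.

```python
def accuracy(y_true: list, y_pred: list) -> float:
    """Fraction of correctly classified samples."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def macro_f1(y_true: list, y_pred: list, classes=None) -> float:
    """Unweighted mean of per-class F1 = 2TP / (2TP + FP + FN)."""
    if classes is None:
        classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)  # empty class scored 0 (assumption)
    return sum(scores) / len(scores)
```

For true labels [0, 0, 1, 1, 2, 2] and predictions [0, 1, 1, 1, 2, 0], the per-class F1 scores are 0.5, 0.8 and 2/3, so the macro F1 is 59/90 (about 0.656) while the accuracy is 4/6.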

Additionally, all performance evaluation experiments are repeated ten times, and the mean and standard deviation of both metrics indicate how effectively the Bayesian optimisation algorithm solves for the optimal SVM hyperparameters.

4.5 Performance Interpretation

The metrics described in Section 4.4 are used to monitor, measure and compare the performance of one or more models during the training and testing phases. To build confidence in deploying ML algorithms in real world applications, additional interpretation methods need to be created to allow human users (e.g. clinicians) to comprehend and trust the outputs and decisions made available by these algorithms. Ideally, these methods are expected to unravel the reasoning behind ML algorithms, and be able to explain their cases of success and failure.

Therefore, we herein present two approaches to lend more explainability to the posture learning algorithm. These approaches are employed to explore any interesting data trends, and the findings are then used to interpret the model’s posture inference.

The first, visualisation-based approach utilises uniform manifold approximation and projection (UMAP) [60] to produce a two-dimensional (2D) force-directed graph of high-dimensional datasets, such as and . Dimensionality reduction has been successfully applied to visualise data in many domains, including human motion analysis [36], pedagogical research [61] and speaker recognition [62]. In this work, UMAP facilitates the visualisation of postural observations of the same posture (intra-class distribution) as well as across different postures (inter-class distribution). Such data analysis could provide insights into the research methods (e.g. assessing the added value of data augmentation) and into the problem as a whole (e.g. estimating human postural variability). The visualisation software was based on a MATLAB implementation of UMAP [63].

Although UMAP is known for its capability to preserve the local and global data structures in , it does not guarantee a faithful reconstruction of the actual cluster sizes and inter-cluster distances. Therefore, further interpretation tools, perhaps metric-based, are required for a fine-resolution analysis.

Localisation and pose estimation approaches often use quaternions for tracking the orientation of some target asset. Several works reported the use of the angular offset , expressed in Eq. 22, between any two quaternions and as a common metric to assess the (dis)similarity between orientations [64, 65, 66]:

| (22) |

where represents the residual orientation error between and , and is an operation to extract the scalar term of .
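Eq. 22 is commonly computed as sketched below: the residual quaternion between the two orientations is formed, and the rotation angle is recovered from the absolute value of its scalar part, which makes the result invariant to the q / -q sign ambiguity. The residual ordering q1* ⊗ q2 is an assumption; for the angle alone, the ordering does not change the result, since the scalar part equals the four-component dot product of q1 and q2.

```python
import math

Quat = list[float]  # [w, x, y, z]

def quat_mul(p: Quat, q: Quat) -> Quat:
    """Hamilton product p ⊗ q."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return [pw * qw - px * qx - py * qy - pz * qz,
            pw * qx + px * qw + py * qz - pz * qy,
            pw * qy - px * qz + py * qw + pz * qx,
            pw * qz + px * qy - py * qx + pz * qw]

def angular_offset(q1: Quat, q2: Quat) -> float:
    """Rotation angle (radians, in [0, pi]) of the residual q1* ⊗ q2."""
    residual = quat_mul([q1[0], -q1[1], -q1[2], -q1[3]], q2)
    return 2.0 * math.acos(min(1.0, abs(residual[0])))
```

Note that the residual's axis is discarded here, which is exactly the limitation discussed next.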

Such an error metric can be used to fuse different orientation estimates into one more robust estimate, or to compare different estimation techniques. Although useful for some applications, it completely overlooks the axis of rotation error in . For the purpose of this paper, it is essential to identify where any postural discrepancies or overlaps originate: are they due to the angle, the axis or both components of the extremity limb orientations? Such information can be used to interpret the model’s perception, and potentially enable clinicians to make evidence-backed future changes to the sleep analysis problem itself, such as inserting or removing postures, or altering the pose characterisation method.

Therefore, we propose a second performance interpretation approach empowered by a hybrid metric to evaluate the (dis)similarity between multiple posture observations, fusing the axes similarity with the angles similarity . Suppose we have two arbitrary postural observations and , then can be defined as

| (23) |

where

The quadruple axes similarity is captured in using the vector dot product of and , whereas computes a normalised similarity measure based on the total absolute angle error between and . Each of and is defined and scored out of four (i.e. a full score dictates ), giving a total similarity score out of eight for .
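A sketch of the hybrid metric of Eq. 23 follows. The axes term sums the dot products of corresponding unit axes, as described above. The exact normalisation of the angle term is not recoverable from the text, so the version below, which scores each joint as 1 - |angle error| / pi for rotation angles in [0, pi], is a hypothetical choice that respects the stated out-of-four range.

```python
import math

JointObs = tuple[tuple[float, float, float], float]  # (unit axis, angle in [0, pi])

def hybrid_similarity(obs_a: list[JointObs], obs_b: list[JointObs]) -> tuple[float, float, float]:
    """Return (axes similarity, angles similarity, total) for two four-joint postures."""
    s_axis = sum(sum(u * v for u, v in zip(ax_a, ax_b))
                 for (ax_a, _), (ax_b, _) in zip(obs_a, obs_b))
    # Hypothetical normalisation: each joint contributes 1 - |angle error| / pi
    s_angle = sum(1.0 - abs(th_a - th_b) / math.pi
                  for (_, th_a), (_, th_b) in zip(obs_a, obs_b))
    return s_axis, s_angle, s_axis + s_angle
```

Keeping the two terms separate is what allows a discrepancy to be attributed to the axes, the angles or both.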

5 Results and Discussion

This section presents the results obtained following the proposed experimental methods and protocols covered in Sections 3 and 4. The discussion first sheds light on the role data augmentation plays in both the in silico (Section 5.1) and the human participant posture analysis pipelines (Section 5.2). Thereafter, further performance interpretation uncovers qualitative and quantitative insights on sleep postures and the classification problem as a whole. A comparison of the results obtained with the proposed approach and the state-of-the-art available in the literature is reported afterwards in Section 5.3.

5.1 Virtual Sleep Experiment

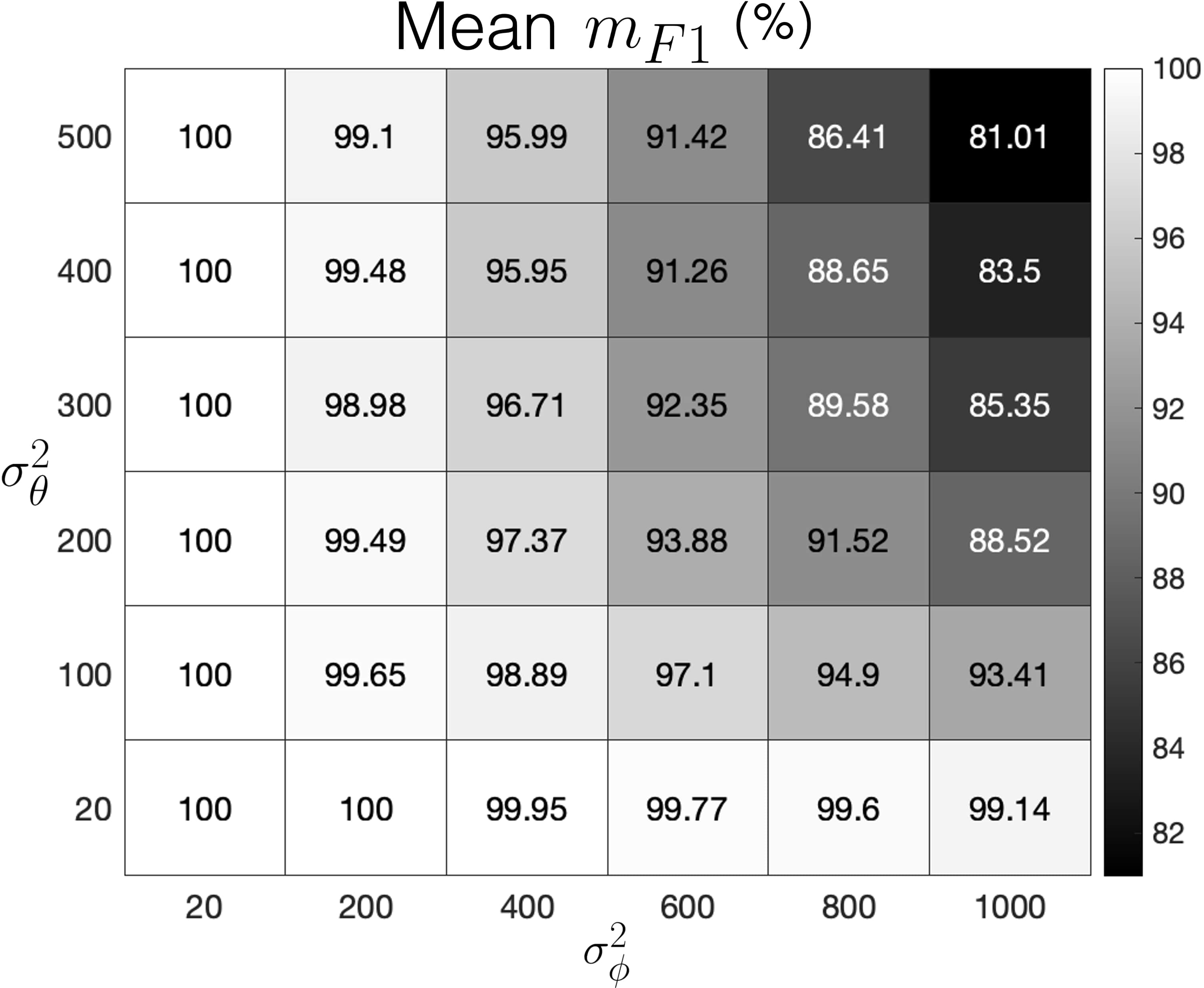

The in silico sleep posture learning pipeline operates on augmented posture datasets in both the training and testing phases. The same data augmentation hyperparameters ( and ) are shared by and , so the posture classification model is tested on postural observations whose level of variability is known a priori. As presented in Section 4.3, this evaluation is conducted repeatedly at different levels of postural variability, as dictated by the hyperparameter settings, and , in . Therefore, the results obtained from the in silico pipeline indicate how sensitive the posture learning framework is to variations in postural observations.

Fig. 10 shows the sleep posture classification performance for each augmentation setting in . Since all posture classes are equally weighted in and , we only show the mean and standard deviation of , as they are almost identical to those of . At the bottom left corner of , where the level of injected noise is lowest, , a perfect score with zero standard deviation is attained. At the opposite corner, where , exaggerated noise injection poses a greater challenge to the classification task; nevertheless, the mean remains above with a small standard deviation.

The mean heat map can be used to study the effect of each augmentation hyperparameter. When is below , the gradual increase of barely affects the classification performance. On the other hand, increasing tends to have more influence on the performance when is below . Moving diagonally along and near the top right corner, the influence of stepping up overtakes that of . Overall, the results obtained through the virtual experiment suggest that the proposed sleep posture learning framework is robust to mild-to-extreme variations in postural observations.

5.2 Participant Pilot Experiment

The participant study pipeline utilises one-shot learning only during the training phase, then validates the resultant trained model on “unseen” real timeseries. Therefore, some level of discrepancy (mismatch) is present between the augmented training dataset and the test-labelled posture recordings. Consequently, the participant study complements the virtual experiment by exploring what data augmentation offers to sleep posture learning when an unknown discrepancy exists between and .

Fig. 11 shows the results obtained via the proposed sleep posture learning framework. Owing to the class-imbalanced , both and scores are reported. It is evident that the data augmentation settings have a substantial influence over the classification performance, with ranging roughly between . Given mild noise injection at , the mean score was found to be .

Examining the heat map of the human participant study gives a good picture of how augmentation hyperparameter tuning influences the classification performance. In the virtual experiment, each classifier was trained and tested on augmented observations sharing the same postural variability. For the participant study, different data augmentation settings are used to pre-train multiple classifier models that are later tested on the same testing posture dataset, , with an unknown train-test pose discrepancy. A good data augmentation setting would then be one that produces an augmented training posture dataset whose variability level is close to the actual train-test pose discrepancy. This reasoning can inform recommendations for tuning the data augmentation hyperparameters.

To facilitate understanding, let us subdivide in Fig. 11 into three subgrids annotated by , and to study the effect of different augmentation settings on the classification performance. Subgrid shows that angle-dominant augmentation yields performance metrics similar to those of . For subgrid , the performance follows a falling trend ( as low as ) in response to augmenting the axes and angles of rotation simultaneously. Finally, subgrid showcases further performance enhancement brought by axis-dominant augmentation, boosting to about . Augmenting the axes may be more useful because environmental objects (e.g. mattress and pillow) constrain joint rotations, so that variation in sleep postures stems mainly from deviations in the joint axes of rotation. Consequently, subgrid is recommended for data augmentation, specifically given .

To further understand how axis-dominant augmentation can contribute up to gain in performance compared to other augmentation settings, we use the UMAP-empowered data visualisation described in Section 4.5. Fig. 12 reports for two different scenarios: mild noise injection at (Fig. 12(a)), and optimal axis-dominant augmentation at (Fig. 12(b)). Under mild noise injection, Fig. 12(a) shows large discrepancies between training and testing observations across most sleep postures, as reflected by the sparse distribution of scattered clusters of observations. This explains why, in the absence of a sufficiently large dataset, satisfactory posture classification performance is hard to achieve. On the other hand, Fig. 12(b) showcases the effectiveness of axis-dominant augmentation in bringing structure to the data distribution, as the training and testing observations of each posture are located in close proximity. This classifier-friendly data distribution underlies the significant rise in performance and robustness to postural discrepancies compared to the mild augmentation case. Additionally, it is noteworthy that overlaps emerged between certain postures, as in the annotated regions , and .

The hybrid metric proposed in Section 4.5 can also be used to further understand the intra-posture similarities between: (i) , (ii) , and (iii) . Fig. 13 presents the mean with and exhausting all combinations of posture-specific (augmented) training and testing observations given the mild augmentation case . Remarkably, the metric reveals postural similarities that UMAP did not capture in Fig. 12(a). Sifting data for such correlations and trends is of great significance to researchers and clinicians in rethinking human postural analysis, for instance, to evaluate the efficacy of pose characterisation methods. Another possible use of this map is to examine posture definitions and confirm that each posture is parametrically and subjectively distinct from the others before including it in a study.

Fig. 14(a) shows the confusion matrix given the optimal augmentation setting: . The SVM-ECOC model achieves 100% classification accuracy on testing observations of all postures except , demonstrating satisfactory robustness to the postural overlaps outlined in Figs. 12(b) and 13. Such overlaps were viewed as a challenge in similar studies, even though these works considered no more than eight postures of standard-to-moderate complexity (see, for example, [33]). To understand why the model confuses with , we conduct a -based similarity assessment focused specifically on this misclassification, shown in Fig. 14(b). Since the model relies on augmented training data to handle unseen testing observations, we compare against all , where denotes the mean in the time domain. Fig. 14(b) reveals a small difference in between and . Moreover, the scores of both and indicate only a moderate similarity level of around out of , with no clear winner. Recalling region from Fig. 12(b), the relatively large train-to-test distance for confirms that the participant was (unintentionally) inconsistent in replicating that posture during data collection, which again explains the misclassification of . Further inspection of the root cause of this discrepancy is presented in Fig. 15, which shows that the participant's mean orientation differed considerably between the train- and test-labelled recordings of . Such inexact posture recreation by participants is an occasional challenge inherent to similar works, as in [32].
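The shift in mean orientation between train- and test-labelled recordings, as in Fig. 15, could be quantified along the following lines. This is a minimal sketch, assuming that segment-to-segment orientations are stored as unit quaternions (w, x, y, z). The sign-aligned, re-normalised average used for the time-domain mean is our own choice of approximation, valid for tightly clustered rotations such as a held static posture, and is not necessarily how the paper computes its mean. The helpers `quat_mean`, `quat_angle_deg` and `about_z` are hypothetical, and the sample angles are made up.

```python
import math


def quat_mean(quats):
    """Approximate mean of tightly clustered unit quaternions (w, x, y, z).

    Each quaternion is sign-aligned with the first (q and -q encode the same
    rotation), the components are summed, and the sum is re-normalised.
    """
    ref = quats[0]
    acc = [0.0, 0.0, 0.0, 0.0]
    for q in quats:
        sign = 1.0 if sum(a * b for a, b in zip(q, ref)) >= 0.0 else -1.0
        for i in range(4):
            acc[i] += sign * q[i]
    norm = math.sqrt(sum(c * c for c in acc))
    return tuple(c / norm for c in acc)


def quat_angle_deg(q1, q2):
    """Smallest rotation angle, in degrees, that takes orientation q1 to q2."""
    dot = abs(sum(a * b for a, b in zip(q1, q2)))
    return math.degrees(2.0 * math.acos(min(1.0, dot)))


def about_z(deg):
    """Unit quaternion for a rotation of `deg` degrees about the z axis."""
    half = math.radians(deg) / 2.0
    return (math.cos(half), 0.0, 0.0, math.sin(half))


# Usage with made-up samples: the 'train' recording hovers around 30 degrees,
# the 'test' recording around 75 degrees, so the mean orientations differ by 45.
train = [about_z(d) for d in (28.0, 30.0, 32.0)]
test = [about_z(d) for d in (73.0, 75.0, 77.0)]
print(round(quat_angle_deg(quat_mean(train), quat_mean(test)), 1))  # prints 45.0
```

Taking the absolute value of the quaternion dot product makes the distance insensitive to the double cover (q and -q), which matters when sensor-fusion outputs flip sign between recordings.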

For comparison, Fig. 14(c) shows the result of the same assessment as in Fig. 14(b) but with . Fig. 14(c) reveals an uncertainty-free scenario where has a similarity metric of about out of . Therefore, the metric can be regarded as a confidence measure associated with the output posture label, indicating how far the system's output can be trusted at any instant.

Interestingly, Figs. 14(b) and 14(c) show that exhibit more acute variations than . This explains why axis-dominant augmentation improves performance more than angle-dominant augmentation does. This observation also reflects the nature of in-bed postural analysis, as environmental constraints inhibit the mobility of joints, causing variation to take place mostly along the axial component.

For reference, posture classification using only the real training data available was conducted to directly evaluate the simulation-to-real (Sim2Real) gap. In this case, training of the classification model is not limited to one observation per posture (one shot). Instead, the SVM-ECOC model leverages the whole length of the train-labelled posture recordings and utilises all observed segment-to-segment orientations for posture modelling. Over 10 repeated train-test runs, the average classification accuracy of the model on was around . The macro-averaged was , which means the Sim2Real gap is to . This chance-level accuracy reveals poor generalisation performance and justifies the need for a posture learning framework that better deals with data insufficiency, which is the aim of this paper. The proposed framework indeed boosts by more than 20% out of the box, before the data augmentation hyperparameters are fine-tuned.
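The accuracy and macro-averaged figures discussed above are both derived from a confusion matrix such as the one in Fig. 14(a). A minimal sketch of that arithmetic follows. The particular macro-averaged metric is not recoverable from this text, so F1 is used here only as a common example. The function names are hypothetical and the toy matrix is illustrative, not data from this study.

```python
def accuracy(cm):
    """Overall accuracy from a confusion matrix cm[true_class][predicted_class]."""
    total = sum(sum(row) for row in cm)
    return sum(cm[k][k] for k in range(len(cm))) / total


def per_class_recall(cm):
    """Fraction of each class's test observations that were labelled correctly."""
    return [row[k] / sum(row) if sum(row) else 0.0 for k, row in enumerate(cm)]


def macro_f1(cm):
    """Unweighted mean of per-class F1 scores, so every class counts equally."""
    n = len(cm)
    scores = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, truly another class
        fn = sum(cm[k]) - tp                        # truly k, predicted another class
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / n


# Toy 3-class example (not data from this study): class 2 is always
# predicted as class 1, mirroring a single confused posture pair.
cm = [[1, 0, 0],
      [0, 1, 0],
      [0, 1, 0]]
print(accuracy(cm), per_class_recall(cm), macro_f1(cm))
```

Because a macro average weights every class equally, one persistently confused posture lowers it more visibly than it lowers overall accuracy when class counts are unbalanced.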

5.3 Comparison with state of the art

To the best of the authors' knowledge, the presented work is the most advanced sleep posture analysis in the literature, with twelve complex postures representing non-standard postural variations common during sleep. Similar works covered no more than eight postures, many of which are minor variations of the four standard sleep postures. The sleep data collection protocol is another crucial aspect that sets the presented methodology apart from others reported in the literature. Specifically, randomised strategies for both pose shuffling and train/test trial assignment are adopted to ensure statistical independence and to account for the participant gaining familiarity with the experiment over time. Moreover, the proposed one-shot posture learning framework makes wearable technologies far easier and more viable to use, as it removes the need for expensive training data collection sessions. Lastly, the exclusive use of inertial sensor fusion yields approximate segment orientations instead of the rudimentary raw data often used in the literature. Our approach therefore provides a posture representation that is more comprehensible to non-technical experts such as clinicians. To better highlight the benefits of the framework proposed in this paper, a comparison with existing works is summarised in Table 1.
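The randomised protocol mentioned above can be sketched as follows. This is a minimal illustration, assuming (hypothetically) that every posture is recorded once in each of `n_trials` trials and that exactly one trial per posture is labelled as training data. `randomised_protocol` and the posture labels P1 to P12 are illustrative names only, not the study's actual protocol code.

```python
import random


def randomised_protocol(postures, n_trials=2, seed=None):
    """Draw a randomised recording protocol.

    Returns (orders, train_trial):
      orders[t]      - an independently shuffled posture order for trial t, so
                       no posture is always recorded early or late;
      train_trial[p] - index of the trial whose recording of posture p is
                       labelled 'train'; the remaining trials are 'test'.
    """
    rng = random.Random(seed)
    orders = []
    for _ in range(n_trials):
        order = list(postures)
        rng.shuffle(order)
        orders.append(order)
    train_trial = {p: rng.randrange(n_trials) for p in postures}
    return orders, train_trial


# Hypothetical labels for twelve postures.
postures = [f"P{i}" for i in range(1, 13)]
orders, train_trial = randomised_protocol(postures, seed=7)
for t, order in enumerate(orders):
    print(f"trial {t}:", " ".join(order))
print("train trial per posture:", train_trial)
```

Drawing the train trial per posture, rather than always using the first trial, prevents the test recordings from systematically benefiting from the participant's growing familiarity with the experiment.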

Some state-of-the-art studies [32, 33] have reported the possible occurrence of intra-posture similarity and inexact posture recreation, and regarded these as limitations without attempting to formally verify or quantify them. A distinctive highlight of this work is the proposed interpretation approaches, which provide qualitative and quantitative insights into the nature of sleep postures, their augmentation, and the classification problem as a whole. Our investigation shows that appropriate augmentation settings can make the classification robust to intra-posture similarity.

6 Conclusions

A novel human sleep posture learning framework is proposed, capable of classifying twelve complex sleep postures. This goes beyond related works that mostly consider only four standard postures (supine, prone and lateral positions). The framework was first developed and tested through in silico sleep simulation, then successfully validated in a pilot human participant study. In both experimental pipelines, aggregate segment-to-segment orientations from four distal joints (wrists and ankles) were used to characterise the body posture. This simplified representation was the basis for the sleep posture learning task assigned to an ensemble classifier model. Computer graphics software and custom-made wearable sensor modules with inertial sensing capability were used in the virtual and participant pipelines, respectively. A major highlight of this work is the use of inertial sensor fusion to gauge segment orientations instead of the raw sensor readings heavily used in the literature. Our posture representation is therefore more comprehensible to non-technical end users, such as clinicians. Another prominent contribution of this work is the augmentation of postural observations, which accelerated posture modelling with increased robustness given only one observation (shot) per posture, removing the need for longitudinal data collection. The proposed one-shot learning scheme was found to boost the posture classification performance by up to with respect to learning from scarce postural observations. Despite insufficient training data and a diversified posture selection, we report performance comparable to that of state-of-the-art works. Lastly, we outlined a new metric-based approach and used it along with data visualisation to extract quantitative and qualitative insights into postural analysis, the added value of data augmentation, and the interpretation of the classification performance.
The results provide evidence-backed findings that could potentially inform policies and recommendations for the use of wearable sensors in sleep medicine.

A number of directions may guide future research. Since inexact posture recreation (i.e. human non-compliance) appears to be a recurring open challenge, it might be useful to avoid discretising the human posture space in classification problems and resort instead to partial- or full-body posture estimation. Another potentially interesting study would examine the system performance when further increasing the number and complexity of sleep postures, and check whether the robustness to intra-posture similarity continues to hold. A further direction could investigate whether incorporating additional absolute segment orientations at the pose characterisation stage would strengthen the differences between postures and reduce overlap.

Acknowledgements

The authors would like to thank Daniel Potts for his assistance with the development of the wearable sensors.

Funding

Omar Elnaggar (first author) is supported by the University of Liverpool Doctoral Network in AI for Future Digital Health.

Declaration of Competing Interest

All authors declare that they have no conflict of interest.

References

- [1] A. Cieza, K. Causey, K. Kamenov, S. W. Hanson, S. Chatterji, T. Vos, Global estimates of the need for rehabilitation based on the global burden of disease study 2019: a systematic analysis for the global burden of disease study 2019, The Lancet 396 (2020) 2006–2017. doi:10.1016/S0140-6736(20)32340-0.

- [2] Department of Health, The musculoskeletal services framework – a joint responsibility: doing it differently (2006).

- [3] P. M. Clark, B. M. Ellis, A public health approach to musculoskeletal health (2014). doi:10.1016/j.berh.2014.10.002.

- [4] J. Hartvigsen, M. J. Hancock, A. Kongsted, Q. Louw, M. L. Ferreira, S. Genevay, D. Hoy, J. Karppinen, G. Pransky, J. Sieper, R. J. Smeets, M. Underwood, R. Buchbinder, D. Cherkin, N. E. Foster, C. G. Maher, M. van Tulder, J. R. Anema, R. Chou, S. P. Cohen, L. M. Costa, P. Croft, P. H. Ferreira, J. M. Fritz, D. P. Gross, B. W. Koes, B. Öberg, W. C. Peul, M. Schoene, J. A. Turner, A. Woolf, What low back pain is and why we need to pay attention, The Lancet 391 (2018) 2356–2367. doi:10.1016/S0140-6736(18)30480-X.

- [5] M. Abdel-Basset, W. Ding, L. Abdel-Fatah, The fusion of internet of intelligent things (IoIT) in remote diagnosis of obstructive sleep apnea: A survey and a new model, Information Fusion 61 (2020) 84–100. doi:10.1016/j.inffus.2020.03.010.

- [6] L. Paquay, R. Wouters, T. Defloor, F. Buntinx, R. Debaillie, L. Geys, Adherence to pressure ulcer prevention guidelines in home care: A survey of current practice, Journal of Clinical Nursing 17 (2008) 627–636. doi:10.1111/j.1365-2702.2007.02109.x.

- [7] V. Ibáñez, J. Silva, O. Cauli, A survey on sleep questionnaires and diaries, Sleep Medicine 42 (2018) 90–96. doi:10.1016/j.sleep.2017.08.026.

- [8] G. D. Pinna, E. Robbi, M. T. L. Rovere, A. E. Taurino, C. Bruschi, G. Guazzotti, R. Maestri, Differential impact of body position on the severity of disordered breathing in heart failure patients with obstructive vs. central sleep apnoea, European Journal of Heart Failure 17 (2015) 1302–1309. doi:10.1002/ejhf.410.

- [9] W. H. Akeson, D. Amiel, M. F. Abel, S. R. Garfin, S. L. Woo, Effects of immobilization on joints, Clinical Orthopaedics and Related Research 219 (1987) 28–37. doi:10.1097/00003086-198706000-00006.

- [10] L. Parisi, F. Pierelli, G. Amabile, G. Valente, E. Calandriello, F. Fattapposta, P. Rossi, M. Serrao, Muscular cramps: Proposals for a new classification, Acta Neurologica Scandinavica 107 (2003) 176–186. doi:10.1034/j.1600-0404.2003.01289.x.

- [11] S. Akbarian, G. Delfi, K. Zhu, A. Yadollahi, B. Taati, Automated non-contact detection of head and body positions during sleep, IEEE Access 7 (2019) 72826–72834. doi:10.1109/ACCESS.2019.2920025.

- [12] Y. Y. Li, Y. J. Lei, L. C. L. Chen, Y. P. Hung, Sleep posture classification with multi-stream CNN using vertical distance map, International Workshop on Advanced Image Technology (IWAIT) (2018) 1–4. doi:10.1109/IWAIT.2018.8369761.

- [13] S. M. Mohammadi, M. Alnowami, S. Khan, D. J. Dijk, A. Hilton, K. Wells, Sleep posture classification using a convolutional neural network, 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (2018) 1–4. doi:10.1109/EMBC.2018.8513009.

- [14] T. H. Kim, S. J. Kwon, H. M. Choi, Y. S. Hong, Determination of lying posture through recognition of multitier body parts, Wireless Communications and Mobile Computing 2019 (2019) 1–16. doi:10.1155/2019/9568584.

- [15] M. Alaziz, Z. Jia, R. Howard, X. Lin, Y. Zhang, In-bed body motion detection and classification system, ACM Transactions on Sensor Networks 16 (2020) 13:1–13:26. doi:10.1145/3372023.

- [16] U. Qureshi, F. Golnaraghi, An algorithm for the in-field calibration of a MEMS IMU, IEEE Sensors Journal 17 (2017) 7479–7486. doi:10.1109/JSEN.2017.2751572.

- [17] B. Fan, Q. Li, T. Tan, P. Kang, P. B. Shull, Effects of IMU sensor-to-segment misalignment and orientation error on 3-D knee joint angle estimation, IEEE Sensors Journal 22 (2022) 2543–2552. doi:10.1109/JSEN.2021.3137305.

- [18] A. Leardini, A. Chiari, U. D. Croce, A. Cappozzo, Human movement analysis using stereophotogrammetry part 3. soft tissue artifact assessment and compensation, Gait and Posture 21 (2005) 212–225. doi:10.1016/j.gaitpost.2004.05.002.

- [19] B. V. Vaughn, P. Giallanza, Technical review of polysomnography, Chest 134 (2008) 1310–1319. doi:10.1378/chest.08-0812.

- [20] L. C. Markun, A. Sampat, Clinician-focused overview and developments in polysomnography, Current Sleep Medicine Reports 6 (2020) 309–321. doi:10.1007/s40675-020-00197-5.

- [21] H. R. Colten, B. M. Altevogt, Sleep disorders and sleep deprivation: An unmet public health problem, 2006. doi:10.17226/11617.

- [22] A. Tiotiu, O. Mairesse, G. Hoffmann, D. Todea, A. Noseda, Body position and breathing abnormalities during sleep: A systematic study, Pneumologia 60 (2011) 216–221.