SLaM: Student-Label Mixing for Distillation with Unlabeled Examples

Abstract

Knowledge distillation with unlabeled examples is a powerful training paradigm for generating compact and lightweight student models in applications where the amount of labeled data is limited but one has access to a large pool of unlabeled data. In this setting, a large teacher model generates “soft” pseudo-labels for the unlabeled dataset which are then used for training the student model. Despite its success in a wide variety of applications, a shortcoming of this approach is that the teacher’s pseudo-labels are often noisy, leading to impaired student performance. In this paper, we present a principled method for knowledge distillation with unlabeled examples that we call Student-Label Mixing (SLaM) and we show that it consistently improves over prior approaches by evaluating it on several standard benchmarks. Finally, we show that SLaM comes with theoretical guarantees; along the way we give an algorithm improving the best-known sample complexity for learning halfspaces with margin under random classification noise, and provide the first convergence analysis for so-called “forward loss-adjustment” methods.

1 Introduction

While good quality human-labeled data are often hard to obtain, finding huge amounts of unlabeled data is relatively easy. Therefore, in modern machine learning applications, we often face the situation where we have a small “golden” dataset with human labels and a large unlabeled dataset. In Distillation with Unlabeled Examples [12, 26, 16] a large teacher model is first trained (or fine-tuned) on the human-labeled data and is then used to generate “soft” pseudo-labels for the unlabeled dataset. Then the (typically smaller) student model, i.e., the model that will be deployed for the purposes of the application, is trained on the combined dataset that contains both the labels generated by humans and the pseudo-labels generated by the teacher model. This general-purpose training paradigm has been applied in a wide variety of contexts [16, 46, 53, 54, 58, 57] including but not limited to distilling knowledge from large-scale foundational models like BERT [18] and GPT-3 [11]. We remark that in such settings one does not have access to the teacher model but only to its pseudo-labels (which were generated during some previous “bulk-inference” phase). This “bulk-inference” step is typically computationally expensive and happens once: one cannot modify the teacher network (or even use it for inference) during the training process of the student.

Despite its widespread success in practice, the effectiveness of this powerful approach generally depends on the quality of the pseudo-labels generated by the teacher model. Indeed, training the student model on noisy pseudo-labels often leads to significant degradation of its generalization performance, and this is a well-known phenomenon that has been observed and studied in a plethora of papers in the literature, e.g., [6, 36, 44, 51, 53, 8, 27].

In this work, we propose Student-Label Mixing (SLaM), a principled method for knowledge distillation with unlabeled examples that accounts for the teacher’s noise and consistently improves over prior approaches. At the heart of our method lies the observation that the noise introduced by the teacher is neither random nor adversarial, in the sense that it correlates well with metrics of “confidence” such as the margin score or the entropy of the teacher’s predictions. We exploit this empirical fact to our benefit in order to introduce a model for the teacher’s noise, which we use to appropriately modify the student’s loss function. At a high level, for any given example during the student’s training process, we evaluate the student’s loss function on a convex combination of the student’s current prediction and another (soft-)label that we estimate using our model for the teacher’s noise (hence the name “student-label mixing”).

Our contributions can be summarized as follows:

1. We propose SLaM: a principled method for improving knowledge distillation with unlabeled examples. The method is efficient, data-agnostic and simple to implement.

2. We provide extensive experimental evidence and comparisons which show that our method consistently outperforms previous approaches on standard benchmarks. Moreover, we show that SLaM can be combined with standard distillation techniques such as temperature scaling and confidence-based weighting schemes.

3. We give theoretical guarantees for SLaM under standard assumptions. As a byproduct of our analysis we obtain a simple “forward loss-adjustment” iteration that provably learns halfspaces with margin under Random Classification Noise, with a sample complexity that improves over prior works, which had worse dependence on either the margin or the generalization error (see Theorem 5.1 and Remark 5.2).

2 Related Work

Knowledge Distillation. Most of the literature on knowledge distillation has focused on the fully supervised/labeled setting, i.e., when distillation is performed on the labeled training data of the teacher model rather than on new, unlabeled data (see, e.g., the original paper of [26]). Naturally, in this setting the pseudo-labels generated by the teacher are almost always accurate and so many follow-up works [2, 14, 15, 41, 52] have developed advanced distillation techniques that aim to enforce greater consistency between the teacher’s and the student’s predictions, or even between the intermediate representations learned by the two models. Applying such methods in our setting, where the training dataset contains mainly unlabeled examples, is still possible but, in this case, it is known [51, 27] that fully trusting the teacher model can actually be harmful to the student model, making these methods less effective. (In fact, when the teacher is highly noisy these methods even underperform vanilla distillation with unlabeled examples.) In Section 4.2 we present results that show the improved effectiveness of SLaM relative to state-of-the-art supervised knowledge distillation methods like the Variational Information Distillation for Knowledge Transfer (VID) framework [2]. Moreover, in Section D.5 we show that our method can be combined with (i.e., provide an additional improvement over) one of the simplest, yet surprisingly effective, methods of improving knowledge distillation, namely the temperature-scaling idea introduced by [26].

For distillation with unlabeled examples, many approaches [17, 33, 29] propose filtering-out or reweighting the teacher’s pseudo-labels based on measures of teacher’s uncertainty, such as dropout variance, entropy, margin-score, or the cut-statistic. These methods are independent of the student model and can be synergistically combined with our technique. For instance, in Section D.4 we demonstrate that combining our method with teacher-uncertainty-based reweighting schemes leads to improved student performance relative to applying the reweighting scheme alone.

Much more closely related to our approach is the recently introduced approach of [27]. There, the authors design a model for the teacher’s noise and utilize it in order to modify the student’s loss function so that, in expectation, the loss simulates the loss with respect to noise-free pseudo-labels. One of the main advantages of our method compared to that of [27] is that our model for the teacher’s noise is more structured and easier to learn, which — as our experiments in Section 4.2 show — leads to consistently better student performance.

Learning From Noisy Labels. Learning from noisy labels is an important and well-studied problem with a vast literature [7, 21, 23, 28, 31, 37, 40, 42, 45, 47] (see [50] for a recent survey). The fundamental difference between our setting and papers in this literature is that the noise introduced by the teacher is structured, and this is a crucial observation we utilize in our design. Specifically, our approach is inspired by the so-called forward loss-adjustment methods, e.g. [43], but it is specifically tailored to the structure of the distillation with unlabeled examples setting. Indeed, forward methods typically attempt to estimate a noise transition matrix whose entries are the probabilities of each true label being flipped into each corrupted label, which can be rather problematic when dealing with general, instance-specific noise like in the case of distillation with unlabeled examples. On the other hand, we exploit that (i) we have access to confidence metrics of the teacher’s predictions; and (ii) oftentimes, when the teacher model’s top-1 prediction is inaccurate, the true label is within its top-k predictions for some appropriate k, to design and estimate a much more refined model for the teacher’s noise that we use to inform the design of the student’s loss function.
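For readers unfamiliar with forward loss-adjustment, the classical class-level version discussed above can be sketched as follows. This is a minimal illustration, not the method of this paper: the transition matrix `T`, its values, and the function names `forward_adjust` and `cross_entropy` are hypothetical and chosen only for the example. The model's predicted distribution over clean classes is pushed through `T` before the cross-entropy against the observed noisy label is computed.

```python
import math

def forward_adjust(probs, T):
    """Push clean-class probabilities through a noise transition matrix.

    probs[i]: model probability that the true class is i.
    T[i][j]:  assumed probability that true class i is observed as label j.
    Returns the implied distribution over the observed (noisy) label.
    """
    n = len(probs)
    return [sum(probs[i] * T[i][j] for i in range(n)) for j in range(n)]

def cross_entropy(target, pred, eps=1e-12):
    """Cross-entropy between a target distribution and a prediction."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(target, pred))

# Hypothetical 3-class transition matrix (each row sums to 1).
T = [[0.8, 0.1, 0.1],
     [0.2, 0.7, 0.1],
     [0.0, 0.3, 0.7]]
probs = [0.6, 0.3, 0.1]                    # model's belief about the clean class
noisy_probs = forward_adjust(probs, T)     # belief about the observed label
loss = cross_entropy([0.0, 1.0, 0.0], noisy_probs)  # observed noisy label is class 1
```

A single class-level matrix cannot express noise that varies from example to example, which is the limitation the paragraph above points out.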

Another related technique for dealing with noisy data is using “robust” loss functions [4, 20, 24, 35, 56] such that they achieve a small risk for new clean examples even under the presence of noise in the training dataset. In Section 4.2 we compare our method with the general framework of [20] for designing robust loss functions and we show that our approach, when applied to the standard cross-entropy loss, consistently outperforms [20] in the setting of distillation with unlabeled examples. That said, we stress that our method is not tied to the cross-entropy loss and, in fact, it often gives better results when combined with more sophisticated loss functions. We demonstrate this in Section D.6 where we apply our method in cases where the student loss function comes from the families of losses introduced in [20] and [35].

Semi-Supervised Learning. Akin to our setting, in semi-supervised learning (SSL) (see e.g. [55] for a recent survey) the learner is presented with a small labeled dataset and a typically much larger unlabeled dataset. Unlike our setting though, there is typically no distinction between the student and teacher: the model of interest generates pseudo-labels on the unlabeled dataset, which are utilized by using appropriate loss functions or preprocessing procedures (e.g. “filtering” or “correcting”), oftentimes in an iterative fashion with the goal of improving the quality of the newly-generated pseudo-labels. It is also worth noting that in many real-world applications of distillation with unlabeled examples either the teacher model is unavailable or it is too expensive to retrain it and create fresh pseudo-labels on the data (e.g., when we request labels from a pretrained large language model). Therefore, SSL approaches that either (i) update the “teacher” model (e.g., [34]), or (ii) require several fresh teacher-generated pseudo-labels (e.g., by requesting teacher predictions on random data-augmentations or perturbed versions of the unlabeled examples, e.g., [9]) are not applicable in our setting. We implement the recent SSL technique of [48] and show that our method outperforms it in the context of distillation with unlabeled examples. Besides performing on par with state-of-the-art SSL approaches like [9], the method of [48] is free of inherent limitations like using domain-specific data augmentations, which is also an important feature of our approach.

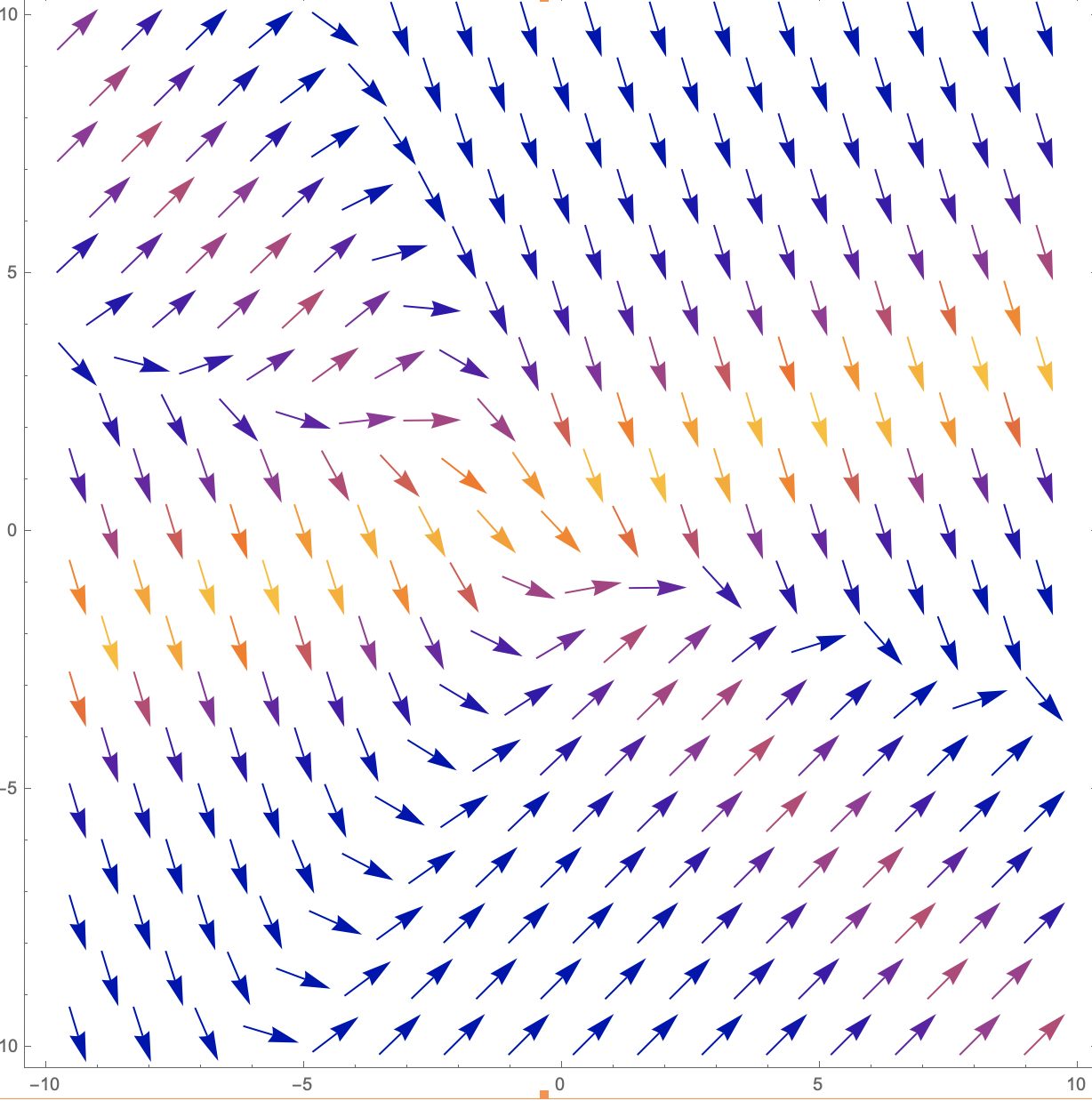

Learning Halfspaces with Random Classification Noise. The theoretical study of classification with Random Classification Noise (RCN) was initiated by [5]. For the fundamental class of linear classifiers (halfspaces) the first polynomial time algorithms for the problem were given in [13] and [10]. The iteration proposed in [13] is a “backward loss-adjustment” method [43] for which it is known that the resulting optimization landscape is convex (for linear classifiers). In [19] an improved analysis of the method of [13] was given, providing a sample-complexity bound for SGD on this convex loss when learning halfspaces with margin under RCN. On the other hand, forward loss-adjustment methods for dealing with RCN are known to result in an inherently non-convex landscape (see [38] and Figure 8). Our theoretical result for SLaM (see Theorem 5.1) is the first convergence result for a “forward loss-adjustment” method and, at the same time, achieves a sample complexity that improves over the prior work.

3 SLaM: Student-Label Mixing Distillation

In this section, we describe our distillation with unlabeled examples setting and present SLaM. In what follows, we assume that examples are represented by feature vectors in some space and are drawn from a distribution over examples. We consider multi-class classification and assume that the ground-truth label of an example is represented by a one-hot vector given by some unknown function of the example. In multi-class classification the learning algorithm typically optimizes a parametric family of classification models, i.e., for every parameter, the model maps an example to a “score vector” with one entry per class, where each entry corresponds to the probability that the model assigns to that class for the example. The learning algorithm uses a classification loss function: during training it considers a set of labeled examples and minimizes the empirical loss over that set. For two vectors we use an indicator of the event that the positions of their maximum elements agree. Similarly, for two classifiers we use the same indicator to denote whether their top-1 predictions for a given example agree. Our goal is to train a classifier over the sample so that its generalization error, i.e., the probability that its top-1 prediction disagrees with the ground-truth on a fresh example, is small.

Distillation with Unlabeled Examples.

We assume that we are given a (usually small) dataset A of correctly labeled examples and a set of unlabeled data U. A “teacher” model is first trained on the labeled dataset A and then provides soft-labels for the examples of dataset U, i.e., we create a dataset B containing examples labeled with the corresponding probability distribution over classes (soft-labels) of the teacher model. We then train a (typically smaller) student model using both the original labeled data A and the teacher-labeled dataset B, i.e., on the union of A and B. In what follows, we refer to the above training procedure as “vanilla-distillation”.

Remark 3.1 (“Hard-” vs “Soft-” Distillation).

We remark that the process where, instead of using the soft-labels provided by the teacher model on the unlabeled dataset U, we use one-hot vectors representing the class with maximum score according to the teacher, is known as hard-distillation. We distinguish between the soft-label of the teacher and the corresponding hard-label, i.e., the one-hot representation of the class with the maximum score in the soft-label. When it is clear from the context we may abbreviate this notation.

Modelling the Teacher as a “Noisy” Label Oracle.

In the distillation setting described in the previous paragraph, it is known [51, 27, 8, 44] that the teacher model often generates incorrect predictions on the unlabeled examples, impairing the student’s performance. Given any example, we model the teacher’s prediction as a random variable. Similarly to [27] we assume that, for every unlabeled datapoint, the provided teacher label is correct with some example-dependent probability and incorrect with the complementary probability. However, in contrast with [27], our noise model prescribes a non-adversarial (semi-random) behavior of the teacher when its top-1 prediction is incorrect.

A first step towards more benign noisy teachers is to assume that, conditionally on being wrong, the teacher label is a uniformly random class among the remaining classes. We remark that this model is already enough to give improvements in datasets with a moderately large number of classes (e.g., up to 100). In particular, it perfectly captures the noisy teacher in binary classification: when the teacher label is different from the ground-truth, it has to be equal to the “flipped” ground-truth.
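Under this simpler uniform-noise model, the distribution of the teacher's label implied by a given belief about the true class can be written down directly. The sketch below is our own illustration of that calculation (the function name `mix_uniform` and the argument `alpha`, standing for the probability that the teacher is correct, are assumptions made for the example); it is not the general mixing operation of the paper, which also accounts for the teacher's top-k predictions.

```python
def mix_uniform(student_probs, alpha):
    """Mix student probabilities under the uniform-noise teacher model.

    With probability alpha the teacher label equals the true class; otherwise
    it is uniform over the remaining classes. If the student believes class j
    is the true class with probability p_j, the implied probability that the
    teacher outputs class j is
        alpha * p_j + (1 - alpha) * (1 - p_j) / (num_classes - 1).
    """
    n = len(student_probs)
    return [alpha * p + (1.0 - alpha) * (1.0 - p) / (n - 1)
            for p in student_probs]

# Three classes, teacher assumed correct 90% of the time.
mixed = mix_uniform([0.7, 0.2, 0.1], alpha=0.9)

# Binary case: a confident, correct student is mixed into the "flipped" model.
binary = mix_uniform([1.0, 0.0], alpha=0.8)
```

With `alpha = 1` the mixing is the identity, which matches the intuition that a perfect teacher needs no correction.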

We now further refine our model so that it is realistic for datasets with thousands of classes. Even though the top-1 accuracy of the teacher model may not be very high on the unlabeled data U, the true label is much more likely to belong in the top-5 or top-10 predictions of the teacher rather than being completely arbitrary. For example, for a ResNet50 network trained on a fraction of ImageNet [49], the top-10 accuracy on the test dataset is substantially higher than the average top-1 accuracy of the same model. In datasets with a large number of classes, this observation significantly reduces the number of potential correct classes of the examples where the teacher label is incorrect. Motivated by the above, we assume the following structured, semi-random noise model for the teacher, tailored to multi-class settings.

Definition 3.2 (Noisy Teacher Model).

Let x be any example of the unlabeled data U and consider its ground-truth label. Consider also the random variables corresponding to the soft and hard predictions of the teacher model for the example x. We assume that for every x there exist (unknown to the learner) an accuracy probability and an integer k(x) such that the teacher’s top-1 prediction agrees with the ground-truth with that probability and, otherwise: (i) the ground-truth belongs in the top-k(x) predictions of the teacher; and (ii) the teacher’s (hard) prediction is a uniformly random incorrect class out of the top-k(x) predictions of the teacher soft-label. (Footnote 1: Given that the teacher’s prediction is incorrect and that the ground-truth belongs in the top-k(x) predictions of the teacher, assumption (ii) describes a uniform distribution on the k(x) − 1 remaining labels.)

Remark 3.3.

We remark that the model of Definition 3.2 captures having a “perfect” teacher model by setting the teacher’s accuracy probability to 1 for all examples, and also generalizes the binary case described above by taking k(x) = 2 for all examples.

Given the above noise model for the teacher, the problem of improving knowledge-distillation consists of two main tasks: (i) obtaining estimates of the teacher’s accuracy statistics (the accuracy probability and k(x)) for each example; and (ii) using those estimated values to improve the training of the student model so that it is affected less by the mistakes of the teacher on dataset B.

Training Better Students Using the Teacher’s Accuracy Statistics

We first assume that for every we have oracle access to the values and present our Student-Label Mixing loss function. Instead of using to “denoise” the teacher’s label, we use them to add noise to the student’s predictions. To make notation more compact, in what follows, given a vector we denote by the vector that has the value in the positions of the of the -st up to -th largest elements of and in all other positions, e.g., and . Assuming that the student-label for some is we “mix” it (hence the name Student-Label Mixing) using to obtain the mixed prediction

| (1) |

where is the element-wise multiplication of the vectors . We then train the mixed student model, on the “noisy” dataset :

| (2) |

The main intuition behind the mixing of the student’s labels is that by training the “noisy” student to match the “noisy” teacher label on dataset B, the underlying (non-mixed) student will eventually learn the ground-truth. In particular, when the loss is the Cross-Entropy loss, the expected mixed loss conditioned on any example equals the cross-entropy between the expected teacher label and the mixed student prediction.

Here we used the fact that the cross-entropy is linear in its first argument, and that by the definition of our noise model (Definition 3.2) the expected teacher label equals the mixing of the ground-truth label. Therefore, when the student is equal to the ground-truth, the mixed student model matches the expected teacher label for all examples and, by Gibbs’ inequality, the ground-truth is a minimizer of the SLaM loss. We show the following proposition; see Appendix C for the formal statement and proof.

Proposition 3.4 (SLaM Consistency (Informal)).

Consider the distribution of the teacher-labeled examples of dataset B, i.e., we first draw an example and then label it using the noisy teacher of Definition 3.2. Moreover, assume that there exists some parameter for which the student model coincides with the ground-truth. Then this parameter is the minimizer of the (population) SLaM objective, i.e., of the expected Cross-Entropy loss between the teacher label and the mixed student prediction.
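The consistency property can be checked numerically in the simplest case. The sketch below is an illustration under stated assumptions, not part of the formal proof: it considers binary classification with a teacher that is correct with a known probability `alpha` (a hypothetical value of 0.8), fixes the true class to 1, and scans a grid of student probabilities to find the one minimizing the expected cross-entropy between the teacher label and the mixed student prediction.

```python
import math

def mixed_cross_entropy(p, alpha, teacher_dist):
    """Expected CE between the teacher's label distribution and the mixed student.

    p: student probability of class 1 (binary classification).
    alpha: probability that the teacher label is correct.
    teacher_dist: (P[teacher says 0], P[teacher says 1]).
    """
    q1 = alpha * p + (1.0 - alpha) * (1.0 - p)   # mixed probability of class 1
    q0 = 1.0 - q1
    return -(teacher_dist[0] * math.log(q0) + teacher_dist[1] * math.log(q1))

alpha = 0.8
# True class is 1, so the noisy teacher says 1 w.p. alpha and 0 w.p. 1 - alpha.
teacher_dist = (1.0 - alpha, alpha)
grid = [i / 100 for i in range(101)]
best_p = min(grid, key=lambda p: mixed_cross_entropy(p, alpha, teacher_dist))
```

The minimizer on the grid is the student that puts all its mass on the true class, even though the labels it was compared against are noisy.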

Estimating the Teacher’s Accuracy Statistics via Isotonic Regression

We first show how we estimate the teacher’s accuracy probability for each example of dataset B, i.e., the dataset labeled by the teacher model. In [27] the authors empirically observed that this probability correlates with metrics of the teacher’s confidence such as the “margin”, i.e., the difference between the probabilities assigned to the top-1 class and the second largest class according to the teacher’s soft label. In particular, the larger the margin is, the more likely it is that the corresponding teacher label is correct. We exploit this monotonicity by employing isotonic regression on a small validation dataset to learn the mapping from the teacher’s margin at an example to the corresponding teacher’s accuracy. For more details, see Section B.1.

To perform this regression task we use a small validation dataset V with correct labels that the teacher has not seen during training. For every example in V we compute the corresponding soft teacher label and its margin. We also compute the hard prediction of the teacher and compare it with the ground-truth, i.e., for every example the covariate and response pair consists of the teacher’s margin and the indicator that the teacher’s hard prediction is correct. We then use isotonic regression to fit a piecewise constant, increasing function to the data. We remark that isotonic regression can be implemented very efficiently, in time nearly linear in the size of the validation dataset.
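The regression step can be sketched with the standard pool-adjacent-violators procedure. The code below is a minimal, dependency-free illustration rather than the authors' implementation: the function names (`margin`, `isotonic_fit`, `predict_accuracy`) and the toy validation data are hypothetical, and in practice a library routine for isotonic regression could be used instead.

```python
def margin(soft_label):
    """Difference between the two largest teacher probabilities."""
    top_two = sorted(soft_label, reverse=True)[:2]
    return top_two[0] - top_two[1]

def isotonic_fit(xs, ys):
    """Pool-adjacent-violators: least-squares non-decreasing fit of ys vs xs.

    Returns (sorted_xs, fitted), where fitted[i] is the value at sorted_xs[i].
    """
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    blocks = []  # each block is [sum_of_y, count]
    for i in order:
        blocks.append([ys[i], 1])
        # Merge while the previous block's mean exceeds the last block's mean.
        while len(blocks) > 1 and (blocks[-2][0] * blocks[-1][1]
                                   > blocks[-1][0] * blocks[-2][1]):
            s, c = blocks.pop()
            blocks[-1][0] += s
            blocks[-1][1] += c
    fitted = []
    for s, c in blocks:
        fitted.extend([s / c] * c)
    return [xs[i] for i in order], fitted

def predict_accuracy(sorted_xs, fitted, m):
    """Piecewise-constant lookup: value at the largest knot not exceeding m."""
    value = fitted[0]
    for x, f in zip(sorted_xs, fitted):
        if x <= m:
            value = f
    return value

# Toy validation data: teacher margins and whether the hard prediction was correct.
val_margins = [0.1, 0.2, 0.3, 0.4, 0.5]
val_correct = [0, 1, 0, 1, 1]
knots, fitted = isotonic_fit(val_margins, val_correct)
estimated_accuracy = predict_accuracy(knots, fitted, 0.25)
```

On the toy data, the out-of-order pair at margins 0.2 and 0.3 is pooled into a single level, so the fitted accuracy estimate never decreases as the margin grows.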

For k(x) we consider two different options: (i) using the same value k for all examples (e.g., choosing k so that the top-k accuracy of the teacher is above some threshold on the validation data); and (ii) using a “data-dependent” k(x) that we estimate by solving several isotonic-regression problems, whose number depends on the number of classes (similar to the one used for estimating the teacher’s accuracy probability above). We refer to Section B.1 for more details.
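Option (i) can be illustrated with a short sketch. Picking the smallest k that reaches the threshold is our assumption for the example (the text only requires that the top-k accuracy be above the threshold), and the function names and toy validation data are hypothetical.

```python
def topk_accuracy(soft_labels, true_classes, k):
    """Fraction of examples whose true class is among the teacher's top-k classes."""
    hits = 0
    for probs, y in zip(soft_labels, true_classes):
        ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
        if y in ranked[:k]:
            hits += 1
    return hits / len(true_classes)

def smallest_k(soft_labels, true_classes, threshold):
    """Smallest k whose top-k accuracy on the validation data reaches the threshold."""
    num_classes = len(soft_labels[0])
    for k in range(1, num_classes + 1):
        if topk_accuracy(soft_labels, true_classes, k) >= threshold:
            return k
    return num_classes

# Toy validation set: teacher soft-labels and ground-truth classes.
val_soft = [[0.6, 0.3, 0.1],
            [0.5, 0.4, 0.1],
            [0.2, 0.5, 0.3],
            [0.1, 0.3, 0.6]]
val_true = [0, 1, 2, 2]
k_fixed = smallest_k(val_soft, val_true, threshold=0.9)
```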

4 Experimental Evaluation

In this section, we present our experimental results. In Section 4.1 we describe our experimental setup and in Section 4.2 we compare the performance of our method with previous approaches on standard benchmarks. In Section D.4 we show that our method can be combined with teacher-uncertainty-based reweighting techniques. Finally, due to space limitations, we provide additional empirical results in the Appendix: in Section D.5 we show that SLaM can effectively be used with distillation temperature, and in Section D.6 we consider using SLaM with other losses beyond the Cross-Entropy.

4.1 The Setup

Here, we describe our procedure for simulating knowledge distillation with unlabeled examples on academic datasets. We start by splitting the training dataset in two parts: dataset A and dataset C. We then train the teacher and student models on dataset A (using the standard cross-entropy loss). (Footnote 2: We remark that our method does not require pre-training the student on dataset A; however, since [27] requires pre-training the student, we do the same for all methods that we compare.) Then we perform multiple independent trials where, for each trial, we randomly split dataset C into a small (e.g., 500 examples) validation dataset V and an unlabeled training dataset U. For each trial we (i) use the teacher model to label the points of dataset U to obtain the teacher-labeled dataset B; (ii) initialize the weights of the student to those of the student model that was pre-trained on dataset A; (iii) train the student model (using each distillation method) on the combined labeled data of A, V (that have true labels) and the data of B (that have teacher labels). We remark here that we include the validation data V during the training of the student to be fair towards methods that do not use a validation dataset. However, while it is important that the teacher has not seen the validation data during training, the performance of no method was affected significantly by including (or excluding) the validation data from the training dataset.
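The data-splitting part of this protocol can be sketched as follows. The snippet works on example indices only and is an illustration under assumptions: the training-set size of 50000, the 5000 labeled examples, the seed, and the helper name `split_for_trial` are chosen for the example, and taking the first indices as dataset A stands in for whatever split is actually used.

```python
import random

def split_for_trial(indices_c, validation_size, seed):
    """Randomly split dataset C into validation V and unlabeled U (by index)."""
    rng = random.Random(seed)
    shuffled = list(indices_c)
    rng.shuffle(shuffled)
    return shuffled[:validation_size], shuffled[validation_size:]

train_indices = list(range(50000))   # hypothetical training set size
dataset_a = train_indices[:5000]     # labeled data: teacher training, student pre-training
dataset_c = train_indices[5000:]     # remaining data, re-split in every trial

# One trial: V keeps its true labels, U is labeled by the teacher to form B.
val_v, unlabeled_u = split_for_trial(dataset_c, validation_size=500, seed=0)
```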

4.2 Comparison with Previous Approaches

The Baselines

A natural question is whether a more sophisticated distillation method that enforces greater consistency between the teacher and the student would improve distillation with unlabeled examples. To answer it, we use the VID method [2], which incorporates the penultimate layer of the student model (after a suitable trainable projection) in the loss. We also compare our method against the weighted distillation method of [27], which reweights the examples of the teacher-labeled dataset B in order to “correct” the effect of the noisy pseudo-labels provided by the teacher. The Taylor cross-entropy method of [20] is a modification of CE that truncates the Taylor series of the CE loss. In [20] it was shown that it offers significant improvements when the labels are corrupted by random classification noise. The fact that the teacher’s noise is much closer to random than to adversarial makes this approach a natural baseline. The UPS loss of [48] is a semi-supervised technique that takes into account the variance (uncertainty) of the teacher model on the examples of the unlabeled dataset U in order to transform the soft pseudo-labels provided by the teacher into more “robust” binary vectors and then uses a modified binary CE loss. To estimate the uncertainty of the teacher model, we used either dropout with Monte-Carlo estimation or random data-augmentations as suggested in [48]. We remark that, as we discussed in Section 1 and Section 2, strictly speaking, this method is not applicable in our setting because it requires multiple forward passes of the teacher model to estimate its variance, but we implement it as it is a relevant approach that aims to improve the pseudo-labels of the teacher.

CIFAR-{10,100} and CelebA

Here we present our results on CIFAR-{10, 100} [30] and CelebA [22]. CIFAR-10 and CIFAR-100 are image classification datasets with 10 and 100 classes respectively. They contain 60000 labeled images, which are split into a training set of 50000 images and a test set of 10000 images. From the 50000 images of the training set we use 10, 15, 20, 25, 30, and 35 percent (or 5000, 7500, 10000, 12500, 15000, and 17500 examples) as the labeled dataset A where we train the teacher and pre-train the student models. For each size of dataset A, we perform a random split on the remaining training data and use 500 labeled examples as the validation dataset and the remaining examples as the unlabeled dataset U. For the CIFAR-10 experiments, we use a MobileNet with depth multiplier 2 as the teacher, and a MobileNet with depth multiplier 1 as the student. For CIFAR-100, we use a ResNet-110 as the teacher, and a ResNet-56 as the student. We compare the methods both on soft- and hard-distillation. For each trial we train the student model for a fixed number of epochs and keep the best test accuracy over all epochs. We perform 3 trials and report the average of each method and the variance of the achieved accuracies over the trials. The results of our experiments for soft-distillation can be found in Table 1 and Table 2. The corresponding plots are given in Figure 2. We include our results for hard-distillation in Section D.2.

| Labeled Examples | 5000 | 7500 | 10000 | 12500 | 15000 | 17500 |
|---|---|---|---|---|---|---|
| Teacher | | | | | | |
| Vanilla | | | | | | |
| Taylor-CE [20] | | | | | | |
| UPS [48] | | | | | | |
| VID [3] | | | | | | |
| Weighted [27] | | | | | | |
| SLaM (Ours) | | | | | | |

| Labeled Examples | 5000 | 7500 | 10000 | 12500 | 15000 | 17500 |
|---|---|---|---|---|---|---|
| Teacher | | | | | | |
| Vanilla | | | | | | |
| Taylor-CE [20] | | | | | | |
| UPS [48] | | | | | | |
| VID [3] | | | | | | |
| Weighted [27] | | | | | | |
| SLaM (Ours) | | | | | | |

We consider the male/female binary classification task using the CelebA dataset [22], which consists of a training set of 162770 images and a test set of 19962 images. We use a MobileNet with depth multiplier 2 as the teacher, and a ResNet-11 as the student. As the labeled dataset A we used 2, 3, 4, 5, 6, and 7 percent (or 3256, 4883, 6510, 8138, 9766, 11394 examples) of the training dataset and split the remaining data into a validation dataset of 500 examples and an unlabeled dataset U. Our results for CelebA can be found in Table 3 (soft-distillation) and, due to space limitations, in Table 7 of Section D.2 (hard-distillation). The corresponding plots are given in Figure 2.

Taken together, our comparisons show that SLaM consistently outperforms the baselines, often by a large margin. The reader is referred to Section D.1 for additional details.

Remark 4.1 (Soft-Distillation and Temperature Scaling).

We remark that in the comparisons we performed soft-distillation with temperature set to 1, i.e., for every example we do not scale the corresponding teacher and student logits. In Section D.5 we show that our method can readily be used together with temperature scaling to improve the accuracy of the student model.
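For reference, temperature scaling divides the logits by a temperature before the soft-max is applied, so a temperature of 1 leaves them unchanged. The sketch below is a generic illustration of that operation; the function name and the example logits are hypothetical.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Soft-max of logits / temperature (temperature = 1 means no scaling)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)                       # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.1]
unscaled = softmax_with_temperature(logits)                  # temperature 1
softened = softmax_with_temperature(logits, temperature=4.0)
```

Higher temperatures flatten the distribution, so `softened` assigns less probability to the top class than `unscaled` does.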

ImageNet

Here we present the results on ImageNet [49]. ImageNet is an image classification dataset with 1000 classes consisting of a training set of approximately 1.28 million images, and a test set of 50000 images. From the images of the training set we use 5, 10, 15, and 20 percent (or 64058, 128116, 192174, 256232 examples) as the labeled dataset A where we train the teacher and pre-train the student models. For each size of dataset A, we perform a random split on the remaining training data and use a small set of labeled examples as the validation dataset and the remaining examples as the unlabeled dataset U. We use a ResNet-50 as the teacher, and a ResNet-18 as the student. We compare the methods on soft-distillation. For each trial, we train the student model for a fixed number of epochs and keep the best test accuracy over all epochs. We perform multiple trials and report the average of each method and the variance of the achieved accuracies over the trials. Our results for ImageNet can be found in Table 4. We remark that we do not include the results of the UPS method in Table 4 because it did not improve over the accuracy achieved after pre-training the student model on dataset A. The reader is referred to Section D.1 for additional details.

Large Movies Reviews Dataset

Here we present results on the Large Movies Reviews Dataset [39]. This is a dataset for binary sentiment classification containing 25000 movie reviews for training and 25000 for testing. We use an ALBERT-large model [32] as the teacher, and an ALBERT-base model as the student. We use 2, 4, 8, and 40 percent (or 500, 1000, 2000, 10000 examples) of the training dataset as the labeled dataset and split the remaining data into a validation dataset of 500 examples and an unlabeled dataset U. Our results and more experimental details can be found in Section D.3.

Performance Gains of SLaM as a Function of The Number of Labeled Examples

In our experiments, the fraction of examples we consider “labeled” controls two things at the same time: (i) the accuracy of the teacher model, as the teacher is trained on the labeled examples available; and (ii) the number of unlabeled examples the teacher model provides pseudo-labels for. The more inaccurate the teacher model is, the better the improvements provided by our method. (Given a “perfect” teacher that never generates incorrect pseudo-labels for the unlabeled examples, our method is mathematically equivalent to the “vanilla” approach; see the mixing operation in Equation 1.) Therefore, the smaller the number of labeled examples available, the bigger the performance gains of SLaM, as (i) the teacher will be less accurate; and (ii) it has to generate labels for more unlabeled examples (and therefore the absolute number of inaccurate predictions that SLaM “corrects” increases statistically). It is worth emphasizing that the main reason behind the enormous success of distillation is exactly that the teacher network can blow up the size of the student’s training dataset: in practice, the ratio of labeled examples to unlabeled examples is typically (much) less than 1%.

5 Distilling Linear Models and Learning Noisy Halfspaces

In this section we show that, when the dataset is separable by a halfspace, i.e., the ground-truth label of every example is determined by the sign of its inner product with some unknown weight vector, then using SLaM with a linear model as the student will recover the ground-truth classifier. We make the standard assumption that the ground-truth halfspace has margin, i.e., that, under the usual normalization, every example lies at least the margin away from the decision boundary. For a fixed example, the observed noisy teacher-label satisfies Definition 3.2, i.e., it equals the ground-truth with the teacher’s accuracy probability and the flipped ground-truth otherwise (since k(x) = 2 for binary classification). Our approach consists of using the standard cross-entropy loss and training a student model consisting of a linear layer plus a soft-max activation.

Theorem 5.1 (SLaM Convergence).

Let be a distribution on and be the ground-truth halfspace with normal vector . Let be the distribution over (noisy) teacher-labeled examples whose -marginal is . Assume that there exist such that for all examples in the support of it holds that and . Let . After SGD iterations on the SLaM objective (see Algorithm 3), with probability at least , there exists an iteration where .

Remark 5.2 (Learning Halfspaces with RCN).

The problem of learning halfspaces with Random Classification Noise (RCN) can be modeled as having a teacher with constant accuracy probability, i.e., for all . As a corollary of Theorem 5.1 we obtain an efficient learning algorithm for -margin halfspaces under RCN achieving a sample complexity of . Prior to our work, the best known sample complexity for provably learning halfspaces with RCN was [19] where the “backward loss-adjustment” of [13] was used.
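The forward loss-adjustment behind Theorem 5.1 can be illustrated with a small sketch. It is not Algorithm 3 itself: it assumes that the binary mixing operation has the form alpha * p + (1 - alpha) * (1 - p), where p is the student's probability for class 1 and alpha is the probability that the teacher label is correct, and it runs plain SGD with no projection or step-size schedule. The function names, the step size, and the iteration count are illustrative choices, not values taken from the analysis.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def mixed_prob(w, x, alpha):
    """Mixed probability of class 1 (assumed form of the binary mixing)."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
    return alpha * p + (1.0 - alpha) * (1.0 - p)


def slam_loss(w, x, y, alpha):
    """Cross-entropy between the noisy label y in {0, 1} and the mixed prediction.

    Assumes 1/2 < alpha < 1 so that the mixed probability stays inside (0, 1).
    """
    q = mixed_prob(w, x, alpha)
    return -math.log(q) if y == 1 else -math.log(1.0 - q)


def slam_grad(w, x, y, alpha):
    """Gradient of slam_loss with respect to w (chain rule through the mixing)."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
    q = alpha * p + (1.0 - alpha) * (1.0 - p)
    dloss_dq = -1.0 / q if y == 1 else 1.0 / (1.0 - q)
    scale = dloss_dq * (2.0 * alpha - 1.0) * p * (1.0 - p)
    return [scale * xi for xi in x]


def sgd_slam(data, dim, steps=2000, lr=0.5, seed=0):
    """Plain SGD over (x, y, alpha) triples, starting from w = 0."""
    rng = random.Random(seed)
    w = [0.0] * dim
    for _ in range(steps):
        x, y, alpha = rng.choice(data)
        g = slam_grad(w, x, y, alpha)
        w = [wi - lr * gi for wi, gi in zip(w, g)]
    return w
```

In the RCN setting of Remark 5.2 every example shares the same alpha, so one would flip each clean label independently with probability 1 - alpha and pass that same alpha in every triple given to `sgd_slam`.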

6 Conclusion, Limitations, and Broader Impact

In this work we propose SLaM, a novel and principled method for improving distillation with unlabeled examples. We empirically show that SLaM consistently outperforms the baselines, often by a large margin. We also show that SLaM can be combined with, and improves, (i) knowledge distillation with temperature scaling; (ii) loss functions beyond the standard Cross-Entropy loss; and (iii) confidence-based weighting schemes that down-weight examples where the teacher model is not very confident. In addition to an extensive experimental evaluation, we provide strong theoretical guarantees establishing the consistency and optimality of SLaM. As a byproduct of our theoretical analysis, we obtain a new algorithm for learning -margin halfspaces with RCN that improves the best known sample complexity for this problem.

A limitation of SLaM is that it does not necessarily improve over vanilla distillation when the teacher model makes only a few mistakes (this is to be expected as our method is designed for the case where the teacher-model is imperfect). Moreover, while our theoretical result for learning noisy halfspaces improves the theoretical SOTA, it does not match the information-theoretic lower bound of . An interesting question for future work is whether this can be further improved to match the information-theoretic lower bound.

Knowledge-distillation is a very popular deep learning method, and therefore, potentially malicious usage of our work is an important societal issue, as deep learning has far-reaching applications from NLP to Robotics and Self-Driving cars.

References

- [1] Martín Abadi, Ashish Agarwal, Paul Barham, Eugene Brevdo, Zhifeng Chen, Craig Citro, Greg S Corrado, Andy Davis, Jeffrey Dean, Matthieu Devin, et al. TensorFlow: Large-scale machine learning on heterogeneous distributed systems. arXiv preprint arXiv:1603.04467, 2016.

- [2] Sungsoo Ahn, Shell Xu Hu, Andreas Damianou, Neil D Lawrence, and Zhenwen Dai. Variational information distillation for knowledge transfer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9163–9171, 2019.

- [3] Sungsoo Ahn, Shell Xu Hu, Andreas Damianou, Neil D. Lawrence, and Zhenwen Dai. Variational information distillation for knowledge transfer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2019.

- [4] Ehsan Amid, Manfred KK Warmuth, Rohan Anil, and Tomer Koren. Robust bi-tempered logistic loss based on Bregman divergences. Advances in Neural Information Processing Systems, 32, 2019.

- [5] D. Angluin and P. Laird. Learning from noisy examples. Machine Learning, 2(4):343–370, 1988.

- [6] Eric Arazo, Diego Ortego, Paul Albert, Noel E O’Connor, and Kevin McGuinness. Pseudo-labeling and confirmation bias in deep semi-supervised learning. In 2020 International Joint Conference on Neural Networks (IJCNN), pages 1–8. IEEE, 2020.

- [7] Noga Bar, Tomer Koren, and Raja Giryes. Multiplicative reweighting for robust neural network optimization. arXiv preprint arXiv:2102.12192, 2021.

- [8] Cenk Baykal, Khoa Trinh, Fotis Iliopoulos, Gaurav Menghani, and Erik Vee. Robust active distillation. International Conference on Learning Representations (ICLR), 2023.

- [9] David Berthelot, Nicholas Carlini, Ian Goodfellow, Nicolas Papernot, Avital Oliver, and Colin A Raffel. Mixmatch: A holistic approach to semi-supervised learning. Advances in neural information processing systems, 32, 2019.

- [10] A. Blum, A. Frieze, R. Kannan, and S. Vempala. A polynomial-time algorithm for learning noisy linear threshold functions. Algorithmica, 22(1):35–52, 1998.

- [11] Tom Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared D Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, et al. Language models are few-shot learners. Advances in neural information processing systems, 33:1877–1901, 2020.

- [12] Cristian Buciluǎ, Rich Caruana, and Alexandru Niculescu-Mizil. Model compression. In Proceedings of the 12th ACM SIGKDD international conference on Knowledge discovery and data mining, pages 535–541, 2006.

- [13] T. Bylander. Learning linear threshold functions in the presence of classification noise. In Proceedings of the seventh annual conference on Computational learning theory, pages 340–347, 1994.

- [14] Hanting Chen, Yunhe Wang, Chang Xu, Chao Xu, and Dacheng Tao. Learning student networks via feature embedding. IEEE Transactions on Neural Networks and Learning Systems, 32(1):25–35, 2020.

- [15] Liqun Chen, Dong Wang, Zhe Gan, Jingjing Liu, Ricardo Henao, and Lawrence Carin. Wasserstein contrastive representation distillation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 16296–16305, 2021.

- [16] Ting Chen, Simon Kornblith, Kevin Swersky, Mohammad Norouzi, and Geoffrey E Hinton. Big self-supervised models are strong semi-supervised learners. Advances in neural information processing systems, 33:22243–22255, 2020.

- [17] Mostafa Dehghani, Arash Mehrjou, Stephan Gouws, Jaap Kamps, and Bernhard Schölkopf. Fidelity-weighted learning. arXiv preprint arXiv:1711.02799, 2017.

- [18] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018.

- [19] I. Diakonikolas, T. Gouleakis, and C. Tzamos. Distribution-independent PAC learning of halfspaces with Massart noise. Advances in Neural Information Processing Systems, 32, 2019.

- [20] Lei Feng, Senlin Shu, Zhuoyi Lin, Fengmao Lv, Li Li, and Bo An. Can cross entropy loss be robust to label noise? In Proceedings of the Twenty-Ninth International Conference on International Joint Conferences on Artificial Intelligence, pages 2206–2212, 2021.

- [21] Benoît Frénay and Michel Verleysen. Classification in the presence of label noise: a survey. IEEE transactions on neural networks and learning systems, 25(5):845–869, 2013.

- [22] Tommaso Furlanello, Zachary Lipton, Michael Tschannen, Laurent Itti, and Anima Anandkumar. Born again neural networks. In International Conference on Machine Learning, pages 1607–1616. PMLR, 2018.

- [23] Dragan Gamberger, Nada Lavrac, and Ciril Groselj. Experiments with noise filtering in a medical domain. In ICML, volume 99, pages 143–151, 1999.

- [24] Aritra Ghosh, Himanshu Kumar, and P Shanti Sastry. Robust loss functions under label noise for deep neural networks. In Proceedings of the AAAI conference on artificial intelligence, volume 31, 2017.

- [25] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [26] Geoffrey E. Hinton, Oriol Vinyals, and Jeffrey Dean. Distilling the knowledge in a neural network. CoRR, abs/1503.02531, 2015.

- [27] Fotis Iliopoulos, Vasilis Kontonis, Cenk Baykal, Gaurav Menghani, Khoa Trinh, and Erik Vee. Weighted distillation with unlabeled examples. In NeurIPS, 2022.

- [28] Lu Jiang, Zhengyuan Zhou, Thomas Leung, Li-Jia Li, and Li Fei-Fei. Mentornet: Learning data-driven curriculum for very deep neural networks on corrupted labels. In International Conference on Machine Learning, pages 2304–2313. PMLR, 2018.

- [29] Akisato Kimura, Zoubin Ghahramani, Koh Takeuchi, Tomoharu Iwata, and Naonori Ueda. Few-shot learning of neural networks from scratch by pseudo example optimization. arXiv preprint arXiv:1802.03039, 2018.

- [30] Alex Krizhevsky, Geoffrey Hinton, et al. Learning multiple layers of features from tiny images. 2009.

- [31] Abhishek Kumar and Ehsan Amid. Constrained instance and class reweighting for robust learning under label noise. arXiv preprint arXiv:2111.05428, 2021.

- [32] Zhenzhong Lan, Mingda Chen, Sebastian Goodman, Kevin Gimpel, Piyush Sharma, and Radu Soricut. ALBERT: A lite BERT for self-supervised learning of language representations. arXiv preprint arXiv:1909.11942, 2019.

- [33] Hunter Lang, Aravindan Vijayaraghavan, and David Sontag. Training subset selection for weak supervision. arXiv preprint arXiv:2206.02914, 2022.

- [34] Dong-Hyun Lee et al. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. In Workshop on challenges in representation learning, ICML, volume 3, page 896, 2013.

- [35] Zhaoqi Leng, Mingxing Tan, Chenxi Liu, Ekin Dogus Cubuk, Jay Shi, Shuyang Cheng, and Dragomir Anguelov. PolyLoss: A polynomial expansion perspective of classification loss functions. In International Conference on Learning Representations, 2022.

- [36] Lu Liu and Robby T Tan. Certainty driven consistency loss on multi-teacher networks for semi-supervised learning. Pattern Recognition, 120:108140, 2021.

- [37] Tongliang Liu and Dacheng Tao. Classification with noisy labels by importance reweighting. IEEE Transactions on pattern analysis and machine intelligence, 38(3):447–461, 2015.

- [38] Michal Lukasik, Srinadh Bhojanapalli, Aditya Menon, and Sanjiv Kumar. Does label smoothing mitigate label noise? In International Conference on Machine Learning, pages 6448–6458. PMLR, 2020.

- [39] Andrew L. Maas, Raymond E. Daly, Peter T. Pham, Dan Huang, Andrew Y. Ng, and Christopher Potts. Learning word vectors for sentiment analysis. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, pages 142–150, Portland, Oregon, USA, June 2011. Association for Computational Linguistics.

- [40] Negin Majidi, Ehsan Amid, Hossein Talebi, and Manfred K Warmuth. Exponentiated gradient reweighting for robust training under label noise and beyond. arXiv preprint arXiv:2104.01493, 2021.

- [41] Rafael Müller, Simon Kornblith, and Geoffrey Hinton. Subclass distillation. arXiv preprint arXiv:2002.03936, 2020.

- [42] Nagarajan Natarajan, Inderjit S Dhillon, Pradeep K Ravikumar, and Ambuj Tewari. Learning with noisy labels. Advances in neural information processing systems, 26, 2013.

- [43] Giorgio Patrini, Alessandro Rozza, Aditya Krishna Menon, Richard Nock, and Lizhen Qu. Making deep neural networks robust to label noise: A loss correction approach. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1944–1952, 2017.

- [44] Hieu Pham, Zihang Dai, Qizhe Xie, and Quoc V Le. Meta pseudo labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 11557–11568, 2021.

- [45] Geoff Pleiss, Tianyi Zhang, Ethan Elenberg, and Kilian Q Weinberger. Identifying mislabeled data using the area under the margin ranking. Advances in Neural Information Processing Systems, 33:17044–17056, 2020.

- [46] Ilija Radosavovic, Piotr Dollár, Ross Girshick, Georgia Gkioxari, and Kaiming He. Data distillation: Towards omni-supervised learning. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4119–4128, 2018.

- [47] Mengye Ren, Wenyuan Zeng, Bin Yang, and Raquel Urtasun. Learning to reweight examples for robust deep learning. In International conference on machine learning, pages 4334–4343. PMLR, 2018.

- [48] Mamshad Nayeem Rizve, Kevin Duarte, Yogesh S Rawat, and Mubarak Shah. In defense of pseudo-labeling: An uncertainty-aware pseudo-label selection framework for semi-supervised learning. In International Conference on Learning Representations (ICLR), 2021.

- [49] Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, et al. ImageNet large scale visual recognition challenge. International journal of computer vision, 115(3):211–252, 2015.

- [50] Hwanjun Song, Minseok Kim, Dongmin Park, Yooju Shin, and Jae-Gil Lee. Learning from noisy labels with deep neural networks: A survey. IEEE Transactions on Neural Networks and Learning Systems, 2022.

- [51] Samuel Stanton, Pavel Izmailov, Polina Kirichenko, Alexander A Alemi, and Andrew G Wilson. Does knowledge distillation really work? Advances in Neural Information Processing Systems, 34:6906–6919, 2021.

- [52] Yonglong Tian, Dilip Krishnan, and Phillip Isola. Contrastive representation distillation. arXiv preprint arXiv:1910.10699, 2019.

- [53] Qizhe Xie, Minh-Thang Luong, Eduard Hovy, and Quoc V Le. Self-training with noisy student improves imagenet classification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 10687–10698, 2020.

- [54] I Zeki Yalniz, Hervé Jégou, Kan Chen, Manohar Paluri, and Dhruv Mahajan. Billion-scale semi-supervised learning for image classification. arXiv preprint arXiv:1905.00546, 2019.

- [55] Xiangli Yang, Zixing Song, Irwin King, and Zenglin Xu. A survey on deep semi-supervised learning. IEEE Transactions on Knowledge and Data Engineering, 2022.

- [56] Zhilu Zhang and Mert Sabuncu. Generalized cross entropy loss for training deep neural networks with noisy labels. Advances in neural information processing systems, 31, 2018.

- [57] Barret Zoph, Golnaz Ghiasi, Tsung-Yi Lin, Yin Cui, Hanxiao Liu, Ekin Dogus Cubuk, and Quoc Le. Rethinking pre-training and self-training. Advances in neural information processing systems, 33:3833–3845, 2020.

- [58] Yang Zou, Zhiding Yu, Xiaofeng Liu, BVK Kumar, and Jinsong Wang. Confidence regularized self-training. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 5982–5991, 2019.

Appendix A Notation

For two vectors we denote by their inner product. We use to denote their element-wise product, i.e., . We use the notation to denote the -th largest element of the vector . We use to denote the difference between the top-2 elements of , i.e., . Moreover, we use to denote the top-k margin, i.e., . Given a function we denote by the gradient of with respect to the parameter .

Appendix B Detailed Description of SLaM

B.1 Estimating the Teacher’s Accuracy Parameters:

Estimating the Teacher’s Accuracy via Isotonic Regression

We now turn our attention to the problem of estimating for each of dataset , i.e., the dataset labeled by the teacher model. In [27] the authors empirically observed that correlates with metrics of the teacher’s confidence such as the “margin”, i.e., the difference between the probabilities assigned to the top-1 class and to the second most likely class according to the teacher’s soft label . In particular, the larger the margin is, the more likely it is that the corresponding teacher label is correct. We exploit (and enforce) this monotonicity by employing isotonic regression on a small validation dataset to learn the mapping from the teacher’s margin at an example to the corresponding teacher’s accuracy .

To perform this regression task we use a small validation dataset with correct labels that the teacher has not seen during training. For every example we compute the corresponding teacher soft-label and compute its margin . For every we also compute the hard prediction of the teacher and compare it with the ground-truth, i.e., for every the covariate and response pair is . We then use isotonic regression to fit a piecewise-constant, non-decreasing function to the data. Sorting the regression data by increasing margin to obtain a list , isotonic regression solves the following task

where the parameter is a lower bound on the values and is a hyper-parameter that we tune. On the other hand, the upper bound for the values can be set to since we know that the true value is at most for every (since it corresponds to the probability that the teacher-label is correct). After we compute the values , for any given the output of the regressor is the value of corresponding to the smallest that is greater than or equal to . This is going to be our estimate for . We remark that finding the values can be done efficiently in time after sorting the data (which has a runtime of ), so the whole isotonic regression task can be done very efficiently.
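The regression step described above can be sketched in a few lines. This is an illustration under stated assumptions rather than the authors' implementation: it uses the standard Pool Adjacent Violators procedure for the monotone fit and enforces the lower and upper bounds by clipping the fitted values; the default `lower_bound=0.5` is only a placeholder for the tuned hyper-parameter, and the function names are hypothetical.

```python
import bisect


def fit_isotonic(margins, correct, lower_bound=0.5, upper_bound=1.0):
    """Fit a non-decreasing step function from teacher margin to accuracy.

    margins: teacher margins on the validation examples.
    correct: 1 if the teacher's hard prediction matched the ground truth, else 0.
    Returns (margins sorted increasingly, fitted accuracy values in that order).
    """
    pairs = sorted(zip(margins, correct))
    xs = [m for m, _ in pairs]
    # Pool Adjacent Violators: keep blocks as [sum, count] and merge two
    # neighbouring blocks whenever the left mean exceeds the right mean.
    blocks = []
    for _, c in pairs:
        blocks.append([float(c), 1])
        while (len(blocks) > 1
               and blocks[-2][0] * blocks[-1][1] > blocks[-1][0] * blocks[-2][1]):
            s, n = blocks.pop()
            blocks[-1][0] += s
            blocks[-1][1] += n
    fitted = []
    for s, n in blocks:
        # Clip each block mean to [lower_bound, upper_bound].
        fitted.extend([min(max(s / n, lower_bound), upper_bound)] * n)
    return xs, fitted


def estimate_accuracy(xs, fitted, margin):
    """Fitted value at the smallest validation margin >= `margin`.

    Margins above every validation margin fall back to the last fitted value.
    """
    i = bisect.bisect_left(xs, margin)
    return fitted[min(i, len(fitted) - 1)]
```

After sorting, the pooling loop does a constant amount of amortized work per example, which matches the remark that the fit is cheap once the data are sorted.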

Estimating .

We now describe our process for estimating the values of and for every example of dataset . Similarly to the binary classification setting, we estimate the accuracy probability using isotonic regression on a small validation dataset. The value of can be set to be equal to a fixed value of for all data, so that the top-k accuracy of the teacher on the validation data is reasonable (say above ). For example, in our ImageNet experiments, we used . We also provide a data-dependent method to find different values for every example . To do this we adapt the method for estimating the top-1 accuracy of the teacher from the validation dataset. For every value of we compute the top-k margin of the teacher’s predictions on the validation data which is equal to the sum of the top-k probabilities of the teacher soft-label minus the probability assigned to the -th class, i.e.,

Using the top-k margin as the covariate and the top-k accuracy as the response we solve the corresponding regression task using isotonic regression to obtain the value representing the probability that the true label belongs in the top-k predictions of the teacher soft-label. For some threshold, say , for every we set to be the smallest value of so that . We empirically observed that using larger thresholds for the top-k accuracy (e.g., or ), is better. We remark that while using the top-k margin as the covariate in the regression task is reasonable, our method can be used with other “uncertainty metrics” of the teacher’s soft-labels, e.g., the entropy of the distribution of after grouping together the top-k elements. The higher this entropy metric is the more likely that the top-k accuracy probability of the teacher is low.
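The data-dependent choice of k can be sketched as follows. Two assumptions are made here: the class whose probability is subtracted in the top-k margin is taken to be the (k+1)-th most likely one (so that k = 1 recovers the ordinary margin), and `accuracy_estimators` is a hypothetical mapping from each candidate k to a fitted regressor that turns a top-k margin into an estimated top-k accuracy.

```python
def topk_margin(probs, k):
    """Sum of the k largest probabilities minus the (k+1)-th largest one."""
    ordered = sorted(probs, reverse=True)
    next_prob = ordered[k] if k < len(ordered) else 0.0
    return sum(ordered[:k]) - next_prob


def choose_k(probs, accuracy_estimators, threshold):
    """Smallest k whose estimated top-k accuracy reaches `threshold`.

    accuracy_estimators: dict {k: callable(top-k margin) -> estimated accuracy}.
    Falls back to the largest available k if no candidate reaches the threshold.
    """
    for k in sorted(accuracy_estimators):
        if accuracy_estimators[k](topk_margin(probs, k)) >= threshold:
            return k
    return max(accuracy_estimators)
```

A confident teacher soft-label therefore gets a small k, while a flat soft-label is assigned a larger one.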

B.2 SLaM for Distillation with Unlabeled Examples: Pseudocode

In this section we present pseudo-code describing the distillation with unlabeled examples setting and the SLaM method, Algorithm 1.

Remark B.1.

We remark that in our experiments, we observed that not normalizing the mixing operation with resulted in better results overall. Therefore, the mixing operation used in our experimental evaluation of SLaM is . For more details we refer the reader to the code provided in the supplementary material.

Appendix C SLaM Consistency

In the following proposition we show that any minimizer of the SLaM loss over the noisy teacher-data must agree with the ground-truth for all (that have positive density). To keep the presentation simple and avoid measurability issues (e.g., considering measure zero sets under ) in the following we will assume that the example distribution is supported on a finite set. We remark that one can easily adapt the proof to hold for any distribution (but the result will hold after excluding measure-zero sets under ).

Proposition C.1 (SLaM Consistency).

Let be the distribution of the teacher-labeled examples of dataset , i.e., we first draw and then label it using the noisy teacher of Definition 3.2. Moreover, assume that there exists some parameter such that the ground-truth . Denote by the SLaM objective. The following hold true.

1. The parameter from the realizability assumption above minimizes the SLaM objective.

2. Assuming further that for all it holds that , we have that any minimizer of the SLaM objective satisfies: for all .

Proof.

Fix any example . By Definition 3.2 we have that the corresponding teacher label is correct with probability and is otherwise a uniformly random incorrect label among the top-k labels according to the teacher soft-label . Recall that for an -dimensional score vector , by we denote the vector that has on the positions of the top-k elements of , e.g., . Conditional on , the corresponding expected noisy teacher label is

We know that the expected teacher label conditional on it being wrong is a uniformly random incorrect label from the top-k labels of the corresponding teacher soft-label . Assume first that , since the ground-truth is represented by a one-hot vector, the distribution of uniformly random incorrect labels conditional on can be written as . For example, if the ground-truth label is then a uniformly random incorrect label has probability distribution . Assume now that and . Then the distribution of the (incorrect) teacher label becomes . Using to denote element-wise multiplication of two vectors, we have

Therefore, we obtain

Therefore, by using the fact that Cross-Entropy is linear in its first argument, we obtain that the expected SLaM loss on some example is

We first have to show that there exists some parameter that matches the (expected) observed labels . Observe first that by using the realizability assumption, i.e., that there exists so that , we obtain that, for every , it holds . In fact, by Gibbs’ inequality (convexity of Cross-Entropy) we have that is a (global) minimizer of the SLaM objective.

We next show that any (global) minimizer of the SLaM objective must agree with the ground-truth for every . Since we have shown that is able to match the (expected) labels any other minimizer must also satisfy . Assume without loss of generality that , i.e., the ground-truth label is . We observe that by using that and the fact that the ground-truth belongs in the top- of the teacher’s predictions conditional that the teacher’s top-1 prediction is incorrect (thus ), we obtain that

Using the fact that we can simplify the above expression to

Using the assumption that we obtain that the term is not vanishing and therefore it must hold that , i.e., the student model must be equal to the ground-truth.

∎
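The key identity in the proof can be checked numerically on a toy case. The sketch below assumes the reading used throughout the argument: when the ground truth g lies in the teacher's top-k, the expected noisy label is alpha * g + (1 - alpha) * (top - g) / (k - 1), and the mixing applied to a student prediction f is alpha * f + (1 - alpha) * (1 - f) * top / (k - 1). The helper names and the 5-class example are illustrative only.

```python
import math


def expected_teacher_label(ground_truth, mask, alpha, k):
    """Expected noisy label when the one-hot ground truth lies inside the top-k mask."""
    return [alpha * g + (1.0 - alpha) * (m - g) / (k - 1)
            for g, m in zip(ground_truth, mask)]


def mix_top_k(student_probs, mask, alpha, k):
    """Assumed mixing operation, written directly in terms of the top-k mask."""
    return [alpha * f + (1.0 - alpha) * (1.0 - f) * m / (k - 1)
            for f, m in zip(student_probs, mask)]


def cross_entropy(target, pred):
    """Cross-entropy, skipping coordinates where the target has zero mass."""
    return -sum(t * math.log(p) for t, p in zip(target, pred) if t > 0.0)


mask = [1.0, 1.0, 1.0, 0.0, 0.0]    # teacher's top-3 classes
truth = [1.0, 0.0, 0.0, 0.0, 0.0]   # ground truth is class 0
wrong = [0.0, 1.0, 0.0, 0.0, 0.0]   # a student that predicts class 1
target = expected_teacher_label(truth, mask, alpha=0.7, k=3)
loss_at_truth = cross_entropy(target, mix_top_k(truth, mask, 0.7, 3))
loss_at_wrong = cross_entropy(target, mix_top_k(wrong, mask, 0.7, 3))
```

Here the mixed ground truth coincides with the expected noisy label, so `loss_at_truth` is the smaller of the two losses, in line with the Gibbs' inequality step of the proof.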

Appendix D Extended Experimental Evaluation

We implemented all algorithms in Python and used the TensorFlow deep learning library [1]. We ran our experiments on 64 Cloud TPU v4s each with two cores.

D.1 Implementation Details: Vision Datasets

Here we present the implementation details for the vision datasets we considered.

Remark D.1.

We note that in all our experiments, “VID” corresponds to the implementation of the loss described in equations (2), (4), and (6) of [2] (which requires appropriately modifying the student model so that we have access to its embedding layer).

Experiments on CIFAR-{10/100} and CelebA

For the experiments on CIFAR-10/100 and CelebA we use the Adam optimizer with initial learning rate . We then proceed according to the following learning rate schedule (see, e.g., [25]):

Finally, we use data-augmentation. In particular, we use random horizontal flipping and random width and height translations with width and height factor, respectively, equal to .

The hyperparameters of each method are optimized as follows. For SLaM we always use as the lower bound for isotonic regression (i.e., the parameter in Algorithm 2). As CelebA is a binary classification benchmark, is naturally set to for all examples. For CIFAR-10/100 we used the data-dependent method for estimating (see Algorithm 2) with threshold parameter . For weighted distillation we do a grid search over updating the weights every epochs and we report the best average accuracy achieved. Finally, for VID we search over for the coefficient of the VID-related term of the loss function, and for the PolyLoss we optimize its hyperparameter over .

Experiments on ImageNet

For the ImageNet experiments we use SGD with momentum as the optimizer. For data-augmentation we use random horizontal flipping and random cropping. Finally, the learning rate schedule is as follows. For the first epochs the learning rate is increased from to linearly. After that, the learning rate changes as follows:

The hyperparameters of each method are optimized as follows. For SLaM we do a hyperparameter search over for the lower bound for isotonic regression, and we keep the best performing value for each potential size of dataset . We used the fixed value for , as the top-5 accuracy of the teacher model was satisfactory (much higher than its top-1 accuracy) on the validation dataset. For Taylor-CE we did a hyper-parameter search for the Taylor series truncation values in . For weighted distillation we compute the weights in a one-shot fashion using the pre-trained student (as in the ImageNet experiments in [27]). For VID we search over for the coefficient of the VID-related term of the loss function, and for the PolyLoss we optimize its hyperparameter over .

D.2 Hard-Distillation

Here we present results on hard-distillation. The hyper-parameters of all methods are chosen the same way as in our soft-distillation experiments, see Section D.1. Tables 5, 6 and 7 contain our results on CIFAR-10, CIFAR-100 and CelebA, respectively. We observe that SLaM outperforms the other baselines in almost all cases. Moreover, for CIFAR-10 and CIFAR-100 hard-distillation performs worse than soft-distillation (as is typically the case), but in CelebA hard-distillation seems to be performing on par with (sometimes even outperforming) soft-distillation. A plausible explanation for the latter outcome is that in our CelebA experiments the teacher and student have different architectures (MobileNet and ResNet, respectively) so that soft-labels from the teacher are not so informative for the student. (This is also a binary classification task where the information passed from the teacher to the student through its soft-labels is limited.)

Table 5: Hard-distillation on CIFAR-10.

| Labeled Examples | | | | | | |
|---|---|---|---|---|---|---|
| Teacher | | | | | | |
| Vanilla | | | | | | |
| Taylor-CE [20] | | | | | | |
| UPS [48] | | | | | | |
| VID [3] | | | | | | |
| Weighted [27] | | | | | | |
| SLaM (Ours) | | | | | | |

Table 6: Hard-distillation on CIFAR-100.

| Labeled Examples | | | | | | |
|---|---|---|---|---|---|---|
| Teacher | 35.97 | 44.65 | 49.62 | 55.68 | 59.19 | 62.05 |
| Vanilla | | | | | | |
| Taylor-CE [20] | | | | | | |
| UPS [48] | | | | | | |
| VID [3] | | | | | | |
| Weighted [27] | | | | | | |
| SLaM (Ours) | | | | | | |

Table 7: Hard-distillation on CelebA.

| Labeled Examples | | | | | | |
|---|---|---|---|---|---|---|
| Teacher | | | | | | |
| Vanilla | | | | | | |
| Taylor-CE [20] | | | | | | |
| UPS [48] | | | | | | |
| VID [3] | | | | | | |
| Weighted [27] | | | | | | |
| SLaM (Ours) | | | | | | |

D.3 Large Movies Reviews Dataset Results

Here we present the results and the implementation details regarding the experiments on the Large Movies Reviews dataset. Recall that we use an ALBERT-large model as the teacher and an ALBERT-base model as the student. We also use 2, 4, 8, or 40 percent (i.e., 500, 1000, 2000, or 10000 examples) of the training dataset as labeled examples and split the remaining data into a validation dataset of 500 examples and an unlabeled dataset U. We compare the methods in the soft-distillation setting. For each trial we train the student model for epochs and keep the best test accuracy over all epochs. We perform trials and report the average accuracy of each method and the variance of the achieved accuracies over the trials. The results of our experiments can be found in Table 8. We remark that we did not implement the UPS method for this dataset as the data-augmentation method for estimating the teacher’s accuracy could not be readily used for this NLP dataset. Moreover, using dropout and Monte Carlo estimation for the uncertainty was also not compatible with the ALBERT model used in this experiment.

Since we are dealing with ALBERT models (which are already pre-trained), we do not pre-train the student model on dataset A except in the case of “weighted-distillation” [27], where we pre-train the student model on dataset A just for epoch. The teacher model is trained using the Adam optimizer for epochs with initial learning rate . The student model is also trained using the Adam optimizer, but for epochs and with learning rate .

The hyperparameters of each method are optimized as follows. For SLaM we do a hyperparameter search over for the lower bound for isotonic regression, and we keep the best performing value for each potential size of dataset . As this is a binary classification benchmark we naturally set for all examples. For weighted distillation we do a grid search over updating the weights every epochs and, similarly, we report the best average accuracy achieved. Finally, for VID (recall also Remark D.1) we search over for the coefficient of the VID-related term of the loss function, and for the PolyLoss we optimize its hyperparameter over .

D.4 Combining with Teacher-Uncertainty-Based Reweighting Techniques

As we discussed in Section 2, our method can in principle be combined with teacher-uncertainty filtering and weighting schemes as these can be seen as preprocessing steps. To demonstrate this, we combine our method with the so-called fidelity-based weighting scheme of [17]. The fidelity weighting scheme reweights examples using some uncertainty measure for teacher’s labels, e.g., by performing random data-augmentations and estimating the variance of the resulting teacher labels or using dropout and Monte Carlo estimation. More precisely, for every example in the teacher-labeled dataset , the fidelity-weighting scheme assigns the weight for some hyper-parameter . In our experiments we performed random data augmentations (random crop and resize), estimated the coordinate-wise variance of the resulting teacher soft-labels, and finally computed the average of the variances of the -classes, as proposed in [17]. We normalized the above uncertainty of each example by the total uncertainty of the teacher over the whole dataset . The weights of examples in dataset are set to and the reweighted objective is optimized over the combination of the datasets .

| (3) |
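The weighting step described above can be sketched as follows. The exponential form exp(-beta * uncertainty) follows the fidelity-weighting idea of [17] and is an assumption here, as are the function names; the normalization divides each example's uncertainty by the total over the dataset, which is a literal reading of the text.

```python
import math


def example_uncertainty(augmented_soft_labels):
    """Average over classes of the coordinate-wise variance across augmentations.

    augmented_soft_labels: list of teacher soft-labels (one list per random
    augmentation of the same example), all with the same number of classes.
    """
    n = len(augmented_soft_labels)
    num_classes = len(augmented_soft_labels[0])
    total = 0.0
    for c in range(num_classes):
        vals = [label[c] for label in augmented_soft_labels]
        mean = sum(vals) / n
        total += sum((v - mean) ** 2 for v in vals) / n
    return total / num_classes


def fidelity_weights(uncertainties, beta):
    """Per-example weights exp(-beta * u / sum(u)); assumed form, see lead-in."""
    total = sum(uncertainties)
    if total == 0.0:
        # The teacher is equally certain everywhere: no reweighting.
        return [1.0] * len(uncertainties)
    return [math.exp(-beta * u / total) for u in uncertainties]
```

Larger values of `beta` push the weights of uncertain examples closer to zero, which is the "removing" regime, while small values reweight only mildly.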

To demonstrate the composability of our method with such uncertainty-based weighting schemes, we use CIFAR-100 and the percentage of the labeled dataset A (as a fraction of the whole training set) is , similar to the setting of Section 4.2. The teacher is a ResNet110 and the student is a ResNet56. We first train the student using only the fidelity weighting scheme, i.e., optimize the loss function of Equation 3 using different values for the hyperparameter , i.e., ranging from mildly reweighting the examples of dataset B to more aggressively “removing” examples where the teacher’s entropy is large. For the same values of we then train the student using the reweighted SLaM objective:

| (4) |

For the combined SLaM + Fidelity method we did not perform a hyper-parameter search and used the same parameters for the isotonic regression as we did in the “standard” SLaM experiment on CIFAR-100 of Section D.1. We present our comprehensive results for all sizes of dataset A and values of the hyper-parameter in Figure 5. Our results show that, regardless of the value of the hyperparameter and the size of the labeled dataset A, using SLaM together with the fidelity weighting scheme provides consistent improvements. Moreover, in Figure 5, we observe that by using SLaM the achieved accuracy depends less on the hyper-parameter : since SLaM takes into account the fact that some of the teacher’s predictions are incorrect, it is not crucial to down-weight them or filter them out.

D.5 Using Distillation Temperature

In this section we show that our approach can be effectively combined with temperature-scaling [26]. Choosing the right distillation temperature often provides significant improvements. In our setting, the teacher provides much more confident predictions (e.g., soft-labels with high-margin) on dataset A (where the teacher was trained) compared to the teacher soft-labels of dataset B where the teacher is, on average, less confident. Given this observation, it is reasonable to use different distillation temperatures for dataset A and dataset B. We try different temperatures for dataset A and dataset B and perform vanilla distillation with temperature and also consider applying the temperature scaling before applying SLaM. For each size of dataset A we try pairs of temperatures and report the best accuracy achieved by vanilla distillation and the best achieved by first applying temperature scaling and then SLaM. In Figure 6 we observe that SLaM with temperature scaling consistently improves over vanilla distillation with temperature.
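As an illustration of the temperature step, the following sketch rescales a soft-label with a temperature T. It assumes access either to the teacher's logits or, when only probabilities were stored, to their logarithms (raising probabilities to the power 1/T and renormalizing is equivalent). The function names and the small probability floor are illustrative choices.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Softmax of logits / temperature, computed in a numerically stable way."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def temper_probs(probs, temperature, floor=1e-12):
    """Temperature-scale a soft-label when only probabilities are available."""
    logs = [math.log(max(p, floor)) for p in probs]
    return softmax_with_temperature(logs, temperature)
```

One temperature would be applied to the soft-labels of dataset A and a possibly different one to those of dataset B, and the tempered soft-labels of dataset B would then be passed to SLaM in place of the raw ones.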

D.6 Using SLaM with other loss functions beyond cross-entropy

In this section, we demonstrate that our method can be successfully applied when the student loss function comes from the families of losses introduced in [20] and [35]. We perform experiments on CIFAR-100 and ImageNet following the setting of Section 4.2. In particular, we compare vanilla distillation with unlabeled examples using the Taylor-CE loss of [20] and the PolyLoss of [35], with combining SLaM with these losses. For the Taylor-CE loss we set the “degree” hyperparameter to be (as suggested in [20]) and we set the hyperparameter of the PolyLoss to be (as suggested in [35]). The corresponding results can be found in Figure 7.
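For reference, the two loss families can be sketched in their commonly stated hard-label forms, as functions of the probability `p_true` that the model assigns to the target class. This is an illustrative sketch: the function names are assumptions, and the hyperparameter values below are placeholders rather than the ones used in our experiments.

```python
import math

def cross_entropy(p_true):
    """Standard cross-entropy for the probability assigned to the target class."""
    return -math.log(p_true)

def taylor_ce(p_true, degree):
    """Truncated Taylor series of -log(p): sum_{k=1}^{degree} (1 - p)^k / k."""
    return sum((1.0 - p_true) ** k / k for k in range(1, degree + 1))

def poly1_loss(p_true, epsilon):
    """Poly-1 form of the PolyLoss: cross-entropy plus epsilon * (1 - p)."""
    return cross_entropy(p_true) + epsilon * (1.0 - p_true)

p = 0.7
print(cross_entropy(p), taylor_ce(p, degree=2), poly1_loss(p, epsilon=1.0))
```

Degree 1 of the Taylor-CE loss gives `1 - p_true`, and the loss approaches the cross-entropy as the degree grows; the Poly-1 loss reduces to the cross-entropy when `epsilon` is 0.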

Appendix E Distilling Linear Models and Learning Noisy Halfspaces

In this section we state and prove our convergence result for the SLaM method when applied to linear models. Our assumption is that the ground-truth corresponds to a halfspace, i.e., for some unknown weight vector . We show that using SLaM with a linear model as the student will recover the ground truth classifier. We make the standard assumption that the ground-truth halfspace has -margin, i.e., that and that it holds for all examples . For a fixed example , the observed noisy teacher-label satisfies Definition 3.2, i.e., w.p. and w.p. (since for binary classification). Our approach consists of using the standard cross-entropy loss and training a student-model consisting of a linear layer plus a soft-max activation, i.e.,

Recall that for binary classification, we define the mixing operation as

Theorem E.1 (Student Label Mixing Convergence).

Let be a distribution on and be the ground-truth halfspace with normal vector . Let be the distribution over (noisy) teacher-labeled examples whose -marginal is . We denote by the probability that the teacher label is correct, i.e., . Assume that there exist such that for all examples in the support of it holds that and . Let . After iterations of SLaM (Algorithm 3), with probability at least , there exists an iteration where .

Remark E.2 (High-Probability Result).

We remark that even though our learner succeeds with constant probability (at least ) we can amplify its success probability to by standard amplification techniques (i.e., by repeating the algorithm times and keeping the best result). To achieve success probability the total sample complexity is .
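The amplification argument can be sketched as follows: if a single run succeeds with probability at least `p_success`, then all of `k` independent runs fail with probability at most `(1 - p_success)**k`. The helper names, the `run_once` and `score` callables, and the numeric values are hypothetical; in particular, selecting the best run presupposes some way of scoring the candidates.

```python
import math

def repetitions_needed(p_success, delta):
    """Smallest k such that (1 - p_success)**k <= delta."""
    return math.ceil(math.log(delta) / math.log(1.0 - p_success))

def amplify(run_once, score, k):
    """Run a randomized learner k times and keep the highest-scoring result.

    run_once(seed) returns a candidate model; score(model) returns a number
    where larger is better. Both are placeholders supplied by the caller.
    """
    candidates = [run_once(seed) for seed in range(k)]
    return max(candidates, key=score)

k = repetitions_needed(p_success=0.5, delta=0.01)
print(k)
```

The number of repetitions grows only logarithmically in `1 / delta`, which is why the total sample complexity increases by a logarithmic factor.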

Proof.

We first provide simplified expressions for the gradient of the SLaM objective and the update vectors used in Algorithm 3. In what follows we remark that for any binary classification model we have the following identities: (i) , where to simplify notation we overload the mixing operation to also act on the scalar , i.e., ; and (ii) .

Lemma E.3 (SLaM Gradient).

The gradient of the SLaM objective is equal to

where

Let be the expected student label mixing loss conditional on some example . It holds

Proof.

We first show the formula

| (5) |

Using the chain rule, we obtain

Now we observe that for binary classification, it holds that , , and therefore, also to obtain the simplified expression:

Further simplifying the above expression, we obtain:

Using again the chain rule we obtain that

Using the fact that the derivative of the sigmoid function , is , and the chain rule, we obtain that . Putting everything together we obtain the claimed formula for .

To obtain the gradient formula for the expected loss conditional on some fixed example , we can use the fact that Now using the formula of Equation 5 and the fact that by the definition of our noise model, we obtain that

∎
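As a numerical sanity check of this kind of gradient computation, the sketch below differentiates a binary SLaM-style loss by hand and compares the result against finite differences. It assumes a sigmoid student `f = sigmoid(w . x)` giving the probability of label +1, a teacher label `y` in {+1, -1} that is correct with probability `alpha`, and the binary mixing `alpha * f + (1 - alpha) * (1 - f)`. The closed form in `slam_grad` is derived for this sketch and is not a transcription of the formula in Lemma E.3.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def mix(p, alpha):
    """Binary mixing: keep the prediction w.p. alpha, flip it w.p. 1 - alpha."""
    return alpha * p + (1.0 - alpha) * (1.0 - p)

def slam_loss(w, x, y, alpha):
    """Cross-entropy between the teacher label y and the mixed student prediction."""
    z = sum(wi * xi for wi, xi in zip(w, x))
    m_pos = mix(sigmoid(z), alpha)            # mixed probability of label +1
    m_y = m_pos if y == 1 else 1.0 - m_pos    # mixed probability of the observed label
    return -math.log(m_y)

def slam_grad(w, x, y, alpha):
    """Gradient of slam_loss with respect to w, obtained via the chain rule."""
    z = sum(wi * xi for wi, xi in zip(w, x))
    f = sigmoid(z)
    m_pos = mix(f, alpha)
    m_y = m_pos if y == 1 else 1.0 - m_pos
    coeff = -y * (2.0 * alpha - 1.0) * f * (1.0 - f) / m_y
    return [coeff * xi for xi in x]

def numerical_grad(w, x, y, alpha, h=1e-6):
    """Central finite differences, used only to check slam_grad."""
    grad = []
    for i in range(len(w)):
        up = list(w); up[i] += h
        down = list(w); down[i] -= h
        grad.append((slam_loss(up, x, y, alpha) - slam_loss(down, x, y, alpha)) / (2 * h))
    return grad

w, x, y, alpha = [0.3, -0.2], [1.0, 2.0], -1, 0.8
print(slam_grad(w, x, y, alpha))
print(numerical_grad(w, x, y, alpha))
```

With `alpha = 1` the mixing is the identity and the expression reduces to the usual logistic-regression gradient, which is a quick way to check the signs.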

We first show the following claim proving that after roughly gradient iterations the student parameter vector will have good correlation with the ground-truth vector .

Claim 1.

Fix any larger than a sufficiently large constant multiple of , and assume that for all it holds that . Then, we have , with probability at least .

Proof.

Denote by the update vector used in Algorithm 3. We observe that the weight vector at round is equal to . In what follows we denote by the filtration corresponding to the randomness of the updates of Algorithm 3. We define the martingale with . We first show that under the assumption that , for all , it holds that . Using the SLaM gradient expression of Lemma E.3 and the definition of the step size we obtain that . Take any step . We have that

where we used the fact that . Now, using the -margin assumption of the distribution and the fact that we obtain

where for the penultimate inequality we used the fact that when and disagree it holds that . Take, for example, the case where . Then must be smaller than otherwise the prediction of the model would also be (and would agree with the prediction of ). Finally, for the last inequality we used the fact that, by our assumption, it holds that . Therefore, we conclude that . Next, we shall show that also achieves good correlation with the optimal direction with high probability. We will use the fact that is a martingale and the Azuma-Hoeffding inequality to show that will not be very far from its expectation.

Lemma E.4 (Azuma-Hoeffding).

Let be a martingale with bounded increments, i.e., . It holds that .
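As an illustration of this type of concentration bound, the sketch below compares the empirical tail of a simple ±1 random walk (a martingale with increments bounded by 1) with the standard two-sided Azuma-Hoeffding bound `2 * exp(-lam**2 / (2 * n * c**2))`. The two-sided form, the constants, and the function names are assumptions of this sketch; the exact statement used in the lemma may differ, for instance by being one-sided.

```python
import math
import random

def azuma_bound(lam, n, c=1.0):
    """Two-sided Azuma-Hoeffding bound for n increments bounded by c."""
    return 2.0 * math.exp(-lam ** 2 / (2.0 * n * c ** 2))

def empirical_tail(lam, n, trials, seed=0):
    """Fraction of +/-1 random walks of length n that end at distance >= lam from 0."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        position = sum(rng.choice((-1, 1)) for _ in range(n))
        if abs(position) >= lam:
            hits += 1
    return hits / trials

print(empirical_tail(lam=30, n=100, trials=2000), azuma_bound(lam=30, n=100))
```

The empirical frequency should fall below the bound, which is typically loose for a walk this simple.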

Recall that from Lemma E.3 we have that and

Observe that since for all it holds that . Therefore, the difference with probability . Since , using Cauchy-Schwarz, we also obtain that .

Using Lemma E.4, and the fact that we obtain that Therefore we conclude that for any larger than , with probability at least , it holds that or equivalently , where we used our previously obtained bound for the expected updates .

∎

Claim 2.

Fix any . Then, we have , with probability at least .

Proof.

We have that . Unrolling the iteration, we obtain that

| (6) |

where we used the fact that, since , it holds that (see the proof of Claim 1). We first show that . We have

We will show that for it holds that

Fix some and let . Assume first that . Then, we have

where we used the fact that for and for (using the elementary inequality for all ). When we similarly have that

where we used the fact that when it holds that and when , . For the final inequality, we used again the inequality for all (where we replaced with ).

Therefore, we obtain that and . Using the decomposition of Equation 6, linearity of expectation, and the tower rule for conditional expectations, we conclude that . Using Markov’s inequality we obtain that with probability at least 99% it holds that or equivalently .

∎

We can now finish the proof of Theorem 5.1. Assume, in order to reach a contradiction, that for all it holds that . Now picking to be larger than a sufficiently large constant multiple of and using Claim 1 and Claim 2 we obtain that, with probability at least , it holds that , which can be made to be larger than by our choice of . However, this is a contradiction as by Cauchy-Schwarz we have . Therefore, with probability at least , it must be that for some it holds that .

∎