Skill-Based Reinforcement Learning with Intrinsic Reward Matching

Abstract

While unsupervised skill discovery has shown promise in autonomously acquiring behavioral primitives, there is still a large methodological disconnect between task-agnostic skill pretraining and downstream, task-aware finetuning. We present Intrinsic Reward Matching (IRM), which unifies these two phases of learning via the skill discriminator, a pretraining model component often discarded during finetuning. Conventional approaches finetune pretrained agents directly at the policy level, often relying on expensive environment rollouts to empirically determine the optimal skill. However, often the most concise yet complete description of a task is the reward function itself, and skill learning methods learn an intrinsic reward function via the discriminator that corresponds to the skill policy. We propose to leverage the skill discriminator to match the intrinsic and downstream task rewards and determine the optimal skill for an unseen task without environment samples, consequently finetuning with greater sample-efficiency. Furthermore, we generalize IRM to sequence skills for complex, long-horizon tasks and demonstrate that IRM enables us to utilize pretrained skills far more effectively than previous skill selection methods on both the Fetch tabletop and Franka Kitchen robot manipulation benchmarks.

Correspondence to [email protected], [email protected]

Code and visualizations are available at https://github.com/ademiadeniji/irm

1 Introduction

Generalist agents must possess the ability to execute a diverse set of behaviors and flexibly adapt them to complete novel tasks. Although deep reinforcement learning has proven to be a potent tool for solving complex control and reasoning tasks such as in-hand manipulation [25] and the game of Go [30], specialist deep RL agents learn new tasks from scratch, possibly collecting new data and learning to new objectives with no prior knowledge. This presents a massive roadblock in the integration of RL in many real-time applications, such as robotic control, where collecting data and resetting robot experiments is prohibitively costly [17].

Recent progress in scaling multitask reinforcement learning [27, 18] has revealed the potential of multitask agents to encode vast skill repertoires, rivaling the performance of specialist agents and even generalizing to out-of-distribution tasks. Moreover, skill-based unsupervised RL [19, 22, 29] shows promise of acquiring similarly useful behaviors but without the expensive per-task supervision required for conventional multitask RL. Recent skill-based RL results suggest that unsupervised RL can distill diverse behaviors into distinguishable skill policies; however, such approaches lack a principled framework for connecting unsupervised pretraining and downstream finetuning. The current state-of-the-art leverages inefficient skill search methods at the policy level such as performing a sampling-based optimization or sweeping a coarse discretization of the skill space [20]. However, such methods still exhibit key limitations; namely they (1) rely on expensive environment trials to evaluate which skill is optimal, and (2) are likely to select suboptimal behaviors as the continuous skill space grows due to the curse of dimensionality.

In this work, we present Intrinsic Reward Matching (IRM), a scalable algorithmic methodology for unifying unsupervised skill pretraining and downstream task finetuning by leveraging the learned intrinsic reward function parameterized by the skill discriminator. Centrally, we introduce a novel approach to leveraging the intrinsic reward model as a multitask reward function that, via interaction-free task inference, enables us to select the most optimal pretrained policy for the extrinsic task reward. During pretraining, unsupervised skill discovery methods learn a discriminator-parameterized, family of reward functions that correspond to a family of policies, or skills, through a shared latent code. Instead of discarding the discriminator during finetuning as is done in prior work, we observe that the discriminator is an effective task specifier for its corresponding policy that can be matched with the extrinsic reward, allowing us to perform skill selection while bypassing brute force environment trials. Our approach views the extrinsic reward as a distribution with measurable proximity to a pretrained multitask reward distribution and formulates an optimization with respect to skills over a reward distance metric called EPIC [12].

Contributions The key contributions of this paper are summarized as follows:

-

•

We describe a unifying discriminator reward matching framework and introduce a practical algorithm for selecting skills without relying on environment samples (Section 3).

-

•

We evaluate IRM on a suite of robot manipulation environments, underscoring the limitations of previous approaches and showing that our method enables more efficient finetuning (Section 4.1).

-

•

We generalize IRM to sequence pretrained skills and solve more challenging, long-horizon manipulation tasks (Section 4.2), as well as ablate key algorithmic components.

-

•

We provide analysis and visualizations that yield insight into how skills are selected and further justify the generality of IRM (Section 5).

2 Background

2.1 Unsupervised Skill Pretraining

The skill learning literature has long sought to design agents that autonomously acquire structured behaviors in new environments [33, 32, 26]. Recent work in competence-based unsupervised RL proposes generic objectives encouraging the discovery of skills representing diverse and useful behaviors [7, 29, 19]. A skill is defined as a latent code vector that indexes the conditional policy . In order to learn such a policy, this class of skill pretraining algorithms maximizes the mutual information between sampled skills and their resulting trajectories [13, 6, 28] :

| (1) |

Commonly, methods consider or , and we leave more details in Appendix 8.2. Since the mutual information is intractable to calculate in practice, competence-based methods instead maximize a variational lower bound proposed in [2] which is parameterized by a learned neural network function called a skill discriminator. This discriminator, along with other terms independent of , parameterizes an intrinsic reward that the skill policy maximizes during pretraining. Given an unseen task specification, the agent needs to infer which skill will finetune to solve the task with the fewest samples. For more detailed explanations of the mutual information decompositions of various skill discovery algorithms, refer to Appendix 8.2.

Pretrained Multitask Reward Functions: We observe that the intrinsic reward function learned during skill pretraining can be viewed as a multitask reward function, where the continuous skill code determines the task. In other words, we have some function:

| (2) |

where is the variational lower bound proposed in [2] ( is a trajectory representation such as ). Since skill discovery algorithms aim to maximize , we can view its parameterized lower bound VLB as a multitask reward function: scoring transitions based on their alignment with a skill code [19].

2.2 Equivalent-Policy Invariant Comparison

We can formalize a general notion of reward function similarity by equivalent-policy invariant comparison (EPIC) as established in [12]. EPIC defines a distance metric between two reward functions such that similar reward functions induce similar optimal policies. We consider the case of action-independent reward:

| (3) |

where is the Pearson distance between two random variables and , are samples from the Pearson distribution , and are batches sampled from the Canonical distribution . We compute the Pearson distance over Pearson samples , with additional canonicalization with batches to ensure invariance over constant shifts and scaling. The canonicalized reward function is defined as:

| (4) |

where is a reward function and is the discount factor [12]. The expectation is taken over the Canonical distribution ; for simplicity, we sample these batches , ahead of time. The canonicalization ensures invariance to reward shaping such that rewards that have different shaping but induce similar optimal policies are close in distance. In practice, the final term can be omitted as the Pearson correlation is invariant to constant shifts and scaling.

3 Intrinsic Reward Matching

3.1 Task Inference via Intrinsic Reward Matching

A multitask reward function that can supervise the learning of diverse behaviors is useful in its own right. However, in the case of skill-based RL, we have additionally learned a corresponding . Therefore, for any “task" that can be specified by our intrinsic reward function, we already have an optimal policy, so long as we condition on the corresponding skill. If we have learned a sufficiently diverse library of skills, we might expect that some of our skills share behavioral similarity to the optimal policy for the downstream task. It thus also holds that the corresponding intrinsic reward for that skill is a semantically similar task specification to the downstream task.

Given this interpretation of intrinsic reward, we posit that the task of identifying which our pretrained skills to apply to a downstream task can be reframed as inferring which task in our multitask reward function is most similar to the downstream task. Moreover, we should hope to find the skill code that produces the reward function most semantically aligned with the downstream task reward.

With this formalism, we can formulate the task inference problem as performing the following optimization:

| (5) |

in order to find most aligned with the task reward. Moreover, Equation 5 performs a minimization of a novel loss we name the EPIC loss with respect to the skill parameter . By EPIC’s equivalence class invariance, we know that if the EPIC loss is small for some , and is near optimal for , then approaches the optimal policy for the task as specified by . Notably, we require access to the task reward function to compute the EPIC loss. Leveraging a known task reward function is a divergence from conventional skill selection methods.

Computing during reward matching During pretraining, for some methods such as [19, 29], we require negative samples in order to compute the variational objective in Equation 2 and avoid a degenerate optimization where all embedded trajectories have high similarity with all skills. However, during selection when skills are fixed, the negative sampling component amounts to a reward offset which does not impact the task semantics. Furthermore, since we may not in general have access to a large amount of negative samples on a given downstream task, we choose to simplify the objective to the following:

| (6) |

where is the skill discriminator. This parameterization of the intrinsic reward preserves the alignment semantics of VLB without the normalization by negative samples. For more details regarding the discriminator parameterization of the intrinsic reward for [19, 29] refer to Appendix 8.3 and Appendix 8.4.

3.2 EPIC Sample-Based Approximation

We make a number of sample-based approximations of various unknown quantities in order to concretize the continuous optimization Equation 5 as a tractable loss minimization problem.

Canonical State Distribution Approximation: In order to canonicalize our reward functions, we estimate the expectation over the state and next state distributions with a sample-based average over 1024 samples. These distributions can be entirely arbitrary, though using heavily out-of-distribution samples with respect to pretraining can weaken the accuracy of the approximation. We choose to instantiate a uniform distribution bounded by known workspace constraints for both of these distributions.

Sampling Distribution for Pearson Correlation: We find that generating samples uniformly roughly within the environment workspace bounds, just as with the reward canonicalization, often leads to strong approximations. Furthermore, as both sample generation and relatively inexpensive function evaluation are independent of the online-finetuning phase, we can perform the full skill optimization as a self-contained preprocess to downstream policy adaptation without any environment samples. Rough knowledge of workspace bounds represents some amount of prior environment knowledge. We leave more general options such as sampling from a learned generative model over trajectories encountered during pretraining or sampling from saved pretraining data to future work. We ablate various sampling distribution choices in Table 7 and present the full algorithm in detail in Algorithm 1.

3.3 Generalization to Skill Sequencing

Many realistic downstream tasks derive additional complexity from temporally extended planning horizons. In contrast to hierarchical reinforcement learning (HRL) approaches, which aim to stitch together pretrained skills at the policy level with a higher-level manager policy, we can extend the task matching framework of IRM to efficiently solve the problem of skill sequencing, entirely doing away with the manager policy. Consider the long-horizon setting where we have a sequence of reward functions over task horizon . Central to the finetuning problem is determining over what time intervals should potentially different pretrained skills be selected. In this work, we predetermine a fixed skill horizon , where is the number of rewards. This skill horizon could also be specified as a parameter and learned from the task reward signal.

Next, in order to perform skill selection over each time interval, we perform the IRM algorithm in parallel or sequentially for each reward. We note the key assumption that IRM requires access to the reward functions for each of the subtasks. For example, for a sequential goal reaching task, we divide the episode into segments for each of the goals and corresponding rewards. We perform the IRM skill selection algorithm for each reward to select the optimal skill over each interval. After selecting the skills, we freeze our selections and finetune the skill policies jointly.

4 Experiments

In this section we aim to experimentally evaluate whether IRM improves the adaptation sample-efficiency of skill finetuning on a downstream reinforcement learning task as compared to baselines. For pretraining skills, we experiment with both the CIC [19] and DADS [29] algorithms. We consider IRM Random a version of IRM that randomly samples skills and picks the one with the lowest EPIC loss, IRM CEM which selects elites as those skills with the lowest EPIC loss, and IRM Gradient Descent which minimizes the EPIC loss using the Adam optimizer and uses backpropagation through the discriminator to regress the optimal skill.

Task IRM CEM IRM GD IRM Rand Env Roll. Env CEM GS Relabel Rand Fetch Reach 95.9 1.0 87.5 0.20 92.5 1.1 85.0 6.2 87.8 1.9 97.3 0.00 43.9 0.00 16.7 19 Fetch Push 80.2 2.5 73.1 0.48 77.6 2.7 74.3 0.92 75.4 2.6 72.1 0.00 23.6 0.023 51.5 12.5

Task IRM Rand Seq IRM CEM Seq IRM GD Seq Env Seq HRL Fetch Reach Seq Easy 88.1 1.5 89.5 0.34 86.7 0.64 80.7 4.7 28.4 31.0 Fetch Reach Seq Hard 73.4 2.8 66.4 1.1 72.4 3.3 62.7 6.1 28.0 30.6 Fetch Barrier Easy 29.3 16.4 49.0 22.1 57.5 9.5 65.5 9.0 30.1 20.0 Fetch Barrier Hard 43.3 16.3 48.1 17.8 88.2 10.4 65.4 5.6 42.8 18.2 Fetch Tunnel Easy 20.9 14.3 40.8 13.0 49.1 8.4 52.2 9.1 32.2 13.9 Fetch Tunnel Hard 25.6 9.4 58.4 1.8 35.7 1.3 14.5 4.8 33.7 13.9

Task IRM CEM IRM GD Env Roll. Env CEM GS Rand Kettle 105 0.00 96.2 6.3 105 0.27 105 0.00 89.1 0.00 18.7 26.4 Slide Cabinet 5.00 0.00 5.00 0.00 4.81 0.13 5.00 0.00 3.51 0.00 2.00 0.34 Hinge Cabinet 175 0.00 175 0.00 175 0.00 175 0.00 175 0.00 7.02 75 Light Switch 182 9.3 187 12.9 190 13.4 208 0.32 141 0.00 53.7 31

Intr. Rew. IRM CEM IRM GD IRM Rand Env Roll. Env CEM GS Rand DADS 83.4 2.19 69.9 2.22 77.2 3.83 74.6 5.15 70.3 5.38 68.9 2.81 28.3 13.5

Fetch and Franka Kitchen Environments

Environments We design both a goal reaching and a tabletop pushing environment in the OpenAI Gym Fetch environment [5]. We instantiate a single-task variant in both environments as well as extend both environments to the long-horizon sequential task setting. In the case of the block pushing environment, we introduce obstacles around which the policy must manipulate the object. For long-horizon goal reaching, we further validate across two levels of difficulty (Easy, Hard) based on the environment time limit by which the agent must solve the task. For the tabletop manipulation environment, we validate across two levels of difficulty (Easy, Hard). The Easy tasks consists of reaching two waypoints, and the Hard tasks consists of reaching three. Further task design details are provided in Appendix 8.5.

Baselines We benchmark many conventional finetuning approaches after a single skill pretraining phase of Contrastive Intrinsic Control (CIC) [19]. The Grid Search (GS) baseline coarsely sweeps each of 10 skills evenly from the all 0’s skill vector to the all 1’s skill vector and finetunes the skill which achieves the best evaluation reward over an episode. Env Rollout randomly samples 10 skills to evaluate with a rollout and Env Rollout CEM uses the episode reward as the metric by which to select elites. Random Skill selects a skill at random. Relabel relabels saved skill rollouts obtained during pretraining with the task reward function, and selects the skill that achieved the highest reward. All baselines use the TD3 [11] RL algorithm.

Evaluation First, we pretrain each RL agent with the intrinsic rewards for 2M steps. Then, we finetune each agent to the downstream task with extrinsic rewards roughly until convergence for each task. Since our primary contribution involves skill selection, we especially focus on zero-shot episode rewards: rewards achieved by a selected skill policy but without any RL updates on the task reward. We report results averaged over 3 seeds with standard error bars.

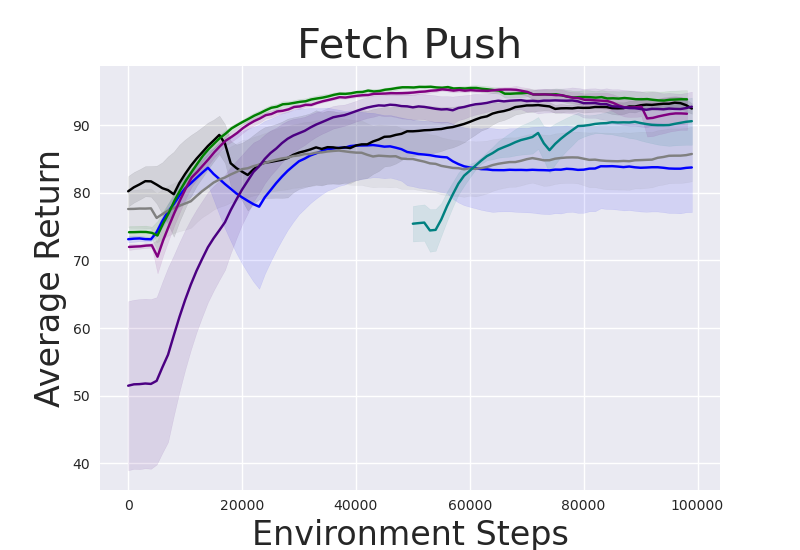

4.1 Tabletop Manipulation

Reach Target We first evaluate IRM on the Reach Target task, where the Fetch robot is rewarded for reaching a target position. IRM outperforms or performs similarly to environment-rollout methods while requiring no environment samples to perform skill selection. As shown in Table 1, the random skill policy performs particularly poorly and with very high variance relative to the IRM and environment-rollout based methods. Moreover, appropriate skill selection is required for strong zero-shot performance as certain skills obtain much higher rewards than others. Reward relabelling fails to consistently select optimal skills across the benchmark, likely because its options are limited to the finite set of skills sampled during pretraining. IRM by contrast leverages continuous optimization in the infinite skill space to find the best skill for the task. Figure 3 shows the finetuning performance of the methods on the downstream task reward.

Push Block to Goal Next, we evaluate IRM on a more complex manipulation task involving pushing a block to a goal position. We report the zero-shot IRM skill selection performance in Table 1 and finetuning performance in Figure 3. This more complex task similarly benefits from bootstrapping the appropriate pretrained skill policy as evidenced by the performance gap of the selection based methods over random skill selection. We remark that even for more complex manipulation tasks, IRM is robust in consistently guiding optimal skill selection without requiring any interaction with the environment. Although Env Rollout CEM is the strongest interaction baseline in terms of zero-shot reward, it far exceeds a reasonable computational budget of interactions entirely on skill selection. For illustrative purposes, we display the plot although heavily shifted.

Franka Kitchen Manipulation Lastly, we evaluate IRM on 4 manipulation tasks in the Franka Kitchen benchmark [10]. We make no changes to the default environment rewards for each task, which do not include any shaping for reaching to the correct object, and thus present a harder exploration problem. We similarly observe favorable zero-shot performance of the IRM method, in the case of hinge cabinet and slide cabinet, solving the task zero-shot without any finetuning (Table 3, Figure 3). This zero-shot adaptation behavior presents a compelling means for agents to immediately deploy optimal policies in highly sample-sensitive applications.

Finetuning Performance on Fetch and Franka Kitchen

4.2 Extensions and Ablations

Long-Horizon Manipulation Building on the results in Section 4.1, we demonstrate that IRM fully generalizes to solving long-horizon tasks in the setting of tabletop manipulation. During the unsupervised pretraining phase, skill discovery methods can acquire useful skills such as directional block pushing or pushing the block to certain spatial locations. We show that IRM can intelligently select a sequence of such skills to finetune via reward matching, avoiding learning a hierarchical manager policy that finetunes at the policy level.

For the Fetch Reach Sequential tasks, we consider an extended horizon where the agent is tasked with reaching a sequence of goals in a particular order. For the Fetch barrier tasks, we consider the environments such as depicted in Figure 2, where the agent must navigate around a barrier introduced during the finetuning phase in order to reach the goal. The tunnel environments consists of a goal enclosed in a tunnel; the agent must navigate around and into the tunnel to complete the task. We compare IRM methods to an environment rollout baseline (Env Seq) and a hierarchical RL baseline (HRL). The ‘IRM Seq’ methods select skills based on each defined sub-task’s reward function according to the IRM optimization scheme. ‘Env Seq’ chooses the best combination of skills based on extrinsic reward from rollouts. ‘HRL’ is initialized with random skills and simultaneously optimizes a manager policy over skills and the skill policies themselves. In both settings, IRM methods outperform the environment rollout method and in some cases the HRL method in identifying (Table 2) and finetuning skills (Figure 3). Implementation details are provided in Appendix 8.9.

| Reward Matching | IRM CEM |

|---|---|

| IRM | 21.0 0.75 |

| L1 | 8.71 0.70 |

| L2 | 7.87 0.86 |

| L1 + Learn Scale | 5.51 1.9 |

| L2 + Learn Scale | 3.95 2.2 |

Matching Metric Ablations We validate the importance of employing the EPIC pseudometric for formulating the matching loss by ablating its contribution against more naive selections in Table 5. L1 and L2 losses are common metrics in supervised regression problems but are poor choices for comparing task similarity with rewards. Moreover, rewards can have arbitrary differences in scaling and shaping that L1 and L2 are not invariant to. To strengthen these comparisons, we include a learned reward scaling parameter for L1 and L2 and similarly observe that EPIC is a superior matching metric.

Skill Discovery Algorithm Ablations IRM is fully general to any mutual information maximization based, RL pretraining algorithm as shown in Table 4. We validate on the Fetch Reach task that IRM CEM and IRM Rand convincingly outperform all episode rollout baselines in zero-shot episode reward.

5 Analysis

Does optimizing the EPIC loss lead to effective skill selection? In Figure 4, we verify that EPIC loss is strongly negatively correlated with extrinsic reward on a Planar Goal Reaching task detailed in Appendix 8.8. Thus, optimizing for a low EPIC loss is an effective substitute for optimizing the environment reward, and crucially, it forgoes collecting expensive environment samples.

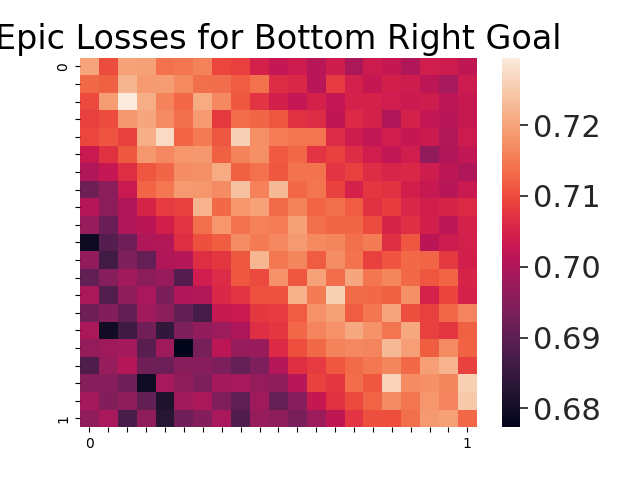

How can we understand skills through EPIC losses? In Figure 5, we plot EPIC losses between intrinsic rewards and goal-reaching rewards across the 2D continuous skill space. Not only is the loss landscape smooth, which motivates optimization methods like gradient descent, but there is also a banded partitioning of the manifold. Furthermore, the latent skill space is well-structured as different darker-colored partitions of the skill space correspond to the group of skills with low EPIC loss from each task reward. EPIC losses concisely represent desirability of skills with respect to a downstream reward function, so skills that achieve a low EPIC loss for the Top Left goal will achieve high EPIC losses for the opposite reward, Bottom Right goal.

EPIC Loss and Extrinsic Reward are Negatively Correlated

We include a scatter plot and trajectory visualizations in Figure 4. As Figure 4 suggests, skills with the lowest EPIC loss receive high extrinsic reward, reaching the goal with high spatial precision. Skills with the highest losses produce the opposite behavior: moving in the direct opposite direction of the goal. In the sequential case, low-EPIC loss skills attempt to reach the 1st goal then the 2nd goal, while high-EPIC loss skills perform the behavior in the inverse order. The intrinsic reward module provides a much deeper insight into the semantics of skills than the extrinsic rewards obtained by skill policy rollouts.

EPIC Loss Visualizations

6 Related Work

Several works including [29, 7, 1, 14, 4, 8, 19] employ mutual information maximization for skill pretraining. While [19] leverages coarse grid search to select skills for downstream RL, methods such as [29] instead plan through a learned skill dynamics model at finetuning time. Our approach is similar in that it leverages pretraining model components other than the policy to guide skill selection. However, rather than generating a reward maximizing plan through possibly complex, learned environment dynamics, we instead look to match a policy to the task reward directly through a pretrained discriminator.

In the context of sequential finetuning, [4, 7] employ hierarchical RL to chain pretrained skills with a manager policy requiring additional environment interactions. Works on such HRL methods include [24, 9, 35, 16] and more classic approaches like [32, 31]. By contrast, we demonstrate that the intrinsic reward matching framework can be extended to choose skill sequences without reliance on environment samples. The successor features line of work also adopts a unified view of skill-based RL. Such work relies on the assumption that arbitrary rewards can be parameterized linearly in some learned features and some task vector as in [22, 3]. Our approach relaxes this assumption to the fully general setting by instead searching for a pretrained task with minimal proximity to an arbitrarily parameterized task reward.

7 Discussion

We present Intrinsic Reward Matching (IRM), a framework for algorithmically unifying information maximization unsupervised reinforcement learning with downstream task adaptation. We instantiate a practical algorithm for implementing this framework and demonstrate that IRM outperforms current methods on a continuous control benchmark and tabletop manipulation tasks. IRM diverges from past works in leveraging the discriminator for downstream task inference and consequently performing skill selection without environment interactions in the short horizon setting. We also show that IRM can be readily extended to the general skill sequencing setting to solve more realistic long-horizon tasks as an alternative to hierarchical methods. Central to our contribution is a novel loss function, the EPIC loss, which serves as both a skill selection utility as well as a new way to interpret the task-level semantics of pretrained skills.

We acknowledge a number of limitations of our approach. IRM relies on samples of the state, roughly within workspace boundaries as well as access to an external reward function, ideally well-shaped, which trades off with IRM’s reduced reliance on environment interactions. In order to obtain realistic image samples to compute the EPIC loss, an agent could learn an expressive generative model over the image states obtained during pretraining and sample from the model to generate diverse and realistic sampled states. For learning unknown state-based rewards, the agent could additionally learn an image-reward model by regressing the rewards encountered during exploration [15]. This represents an exciting direction for future work.

References

- [1] Joshua Achiam, Harrison Edwards, Dario Amodei, and Pieter Abbeel. Variational option discovery algorithms. CoRR, abs/1807.10299, 2018.

- [2] David Barber and Felix V. Agakov. The im algorithm: A variational approach to information maximization. In NIPS, pages 201–208, 2003.

- [3] André Barreto, Rémi Munos, Tom Schaul, and David Silver. Successor features for transfer in reinforcement learning. CoRR, abs/1606.05312, 2016.

- [4] Kate Baumli, David Warde-Farley, Steven Hansen, and Volodymyr Mnih. Relative variational intrinsic control. CoRR, abs/2012.07827, 2020.

- [5] Greg Brockman, Vicki Cheung, Ludwig Pettersson, Jonas Schneider, John Schulman, Jie Tang, and Wojciech Zaremba. Openai gym, 2016.

- [6] Benjamin Eysenbach, Abhishek Gupta, Julian Ibarz, and Sergey Levine. Diversity is all you need: Learning skills without a reward function, 2018.

- [7] Benjamin Eysenbach, Abhishek Gupta, Julian Ibarz, and Sergey Levine. Diversity is all you need: Learning skills without a reward function. In International Conference on Learning Representations, 2019.

- [8] Carlos Florensa, Yan Duan, and Pieter Abbeel. Stochastic neural networks for hierarchical reinforcement learning. CoRR, abs/1704.03012, 2017.

- [9] Kevin Frans, Jonathan Ho, Xi Chen, Pieter Abbeel, and John Schulman. Meta learning shared hierarchies. CoRR, abs/1710.09767, 2017.

- [10] Justin Fu, Aviral Kumar, Ofir Nachum, George Tucker, and Sergey Levine. D4rl: Datasets for deep data-driven reinforcement learning, 2020.

- [11] Scott Fujimoto, Herke van Hoof, and David Meger. Addressing function approximation error in actor-critic methods. CoRR, abs/1802.09477, 2018.

- [12] Adam Gleave, Michael Dennis, Shane Legg, Stuart Russell, and Jan Leike. Quantifying differences in reward functions. CoRR, abs/2006.13900, 2020.

- [13] Karol Gregor, Danilo Jimenez Rezende, and Daan Wierstra. Variational intrinsic control, 2016.

- [14] Karol Gregor, Danilo Jimenez Rezende, and Daan Wierstra. Variational intrinsic control. CoRR, abs/1611.07507, 2016.

- [15] Danijar Hafner, Timothy P. Lillicrap, Jimmy Ba, and Mohammad Norouzi. Dream to control: Learning behaviors by latent imagination. CoRR, abs/1912.01603, 2019.

- [16] Karol Hausman, Jost Tobias Springenberg, Ziyu Wang, Nicolas Heess, and Martin Riedmiller. Learning an embedding space for transferable robot skills. In International Conference on Learning Representations, 2018.

- [17] Dmitry Kalashnikov, Alex Irpan, Peter Pastor, Julian Ibarz, Alexander Herzog, Eric Jang, Deirdre Quillen, Ethan Holly, Mrinal Kalakrishnan, Vincent Vanhoucke, and Sergey Levine. Qt-opt: Scalable deep reinforcement learning for vision-based robotic manipulation. CoRR, abs/1806.10293, 2018.

- [18] Dmitry Kalashnikov, Jacob Varley, Yevgen Chebotar, Benjamin Swanson, Rico Jonschkowski, Chelsea Finn, Sergey Levine, and Karol Hausman. Mt-opt: Continuous multi-task robotic reinforcement learning at scale, 2021.

- [19] Michael Laskin, Hao Liu, Xue Bin Peng, Denis Yarats, Aravind Rajeswaran, and Pieter Abbeel. Cic: Contrastive intrinsic control for unsupervised skill discovery, 2022.

- [20] Michael Laskin, Denis Yarats, Hao Liu, Kimin Lee, Albert Zhan, Kevin Lu, Catherine Cang, Lerrel Pinto, and Pieter Abbeel. URLB: unsupervised reinforcement learning benchmark. CoRR, abs/2110.15191, 2021.

- [21] Xinran Liang, Katherine Shu, Kimin Lee, and Pieter Abbeel. Reward uncertainty for exploration in preference-based reinforcement learning, 2022.

- [22] Hao Liu and Pieter Abbeel. Aps: Active pretraining with successor features, 2021.

- [23] Eric J. Michaud, Adam Gleave, and Stuart Russell. Understanding learned reward functions. CoRR, abs/2012.05862, 2020.

- [24] Ofir Nachum, Shixiang Gu, Honglak Lee, and Sergey Levine. Data-efficient hierarchical reinforcement learning. CoRR, abs/1805.08296, 2018.

- [25] OpenAI, Ilge Akkaya, Marcin Andrychowicz, Maciek Chociej, Mateusz Litwin, Bob McGrew, Arthur Petron, Alex Paino, Matthias Plappert, Glenn Powell, Raphael Ribas, Jonas Schneider, Nikolas Tezak, Jerry Tworek, Peter Welinder, Lilian Weng, Qiming Yuan, Wojciech Zaremba, and Lei Zhang. Solving rubik’s cube with a robot hand. CoRR, abs/1910.07113, 2019.

- [26] Marc Pickett and Andrew G Barto. Policyblocks: An algorithm for creating useful macro-actions in reinforcement learning. In ICML, volume 19, pages 506–513, 2002.

- [27] Scott Reed, Konrad Zolna, Emilio Parisotto, Sergio Gomez Colmenarejo, Alexander Novikov, Gabriel Barth-Maron, Mai Gimenez, Yury Sulsky, Jackie Kay, Jost Tobias Springenberg, Tom Eccles, Jake Bruce, Ali Razavi, Ashley Edwards, Nicolas Heess, Yutian Chen, Raia Hadsell, Oriol Vinyals, Mahyar Bordbar, and Nando de Freitas. A generalist agent, 2022.

- [28] Archit Sharma, Shixiang Gu, Sergey Levine, Vikash Kumar, and Karol Hausman. Dynamics-aware unsupervised discovery of skills. arXiv preprint arXiv:1907.01657, 2019.

- [29] Archit Sharma, Shixiang Gu, Sergey Levine, Vikash Kumar, and Karol Hausman. Dynamics-aware unsupervised discovery of skills. In International Conference on Learning Representations, 2020.

- [30] David Silver, Aja Huang, Christopher Maddison, Arthur Guez, Laurent Sifre, George Driessche, Julian Schrittwieser, Ioannis Antonoglou, Veda Panneershelvam, Marc Lanctot, Sander Dieleman, Dominik Grewe, John Nham, Nal Kalchbrenner, Ilya Sutskever, Timothy Lillicrap, Madeleine Leach, Koray Kavukcuoglu, Thore Graepel, and Demis Hassabis. Mastering the game of go with deep neural networks and tree search. Nature, 529:484–489, 01 2016.

- [31] Martin Stolle and Doina Precup. Learning options in reinforcement learning. In Sven Koenig and Robert C. Holte, editors, Abstraction, Reformulation, and Approximation, pages 212–223, Berlin, Heidelberg, 2002. Springer Berlin Heidelberg.

- [32] Richard S. Sutton, Doina Precup, and Satinder Singh. Between mdps and semi-mdps: A framework for temporal abstraction in reinforcement learning. Artificial Intelligence, 112(1):181–211, 1999.

- [33] Sebastian Thrun and Anton Schwartz. Finding structure in reinforcement learning. In G. Tesauro, D. Touretzky, and T. Leen, editors, Advances in Neural Information Processing Systems, volume 7. MIT Press, 1994.

- [34] Aaron van den Oord, Yazhe Li, and Oriol Vinyals. Representation learning with contrastive predictive coding, 2019.

- [35] Alexander Sasha Vezhnevets, Simon Osindero, Tom Schaul, Nicolas Heess, Max Jaderberg, David Silver, and Koray Kavukcuoglu. Feudal networks for hierarchical reinforcement learning. CoRR, abs/1703.01161, 2017.

8 Appendix

8.1 Background and Notation

Markov Decision Process: The goal of reinforcement learning is to maximize cumulative reward in an uncertain environment it interacts with. The problem can be modelled as a Markov Decision Process (MDP) defined by , where is the set of states, is the set of actions, is the transition probability distribution, is the reward function and is the discount factor.

Unsupervised Skill Discovery: In competence-based unsupervised RL the aim is to learn skills that generate diverse and useful behaviors [7]. The broad aim is to learn policies that are skill-conditioned and generalizable. Formally, we also learn skills and take actions according to . As an illustrative example, applying this formalism to the Mujoco Walker domain, we might hope to find a skill-conditioned policy and skills such that makes the agent walk, while makes it run. Further, if we allow for continuous skills, we can also imagine being able to use the policy to “jog" at different speeds by interpolation the and skills. That is, taking should, intuitively, yield a policy that makes the agent jog at speed dictated by the parameter .

Finetuning Pretrained Skills: With a skill-conditioned policy , an agent needs to infer which skill to index for a downstream task (e.g. identifying if it needs to use or ) during finetuning. This is a relatively under-explored area, with the most universal approach being a coarse, discretized grid search. Least squares regression has also been investigated in the context of successor features [22].

8.2 Competence-Based Skill Discovery

Competence-based skill discovery algorithms aim to maximize the mutual information between trajectories and skills:

| (7) |

Since the mutual information is intractable to calculate, in practice, competence-based methods maximize a variational lower bound. Many mutual information maximization algorithms, such as Variational Intrinsic Control [13] and Diversity is All You Need [6], use the estimate . Other competence-based methods, such as Dynamics-Aware Unsupervised Discovery of Skills [28], Active Pretraining with Successor Features [22], and Contrastive Intrinsic Control (CIC) [19], maximize a lower bound for .

While the decompositions of the mutual information objective are equivalent, algorithms make different design choices regarding how to approximate entropy, represent trajectories, and embed skills. These choices affect the distillation of skills: for instance, without explicit maximization of in the decomposition of mutual information, behavioral diversity may not be guaranteed when the state space is much larger than the skill space [19].

8.3 CIC

Contrastive Intrinsic Control (CIC) [19] is a state of the art algorithm for competence-based skill discovery. CIC maximizes a lower bound for through a particle estimator for and a contrastive loss from Contrastive Predictive Coding (CPC) [34] for . The lower bound for is:

| (8) |

where , is the k-Nearest Neighbors embedding, is the number of k-NNs used to approximate entropy, and is the number of negative samples.

8.4 DADS

We additionally use Dynamics-Aware Unsupervised Discovery of Skills (DADS) [29] for skill discovery, as it is one of the few skill discovery algorithms to successfully scale up to continuous skills. DADS maximizes a lower bound for through learning skill-conditioned transition distributions. The lower bound for is:

| (9) |

For our experiments, we reimplement the on-policy DADS algorithm in PyTorch. We follow the default hyperparameters and train for 20 million environment steps, per [29].

8.5 Environment Details

For our Fetch Reaching environment, we use the Gym Robotics Fetch environment [5]. We set the time limit to 200. For the fetch push environment, we partition the continuous action space into 4 actions, which involve pushing the block forward, backward, left, and right. We set the time limit to 10 for skill learning.

We evaluate sequential skill selection on Fetch Reach and Fetch Push. For the Fetch Push agent, we have a Barrier and Tunnel environment, each with 3 waypoints, depicted in Figure 2. We fix a time horizon of 30 pushes per waypoint. For Fetch Barrier, the easy task consists of the first two waypoints (L shape), and the hard task consists of all three (U shape). For Fetch Tunnel, the easy task consists of the last two (into the tunnel), and the hard task consists of all three waypoints (side of the tunnel, and then into the tunnel). For Fetch Reach, we consider 2 waypoints and a time horizon of 25 for each waypoint.

Our plane environment is a 2D world with observations in [-128, 128] x [-128, 128] and continuous actions in [-10, 10] x [-10, 10].

The Franka Kitchen Environment consists of a 9 dof Franka Arm in a Kitchen Environment with a variety of rigid and articulated objects. The benchmark specifies several goals with rewards for reaching those goals. The 7d action space includes the end-effector 3d translation, 3d rotation, and a gripper open/close dimension. The state includes the joint positions corresponding to each dof of the arm in addition to the joint positions of articulated bodies and 7-dimensional position and orientation information for rigid objects in the scene. The Kettle task involves moving the kettle to a target burner location on the stove. The Hinge and Slide Cabinet tasks involve opening cabinet drawers with revolute and prismatic joints respectively. The Light Switch task involves flipping the switch for the stove light. We use an episode length of 1000 for solving the tasks.

8.6 Pretraining Hyperparameters

For the Fetch Reach environment, we use a skill dimension of 8 and a discriminator MLP hidden dimension of 64. We use an alpha value of 0 for entropy weighting. For the Fetch Push environment, we use a skill dimension of 16 and a discriminator MLP hidden dimension of 16. We use an alpha value of 0 for entropy weighting. For the Franka Kitchen environment we use an alpha value of 0.1 for entropy weighting along with a skill dimension of 2 and an MLP hidden dimension of 64. For all environments, we use a replay buffer size of 100k.

8.7 Intrinsic Reward Matching and Environment Rollout Baseline hyperparameters

IRM CEM and Env Rollout CEM are trained for 5 iterations with 1000 samples at each iteration and 100 elites selected each iteration. Env Rollout CEM consumes the entire downstream finetuning budget on just skill selection. For illustrative purposes, we start its plot at 50k steps to show that finetuning still occurs, however, sample-inefficiency suffers due to excessive rollouts for skill selection. This problem only worsens for long time horizons. IRM Gradient Descent is trained for 5000 steps with a learning rate of 5e-3 and initialized at the skill vector of all 0.5s. IRM Random selects 100 random skills. Env Rollout trials 10 random skills for a fully episode. Grid Search coarsely trials 10 skills from the skill of all 0s to the skill of all 1s as in [20].

8.8 Planar Goal Reaching

The planar goal reaching task consists of a simple 2D plane with a point with a 2D Cartesian state space that can displace in the x and y coordinates with a 2D action space. Skills learned tend to span the 2D space reaching to diverse locations distributed broadly across the environment. We show some sample zero-shot skill selection results over three different skill dimensions in Figure 6.

Zero-Shot Performance for Planar Goal Reaching

8.9 Sequential Skill Selection

For sequential skill selection, we compare IRM Sequential and Environment Sequential skill selection. IRM Sequential consists of an iterative process. The first skill is chosen entirely free of environment samples, exactly identical to the single-skill tasks. Once the first skill is chosen, we roll out a trajectory with the skills we have chosen so far and use the latter half of the trajectory as the Pearson samples for our EPIC loss. We use Gaussian noise with variance 1 for our Canonical samples as described in Appendix 8.12.2. At each step of the skill selection process, we use the corresponding IRM optimization methods.

For our Environment Sequential skill selection method, we select skills iteratively as well. For each waypoint or subtask, we randomly sample skills and commit to the best, where .

8.10 Hierarchical Reinforcement Learning Baseline

In order to validate the benefits of IRM’s offline skill selection, we compare against a baseline that leverages a conventional hierarchical RL algorithm to solve long-horizon, sequential tasks. We instantiate a TD3 manager agent that outputs into a skill action space from state input at a temporally abstract timescale. As in the IRM setup, this timescale is fixed to align with the changes in reward to encourage the manager to change its skill prediction according to the change in the reward semantics. The manager’s is then inputted to the low-level pretrained skill policy which is rolled out over many steps with the skill fixed. Both the manager policy and the low-level policy weights are updated during finetuning. The manager agent is randomly initialized such that its initial skill prediction is random.

8.11 Compute

We thank NVIDIA for providing compute resources through the NVIDIA Academic DGX Grant. We used a cluster of 8 NVIDIA A100s for experimentation.

8.12 Additional Ablations

8.12.1 Skill Dimension

We ablate skill dimension and evaluate the zero-shot performance of all skill selection methods. IRM’s performance generally increases with increased skill dimension despite discriminator overfitting issues associated with larger skill spaces. The IRM GD learning rate is chosen as 5e-3 for all experiments in this work and is not tuned at all. Such likely explains the divergence of the 64 dimensional result.

Skill Dim IRM CEM IRM GD IRM Rand Env Roll. Env CEM GS Rand 8 21.1 0.51 15.7 1.61 18.9 0.18 18.4 0.18 18.8 0.48 17.9 0.101 13.5 1.85 16 17.4 1.30 14.6 0.63 18.8 0.26 22.7 0.83 23.1 0.36 14.0 0.19 11.2 2.32 32 20.1 0.54 22.537 0.25 19.8 0.14 22.2 0.58 21.5 0.67 24.0 0.12 19.9 0.67 64 21.9 0.48 1.68 0.069 20.9 0.74 22.5 0.70 21.6 0.89 18.2 0.059 13.3 2.15

8.12.2 Pearson & Canonical Distributions

We experiment with many ways to approximate the Pearson and Canonical distributions. We defined Full Random to be our uniform samples from a reasonable estimate of the upper and lower bounds for each dimension of the state. For our planar environment, the bounds are defined explicitly and thus known; for more complex environments, we estimate the bounds. For example, for a tabletop manipulation workspace, we sample 2-dimensional block positions uniformly within the rectangular plane of the table surface. In practice, IRM is fairly robust to the distributions, though there are subtleties that emerge in the various choices for the Pearson and Canonical distributions. For instance, we also ablate a Uniform(0,1) distribution, which generally performs much worse, due to lack of state coverage for most environments. For the Canonical distribution, we also approximate samples by perturbing the Pearson samples by sampled from a Gaussian distribution. We experiment with hyperparameters of variance, which may be adjusted based on the environment. For our sequential IRM method, we use this Canonical distribution to ablate on-policy samples.

| Pearson Distribution | Canonical Distribution | IRM CEM |

|---|---|---|

| Full Random | Full Random | 20.341 0.306 |

| Full Random | Uniform(0,1) | 16.343 0.708 |

| Full Random | 21.191 0.629 | |

| Full Random | 21.027 0.419 | |

| Uniform(0,1) | 5.905 3.157 | |

| Uniform(0,1) | 2.851 0.605 |

None of the distributions ablated above require on-policy environment samples. It is possible to use on-policy samples for the state distributions, and we choose to do so for our sequential IRM method, as previous skill rollouts may provide useful Pearson samples for the subsequent skill selection. Note that while on-policy Canonical samples are possible, they are incredibly expensive and require access to the environment simulator, so we focus on other choices of distributions.

8.12.3 Sparse Reward Ablation

We ablate our planar EPIC Loss visualizations with sparse rewards. Instead of a well-shaped goal-reaching reward, we use sparse rewards based on the tolerance to the goal. We define the tolerance as the radius the agent must be within if our 2d planar environment is scaled to [0, 1] x [0, 1]. With a very sparse reward, we show that EPIC losses are largely uninformative. However, by slightly relaxing the tolerance, we show a much better shaped EPIC loss landscape that bears similarity to that of Figure 5. Thus, while our method is dependent on access to extrinsic rewards, and ideally, shaped rewards, we show that the EPIC loss landscape over sparse reward landscapes with sufficient tolerance can be optimized.

EPIC Loss Visualizations