Skeleton Prototype Contrastive Learning with Multi-Level Graph Relation Modeling for Unsupervised Person Re-Identification

Abstract

Person re-identification (re-ID) via 3D skeletons is an important emerging topic with many merits. Existing solutions rarely explore valuable body-component relations in skeletal structure or motion, and they typically lack the ability to learn general representations with unlabeled skeleton data for person re-ID. This paper proposes a generic unsupervised Skeleton Prototype Contrastive learning paradigm with Multi-level Graph Relation learning (SPC-MGR) to learn effective representations from unlabeled skeletons to perform person re-ID. Specifically, we first construct unified multi-level skeleton graphs to fully model body structure within skeletons. Then we propose a multi-head structural relation layer to comprehensively capture relations of physically-connected body-component nodes in graphs. A full-level collaborative relation layer is exploited to infer collaboration between motion-related body parts at various levels, so as to capture rich body features and recognizable walking patterns. Lastly, we propose a skeleton prototype contrastive learning scheme that clusters feature-correlative instances of unlabeled graph representations and contrasts their inherent similarity with representative skeleton features (“skeleton prototypes”) to learn discriminative skeleton representations for person re-ID. Empirical evaluations show that SPC-MGR significantly outperforms several state-of-the-art skeleton-based methods, and it also achieves highly competitive person re-ID performance for more general scenarios.

Index Terms:

Skeleton Based Person Re-Identification; Unsupervised Representation Learning; Multi-Level Skeleton Graphs; Skeleton Prototype Contrastive Learning

1 Introduction

Person re-identification (re-ID) aims at identifying or matching a target pedestrian across different views or scenes, which plays an essential role in safety-critical applications including intelligent video surveillance, security authentication and human tracking [1, 2, 3, 4, 5, 6, 7, 8, 9]. Conventional studies [10, 11, 12, 13, 14, 15, 16] typically utilize visual features such as human appearances, silhouettes and body textures from RGB or depth images to discriminate different individuals. Nevertheless, such methods are often vulnerable to variations in appearance, lighting and clothing in practice. Compared with RGB-based and depth-based methods, 3D skeleton-based models [17, 18, 19, 20, 21, 22] exploit the 3D coordinates of numerous key joints to characterize the human body and its motion, which offers a smaller data size and better robustness to scale and view variation [23]. With these advantages, 3D skeleton data have drawn surging attention in the fields of person re-ID and gait recognition [24, 25, 19, 21, 22, 20]. However, how to model discriminative body and motion features with 3D skeleton data remains an open challenge.

To perform person re-ID via 3D skeletons, existing endeavors typically model skeleton features with two groups of methods. Skeleton descriptor based methods [17, 18, 26] manually extract certain anthropometric and geometric attributes of the body from skeleton data. However, these hand-crafted methods usually require domain knowledge such as human anatomy [27], and cannot fully mine underlying features beyond human cognition. Deep neural network based methods [25, 19, 20] usually leverage convolutional neural networks (CNN) or long short-term memory (LSTM) to learn skeleton representations from sequences of raw body-joint positions or pose descriptors (e.g., limb lengths). Nevertheless, these works rarely explore the inherent relations between different body joints or components, and may thus ignore valuable structural information of the human body. Taking human walking as an example, the neighboring body joints “foot” and “knee” have strong motion correlations, while they usually exhibit varying degrees of collaboration with limb-level components such as “leg” and “arm” during movement, which can be exploited to capture unique and recognizable patterns [28]. Other important flaws of this type of methods are label dependency and weak generalization ability. In practice, these methods usually require massive labeled data of pre-defined classes to either train the model from scratch [25, 21] or fine-tune the pre-trained skeleton representations [19, 20, 22] to classify the known identities. As a result, they lack the flexibility to learn general and representative skeleton features that can re-identify different pedestrians when labels are unavailable, which limits their application in many real-world scenarios.

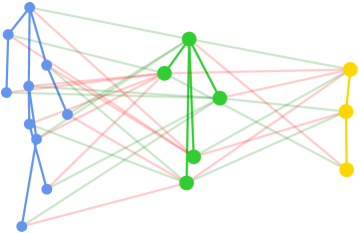

To address the above challenges, this work for the first time proposes a generic Skeleton Prototype Contrastive learning paradigm with Multi-level Graph Relation modeling (SPC-MGR), shown in Fig. 1, that can comprehensively model body structure and relations at various levels and mine discriminative features from unlabeled skeletons for person re-ID. Specifically, we first devise multi-level graphs to represent each 3D skeleton in a unified coarse-to-fine manner, so as to fully model body structure within skeletons. Then, to enable a comprehensive exploration of relations between different body components, we propose to model structural-collaborative body relations within skeletons from multi-level graphs. In particular, since each body component is highly correlated with its physically-connected components and may possess different structural relations (e.g., motion correlations) with them, we propose a multi-head structural relation layer (MSRL) to capture multiple relations between each body-component node and its neighbors within a graph, so as to aggregate key correlative features for effective node representations. Meanwhile, motivated by the fact that the dynamic cooperation of body components in motion could carry unique patterns (e.g., gait) [28], we propose a full-level collaborative relation layer (FCRL) to adaptively infer collaborative relations among motion-related components both at the same level and across levels in graphs. Furthermore, we exploit a multi-level graph feature fusion strategy to integrate features of different-level graphs via collaborative relations, which encourages the model to capture more graph structural semantics and discriminative skeleton features.
Lastly, to mine effective features from unlabeled skeleton graph representations (referred to as skeleton instances), we propose a skeleton prototype contrastive learning scheme (SPC), which clusters correlative skeleton instances and contrasts their inherent similarity with the most representative skeleton features (referred to as skeleton prototypes) to learn general discriminative skeleton representations in an unsupervised manner. By maximizing the similarity of skeleton instances to their corresponding prototypes and their dissimilarity to other prototypes, SPC encourages the model to capture more discriminative skeleton features and class-related semantics (e.g., intra-class similarity) for person re-ID without using any label.

An earlier and preliminary version of this work was reported in [21]. Compared with [21], which performs supervised skeleton representation learning for person re-ID, this paper focuses on the unsupervised re-ID problem under general settings. In this work, we explore unified and generalized multi-level skeleton graphs to extend the earlier graph representations to different skeleton data, and propose a novel skeleton prototype contrastive learning paradigm to mine discriminative skeleton features from the proposed unlabeled skeleton graphs for person re-ID. To the best of our knowledge, this is also the first work that explores a generic unsupervised learning paradigm for skeleton-based person re-ID. We also devise a new full-level collaborative relation layer to capture more comprehensive relations between body components of different levels, and further explore a multi-level graph feature fusion strategy to enhance graph semantics and global pattern learning. To validate these improvements, this work conducts comprehensive experiments on four public person re-ID datasets, and carries out more detailed analyses of different person re-ID tasks (e.g., multi-view person re-ID) under more general scenarios. Furthermore, we propose two evaluation metrics, mean intrA-Class Tightness (mACT) and mean inteR-Class Looseness (mRCL), to quantitatively evaluate the tightness of same-class representations and the looseness of different-class representations, so as to showcase the effectiveness of the skeleton representations learned by the proposed approach.

Our main contributions are summarized as follows:

• We devise unified multi-level graphs to model 3D skeletons, and propose a novel Skeleton Prototype Contrastive learning paradigm with Multi-level Graph Relation modeling (SPC-MGR) to learn effective representations from unlabeled skeleton data for unsupervised person re-ID.

• We propose a multi-head structural relation layer (MSRL) to capture relations of neighboring body components, and devise a full-level collaborative relation layer (FCRL) to infer collaboration between components of different levels, so as to learn more structural semantics and unique patterns.

• We propose a skeleton prototype contrastive learning (SPC) scheme that encourages the model to capture representative, discriminative skeleton features and high-level class-related semantics from unlabeled skeleton data for person re-ID.

• We propose mean intra-class tightness (mACT) and mean inter-class looseness (mRCL) to evaluate skeleton representation learning, and use them to show the effectiveness of the skeleton representations learned by our unsupervised approach.

• Extensive experiments show that the proposed SPC-MGR outperforms several state-of-the-art skeleton-based methods on four person re-ID benchmarks, and is also highly effective when applied to skeleton data estimated from large-scale RGB videos under more general re-ID settings.

The rest of our paper is organized as follows. Sec. 2 reviews related work in the literature. Sec. 3 presents each technical module of the proposed approach. Sec. 4 describes the experimental details and comprehensively compares our approach with existing solutions. Sec. 5 conducts an ablation study and extensive analysis of the proposed approach. Sec. 6 concludes this paper. Sec. 7 discusses potential ethical issues.

2 Related Works

2.1 Skeleton-Based Person Re-Identification

2.1.1 Hand-Crafted Methods

Early skeleton-based works extract hand-crafted descriptors in terms of certain geometric, morphological or anthropometric attributes of the human body. Barbosa et al. [17] compute 7 Euclidean distances between the floor plane and joints or joint pairs to construct a distance matrix, which is learned by a quasi-exhaustive strategy to extract discriminative features for person re-ID. Munaro et al. [26] and Pala et al. [29] further extend them to 13 and 16 skeleton descriptors, respectively, and leverage support vector machine (SVM), $k$-nearest neighbor (KNN) or Adaboost classifiers for person re-ID. Since such solutions struggle to achieve satisfactory performance using 3D skeletons alone, they usually combine other modalities such as 3D point clouds [30] and 3D face descriptors [29] to improve person re-ID accuracy.

2.1.2 Supervised and Self-Supervised Methods

Most recently, a few works exploit deep learning paradigms to learn gait representations from skeleton data for person re-ID in a supervised or self-supervised manner. Liao et al. [25] propose PoseGait, which feeds 81 hand-crafted pose features of 3D skeletons into a CNN for human recognition. Rao et al. [19] devise a self-supervised attention-based gait encoding (AGE) model with a multi-layer LSTM to encode gait features from unlabeled skeleton sequences, and then fine-tune the learned features with label supervision for person re-ID. In [20], they further propose a locality-awareness approach (SGELA) that combines various pretext tasks (e.g., reverse sequential reconstruction) and a contrastive learning scheme to enhance self-supervised gait representation learning for the person re-ID task. The latest self-supervised work is SM-SGE [22], which utilizes a skeleton graph based reconstruction and inference mechanism to encode discriminative skeleton structure and motion features for the person re-ID task.

Our work differs in the following aspects. We propose, for the first time, an unsupervised learning paradigm that learns discriminative skeleton representations from unlabeled skeleton data to directly perform person re-ID. Our approach requires neither any form of feature engineering (e.g., hand-crafted skeleton descriptors [17, 26, 29]) nor any skeleton label for training the model [25, 21] or fine-tuning representations [19, 20, 22]. Unlike most existing works that directly represent skeletons as sequences of body joints [19, 20] or pose descriptors [25], this work not only explores more comprehensive body-structure modeling by devising unified and generalized multi-level skeleton graphs, but also systematically mines valuable body-component relations at various levels. Besides, the proposed approach needs neither self-supervised pretext tasks (e.g., reconstruction [22]) for pre-training nor supervised classification networks for discriminative representation learning. Instead, it exploits unsupervised skeleton prototype contrastive learning to mine representative skeleton features and class-related semantics from unlabeled 3D skeleton data. To the best of our knowledge, this is also the first attempt to leverage skeleton graph based relation modeling and contrastive learning to realize both skeleton representation learning and unsupervised person re-ID.

2.2 Contrastive Learning

Contrastive learning has recently achieved great success in many self-supervised and unsupervised learning tasks [31, 32, 33, 34, 16, 35, 20]. Its general objective is to learn effective data representations by pulling positive pairs closer and pushing negative pairs apart in the feature space using contrastive losses, which are often designed based on certain auxiliary tasks (e.g., similarity metric learning). For example, Wu et al. [32] devise an instance-level discrimination method in the form of an exemplar task [36] to perform image contrastive learning with the noise-contrastive estimation (NCE) loss [37]. In [33], contrastive predictive coding (CPC) based on a probabilistic contrastive loss (InfoNCE) is proposed to learn general representations for different domains. To improve representation learning (e.g., consistency) in memory bank based contrastive methods [38, 39, 40], some recent end-to-end works [41, 42, 43] utilize all samples of the current mini-batch to generate negative instance features, while the momentum-based approach [44] further uses a momentum-updated encoder and a queue-based dictionary to improve the consistency of both the encoder and the instance features. The latest PCL [35] integrates contrastive learning and clustering into an expectation-maximization (EM) framework, which is highly efficient for unsupervised visual representation learning and inspires our work on 3D skeletons.

Our work differs from previous studies in the following aspects. The proposed skeleton prototype contrastive learning scheme is devised to mine the most representative and discriminative skeleton features (i.e., skeleton prototypes) from unlabeled multi-level skeleton graph representations of 3D skeleton sequences (i.e., skeleton instances). In this scheme, the skeleton instances and the generated skeleton prototypes serve as the contrasting instances, which fundamentally differs from existing works [43, 44, 34, 35] that use augmented image samples as instances. The goal of the scheme is to maximize the similarity of skeleton instances to their own prototypes and their dissimilarity to other prototypes using the proposed clustering-contrasting strategy, which does not require any extra memory or sampling mechanism [16, 35] and can directly learn effective skeleton representations for the person re-ID task without introducing any ground-truth label from source data domains.

3 The Proposed Approach

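Consider a 3D skeleton sequence $\mathbf{S} = (\mathbf{S}_1, \cdots, \mathbf{S}_f) \in \mathbb{R}^{f \times J \times D}$, where $\mathbf{S}_t \in \mathbb{R}^{J \times D}$ is the $t$-th skeleton with $J$ body joints and $D$ dimensions. Each skeleton sequence $\mathbf{S}$ corresponds to an ID label $y$, where $y \in \{1, \cdots, C\}$ and $C$ is the number of different persons. The training set $\Phi_{T} = \{\mathbf{S}_i^{T}\}_{i=1}^{N_1}$, probe set $\Phi_{P} = \{\mathbf{S}_i^{P}\}_{i=1}^{N_2}$, and gallery set $\Phi_{G} = \{\mathbf{S}_i^{G}\}_{i=1}^{N_3}$ contain $N_1$, $N_2$, and $N_3$ skeleton sequences of different persons under varying views or scenes. Our goal is to learn an embedding function $\psi(\cdot)$ that maps $\Phi_{P}$ and $\Phi_{G}$ to effective skeleton representations $\{\mathbf{V}_i^{P}\}_{i=1}^{N_2}$ and $\{\mathbf{V}_i^{G}\}_{i=1}^{N_3}$ without using any label, such that a representation $\mathbf{V}_i^{P}$ in the probe set can match the representation $\mathbf{V}_j^{G}$ of the same identity in the gallery set. The overview of the proposed approach is given in Fig. 2, and we present the details of each technical component below.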

3.1 Unified Multi-Level Skeleton Graphs

Inspired by the fact that human motion can be decomposed into movements of functional body components (e.g., legs, arms) [45], we spatially group skeleton joints into higher-level body components located at their centroids. Specifically, we first divide human skeletons into several partitions from coarse to fine. Based on the nature of body structure, we specify the location of each body partition and its corresponding skeleton joints for different sources (e.g., datasets). Then, we adopt the weighted average of the body joints in the same partition as the node of a higher-level body component and use the physical connections between components as edges, so as to build unified skeleton graphs for an input skeleton. As shown in Fig. 3, we construct three levels of skeleton graphs, namely part-level, body-level and hyper-body-level graphs, for each skeleton $\mathbf{S}_t$, which are denoted as $\mathcal{G}^{1}$, $\mathcal{G}^{2}$ and $\mathcal{G}^{3}$, respectively. Each graph $\mathcal{G}^{l} = (\mathcal{V}^{l}, \mathcal{E}^{l})$ ($l \in \{1, 2, 3\}$) consists of nodes $\mathcal{V}^{l} = \{v_1^{l}, \cdots, v_{n_l}^{l}\}$ and edges $\mathcal{E}^{l} = \{(v_i^{l}, v_j^{l})\}$ between physically-connected nodes. Here $\mathcal{V}^{l}$ and $\mathcal{E}^{l}$ denote the set of nodes corresponding to different body components and the set of their internal connection relations, respectively, and $n_l$ denotes the number of nodes in $\mathcal{G}^{l}$. More formally, we define a graph's adjacency matrix as $\mathbf{A}^{l} \in \mathbb{R}^{n_l \times n_l}$ to represent structural relations among nodes. Its entry $\mathbf{A}^{l}_{ij}$ stores the normalized structural relation between node $i$ and its neighbor $j \in \mathcal{N}_i$, where $\mathcal{N}_i$ denotes the neighbor nodes of node $i$, and $\mathbf{A}^{l}$ is adaptively learned in the training stage to capture flexible structural relations.
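The node construction described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the toy 6-joint skeleton, the partition indices and the uniform default weights are hypothetical (the paper specifies partitions per dataset and does not list weights here), and the adjacency matrix is only a binary initialization with self-loops, whereas the paper learns the structural relations adaptively.

```python
from typing import List, Optional, Sequence, Tuple

Joint = Tuple[float, float, float]


def component_nodes(joints: Sequence[Joint],
                    partitions: Sequence[Sequence[int]],
                    weights: Optional[Sequence[Sequence[float]]] = None) -> List[Joint]:
    """One node per partition: the weighted average of the joints it groups.

    `weights` is a hypothetical per-partition weighting; uniform if omitted.
    """
    nodes = []
    for p, idx in enumerate(partitions):
        w = weights[p] if weights is not None else [1.0] * len(idx)
        total = sum(w)
        nodes.append(tuple(
            sum(wi * joints[j][d] for wi, j in zip(w, idx)) / total
            for d in range(3)))
    return nodes


def adjacency(num_nodes: int, edges: Sequence[Tuple[int, int]]) -> List[List[int]]:
    """Binary adjacency with self-loops built from a list of physical connections."""
    A = [[0] * num_nodes for _ in range(num_nodes)]
    for i in range(num_nodes):
        A[i][i] = 1
    for i, j in edges:
        A[i][j] = A[j][i] = 1
    return A


# Hypothetical toy skeleton: head, neck, left hand, right hand, left foot, right foot.
joints = [(0.0, 1.8, 0.0), (0.0, 1.5, 0.0), (-0.5, 1.0, 0.0),
          (0.5, 1.0, 0.0), (-0.2, 0.0, 0.0), (0.2, 0.0, 0.0)]
# Hypothetical partitions for a finer and a coarser level.
finer = [[0, 1], [2], [3], [4], [5]]    # head+neck, each hand, each foot
coarser = [[0, 1, 2, 3], [4, 5]]        # upper body, lower body
finer_nodes = component_nodes(joints, finer)
coarser_nodes = component_nodes(joints, coarser)
coarser_adj = adjacency(len(coarser), [(0, 1)])
```

Because every level is derived from the same joint coordinates, only the partition lists need to change when moving to a dataset with a different joint layout, which is the property the Remarks below rely on.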

Remarks: The proposed unified multi-level skeleton graphs generalize to different skeleton datasets, which enables a pre-trained model to be directly transferred across domains for generalized person re-ID (see Sec. 5.5.2). Besides, they can be extended to skeleton data estimated from RGB videos to learn effective person re-ID representations (see Sec. 5.5.1).

3.2 Structural-Collaborative Body Relation Modeling

The physical connections of body structure typically endow body components in a local partition with higher correlations, while components of different parts may act collaboratively in various global patterns during motion [46]. To exploit such internal relations to mine rich body-structure features and unique motion characteristics from skeletons, we propose the multi-head structural relation layer (MSRL) and the full-level collaborative relation layer (FCRL) to model the structural and collaborative relations of body components from multi-level skeleton graphs, as follows.

3.2.1 Multi-Head Structural Relation Layer

To capture latent body structural information and learn an effective representation for each body-component node in skeleton graphs, we propose to focus on the features of structurally-connected neighbor nodes, which enjoy higher correlations (referred to as structural relations) than distant pairs. For instance, adjacent nodes usually have closer spatial positions and similar motion tendencies. Therefore, we devise a multi-head structural relation layer (MSRL) to learn the relations of neighbor nodes and aggregate the most correlative spatial features to represent each body-component node.

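We first devise a basic structural relation head based on the graph attention mechanism [47], which can focus on more correlative neighbor nodes by assigning them larger attention weights, to capture the internal relation between adjacent nodes $i$ and $j$ in the same graph as:

$$
e_{ij}^{l} = \mathrm{LeakyReLU}\Big(\mathbf{W}_{r}^{l}\,\big[\mathbf{W}^{l}\mathbf{v}_i^{l} \,\|\, \mathbf{W}^{l}\mathbf{v}_j^{l}\big]\Big) \tag{1}
$$

where $\mathbf{W}^{l} \in \mathbb{R}^{d_h \times d_l}$ denotes the weight matrix that maps the $l$-level node features $\mathbf{v}_i^{l} \in \mathbb{R}^{d_l}$ into a higher-level feature space $\mathbb{R}^{d_h}$, $\mathbf{W}_{r}^{l} \in \mathbb{R}^{1 \times 2d_h}$ is a learnable weight matrix that performs relation learning in the $l$-level graph, $\|$ denotes concatenating the features of two nodes, and LeakyReLU is a non-linear activation function. Then, to learn flexible structural relations that focus on more correlative nodes, we normalize the relations using the softmax function as follows: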

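$$
\bar{e}_{ij}^{l} = \mathrm{softmax}_j\big(e_{ij}^{l}\big) = \frac{\exp\big(e_{ij}^{l}\big)}{\sum_{k \in \mathcal{N}_i} \exp\big(e_{ik}^{l}\big)} \tag{2}
$$

where $\mathcal{N}_i$ denotes the directly-connected neighbor nodes (including node $i$ itself) of node $i$ in graph $\mathcal{G}^{l}$. We use the structural relations $\bar{e}_{ij}^{l}$ to aggregate the features of the most relevant nodes to represent node $i$: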

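$$
\bar{\mathbf{v}}_i^{l} = \sigma\Big(\sum_{j \in \mathcal{N}_i} \bar{e}_{ij}^{l}\, \mathbf{W}^{l} \mathbf{v}_j^{l}\Big) \tag{3}
$$

where $\sigma(\cdot)$ is a non-linear function and $\bar{\mathbf{v}}_i^{l}$ is the feature representation of node $i$ computed by a single structural relation head.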

To sufficiently capture potential structural relations (e.g., position similarity and movement correlations) between each node and its neighbor nodes, we employ multiple structural relation heads, each of which independently executes the same computation as Eq. 3 to learn a potentially different structural relation, as shown in Fig. 4. We average the features learned by the different structural relation heads to obtain the representation of node $i$:

$$
\hat{\mathbf{v}}_i^{l} = \sigma\Big(\frac{1}{m}\sum_{s=1}^{m}\sum_{j \in \mathcal{N}_i} \bar{e}_{ij}^{l,s}\, \mathbf{W}^{l,s} \mathbf{v}_j^{l}\Big) \tag{4}
$$

where $\hat{\mathbf{v}}_i^{l}$ denotes the multi-head feature representation of node $i$ in $\mathcal{G}^{l}$, $m$ is the number of structural relation heads, $\bar{e}_{ij}^{l,s}$ represents the structural relation between nodes $i$ and $j$ computed by the $s$-th structural relation head, and $\mathbf{W}^{l,s}$ denotes the corresponding weight matrix that performs feature mapping in the $s$-th head. Here we use averaging rather than concatenation to reduce the feature dimension and allow for more structural relation heads. MSRL enables our model to capture the relations of correlative neighbor nodes (see Eqs. 1 and 2) and to integrate key spatial features into the node representations of each graph (see Eqs. 3 and 4). However, it only considers the local relations among same-level components within a graph and is insufficient to capture the global collaboration between body components at different levels, which motivates us to propose the full-level collaborative relation layer.

3.2.2 Full-Level Collaborative Relation Layer

Motivated by a natural property of human walking, i.e., gait, which can be represented by the dynamic cooperation among body joints or between different body components [28], we expect our model to infer the degree of collaboration (referred to as collaborative relations) among body-component nodes in multi-level graphs, so as to capture more unique and recognizable walking patterns from skeleton motion. For this purpose, we propose a full-level collaborative relation layer (FCRL) to capture the relations between a node and all motion-related nodes of the same level, as well as those between a node and its spatially corresponding higher-level body component or other potential components. As shown in Fig. 2 and Fig. 4, we compute the collaborative relation matrix $\mathbf{R}^{l_1 l_2} \in \mathbb{R}^{n_{l_1} \times n_{l_2}}$ ($l_1, l_2 \in \{1, 2, 3\}$) between the $l_1$-level nodes and the $l_2$-level nodes as follows:

$$
\mathbf{R}^{l_1 l_2}_{ij} = \frac{\exp\big((\hat{\mathbf{v}}_i^{l_1})^{\top} \hat{\mathbf{v}}_j^{l_2}\big)}{\sum_{k=1}^{n_{l_2}} \exp\big((\hat{\mathbf{v}}_i^{l_1})^{\top} \hat{\mathbf{v}}_k^{l_2}\big)} \tag{5}
$$

where $\mathbf{R}^{l_1 l_2}_{ij}$ is the collaborative relation between node $i$ in $\mathcal{G}^{l_1}$ and node $j$ in $\mathcal{G}^{l_2}$. Here we use the inner product of the multi-head node feature representations (see Eq. 4), which retain key spatial information of the nodes, to measure the degree of collaboration. Instead of merely considering body relations between adjacent-level graphs [21], FCRL captures the global collaborative relations among both adjacent and non-adjacent graphs, and meanwhile provides full collaboration inference between a node and all potentially motion-correlated nodes in the same graph.

3.2.3 Multi-Level Graph Feature Fusion

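To enhance the structural semantics of multiple graphs (e.g., global graph patterns) and adaptively integrate key correlative features in component collaboration, we exploit collaborative relations to fuse body-component node features across different spatial levels. We update the node representation $\hat{\mathbf{v}}_i^{l_1}$ ($i \in \{1, \cdots, n_{l_1}\}$) of the $l_1$-level graph by fusing the collaborative node features $\hat{\mathbf{v}}_j^{l_2}$ ($j \in \{1, \cdots, n_{l_2}\}$) learned from different graphs:

$$
\tilde{\mathbf{v}}_i^{l_1} = \hat{\mathbf{v}}_i^{l_1} + \sum_{l_2=1}^{3} \lambda_{l_1 l_2} \sum_{j=1}^{n_{l_2}} \mathbf{R}^{l_1 l_2}_{ij}\, \mathbf{W}^{l_1 l_2}\, \hat{\mathbf{v}}_j^{l_2} \tag{6}
$$

where $\mathbf{W}^{l_1 l_2}$ is a learnable weight matrix that integrates the features of collaborative node $j$ of level $l_2$ into node $i$ of level $l_1$, $n_{l_2}$ denotes the number of nodes in the $l_2$-level graph, and $\lambda_{l_1 l_2}$ represents the fusion coefficient between the $l_1$-level and $l_2$-level graphs, which can be adjusted according to their inherent correlations (e.g., level similarity). We denote the fused $l$-level graph features of the $t$-th skeleton as $\tilde{\mathbf{v}}_t^{l}$, obtained by concatenating all of its node representations. Inspired by [22], we retain the graph representations of each individual level and adopt their concatenation to represent a skeleton as follows: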

$$
\mathbf{v}_t = \big[\tilde{\mathbf{v}}_t^{1};\ \tilde{\mathbf{v}}_t^{2};\ \tilde{\mathbf{v}}_t^{3}\big] \tag{7}
$$

where $\mathbf{v}_t$ is the multi-level graph representation of the $t$-th skeleton $\mathbf{S}_t$, and $[\cdot\,;\cdot]$ indicates the concatenation of graph features. By combining all graph-level representations that integrate structural and collaborative body relation features (see Eqs. 1-6), we encourage the model to capture richer features of body structure and skeleton patterns at various levels. We show the effectiveness of the proposed structural-collaborative body relation learning through relation visualization in Sec. 5.3 and the Appendix.

3.3 Skeleton Prototype Contrastive Learning Scheme

As skeletons of the same individual typically share highly similar body attributes (e.g., anthropometric attributes) and unique walking patterns [28], it is natural to mine the most typical attributes or patterns to distinguish the same person from others. To achieve this goal and encourage the model to capture more high-level skeleton semantics (e.g., class-related patterns), we propose a Skeleton Prototype Contrastive learning (SPC) scheme that focuses on the most representative skeleton graph features (referred to as skeleton prototypes) of pedestrians and exploits their inherent similarity and dissimilarity with other unlabeled graph representations (referred to as skeleton instances) to learn general and discriminative representations of each individual for the person re-ID task.

3.3.1 Skeleton Prototype Generation

Given the multi-level graph representations $(\mathbf{v}_1, \cdots, \mathbf{v}_f)$ of an input skeleton sequence $(\mathbf{S}_1, \cdots, \mathbf{S}_f)$, we first integrate the graph features into a sequence-level skeleton graph representation:

$$
\mathbf{V} = \sum_{t=1}^{f} \alpha_t\, \mathbf{v}_t \tag{8}
$$

where $\mathbf{V}$ is the multi-level graph representation of the skeleton sequence $\mathbf{S}$, which incorporates the structural-collaborative features and temporal dynamics of consecutive multi-level skeleton graphs, and $\alpha_t$ denotes the importance of the $t$-th skeleton graph representation. Here we assume that each skeleton contributes equally to the graph features of a sequence, i.e., $\alpha_t = \frac{1}{f}$. For clarity, we use $\{\mathbf{V}_i\}_{i=1}^{N_1}$ to represent the multi-level graph representations of the skeleton sequences in the training set $\Phi_{T}$, which are exploited as skeleton instances in the proposed SPC scheme.

Then, to gather skeleton instances that contain similar features and find representative skeleton prototypes, we leverage the DBSCAN algorithm [48], which can discover clusters of arbitrary shapes or semantics, to perform clustering as:

$$
\mathcal{C}_1, \cdots, \mathcal{C}_K, \mathcal{C}_o = \mathrm{DBSCAN}\big(\mathbf{V}_1, \cdots, \mathbf{V}_{N_1}\big) \tag{9}
$$

where $\mathcal{C}_k = \{\mathbf{V}_j^{k}\}_{j=1}^{n_k}$, $K$ is the number of clusters (i.e., pseudo classes), $k \in \{1, \cdots, K\}$, $\mathcal{C}_k$ is the $k$-th cluster, which contains the $n_k$ instances belonging to the $k$-th pseudo class, and $\mathcal{C}_o$ denotes the set of outlier instances that do not belong to any cluster. We compute the centroid of each cluster, which averages the features of the skeleton instances in the cluster, to obtain the corresponding skeleton prototype:

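$$
\mathbf{c}_k = \frac{1}{n_k} \sum_{j=1}^{n_k} \mathbf{V}_j^{k} \tag{10}
$$

where $\mathbf{V}_j^{k}$ is the $j$-th skeleton instance in the $k$-th cluster, and $\mathbf{c}_k$ denotes the $k$-th skeleton prototype.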

3.3.2 Skeleton Prototype Contrastive Learning

To focus on the typical and discriminative features of skeleton prototypes and to facilitate learning high-level skeleton semantics from different prototypes, we propose to enhance the inherent similarity of a skeleton instance to its corresponding skeleton prototype and maximize its dissimilarity to other skeleton prototypes with a skeleton prototype contrastive loss:

$$
\mathcal{L}_{\mathrm{SPC}} = \frac{1}{N_1} \sum_{k=1}^{K} \sum_{j=1}^{n_k} -\log \frac{\exp\big(\mathbf{V}_j^{k} \cdot \mathbf{c}_k / \tau\big)}{\sum_{i=1}^{K} \exp\big(\mathbf{V}_j^{k} \cdot \mathbf{c}_i / \tau\big)} \tag{11}
$$

where $N_1$ represents the number of all training instances, $K$ denotes the number of skeleton prototypes, $n_k$ is the number of skeleton instances belonging to prototype $\mathbf{c}_k$, and $\tau$ represents the temperature for contrastive learning; a higher $\tau$ produces a softer probability distribution over prototypes and retains more similarity information among clusters [49]. The skeleton prototype contrastive loss can be viewed as a special form of the InfoNCE loss [33], which aims to maximize the dot-product similarity between the query (each skeleton instance) and its positive key (its own prototype) while maximizing its dissimilarity to the negative keys (the other prototypes).

3.3.3 Analysis of Skeleton Prototype Contrastive Learning

The objective of skeleton prototype contrastive learning is to maximize intra-prototype similarity and inter-prototype dissimilarity so as to mine discriminative skeleton features and identity-related semantics in an unsupervised manner. This learning process can be interpreted as simultaneously enhancing the tightness of skeleton instances belonging to the same prototype and the looseness among different prototypes. Since a better representation space should have smaller intra-class distances (higher tightness) and larger inter-class distances (higher looseness), we can measure the tightness and looseness of the learned representations with respect to the ground-truth classes to verify whether the model has learned effective features for different classes from unlabeled data. To this end, we propose two metrics, mean intrA-Class Tightness (mACT) and mean inteR-Class Looseness (mRCL), to quantitatively evaluate the merits of the learned skeleton representations.

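Mean Intra-Class Tightness (mACT): To obtain mACT, we first compute the intra-class tightness (ACT) of the $k$-th class with

$$
\mathrm{ACT}_k = \frac{\bar{D}}{D_k}, \quad D_k = \frac{1}{n_k} \sum_{i=1}^{n_k} d\big(\mathbf{x}_i^{k}, \mathbf{o}_k\big), \quad \bar{D} = \frac{1}{C \sum_{k=1}^{C} n_k} \sum_{k=1}^{C} \sum_{i=1}^{n_k} \sum_{k'=1}^{C} d\big(\mathbf{x}_i^{k}, \mathbf{o}_{k'}\big) \tag{12}
$$

where $D_k$ represents the average intra-class distance between the representation $\mathbf{x}_i^{k}$ of the $i$-th sample and the representation centroid $\mathbf{o}_k$ of the $k$-th class, $\bar{D}$ denotes the global average distance between each representation and all centroids, $n_k$ represents the number of instances in the $k$-th class, $C$ is the number of ground-truth classes, and $d(\mathbf{a}, \mathbf{b}) = 1 - \frac{\mathbf{a}^{\top}\mathbf{b}}{\|\mathbf{a}\|\,\|\mathbf{b}\|}$ is the cosine distance between $\mathbf{a}$ and $\mathbf{b}$. Note that $D_k$ alone could represent the tightness of class $k$, but it does NOT account for the global feature distribution, which leads to different scales of representation distances. Therefore, we use $\bar{D}/D_k$ to indicate the degree of intra-class tightness. When $D_k$ is smaller than $\bar{D}$, the representations in class $k$ are denser than average, indicating a higher ACT for class $k$. Then, we average the ACT values of all classes to obtain the mean intra-class tightness of the representations, $\mathrm{mACT} = \frac{1}{C} \sum_{k=1}^{C} \mathrm{ACT}_k$.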

Mean Inter-Class Looseness (mRCL): To measure the mRCL of representations, we compute the ratio of the average distance between different class centroids, $D_{\mathrm{inter}}$, to the global average distance $\bar{D}$ (see Eq. 12), with

$$
\mathrm{mRCL} = \frac{D_{\mathrm{inter}}}{\bar{D}}, \quad D_{\mathrm{inter}} = \frac{1}{C(C-1)} \sum_{k=1}^{C} \sum_{k' \neq k} d\big(\mathbf{o}_k, \mathbf{o}_{k'}\big) \tag{13}
$$

where a higher mRCL is obtained when the average distance between different representation centroids is larger relative to the global average representation distance $\bar{D}$. Full details of mACT and mRCL are provided in the Appendix. We conduct both quantitative and qualitative analyses of the proposed skeleton prototype contrastive learning scheme using the mACT and mRCL metrics, which show that it encourages the model to capture class-related semantics for more effective skeleton representations, as detailed in Sec. 5.4 and the Appendix.

3.4 The Entire Approach

The computation flow of the proposed approach can be described as: $\mathbf{S} \rightarrow \{\mathcal{G}^{l}\}_{l=1}^{3}$ (Sec. 3.1) $\rightarrow \{\tilde{\mathbf{v}}_t^{l}\}_{l=1}^{3}$ (Sec. 3.2) $\rightarrow \mathbf{v}_t$ (Eq. 7) $\rightarrow \mathbf{V}$ (Sec. 3.3) $\rightarrow \mathbf{c}_k$ (Eq. 10). For convenience, we use the embedding function $\psi(\cdot)$ to represent the multi-level skeleton graph representation encoding process, which can be formulated as $\mathbf{V} = \psi(\mathbf{S})$. We perform skeleton prototype contrastive learning by minimizing $\mathcal{L}_{\mathrm{SPC}}$ (Eq. 11), so as to optimize $\psi(\cdot)$ and learn effective skeleton representations in an unsupervised manner, as illustrated in Algorithm 1. To facilitate better skeleton representation learning with more reliable clusters, we optimize our model by alternating between clustering and contrastive learning. More technical details are provided in the Appendix. For the person re-ID task, we exploit the learned embedding function $\psi(\cdot)$ to encode each skeleton sequence $\mathbf{S}_i^{P}$ of the probe set into its multi-level graph representation $\mathbf{V}_i^{P} = \psi(\mathbf{S}_i^{P})$, and match it with the representations $\mathbf{V}_j^{G}$ of the same identity in the gallery set using the Euclidean distance.
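Matching by Euclidean distance, together with the top-k and mAP measures reported in the experiments, can be sketched as below. Ranking the whole gallery for every probe and the standard average-precision definition are assumptions about the evaluation protocol; the function names and toy representations are hypothetical stand-ins for encoded probe and gallery sequences.

```python
import math
from typing import List, Sequence


def rank_gallery(query: Sequence[float], gallery: Sequence[Sequence[float]]) -> List[int]:
    """Gallery indices sorted by Euclidean distance to the query (closest first)."""
    return sorted(range(len(gallery)), key=lambda j: math.dist(query, gallery[j]))


def top_k_accuracy(probe, probe_ids, gallery, gallery_ids, k: int = 1) -> float:
    """Fraction of probes with a same-identity match among the k nearest gallery items."""
    hits = 0
    for q, qid in zip(probe, probe_ids):
        hits += any(gallery_ids[j] == qid for j in rank_gallery(q, gallery)[:k])
    return hits / len(probe)


def mean_average_precision(probe, probe_ids, gallery, gallery_ids) -> float:
    """Mean over probes of the average precision of the full gallery ranking."""
    aps = []
    for q, qid in zip(probe, probe_ids):
        hits, precisions = 0, []
        for rank, j in enumerate(rank_gallery(q, gallery), start=1):
            if gallery_ids[j] == qid:
                hits += 1
                precisions.append(hits / rank)
        aps.append(sum(precisions) / len(precisions) if precisions else 0.0)
    return sum(aps) / len(aps)


# Hypothetical probe and gallery representations with identity labels.
gallery = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
gallery_ids = ["a", "b", "a"]
probe, probe_ids = [[0.1, 0.0], [0.9, 1.2]], ["a", "b"]
top1 = top_k_accuracy(probe, probe_ids, gallery, gallery_ids, k=1)
m_ap = mean_average_precision(probe, probe_ids, gallery, gallery_ids)
```

Top-k only checks whether some correct match appears early, while mAP also penalizes correct matches that are ranked far down the list, which is why the two can diverge in the result tables.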

| | KGBD | BIWI | KS20 | IAS-Lab | CASIA-B |
|---|---|---|---|---|---|
| # Train IDs | 164 | 50 | 20 | 11 | 124 |
| # Train Skeletons | 188,742 | 205,764 | 35,976 | 88,986 | 706,480 |
| # Gallery IDs | 164 | 28 | 20 | 11 | 62 |
| # Gallery Skeletons | 188,700 | | 3,252 | | |
| # Probe IDs | 164 | 28 | 20 | 11 | 62 |
| # Probe Skeletons | 94,146 | | 3,306 | | |

| Types | Methods | # Params | GFLOPs | KS20 top-1 | KS20 top-5 | KS20 top-10 | KS20 mAP | KGBD top-1 | KGBD top-5 | KGBD top-10 | KGBD mAP | IAS-A top-1 | IAS-A top-5 | IAS-A top-10 | IAS-A mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Hand-crafted | Descriptors [26] | — | — | 39.4 | 71.7 | 81.7 | 18.9 | 17.0 | 34.4 | 44.2 | 1.9 | 40.0 | 58.7 | 67.6 | 24.5 |
| | Descriptors [29] | — | — | 51.7 | 77.1 | 86.9 | 24.0 | 31.2 | 50.9 | 59.8 | 4.0 | 42.7 | 62.9 | 70.7 | 25.2 |
| Supervised | PoseGait [25] | 8.93M | 121.60 | 49.4 | 80.9 | 90.2 | 23.5 | 50.6 | 67.0 | 72.6 | 13.9 | 28.4 | 55.7 | 69.2 | 17.5 |
| | MG-SCR [21] | 0.35M | 6.60 | 46.3 | 75.4 | 84.0 | 10.4 | 44.0 | 58.7 | 64.6 | 6.9 | 36.4 | 59.6 | 69.5 | 14.1 |
| Self-supervised/Unsupervised | AGE [19] | 7.15M | 37.37 | 43.2 | 70.1 | 80.0 | 8.9 | 2.9 | 5.6 | 7.5 | 0.9 | 31.1 | 54.8 | 67.4 | 13.4 |
| | SGELA [20] | 8.47M | 7.47 | 45.0 | 65.0 | 75.1 | 21.2 | 38.1 | 53.5 | 60.0 | 4.5 | 16.7 | 30.2 | 44.0 | 13.2 |
| | SM-SGE [22] | 5.58M | 22.61 | 45.9 | 71.9 | 81.1 | 9.5 | 38.2 | 54.2 | 60.7 | 4.4 | 34.0 | 60.5 | 71.6 | 13.6 |
| | SPC-MGR (Ours) | 0.01M | 0.12 | 59.0 | 79.0 | 86.2 | 21.7 | 40.8 | 57.5 | 65.0 | 6.9 | 41.9 | 66.3 | 75.6 | 24.2 |

4 Experiments

4.1 Experimental Setup

We evaluate our approach on four skeleton-based person re-ID benchmarks, namely the Kinect Gait Biometry Dataset (KGBD) [18], BIWI [26], the KS20 VisLab Multi-View Kinect Skeleton Dataset [50], and IAS-Lab [51], as well as on a large-scale RGB-video-based multi-view gait dataset, CASIA-B [52]. These datasets contain skeleton data from 164, 50, 20, 11, and 124 individuals, respectively, as detailed in Table I. For BIWI and IAS-Lab, we evaluate each testing set by using it as the probe and the other testing set as the gallery. For KGBD, since no training and testing splits are provided, we randomly hold out one skeleton video of each person as the probe set and divide the remaining videos equally into the training set and the gallery set. For KS20, we design different splitting setups to evaluate the multi-view person re-ID performance of our approach. In Random View Evaluation (RVE), we randomly select one sequence from each viewpoint as the probe sequence, and use one half of the remaining skeleton sequences for training and the other half as the gallery. In Cross-View Evaluation (CVE), we divide the probe samples by viewpoint (left lateral, left diagonal, frontal, right diagonal, and right lateral) to construct five viewpoint-specific probe sets. We test each of them by matching identities from the other four viewpoints to evaluate cross-view person re-ID performance. More details about the experimental setups are provided in the Appendix. Experiments under each evaluation setup are repeated multiple times, and the average performance is reported.
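The KGBD protocol above can be sketched as follows. This is an illustrative sketch, not the authors' script: it assumes the split is done per person (which keeps every identity in both the training and gallery sets, consistent with the identical KGBD ID counts in Table I) and that an odd leftover video goes to training; the function name and the `(person_id, video_id)` input format are hypothetical.

```python
import random
from collections import defaultdict


def split_probe_train_gallery(videos, seed=0):
    """videos: iterable of (person_id, video_id) pairs (hypothetical format).
    Returns probe, train, and gallery lists of (person_id, video_id)."""
    rng = random.Random(seed)
    by_person = defaultdict(list)
    for person_id, video_id in videos:
        by_person[person_id].append(video_id)

    probe, train, gallery = [], [], []
    for person_id, vids in sorted(by_person.items()):
        vids = list(vids)
        rng.shuffle(vids)
        probe.append((person_id, vids[0]))       # one held-out video per person
        rest = vids[1:]
        half = (len(rest) + 1) // 2              # assumption: odd leftover -> train
        train += [(person_id, v) for v in rest[:half]]
        gallery += [(person_id, v) for v in rest[half:]]
    return probe, train, gallery


videos = [(person, video) for person in range(3) for video in range(5)]
probe, train, gallery = split_probe_train_gallery(videos, seed=1)
print(len(probe), len(train), len(gallery))  # 3 6 6
```

Repeating the split with different seeds and averaging the results mirrors the repeated-evaluation practice described above.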

4.2 Implementation Details

The numbers of nodes in the part-level, body-level, and hyper-torso-level graphs are , , and respectively. The sequence length on the four skeleton-based datasets (KS20, KGBD, BIWI, IAS-Lab) is empirically set to , which achieves the best average performance among the settings tested. For the largest dataset, CASIA-B, whose skeleton data are roughly estimated from RGB frames, we set the sequence length to for training and testing. The node feature dimension is , and the number of structural relation heads is for KGBD and for the other datasets. We use () to fuse multi-level graph features with equal weights. For the DBSCAN algorithm, we empirically use a maximum distance of (KGBD, BIWI), (KS20, IAS-Lab), and (CASIA-B), and a minimum number of samples of for KGBD and for the other datasets. We set the temperature to (KGBD), (CASIA-B), (BIWI), and (KS20, IAS-Lab) for skeleton prototype contrastive learning. We employ the Adam optimizer with a learning rate of for all datasets. The batch size is for KGBD and for the other datasets. For all methods compared in our experiments, we select the optimal model parameters for training and use their predefined skeleton descriptors or pretrained skeleton representations for person re-ID. Full implementation details are provided in the Appendix, and our code is available at https://github.com/Kali-Hac/SPC-MGR.

| Types | Methods | IAS-B top-1 | IAS-B top-5 | IAS-B top-10 | IAS-B mAP | BIWI-S top-1 | BIWI-S top-5 | BIWI-S top-10 | BIWI-S mAP | BIWI-W top-1 | BIWI-W top-5 | BIWI-W top-10 | BIWI-W mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Hand-crafted | Descriptors [26] | 43.7 | 68.6 | 76.7 | 23.7 | 28.3 | 53.1 | 65.9 | 13.1 | 14.2 | 20.6 | 23.7 | 17.2 |
| | Descriptors [29] | 44.5 | 69.1 | 80.2 | 24.5 | 32.6 | 55.7 | 68.3 | 16.7 | 17.0 | 25.3 | 29.6 | 18.8 |
| Supervised | PoseGait [25] | 28.9 | 51.6 | 62.9 | 20.8 | 14.0 | 40.7 | 56.7 | 9.9 | 8.8 | 23.0 | 31.2 | 11.1 |
| | MG-SCR [21] | 32.4 | 56.5 | 69.4 | 12.9 | 20.1 | 46.9 | 64.1 | 7.6 | 10.8 | 20.3 | 29.4 | 11.9 |
| Self-supervised/Unsupervised | AGE [19] | 31.1 | 52.3 | 64.2 | 12.8 | 25.1 | 43.1 | 61.6 | 8.9 | 11.7 | 21.4 | 27.3 | 12.6 |
| | SGELA [20] | 22.2 | 40.8 | 50.2 | 14.0 | 25.8 | 51.8 | 64.4 | 15.1 | 11.7 | 14.0 | 14.7 | 19.0 |
| | SM-SGE [22] | 38.9 | 64.1 | 75.8 | 13.3 | 31.3 | 56.3 | 69.1 | 10.1 | 13.2 | 25.8 | 33.5 | 15.2 |
| | SPC-MGR (Ours) | 43.3 | 68.4 | 79.4 | 24.1 | 34.1 | 57.3 | 69.8 | 16.0 | 18.9 | 31.5 | 40.5 | 19.4 |

| Probe | Method | Gallery left lateral top-1 | Gallery left lateral top-5 | Gallery left lateral top-10 | Gallery left lateral mAP | Gallery left diagonal top-1 | Gallery left diagonal top-5 | Gallery left diagonal top-10 | Gallery left diagonal mAP | Gallery frontal top-1 | Gallery frontal top-5 | Gallery frontal top-10 | Gallery frontal mAP | Gallery right diagonal top-1 | Gallery right diagonal top-5 | Gallery right diagonal top-10 | Gallery right diagonal mAP | Gallery right lateral top-1 | Gallery right lateral top-5 | Gallery right lateral top-10 | Gallery right lateral mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Left lateral | AGE [19] | 46.7 | 74.2 | 83.5 | 22.5 | 11.0 | 35.7 | 47.5 | 10.0 | 8.1 | 29.9 | 47.5 | 9.2 | 7.5 | 26.7 | 43.5 | 8.4 | 7.0 | 23.0 | 37.4 | 8.2 |
| | SGELA [20] | 76.2 | 89.6 | 92.8 | 37.1 | 15.1 | 27.3 | 35.1 | 19.9 | 10.1 | 27.5 | 40.9 | 18.2 | 10.7 | 21.5 | 29.3 | 18.0 | 15.4 | 25.8 | 38.0 | 12.6 |
| | SM-SGE [22] | 58.4 | 84.7 | 92.2 | 27.7 | 17.2 | 50.0 | 63.3 | 10.8 | 7.2 | 21.9 | 39.1 | 10.5 | 4.4 | 19.4 | 34.7 | 9.3 | 10.0 | 23.8 | 33.1 | 9.4 |
| | SPC-MGR (Ours) | 78.9 | 94.1 | 97.3 | 52.9 | 26.2 | 53.1 | 71.5 | 22.9 | 39.1 | 59.8 | 71.5 | 31.4 | 30.5 | 57.4 | 72.7 | 26.6 | 27.0 | 52.0 | 66.4 | 19.9 |
| Left diagonal | AGE | 10.1 | 42.8 | 57.8 | 8.8 | 52.3 | 82.7 | 91.5 | 25.0 | 15.0 | 35.6 | 58.5 | 8.8 | 10.1 | 24.2 | 41.8 | 8.1 | 7.8 | 24.2 | 34.3 | 8.3 |
| | SGELA | 13.1 | 19.6 | 22.6 | 19.4 | 70.9 | 88.2 | 91.8 | 40.5 | 11.8 | 24.5 | 36.3 | 16.5 | 6.9 | 22.6 | 31.7 | 15.4 | 9.2 | 15.4 | 22.9 | 13.9 |
| | SM-SGE | 18.1 | 48.4 | 65.0 | 11.5 | 60.2 | 82.0 | 89.8 | 28.2 | 12.5 | 27.2 | 35.3 | 10.7 | 7.5 | 23.4 | 33.8 | 10.6 | 8.8 | 27.2 | 39.1 | 10.5 |
| | SPC-MGR (Ours) | 39.1 | 60.2 | 69.1 | 26.2 | 75.4 | 95.7 | 96.5 | 56.7 | 40.2 | 62.5 | 72.3 | 32.4 | 28.9 | 55.1 | 66.0 | 24.9 | 18.4 | 48.1 | 66.4 | 16.1 |
| Frontal | AGE | 7.5 | 27.3 | 43.2 | 8.7 | 9.0 | 28.5 | 44.1 | 9.3 | 57.4 | 81.4 | 90.7 | 19.2 | 13.8 | 41.1 | 57.1 | 9.0 | 7.8 | 30.0 | 46.0 | 8.3 |
| | SGELA | 9.6 | 19.8 | 29.7 | 16.4 | 10.8 | 15.6 | 20.4 | 17.5 | 48.4 | 75.7 | 86.5 | 31.6 | 17.1 | 35.7 | 43.0 | 22.0 | 13.5 | 23.4 | 31.8 | 21.3 |
| | SM-SGE | 19.1 | 33.1 | 48.1 | 12.4 | 23.1 | 40.6 | 57.4 | 11.5 | 72.2 | 89.1 | 92.8 | 24.9 | 20.9 | 48.4 | 69.4 | 12.8 | 19.4 | 36.9 | 51.6 | 11.3 |
| | SPC-MGR (Ours) | 37.5 | 67.2 | 75.0 | 26.0 | 41.8 | 65.2 | 74.2 | 32.2 | 86.7 | 98.1 | 99.2 | 63.1 | 59.0 | 82.4 | 86.3 | 40.7 | 34.8 | 62.1 | 77.0 | 24.8 |
| Right diagonal | AGE | 6.7 | 21.3 | 34.7 | 8.2 | 7.9 | 23.4 | 38.9 | 8.9 | 15.2 | 35.9 | 54.4 | 9.2 | 45.3 | 70.5 | 82.1 | 18.7 | 11.3 | 37.1 | 50.2 | 8.9 |
| | SGELA | 5.8 | 18.8 | 28.0 | 14.2 | 11.6 | 15.5 | 20.7 | 16.8 | 17.6 | 47.1 | 53.2 | 24.5 | 59.6 | 81.5 | 89.1 | 36.8 | 17.0 | 29.8 | 32.5 | 23.0 |
| | SM-SGE | 8.4 | 24.4 | 37.8 | 10.4 | 12.9 | 26.6 | 36.3 | 10.9 | 24.1 | 53.4 | 66.3 | 12.9 | 64.4 | 85.9 | 95.0 | 25.5 | 17.8 | 40.9 | 59.1 | 12.1 |
| | SPC-MGR (Ours) | 28.5 | 59.8 | 71.9 | 23.3 | 25.4 | 49.6 | 65.2 | 22.3 | 57.4 | 77.0 | 85.2 | 40.8 | 77.3 | 96.5 | 97.7 | 58.6 | 35.9 | 62.5 | 79.3 | 23.0 |
| Right lateral | AGE | 7.9 | 17.7 | 32.6 | 8.1 | 5.2 | 22.4 | 33.4 | 8.3 | 10.5 | 25.6 | 34.0 | 8.2 | 11.6 | 33.1 | 52.9 | 8.8 | 47.1 | 72.4 | 82.6 | 22.6 |
| | SGELA | 14.0 | 29.1 | 39.2 | 21.3 | 11.9 | 20.6 | 25.9 | 17.3 | 18.6 | 37.8 | 49.7 | 19.4 | 22.7 | 45.9 | 55.2 | 20.7 | 74.5 | 92.7 | 95.1 | 38.3 |
| | SM-SGE | 5.6 | 20.0 | 30.6 | 8.5 | 6.6 | 22.7 | 31.6 | 8.6 | 13.8 | 34.1 | 45.6 | 9.4 | 10.3 | 37.5 | 56.6 | 10.4 | 51.9 | 79.7 | 87.8 | 25.6 |
| | SPC-MGR (Ours) | 28.5 | 53.5 | 62.5 | 21.7 | 17.2 | 37.1 | 50.0 | 18.8 | 31.6 | 53.5 | 66.8 | 30.0 | 31.3 | 56.3 | 77.3 | 26.4 | 65.2 | 89.5 | 96.5 | 45.9 |

4.3 Evaluation Metrics

We use the Cumulative Matching Characteristics (CMC) curve, summarized by top-1, top-5, and top-10 accuracy, to measure the ratio of probe samples whose true identity appears within the top-ranked gallery samples for ranked lists of different sizes. We also report Mean Average Precision (mAP) [53] to evaluate the overall performance of our approach. Unlike existing skeleton-based re-ID works that report only top-1 accuracy and nAUC [54] under the assumption of a fixed set of classes, this work evaluates all existing methods with more comprehensive metrics (top-1, top-5, and top-10 accuracy and mAP) under the widely used person re-ID evaluation protocol, i.e., matching IDs between the probe and gallery sets, which extends to more general scenarios with varying classes, such as generalized person re-ID tasks.
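Both metrics can be computed from a probe-by-gallery distance matrix. The following is a minimal sketch of the standard definitions (the function name is hypothetical, and it assumes a probe whose identity is absent from the gallery is skipped when averaging precision): CMC top-k is the fraction of probes with at least one correct match among the k nearest gallery entries, and AP averages the precision at the rank of every correct match.

```python
import numpy as np


def cmc_and_map(dist, probe_ids, gallery_ids, topk=(1, 5, 10)):
    """CMC top-k accuracy and mAP from a (num_probe, num_gallery) distance matrix."""
    order = np.argsort(dist, axis=1, kind="stable")
    # matches[i, r] is True when the r-th ranked gallery entry shares probe i's ID.
    matches = gallery_ids[order] == probe_ids[:, None]
    cmc = {k: float(matches[:, :k].any(axis=1).mean()) for k in topk}

    average_precisions = []
    for row in matches:
        hit_ranks = np.flatnonzero(row)        # 0-based ranks of correct matches
        if hit_ranks.size == 0:
            continue                           # probe ID absent from the gallery
        precision_at_hits = np.arange(1, hit_ranks.size + 1) / (hit_ranks + 1)
        average_precisions.append(precision_at_hits.mean())
    return cmc, float(np.mean(average_precisions))


dist = np.array([[0.2, 0.1, 0.5, 0.9],
                 [0.3, 0.1, 0.2, 0.4]])
cmc, mean_ap = cmc_and_map(dist, np.array([0, 1]), np.array([0, 1, 0, 1]))
print(cmc, mean_ap)
```

In this toy case the first probe's nearest gallery entry has the wrong ID, so top-1 accuracy is 0.5, while top-5 and top-10 saturate at 1.0 because the gallery holds only four entries.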

4.4 Performance Comparison

In this section, we compare our approach with state-of-the-art self-supervised and unsupervised skeleton-based person re-ID methods [19, 20, 22] on the KS20, KGBD, IAS-Lab, and BIWI datasets with different probe settings in Table II and Table III. As a reference for overall performance, we also include the latest supervised skeleton-based person re-ID methods [25, 21] and representative hand-crafted person re-ID methods [30, 29]. For deep learning based methods, we also report their model sizes, i.e., the number of network parameters, and their computational complexity in Table II.

4.4.1 Comparison with Self-Supervised and Unsupervised Methods

As presented in Table II and Table III, the proposed SPC-MGR has distinct advantages over existing self-supervised and unsupervised methods on all datasets. Compared with the AGE model [19], which learns skeleton features from body-joint sequence representations, our approach consistently achieves higher person re-ID performance, by a large margin of 7.2% to 37.9% top-1 accuracy and 6.0% to 12.8% mAP on different datasets, which demonstrates that the proposed multi-level skeleton graph representations with structural-collaborative body relation learning are more effective at modeling discriminative skeleton features for person re-ID. Our approach significantly outperforms the state-of-the-art skeleton contrastive learning method SGELA [20], by up to 25.2% top-1 accuracy and 11.0% mAP on the KS20, KGBD, IAS-A, and IAS-B testing sets. On the two testing sets of BIWI, SGELA and the proposed approach obtain comparable mAP, while SPC-MGR achieves superior overall performance with higher top-1 (7.2% to 8.3%), top-5 (5.5% to 17.5%), and top-10 accuracy (5.4% to 25.8%). Finally, our approach also outperforms the latest graph-based skeleton representation learning method SM-SGE [22] by a distinct margin of 2.6% to 13.1% top-1 accuracy and 2.5% to 12.2% mAP on all datasets. In contrast to direct inter-sequence contrastive learning [20] or manually designed pretext tasks for skeleton representation learning [19, 22], our approach automatically mines the most representative skeleton features by contrasting sequence-level representations (instances) with cluster-level representations (prototypes), which enables our model to learn better skeleton representations for person re-ID. Moreover, our model requires only 0.01M parameters and has evidently lower computational complexity (0.12 GFLOPs) than existing self-supervised and unsupervised methods, as shown in Table II, which demonstrates its superior efficiency for person re-ID tasks.

| Id | Configurations | KS20 top-1 | KS20 mAP | KGBD top-1 | KGBD mAP | IAS-A top-1 | IAS-A mAP | IAS-B top-1 | IAS-B mAP | BIWI-W top-1 | BIWI-W mAP | BIWI-S top-1 | BIWI-S mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | Baseline | 17.0 | 9.5 | 20.5 | 4.4 | 29.4 | 13.8 | 30.2 | 13.3 | 10.9 | 14.1 | 24.8 | 9.3 |

| 2 | SG + MSRL | 18.6 | 10.2 | 21.4 | 3.7 | 30.3 | 14.3 | 31.8 | 13.3 | 11.2 | 13.8 | 26.0 | 11.0 |

| 3 | SG + MSRL + SPC | 28.4 | 15.5 | 26.2 | 5.7 | 37.9 | 21.5 | 38.5 | 20.8 | 15.4 | 16.4 | 27.3 | 12.2 |

| 4 | MG + MSRL + SPC | 45.1 | 21.2 | 34.5 | 6.3 | 40.0 | 22.5 | 41.9 | 23.2 | 18.1 | 16.9 | 31.5 | 13.4 |

| 5 | MG + MSRL + FCRL + SPC | 59.0 | 21.7 | 40.8 | 6.9 | 41.9 | 24.2 | 43.3 | 24.1 | 18.9 | 19.4 | 34.1 | 16.0 |

| Hyper-body-level | Body-level | Part-level | KS20 top-1 | KS20 mAP | KGBD top-1 | KGBD mAP | IAS-A top-1 | IAS-A mAP | IAS-B top-1 | IAS-B mAP | BIWI-W top-1 | BIWI-W mAP | BIWI-S top-1 | BIWI-S mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ✓ | 29.9 | 15.6 | 15.2 | 4.2 | 30.6 | 20.4 | 30.9 | 21.1 | 14.5 | 3.7 | 20.9 | 9.4 | ||

| ✓ | 44.3 | 19.9 | 20.8 | 4.0 | 34.7 | 20.9 | 38.5 | 22.9 | 16.5 | 15.4 | 27.9 | 12.4 | ||

| ✓ | 48.2 | 20.9 | 34.2 | 5.4 | 41.5 | 21.7 | 39.1 | 20.3 | 17.8 | 16.9 | 29.9 | 13.7 | ||

| ✓ | ✓ | 46.1 | 20.5 | 26.7 | 5.7 | 39.3 | 21.3 | 37.8 | 22.0 | 16.6 | 16.9 | 33.4 | 14.1 | |

| ✓ | ✓ | 51.4 | 21.1 | 34.6 | 6.0 | 41.3 | 22.8 | 38.9 | 23.2 | 18.3 | 16.5 | 34.0 | 13.5 | |

| ✓ | ✓ | 53.3 | 21.3 | 35.6 | 6.3 | 41.7 | 21.9 | 41.5 | 23.1 | 18.9 | 18.5 | 32.5 | 15.1 | |

| ✓ | ✓ | ✓ | 59.0 | 21.7 | 40.8 | 6.9 | 41.9 | 24.2 | 43.3 | 24.1 | 19.3 | 18.9 | 34.1 | 15.3 |

| # Heads | KS20 top-1 | KS20 mAP | KGBD top-1 | KGBD mAP | IAS-A top-1 | IAS-A mAP | IAS-B top-1 | IAS-B mAP | BIWI-W top-1 | BIWI-W mAP | BIWI-S top-1 | BIWI-S mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2 | 52.7 | 20.1 | 30.3 | 6.2 | 38.9 | 22.2 | 40.7 | 23.1 | 17.3 | 17.5 | 25.8 | 11.5 |

| 4 | 53.9 | 21.4 | 33.7 | 6.5 | 39.3 | 19.4 | 40.8 | 23.4 | 17.8 | 17.8 | 30.5 | 13.3 |

| 8 | 59.0 | 21.7 | 38.9 | 6.6 | 41.9 | 24.2 | 43.3 | 24.1 | 18.9 | 19.4 | 34.1 | 16.0 |

| 16 | 56.5 | 21.6 | 40.8 | 6.9 | 41.0 | 23.4 | 42.1 | 23.8 | 18.8 | 19.3 | 33.2 | 14.4 |

| Fusion coefficient | KS20 top-1 | KS20 mAP | KGBD top-1 | KGBD mAP | IAS-A top-1 | IAS-A mAP | IAS-B top-1 | IAS-B mAP | BIWI-W top-1 | BIWI-W mAP | BIWI-S top-1 | BIWI-S mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0.1 | 53.3 | 20.4 | 34.1 | 6.3 | 40.8 | 22.3 | 40.6 | 21.7 | 18.4 | 18.2 | 32.8 | 13.5 |

| 0.25 | 54.2 | 20.2 | 34.0 | 5.7 | 40.9 | 22.2 | 43.1 | 23.9 | 18.4 | 17.1 | 33.3 | 13.5 |

| 0.5 | 55.5 | 21.2 | 35.3 | 6.3 | 41.4 | 22.8 | 43.6 | 24.0 | 18.5 | 18.5 | 33.4 | 13.4 |

| 1.0 | 59.0 | 21.7 | 40.8 | 6.9 | 41.9 | 24.2 | 43.3 | 24.1 | 18.9 | 19.4 | 34.1 | 16.0 |

We also compare our approach with state-of-the-art skeleton-based counterparts under the cross-view evaluation (CVE) setup of KS20. As shown in Table IV, our approach remarkably outperforms the latest self-supervised and multi-view skeleton-based methods SGELA [20] and SM-SGE [22], with clear average margins in top-1, top-5, and top-10 accuracy and mAP, on 24 out of 25 combinations of probe and gallery views, which demonstrates that our model learns more discriminative skeleton representations that are more robust to viewpoint variation for cross-view person re-ID.

4.4.2 Comparison with Hand-Crafted and Supervised Methods

Compared with [26] and [29], which extract hand-crafted geometric and anthropometric skeleton descriptors, our model improves top-1 accuracy by 1.5% to 23.8% on KGBD, BIWI-S, and BIWI-W. Despite similar performance on IAS-A and IAS-B, these methods are inferior to our approach by at least 7.3% top-1 accuracy on more challenging datasets such as KS20 and KGBD, which contain more viewpoints and individuals. Furthermore, with unlabeled 3D skeletons as the only input, the proposed approach obtains performance comparable or even superior to two state-of-the-art supervised methods, PoseGait [25] and MG-SCR [21], on five of the six testing sets (KS20, IAS-A, IAS-B, BIWI-S, BIWI-W). Interestingly, even with massive labels as supervision, these methods still fail to obtain satisfactory person re-ID accuracy and even perform worse than hand-crafted methods on datasets with frequent view, shape, and appearance changes (KS20, IAS, and BIWI) under the probe-gallery person re-ID setup. Considering that our approach requires no manual annotation and achieves highly competitive and more balanced performance with far fewer network parameters, as shown in Table II, it can serve as a more general solution for skeleton-based person re-ID and related tasks. We show broader applications of our approach to more general person re-ID scenarios in Sec. 5.5.

| Temperature | KS20 top-1 | KS20 mAP | KGBD top-1 | KGBD mAP | IAS-A top-1 | IAS-A mAP | IAS-B top-1 | IAS-B mAP | BIWI-W top-1 | BIWI-W mAP | BIWI-S top-1 | BIWI-S mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0.06 | 58.3 | 21.2 | 40.8 | 6.9 | 41.2 | 22.5 | 43.2 | 24.0 | 18.5 | 18.7 | 34.5 | 15.6 |

| 0.07 | 58.6 | 21.3 | 38.6 | 6.6 | 41.5 | 22.7 | 43.0 | 23.8 | 18.9 | 19.4 | 34.1 | 16.0 |

| 0.08 | 59.0 | 21.7 | 36.6 | 6.6 | 41.9 | 24.2 | 43.3 | 24.1 | 18.8 | 18.9 | 33.9 | 14.5 |

| 0.1 | 57.7 | 21.6 | 34.0 | 6.5 | 41.4 | 23.1 | 43.8 | 24.2 | 18.6 | 17.6 | 32.8 | 12.9 |

| 0.25 | 57.0 | 21.5 | 27.7 | 5.7 | 41.0 | 21.4 | 43.2 | 23.5 | 18.3 | 16.2 | 33.8 | 15.1 |

| 0.5 | 56.5 | 21.1 | 19.8 | 5.0 | 40.8 | 24.0 | 41.0 | 24.5 | 19.0 | 18.5 | 33.0 | 15.0 |

| Min. samples | KS20 top-1 | KS20 mAP | KGBD top-1 | KGBD mAP | IAS-A top-1 | IAS-A mAP | IAS-B top-1 | IAS-B mAP | BIWI-W top-1 | BIWI-W mAP | BIWI-S top-1 | BIWI-S mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 53.9 | 21.5 | 30.3 | 6.3 | 41.2 | 23.1 | 43.0 | 23.6 | 18.4 | 18.5 | 34.0 | 14.3 |

| 2 | 59.0 | 21.7 | 34.4 | 6.5 | 41.9 | 24.2 | 43.3 | 24.1 | 18.9 | 19.4 | 34.1 | 16.0 |

| 3 | 58.4 | 21.2 | 36.6 | 6.5 | 41.1 | 22.6 | 43.2 | 23.9 | 19.1 | 19.3 | 32.0 | 13.8 |

| 4 | 58.6 | 21.5 | 40.8 | 6.9 | 40.0 | 22.1 | 42.8 | 24.2 | 18.8 | 18.1 | 30.9 | 13.3 |

| Max. distance | KS20 top-1 | KS20 mAP | KGBD top-1 | KGBD mAP | IAS-A top-1 | IAS-A mAP | IAS-B top-1 | IAS-B mAP | BIWI-W top-1 | BIWI-W mAP | BIWI-S top-1 | BIWI-S mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0.4 | 53.3 | 21.7 | 33.9 | 5.8 | 39.8 | 23.1 | 43.2 | 24.3 | 18.6 | 17.6 | 24.6 | 11.2 |

| 0.6 | 55.7 | 21.5 | 40.8 | 6.9 | 41.3 | 22.6 | 42.7 | 24.0 | 18.9 | 19.4 | 34.1 | 16.0 |

| 0.8 | 59.0 | 21.7 | 22.1 | 5.4 | 41.9 | 24.2 | 43.3 | 24.1 | 17.3 | 18.5 | 33.2 | 14.4 |

| 1.0 | 54.3 | 21.0 | 17.7 | 4.0 | 40.7 | 22.2 | 41.0 | 23.8 | 12.3 | 15.1 | 28.9 | 12.0 |

5 Further Analysis

5.1 Ablation Study

In this section, we conduct an ablation study to demonstrate the necessity of each component of the proposed approach. We use raw skeleton sequences as the baseline and report the average performance of each configuration. From Table V, we draw the following conclusions. The models that use a single-level skeleton graph with MSRL (Id = 2, 3) outperform the baseline (Id = 1), which directly uses raw body-joint sequences, in top-1 accuracy on all datasets and in mAP on most of them, regardless of whether SPC is used. Such results demonstrate the effectiveness of graph representations, which model richer body structural information and mine valuable body-component relations to obtain more discriminative skeleton representations. Compared with the model without contrastive learning (Id = 2), employing SPC (Id = 3) consistently improves re-ID performance, by up to 9.8% top-1 accuracy and 7.5% mAP on different datasets. This shows that the proposed SPC is a highly effective contrastive learning paradigm that enables the model to mine more typical and unique skeleton features of different identities from unlabeled graph representations for person re-ID. Exploiting the proposed multi-level graphs (Id = 4) outperforms using only a single-level graph (Id = 3) by a remarkable margin of 2.1% to 16.7% top-1 accuracy and 0.5% to 5.7% mAP, which demonstrates that modeling body structure and relations at various levels with the proposed multi-level graphs (MG) encourages the model to learn more useful skeleton features for person re-ID. We compare the effects of different graph levels in the next section. Adding FCRL further improves the overall performance, by 0.8% to 13.9% top-1 accuracy and 0.5% to 2.6% mAP on different datasets. These results support our claim that combining structural and collaborative body relation learning facilitates capturing richer features of body structure and skeleton patterns for person re-ID.

5.2 Discussions

5.2.1 Different Skeleton Graph Levels

To analyze the effects of different graph levels, Table VI evaluates the performance of our approach when combining different graphs for person re-ID under the same optimal model setting. Using low-level graph representations achieves evidently better top-1 accuracy than using high-level graphs on most datasets, which suggests that low-level graphs (i.e., the part-level graph), which contain more body-component nodes and richer body structural and relational information, benefit learning more discriminative skeleton representations for person re-ID. Combining different levels of graphs improves performance compared with using a single-level graph (first three rows of Table VI) in most cases, and the model exploiting all graph levels performs best across datasets. This further shows that the proposed multi-level graphs, which model body structure and motion at various levels, enable our approach to capture more recognizable structural features and pattern information for person re-ID.

5.2.2 Different Structural Relation Head Settings

Table VII shows the effect of the number of structural relation heads on our approach. Introducing more learnable structural relation heads improves the performance of our approach on different datasets, as more heads encourage capturing more spatially or motion-related features of adjacent body components. However, too many structural relation heads (e.g., 16) may cause the model to learn redundant relation information and degrade performance on relatively limited training data such as KS20.

5.2.3 Different Graph Fusion Coefficients

The fusion coefficient controls the extent to which collaborative node features are fused between different graph levels. Here we use a single unified coefficient to fuse features equally between different graph levels, so as to better analyze the overall effect of collaborative fusion. As reported in Table VIII, increasing the coefficient enables our model to achieve better re-ID performance in terms of both top-1 accuracy and mAP on all datasets. Such results verify the necessity of adequate collaborative graph fusion for learning more effective multi-level skeleton graph representations for person re-ID. Based on this observation, we set the coefficient to 1.0 to fuse skeleton graph features.

5.2.4 Temperature Settings for SPC Scheme

As shown in Table IX, the model using a lower temperature obtains slightly better person re-ID performance. Since higher temperatures tend to retain more similar features between different skeleton prototypes, they might reduce the capacity of contrastive learning to capture inter-prototype differences and their underlying discriminative features. This result is also consistent with our previous analysis that the proposed SPC scheme, under an appropriate temperature, encourages learning higher mRCL, larger inter-class differences, and more effective skeleton representations for person re-ID. We also find that a high temperature leads to evident performance degradation on KGBD, which contains more individuals. In practice, we select the best temperature for datasets of different sizes to achieve better and more balanced person re-ID performance.
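The role of the temperature can be illustrated with a generic prototype-level InfoNCE loss that contrasts each instance with all cluster prototypes. This is an assumed formulation for illustration only; the SPC objective in Eq. 10 may weight or normalize its terms differently, and the function name is hypothetical. Dividing cosine similarities by a smaller temperature sharpens the softmax over prototypes, so differences between prototypes weigh more heavily in the loss.

```python
import numpy as np


def prototype_contrastive_loss(z, labels, prototypes, tau):
    """InfoNCE-style loss pulling each instance toward its own cluster prototype
    and away from the other prototypes (cosine similarity divided by tau)."""
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    p = prototypes / np.linalg.norm(prototypes, axis=1, keepdims=True)
    logits = z @ p.T / tau                                # (N, K) scaled similarities
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-log_prob[np.arange(len(z)), labels].mean())


prototypes = np.eye(3)              # three toy prototypes
z = np.array([[0.9, 0.1, 0.0]])     # one instance, closest to prototype 0
for tau in (0.07, 0.5, 1.0):
    print(tau, prototype_contrastive_loss(z, np.array([0]), prototypes, tau))
```

For this instance, which already lies closest to its own prototype, the loss shrinks as the temperature decreases, reflecting the sharper separation between the positive prototype and the others.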

5.2.5 Different Settings for DBSCAN

We adjust the sample density, i.e., the minimum number of samples within the maximum distance, in the DBSCAN algorithm to encourage more balanced and stable clustering. Table X and Table XI show that a relatively small minimum number of samples and a relatively large maximum distance, which enhance the connectedness of skeleton instances in larger clusters and generate fewer prototypes, boost model performance in most cases. However, on larger datasets such as KGBD, a large maximum distance reduces the overall performance, while a smaller maximum distance and a larger minimum number of samples, which increase the number of prototypes, improve person re-ID performance. This suggests that more diverse skeleton prototypes may encourage mining richer discriminative features when there are more identities.
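The effect of the two DBSCAN parameters can be reproduced on toy data with the minimal implementation below. It is written from scratch for illustration and is not the clustering code used in the paper; it follows the common convention that the minimum sample count includes the point itself. Treating each non-noise cluster's mean feature as its prototype, and discarding noise points, are assumptions, and both function names are hypothetical.

```python
import numpy as np


def dbscan(dist, eps, min_samples):
    """Minimal DBSCAN on a precomputed distance matrix; label -1 marks noise.
    min_samples counts the point itself."""
    n = dist.shape[0]
    labels = np.full(n, -1)
    neighbors = [np.flatnonzero(dist[i] <= eps) for i in range(n)]
    is_core = np.array([len(nb) >= min_samples for nb in neighbors])
    cluster = 0
    for i in range(n):
        if labels[i] != -1 or not is_core[i]:
            continue
        labels[i] = cluster
        stack = [i]
        while stack:                     # grow the cluster through core points
            j = stack.pop()
            if not is_core[j]:
                continue                 # border points join but do not expand
            for nb in neighbors[j]:
                if labels[nb] == -1:
                    labels[nb] = cluster
                    stack.append(nb)
        cluster += 1
    return labels


def prototypes_from_clusters(features, labels):
    """Mean feature of each non-noise cluster, used here as its prototype."""
    return np.stack([features[labels == c].mean(axis=0)
                     for c in np.unique(labels) if c != -1])


x = np.array([0.0, 0.1, 0.2, 1.0, 1.1, 1.2, 2.0])[:, None]
dist = np.abs(x - x.T)
print(dbscan(dist, eps=0.15, min_samples=2))  # two clusters, last point is noise
print(dbscan(dist, eps=1.0, min_samples=2))   # larger eps merges everything
```

A larger maximum distance connects more instances and yields fewer, larger clusters (and thus fewer prototypes), matching the trade-off discussed above.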

5.3 Analysis of Body Relation Learning

To validate the ability of our approach to infer body-component relations between different graphs, we visualize collaborative relations and the corresponding relation value matrices of different graphs in Fig. 6. Here we take collaborative relations between adjacent graph levels as an example. As shown in Fig. 6(a)-(c), our approach learns to assign larger relation values to significantly moving body partitions such as feet, hips, hands, and elbows (nodes 0, 1, 2, 3, 6, 7, 8, and 9 in the corresponding graph), which typically perform collaborative motion during walking and carry abundant and unique motion features. By inferring the inherent relations between these body components and fusing them into multi-level graph representations, our model focuses on crucial patterns of skeletal motion to learn more effective skeleton representations. Our approach not only captures the relations of major body components to their corresponding high-level components, but also allocates different attention to potentially motion-related components. For example, as shown in Fig. 6(d), Fig. 6(f), and Fig. 6(h), the movement of the arms (nodes 3 and 4 in one graph) is highly correlated with the leg motion (nodes 0, 1, 2, and 3 in the other graph), and different parts of them show varying degrees of collaboration during walking. Such results demonstrate that our approach can capture body-related high-level semantics (i.e., body-component correspondence) while facilitating global pattern learning of correlative components. More graph details and visualization results are provided in the Appendix.

| Methods (Probe-Gallery) | Nm-Nm top-1 | Nm-Nm top-5 | Nm-Nm top-10 | Nm-Nm mAP | Bg-Bg top-1 | Bg-Bg top-5 | Bg-Bg top-10 | Bg-Bg mAP | Cl-Cl top-1 | Cl-Cl top-5 | Cl-Cl top-10 | Cl-Cl mAP | Cl-Nm top-1 | Cl-Nm top-5 | Cl-Nm top-10 | Cl-Nm mAP | Bg-Nm top-1 | Bg-Nm top-5 | Bg-Nm top-10 | Bg-Nm mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| †LMNN∗ [55] | 3.9 | 22.7 | 36.1 | — | 18.3 | 38.6 | 49.2 | — | 17.4 | 35.7 | 45.8 | — | 11.6 | 12.6 | 17.8 | — | 23.1 | 37.1 | 44.4 | — |

| †ITML∗ [56] | 7.5 | 22.2 | 34.2 | — | 19.5 | 26.0 | 33.7 | — | 20.1 | 34.4 | 43.3 | — | 10.3 | 24.5 | 36.1 | — | 21.8 | 30.4 | 36.3 | — |

| †ELF∗ [54] | 12.3 | 35.6 | 50.3 | — | 5.8 | 25.5 | 37.6 | — | 19.9 | 43.9 | 56.7 | — | 5.6 | 16.0 | 26.3 | — | 17.1 | 30.0 | 37.9 | — |

| †SDALF [57] | 4.9 | 27.0 | 41.6 | — | 10.2 | 33.5 | 47.2 | — | 16.7 | 42.0 | 56.7 | — | 11.6 | 19.4 | 27.6 | — | 22.9 | 30.1 | 36.1 | — |

| †Score-based MLR∗ [58] | 13.6 | 48.7 | 63.7 | — | 13.6 | 48.7 | 63.7 | — | 13.5 | 48.6 | 63.9 | — | 9.7 | 27.8 | 45.1 | — | 14.7 | 32.6 | 50.2 | — |

| †Feature-based MLR∗ [58] | 16.3 | 43.4 | 60.8 | — | 18.9 | 44.8 | 59.4 | — | 25.4 | 53.3 | 68.9 | — | 20.3 | 42.6 | 56.9 | — | 31.8 | 53.6 | 64.1 | — |

| AGE [19] | 20.8 | 29.3 | 34.2 | 3.5 | 37.1 | 56.2 | 67.0 | 9.8 | 35.5 | 54.3 | 65.3 | 9.6 | 14.6 | 33.0 | 42.7 | 3.0 | 32.4 | 51.2 | 60.1 | 3.9 |

| SM-SGE [22] | 50.2 | 73.5 | 81.9 | 6.6 | 26.6 | 49.0 | 59.4 | 9.3 | 27.2 | 51.4 | 63.2 | 9.7 | 10.6 | 26.3 | 35.9 | 3.0 | 16.6 | 36.8 | 47.5 | 3.5 |

| SPC-MGR (Ours) | 71.2 | 88.0 | 92.8 | 9.1 | 44.3 | 66.4 | 76.4 | 11.4 | 48.3 | 71.6 | 81.6 | 11.8 | 22.4 | 40.4 | 51.0 | 4.3 | 28.9 | 49.3 | 59.1 | 4.6 |

5.4 Analysis of Skeleton Representations

5.4.1 Intra-Class Tightness and Inter-Class Looseness

In this section, we further analyze the effectiveness of the learned skeleton representations with the proposed mACT and mRCL metrics, and compare our approach with existing self-supervised and unsupervised methods (i.e., AGE [19], SGELA [20], and SM-SGE [22]) on all datasets. As shown in Fig. 7, we make the following observations. The representations learned by the proposed SPC-MGR achieve the highest mACT and mRCL among all methods on different datasets, which indicates that our approach learns higher similarity among same-class instances while increasing the distance between different classes. These results also substantiate our intuition behind the SPC scheme: maximizing intra-prototype similarity and inter-prototype dissimilarity encourages capturing both the shared and the unique semantics of ground-truth classes in skeleton representation learning. Models that obtain both higher mACT and higher mRCL also achieve better person re-ID performance in most cases. For example, our method, SGELA, and SM-SGE achieve higher top-1 accuracy and mAP than AGE on the KS20 and BIWI testing sets (see Table II and Table III), which is consistent with the mACT and mRCL results shown in Fig. 7(b) and Fig. 7(d). This further verifies that the proposed mACT and mRCL can serve as auxiliary evaluation metrics for analyzing the effectiveness of learned representations in person re-ID tasks.

5.4.2 Visualization of Skeleton Representations

We use t-SNE [59] to visualize skeleton representations for qualitative analysis, and compare our approach with two state-of-the-art skeleton-based methods, i.e., AGE [19] and SM-SGE [22]. As presented in Fig. 8(c), the skeleton representations learned by our approach form class clusters with higher separation than those of AGE and SM-SGE on BIWI, which suggests lower entropy of our representations. Interestingly, the representations learned on KS20 are separated into small groups of the same class, as shown in Fig. 8(f), with significantly larger looseness than those of the other two methods. These results imply that our model may learn skeleton representations with finer separation and enable pattern-based grouping within a specific class.

Table XIII: Generalized person re-ID performance with direct domain generalization (DG) and unsupervised fine-tuning (UF). Rows are target datasets; column groups are source datasets (KS20, KGBD, IAS-Lab, BIWI), each reporting top-1, top-5, top-10, and mAP.

| Target | Type | KS20 top-1 | KS20 top-5 | KS20 top-10 | KS20 mAP | KGBD top-1 | KGBD top-5 | KGBD top-10 | KGBD mAP | IAS-Lab top-1 | IAS-Lab top-5 | IAS-Lab top-10 | IAS-Lab mAP | BIWI top-1 | BIWI top-5 | BIWI top-10 | BIWI mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| KS20 | DG | — | — | — | — | 19.7 | 54.3 | 69.7 | 10.2 | 20.1 | 60.4 | 75.6 | 13.5 | 29.7 | 62.7 | 79.9 | 15.0 |
| KS20 | UF | 59.0 | 79.0 | 86.2 | 21.7 | 48.4 | 77.7 | 85.2 | 21.6 | 52.2 | 78.3 | 89.1 | 22.8 | 50.8 | 79.7 | 87.1 | 21.9 |
| KGBD | DG | 18.3 | 37.3 | 46.7 | 4.4 | — | — | — | — | 15.6 | 35.6 | 46.2 | 4.0 | 20.5 | 40.8 | 50.1 | 4.9 |
| KGBD | UF | 28.5 | 45.2 | 52.9 | 6.4 | 40.8 | 57.5 | 65.0 | 6.9 | 31.1 | 48.1 | 55.7 | 6.5 | 29.7 | 47.4 | 55.0 | 6.4 |
| IAS-A | DG | 27.9 | 57.1 | 71.5 | 15.6 | 29.6 | 56.5 | 71.6 | 16.0 | — | — | — | — | 27.5 | 53.6 | 67.9 | 14.4 |
| IAS-A | UF | 42.8 | 67.7 | 77.5 | 23.0 | 34.1 | 59.4 | 72.0 | 18.4 | 41.9 | 66.3 | 75.6 | 24.2 | 37.2 | 61.8 | 72.2 | 23.8 |
| IAS-B | DG | 32.0 | 60.6 | 72.0 | 15.7 | 29.5 | 58.8 | 68.9 | 16.5 | — | — | — | — | 27.0 | 57.6 | 70.5 | 13.5 |
| IAS-B | UF | 45.4 | 68.8 | 81.4 | 29.0 | 35.9 | 61.6 | 72.1 | 21.9 | 43.3 | 68.4 | 79.4 | 24.1 | 39.1 | 66.9 | 74.5 | 24.2 |
| BIWI-W | DG | 19.3 | 31.5 | 38.9 | 19.6 | 10.3 | 22.5 | 33.1 | 12.0 | 10.0 | 23.1 | 31.1 | 15.9 | — | — | — | — |
| BIWI-W | UF | 21.6 | 32.3 | 40.9 | 20.7 | 15.0 | 29.6 | 39.1 | 14.3 | 17.7 | 29.3 | 36.2 | 16.6 | 18.9 | 31.5 | 40.5 | 19.4 |
| BIWI-S | DG | 23.8 | 52.2 | 69.9 | 14.2 | 19.0 | 49.4 | 67.4 | 8.5 | 18.8 | 46.1 | 61.1 | 12.2 | — | — | — | — |
| BIWI-S | UF | 40.4 | 62.9 | 74.2 | 16.2 | 21.3 | 46.5 | 57.2 | 9.8 | 27.9 | 44.5 | 63.9 | 12.8 | 34.1 | 57.3 | 69.8 | 16.0 |
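
The top-1, top-5, and top-10 accuracies and mAP reported in the table above follow standard retrieval-based re-ID evaluation. As a minimal sketch, assuming cosine similarity between query and gallery representations and no camera or view filtering (a concrete protocol may add such filtering), both metrics can be computed as below. `rank_k_and_map` is a hypothetical helper, not part of the authors' code.

```python
import numpy as np


def rank_k_and_map(query_feats, query_ids, gallery_feats, gallery_ids, ks=(1, 5, 10)):
    """Return ({k: top-k accuracy}, mAP) for cosine-similarity retrieval."""
    q = np.asarray(query_feats, dtype=float)
    g = np.asarray(gallery_feats, dtype=float)
    q_ids, g_ids = np.asarray(query_ids), np.asarray(gallery_ids)
    q = q / np.linalg.norm(q, axis=1, keepdims=True)
    g = g / np.linalg.norm(g, axis=1, keepdims=True)

    order = np.argsort(-(q @ g.T), axis=1)     # gallery indices ranked by similarity
    matches = g_ids[order] == q_ids[:, None]   # True where the identity matches
    matches = matches[matches.any(axis=1)]     # skip queries with no true match

    first_hit = matches.argmax(axis=1)         # 0-based rank of the first correct match
    topk = {k: float(np.mean(first_hit < k)) for k in ks}

    aps = []
    for row in matches:
        hit_ranks = np.flatnonzero(row) + 1    # 1-based ranks of all correct matches
        precisions = np.arange(1, len(hit_ranks) + 1) / hit_ranks
        aps.append(precisions.mean())          # average precision of this query
    return topk, float(np.mean(aps))


if __name__ == "__main__":
    topk, m_ap = rank_k_and_map(
        [[1.0, 0.0], [0.0, 1.0]], [0, 1],
        [[0.9, 0.1], [0.1, 0.9], [1.0, 0.0]], [0, 1, 1],
    )
    print(topk, m_ap)
```

Top-k accuracy only checks where the first correct match appears, whereas mAP averages precision over every correct match, so a method can gain in top-1 while its mAP stays nearly flat, a pattern visible in several cells of the table.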

5.5 Broader Applications Under General Scenarios

In more general person re-ID scenarios, such as large-scale RGB-based scenes, the source datasets might contain only RGB images without any skeleton data, or might lack sufficient data for model training. In the former case, the proposed approach can exploit unlabeled 3D skeletons estimated from RGB videos to learn effective representations for person re-ID. In the latter case, our approach can directly transfer a pre-trained model to perform person re-ID on a new dataset. We demonstrate these broader applications of our approach as follows.

5.5.1 Application to Model-Estimated Skeleton Data

To verify the effectiveness of our skeleton-based approach in large-scale RGB-based settings (CASIA-B), we exploit pre-trained pose estimation models [60, 61] to extract 3D skeleton data from the RGB videos of CASIA-B, and evaluate our approach with the estimated skeleton data. We compare our approach with representative appearance-based methods [55, 56, 54, 57, 58] and skeleton-based methods [19, 22]. As reported in Table XII, our approach is superior to the recent skeleton-based methods SM-SGE and AGE, with an evident performance gain of - top-1 accuracy and - mAP in four out of five evaluation conditions of CASIA-B, which substantiates that the proposed approach learns more discriminative skeleton representations than these methods when using model-estimated skeleton data. Compared with representative classic appearance-based methods that utilize visual features, RGB features, and silhouettes, our skeleton-based approach still achieves the best performance in most conditions. For instance, our approach not only performs better than LMNN [55] and ITML [56], which apply metric learning to different visual features (e.g., RGB and HSV colors and textures) [58], but also surpasses the score-based MLR model [58], which fuses RGB appearance and GEI features, by up to top-1 accuracy, top-5 accuracy, and top-10 accuracy. Despite using only noisy estimated skeleton data for training, the proposed unsupervised approach still obtains highly competitive performance compared with supervised appearance-based methods in different conditions, which demonstrates the potential of our approach for large-scale RGB-based datasets under more general re-ID settings.

5.5.2 Application to Generalized Person Re-Identification

Our approach learns a unified skeleton graph representation for skeleton data with varying body joints or topologies, which enables the pre-trained model to be directly transferred to different datasets for generalized person re-ID. To evaluate our approach on generalized person re-ID, we use the model trained on a source dataset to perform person re-ID on a target dataset (i.e., direct domain generalization (DG)), and then further fine-tune the model with unlabeled data of the target dataset (i.e., unsupervised fine-tuning (UF)) to compare generalization performance. As shown in Table XIII, we can draw the following observations and conclusions. The model trained on one dataset achieves competitive person re-ID performance on other unseen target datasets. Direct generalization is effective across different datasets, while unsupervised fine-tuning on the target dataset further improves person re-ID performance. These results demonstrate that our approach possesses good generalization ability with robustness to domain shifts [62], and could be promisingly applied to other open person re-ID tasks. Interestingly, training on different source datasets typically leads to different person re-ID performance on a new dataset. For example, the model trained on KGBD fails to yield satisfactory performance on IAS-B, BIWI-W, and BIWI-S, while the model pre-trained on KS20 and further fine-tuned on those testing sets achieves superior performance to the models trained on the target datasets themselves (IAS-Lab for IAS-B, BIWI for BIWI-W and BIWI-S), as shown by the bold numbers in Table XIII. This implies that an appropriate domain initialization or model pre-training could potentially be exploited to further improve generalized person re-ID performance.
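
The observations above can be checked directly against the numbers. The snippet below transcribes the (top-1, mAP) pairs of Table XIII for the IAS-B, BIWI-W, and BIWI-S targets, computes the gain of UF over DG for each cross-dataset source, finds the source with the weakest fine-tuned top-1, and compares the KS20-pretrained model with the in-domain one. The `IN_DOMAIN` mapping (IAS-Lab for IAS-B, BIWI for BIWI-W and BIWI-S) is our reading of the table, and all function names are illustrative.

```python
# (top-1, mAP) pairs transcribed from Table XIII for three target sets.
TABLE = {
    "IAS-B": {
        "DG": {"KS20": (32.0, 15.7), "KGBD": (29.5, 16.5), "BIWI": (27.0, 13.5)},
        "UF": {"KS20": (45.4, 29.0), "KGBD": (35.9, 21.9),
               "IAS-Lab": (43.3, 24.1), "BIWI": (39.1, 24.2)},
    },
    "BIWI-W": {
        "DG": {"KS20": (19.3, 19.6), "KGBD": (10.3, 12.0), "IAS-Lab": (10.0, 15.9)},
        "UF": {"KS20": (21.6, 20.7), "KGBD": (15.0, 14.3),
               "IAS-Lab": (17.7, 16.6), "BIWI": (18.9, 19.4)},
    },
    "BIWI-S": {
        "DG": {"KS20": (23.8, 14.2), "KGBD": (19.0, 8.5), "IAS-Lab": (18.8, 12.2)},
        "UF": {"KS20": (40.4, 16.2), "KGBD": (21.3, 9.8),
               "IAS-Lab": (27.9, 12.8), "BIWI": (34.1, 16.0)},
    },
}

# Source drawn from the same dataset as each target (our reading of the table).
IN_DOMAIN = {"IAS-B": "IAS-Lab", "BIWI-W": "BIWI", "BIWI-S": "BIWI"}


def uf_gains(target):
    """Top-1 and mAP gain of UF over DG for every source evaluated under DG."""
    dg, uf = TABLE[target]["DG"], TABLE[target]["UF"]
    return {s: (round(uf[s][0] - dg[s][0], 1), round(uf[s][1] - dg[s][1], 1)) for s in dg}


def weakest_uf_source(target):
    """Source whose fine-tuned model has the lowest top-1 on this target."""
    uf = TABLE[target]["UF"]
    return min(uf, key=lambda s: uf[s][0])


def ks20_beats_in_domain(target):
    """True if KS20 pre-training plus UF beats the in-domain model on both metrics."""
    ks20, own = TABLE[target]["UF"]["KS20"], TABLE[target]["UF"][IN_DOMAIN[target]]
    return ks20[0] > own[0] and ks20[1] > own[1]


for t in TABLE:
    print(t, uf_gains(t), weakest_uf_source(t), ks20_beats_in_domain(t))
```

For every target listed here, all UF-over-DG gains are positive, KGBD is the weakest fine-tuned source, and the KS20-pretrained model outperforms the in-domain model on both top-1 and mAP.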

6 Conclusion and Future Work

In this paper, we construct unified multi-level graphs to represent 3D skeletons, and propose an unsupervised skeleton prototype contrastive learning paradigm with multi-level graph relation modeling (SPC-MGR) to learn effective skeleton representations for person re-ID. We devise a multi-head structural relation layer to capture relations between neighboring body-component nodes in graphs, so as to aggregate key correlative features into effective node representations. To capture more discriminative patterns in skeletal motion, we propose a full-level collaborative relation layer to infer dynamic collaboration among components at different levels. Meanwhile, multi-level graph fusion is exploited to integrate collaborative node features across graphs, enhancing structural semantics and global pattern learning. Lastly, we propose a skeleton prototype contrastive learning scheme that clusters unlabeled skeleton graph representations and contrasts their inherent similarity with representative skeleton features, yielding discriminative representations for person re-ID. The proposed SPC-MGR outperforms several state-of-the-art skeleton-based methods, and is also highly effective in more general person re-ID scenarios.
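
The exact SPC objective is defined earlier in the paper. To make the idea of "clustering unlabeled representations and contrasting them with skeleton prototypes" concrete, the sketch below implements a generic prototype-level contrastive (InfoNCE-style) loss under stated assumptions: pseudo-labels come from some unspecified clustering step that marks outliers as -1, each prototype is the normalized mean of its cluster, and the temperature of 0.07 is an arbitrary choice. The function name is hypothetical, and this is not claimed to be the authors' formulation.

```python
import numpy as np


def prototype_contrastive_loss(features, pseudo_labels, temperature=0.07):
    """Generic prototype-level InfoNCE loss (a sketch, not the exact SPC objective).

    features:      (N, D) graph-level skeleton representations.
    pseudo_labels: (N,) cluster ids from an assumed clustering step; -1 marks
                   outliers, which this sketch simply excludes.
    """
    z = np.asarray(features, dtype=float)
    labels = np.asarray(pseudo_labels)
    keep = labels >= 0
    z, labels = z[keep], labels[keep]
    z = z / np.linalg.norm(z, axis=1, keepdims=True)

    clusters = np.unique(labels)                                     # sorted cluster ids
    protos = np.stack([z[labels == c].mean(axis=0) for c in clusters])
    protos = protos / np.linalg.norm(protos, axis=1, keepdims=True)  # "skeleton prototypes"

    logits = z @ protos.T / temperature                  # (N, K) scaled cosine similarities
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    own = np.searchsorted(clusters, labels)              # column of each sample's prototype
    return float(-log_prob[np.arange(len(labels)), own].mean())


if __name__ == "__main__":
    feats = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
    print(prototype_contrastive_loss(feats, [0, 0, 1, 1]))  # coherent clusters: small loss
    print(prototype_contrastive_loss(feats, [0, 1, 0, 1]))  # mixed clusters: larger loss
```

Minimizing this loss raises each sample's similarity to its own prototype relative to all other prototypes, which corresponds to the intra-prototype similarity and inter-prototype dissimilarity discussed in the mACT and mRCL analysis.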

Our study has two limitations. First, the 3D skeletons used in this work are collected with relatively high precision and little noise, whereas a more general scenario for skeleton collection and person re-ID (e.g., skeletons extracted from RGB videos in outdoor scenes) has not been thoroughly studied. Second, the evaluation datasets are of relatively limited scale compared with prevalent RGB-based re-ID datasets such as MSMT17, since large-scale 3D skeleton-based re-ID benchmarks with more pedestrians and scenarios are still unavailable. To facilitate skeleton-based research, we will build and release our own skeleton-based re-ID datasets in the future.

We believe that this work makes progress towards lightweight, general, and robust person re-ID research: for the first time, we exploit small-scale unlabeled 3D skeleton data to learn discriminative and generalizable pedestrian representations for unsupervised, multi-view, and generalized person re-ID. Several directions merit further exploration. More efficient clustering schemes, such as memory-based clustering, could be devised to improve the stability and consistency of skeleton representation learning. Combining finer-grained contrastive learning could help bootstrap clustering, while skeleton augmentation strategies could sample more instances to enhance skeleton prototype contrastive learning. Another important direction is to explore graph-based self-supervised pretext tasks that capture richer high-level graph semantics from unlabeled graph representations. Our model could also be transferred to other skeleton-based tasks, such as 3D human action recognition, and we expect it to synergize with other modalities in more computer vision tasks.

7 Ethical Statements

Person re-ID is a crucial task of significant value to both academia and industry. Nevertheless, like face recognition, it could be a controversial technology, since its improper application may threaten public privacy and social security. In this context, we emphasize that all datasets in our experiments are either publicly available (IAS-Lab, BIWI, KGBD) or officially authorized (CASIA-B, KS20). The official agents of these datasets have confirmed and guaranteed that all data were collected, processed, released, and used with the consent of the subjects. To protect privacy, all individuals in the datasets are anonymized with simple identity numbers. In addition, our approach and models must be used only for research purposes.

References

- [1] A. Nambiar, A. Bernardino, and J. C. Nascimento, “Gait-based person re-identification: A survey,” ACM Computing Surveys, vol. 52, no. 2, p. 33, 2019.

- [2] W.-S. Zheng, S. Gong, and T. Xiang, “Towards open-world person re-identification by one-shot group-based verification,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 38, no. 3, pp. 591–606, 2015.

- [3] D. Baltieri, R. Vezzani, and R. Cucchiara, “SARC3D: A new 3D body model for people tracking and re-identification,” in International Conference on Image Analysis and Processing. Springer, 2011, pp. 197–206.

- [4] R. Vezzani, D. Baltieri, and R. Cucchiara, “People reidentification in surveillance and forensics: A survey,” ACM Computing Surveys, vol. 46, no. 2, p. 29, 2013.

- [5] C. Su, F. Yang, S. Zhang, Q. Tian, L. S. Davis, and W. Gao, “Multi-task learning with low rank attribute embedding for multi-camera person re-identification,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 40, no. 5, pp. 1167–1181, 2018.

- [6] Y.-C. Chen, X. Zhu, W.-S. Zheng, and J.-H. Lai, “Person re-identification by camera correlation aware feature augmentation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 40, no. 2, pp. 392–408, 2018.

- [7] M. Li, X. Zhu, and S. Gong, “Unsupervised tracklet person re-identification,” IEEE Transactions on Pattern Analysis and Machine Intelligence, pp. 1–1, 2019.

- [8] X. Qian, Y. Fu, T. Xiang, Y.-G. Jiang, and X. Xue, “Leader-based multi-scale attention deep architecture for person re-identification,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2019.

- [9] H.-X. Yu, A. Wu, and W.-S. Zheng, “Unsupervised person re-identification by deep asymmetric metric embedding,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 42, no. 4, pp. 956–973, 2020.

- [10] L. Wang, T. Tan, H. Ning, and W. Hu, “Silhouette analysis-based gait recognition for human identification,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 25, no. 12, pp. 1505–1518, 2003.

- [11] C. Wang, J. Zhang, L. Wang, J. Pu, and X. Yuan, “Human identification using temporal information preserving gait template,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 34, no. 11, pp. 2164–2176, 2011.

- [12] T. Wang, S. Gong, X. Zhu, and S. Wang, “Person re-identification by discriminative selection in video ranking,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 38, no. 12, pp. 2501–2514, 2016.

- [13] R. Zhao, W. Oyang, and X. Wang, “Person re-identification by saliency learning,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 39, no. 2, pp. 356–370, 2017.

- [14] Z. Zhang, C. Lan, W. Zeng, and Z. Chen, “Densely semantically aligned person re-identification,” in CVPR, 2019, pp. 667–676.

- [15] N. Karianakis, Z. Liu, Y. Chen, and S. Soatto, “Reinforced temporal attention and split-rate transfer for depth-based person re-identification,” in ECCV, 2018, pp. 715–733.

- [16] Y. Ge, F. Zhu, D. Chen, R. Zhao, and H. Li, “Self-paced contrastive learning with hybrid memory for domain adaptive object re-ID,” in NeurIPS, 2020.

- [17] I. B. Barbosa, M. Cristani, A. Del Bue, L. Bazzani, and V. Murino, “Re-identification with RGB-D sensors,” in ECCV. Springer, 2012, pp. 433–442.

- [18] V. O. Andersson and R. M. Araujo, “Person identification using anthropometric and gait data from Kinect sensor,” in AAAI, 2015.

- [19] H. Rao, S. Wang, X. Hu, M. Tan, H. Da, J. Cheng, and B. Hu, “Self-supervised gait encoding with locality-aware attention for person re-identification,” in IJCAI, vol. 1, 2020, pp. 898–905.

- [20] H. Rao, S. Wang, X. Hu, M. Tan, Y. Guo, J. Cheng, X. Liu, and B. Hu, “A self-supervised gait encoding approach with locality-awareness for 3D skeleton based person re-identification,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021.

- [21] H. Rao, S. Xu, X. Hu, J. Cheng, and B. Hu, “Multi-level graph encoding with structural-collaborative relation learning for skeleton-based person re-identification,” in IJCAI, 2021.

- [22] H. Rao, X. Hu, J. Cheng, and B. Hu, “SM-SGE: A self-supervised multi-scale skeleton graph encoding framework for person re-identification,” in Proceedings of the 29th ACM International Conference on Multimedia, 2021.

- [23] F. Han, B. Reily, W. Hoff, and H. Zhang, “Space-time representation of people based on 3D skeletal data: A review,” Computer Vision and Image Understanding, vol. 158, pp. 85–105, 2017.

- [24] R. Tanawongsuwan and A. Bobick, “Gait recognition from time-normalized joint-angle trajectories in the walking plane,” in CVPR, vol. 2, Dec 2001, pp. II–II.

- [25] R. Liao, S. Yu, W. An, and Y. Huang, “A model-based gait recognition method with body pose and human prior knowledge,” Pattern Recognition, vol. 98, p. 107069, 2020.

- [26] M. Munaro, A. Fossati, A. Basso, E. Menegatti, and L. Van Gool, “One-shot person re-identification with a consumer depth camera,” in Person Re-Identification. Springer, 2014, pp. 161–181.

- [27] J.-H. Yoo, M. S. Nixon, and C. J. Harris, “Extracting gait signatures based on anatomical knowledge,” in Proceedings of BMVA Symposium on Advancing Biometric Technologies. Citeseer, 2002, pp. 596–606.