Singular spectrum analysis of time series data from low frequency radiometers, with an application to SITARA data

Abstract

Understanding the temporal characteristics of data from low frequency radio telescopes is of importance in devising suitable calibration strategies. Application of time series analysis techniques to data from radio telescopes can reveal a wealth of information that can aid in calibration. In this paper, we investigate singular spectrum analysis (SSA) as an analysis tool for radio data. We show the intimate connection between SSA and Fourier techniques. We develop the relevant mathematics starting with an idealised periodic dataset and proceeding to include various non-ideal behaviours. We propose a novel technique to obtain long-term gain changes in data, leveraging the periodicity arising from sky drift through the antenna beams. We also simulate several plausible scenarios and apply the techniques to a 30-day time series data collected during June 2021 from SITARA - a short-spacing two element interferometer for global 21–cm detection. Applying the techniques to real data, we find that the first reconstructed component - the trend - has a strong anti-correlation with the local temperature suggesting temperature fluctuations as the most likely origin for the observed variations in the data. We also study the limitations of the calibration in the presence of diurnal gain variations and find that such variations are the likely impediment to calibrating SITARA data with SSA.

keywords:

Dark ages, reionisation, first stars – methods: data analysis1 Introduction

There is a renewed interest in low-frequency (< 300 MHz) radio astronomy, due to the multitude of science cases that benefit from low-frequency observations. Several low-frequency radio telescopes such as LOFAR (van Haarlem et al., 2013), MWA (Tingay et al., 2013), HERA (DeBoer et al., 2017) and LWA(Hallinan et al., 2015) have been constructed and are currently observing, with the low frequency Square Kilometer Array, SKA-low (Dewdney et al., 2009) in construction phase. Some of the key science goals for these metre wavelengths radio telescopes are cosmic dawn and epoch of reionisation (CD/EoR)(Trott, 2017), solar and heliospheric science (Nindos et al., 2019) and cosmic magnetism (Gaensler et al., 2004). Modern low frequency radio telescopes differ from their higher frequency counterparts in that they consist of aperture arrays constructed from large numbers of antennas, that are often beamformed in the analog or digital domain and correlated against each other to observe the radio sky. The calibration requirements, calibration models and the complexity are different to higher frequency (cm wavelengths and above) radio telescopes.

For dish-based interferometers with a small number of antennas, single dish telescopes and specialised low frequency radiometers, calibration techniques such as Dicke switching or noise injection can be employed. Specifically, global 21–cm experiments constitute a group of low-frequency radiometers requiring precise and accurate calibration of the systems to limit the systematics to less than one part in a million. Most of the global 21–cm experiments use single antennas as sensors and employ Dicke-switching ambient temperature thermal loads and noise diodes (see Rogers & Bowman (2012); Nambissan T. et al. (2021)) or noise injection (Singh et al., 2018) for bandpass calibration. The same calibrators are used to compensate for receiver gain drifts over extended periods of time. However, in order to maintain a stable excess noise ratio (ENR), the calibrators have to be maintained in temperature controlled environments, and the noise diodes themselves require periodic re-calibration with laboratory standards to mitigate drift and ageing, especially if they are to be used in applications demanding high accuracy such as cosmology. Besides, with future telescopes such as the SKA-low potentially employing thousands of low cost active antennas, calibration using dedicated noise diodes at each antenna becomes impossible. The aperture array nature of these instruments, i.e. large numbers of stationary antennas of simple construction, often with integrated low-cost low noise amplifiers (LNAs), necessitates calibration based on sky models. Therefore, development of novel mathematical tools to explore the long-term stability of these low-frequency telescopes and radiometers, and to determine the limits of calibration and data integration, become essential.

In this paper, we explore the potential of one such tool in analysing time series data from low frequency radiometers, and an application of it to real valued time series data. The instrument under study is SITARA, a broadband two element interferometer targeting global 21–cm detection employing short spacing interferometry. SITARA consists of two MWA style active antennas kept 1 m apart over a large ground-plane (35 m) and a correlator that records auto-correlations and cross-correlations with spectral resolution of about 61 kHz, across 0–250 MHz. The usable band is limited to 70–200 MHz, with reduced sensitivity in the 50–70 MHz band. SITARA is deployed within the radio quiet zone of the Murchison Radioastronomy Observatory (MRO) in Western Australia, which is also the site for future SKA-low. Data are collected round the clock and timestamped data are written out in miriad format (Sault et al., 1995). SITARA has been conceived as the first prototype to evolve tools and techniques for short-spacing interferometry; further details about SITARA can be found in Thekkeppattu et al. (2022). Single frequency data from SITARA auto-correlations can essentially be treated as radiometric data, with SITARA behaving as an uncalibrated total power radiometer.

2 Motivation

As an example to motivate this study, we consider real valued auto-correlations from SITARA data. A plot of single frequency channel time series data from SITARA auto-correlations, at a frequency of 111 MHz for the month of June 2021, is shown in Fig.1. The frequency of 111 MHz has relatively low radio frequency interference (RFI) and is also a frequency where mutual coupling between antennas does not cause large beam shifts (see Thekkeppattu et al. (2022) for details). The data have a periodic nature arising from the drifting of various regions of the radio sky through the antenna beams as the Earth rotates. If the radio telescope was perfectly calibrated, and there was no RFI, Fig.1 would have shown a perfectly repeating pattern.

As can be seen, this is not the case. The data have some multiplicative gain variations as well as some additive RFI. Therefore, our aim is to decompose this time series into some components that can help us understand it better. A plausible decomposition is the Fourier transform, i.e. into sines and cosines. However, radiometric data can have aperiodic patterns such as a drift which gets distributed to various modes in a Fourier analysis, thus making an interpretation difficult. Therefore, it is desirable to adopt an analysis which selects a suitable basis from the data; in other words a data driven technique.

Singular spectrum analysis (SSA) is a set of data driven tools that can decompose a time series into elementary patterns such as trend and oscillatory components. The raw data from a low frequency radio telescope with a fixed pointing are expected to have oscillatory components with a period corresponding to a sidereal day, while owing to environmental changes trend-like patterns (drift) are also expected. This makes SSA an ideal tool for analysis of such data.

Singular spectrum analysis (SSA) techniques appear in the analysis of dynamical systems (Broomhead & King, 1986; Vautard & Ghil, 1989) and SSA has been a popular tool for time series analysis in a variety of fields such as meteorology and climate science (Ghil et al., 2002) and geophysics (Dokht et al., 2016). However the application of SSA in radio astronomy has been limited (Donskikh et al., 2016; Gürel et al., 2018). Indeed, to the best of our knowledge, SSA has not been applied for time series analysis of radiometric time series data. This paper aims to detail the necessary mathematical tools for SSA of time series data from a low frequency radiometer and demonstrate them with SITARA data.

2.1 Notation and mathematical preliminaries

In this section, we describe the notation employed and define certain matrices that are useful for the subsequent analysis. Many of these definitions can be found in Davis (1979) as well as Olson et al. (2014). We use bold capital Roman letters such as to denote matrices, and small Roman letters such as are used for indexing. We use zero-based numbering such that the indices start at . Small Roman letters with an arrow such as denote vectors. Integers are denoted by capital Roman letters such as . We now proceed to define some basic matrices and associated linear algebra.

2.1.1 Circulant and anti-circulant matrices

An square matrix is circulant if each row of the matrix is a right shifted version of the previous row as shown in Eq.1.

| (1) |

An square matrix is anti-circulant if each row of the matrix is a left shifted version of the previous row as shown in Eq.2.

| (2) |

Both the circulant and anti-circulant matrices can be obtained from a sequence and are completely specified by that sequence. While useful in a wide variety of analyses, circulant matrices are not of much utility in this paper. We will be dealing with anti-circulant matrices instead. A matrix can also be interpreted to have been broken into blocks or submatrices which are themselves matrices.

2.1.2 Matrix products

The notations employed in this paper for the various matrix products are listed below.

-

1.

Regular matrix-matrix multiplication is denoted with no specific operator.

-

2.

denotes the outer product of two vectors that results in a matrix.

-

3.

represents an element-by-element multiplication of two matrices, known as the Hadamard product.

3 Basics of singular spectrum analysis

Though the theory of SSA is covered in detail in references such as Golyandina et al. (2001), we provide a basic description of the steps involved for completeness. In this paper we follow the SSA approach known as the Broomhead-King (BK) version, with the alternate being the Vautard-Ghil (VG) version. The VG version is only suitable for the analysis of a stationary time series and therefore is not discussed here. Following Golyandina & Korobeynikov (2014), the four major steps in BK SSA are given below.

-

1.

Convert the 1-D time series into a 2-D matrix called a trajectory matrix. This step is called embedding in time series analysis.

-

2.

Decompose the trajectory matrix with singular value decomposition (SVD). The result consists of a set of left and right singular vectors and associated singular values.

-

3.

Reconstruct the constituent components of the trajectory matrix with selected singular vectors and singular values.

-

4.

Reconstruct the time series with these components by performing diagonal averaging.

In the embedding step, the trajectory matrix is constructed with columns consisting of elements from sliding a window of length across the original time series. The window length is chosen in such a manner that each column of the resulting matrix consists of one cycle of the data. As an example, for drift sky data from a ground based radiometer, as given in Sec.4.1.1 this corresponds to one sidereal day . For each sliding, the elements inside the window are made into one column of the trajectory matrix, yielding a matrix with rows and columns. Consider a time series . The embedding step converts this time series of length into an matrix that has the elements of the time series as given in Eq.3.

| (3) |

From Eq.3, it can be seen that the anti-diagonals of a trajectory matrix contain similar terms, and therefore it is like a Hankel matrix, although it is not square in general. It may be noted that some implementations pad the original time series with zeros to obtain , though we do not employ this.

In the second step, the trajectory matrix is decomposed via SVD to yield left and right singular vectors as well as the corresponding singular values. This can be written as

| (4) |

The SVD operation decomposes the matrix into three matrices and ; where is an unitary matrix, is a unitary matrix and is an diagonal matrix consisting of the singular values. Since we consider only real valued matrices in this paper, the matrices and are real orthogonal matrices. Each singular value and the corresponding singular vectors and form an eigentriple . The decomposition step can also be written as

| (5) |

where is the vector outer product of two vectors yielding a matrix.

These eigentriples can be grouped and used to reconstruct the various components of the time series. An inspection of the singular values can give an insight into the complexity of the data and the number of eigentriples to consider, and this is also related to the rank of the trajectory matrix. It may be noted that calculation of SVD of a data matrix and subsequent reconstruction is identical to principal component analysis or PCA (Jolliffe, 1986). Seen in this light, SSA can be treated as PCA of the trajectory matrix formed out of a time series. However, PCA does not involve embedding or trajectory matrices, and therefore the interpretation of the PCA results is different to that of SSA.

The next step is to reconstruct the trajectory matrix with the selected eigentriples. Once this is accomplished, a reconstructed time series is obtained by performing an anti-diagonal averaging over the reconstructed matrix - this step is called “diagonal averaging” (Golyandina & Korobeynikov, 2014) 111The procedure is technically anti-diagonal averaging, however we use the term “diagonal averaging” to be consistent with the SSA literature.. The diagonal averaging step applied to a trajectory matrix yields a series as shown in Eq.6.

| (6) |

where and is the number of elements in the set . This step can be treated as the reverse of the embedding step, conversion of an anti-diagonal, Hankel-like matrix back into a time series. To gain an appreciation of this step, it is useful to take a closer look at the structure of a trajectory matrix as given in Eq.3. Recalling that this matrix is formed by sliding a window and converting the data into columns of a matrix, it can be seen that the anti-diagonals consist of the same value from the time series. Therefore to convert a trajectory matrix back into a time series, all that one has to do is a computation of the mean of the redundant information in each anti-diagonal and form a time series out of them. This operation is what Eq.6 does. It may also be noted that one can even select the first row and the last column of a trajectory matrix to reconstruct a time series, however it is preferable to obtain a mean of the redundant information in the anti-diagonal elements. When applied to each in Eq.5, diagonal averaging results in reconstructed series. However, one can also choose to form from groups of eigentriples, and then obtain reconstructed series. For example, one may pair the eigentriples, then each eigentriple used to form a matrix (by forming outer products and scaling with the corresponding singular value) and a pair of matrices added together to form one . Indeed, this grouping approach is what we employ in this paper. Therefore, the result is the decomposition of the original time series into a sum of reconstructed series as shown in Eq.7.

| (7) |

where is the reconstructed series obtained from and depends on the grouping of eigentriples. For elementary grouping, as there can only be a maximum of singular values for a matrix of dimensions . Depending on the selection of eigentriples, the reconstructed time series reveals the corresponding aspect of the data. For example, the first eigentriple contains information on the trend, while the subsequent ones are associated with oscillatory patterns. Higher eigentriples are typically associated with noise.

4 SSA of periodic time series

In this section, we obtain the mathematical form of SSA when applied to a time series containing periodic data. We first consider the case where we have an ideal periodic time series. We find that in this case, the singular vectors obtained are sinusoidal in nature. The case where a periodic series is corrupted by multiplicative element is then considered. We also perform simulations to validate the algebra.

4.1 SSA applied to an ideal periodic time series

Consider a time series of length that is strictly periodic with a period given by .

| (8) |

We also assume that the length of the time series obeys the relation , where is an integer. Forming a trajectory matrix out of this sequence gives the following matrix.

| (9) |

As is an integer multiple of , the trajectory matrix can be interpreted as a block matrix, and partitioned into square submatrices as given in Eq.10, where is an anti-circulant matrix of dimensions .

| (10) | ||||

| (11) |

Before proceeding further, some observations can be made.

-

1.

We find that , as there can only be a maximum of linearly independent columns in . Consequently there can be a maximum of non-zero singular values for .

-

2.

The anti-circulant matrix form given in Eq.10 is real symmetric. Therefore, it has an eigendecomposition given as , where is an orthonormal matrix of eigenvectors and is an matrix of the corresponding eigenvalues.

This enables us to write the trajectory matrix as a block matrix . Without going through the pedagogical details, we state that can be decomposed into three matrices as given below.

| (12) | ||||

| (13) |

where, in the last step we recall the SVD of to facilitate a direct comparison.

We now attempt to find the nature of this decomposition - specifically what one can expect from the singular vectors if the time series is periodic in nature. From Eq.12, it can be noted that the singular values are a scaled version of the eigenvalues of anti-circulant matrix . Inspecting the block matrix representation in Eq.12, it can also be seen that the matrix that forms to the left and right singular vectors is the matrix of eigenvectors . Therefore, all that we need to know is the nature of . A calculation of this is provided in Appendix.A, where it is shown that consists of sinusoidal eigenvectors if the data are periodic. Therefore, the left singular vectors - which are exactly the eigenvectors contained in the matrix - are all sinusoidal. Besides, the vectors in are all periodic in and thus when arranged as blocks in the right singular vector matrix, they form continuous sinusoids. Thus, the equivalence of decompositions in Eq.12 shows that when SSA is applied to a strictly periodic sequence, the singular vectors obtained are sinusoidal.

In Appendix.A, it is also shown that the eigendecomposition of anti-circulant matrices such as has a deep relation to discrete Fourier transforms (DFT). Indeed, when applied to an ideal periodic series, SSA results are directly comparable to DFT of one cycle of periodic data; this is also demonstrated with simulations in Sec.4.1.1. However, if the data have aperiodic structures, DFT of the entire span of data is not a suitable choice as aperiodic structures are not well represented in a sine/cosine Fourier basis. In such a scenario, SSA is better suited to reveal aperiodic structures in the data. Therefore, regardless of the nature of data, SSA can be applied to them and if the data are periodic, SSA reduces to a form of Fourier analysis.

Even though the decomposition given may be treated as an SVD of the trajectory matrix, some caution has to be exercised. Since the singular vector matrices have to be unitary, the right singular matrix gets divided by a scaling factor of while the singular values get multiplied the same factor. Also, the left singular matrix in SVD is unitary, while the eigenvector form given in Appendix.A has unit amplitude. The corresponding scaling applies to the singular value, however it is inconsequential when the eigendecomposition and SVD are computed with numerical packages. Moreover, eigenvalues can be positive and negative, and as given in Appendix.A, they occur in positive and negative pairs in this context. However, singular values are always non-negative and therefore the signs of the eigenvalues get applied to the singular vectors when equated with the SVD given in Eq.12. Also, we assume that both the singular values and eigenvalues (and corresponding vectors) have been ordered in the same fashion - typically in descending magnitude.

4.1.1 Simulations

To validate the above calculations, we analyse a simulated periodic time series. Since our aim is to gain a better understanding of SSA as applied to radiometric time series data, the simulations are beam multiplied sky temperature as would be seen by a radiometer. The simulation methodology used to obtain the time series is described in Thekkeppattu et al. (2022), which we have extended to yield a time series spanning 30 days. For this, we make an important assumption that the radio sky is static and therefore, the true sky temperature is exactly the same across all days for each local sidereal time. The simulations are for auto-correlations at a frequency of 111 MHz with a time cadence of 15 minutes of sidereal time. Since the sky has a periodicity of one sidereal day, it is important that the cadence is chosen in sidereal time units.

The simulation yields data points which are then converted into a trajectory matrix. Key to embedding data is selection of an appropriate window length. Typically, a window length is chosen such that it is divisible by the fundamental of the known periodicity. As we have prior information that data have a periodicity corresponding to a sidereal day, a choice of the window length is the number of samples that correspond to one sidereal day. Therefore a suitable embedding dimension is ; as it corresponds to one sidereal day. This gives a matrix of dimensions which can be partitioned into block matrices. The simulated time series and a representation of the trajectory matrix are shown in Fig.2.

The trajectory matrix is then decomposed with SVD. The results from the SVD are shown in Fig.3. Independently, an submatrix of the trajectory matrix (with ) is eigendecomposed. The eigenspectrum of this anti-circulant matrix is also given in Fig.3. Moreover, the DFT of the underlying periodic sequence of length is also computed and plotted in the same figure with the DFT values sorted in descending order. As the sequence is real, the DFT spectrum is Hermitian and the sorted values appear twice in the spectrum.

Several observations can be made from Fig.3. The spectrum of singular values matches the spectrum of eigenvalue magnitudes exactly, except for a scaling (which is ). The spectrum of eigenvalue magnitudes matches exactly the DFT magnitude spectrum, thereby validating the results from Appendix.A. The same scaling is also evident in the amplitudes of the right singular vectors. We also find that the singular vectors, except the component, are purely sinusoidal and occur in sine-cosine pairs as the calculations showed. We have also verified that the vector pairs are indeed orthogonal by calculating the inner product between such pairs. The singular vectors are also periodic in while the component is essentially the DC component of the data, similar to the zeroth component in Fourier transforms.

In Fig.4, we compare the first pair of orthogonal left singular vectors with the corresponding pair of the eigenvectors. We find that the eigenvectors are exactly the same as the singular vectors, except for a sign reversal in one of the vectors in the pair. It has been verified that the corresponding eigenvalue carries a negative sign, thus it is inconsequential to the overall analysis, and we can safely consider the equivalence between SVD and eigendecomposition in Eq.12 to be valid.

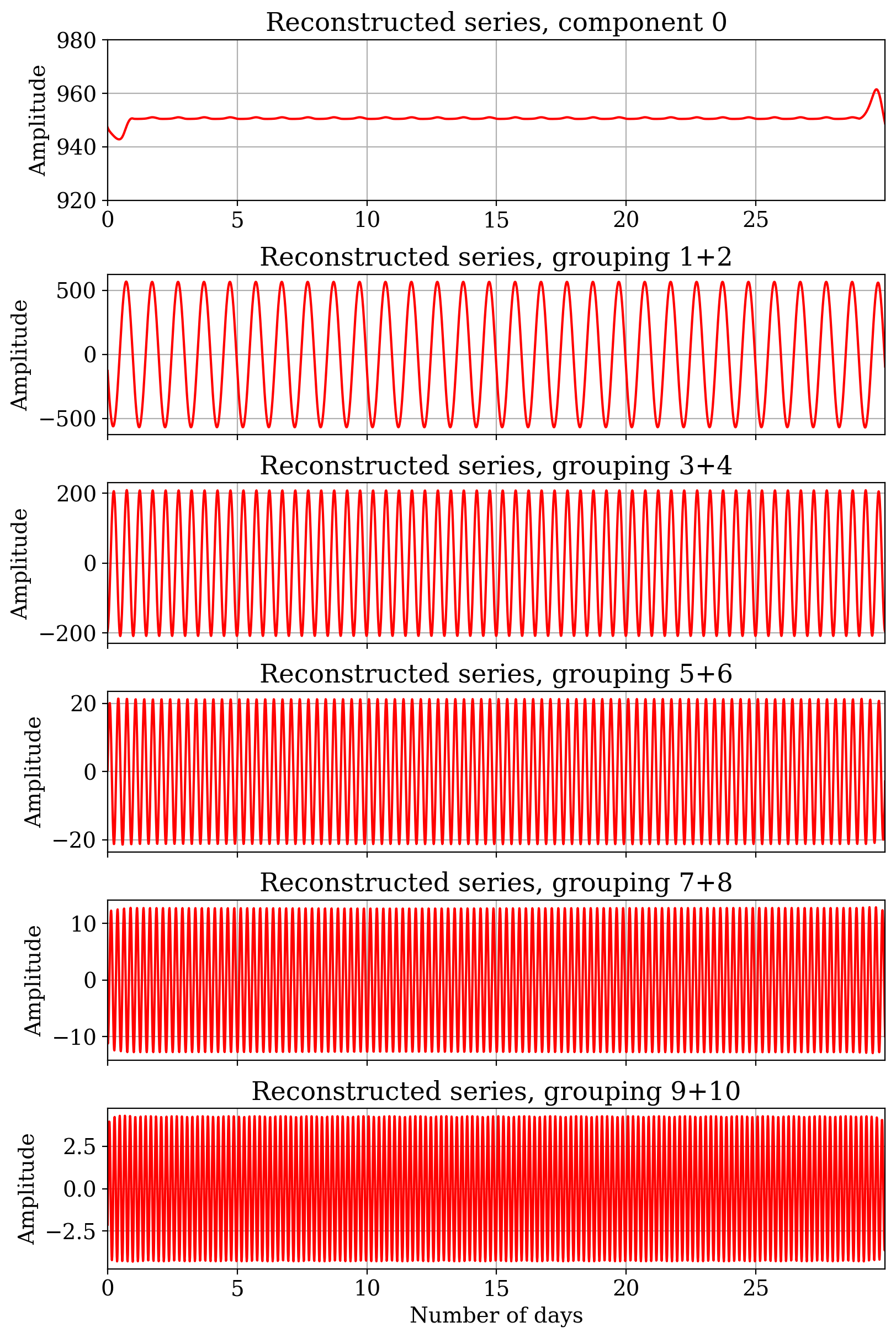

Thus, we find that the singular vectors obtained from SSA of a periodic sequence are purely sinusoidal with them occurring in orthogonal pairs. It may be also noted that while such orthogonal features have been noticed in the SSA literature (see for e.g. Ghil et al. (2002)), we mathematically demonstrated using simple arguments why such orthogonal sinusoidal pairs are to be expected in the SSA of an ideal time series. We now inspect the reconstructed series given in Fig.5. As we know that the singular vectors and corresponding values occur in pairs, we group the eigentriples into pairs (except the component) and apply the diagonal averaging. As can be seen, the reconstructed series are also purely sinusoidal since the vectors are sinusoidal. Another important observation with Fig.5 is the component, which is often called “trend” in SSA literature. For radiometric data this term corresponds to the sky-averaged component of the radio data and is the relevant observable for global 21–cm research. Since the origin of the reconstructed component can be traced back to eigenvector of the matrix , we can see that the corresponding Fourier component has a frequency of zero. In other words for an ideal periodic time series, the component in SSA is related to the zero frequency or DC term in the Fourier spectrum, such that its singular value is the same as the Fourier DC term.

In the above exercise, it is essential that the embedding dimension (window length) corresponds to exactly one sidereal day. If not, the decomposition will not lead to sinusoidal patterns of appropriate periodicity, and the various relations that we obtained between SVD, eigendecomposition and Fourier analysis become invalid. With this important result, we now proceed to introduce some nonidealities into the time series.

4.2 SSA applied to periodic time series with time-varying gains

The calculations and simulations so far assume a case where the radiometer system has been fully calibrated to yield data calibrated to a reference plane outside the Earth’s ionosphere. Observational radiometric data have multiple nonidealties, the dominant ones are additive components such as receiver noise temperatures and mutliplicative factors that are often called gains. Both of them can be time-varying and therefore are required to be known to correct the data. We now incorporate them into our model.

Assuming a simple system model for SITARA data (see Sec. 5.2 of Thekkeppattu et al. (2022)), the trajectory matrix of the measured data can be expressed as a Hadamard product, given in Eq.14.

| (14) |

where is the sky temperature trajectory matrix, is the receiver noise temperature trajectory matrix and is the trajectory matrix of time-varying system gains. Since the low frequency radio sky is bright, the dominant time-varying component in the data is due to sky temperature multiplied with time-varying system gains. Therefore, we can apply a simplifying assumption that the receiver noise temperature is constant with time and absorb it into the “ideal” sky matrix, and the sum can be written as . Thus we write

| (15) |

While Eq.14 and 15 may be written without resorting to a matrix formulation, expressing them with trajectory matrices enables SSA.

Based on the summation of from Eq.5 and Eq.15, the following relations can be derived.

| (16) | ||||

where we have used the distributive property of Hadamard products. Thus we have,

| (17) |

Though Eq.17 appears trivial, it reveals a useful aspect of the decomposition. Applying diagonal averaging to each leads to gain multiplied reconstructed series, as the Hadamard product is an element-by-element multiplication. However, we already know the “true” reconstructed series to be purely sinusoidal from Sec.4. The multiplication of a pure sinusoid waveform by a signal of much lower frequency results in amplitude modulation of the sinusoid, a process that is called amplitude modulation (AM) in communication systems (Haykin & Moher, 2008). Therefore, we may treat the gain multiplied reconstructed series as a form of AM signals with the sinusoids acting analogous to “carriers”; and using AM demodulation techniques to extract the temporal variations in gains.

Before proceeding further, it is instructive to verify the above calculations with simulations. For this, we begin with the basic time series from Sec.4. To this series, we add a constant of as the receiver noise temperature and simulated radiometric noise (integration time of 15 minutes and bandwidth of 61 kHz) for the overall system temperature. The gain profile is modelled as a random-walk, which is generated as a cumulative sum of Gaussian white noise. The resulting profile is subsequently smoothed with box-car averaging to suppress variations of time cadence less than one sidereal day. The gains are also normalised to avoid negative excursions. The time series is then multiplied with the simulated gains and the resulting series and the associated trajectory matrix are shown in Fig.6. It may be noted that the dimensions of the matrices and time series have all been kept the same.

The trajectory matrix is then decomposed with SVD, and the eigentriples grouped in the same manner as in Sec.4 and diagonally averaged. It is easy to notice that the resulting reconstructed series shown in Fig.7 are indeed amplitude modulated sinusoids.

Several algorithms exist to demodulate such AM signals to obtain their envelopes. Here we use an algorithm that is mathematically simple to interpret. The first step in this algorithm is converting a real valued signal into an analytic signal, which can be accomplished with Hilbert transforms. Taking the magnitude of this analytic signal yields the modulation envelope. We apply this procedure to the series given in Fig.7 . The resulting gain profiles are plotted in Fig.8, in which the curves are also normalised according to the method described in Sec.4.3.

Fig.8 validates the interpretation of Eq.17 that the reconstructed series have identical gain templates that can be recovered with amplitude demodulation. Indeed, a major outcome of this exercise is that the gain template for the DC component is identical to those of the periodic components. Therefore the time-variations in the DC term may be corrected for by using the gain templates from periodic components. This leads us to a novel calibration strategy for global 21–cm radiometers that can correct long-term drift and related systematics.

4.3 On the use of SSA to aid calibration

The recovered gains require normalisation to enable a comparison with the injected gains and make them useful for calibration. We begin with Eq.17 and incorporate Eq.5 as shown in Eq.18, where we also include the eigentriple grouping. The indices have also been modified for convenience.

| (18) | ||||

where we used the property that singular values are identical for the eigentriples in a grouped pair. The vectors and are from unitary matrices and therefore their individual inner products equal to unity. However, for subsequent analysis, these vectors need to be normalised to have unity amplitude when diagonally averaged after grouping. The normalisation can be achieved with the following operations, where the sinusoidal terms in the brackets have been normalised to have unity amplitude.

| (19) | ||||

as the lengths of the vectors and are and respectively. The grouped and diagonally averaged reconstructed series is given as

| (20) |

where is the gain series and is the “carrier” sinusoid. The normalisation in Eq.19 ensures unity amplitude for . Upon demodulation of a series , the carrier is removed and a modulation envelope is obtained as

| (21) |

From these envelopes, gain templates can be recovered as

| (22) |

For Eq.22 to be useful for calibration, the only auxiliary information required is the singular value . This is the singular value of the component with the same periodicity of that we expect from the true sky, and can be obtained from the SSA of the simulated ideal sky time series from Sec.4. Using those singular values, we obtain the recovered and appropriately normalised gains that are plotted in Fig.8.

We are now in a position to apply those gains to the reconstructed series to achieve calibration and compare the calibration with the expected levels. The results are given in Fig.9.

We find that the mean-sky component has been calibrated solely using the periodic component(s) of the sky with the aid of SSA. It has to be noted that the prescription provided here to calibrate the mean-sky relies only on the singular values of the simulated periodic components. Even without using the singular values, and only using the data, it is possible to achieve relative gain calibration, while applying gains with appropriate singular values to the mean-sky component establishes its proper brightness temperature scale.

Let us now try to obtain an intuitive appreciation of this calibration. The trend component obtained from SSA is essentially a box-car averaging of time series data. If the window used for averaging encompasses exactly one sidereal day, the periodic variations caused by the Galaxy transiting through the beam are averaged out. If the system were perfect the result would be a constant temperature, which is the sum of mean-sky and receiver noise temperatures. Thus the trend that we observe in the non-ideal system may be modelled by a constant value multiplied with the time-varying gains.

The gain variations that we have studied so far have smooth evolution over time with no periodicity. However, for real radio telescope systems that do not have temperature regulation, gain variations with local temperature are expected - details given in Sec.5. Indeed, the rising and setting of the Sun can heat and cool the components in the analog signal chain and induce diurnal variations in the gain that may be correlated with the sky-drift. Yet another potential origin for diurnal patterns in gains is the ionosphere. Therefore it is imperative to study such a scenario, which we perform next.

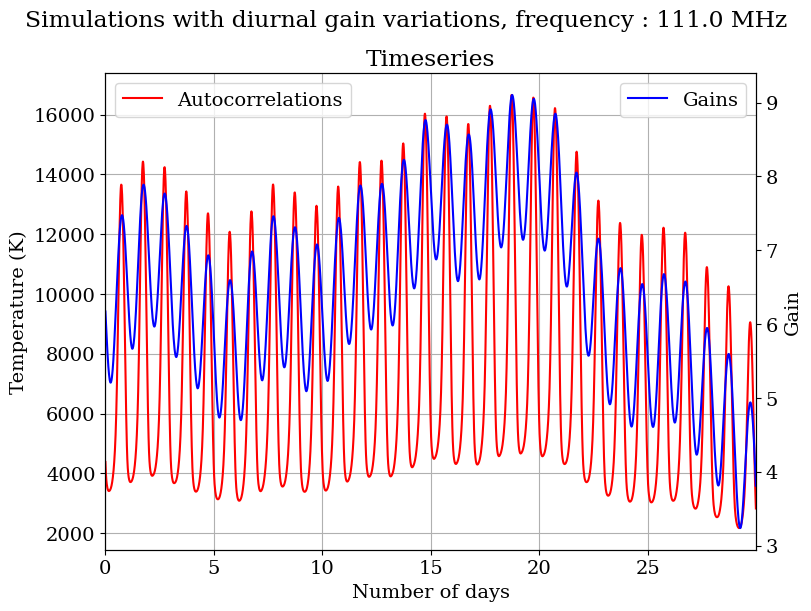

4.4 SSA with diurnal gain variations

To the gains simulated in the previous section, we add a sinusoidal diurnal gain component. For a time series that spans a month, the diurnal gain variations are expected to show a strong correlation with Galaxy transit. For longer time series, the difference between sidereal time and civil time reduces this correlation. The modified gains and the resulting time series are shown in Fig.10.

We perform SSA in the exact same manner as in the previous case, and recover normalised gains, as shown in Fig.11.

It is interesting to note that the correlation between gains and sky patterns reduces the separability of the two, and the recovered gains from the reconstructed series differ from one another. We attempt a calibration of the mean component with these gains and the results are given in Fig.12.

The differences in the recovered gains in Fig.11 as well as the calibrated temperatures in Fig.12 demonstrate a fundamental limitation of the SSA technique in calibrating data when the gain variations are correlated with sky patterns. Lacking auxiliary information related to the cause of such gain variations, for example temperature measurements, \colorblack the calibration technique fails and yields erroneous results as demonstrated. On the other hand, a disagreement between the recovered gains can be used as a practical indicator of gain variations that have frequencies coinciding with the periodicity induced by sky drift, thus pointing to a necessity for subsidiary information. In other words, differences in recovered gains between the groupings indicate presence of correlations between the gains and sky drift. It may be also noted that no a priori information is required to infer such a correlation. Having demonstrated the various cases of SSA, we now apply these techniques to data from SITARA observations.

5 SSA applied to SITARA data

5.1 Data preparation

We use a time series of SITARA spectral data from the concatenated 30 days of June 2021 data. The individual data files in miriad format are converted to hdf5 format and concatenated using custom tools written in python. From the concatenated dataset, auto-correlation data for a frequency of 111 MHz are extracted, as it is a frequency with relatively low RFI occupancy. The major source of RFI observed during this period was Solar radio bursts that are transient in nature. This raw data time series as a function of Julian Date (JD) is shown in Fig.1.

The raw SITARA data spanning a month have about 749,000 points, which makes calculations difficult. Therefore, the data are binned into the same number of bins (2879) as done in the simulations, giving one sample per 15 minutes or 96 samples per sidereal day. Once again, in performing binning it is important to use sidereal time - and not Julian time - to ensure an equal number of samples for each cycle of sky-drift. The data are then embedded into a trajectory matrix and SSA is applied in the same manner as before. The eigentriples are grouped and reconstructed series formed.

Fig.13 shows first 4 of the reconstructed series. The trend plot also has a low time cadence record of the temperature recorded within the Murchison shire, which is available from NCEI-NOAA 222https://www.ncei.noaa.gov/access/search/index. The temperature scale has been inverted, as the trend shows an anti-correlation with temperature. Further, the radio data and temperature data fall into two different families with different sampling, and therefore the temperature data have been interpolated to the same Julian day bins as the radio data. The Pearson correlation coefficient computed between the trend and the temperature data is -0.83, which denotes a strong anti-correlation between the trend and temperature.

Apart from component ageing effects, there are two major reasons for such temperature-induced variations in radiometric data.

-

1.

The noise temperatures of active devices used in amplifiers increase with physical temperature333It may be noted that this is one of the reasons for cryogenic cooling of radio telescope front-ends to achieve a low overall system temperature.. The same holds true for passive components such as attenuators. If this is the cause of drift, the pattern so obtained is expected to be correlated with physical temperature.

-

2.

The gain of amplifiers reduces as temperature is increased. In this case, the trend pattern and physical temperature would be anti-correlated.

It is therefore evident from Fig.13 that the major contributor to the trend is temperature-induced gain variations. The receiver noise would inevitably vary as a function of physical temperature, however when the overall system temperature is sky-dominated the impact of this would be secondary to gain variations. This informs our choice of calibration model given in Sec.4.3, where we assume a constant receiver noise temperature and a time-varying gain.

Subsequently, the gain patterns are recovered from the reconstructed series with the demodulation technique. These recovered gains for the first two periodic components are shown in Fig.14. As can be seen, the normalised gains are different between the series, thus pointing to potential diurnal gain variations. Therefore, we refrain from applying these gains to the mean component to establish its brightness temperature scale.

6 Discussion

In this paper we developed the mathematical framework for SSA of a radiometric time series and demonstrated its application in analysing radiometric time series data from a radio telescope at a frequency with relatively low RFI. A major outcome of this work is a novel method to calibrate the mean-sky component of radio data using the periodic component of sky-drift patterns. Calibration using differential measurements of the sky to obtain the system gain has been successfully utilised in different low frequency experiments (Rogers et al., 2004; Singh et al., 2017; Thekkeppattu et al., 2022). However, those applications were limited, as the calibration was performed on a day-to-day basis assuming a constant value for the system gain for a specific day. With SSA, the periodic nature of data is leveraged to obtain gains that can vary with time, provided they do not a diurnal component. Seen in this light, SSA can be viewed as an extension of the aforesaid technique.

Let us examine the implications of the gain recovery in Fig.8. The gain patterns that we recovered are purely from the periodic components of the sky and the singular values of simulated periodic components. The procedure does not need simulations that include the mean-sky or the zero-point of the sky maps. Indeed, zero-point errors have been noticed in sky maps, since these maps are often products of combination of sky surveys conducted with different telescopes. Disagreements exist between estimates of the zero-points of sky maps across experiments, for example, Monsalve et al. (2021) report a different zero-point offset for the 150 MHz sky map (Landecker & Wielebinski, 1970) compared to the measurements of Patra et al. (2015). Therefore, the methods outlined in this paper can be applied to experiments where zero-point levels have to be accurately known to verify that the gains do not have variations that can confuse sky signals. However, to get accurate estimates of system gains the input sky maps need to get an accurate temperature scale even though zero-points errors are acceptable. An associated caveat is that while SSA can provide multiplicative system gains, the additive receiver noise temperature is practically indistinguishable from the mean-sky component. Therefore, experiments requiring accurate measurements of zero-level still need additional calibration to estimate the receiver noise temperature. Nonetheless, as demonstrated in this paper, SSA can be utilised as a diagnostic tool for such experiments.

6.1 Caveats and future work

Since this is the first work exploring SSA for radiometric data analysis and calibration, we have kept the analysis simple. Specifically, low frequency radio data can have RFI and we ignored the flagging that has been performed. Flagging considerations lead to gaps in a time series dataset and may necessitate data in-painting or interpolation. Such explorations will be taken up in future work.

We have developed the mathematical background and demonstrated an application of SSA for auto-correlations at a single frequency, while SITARA has nearly two thousand usable frequency channels in auto-correlations and cross-correlations each. To use all the frequency channels and to consider complex cross-correlations, the mathematical framework developed in this paper has to be expanded. For a proper treatment of multiple frequency channels, multivariate SSA techniques will be explored in future work.

While we demonstrated via simulations a calibration technique to remove gain variations that evolve smoothly with time, the application of it to SITARA data is hampered by diurnal gain variations. This shows the necessity to maintain temperature regulation or inclusion of thermometers at points in the signal chain to track such gain variations - this will be incorporated in future revisions of the SITARA system. Additionally, the data in frequency channels below 50 MHz, where receiver temperature dominates over sky temperature, show diurnal periodicity arising from such gain variations which may be exploited as a template to improve calibration.

6.2 A potential application of SSA for space-based 21–cm experiments

As we demonstrated, a major limitation with the SSA technique for calibration is the confusion between diurnal gain variations and sky drift, as both have approximately the same periodicity. If the periodicities can be made to differ, the calibration can be improved significantly. Specifically, if the sky-drift can be made faster than the gain variations, substantially better calibration can be expected. While it is difficult for ground-based experiments to introduce such a separation, a radiometer payload on a spin-stabilized satellite can have beams that rapidly scan the sky, thereby increasing the sky-drift rate. For example, a broadband dipole antenna could be placed on a satellite spinning such that the nulls sweep the Galactic plane at a rate much faster than any gain variations, including the flicker noise of the electronic systems. Spinning a satellite at a rate faster than than the knee frequency of the radiometric system, to reduce the deleterious impact of gain fluctuations on the images, has been employed in CMB missions such as Planck (Bersanelli et al., 2010). As there are a few projects proposed, planned or launched targeting the global 21–cm signal from a satellite platform such as DARE (Burns et al., 2012), Longjiang/Chang’e-4 (Jia et al., 2018), DSL (Chen et al., 2021), PRATUSH etc., we opine that SSA would be an ideal tool for analysis and calibration of time series data from such radiometers in space.

7 Conclusions

In this paper, we introduced singular spectrum analysis (SSA) as a powerful tool to study radiometric data. We showed the deep connections between the SSA techniques and Fourier transforms and leverage that to obtain long-term gain evolution from radio data. A novel technique to calibrate 21–cm experiments using periodicity in the sky drift patterns has been proposed and simulated. The limitations of that technique in the presence of diurnal variations that can confuse with sky-drift is studied. Upon application of SSA to SITARA data, we find that the obtained decomposition has the features as expected, with the trend showing strong anti-correlation with temperature. However, the gains obtained point to diurnal variations as a limiting factor in using SSA for gain calibration of SITARA data.

Acknowledgements

This work is funded through CT’s ARC Future Fellowship, FT180100321 - ”Unveiling the first billion years: enabling Epoch of Reionisation science”. This work makes use of the Murchison Radio-astronomy Observatory, operated by CSIRO. We acknowledge the Wajarri Yamatji people as the traditional owners of the Observatory site. JNT acknowledges Dr. Adrian T. Sutinjo for his Linear Algebra over Lunch lectures. This work uses the following python packages and we would like to thank the authors and maintainers of these packages :- numpy (Harris et al., 2020), scipy (Virtanen et al., 2020), healpy (Zonca et al., 2019), astropy (Astropy Collaboration et al., 2013, 2018), aipy (Parsons, 2016), matplotlib (Hunter, 2007), ephem (Rhodes, 2011), pygdsm (Price, 2016) and h5py (https://www.h5py.org/).

Data Availability

The data used in this work will be made available based on reasonable requests.

References

- Astropy Collaboration et al. (2013) Astropy Collaboration et al., 2013, A&A, 558, A33

- Astropy Collaboration et al. (2018) Astropy Collaboration et al., 2018, AJ, 156, 123

- Bersanelli et al. (2010) Bersanelli M., et al., 2010, A&A, 520, A4

- Broomhead & King (1986) Broomhead D., King G. P., 1986, Physica D: Nonlinear Phenomena, 20, 217

- Burns et al. (2012) Burns J. O., et al., 2012, Advances in Space Research, 49, 433

- Chen et al. (2021) Chen X., Yan J., Deng L., Wu F., Wu L., Xu Y., Zhou L., 2021, Philosophical Transactions of the Royal Society of London Series A, 379, 20190566

- Davis (1979) Davis P. J., 1979, Circulant Matrices. John Wiley, NewYork

- DeBoer et al. (2017) DeBoer D. R., et al., 2017, Publications of the Astronomical Society of the Pacific, 129, 045001

- Dewdney et al. (2009) Dewdney P. E., Hall P. J., Schilizzi R. T., Lazio T. J. L. W., 2009, IEEE Proceedings, 97, 1482

- Dokht et al. (2016) Dokht R. M., Gu Y. J., Sacchi M. D., 2016, Geophysical Journal International, 208, 1430

- Donskikh et al. (2016) Donskikh G. I., Ryabov M. I., Sukharev A. L., Aller M., 2016, Astrophysics, 59, 199

- Gaensler et al. (2004) Gaensler B., Beck R., Feretti L., 2004, New Astronomy Reviews, 48, 1003

- Ghil et al. (2002) Ghil M., et al., 2002, Rev. Geophys., 40, 1 – 41

- Golyandina & Korobeynikov (2014) Golyandina N., Korobeynikov A., 2014, Computational Statistics & Data Analysis, 71, 934

- Golyandina et al. (2001) Golyandina N., Nekrutkin V., Zhigljavsky A. A., 2001, Analysis of time series structure: SSA and related techniques. CRC press

- Gray (2006) Gray R. M., 2006, Foundations and Trends in Communications and Information Theory, 2, 155

- Gürel et al. (2018) Gürel N. M., Hurley P., Simeoni M., 2018, in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). pp 1983–19832, doi:10.1109/CVPRW.2018.00254

- Hallinan et al. (2015) Hallinan G., et al., 2015, in American Astronomical Society Meeting Abstracts #225. p. 328.01

- Harris et al. (2020) Harris C. R., et al., 2020, Nature, 585, 357

- Haykin & Moher (2008) Haykin S., Moher M., 2008, An Introduction to Analog and Digital Communications, 2nd Edition. Wiley

- Hunter (2007) Hunter J. D., 2007, Computing in Science & Engineering, 9, 90

- Jia et al. (2018) Jia Y., Zou Y., Ping J., Xue C., Yan J., Ning Y., 2018, Planet. Space Sci., 162, 207

- Jolliffe (1986) Jolliffe I. T., 1986, Principal component analysis

- Landecker & Wielebinski (1970) Landecker T. L., Wielebinski R., 1970, Australian Journal of Physics Astrophysical Supplement, 16, 1

- Monsalve et al. (2021) Monsalve R. A., et al., 2021, ApJ, 908, 145

- Nambissan T. et al. (2021) Nambissan T. J., et al., 2021, Experimental Astronomy

- Nindos et al. (2019) Nindos A., Kontar E., Oberoi D., 2019, Advances in Space Research, 63, 1404

- Olson et al. (2014) Olson B. J., Shaw S. W., Shi C., Pierre C., Parker R. G., 2014, Applied Mechanics Reviews, 66

- Parsons (2016) Parsons A., 2016, AIPY: Astronomical Interferometry in PYthon (ascl:1609.012)

- Patra et al. (2015) Patra N., Subrahmanyan R., Sethi S., Udaya Shankar N., Raghunathan A., 2015, ApJ, 801, 138

- Price (2016) Price D. C., 2016, PyGSM: Python interface to the Global Sky Model (ascl:1603.013)

- Rhodes (2011) Rhodes B. C., 2011, PyEphem: Astronomical Ephemeris for Python (ascl:1112.014)

- Rogers & Bowman (2012) Rogers A. E. E., Bowman J. D., 2012, Radio Science, 47

- Rogers et al. (2004) Rogers A. E. E., Pratap P., Kratzenberg E., Diaz M. A., 2004, Radio Science, 39

- Sault et al. (1995) Sault R. J., Teuben P. J., Wright M. C. H., 1995, in Shaw R. A., Payne H. E., Hayes J. J. E., eds, Astronomical Society of the Pacific Conference Series Vol. 77, Astronomical Data Analysis Software and Systems IV. p. 433 (arXiv:astro-ph/0612759)

- Singh et al. (2017) Singh S., et al., 2017, The Astrophysical Journal, 845, L12

- Singh et al. (2018) Singh S., Subrahmanyan R., Shankar N. U., Rao M. S., Girish B. S., Raghunathan A., Somashekar R., Srivani K. S., 2018, Experimental Astronomy, 45, 269

- Thekkeppattu et al. (2022) Thekkeppattu J. N., McKinley B., Trott C. M., Jones J., Ung D. C. X., 2022, Publ. Astron. Soc. Australia, 39, e018

- Tingay et al. (2013) Tingay S. J., et al., 2013, Publ. Astron. Soc. Australia, 30, e007

- Trott (2017) Trott C. M., 2017, Proceedings of the International Astronomical Union, 12, 92–97

- Vautard & Ghil (1989) Vautard R., Ghil M., 1989, Physica D: Nonlinear Phenomena, 35, 395

- Virtanen et al. (2020) Virtanen P., et al., 2020, Nature Methods, 17, 261

- Zonca et al. (2019) Zonca A., Singer L., Lenz D., Reinecke M., Rosset C., Hivon E., Gorski K., 2019, The Journal of Open Source Software, 4, 1298

- van Haarlem et al. (2013) van Haarlem M. P., et al., 2013, A&A, 556, A2

Appendix A Eigendecomposition of anti-circulant matrices

The relation between circulant matrices and circular convolution as well as the relation between the eigendecomposition of such matrices and Discrete Fourier Transforms (DFT) are well known - see for e.g. Gray (2006) for an approachable introduction to this relation - and put to use in several signal processing applications. However, the matrices of relevance for this paper are anti-circulant and therefore we provide some useful relations between those matrices and DFT.

Consider a real valued sequence . This sequence can be used to construct an real valued anti-circulant matrix as given in Eq.23.

| (23) |

The eigendecomposition of can be written as

| (24) |

where

| (25) |

where are the eigenvalues and corresponding eigenvectors with being the index. The following set of equations are obtained from eigendecomposition.

| (26) | ||||

which can be rearranged into,

| (27) | ||||

The above set of equations can be recast into a summation equation as given in Eq.28.

| (28) |

where . The matrix is real symmetric and therefore the eigenvalues are real valued. The eigenvectors are fully real and we assume them to be periodic as given in Eq.29.

| (29) |

This simplifies Eq.28 into

| (30) |

It may be noted that the LHS of the above equation is a case of circular correlation between and . Therefore we can write

| (31) |

where denotes correlation. Applying Fourier transforms, we get

| (32) |

Fourier transform of correlation can be written as a product, giving us

| (33) |

We know that and are real. Since is periodic, a suitable choice is sinusoidal functions.

A.1 Cosine case

We first assume a cosine form for such that , where . The Fourier transform of such a cosine function is a pair of delta functions as given in Eq.34.

| (34) |

The real valued normalisation factors have been ignored for the Fourier transform as they cancel out in the subsequent steps. The correlation equation can now be written as

| (35) |

Note that the above equation becomes non-zero only when or . Let us take case. We have

| (36) |

where the component of is

| (37) |

Then

| (38) |

Leading to

| (39) |

Since is real, we have or . As is real, the Fourier spectrum is Hermitian and therefore it can be shown that the above relation holds for . Thus, the eigenvalue and the corresponding cosine eigenvector for a given are

| (40) | ||||

A.2 Sine case

Another plausible eigenvector is , giving

| (41) |

Calculating the Fourier transform and simplifying, we obtain

| (42) |

Once again, let us consider case. We have

| (43) |

Leading to

| (44) |

Once again, since is real we have . Thus, the eigenvalue and the corresponding sine eigenvector for a given are

| (45) | ||||

Summarising, the spectrum of an anti-circulant matrix consists of the absolute value of the DFT spectrum of the underlying periodic pattern, with the values occurring in positive-negative pairs. The eigenvectors are sines and cosines with phases determined by the phase of the DFT.