Single-pixel diffuser camera

Abstract

We present a compact, diffuser-assisted, single-pixel computational camera. A rotating ground-glass diffuser is adopted, in preference to the commonly used digital micromirror device (DMD), to encode a two-dimensional (2D) image into single-pixel signals. After calibrating the pseudo-random patterns of the diffuser under incoherent illumination, we retrieve images at a sampling ratio of 8.8%. Furthermore, by adding a diffraction grating and using line-array detection, we demonstrate hyperspectral imaging. The implementation results in a cost-effective single-pixel camera for high-dimensional imaging, with potential for imaging in non-visible wavebands.

I Introduction

By encoding depth or spectral information, imaging assisted by a diffuser or thin scatterer can retrieve a three-dimensional (3D) data cube rather than the conventional 2D image obtained from an optical sensor. The 'diffuser camera' ('DiffuserCam') concept [1] has attracted considerable interest because of its compact and cost-effective design. Retrieval of 3D, multi-view [2], multispectral [3] or hyperspectral [4] images via single-shot computational imaging has recently been demonstrated. However, because these approaches rely on a 2D sensor array, they are challenging to apply in more esoteric wavelength bands, for instance x-ray or terahertz imaging, where such sensors are expensive or unavailable. As the name suggests, single-pixel imaging [5, 6, 7, 8, 9] produces images without a 2D detector, using structured detection or structured illumination of the object to compute an image. As such, single-pixel approaches offer alternatives to conventional imaging, both in the visible and as a low-cost option in regimes such as the x-ray [10, 11], infrared [12] and terahertz [13] bands, and even for imaging atoms [14]. Single-pixel approaches also help to inform high-performance imaging techniques, for example 3D depth, time-resolved or multispectral imaging, for which CCD-based systems would be complicated or expensive to implement.

Typically, a single-pixel camera uses a digital micromirror device (DMD), placed in the image plane, to modulate the image of the object with a sequence of 2D structured sampling patterns. The single-pixel detector then measures the corresponding total light intensity for each pattern. From these one-dimensional (1D) single-pixel signals and the known modulation patterns, reconstruction algorithms, ranging from simple correlation to compressed sensing, can recover the 2D image. Alternatively, the DMD can modulate the illumination of the object, an approach commonly called computational ghost imaging (CGI) [15, 6, 16]. The DMD can also be replaced by a liquid crystal spatial light modulator [17], rotating ground-glass diffusers [18] or LED arrays [19, 20]. Images can then be retrieved with the same algorithms as in the structured detection scheme.
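As a concrete illustration of structured detection, the sketch below uses Hadamard patterns, one common choice of orthogonal sampling basis (not the diffuser patterns used in this work). It simulates one bucket signal per pattern and inverts the measurements exactly. The function names are hypothetical, and splitting each ±1 pattern into a complementary pair of 0/1 masks is an assumption about how a DMD would display it.

```python
import numpy as np


def sylvester_hadamard(n: int) -> np.ndarray:
    """Return an n x n Hadamard matrix with +1/-1 entries (n must be a power of 2)."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of two")
    h = np.ones((1, 1))
    while h.shape[0] < n:
        # Sylvester construction: H_2n = [[H, H], [H, -H]]
        h = np.block([[h, h], [h, -h]])
    return h


def hadamard_single_pixel(image: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Simulate structured detection with Hadamard patterns, then invert it.

    Each row of H, reshaped to the image size, is one modulation pattern.
    A DMD displays only 0/1 masks, so each +/-1 pattern is split into the
    complementary pair (1 + H) / 2 and (1 - H) / 2, and the two bucket
    signals are subtracted.
    """
    n_pix = image.size
    h = sylvester_hadamard(n_pix)
    scene = image.ravel()
    on_signals = ((1 + h) / 2) @ scene    # bucket signal for each "on" mask
    off_signals = ((1 - h) / 2) @ scene   # bucket signal for each complementary mask
    signals = on_signals - off_signals    # identical to h @ scene
    # Rows of H are orthogonal (H @ H.T = N * I), so the inverse is H.T / N.
    recon = (h.T @ signals) / n_pix
    return signals, recon.reshape(image.shape)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    scene = rng.random((8, 8))
    _, recovered = hadamard_single_pixel(scene)
    print(np.allclose(recovered, scene))  # True: full sampling (64 patterns) recovers the scene
```

With full sampling the inversion is exact; sub-Nyquist sampling is where compressed-sensing solvers come in.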

In cases where only passive imaging is needed, the structured detection scheme offers a more compact and cheaper imaging system, because no dedicated light source is required. However, conventional DMDs cannot modulate radiation such as x-rays. X-ray single-pixel imaging has therefore been realized with the CGI scheme, for example using a monochromatic x-ray beam passing through a slit array and a moving porous gold film [10], or polychromatic x-rays and a sheet of rotating sandpaper [11], to generate pseudothermal speckle illumination patterns.

In this work, we present a single-pixel diffuser camera (SP-DiffuserCam) that uses a low-cost rotating ground-glass diffuser instead of a DMD for 2D structured-detection modulation with incoherent light. We show that the random but fixed patterns of a simple diffuser placed in the image plane can serve as 2D light-intensity modulation in single-pixel imaging. This concept can be regarded as a passive version of classical ghost imaging (GI), but without the need for simultaneous measurement of reference patterns. We also show that the concept is readily extendable to hyperspectral imaging.

II Principle

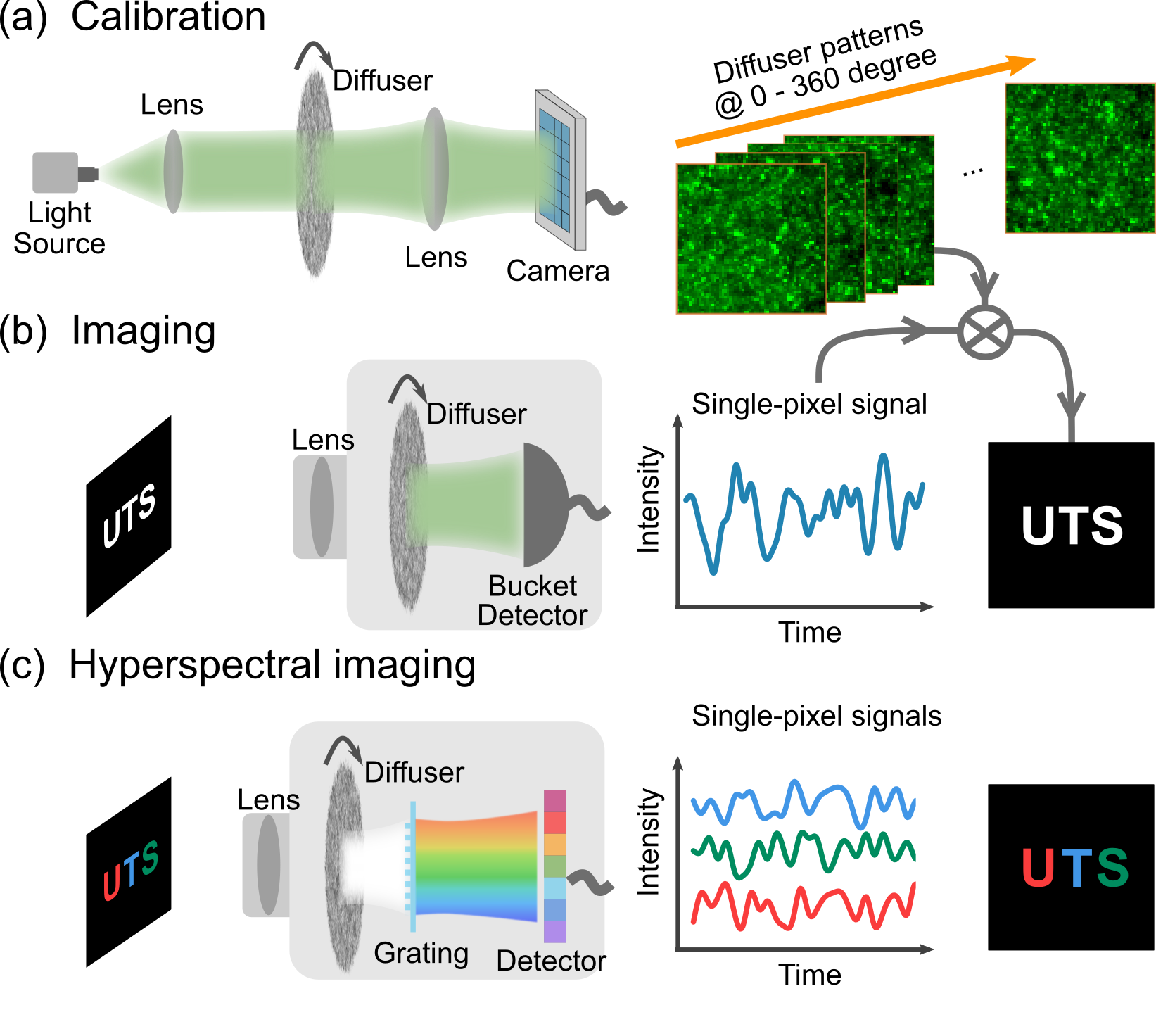

Figure 1 presents the SP-DiffuserCam concept. The first step is a calibration process that maps the speckle-like patterns of the diffuser: the intensity distribution $I_i(x,y)$ at each rotation angle $\theta_i$ is recorded sequentially while the diffuser is rotated. Note that this could also be achieved with a laterally moving stage, but here we consider only rotation for system compactness. The second step is the temporal single-pixel measurement. An object is illuminated by the same incoherent light source and imaged onto the diffuser plane by a lens. During a second, repeated rotation, the light transmitted through the object, with transmission function $T(x,y)$, is measured by a single-pixel detector; the signal is simply the light integrated over the detector plane,

$$
B_i = \iint I_i(x,y)\,T(x,y)\,\mathrm{d}x\,\mathrm{d}y. \tag{1}
$$

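We can then recover the object image using the differential correlation approach [21],

$$
G(x,y) = \langle B\,I(x,y)\rangle - \frac{\langle B\rangle}{\langle R\rangle}\,\langle R\,I(x,y)\rangle, \qquad R_i = \iint I_i(x,y)\,\mathrm{d}x\,\mathrm{d}y, \tag{2}
$$

where $\langle\cdot\rangle$ denotes the ensemble average over the distribution of patterns and $R_i$ denotes the weight (total intensity) of the $i$-th pattern.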
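A minimal numerical sketch of the two equations, assuming discretized patterns on a pixel grid: the bucket signal is $B_i = \sum_{x,y} I_i(x,y)T(x,y)$, and the differential estimate is $G = \langle BI\rangle - (\langle B\rangle/\langle R\rangle)\langle RI\rangle$ with $R_i = \sum_{x,y} I_i(x,y)$. Uniform random patterns stand in for the calibrated diffuser patterns, and the function names and the 'T'-shaped test object are hypothetical.

```python
import numpy as np


def bucket_signals(patterns: np.ndarray, obj: np.ndarray) -> np.ndarray:
    """Discretized Equation 1: B_i = sum_{x,y} I_i(x, y) T(x, y).

    patterns: (K, H, W) reference patterns I_i; obj: (H, W) transmission T.
    """
    return np.einsum("kxy,xy->k", patterns, obj)


def differential_gi(patterns: np.ndarray, buckets: np.ndarray) -> np.ndarray:
    """Equation 2: G = <B I> - (<B> / <R>) <R I>, with R_i = sum_{x,y} I_i(x, y)."""
    k = len(buckets)
    weights = patterns.sum(axis=(1, 2))                  # R_i, shape (K,)
    b_i = np.tensordot(buckets, patterns, axes=1) / k    # <B I>, shape (H, W)
    r_i = np.tensordot(weights, patterns, axes=1) / k    # <R I>, shape (H, W)
    return b_i - (buckets.mean() / weights.mean()) * r_i


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical stand-in for calibrated diffuser patterns: uniform random values.
    patterns = rng.random((4000, 32, 32))
    # Binary 'T'-shaped transmission mask.
    obj = np.zeros((32, 32))
    obj[4:8, 6:26] = 1.0
    obj[8:28, 14:18] = 1.0
    image = differential_gi(patterns, bucket_signals(patterns, obj))
    print(np.corrcoef(image.ravel(), obj.ravel())[0, 1])  # correlation with the true mask
```

The $\langle R\,I\rangle$ term removes the part of each bucket fluctuation that is explained by the total pattern intensity, which is the motivation for the differential form over plain $\langle B I\rangle - \langle B\rangle\langle I\rangle$.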

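For hyperspectral imaging, the detected intensity $B_i(\lambda)$ is simply the single-pixel signal at each wavelength $\lambda$, measured with a line detector placed after a grating. The spectral data cube can therefore be reconstructed as

$$
G(x,y,\lambda) = \langle B(\lambda)\,I(x,y)\rangle - \frac{\langle B(\lambda)\rangle}{\langle R\rangle}\,\langle R\,I(x,y)\rangle, \tag{3}
$$

simply by replacing $B$ with $B(\lambda)$ in Equation 2, i.e., reusing the same reference patterns for all wavelengths without characterizing the surface roughness of the diffuser at other wavelengths.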
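The same estimator vectorizes over wavelength. This sketch assumes the line-detector readings are stored as a (K, L) array of $B_i(\lambda)$ for K rotation angles and L spectral channels, and it reuses one set of reference patterns for every channel, as described above; `spectral_dgi` and the two-color test object are hypothetical.

```python
import numpy as np


def spectral_dgi(patterns: np.ndarray, spectra: np.ndarray) -> np.ndarray:
    """Equation 3, evaluated for all wavelength channels at once.

    patterns: (K, H, W) reference patterns I_i(x, y), recorded once and
              reused for every wavelength.
    spectra:  (K, L) line-detector readings B_i(lambda).
    Returns an (H, W, L) spatio-spectral cube G(x, y, lambda).
    """
    k = patterns.shape[0]
    weights = patterns.sum(axis=(1, 2))                     # R_i, shape (K,)
    b_i = np.einsum("kl,kxy->xyl", spectra, patterns) / k   # <B(lambda) I>, (H, W, L)
    r_i = np.tensordot(weights, patterns, axes=1) / k       # <R I>, (H, W)
    scale = spectra.mean(axis=0) / weights.mean()           # <B(lambda)>/<R>, (L,)
    return b_i - r_i[:, :, None] * scale[None, None, :]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    wl = np.linspace(420.0, 640.0, 20)                      # hypothetical channel centers (nm)
    blue = np.zeros((16, 16), dtype=bool)
    blue[2:6, 2:14] = True
    red = np.zeros((16, 16), dtype=bool)
    red[6:14, 6:10] = True
    # Hypothetical object: Gaussian transmission spectra peaking at 460 nm and 610 nm.
    t = (blue[..., None] * np.exp(-((wl - 460) / 30) ** 2)
         + red[..., None] * np.exp(-((wl - 610) / 30) ** 2))
    patterns = rng.random((3000, 16, 16))
    spectra = np.einsum("kxy,xyl->kl", patterns, t)         # simulated line-detector data
    cube = spectral_dgi(patterns, spectra)
    print(wl[cube[blue].mean(axis=0).argmax()], wl[cube[red].mean(axis=0).argmax()])
```

Because the patterns are shared, $\langle R\,I\rangle$ is computed once and only the scale factor $\langle B(\lambda)\rangle/\langle R\rangle$ changes per channel.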

III Experiment

III.1 Single color SP-DiffuserCam

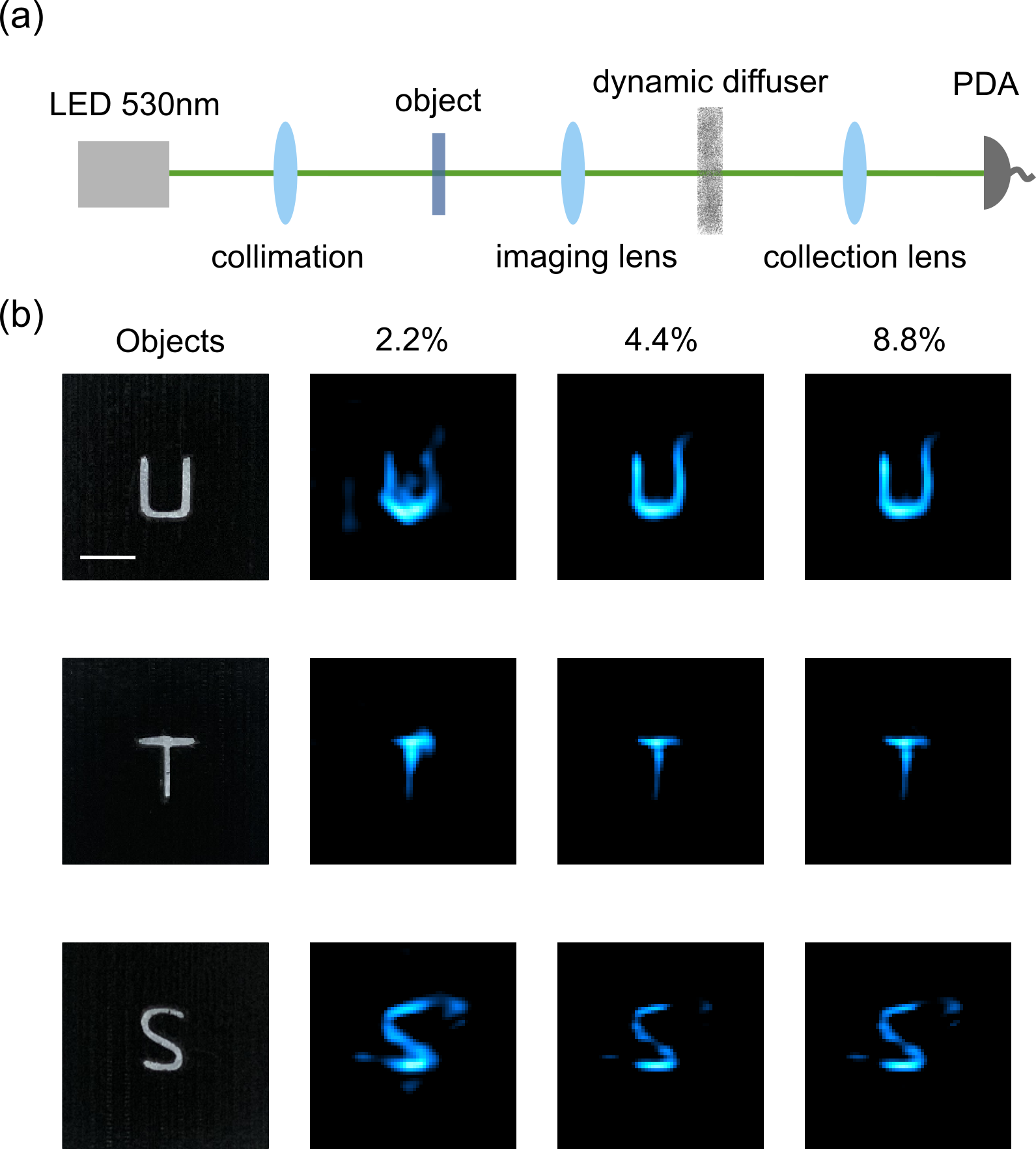

A simple proof-of-concept experimental realization of the approach is presented in Figure 2. Figure 2(a) depicts the optical configuration of a single-color SP-DiffuserCam, in which the light source is a 530 nm LED with a bandwidth of 33 nm (M530D2, Thorlabs). The object, illuminated by the collimated light, is imaged onto the diffuser plane. The ground-glass diffuser (diameter 24 mm, DG10-120-MD, Thorlabs) is mounted on a motorized rotation stage (PRM1/MZ8, Thorlabs) with a rotation velocity of 25°/s. A silicon amplified photodetector (PDA100A2, Thorlabs) measures the intensity fluctuations. For calibration, the speckle patterns of the diffuser are recorded by a camera (panda 4.2 bi, PCO AG) through the same lens (f = 50 mm) used for the photodetector, so that the numerical aperture is the same; the exposure time of 4 ms is also the same for both detectors. A motor controller (KDC101, Thorlabs) and a low-cost DAQ device (USB-6002, National Instruments) synchronize the rotator with the detector or the camera.

To ensure that our patterns are sufficiently different, we use a region near the edge of the diffuser, away from its center, as the image-plane area. Because of the small diameter of the diffuser, however, neighbouring patterns still share similar regions along the rotation direction. The objects (Figure 2(b), left) are 3D-printed transmission masks of the letters 'U', 'T' and 'S', 2 mm thick and about 3 mm in size. The retrieved images with sampling ratios of 2.2%, 4.4% and 8.8% are shown in Figure 2(b); they correspond to a minimum acquisition time of 14.4 s, limited by the maximum rotation velocity. We also apply the block-matching and 3D collaborative filtering (BM3D) algorithm [22] for noise suppression, which takes about 0.02 s. Outlines of the objects emerge at 2.2%, and image quality improves as the sampling ratio increases to 8.8%. Further increasing the number of scattering patterns does not improve the reconstruction quality, for two reasons. First, the reference patterns are 'imperfect', with overlapping regions between neighbouring ones. Second, a higher sampling ratio means a finer angular step between measurements within one rotation cycle, which increases the overlap between reference patterns. As a result, spatial information perpendicular to the rotation direction is reconstructed better than information parallel to it. This is evident in Figure 2(b) in the top-right corner of 'U', the bottom of 'T' and the noisy pixels emerging on the left of 'S'. Note that the rotation is clockwise for all three masks, with the sampling area in the lower half of the circular diffuser.
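The sampling ratios and the acquisition time can be checked with a little arithmetic. The text gives neither the reconstruction grid nor the number of patterns, so this sketch assumes a 64 × 64 grid and 90, 180 or 360 patterns spread over one full rotation (4°, 2° or 1° steps), which is one assumption that reproduces the quoted 2.2%, 4.4% and 8.8%. The 25°/s velocity and the 4 ms exposure come from the setup description; `acquisition_budget` is a hypothetical helper.

```python
# Hypothetical bookkeeping for the rotation-based acquisition of Section III.1.
ROTATION_SPEED_DEG_PER_S = 25.0  # maximum stage velocity quoted in the text
EXPOSURE_S = 4e-3                # per-measurement exposure quoted in the text
N_PIXELS = 64 * 64               # ASSUMPTION: reconstruction grid is not stated in the text


def acquisition_budget(n_patterns: int) -> dict[str, float]:
    """Sampling ratio and timing when n_patterns are spread over one full turn."""
    return {
        "sampling_ratio_pct": 100.0 * n_patterns / N_PIXELS,
        "angle_step_deg": 360.0 / n_patterns,
        # One full rotation is needed regardless of the angular step.
        "rotation_time_s": 360.0 / ROTATION_SPEED_DEG_PER_S,
        # Angle swept while the detector integrates a single measurement.
        "smear_per_exposure_deg": ROTATION_SPEED_DEG_PER_S * EXPOSURE_S,
    }


if __name__ == "__main__":
    for n in (90, 180, 360):
        b = acquisition_budget(n)
        print(f"{n:3d} patterns: {b['sampling_ratio_pct']:.1f}% at {b['angle_step_deg']:.0f} deg steps, "
              f"{b['rotation_time_s']:.1f} s per turn, {b['smear_per_exposure_deg']:.2f} deg smear")
```

Under these assumptions every sampling ratio needs one full turn, 360°/(25°/s) = 14.4 s, matching the quoted minimum acquisition time, and the diffuser turns by about 0.1° during each 4 ms exposure.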

III.2 Comparison of different diffusers

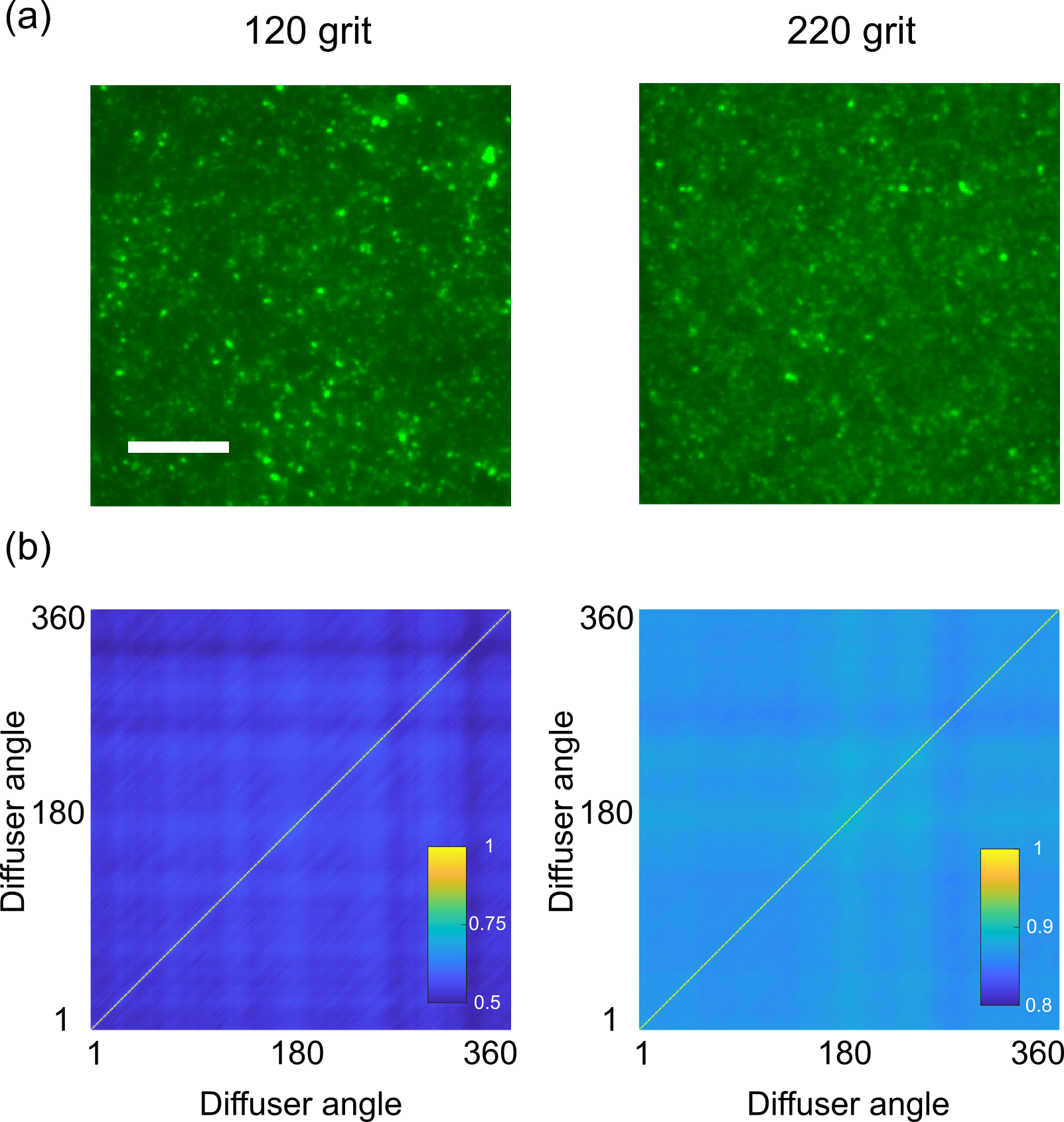

The performance of our setup depends strongly on the choice of diffuser. Here we compare the 120-grit diffuser used in Figure 2 with a 220-grit diffuser (diameter 24 mm, DG10-220-MD, Thorlabs), which has a smaller grain size on its polished surface. Figure 3(a) shows typical transmission images of the two diffusers; the former shows higher contrast than the latter owing to its coarser polishing. For a quantitative comparison, we calculate the correlation coefficient between pairs of patterns from each diffuser, defined as

$$
r(\theta_m,\theta_n) = \frac{\sum_{x,y}\bigl[I_{\theta_m}(x,y)-\bar{I}_{\theta_m}\bigr]\bigl[I_{\theta_n}(x,y)-\bar{I}_{\theta_n}\bigr]}{\sqrt{\sum_{x,y}\bigl[I_{\theta_m}(x,y)-\bar{I}_{\theta_m}\bigr]^2\,\sum_{x,y}\bigl[I_{\theta_n}(x,y)-\bar{I}_{\theta_n}\bigr]^2}}, \tag{4}
$$

where $\theta = 0^\circ, 1^\circ, \ldots, 360^\circ$, and $\bar{I}_{\theta_m}$ and $\bar{I}_{\theta_n}$ denote the mean values of two arbitrary patterns $I_{\theta_m}$ and $I_{\theta_n}$, respectively. The mean correlation coefficient between different angles for the 120-grit diffuser is , while for the 220-grit diffuser we find . Note that these coefficients are calculated at the pixel resolution of the recorded reference patterns; they would be higher still under the conditions of Figure 3(b), where the patterns are binned into coarser matrices with lower contrast. A higher correlation implies greater mutual coherence among the sampling patterns, so each measurement carries less independent spatial information and the sampling efficiency drops. Contemporary single-pixel imaging relies on high-efficiency orthonormal patterns, such as the Hadamard [12] or Fourier [7] bases. Because we adopt a commercial diffuser, however, our sampling patterns are pseudo-random grayscale matrices, more akin to classical GI with speckle patterns produced by a laser and a dynamic diffuser [18]. According to a study of the influence of speckle size in GI [23], an optimal speckle size exists where the speckle size is comparable to the feature size of the object. This is why we choose the 120-grit diffuser: it is our coarsest diffuser, and its pattern features come closest to the feature size of the masks. Future work could focus on optimizing the diffuser or on an integrated miniature camera.
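The correlation coefficient defined above is the Pearson coefficient between two flattened patterns, so all pairwise coefficients can be obtained at once with NumPy's `np.corrcoef`. The helper names below are hypothetical, and the synthetic stacks only illustrate two extremes (independent patterns versus patterns sharing a common background); they are not the measured diffuser data.

```python
import numpy as np


def pattern_correlation(patterns: np.ndarray) -> np.ndarray:
    """Pearson r(theta_m, theta_n) for every pair of patterns.

    patterns: (K, H, W) stack, one pattern per rotation angle.
    Returns the (K, K) matrix of coefficients over the flattened pixels.
    """
    flat = patterns.reshape(patterns.shape[0], -1)
    return np.corrcoef(flat)  # each row is treated as one variable


def mean_pairwise_correlation(patterns: np.ndarray) -> float:
    """Average of r(theta_m, theta_n) over all pairs with m != n."""
    r = pattern_correlation(patterns)
    off_diagonal = r[~np.eye(r.shape[0], dtype=bool)]
    return float(off_diagonal.mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    independent = rng.random((50, 32, 32))
    # Hypothetical patterns sharing a fixed background (e.g. an illumination
    # profile) on top of small independent fluctuations.
    background = rng.random((32, 32))
    shared = background + 0.2 * rng.random((50, 32, 32))
    print(mean_pairwise_correlation(independent))  # close to 0
    print(mean_pairwise_correlation(shared))       # close to 1
```

Averaging only the off-diagonal entries excludes the trivial self-correlation of 1 for each pattern.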

III.3 Hyperspectral SP-DiffuserCam

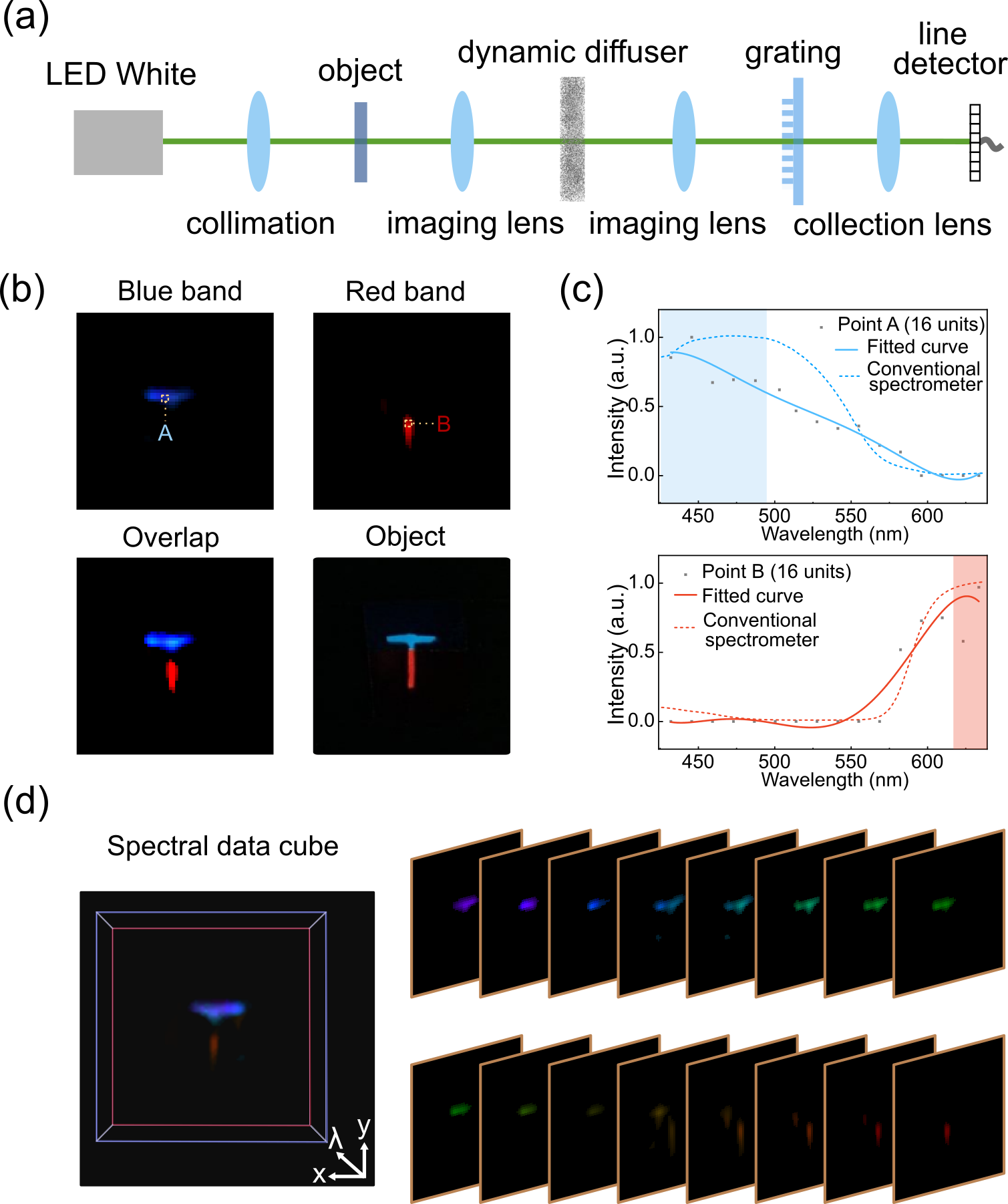

One advantage of the SP-DiffuserCam is that other functions are easy to integrate within the same platform, such as the hyperspectral imaging shown in Figure 4. To do this, we can directly use the pre-recorded reference patterns without additional calibration at specific wavelengths. Figure 4(a) illustrates the optical setup of the hyperspectral SP-DiffuserCam. Here we use a cold white LED (MCWHLP1, Thorlabs) as a broadband source. Unlike the monochrome setup, a low-cost diffraction grating slide (1000 lines/mm, Rainbow Symphony, $0.40) is used to disperse the incident light. The image of the object on the diffuser passes through the grating after the imaging lens (f = 50 mm) and is focused onto a line array detector by the collection lens (f = 35 mm). A compact CMOS camera (acA1920-150um, Basler) serves as the line detector, with its pixels binned into a one-dimensional array over a region of the sensor. To keep the same numerical aperture as in the single-pixel measurement, we use the same two-lens system for calibration.
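To get a feel for the dispersion, the grating equation can be evaluated for the 1000 lines/mm grating. This sketch assumes normal incidence, first-order diffraction and a collection lens whose optical axis points at the middle of the band; the text does not specify this geometry, and the function names are hypothetical.

```python
import math

GROOVES_PER_MM = 1000.0  # grating density quoted in the text
FOCAL_MM = 35.0          # collection-lens focal length quoted in the text


def first_order_angle_deg(wavelength_nm: float, grooves_per_mm: float = GROOVES_PER_MM) -> float:
    """Grating equation at normal incidence, first order: sin(theta) = lambda / d."""
    d_nm = 1e6 / grooves_per_mm  # groove spacing in nm (1 mm = 1e6 nm)
    return math.degrees(math.asin(wavelength_nm / d_nm))


def focal_plane_position_mm(wavelength_nm: float, axis_deg: float, f_mm: float = FOCAL_MM) -> float:
    """Image position for a lens whose optical axis points along axis_deg (ASSUMED geometry)."""
    return f_mm * math.tan(math.radians(first_order_angle_deg(wavelength_nm) - axis_deg))


if __name__ == "__main__":
    lo, hi = 426.0, 637.0  # waveband of the reported single-band images
    axis = 0.5 * (first_order_angle_deg(lo) + first_order_angle_deg(hi))
    span = focal_plane_position_mm(hi, axis) - focal_plane_position_mm(lo, axis)
    print(f"{first_order_angle_deg(lo):.1f} deg, {first_order_angle_deg(hi):.1f} deg, span {span:.1f} mm")
```

Under these assumptions the 426 to 637 nm band leaves the grating at roughly 25° to 40° and spreads over about 9 mm in the focal plane of the 35 mm lens.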

The 1D spectral intensity at each degree (exposure time 4 ms) is recorded in a 2D matrix $B(\theta,\lambda)$. This matrix is then converted into a spectral data cube using reconstruction Equation 3. We apply the standardized color-matching functions of the International Commission on Illumination (CIE) to the spectral cube to produce pseudo-color images for comparison with the original object, which was made by attaching color filters to the 'T' mask: a blue filter on the horizontal bar and a red filter on the vertical stem of the 'T'. Figure 4(b) shows the retrieved spectral images in the blue band and the red band. Compared with the object photograph in the lower right corner, the overlap area in the middle of the reconstruction has much lower intensity, owing to refraction at the junction of the two filters. The reconstructed spectra of two selected areas in Figure 4(b) follow a similar trend to measurements of the same two color filters with a conventional spectrometer (UV-2401 PC UV-VIS, Shimadzu). Finally, Figure 4(d) shows the 3D spatio-spectral data cube, together with spatial images at single wavebands ranging from 426 nm to 637 nm.
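A possible pseudo-color rendering of the spectral cube is sketched below. The text only states that standardized CIE color-matching functions were applied; this sketch substitutes the analytic multi-lobe approximation to the CIE 1931 functions published by Wyman, Sloan and Shirley (2013) and a linear-sRGB conversion matrix, both of which are assumptions rather than the stated procedure, and tabulated CIE data should be preferred for quantitative color work. The function names are hypothetical.

```python
import numpy as np

# XYZ -> linear sRGB matrix (sRGB, D65 white point), rounded to 4 decimals.
XYZ_TO_LINEAR_SRGB = np.array([
    [3.2406, -1.5372, -0.4986],
    [-0.9689, 1.8758, 0.0415],
    [0.0557, -0.2040, 1.0570],
])


def _lobe(wl: np.ndarray, mu: float, s_left: float, s_right: float) -> np.ndarray:
    """Asymmetric Gaussian lobe: width s_left below mu, s_right above it."""
    s = np.where(wl < mu, s_left, s_right)
    return np.exp(-0.5 * ((wl - mu) / s) ** 2)


def cie1931_cmf_approx(wl_nm) -> np.ndarray:
    """Analytic approximation to the CIE 1931 2-degree color-matching functions
    (multi-lobe fit of Wyman, Sloan and Shirley, 2013). Returns shape (L, 3)."""
    wl = np.asarray(wl_nm, dtype=float)
    x = (1.056 * _lobe(wl, 599.8, 37.9, 31.0) + 0.362 * _lobe(wl, 442.0, 16.0, 26.7)
         - 0.065 * _lobe(wl, 501.1, 20.4, 26.2))
    y = 0.821 * _lobe(wl, 568.8, 46.9, 40.5) + 0.286 * _lobe(wl, 530.9, 16.3, 31.1)
    z = 1.217 * _lobe(wl, 437.0, 11.8, 36.0) + 0.681 * _lobe(wl, 459.0, 26.0, 13.8)
    return np.stack([x, y, z], axis=-1)


def cube_to_pseudocolor(cube: np.ndarray, wl_nm) -> np.ndarray:
    """Map an (H, W, L) spectral cube to a linear RGB image scaled to [0, 1]."""
    xyz = np.tensordot(cube, cie1931_cmf_approx(wl_nm), axes=([2], [0]))  # (H, W, 3)
    rgb = np.clip(xyz @ XYZ_TO_LINEAR_SRGB.T, 0.0, None)  # drop out-of-gamut negatives
    peak = rgb.max()
    return rgb / peak if peak > 0 else rgb


if __name__ == "__main__":
    wl = np.arange(400.0, 701.0, 1.0)
    cube = np.zeros((1, 2, wl.size))
    cube[0, 0] = np.exp(-0.5 * ((wl - 450) / 10) ** 2)  # hypothetical blue-filter spectrum
    cube[0, 1] = np.exp(-0.5 * ((wl - 630) / 10) ** 2)  # hypothetical red-filter spectrum
    print(cube_to_pseudocolor(cube, wl).round(3))
```

The output is linear RGB; applying the sRGB gamma curve before display would be a further, separate step.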

IV Conclusion

In conclusion, we have reported a passive single-pixel camera that uses a rotating diffuser as the spatial modulator in the image plane, together with incoherent light, to form 2D images from 1D temporal signals. Classical pseudothermal ghost imaging [18, 15, 21, 24] has also used a rotating ground-glass diffuser, illuminated by a laser beam, to generate dynamic speckles; the beam is then split into a reference beam and an object beam. A CCD measures the diffracted speckles in the reference arm while a single-pixel bucket detector simultaneously measures the signal from the object, with the CCD and the object at the same distance from the diffuser. In comparison, our setup uses pre-recorded reference patterns and does not need to measure them during the experiment. Another difference is that we use the intensity distribution on the surface of the diffuser, rather than the laser interference speckles formed after the diffuser. In fact, our work can be considered a passive version of x-ray ghost imaging, which uses pre-recorded patterns and an incoherent source [10, 11]. However, we use the patterns of the diffuser directly as the reference patterns, rather than the speckles diffracted after propagation over some distance as in x-ray ghost imaging. Our approach could therefore help make such imaging systems more compact. We have also shown that the concept readily extends to low-cost hyperspectral imaging, retrieving 3D spatio-spectral images from temporal 1D spectral signals. Furthermore, the SP-DiffuserCam could be explored with coherent light, or combined with other imaging techniques, such as time-resolved imaging [25] using fast-response detectors and phase imaging [9, 24] using phase-engineered diffusers. We therefore anticipate that this work will open opportunities for developing cost-effective integrated single-pixel cameras, especially in exotic wavebands and for imaging under ultra-low illumination.

Acknowledgements.

Funding: Australian Research Council DECRA fellowship (DE200100074, F.W.), Australian Research Council Discovery Project (DP190101058, F.W.). The authors acknowledge financial support from the UTS Faculty of Engineering and IT, and the China Scholarship Council (B.L.: No. 201706020170).

References

- Antipa et al. [2018] N. Antipa, G. Kuo, R. Heckel, B. Mildenhall, E. Bostan, R. Ng, and L. Waller, DiffuserCam: lensless single-exposure 3D imaging, Optica 5, 1 (2018).

- Zhu et al. [2019] X. Zhu, S. K. Sahoo, D. Wang, H. Q. Lam, P. A. Surman, D. Li, and C. Dang, Single-shot multi-view imaging enabled by scattering lens, Optics Express 27, 37164 (2019).

- Sahoo et al. [2017] S. K. Sahoo, D. Tang, and C. Dang, Single-shot multispectral imaging with a monochromatic camera, Optica 4, 1209 (2017).

- Monakhova et al. [2020] K. Monakhova, K. Yanny, N. Aggarwal, and L. Waller, Spectral DiffuserCam: lensless snapshot hyperspectral imaging with a spectral filter array, Optica 7, 1298 (2020).

- Edgar et al. [2019] M. P. Edgar, G. M. Gibson, and M. J. Padgett, Principles and prospects for single-pixel imaging, Nature Photonics 13, 13 (2019).

- Gibson et al. [2020] G. M. Gibson, S. D. Johnson, and M. J. Padgett, Single-pixel imaging 12 years on: a review, Optics Express 28, 28190 (2020).

- Zhang et al. [2015] Z. Zhang, X. Ma, and J. Zhong, Single-pixel imaging by means of Fourier spectrum acquisition, Nature Communications 6, 1 (2015).

- Liu et al. [2017] B.-L. Liu, Z.-H. Yang, X. Liu, and L.-A. Wu, Coloured computational imaging with single-pixel detectors based on a 2D discrete cosine transform, Journal of Modern Optics 64, 259 (2017).

- Liu et al. [2018] Y. Liu, J. Suo, Y. Zhang, and Q. Dai, Single-pixel phase and fluorescence microscope, Optics Express 26, 32451 (2018).

- Yu et al. [2016] H. Yu, R. Lu, S. Han, H. Xie, G. Du, T. Xiao, and D. Zhu, Fourier-transform ghost imaging with hard x rays, Physical Review Letters 117, 113901 (2016).

- Zhang et al. [2018] A.-X. Zhang, Y.-H. He, L.-A. Wu, L.-M. Chen, and B.-B. Wang, Tabletop x-ray ghost imaging with ultra-low radiation, Optica 5, 374 (2018).

- Gibson et al. [2017] G. M. Gibson, B. Sun, M. P. Edgar, D. B. Phillips, N. Hempler, G. T. Maker, G. P. Malcolm, and M. J. Padgett, Real-time imaging of methane gas leaks using a single-pixel camera, Optics Express 25, 2998 (2017).

- Olivieri et al. [2018] L. Olivieri, J. S. Totero Gongora, A. Pasquazi, and M. Peccianti, Time-resolved nonlinear ghost imaging, ACS Photonics 5, 3379 (2018).

- Khakimov et al. [2016] R. I. Khakimov, B. Henson, D. Shin, S. Hodgman, R. Dall, K. Baldwin, and A. Truscott, Ghost imaging with atoms, Nature 540, 100 (2016).

- Shapiro [2008] J. H. Shapiro, Computational ghost imaging, Physical Review A 78, 061802 (2008).

- Liu et al. [2020] B. Liu, F. Wang, C. Chen, F. Dong, and D. McGloin, Self-evolving ghost imaging, arXiv preprint arXiv:2008.00648 (2020).

- Bromberg et al. [2009] Y. Bromberg, O. Katz, and Y. Silberberg, Ghost imaging with a single detector, Physical Review A 79, 053840 (2009).

- Valencia et al. [2005] A. Valencia, G. Scarcelli, M. D’Angelo, and Y. Shih, Two-photon imaging with thermal light, Physical Review Letters 94, 063601 (2005).

- Xu et al. [2018] Z.-H. Xu, W. Chen, J. Penuelas, M. Padgett, and M.-J. Sun, 1000 fps computational ghost imaging using LED-based structured illumination, Optics Express 26, 2427 (2018).

- Liu et al. [2016] B.-L. Liu, Z.-H. Yang, A.-X. Zhang, and L.-A. Wu, A novel correlation imaging method using a periodic light source array, Proc. SPIE 10154, 1015413 (2016).

- Ferri et al. [2010] F. Ferri, D. Magatti, L. Lugiato, and A. Gatti, Differential ghost imaging, Physical Review Letters 104, 253603 (2010).

- Dabov et al. [2007] K. Dabov, A. Foi, V. Katkovnik, and K. Egiazarian, Image denoising by sparse 3-D transform-domain collaborative filtering, IEEE Transactions on Image Processing 16, 2080 (2007).

- Sun et al. [2019] Z. Sun, F. Tuitje, and C. Spielmann, Toward high contrast and high-resolution microscopic ghost imaging, Optics Express 27, 33652 (2019).

- Dou et al. [2020] L.-Y. Dou, D.-Z. Cao, L. Gao, and X.-B. Song, Dark-field ghost imaging, Optics Express 28, 37167 (2020).

- Sun et al. [2016] M.-J. Sun, M. P. Edgar, G. M. Gibson, B. Sun, N. Radwell, R. Lamb, and M. J. Padgett, Single-pixel three-dimensional imaging with time-based depth resolution, Nature Communications 7, 1 (2016).