Single-channel electroencephalography decomposition by detector-atom network and its pre-trained model

Abstract

Signal decomposition techniques utilizing multi-channel spatial features are critical for analyzing, denoising, and classifying electroencephalography (EEG) signals. To facilitate the decomposition of signals recorded with limited channels, this paper presents a novel single-channel decomposition approach that does not rely on multi-channel features. Our model posits that an EEG signal comprises short, shift-invariant waves, referred to as atoms. We design a decomposer as an artificial neural network aimed at estimating these atoms and detecting their time shifts and amplitude modulations within the input signal. The efficacy of our method was validated across various scenarios in brain–computer interfaces and neuroscience, demonstrating enhanced performance. Additionally, cross-dataset validation indicates the feasibility of a pre-trained model, enabling a plug-and-play signal decomposition module.

Keywords: Artificial neural network, dictionary learning, electroencephalography, signal decomposition

1 Introduction

Signal decomposition is a pivotal technique in signal and data processing [Cichocki & Amari, 2002]. This technique finds widespread applications in areas like audio and speech [Virtanen, 2007], and image [Minaee et al., 2022] processing, as well as in the analysis of electroencephalogram (EEG) signals. There exists a wealth of techniques specifically designed to dissect EEG signals into constituent components. These decomposition methods serve various purposes, including noise reduction [Vorobyov & Cichocki, 2002], source separation/localization [Asadzadeh et al., 2020], and facilitating brain–computer interfaces (BCI) [Lotte et al., 2018, Gu et al., 2021].

EEG recordings are typically obtained using multiple electrodes in a multi-channel configuration. Over the years, advanced signal decomposition techniques that leverage the spatial features of signals, such as beamforming [Van Veen & Buckley, 1988] and spatial filtering, have been thoroughly developed. For example, independent component analysis (ICA) [Hyvärinen & Oja, 2000] predicates the statistical independence of decomposed signals and estimates their spatial mixture. While multi-channel measurement devices ware widely used in research and medical purposes, there is a rising trend of consumer devices with fewer channels. However, for these devices equipped with fewer channels, wherein the electrodes may be considerably distant from one another, it is difficult to effectively leverage the spatial features for signal decomposition.

For measurement devices that capture signals with spatially-sparse electrodes, single-channel signal decomposition is often the technique of choice [Maddirala & Shaik, 2018]. Traditional frequency-driven methods like Fourier and (continuous) wavelet transforms [Mallat, 1998] are apt for this scenario. In these decomposition approaches, decomposed signals are anchored to specific theoretical templates, such as sinusoidal signals and short waveforms, termed “mother wavelets”. This frequency-driven decomposition operates on the implicit assumption that distinct EEG components segregate into separate frequency bands. However, when it comes to EEG decomposition, components might not be neatly categorized into specific frequency bands; they can span and overlap across multiple frequency bands [de Munck et al., 2009, Babadi & Brown, 2014].

An alternative to single-channel signal decomposition is the adoption of data-driven or heuristic techniques—known for their flexibility in decomposing signals [Bajaj & Pachori, 2012]. A notable example is empirical mode decomposition (EMD) [Huang et al., 1998], which has found applications in EEG analsysis [Sweeney-Reed et al., 2018]. Many prevalent data-driven methods aim for adaptive application, meaning they rely solely on the signal-to-be-decomposed for the design of the decomposition. However, a potential drawback is that when the input signal is relatively short, its decomposition can be significantly influenced by noise. Furthermore, the decomposition lacks consistency across multiple observed signals, making it challenging to determine whether a decomposed signal is desired or not.

As we introduced earlier, EEG decomposition has traditionally employed prior knowledge encompassing spatial features, frequency assumptions, and statistical hypotheses to facilitate robust decomposition. Here, we propose a new data-driven approach that capitalizes on training data, not relying on the prior knowledge. In most practical situations where EEG decomposition is needed, such data that we can use for training a decomposer is available. For instance, in the realm of neuroscience, EEG data from numerous trials is often aggregated and subjected to a collective analysis. In a similar vein, when it comes to BCI applications [Gu et al., 2021], training data may exist that aid in the supervised training of classifiers. Our study is underpinned by the aspiration to harness these EEG data, convinced that such an approach holds promise for a more robust and consistent decomposition framework.

Using the training data, our proposed decomposition identifies short-length waves that collectively reconstruct the original EEG signals. This approach is based on the model proposed by Brockmeier and Principe [Brockmeier & Principe, 2016], which suggests the existence of recurrent wave patterns within EEG signals. Theoretically, key EEG signatures, such as oscillatory patterns—including alpha, mu, beta, and gamma rhythms [Jas et al., 2017]—as well as event-related potentials (ERPs) [Barthélemy et al., 2013], can be modeled by these recurrent waves. The proposed decomposer estimates recurrent waves, akin to shift-invariant atoms in dictionary learning frameworks [Kreutz-Delgado et al., 2003, Grosse et al., 2007, Simon & Elad, 2019, Garcia-Cardona & Wohlberg, 2018], using either supervised or unsupervised data-driven methods. Analogous to continuous wavelet transform techniques [Unser & Aldroubi, 1996], our method reconstructs signals by overlaying time-shifted and amplitude-modulated atoms. The time shifts and amplitude modulations of the atoms are identified by neural network modules, which we refer to as detectors. This reconstruction process is formulated as a convolution of the detector outputs with the atoms, implemented via convolutional layers [Tao et al., 2021, Stanković & Mandic, 2023, Davies et al., 2024]. By employing an artificial neural network framework, we enable optimization of the detectors and atoms in the decomposer using various loss functions, including those derived from supervised learning principles. This approach supports the flexible design of signal models tailored to distinct EEG signatures through the development of specialized neural network architectures.

In this paper, we introduce several loss functions and network architectures designed to tackle various challenges commonly encountered in neuroscience and BCI research. A series of experiments have been conducted to verify the efficacy of these proposed losses and architectures. These experiments demonstrated the practical utility and adaptability of our proposed decomposition technique in real-world applications. Furthermore, we explored the feasibility of pre-training to develop a decomposer that requires no calibration. This approach involves pre-training the network (detectors and atoms) with large datasets. Our BCI experiment, which included a cross-dataset experimental scenario for validating dataset shifts [Quinonero-Candela et al., 2009, Dockès et al., 2021, Mellot et al., 2023], suggests the feasibility of applying a pre-trained decomposer to any EEG signal. We have made this pre-trained decomposer publicly available; it require only a single-channel input, thereby simplifying measurement and analysis procedures. This advancement enables researchers, engineers, medical professionals, and consumers to decompose EEG signals in a plug-and-play manner.

In this paper, our contributions encompass:

-

1.

Introducing a robust single-channel EEG decomposition using a convolution model with a limited number of atoms.

-

2.

Providing empirical evidence through our experiments that demonstrates the feasibility of decomposing and reconstructing EEG signals using our model.

-

3.

Releasing a pre-trained decomposer to the public, enabling users to decompose EEG signals in a plug-and-play manner.

2 Detector-atom network

In this paper, we introduce a novel signal decomposition method that leverages training data and artificial neural networks. This section outlines the foundation of our decomposer network. We begin by detailing the key components and structure of the network. Subsequently, we discuss both unsupervised and supervised loss functions, which are employed to optimize the network. Additionally, we introduce a technique called “atom reassignment” designed to prevent the optimization process from becoming stagnant.

2.1 Signal model and network architecture

Our decomposition proposition hinges on the idea that an EEG signal can be reconstructed by a linear combination of short signals, referred to as atoms. This concept echoes the tenets of the Fourier transform, where atoms are complex sinusoidal signals, and the wavelet transform, where atoms are mother wavelets. What sets our method apart is the unsupervised/supervised design of these atoms. In our approach, we employ a network comprising of detector and atom parts. The detector is responsible for generating coefficients for linear combinations, i.e., it determines how to convolve an atom. On the other hand, the atom network holds a single atom. The whole network produces the decomposed signal which is generated by a convolution of the atom.

To elucidate our decomposition framework, let us delve into a simple illustration. Consider a simple reconstruction model that decomposes a single-channel signal into multiple signals and then reconstructs the original signal by summing the decomposed signals. We hypothesize that EEG signals can be reconstructed using atoms, implying that an EEG signal is decomposed into signals. This model is formulated as [Lewicki et al., 1999, Grosse et al., 2007]

| (1) |

where is the observed signal at time index , denotes the th atom, is the length of the th atom, and is the magnitude of the th atom at time index . Given that represents the magnitudes, must uphold a non-negative constraint(). The objective of our proposed networks is to estimate the atoms and their respective magnitudes .

The integrated detector-atom network aims to determine these components using training data. The fundamental architecture of this network is visually represented in Figure 1.

The detector’s primary responsibility is deducing . Any network that can generate a non-negative signal, congruent in length to the input signal , can potentially function as a detector. For simple notation, we opt for a solitary convolutional layer, and show an implementation example. The output of the th detector is formulated as

| (2) |

where is the observed signal and the input for the detector, the set encompasses coefficients for the convolutional layer, and is the filter size of the convolutional layer. To convey this in vector form, with a signal length of , it can be represented as

| (3) |

where are the vector forms of the observed signal and the th detector output, defined respectively as and , respectively, and is an operator, as described in (2). Both and maintain congruent signal lengths. Owing to the non-negative activation function, the detector’s output results in non-negative values. The detector’s output is conceived to, within the input signal , identify the time shift and amplitude modulation of the atoms, which is delineated by the subsequent atom network .

The subsequent convolution with the atoms produces a decomposed signal:

| (4) |

In vector form, the signal reconstruction by the atom network outputs can be described as

| (5) |

where is the vector of the reconstructed signal, formulated as , and is an operator, defined in accordance with (4).

If we interpret the atoms as elements of a dictionary and the detector outputs as (sparse) representations using those elements, we found that this decomposition procedure is fundamentally the same as convolutional dictionary learning (CDL) [Garcia-Cardona & Wohlberg, 2018]. Typically, CDL optimizes the dictionary and representations to minimize reconstruction loss. In contrast, our proposed method, which implements these dictionary and representations as neural network modules, can use flexible optimization strategies tailored to EEG characteristics and the specific goals of the decomposition, as we will introduce in the next section.

2.2 Loss for network optimization

The parameters subject to optimization encompass the coefficient set for a detector and an atom in each detector-atom network: , where and for . The objective of this optimization is to minimize the discrepancy between the observed signal and the reconstructed signal . This discrepancy is quantified as

| (6) |

The loss function is minimized throughout the training phase. Consequently, the detector-atom network is trained to decompose the input observed signal and accurately reconstruct the input from the decomposed ones, each of which is represented by an atom.

Given labeled training samples, meaning pairs of signals and their corresponding class labels as , it is possible to define supervised loss functions tailored to the class. Suppose we have signals assigned to distinct classes, represented as . Our approach is to design individual decomposers. Each decomposer is specialized; its primary function is to decompose and reconstruct signals that belong to a specific class. This concept is formulated by a supervised (SV) loss function for the th decomposer;

| (7) |

for , where represents the sum of signals decomposed by the th decomposer. If the signal class matches the class for which the decomposer is designed, the loss is the difference between the observed signal and the decomposed one. If the signal class does not match, the loss is simply the magnitude of the reconstructed signal. This ensures that the decomposer specific to other classes does not attempt to reconstruct the signal that does not belong to its designated class.

Promoting sparsity in the dictionary representation helps prevent overfitting and enhances robustness [Chen et al., 1998]. For our proposed decomposer, we introduce a sparsity loss for the outputs of the detectors, defined by

| (8) |

This loss is combined with a fidelity loss, or , to form a total loss function such as , where is a regularization coefficient.

When promoting sparsity in detector outputs, pairs of detector outputs and atoms often tend to converge toward zero at the beginning of training, before the reconstruction performance is still low. Once this zero convergence occurs, these pairs do not produce any non-zero decomposed signals in later epochs and thus do not contribute to improving the reconstruction loss—they effectively become deactivated. To explore the potential contribution of these deactivated pairs to reconstruction performance in the later optimization phase, when reconstruction performance is relatively high, we propose a technique called atom reassignment. Atom reassignment revives deactivated pairs by transferring the detector and a part of the atom from an active pair. See Appendix Section A for the details.

3 Applications

The network architecture for our decomposer can be easily modified, improved, and applied, thanks to recent advances in artificial neural network. Here, we propose several networks specifically designed to address common problems encountered in neuroscience and BCI research.

3.1 Noise reduction using noisy-inducing event periods

This section expands the detector-atom network-based decomposer to a noise reduction techniques tailored for scenarios where we know the timings of noise-inducing events in training samples, but not their specific nature. For instance, consider a setup where both EEG and electrooculogram (EOG) signals are available. The EOG signal can pinpoint the timings of ocular activities like blinks and saccades, which often induce noise into the EEG signal. Traditional methods for noise reduction such as principal component analysis (PCA) or ICA, work under the presumption that EEG noise profiles are congruent to the EOG signal [Nolan et al., 2010]. However, our proposed method deviates from this convention, offering a more versatile approach. Essentially, our method is applicable with any noise reference, as long as the event timings inducing EEG noise can be ascertained. This flexibility means it can accommodate varied noise references, including eye trackers or motion capture systems.

The network architecture for noise reduction is based on a signal observation model where the EEG signal, , is a composite of brain-related signals, , and noise-event-related signals, . This relationship is formulated as:

| (9) |

where is the number of brain-related signals and is the number of noise-related signals. The objective of this noise reduction process is the accurate estimation of the signals, and .

The network encompasses two integral detector-atom networks: one tailored for the extraction of brain-related signals, and another focused on isolating signals associated with noise events. In this setup, and constitute the detectors and atoms for the first network, the signal-estimator, which is designed to extract brain-related signals. Conversely, and constitute the detectors and atoms of the second network, the noise-estimator, which is designed to extract the noise-event-related signals.

The noise-event-related signal is estimated by summing the outputs of processing the input signal, , through each pair of detector-atom network in the noise-estimator, expressed as:

| (10) |

where represents the integrated operation of the detector-atom pairs in the noise-estimator. Following the computation of , this estimated noise component is deduced from the original input signal , resulting in a version devoid of noise components. Subsequently, this noise-reduced signal undergoes processing through each pair of detector-atom within the first network, culminating in the estimation of the brain-related signal , articulated as:

| (11) |

where represents the integrated operation of the detector-atom pairs in the signal-estimator.

Optimizing the parameters for the detector-atom pairs involves minimizing specific loss functions. For the signal-estimator, , the loss function, , measures the deviation between the input signal and the signal combined the outputs of the signal and noise-estimators. This is computed as

| (12) |

where and are the th time instance of and . Conversely, the loss function for the noise-estimator, , denoted as , accounts for two factors: the deviation between the input signal and the output of the noise-estimator during noisy events, and the energy of the estimated signal when no noisy event are present. With which represents the set of time indices at which noise events occur, the loss is articulated as:

| (13) |

This formulation ensures a precise estimation of noise-event-related signals during periods of noise events while maintaining the integrity of brain-related signal estimations outside of these periods.

Nevertheless, this approach does not consider the output of the signal-estimator, , for designing the noise-estimator. Thus, following the convergence of or after a predetermined number of iterations, a refined loss function , is proposed. This modification not only accounts for the noise-event-related signals but also integrates the estimated brain-related signals, formulated as:

| (14) |

3.2 SSVEP detection

The subsequent application focuses on SSVEP-based BCIs. These BCIs are engineered to estimate, from an EEG signal, the target stimulus a user is fixating on. Typically, this estimator is designed utilizing supervised methods, given the availability of training samples. Each sample for training comprises an EEG signal paired with a class label that corresponds to the target SSVEP stimulus.

The network devised for SSVEP detection is anchored in a generative model that characterizes SSVEP responses. Within the paradigm of an SSVEP-based BCI, visual stimuli are periodically presented at a specific frequency. The BCI detects the corresponding EEG activity that matches the stimulus frequency. These detected responses are indicative of the user’s visual focus and are represented by a series of visual-evoked potentials corresponding to each stimulus flash.

The mathematical model of the response against a stimulus flashing at a frequency [Hz] ( [s]) and a phase [s] is defined as

| (15) |

where denotes the response evoked by a single flash of the stimulus, represents the amplitude of the response to the th flash, and includes various forms of noise such as background EEG activity, artifacts, and responses to non-target stimuli. For a practical scenario, we implement in a discrete-form with .

The network for SSVEP detection is structured with detectors, denoted as , and a single atom network . Here, corresponds the number of distinct SSVEP stimuli in the BCI can detect. The detectors’ role is to ascertain the temporal shifts and amplitudes of the visual-evoked potentials (VEPs). Specifically, the detectors determine the time-varying VEP amplitude in (15). These detectors are linked to a single atom network , which is tantamount to the VEPs elicited by a single stimulus flash . The decomposer reproduces the observed signal by

| (16) |

The architecture is designed such that each detector pinpoints the temporal offset and amplitude of VEPs induced by the th class of SSVEP stimulus, while the atom network ’s atom replicates the fundamental VEP response to the flashes across all SSVEP stimuli.

The supervised loss defined in (7) is applied for optimizing the detector and atom networks. The loss function is designed to measure the discrepancy between the observed EEG signal and the reconstructed signal estimated by the associated with the target SSVEP class. Given a training sample represented as , where is a class label corresponding to one of the possible SSVEP stimuli. The loss function is formulated as the sum of two distinct loss components:

| (17) |

where captures the reconstruction error, quantifying the difference between the input signal and the aggregate of the signals reconstructed by all detectors:

| (18) |

and is a supervised loss term that penalizes the contributions of non-target class decompositions, ensuring that the reconstruction primarily utilizes the components corresponding to the target class:

| (19) |

The goal of this loss function is to ensure that the reconstructed signal is derived predominantly from the detector-atom network corresponding to the target class, thereby enhancing the specificity and accuracy of the SSVEP signal reconstruction.

Importantly, the optimization of the networks does not necessitate explicit knowledge of the stimulus frequencies and phases, which are denoted as (or ) and in (15). Instead, these stimulus properties are intrinsically discerned by the detectors via the class label associated with each SSVEP stimulus.

3.3 Time-locked component extraction

This application focuses on the decomposition of event-locked signals, which are time-aligned with specific event occurrences (epoch). Event (Time)-locked signals are characterized by ERPs, forming by positive or negative fluctuations at certain latencies post-event. These ERPs are considered to be indicative of underlying cognitive processes, exhibiting amplitude variations that correlate with these processes. The goal here is to effectively decompose these components by introducing a constraint in the network architecture that prevents the time-shifting of atoms.

In contrast to the SSVEP decomposer delineated in Section 3.2, which utilizes a shared atom for all detectors, the ERP decomposer is designed differently to capture diverse waveforms associated with ERPs, as follows. First, atoms are distinct across each detector, enabling the representation of the diverse ERP waveforms. Second, the temporal shift, dictated by the detector, remains constant to extract the event-locked components. Last, the amplitude of a detector’s output quantifies the influence of the event-locked component that is triggered post-event. The contrast bears resemblance to that between the principles of dictionary learning [Kreutz-Delgado et al., 2003], which underpin the ERP model, and convolutional dictionary learning [Garcia-Cardona & Wohlberg, 2018], which is analogous to the foundational detector-atom network structure.

The network architecture for the ERP decomposer is based on a generative model [Kotani et al., 2024]. In this model, we assume that ERPs are overlaid in an observed signal. The waveform of the th evoked potential is represented by . The epoched, observed signal is then modeled as

| (20) |

where represents the amplitude of the th evoked potential and is noise. This model assumes that the waveform does not shift over time within the epoch, meaning that the potential fluctuation is time-locked. While the atoms, , do not change across trial (epoch), the corresponding amplitude, , vary for each epoch. In our decomposer network, the detector functions as an estimator of these trial-by-trial amplitude variations.

The architecture of the network is composed of detectors, each corresponding to a distinct ERP. Given that the exact number of ERPs is typically not known a priori, it is often determined through empirical estimation. The network reconstructs the observed input signal by

| (21) |

where is the th decomposed signal given by , is the th detector to estimates the amplitude of the th components, , in the observed signal, and the th atom serves to model the waveform associated with an event-locked ERP, . This model assume that the observed signals are linear combinations of vectors, . The unknown parameters of this model are the pairs of the detector and atom, . To optimize these parameters, we can use the fidelity loss as defined in (6).

4 Experimental validation

Signal decomposition techniques hold significant potential for advancing research in neuroscience and the development of BCIs. This section showcases toy examples of EEG signal decomposition utilizing the method proposed in this study. These examples serve to demonstrate the practical application and effectiveness of our decomposition approach in scenarios for real-world EEG data analysis. To validate that the proposed method can decompose an EEG signal into meaningful components, we employed the simples possible methods for other procedures, including preprocessing, feature extraction, and classification.

4.1 Reconstruction

Our initial experiment focused on assessing the accuracy of signal reconstruction post-decomposition. To evaluate the accuracy, we divided a dataset into two parts: one for training the decomposer and the other for testing. The decomposer was trained using the training set, after which it decomposed signals from the testing set. We then evaluated the reconstruction accuracy by comparing the original signals with those reconstructed from their decomposed counterparts. Furthermore, to explore the potential for transfer learning, we employed datasets from different subjects, tasks, and channels as testing samples.

4.1.1 Dataset

We used an open dataset [Dzianok et al., 2022] containing EEG data from 42 subjects performing four different tasks. Due to the absence of trigger data in the recordings, the whole trials from the two subjects were excluded. These subjects engaged in four distinct tasks: simple reaction time (SRT), Oddball, multi-source interference task (MSIT), and Rest. While the EEG signals were originally captured using a 123-channel setup at a sampling frequency of 1000 Hz, our experiment focused primarily on the data from channel Cz for training the network. Additionally, we utilized data from a select few other channels for testing purposes. On average, a single session, where a subject performed a certain task, contained about 431 trials (samples).

4.1.2 Architecture

The decomposer was based on the fundamental network architecture outlined in Section 2.1. We experimented with decomposers configured to generate various numbers of decomposed signals, specifically 2, 4, 8, 16, and 32 outputs.

A detector was structured with three layers, each comprising a convolutional layer followed by a ReLU activation function. The convolutional layers were designed with a kernel size of 100, corresponding to a filter size of approximately 0.1 s, and were configured to have a single channel. This kernel size was also adopted for the convolutional layer in the atom network.

For optimizing the decomposer, we employed an unsupervised loss function as defined in (6). The optimization process utilized an Adam optimizer with the following parameters: a learning rate of 0.001, of 0.5, of 0.999, and a weight decay of . The optimization was conducted 1,000 iterations (epochs), with batches of 100 samples each.

4.1.3 Result

Figure 2(a) shows the reconstruction accuracy for each trial, quantified using the root mean squared error (RMSE), which reflects the average error at each time instance. For each session, the network was trained on 80% of the samples and subsequently tested on the remaining 20%. For the number of decomposed signals, the results indicated that an increase in the number of decomposed components led to an improvement in RMSE. Notably, the decomposer configured for 32 signal decompositions achieved an RMSE of approximately 0.5 V.

Figure 3 shows examples of the reconstructed signals with RMSEs of 0.16 V and 0.19 V. Considering the original signal (approximately – V), these errors are relatively small, indicating a high level of reconstruction accuracy.

In the averaged signals over trials, as shown in Figure 4, the accuracy was remarkably high, as similarly observed in Figure 3. In both the time and frequency domains, the lines in the plot would seem to completely overlapped. The time-domain representation of the decomposed signals exhibited only smooth fluctuations, as these signals were not time-locked, and the high-frequency components were likely diminished through averaging. However, when examining the power spectrum, computed in the induced response analysis, it became evident that the decomposed signals exhibited distinct frequency properties. Notably, the frequency bands of some decomposed signals showed overlap. This suggests that, unlike traditional filter banks, the proposed decomposer does not decompose the signal based on frequency.

Figure 2(b) shows the reconstruction accuracy achieved by using the first decomposed signals. In this analysis, the decomposed signals were sorted according to their RMSEs—the error between the original signal and each decomposed signal. From the figure, it is evident that the decomposers configured with ultimately attained a lower RMSE level. This observation suggests that each decomposed signal contributes to the reconstruction process, and the collective effect of these signals on the reconstruction performance varies based on the number of components () utilized in the decomposition.

Figure 2(c) shows the results of applying transfer learning. This figure compares the performance under four conditions:

-

•

No transfer (Train and Test): This condition is equivalent setup to that for Figure 2(a). Both training and testing samples were from the same session. The Train condition specifically denotes the use of training samples for testing, offering a baseline for the best possible performance.

-

•

Subject transfer: The testing samples were from a different subject than the one used for decomposer training, but both the training and testing subjects performed the same task. The networks used were identical to those in the no transfer conditions, where 80% of the samples from a training subject were used to train the decomposer. This procedure was repeated for all subject combinations for each task, and the RMSEs were averaged.

-

•

Task transfer: The training and testing samples were from the same subject, but the subject performed different tasks for the testing and training samples. As with the subject transfer condition, the networks used were identical to those in the no transfer condition. This procedure was repeated for all task combinations for each subject, and the RMSEs were averaged.

As expected, the Train condition yielded the lowest RMSE, followed closely by the Test condition. Notably, even in scenarios involving subject and task transfers, the RMSEs remained very close to those obtained in the no-transfer condition. This observation suggests that the trained decomposer was able to generalize effectively across different subjects and tasks, decmonstrating its robustness and versatility in real-world applications. The generalization capabilities of the decomposer will be further explored in Section 4.6, where we discuss the feasibility benefits of utilzing a pre-trained model of the decomposer.

Figure 2(d) provides the reconstruction accuracy when applied across different EEG channels. In this analysis, while maintaining the same 20% of samples designated for testing (as in previous experiments), the channel that testing signals were recorded was not Cz. The results indicate that for a decomposer configured with 32 decomposed signals (), the RMSE was approximately 1 V. This performance, while slightly inferior compared to the no-transfer condition (where training and testing were done on the same channel), still demonstrates a reasonably high level of accuracy for reconstruction.

4.2 ERP classification

In this section, we shifted our focus from assessing the reconstruction accuracy to evaluating the decomposed signals, themselves. Specifically, we examined their contribution to the classification of ERPs elicited by an oddball task. The objective of this classification was to accurately determine the category of stimuli presented during the task, based on the EEG signals.

4.2.1 Dataset

The dataset was the same as the one described in Section 4.1 [Dzianok et al., 2022]. We exclusively focused on the samples acquired while subjects engaged in the Oddball task. These samples were labeled as three classes: standard, target, and deviant. The number of samples for each of the three stimulus classes was equalized for each subject. This equalization was achieved through a process of random undersampling. On average, this process yielded a dataset comprising approximately 1,392 samples per subject.

4.2.2 Architecture

We employed the same decomposers as those used in the experiment detailed in Section 4.1.

4.2.3 Classification procedure

As previously outlined in Section 4.1.3, for the ERP classification experiment, the decomposer was trained using 80% of the samples from the session while the remaining 20% were reserved for testing and evaluating classification accuracy. All the signals decomposed by the decomposer were inputted into a classifier. We employed a linear support vector machine (SVM) [Vapnik, 1998] with a regularization parameter set to . The primary task of the SVM classifier was to categorize each input EEG signal into one of the three labels. The classifier itself was trained using the same training samples that were utilized for training the decomposer. We compared the classification accuracy achieved by using the decomposed signals against that obtained by using the original, undecomposed EEG signals.

4.2.4 Result

Figure 5 presents the classification accuracy results. The classification that underwent decomposition was significantly higher than those using the original, undecomposed signals (a paired -test showing and ).

Figure 6 shows the grand-average of the decomposed signals that appear to contribute accurate classification. The contribution of each signal to classification was evaluated by measuring the classification accuracy using only individual decomposed signal, following the same procedure as in Section 4.2.3. Although the decomposer was different for each subject, the decomposed signals with high classification contributions seem to preserve distinctions among the classes. In contrast, the decomposed signals with low contribution did not appear to have class-specific features. This suggests that the decomposer effectively separated task-related and non-task-related (possibly noise-related) components, improving the signal-to-noise ratio for certain decomposed signals and contributing to enhanced classification accuracy.

4.3 Noise reduction

The effectiveness of the proposed method was further assessed in terms of its performance in noise reduction. Instead of direct evaluation of noise reduction performance, we evaluated its impact indirectly by measuring classification accuracy in a motor-imagery (MI)-based BCI dataset.

4.3.1 Dataset

For our noise reduction performance assessment, we utilized an open dataset, specifically designed for MI-based BCIs, as detailed in [Ofner et al., 2017]. This dataset comprises samples from 15 subjects, each engaging in seven distinct MI tasks. The tasks included in the datasets are (elbow flexion), (elbow extension), (supination), (pronation), (hand close), (hand open), and (rest). The dataset also encompasses three electrooculography (EOG) channels, which record the electrical activity of the muscles around the eyes. The electrodes for three channels were placed around the left, right, and top areas of the eyes. EOG data is useful for identifying artifacts related to eye movements, which are common sources of noise in EEG signals.

The original EEG were recorded using 61 electrodes at a sampling rate of 512 Hz. However, given that our study concentrates on single-channel decomposition, we exclusively utilized data from channel F1. Channel F1 is situated in the frontal area of the head, a region frequently susceptible to noise events, such as eye blinks and saccades. For each subject, the dataset comprised 120 samples.

4.3.2 Architecture

The decomposer was configured based on the network architecture detailed in Section 3.1. Both the signal-estimator and noise-estimator within the network designed to provide 16 decomposed signals each. The detectors in both estimators consisted of four layers, with each layer comprising a convolutional layer (having a kernel size of 33, which equates to approximately 0.13 s, and single channel), followed by a ReLU activation function. The convolutional layer in the atom network was set with a kernel size of 512 corresponding to roughly 2 s.

The optimization of the networks for both the signal- and noise-estimators was carried out using supervised loss functions, as specified in (12) and (13). For this process, an Adam optimizer (a learning rate of 0.0001, of 0,5, of 0.999, and a weight decay of ) was employed. The optimization was spanned over 2,000 iterations (epochs) with a batch size of 100. In the case of the noise-estimator, we used the loss function in (13) for the initial 1,000 iterations, followed by (14) for the subsequent 1,000 iterations.

4.3.3 Classification procedures

For the classification task, the available samples were categorized into three separate sets, serving distinct purposes in the experimental procedure. The first set is samples for decomposer training, denoted by . These samples were selected from the classes , , , , and . They were specifically used for training the decomposer. The second and third sets are samples for classifier training, , and for testing, . These sets comprised samples from classes and . They were divided into and using a leave-one-out cross validation (CV) method.

We trained the decomposer using the samples from set . The noise-estimators were optimized based on labels indicating the presence or absence of noise events at each time index. These labels were derived from the EOG signals. From the three EOG channels available in the dataset, we derived three bipolar EOG signals. Time indexes where the amplitude of these bipolar signals exceeded the threshold of V were marked as instances of noise-event-present.

The decomposer, trained with these noise-event labels, was then able to differentiate and produce two distinct outputs for each sample: one representing the noise-related signal and the other presenting the brain-related signal. The next step of the process involved the classification of these brain-related signals, i.e., the outputs of the signal-estimator. For this purpose, we employed an SVM classifier equipped with a radial basis function kernel. The SVM classifier was configured with a kernel coefficient set to “scale” and a regularization parameter set to 1. The classifier’s task was to discriminate an output of the signal-estimator into either class or .

In addition to the proposed method (shown as Proposed), performance was also evaluated under two alternative conditions for comparison: Raw, Reject, EOG-Reg, and SSA-ICA. In the Raw condition, the signal underwent no decomposition or noise reduction. The observed signals in were directly input into the classifier without any processing. In the Reject condition, a straightforward noise rejection approach was implemented. Any samples with time indexes labelled as having noise events were completely excluded. In the EOG-Reg condition, we applied a method for reducing EOG artifacts through regression [Croft & Barry, 2000], as implemented in MNE-Python [Gramfort, 2013]. In the SSA-ICA condition, we used a frequency-based single-channel decomposition method that combines singular spectrum analysis (SSA) and ICA [Maddirala & Shaik, 2018]. We first decomposed the single-channel signal using SSA into eight signals and then applied ICA to the eight decomposed signals. Independent components with a correlation coefficient greater than or less than with any EOG bipolar signals were removed, and the signal was reconstructed using the inverse transforms of ICA and SSA. It should be noted that this comparison is not entirely fair, as the proposed method requires training data, whereas both the EOG-Reg and SSA-ICA methods only require the observed signal for decomposition.

4.3.4 Result

Figure 7 presents several signal examples. The EOG signals shown in the top panel were used for labeling each time index as noise-event-absent or noise-event-present. The grey-shaded areas represent time indexes marked as noise-event-present, where large amplitude fluctuations in the EOG signals suggest the present of noisy events like eye blinks or eye movements. The middle panel compares the original EEG signals (channel F1) with their noise-reduced counterparts. In addition to EOG-Reg, SSA-ICA, and the proposed method, the noise reduction was applied by ICA which utilized signals from all 64 EEG channels. For the noise-reduced signals via ICA, independent components with a correlation coefficient greater than or less than with any EOG bipolar signals were removed from the reconstruction.

Figures 7(a) and 7(b) indicate that both EOG-Reg, ICA, and the proposed method were all effective in reducing large fluctuations presumed to be caused by noise events. In contrast, SSA-ICA altered the signal values even during periods without noise events, suggesting that frequency-based decomposition may not be suitable for reducing EOG artifacts. As shown in Figure 7(c) for the proposed method and Figure 7(d) for ICA and EOG-Reg, there were instances where both methods did not completely eliminate these fluctuations. This observation suggests that while these noise reduction techniques are generally effective, they are not foolproof in all scenarios.

Figure 8 displays the classification accuracy rates for each condition in the MI task.

The proposed method demonstrated significantly higher accuracy rates compared to the Raw, EOG-Reg, and SSA-ICA conditions, with -values of 0.007, 0.005, and 0.022, respectively. Both the EOG-Reg and SSA-ICA conditions appear to reduce not only noise but also other signal components, as shown in Figure 7(d), which may lead to the decline in accuracy. Furthermore, the accuracy rate by the proposed method were on par with those of the Reject condition, evidenced by a non-significant difference with a -value of 0.298. This suggests that the effectiveness of the proposed method in reducing noise-related components and its subsequent contribution to a robust classification.

4.4 SSVEP classification

In this section, we evaluated the performance of the proposed decomposer, specifically designed with an architecture where a single atom is shared across all detectors. This unique design aligns with the signal model associated with SSVEP-BCI.

4.4.1 Dataset

Two open datasets [Wang et al., 2017, Liu et al., 2020] designed for SSVEP-BCI research were used in this experiment. These datasets collectively included EEG recordings from 35 and 70 subjects, respectively. Subjects were displayed 40 stimuli, each flickering at different frequencies and arranged in a matrix on a monitor. The task is to identify the specific stimulus a subject was gazing at, based on solely on the EEG signal. The original signals in both datasets were recorded using 64 channels at a sampling rate of 250 Hz. However, for the purpose of this SSVEP-BCI task, only the data from channel Oz were used. Signals were epoched in the time range of – s post stimulus onset for [Wang et al., 2017] and – s for [Liu et al., 2020]. Additionally, the epoch signals underwent bandpass filtering using a 4th order Butterworth filter with a frequency range of 5 to 100 Hz. For each subject, each class had 6 samples, leading to a total of 240 samples for [Wang et al., 2017]. For [Liu et al., 2020], there were 4 samples per class, resulting in 160 samples in total.

4.4.2 Architecture

The decomposer was configured based on the network architecture described in Section 3.2. The decomposer was designed to output a number of decomposed signals corresponding to the number of stimuli, which in this case was 40. Each detector had two layers. Each layer consisted of a convolutional layer with a kernel size of 501 (equivalent to approximately 2 s) and two channels. The final layer in the detector sequence employed a ReLU activation function. The detectors shared a single atom. The length of this atom was set to 125, corresponding to 0.5 s. The supervised loss, as defined in (17), was used for the optimization of the decomposer. For the optimization, an Adam optimizer was employed with the following settings: a learning rate of 0.0001, of 0.5, of 0.999, and a weight decay of . The optimization process spanned over 200 iterations (epochs), and was conducted with a batch size of 40.

4.4.3 Classification Procedure

For the SSVEP classification, the procedure involved splitting the samples into two equal parts: one set for training and the other for testing. The training samples were first utilized for optimizing the decomposer. Following the optimization of the decomposer, the same training samples were then used to train a classifier. For classification, the powers of the decomposed signals, derived from the decomposer, were used as input features. The classifier was implemented using an SVM with a kernel coefficient was set to “scale” and a regularization parameter of 1. In addition to using the decomposed signals, the experiment also included a comparison where the power spectrum density (PSD) of the original EEG signal was used as input to the classifier.

4.4.4 Result

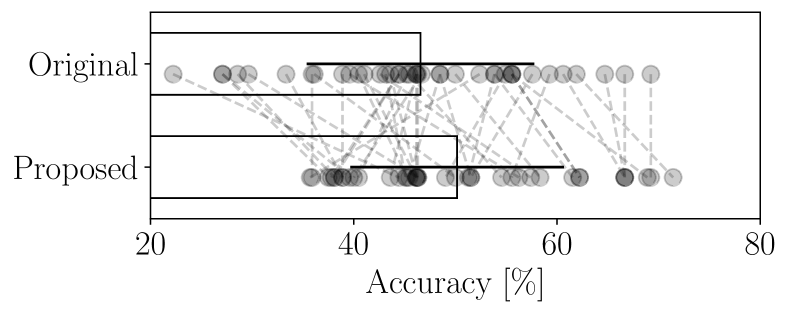

Figure 9 shows the classification accuracy rates for the SSVEP-BCI task, encompassing results from 105 subjects. The results revealed that the average rate was 35.3% for the method using PSD features and 40.23% for the proposed method. A paired -test was conducted to validate this improvement statistically, yielding and ,which clearly demonstrates the significance of the accuracy enhancement brought by the proposed method. Notably, the improvement was particularly pronounced among subjects who already had relatively high accuracy with the PSD method. This observation suggests that a robust amplitude of SSVEP signals might be a critical factor for the effective optimization of the decomposer.

Figure 10 provides a detailed view of a single-trial signal sample. Figure 10(a) show outputs of the detectors, with each detector tailored for a specific class among the 40 available. This PSD suggests that the detector captured the target stimulus’s flickering frequency and its harmonics. The atom, which is shared across all decomposed signals, is depicted in Figure 10(b). It represents an estimation of the VEPs induced by a single flash, composing the flickering stimulus. As illustrated in Figure 10(c), The decomposed signal identified by the detector corresponding to the target stimulus class exhibited greater power compared to the other decomposed signals. The reconstructed signal shown in Figure 10(d) does not precisely replicate the original, reflecting the decomposer’s focus on class separation rather than pure reconstruction fidelity. The spectra of both the original and reconstructed signals show that the reconstruction process may enhance certain frequency components characteristic of SSVEP.

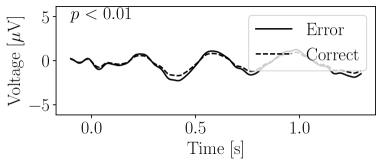

4.5 ERP decomposition

In this section, we explored the application of the proposed decomposer in extracting error-related potentials (ErrPs). ErrPs are specific types of ERPs that emerge in response to negative feedback [Nieuwenhuis et al., 2004]. They have been recognized for their usefulness in detecting errors within BCI systems [Parra et al., 2003].

While we previously assessed our decomposer’s capability with ERPs in Section 4.2, ErrPs are characterized by their time-invariant nature across trials. This specific attribute of ErrPs, where there is no expected time shift in response to stimuli or events, makes the specialized decomposer architecture described in Section 3.3.

4.5.1 Dataset

We used an open dataset designed for error detection during a P300 spelling task [Margaux et al., 2012]. This dataset included samples from 15 subjects. The dataset posed a classification challenge where EEG signals are classified into either “Error” or “Correct” classes. These classes represented whether the feedback from the BCI corresponds to the target letter the subject intended to spell. The EEG data were originally recorded using 56 channels at a 200 Hz sampling rate. However, in line with the focus of our study on single-channel decomposition, we used data from channel Cz.

As preprocessing, the continuous signals were filtered using a 5th order Butterworth filter within a frequency range of 1 to 40 Hz. The signals were segmented into epochs spanning from – s relative to the onset of the BCI system feedback. The epoch samples were baseline-corrected using the average voltage calculated over a period from to s. Any epoch samples that exhibited voltage excursions beyond V were excluded from further analysis. After these preprocessing steps, a total of 3,254 samples remained for analysis.

For this experiment, our aim was to identify components common across all subjects. To achieve this, a single decomposer was designed for all the subjects’ samples, akin to the concept of grand-averaging, which typically involves averaging signals across multiple subjects. Consequently, samples from all subjects were concatenated into a unified dataset.

4.5.2 Architecture

The decomposer was structured based on the framework introduced in Section 3.3. The decomposer was set up to decompose an input signals into eight distinct signals. These eight detector-atom pairs shared a uniform architecture. The detector of each network consisted of three convolutional layers, each with a kernel size of 25, translating to 125 ms, followed by a linear layer and a ReLU activation function. The atom network was designed with a kernel size of 280 (1.4 s), equivalent to the signal length of the input. The networks were optimized using the supervised loss function as detailed in (7). Half of the networks were dedicated to the Error class, with optimization aimed at accurately reproducing signals characteristic of error responses. The remaining networks were focused on the Correct class. This architecture and loss function aimed to effectively distinguish between common and unique signatures associated with both Error and Correct classes. The network underwent optimization process, spanning 10,000 iterations (epochs). The batch size was set at 100.

4.5.3 Result

The performance evaluation was conducted using a setting where 50% of the samples were randomly selected for training the decomposer, with the remaining 50% used for testing. Although, our primary objective is to identify EEG signatures between classes, necessitating the use of all samples for training, this setup of splitting the training and testing samples was essential to avoid overfitting, which could result in spurious EEG signatures. The waveforms presented in the results were decomposed and reconstructed from the training samples. The RMSEs for the training and testing samples were 8.41 V and 8.55 V, respectively. This RMSE was notably higher compared to the results in Section 4.1. This difference in error magnitude can be attributed to the challenges of reconstructing EEG signals from 15 subjects using only eight atoms without any time shift. Figure 11 shows the grand-averaged original and reconstructed signals for each class. Even though these signals are grand-averaged, which is expected to reduce reconstruction errors caused by noise, we still observe some slight errors through visual comparison, suggesting that the reconstruction accuracy was not particularly high.

Figure 12 presents the grand average of the decomposed signals, offering insights into the effectiveness of the decomposer in capturing class-specific components. The -values indicated in the figures were calculated through a -test. This statistical test was applied to evaluate whether the scalar outputs by the detectors (i.e., in (21)) differed significantly between the Error and Correct classes. A significant difference in these values implies that the decomposers have successfully captured components characteristic of each class. Three decomposed signals show significant differences, while the others did not. Moreover, Figure 13 illustrates two reconstructed signals. One (Different) was reconstructed using output signals from the decomposers that demonstrated significant differences between classes. These can be interpreted as the ERPs specifically modulated by the class distinction. Another was reconstructed using outputs from the decomposers where no significant class-based difference was observed. These represent components that are common across both classes.

This demonstration provides an intriguing perspective on the nature of ErrPs, traditionally understood in neuroscience as negative voltage fluctuations in response to error observation. The decomposed signals, as illustrated in Figures 12 and 13(a), suggest that a neural response typically recognized as an ErrP might actually be the result of overlapping multiple neural components. The waveforms of the decomposed signals suggest that the observation of an error might lead to a reduction in the amplitude of a positive fluctuation, rather than solely generating a negative fluctuation. Although further investigation is required to understand the specific conditions under which ERPs are activated or suppressed, our decomposer could be a effective tool for disentangling these components.

4.6 Pre-trained decomposer and cross-dataset validation

The results of the transfer learning shown in Figure 2 demonstrate the feasibility of our proposed decomposer to be applied to any signal. Here, we briefly tested the decomposition performance on signals that were recorded in completely different recording environments (including different measurement devices), BCI tasks, and subjects from those used for decomposer training. We refer to this test as a cross-dataset validation.

4.6.1 Datasets

The datasets available with the Mother of all BCI Benchmarks (MOABB) [Aristimunha et al., 2023] were used to build and validate the decomposer. For detailed information on the datasets, please refer to the software website111http://moabb.neurotechx.com. The dataset included several datasets covering MI, P300, SSVEP, c-VEP, and resting-state paradigms.

We divided the datasets into two sets: a decomposer training set and a testing set. The division was conducted randomly, but datasets with paradigms other than MI, SSVEP, and P300 were assigned to the training set to ensure validation of decomposition performance with standard BCI paradigms. The training and testing sets include samples from 18 and 15 datasets, respectively. The dataset codes are listed in the vertical axes of Figures 14 and 18 for training and testing, respectively.

4.6.2 Architecture

The decomposer, consisting of eight pairs of detectors and atoms, generated eight signals. Each detector had four one-dimensional-convolutional layers. A layer was designed with a kernel size of 125, corresponding to a filter size of approximately 0.5 s. The middle layers of the detector had eight channels. The final module of the detector was a ReLU activation function, ensuring that the detector output was positive. The atom convolution was implemented as a one-dimensional convolutional layer with a kernel size of 125, corresponding to a filter size of 0.5 s. This layer did not have a bias term.

4.6.3 Training

For organizing the decomposer training samples, we applied a common procedure for all signals in the training set. We did not select specific electrodes; therefore, all channels were used for building the decomposer. The signals underwent bandpass filtering using a 4th order Butterworth filter with a frequency range of 0.5 to 100 Hz. The sampling frequency were standardized by an upsampling and downsampling scheme to 250 Hz. We epoched the signals using a non-overlapping 3 s sliding window. Then, the epoched signals were normalized to have a zero mean and unit variance. This procedure did not retain the class labels, which were independently labeled for each dataset, meaning that the decomposer training was conducted in an unsupervised manner. Finally, the total number of samples was approximately 7.48M. The statistics (number of samples, channels, and subjects) for the training set are summarized in Figure 14.

Due to the large size of the training samples, we randomly selected 100,000 samples from the training set to accommodate our computational resources. The optimization was performed over 100 iterations (epochs), with batches of 10,000 samples each from the selected samples. After completing these iterations, we reselected the samples randomly and optimized the network with the new selection. This process of random selection and optimization was repeated 140 times, resulting in a total of 14,000 epochs. The computation took approximately five days using a single NVIDIA RTX A6000 GPU.

The network weights were initialized randomly. The optimization process utilized an Adam optimizer with the following parameters: a learning rate of , of 0.5, of 0.999, and a weight decay of .

We monitored the power of the atoms at the beginning of every 100, applying the atom reassignment procedure described in Appendix Section A as needed. The regularization coefficient in (8) was set to 0 for the first 4,000 epochs and adjusted to for the remaining epochs. Due to the significant computational resources required for training the decomposer, this coefficient was empirically tuned without additional validation.

Figure 15 illustrates the convergence of the total loss () over the optimization epochs. At the 4,000th epoch, the introduction of the sparsity loss (8) caused a significant fluctuated in the total loss. Following the introduction, the loss generally decreased, with minor fluctuations due to the execution of atom replacement. While the atom replacement theoretically does not affect the fidelity loss, it impacted the sparsity loss, which in turn affected the fidelity loss.

Figure 16 presents the atoms in the pre-trained decomposer, along with an example of an input and output signals. Each atom exhibits a distinct shape, seemingly representing different components within the input signal. The reconstruction error in this example is 0.46 V in mean absolute error (MAE), indicating successful reconstruction.

4.6.4 Classification procedures for testing samples

While we epoched signals using a sliding window for the training set, the samples for the testing set were epoched according to the class labels for each dataset. After obtaining the epoched signals, they were classified by the following procedure.

Firstly, for each dataset, we selected a single channel manually chosen for the BCI paradigm, as described in Appendix Section B. The signals were then resampled to a Hz sampling rate and bandpass filtered using a 4th order Butterworth filter with a frequency range of 0.5 to 100 Hz. This resampling was necessary to match the sampling frequency of the decomposer and the input signal.

The resampled signals were input into the pre-trained decomposer. The input signals were first normalized to have zero mean and unit variance. The output decomposed signals were then inverse-normalized to match the original mean and variance. After decomposition, the signals, which originally had a single channel, were treated as having eight channels.

These eight-channel signals were then processed through bandpass filtering, downsampling, preprocesssing systems, and classification into labels. The specific procedures varied depending on the BCI paradigms, as described in Appendix Section B. These paradigm-specific classification procedures follow “classic” approaches. In addition to the classic classifiers, we employed a deep-learning based classifier that does not require paradigm-specific preprocessing and classifier. We used an EEGNet [Lawhern et al., 2018]-based model, specifically the EEGNet-v4 implementation from Braindecode library [Schirrmeister et al., 2017]. Signals without any preprocessing were directly input into this model.

The classification accuracy was evaluated using 10-fold cross-validation. It is important to note that while the decomposer had been pre-trained using different datasets, the classifiers were trained using samples from the same dataset as the testing samples. We compared the performance of the classification with the our decomposer (Proposed) to the performance without the decomposer (Original). In the Original condition, the procedure was the same as that for the Proposed condition, except that the decomposer was not used.

4.6.5 Result

Figure 17 shows MAEs between the original signal (input for the decomposer) and the reconstructed signal (sum of the decomposed signals). Considering that EEG signals typically range of V, an MAE of approximately 2 V would be relatively low. A Mann-Whitney (MAU) test revealed no significant difference in dataset-wise MAEs (the averaged for each dataset) between the training and testing sets (). Figure 17 also shows the normalized MAE (NMAE), defined as , where is the mean amplitude of the original signal. NMAE represents the ratio between the original amplitude and the reconstruction error. The proposed decomposer achieved a ratio of approximately 10–12%. This metric also showed no significance difference between the training and testing sets ( by an MAU test). This lack of significant difference in the reconstruction performance suggests that overfitting is not present.

Figure 18 shows the classification accuracy for each dataset in the testing set. The differences in accuracy rates between the proposed and original conditions were tested using an MAU test. With the classical classifiers, as the average accuracy across all subjects showed no significant improvement (49.48% without the decomposer vs. 50.16% with the decomposer, ), we found that the decomposer did not enhance accuracy for all datasets. However, focusing on the BCI-paradigm-wise results, the decomposer significantly improved accuracy for the MI (46.75% vs. 48.20%, ) and SSVEP (31.78% vs. 33.83%, ) paradigms, while it degraded accuracy for the P300 paradigm (65.04% vs. 63.76%, ). This suggests that the decomposer is effectively in handling frequency components. Decomposing time-locked components like P300 may require a different architecture, as discussed in Section 3.3. With the EEGNet-v4 model, however, the decomposer significantly improved the classification accuracy for all paradigms (MI: 36.29% without the decomposer vs. 40.89% with the decomposer, , P300: 63.61% vs. 64.70%, , SSVEP: 26.02% vs. 28.76%, ), and also for the average across all subjects (42.88% vs. 45.93%, ). Because the performance of neural network-based models depends on the sample size and the setting of training parameters, we cannot simply compare the results between the classical classifier and EEGNet-v4. However, these improvements in the classification accuracy with the both classifiers suggest the pre-trained decomposer contributed to decoding of brain patterns.

5 Discussion

This paper presents a novel approach for single-channel EEG signal decomposition, based on the concept that a signal comprises multiple time-shifted and amplitude-modulated atoms [Brockmeier & Principe, 2016, Lewicki et al., 1999]. Our method utilizes a neural network-based model and training data to estimate these atoms, determining their time shifts and amplitude modulations within the signal. Consequently, we obtain decomposed signals, each associated with one of these atoms.

We evaluated the effectiveness of our decomposition method using real-world EEG data, emphasizing its contributions to neuroscience research and BCI development. Our findings reveal that the decomposition and reconstruction process can faithfully reproduce original signals and enhance the performance of specific neuroscience and BCI tasks. This supports our signal model based on atom convolution, indicating that the decomposed signals hold valuable information. Additionally, our cross-dataset validation reaffirms this indication and highlights the feasibility of a pre-trained decomposer. This pre-trained model would require no calibration and could be used in a plug-and-play manner, simplifying EEG measurement and analysis for various applications. Further empirical research by peer researchers and developers, who can utilizing the publicly available pre-trained model, will help clarify the neuroscientific significance of our research.

One of the major issues is that our decomposer does not provide a unique decomposition. The simultaneous optimization of the detectors and atoms is highly arbitrary, meaning that the trained detectors and atoms depend on the initial values at the start of optimization, even when the training data remains the same. Introducing reasonable initialization for the atoms, such as using Fourier basis functions, mother wavelets, and a pre-trained model, could improve consistency for training.

Hyperparameter tuning also requires a solution. Our current approach necessitates the tuning of network hyperparameters, particularly the number of decomposed signals and the signal length of atoms. Expertise in the specific task domain can aid in predicting suitable parameters. Additionally, the availability of training data is a prerequisite for our method. Using the data, heuristic methods could be developed to facilitate parameter tuning.

Another limitation is the applicability of the pre-trained decomposer for unknown EEG patterns. The effectiveness of the pre-trained model depends significantly on the training data. Our decomposer produces decomposed signals through the convolution of pre-trained atoms. Therefore, if a signal comprises atoms that are unknown or not represented in the training data, the decomposition may be incomplete. Consequently, the pre-trained decomposer is suited for signals containing well-known and well-defined EEG components and may not be ideal for neuroscientific research aimed at discovering unknown EEG patterns. While the pre-trained model can be valuable for preprocessing tasks like noise reduction, re-training techniques such as fine-tuning or continual learning [Parisi et al., 2019] with current datasets are recommended to enhance its applicability and accuracy.

This research introduced a novel single-channel EEG decomposition technique utilizing a convolution model with a limited number of atoms. Our experiments provided empirical evidence supporting the applicability of this decomposition method across various EEG research scenarios. Including the high feasibility of a pre-trained model, our approach simplifies measurement and analysis procedures, potentially advancing EEG and BCI research.

Ethics statement

This research is not applicable.

Data and code availability

A demo code is available in https://github.com/hgshrs/detector-atom-net.

Author Contributions

Hiroshi Higashi is responsible for all aspects of this work, including its conception and design, analysis, and interpretation.

Funding

This work was supported in part by the Japan Society for the Promotion of Science (JSPS) KAKENHI, grant number 22H05163 and 24K15047, and Japan Science and Technology Agency (JST) Advanced International Collaborative Research Program (AdCORP), grant number JPMJKB2307.

Competing interests

The authors declare no conflict of interest.

References

- [Aristimunha et al., 2023] Aristimunha, B., et al. (2023). Mother of all BCI Benchmarks. http://moabb.neurotechx.com.

- [Asadzadeh et al., 2020] Asadzadeh, S., Yousefi Rezaii, T., Beheshti, S., Delpak, A., & Meshgini, S. (2020). A systematic review of EEG source localization techniques and their applications on diagnosis of brain abnormalities. Journal of Neuroscience Methods, 339(February), https://doi.org/10.1016/j.jneumeth.2020.108740.

- [Babadi & Brown, 2014] Babadi, B. & Brown, E. N. (2014). A review of multitaper spectral analysis. IEEE Transactions on Biomedical Engineering, 61(5), 1555–1564, https://doi.org/10.1109/TBME.2014.2311996.

- [Bajaj & Pachori, 2012] Bajaj, V. & Pachori, R. B. (2012). Classification of seizure and nonseizure EEG signals using empirical mode decomposition. IEEE Transactions on Information Technology in Biomedicine, 16(6), 1135–1142, https://doi.org/10.1109/TITB.2011.2181403.

- [Barthélemy et al., 2013] Barthélemy, Q., Gouy-Pailler, C., Isaac, Y., Souloumiac, A., Larue, A., & Mars, J. (2013). Multivariate temporal dictionary learning for EEG. Journal of Neuroscience Methods, 215(1), 19–28, https://doi.org/10.1016/j.jneumeth.2013.02.001.

- [Blankertz et al., 2011] Blankertz, B., Lemm, S., Treder, M., Haufe, S., & Müller, K.-R. (2011). Single-trial analysis and classification of ERP components–A tutorial. NeuroImage, 56(2), 814–25, https://doi.org/10.1016/j.neuroimage.2010.06.048.

- [Blankertz et al., 2008] Blankertz, B., Tomioka, R., Lemm, S., Kawanabe, M., & Müller, K.-R. (2008). Optimizing spatial filters for robust EEG single-trial analysis. IEEE Signal Processing Magazine, 25(1), 41–56.

- [Brockmeier & Principe, 2016] Brockmeier, A. J. & Principe, J. C. (2016). Learning recurrent waveforms within EEGs. IEEE Transactions on Biomedical Engineering, 63(1), 43–54, https://doi.org/10.1109/TBME.2015.2499241.

- [Chen et al., 1998] Chen, S. S., Donoho, D. L., & Saunders, M. A. (1998). Atomic decomposition by basis pursuit. SIAM Journal on Scientific Computing, 20(1), 33–61, https://doi.org/10.1137/S1064827596304010.

- [Cichocki & Amari, 2002] Cichocki, A. & Amari, S. (2002). Adaptive Blind Signal and Image Processing: Learning Algorithms and Applications. Wiley.

- [Croft & Barry, 2000] Croft, R. & Barry, R. (2000). Removal of ocular artifact from the EEG: A review. Neurophysiologie Clinique/Clinical Neurophysiology, 30(1), 5–19, https://doi.org/10.1016/S0987-7053(00)00055-1.

- [Davies et al., 2024] Davies, H. J., Hammour, G., Zylinski, M., Nassibi, A., Stanković, L., & Mandic, D. P. (2024). The deep-match framework: R-peak detection in Ear-ECG. IEEE Transactions on Biomedical Engineering, 71(7), 2014–2021, https://doi.org/10.1109/TBME.2024.3359752.

- [de Munck et al., 2009] de Munck, J., Gonçalves, S., Mammoliti, R., Heethaar, R., & Lopes da Silva, F. (2009). Interactions between different EEG frequency bands and their effect on alpha–fMRI correlations. NeuroImage, 47(1), 69–76, https://doi.org/10.1016/j.neuroimage.2009.04.029.

- [Dockès et al., 2021] Dockès, J., Varoquaux, G., & Poline, J.-B. (2021). Preventing dataset shift from breaking machine-learning biomarkers. GigaScience, 10(9), https://doi.org/10.1093/gigascience/giab055.

- [Dzianok et al., 2022] Dzianok, P., Antonova, I., Wojciechowski, J., Dreszer, J., & Kublik, E. (2022). The Nencki-Symfonia electroencephalography/event-related potential dataset: Multiple cognitive tasks and resting-state data collected in a sample of healthy adults. GigaScience, 11, https://doi.org/10.1093/gigascience/giac015.

- [Friedman, 1989] Friedman, J. H. (1989). Regularized discriminant analysis. Journal of the American Statistical Association, 84(405), 165–175, https://doi.org/10.1080/01621459.1989.10478752.

- [Garcia-Cardona & Wohlberg, 2018] Garcia-Cardona, C. & Wohlberg, B. (2018). Convolutional dictionary learning: A comparative review and new algorithms. IEEE Transactions on Computational Imaging, 4(3), 366–381, https://doi.org/10.1109/tci.2018.2840334.

- [Gramfort, 2013] Gramfort, A. (2013). MEG and EEG data analysis with MNE-Python. Frontiers in Neuroscience, 7(7 DEC), https://doi.org/10.3389/fnins.2013.00267.

- [Grosse et al., 2007] Grosse, R., Raina, R., Kwong, H., & Ng, A. Y. (2007). Shift-invariant sparse coding for audio classification. In 23rd Conference on Uncertainty in Artificial Intelligence (UAI’07) (pp. 66–79).

- [Gu et al., 2021] Gu, X., Cao, Z., Jolfaei, A., Xu, P., Wu, D., Jung, T.-P., & Lin, C.-T. (2021). EEG-based brain-computer interfaces (BCIs): A survey of recent studies on signal sensing technologies and computational intelligence approaches and their applications. IEEE/ACM Transactions on Computational Biology and Bioinformatics, 18(5), 1645–1666, https://doi.org/10.1109/TCBB.2021.3052811.

- [Huang et al., 1998] Huang, N. E., et al. (1998). The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proceedings of The Royal Society A: Mathematical, Physical and Engineering Sciences, 454(1971), 903–995, https://doi.org/10.1098/rspa.1998.0193.

- [Hyvärinen & Oja, 2000] Hyvärinen, A. & Oja, E. (2000). Independent component analysis: Algorithms and applications. Neural Networks, 13(4-5), 411–430, https://doi.org/10.1016/S0893-6080(00)00026-5.

- [Jas et al., 2017] Jas, M., Dupré, T. T. L., Şimşekli, U., & Gramfort, A. (2017). Learning the morphology of brain signals using alpha-stable convolutional sparse coding. In Advances in Neural Information Processing Systems.

- [Kotani et al., 2024] Kotani, S., Higashi, H., & Tanaka, Y. (2024). Single-channel P300 decomposition using detector-kernel networks. In 2024 International Technical Conference on Circuits/Systems, Computers, and Communications (ITC-CSCC) (pp. 1–5).

- [Kreutz-Delgado et al., 2003] Kreutz-Delgado, K., Murray, J. F., Rao, B. D., Engan, K., Lee, T.-W., & Sejnowski, T. J. (2003). Dictionary learning algorithms for sparse representation. Neural Computation, 15(2), 349–396, https://doi.org/10.1162/089976603762552951.

- [Krusienski et al., 2006] Krusienski, D. J., Sellers, E. W., Cabestaing, F., Bayoudh, S., McFarland, D. J., Vaughan, T. M., & Wolpaw, J. R. (2006). A comparison of classification techniques for the P300 Speller. Journal of Neural Engineering, 3(4), 299.

- [Lawhern et al., 2018] Lawhern, V. J., Solon, A. J., Waytowich, N. R., Gordon, S. M., Hung, C. P., & Lance, B. J. (2018). EEGNet: A compact convolutional neural network for EEG-based brain-computer interfaces. Journal of Neural Engineering, 15(5), 1–30, https://doi.org/10.1088/1741-2552/aace8c.

- [Lewicki et al., 1999] Lewicki, M. S., Sejnowski, T. J., & Hughes, H. (1999). Coding time-varying signals using sparse, shift-invariant representations. In Advances in Neural Information Processing Systems.

- [Lin et al., 2006] Lin, Z., Zhang, C., Wu, W., & Gao, X. (2006). Frequency recognition based on canonical correlation analysis for SSVEP-based BCIs. IEEE Transactions on Biomedical Engineering, 53(12), 2610–2614.

- [Liu et al., 2020] Liu, B., Huang, X., Wang, Y., Chen, X., & Gao, X. (2020). BETA: A large benchmark database toward SSVEP-BCI application. Frontiers in Neuroscience, 14(June), https://doi.org/10.3389/fnins.2020.00627.

- [Lotte et al., 2018] Lotte, F., Bougrain, L., Cichocki, A., Clerc, M., Congedo, M., Rakotomamonjy, A., & Yger, F. (2018). A review of classification algorithms for EEG-based brain-computer interfaces: A 10 year update. Journal of Neural Engineering, 15(3), aab2f2, https://doi.org/10.1088/1741-2552/aab2f2.

- [Maddirala & Shaik, 2018] Maddirala, A. K. & Shaik, R. A. (2018). Separation of sources from single-channel EEG signals using independent component analysis. IEEE Transactions on Instrumentation and Measurement, 67(2), 382–393, https://doi.org/10.1109/TIM.2017.2775358.

- [Mallat, 1998] Mallat, S. (1998). A Wavelet Tour of Signal Processing. Academic Press.

- [Margaux et al., 2012] Margaux, P., Emmanuel, M., Sébastien, D., Olivier, B., & Jérémie, M. (2012). Objective and subjective evaluation of online error correction during P300-based spelling. Advances in Human-Computer Interaction, 2012, https://doi.org/10.1155/2012/578295.

- [Mellot et al., 2023] Mellot, A., Collas, A., Rodrigues, P. L. C., Engemann, D., & Gramfort, A. (2023). Harmonizing and aligning M/EEG datasets with covariance-based techniques to enhance predictive regression modeling. Imaging Neuroscience, 1, 1–23, https://doi.org/10.1162/imag_a_00040.