Simultaneously Optimizing Perturbations and Positions for Black-box Adversarial Patch Attacks

Abstract

Adversarial patch is an important form of real-world adversarial attack that brings serious risks to the robustness of deep neural networks. Previous methods generate adversarial patches by either optimizing their perturbation values while fixing the pasting position or manipulating the position while fixing the patch’s content. This reveals that the positions and perturbations are both important to the adversarial attack. For that, in this paper, we propose a novel method to simultaneously optimize the position and perturbation for an adversarial patch, and thus obtain a high attack success rate in the black-box setting. Technically, we regard the patch’s position, the pre-designed hyper-parameters to determine the patch’s perturbations as the variables, and utilize the reinforcement learning framework to simultaneously solve for the optimal solution based on the rewards obtained from the target model with a small number of queries. Extensive experiments are conducted on the Face Recognition (FR) task, and results on four representative FR models show that our method can significantly improve the attack success rate and query efficiency. Besides, experiments on the commercial FR service and physical environments confirm its practical application value. We also extend our method to the traffic sign recognition task to verify its generalization ability.

Index Terms:

Deep learning models, adversarial patches, face recognition, traffic sign recognition, robustness, security, physical world.1 Introduction

Deep neural networks (DNNs) have shown excellent performance in many tasks [1], [2], [3], but they are vulnerable to adversarial examples [4], where adding small imperceptible perturbations to the image could confuse the network. However, this form of attack is not suitable for real applications because images are captured through the camera and the perturbations also need to be captured. An available way is to use a local patch-like perturbation, where the perturbation’s magnitudes are not restricted. By printing out the adversarial patch and pasting it on the object, the attack in the real scene can be realized. Adversarial patch [5] has brought security threats to many tasks like traffic sign recognition [6, 7], image classification [8, 9], as well as person detection and re-identification [10, 11].

Face Recognition (FR) is a relatively safety-critical task, and the adversarial patch has also been successfully applied in this area [12, 13, 14, 15]. For example, adv-hat [13] and adv-patch [16, 12, 17] put the patch generated based on the gradients on the forehead, nose, or eye area to achieve attacks. Adv-glasses [18, 14] confuse the FR system by placing a printed perturbed eyeglass frame at the eye. The above methods mainly focus on optimizing the patch’s perturbations, and the patch’s pasting position is fixed on a location selected based on the prior knowledge. On the other hand, adv-sticker [15] adopts a predefined meaningful adversarial patch, and uses an evolutionary algorithm to search for a good patch’s position to perform the attack, which shows that the patch’s position is one of the major parameters on the patch attacks. The above methods motivate us that if the position and perturbation are optimized at the same time, the high attack performance could be achieved.

However, simultaneous optimization cannot be viewed as a simple combination of these two independent factors. There is a strong coupling relationship between the position and perturbation. Specifically, experiments show that the perturbations generated at different positions on the face tend to resemble the facial features of the current facial region (detailed in Section 3.1). This verifies that the two factors are interrelated and influenced with each other. Therefore, they should be optimized simultaneously, which means a simple two-phase method or an alternate iterative optimization is not optimal. Additionally, in practical applications, the detailed information about the target FR model usually cannot be accessed. Instead, some commercial online vision APIs (e.g. Face++ and Microsoft cloud services) usually return the predicted identities and scores for the uploaded face images. By utilizing this limited information, exploring the query-based black-box attack to construct adversarial patches is a reasonable solution. In such a case, the simultaneous optimization will lead to a large searching space, and further bring a lot of queries to the FR system. Thus, how to design an efficient simultaneous optimization mechanism to solve for these two factors in the black-box setting becomes a challenging problem for further study.

Currently, some works [19, 20, 21] have studied the optimization of both positions and perturbations. Location-optimization [19] uses an alternate iterative strategy to optimize one while fixing the other under the white-box setting. TPA [20] uses reinforcement learning to search for suitable patch textures and positions in the black-box setting. It belongs to the two-phase method, and because the patch’s pattern comes from a set of predefined texture images, it requires thousands of queries to the target model. GDPA [21] considers the intrinsic coupling relationship, generating both of them through a generator. However, it is a white-box attack and also has other shortcomings, such as the need for the time-consuming offline training and the restrictions to only deal with continuous parameter spaces (detailed in Section 2.1). Therefore, these methods cannot meet the challenge.

Based on above considerations, in this paper, we propose an efficient method to simultaneously optimize the position and perturbation of the adversarial patch to improve the black-box attack performance with limited information. For the perturbation, pixel values of each channel in the patch range from 0 to 255, which results in a huge searching space that is time-consuming to optimize. To tackle this issue, we first reduce the searching dimension. Specifically, based on the transferability of attacks [22], we utilize the modified I-FGSM [23, 24, 25] conducted on the ensemble surrogate models [26], and adjust its hyper-parameters (i.e., the attack step size and the weight for each surrogate model) to generate the patch’s perturbations. In this process, the black-box target model will be well-fitted by the ensemble surrogate models with a proper set of weights, and thus the computed transferable perturbations can perfectly attack the target model. Compared with directly optimizing the pixel-wise perturbation values, changing the attack step size and models’ weights can greatly reduce the parameter space and improve the solving efficiency. Based on this setting, the patch’s position, the hyper-parameters for the patch’s perturbations (i.e., the surrogate models’ weights and the attack step size) become the key variables that ultimately need to be simultaneously learned.

To search for the optimal solution, we use a small number of queries to the target model to dynamically adjust the above learnable variables. This process can be formulated into the Reinforcement Learning (RL) framework. Technically, we first carefully design the actions for each variable, and then utilize an Unet-based [27] agent to guide this simultaneous optimization by designing the policy function between the input image and the output action for each variable. The actions are finally determined according to the policy functions. The environment in the RL framework is set as the target FR model. By interacting with the environment, the agent can obtain a reward signal feedback to guide its learning by maximizing this reward [28]. Note that our method is an online learning process, we don’t need to train the agent in advance. Given a benign image, the agent begins from the random parameters. The reward in each iteration will guide the agent to become better and better until the attack is achieved. The whole scheme is illustrated in Figure 1. The code can be found in https://github.com/shighghyujie/newpatch-rl.

The generated adversarial patch can also combine with the existing gradient estimation attack methods [29, 30, 31] in a carefully designed manner, and provide a good initialization value for them. Thus, the high query cost of the gradient estimation process can be reduced and the attack success rate is further improved (see Section 5.3). In addition to the face recognition, our method is also applicable to other scenarios, such as the traffic sign recognition task. The experiments are given in Section 6.

In summary, this paper has the following contributions:

-

•

We empirically illustrate that the position and perturbation of the adversarial patch are equally important and interact with each other closely. Therefore, taking advantage of the mutual correlation, an efficient method is proposed to simultaneously optimize them to generate an adversarial patch in the black-box setting.

-

•

We formulate the optimization process into a RL framework, and carefully design the agent structure and the corresponding policy functions, so as to guide the agent to efficiently learn the optimal parameters.

-

•

Extensive experiments in face dodging and impersonation tasks confirm that our method can realize the state-of-the-art attack performance and high query efficiency (maximum success rate of 96.65% with only 11 queries on average), and experiments in the commercial API and physical environment prove the good application value.

-

•

To show the flexibility of the proposed method, we combine it with existing gradient estimation attack methods to reduce their high query costs and improve the attack performance. Besides, we extend the proposed method to the traffic sign recognition task to verify its generalization ability.

The remainder of this paper is organized as follows. Section 2 briefly reviews the related work. We introduce the details of the proposed adversarial patch method against face recognition task in Section 3. Section 4 gives the method about how to combine with the gradient estimation attacks. Section 5 shows a series of experiments, and Section 6 gives the extensions to traffic sign recognition task. Finally, we conclude the paper in Section 7.

2 Related Work

2.1 Adversarial patch

Compared with -norm based adversarial perturbations, the adversarial patch [5] is a more suitable attack form for real-world applications where objects need to be captured by a camera. Different from pixel-wise imperceptible perturbations, the adversarial patch does not restrict the perturbations’ magnitude. Up to now, the adversarial patch has been applied to image classifiers [32, 8], person detectors [10], traffic sign detectors [6, 7], and many other security-critical systems. For example, the adversarial T-shirt [10] evades the person detector by printing the patch generated by the gradients in the optimization framework on the center of the T-shirt. The work in [7] confuses the traffic sign detection system by pasting the patch generated by the generative adversarial network on a prior fixed position of the traffic sign. The work in [6] attacks the image classifier and the traffic sign detector by pasting stickers generated by the robust physical perturbation algorithm on the daily necessities and the traffic sign. Therefore, the adversarial patch has become an important method to help evaluate the robustness of DNN models deployed in the real life.

Recently, Location-optimization [19] proposes to jointly optimize the location and content of an adversarial patch, but there are three limitations: (1) They belong to the white-box attack for image classification, which needs to know detailed information of target classifiers. (2) They optimize the two factors via alternate iterations, where one factor is fixed when the other factor is solved, so it is not the simultaneous optimization. (3) The pattern generated at a position is often more applicable to the area near this position. So after the pattern is optimized, the range of position change is limited, and the optimal position within the entire image cannot be achieved. As a comparison, our method can better meet the challenge.

Another related paper is presented in [20], where the authors parameterize the appearance of adversarial patches by a dictionary of class-specific textures, and then optimize the position and texture parameters of each patch using reinforcement learning like ours. However, we are different from [20] in the following aspects: (1) the position and texture in [20] are not solved simultaneously. They explore a two-step mechanism, which first learns a texture dictionary and then uses RL to search for the patch’s texture on the dictionary as well as the position in the input image. This separate operation limits the performance. (2) Because of the defective formulation, the method in [20] shows the poor query efficiency, while our method only needs very few queries to finish the black-box attacks. (3) [20] aims at attacking the DNN classification models, while our method can perform attacks on face recognition and traffic sign recognition. Furthermore, we show the effectiveness in the physical world. So, our method is more practical than [20].

Additionally, GDPA [21] considers the simultaneous optimization as we do by training a universal generator, but there are still some differences: (1) It is a white-box attack, where the training of the generator needs to obtain the gradient information of the model. While our method is a black-box attack, which is more applicable in the real life. (2) The generator that outputs the two parameters is trained offline and can only be used for the target model and the unique target identity specified at training. When attacking other models or other identities, the generator needs to be retrained. In contrast, our method is learned online and is not limited to the specified model and identity in advance. (3) It is solved in a continuous parameter space, but in some scenarios, the valid position values may be discontinuous (e.g., in the FR system, the patch position is often required not to cover the facial features), and our method can deal with such discontinuous cases (Section 3.4). (4) In this method, the motivation of simultaneous optimization for the two key factors is intuitive without a careful analysis, but we provide a more detailed analysis and some visualization results (Section 3.1) to better explore this coupling relationship.

2.2 Adversarial patch in the face recognition

Adversarial patches also bring risks to face recognition and detection tasks, and their attack forms can be roughly divided into two categories. On the one hand, some methods fix the patch on a specific position of the face selected based on the experience or prior knowledge, and then generate the perturbations of the patch. For example, adversarial hat [13], adv-patch [16], and adversarial glasses [18, 14] are classical methods against face recognition models which are realized by placing perturbation stickers on the forehead or nose, or putting the perturbation eyeglasses on the eyes. GenAP [17] optimizes the adversarial patch on a low dimensional manifold and pastes them on the area of eyes and eyebrows. The main concern of these methods is to mainly focus on generating available adversarial perturbation patterns but without considering the impact of patch’s position versus the attack performance.

On the other hand, some methods fix the content of the adversarial patch and search for the optimal pasting position within the valid pasting area of the face. RHDE [15] uses a pattern-fixed sticker existing in the real life, and changes its position through RHDE algorithm based on the idea of differential evolution to attack FR systems. We believe that the position and perturbation of the adversarial patch are equally important to attack the face recognition system, and if the two are optimized simultaneously, the attack performance can be further improved.

2.3 Deep Reinforcement Learning

Deep reinforcement learning (DRL) combines the perception ability of deep learning with the decision-making ability of reinforcement learning, so that the agent can make appropriate behaviors through the interaction with the environment [28, 33]. It receives the reward signal to evaluate the performance of an action taken through the agent without any other supervisory information, and can be used to solve multiple tasks such as parameter optimization and computer vision [28]. In this paper, we apply the RL framework to solve for the attack variables, which can be formalized as the process of using reward signals to guide the agent’s learning. Therefore, a carefully-designed agent is proposed to learn the parameter selection policies, and generate better attack parameters under the reward signals obtained by querying the target model.

3 Methodology

3.1 The interaction of positions and perturbations

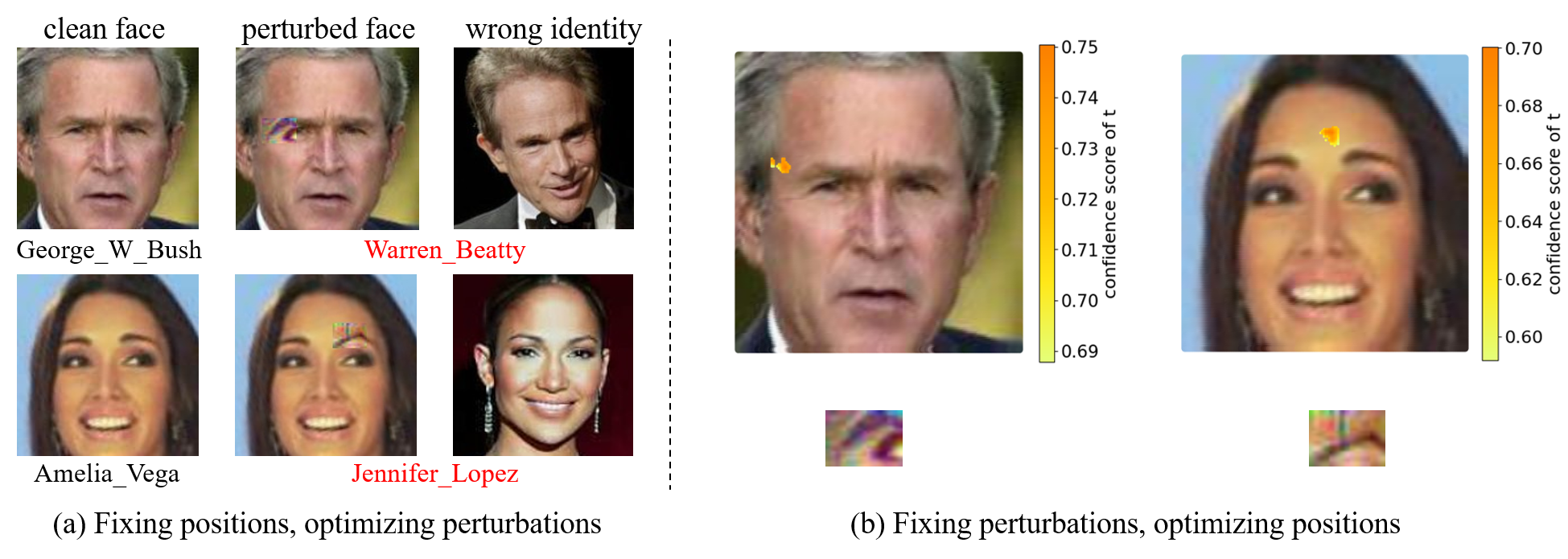

To understand the intrinsic coupling relationship between positions and perturbations, we conduct experiments on 1000 face images.

Firstly, we paste patches on fixed positions in different facial regions to see the differences between their perturbations. To make the effect more obvious, we choose the positions close to the key facial features (i.e. eyes, eyebrows, nose, mouth, etc.), and allow a small range of coverage (no more than 30 pixels) of the facial features. Two examples generated by MI-FGSM [23] are shown in Figure 2 (a). Interestingly, we find that the perturbations generated at different positions on the face tend to resemble the facial features of the current facial region, but their shapes have been changed. For example, in the top row of Figure 2 (a), the patch is pasted to the area near the left eye, and the generated pattern is also like the shape of the eye, but it is different from the real identity’s eye; in the bottom row of Figure 2 (a), the adversarial patch is pasted on the left side eyebrow, and the generated pattern is similar to the shape of the eyebrow, which changes the eyebrow’s shape, and may mislead the extraction of eyebrow features by the face recognition network. These phenomena indicate that the pattern of the patch is strongly correlated with the pasting position. Patterns tend to be the features of the face region in which they are located, and the pattern of the patch varies greatly from region to region.

Then, we study the patch’s pasting position with a given pattern to the adversarial attacks. For the patch patterns shown in Figure 2 (a), we exhaustively search for their positions (i.e., the patch’s center points) that can realize successful attacks to the target wrong identity within the range of valid face pasting regions (see Figure 2 (b)), and find that for these given patterns, the available positions (the orange region in the faces) are still concentrated near their original pasting positions. In other words, patterns generated at one position tend to have good attack effects only in a small area near this position. Besides, we find that the confidence score for the target wrong identity will decrease when the patch’s position is gradually moving away from its original position (the color denotes the score). This shows the patch’s optimal position is closely relevant to its pattern, and changing the patch’s position will lead to different adversarial attack effects.

In conclusion, a simultaneous optimization for these two factors is needed to optimize the matching of the position and perturbation that can realize the attack in the entire parameter space. However, as discussed above, the simultaneous optimization will lead to a large searching space, and further bring a lot of queries to the FR system in the black-box attacks. So an efficient simultaneous optimization is a challenging problem.

3.2 Problem formulation

In the face recognition task, given a clean face image , the goal of the adversarial attack is to make the face recognition model predict a wrong identity for the perturbed face image . Formally, the perturbed face with the adversarial patch can be formulated as Eq. (1), where is Hadamard product and is the adversarial perturbation across the whole face image. is a binary mask matrix to constrain the shape and pasting position of the patch, where the value of the patch area is 1.

| (1) |

The previous methods either optimize with pre-fixed , or fix to select the optimal . In our method, we optimize and simultaneously to further improve the attack performance.

For the optimization of the mask matrix , we fix the shape and size of the patch region in , and change the patch’s upper-left coordinates to adjust the mask matrix. In order not to interfere with the liveness detection module, we limit the pasting position to the area that does not cover the key facial features (i.e. eyes, eyebrows, nose, etc). Please refer to Section 3.4 for details.

To generate the perturbation, the ensemble attack [26] based on MI-FGSM [23] is used here. For the ensemble attack with surrogate models, we let denote the weight of each surrogate model and denote the attack step size. Then taking the un-targeted attack (or dodging in the face recognition case) as an example, given the ground-truth identity , we let denote the confidence score that the model predicts a face image as identity , then can be computed by an iteration way. Let denote the -th iteration, then:

| (2) |

| (3) |

| (4) |

where and . For the targeted attack (or impersonation in the face recognition case), given the target identity , can be simply replaced by .

Our attack goal is to simultaneously optimize both the patch position and the perturbation to generate good adversarial patches to attack the target model. Therefore, the patch’s coordinates , the attack step size in Eq. (2) and weights in Eq. (4) are set as the learned variables. We call them attack variables in the following sections. To be suitable for the target model, we adjust the attack variables dynamically through a small number of queries to the target model. The details of solving for these variables are shown in Section. 3.3.

3.3 Attacks based on RL

3.3.1 Formulation overview using RL

In our method, the process to optimize the attack variables is formulated into the process of learning the agent’s parameters in the RL framework under the guidance of the reward signal from the target model. Specifically, the -th variable’ value is defined as the action in the action space generated by the agent under the guidance of the policy . The image input to the agent is defined as the state , and the environment is the target model . with parameters is the policy function whose output is the probability value corresponding to each action in the action space under the state . It obeys a certain probability distribution and is a rule used by the agent to decide what action to take.

The reward reflects the performance of the currently generated adversarial patch on the target model, and the training goal of the agent is to learn good policies to maximize the reward signal. In the face recognition, the goal of the dodging attack is to generate images that are as far away as possible from the ground-truth identity , while impersonation attacks want to generate images that are as similar as possible to the target identity . Thus, the reward function is formalized as:

| (5) |

In the iterative training, the agent firstly predicts a set of actions according to policy , and then the adversarial patch based on the predicted actions is generated. Finally the generated adversarial face image is input to the target model to obtain the reward value. In this process, policy gradient [34] is used to guide the agent’s update. After multiple training iterations, the agent will generate actions that perform well on the target model with a high probability.

3.3.2 Design of the agent

The agent needs to learn the policies of the position, weights and attack step size. To take advantage of the coupling relationship between the position and perturbation, the two factors need to be mapped to the feature map output by the same agent at the same time. Considering this, we design a U-net [27] based structure, which can achieve the correspondence between positions and image pixels, and output the feature map of the same size as the input image. Let the number of surrogate models be , we design the agent to output the feature map with channels and the same length and width as the input image (i.e. the size is ).

In each channel of the feature map , the relative value of each pixel point represents the importance of each position for the surrogate model , and the average value of the overall channel reflects the importance of the corresponding surrogate model. We believe that the patch requires different attack strengths in different locations, so at the top layer of the agent network, a fully connected layer is used to map the feature map to a vector representing different attack step values. The structural details of the agent are shown in Figure 3.

Specifically, for the position action, the optional range of positions is discrete, so the position policy is designed to follow Categorical distribution [35]. Given the probability of each selected position, the position parameters , and is computed:

| (6) |

For the weight actions, the weight ratio of the loss on each surrogate model to the ensemble loss is a continuous value, and we set the weight policy to follow Gaussian distribution [35]. So the -th weight parameter , and is calculated as:

| (7) |

where refers to the mean value of the -th channel in the feature map, and is a hyperparameter. In the actual sampling, we use the clipping operation to make the sum of weights equal to 1.

For the attack step action, we set 20 values in the range of 0.01 to 0.2 at intervals of 0.01, and adopt Categorical distribution [35] as the step size policy due to the discreteness of the values. So the step size parameter , and probability of each candidate value is:

| (8) |

By sampling from the corresponding distribution, we can obtain and .

3.3.3 Policy Update

In the agent training, the goal is to make the agent learn a good policy with parameters to maximize the expectation of the reward , where represents the number of attack variables, and in our case (the attack variables are patch position , attack step size in Eq. (2) and weights in Eq. (4), respectively.) is a set of decision results obtained by sampling according to the policy of the current agent, and is formalized as a set of the input state s and the sampling results of each attack variable, then the optimal policy parameters can be formulated as:

| (9) |

We use the policy gradient [34] method to solve for by the gradient ascent method, and follow the REINFORCE algorithm [36], using the average value of sampling of the policy function distribution to approximate the policy gradient :

| (10) | ||||

where is the reward in the -th sampling. A large reward will cause the agent parameters to have a large update along the current direction, while a small reward means that the current direction is not ideal, and the corresponding update amplitude will also be small. Therefore, the agent can learn good policy functions with the update of in the direction of increasing the reward.

For actions that follow the Categorical policy (i.e., and ), let denote the probability of action under the corresponding Categorical probability distribution (i.e., the probability value of the position variable under , and the probability value of the attack step size variable under ), then for and , in Eq. (10) can be calculated as:

| (11) |

For actions that follow the Gaussian policy distribution ( i.e. the weights of the surrogate models that follow ), the mean value of Gaussian distribution is calculated by the output of the agent, so can be expressed as . Therefore, for following the Gaussian policy, in Eq. (10) can be calculated as follows:

| (12) | ||||

After the policy update using Eq. (10), we solve for the optimal parameters . And then the optimal action strategy is obtained.

3.4 Optional area of the pasting positions

In this section, we give more details about changing the pasting position of the adversarial patch.

It is worth noting that in order not to interfere with the liveness detection module and to maintain the concealment of the attack method, the area that does not cover the facial features of the face (e.g. cheek and forehead) is regarded as the optional pasting area. In practical applications, the liveness detection module is often used in combination with the face recognition to confirm the real physiological characteristic of the object and exclude the form of replacing real faces with photos, masks, etc. [37]. It is mainly based on the depth or texture characteristics of the face skin or the movements of the object (such as blinking and opening the mouth), so the patch cannot be pasted in the area that covers the facial features (such as eyes and mouth).

Specifically, we use library to extract 81 face feature points and determine the effective pasting region. Figure 4 shows some examples of the effective pasting area corresponding to the face. After calculating the probability of each position in Eq. (6), we set the probabilities of the invalid positions to 0, and then sample the pasting position.

3.5 Overall framework

The complete process of our method is given in Algorithm 1. In the iterations of the agent learning, policy functions are firstly calculated according to the output of the agent, and then samplings are performed according to the probability distribution of the policy function to generate sets of parameters. According to each set of parameters, the attacks are conducted on surrogate models and the generated adversarial examples are input to the face recognition model to obtain the reward. Policy functions are finally updated according to the rewards. During this process, if a successful attack is achieved, the iteration is stopped early.

| FaceNet | ArcFace34 | ArcFace50 | CosFace50 | cml. API | |||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| ASR | NQ | ASR | NQ | ASR | NQ | ASR | NQ | ASR | NQ | ||

| Dodging | FaceNet | - | 13.21% | 79 | 16.89% | 80 | 11.47% | 72 | 10.37% | 69 | |

| ArcFace34 | 84.97% | 17 | - | 57.28% | 18 | 23.61% | 52 | 32.31% | 53 | ||

| ArcFace50 | 89.37% | 14 | 61.05% | 16 | - | 33.45% | 45 | 38.20% | 49 | ||

| CosFace50 | 85.91% | 15 | 53.48% | 15 | 60.51% | 23 | - | 29.41% | 57 | ||

| Ensemble | 96.65% | 11 | 72.86% | 18 | 72.09% | 27 | 62.50% | 23 | 52.19% | 46 | |

| Impersonation | FaceNet | - | 12.77% | 23 | 15.09% | 15 | 8.70% | 75 | 9.52% | 161 | |

| ArcFace34 | 58.53% | 25 | - | 37.73% | 47 | 28.31% | 69 | 20.55% | 126 | ||

| ArcFace50 | 53.52% | 28 | 33.23% | 60 | - | 28.30% | 53 | 27.91% | 114 | ||

| CosFace50 | 59.21% | 31 | 43.40% | 55 | 39.62% | 47 | - | 16.13% | 157 | ||

| Ensemble | 72.83% | 27 | 50.28% | 66 | 49.50% | 36 | 40.08% | 77 | 37.56% | 91 | |

4 Combined with gradient estimation attack

Our simultaneous optimization method can also be combined with the gradient estimation attacks (e.g., Zeroth-Order (ZO) optimization [38, 29], natural evolution strategy [30], random gradient estimation [31]) to further enhance the attack performance. Gradient estimation attack estimates the gradient of each pixel through the information obtained by querying the model. It has good attack performance, but the query cost is high. The result of the simultaneous optimization can be used as the initialization value of the gradient estimation to provide a good initial position and pattern. Compared with the random initialization used in the original gradient estimation, a good initialization value with certain attack performance can improve the query efficiency. Here we take Zeroth-Order (ZO) optimization [38, 29] as an example to describe the combined manner.

In the Zeroth-Order (ZO) optimization, let denote the image variable, and its value is the adversarial image in the iterative process. The value range in is . To expand the optimization range, is often replaced [29] as:

| (13) |

In this way, optimizing turns to optimize , and the optimization range is expanded to . Gradient estimation is achieved by adding a small offset at for the calculation of the symmetric difference quotient [39, 29]. In order for the addition of the small offset to have an effect on the result, cannot be in an overly smooth position in the solution space, i.e., the gradient of at (i.e. ) can not be too small. is calculated as follows:

| (14) |

To make the initial values of the gradient estimation obtained by simultaneous optimization also meet the above requirements, we need to add this gradient requirement to the objective function of the simultaneous optimization. So the loss function in Eq. (4) is modified as:

| (15) |

where and represent the height and width of the perturbation patch pasted to the face, and is the scale factor. Given the paste coordinates , then at the pixel point of the patch, .

Replacing Eq. (4) with Eq. (15), keeping the rest of the process in Algorithm 1 unchanged, we can obtain an adversarial initialization result (i.e. pattern and position ) suitable for gradient estimation through our simultaneous optimization. On this basis, we fix the position and use the gradient estimation method to only refine the perturbation. Through querying the target models, we can estimate the gradient in - iteration:

| (16) |

where is a small constant and is a standard basis vector where pixels selected for update correspond to the value of 1. is the loss function, that is, the variant of of Eq. (4) migrated from -space to -space, and the result is obtained by querying the model. . Based on , we can use ADAM optimization method [29] to optimize image pixel values in the iterative process. Thanks to the good initialization value, the query efficiency in the gradient estimation attack will also be improved.

5 Experiments

In this section, we give the experiments from experimental settings, comparisons with SOTA methods, ablation study, integration with gradient estimation attacks, and so on.

5.1 Experimental Settings

Target models and datasets: We choose five face recognition models as target models, including four representative open-source face recognition models (i.e. FaceNet [40], CosFace50 [41], ArcFace34 and ArcFace50 [42]) and one commercial face recognition API111https://intl.cloud.tencent.com/product/facerecognition. When performing ensemble attacks for the API, the four open-source models are set as surrogate models. When performing ensemble attacks for one of these four models, the other three models are used as surrogate models. Thus, the attacks are conducted via the transferability. Our face recognition task is based on the face identification mechanism, therefore, a large face database is needed. So, we randomly select 5,752 different people from Labeled Faces in the Wild (LFW)222http://vis-www.cs.umass.edu/lfw/ and CelebFaces Attribute (CelebA)333http://mmlab.ie.cuhk.edu.hk/projects/CelebA.html to construct the face database. We use the above face models to extract the face features, and then calculate the cosine similarity with all identities in the face database to perform the 1-to-N identification. The identity with the highest similarity is regarded as the face’s identity, and the corresponding similarity is taken as the confidence score.

Metrics: Two metrics, attack success rate (ASR) and the number of queries (NQ) are used to evaluate the performance of attacks. ASR refers to the proportion of images that are successfully attacked in all test face images, where we ensure that the clean test images selected in the experiment can be correctly identified. We count the cases of incorrect identification as successful attacks. NQ refers to the number of queries to the target model required by the adversarial patch that can achieve a successful attack.

Implementation:

The size of the adversarial patch is set as , the number of sampling in the policy gradient method is set to , and the variance of the Gaussian policy is equal to . Other parameters are set as , , and .

5.2 Experimental results

5.2.1 Performance of simultaneous optimization

We first evaluate our simultaneous optimization method qualitatively and quantitatively according to the setting in Section 5.1. Table I shows the quantitative results of dodging and impersonation attacks under the black-box setting against the five target models.

| Dodging | Impersonation | |||||||||||

| FaceNet | ArcFace50 | CosFace50 | FaceNet | ArcFace50 | CosFace50 | |||||||

| ASR | NQ | ASR | NQ | ASR | NQ | ASR | NQ | ASR | NQ | ASR | NQ | |

| GenAP [17] (p.) | 58.50% | - | 57.00% | - | 56.50% | - | 28.75% | - | 27.50% | - | 24.25% | - |

| ZO-AdaMM [38] (p.) | 65.79% | 1972 | 41.58% | 2161 | 43.47% | 2434 | 50.52% | 2874 | 40.41% | 3198 | 39.16% | 3106 |

| RHDE [15] (l.) | 48.82% | 408 | 35.39% | 504 | 42.93% | 514 | 41.63% | 522 | 29.40% | 586 | 35.64% | 577 |

| LO [19] (p.l.) | 76.82% | 1105 | 61.59% | 1475 | 55.14% | 2117 | 55.69% | 1974 | 42.67% | 2870 | 38.66% | 2624 |

| PatchAttack [20] (p.l.) | 94.54% | 1204 | 65.44% | 1566 | 61.43% | 1632 | 68.95% | 2389 | 44.00% | 3324 | 35.56% | 3878 |

| Ours (p.l.) | 96.65% | 11 | 72.09% | 27 | 62.50% | 23 | 72.83% | 27 | 49.50% | 36 | 40.08% | 77 |

-

•

l.- change location. p.- change perturbations

From the results in Table I, we can see : (1) the proposed method achieves good attack success rates and query efficiency under both two kinds of attacks. The dodging attack achieves the highest success rate of 96.65% under 11 queries, while the impersonation attack achieves the highest success rate of 72.83% under 27 queries. (2) The performance of using ensemble models to attack is better than that using a single model as the surrogate model, which shows that simultaneous optimization can adaptively adjust the weights of different surrogate models to achieve the best performance. (3) For the relationship between the surrogate model and the target model, when the two are structurally similar, it is more likely to help the attack. For example, for ArcFace50, CosFace50 has a greater influence on it. This is because the backbone of ArcFace50 and CosFace50 are both ResNet50-IR [42], and the backbone of FaceNet and ArcFace34 are Inception-ResNet-v1 [43] and ResNet34-IR [42] respectively. Therefore, in ensemble attacks, we can dynamically adjust the importance of different models by optimizing their weights. (4) On the commercial API, performance drops slightly compared to the open-source model, but remains at an acceptable level. This is because commercial FR services introduce some defense measures like image compression.

Figure 5 shows some visual examples of the position and perturbation at different stages of the attack. We can see that with the increase of query times, the positions and perturbations of the generated adversarial patch are gradually stable and convergent until the face recognition model predicts the target wrong identity. This show that the proposed method has a good convergence. More visual results are shown in Figure 6. For each group of three images, the first one represents the clean image, the second one represents the image after the attack, and the third one represents the image corresponding to the wrong identity in the face database. The above results are obtained by ensuring that the patch is not pasted to the area that covers the facial features.

In addition, we also study the regularity of the position of the patch. We divide the face into four areas: between the eyebrows, the forehead, the left face and the right face, and count the position of the patch when attacking FaceNet, ArcFace50, and CosFace50. The ratio of patches in each area is shown in Table III. It can be seen that for the three models, the proportions of the patch located between the eyebrows are the highest, reaching 42.55%, 58.32%, 54.36%, respectively, followed by the forehead, while the proportions of the left and the right face are relatively low. This may be because the position between the eyebrows is close to the key facial features (eyes, eyebrows), so these positions have a greater impact on the model’s prediction.

| FaceNet | ArcFace50 | CosFace50 | |

|---|---|---|---|

| Between the eyebrows | 42.55% | 58.32% | 54.36% |

| Forehead | 40.43% | 25.01% | 26.73% |

| Left face | 10.64% | 5.56% | 10.94% |

| Right face | 6.38% | 11.11% | 7.97% |

5.2.2 Comparisons with SOTA methods

To prove the superiority, we compare our method with five state-of-the-art methods: GenAP [17] and ZO-AdaMM [38] fix the position and change perturbations relying on transferability and gradient estimation, respectively. RHDE [15] fixes the perturbations and only changes the position, and Location Optimization (LO) [19] optimizes the position and the perturbation alternately, as well as the PatchAttack [20] optimizes the position and perturbation in a two-step manner. In the implementation, to adapt Location Optimization (LO) [19] to black-box attacks, we retain the framework of alternate iterations, and use gradient estimation to replace white-box gradient calculations. For GenAP, because we use its transferability to perform the black-box attack, its NQ is zero, and we use “-” to replace it. For our method, the target model in each column is not within the corresponding ensemble surrogate models (see Table I), therefore, the comparison is fair. The above results on three target models are shown in Table II.

From the results, we can see that: (1) For the perturbation-only method, GenAP and ZO-AdaMM have an average success rate of 42.08% and 46.82%; For the location-only method, RHDE is 38.97%. However, LO, PatchAttack, and ours are 55.10%, 61.60% and 65.61%, respectively. Therefore, considering both factors can achieve a significant improvement. (2) Our simultaneous optimization is ahead of LO’s alternate optimization and PatchAttack’s two-step attack, which shows that simultaneous optimization can make better use of the inherent connection between the perturbation and location, so it is better than optimizing the two separately. (3) Our method achieves the optimal query efficiency among several methods, requiring only a few dozen queries.

5.2.3 Ablation study

In order to verify the effectiveness of each attack variable used in our method, we also report the results when each component is added separately. First, we fix weights as equal values and the step size as 0.1 to test the performance when only changing the position parameter. Then, we add the weight parameter to learn the importance of each surrogate model. Finally, we add the step size parameters to carry out the overall learning. The results in Figure 7 show that learning only the position parameter can achieve an average success rate of 69.80%. The performance is greatly improved after adding the weight, and further improved after adding the step size. All parameters contribute to the enhancement of the overall attack effect and the weight parameter has a greater impact on the results than the step size. In the process of adding components, the number of queries (shown in brackets) is basically maintained at the same level.

5.2.4 Performance against defense methods

Here, we test the attacks’ performance under two mainstream forms of defenses: preprocessing and adversarial training.

For the preprocessing defense, we explore the “detecting-repairing” methods, i.e., they first detect the region of the adversarial patch, and then repair the patch via different strategies. Specifically, we use three methods to defend our attack: Local Gradients Smoothing (LGS) [44] locates the noisy region based on the concentrated high-frequency changes introduced during the attack, and regularizes the gradient of this region to suppress the effect of adversarial noise. DW [45] locates local perturbations by the dense clusters of saliency maps in adversarial images, and then blocks the corresponding regions for defense. Februus [46] locates the influential region based on the heat map of GradCAM, removes the region and conducts image restoration for defense. The corresponding attack success rates (ASR) of dodging attacks against the above defense methods are shown in Table IV.

It can be seen that these methods cannot effectively weaken the attack performance. For LGS and Februus, ASR only drops slightly, by 1.01% and 3.37% on FaceNet, respectively. For DW, ASR decreases by 14.94% on FaceNet, but remains at an acceptable level with the ASR of 81.71%. We think this is because these methods do not locate/detect the local perturbations accurately. In the face classification task, the local patches often make the model decision solely affected by this small area [45], and thus localization is performed by the sensitive information exhibited by these local regions (e.g. frequency, saliency maps, and heatmaps of GradCAM). However, in the face recognition task, the model’s decision is affected by the combined effects of multiple regions on the face, and the addition of patches only affects the characteristics of a local facial feature. Before and after the attack, the region that the model focuses on is still scattered on each key area of the face instead of a certain area. After the attack, only the importance of a certain area will change slightly, so it is difficult to distinguish how it is different from the clean face image.

| FaceNet | ArcFace50 | CosFace50 | |

|---|---|---|---|

| LGS | 95.64% | 64.52% | 56.82% |

| (1.01%) | (7.57%) | (5.68%) | |

| DW | 81.71% | 46.10% | 45.45% |

| (14.94%) | (25.99%) | (17.05%) | |

| Februus | 93.28% | 66.22% | 46.88% |

| (3.37%) | (5.87%) | (15.62%) |

| FaceNet | ArcFace50 | CosFace50 | ||||

|---|---|---|---|---|---|---|

| ASR | NQ | ASR | NQ | ASR | NQ | |

| GDPA | 51.49% | - | 13.30% | - | 11.66% | - |

| (48.51%) | (74.89%) | (61.61%) | ||||

| Ours | 93.96% | 9 | 72.71% | 20 | 59.72% | 25 |

| (2.69%) | ( 2) | (0.62%) | ( 7) | (2.78%) | ( 2) | |

For adversarial training, we retrain the target model using adversarial examples generated by attack methods against this model, and then take the retrained robust model as the new target model to test the attack performance [19]. To demonstrate our superiority, we compare our method with GDPA [21] under the same adversarial training setting. GDPA [21] is a white-box attack method that also considers both positions and perturbations of the adversarial patch. The results of the attack success rate (ASR) and the number of queries (NQ) are shown in Table V, where the numbers in brackets denote the change value compared to the undefended model. It can be seen that adversarial training defends against GDPA attack well, when we use the same GDPA to attack the robust target models, the ASRs have a great drop (48.51% for FaceNet, 74.89% for ArcFace50, and 61.61% for CosFace50). This is reasonable because the generated adversarial examples by GDPA are integrated into the training set for these three models, and the target models have “seen” the adversarial examples. As a contrast, adversarial training does not defend against our attack, the ASR only has a slight change (i.e. 2.69%, 0.62%, 2.78% respectively), and it is still maintained at a relatively high level. The reason for this difference is that the patch’s positions chosen by GDPA on face images usually lie in the key facial features like eye, mouth, nose, etc. In other words, the learned position is constrained within a fixed scope (its original paper also shows this phenomenon). While the patch’s position chosen by our method can occur in any face area except for the key facial features. Therefore, it is difficult for adversarial training to learn the complete data distribution prevalent in our attack, which leads to the poor defense performance.

| Translations | Rotations | ||||

|---|---|---|---|---|---|

| 2pix | 3pix | 5pix | |||

| FaceNet | 96.65% | 90.59% | 79.24% | 93.12% | 83.21% |

| (0.00%) | (5.49%) | (16.84%) | (2.96%) | (12.87%) | |

| ArcFace50 | 71.36% | 56.91% | 53.11% | 63.85% | 53.20% |

| (0.72%) | (15.17%) | (18.97%) | (8.23%) | (19.46%) | |

| D | I | |

|---|---|---|

| FaceNet | 100.00% | 83.33% |

| ArcFace34 | 75.29% | 65.08% |

| CosFace50 | 61.95% | 46.71% |

| cml. API | 33.16% | 25.23% |

| dist. | light | ||||||

|---|---|---|---|---|---|---|---|

| D | FaceNet | 100.00% | 92.31% | 36.36% | 87.50% | 97.06% | 99.79% |

| ArcFace34 | 75.29% | 62.50% | 28.57% | 45.24% | 71.43% | 65.45% | |

| I | FaceNet | 83.33% | 70.23% | 35.75% | 62.50% | 88.24% | 41.38% |

| ArcFace34 | 65.08% | 54.17% | 23.81% | 57.14% | 60.71% | 41.81% | |

| Dodging | Impersonation | |||||||||||

| Random+ZO | SO+ZO | SO | Random+ZO | SO+ZO | SO | |||||||

| 65.79% | 1972 | 97.73% | 834 | 96.65% | 11 | 50.52% | 2874 | 87.37% | 1311 | 72.83% | 27 | |

5.2.5 Attacks in the physical world

We show the results of our adversarial patch in the physical environment. We first perform simulated successful attacks on different subjects in the digital environment, and then conduct experiments in the physical world.

To make digital simulation results better adapt to the physical environment, we process the smoothness of the perturbation pattern. Specifically, during each iteration of the pattern generation, we first obtain a pattern with half the size of the original patch by scaling down or averaging pooling, and then enlarge the image back to the original size by bilinear interpolation. The reduced pattern retains the key information of the pattern. After zooming, the smoothness of the image is improved, avoiding the problem that the pattern generated per pixel is sensitive to the position point when it is transferred to the physical environment. Even if the pasting position is slightly a few pixels away, the attack effect can still be preserved. We also try to use Total Variation (TV) [14] loss to enhance the smoothness, but the actual effect is not as good as the scaling process. When printing, we use photo paper rather than ordinary paper as the patch material to recreate the colors of the digital simulation as realistic as possible.

Before applying to the physical environment, we first verify the robustness to the translation and rotation changes in the digital world. We translate the patch by 2, 3, and 5 pixels, and rotate it by 5 and 15 degrees to test the performance of the attack. Table VI lists the attack success rate (ASR) of dodging attacks on FaceNet and ArcFace50 models. It can be seen that for translation, moving 2 pixels has little effect on the attack (ASR for FaceNe does not drop, ArcFace50 drops 0.72%). Even with a 5-pixel shift, the ASR of 79.24% and 53.11% can still be achieved on two models. For rotation, within 5 degrees of rotation, the ASR of the two models decreases slightly by 2.96% and 8.23%, respectively, which can meet our requirements for applying to the physical environment.

For the performance in the physical environment, we record the video when faces are moving within a small range of the current posture, and count the frame proportion of successful physical attacks as the attack success rate. Table VIII shows the results of the frontal face. To test the performance under various physical conditions, we further change the face posture, the distance from the camera and the illumination. For face postures, we take the conditions of the frontal face, yaw angle rotation of (the mean value at and ), and pitch angle rotation of . The results are shown in Table VIII.

It can be seen from Table VIII that our method maintains high physical ASR (100.0% and 83.33%) on FaceNet, and the results are also good on ArcFace34 and CosFace50. Although there is a slight decline in commercial API, the ratio of 33.16% and 25.23% of successful frames is enough to bring potential risks to commercial applications. In Table VIII, when the pose changes in a small range (, ), ASR still maintains a high value. Even if the deflection angle is slightly larger (), it can still maintain an average of 31.13%. The effect of distance is small, and when lighting is changed, the results are still at an acceptable level. When performing impersonation attacks under different conditions, the face can be recognized as a false identity different from the true identity, but not the target identity, which may lead to a slightly lower result. Visual results are shown in Figure 8.

5.3 Combined with gradient estimation attacks

The results of combining our simultaneous optimization with the gradient estimation attack are given in Table IX, where SO denotes the results output by our simultaneous optimization method. SO+ZO denotes the combined version of our SO method and Zeroth-Order (ZO) attack, i.e., using the adversarial patch of SO as the initialized values of ZO. Random+ZO denotes the ZO attack with random initialized values of ZO. From the table, we can see that SO+ZO outperforms random+ZO significantly. For the dodging attack, the ASR increases from 65.79% to 97.73%, and NQ decreases from 1972 to 834. For the impersonation attack, the ASR increases from 50.52% to 87.37%, and NQ decreases from 2874 to 1311. This contrast proves that our SO method indeed provides a good initialization for the gradient estimation attack, reducing their high query costs and improving their attack success. In addition, we also see SO+ZO obtains better ASR than SO for both the dodging and impersonation, but introduces much more query consumption.

6 Extensions to traffic sign recognition

We also use our method to attack the traffic sign recognition model to prove its wide applicability. We conduct experiments on the Tsinghua-Tencent 100K (TT100K) dataset [47] which contains many different types of Chinese traffic signs, covering a variety of weather and light conditions. Specifically, we use YOLOv4 [48] and YOLOv3 [49] as surrogate models, and NanoDet444https://github.com/topics/nanodet [50] as the target model to be attacked. We choose NanoDet because it can run on the mobile phone, which can simulate the vehicle perspective. The size of the adversarial patch is set as , and the allowable patch sticking region is restricted within the detection bounding box of the traffic sign predicted in the clean image. The rest of the attack settings are consistent with the face recognition task.

The attack success rate (ASR) and the number of queries (NQ) of untargeted and targeted attacks are shown in Table X. It can be seen that our attack can achieve ASR of 68.57% and 45.62% in untargeted and targeted attacks with only a few queries, respectively, which is similar to the face recognition task. This performance demonstrates the effectiveness of our method in the traffic sign recognition task, showing its generalization ability.

| Target model | Untargeted | Targeted | ||

| ASR | NQ | ASR | NQ | |

| NanoDet | 68.57% | 36 | 45.62% | 45 |

Several visual examples are shown in Figure 9. The bounding boxes are marked on the image, and the predicted category and confidence score are given below the image. In these four groups, the image on the left represents a clean example that has not been attacked, which can be correctly detected and classified by the target detection model. The picture on the right shows the corresponding adversarial example. For example, No U-Turn is recognized as Giving Way, and Keeping Right is recognized as No Parking. After being attacked by the adversarial patch, the traffic sign is still detected, but the prediction result of its category is wrong, even though its semantics does not change for humans.

7 Conclusion

In this paper, we proposed an efficient method to achieve the simultaneous optimization of the positions and perturbations to create an adversarial patch in the black-box setting. This process was formulated into a reinforcement learning framework. Through the design of the agent and different policy functions, the attack variables involving the patch’s position and perturbation can be simultaneously realized with a few query times. Extensive experiments demonstrated that our method could effectively improve the attack effect, and experiments on the commercial FR API and physical environments confirmed that it had the practical value and could be used to help evaluate the robustness of DNN models in real applications. Besides, our method can also be adapted to other applications, such as autonomous driving, etc.

Acknowledgment

This work is supported by National Key RD Program of China (Grant No.2020AAA0104002), National Natural Science Foundation of China (No.62076018). We also thank anonymous reviewers for their valuable suggestions.

References

- [1] Y. Guo, H. Wang, Q. Hu, H. Liu, L. Liu, and M. Bennamoun, “Deep learning for 3d point clouds: A survey,” IEEE transactions on pattern analysis and machine intelligence, 2020.

- [2] S. Minaee, Y. Y. Boykov, F. Porikli, A. J. Plaza, N. Kehtarnavaz, and D. Terzopoulos, “Image segmentation using deep learning: A survey,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021.

- [3] T. Baltrušaitis, C. Ahuja, and L.-P. Morency, “Multimodal machine learning: A survey and taxonomy,” IEEE transactions on pattern analysis and machine intelligence, vol. 41, no. 2, pp. 423–443, 2018.

- [4] C. Szegedy, W. Zaremba, I. Sutskever, J. Bruna, D. Erhan, I. Goodfellow, and R. Fergus, “Intriguing properties of neural networks,” arXiv preprint:1312.6199, 2013.

- [5] T. B. Brown, D. Mané, A. Roy, M. Abadi, and J. Gilmer, “Adversarial patch,” arXiv preprint:1712.09665, 2017.

- [6] K. Eykholt, I. Evtimov, E. Fernandes, B. Li, A. Rahmati, C. Xiao, A. Prakash, T. Kohno, and D. Song, “Robust physical-world attacks on deep learning visual classification,” in CVPR, 2018, pp. 1625–1634.

- [7] A. Liu, X. Liu, J. Fan, Y. Ma, A. Zhang, H. Xie, and D. Tao, “Perceptual-sensitive gan for generating adversarial patches,” in AAAI, vol. 33, 2019, pp. 1028–1035.

- [8] D. Karmon, D. Zoran, and Y. Goldberg, “Lavan: Localized and visible adversarial noise,” in ICML. PMLR, 2018, pp. 2507–2515.

- [9] H. Wang, G. Li, X. Liu, and L. Lin, “A hamiltonian monte carlo method for probabilistic adversarial attack and learning,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020.

- [10] K. Xu, G. Zhang, S. Liu, Q. Fan, M. Sun, H. Chen, P.-Y. Chen, Y. Wang, and X. Lin, “Adversarial t-shirt! evading person detectors in a physical world,” in ECCV, 2020, pp. 665–681.

- [11] S. Bai, Y. Li, Y. Zhou, Q. Li, and P. H. Torr, “Adversarial metric attack and defense for person re-identification,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 43, no. 6, pp. 2119–2126, 2020.

- [12] X. Yang, F. Wei, H. Zhang, and J. Zhu, “Design and interpretation of universal adversarial patches in face detection,” in ECCV, 2020, pp. 174–191.

- [13] S. Komkov and A. Petiushko, “Advhat: Real-world adversarial attack on arcface face id system,” arXiv preprint:1908.08705, 2019.

- [14] M. Sharif, S. Bhagavatula, L. Bauer, and M. K. Reiter, “A general framework for adversarial examples with objectives,” ACM Transactions on Privacy and Security (TOPS), vol. 22, no. 3, pp. 1–30, 2019.

- [15] X. Wei, Y. Guo, and J. Yu, “Adversarial sticker: A stealthy attack method in the physical world,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022.

- [16] M. Pautov, G. Melnikov, E. Kaziakhmedov, K. Kireev, and A. Petiushko, “On adversarial patches: real-world attack on arcface-100 face recognition system,” in SIBIRCON. IEEE, 2019, pp. 0391–0396.

- [17] Z. Xiao, X. Gao, C. Fu, Y. Dong, W. Gao, X. Zhang, J. Zhou, and J. Zhu, “Improving transferability of adversarial patches on face recognition with generative models,” in CVPR, 2021, pp. 11 845–11 854.

- [18] M. Sharif, S. Bhagavatula, L. Bauer, and M. K. Reiter, “Accessorize to a crime: Real and stealthy attacks on state-of-the-art face recognition,” in CCS, 2016, pp. 1528–1540.

- [19] S. Rao, D. Stutz, and B. Schiele, “Adversarial training against location-optimized adversarial patches,” in ECCV, 2020, pp. 429–448.

- [20] C. Yang, A. Kortylewski, C. Xie, Y. Cao, and A. Yuille, “Patchattack: A black-box texture-based attack with reinforcement learning,” in ECCV, 2020, pp. 681–698.

- [21] X. Li and S. Ji, “Generative dynamic patch attack,” BMVC, 2021.

- [22] A. Demontis, M. Melis, M. Pintor, M. Jagielski, B. Biggio, A. Oprea, C. Nita-Rotaru, and F. Roli, “Why do adversarial attacks transfer? explaining transferability of evasion and poisoning attacks,” in USENIX security, 2019, pp. 321–338.

- [23] Y. Dong, F. Liao, T. Pang, H. Su, J. Zhu, X. Hu, and J. Li, “Boosting adversarial attacks with momentum,” in CVPR, 2018, pp. 9185–9193.

- [24] Y. Dong, T. Pang, H. Su, and J. Zhu, “Evading defenses to transferable adversarial examples by translation-invariant attacks,” in CVPR, 2019, pp. 4312–4321.

- [25] G. Wang, H. Yan, Y. Guo, and X. Wei, “Improving adversarial transferability with gradient refining,” arXiv preprint:2105.04834, 2021.

- [26] Y. Liu, X. Chen, C. Liu, and D. Song, “Delving into transferable adversarial examples and black-box attacks,” arXiv preprint:1611.02770, 2016.

- [27] O. Ronneberger, P. Fischer, and T. Brox, “U-net: Convolutional networks for biomedical image segmentation,” in MICCAI, 2015, pp. 234–241.

- [28] Y. Li, “Deep reinforcement learning: An overview,” arXiv preprint:1701.07274, 2017.

- [29] P.-Y. Chen, H. Zhang, Y. Sharma, J. Yi, and C.-J. Hsieh, “Zoo: Zeroth order optimization based black-box attacks to deep neural networks without training substitute models,” in Proceedings of the 10th ACM workshop on artificial intelligence and security, 2017, pp. 15–26.

- [30] A. Ilyas, L. Engstrom, A. Athalye, and J. Lin, “Black-box adversarial attacks with limited queries and information,” in ICML. PMLR, 2018, pp. 2137–2146.

- [31] C.-C. Tu, P. Ting, P.-Y. Chen, S. Liu, H. Zhang, J. Yi, C.-J. Hsieh, and S.-M. Cheng, “Autozoom: Autoencoder-based zeroth order optimization method for attacking black-box neural networks,” in AAAI, vol. 33, 2019, pp. 742–749.

- [32] X. Jia, X. Wei, X. Cao, and X. Han, “Adv-watermark: A novel watermark perturbation for adversarial examples,” in ACMMM, 2020, pp. 1579–1587.

- [33] W. Dong, Z. Zhang, and T. Tan, “Attention-aware sampling via deep reinforcement learning for action recognition,” in AAAI, vol. 33, 2019, pp. 8247–8254.

- [34] R. S. Sutton, D. A. McAllester, S. P. Singh, Y. Mansour et al., “Policy gradient methods for reinforcement learning with function approximation.” in NeurIPs, vol. 99, 1999, pp. 1057–1063.

- [35] K. P. Murphy, Machine learning: a probabilistic perspective. MIT press, 2012.

- [36] R. J. Williams, “Simple statistical gradient-following algorithms for connectionist reinforcement learning,” Machine learning, vol. 8, no. 3-4, pp. 229–256, 1992.

- [37] Z. Ming, M. Visani, M. M. Luqman, and J. Burie, “A survey on anti-spoofing methods for face recognition with RGB cameras of generic consumer devices,” 2020. [Online]. Available: https://arxiv.org/abs/2010.04145

- [38] X. Chen, S. Liu, K. Xu, X. Li, X. Lin, M. Hong, and D. Cox, “Zo-adamm: Zeroth-order adaptive momentum method for black-box optimization,” NeurIPS, vol. 32, pp. 7204–7215, 2019.

- [39] P. D. Lax and M. S. Terrell, Calculus with applications, 2014.

- [40] F. Schroff, D. Kalenichenko, and J. Philbin, “Facenet: A unified embedding for face recognition and clustering,” in CVPR, 2015, pp. 815–823.

- [41] H. Wang, Y. Wang, Z. Zhou, X. Ji, D. Gong, J. Zhou, Z. Li, and W. Liu, “Cosface: Large margin cosine loss for deep face recognition,” in CVPR, 2018, pp. 5265–5274.

- [42] J. Deng, J. Guo, N. Xue, and S. Zafeiriou, “Arcface: Additive angular margin loss for deep face recognition,” in CVPR, 2019, pp. 4690–4699.

- [43] C. Szegedy, S. Ioffe, V. Vanhoucke, and A. Alemi, “Inception-v4, inception-resnet and the impact of residual connections on learning,” in AAAI, vol. 31, 2017.

- [44] M. Naseer, S. Khan, and F. Porikli, “Local gradients smoothing: Defense against localized adversarial attacks,” in WACV. IEEE, 2019, pp. 1300–1307.

- [45] J. Hayes, “On visible adversarial perturbations & digital watermarking,” in CVPR Workshop, 2018, pp. 1597–1604.

- [46] B. G. Doan, E. Abbasnejad, and D. C. Ranasinghe, “Februus: Input purification defense against trojan attacks on deep neural network systems,” in Annual Computer Security Applications Conference, 2020, pp. 897–912.

- [47] Z. Zhu, D. Liang, S. Zhang, X. Huang, B. Li, and S. Hu, “Traffic-sign detection and classification in the wild,” in CVPR, 2016, pp. 2110–2118.

- [48] A. Bochkovskiy, C.-Y. Wang, and H.-Y. M. Liao, “Yolov4: Optimal speed and accuracy of object detection,” arXiv preprint arXiv:2004.10934, 2020.

- [49] J. Redmon and A. Farhadi, “Yolov3: An incremental improvement,” arXiv preprint arXiv:1804.02767, 2018.

- [50] RangiLyu, “Nanodet-plus: Super fast and high accuracy lightweight anchor-free object detection model.” https://github.com/RangiLyu/nanodet, 2021.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/8591f21a-558d-4e2b-b862-851bbbbe1c30/wei.jpg) |

Xingxing Wei received his Ph.D degree in computer science from Tianjin University, and B.S. degree in Automation from Beihang University, China. He is now an Associate Professor in Beihang University (BUAA). His research interests include computer vision, adversarial machine learning and its applications to multimedia content analysis. He is the author of referred journals and conferences in IEEE TPAMI, TMM, TCYB, TGRS, IJCV, PR, CVIU, CVPR, ICCV, ECCV, ACMMM, AAAI, IJCAI etc. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/8591f21a-558d-4e2b-b862-851bbbbe1c30/gy.jpg) |

Ying Guo is now a Master student at School of Computer Science and Engineering, Beihang University (BUAA). Her research interests include deep learning and adversarial robustness in machine learning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/8591f21a-558d-4e2b-b862-851bbbbe1c30/yj.jpg) |

Jie Yu is now a Master student at School of Computer Science and Engineering, Beihang University (BUAA). His research interests include deep learning, compter vision and adversarial robustness. |

Bo Zhang is the senior researcher with the Tencent Corporation in Shenzhen, China. His research interest is AI security.