SimpsonsVQA: Enhancing Inquiry-Based Learning with a Tailored Dataset

Abstract

Visual Question Answering (VQA) has emerged as a promising area of research to develop AI-based systems for enabling interactive and immersive learning. Numerous VQA datasets have been introduced to facilitate various tasks, such as answering questions or identifying unanswerable ones. However, most of these datasets are constructed using real-world images, leaving the performance of existing models on cartoon images largely unexplored. Hence, in this paper, we present “SimpsonsVQA”, a novel dataset for VQA derived from The Simpsons TV show, designed to promote inquiry-based learning. Our dataset is specifically designed to address not only the traditional VQA task but also to identify irrelevant questions related to images, as well as the reverse scenario where a user provides an answer to a question that the system must evaluate (e.g., as correct, incorrect, or ambiguous). It aims to cater to various visual applications, harnessing the visual content of “The Simpsons” to create engaging and informative interactive systems. SimpsonsVQA contains approximately 23K images, 166K QA pairs, and 500K judgments (https://simpsonsvqa.org). Our experiments show that current large vision-language models like ChatGPT4o underperform in zero-shot settings across all three tasks, highlighting the dataset’s value for improving model performance on cartoon images. We anticipate that SimpsonsVQA will inspire further research, innovation, and advancements in inquiry-based learning VQA.

1 Introduction

Visual Question Answering (VQA) is a promising research field that lies at the intersection of Computer Vision (CV) and Natural Language Processing (NLP) to enable machines to answer questions about visual content [6, 56, 73, 43, 22]. The research interest in VQA has encouraged the creation of numerous datasets for constructing and evaluating VQA models including VQA v1.0 [6], VQA v2.0 [22], and GQA [26]. In addition, many datasets are purposefully crafted for specialized applications in practical domains such as healthcare [24, 36, 1], diagnosing medical images [38, 20], cultural heritage [58, 19], aiding customer service [7], enhancing entertainment experiences [21], and generating captions for social media content [65].

Despite the keen interest in VQA, the majority of the aforementioned datasets are primarily designed for Scenario (1), where individuals ask relevant questions about the content of an image, often with the aim of aiding visually impaired people [6, 22]. Conversely, several datasets have emerged to tackle Scenario (2), involving individuals posing irrelevant questions [55, 60, 10, 54, 64, 23, 62, 12, 48, 40, 44]. These questions should be intentionally left unanswered to prevent confusion and promote trust. We argue in this paper that the existing literature has overlooked Scenario (3), where an individual provides an answer to a question related to an image, requiring the system to evaluate it, e.g., “Correct”, “Incorrect”, or “Ambiguous”. These three scenarios are particularly relevant for individuals with cognitive impairments and within educational contexts, especially early-age education. In these settings, individuals with developmental disorders may not only ask coherent questions but also pose irrelevant or inconsistent ones and provide incorrect answers related to visual content.

In this paper, we present “SimpsonsVQA”, a unified VQA dataset that can be used to address the three scenarios described above, fostering the development of intelligent systems that promote inquiry-based learning for individuals with cognitive disabilities and within early-age education. Unlike traditional datasets that primarily feature photorealistic images, our dataset leverages the cartoon imagery from The Simpsons TV show, chosen for its cultural relevance and the ability to explore domain gaps between cartoon and real-world images. We also argue that scenery, cartoons, and sketches are integral parts of our lives and should be considered in developing AI applications. Additionally, the dataset provides a valuable opportunity to assess the robustness of large vision-language models, such as Llava [42], which are predominantly trained on photorealistic datasets.

SimpsonsVQA incorporates triples consisting of images, questions, and answers, along with evaluation judgments. For example, in Figure 1, we show a sample of images along with free-form, open-ended, natural-language questions about the images, as well as their corresponding natural-language answers. The image in Figure 1(a) is linked to a relevant question and a correct answer. The image in Figure 1(b) has a relevant question and an ambiguous or partially correct answer. Meanwhile, the image in Figure 1(c) is associated with a relevant question but an incorrect answer, and the image in Figure 1(d) is paired with an irrelevant question. In total, SimpsonsVQA contains approximately 23K images, 166K QA pairs, and 500K judgments.

We summarize the key contributions of this work as follows: (i) we introduce a new VQA task, specifically focused on assessing candidate answers; (ii) we present “SimpsonsVQA”, a unified VQA dataset that is explicitly tailored to address the aforementioned tasks to foster inquiry-based learning; and (iii) we conduct a comprehensive evaluation with the aim to benchmark the SimpsonsVQA dataset using various state-of-the-art VQA models. SimpsonsVQA has real-world applications in enhancing educational tools and assistive technologies by enabling interactive, inquiry-based learning through AI systems that can handle diverse visual questions, assess relevance, and evaluate answer correctness, thereby supporting early-age education and individuals with cognitive impairments. Besides, our findings indicate that while models trained on the SimpsonsVQA dataset perform well on our cartoon-based test set, advanced models like Llava [41] and ChatGPT4o [52], which typically excel in various zero-shot VQA challenges, encounter difficulties when dealing with cartoon images.

2 Related Work

Existing datasets: Several VQA datasets have been introduced for research purposes, as summarized in Table 1. The VQA v1.0 dataset [6] is often credited with popularizing the VQA task. It was introduced as one of the first large-scale VQA datasets, containing real-world images paired with open-ended questions, and it has been widely used to develop and benchmark VQA models. Later, it was expanded and improved in VQA v2.0 [22] to address language bias, establishing itself as the benchmark dataset for the VQA task. Since then, various datasets with diverse objectives have been released, such as DAQUAR [45], which focuses on indoor scenes, Visual Genome [35] and Visual7W [74], which provide information about the relationships between objects, CLEVR [28], in which images are rendered geometric shapes, and GQA [26] for visual reasoning and compositional answering. As shown in Table 1, some datasets have images, questions and/or answers generated and/or selected entirely through manual processes, while others involved automated procedures [66, 56, 45, 24]. While existing VQA datasets, such as VQA v2.0 and GQA, have advanced the field by providing large-scale benchmarks for evaluating model performance on photorealistic images, they often lack the complexity introduced by non-photorealistic images like those in SimpsonsVQA.

| ID | Name | Year | Domain | #Images | #Questions | Type | Automated |
|---|---|---|---|---|---|---|---|
| 1 | VQA v1.0 [6] | 2015 | General | 204,721 | 614,163 | OE&MC | No |
| 2 | VQA v1.0 [6] | 2015 | Abstract Scene | 50,000 | 150,000 | OE&MC | No |
| 3 | COCO-QA [56] | 2015 | General | 123,287 | 117,684 | OE | Yes |
| 4 | Binary-VQA [73] | 2015 | Abstract Scene | 50,000 | 150,000 | MC | No |
| 5 | FM-IQA [18] | 2015 | General | 158,392 | 316,193 | OE | No |
| 6 | KB-VQA [67] | 2015 | KB-VQA | 700 | 2,402 | OE | No |
| 7 | VG [35] | 2016 | General | 108,077 | 1,700,000 | OE | Yes |
| 8 | SHAPE [5] | 2016 | Abstract Shape | 15,616 | 244 | MC | Yes |
| 9 | Art-VQA [58] | 2016 | Cultural Heritage | 16 | 805 | OE | No |
| 10 | FVQA [66] | 2017 | KB-VQA | 1,906 | 4,608 | OE | Yes |
| 11 | DAQUAR [45] | 2017 | General | 1,449 | 12,468 | OE | Yes |
| 12 | Visual7W [74] | 2017 | General | 47,300 | 327,939 | MC | Yes |
| 13 | VQA v2.0 [22] | 2017 | General | 200,000 | 1,100,000 | OE&MC | No |
| 14 | CLEVR [28] | 2017 | Geometric Shapes | 100,000 | 853,554 | OE | Yes |
| 15 | VQA-CP1 [3] | 2017 | General | 205,000 | 370,000 | OE | No |
| 16 | VQA-CP2 [3] | 2017 | General | 219,000 | 658,000 | OE | No |
| 17 | AD-VQA [27] | 2017 | Advertisement | 64,832 | 202,090 | OE | No |
| 18 | TDIUC [29] | 2020 | General | 167,437 | 1,654,167 | OE | Yes |
| 19 | VQA-MED-18 [24] | 2018 | Medical | 2,866 | 6,413 | OE | Yes |
| 20 | VQA-RAD [36] | 2018 | Medical | 315 | 3,515 | OE | No |
| 21 | VizWiz [23] | 2018 | VIP | 32,842 | 32,842 | OE | No |
| 22 | VQA-MED-19 [2] | 2019 | Medical | 4,200 | 15,292 | OE | Yes |
| 23 | TextVQA [59] | 2019 | Text-VQA | 28,408 | 45,336 | OE | No |
| 24 | OCR-VQA [50] | 2019 | Text-VQA | 207,572 | 1,002,146 | OE | Yes |
| 25 | STE-VQA [69] | 2019 | Text-VQA | 21,047 | 23,887 | OE | No |
| 26 | ST-VQA [11] | 2019 | Text-VQA | 22,020 | 30,471 | OE | No |
| 27 | OK-VQA [47] | 2019 | KB-VQA | 14,031 | 14,055 | OE | No |
| 28 | GQA [26] | 2019 | General | 113,000 | 22,000,000 | OE | Yes |
| 29 | LEAF-QA [13] | 2019 | FigureQA | 240,000 | 2,000,000 | OE | Yes |
| 30 | DOC-VQA [49] | 2020 | Text-VQA | 12,767 | 50,000 | OE | No |
| 31 | AQUA [19] | 2020 | Cultural Heritage | 21,383 | 32,345 | OE | Yes |
| 32 | RSVQA-low [23] | 2020 | Remote Sensor | 772 | 77,232 | OE | Yes |
| 33 | RSVQA-high [23] | 2020 | Remote Sensor | 10,659 | 1,066,316 | OE | Yes |
| 34 | VQA-MED-20 [1] | 2020 | Medical | 5,000 | 5,000 | OE | Yes |
| 35 | RadVisDial [33] | 2020 | Medical | 91,060 | 455,300 | OE | Yes |
| 36 | PathVQA [25] | 2020 | Medical | 4,998 | 32,799 | OE | Yes |
| 37 | VQA-MED-21 [8] | 2021 | Medical | 5,500 | 5,500 | OE | Yes |
| 38 | SLAKE [39] | 2021 | Medical | 642 | 14,000 | OE | No |
| 39 | GeoQA [15] | 2021 | Geometry Problems | 5,010 | 5,010 | MC | No |
| 40 | VisualMRC [61] | 2021 | Text-VQA | 10,197 | 30,562 | OE | Yes |
| 41 | A-OKVQA [57] | 2022 | KB-VQA | 23,700 | 37,687 | OE | No |
| 42 | VizWiz-Ground [14] | 2022 | VIP + AG | 9,998 | 9,998 | - | Yes |
| 43 | WSDM Cup [63] | 2023 | AG | 45,119 | 45,119 | - | No |
| 44 | SimpsonsVQA | 2024 | Cartoon | 23,269 | 103,738 | OE | Yes |

Question relevance: The common assumption when gathering responses to visual questions is that questions can be answered using the provided image [6, 5, 18, 22, 28, 35, 45, 56, 67, 66]. However, in practice, not everyone asks questions directly related to the visual content [17], especially early-age learners. In VQA v1.0 [6], Ray et al. [55] conducted a study where they randomly selected 10,793 question-image pairs from a pool of 1,500 unique images. Their findings revealed that 79% of the questions were unrelated to the corresponding images. Hence, a VQA system should avoid answering a question that is irrelevant to the image, as doing so may lead to considerable confusion and a lack of trust. Question relevance has been extensively explored in the literature, leading to the development of numerous methods and algorithms aimed at avoiding answering irrelevant questions. Notable contributions include works such as [55, 60, 10, 54, 64, 23, 62, 12, 48, 44]. Our work with the SimpsonsVQA dataset expands on previous efforts by integrating both relevant and irrelevant questions. Testing models with irrelevant queries enhances their ability to distinguish between relevant and unrelated inputs, improving reliability and ensuring accurate responses in real-world applications like educational and assistive technologies.

Answer Correctness: While recent studies on VQA responses focus on abstaining when uncertain to handle negative pairs and improve reliability [70], our method directly evaluates answers without abstaining, ensuring scalability and flexibility in real-world applications like education, assistive technologies, and customer service. The most relevant work, LAVE [46], uses an LLM to evaluate answers based on reference alignment and context from the question and image. However, LAVE’s reliance on reference answers limits independent evaluation. Our approach directly evaluates answers to image-based questions, eliminating the need for reference answers and enabling scalable, flexible, and autonomous VQA systems.

3 SimpsonsVQA Dataset

We provide in the following an overview of the dataset.

3.1 Dataset Creation

Due to the constraints imposed by limited time and budget, we adopted a pragmatic approach of automation to streamline the dataset construction process. In fact, many datasets listed in Table 1 have been created through partial automation methods [35, 66, 56, 45, 24, 5, 74, 28, 2, 50]. To accomplish this, we employed a three-step approach: (1) harnessing the capabilities of Machine Learning models, particularly captioning models, to extract descriptions for each image; (2) employing ChatGPT to generate a diverse set of question-answer pairs using the obtained descriptions; and ultimately, (3) conducting a meticulous manual review by qualified workers on the AMT platform to obtain judgments of accuracy and reliability. In the following subsections, we provide a detailed description of these steps.

Image Collection: We have collected cartoon images from the popular American sitcom, “The Simpsons”. Focusing on seasons 24 to 33, which include 220 episodes and approximately 80 hours of content, we used an automated process to capture images every 5 seconds, initially gathering around 43,000 images. After manually filtering out about 1,200 inappropriate images (containing violence, weapons, or sexual content) and excluding images lacking substantial content, we employed the NN algorithm [16] (with ) to remove duplicates. This process resulted in a final dataset of 23,269 images.
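The duplicate-removal step can be illustrated with a minimal sketch. The text above does not state the image features or the parameter used for the nearest-neighbor search in recoverable form, so the toy feature vectors, the Euclidean distance, and the `threshold` value below are assumptions made purely for illustration; `drop_near_duplicates` is a hypothetical helper, not the procedure actually used to build the dataset.

```python
import math
from typing import List, Sequence


def euclidean(u: Sequence[float], v: Sequence[float]) -> float:
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def drop_near_duplicates(features: List[Sequence[float]], threshold: float) -> List[int]:
    """Return the indices of the frames to keep.

    A frame is kept only if its nearest neighbor among the frames already
    kept is farther away than `threshold` (an assumed, illustrative value).
    """
    kept: List[int] = []
    for idx, feat in enumerate(features):
        nearest = min((euclidean(feat, features[k]) for k in kept), default=math.inf)
        if nearest > threshold:
            kept.append(idx)
    return kept


# Toy 2-D vectors standing in for per-frame image descriptors (hypothetical).
frames = [(0.0, 0.0), (0.05, 0.0), (1.0, 1.0), (1.0, 1.02), (3.0, 0.0)]
kept_indices = drop_near_duplicates(frames, threshold=0.1)
print(kept_indices)
```

In this toy run, the second and fourth frames lie within the threshold of a frame that was already kept, so they are treated as duplicates. The greedy scan is quadratic in the number of frames; at the scale of tens of thousands of images an index-based nearest-neighbor search would likely be preferable.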

Image Captioning: Image Captioning [65] combines CV and NLP to generate descriptive captions for images. We used the captioning OFA model [68], originally trained on COCO [37], which typically generates short captions focused on basic object descriptions. To enable the generation of richer, longer captions capable of supporting diverse question-answer pairs per image, we fine-tuned OFA using the Localized Narratives [53] and Image Paragraph Captioning datasets [34]. These datasets enhanced the coherence and richness of multi-sentence descriptions. Notably, the fine-tuned model might not have captured character names or specific nuances of the TV show. Since the questions were generated from the visual captions, they are primarily focused on visual elements. While the questions are generally clear, some present a greater level of difficulty. For instance, the question “What is the object in the hand on the left-back wall?” refers to a phone, which may not be immediately obvious without context or additional visual cues.

Generating Question-Answer Pairs: We used ChatGPT [51] to generate at least 10 question-answer pairs along with question topics for each image description, allowing the model to produce answers and topics freely to capture diverse responses. Initially, we obtained over 1,000 unique answers. After manually removing inconsistent questions (e.g., those with predictable answers like “yellow” for skin color), we normalized the dataset by standardizing numerical answers (e.g., mapping both “one” and “1” to “1”). To maintain simplicity and focus, we also dropped any answers that were longer than a single word or phrase, ensuring that the dataset remained concise and consistent. As a result, we finalized a dataset of 166,533 image-question-answer triples with around 200 unique answers.
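The normalization described above can be sketched as follows. This is a minimal illustration rather than the actual preprocessing script: the `NUMBER_WORDS` table, the lower-casing and punctuation stripping, and the strict single-word filter are assumptions (the text only gives the “one”/“1” example and mentions dropping longer answers), and `normalize_answer` is a hypothetical helper name.

```python
from typing import Optional

# Assumed mapping from number words to digits; the text only gives the
# example of "one" and "1" both becoming "1".
NUMBER_WORDS = {
    "zero": "0", "one": "1", "two": "2", "three": "3", "four": "4",
    "five": "5", "six": "6", "seven": "7", "eight": "8", "nine": "9",
    "ten": "10",
}


def normalize_answer(raw: str) -> Optional[str]:
    """Canonicalize one generated answer; return None if it should be dropped."""
    answer = raw.strip().lower().rstrip(".")
    # Assumption: keep single-word answers only and drop everything longer.
    if not answer or len(answer.split()) > 1:
        return None
    return NUMBER_WORDS.get(answer, answer)


raw_answers = ["One", " 1 ", "Yellow.", "a red car"]
cleaned = [normalize_answer(a) for a in raw_answers]
print(cleaned)
```

Returning `None` for dropped answers keeps the filtering decision explicit, so the caller can count how many triples were removed at this stage.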

Assessing image-question-answer triples: The above models are prone to errors, which can lead to the generation of irrelevant questions and/or incorrect answers. We acknowledge that the generated questions and answers may not reflect typical human learner errors, potentially creating a domain gap, which is important to consider for real-world applicability. We employed the Amazon Mechanical Turk (AMT) platform to assess each image, question, and answer triple, using the interface depicted in Figure 2. In particular, we engaged human evaluators through the AMT platform and tasked them with evaluating each triple according to particular criteria. Initially, workers are presented with an image and a question, and then they are prompted to determine whether the question directly relates to the content of the image, offering a binary choice between “relevant” or “irrelevant”. If the worker chooses “irrelevant”, no additional action is needed for the given triple as in the case shown in Figure 2(a). Otherwise, as depicted in Figure 2(b), the worker must evaluate the accuracy of the answer to the question and its alignment with the image context by selecting one of these options: (i) incorrect, indicating that the provided answer is entirely wrong; (ii) ambiguous or partially correct, suggesting that the answer is unclear, open to interpretation, or it includes some correct details but also incorporates incorrect or irrelevant elements, making its validity hard to determine; and (iii) correct, implying that the answer is precise and directly addresses both the question and image.

To ensure the integrity of the evaluation process, each triple was assessed by three different workers. Rigorous eligibility criteria were enforced, allowing only individuals with a minimum approval rate of 99% and a track record of at least 10,000 approved HITs (Human Intelligence Tasks) to participate in the evaluation of the triples. To mitigate fraudulent or unreliable evaluations, each HIT required a minimum of 1 minute to be completed.

3.2 Task Description

Considering a set of images $\mathcal{I}$, a set of questions $\mathcal{Q}$, and a set of possible answers $\mathcal{A}$, the SimpsonsVQA dataset is designed to emphasize three tasks as described below.

Conventional VQA Task: Given a dataset $\mathcal{D}_{vqa} = \{(i_j, q_j, a_j)\}_{j=1}^{n}$ with $n$ instances, where $i_j \in \mathcal{I}$, $q_j \in \mathcal{Q}$, and $a_j \in \mathcal{A}$, the objective of this task is to develop a classification algorithm that learns a mapping function $f: \mathcal{I} \times \mathcal{Q} \rightarrow \mathcal{A}$, which associates each image-question pair $(i_j, q_j)$ with its corresponding correct answer $a_j$. This initial task embodies the conventional VQA scenario, where an individual poses a question that the system is tasked to answer.

Question relevance Task: Consider a dataset $\mathcal{D}_{rel} = \{(i_j, q_j, r_j)\}$, where $r_j \in \{0, 1\}$ represents a binary label indicating the relevance of a question to an image. The objective of this task is to formulate a classification algorithm, denoted as $g$, aimed at learning a mapping $g: \mathcal{I} \times \mathcal{Q} \rightarrow \{0, 1\}$ that associates each image-question pair $(i_j, q_j)$ with its corresponding binary label $r_j$. In this scenario, an individual poses a question, and the system is required to assess its relevance to a provided image.

Answer correctness Task: Consider a dataset $\mathcal{D}_{cor} = \{(i_j, q_j, a_j, c_j)\}$, where $c_j \in \{\text{Correct}, \text{Ambiguous}, \text{Incorrect}\}$ denotes a label signifying the alignment of an answer with an image-question pair. The objective is to formulate a classification algorithm denoted as $h$, aimed at learning a mapping $h: \mathcal{I} \times \mathcal{Q} \times \mathcal{A} \rightarrow \{\text{Correct}, \text{Ambiguous}, \text{Incorrect}\}$ that associates each image-question-answer triple $(i_j, q_j, a_j)$ with its corresponding label $c_j$. This final task represents the scenario where an individual provides an answer to a question related to an image that the system must evaluate.

Overall, we believe that the three aforementioned scenarios hold significant relevance within the realm of assistive technology applications. Our overarching goal is to craft interactive systems that are both captivating and enlightening, fostering and facilitating inquiry-based learning experiences.

| Split | #Images | #QA pairs |
|---|---|---|
| Train | 13,961 | 115,663 |
| Validation | 3,490 | 21,949 |
| Test | 5,818 | 28,921 |
| Total | 23,269 | 166,533 |

4 SimpsonsVQA Dataset Analysis

In this section, we analyze various aspects of the SimpsonsVQA dataset, including its characteristics and distribution patterns, while also providing insights obtained from analyzing its content. As reported in Table 2, the dataset is partitioned into three subsets: train, validation, and test.

It is important to note that, in order to uphold the integrity and confidentiality of the evaluation procedure, the test set remains both private and undisclosed.

4.1 Question Analysis

A total of 1,633 workers from AMT evaluated all the image-question-answer triples in our dataset. As mentioned earlier, each triple has undergone evaluation by three distinct workers, each providing judgments on two aspects: (1) the question’s relevance to the image content, and (2) the accuracy of the answer in relation to the given image context. As illustrated in Figure 3, approximately 66% of the questions generated by ChatGPT (totaling 80,137 questions) have been assessed as relevant by at least 2 workers for the corresponding images. In contrast, the remaining 34% of the generated questions (35,526 questions) lack relevance to the images.

Question Types: Popular datasets like VQA v2.0 and VizWiz lack clearly defined question types, and while TDIUC [29] generates template-based questions, our dataset uses ChatGPT to create more diverse and nuanced questions, enhancing its richness, as shown in Figure 4. The majority of questions, approximately 55%, start with the word “what”. Next are questions beginning with “is” and “how”, each accounting for between 12% and 20% of the questions. Conversely, questions beginning with words like “are”, “who”, and “where” make up a significantly smaller proportion. Furthermore, the most frequent question patterns include variations such as “what is the color…”, “what color…”, and “what is the man/woman/person doing/holding”. Additionally, a substantial number of questions involve positional inquiries, such as “what is on/in/behind…”, and there is also a significant presence of “how many…” questions.
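A first-word breakdown of this kind can be computed with a few lines of code. The sketch below is only an illustration of the idea: `first_word_distribution` is a hypothetical helper, the sample questions are invented to mirror the patterns mentioned above, and grouping strictly by the first lower-cased token is an assumption about how question types were derived.

```python
from collections import Counter
from typing import Dict, Iterable


def first_word_distribution(questions: Iterable[str]) -> Dict[str, float]:
    """Fraction of questions starting with each (lower-cased) first word."""
    counts = Counter(q.strip().lower().split()[0] for q in questions if q.strip())
    total = sum(counts.values())
    return {word: count / total for word, count in counts.most_common()}


# Invented sample questions, for illustration only.
sample = [
    "What is the color of the car?",
    "What is the man holding?",
    "Is the door open?",
    "How many people are there?",
]
distribution = first_word_distribution(sample)
print(distribution)
```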

Question Topics: As shown in Figure 5, the questions cover a wide range of topics, encompassing attribute classification (38%), object recognition (29%), counting (12%), spatial reasoning (10%), and action recognition (9%). The remaining topics collectively represent a negligible percentage, totaling only about 2% of the questions.

These diverse topics play a crucial role in fostering various developmental abilities for inquiry-based learning in early-age education. Figure 6 presents individual word cloud visualizations for each question type, capturing the distinctive vocabulary associated with different question categories. Each cloud highlights the frequency of specific terms, offering a visual insight into the unique linguistic characteristics of various question types.

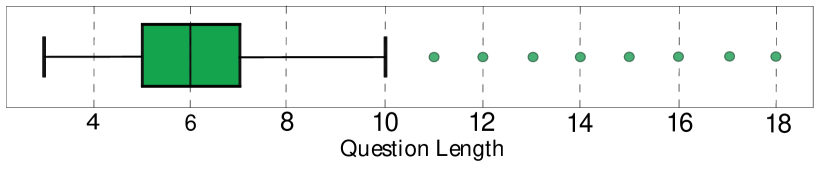

Question Length: Figure 7 illustrates the distribution of question lengths, revealing that the majority of questions fall within the range of 3 (e.g., “are there balloons?”) to 10 words, with a median of 6 words. Notably, the longest question observed has a length of 18 words, which is comparable to those found in other general VQA datasets.

4.2 Answer Analysis

Figure 8 illustrates that approximately 51% of the triples were assessed as “Correct” by at least two workers, while roughly 42% were deemed “Incorrect” by at least two workers, and around 6% were labeled as “Ambiguous” by at least two workers.

In Figure 9, we notice that around 52,000 triples were judged “Correct” by all three workers, and more than 18,000 triples were judged “Correct” by exactly two workers. On the other hand, around 45,000 triples were unanimously judged as “Incorrect” by all workers, and approximately 14,000 triples were labeled “Incorrect” by exactly two workers. The number of triples judged ambiguous or partially correct was small.

Popular Answers: All answers within the dataset consist of a single word. Figure 10(a) displays the top 30 answers with the highest frequency in the training set. Notably, the answer “yes” predictably holds the top position, constituting 25% of the answers, maintaining a notable lead of 11% over the second-place answer, “no”. Among the 15 most frequent answers, the majority tend to revolve around numbers or colors. This characteristic makes the dataset particularly suitable for educational applications. Finally, as shown in Figure 10(b), 27% of the questions prompted “yes” or “no” responses, while 12% of the questions received numerical answers. The remaining 61% of questions were answered in diverse ways.

Answers and Question Types: Figure 11 shows how different question types are answered. Questions starting with words like “are”, “can”, “do”, “does”, and “is” are mostly answered with “Yes” or “No”. Surprisingly, questions beginning with “How” not only receive numeric answers but also frequently involve words like “many” and “groups”. On the other hand, questions starting with “what”, “where”, and “who” have a wider variety of possible answers.

5 Experimental Evaluation

In this section, we assess the effectiveness of various deep-learning models for the tasks outlined in Section 3.2, utilizing the SimpsonsVQA dataset.

Baselines: To benchmark SimpsonsVQA, we employed several VQA models, namely LSTM Q + I [6], MLB [30], MLB+Att [30], MUTAN [9], MUTAN+Att [9], BUTD [4], and MCAN [71], which were evaluated across the three tasks with minor adaptations. For the Answer Correctness task, which involves image, question, and answer inputs, we passed the answer through a word-embedding layer and a dense layer, then merged it with the question embedding via element-wise multiplication. Additionally, we used recent advanced LVLMs like LLaVa 1.5 [41], LLaVa-Next [42], and ChatGPT-4o [52] in a zero-shot setting. For the Conventional VQA task, we fine-tuned LVLMs such as ViLT [31], OFA [68], and X-VLM [72]. For the Question Relevance task, we included QC Similarity [55] and QQ’ Similarity [55].
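The adaptation for the Answer Correctness task can be written compactly. The notation below is introduced here for illustration and is not taken from the original model descriptions: $e_a$ is the word embedding of the candidate answer, $W_a$ and $b_a$ are the parameters of the dense layer, $\phi$ is its activation function (not specified in the text), and $h_q$ is the question embedding produced by the underlying VQA model.

```latex
h_a = \phi\left(W_a e_a + b_a\right), \qquad \tilde{h}_q = h_q \odot h_a
```

Here $\odot$ denotes element-wise multiplication, which requires $h_a$ and $h_q$ to have the same dimensionality. Presumably the fused vector $\tilde{h}_q$ then takes the place of the question embedding in the rest of each model, although the text does not spell this out.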

Metrics: We employ the standard accuracy metric as our primary evaluation criterion. Additionally, we use Precision, Recall, F1-score, and AUC score to ensure a thorough and comprehensive assessment.
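As a concrete illustration of these metrics, the following minimal sketch computes accuracy and per-class precision, recall, and F1 from label lists (AUC is not covered). The helper names and the `"rel"`/`"irrel"` label strings are assumptions for illustration. The worked example applies a classifier that always predicts “relevant” to a test split with 14,680 relevant and 14,241 irrelevant questions, the counts reported in Table 5.

```python
from typing import Dict, Sequence


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of predictions that match the ground-truth labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_metrics(y_true: Sequence[str], y_pred: Sequence[str], label: str) -> Dict[str, float]:
    """Precision, recall, and F1 for one class, treating it as the positive class."""
    tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
    fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
    fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Always-"relevant" classifier on the test split sizes from Table 5.
y_true = ["rel"] * 14680 + ["irrel"] * 14241
y_pred = ["rel"] * len(y_true)

acc = accuracy(y_true, y_pred)
rel = per_class_metrics(y_true, y_pred, "rel")
irrel = per_class_metrics(y_true, y_pred, "irrel")
print(round(acc, 4), rel, irrel)
```

The resulting values (accuracy and precision of about 0.5076, recall of 1.0, and F1 of about 0.6734 for the relevant class, and zeros for the irrelevant class) agree with the Majority Vote row of Table 6 up to the last reported digit.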

Implementation details: All models were implemented in PyTorch following their original implementations and were trained on a machine running Ubuntu 18.04.1 LTS with dual Intel(R) Xeon(R) Silver CPUs @ 2.20GHz and an NVIDIA Tesla V100 GPU. All models were trained using the Adam optimizer [32] for 15 epochs, while fine-tuned LVLMs were trained for an additional 4 epochs.

5.1 Results of the Conventional VQA Task

| Dataset | #Images | #QA Pairs |
|---|---|---|
| Train | 13,936 | 60,643 |
| Validation | 3,451 | 9,764 |
| Test | 5,409 | 10,507 |
| Total | 22,796 | 80,914 |

SimpsonsVQA dataset: We curated a dataset that includes only triples that at least 2 workers assessed as “Correct”, ensuring the inclusion of only high-quality triples for evaluation. Table 3 displays the size of the resulting dataset.

Results analysis: Table 4 presents the performance obtained on the Conventional VQA Task. The results show that traditional VQA models, such as LSTM Q + I, SAN, MLB, and their attention-enhanced variants, exhibit moderate accuracy with particular strength in the “Yes/No” questions but notably weaker performance in the “Number” and “Other” categories.

In contrast, fine-tuned LVLMs such as ViLT, X-VLM, and OFA demonstrate superior performance across all categories, showing a substantial increase in accuracy for “Number” questions, with OFA achieving 85.45% and leading in the “Other” category at 76.22%. The overall accuracy rates of these LVLMs significantly exceed those of traditional models, with OFA reaching the highest overall accuracy of 82.00%. Zero-shot models, including LLaVa 1.5, LLaVa 1.6, and ChatGPT-4o, show inconsistent performance and fail to reach the accuracy levels of fine-tuned models. Specifically, ChatGPT-4o, one of the best LVLMs, achieves an overall accuracy of only 68.32%, highlighting the limitations of zero-shot models on SimpsonsVQA.

| ID | Model | Accuracy (Number) | Accuracy (Yes/No) | Accuracy (Other) | Accuracy (All) |
|---|---|---|---|---|---|
| 1 | LSTM Q + I | 0.6102 | 0.8627 | 0.4561 | 0.5823 |
| 2 | SAN | 0.691 | 0.9103 | 0.5336 | 0.6543 |
| 3 | MLB | 0.6042 | 0.8841 | 0.4376 | 0.5786 |
| 4 | MLB+Att | 0.6927 | 0.9038 | 0.6009 | 0.6937 |
| 5 | Mutan | 0.6176 | 0.8962 | 0.4472 | 0.5828 |
| 6 | Mutan+Att | 0.7027 | 0.9262 | 0.6139 | 0.7086 |
| 7 | BUTD | 0.6901 | 0.9362 | 0.6023 | 0.7037 |
| 8 | MCAN | 0.7347 | 0.9284 | 0.6355 | 0.7209 |
| 9 | LXMERT | 0.7659 | 0.9149 | 0.6369 | 0.7248 |
| 10 | ViLT | 0.8447 | 0.9101 | 0.7155 | 0.7720 |
| 11 | XVLM | 0.8241 | 0.9215 | 0.7449 | 0.8020 |
| 12 | OFA | 0.8545 | 0.9335 | 0.7622 | 0.8200 |
| 13 | LLaVa-1.5-LLama-7b | 0.8193 | 0.8241 | 0.4953 | 0.6299 |
| 14 | LLaVa-1.6-Mistral-7b | 0.8279 | 0.8398 | 0.5657 | 0.6784 |
| 15 | GPT-4o-2024-05-13 | 0.8404 | 0.8493 | 0.5789 | 0.6832 |

5.2 Results of the Question Relevance Task

SimpsonsVQA dataset: We curated a dataset that comprises images and questions labeled as “relevant” or “irrelevant”, determined through the majority decision of the workers. Table 5 presents details of this dataset.

| Dataset | #Relevant QA Pairs | #Irrelevant QA Pairs | Total |
|---|---|---|---|
| Train | 80,137 | 35,526 | 115,663 |
| Validation | 13,240 | 8,709 | 21,949 |
| Test | 14,680 | 14,241 | 28,921 |
| Total | 108,057 | 58,476 | 166,533 |

Results analysis: Table 6 provides an overview of the performance of baseline models on the Question Relevance Task. Notably, all models surpass the accuracy of the majority vote classifier. Among the assessed models, Mutan+Att stands out, achieving the highest overall accuracy of 87.77%, significantly benefiting from the addition of attention mechanisms which enhance its capability to discern question relevance. Other traditional VQA models, such as QC Similarity and QQ’ Similarity, also perform competently but do not match the effectiveness of Mutan+Att. The integration of attention mechanisms generally boosts model performance, as seen with the improved metrics of MLB+Att over its base version, indicating that attention-augmented models handle the complexities of question relevance tasks more adeptly.

Meanwhile, the three zero-shot LVLMs do not outperform the top traditional models, because the zero-shot setting inherently lacks task-specific fine-tuning. They still exhibit considerable accuracy, particularly in identifying irrelevant questions. Among the zero-shot models, ChatGPT-4o achieved the highest accuracy at 83.94%.

| ID | Model | Accuracy | Precision (Rel) | Precision (Irrel) | Recall (Rel) | Recall (Irrel) | F1-Score (Rel) | F1-Score (Irrel) |
|---|---|---|---|---|---|---|---|---|
| 1 | LSTM Q + I | 0.8683 | 0.8501 | 0.8895 | 0.8991 | 0.8365 | 0.8739 | 0.8622 |
| 2 | SAN | 0.8687 | 0.8576 | 0.8809 | 0.8888 | 0.8479 | 0.8729 | 0.8641 |
| 3 | MLB | 0.8686 | 0.8558 | 0.8830 | 0.8914 | 0.8452 | 0.8732 | 0.8637 |
| 4 | MLB+Att | 0.8713 | 0.8545 | 0.8906 | 0.8997 | 0.8421 | 0.8765 | 0.8657 |
| 5 | Mutan | 0.8650 | 0.8463 | 0.8869 | 0.8970 | 0.8321 | 0.8709 | 0.8586 |
| 6 | Mutan+Att | 0.8777 | 0.8770 | 0.8785 | 0.8830 | 0.8723 | 0.8800 | 0.8754 |
| 7 | QC Similarity | 0.8592 | 0.8563 | 0.8628 | 0.8684 | 0.8502 | 0.8623 | 0.8564 |
| 8 | QQ’ Similarity | 0.8595 | 0.8553 | 0.8646 | 0.8705 | 0.8487 | 0.8629 | 0.8566 |
| 9 | LLaVa-1.5-LLama-7b | 0.6569 | 0.4148 | 0.9065 | 0.6030 | 0.8218 | 0.5513 | 0.7243 |
| 10 | LLaVa-1.6-Mistral-7b | 0.7253 | 0.6333 | 0.8201 | 0.7843 | 0.6862 | 0.7008 | 0.7472 |
| 11 | ChatGPT-4o | 0.8394 | 0.8391 | 0.8609 | 0.8702 | 0.8281 | 0.8441 | 0.8493 |
| 12 | Majority Vote | 0.5075 | 0.5075 | 0.0000 | 1.0000 | 0.0000 | 0.6733 | 0.0000 |

5.3 Results of the Answer Correctness Task

SimpsonsVQA dataset: We constructed the dataset from image-question pairs that were deemed relevant, as follows. When two or more workers agreed on a judgment, that majority judgment was assigned as the label for the triple. When no such agreement was reached, we assigned the label “Ambiguous”. Table 7 provides its details.
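The labeling rule can be sketched in code under one reading of the description: a triple is relevant when a majority of workers did not mark it “irrelevant”, it receives a correctness label when at least two workers chose the same one, and it falls back to “Ambiguous” otherwise. The string constants and the `aggregate` helper are hypothetical names, and the handling of edge cases (for example, two relevant votes that disagree) is an assumption.

```python
from collections import Counter
from typing import List, Optional, Tuple

IRRELEVANT = "irrelevant"
CORRECT, AMBIGUOUS, INCORRECT = "correct", "ambiguous", "incorrect"


def aggregate(judgments: List[str]) -> Tuple[bool, Optional[str]]:
    """Aggregate the worker judgments collected for one triple.

    Each judgment is either IRRELEVANT or a correctness label (which implies
    that the worker considered the question relevant). Returns the pair
    (is_relevant, correctness_label); the label is None for irrelevant triples.
    """
    votes = Counter(judgments)
    n_relevant = len(judgments) - votes[IRRELEVANT]
    if 2 * n_relevant <= len(judgments):  # no majority for "relevant"
        return False, None
    for label in (CORRECT, AMBIGUOUS, INCORRECT):
        if votes[label] >= 2:  # at least two workers agree on this label
            return True, label
    return True, AMBIGUOUS  # assumed fallback when no two workers agree


examples = [
    ["correct", "correct", "irrelevant"],
    ["correct", "incorrect", "ambiguous"],
    ["irrelevant", "irrelevant", "correct"],
]
results = [aggregate(j) for j in examples]
print(results)
```

Under this reading, the Conventional VQA subset corresponds to the triples for which `aggregate` returns `(True, "correct")`.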

| Dataset | #images | #C QA Pairs | #AM QA Pairs | #IC QA Pairs | Total |
|---|---|---|---|---|---|
| Train | 13,961 | 60,643 | 6,695 | 12,799 | 80,137 |
| Validation | 3,490 | 9,764 | 1,158 | 2,318 | 13,240 |
| Test | 5,800 | 10,507 | 1,253 | 2,920 | 14,680 |
| Total | 23,251 | 80,914 | 9,106 | 18,037 | 108,057 |
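The labeling rule described before Table 7 can be sketched as a small aggregation function. This is only one reading of the rule and not the authors' pipeline: the function name, the label strings, the `min_agreement` parameter, and the example with three judgments per triple are all assumptions made for illustration.

```python
from collections import Counter


def aggregate_label(judgments, min_agreement=2):
    """Return the label shared by at least `min_agreement` workers.

    Falls back to "Ambiguous" when no label reaches the threshold or when
    two labels tie for the most votes. Label names are hypothetical.
    """
    if not judgments:
        return "Ambiguous"
    ranked = Counter(judgments).most_common()
    top_label, top_votes = ranked[0]
    tied = len(ranked) > 1 and ranked[1][1] == top_votes
    if top_votes < min_agreement or tied:
        return "Ambiguous"
    return top_label


# Hypothetical judgments for three triples, three workers each.
examples = [
    ["Correct", "Correct", "Incorrect"],
    ["Correct", "Incorrect", "Ambiguous"],
    ["Incorrect", "Incorrect", "Incorrect"],
]
labels = [aggregate_label(judgments) for judgments in examples]
print(labels)
```

Treating ties as “Ambiguous” is a design choice of this sketch; it only matters when a triple receives an even number of judgments.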

Results analysis: Table 8 provides a summary of the performance of the baseline models. Given the predominance of “Correct” triples in the data, models generally achieve high performance in this category. Conversely, performance for the “Ambiguous” category is notably low. The “Incorrect” category shows significant fluctuations, with performance ranging from 17.02% to 40.26%. MLB+Att and Mutan+Att excel in classifying incorrect triples, likely due to their attention mechanisms and architectures. Zero-shot large vision-language models show a clear improvement over traditional VQA models in recall on the “Ambiguous” and “Incorrect” categories, although their overall accuracy is lower. In particular, LLaVa-1.6-Mistral-7b performs well in the “Incorrect” category, with an F1-score of 0.5502. ChatGPT-4o shows the most balanced performance across all categories, highlighting significant advancements in zero-shot models for assessing answer correctness.

| ID | Model | Accuracy | Precision (C) | Precision (AM) | Precision (IC) | Recall (C) | Recall (AM) | Recall (IC) | F1-Score (C) | F1-Score (AM) | F1-Score (IC) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | LSTM Q + I | 0.7660 | 0.7835 | 0.0000 | 0.5107 | 0.9660 | 0.0000 | 0.1907 | 0.8652 | 0.0000 | 0.2777 |
| 2 | SAN | 0.7712 | 0.8023 | 0.0250 | 0.5172 | 0.9476 | 0.0001 | 0.3067 | 0.8689 | 0.0018 | 0.3850 |
| 3 | MLB | 0.7672 | 0.7750 | 0.0000 | 0.5604 | 0.9735 | 0.0000 | 0.1190 | 0.8669 | 0.0000 | 0.1963 |
| 4 | MLB+Att | 0.7530 | 0.8119 | 0.1753 | 0.4736 | 0.9078 | 0.0663 | 0.3465 | 0.8571 | 0.0960 | 0.4001 |
| 5 | Mutan | 0.7742 | 0.7969 | 0.1570 | 0.5459 | 0.9586 | 0.0051 | 0.2691 | 0.8703 | 0.0009 | 0.3904 |
| 6 | Mutan+Att | 0.7500 | 0.8180 | 0.1909 | 0.4601 | 0.8968 | 0.0819 | 0.3710 | 0.8556 | 0.1139 | 0.4107 |
| 7 | LLaVa-1.5-LLama-7b | 0.6933 | 0.9666 | 0.0167 | 0.0571 | 0.7211 | 0.0972 | 0.4394 | 0.8260 | 0.0285 | 0.1012 |
| 8 | LLaVa-1.6-Mistral-7b | 0.7431 | 0.8550 | 0.0247 | 0.6486 | 0.8473 | 0.2719 | 0.4778 | 0.8512 | 0.0453 | 0.5502 |
| 9 | ChatGPT-4o | 0.7198 | 0.7947 | 0.1761 | 0.6802 | 0.8912 | 0.2269 | 0.4574 | 0.8402 | 0.1983 | 0.5470 |
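The class imbalance behind these results can be quantified from the Total row of Table 7. The short sketch below is illustrative only; the helper name and dictionary layout are assumptions, while the counts are copied from the table.

```python
# Totals per class, copied from the "Total" row of Table 7.
TOTAL_TRIPLES = {"Correct": 80_914, "Ambiguous": 9_106, "Incorrect": 18_037}


def class_shares(counts: dict) -> dict:
    """Fraction of all triples that falls into each class."""
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


shares = class_shares(TOTAL_TRIPLES)
for label, share in shares.items():
    print(f"{label:<10} {share:.4f}")

# Accuracy of a hypothetical classifier that always answers "Correct"
# on data with this overall class distribution.
always_correct_accuracy = shares["Correct"]
```

Because roughly three quarters of all triples are “Correct”, overall accuracy says little about the minority classes, which is why the per-class precision, recall, and F1-score columns are the more informative part of Table 8.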

5.4 Discussion

Drawing from our experimental findings, several key observations come to light. First, among the three VQA tasks mentioned earlier, the assessed baseline models are most effective in the Conventional VQA Task, although they struggle with questions that do not fall into the “yes/no” or “number” categories. This outcome is understandable given that these models were designed specifically for the conventional VQA task. Second, in the Question Relevance Task, the existing models show comparable performance. A key challenge here is determining the validity of a question based on the visual content of the image, which requires models to understand the question and assess its appropriateness within the context of the image. Third, the Answer Correctness Task presents a significant challenge and is more complex than the traditional VQA task. In addition to understanding the image and question, models must evaluate the interplay between the image, question, and answer to classify responses as “correct”, “incorrect”, or “ambiguous”. This task is a more advanced version of the standard VQA exercise, requiring a deeper, more nuanced level of understanding.

6 Potential Negative Societal Impact

While SimpsonsVQA has valuable educational and learning applications, several potential negative implications need to be acknowledged.

Stereotyping and Bias: The dataset, derived from The Simpsons TV show, may contain stereotypes, biases, or cultural references that could perpetuate negative perceptions. Using it in educational settings risks learners absorbing these biases or incorrect information.

Cognitive and Emotional Impact: AI-generated content in education can affect learners’ cognitive and emotional development. Ensuring the content is age-appropriate, respectful, and conducive to positive learning is crucial.

Over-reliance on AI-driven tools: Excessive dependence on AI in education may diminish the role of educators and human interaction. Hence, it’s important to balance technological advancements with human guidance.

7 Conclusion & Future Work

In conclusion, our findings show that advanced LVLMs like LLaVa and ChatGPT face significant challenges when processing cartoon images, such as those in the SimpsonsVQA dataset, due to their training on photorealistic data. SimpsonsVQA provides a valuable resource for evaluating and fine-tuning these models, enhancing their robustness in handling cartoon-based tasks and pushing the limits of VQA capabilities.

By leveraging The Simpsons’ consistent visual style, this dataset contributes to the development of intelligent systems for educational applications, promoting inquiry-based learning. However, we acknowledge that the automatically generated irrelevant questions and incorrect answers may introduce a domain gap compared to human learners’ errors. To address this, we plan a human study to ensure better alignment with real learner behaviors. Additionally, we recognize that different cartoon styles may create distinct image domains, which will be explored in future work to improve model adaptability and expand the dataset’s value for broader visual applications.

Licensing: The dataset is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International (CC BY-NC-SA 4.0) license (https://creativecommons.org/licenses/by-nc-sa/4.0/).

Ethical considerations: In creating SimpsonsVQA, there was no collection or publication of any personal or critical data related to the AMT workers. The annotators responsible for labeling the SimpsonsVQA dataset were compensated fairly for their efforts, adhering to the minimum wage standards set by the platform.

References

- [1] Asma Ben Abacha, Vivek V Datla, Sadid A Hasan, Dina Demner-Fushman, and Henning Müller. Overview of the vqa-med task at imageclef 2020: Visual question answering and generation in the medical domain. In CLEF (Working Notes), 2020.

- [2] Asma Ben Abacha, Sadid A Hasan, Vivek V Datla, Joey Liu, Dina Demner-Fushman, and Henning Müller. Vqa-med: Overview of the medical visual question answering task at imageclef 2019. CLEF (working notes), 2(6), 2019.

- [3] Aishwarya Agrawal, Dhruv Batra, Devi Parikh, and Aniruddha Kembhavi. Don’t just assume; look and answer: Overcoming priors for visual question answering. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4971–4980, 2018.

- [4] Peter Anderson, Xiaodong He, Chris Buehler, Damien Teney, Mark Johnson, Stephen Gould, and Lei Zhang. Bottom-up and top-down attention for image captioning and visual question answering. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 6077–6086, 2018.

- [5] Jacob Andreas, Marcus Rohrbach, Trevor Darrell, and Dan Klein. Neural module networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 39–48, 2016.

- [6] Stanislaw Antol, Aishwarya Agrawal, Jiasen Lu, Margaret Mitchell, Dhruv Batra, C. Lawrence Zitnick, and Devi Parikh. Vqa: Visual question answering. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), December 2015.

- [7] Tadas Baltrušaitis, Chaitanya Ahuja, and Louis-Philippe Morency. Multimodal machine learning: A survey and taxonomy. IEEE Transactions on Pattern Analysis and Machine Intelligence, 41(2):423–443, 2019.

- [8] Asma Ben Abacha, Mourad Sarrouti, Dina Demner-Fushman, Sadid A. Hasan, and Henning Müller. Overview of the vqa-med task at imageclef 2021: Visual question answering and generation in the medical domain. In CLEF 2021 Working Notes, CEUR Workshop Proceedings, Bucharest, Romania, September 21-24 2021. CEUR-WS.org.

- [9] Hedi Ben-Younes, Rémi Cadene, Matthieu Cord, and Nicolas Thome. Mutan: Multimodal tucker fusion for visual question answering. In Proceedings of the IEEE international conference on computer vision, pages 2612–2620, 2017.

- [10] Nilavra Bhattacharya, Qing Li, and Danna Gurari. Why does a visual question have different answers? In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 4271–4280, 2019.

- [11] Ali Furkan Biten, Ruben Tito, Andres Mafla, Lluis Gomez, Marçal Rusinol, Ernest Valveny, CV Jawahar, and Dimosthenis Karatzas. Scene text visual question answering. In Proceedings of the IEEE/CVF international conference on computer vision, pages 4291–4301, 2019.

- [12] Arjun Chandrasekaran, Viraj Prabhu, Deshraj Yadav, Prithvijit Chattopadhyay, and Devi Parikh. Do explanations make vqa models more predictable to a human? arXiv preprint arXiv:1810.12366, 2018.

- [13] Ritwick Chaudhry, Sumit Shekhar, Utkarsh Gupta, Pranav Maneriker, Prann Bansal, and Ajay Joshi. Leaf-qa: Locate, encode & attend for figure question answering. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pages 3512–3521, 2020.

- [14] Chongyan Chen, Samreen Anjum, and Danna Gurari. Grounding answers for visual questions asked by visually impaired people. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 19098–19107, 2022.

- [15] Jiaqi Chen, Jianheng Tang, Jinghui Qin, Xiaodan Liang, Lingbo Liu, Eric P Xing, and Liang Lin. Geoqa: A geometric question answering benchmark towards multimodal numerical reasoning. arXiv preprint arXiv:2105.14517, 2021.

- [16] T. Cover and P. Hart. Nearest neighbor pattern classification. IEEE Transactions on Information Theory, 13(1):21–27, 1967.

- [17] Ernest Davis. Unanswerable questions about images and texts. Frontiers in Artificial Intelligence, 3:51, 2020.

- [18] Haoyuan Gao, Junhua Mao, Jie Zhou, Zhiheng Huang, Lei Wang, and Wei Xu. Are you talking to a machine? dataset and methods for multilingual image question. Advances in neural information processing systems, 28, 2015.

- [19] Noa Garcia, Chentao Ye, Zihua Liu, Qingtao Hu, Mayu Otani, Chenhui Chu, Yuta Nakashima, and Teruko Mitamura. A dataset and baselines for visual question answering on art. In Computer Vision–ECCV 2020 Workshops: Glasgow, UK, August 23–28, 2020, Proceedings, Part II 16, pages 92–108. Springer, 2020.

- [20] Haifan Gong, Guanqi Chen, Sishuo Liu, Yizhou Yu, and Guanbin Li. Cross-modal self-attention with multi-task pre-training for medical visual question answering. In Proceedings of the 2021 International Conference on Multimedia Retrieval, ICMR ’21, page 456–460, New York, NY, USA, 2021. Association for Computing Machinery.

- [21] Daniel Gordon, Aniruddha Kembhavi, Mohammad Rastegari, Joseph Redmon, Dieter Fox, and Ali Farhadi. Iqa: Visual question answering in interactive environments. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2018.

- [22] Yash Goyal, Tejas Khot, Douglas Summers-Stay, Dhruv Batra, and Devi Parikh. Making the v in vqa matter: Elevating the role of image understanding in visual question answering. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 6904–6913, 2017.

- [23] Danna Gurari, Qing Li, Abigale J. Stangl, Anhong Guo, Chi Lin, Kristen Grauman, Jiebo Luo, and Jeffrey P. Bigham. Vizwiz grand challenge: Answering visual questions from blind people. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3608–3617, 2018.

- [24] Sadid A Hasan, Yuan Ling, Oladimeji Farri, Joey Liu, Henning Müller, and Matthew P Lungren. Overview of imageclef 2018 medical domain visual question answering task. In CLEF (Working Notes), 2018.

- [25] Xuehai He, Yichen Zhang, Luntian Mou, Eric Xing, and Pengtao Xie. Pathvqa: 30000+ questions for medical visual question answering. arXiv preprint arXiv:2003.10286, 2020.

- [26] Drew A Hudson and Christopher D Manning. Gqa: A new dataset for real-world visual reasoning and compositional question answering. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 6700–6709, 2019.

- [27] Zaeem Hussain, Mingda Zhang, Xiaozhong Zhang, Keren Ye, Christopher Thomas, Zuha Agha, Nathan Ong, and Adriana Kovashka. Automatic understanding of image and video advertisements. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1705–1715, 2017.

- [28] Justin Johnson, Bharath Hariharan, Laurens Van Der Maaten, Li Fei-Fei, C Lawrence Zitnick, and Ross Girshick. Clevr: A diagnostic dataset for compositional language and elementary visual reasoning. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2901–2910, 2017.

- [29] Kushal Kafle and Christopher Kanan. An analysis of visual question answering algorithms. In Proceedings of the IEEE international conference on computer vision, pages 1965–1973, 2017.

- [30] Jin-Hwa Kim, Kyoung-Woon On, Woosang Lim, Jeonghee Kim, Jung-Woo Ha, and Byoung-Tak Zhang. Hadamard product for low-rank bilinear pooling. arXiv preprint arXiv:1610.04325, 2016.

- [31] Wonjae Kim, Bokyung Son, and Ildoo Kim. Vilt: Vision-and-language transformer without convolution or region supervision. In International Conference on Machine Learning, pages 5583–5594. PMLR, 2021.

- [32] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- [33] Olga Kovaleva, Chaitanya Shivade, Satyananda Kashyap, Karina Kanjaria, Joy Wu, Deddeh Ballah, Adam Coy, Alexandros Karargyris, Yufan Guo, David Beymer, et al. Towards visual dialog for radiology. In Proceedings of the 19th SIGBioMed Workshop on Biomedical Language Processing, pages 60–69, 2020.

- [34] Jonathan Krause, Justin Johnson, Ranjay Krishna, and Li Fei-Fei. A hierarchical approach for generating descriptive image paragraphs. In Computer Vision and Pattern Recognition (CVPR), 2017.

- [35] Ranjay Krishna, Yuke Zhu, Oliver Groth, Justin Johnson, Kenji Hata, Joshua Kravitz, Stephanie Chen, Yannis Kalantidis, Li-Jia Li, David A Shamma, et al. Visual genome: Connecting language and vision using crowdsourced dense image annotations. International journal of computer vision, 123(1):32–73, 2017.

- [36] Jason J. Lau, Soumya Gayen, Asma Ben Abacha, and Dina Demner-Fushman. A dataset of clinically generated visual questions and answers about radiology images. Scientific Data, 5, 2018.

- [37] Tsung-Yi Lin, Michael Maire, Serge Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollár, and C Lawrence Zitnick. Microsoft coco: Common objects in context. In European conference on computer vision, pages 740–755. Springer, 2014.

- [38] Zhihong Lin, Donghao Zhang, Qingyi Tao, Danli Shi, Gholamreza Haffari, Qi Wu, Mingguang He, and Zongyuan Ge. Medical visual question answering: A survey. arXiv preprint arXiv:2111.10056, 2021.

- [39] Bo Liu, Li-Ming Zhan, Li Xu, Lin Ma, Yan Yang, and Xiao-Ming Wu. Slake: a semantically-labeled knowledge-enhanced dataset for medical visual question answering. In 2021 IEEE 18th International Symposium on Biomedical Imaging (ISBI), pages 1650–1654. IEEE, 2021.

- [40] Feng Liu, Tao Xiang, Timothy M Hospedales, Wankou Yang, and Changyin Sun. Inverse visual question answering: A new benchmark and vqa diagnosis tool. IEEE transactions on pattern analysis and machine intelligence, 42(2):460–474, 2018.

- [41] Haotian Liu, Chunyuan Li, Yuheng Li, and Yong Jae Lee. Improved baselines with visual instruction tuning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 26296–26306, 2024.

- [42] Haotian Liu, Chunyuan Li, Yuheng Li, Bo Li, Yuanhan Zhang, Sheng Shen, and Yong Jae Lee. Llava-next: Improved reasoning, ocr, and world knowledge, January 2024.

- [43] Lin Ma, Zhengdong Lu, and Hang Li. Learning to answer questions from image using convolutional neural network. In Thirtieth AAAI Conference on Artificial Intelligence, 2016.

- [44] Aroma Mahendru, Viraj Prabhu, Akrit Mohapatra, Dhruv Batra, and Stefan Lee. The promise of premise: Harnessing question premises in visual question answering. arXiv preprint arXiv:1705.00601, 2017.

- [45] Mateusz Malinowski and Mario Fritz. A multi-world approach to question answering about real-world scenes based on uncertain input. Advances in neural information processing systems, 27, 2014.

- [46] Oscar Mañas, Benno Krojer, and Aishwarya Agrawal. Improving automatic vqa evaluation using large language models. arXiv preprint arXiv:2310.02567, 2023.

- [47] Kenneth Marino, Mohammad Rastegari, Ali Farhadi, and Roozbeh Mottaghi. Ok-vqa: A visual question answering benchmark requiring external knowledge. In Proceedings of the IEEE/cvf conference on computer vision and pattern recognition, pages 3195–3204, 2019.

- [48] Akib Mashrur, Wei Luo, Nayyar A Zaidi, and Antonio Robles-Kelly. Robust visual question answering via semantic cross modal augmentation. Computer Vision and Image Understanding, page 103862, 2023.

- [49] Minesh Mathew, Dimosthenis Karatzas, and CV Jawahar. Docvqa: A dataset for vqa on document images. In Proceedings of the IEEE/CVF winter conference on applications of computer vision, pages 2200–2209, 2021.

- [50] Anand Mishra, Shashank Shekhar, Ajeet Kumar Singh, and Anirban Chakraborty. Ocr-vqa: Visual question answering by reading text in images. In 2019 International Conference on Document Analysis and Recognition (ICDAR), pages 947–952, 2019.

- [51] OpenAI. Chatgpt. OpenAI API, 2021. Accessed: [13/17/2023].

- [52] OpenAI. Gpt-4o. 2024.

- [53] Jordi Pont-Tuset, Jasper Uijlings, Soravit Changpinyo, Radu Soricut, and Vittorio Ferrari. Connecting vision and language with localized narratives. In ECCV, 2020.

- [54] Pranav Rajpurkar, Robin Jia, and Percy Liang. Know what you don’t know: Unanswerable questions for squad. arXiv preprint arXiv:1806.03822, 2018.

- [55] Arijit Ray, Gordon Christie, Mohit Bansal, Dhruv Batra, and Devi Parikh. Question relevance in vqa: identifying non-visual and false-premise questions. arXiv preprint arXiv:1606.06622, 2016.

- [56] Mengye Ren, Ryan Kiros, and Richard Zemel. Exploring models and data for image question answering. Advances in neural information processing systems, 28, 2015.

- [57] Dustin Schwenk, Apoorv Khandelwal, Christopher Clark, Kenneth Marino, and Roozbeh Mottaghi. A-okvqa: A benchmark for visual question answering using world knowledge. In Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part VIII, pages 146–162. Springer, 2022.

- [58] Shurong Sheng, Luc Van Gool, and Marie-Francine Moens. A dataset for multimodal question answering in the cultural heritage domain. In Proceedings of the COLING 2016 Workshop on Language Technology Resources and Tools for Digital Humanities (LT4DH), pages 10–17. ACL, 2016.

- [59] Amanpreet Singh, Vivek Natarajan, Meet Shah, Yu Jiang, Xinlei Chen, Dhruv Batra, Devi Parikh, and Marcus Rohrbach. Towards vqa models that can read. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 8317–8326, 2019.

- [60] Elias Stengel-Eskin, Jimena Guallar-Blasco, Yi Zhou, and Benjamin Van Durme. Why did the chicken cross the road? rephrasing and analyzing ambiguous questions in vqa. arXiv preprint arXiv:2211.07516, 2022.

- [61] Ryota Tanaka, Kyosuke Nishida, and Sen Yoshida. Visualmrc: Machine reading comprehension on document images. In Proceedings of the AAAI Conference on Artificial Intelligence, pages 13878–13888, 2021.

- [62] Andeep S Toor, Harry Wechsler, and Michele Nappi. Question part relevance and editing for cooperative and context-aware vqa (c2vqa). In Proceedings of the 15th International Workshop on Content-Based Multimedia Indexing, pages 1–6, 2017.

- [63] Dmitry Ustalov, Nikita Pavlichenko, Daniil Likhobaba, and Alisa Smirnova. WSDM Cup 2023 Challenge on Visual Question Answering. In Proceedings of the 4th Crowd Science Workshop on Collaboration of Humans and Learning Algorithms for Data Labeling, pages 1–7, Singapore, 2023.

- [64] Jordy Van Landeghem, Rubèn Tito, Łukasz Borchmann, Michał Pietruszka, Pawel Joziak, Rafal Powalski, Dawid Jurkiewicz, Mickaël Coustaty, Bertrand Anckaert, Ernest Valveny, et al. Document understanding dataset and evaluation (dude). In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 19528–19540, 2023.

- [65] Oriol Vinyals, Alexander Toshev, Samy Bengio, and Dumitru Erhan. Show and tell: A neural image caption generator. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2015.

- [66] Peng Wang, Qi Wu, Chunhua Shen, Anthony Dick, and Anton Van Den Hengel. Fvqa: Fact-based visual question answering. IEEE transactions on pattern analysis and machine intelligence, 40(10):2413–2427, 2017.

- [67] Peng Wang, Qi Wu, Chunhua Shen, Anton van den Hengel, and Anthony Dick. Explicit knowledge-based reasoning for visual question answering. arXiv preprint arXiv:1511.02570, 2015.

- [68] Peng Wang, An Yang, Rui Men, Junyang Lin, Shuai Bai, Zhikang Li, Jianxin Ma, Chang Zhou, Jingren Zhou, and Hongxia Yang. Ofa: Unifying architectures, tasks, and modalities through a simple sequence-to-sequence learning framework. In International Conference on Machine Learning, pages 23318–23340. PMLR, 2022.

- [69] Xinyu Wang, Yuliang Liu, Chunhua Shen, Chun Chet Ng, Canjie Luo, Lianwen Jin, Chee Seng Chan, Anton van den Hengel, and Liangwei Wang. On the general value of evidence, and bilingual scene-text visual question answering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 10126–10135, 2020.

- [70] Spencer Whitehead, Suzanne Petryk, Vedaad Shakib, Joseph Gonzalez, Trevor Darrell, Anna Rohrbach, and Marcus Rohrbach. Reliable visual question answering: Abstain rather than answer incorrectly. In European Conference on Computer Vision, pages 148–166. Springer, 2022.

- [71] Zhou Yu, Jun Yu, Yuhao Cui, Dacheng Tao, and Qi Tian. Deep modular co-attention networks for visual question answering. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 6281–6290, 2019.

- [72] Yan Zeng, Xinsong Zhang, Hang Li, Jiawei Wang, Jipeng Zhang, and Wangchunshu Zhou. X2-vlm: All-in-one pre-trained model for vision-language tasks. arXiv preprint arXiv:2211.12402, 2022.

- [73] Peng Zhang, Yash Goyal, Douglas Summers-Stay, Dhruv Batra, and Devi Parikh. Yin and yang: Balancing and answering binary visual questions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2016.

- [74] Yuke Zhu, Oliver Groth, Michael Bernstein, and Li Fei-Fei. Visual7w: Grounded question answering in images. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4995–5004, 2016.