Sim911: Towards Effective and Equitable 9-1-1 Dispatcher Training with an LLM-Enabled Simulation

Abstract

Emergency response services are vital for enhancing public safety by safeguarding the environment, property, and human lives. As frontline members of these services, 9-1-1 dispatchers have a direct impact on response times and the overall effectiveness of emergency operations. However, traditional dispatcher training methods, which rely on role-playing by experienced personnel, are labor-intensive, time-consuming, and often neglect the specific needs of underserved communities. To address these challenges, we introduce Sim911111More details: https://meiyima.github.io/angie.html, the first training simulation for 9-1-1 dispatchers powered by Large Language Models (LLMs). Sim911 enhances training through three key technical innovations: (1) knowledge construction, which utilizes archived 9-1-1 call data to generate simulations that closely mirror real-world scenarios; (2) context-aware controlled generation, which employs dynamic prompts and vector bases to ensure that LLM behavior aligns with training objectives; and (3) validation with looped correction, which filters out low-quality responses and refines the system performance. Beyond its technical advancements, Sim911 delivers significant social impacts. Successfully deployed in the Metro Nashville Department of Emergency Communications (MNDEC), Sim911 has been integrated into multiple training sessions, saving time for dispatchers. By supporting a diverse range of incident types and caller tags, Sim911 provides more realistic and inclusive training experiences. In a conducted user study, 90.00% of participants found Sim911 to be as effective or even superior to traditional human-led training, making it a valuable tool for emergency communications centers nationwide, particularly those facing staffing challenges.

1 Introduction

Emergency response services are essential for public safety, managing around 240 million 911 calls annually, based on year-round stats from New York City (NYC-911 2022). However, there is a critical staffing shortfall, with a third of centers reporting more vacancies in 2023 compared to 2019, resulting in approximately 25,000 unfilled positions nationwide. This staffing crisis increases the workload on current staff (Chen et al. 2022, 2023), leading to dispatcher burnout and impacting emergency service quality (NICE 2023). As urban areas in the US grow, the strain on emergency response systems intensifies. Rapid urbanization and population growth demand effective solutions to adapt to and manage these increasing pressures (Ma et al. 2019).

Traditional training environments prepare trainees for real-world dispatcher roles by employing role-playing scenarios where experienced dispatchers coach trainees through simulated calls. The need for experienced dispatchers to participate in training diverts essential personnel from actual emergency duties, causing inconsistencies in training quality and reducing the availability of skilled staff, particularly in underserved areas (Saxon et al. 2022; Afonso 2021). However, traditional training methods, which rely on role-playing by experienced personnel, are labor-intensive, time-consuming, and frequently overlook the specific needs of underserved communities.

In light of these demands, exploring innovative technological solutions is critical. Advancements in artificial intelligence, especially Large Language Models (LLMs), offer promising methods for enhancing training environments. Employing LLMs to simulate caller interactions can reduce reliance on human resources, improving training efficiency and consistency (Naveed et al. 2023; Wang et al. 2023; Carta et al. 2023). However, directly applying plain LLM agents is not ideal. In our preliminary investigations, we identified the following challenges: (1) Achieving consistent realistic simulations is difficult without detailed factual databases, despite meticulous prompt engineering across different LLMs. This lack of realism results in simulations that do not fit the local context, making the training less effective and potentially confusing for trainees. (2) LLMs excel in generating coherent content but tend to fabricate details, undermining authenticity. Simulations with fabricated geographic information lead dispatchers to make decisions based on incorrect data, compromising emergency response effectiveness. (3) The needs of vulnerable populations in metropolitan areas are often understudied during conventional training, leaving practitioners unprepared. This lack of inclusiveness results in biased training, inadequately preparing dispatchers for handling calls from vulnerable groups, and leading to disparities in emergency response. (4) The inherently complex nature of 9-1-1 calls presents significant challenges, even for human trainers. Human-led training may also fail to capture these complexities, as discussed in detail in Section 2.

In this paper, we introduce Sim911, the first system that leverages LLMs to simulate realistic 9-1-1 calls, specifically designed to enhance dispatcher training. Sim911 focuses on creating effective and equitable simulation experiences tailored to the local metro area. Sim911 comprises three key components: knowledge construction, context-aware controlled generation, and validation with looped feedback. Knowledge construction organizes real-world information into retrieval knowledge bases, while context-aware controlled generation fine-tunes the LLM’s behavior through human-designed instructions. Validation with looped feedback ensures high-quality outputs by filtering out low-quality responses.

We summarize our technical innovations and contributions as follows: (1) Innovative Knowledge Construction from 9-1-1 Calls: Sim911 organizes real-world call data into detailed knowledge bases, allowing for the generation of contextually accurate and realistic training simulations, supporting 57 different incident types. (2) Context-Aware Controlled Generation: Sim911 strategically and dynamically uses advanced techniques, such as Chain-of-Thought (CoT) and Retrieval-Augmented Generation (RAG), to tailor LLM behavior during training sessions. (3) Validation with Looped Correction: Sim911 includes a unique validation process that filters out low-quality responses, ensuring high-quality and scenario-appropriate outputs. (4) Focus on Social Equity: Sim911 emphasizes training that addresses the needs of underserved and vulnerable communities, incorporating relevant data to better prepare dispatchers for diverse real-world scenarios. (5) Real-World Deployment and Evaluation: Successfully deployed in DEC, Sim911 has proven an effective tool in enhancing 9-1-1 dispatcher training from experimental results on real-world data.

Beyond technical advancements, Sim911 delivers significant social impacts: (1) Sim911 has been successfully deployed in DEC’s training programs, seamlessly integrated into 4 training classes across different service sites. (2) To the date of this paper, Sim911’s system logs reveal a total active simulation time of 26.55 hours, effectively saving this time for MNDEC dispatchers. (3) Sim911 supports 57 different real-world incident types and covers 14 caller tags, such as “unhoused” and “non-English speaking,” to enrich caller profiles. (4) In a user study conducted with DEC, 90.00% of participants found Sim911 to be as effective or even superior to traditional human-led training. Additionally, Sim911 received an average helpfulness score of 4.89 for its assistance in call-taking training. (5) Sim911 has the potential to assist emergency communications centers across the U.S. with limited staffing by enabling trainees to engage individually with the training program.

2 Motivating Study

We analyzed 11,841 real-world phone call recordings (from Nov. 2022 to May 2024) and manually reviewed 33 conventional training pieces (see examples in the Appendix, leading to the following observations.

Traditional training is laborious and time-consuming. In traditional training setups, each trainee engages in call simulations, assuming three roles: the call-taker, the caller, and the instructor. The trainee, as the call-taker, manages calls directed by the instructor and an experienced dispatcher. Each trainee typically participates in 60 independent simulated calls, with each call requiring the involvement of three participants. Based on past recordings, the average call duration is 3.5 minutes; with an average of 12 trainees per session, the total time commitment for experienced dispatchers amounts to at least 84 working hours per session.

Real-world 9-1-1 calls cover a wide spectrum of incident types and contextual scenarios. From our analysis of past phone call recordings, we identified over 200 distinct incident specifications. However, during initial training, each trainee is exposed to only 40 incident types and 15 call templates. Our review reveals that, on average, trainees cover only 48.00% of the incident types in the first 3-day program, and only 61.54% of special contexts or requests are adequately addressed. This limited exposure fails to prepare trainees for the variety of incidents they will encounter.

Caller images are critical for call-taking training but rarely considered. Equity and inclusiveness are often overlooked in conventional role-playing simulations during dispatcher training. Even with guidance from experienced dispatchers, these simulations frequently struggle to empathetically and accurately capture the nuanced experiences of vulnerable groups. Among the 33 training scenarios we reviewed, only 4 focused on vulnerable populations (such as non-native English speakers, who may use different language patterns; and callers from lower-income housing areas, who might have limited access to personal vehicles) representing just 12.12%. However, government statistics, see Figure 1, indicate that the needs of various vulnerable groups are significantly reflected in real-world 9-1-1 calls. This discrepancy highlights the importance of incorporating diverse caller images into training, as different scenarios might require distinct call-taking skill sets to effectively handle real-life situations.

3 Methodology

This section first provides an overview of Sim911. Then we introduce the technical aspects of how Sim911 works in Sections Knowledge Construction, Context-aware Controlled Generation, and Validation with Looped Correction.

Sim911 simulates calls by playing the role of 9-1-1 callers and interacting directly with the trainees. It comprises three main components, depicted in Figure 2: knowledge construction, context-aware controlled generation, and validation with looped correction. During the Knowledge Construction phase, 11,841 calls are analyzed to develop knowledge bases containing tags for incident specifics and caller images. This ensures that pertinent information is readily available during simulations. At runtime, Sim911 utilizes these tags to select the most suitable LLM backends, query the knowledge bases, and generate prompts in the context-aware controlled generation phase. This process tailors prompts to include incident details and caller profiles, ensuring that LLM responses align with simulation requirements. The Validation with Looped Correction component filters out low-quality responses in as the simulation goes.

3.1 Dynamic Knowledge Construction

As a first step, we build an in-depth review and sophisticated reconstruction of the existing dataset, see running examples in Appendix, which has three key data sources: Computer-Aided Dispatching(CAD) logs, archived 9-1-1 call recordings, and their corresponding transcriptions.

Detailing Two Components in 9-1-1 Calls

We integrate insights from dispatcher teams at MNDEC to identify two key components in 9-1-1 call handling: Incident Specifications and Caller Images. We use finely-grained tags for each data entry to create more accurate simulations. In our annotation work, we manually review each call and apply all relevant tags. A single call may be annotated with multiple tags to ensure comprehensive coverage.

Incident Specifications (IS) capture critical details of incidents, including: (1) Incident Type: Categorizes the incident, from routine (e.g., illegal parking) to critical (e.g., severe medical emergencies). (2) Scenario Context: Adds situational context, such as environmental conditions (e.g., severe weather), potential threats (e.g., sightings of firearms), or specific events (e.g., large public gatherings). (3) Special Requests: Identifies specific instructions, like the need for specialized units (e.g., bomb squads) or coordination with other agencies (e.g., fire departments).

Caller Images (CI) create a comprehensive caller profile, enhancing the LLM’s understanding of the caller’s perspective, especially for vulnerable groups. This includes: (1) General Tags: Profiles the caller by age (e.g., minor, adult) and emotion (e.g., “neutral”, “anxious”). Dispatchers assign these tags based on conversation clues (e.g., “My mom is mid-70s and living alone”) or voice analysis. These tags are less sensitive and linked to pre-customized LLM agents to avoid identification confusion (Wei, Haghtalab, and Steinhardt 2024). (2) Vulnerable Groups: These government-introduced tags include descriptors such as “low-income housing area” (if the call originates from a lower-income area, according to year-round statistics), “mental health” (if the caller exhibits potential mental health issues, such as bipolar disorder or depression; inferred from the conversation), “non-native speaker” (if the caller uses limited English), and “unhoused” (if the caller indicates lack of stable, permanent housing, inferred from conversation clues). These tags are considered highly sensitive and remain hidden during runtime due to ethical concerns.

Specializing Knowledge Bases for Contextual Control

We leverage the Retrieval Augmented Generation (RAG) approach, which enhances LLMs for tasks requiring deep knowledge by incorporating external databases as reference points during content generation. This methodology, as discussed by (Lewis et al. 2020), improves the LLMs’ ability to provide accurate and relevant outputs. Here, we introduce the two major knowledge bases for runtime use.

Factual Bases. First, we build a static base, which contains factual knowledge: (1) Validated Address List: a comprehensive list of real addresses within the local area; (2) Encoded Map with Connectivity Information: beyond simple address listings, this map provides detailed information about the connectivity between locations; (3) Tree-Structured Protocols: a collection of protocols for various types of emergency incidents, organized in a tree structure. These protocols detail the question sequence dispatchers should follow, ensuring Sim911’s simulations adhere to procedural standards of emergency response.

Retrievable Bases. This base includes data entries tagged according to Incident Specifications (IS) and Caller Images (CI). The retrievable base allows Sim911 to query and retrieve necessary data samples that enhance the simulation experience during runtime.

3.2 Context-aware Controlled Generation

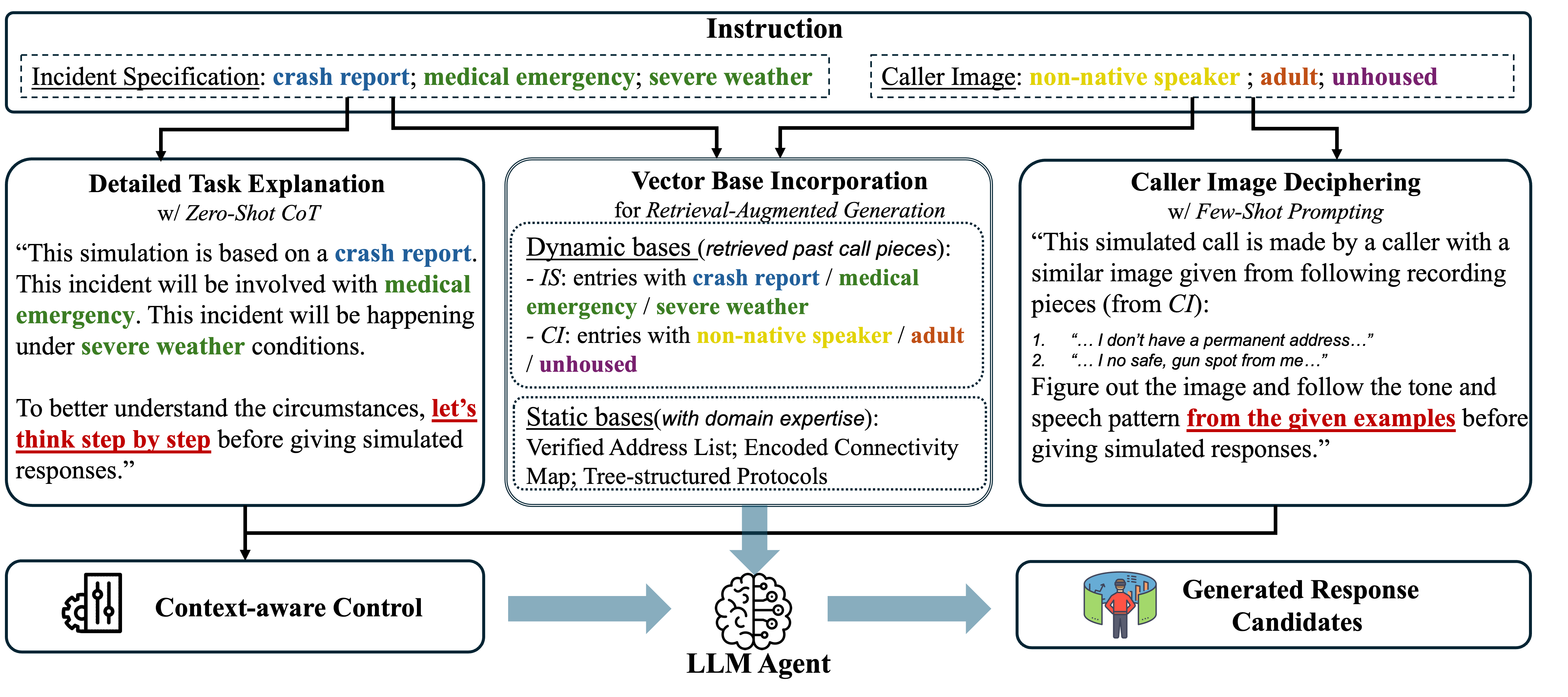

Each simulation runtime begins with predefined tags (referred to as ‘instructions’) that detail the desired scenarios. These tags delineate Incident Specifications (IS) and Caller Images (CI), guiding the setup for each simulation. We select the most appropriate preset backend for each simulation based on less sensitive CI attributes (emotion, age) and gather data associated with these tags from knowledge bases. This ensures a well-informed simulation environment tailored to the given simulation instructions. Context-aware Controlled Generation employs advanced prompting techniques, including Chain-of-Thought (CoT), Retrieval-Augmented Generation (RAG), and Few-shot Prompting (FSP), to enhance LLM performance (Touvron et al. 2023; Kaplan et al. 2020; Wei et al. 2022). Unlike the direct and static application of these techniques, our approach dynamically adapts to the emergency response context, with a focus on both IS and CI. The context-aware controlled generation process consists of three major steps, illustrated in the running example in Figure 3: (1) Vector Base Incorporation: We statically mount the fact bases and dynamically retrieve all past call pieces associated with given tags in both IS (e.g., crash report, medical emergency, severe weather) and CI (e.g., non-English speaking, adult, unhoused). The LLM backend is granted access to both vector bases; (2) Detailed Task Explanation: We elaborate on the instruction through step-by-step explanations, setting the stage for how the simulation should proceed. This preparation allows the LLM to conceptualize the simulation’s context and objectives before initiation; (3) Caller Image Deciphering: By including examples of past utterances and interactions associated with both similar tags, we provide the LLM with contextually relevant examples to draw from. This repository of past interactions enriches the LLM’s understanding and ability to generate responses that are both consistent with the user’s profile and grounded in real-world examples. See detailed algorithmic description in Appendix.

3.3 Validating with Looped Correction

During runtime, Sim911 employs an in-context validation process with a co-pilot design (Chen et al. 2024) to prevent negative examples and iteratively loops back to the LLM backend until a validated response is obtained. To avoid infinite loops and reduce latency, threshold of 3 is applied considering both latency and accuracy under deployment, see detailed discussion in Appendix. The In-Context Validation process includes four key checks: (1) Format check: This check ensures that the generated response adheres to the expected format. Any response that violates the format requirements is discarded to prevent system errors. (2) Alignment check: This step utilizes a BERT-based classifier (Devlin et al. 2019) to extract incident specifications from the response. The extracted specifications are then compared with the expected instructions, and any misalignment results in the response being discarded. (3) Factual check: A RoBERTa-based question-answering framework (Liu et al. 2019) is used to query key details, such as location information, by asking preset questions (e.g., “What is the address?”). If the extracted address does not exist in real life, the response is discarded. (4) Human-in-the-Loop check: This step allows users to provide immediate feedback on the generated response, supporting both written comments and scaled (1-5) ratings. Users can reject any response that does not meet their standards, and this feedback is systematically collected for further analysis. See details in Appendix.

4 Evaluation of Sim911

Sim911 introduces a pioneering AI-driven system to enhance call-taker training for emergency response scenarios. Due to its novelty, there is limited existing literature to guide its evaluation. To provide a comprehensive assessment, we not only report system-level performance but also conduct a study on the component-wise impacts using pre-configured runtimes. This approach allows us to evaluate Sim911 component by component without disrupting its ongoing deployment at DEC. Therefore, our evaluation of Sim911’s performance, focusing on effectiveness and equity, consists of two components: (1) component-wise analysis using pre-configured runtimes, and (2) system-level assessment during real-world deployment. GPT-4o is tested to be the optimal LLM backend for Sim911 by the date of submission. Refer to the complete baseline comparison in Appendix. We fetched GPT-4o responses using OpenAI API and tested the workflow on a machine with a 2.50GHz CPU, 32GB RAM, and Nvidia RTX 3080Ti GPU.

Component-wise analysis with pre-configured runtimes: We begin by extracting instructions from 2,641 past calls in the MNDEC database, spanning 13 incident types (e.g., Motor Vehicle Accidents 11%, Lost and Stolen 10%, Aggressive Drivers 10%) from Nov. 2022 to May 2024, based on Incident Specifications (IS) and Caller Images (CI) provided by expert annotations. We then replicate the dialogue flows using rule-based scripts that mimic the questions typically asked by call-takers. These instructions and replicated dialogue flows are used to simulate calls with Sim911. Sim911 operates without access to highly granular details. For example, if a past call involved an abandoned vehicle with a specific license plate and tinted windows, Sim911 would only be informed that the simulation involves an abandoned vehicle, without further specifics like the license plate or tinted windows. To ensure a fair evaluation, we exclude these granular discrepancies from our analysis. Effectiveness and equity scores are obtained through call-wise comparisons under control experiments. We record average scores with standard deviations to ensure robust evaluation.

| Realism | Authenticity | |||||

| PPL | METEOR | TTR | GMap(%) | SAR(%) | ||

| Sim911KC | 31.2213.38 | 0.220.05 | 0.880.01 | 83.005.11 | 94.111.66 | |

| Sim911CaCG | CoT | 21.986.64 | 0.670.18 | 0.850.02 | 98.131.06 | 90.832.71 |

| FSP | 12.555.71 | 0.750.21 | 0.940.01 | 98.111.08 | 98.141.06 | |

| RAG | 57.1912.22 | 0.190.12 | 0.920.02 | 61.476.89 | 96.442.60 | |

| Sim911VLC | 18.898.19 | 0.770.08 | 0.880.02 | 89.115.15 | 89.483.12 | |

| Sim911All(GPT-4o) | 61.9913.91 | 0.120.02 | 0.880.02 | 61.0111.31 | 81.633.97 | |

| Sim911 | 11.075.49 | 0.850.03 | 0.940.01 | 99.190.81 | 98.421.58 | |

System-level assessment during real-world deployment: During Sim911’s deployment, we collected data from 3,416 system interactions and 3,409 user interactions across both complete (228) and incomplete simulations, each guided by its own set of instructions (IS and CI). These data are utilized in assessing Sim911’s authenticity. Additionally, we conducted a user study in collaboration with MNDEC to evaluate Sim911 at a system level. This study involved trainees and personnel from DEC, including those from training management and quality assurance. The user study gathered scaled feedback (1-5) from MNDEC personnel on several key aspects, e.g., realism (‘How similar or vivid are the calls generated by Sim911 compared to real-world calls?’), authenticity (‘Are Sim911’s responses valid and true to real-life situations?’), equity (‘How well does Sim911 simulate the experiences of vulnerable callers?’), and helpfulness (‘How helpful is Sim911 in assisting with call-taking training?’). Written comments were also collected to provide additional insights. See survey details in Appendix. To assess effectiveness and equity, we review system logs and user feedback, with further details discussed later.

4.1 Effectiveness of Sim911

We assess Sim911’s effectiveness by following aspects: realism and authenticity.

Realism: “How closely do Sim911’s simulations mirror real-world calls?” We use the following metrics to evaluate Sim911’s performance on pre-configured runtimes: Perplexity (a measure of distributional similarity commonly used in language model training; it assesses how reasonable the generated texts are compared to a reference set), METEOR (Banerjee and Lavie 2005) (text generation metric that balances precision and recall, considering word stems, synonyms, and word order to determine how closely a generated text mirrors a reference text), and TTR (Type-Token Ratio; measures lexical diversity by comparing the number of unique words to the total number of words in the text).

Authenticity: “Does Sim911 provide accurate, true-to-life information without fabricating given instructions?” For evaluation, we break authenticity down into two aspects: “matter of facts” and “simulation alignment.” For the first, we focus on the accuracy of the given location in a simulation, as recommended by MNDEC experts. We use the Google Maps API with Geocoding (Google Maps Platform 2024) to verify the geographic information provided in the simulation and report the locating success rate. To measure simulation alignment, we use the copilot’s results to determine if the indicated Incident Specification (IS) aligns with the one provided in the simulation instructions. System-level performance during real-world deployment is assessed through quantitative analysis of system logs.

From the statistics in Table 1, we observe the following key points. When all components are enabled, Sim911 achieves optimal results in both realism (PPL=11.07, METEOR=0.85) and authenticity (GMap=99.19%, SAR=98.42%). Disabling knowledge construction (KC) and the RAG sub-component of CaCG leads to significant drops in realism (PPL=31.22 and PPL=57.19, respectively). Similarly, turning off validation with looped correction (VLC) reduces both realism and authenticity, though the system remains moderately effective. When all components are disabled, the system’s performance declines significantly, particularly in realism (PPL=61.99) and authenticity (SAR=81.63%). In conclusion, Sim911 demonstrates high effectiveness in terms of realism and authenticity in real-world deployment when all components are active. Disabling components harms Sim911’s overall effectiveness.

| Age Groups | Emotion Ranges | Unhoused | ||||||

| BART(%) | Margin | BART(%) | Margin | NRCLex | BART(%) | Margin | ||

| Sim911KC | 66.463.89 | 0.130.11 | 71.683.55 | 0.190.14 | 69.852.61 | 59.874.45 | 0.110.21 | |

| Sim911CaCG | CoT | 78.652.95 | 0.290.15 | 86.123.56 | 0.340.23 | 77.113.44 | 72.443.19 | 0.200.10 |

| FSP | 62.903.51 | 0.090.06 | 63.414.71 | 0.080.06 | 65.973.12 | 60.113.51 | 0.010.20 | |

| RAG | 64.634.13 | 0.130.12 | 68.113.78 | 0.220.19 | 61.003.10 | 61.845.76 | 0.050.16 | |

| Sim911VLC | 78.714.29 | 0.220.17 | 76.124.69 | 0.250.18 | 64.113.90 | 69.414.17 | 0.150.14 | |

| Sim911All(GPT-4o) | 59.115.55 | 0.070.05 | 57.875.86 | 0.210.19 | 51.194.51 | 51.125.46 | 0.170.22 | |

| Sim911 | 83.112.82 | 0.340.26 | 85.663.17 | 0.360.22 | 73.312.16 | 73.944.31 | 0.210.13 | |

| Mental Health | Non-Native Speakers | Low-income Housing | ||||||

| BART(%) | Margin | BART(%) | Margin | Gunning Fog | BART(%) | Margin | ||

| Sim911KC | 67.674.41 | 0.170.11 | 70.194.56 | 0.220.21 | 0.020.17 | 51.135.17 | 0.040.11 | |

| Sim911CaCG | CoT | 81.675.40 | 0.310.11 | 82.134.87 | 0.450.10 | 0.170.11 | 74.494.11 | 0.130.18 |

| FSP | 64.553.42 | 0.110.14 | 72.134.41 | 0.390.11 | 0.090.14 | 57.784.14 | 0.070.11 | |

| RAG | 76.714.45 | 0.010.09 | 75.335.19 | 0.230.18 | 0.110.17 | 64.655.77 | 0.010.14 | |

| Sim911VLC | 83.223.77 | 0.210.20 | 81.193.11 | 0.240.26 | 0.070.09 | 63.185.13 | 0.160.13 | |

| Sim911All(GPT-4o) | 61.624.15 | 0.040.11 | 69.134.36 | 0.210.22 | 0.030.12 | 44.443.72 | 0.040.02 | |

| Sim911 | 86.163.37 | 0.330.14 | 84.414.80 | 0.480.11 | 0.170.13 | 77.983.37 | 0.190.21 | |

4.2 Equity of Sim911

We assess Sim911’s equity features by evaluating “how effectively it provides simulation experiences for different caller groups”, represented by each supported tag in the caller image (CI). Recognizing that some tags are subjective and challenging to quantify, we adopt two general approaches to study these equity features. We employ fine-tuned BART (Lewis et al. 2019), a state-of-the-art model for zero-shot text classification, to evaluate Sim911-generated emergency call texts against a predefined set of image tags. For each generated call , associated with ground truth tags , BART predicts the presence or absence of each tag using a binary classifier , which outputs 1 if is associated with , and 0 otherwise. The predicted tags form a binary vector . Accuracy for each call is calculated by comparing with using the formula . This classification is iteratively performed for each tag, and the overall accuracy is determined by averaging the individual accuracies across all generated calls as . Second, we perform a textual similarity analysis based on syntax (Context-Free Grammar Parser), lexicon (TF-iDF), and sentiment (Loria 2018). We compare the generated outputs tagged as with both the ground truth tagged as and not-. To quantify the strength of classification for each tag, we calculate the Margin Score , where is the overall syntactic similarity of the output to reference texts with a given tag.

Besides these two approaches, we additionally introduce the following tag-specific evaluation methods: (1) NRCLex (Mohammad and Turney 2013) for unsupervised textual emotion detection, where we analyze the accuracy similarly to the BART Score. (2) Gunning Fog Index, a well-known method in linguistics of text readability analysis, is used to assess the readability of the text for non-native English speakers. Gunning Fog Index outputs a readability level and we analyze this score similarly to the Margin Score.

From the statistics in Table 2, we derive the following findings. Sim911 achieves strong performance across all caller image tags when all components are enabled, including age groups (BART=83.11%, Margin=0.34), emotion ranges (BART=85.66%, Margin=0.36), and unhoused populations (BART=73.94%, Margin=0.21). Disabling the FSP sub-component of CaCG results in notable declines for age groups (BART=62.90%, Margin=0.09) and mental health tags (BART=64.55%, Margin=0.11). Turning off knowledge construction (KC) significantly reduces performance, especially for low-income housing (BART=51.13%, Margin=0.04) and mental health (BART=67.67%, Margin=0.17). Similarly, disabling validation with looped correction (VLC) leads to a decrease in metrics for mental health (BART=83.22%, Margin=0.21). When all components are disabled, the system’s performance deteriorates significantly, particularly for the low-income housing tags (BART=44.44%, Margin=0.04) and non-native speakers (BART=69.13%, Margin=0.21). In conclusion, Sim911 delivers equitable and inclusive simulations in real-world deployment when all components are enabled. Disabling components negatively impacts Sim911’s equity features.

4.3 Insights from User Study

We collected 10 anonymous feedback from trainees (x2), active call-takers/dispatchers (x2), and training officers (x6) at DEC. Surveys are contributed by MNDEC based on the availability. Responses included yes/no questions, written comments, and a scaled rating system: Not at all (1), Neutral (2), Somewhat (3), Very much (4), and Perfectly (5). We find following insights. See complete survey setup in Appendix.

Effectiveness and Equity: Sim911 received scores of 4.50 for realism and 4.70 for authenticity. In terms of equity, it performed well across various caller image tags, with average scores as follows: Age Groups (4.25), Emotion Ranges (4.20), Unhoused (4.10), Mental Health (4.25), Non-Native Speakers (4.25), and Low-Income Housing (4.10). Additionally, Sim911 earned an average score of 4.89 for “How effectively does Sim911 support call-taker training in real-life scenarios?”. One participant commented: “I was surprised by how well it handled a call as a pregnant woman. I even managed to successfully deliver a baby on the phone!” Another shared: “When it played the role of a kid caller, it acted just like a real child—refusing to do anything until his mom arrived on the scene.” These results emphasize Sim911’s effectiveness in preparing call-takers by simulating diverse caller profiles and challenging real-life situations.

Comparison to Human-led Training: 9 out of 10 participants found Sim911 to be on par with or better than traditional human-led training. One participant remarked: “Sim911 is a great starting point because it comes up more incident types than what we do right now. It’s a valuable tool for enhancing our training.” Another said: “It’s impressive how Sim911 can simulate different callers (images). Trainees can be exposed to rare but useful calls that we could not (simulate) in the past.” These findings highlight that Sim911 not only complements human-led training but also enhances it by providing a broader range of incident types and scenarios that are difficult to replicate manually.

5 Related Work

Simulation-based training is a key component in various fields such as healthcare, aviation, and emergency services, where it provides a controlled environment for skill development without real-world risks (Suresh et al. 2023; Preiksaitis and Rose 2023; Daun et al. 2023). This method enhances critical thinking, decision-making, and practical skills by allowing repeated exposure to diverse and sometimes hazardous scenarios (Ibrahim et al. 2023; Flores, Ziakkas, and Dillman 2023; Rahman et al. 2023). Recent technological advancements, including Augmented Reality (AR), have begun to enhance traditional training setups, offering more immersive training experiences (Fitria 2023; Pfaff et al. 2020; Li et al. 2018; Ummenhofer et al. 2019), especially those for emergency responses (Parry et al. 2022; Mehta et al. 2022). Despite these innovations, most training simulations still rely heavily on human-scripted scenarios and instructor feedback, which can limit scalability and adaptability (Violato et al. 2023; Salvato et al. 2021; de Paula Ferreira, Armellini, and De Santa-Eulalia 2020). Large Language Models (LLMs) are emerging as a transformative tool for dialogue-focused simulations, able to generate dynamic and realistic interactions (Webb 2023; Thoppilan et al. 2022; Gong et al. 2023). But their integration into training programs must carefully address accuracy, ethical concerns, and potential biases to ensure effectiveness (Shanahan, McDonell, and Reynolds 2023; Shayegani et al. 2023; Yao et al. 2024b; Salewski et al. 2024). Refer to our extended relation work in Appendix.

6 Summary

In this paper, we introduce Sim911, the first AI-driven simulation environment designed to assist 9-1-1 dispatcher training under emergency response scenarios. Sim911 aims to enhance the preparedness of emergency dispatchers, contributing to the resilience and safety of urban populations. Evaluation results on pre-configured runtimes and real-world deployment show that Sim911 effectively delivers realistic, authentic, and equitable simulations, to assist dispatcher training with the integration of knowledge construction, context-aware controlled generation, and validation with looped correction.

This work can help emergency communications centers with limited staffing by allowing trainees to interact individually with the training program. Nearly 6,000 emergency communications centers could benefit from this training opportunity. The GenAI-enabled solution can be extended to other training spaces, such as teachers and medical students.

Acknowledgment

This work was supported in part by the U.S. National Science Foundation under Grants 2427711, the Google Academic Research Award, OpenAI Researcher Access Program, and the U.S. Department of Education under Grant R305C240010. The opinions, findings, conclusions, or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the sponsoring agencies.

References

- Afonso (2021) Afonso, W. 2021. Planning for the unknown: Local government strategies from the fiscal year 2021 budget season in response to the COVID-19 pandemic. State and Local Government Review, 53(2): 159–171.

- Almazrouei et al. (2023) Almazrouei, E.; Alobeidli, H.; Alshamsi, A.; Cappelli, A.; Cojocaru, R.; Alhammadi, M.; Daniele, M.; Heslow, D.; Launay, J.; Malartic, Q.; et al. 2023. The falcon series of language models: Towards open frontier models. Hugging Face repository.

- Artetxe et al. (2019) Artetxe, M.; Schwenk, H.; Marquez, L.; and Cho, K. 2019. Massively Multilingual Sentence Embeddings for Zero-Shot Cross-Lingual Transfer and Beyond. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), 3293–3302.

- Banerjee and Lavie (2005) Banerjee, S.; and Lavie, A. 2005. METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In Proceedings of the acl workshop on intrinsic and extrinsic evaluation measures for machine translation and/or summarization, 65–72.

- Brown et al. (2020) Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J. D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. 2020. Language models are few-shot learners. Advances in neural information processing systems, 33: 1877–1901.

- Carta et al. (2023) Carta, T.; Romac, C.; Wolf, T.; Lamprier, S.; Sigaud, O.; and Oudeyer, P.-Y. 2023. Grounding large language models in interactive environments with online reinforcement learning. In International Conference on Machine Learning, 3676–3713. PMLR.

- Cer et al. (2018) Cer, D.; Yang, Y.; Kong, S.-y.; Hua, N.; Limtiaco, N.; John, R.; Ngu, A.; Christopher, A.; Constant, N.; Guajardo-Cespedes, M.; et al. 2018. Universal Sentence Encoder. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, 169–174.

- Chen et al. (2022) Chen, Z.; Li, I.; Zhang, H.; Preum, S.; Stankovic, J. A.; and Ma, M. 2022. Cityspec: An intelligent assistant system for requirement specification in smart cities. In 2022 IEEE International Conference on Smart Computing (SMARTCOMP), 32–39. IEEE.

- Chen et al. (2023) Chen, Z.; Li, I.; Zhang, H.; Preum, S.; Stankovic, J. A.; and Ma, M. 2023. CitySpec with shield: A secure intelligent assistant for requirement formalization. Pervasive and Mobile Computing, 92: 101802.

- Chen et al. (2024) Chen, Z.; Sun, X.; Li, Y.; and Ma, M. 2024. Auto311: A Confidence-Guided Automated System for Non-emergency Calls. In Proceedings of the AAAI Conference on Artificial Intelligence, 21967–21975.

- Damerau (1964) Damerau, F. J. 1964. A technique for computer detection and correction of spelling errors. Communications of the ACM, 7(3): 171–176.

- Daun et al. (2023) Daun, M.; Grubb, A. M.; Stenkova, V.; and Tenbergen, B. 2023. A systematic literature review of requirements engineering education. Requirements Engineering, 28(2): 145–175.

- de Paula Ferreira, Armellini, and De Santa-Eulalia (2020) de Paula Ferreira, W.; Armellini, F.; and De Santa-Eulalia, L. A. 2020. Simulation in industry 4.0: A state-of-the-art review. Computers & Industrial Engineering, 149: 106868.

- Devlin et al. (2019) Devlin, J.; Chang, M.-W.; Lee, K.; and Toutanova, K. 2019. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv:1810.04805.

- Fitria (2023) Fitria, T. N. 2023. Augmented reality (AR) and virtual reality (VR) technology in education: Media of teaching and learning: A review. International Journal of Computer and Information System (IJCIS), 4(1): 14–25.

- Flores, Ziakkas, and Dillman (2023) Flores, A. D. C.; Ziakkas, D.; and Dillman, B. G. 2023. Artificial Cognitive Systems and Aviation training. Intelligent Human Systems Integration (IHSI 2023): Integrating People and Intelligent Systems, 69(69).

- Gong et al. (2023) Gong, T.; Lyu, C.; Zhang, S.; Wang, Y.; Zheng, M.; Zhao, Q.; Liu, K.; Zhang, W.; Luo, P.; and Chen, K. 2023. Multimodal-gpt: A vision and language model for dialogue with humans. arXiv preprint arXiv:2305.04790.

- Google Maps Platform (2024) Google Maps Platform. 2024. Google Maps Platform. https://mapsplatform.google.com/. Accessed: 2024-05-15.

- Ibrahim et al. (2023) Ibrahim, S.; Lok, J.; Mitchell, M.; Stoiljkovic, B.; Tarulli, N.; and Hubley, P. 2023. Equity, diversity and inclusion in clinical simulation healthcare education and training: An integrative review. International Journal of Healthcare Simulation.

- Kaplan et al. (2020) Kaplan, J.; McCandlish, S.; Henighan, T.; Brown, T. B.; Chess, B.; Child, R.; Gray, S.; Radford, A.; Wu, J.; and Amodei, D. 2020. Scaling laws for neural language models. arXiv preprint arXiv:2001.08361.

- Lewis et al. (2019) Lewis, M.; Liu, Y.; Goyal, N.; Ghazvininejad, M.; Mohamed, A.; Levy, O.; Stoyanov, V.; and Zettlemoyer, L. 2019. Bart: Denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension. arXiv preprint arXiv:1910.13461.

- Lewis et al. (2020) Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.-t.; Rocktäschel, T.; et al. 2020. Retrieval-augmented generation for knowledge-intensive nlp tasks. Advances in Neural Information Processing Systems, 33: 9459–9474.

- Li et al. (2022) Li, D.; Rawat, A. S.; Zaheer, M.; Wang, X.; Lukasik, M.; Veit, A.; Yu, F.; and Kumar, S. 2022. Large language models with controllable working memory. arXiv preprint arXiv:2211.05110.

- Li et al. (2018) Li, Y.; Wu, J.; Tedrake, R.; Tenenbaum, J. B.; and Torralba, A. 2018. Learning particle dynamics for manipulating rigid bodies, deformable objects, and fluids. arXiv preprint arXiv:1810.01566.

- Lin (2004) Lin, C.-Y. 2004. ROUGE: A Package for Automatic Evaluation of Summaries. In Text Summarization Branches Out, 74–81. Barcelona, Spain: Association for Computational Linguistics.

- Liu et al. (2019) Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; and Stoyanov, V. 2019. Roberta: A robustly optimized bert pretraining approach. arXiv preprint arXiv:1907.11692.

- Loria (2018) Loria, S. 2018. textblob Documentation. Release 0.15, 2.

- Ma et al. (2019) Ma, M.; Preum, S. M.; Ahmed, M. Y.; Tärneberg, W.; Hendawi, A.; and Stankovic, J. A. 2019. Data sets, modeling, and decision making in smart cities: A survey. ACM Transactions on Cyber-Physical Systems, 4(2): 1–28.

- Mehta et al. (2022) Mehta, R.; Moats, J.; Karthikeyan, R.; Gabbard, J.; Srinivasan, D.; Du, E.; Leonessa, A.; Burks, G.; Stephenson, A.; and Fernandes, R. 2022. Human-centered intelligent training for emergency responders. AI Magazine, 43(1): 83–92.

- Mohammad and Turney (2013) Mohammad, S. M.; and Turney, P. D. 2013. Crowdsourcing a word–emotion association lexicon. Computational intelligence, 29(3): 436–465.

- Naveed et al. (2023) Naveed, H.; Khan, A. U.; Qiu, S.; Saqib, M.; Anwar, S.; Usman, M.; Barnes, N.; and Mian, A. 2023. A comprehensive overview of large language models. arXiv preprint arXiv:2307.06435.

- NICE (2023) NICE. 2023. State of 911 Staff Performance and Retention Benchmark Study Report. https://www.nicepublicsafety.com/resources/state-of-911-staff-performance-and-retention-benchmark-study-report?UTM˙source=NENAad.

- NYC-911 (2022) NYC-911. 2022. Next-Gen 911 on Target for 2024 Completion. https://www.nyc.gov/content/oti/pages/press-releases/next-gen-911-on-target-2024-completion. Accessed: 08-15-2023.

- Papineni et al. (2002) Papineni, K.; Roukos, S.; Ward, T.; and Zhu, W.-J. 2002. BLEU: a method for automatic evaluation of machine translation. In Proceedings of the 40th annual meeting of the Association for Computational Linguistics, 311–318.

- Parry et al. (2022) Parry, A. E.; Kirk, M. D.; Colquhoun, S.; Durrheim, D. N.; and Housen, T. 2022. Leadership, politics, and communication: challenges of the epidemiology workforce during emergency response. Human Resources for Health, 20(1): 33.

- Pfaff et al. (2020) Pfaff, T.; Fortunato, M.; Sanchez-Gonzalez, A.; and Battaglia, P. W. 2020. Learning mesh-based simulation with graph networks. arXiv preprint arXiv:2010.03409.

- Preiksaitis and Rose (2023) Preiksaitis, C.; and Rose, C. 2023. Opportunities, challenges, and future directions of generative artificial intelligence in medical education: scoping review. JMIR medical education, 9: e48785.

- Rahman et al. (2023) Rahman, M. A.; Jia, L.; Mirza, E.; Preum, S. M.; Alemzadeh, H.; Williams, R. D.; and Stankovic, J. A. 2023. emsReACT: A Real-Time Interactive Cognitive Assistant for Cardiac Arrest Training in Emergency Medical Services. In 2023 19th International Conference on Distributed Computing in Smart Systems and the Internet of Things (DCOSS-IoT), 120–128. IEEE.

- Reimers and Gurevych (2019) Reimers, N.; and Gurevych, I. 2019. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. arXiv preprint arXiv:1908.10084.

- Salewski et al. (2024) Salewski, L.; Alaniz, S.; Rio-Torto, I.; Schulz, E.; and Akata, Z. 2024. In-Context Impersonation Reveals Large Language Models’ Strengths and Biases. Advances in Neural Information Processing Systems, 36.

- Salvato et al. (2021) Salvato, E.; Fenu, G.; Medvet, E.; and Pellegrino, F. A. 2021. Crossing the reality gap: A survey on sim-to-real transferability of robot controllers in reinforcement learning. IEEE Access, 9: 153171–153187.

- Saxon et al. (2022) Saxon, N.; Villena, P.; Wilburn, S.; Andersen, S.; Maloney, D.; and Jacobson, R. 2022. Annual Survey of Public Employment & Payroll Summary Report: 2021. US Census Bureau.

- Shanahan, McDonell, and Reynolds (2023) Shanahan, M.; McDonell, K.; and Reynolds, L. 2023. Role play with large language models. Nature, 623(7987): 493–498.

- Shayegani et al. (2023) Shayegani, E.; Mamun, M. A. A.; Fu, Y.; Zaree, P.; Dong, Y.; and Abu-Ghazaleh, N. 2023. Survey of vulnerabilities in large language models revealed by adversarial attacks. arXiv preprint arXiv:2310.10844.

- Singhal et al. (2023) Singhal, K.; Tu, T.; Gottweis, J.; Sayres, R.; Wulczyn, E.; Hou, L.; Clark, K.; Pfohl, S.; Cole-Lewis, H.; Neal, D.; et al. 2023. Towards expert-level medical question answering with large language models. arXiv preprint arXiv:2305.09617.

- Suresh et al. (2023) Suresh, D.; Aydin, A.; James, S.; Ahmed, K.; and Dasgupta, P. 2023. The role of augmented reality in surgical training: a systematic review. Surgical Innovation, 30(3): 366–382.

- Thoppilan et al. (2022) Thoppilan, R.; De Freitas, D.; Hall, J.; Shazeer, N.; Kulshreshtha, A.; Cheng, H.-T.; Jin, A.; Bos, T.; Baker, L.; Du, Y.; et al. 2022. Lamda: Language models for dialog applications. arXiv preprint arXiv:2201.08239.

- Touvron et al. (2023) Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.-A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. 2023. Llama: Open and efficient foundation language models. arXiv preprint arXiv:2302.13971.

- Ummenhofer et al. (2019) Ummenhofer, B.; Prantl, L.; Thuerey, N.; and Koltun, V. 2019. Lagrangian fluid simulation with continuous convolutions. In International Conference on Learning Representations.

- Violato et al. (2023) Violato, E.; MacPherson, J.; Edwards, M.; MacPherson, C.; and Renaud, M. 2023. The use of simulation best practices when investigating virtual simulation in health care: A scoping review. Clinical Simulation in Nursing, 79: 28–39.

- Wang et al. (2023) Wang, Y.; Zhong, W.; Li, L.; Mi, F.; Zeng, X.; Huang, W.; Shang, L.; Jiang, X.; and Liu, Q. 2023. Aligning large language models with human: A survey. arXiv preprint arXiv:2307.12966.

- Webb (2023) Webb, J. J. 2023. Proof of concept: using ChatGPT to teach emergency physicians how to break bad news. Cureus, 15(5).

- Wei, Haghtalab, and Steinhardt (2024) Wei, A.; Haghtalab, N.; and Steinhardt, J. 2024. Jailbroken: How does llm safety training fail? Advances in Neural Information Processing Systems, 36.

- Wei et al. (2022) Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Xia, F.; Chi, E.; Le, Q. V.; Zhou, D.; et al. 2022. Chain-of-thought prompting elicits reasoning in large language models. Advances in neural information processing systems, 35: 24824–24837.

- Yao et al. (2024a) Yao, S.; Yu, D.; Zhao, J.; Shafran, I.; Griffiths, T.; Cao, Y.; and Narasimhan, K. 2024a. Tree of thoughts: Deliberate problem solving with large language models. Advances in Neural Information Processing Systems, 36.

- Yao et al. (2024b) Yao, Y.; Duan, J.; Xu, K.; Cai, Y.; Sun, Z.; and Zhang, Y. 2024b. A survey on large language model (llm) security and privacy: The good, the bad, and the ugly. High-Confidence Computing, 100211.

Appendix

Appendix A Discussion and Future Work

We plan to enhance the system in several aspects. (1) Knowledge Base Timeliness: Real-time updates are crucial for accuracy (Chen et al. 2022, 2023), but they can add overhead. Enhancing timeliness with text ranking or abstract representation will improve Sim911. (2) Hallucination: Despite using advanced LLM backends like GPT-4o, the simulation sometimes generates inaccurate or fabricated information. Further research is needed to mitigate these hallucinations and enhance Sim911’s credibility. (3) Systematic Review on Expert Feedback: Collecting and systematically reviewing expert feedback during deployment will provide valuable insights for improving Sim911 and other simulation tools. (4) Model Exploration: We will explore additional implementations of Sim911 by introducing and fine-tuning more LLMs, such as LlaMa (Touvron et al. 2023) and Falcon (Almazrouei et al. 2023).

Appendix B Related Work (Extended)

Simulation-based training is recognized as a pivotal component in preparing individuals for various scenarios across different occupations (Suresh et al. 2023; Preiksaitis and Rose 2023; Daun et al. 2023). This training methodology offers a safe and controlled environment for learners to acquire and refine their skills without the real-world consequences of mistakes. Studies show that simulation-based training enhances decision-making, critical thinking, and practical skills, making it an indispensable tool in fields such as healthcare, aviation, and emergency response services, which require high-stakes decision-making (Ibrahim et al. 2023; Flores, Ziakkas, and Dillman 2023; Rahman et al. 2023). Simulation allows for repeated exposure to a wide range of scenarios, some of which may be infrequent or too dangerous to practice in real life, thereby ensuring that trainees are well-prepared for any situation.

In recent years, traditional training setups have begun to evolve, incorporating advanced technologies such as Augmented Reality (AR) and 3D Modeling to provide more immersive and interactive learning experiences (Fitria 2023; Li et al. 2018; Ummenhofer et al. 2019; Pfaff et al. 2020). These technological advancements aim to bridge the gap between simulation and real-life experience, offering more realistic and engaging training environments. However, despite these innovations, the core of most training simulations remains largely human-based, relying on scripted scenarios and instructor feedback to guide the learning process (Violato et al. 2023; Salvato et al. 2021; de Paula Ferreira, Armellini, and De Santa-Eulalia 2020). This dependence on human-generated content and feedback limits training programs’ scalability and adaptability and increases training costs, especially in scenarios that demand rapid updates or customization to address emerging challenges.

Large Language Models (LLMs) demonstrate remarkable performance in generating human-like text, opening new avenues for their application in dialogue-focused simulations (Webb 2023; Thoppilan et al. 2022; Gong et al. 2023). With their ability to understand and generate natural language, LLMs offer more dynamic and responsive simulation experiences, adapting to the trainee’s inputs in real time to create more personalized and unpredictable training scenarios (Shanahan, McDonell, and Reynolds 2023). This capability sets the stage for LLMs to revolutionize training simulations, particularly in domains like emergency call dispatching, where the ability to navigate complex dialogues under pressure is crucial. However, integrating LLMs into training simulations presents challenges, including ensuring the models’ responses are accurate, ethical, and free from biases, which are critical considerations for their successful application in important areas (Shayegani et al. 2023; Yao et al. 2024b; Salewski et al. 2024).

Prompt Engineering is an important technique in enhancing LLMs’ overall performance based on the latest literature (Singhal et al. 2023; Wang et al. 2023) by using specifically designed workflows based on prompt templates like Chain-of-Thoughts (Wei et al. 2022), Tree-of-Thoughts (Yao et al. 2024a), Few-shot Prompting (Brown et al. 2020; Touvron et al. 2023; Kaplan et al. 2020), and Retrieval-Augmented Generation (Lewis et al. 2020). These methodologies leverage structured prompts to guide LLMs in reasoning through complex tasks, enabling more coherent and contextually accurate responses. However, directly applying these techniques in specialized applications such as Sim911, which simulates emergency call handling, results in various performance drops if no relevant knowledge is introduced prior. This phenomenon is well-documented in literature (Li et al. 2022) and corroborated by experimental results, indicating that the effectiveness of prompt engineering is highly contingent on the contextual alignment of the prompts with the specific domain knowledge required for the task. Consequently, further refinement and domain-specific adjustments of prompt templates are essential to mitigate performance issues and enhance the utility of LLMs in such specialized scenarios.

Text Comparison metrics are explored and applied in Sim911’s evaluation. For our specific goal of measuring realism, authenticity, and equity under emergency response scenarios, traditional metrics like Damerau-Levenshtein distance (Damerau 1964) and BLEU (Papineni et al. 2002) are unsuitable due to their focus on surface-level text similarities and lack of semantic depth. Other n-gram metrics like ROUGE (Lin 2004) and WER fail to capture the nuances of meaning and context, which are crucial in evaluating emergency response dialogues. While end-to-end metrics such as sentence embeddings and learned metrics (Reimers and Gurevych 2019; Cer et al. 2018; Artetxe et al. 2019) do consider semantic content, each of them, when applied in isolation, tends to misalign with our specific criteria for realism and authenticity. Furthermore, these metrics often lack interpretability and generalization in the emergency response context, which is critical for understanding and improving the system’s performance. Thus, a comprehensive approach that integrates multiple metrics and adapts them to the emergency response domain is necessary to achieve a more accurate and meaningful evaluation.

Appendix C Ethical Concerns

Our Institutional Review Board (IRB) has approved the Sim911 project under reference number 232131. We recognize the potential ethical implications of using generative AI technologies, such as large language models (LLMs), in training simulations. Below, we outline how we address these concerns:

Human Supervision and Intervention. Sim911 is a training tool designed exclusively for simulation purposes, aiding 9-1-1 dispatchers without impacting real-world emergency responses. The simulation operates under rigorous human oversight, with training managers actively monitoring all interactions. Both trainers and trainees can terminate the simulation at their discretion.

Human Subjects. Participants in the Sim911 project include 9-1-1 dispatchers and training managers. We refine the system collaboratively through regular feedback sessions, compensating all participants with funds from both institutional and governmental sources. Participants have the freedom to exit the simulation at any point during a session.

Privacy and Data Security. We handle sensitive 9-1-1 call data with utmost care, anonymizing all data to eliminate personal identifiers. Our data storage solutions are secure, utilizing internal cloud servers backed by institutional and government-supported security measures.

Bias and Fairness. We prioritize fairness and actively work to minimize bias, particularly in scenarios involving vulnerable populations. Our approach includes expert consultations in ethics and bias mitigation and ongoing updates to simulation scenarios to ensure diverse and equitable interactions.

Continuous Monitoring. An independent ethics board regularly audits our system, ensuring ethical compliance and optimal performance. These audits help us identify and rectify any issues promptly.

Transparency and Accountability. We maintain a transparent operation, with regular updates and audits shared with stakeholders such as the Chief Information Security Officer (CISO) of the metro government and the Director of the Department of Emergency Communications (DEC). Detailed logs of all simulation activities are recorded and reviewed.

Social and Public Safety. In consultation with key metro government officials, we have implemented additional safeguards, such as tagging data related to vulnerable groups in de-identified call records. Sim911 is strictly a training tool and is not used for any other purposes.

Appendix D The Design of User Study

We design our user study survey with the following questions and corresponding rating system (not exhaustive list, we only list questions within this paper’s scope):

-

•

Realism: How similar/vivid are the calls generated by Sim911 to real-world calls? (1-5)

-

•

Authenticity: Are Sim911’s responses valid and true to real-life situation? (1-5)

-

•

Inclusiveness: How well does Sim911 provide experiences for simulating vulnerable callers? (1-5)

-

•

Helpfulness: How helpful do you think Sim911 assists call-taking training? (1-5)

-

•

Improving: Do you observe Sim911’s improvements over time? (1-5)

-

•

Others: Do you have any comments on realism, authenticity, inclusiveness, helpfulness, and overtime improving? (written)

-

•

How well does Sim911 mimic different age groups (e.g., kid, teenager, adult, senior)? (1-5)

-

•

How well does Sim911 mimic different emotion ranges (e.g., sad, calm, neutral, anxious, angry, irrational)? (1-5)

-

•

How well does Sim911 consider the non-English speaking tag? (1-5)

-

•

How well does Sim911 consider the unhoused tag? (1-5)

-

•

How well does Sim911 consider the low-income housing area tag? (1-5)

-

•

How well does Sim911 consider the mental health issues tag? (1-5)

-

•

Others: Do you have any comments on age groups, emotion ranges, non-English speaking, unhoused, low-income housing area, mental health issues want to share? (written)

-

•

What do you think of Sim911 compared to traditional human-led training simulations? (less effective, somewhat similar, more effective)

-

•

Your training experience is improved by Sim911. (disagree, somewhat, agree)

-

•

How satisfied are you with your overall experience with Sim911? (1-5)

Appendix E Running Examples and Algorithms

We provide some running examples to better demonstrate the process of Knowledge Construction, introduced in Section 3.1, shown in Figure 4 and Controlled Prompt Generation, introduced in Secion 3.2, shown in Figure 3.

As detailed in Algorithm 1, the procedure begins by importing constructed knowledge , associated tags , and static fact bases , setting the stage for a predetermined number of simulations . At the start of each simulation cycle, instruction sets and are prepared using the relevant tags to accurately depict the incident specifications and caller images. Following this, the system processes the tags to extract and compile necessary data from into specialized knowledge bases and , enriching the simulation content and providing a robust contextual backdrop. These compiled datasets and facts are then grouped into reference components , crucial for crafting the final prompts. Finally, the system selects the most appropriate LLM backend agent based on general tags from . Leveraging CoT, RAG, and FSP, the system dynamically constructs prompts and feeds them to . These prompts are designed to not only simulate realistic and varied emergency call situations but also to ensure they align with the tailored needs and profiles identified through the knowledge bases.

Threshold Adjustment and Backend Selection

The threshold defines how often Sim911 regenerates responses when failing checks (format, alignment, factual), before being reviewed by human supervisors, as detailed in section Validation with Looped Correction. We adjust the threshold to 3 throughout our deployment considering both latency and negative sample capture rate (the percentage of low-quality responses get filtered out):

-

•

T=1: Average latency is 24 ms/token w/ capture rate (CR) of 53.13%.

-

•

T=2 increases latency to 49 ms/token and boosts CR to 77.43%.

-

•

T=3 achieves a 98.47% CR (similar to T=4 and 5) with shorter latency of 61 ms/token.

We also finalized the LLM backend as GPT-4o based on its optimal performance (by the date of the submission): Sim911 with GPT-4o achieved the best realism scores at submission (avg ppl 11.07) compared to LLaMa 3.1 (avg ppl 32.74). Also, many dispatch centers lack resources for local LLM hosting; OpenAI’s API offers an efficient solution.

Appendix F Archived Call Data & Training Pieces

In this section, we include a few screenshots of both archived call data and conventional training logs after de-identification. We plan to release the de-identified data samples including both call data and training logs upon the acceptance of this paper.

F.1 Conventional Training Pieces

In Figure 5, the first page is the training plan, filled by the actor (dispatcher), and the following two parts are the detailed simulation instructions for one specific simulation.

F.2 Call Data Pieces

[Call Snippet 1]

911 call-taker: 9-1-1, what’s the address of the emergency?

911 caller: A 322 Broadway.

911 call-taker: Alright, 322 Broadway, that’s downtown.

911 caller: Right in between 3rd and 4th Avenue South.

911 call-taker: What’s the phone number you’re calling from?

911 caller: 407-456-0938.

911 call-taker: What is your name?

911 caller: I’m Brian Markham-Cammons.

911 call-taker: And tell me exactly what happened.

911 caller: I have a homeless fellow named Jerry that came in asking us to call an ambulance for him. I’m not sure, what kind of problems are you having Jerry? Okay, he said he’s hearing voices and then he has pain in his left leg. Hey Jerry, how old are you? Fifty-one.

911 call-taker: Is he breathing normally?

911 caller: Yeah, he’s standing up currently, and yeah.

911 call-taker: Has he been violent at all? Aggressive?

911 caller: No, no, he’s not, he’s just, he came in asking if we could call him an ambulance.

911 call-taker: Jerry, do you have any weapons on you?

911 caller: No.

911 call-taker: We have this sent up and they’ll be on the way to 322 Broadway at the Margaritaville downtown between 3rd Avenue and 4th Avenue.

911 call-taker: Is he going to be waiting inside or outside?

911 caller: He’s going to be waiting outside, yeah, I’ll be outside.

911 call-taker: Alright, perfect. I will let them know. If anything changes or gets worse, call us back immediately. In the meantime, just let him rest in the most comfortable position and do not allow him to have anything to eat or drink unless he’s diabetic.

[Call Snippet 2]

911 call-taker: Metro City X 9-1-1, what is the exact location of your emergency?

911 caller: At 411 Murfreesboro Pike, Apartment 302.

911 call-taker: Okay, you said 411 Murfreesboro Pike in South City X, apartment 302.

911 call-taker: Okay, is there a building letter or anything with this?

911 call-taker: Okay, is this near Paris Avenue and Nance Lane , 37210 is the zip code?

911 call-taker: Okay, and what’s your phone number?

911 caller: 615-946-5518.

911 call-taker: And what’s your first and last name, ma’am?

911 caller: Jennifer Robinson.

911 call-taker: Okay, and what’s going on?

911 caller: I had someone opening my door less than five minutes ago. I had to go to the bathroom and mop my bathroom floor because of some illegal hazardous that had been applied to my walker. And I got it mopped and I came back into the living room and I’ve got three urine spots that someone has opened the door and placed on it since I had less than three minutes out from the bathroom back to the living room.

911 call-taker: Okay, do you know who the subject was that did this?

911 caller: No, I do not know, because visually I did not catch them in my apartment, but the door and the lock had to be unlocked, because I keep the door locked at all times. And they’re on property and they had to come out of maintenance at 301 or 303.

911 call-taker: Okay, but you did not see this subject or anything? But you don’t have a description.

911 caller: No, there are small puddles, and they’re like puddles, but there were no waters or puddles in the living room. And it’s not only one that has access to my front door. It’s a family of police officers, and I’m calling it in to you because they’re running illegal and erotic porn over here, and I have suffered long enough at this property and them not be cracked or turned in by cameras over here.

911 call-taker: Okay ma’am, the only thing that I can do for you is I can send an officer out there to speak with you about this and you make a report.

911 caller: It’s like, oh, well, I’ll get it.

911 call-taker: Yes, I’ll get a picture. I can still have it serviced from their body fluids. You did say 411 Murfreesboro Pike in South City X, the Mercury Corps Department, is that correct?

911 caller: That’s right, in apartment 302 for maintenance, in 302.

911 call-taker: Yes ma’am, 302, I got that. I’ll go ahead and send this call up and we’ll get an officer out to speak with you, okay?

911 caller: Alright, thank you.

[Call Snippet 3]

911 call-taker: Metro City X 9-1-1, what is the location of your emergency?

911 caller: I’ll tell you what, I probably ought to call the other one, 865, what is that number? Because we have a suspicious vehicle that’s been coming and going right across my business all night, been sitting out by our gate. He just left again, but he only goes for a little while and he comes right back and sits out in front of our gate again. It’s a white Nissan, and I don’t really, I don’t want to go out there because I don’t know what they’re up to.

911 call-taker: Okay, what address are you at?

911 caller: 20 Culver Street, it’s Axel Nobel.

911 call-taker: You said 20 Culver Street? What is his name?

911 caller: Culvert, C-U-L-V-E-R-T. I just found out about it, some people have been telling me. He’s been there since 10 o’clock last night, and he just, he drives off, but he comes back and sits across from the gate, and he just drove off against us. I’m sure he’ll probably come back.

911 call-taker: What’s your first and last name?

911 caller: Uh, Darren Brown.

911 call-taker: And the number you’re calling me from?

911 caller: 615-405-4748.

911 call-taker: I have a call sent up. We’ll get officers in the area. Did you want to speak to officers?

911 caller: Uh, well, I’ll tell you what, they can call me and let me know if they have any, if they’ve seen him or anywhere, but he’s riding just right around in this area. So he’ll be in this area. Um, you can have one of them to call me if anything goes on, but it’s not, don’t worry about it.

Appendix G Snippets of Sim911 Runtimes

Here we only include three runtimes. We are planning to release all past training interactions as well upon the acceptance of this paper.

[Runtime 1]

Sim911: Hello, I need to report a car that’s been parked in front of my house for days. It looks abandoned.

Trainee: What is your address?

Sim911: My address is 742 Evergreen Terrace.

Trainee: Okay, is the car yours?

Sim911: No, the car isn’t mine. I’ve never seen it in the neighborhood before.

Trainee: How long has the car been there?

Sim911: It’s been there for about four days now.

Trainee: And you say it’s a red car. What’s the make and model?

Sim911: Actually, I didn’t mention the color. The car is a grey sedan, but I’m not sure about the make or model. It’s an older model, though.

Trainee: Is there anyone inside?

Sim911: I haven’t seen anyone in the car. It’s been empty the whole time.

Trainee: Are there any other identifying marks, bumper stickers, scars, dents, scratches?

Sim911: Yes, there’s a noticeable dent on the passenger side door and a bumper sticker for a local radio station, WZKB.

Trainee: What’s the license plate number?

Sim911: I don’t have the license plate number. I didn’t think to write it down. Should I go check and call you back?

Trainee: No, that’s not necessary. Do you know what state the license plate is from?

Sim911: Yes, the license plate is from our state.

Trainee: Is there anything suspicious inside the car that you’ve seen?

Sim911: From what I can see, there doesn’t appear to be anything suspicious inside the car. It looks pretty empty.

Trainee: Okay, what’s your name?

Sim911: My name is Jordan Smith.

Trainee: And what’s your telephone number, Jordan?

Sim911: My phone number is 555-0199.

Trainee: Okay, thank you, Jordan. Do you want to see police when they come out, or do you just want them to leave?

Sim911: I’m fine with just them checking it out. I don’t need to speak with them unless they need more information from me.

Trainee: Okay, we’ll send police. Thank you for calling. Have a good day.

[Runtime 2]

Sim911: Hi, there’s a really aggressive driver with red pickup truck here swerving in and out of lanes. It’s happening on the I-40 going east. They’re driving extremely dangerously, and I’m really concerned they’re going to cause an accident.

Trainee: Let’s see here, I-40 East you said, is there a mile marker that you can tell me?

Sim911: Yes, it’s right around mile marker 210. This person is driving a red pickup truck, and they’re just… they’re all over the road.

Trainee: Trying to find the exact location. Give me one second.

Trainee: Can I have your number, sir?

Sim911: Yes, my number is 615-555-0123.

Trainee: Can I have your full name?

Sim911: My name is John Smith.

Trainee: Alright, Mr. Smith, you said it’s a red pickup truck, do you know what kind of pickup truck and which direction it’s headed?

Sim911: Yes, it’s a Ford F-150, and it’s headed east on I-40.

Trainee: Where would you like to meet them at?

Sim911: Could we meet at the parking lot of the City X Public Library on Church Street? It’s a public space, and I’ll be able to get there in about 15 minutes.

Trainee: Okay, alright Mr. Smith, I got a call set up and you said that was mile marker 210 on I-40 East and I will get this set up and the officer will meet you at the City X Public Library and I’ve got everything I need. Is there anything else you need?

Sim911: No, that should be everything. Thank you so much for your help. I really appreciate it.

Trainee: Okay, got it. Goodbye.

[Runtime 3]

Sim911: Hi, I need to report some property damage that happened at my place. Can you help me with this?

Trainee: Yeah, can you go ahead and give me the address?

Sim911: It’s 742 Evergreen Terrace, in City X.

Trainee: That’s not showing up as an address for me. Can you give me another address?

Sim911: Sorry for the confusion, it’s Evergreen Terrace.

Trainee: Good.

Sim911: Thank you for understanding. So, there was some damage to my front lawn and mailbox. It looks like someone drove through it last night.

Sim911: Hello, are you still there? Do you need any more information to help with this situation?

Trainee: Can I get your first and last name?

Sim911: Of course, my name is John Smith.

Trainee: Is there, do you see tire marks?

Sim911: Yes, there are very clear tire marks across the lawn and leading up to the damaged mailbox.

Trainee: Alright, and then would you like to speak to officers when they arrive?

Sim911: Yes, I would appreciate speaking to the officers when they arrive to provide any additional details they might need.

Trainee: All right.

Sim911: Thank you for your help. Is there anything else you need from me at this stage?

Trainee: Is there anyone that’s injured that you can see?

Sim911: No, there are no injuries. It looks like it was just property damage.

Trainee: All right, we will have that place checked soon. Bye.

Appendix H Co-pilot Designs in In-Context Validation

BERT Classifier for Incident Type Given a sequence of conversational turns , the BERT classifier aims to predict the type of incident associated with the conversation. Each turn is represented as a tokenized input to BERT, which processes the input to predict the incident class. The classification process can be formulated as:

| (1) |

where represents the BERT model fine-tuned for classification tasks.

RoBERTa-based Question Answering The RoBERTa model is utilized to determine whether a specific turn in the conversation contains an answer to a given question . For each turn , the model evaluates the presence of an answer by processing both the turn and the question together. The output is a binary indicator , where signifies that the turn contains an answer, and otherwise. This can be represented as:

| (2) |

where denotes the RoBERTa model adapted for the question-answering task.

Appendix I Background of Evaluation Metrics

In this section, we introduce each of the mentioned metrics in evaluation in detail.

I.1 METEOR

METEOR (Metric for Evaluation of Translation with Explicit ORdering) is a metric used to evaluate the quality of machine-generated text, especially in the context of machine translation and natural language generation. It aims to address some of the limitations of the BLEU metric by incorporating synonymy, stemming, and paraphrasing, and by emphasizing precision, recall, and a harmonic mean.

Definition of METEOR. Unlike BLEU, which primarily focuses on precision, METEOR considers both precision and recall, thereby providing a more balanced evaluation of translation quality. METEOR computes an alignment between the candidate and reference translations based on exact matches, stemmed matches, synonym matches, and paraphrase matches.

Calculation of METEOR. The METEOR score is calculated through the following steps:

-

1.

Tokenization: Both the candidate translation and reference translations are tokenized into words.

-

2.

Matching: Tokens from the candidate translation are matched to tokens in the reference translation using exact matches, stem matches, synonym matches, and paraphrase matches.

-

3.

Precision and Recall: The precision () and recall () are calculated based on the matches.

| (3) | |||

| (4) |

where:

-

•

is the number of matched tokens.

-

•

is the total number of tokens in the candidate translation.

-

•

is the total number of tokens in the reference translation.

Harmonic Mean and Penalty. The harmonic mean of precision and recall, also known as the F-score, is computed as follows:

| (5) |

To account for the fragmentation of matches, METEOR introduces a penalty ():

| (6) |

where is the number of contiguous sequences of matched tokens. The final METEOR score is calculated by combining the harmonic mean and the penalty:

| (7) |

Interpretation of METEOR Scores. METEOR scores range from 0 to 1, where higher scores indicate better alignment between the candidate and reference translations. The metric provides a more nuanced evaluation than BLEU by considering recall, synonyms, and paraphrases, making it particularly useful for assessing translation quality in a more human-like manner.

I.2 Perplexity (PPL)

Perplexity is a metric commonly used to evaluate the performance of language models in natural language processing (NLP). It measures how well a probabilistic model predicts a sample and is often used to compare different language models.

Definition of Perplexity. Perplexity is defined as the exponentiated average negative log-likelihood of a sequence of words. For a language model, it is a measure of how surprised the model is by the test data, with lower perplexity indicating a better model. Formally, for a given sequence of words , the perplexity () is given by:

| (8) |

Calculation of Perplexity. The calculation involves the following steps:

-

1.

Log-Likelihood: Calculate the log-likelihood of each word in the sequence given the previous words.

-

2.

Average Log-Likelihood: Compute the average of these log-likelihoods over the entire sequence.

-

3.

Exponentiation: Exponentiate the negative average log-likelihood to obtain the perplexity.

Given a language model that assigns a probability to each word in the sequence, the perplexity is calculated as:

| (9) |

Alternatively, if the cross-entropy of the model on the sequence is known, the perplexity can be computed as:

| (10) |

where the cross-entropy is given by:

| (11) |

Interpreting Perplexity Scores. Perplexity scores provide an intuitive measure of a language model’s performance:

-

•

Lower Perplexity: Indicates that the model is better at predicting the test data, implying higher quality and accuracy.

-

•

Higher Perplexity: Suggests that the model struggles to predict the test data, indicating lower quality.

For example, if a language model assigns high probabilities to the actual words in the sequence, it will have a lower perplexity, reflecting better performance. Conversely, if the model assigns low probabilities, the perplexity will be higher, indicating poorer performance.

I.3 Typical-Token Ratio (TTR)

Type-Token Ratio (TTR) is a measure used in linguistics and natural language processing (NLP) to assess the lexical diversity of a text. It is calculated by dividing the number of unique words (types) by the total number of words (tokens) in a given text. A higher TTR indicates greater lexical diversity.

Definition of Type-Token Ratio. Formally, let be the number of unique words (types) and be the total number of words (tokens) in a text. The Type-Token Ratio (TTR) is defined as:

| (12) |

Calculation of Type-Token Ratio. The calculation involves the following steps:

-

1.