Signal Temporal Logic Meets Convex-Concave Programming:

A Structure-Exploiting SQP Algorithm for STL Specifications

Abstract

This study considers the control problem with signal temporal logic (STL) specifications. Prior works have adopted smoothing techniques to address this problem within a feasible time frame and solve the problem by applying sequential quadratic programming (SQP) methods naively. However, one of the drawbacks of this approach is that solutions can easily become trapped in local minima that do not satisfy the specification. In this study, we propose a new optimization method, termed CCP-based SQP, based on the convex-concave procedure (CCP). Our framework includes a new robustness decomposition method that decomposes the robustness function into a set of constraints, resulting in a form of difference of convex (DC) program that can be solved efficiently. We solve this DC program sequentially as a quadratic program by only approximating the disjunctive parts of the specifications. Our experimental results demonstrate that our method has a superior performance compared to the state-of-the-art SQP methods in terms of both robustness and computational time.

I Introduction

As technology becomes more and more powerful, autonomous systems––such as drones and self-driving cars––are required to execute increasingly complex control tasks. Signal temporal logic (STL) has been progressively used as a specification language for these complex robotic tasks in various situations, due to their expressiveness and closeness to natural language [1]. However, the resulting optimization problem is a mixed integer program (MIP) [2, 3], which can easily be computationally intractable and scales poorly with the number of integer variables. To avoid this complexity, recent work formulates the problem as nonlinear programs (NLP) and solve it by sequential quadratic programming (SQP) methods [4, 5]. Although these methods can find a locally optimal solution in a short time compared to the MIP-based methods in general, the major difficulty of this formulation is that the solution can get stuck in a local minimum far from the global optimum as this method approximates the highly non-convex programs to quadratic programs. Moreover, the popular formulation makes this method more inefficient because all nonconvex functions are compressed into the objective function––which results in a coarse approximation by the naive SQP method.

In this study, we address the aforementioned challenges by leveraging the structure of STL control problems. We introduce a novel optimization framework, termed the convex-concave procedure based sequential quadratic programming (CCP-based SQP), which is a variant of the SQP method that solves quadratic programs sequentially. However, unlike traditional SQP methods, this framework approximates the original program at each iteration differently. It is based on the iterative optimization approach of CCP [6, 7]. Stated differently, only the concave portion is linearized at each iteration, allowing the method to retain the complete information of the convex parts. This is in contrast to naive SQP, which can only retain second-order derivative information at each iteration. As a result, our approach was able to synthesize a trajectory that is not only more robust (in terms of robustness score) but is also produced in a shorter time than the naive SQP methods.

The remainder of this paper is organized as follows. We first provide the notation, preliminaries, and problem formulation in Section II. Then, Section III provides our robustness decomposition method that transforms the optimization problem to an efficient DC form. Section IV provides some properties of the resulting CCP-based SQP framework. Section V demonstrates the effectiveness of our method over a state-of-the-art naive SQP method.

II Preliminaries

, and are defined as the fields of real and integer numbers, respectively. Given with , denotes a set of integers from to . True and false are denoted by and . denotes the identity matrix of size . denotes the zero matrix of size .

II-A System description

Throughout this paper, we consider a discrete-time linear system:

| (1) |

where and denote the state and input. We assume that and are a conjunction of polyhedra. Given an initial state and a sequence of control inputs , the sequence of states , which we call a trajectory, is uniquely generated.

II-B Specification by Signal temporal logic

In this study, we consider the specifications given in STL. STL is a predicate logic defined for specifying properties for continuous signals [1]. STL consists of predicates that can be obtained through a function as follows:

| (2) |

In this study, we consider affine functions for of the form , where and . In addition to standard boolean operators , in STL, temporal operators (always), (eventually), and (until) are also considered. Each temporal operator has an associated bounded time interval where . We assume that STL formulae are written in negation normal form (NNF) without loss of generality [8]. STL formulae in NNF have negations only in front of the predicates [9] and we omit such negations from STL syntax. We refer to this negation-free STL as STL, which is defined by [10]:

The notion of robustness is a useful semantic defined for STL formulae, which is a real-valued function that describes how much a trajectory satisfies an STL formula. Let denote a trajectory starting at timestep , i.e., . The trajectory length should be selected large enough to calculate the satisfaction of a formula (see e.g., [9]). Given an STL formula , we define the reversed robustness with respect to a trajectory and a time that can be obtained recursively according to the following quantitative semantics:

Definition 1

(reversed-robustness)

| (3a) | |||

| (3b) | |||

| (3c) | |||

| (3d) | |||

| (3e) | |||

| (3f) | |||

Note that we define the recursive semantics for the reversed robustness function, i.e., the minus of the original robustness defined in the literature (see e.g., [11, Definition 2]) as . We reverse the sign of the robustness functions of predicates , and we interchange the and operators. The reversed-robustness function is introduced as a notational simplification within our robustness decomposition framework. This approach removes unfavorable minus sign symbols in the decomposition and establishes a correspondence between convex parts in the optimization and conjunctive operators in the specification, and similarly for concave parts. It is worth mentioning that this modification does not change all the properties of the original robustness as we simply reverse the signs in the definition of the robustness function.

Example 1

This example deals with a specification called two-target specifications, which is borrowed from [12]. The formula of this specification is given as:

| (4) |

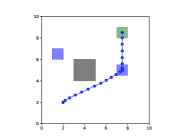

Figure 2 shows the graphical representation of the state trajectory satisfying the two-target specification with . The robot must remain in one of the two regions, either or , for a dwelling time of , and it must reach the goal region within the trajectory length of while avoiding the obstacle region .

Figure 2 shows a tree description of the two-target specification for reversed-robustness functions with and . While logically equivalent STL formulas can have different tree descriptions, we adopt the simplified form of the tree description, which is uniquely determined per STL formula (for details, see [12]). The tree nodes marked “LP” at the bottom represent linear predicates, while the top (outermost) node is marked “and.” The depth of this tree is four and the height of the predicates is either “and-or-and-predicate” or “and-or-predicate” regardless of the value of and . Note that although this figure represents the specification with for visibility, the depth and height of the tree do not change even when we vary these parameters because changing them will only increase the number of predicates in each depth.

II-C Smooth approximation

The robustness function that results from (3) is not differentiable due to the and operators, and thus, we cannot calculate the gradients of this function. Recent papers such as [4] smooth these functions in order to apply gradient-based methods. However, because smoothing both the and of the function leads to a problem that deviates from the original, it is preferable to minimize the parts that are smoothed. Our method requires to smooth only functions. This is because functions in the robustness are rather decomposed in our method as shown in Section III. The popular smooth approximation of the and operators is by using the log-sum-exp (LSE) function. This approximation for operators is given as:

| (5) |

where and is the smooth parameter. Now, we define a new robustness measure that will be used throughout this paper.

Definition 2

(Smoothed reversed-robustness) The smoothed reversed-robustness is defined by the quantitative semantics (3) where every operator is replaced by .

II-D Problem statement

This study considers the problem of synthesizing the trajectory that satisfies the STL specification in a maximally robust way. Given the system (1) and the specification and the initial state , the optimization problem is as follows:

| (6a) | ||||

| s.t. | (6b) | |||

| (6c) | ||||

| (6d) | ||||

Note that the reversed-robustness is replaced with the smoothed reversed-robustness in (6a) and (6d). Thus, although a feasible solution of the non-smoothed problem always satisfies the specification (4), a feasible solution of (6) does not necessarily satisfy the specification (as it under-approximates the non-smoothed robustness). However, the approximation error can be made arbitrarily small by making the smooth parameter sufficiently large [4, 5].

III A structure-aware decomposition of STL formulae

In this section, we present our robustness decomposition framework. The goal of our robustness decomposition is to transform the problem in order to apply a convex-concave procedure (CCP)-based algorithm.

III-A Convex-concave procedure (CCP)

The convex-concave procedure [6, 7] is a heuristic method for finding a local optimum of a class of optimization problems called the difference of convex (DC) programs, which is defined as follows:

Definition 3

(Difference of convex (DC) programs)

| (7a) | |||

| (7b) | |||

where is the vector of optimization variables and the functions and are convex for . The DC problem (7) can also include equality constraints

| (8) |

where and are convex. These equality constraints are expressed as the pair of inequality constraints

| (9) |

The basic idea of CCP is simple; at each iteration of the optimization of the DC program (7), we replace concave terms with a convex upper bound obtained by linearization and then solve the resulting convex problem using convex optimization algorithms. It can be shown that all of the iterates are feasible if the starting point lies within the feasible set and the objective value converges (possibly to negative infinity, see [6, 14] for proof).

Note that either of the functions in equality constraints (8) are also approximated by the CCP. This is because an equality constraint is transformed into two inequalities (9) and both convex functions can make either inequality concave. Therefore, we should avoid introducing equality constraints if possible.

III-B Robustness decomposition

Our decomposition framework alters the problem into an efficient form of a DC program considering the aforementioned features of CCP-based algorithms. This decomposition not only transforms the problem into a DC program but also enhances the efficiency of the overall algorithm. As a result, the resulting program approximates only the truly concave parts, and our approach results in a new SQP method.

To this end, we need to know whether each function in the program (6) is convex or concave. In this program, the robustness function is the only part whose curvature (convex or concave) cannot be determined. Thus, if the robustness function , which is a composite function consisting of and operators, can be rewritten as a combination of convex and concave functions, the entire program can be reduced to a DC program. The proposed framework achieves this by recursively decomposing the outermost operator of the robustness function until all the arguments of the functions are affine. As this study considers only affine functions for predicates , if the arguments of a function are all predicates, the convexity or concavity of the function can be determined by examining the operator preceding it, which may be either a or function. The recursive decomposition of a nonconvex robustness function into predicates corresponds to a decomposition of the robustness tree from the top to the bottom, in accordance with the tree structure.

Our method utilizes the idea of the epigraphic reformulation methods. This method transforms the convex (usually linear) cost function into the corresponding epigraph constraints. However, our approach is different from the normal epigraphic reformulation in the sense that we deal with nonconvex cost functions and we have to apply the reformulation recursively. Our robustness decomposition transforms (6) into a more efficient form of DC program. To demonstrate our main idea, for the remainder of this paper, we use the simplified two-target specification described in Example 1 as the specification .

As the robustness function is in the cost function, the first procedure is to decompose the function from the cost function into a set of constraints. As we consider the two-target specification, the outermost operator of the robustness function is , i.e., . We introduce a new variable , and reformulate the program as follows.

| (10a) | ||||

| s.t. | (10b) | |||

| (10c) | ||||

| (10d) | ||||

| (10e) | ||||

Note that the set of inequalities (10e) is equivalent to

| (11) |

although the original semantics of the variable is the equality constraint:

| (12) |

However, it is well known that this kind of transformation is known to be equivalent, particularly for the convex case. Although (10) is nonconvex, we can also show that both (6) and (10) are equivalent (see [15] and [16, Section 8.3.4.4]). The proof of this equivalence is provided in Appendix -A for a later explanation (in particular (17)).

As each for is a robustness function, each inequality in (10e) can be restated as a constraint in one of the following two forms, depending on whether the outermost operator is or :

| (13) | |||

| (14) |

where functions (resp. ) are robustness functions associated with (resp. ), which are the subformulas of . Note that these arguments of the and functions are still not necessarily predicates. We continue the following steps until all the arguments in each function become the predicates.

For inequality constraints of the form (13), we transform it as follows:

| (15) |

This is of course equivalent to (13).

For constraints of the forms (14), we first check whether each argument of the function is a predicate or not. If not, we replace such an argument with a new variable. Because we consider the simplified two-target specification, the outermost operator of the function is , i.e., . We first replace function in (14) by a new variable as

| (16) |

Then, we add the following constraints:

| (17) |

Although the inequality constraint (17) should be equality, this modification is justified by the similar discussion for (10e) even though the equivalence of this transformation is not immediately obvious (see the proof of Theorem 1).

All the inequalities in (15) and (17) are either in the form (13) or the form (14). Thus, subsequent to these steps, the constraints of the forms (13), (14) are decomposed into several constraints in the same forms again. We repeat this procedure until we reach the predicates, which is the bottom of the tree in Figure 2. When we finish the above recursive manipulations, (6) is transformed into an DC program as follows:

| (18a) | ||||

| s.t. | (18b) | |||

| (18c) | ||||

| (18d) | ||||

| (18j) | ||||

| (18p) | ||||

where all the formulas in (18j) and (18p) are predicates and and . Note that, with some abuse of notation, some variables in in constraints (18j),(18p) may represent and , and some arguments of the function in (18p) are not affine predicates but the new variables.

Important properties of this program (18) are stated below.

Proposition 1

Let denote a feasible solution for program (18). Then, holds.

Proof:

See Appendix -B. ∎

Proof:

Proof:

See Appendix -C. ∎

IV Properties of the subproblem

After we obtain the transformed program (18), we apply the CCP method described in Subsection III-A. We majorize the operator in (18p) at the current point of each iteration. In general, the resulting program becomes a convex program such as a second-order cone program (SOCP) and CCP has to solve the convex program sequentially. However, the subproblem we solve at each iteration is a linear program or a quadratic program, and not the general convex program as stated in the following theorem:

Theorem 2

The program (18) after the majorization by CCP is a linear program (LP). If we add the quadratic cost function, the program can be written as a convex quadratic program (QP).

Proof:

Let denote this LP in the following. It is worth mentioning that inequalities in (18j) are all affine inequalities as we remove all the operators in the end. As a corollary of the above theorem, our CCP-based method sequentially solves LP. If we add the quadratic cost function in (18a), our method becomes sequential quadratic programming (SQP).

Moreover, as all the constraints coming from the robustness decomposition framework in Section III do not have any equality constraints, the non-convex parts of (18) are only the inequality constraints (18p), which comes from functions of the robustness function.

Proposition 3

Hence, the CCP only approximates the truly concave portions of (18p) arising from the functions in the robustness function. It is noteworthy that enforcing the constraints, such as (10e) and (17), as equality constraints would require the CCP to approximate them as described in Subsection III-A, thereby leading to undesirable approximations. In contrast, the proposed method minimizes the number of parts requiring approximation, which enhances the algorithm’s performance.

Furthermore, the feasible region of the program is interior to the feasible region of the program (18).

Proposition 4

A feasible solution of the linear program is a feasible solution to the program (18).

Proof:

This is because the first-order approximations of CCP at each step are global over-estimators; i.e., where is the current point of variable . ∎

Finally, as a direct consequence of Propositions 1 and 4, the solution obtained by our CCP-based SQP method can always satisfy the specification, which is the most important property to guarantee safety.

Theorem 3

Let denote a feasible trajectory of the program . Then always satisfies the STL specification , i.e., in the limit .

Proof:

In practice, we select a sufficiently large to minimize the approximation error.

V Numerical Experiments

We illustrate the advantages of our proposed method through the two-target scenarios with varying time horizons ranging from to . All experiments were conducted on a 2020 MacBook Air with an Apple M1 processor and 8 GB of RAM111The source code is available at https://github.com/yotakayama/STLCCP.. The state and input are defined as , where is the horizontal position of the robot and is the vertical position. As for the system dynamics, we use a double integrator, i.e., a system (1) with the matrix

| (23) |

We implemented our algorithm in Python using CVXPY [17] as the interface to the optimizer. We chose the GUROBI(ver. 10)’s QP-solver with the default option. We took the penalty CCP [7] to solve the problem, which can admit the violation of constraints during the optimization procedures by penalizing the violation with variables. We introduced this penalty variable only for the concave constraints because the CCP only approximates such concave parts. Additionally, we added a small quadratic cost function. The weights on the penalty variables and the quadratic cost functions were 50.0 and 0.001, respectively. The resulting cost function (18a) is represented as:

| (24) |

where are positive semidefinite symmetric matrices, and for ( is the number of constraints in (18p)) is the penalty variable added to the r.h.s. of each concave constraints (18p) satisfying . The initial state is fixed as , and the bounds on the state and input variables are and , respectively.

We compared our method with a popular MIP-based method and a state-of-the-art NLP-based method. For the MIP-based approach, we formulated the problem as a MIP using the encoding framework in [11] and solved the problem with the GUROBI solver (GUROBI is often the fastest MIP solver). However, for the NLP-based method, we used the naive sequential quadratic programming (SQP) approach [18] using the smoothing method proposed in [5]. All the parameters of the two methods were the default settings.

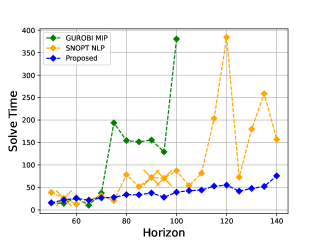

Figure 3 compares convergence time and robustness score of the three methods from to . The SNOPT-NLP method (orange) failed to output a feasible trajectory at 3 horizons () out of the 19 horizons, which are shown by the orange cross marker in the left plot, and their robustness scores have been cut off in the right plot as they are . In contrast, the proposed method (blue) failed only 2 times out of the 95 total trials (5 trials at each horizon). The GUROBI-MIP method (green) is truncated at the horizon due to the computation time s, which exceeds the range of the plotted region. Therefore, subsequent results for longer horizons are not displayed for the sake of visibility. The plots of our method are the averages of different trials varying the initial values of variables, whereas the other two plots are from one trial. All the scores in the right plot are calculated using the original robustness function.

In summary, our proposed method outperformed the state-of-the-art SNOPT-NLP method with respect to both the robustness score and the computation time. Specifically, as shown in the left plot, the computation time of our proposed method remained at a consistently low level even when the horizon increased. In contrast, the other two methods displayed volatility or were infeasibly slow, particularly above . Furthermore, the right plot demonstrates that our proposed method did not compromise the robustness score to shorten the computation time. On the contrary, our method consistently achieved a higher robustness score than the SNOPT-NLP method. At some horizons, our method achieved a score of , with the global optimum obtained by the MIP-based method. Notably, the plot of our method represents the average of all the trials, indicating that the feasible trajectories our method outputs are all the global optimum. In contrast, the SNOPT-NLP method sometimes generated failure trajectories with a robustness score of in some horizons (at ), and all of the robustness scores (orange) were lower than those of the proposed method (blue).

Finally, Figure 4 shows the trajectories generated by the proposed method with . Our method produced a range of satisfactory trajectories, each dependent on the initial values of the system’s variables.

VI Conclusion

In this study, we have introduced the CCP-based SQP algorithm for control problems involving STL specifications leveraging the inherent structures of STL. The subproblem of our proposed method is an efficient quadratic subprogram, achieved by approximating solely the concave constraints of the program that correspond to the disjunctive nodes of the STL tree.

Future work includes extending the proposed method to non-affine predicates, nonlinear systems, and non-smooth cases. While this paper focused on linear systems to establish an efficient SQP approach, the same methodology can be applied to tackle more complex cases.

References

- [1] G. E. Fainekos and G. J. Pappas, “Robustness of temporal logic specifications for continuous-time signals,” Theoretical Computer Science, vol. 410, no. 42, pp. 4262–4291, 2009.

- [2] S. Karaman and E. Frazzoli, “Vehicle routing problem with metric temporal logic specifications,” in IEEE Conference on Decision and Control, pp. 3953–3958, 2008.

- [3] V. Raman, A. Donzé, M. Maasoumy, R. M. Murray, A. Sangiovanni-Vincentelli, and S. A. Seshia, “Model predictive control with signal temporal logic specifications,” in IEEE Conference on Decision and Control, pp. 81–87, 2014.

- [4] Y. V. Pant, H. Abbas, and R. Mangharam, “Smooth operator: Control using the smooth robustness of temporal logic,” in IEEE Conference on Control Technology and Applications, pp. 1235–1240, 2017.

- [5] Y. Gilpin, V. Kurtz, and H. Lin, “A smooth robustness measure of signal temporal logic for symbolic control,” IEEE Control Systems Letters, vol. 5, no. 1, pp. 241–246, 2021.

- [6] T. Lipp and S. Boyd, “Variations and extension of the convex–concave procedure,” Optimization and Engineering, vol. 17, no. 2, pp. 263–287, 2016.

- [7] X. Shen, S. Diamond, Y. Gu, and S. Boyd, “Disciplined convex-concave programming,” in IEEE Conference on Decision and Control (CDC), pp. 1009–1014, 2016.

- [8] S. Sadraddini and C. Belta, “Formal synthesis of control strategies for positive monotone systems,” IEEE Transactions on Automatic Control, vol. 64, no. 2, pp. 480–495, 2019.

- [9] ——, “Robust temporal logic model predictive control,” in Annual Allerton Conference on Communication, Control, and Computing, pp. 772–779, 2015.

- [10] C. Baier and J.-P. Katoen, Principles of Model Checking. MIT Press, 2008.

- [11] C. Belta and S. Sadraddini, “Formal methods for control synthesis: An optimization perspective,” Annual Review of Control, Robotics, and Autonomous Systems, vol. 2, no. 1, pp. 115–140, 2019.

- [12] V. Kurtz and H. Lin, “Mixed-integer programming for signal temporal logic with fewer binary variables,” IEEE Control Systems Letters, vol. 6, pp. 2635–2640, 2022. Their source code is available at https://stlpy.readthedocs.io.

- [13] D. Sun, J. Chen, S. Mitra, and C. Fan, “Multi-agent motion planning from signal temporal logic specifications,” IEEE Robotics and Automation Letters, 2022.

- [14] B. K. Sriperumbudur and G. R. G. Lanckriet, “On the convergence of the concave-convex procedure,” in Advances in Neural Information Processing Systems, vol. 22, 2009.

- [15] L. Lindemann and D. V. Dimarogonas, “Robust control for signal temporal logic specifications using discrete average space robustness,” Automatica, vol. 101, pp. 377–387, 2019.

- [16] G. C. Calafiore and L. El Ghaoui, Optimization Models. Cambridge University Press, 2014.

- [17] S. Diamond and S. Boyd, “CVXPY: A python-embedded modeling language for convex optimization,” Journal of Machine Learning Research, vol. 17, no. 1, p. 2909â2913, 2016.

- [18] P. E. Gill, W. Murray, and M. A. Saunders, “SNOPT: An SQP algorithm for large-scale constrained optimization,” SIAM Review, vol. 47, pp. 99–131, 2005.

-A Equivalence between the two programs: (6) and (10)

We prove the following equivalences.

Proposition 5

Proof:

We demonstrate that if is optimal for (6), then is optimal for (10). Firstly, note that for any feasible solution of (6), is feasible for (10) as (10e) becomes an identity by this substitution . Thus, is also feasible for (10).

Suppose this solution is not optimal for (10). Then, there exists another feasible solution with a better objective, i.e., such that . Since this feasible solution also satisfies (10e), that is, , the inequality holds. Considering that is feasible for (6) (as one of the inequality conditions in (10e) must become the equality condition when the cost function takes a local minimum), the inequality contradicts the optimality of for (6). Therefore, is optimal for (10), and the global optimum is the same. ∎

-B Proof of Proposition 1

Proof:

We present a proof sketch. Let us first consider the initial replacement of the robustness function with a new variable . This transformation converts inequality (14) into two inequalities, (16) and (17). Note that (17) represents the inequality

| (25) |

although this inequality sign should be an equality if were a substituting variable. Nevertheless, due to the fact that is an increasing function with respect to each argument, we have the inequality

Combining this inequality with (16), we conclude that inequality (14) holds even after the transformation with . Similar analogies can be used for all subsequent transformations of non-convex robustness functions with new variables that follow the same pattern. Thus, ultimately, the original inequalities in (10e) hold. By this inequality and (18d), we have . ∎

From a tree perspective, this proof demonstrates that by replacing the robustness of any subformula with a new variable that is greater than or equal to the original value (as shown in (25)), the parent node’s robustness score, due to the monotonicity of the and functions, also becomes greater than or equal to the original value (as shown in (14)). This property extends further to the values of the grandparents and subsequent nodes, ultimately resulting in the cost function , representing the top-node value, being greater than the robustness value of .

-C Proof of Theorem 1

We first prove the following proposition, which states the converse of Proposition 2:

Proposition 6

Proof:

When all the inequalities in (18j) and (18p) becoming identity equations, the feasible region of (18) becomes identical to that of (6), i.e., where and denote the feasible region of (6) and (18) respectively, and the operator denotes the projection mapping by the substitution from to . Therefore, the feasible region of (6) is included in that of (18). ∎

We now show the following optimality equivalence:

Proof:

From Proposition 6, if is not the optimal solution for (18), there exists another feasible solution , with a better objective value, i.e., such that . As in the proof of Proposition 1, . Therefore, . However, as Proposition 2 shows that is also feasible for (6), this inequality contradicts the fact that is optimal for (6). Therefore, is optimal for (18). ∎