
Signal Processing over Multilayer Graphs: Theoretical Foundations and Practical Applications

Abstract

Signal processing over single-layer graphs has become a mainstream tool owing to its power in revealing obscure underlying structures within data signals. However, many real-life datasets and systems, including those in the Internet of Things (IoT), are characterized by more complex interactions among distinct entities. These may represent multi-level interactions that are hard to capture with a single-layer graph and are better characterized by multilayer graph connections. Such multilayer or multi-level data structures can be more naturally modeled by high-dimensional multilayer graphs (MLG). To generalize traditional graph signal processing (GSP) over multilayer graphs for analyzing multi-level signal features and their interactions, this work proposes a tensor-based framework of multilayer graph signal processing (M-GSP). Specifically, we introduce core concepts of M-GSP and study properties of the MLG spectrum space, followed by fundamentals of MLG-based filter design. To illustrate novel aspects of M-GSP, we further explore its link with traditional signal processing and GSP. We provide example applications to demonstrate the efficacy and benefits of applying multilayer graphs and M-GSP in practical scenarios.

Index Terms:

Multilayer graph, graph signal processing, tensor, data analysis

I Introduction

Geometric signal processing tools have found broad applications in data analysis to uncover obscure or hidden structures in complex datasets [1]. Various data sources, such as social networks, Internet of Things (IoT) intelligence, traffic flows and biological images, often feature complex structures that pose challenges to traditional signal processing tools. Recently, graph signal processing (GSP) has emerged as an effective tool built on the graph signal representation [2]. For a signal with $N$ samples, a graph of $N$ nodes can be formed to model their underlying interactions [3]. In GSP, a graph Fourier space is defined from the spectrum space of the representing matrix (adjacency/Laplacian) for signal processing tasks [4], such as denoising [5], resampling [6], and classification [7]. Generalizations of traditional GSP include signal processing over hypergraphs [8] and simplicial complexes [9], which are suitable for modeling high-degree multilateral node relationships.

Traditional graph signal processing tools generally describe signals as graph nodes connected by a single type of edge. However, real-life systems and datasets may feature multifaceted interactions [10]. For example, in a video dataset modeled by the spatial-temporal graph shown in Fig. 1, the nodes may exhibit different types of spatial connections at different temporal steps. Such multifaceted connections are difficult to model with single-layer graphs. To model multiple layers of signal connectivity, we explore a high-dimensional graph representation known as multilayer graphs (MLG) [11].

A multilayer graph, also known as a multilayer network, is a geometric model containing correlated layers with different structures and physical meanings, unlike traditional single-layer graphs [10]. A typical example is a smart grid consisting of two layers, as shown in Fig. 1: the power grid and the computation network. These two layers have different physical connectivity and rules [12]. Still, signal interactions across the multiple layers of an MLG can be strongly correlated. Thus, separate representations by multiple single-layer graphs may fail to capture such characteristics. For instance, in a network consisting of a physical power layer and a cyber layer, the failure of one layer could trigger the failure of the other [13]. One example was the power line damage caused by a storm on September 28th of 2003. Not only did it lead to the failure of several power stations, but it also disrupted communications as a result of the power station breakdowns, eventually affecting 56 million people in Europe [14].

The complexity and multi-level interactions of MLG cause the data to reside on irregular, high-dimensional structures, which do not directly lend themselves to standard GSP tools. For example, even though one can represent an MLG by a supra-graph unfolding all the layers [15], traditional GSP would treat interlayer and intralayer interactions equivalently in one spectrum space, without differentiating the spectra of interlayer and intralayer signal correlations. Recently, there has been growing interest in developing advanced GSP tools to process such multi-level structures. In [16], a joint time-vertex Fourier transform (JFT) is defined to process spatial-temporal graphs by applying the graph Fourier transform (GFT) and the discrete Fourier transform (DFT). Although JFT can process time-varying datasets, it does not accommodate the more general temporal (interlayer) connectivity of a generic multilayer graph. For flexible interlayer structures, the two-way GFT proposed in [17] defines different graph Fourier spaces for interlayer and intralayer connections, respectively. However, its spatial interactions all lie within a single graph structure, which limits its generalization to MLG. Extending the work of [17], the tensor-based multi-way graph signal processing framework (MWGSP) of [18] relies on product graphs. In MWGSP, separate factor graphs are constructed for each mode of a tensor-represented signal, and a joint spectrum combines the factor graph spectra. However, both JFT and MWGSP use the same intralayer connections for all layers. They do not admit layers with heterogeneous graph structures, which limits their ability to represent a general MLG.

Another challenge in MLG signal processing lies in the need for a suitable mathematical representation. Traditional methods start with connectivity matrices. For example, in [19], a supra-adjacency matrix is defined to represent all layers equivalently while ignoring the distinct nature of different layers. One can also represent each layer with an individual adjacency matrix [20]. However, such matrix-based representations mainly focus on the intralayer connections and lack a representation of interlayer interactions. A more natural and general approach may start with a tensor representation [11], which is particularly attractive for handling complex MLG analysis.

Our goal is to generalize graph signal processing to multilayer graphs in order to model, analyze, and process signals based on intralayer and interlayer signal interactions. To address the aforementioned challenges and to advance MLG processing, we present a novel tensor framework for multilayer graph signal processing (M-GSP). We summarize the main contributions of this work as follows:

- Leveraging the tensor representation of MLG, we introduce M-GSP from a spatial perspective, in which MLG signals and shifting of signals are defined;
- Taking a spectrum perspective, we introduce new concepts of spectrum space and spectrum transform for MLG. For interpretability of the spectrum space, we analyze the resulting MLG spectral properties and their distinction from existing GSP tools;
- We also present fundamentals of filter design in M-GSP, and suggest several practical applications based on the proposed framework, including those in IoT systems.

We organize the technical presentation as follows. Section II first summarizes preliminaries of traditional GSP and tensor analysis, before Section III presents representations of multilayer graphs within M-GSP. We then introduce the fundamentals of M-GSP spatial and spectrum analysis in Section IV and Section V, respectively. We next discuss MLG filter design in Section VI. We develop the physical insights and spectrum interpretation of M-GSP concepts in Section VII. With the newly proposed M-GSP framework, we provide several example applications to demonstrate its potential in Section VIII, before concluding this paper in Section IX.

II Preliminaries

II-A Overview of Graph Signal Processing

Signal processing on graphs [1, 3, 2] studies signals that are discrete in some dimensions by representing the irregular signal structure using a graph $\mathcal{G}=\{\mathcal{V},\mathbf{F}\}$, where $\mathcal{V}=\{v_1,v_2,\dots,v_N\}$ is a set of $N$ nodes, and $\mathbf{F}\in\mathbb{R}^{N\times N}$ is the representing matrix (e.g., adjacency/Laplacian) describing the geometric structure of the graph $\mathcal{G}$. Graph signals are the attributes of nodes that underlie the graph structure. A graph signal can be written as a vector $\mathbf{s}=[s_1,s_2,\dots,s_N]^{\mathrm{T}}\in\mathbb{R}^N$, where the superscript $(\cdot)^{\mathrm{T}}$ denotes matrix/vector transpose.

With a graph representation $\mathbf{F}$ and a signal vector $\mathbf{s}$, the basic graph filtering (shifting) is defined via

$$\mathbf{s}'=\mathbf{F}\mathbf{s}. \qquad (1)$$

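The graph spectrum space, also known as the graph Fourier space, is defined based on the eigenspace of the representing matrix. Let the eigen-decomposition of $\mathbf{F}$ be given by $\mathbf{F}=\mathbf{V}\boldsymbol{\Lambda}\mathbf{V}^{-1}$, where $\mathbf{V}$ is the matrix with the eigenvectors of $\mathbf{F}$ as columns, and the diagonal matrix $\boldsymbol{\Lambda}$ consists of the corresponding eigenvalues. The graph Fourier transform (GFT) is defined as

$$\hat{\mathbf{s}}=\mathbf{V}^{-1}\mathbf{s}, \qquad (2)$$

whereas the inverse GFT is given by $\mathbf{s}=\mathbf{V}\hat{\mathbf{s}}$.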
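A minimal NumPy sketch of Eqs. (1) and (2), assuming an undirected graph so that $\mathbf{F}$ is symmetric and its eigenvector matrix is orthonormal ($\mathbf{V}^{-1}=\mathbf{V}^{\mathrm T}$). The 4-node path graph, the signal values, and the helper names `gft`/`igft` are illustrative choices, not part of the framework.

```python
import numpy as np

def gft(V, s):
    """Forward GFT, Eq. (2): s_hat = V^{-1} s, with V^{-1} = V^T for orthonormal V."""
    return V.T @ s

def igft(V, s_hat):
    """Inverse GFT: s = V s_hat."""
    return V @ s_hat

# Adjacency matrix of an undirected 4-node path graph 1-2-3-4 (illustrative)
F = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
s = np.array([1.0, 2.0, 3.0, 4.0])

shifted = F @ s                  # graph shift, Eq. (1)
lam, V = np.linalg.eigh(F)       # F = V diag(lam) V^T with orthonormal eigenvectors
s_hat = gft(V, s)
s_rec = igft(V, s_hat)
print(shifted)                   # [2. 4. 6. 3.]
print(np.allclose(s_rec, s))     # True
```

For a directed graph, `np.linalg.eig` together with `np.linalg.inv(V)` would replace `eigh` and `V.T`, since the eigenvectors are then generally not orthonormal.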

II-B Introduction of Tensor Basics

Before introducing the fundamentals of M-GSP, we first review some basics of tensors that are useful for multilayer graph analysis. Tensors can be viewed as multi-dimensional arrays. The order of a tensor is the number of indices needed to label a component of that array [23]. For example, a third-order tensor has three indices. More specifically, a scalar is a zeroth-order tensor; a vector is a first-order tensor; a matrix is a second-order tensor; and an $N$-dimensional array is an $N$th-order tensor. For convenience, we use bold letters to represent tensors other than scalars, i.e., $\mathbf{A}\in\mathbb{R}^{I_1\times I_2\times\cdots\times I_N}$ represents an $N$th-order tensor with $I_k$ being the dimension of the $k$th order, and we use $A_{i_1 i_2\cdots i_N}$ to represent the entry of $\mathbf{A}$ at position $(i_1,i_2,\dots,i_N)$ with $1\le i_k\le I_k$ in this work. If $\mathbf{A}$ has a subscript $\mu$, we use $[\mathbf{A}_\mu]_{i_1 i_2\cdots i_N}$ to denote its entries.

We now start with some useful definitions and tensor operations for the M-GSP framework [23].

II-B1 Super-diagonal Tensor

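An $N$th-order tensor $\mathbf{A}\in\mathbb{R}^{I\times I\times\cdots\times I}$ is super-diagonal if its entries $A_{i_1 i_2\cdots i_N}\neq 0$ only for $i_1=i_2=\cdots=i_N$.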

II-B2 Symmetric Tensor

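A tensor is super-symmetric if its elements remain constant under any permutation of the indices. For example, a third-order tensor $\mathbf{A}\in\mathbb{R}^{I\times I\times I}$ is super-symmetric if $A_{ijk}=A_{ikj}=A_{jik}=A_{jki}=A_{kij}=A_{kji}$. In addition, tensors can be partially symmetric in two or more modes as well. For example, a third-order tensor $\mathbf{A}\in\mathbb{R}^{I\times I\times K}$ is symmetric in orders one and two if $A_{ijk}=A_{jik}$ for $1\le i,j\le I$ and $1\le k\le K$.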

II-B3 Tensor Outer Product

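The tensor outer product between a $P$th-order tensor $\mathbf{A}\in\mathbb{R}^{I_1\times\cdots\times I_P}$ with entries $A_{i_1\cdots i_P}$ and a $Q$th-order tensor $\mathbf{B}\in\mathbb{R}^{J_1\times\cdots\times J_Q}$ with entries $B_{j_1\cdots j_Q}$ is denoted by

$$\mathbf{C}=\mathbf{A}\circ\mathbf{B}. \qquad (3)$$

The result is a $(P+Q)$th-order tensor, whose entries are calculated by

$$C_{i_1\cdots i_P j_1\cdots j_Q}=A_{i_1\cdots i_P}\,B_{j_1\cdots j_Q}. \qquad (4)$$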

The tensor outer product is useful to construct a higher order tensor from several lower order tensors.
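The outer product in Eq. (4) maps directly onto NumPy's `np.multiply.outer`, which works for arrays of any order. This small sketch (with arbitrary example arrays) builds a third-order tensor from a matrix and a vector and checks one entry against Eq. (4).

```python
import numpy as np

def outer(A, B):
    """Tensor outer product, Eq. (4): C[i..., j...] = A[i...] * B[j...]."""
    return np.multiply.outer(A, B)

A = np.arange(6.0).reshape(2, 3)   # second-order tensor, shape (2, 3)
b = np.array([1.0, -1.0])          # first-order tensor, shape (2,)
C = outer(A, b)                    # third-order tensor, shape (2, 3, 2)

print(C.shape)                        # (2, 3, 2)
# Each entry is the product of one entry of A and one entry of b
print(C[1, 2, 1] == A[1, 2] * b[1])   # True
```

Chaining `outer` over three vectors gives a rank-one third-order tensor, the building block of the CP decomposition discussed below.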

II-B4 n-mode Product

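The $n$-mode product between a tensor $\mathbf{A}\in\mathbb{R}^{I_1\times\cdots\times I_N}$ and a matrix $\mathbf{U}\in\mathbb{R}^{J\times I_n}$ is denoted by

$$\mathbf{C}=\mathbf{A}\times_n\mathbf{U}\in\mathbb{R}^{I_1\times\cdots\times I_{n-1}\times J\times I_{n+1}\times\cdots\times I_N}. \qquad (5)$$

Each element in $\mathbf{C}$ is given by

$$C_{i_1\cdots i_{n-1}\,j\,i_{n+1}\cdots i_N}=\sum_{i_n=1}^{I_n}A_{i_1\cdots i_{n-1}i_n i_{n+1}\cdots i_N}\,U_{j i_n}. \qquad (6)$$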

Note that the $n$-mode product is a different operation from the matrix product.
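A minimal sketch of Eq. (6) using `np.tensordot`, assuming the 1-based mode index $n$ used in the text. The checks illustrate why the $n$-mode product is not an ordinary matrix product: for a matrix $\mathbf{A}$, $\mathbf{A}\times_1\mathbf{U}=\mathbf{U}\mathbf{A}$, whereas $\mathbf{A}\times_2\mathbf{W}=\mathbf{A}\mathbf{W}^{\mathrm T}$. The helper name `mode_n_product` is hypothetical.

```python
import numpy as np

def mode_n_product(A, U, n):
    """n-mode product A x_n U of Eq. (6), with 1-based mode index n.

    U has shape (J, I_n); the n-th dimension of A (size I_n) is replaced by J.
    """
    # Contract axis n-1 of A with axis 1 of U; the new axis of size J lands last ...
    C = np.tensordot(A, U, axes=([n - 1], [1]))
    # ... so move it back to position n-1
    return np.moveaxis(C, -1, n - 1)

rng = np.random.default_rng(0)
A = rng.standard_normal((3, 4))
U = rng.standard_normal((5, 3))
W = rng.standard_normal((2, 4))

print(np.allclose(mode_n_product(A, U, 1), U @ A))     # True
print(np.allclose(mode_n_product(A, W, 2), A @ W.T))   # True

X = rng.standard_normal((3, 4, 6))
print(mode_n_product(X, W, 2).shape)                   # (3, 2, 6)
```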

II-B5 Tensor Contraction

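In M-GSP, the contraction (inner product) between a fourth-order tensor $\mathbf{A}\in\mathbb{R}^{I_1\times I_2\times I_3\times I_4}$ and a matrix $\mathbf{B}\in\mathbb{R}^{I_3\times I_4}$ in the third and fourth orders is defined as

$$\mathbf{C}=\mathbf{A}\diamond\mathbf{B}\in\mathbb{R}^{I_1\times I_2}, \qquad (7)$$

where $C_{i_1 i_2}=\sum_{i_3=1}^{I_3}\sum_{i_4=1}^{I_4}A_{i_1 i_2 i_3 i_4}B_{i_3 i_4}$.

In addition, the contraction between two fourth-order tensors $\mathbf{A}\in\mathbb{R}^{I_1\times I_2\times I_3\times I_4}$ and $\mathbf{B}\in\mathbb{R}^{I_3\times I_4\times I_5\times I_6}$ is defined as

$$\mathbf{C}=\mathbf{A}\diamond\mathbf{B}\in\mathbb{R}^{I_1\times I_2\times I_5\times I_6}, \qquad (8)$$

whose entries are $C_{i_1 i_2 i_5 i_6}=\sum_{i_3=1}^{I_3}\sum_{i_4=1}^{I_4}A_{i_1 i_2 i_3 i_4}B_{i_3 i_4 i_5 i_6}$.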
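Both contractions reduce to one `np.tensordot` call that sums over the third and fourth indices of the first tensor and the first two indices of the second, assuming the index conventions $C_{i_1i_2}=\sum_{i_3,i_4}A_{i_1i_2i_3i_4}B_{i_3i_4}$ and $C_{i_1i_2i_5i_6}=\sum_{i_3,i_4}A_{i_1i_2i_3i_4}B_{i_3i_4i_5i_6}$. The sketch below, with random tensors of arbitrary size, cross-checks the result against explicit `np.einsum` index sums; the helper name `contract` is hypothetical.

```python
import numpy as np

def contract(A, B):
    """Contract orders 3 and 4 of a fourth-order tensor A with orders 1 and 2 of B.

    B may be a matrix (the result is a matrix, Eq. (7)) or a fourth-order
    tensor (the result is a fourth-order tensor, Eq. (8)).
    """
    return np.tensordot(A, B, axes=([2, 3], [0, 1]))

rng = np.random.default_rng(1)
A = rng.standard_normal((2, 3, 4, 5))
S = rng.standard_normal((4, 5))
B = rng.standard_normal((4, 5, 2, 3))

C = contract(A, S)   # shape (2, 3)
D = contract(A, B)   # shape (2, 3, 2, 3)

# Cross-check against explicit index sums
print(np.allclose(C, np.einsum('abcd,cd->ab', A, S)))       # True
print(np.allclose(D, np.einsum('abcd,cdef->abef', A, B)))   # True
```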

II-B6 Tensor Decomposition

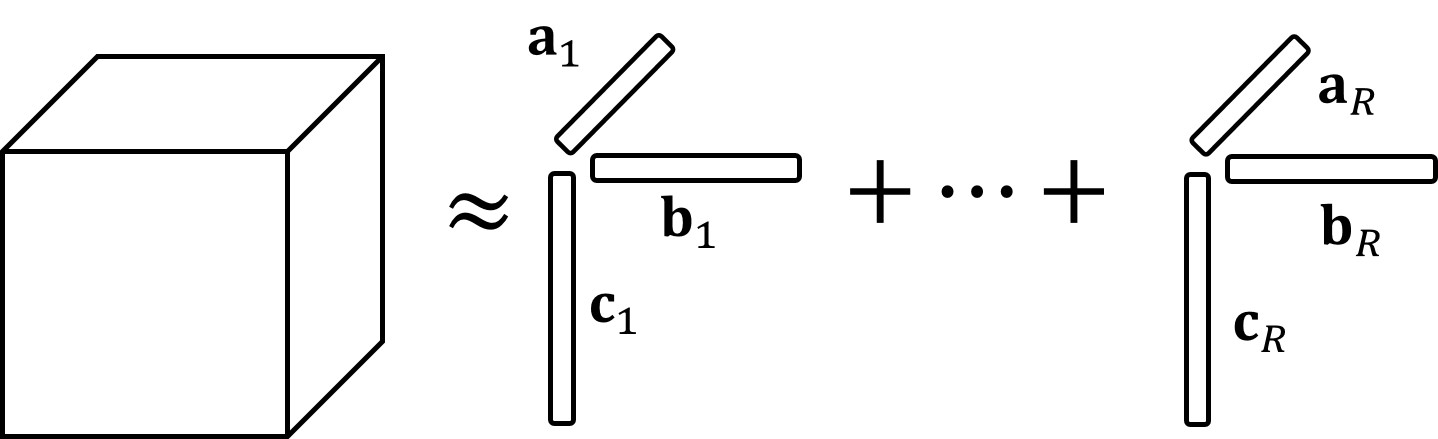

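Tensor decompositions are useful tools to extract the underlying information of tensors. In particular, the CANDECOMP/PARAFAC (CP) decomposition expresses a tensor as a sum of rank-one tensors, each being the outer product of vectors [23, 24]. For example, a third-order tensor $\mathbf{X}\in\mathbb{R}^{I\times J\times K}$ is decomposed by CP decomposition into

$$\mathbf{X}\approx\sum_{r=1}^{R}\mathbf{a}_r\circ\mathbf{b}_r\circ\mathbf{c}_r, \qquad (9)$$

where $\mathbf{a}_r\in\mathbb{R}^I$, $\mathbf{b}_r\in\mathbb{R}^J$, $\mathbf{c}_r\in\mathbb{R}^K$, and the integer $R$ is the number of rank-one components; the smallest $R$ for which Eq. (9) holds with equality is the rank of $\mathbf{X}$. We illustrate CP decomposition for a third-order tensor in Fig. 2(a), which can be viewed as a factorization of the tensor.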

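Another important decomposition is the Tucker decomposition, which is a form of higher-order PCA. More specifically, Tucker decomposition decomposes a tensor into a core tensor multiplied by a matrix along each mode [23]. Given a core tensor $\mathbf{G}\in\mathbb{R}^{P\times Q\times R}$, the Tucker decomposition of a third-order tensor $\mathbf{X}\in\mathbb{R}^{I\times J\times K}$ is

$$\mathbf{X}\approx\mathbf{G}\times_1\mathbf{A}\times_2\mathbf{B}\times_3\mathbf{C}, \qquad (10)$$

where $\mathbf{A}\in\mathbb{R}^{I\times P}$, $\mathbf{B}\in\mathbb{R}^{J\times Q}$, and $\mathbf{C}\in\mathbb{R}^{K\times R}$. The Tucker decomposition for a third-order tensor is illustrated in Fig. 2(b). Tucker decomposition reduces to CP decomposition if the core tensor is restricted to be super-diagonal (with $P=Q=R$).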
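A minimal sketch of Eqs. (9) and (10): it rebuilds a third-order tensor from a Tucker core and factor matrices through successive $n$-mode products, and checks the remark above that a super-diagonal core turns the Tucker form into a CP sum of rank-one terms. The sizes and random factors are arbitrary, and computing either decomposition from data (e.g., by alternating least squares) is not shown.

```python
import numpy as np

def mode_n_product(X, U, n):
    """n-mode product X x_n U with 1-based n, as in Eq. (6)."""
    return np.moveaxis(np.tensordot(X, U, axes=([n - 1], [1])), -1, n - 1)

def tucker_to_tensor(G, A, B, C):
    """Eq. (10): X = G x_1 A x_2 B x_3 C."""
    return mode_n_product(mode_n_product(mode_n_product(G, A, 1), B, 2), C, 3)

def cp_to_tensor(A, B, C):
    """Eq. (9): X = sum_r a_r o b_r o c_r, with a_r, b_r, c_r the r-th columns."""
    return np.einsum('ir,jr,kr->ijk', A, B, C)

rng = np.random.default_rng(2)
I, J, K, R = 4, 5, 6, 3
A = rng.standard_normal((I, R))
B = rng.standard_normal((J, R))
C = rng.standard_normal((K, R))

# Super-diagonal core with ones on the diagonal: G[r, r, r] = 1
G = np.zeros((R, R, R))
G[np.arange(R), np.arange(R), np.arange(R)] = 1.0

X_tucker = tucker_to_tensor(G, A, B, C)
X_cp = cp_to_tensor(A, B, C)
print(X_tucker.shape)                # (4, 5, 6)
print(np.allclose(X_tucker, X_cp))   # True
```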

III Representations of MLG in M-GSP

In this section, we introduce the fundamental representations of MLG within the M-GSP framework.

III-A Multilayer Graphs

A multilayer graph, also referred to as a multilayer network, is an important geometric model in complex systems [10]. In this work, we refrain from using the “network” terminology because of its diverse meanings in various fields, ranging from communication networking to deep learning. From here onward, unless otherwise specified, we shall use the less ambiguous term multilayer graph.

We first provide definitions of multilayer graphs (MLG).

Definition 1 (Multilayer Graph).

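A multilayer graph with $|\mathcal{V}|$ nodes and $M$ layers is defined as $\mathcal{M}=\{\mathcal{V},\mathcal{L},\mathbf{F}\}$, where $\mathcal{V}$ is the set of nodes, $\mathcal{L}=\{l_1,\dots,l_M\}$ denotes the set of layers with each layer $l_\alpha$ being a subset of $\mathcal{V}$, whereas $\mathbf{F}$ is the algebraic representation describing node interactions.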

Note that we mainly focus on the layer-disjoint multilayer graph [10], where each node exists in exactly one layer, since layers denote different phenomena. For example, in a smart grid, a station with functions in both the power grid and the communication network is usually modeled as two nodes in a two-layer graph for network analysis [28].

In multilayer graphs, edges connect nodes in the same layer (intralayer edges) or nodes of different layers (interlayer edges) [29]. There are two main types of multilayer graphs: multiplex graphs and interconnected graphs [30]. In a multiplex graph, each layer has the same number of nodes, and each node only connects with its 1-to-1 matching counterparts in other layers to form interlayer connections. Typically, multiplex graphs characterize different types of interactions among the same (or a similar) set of physical entities. For example, the spatial-temporal connections among a set of nodes can be intuitively modeled as a multiplex graph [30]. In interconnected graphs, each layer may have a different number of nodes without a 1-to-1 counterpart, and the interlayer connections can be more flexible. Examples of a three-layer multiplex graph and a three-layer interconnected graph are shown in Fig. 3, where different colors represent different layers, solid lines represent intralayer connections, and dashed lines indicate interlayer connections.

III-B Algebraic Representation

To capture the high-dimensional ‘multilayer’ interactions between different nodes, we use tensors as the algebraic representation of MLG in the proposed M-GSP framework [11].

III-B1 MLG with same number of nodes in each layer

To better interpret the tensor representation of a multilayer graph, we start from a simpler type of MLG, in which each layer contains the same number of nodes. A multilayer graph with $M$ layers and $N$ nodes in each layer, i.e., $|l_\alpha|=N$ for $\alpha=1,\dots,M$, can be interpreted as embedding the interactions among a set of ‘entities’ (not nodes) into a set of layers. The nodes in different layers can be viewed as projections of the entities. For example, a video dataset can be modeled by embedding the spatial connections between objects (entities) into different temporal frames (layers).

Mathematically, the process of embedding (projecting) entities can be viewed as a tensor product, and graph connections can be represented by tensors [11]. For convenience, we use Greek letters to indicate each layer and Latin letters to indicate each interpretable ‘entity’ with a corresponding node in each layer. Given a set of entities $\{x_1,\dots,x_N\}$, one can construct a vector $\mathbf{x}_i\in\mathbb{R}^N$ whose sole nonzero element is its $i$th element (equal to 1) to characterize each entity $x_i$. Thus, interactions of two entities can be represented by a second-order tensor $\mathbf{A}_X=\sum_{i,j=1}^{N}a_{ij}\,\mathbf{x}_i\circ\mathbf{x}_j\in\mathbb{R}^{N\times N}$, where $a_{ij}$ is the intensity of the relationship between entities $i$ and $j$. Similarly, given a set of layers $\{l_1,\dots,l_M\}$, a vector $\mathbf{m}_\alpha\in\mathbb{R}^M$ can capture the properties of layer $\alpha$, and the connectivity between two layers can be represented by $\mathbf{A}_L=\sum_{\alpha,\beta=1}^{M}b_{\alpha\beta}\,\mathbf{m}_\alpha\circ\mathbf{m}_\beta\in\mathbb{R}^{M\times M}$. Following this approach, connectivity between the projected nodes of the entities in the layers can be represented by a fourth-order tensor

$$\mathbf{A}=\sum_{\alpha,\beta=1}^{M}\sum_{i,j=1}^{N}w_{\alpha i\beta j}\,\mathbf{m}_\alpha\circ\mathbf{x}_i\circ\mathbf{m}_\beta\circ\mathbf{x}_j\in\mathbb{R}^{M\times N\times M\times N}, \qquad (11)$$

where $w_{\alpha i\beta j}$ is the weight of the connection between entity $i$'s projected node on layer $\alpha$ and entity $j$'s projected node on layer $\beta$. In particular, this fourth-order tensor becomes the adjacency tensor of the multilayer graph, where each entry $A_{\alpha i\beta j}=w_{\alpha i\beta j}$ characterizes the edge between entity $i$'s projected node on layer $\alpha$ and entity $j$'s projected node on layer $\beta$. Thus, similar to the adjacency matrix, whose 2-D entries indicate whether and how two nodes are pairwise connected by a simple edge in normal graphs, we adopt an adjacency tensor to represent a multilayer graph with the same number of nodes in each layer as follows.

Definition 2 (Adjacency Tensor).

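A multilayer graph $\mathcal{M}$, with $M$ layers and $N$ nodes in each layer $l_\alpha$, can be represented by a fourth-order adjacency tensor $\mathbf{A}\in\mathbb{R}^{M\times N\times M\times N}$ defined as

$$\mathbf{A}=(A_{\alpha i\beta j}),\quad 1\le\alpha,\beta\le M,\ 1\le i,j\le N. \qquad (12)$$

Here, each entry $A_{\alpha i\beta j}$ of the adjacency tensor indicates the intensity of the edge between entity $i$'s projected node on layer $\alpha$ and entity $j$'s projected node on layer $\beta$.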

Clearly, for a single-layer graph ($M=1$), $\mathbf{m}_\alpha$ reduces to a scalar and the fourth-order tensor degenerates to the adjacency matrix of a normal graph. Similar to the entry $A_{ij}$ of an adjacency matrix, which indicates the direction from node $j$ to node $i$, the entry $A_{\alpha i\beta j}$ indicates the direction from entity $j$'s projected node on layer $\beta$ to entity $i$'s projected node on layer $\alpha$ in an MLG. Note that the vectors $\mathbf{x}_i$ and $\mathbf{m}_\alpha$ are not eigenvectors of the adjacency tensor. They are merely vectors characterizing features of the entities and layers, respectively. We shall discuss the MLG-based spectrum space in Section V.

Given an adjacency tensor, we can define the Laplacian tensor of the multilayer graph similarly to that of a single-layer graph. Denote the degree (or multi-strength) of entity $i$'s projected node on layer $\alpha$ by $d_{\alpha i}=\sum_{\beta=1}^{M}\sum_{j=1}^{N}A_{\alpha i\beta j}$, which sums the weights of edges of different natures (inter- and intralayer edges). The degree tensor $\mathbf{D}\in\mathbb{R}^{M\times N\times M\times N}$ is defined as a diagonal tensor with entries $D_{\alpha i\alpha i}=d_{\alpha i}$ for $1\le\alpha\le M$ and $1\le i\le N$, whereas its other entries are zero. The Laplacian tensor can then be defined as follows.

Definition 3 (Laplacian Tensor).

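A multilayer graph $\mathcal{M}$, with $M$ layers and $N$ nodes in each layer $l_\alpha$, can be represented by a fourth-order Laplacian tensor $\mathbf{L}\in\mathbb{R}^{M\times N\times M\times N}$ defined as $\mathbf{L}=\mathbf{D}-\mathbf{A}$, where $\mathbf{A}$ is the adjacency tensor and $\mathbf{D}$ is the degree tensor.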

The Laplacian tensor can be useful for analyzing propagation processes such as diffusion or random walks [11]. Both adjacency and Laplacian tensors are important algebraic representations of the MLG, and the choice depends on the dataset and the user's objectives. For convenience, we use a tensor $\mathbf{F}\in\mathbb{R}^{M\times N\times M\times N}$ as a general representation of a given MLG $\mathcal{M}$. As the adjacency tensor is more general, the representing tensor $\mathbf{F}$ refers to the adjacency tensor in this paper unless specified otherwise.
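To make Definitions 2 and 3 concrete, here is a minimal sketch that fills an adjacency tensor $\mathbf{A}\in\mathbb{R}^{M\times N\times M\times N}$ from an undirected edge list, then forms the degree tensor and the Laplacian tensor $\mathbf{L}=\mathbf{D}-\mathbf{A}$. The edge-list format `((alpha, i), (beta, j), weight)` with 0-based indices, the example graph, and the helper names are assumptions made only for illustration.

```python
import numpy as np

def adjacency_tensor(M, N, edges):
    """Fourth-order adjacency tensor A[alpha, i, beta, j] of an undirected MLG.

    edges: iterable of ((alpha, i), (beta, j), w) with 0-based layer/entity indices.
    """
    A = np.zeros((M, N, M, N))
    for (a, i), (b, j), w in edges:
        A[a, i, b, j] = w
        A[b, j, a, i] = w   # undirected: same weight in both directions
    return A

def laplacian_tensor(A):
    """Laplacian tensor L = D - A (Definition 3)."""
    M, N = A.shape[:2]
    d = A.sum(axis=(2, 3))   # degree d[alpha, i]: sums intra- and interlayer weights
    D = np.zeros_like(A)
    for a in range(M):
        for i in range(N):
            D[a, i, a, i] = d[a, i]
    return D - A

# Two layers, three entities: intralayer edges plus interlayer links between counterparts
edges = [((0, 0), (0, 1), 1.0), ((0, 1), (0, 2), 1.0),   # layer 0
         ((1, 0), (1, 2), 1.0),                           # layer 1
         ((0, 0), (1, 0), 0.5), ((0, 1), (1, 1), 0.5), ((0, 2), (1, 2), 0.5)]
A = adjacency_tensor(2, 3, edges)
L = laplacian_tensor(A)
print(A.sum(axis=(2, 3)))   # node degrees d[alpha, i]
```

Each "row" of the Laplacian tensor, $\sum_{\beta,j}L_{\alpha i\beta j}$, sums to zero, as for the matrix Laplacian.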

III-B2 Representation of General MLG

Representing a general multilayer graph with different numbers of nodes in each layer remains a challenge if one aims to distinguish interlayer and intralayer connection features. In JFT [16] and MWGSP [18], all layers must reside on the same underlying graph structure, which restricts every layer to the same number of nodes. Similarly, M-GSP needs a reconstruction to represent a general MLG by the fourth-order tensor. However, unlike JFT and MWGSP, M-GSP allows different layers to have heterogeneous graph structures, which provides additional flexibility.

There are mainly two ways to reconstruct: 1) add isolated nodes to layers with $|l_\alpha|<N$ to reach $N$ nodes [12], and set the augmented signals to zero; and 2) aggregate several nodes into super-nodes for layers with $|l_\alpha|>N$ [31], and merge the corresponding signals. Since isolated nodes do not interact with any other nodes, they do not change the topological structure of the original multilayer architecture in the sense of signal shifting, although the corresponding spectrum space can still change. The effectiveness of the aggregation method depends on how efficiently redundant or similar nodes can be aggregated. Different methods can be applied depending on specific tasks. For example, if one wants to explore cascading failure in a physical system, the method based on isolated nodes is more suitable. For applications such as video analysis, where pixels can be intuitively merged into superpixels, the aggregation method can also be practical.
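The isolated-node reconstruction can be sketched as follows, assuming the general MLG is given as a dictionary `blocks[(alpha, beta)]` of weight matrices of size $|l_\alpha|\times|l_\beta|$ (a hypothetical input format; intralayer blocks when $\alpha=\beta$). Each layer is zero-padded to $N=\max_\alpha|l_\alpha|$ nodes, and padded signal entries are set to zero. The final check illustrates the claim above: shifting with the padded tensor, $s'_{\alpha i}=\sum_{\beta,j}F_{\alpha i\beta j}s_{\beta j}$, reproduces the shift on the original unpadded supra-adjacency matrix for the original nodes.

```python
import numpy as np

def pad_to_tensor(sizes, blocks):
    """Embed a general MLG into an M x N x M x N tensor by adding isolated nodes.

    sizes:  number of nodes |l_alpha| in each layer
    blocks: dict (alpha, beta) -> array of shape (sizes[alpha], sizes[beta])
    """
    M, N = len(sizes), max(sizes)
    F = np.zeros((M, N, M, N))
    for (a, b), W in blocks.items():
        F[a, :sizes[a], b, :sizes[b]] = W   # padded rows/columns stay zero (isolated)
    return F

def pad_signal(sizes, signals):
    """Zero-pad per-layer signal vectors into an M x N tensor signal."""
    M, N = len(sizes), max(sizes)
    s = np.zeros((M, N))
    for a, x in enumerate(signals):
        s[a, :sizes[a]] = x
    return s

sizes = [3, 2]   # layer 0 has 3 nodes, layer 1 has 2
blocks = {(0, 0): np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], float),
          (1, 1): np.array([[0, 1], [1, 0]], float),
          (0, 1): np.array([[1, 0], [0, 1], [0, 0]], float)}
blocks[(1, 0)] = blocks[(0, 1)].T   # undirected interlayer links
F = pad_to_tensor(sizes, blocks)
s = pad_signal(sizes, [np.array([1.0, 2.0, 3.0]), np.array([4.0, 5.0])])

shifted = np.einsum('aibj,bj->ai', F, s)   # tensor shift on the padded MLG
# Same shift on the unpadded supra-adjacency matrix
supra = np.block([[blocks[(0, 0)], blocks[(0, 1)]],
                  [blocks[(1, 0)], blocks[(1, 1)]]])
ref = supra @ np.concatenate([[1.0, 2.0, 3.0], [4.0, 5.0]])
print(np.allclose(np.concatenate([shifted[0, :3], shifted[1, :2]]), ref))   # True
```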

In addition, although the fourth-order representing tensor can be viewed as the projection of several entities into different layers, as in Eq. (11), the entities and layers can be virtual rather than physical, serving to capture the underlying structures of the datasets. The information within the multilayer graph, together with the definitions of the underlying virtual entities and layers, should depend only on the structure of the multilayer graph. We will illustrate this further in Section VII-B.

III-C Flattening and Analysis

In this part, we introduce the flattening of the multilayer graph, which can simplify some operations in the tensor-based M-GSP. For a multilayer graph with $M$ layers and $N$ nodes in each layer, its fourth-order representing tensor $\mathbf{F}\in\mathbb{R}^{M\times N\times M\times N}$ can be flattened into a second-order matrix that captures the overall edge weights. There are two main flattening schemes, organized by layers and by entities, respectively:

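- Layer-wise Flattening: The representing tensor $\mathbf{F}$ can be flattened into $\mathbf{F}_{MN}\in\mathbb{R}^{MN\times MN}$ with each element

$$[\mathbf{F}_{MN}]_{N(\alpha-1)+i,\;N(\beta-1)+j}=F_{\alpha i\beta j}. \qquad (13)$$

- Entity-wise Flattening: The representing tensor $\mathbf{F}$ can be flattened into $\mathbf{F}_{NM}\in\mathbb{R}^{NM\times NM}$ with each element

$$[\mathbf{F}_{NM}]_{M(i-1)+\alpha,\;M(j-1)+\beta}=F_{\alpha i\beta j}. \qquad (14)$$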

These two flattening methods provide two ways to interpret the graph structure. In the first method, the flattened multilayer graph has $M$ clusters with $N$ nodes in each cluster. The nodes in the same cluster have the same function (belong to the same layer). In the second method, the flattened graph has $N$ clusters with $M$ nodes in each cluster. Here, the nodes in the same cluster come from the same entity. Examples of the tensor flattening of a two-layer graph are shown in Fig. 4. From the examples, we can see that, in the layer-wise flattening, the diagonal blocks of $\mathbf{F}_{MN}$ are the intralayer connections of each layer and the other blocks describe the interlayer connections; in the entity-wise flattening, the diagonal blocks of $\mathbf{F}_{NM}$ describe the ‘intra-entity’ connections and the other elements represent the ‘inter-entity’ connections. Although the two flattening schemes describe the same MLG with different indexings of the vertices, they are still helpful for analyzing the MLG spectrum space.
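With NumPy's default row-major (C) ordering, the layer-wise flattening is a plain `reshape`, and the entity-wise flattening first swaps the layer and entity axes. This is a minimal sketch on a random tensor; it uses 0-based indices, so the layer-wise row index of entity $i$ on layer $\alpha$ is `N*alpha + i` and the entity-wise one is `M*i + alpha` (the 1-based forms are $N(\alpha-1)+i$ and $M(i-1)+\alpha$). The helper names are hypothetical.

```python
import numpy as np

def flatten_layerwise(F):
    """F_MN: row/column index N*alpha + i (0-based), i.e., layer-major ordering."""
    M, N = F.shape[:2]
    return F.reshape(M * N, M * N)

def flatten_entitywise(F):
    """F_NM: row/column index M*i + alpha (0-based), i.e., entity-major ordering."""
    M, N = F.shape[:2]
    return F.transpose(1, 0, 3, 2).reshape(N * M, N * M)

rng = np.random.default_rng(3)
M, N = 2, 3
F = rng.random((M, N, M, N))
F_MN = flatten_layerwise(F)
F_NM = flatten_entitywise(F)

# The first diagonal block of F_MN is the intralayer matrix F[0, :, 0, :]
print(np.allclose(F_MN[:N, :N], F[0, :, 0, :]))                 # True
# Spot-check one entry: F_NM[M*i + alpha, M*j + beta] == F[alpha, i, beta, j]
print(np.isclose(F_NM[M * 2 + 1, M * 0 + 0], F[1, 2, 0, 0]))    # True
```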

IV Spatial Definitions in M-GSP

Based on the tensor representation, we now define signals and signal shifting over multilayer graphs. In GSP, each signal sample is the attribute of one node. Typically, a graph signal can be represented by an $N$-length vector for a graph with $N$ nodes. Recall that in traditional GSP [3], basic signal shifting is defined with the representing matrix as the shifting filter. Thus, in M-GSP, we also define signals and signal shifting based on the filter implementation.

In M-GSP, each signal sample is also associated with one node in the multilayer graph. Intuitively, if there are $MN$ nodes, there are $MN$ signal samples in total. Similar to GSP, we use the representing (adjacency/Laplacian) tensor as the basic MLG filter. Since the input signal and the output signal of the MLG filter should have consistent tensor sizes, we define a special form of M-GSP signals that works with the representing tensor as follows.

Definition 4 (Signals over Multilayer Graphs).

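For a multilayer graph $\mathcal{M}$, with $M$ layers and $N$ nodes in each layer $l_\alpha$, a multilayer graph signal is defined as a second-order tensor

$$\mathbf{s}=(s_{\alpha i})\in\mathbb{R}^{M\times N},\quad 1\le\alpha\le M,\ 1\le i\le N, \qquad (15)$$

where the entry $s_{\alpha i}$ is the signal sample at the projected node of entity $i$ on layer $\alpha$.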

Note that if the multilayer graph degenerates to a single-layer graph with $M=1$, the multilayer graph signal becomes an $N$-length vector, as in traditional GSP. Similar to the representing tensor, the tensor signal can also be flattened into a vector in $\mathbb{R}^{MN}$:

- Layer-wise flattening: $\mathbf{s}_{MN}\in\mathbb{R}^{MN}$, whose entries are calculated as $[\mathbf{s}_{MN}]_{N(\alpha-1)+i}=s_{\alpha i}$.
- Entity-wise flattening: $\mathbf{s}_{NM}\in\mathbb{R}^{NM}$, whose entries are calculated as $[\mathbf{s}_{NM}]_{M(i-1)+\alpha}=s_{\alpha i}$.

Given the definitions of multilayer graph signals and filters, we now introduce signal shifting in M-GSP. In traditional GSP, signal shifting is defined as the product of the representing matrix and the signal vector. Similarly, we define shifting in the multilayer graph based on the contraction (inner product) between the representing tensor and tensor signals.

Definition 5 (Signal Shifting over Multilayer Graphs).

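Given the representing tensor $\mathbf{F}\in\mathbb{R}^{M\times N\times M\times N}$ and the tensor signal $\mathbf{s}\in\mathbb{R}^{M\times N}$ defined over a multilayer graph $\mathcal{M}$, the signal shifting is defined as the contraction (inner product) between $\mathbf{F}$ and $\mathbf{s}$ in one entity-related order and one layer-related order, i.e.,

$$\mathbf{s}'=\mathbf{F}\diamond\mathbf{s}\in\mathbb{R}^{M\times N}, \qquad (16)$$

where $\diamond$ is the contraction between $\mathbf{F}$ and $\mathbf{s}$ defined in Eq. (7).

The elements in the shifted signal $\mathbf{s}'$ are calculated as

$$s'_{\alpha i}=\sum_{\beta=1}^{M}\sum_{j=1}^{N}F_{\alpha i\beta j}\,s_{\beta j}. \qquad (17)$$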

From Eq. (17), two factors determine the shifted signal: 1) the signals at the neighbors (via both intra- and interlayer interactions) of entity $i$'s projected node on layer $\alpha$; and 2) the intensity of interactions between this node and its neighbors. Hence, signal shifting is related to the diffusion process over multilayer graphs. More specifically, if $\mathbf{F}$ is the adjacency tensor, signals shift along the directions of the edges. To better illustrate signal shifting based on the adjacency tensor, we use the two-layer directed graph shown in Fig. 5 as an example. In this multilayer graph, the original signal is defined as

| (18) |

and the adjacency tensor is defined as

for each fiber. Then, the shifted signal is calculated as

| (19) |

From Eq. (19), we can see that the signal shifts one step along the direction of the links.

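Meanwhile, if $\mathbf{F}$ is the Laplacian tensor $\mathbf{L}=\mathbf{D}-\mathbf{A}$, Eq. (17) can be written as

$$s'_{\alpha i}=\sum_{\beta=1}^{M}\sum_{j=1}^{N}A_{\alpha i\beta j}\,(s_{\alpha i}-s_{\beta j}), \qquad (20)$$

which is the weighted sum of the differences between the signal at entity $i$'s projected node on layer $\alpha$ and the signals at its neighbors.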
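The shift in Eq. (17), $s'_{\alpha i}=\sum_{\beta,j}F_{\alpha i\beta j}s_{\beta j}$, is a single `np.einsum` call. The sketch below uses a random undirected MLG (an arbitrary example) to check two consequences: the shift equals the layer-wise flattened matrix times the layer-wise flattened signal, and with $\mathbf{L}=\mathbf{D}-\mathbf{A}$ it equals the neighbor-difference form $\sum_{\beta,j}A_{\alpha i\beta j}(s_{\alpha i}-s_{\beta j})$. The helper name `mlg_shift` is hypothetical.

```python
import numpy as np

def mlg_shift(F, s):
    """Signal shifting: s'[alpha, i] = sum_{beta, j} F[alpha, i, beta, j] * s[beta, j]."""
    return np.einsum('aibj,bj->ai', F, s)

rng = np.random.default_rng(4)
M, N = 3, 4
W = rng.random((M * N, M * N))
A = (W + W.T).reshape(M, N, M, N)   # undirected: A[a,i,b,j] == A[b,j,a,i]
s = rng.standard_normal((M, N))

# Equivalent matrix-vector form on the layer-wise flattening (0-based index N*alpha + i)
s_shift = mlg_shift(A, s)
print(np.allclose(s_shift.reshape(-1), A.reshape(M * N, M * N) @ s.reshape(-1)))  # True

# Laplacian tensor L = D - A, with D[a, i, a, i] = d[a, i]
d = A.sum(axis=(2, 3))
D = np.zeros_like(A)
D[np.arange(M)[:, None], np.arange(N), np.arange(M)[:, None], np.arange(N)] = d
L = D - A
# Neighbor-difference form: sum_{b,j} A[a,i,b,j] * (s[a,i] - s[b,j])
diff_form = np.einsum('aibj,aibj->ai', A, s[:, :, None, None] - s[None, None, :, :])
print(np.allclose(mlg_shift(L, s), diff_form))  # True
```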

V Multilayer Graph Spectrum Space

In traditional GSP, the graph spectrum space is defined according to the eigenspace of the representing matrix [3]. Similarly, we define the MLG spectrum space based on the decomposition of the representing tensor. Since tensor decomposition is less stable when exploring the factorization of a specific order or when extracting separate features from asymmetric tensors, we mainly focus on the spectral properties of undirected multilayer graphs in this section for simplicity and clarity of presentation. For directed MLGs, we provide alternative spectrum definitions in the Appendix and leave a detailed analysis to future work.

V-A Joint Spectral Analysis in M-GSP

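For a multilayer graph with $M$ layers and $N$ nodes in each layer, the eigen-tensor $\mathbf{V}\in\mathbb{R}^{M\times N}$ of the representing tensor $\mathbf{F}$ is defined in tensor-based multilayer graph theory [11] as

$$\mathbf{F}\diamond\mathbf{V}=\lambda\mathbf{V}. \qquad (21)$$

More specifically, for an undirected MLG, $\mathbf{F}$ can be decomposed as

$$\begin{aligned}\mathbf{F}&=\sum_{k=1}^{MN}\lambda_k\,\mathbf{V}_k\circ\mathbf{V}_k && (22)\\ &=\sum_{\alpha=1}^{M}\sum_{i=1}^{N}\lambda_{\alpha i}\,\mathbf{V}_{\alpha i}\circ\mathbf{V}_{\alpha i}, && (23)\end{aligned}$$

where $\lambda_{\alpha i}$ is an eigenvalue and $\mathbf{V}_{\alpha i}\in\mathbb{R}^{M\times N}$ is the corresponding eigen-tensor. Note that $\alpha i$ just relabels the index $k$, and there is no specific order for $\lambda_{\alpha i}$ here.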

Similar to the traditional GSP where the graph Fourier space is defined by the eigenvectors of the representing matrix, we define the joint MLG Fourier space as follows.

Definition 6 (Joint Multilayer Graph Fourier Space).

For a multilayer graph with $M$ layers and $N$ nodes in each layer, the MLG Fourier space is defined as the space consisting of all spectral tensors $\{\mathbf{V}_1,\dots,\mathbf{V}_{MN}\}$, which characterize the joint features of entities and layers.

Recall that in GSP, the GFT in Eq. (2) is defined through the inner product between the eigenvectors of the representing matrix and the signal. Similarly, we can define the M-GFT based on the spectral tensors of the representing tensor to capture joint features of inter- and intralayer interactions as follows.

Definition 7 (Joint M-GFT).

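Let $\mathbf{U}_F=(U_{k\alpha i})\in\mathbb{R}^{MN\times M\times N}$ consist of the spectral tensors of the representing tensor $\mathbf{F}$, where $U_{k\alpha i}=[\mathbf{V}_k]_{\alpha i}$.

The joint M-GFT (M-JGFT) can be defined as the contraction between $\mathbf{U}_F$ and the tensor signal $\mathbf{s}$, i.e.,

$$\hat{\mathbf{s}}=\mathbf{U}_F\diamond\mathbf{s}\in\mathbb{R}^{MN},\quad \hat{s}_k=\sum_{\alpha=1}^{M}\sum_{i=1}^{N}U_{k\alpha i}\,s_{\alpha i}. \qquad (24)$$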

Now, we show how to obtain the eigen-tensors. Using the flattening analysis, we obtain the following properties.

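Property 1.

The layer-wise flattened tensor $\mathbf{F}_{MN}$ and the entity-wise flattened tensor $\mathbf{F}_{NM}$ have the same eigenvalues.

Proof.

Suppose $(\lambda,\mathbf{u})$ is an eigenpair of $\mathbf{F}_{MN}$, i.e.,

$$\mathbf{F}_{MN}\mathbf{u}=\lambda\mathbf{u}. \qquad (25)$$

Let $\mathbf{P}\in\{0,1\}^{MN\times MN}$ be the permutation matrix that maps a layer-wise flattened signal to the entity-wise one, i.e., $\mathbf{P}\mathbf{s}_{MN}=\mathbf{s}_{NM}$, and let $\mathbf{v}=\mathbf{P}\mathbf{u}$. Since

$$\mathbf{F}_{NM}=\mathbf{P}\mathbf{F}_{MN}\mathbf{P}^{\mathrm T}\quad\text{and}\quad\mathbf{P}^{\mathrm T}\mathbf{P}=\mathbf{I}, \qquad (26)$$

we have

$$\mathbf{F}_{NM}\mathbf{v}=\mathbf{P}\mathbf{F}_{MN}\mathbf{P}^{\mathrm T}\mathbf{P}\mathbf{u}=\mathbf{P}\mathbf{F}_{MN}\mathbf{u}=\lambda\mathbf{v}. \qquad (27)$$

Thus, $\lambda$ is also an eigenvalue of $\mathbf{F}_{NM}$; the converse follows in the same way with $\mathbf{P}^{\mathrm T}$ in place of $\mathbf{P}$. ∎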

This property shows that the flattened tensors are reshaped versions of the original representing tensor and capture some of its spectral properties, as follows.

Property 2.

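Given an eigenpair $(\lambda,\mathbf{u})$ of the layer-wise flattened tensor $\mathbf{F}_{MN}$, an eigenpair $(\lambda,\mathbf{V})$ of the original representing tensor can be calculated with the same $\lambda$ and $V_{\alpha i}=u_{N(\alpha-1)+i}$. Similarly, given an eigenpair $(\lambda,\mathbf{v})$ of the entity-wise flattened tensor $\mathbf{F}_{NM}$, an eigenpair of the original representing tensor can be calculated with the same $\lambda$ and $V_{\alpha i}=v_{M(i-1)+\alpha}$.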

Property 2 shows that we can calculate the eigen-tensors from the flattened tensor to simplify the decomposition operations. Moreover, the M-JGFT is equivalent, up to a bijective re-indexing of the vertices by both layers and entities, to the GFT of the flattened MLG. However, such an M-JGFT analyzes the inter- and intralayer connections jointly while ignoring the individual features of entities and layers. Next, we show how to implement order-wise frequency analysis in M-GSP based on tensor decomposition.
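Property 2 suggests a simple way to compute the joint spectrum in practice: eigen-decompose the symmetric layer-wise flattening and reshape each eigenvector into an $M\times N$ eigen-tensor. The following minimal sketch assumes an undirected MLG (random symmetric weights, chosen only for illustration) and takes the M-JGFT to be $\hat{s}_k=\sum_{\alpha,i}[\mathbf{V}_k]_{\alpha i}s_{\alpha i}$; the function names are hypothetical.

```python
import numpy as np

def joint_spectrum(F):
    """Eigenvalues lam (length M*N) and eigen-tensors U[k] = V_k (each M x N).

    Obtained by reshaping the eigenvectors of the layer-wise flattening F_MN,
    which is symmetric for an undirected MLG.
    """
    M, N = F.shape[:2]
    lam, Q = np.linalg.eigh(F.reshape(M * N, M * N))
    U = Q.T.reshape(M * N, M, N)   # U[k, alpha, i] = k-th eigenvector at index N*alpha + i
    return lam, U

def m_jgft(U, s):
    """Joint M-GFT: s_hat[k] = sum_{alpha, i} U[k, alpha, i] * s[alpha, i]."""
    return np.einsum('kai,ai->k', U, s)

def inverse_m_jgft(U, s_hat):
    """Inverse transform: s = sum_k s_hat[k] * V_k (the V_k are orthonormal)."""
    return np.einsum('kai,k->ai', U, s_hat)

rng = np.random.default_rng(5)
M, N = 2, 3
W = rng.random((M * N, M * N))
F = (W + W.T).reshape(M, N, M, N)
lam, U = joint_spectrum(F)

# Each V_k satisfies the eigen-tensor equation: contracting F with V_k gives lam_k V_k
print(np.allclose(np.einsum('aibj,bj->ai', F, U[0]), lam[0] * U[0]))   # True

s = rng.standard_normal((M, N))
print(np.allclose(inverse_m_jgft(U, m_jgft(U, s)), s))                 # True

# F_NM is a symmetric permutation of F_MN, so it has the same eigenvalues
F_NM = F.transpose(1, 0, 3, 2).reshape(N * M, N * M)
print(np.allclose(np.linalg.eigvalsh(F_NM), lam))                      # True
```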

V-B Order-wise Spectral Analysis in M-GSP

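In an undirected multilayer graph, the representing tensor (adjacency/Laplacian) is partially symmetric under the joint exchange of orders one and three and of orders two and four, i.e., $F_{\alpha i\beta j}=F_{\beta j\alpha i}$. Then, taking the multilayer graph structure into account, the representing tensor can be written under orthogonal CP decomposition [26] as follows:

$$\begin{aligned}\mathbf{F}&\approx\sum_{\alpha=1}^{M}\sum_{i=1}^{N}\lambda_{\alpha i}\,\mathbf{e}_\alpha\circ\mathbf{f}_i\circ\mathbf{e}_\alpha\circ\mathbf{f}_i && (28)\\ &=\sum_{\alpha=1}^{M}\sum_{i=1}^{N}\lambda_{\alpha i}\,\mathbf{V}_{\alpha i}\circ\mathbf{V}_{\alpha i}, && (29)\end{aligned}$$

where $\mathbf{e}_1,\dots,\mathbf{e}_M\in\mathbb{R}^M$ are orthonormal, $\mathbf{f}_1,\dots,\mathbf{f}_N\in\mathbb{R}^N$ are orthonormal, and $\mathbf{V}_{\alpha i}=\mathbf{e}_\alpha\circ\mathbf{f}_i\in\mathbb{R}^{M\times N}$.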

The CP decomposition factorizes a tensor into a sum of rank-one component tensors, which describe the underlying features of each order. Although only approximate algorithms are available for obtaining the optimal decomposition, CP decomposition has been applied successfully in practice, for example in feature extraction [32] and tensor-based PCA [33]. A detailed discussion of tensor decomposition and its implementation in M-GSP is provided in Section VII-D. In Eq. (29), $\mathbf{e}_\alpha$ and $\mathbf{f}_i$ capture the features of layers and entities, respectively, and can be interpreted as the subspaces of the MLG. More discussions of the frequency interpretation of the order-wise M-GSP spectrum and its connections to the MWGSP spectrum are presented in Section VII-C.

Note that if there is only one layer in the multilayer graph, Eq. (29) reduces to the eigen-decomposition of a normal single-layer graph, i.e., $\mathbf{F}=\sum_{i=1}^{N}\lambda_i\,\mathbf{f}_i\circ\mathbf{f}_i$.

With the decomposed representing tensor in Eq. (29), the order-wise MLG spectrum is defined as follows.

Definition 8 (Order-wise MLG Spectral Pair).

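For a multilayer graph with $M$ layers and $N$ nodes in each layer, the order-wise MLG spectral pairs are defined by $\{\lambda_{\alpha i},\mathbf{e}_\alpha,\mathbf{f}_i\}$, where $\mathbf{e}_\alpha$ and $\mathbf{f}_i$ characterize features of layers and entities, respectively.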

With the definition of the order-wise MLG spectral pair, we now explore its properties. Considering $\mathbf{V}_{\alpha i}=\mathbf{e}_\alpha\circ\mathbf{f}_i$, we have the following property, which indicates that a joint MLG analysis can be carried out based on the order-wise spectrum.

Property 3.

The factor tensor $\mathbf{V}_{\alpha i}=\mathbf{e}_\alpha\circ\mathbf{f}_i$ of the representing tensor $\mathbf{F}$ is an approximate eigen-tensor of $\mathbf{F}$.

Proof.

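Suppose that $\mathbf{V}_{\beta j}=\mathbf{e}_\beta\circ\mathbf{f}_j$ is one factor tensor of $\mathbf{F}$ obtained from Eq. (29).

Let $\delta$ denote the Kronecker delta. Since $\{\mathbf{e}_\alpha\}$ forms an orthonormal basis, the inner product satisfies $\langle\mathbf{e}_\alpha,\mathbf{e}_\beta\rangle=\delta_{\alpha\beta}$.

Similarly, $\langle\mathbf{f}_i,\mathbf{f}_j\rangle=\delta_{ij}$, so that

$$\begin{aligned}\langle\mathbf{V}_{\alpha i},\mathbf{V}_{\beta j}\rangle&=\sum_{\gamma=1}^{M}\sum_{k=1}^{N}[\mathbf{e}_\alpha]_\gamma[\mathbf{f}_i]_k[\mathbf{e}_\beta]_\gamma[\mathbf{f}_j]_k && (30)\\ &=\langle\mathbf{e}_\alpha,\mathbf{e}_\beta\rangle\,\langle\mathbf{f}_i,\mathbf{f}_j\rangle && (31)\\ &=\delta_{\alpha\beta}\,\delta_{ij}. && (32)\end{aligned}$$

Then, we have

$$\mathbf{F}\diamond\mathbf{V}_{\beta j}\approx\sum_{\alpha=1}^{M}\sum_{i=1}^{N}\lambda_{\alpha i}\,\mathbf{V}_{\alpha i}\,\langle\mathbf{V}_{\alpha i},\mathbf{V}_{\beta j}\rangle. \qquad (33)$$

Thus,

$$\mathbf{F}\diamond\mathbf{V}_{\beta j}\approx\sum_{\alpha=1}^{M}\sum_{i=1}^{N}\lambda_{\alpha i}\,\delta_{\alpha\beta}\,\delta_{ij}\,\mathbf{V}_{\alpha i}, \qquad (34)$$

which indicates

$$\mathbf{F}\diamond\mathbf{V}_{\beta j}\approx\lambda_{\beta j}\,\mathbf{V}_{\beta j}. \qquad (35)$$

Then, $\mathbf{V}_{\beta j}$ is an approximate eigen-tensor. ∎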

This property indicates the relationship between the order-wise MLG spectral pair and the joint eigen-tensors.

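By constructing a fourth-order tensor $\mathbf{U}\in\mathbb{R}^{M\times N\times M\times N}$ with the factor tensors $\mathbf{V}_{\alpha i}$ as its elements, i.e., $U_{\alpha i\beta j}=[\mathbf{V}_{\alpha i}]_{\beta j}=[\mathbf{e}_\alpha]_\beta[\mathbf{f}_i]_j$, we have the following property.

Property 4.

Let $\mathbf{Z}=\mathbf{U}\diamond\tilde{\mathbf{U}}$, where $\diamond$ is the contraction in the third and fourth orders as in Eq. (8), with $\tilde{U}_{\gamma k\beta j}=U_{\beta j\gamma k}$. Then, $\mathbf{Z}$ is diagonal with $Z_{\alpha i\beta j}=\delta_{\alpha\beta}\delta_{ij}$, i.e., all its diagonal elements $Z_{\alpha i\alpha i}$ are equal to one.

Proof.

Let $\mathbf{V}_{\alpha i}=\mathbf{e}_\alpha\circ\mathbf{f}_i$ and $\mathbf{V}_{\beta j}=\mathbf{e}_\beta\circ\mathbf{f}_j$. Then, we have

$$Z_{\alpha i\beta j}=\sum_{\gamma=1}^{M}\sum_{k=1}^{N}[\mathbf{e}_\alpha]_\gamma[\mathbf{f}_i]_k[\mathbf{e}_\beta]_\gamma[\mathbf{f}_j]_k=\langle\mathbf{e}_\alpha,\mathbf{e}_\beta\rangle\,\langle\mathbf{f}_i,\mathbf{f}_j\rangle=\delta_{\alpha\beta}\,\delta_{ij}. \qquad (36)$$

∎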

This property generalizes the matrix notion of orthonormality (e.g., $\mathbf{E}^{\mathrm T}\mathbf{E}=\mathbf{I}$ for a matrix $\mathbf{E}$ with orthonormal columns) to the spectral tensors.

We now introduce the order-wise MLG spectral transform. Similar to Eq. (24), the joint transform can be defined as

| (37) |

Note that each element of in Eq. (37) can be calculated as

| (38) | ||||

| (39) | ||||

| (40) |

Let and . We then have

| (41) |

with each element

| (42) |

Clearly, the M-GFT can be obtained as

| (43) |

Then, we have the following definition of M-GFT based on order-wise spectrum.

Definition 9 (Order-wise M-GFT).

Given the spectral vectors and , the layer-wise M-GFT can be defined as

| (44) |

and the entity-wise M-GFT can be defined as

| (45) |

The general M-GFT based on order-wise spectrum is defined as

| (46) |

If there is only one layer in the multilayer graph, the M-GFT calculated with reduces to , which has the same form as the traditional GFT in Eq. (2).

In addition, since and are orthonormal bases for an undirected MLG, the inverse M-GFT can be calculated as

| (47) |

Unlike the joint MLG Fourier space in Section V-A, the order-wise MLG spectrum analyzes layers and entities separately, while still providing a reliable approximate joint analysis of the underlying MLG structure. To avoid confusion, we abbreviate the joint M-GFT in Eq. (24) as M-JGFT. Unless otherwise specified, M-GFT refers to the order-wise transform in Eq. (46) in the remainder of this paper.
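A minimal sketch of the order-wise M-GFT and its inverse, assuming that the layer-wise, entity-wise, and general transforms in Eqs. (44)-(47) amount to projecting the $M \times N$ tensor signal onto the orthonormal factors, i.e., $\hat{s}_{\alpha i} = \langle \mathbf{s}, \mathbf{f}_\alpha \circ \mathbf{e}_i \rangle$. The matrices `F` and `E` (columns $\mathbf{f}_\alpha$ and $\mathbf{e}_i$) are random orthonormal stand-ins, not factors computed from a real MLG, and the function names are hypothetical.

```python
import numpy as np

def orthonormal(n, seed):
    # Random orthonormal basis (columns), standing in for the factors of Eq. (29)
    q, _ = np.linalg.qr(np.random.default_rng(seed).standard_normal((n, n)))
    return q

def layer_mgft(s, F):
    return F.T @ s                # transform along the layer axis only

def entity_mgft(s, E):
    return s @ E                  # transform along the entity axis only

def mgft(s, F, E):
    # Assumed general transform: coefficient (alpha, i) = <s, f_alpha o e_i>
    return F.T @ s @ E

def inverse_mgft(s_hat, F, E):
    # Exact inverse because F and E are orthonormal (undirected MLG)
    return F @ s_hat @ E.T

M, N = 3, 5
F, E = orthonormal(M, 1), orthonormal(N, 2)
s = np.random.default_rng(3).standard_normal((M, N))   # tensor signal: one row per layer
s_hat = mgft(s, F, E)
```

Under this assumption, the general M-GFT is simply the layer-wise transform followed by the entity-wise transform, and orthonormality makes the transform energy-preserving.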

V-C MLG Singular Tensor Analysis

In addition to the eigen-decomposition, the singular value decomposition (SVD) is another important matrix factorization. In this part, we use the higher-order SVD (HOSVD) [25] of the representing tensor to provide an alternative definition of the spectrum for multilayer graphs.

Given the multilayer graph with layers and nodes in each layer, its representing tensor can be decomposed via HOSVD as

| (48) |

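where $\mathbf{U}^{(n)}$ is a unitary $(I_n \times I_n)$ matrix, with $I_1 = I_3 = M$ and $I_2 = I_4 = N$, and $\mathcal{S}$ is a complex $(I_1 \times I_2 \times I_3 \times I_4)$-tensor whose subtensors $\mathcal{S}_{i_n=\alpha}$, obtained by fixing the $n$th index to $\alpha$, have the following properties:

- all-orthogonality: $\langle \mathcal{S}_{i_n=\alpha}, \mathcal{S}_{i_n=\beta} \rangle = 0$, where $\alpha \neq \beta$;
- ordering: $\|\mathcal{S}_{i_n=1}\| \geq \|\mathcal{S}_{i_n=2}\| \geq \cdots \geq \|\mathcal{S}_{i_n=I_n}\| \geq 0$.

The Frobenius norm $\|\mathcal{S}_{i_n=i}\|$ is the $n$-mode singular value, and the columns of $\mathbf{U}^{(n)}$ are the corresponding $n$-mode singular vectors. For an undirected multilayer graph, the representing tensor is symmetric for every 2-D combination. Thus, there are two modes of singular spectrum, i.e., one for modes $n = 1, 3$ (layers) and one for modes $n = 2, 4$ (entities). More specifically, $\mathbf{U}^{(1)} = \mathbf{U}^{(3)}$ and $\mathbf{U}^{(2)} = \mathbf{U}^{(4)}$. Since the joint singular tensor captures the consistent information of entities and layers, it can be calculated as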

| (49) |

Note that the diagonal entries of are not the eigenvalues or frequency coefficients of the representing tensor in general. The multilayer graph singular space is defined as follows.

Definition 10 (Multilayer Graph Singular Space).

Similar to order-wise spectral analysis in Section V-B, we can define the MLG singular tensor transform (M-GST) based on the singular tensors as follows.

Definition 11 (M-GST).

Suppose that consists of the singular vectors of the representing tensor in Eq. (48), where . The M-GST can be defined as the contraction between and the tensor signal , i.e.,

| (50) |

If the singular vectors are collected in and , the layer-wise M-GST can be defined as

| (51) |

and the entity-wise M-GST can be defined as

| (52) |

The inverse M-GST can be defined similarly to Eq. (47), with unitary and .
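The HOSVD of Eq. (48) and the M-GST of Eqs. (50)-(52) can be sketched in NumPy as follows. The $n$-mode singular vectors are computed as the left singular vectors of the mode-$n$ unfolding, which is the standard HOSVD construction. The random undirected MLG, the index layout `A[a, i, b, j]`, and the use of `U[0]` (layers) and `U[1]` (entities) as M-GST bases with $\hat{\mathbf{s}} = \mathbf{U}_L^{\mathrm{T}} \mathbf{s}\, \mathbf{U}_E$ are assumptions made for illustration.

```python
import numpy as np

def unfold(T, mode):
    # Mode-n unfolding: index `mode` becomes the row index, all others are flattened
    return np.moveaxis(T, mode, 0).reshape(T.shape[mode], -1)

def hosvd(A):
    # n-mode singular vectors = left singular vectors of the mode-n unfolding
    U = [np.linalg.svd(unfold(A, n), full_matrices=False)[0] for n in range(A.ndim)]
    # Core tensor S = A x_1 U1^T x_2 U2^T x_3 U3^T x_4 U4^T (real-valued tensor)
    S = np.einsum('abcd,ap,bq,cr,ds->pqrs', A, *U)
    return S, U

def mgst(s, U_L, U_E):
    # Assumed general M-GST: coefficient (alpha, i) = <s, u_alpha o v_i>
    return U_L.T @ s @ U_E

def inverse_mgst(s_hat, U_L, U_E):
    return U_L @ s_hat @ U_E.T

# Random undirected MLG with M layers and N entities (hypothetical test data)
rng = np.random.default_rng(0)
M, N = 3, 4
W = np.triu(rng.random((M * N, M * N)), 1)
W = W + W.T                          # symmetric supra-matrix, no self-loops
A = W.reshape(M, N, M, N)            # A[a, i, b, j] = W[a*N + i, b*N + j]

S, U = hosvd(A)
U_L, U_E = U[0], U[1]                # layer-wise and entity-wise singular vectors
A_rec = np.einsum('pqrs,ap,bq,cr,ds->abcd', S, *U)

s = rng.standard_normal((M, N))      # tensor signal
s_hat_layer = U_L.T @ s              # layer-wise M-GST (assumed form)
s_hat_entity = s @ U_E               # entity-wise M-GST (assumed form)
s_hat = mgst(s, U_L, U_E)
```

The core tensor obeys the two properties listed for Eq. (48): its mode-1 slices are mutually orthogonal, and their norms (the 1-mode singular values) are non-increasing.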

Compared to the eigen-tensors in Eq. (22), the singular tensors are formed from combinations of singular vectors and are thus capable of capturing the information of layers and entities more efficiently. Eigen-decomposition, however, focuses more on the joint information and only approximates the separate information of layers and entities. We provide further discussion on the performance of different decomposition methods in Section VII-D. The intuition behind applying HOSVD in MLG analysis and its connections to GSP are discussed in Section VII-A3.

V-D Spectrum Ranking in the Multilayer Graph

In traditional GSP, graph frequencies are defined by the eigenvalues of the graph shift, and the total variation provides an alternative measure for ordering them [3]. Similarly, we use the total variation of based on the spectral tensors to rank the MLG frequencies. Let be the joint eigenvalue of the adjacency tensor with the largest magnitude. The M-GSP total variation is defined as follows:

| (53) | ||||

| (54) |

where is the element-wise norm. Other norms could also be used to define the total variation; for example, the norm could be efficient in signal denoising [3]. The graph frequency associated with is said to be higher if its total variation is larger, and the corresponding spectral tensor is called a higher-frequency spectral component. If the representing tensor is the Laplacian tensor, i.e., , the frequency order is reversed relative to that of the adjacency tensor, as in GSP [3]. We provide more details on the interpretation of MLG frequency in Section VII-A.
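Because Eqs. (53)-(54) are not reproduced in this text, the sketch below assumes the usual GSP form of [3], $\mathrm{TV}(\mathbf{V}) = \| \mathbf{V} - \mathbf{A} \diamond \mathbf{V} / |\lambda_{\max}| \|_1$ with an element-wise $\ell_1$ norm, together with the contraction $(\mathbf{A} \diamond \mathbf{V})_{ai} = \sum_{b,j} A_{aibj} V_{bj}$. It computes the joint spectrum from the flattened (supra-) adjacency, ranks the eigen-tensors by total variation, and checks the closed form $|1 - \lambda/|\lambda_{\max}||\,\|\mathbf{V}\|_1$ that follows for any eigen-tensor. The random graph is hypothetical test data.

```python
import numpy as np

def shift(A, X):
    # Assumed contraction of Eq. (8): (A <> X)[a, i] = sum_{b, j} A[a, i, b, j] X[b, j]
    return np.einsum('aibj,bj->ai', A, X)

def total_variation(A, X, lam_max):
    # l1 norm of the difference between a component and its normalized shifted version
    return np.abs(X - shift(A, X) / lam_max).sum()

rng = np.random.default_rng(0)
M, N = 2, 4
W = np.triu(rng.random((M * N, M * N)), 1)
W = W + W.T                               # undirected supra-adjacency, no self-loops
A = W.reshape(M, N, M, N)                 # A[a, i, b, j] = W[a*N + i, b*N + j]

# Joint spectrum from the flattened tensor; V[k] is the k-th eigen-tensor (M x N)
lam, vecs = np.linalg.eigh(W)
V = vecs.T.reshape(-1, M, N)
lam_max = np.abs(lam).max()

tv = np.array([total_variation(A, V[k], lam_max) for k in range(M * N)])
closed_form = np.abs(1 - lam / lam_max) * np.abs(V).sum(axis=(1, 2))
order = np.argsort(tv)                    # indices from low to high frequency
```

For a nonnegative adjacency tensor, the eigen-tensor of the largest eigenvalue has zero total variation and is therefore ranked as the lowest frequency.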

VI Filter Design

In this section, we introduce an MLG filter design together with its properties based on signal shifting.

VI-A Polynomial Filter Design

Polynomial filters are basic filters in GSP [7, 34]. In M-GSP, a first-order filter consists of basic signal shifting, i.e.,

| (55) |

Similarly, a second-order filter can be defined by applying the same filtering to the first-order filtered signal, i.e.,

| (56) | ||||

| (57) |

whose entries are calculated as

| (58) | ||||

| (59) | ||||

| (60) | ||||

| (61) |

where is the contraction defined in Eq. (8).

Let . From Eq. (22), we have:

| (62) | ||||

| (63) |

Similarly, for th-order term , its entry can be calculated as .

Now we have the following property.

Property 5.

The -th order basic shifting filter can be calculated as

| (64) | ||||

| (65) |

This property is the M-GSP counterpart of the traditional linear-system result that complex exponential signals are eigenfunctions of linear systems [3], and it provides a faster implementation of higher-order shifting. With polynomial terms up to order , the adaptive polynomial filter is defined as

| (66) |

where are parameters to be estimated from data.
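Property 5 can be checked numerically: a polynomial filter evaluated by repeated shifting in the vertex domain should match the same polynomial applied to the joint eigenvalues. The sketch below assumes the contraction used above, includes a zeroth-order (identity) term, and uses hand-picked coefficients in place of parameters estimated from data; whether Eq. (66) starts at order zero or one is not visible in this text.

```python
import numpy as np

def shift(A, X):
    # Assumed contraction of Eq. (8)
    return np.einsum('aibj,bj->ai', A, X)

def poly_filter_spatial(A, s, coeffs):
    # sum_k coeffs[k] * (k-fold shift of s), k = 0, 1, ..., len(coeffs) - 1
    out, x = np.zeros_like(s), s.copy()
    for a_k in coeffs:
        out += a_k * x
        x = shift(A, x)
    return out

def poly_filter_spectral(A, s, coeffs):
    # Property 5: the k-fold shift scales each joint spectral component by lam**k
    M, N = s.shape
    lam, vecs = np.linalg.eigh(A.reshape(M * N, M * N))
    s_hat = vecs.T @ s.reshape(-1)            # joint transform coefficients <V_k, s>
    h = np.polyval(coeffs[::-1], lam)         # h(lam) = sum_k coeffs[k] * lam**k
    return (vecs @ (h * s_hat)).reshape(M, N)

rng = np.random.default_rng(0)
M, N = 2, 3
W = np.triu(rng.random((M * N, M * N)), 1)
A = (W + W.T).reshape(M, N, M, N)             # undirected MLG (hypothetical data)
s = rng.standard_normal((M, N))
coeffs = [0.5, 0.3, 0.2]
y_spatial = poly_filter_spatial(A, s, coeffs)
y_spectral = poly_filter_spectral(A, s, coeffs)
```

The spectral route needs one eigen-decomposition, after which any polynomial order costs only an elementwise power of the eigenvalues.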

VI-B Spectral Filter Design

Filtering in the graph spectrum space is useful in GSP frequency analysis. For example, by ordering the Laplacian graph spectrum in descending order of graph total variation [3], i.e., from high frequency to low frequency, the GFT of is calculated as . By removing elements in the low-frequency part, i.e., , a high-pass filter can be designed as

| (67) | ||||

| (68) |

where is a diagonal matrix with for ; otherwise, .

Similarly, in M-GSP, a spectral filter is designed by filtering in the spectrum space together with the inverse M-GFT. With Eq. (46) and Eq. (50), spectral filtering of is defined as

| (69) |

where the functions and are designed for specific tasks. For example, to design a layer-wise filter capturing the smoothness of signals in the MLG singular space, the function can be designed as by ordering the layer-wise singular vectors in descending order of singular values.
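A sketch of spectral filtering in both settings, under stated assumptions. The first part builds the GSP high-pass operator of Eqs. (67)-(68) for a path-graph Laplacian, whose eigenvectors are sorted from high to low frequency. The second part assumes that Eq. (69) applies per-order gains `g_L` and `g_E` (hypothetical names) to the order-wise coefficients before inverting; here the entity basis is the same Laplacian eigenbasis and the layer basis is the identity, so the result is an entity-wise high-pass filter.

```python
import numpy as np

def path_laplacian(n):
    W = np.diag(np.ones(n - 1), 1)
    W = W + W.T
    return np.diag(W.sum(axis=1)) - W

def spectrum_high_to_low(L):
    # For a Laplacian, a larger eigenvalue means a higher frequency; reverse eigh's ascending order
    lam, V = np.linalg.eigh(L)
    return lam[::-1], V[:, ::-1]

def highpass(L, K):
    # V diag(sigma) V^T with sigma_k = 1 for the K highest frequencies, 0 otherwise
    _, V = spectrum_high_to_low(L)
    sigma = np.zeros(L.shape[0])
    sigma[:K] = 1.0
    return V @ np.diag(sigma) @ V.T

def mlg_spectral_filter(s, U_L, U_E, g_L, g_E):
    # Assumed form: order-wise transform, per-order gains, inverse transform
    s_hat = U_L.T @ s @ U_E
    s_hat = g_L[:, None] * s_hat * g_E[None, :]
    return U_L @ s_hat @ U_E.T

N, M, K = 8, 3, 4
H = highpass(path_laplacian(N), K)
y_const = H @ np.ones(N)                   # constant signal has zero frequency and should vanish

# Entity-wise high-pass on an M x N tensor signal
_, U_E = spectrum_high_to_low(path_laplacian(N))
U_L = np.eye(M)
g_L = np.ones(M)
g_E = (np.arange(N) < K).astype(float)     # keep the K highest entity frequencies
s = np.random.default_rng(0).standard_normal((M, N))
s_hp = mlg_spectral_filter(s, U_L, U_E, g_L, g_E)
```

With an identity layer basis, the MLG filter reduces to applying the GSP high-pass operator to every layer, which is a convenient sanity check.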

VI-C Discussion

We briefly discuss the interpretation of polynomial filters and spectral filters. From the spatial perspective, an MLG polynomial filter is a weighted sum of message-passing steps of different orders on the multilayer graph, as shown in Eq. (66). Each node collects information from both inter- and intralayer neighbors before combining it with its own information. From the spectral perspective, Property 5 shows that an M-GSP polynomial filter scales each spectral component by a polynomial of the corresponding eigenvalue, which makes polynomial filters special cases of the M-GSP spectral filters in Eq. (69). The M-GSP spectral filters assign different weights to each M-GSP spectral component via the functions and , depending on the specific task. Both M-GSP polynomial filters and spectral filters can be useful for high-dimensional IoT signal processing. More discussion and examples of M-GSP filters are presented in Section VIII.

VII Discussion and Interpretative Insights

VII-A Interpretation of M-GSP Frequency

VII-A1 Interpretation of Graph Frequency

To better understand its physical meaning, we start with the total variation in digital signal processing (DSP). The total variation in DSP is defined as the accumulated differences between successive signal samples over time [36]. Moreover, the total variations of frequency components correspond one-to-one to the frequencies and preserve their order: a frequency component with a larger total variation corresponds to a higher frequency. In other words, a higher-frequency component changes faster over time and exhibits a larger total variation. Interested readers may refer to [3, 8] for a detailed interpretation of total variation in DSP.

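Now, let us elaborate on graph frequency, motivated by the cyclic graph. Writing a finite signal in DSP as a vector $\mathbf{s} = [s_1, s_2, \ldots, s_N]^{\mathrm{T}}$, signal shifting can be interpreted as shift filtering over the cyclic graph shown in Fig. 6. Suppose that its adjacency matrix is written as

$$
\mathbf{C}_N = \begin{bmatrix}
0 & 0 & \cdots & 0 & 1 \\
1 & 0 & \cdots & 0 & 0 \\
0 & 1 & \cdots & 0 & 0 \\
\vdots & \vdots & \ddots & \vdots & \vdots \\
0 & 0 & \cdots & 1 & 0
\end{bmatrix}. \tag{70}
$$

Then, the shifted signal over the cyclic graph is calculated as $\mathbf{s}' = \mathbf{C}_N \mathbf{s} = [s_N, s_1, \ldots, s_{N-1}]^{\mathrm{T}}$, which shifts the signal at each node to its next node. More specifically, $\mathbf{C}_N$ can be decomposed as $\mathbf{C}_N = \mathbf{F}^{-1}\boldsymbol{\Lambda}\mathbf{F}$, where the eigenvalues are $\lambda_n = e^{-j2\pi n/N}$, $n = 0, \ldots, N-1$, and $\mathbf{F}$ is the discrete Fourier matrix. Inspired by DSP, the eigenvectors in $\mathbf{F}^{-1}$ are the spectral components (spectrum) of the cyclic graph, and the eigenvalues are related to the graph frequencies [3].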
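The cyclic-graph facts above are easy to verify numerically. The sketch builds the cyclic shift matrix (ones on the subdiagonal and in the top-right corner), shifts a ramp signal, and checks that $\mathbf{F}^{-1}\boldsymbol{\Lambda}\mathbf{F}$ recovers the matrix when $\mathbf{F}$ is the DFT matrix with entries $e^{-j2\pi kn/N}$ and $\lambda_n = e^{-j2\pi n/N}$.

```python
import numpy as np

N = 6
C = np.roll(np.eye(N), 1, axis=0)             # ones on the subdiagonal and in the top-right corner
s = np.arange(N, dtype=float)
print(C @ s)                                  # [5. 0. 1. 2. 3. 4.]: each sample moves to the next node

n = np.arange(N)
F = np.exp(-2j * np.pi * np.outer(n, n) / N)  # DFT matrix
lam = np.exp(-2j * np.pi * n / N)             # eigenvalues of the cyclic shift
C_rebuilt = np.linalg.inv(F) @ np.diag(lam) @ F
```

Because the cyclic shift is a circulant matrix, the DFT diagonalizes it, which is exactly why its eigenvectors coincide with the classical Fourier basis.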

When the adjacency matrix of the cyclic graph is generalized to the representing matrix of an arbitrary graph, the graph Fourier space consists of the eigenvectors of , and the graph frequencies are related to the eigenvalues. More specifically, the graph Fourier space can be interpreted as the manifold or spectrum space of the representing matrix. Since, as noted above, the total variations of frequency components reflect the order of frequencies, we can also use the total variation, i.e., , to rank the graph frequencies, where is the spectral component associated with the eigenvalue in . As in DSP, the graph frequency indicates oscillations over the vertex set, i.e., how fast the signal changes under graph shifting.

VII-A2 Interpretation of MLG Frequency

Now, returning to M-GSP: given the spectral tensors of a multilayer graph, a signal can be written as a weighted sum of the spectral components, i.e., . Viewing a spectral tensor as a signal component, its total variation in Eq. (53) takes the form of the difference between the component and its shifted version. If the signal component changes faster over the multilayer graph, the corresponding total variation is larger. Since we associate higher-frequency components with larger total variations, the MLG frequency indicates how fast a signal changes over the multilayer graph under the representing tensor. If a signal contains more high-frequency components, it changes faster under the representing tensor.

VII-A3 Interpretation of MLG Singular Tensors

As discussed in Section VII-A1, the name graph Fourier space arises from the adjacency matrix of the cyclic graph. However, when the algebraic representation is generalized to an arbitrary graph, especially through the Laplacian matrix, the graph spectrum is less related to the Fourier space in DSP and can instead be interpreted as the manifold or subspace of the representing matrix. In the literature, SVD is an efficient way to obtain a spectrum for signal analysis, e.g., in spectral clustering [37] and PCA [38]. It is therefore straightforward to generalize graph spectral analysis to a graph singular space, especially for the Laplacian matrix. In M-GSP, the order-wise singular vectors can be interpreted as subspaces characterizing the features of layers and entities, respectively. Since HOSVD is robust and efficient, transforming signals to the MLG singular space (M-GST) to analyze underlying structures can be a useful alternative to M-GFT.

VII-B Interpretation of Entities and Layers

To gain better physical insight into entities and layers, we discuss two categories of datasets:

- In most physical systems and datasets, signals can be modeled with a specific physical meaning in terms of layers and entities. In a smart grid, for example, each station can be an entity, connected in two layers of computation and power transmission, respectively. Another example is video, in which each geometric pixel position is an entity and each video frame forms a layer; each node in a layer denotes the pixel value in that frame. M-GSP can be an intuitive tool for such datasets and systems.
- In some scenarios, however, a dataset only defines layers without meaningful entities. In particular, for multilayer graphs whose layers have different numbers of nodes, we may insert isolated artificial nodes to augment the multilayer graph. In such applications, it may be harder to identify the physical meaning of entities. Here, the entities may be virtual and are embedded in the underlying structure of the multilayer graph. Although the definition of virtual entities may vary with the chosen adjacency tensor, it relates to the topological structure in terms of global spectral information. For example, in Fig. 7, we can use two different definitions of virtual entities. Although the representing tensors for these two definitions differ, their eigenvalues remain the same. Considering layer-wise flattening, the two supra-matrices are related by a reordering that exchanges the fourth and fifth rows and columns. They still have the same eigenvalues, and the eigen-tensors are also the same up to the same reordering. Note that the spectra capturing entity-specific information would change with different definitions of virtual entities.
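The relabeling argument for virtual entities can be checked with a toy supra-matrix: exchanging the fourth and fifth rows and columns is a permutation similarity $\mathbf{P}\mathbf{W}\mathbf{P}^{\mathrm{T}}$, so the eigenvalues are unchanged and each eigenvector is simply permuted. The random symmetric matrix below is a hypothetical stand-in for the Fig. 7 example, whose actual values are not given here.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 6
W = np.triu(rng.random((n, n)), 1)
W = W + W.T                                   # symmetric supra-matrix (hypothetical values)

perm = np.arange(n)
perm[[3, 4]] = perm[[4, 3]]                   # exchange the 4th and 5th nodes (0-based 3 and 4)
P = np.eye(n)[perm]                           # permutation matrix
W_swapped = P @ W @ P.T                       # same as swapping rows and columns 4 and 5

lam, V = np.linalg.eigh(W)
lam_swapped = np.linalg.eigvalsh(W_swapped)
```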

VII-C Distinctions from Existing GSP Works

VII-C1 Graph Signal Processing

Generally, M-GSP extends traditional GSP to multilayer graphs. Although one can stack all MLG layers and represent them with a supra-matrix, such a matrix representation makes GSP inefficient at extracting features of layers and entities separately. In a supra-matrix of the MLG, the layer of a node cannot be identified directly from its index, since all nodes are treated equivalently, whereas the tensor representation identifies layers explicitly through its indices. Moreover, GSP only supports a joint transform that processes inter- and intralayer connections together, while M-GSP offers a more flexible choice between joint and order-wise analysis. The joint M-GSP analysis introduced in Section V-A can be viewed as equivalent, through a one-to-one re-indexing, to the GFT of the flattened MLG, whose vertices are indexed by both layers and entities. Beyond that, M-GSP provides order-wise spectral analysis based on tensor decompositions, which enables separate analysis of layers and nodes. One can thus select suitable MLG-based tools depending on the task: the joint spectral analysis is appropriate for exploring the full structure of layers and entities, whereas the order-wise spectral and singular analyses are more efficient at characterizing layers and entities separately.

VII-C2 Joint Time-Vertex Fourier Analysis

In [16], a joint time-vertex Fourier transform (JFT) is defined by applying the GFT and the DFT consecutively. As discussed in Section VII-A1, time sequences can be interpreted over a cyclic graph, so time-vertex signals reside on an MLG structure. However, JFT assumes that all layers have the same intralayer connections, which limits its generality for MLG analysis. In contrast, the tensor-based representation allows heterogeneous intralayer connections, which makes M-GSP more general.

VII-C3 Multi-way Graph Signal Processing

In [18], MWGSP was proposed to process high-dimensional signals. Given th-order high-dimensional signals, one can decompose the tensor signal along different orders and construct one graph for each. The signal is then said to reside on a high-dimensional product graph obtained as the product of all individual factor graphs. Although the MW-GFT is similar to the M-GFT for , there are still notable differences in terms of spectrum. First, MWGSP processes signals without exploiting a given multilayer structure, since a separate graph spectrum arises from each order of the signal. For a multilayer graph with a given structure, such as a physical network with heterogeneous intralayer connections, MWGSP cannot process it naturally, efficiently, and cohesively. The order-wise spectra in MWGSP come from the factor graphs of each order, whereas the M-GSP spectra are calculated from the tensor representation of the whole MLG. Second, MWGSP assumes that all layers reside on a homogeneous factor graph, which restricts the types of MLG structures it can handle. For example, in a spatio-temporal dataset, a product graph formed from spatial and temporal connections assumes the same topology in each layer. However, many practical systems and datasets feature more complex geometric interactions, and M-GSP provides a more intuitive and natural framework for such MLGs. In summary, despite some similarities between MW-GFT and M-GFT in certain scenarios, they serve different purposes and suit different underlying data structures.

VII-D Comparison of Different Decomposition Methods

To compare how accurately different tensor decomposition methods recover the representing tensor, we examine eigen-tensor decomposition, HOSVD, optimal CP decomposition, and Tucker decomposition on MLGs randomly generated from the Erdős–Rényi (ER) random graph . Here, is the number of layers, is the number of nodes in each layer, is the intralayer connection probability, and is the interlayer connection probability. We apply decomposition methods of similar complexity and compute the errors between the reconstructed and original tensors. From Table I, we can see that the eigen-tensor decomposition and HOSVD are far more accurate than OPT-CP and Tucker, with errors at the level of numerical round-off. Generally, eigen-tensor decomposition is better suited to applications emphasizing joint features of layers and entities, whereas HOSVD is effective at separating the individual features of layers and entities. Note that, beyond recovery accuracy, different decompositions may have advantages in capturing different data features, which are better measured with other metrics.

| Decomposition \ Graph structure | ER(0.3,0.3,6,5) | ER(0.5,0.7,11,15) | ER(0.8,0.7,6,15) |
|---|---|---|---|
| Eigen-tensor | 8.3893e-15 | 1.6001e-13 | 3.8347e-13 |
| HOSVD | 1.011e-14 | 1.9563e-13 | 1.9056e-13 |
| OPT-CP | 9.22e-01 | 8.82e-01 | 9.24e-01 |
| Tucker | 9.37e-05 | 9.10e-05 | 9.40e-05 |

VIII Application Examples

We now provide some illustrative application examples within our M-GSP framework.

VIII-A Analysis of Cascading Failure in IoT Systems

Analysis of cascading failure in IoT systems based on spreading processes over multilayer graphs has recently attracted significant interest [39]. By modeling failure propagation in complex systems as shifting over a multilayer graph, M-GSP spectrum theory can help analyze system stability. In this part, we introduce an M-GSP analysis of cascading failure over multilayer cyber-physical systems based on an epidemic model [12]. As shown in Fig. 1, a cyber-physical system with layers and nodes in each layer can be intuitively modeled by an MLG with adjacency tensor .

Here, we consider the susceptible-infectious-susceptible (SIS) model [40] for failure propagation. In the SIS model, each node has two possible states: susceptible (not failed) or infectious (failed). At each time slot, an infectious node may cause failures of other nodes through directed links at certain infection rates, or it may heal itself spontaneously at a self-cure rate. An initial attack makes several nodes infectious.

Since nodes in the same layer correspond to the same functionality, e.g., power transmission, nodes on the same layer share the same self-cure rate and infection rate. The notation of the epidemic model is as follows:

- : self-cure rate for nodes on layer ;
- : infection rate describing the failure propagation probability from nodes on layer to those on layer ;
- : failure probability of the projected node of entity on layer at time ;
- : transition probability that the projected node of entity on layer shifts from the infectious state to the susceptible state at time ;
- : transition probability that the projected node of entity on layer remains susceptible at time .

Since an infectious node becomes susceptible if it cures itself without being infected by its neighbors, we have

| (71) |

Similarly, a susceptible node remains susceptible without being infected by its neighbors. Thus,

| (72) |

The state transition forms a Markov chain, for which we derive the failure probability as

| (73) |

where , with if ; otherwise, .

We can define a transition tensor with elements to characterize the failure propagation in Eq. (73). In steady state, . Moreover,

| (74) |

where is the failure probability of the projected node of entity on layer in steady state. Following [12], we can conclude that if the spectral radius of the transition tensor , which indicates that no node remains failed in steady state. Thus, could serve as an indicator of system robustness. To avoid repetition, we only present a simple example of MLG-based cascading failure analysis here; interested readers may refer to our work [12] for more details. With a better understanding of M-GSP spatial shifting, one can develop more general analyses for various failure models in multilayer IoT systems.

VIII-B Spectral Clustering

Clustering is a widely used tool in a variety of applications such as social network analysis, computer vision, and IoT. Among its many variants, spectral clustering is popular and effective. By modeling a dataset with a conventional graph before spectral clustering, significant performance improvement is possible for structured data [37]. In this part, we introduce M-GSP spectral clustering and demonstrate its application to RGB image segmentation.

To model an RGB image using an MLG, we can directly treat its three color channels as three layers. To reduce the number of nodes for computational efficiency, we first build superpixels for a given image and represent each superpixel as an entity in the multilayer graph. Here, we define the feature of a superpixel according to its RGB pixel values. For interlayer connections, each node connects with its counterparts in other layers. For intralayer connections on layer , we calculate the Gaussian-based distance between two superpixels according to

| (75) |

if ; otherwise, , where is the superpixel value on layer , is an adjustable parameter, and is a predefined threshold. Different layers may have different structures. The threshold is set to the mean of all pairwise distances. As such, an RGB image is modeled as a multiplex graph with and nodes.

We now consider MLG-based spectral clustering. For image segmentation, we focus on the properties of entities (i.e., superpixels), and implement spectral clustering over entity-wise spectrum by proposing Algorithm 1. The previous discussions have been summarized in steps 1-3. In Step 4, different schemes may be used to calculate spectrum, including spectral vector via tensor factorization in Eq. (29), and singular vector in Eq. (48). Step 5 determines based on the largest arithmetic gap in eigenvalues. Traditional clustering methods, such as -means clustering [37], can be carried out in Step 6.
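A compact sketch of Algorithm 1 using the HOSVD-based entity spectrum (the MLG-SVD variant). It assumes synthetic one-dimensional superpixel features per color layer instead of a real image, Gaussian intralayer weights $\exp(-d^2/\sigma^2)$ kept only when the per-layer distance $d$ does not exceed the mean pairwise distance, unit-weight links between each node and its counterparts in other layers, row normalization of the spectral embedding, and a small NumPy k-means with farthest-point initialization. It also applies the largest-gap rule to the singular values of the mode-2 unfolding rather than to eigenvalues. All of these are illustrative choices, not the exact experimental settings.

```python
import numpy as np

def build_mlg(X, sigma=0.1, inter_weight=1.0):
    # X[a, i]: feature of superpixel (entity) i on color layer a
    M, N = X.shape
    A = np.zeros((M, N, M, N))
    idx = np.arange(N)
    for a in range(M):
        d = np.abs(X[a][:, None] - X[a][None, :])       # pairwise distances on layer a
        eps = d[np.triu_indices(N, 1)].mean()           # threshold: mean pairwise distance
        W = np.exp(-d ** 2 / sigma ** 2) * (d <= eps)
        np.fill_diagonal(W, 0.0)                        # no self-loops
        A[a, :, a, :] = W
        for b in range(M):
            if b != a:
                A[a, idx, b, idx] = inter_weight        # link to counterparts in other layers
    return A

def unfold(T, mode):
    return np.moveaxis(T, mode, 0).reshape(T.shape[mode], -1)

def kmeans(Z, K, iters=50):
    # Farthest-point initialization keeps this sketch deterministic
    centers = [Z[0]]
    for _ in range(1, K):
        dist = np.min([((Z - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(Z[dist.argmax()])
    centers = np.array(centers)
    for _ in range(iters):
        labels = ((Z[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1).argmin(axis=1)
        centers = np.array([Z[labels == k].mean(axis=0) if np.any(labels == k) else centers[k]
                            for k in range(K)])
    return labels

def mlg_spectral_clustering(A, K_max=6):
    # Entity-wise singular vectors: left singular vectors of the mode-2 unfolding
    U, sv, _ = np.linalg.svd(unfold(A, 1), full_matrices=False)
    K = int(np.argmax(sv[:K_max] - sv[1:K_max + 1])) + 1   # largest arithmetic gap
    Z = U[:, :K]
    Z = Z / np.maximum(np.linalg.norm(Z, axis=1, keepdims=True), 1e-12)
    return kmeans(Z, K), K

# Synthetic "superpixels": two groups with distinct colors on all three layers
rng = np.random.default_rng(0)
M, N = 3, 20
truth = np.repeat([0, 1], N // 2)
X = np.where(truth == 0, 0.2, 0.8)[None, :] + 0.01 * rng.standard_normal((M, N))
labels, K = mlg_spectral_clustering(build_mlg(X))
print(K, labels)
```

Swapping `np.linalg.svd` of the mode-2 unfolding for a CP factorization would give the MLG-FZ variant, at the cost of an iterative approximation algorithm.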

| Method | BSD300 (N=100, all) | BSD300 (N=300, all) | BSD300 (N=100, coarse) | BSD300 (N=300, coarse) | BSD300 (N=900, coarse) | BSD500 (coarse) |
|---|---|---|---|---|---|---|
| GSP | 0.1237 | 0.1149 | 0.3225 | 0.3087 | 0.3067 | 0.3554 |
| K-MEANS | 0.1293 | 0.1252 | 0.3044 | 0.3105 | 0.3124 | 0.3154 |
| MLG-SVD | 0.1326 | 0.1366 | 0.3344 | 0.3394 | 0.3335 | 0.3743 |
| MLG-CP | 0.1321 | 0.1293 | 0.3195 | 0.3256 | 0.3243 | 0.3641 |
| IIC | 0.2071 | | | | | |
| GS | 0.3658 | | | | | |
| BP | 0.3239 | | | | | |
| DFC | 0.3739 | | | | | |

To test the proposed Algorithm 1, we first compare its results with those of a GSP-based method and traditional -means clustering on the public BCCD blood cell dataset shown in Fig. 8(a). This dataset mainly contains three types of objects, namely white blood cells (WBC), red blood cells (RBC), and platelets (P). We set the number of clusters to 3 and . For GSP-based spectral clustering, we construct graphs based on the Gaussian model, using all 3 color values to form edge connections in a single graph, so there is only a single and in the Gaussian model. For M-GSP, we use the MLG singular vectors (MLG-SVD) and tensor factorization (MLG-FZ) for spectral clustering, separately. Their respective results are compared in Fig. 8, where WBCs are marked yellow, RBCs green, and platelets blue. From the illustrative results, MLG methods exhibit better robustness and are better at detecting regions under noise. Comparing the MLG-based methods, we find MLG-FZ to be less stable than MLG-SVD (HOSVD), partly due to the approximation algorithms used for tensor factorization. Overall, MLG-based methods show more reliable and robust performance than the GSP-based method and -means.

In addition to visual inspection of such images, we further evaluate the proposed methods numerically against several state-of-the-art methods on more complex datasets containing more classes. For this purpose, we test our methods on the BSD300 and BSD500 datasets [41]. BSD300 contains 300 labeled images, and BSD500 contains 500 labeled images. We first cluster each image and then label each cluster according to the best mapping of cluster indices to the ground truth. Numerically, we measure performance by the mIOU (mean Intersection-over-Union), also known as the mean Jaccard index, over all clusters in each image. The Jaccard index between two groups $\mathcal{A}$ and $\mathcal{B}$ is defined as

$$J(\mathcal{A}, \mathcal{B}) = \frac{|\mathcal{A} \cap \mathcal{B}|}{|\mathcal{A} \cup \mathcal{B}|}. \tag{76}$$

A larger mIOU indicates better performance. To better illustrate the results, we consider two dataset setups, i.e., one with fewer classes (coarse) and one containing all images (all). We compare our methods with -means, GSP-based spectral clustering, invariant information clustering (IIC) [42], graph-based segmentation (GS) [43], back propagation (BP) [44], and differentiable feature clustering (DFC) [45]. The best performance is marked in bold. From the results in Table II, we can see that the setups with more classes (first two columns) yield worse performance. There are two natural reasons. First, finding the best mapping of cluster labels is more difficult with more classes. Second, graph-based spectral clustering is sensitive to the number of leading spectral components and to the graph structure. Regardless, MLG-based methods still perform better. Moreover, even when we use the same total number of nodes in the single-layer graph and the multilayer graph for a fairer comparison in terms of complexity, i.e., for the graph and for the MLG, MLG-based methods still outperform graph-based methods in this example application. MLG methods also perform competitively against the state-of-the-art methods. Note that, with proper training, neural network (NN)-based methods may give good results in cases with many clusters, as suggested in [45].
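The evaluation protocol described above (best mapping of cluster indices to ground-truth classes, then the mean IoU, i.e., Jaccard index $|\mathcal{A}\cap\mathcal{B}|/|\mathcal{A}\cup\mathcal{B}|$, over classes) can be sketched as follows. The brute-force search over label permutations is our assumption and is only practical for a handful of clusters; an assignment solver such as the Hungarian algorithm would be the usual choice for more. The sketch also assumes there are no more clusters than classes, and the function names are hypothetical.

```python
import numpy as np
from itertools import permutations

def iou(pred_mask, true_mask):
    # Jaccard index of two boolean masks; two empty masks count as a perfect match
    union = np.logical_or(pred_mask, true_mask).sum()
    return np.logical_and(pred_mask, true_mask).sum() / union if union else 1.0

def best_map_miou(pred, truth):
    # Try every assignment of cluster ids to class ids and keep the best mean IoU
    pred, truth = np.asarray(pred), np.asarray(truth)
    classes = np.unique(truth)
    clusters = np.unique(pred)
    best = 0.0
    for perm in permutations(classes, len(clusters)):
        mapping = dict(zip(clusters, perm))
        mapped = np.array([mapping[c] for c in pred])
        score = np.mean([iou(mapped == c, truth == c) for c in classes])
        best = max(best, score)
    return best

# Cluster ids are arbitrary, so a pure relabeling still scores 1.0
print(best_map_miou([1, 1, 0, 0], [0, 0, 1, 1]))
```

For example, with ground truth `[0, 0, 0, 1]` and prediction `[0, 0, 1, 1]`, the identity mapping gives IoUs of 2/3 and 1/2, i.e., an mIoU of 7/12.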

VIII-C Semi-Supervised Classification

Semi-supervised classification is an important practical application for IoT intelligence. In this application, we apply MLG polynomial filters for semi-supervised classification. Traditional GSP defines an adaptive filter as

| (77) |

where is an adjacency matrix based on pairwise distances or a representing matrix constructed from the adjacency matrix. Here, signals are defined as labels or confidence values of nodes, with unlabeled signals set to zero. To estimate the parameters of , an optimization problem can be formulated to minimize, e.g., the mean square error (MSE) from the ground-truth labels

| (78) |

where is a mapping of filtered signals to their discrete labels. For example, in binary classification, one can assign a label to a filtered signal by comparing it against a threshold (e.g., ). Other objective functions include labeling uncertainty, Laplacian regularization, and total variation. Using the estimated parameters, we can filter the signal again and determine labels for the unlabeled data through the same mapping.

Similarly, in an MLG, we can also apply polynomial filters for label estimation. Given an arbitrary dataset with signal points and features for each point, we can construct an MLG by defining layers based on features and entities based on signal points. The inter- and intralayer connections are calculated by the Gaussian distance with different parameters. Let its adjacency tensor be . A signal is defined by

| (79) |

which is an extended version of a graph signal. Note that we do not necessarily need to order signals by placing zeros at the end; we only write the signal as in Eq. (79) for notational convenience. We now apply polynomial filters to the signals, i.e.,

| (80) |

and

| (81) |

For a filtered signal (), we define a function to map 2-D signals into 1-D by calculating the column-wise mean of , i.e.,

| (82) |

Next, we can define a function on and consider certain objective functions in filter design. To validate the efficacy of polynomial filtering in the MLG framework, we test and for the binary classification problem on the Cleveland Heart Disease Dataset. This dataset contains data points with 13 feature dimensions. We directly build an MLG with nodes in each of the layers. More specifically, we directly use the labels as . For (AF), we set for at least one . Using MSE as the objective function, we apply a greedy algorithm to estimate the parameters . We limit the highest polynomial order to . For (APF), we estimate a classification threshold via the mean of by setting the polynomial order .
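A toy sketch of MLG polynomial filtering for semi-supervised binary classification. Following the construction described above, layers are features and entities are data points; the Gaussian intralayer weights, the constant interlayer weight between an entity's counterparts, the $\pm 1$ labels with zeros for unlabeled points (in the spirit of Eq. (79)), and the hand-picked filter coefficients are our assumptions, standing in for the greedy MSE-based parameter estimation used in the experiments. The column-wise mean over layers follows the mapping described for Eq. (82), and the classification threshold is zero.

```python
import numpy as np

def build_feature_mlg(X, sigma=1.0, inter_weight=0.5):
    # X[i, a]: feature a of data point i -> entity i on layer a (hypothetical construction)
    N, M = X.shape
    A = np.zeros((M, N, M, N))
    idx = np.arange(N)
    for a in range(M):
        d = X[:, a][:, None] - X[:, a][None, :]
        W = np.exp(-d ** 2 / sigma ** 2)           # Gaussian intralayer weights
        np.fill_diagonal(W, 0.0)
        A[a, :, a, :] = W
        for b in range(M):
            if b != a:
                A[a, idx, b, idx] = inter_weight   # same data point across feature layers
    return A

def mlg_poly_filter(A, s, coeffs):
    # sum_{k>=1} coeffs[k-1] * (k-fold shift of s), using the assumed contraction
    out, x = np.zeros_like(s), s.copy()
    for a_k in coeffs:
        x = np.einsum('aibj,bj->ai', A, x)
        out += a_k * x
    return out

rng = np.random.default_rng(1)
N, M = 20, 3
truth = np.repeat([1.0, -1.0], N // 2)
X = np.where(truth > 0, 0.0, 6.0)[:, None] + 0.3 * rng.standard_normal((N, M))
labeled = np.zeros(N, dtype=bool)
labeled[[0, 1, 2, 10, 11, 12]] = True

s = np.tile(np.where(labeled, truth, 0.0), (M, 1))   # labels where known, zeros elsewhere
A = build_feature_mlg(X)
scores = mlg_poly_filter(A, s, coeffs=[1.0, 0.1]).mean(axis=0)   # column-wise mean over layers
pred = np.where(scores >= 0, 1.0, -1.0)
print(pred[~labeled])
```

In practice the coefficients would be fitted on the labeled points, for instance by minimizing the MSE as described above, rather than fixed by hand.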

We compare our methods with the GSP-based method under setups similar to those of the aforementioned examples. The only difference is that we use in M-GSP and in GSP for mapping and classification. We also present the results of label propagation and SVM for comparison. We randomly split the data into training and test sets over 100 rounds. From the results shown in Fig. 9, GSP-based and M-GSP-based methods exhibit better performance than traditional learning algorithms, particularly when the fraction of test samples is large. In general, the M-GSP-based methods achieve the best performance among all methods, owing to their ability to extract ‘multilayer’ features, which could potentially benefit semi-supervised classification tasks in IoT systems.

VIII-D Dynamic Point Cloud Analysis

3D perception plays an important role in the fast-growing fields of IoT devices and cyber-physical systems, and continues to drive progress in advanced point cloud processing [46]. Here, we propose a short-time M-GST method to analyze dynamic point clouds. Given a dynamic point cloud with frames and at most points in each frame, we model it as a multilayer graph with layers and nodes in each layer. More specifically, we test the singular spectrum analysis on the motion sequences of subject 86 in the CMU database [47]. To implement the M-GST, we first divide the motion sequence into several shorter sequences with frames each. Next, for each shorter sequence, we model interlayer connections by connecting points with the same label in successive frames. For points in the same frame, we connect two points based on a Gaussian kernel within a Euclidean threshold [6]. Let be the 3D coordinates of the th point. We assign an edge weight between two points and , which is nonzero only if . Next, we estimate the spatial and temporal basis vectors of each shorter sequence by HOSVD in Eq. (48). Finally, we use the 3D coordinates of all points in each shorter sequence as signals and calculate their M-GST. To illustrate the results of M-GST, we examine a spectrogram similar to that of the short-time Fourier transform (STFT) [48]. In Fig. 10, we transform the signal defined by the coordinates in dimension via M-GST and illustrate the transformation results for the divided frame sequences. From Fig. 10, one can easily identify different motions based on the MLG singular analysis.

To explore motions in dynamic point clouds, we can also apply the entity-wise MLG highpass filters described in Section VI-B to capture critical details of human bodies. Specifically, we select the first 140 frames of ‘walking’ and take the norm of the three coordinates of each point as the signal. We select 5 body joints (entities) in each temporal frame (layer), as shown in Fig. 11. From the results, entities 1 and 2 exhibit periodic patterns linked to leg motion. Entity 3 (head) shows little movement relative to the main body. Entities 4 and 5 (hands) display more irregular patterns, since hand motion is less directly tied to ‘walking’. In summary, the MLG highpass filter can efficiently capture key information about body movement and help reveal what each node (entity) represents. Such information can assist further analysis of dynamic point clouds, including compression and classification.
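The exact entity-wise highpass filter of Section VI-B is not reproduced here. As a hedged stand-in, the sketch below applies an ideal highpass response along the entity dimension of each frame, using the eigenvectors of a combinatorial Laplacian built from an assumed entity adjacency `W` (for instance, a skeleton graph over the selected joints); `entity_highpass` and `keep_from` are hypothetical names.

```python
import numpy as np


def entity_highpass(X, W, keep_from=1):
    """Ideal highpass along the entity dimension, applied frame by frame.

    X: (M, N) signal, rows = layers (frames), columns = entities (joints).
    W: (N, N) symmetric entity adjacency (assumed, e.g. a skeleton graph).
    keep_from: number of lowest graph frequencies to suppress.
    """
    L = np.diag(W.sum(axis=1)) - W            # combinatorial graph Laplacian
    _, E = np.linalg.eigh(L)                  # columns ordered from low to high frequency
    h = (np.arange(W.shape[0]) >= keep_from).astype(float)  # 0 = blocked, 1 = passed
    # Entity-domain transform, spectral masking, and inverse transform.
    return X @ E @ np.diag(h) @ E.T
```

A signal that is constant across entities (pure lowest-frequency content on a connected graph) is removed entirely, while components orthogonal to it pass through unchanged.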

Our future work will target more practical point cloud applications on IoT devices, including point cloud compression, low-complexity point cloud segmentation, and robust denoising.

VIII-E Other Potential Applications in IoT Systems

With the widespread deployment of IoT technologies, system structures are becoming increasingly complex. Traditional graph-based tools are less adept at modeling ‘multilayer’ interactions. The more general M-GSP model provides additional opportunities for IoT applications. Here, we suggest several potential scenarios in IoT systems for M-GSP:

- IoT networks with multilayer structure fit naturally into the MLG model, for which M-GSP tools can be designed for tasks such as intrusion detection, resource management, and state prediction;

- Owing to the dynamic nature of practical IoT networks, even signals on single-layer IoT systems naturally fit a spatial-temporal graph model, which can also be characterized by an MLG. For such dynamic IoT systems, M-GSP tools, such as adaptive filters and MLG learning machines, can be developed for signal prediction and node classification.
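To make the second scenario concrete, a single-layer IoT graph observed over several time steps can be lifted to a multilayer graph by treating each time step as a layer and linking every node to its own copy at adjacent time steps. The sketch below, with a hypothetical function name and an assumed uniform temporal weight, builds the resulting supra-adjacency matrix; reshaping it to `(T, N, T, N)` gives a fourth-order tensor indexed as (layer, node, layer, node).

```python
import numpy as np


def spatiotemporal_supra_adjacency(A, T, temporal_weight=1.0):
    """Replicate an N-node graph A over T time steps (layers) and link each
    node to its own copy at adjacent time steps. Node (t, i) -> row t*N + i."""
    N = A.shape[0]
    # Path graph over time steps: t <-> t + 1.
    C = np.diag(np.ones(T - 1), k=1)
    C = C + C.T
    # Block-diagonal intralayer edges plus identity blocks for interlayer edges.
    return np.kron(np.eye(T), A) + temporal_weight * np.kron(C, np.eye(N))
```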

Overall, the power of MLG in extracting underlying ‘multilayer/multi-level’ structures in IoT systems makes M-GSP a potentially important tool for high-dimensional signal processing and learning tasks.

IX Conclusion

In this work, we present a novel tensor-based framework of multilayer graph signal processing (M-GSP) that naturally generalizes traditional GSP to multilayer graphs. We first present the basic foundations and definitions of M-GSP, including MLG signals, signal shifting, spectrum space, singular space, and filter design. We also provide interpretable discussions and physical insights, through numerical results and examples, to illustrate the strengths and benefits of the M-GSP framework. We further demonstrate the potential of M-GSP in data processing applications through experimental results in several practical scenarios.

With recent advances in tensor algebra and multilayer graph theory, more opportunities are emerging to explore M-GSP and its applications. One interesting problem is the efficient construction of multilayer graphs, where M-GSP spectrum properties could improve the robustness of the graph structure. Another promising direction is to develop multilayer graph neural networks based on M-GSP spectral convolution. Additional future directions include the development of an M-GSP sampling theory and a fast M-GFT.

Unlike those of undirected graphs, the representing tensors of directed graphs are asymmetric, so each layer or entity is characterized by a pair of spectral vectors. We present two ways to compute the spectrum space of a directed multilayer graph:

- Flattening analysis: As with the representing tensor of undirected graphs, we flatten the representing tensor into a second-order supra-matrix and define the spectrum space by the reshaped eigenvectors of the supra-matrix. The flattened matrix (or either of its alternative flattenings) can be decomposed as

(83)

where the factors consist of the matrix of eigenvectors and a diagonal matrix of eigenvalues. We then reshape the eigenvectors, i.e., each column of the eigenvector matrix and each row of its inverse, back into matrix form. Consequently, the original tensor can be decomposed into

(84)

- Tensor factorization: We can also compute the spectrum from a tensor factorization based on the CP decomposition

(85)

(86)

where the number of components equals the tensor rank, and the factor vectors characterize the layers, the entities, and the joint features, respectively. Since there are nodes, it is clear that . Note that, for a single layer, Eq. (85) reduces to

(87)

Moreover, if the two factor matrices in Eq. (87) are orthogonal bases, Eq. (87) takes the same form as the eigendecomposition of a single-layer normal graph. In addition, Eq. (28) is a special case of Eq. (85) when the multilayer graph is undirected.
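The flattening route can be sketched with NumPy's general eigensolver. This assumes the supra-matrix is diagonalizable (true for generic directed weights, but not guaranteed in general) and assumes a (layer, entity) index order for flattening; the function names are hypothetical and the normalization may differ from Eqs. (83)-(84). Each eigenvalue comes with a pair of reshaped spectral matrices, one from a right eigenvector and one from a row of the inverse eigenvector matrix, mirroring the pair of spectral vectors per layer or entity noted for directed graphs.

```python
import numpy as np


def flattened_spectrum(A):
    """Spectrum of a directed MLG by flattening its (M, N, M, N) tensor.

    Assumes the MN x MN supra-matrix is diagonalizable.
    Returns eigenvalues plus right/left spectral matrices of shape (M, N).
    """
    M, N = A.shape[:2]
    A_F = A.reshape(M * N, M * N)   # index (layer, entity) -> layer * N + entity
    evals, V = np.linalg.eig(A_F)   # columns of V: right eigenvectors (generally complex)
    V_inv = np.linalg.inv(V)        # rows of V^{-1}: left eigenvectors
    right = [V[:, k].reshape(M, N) for k in range(M * N)]
    left = [V_inv[k, :].reshape(M, N) for k in range(M * N)]
    return evals, right, left


def reconstruct(evals, right, left):
    """Rebuild the tensor as a sum of eigenvalue-weighted outer products."""
    return sum(lam * np.einsum('ai,bj->aibj', R, Lk)
               for lam, R, Lk in zip(evals, right, left))
```

For a random directed tensor, summing the eigenvalue-weighted outer products of each right/left pair recovers the original tensor up to floating-point error, with a negligible imaginary part.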

Since tensor decomposition is less stable when seeking a factorization of a specific order or when extracting separate features from asymmetric tensors, we defer a more general analysis of directed networks to future work.
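For the tensor-factorization route, alternating least squares (ALS) is one common way to compute a CP decomposition; it is swapped in here for illustration and is not necessarily the solver behind Eqs. (85)-(86). The minimal sketch below fits a rank-`rank` CP model to a fourth-order tensor using hypothetical helper names. Because ALS is a nonconvex iteration whose result can depend on initialization, it also illustrates the stability concern raised above.

```python
import numpy as np


def unfold(T, mode):
    """Mode-n unfolding; remaining axes keep their order, last varies fastest."""
    return np.moveaxis(T, mode, 0).reshape(T.shape[mode], -1)


def khatri_rao(mats):
    """Column-wise Kronecker product; the first matrix varies slowest."""
    out = mats[0]
    for B in mats[1:]:
        out = np.einsum('ir,jr->ijr', out, B).reshape(-1, out.shape[1])
    return out


def cp_als(T, rank, n_iter=100, seed=0):
    """Plain CP-ALS: update one factor at a time by linear least squares."""
    rng = np.random.default_rng(seed)
    factors = [rng.normal(size=(d, rank)) for d in T.shape]
    for _ in range(n_iter):
        for n in range(T.ndim):
            others = [factors[m] for m in range(T.ndim) if m != n]
            # Gram matrix of the Khatri-Rao product = Hadamard product of Grams.
            G = np.ones((rank, rank))
            for B in others:
                G *= B.T @ B
            factors[n] = unfold(T, n) @ khatri_rao(others) @ np.linalg.pinv(G)
    return factors


def cp_reconstruct(factors):
    """Sum of rank-one outer products for a fourth-order CP model."""
    return np.einsum('ar,ir,br,jr->aibj', *factors)
```

An exactly rank-one tensor is recovered after a single sweep, whereas higher-rank fits may need many iterations or restarts from different seeds.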

References

- [1] A. Sandryhaila and J. M. F. Moura, “Discrete signal processing on graphs,” IEEE Transactions on Signal Processing, vol. 61, no. 7, pp. 1644-1656, Apr. 2013.

- [2] D. I. Shuman, S. K. Narang, P. Frossard, A. Ortega and P. Vandergheynst, “The emerging field of signal processing on graphs: extending high-dimensional data analysis to networks and other irregular domains,” in IEEE Signal Processing Magazine, vol. 30, no. 3, pp. 83-98, May 2013.

- [3] A. Ortega, P. Frossard, J. Kovačević, J. M. F. Moura and P. Vandergheynst, “Graph signal processing: overview, challenges, and applications,” in Proceedings of the IEEE, vol. 106, no. 5, pp. 808-828, May 2018.

- [4] A. Sandryhaila and J. M. F. Moura, “Discrete signal processing on graphs: frequency analysis,” in IEEE Transactions on Signal Processing, vol. 62, no. 12, pp. 3042-3054, Jun. 2014.

- [5] S. Chen, A. Sandryhaila, J. M. F. Moura and J. Kovacevic, “Signal denoising on graphs via graph filtering,” 2014 IEEE GlobalSIP, Atlanta, GA, USA, Dec. 2014, pp. 872-876.

- [6] S. Chen, D. Tian, C. Feng, A. Vetro and J. Kovačević, “Fast resampling of three-dimensional point clouds via graphs,” in IEEE Transactions on Signal Processing, vol. 66, no. 3, pp. 666-681, Feb. 2018.

- [7] S. Chen, A. Sandryhaila, J. M. F. Moura and J. Kovačević, “Adaptive graph filtering: multiresolution classification on graphs,” 2013 IEEE Global Conf. on Signal and Info. Processing, Austin, TX, USA, Dec. 2013, pp. 427-430.

- [8] S. Zhang, Z. Ding and S. Cui, “Introducing hypergraph signal processing: theoretical foundation and practical applications,” in IEEE Internet of Things Journal, vol. 7, no. 1, pp. 639-660, Jan. 2020.

- [9] S. Barbarossa and S. Sardellitti, “Topological signal processing over simplicial complexes,” in IEEE Transactions on Signal Processing, vol. 68, pp. 2992-3007, Mar. 2020.

- [10] M. Kivelä, A. Arenas, M. Barthelemy, J. P. Gleeson, Y. Moreno, and M. A. Porter, “Multilayer networks,” in Journal of Complex Networks, vol. 2, no. 3, pp. 203-271, Jul. 2014.

- [11] M. De Domenico, A. Sole-Ribalta, E. Cozzo, M. Kivela, Y. Moreno, M. A. Porter, S. Gomez, and A. Arenas, “Mathematical formulation of multilayer networks,” Physical Review X, vol. 3, no. 4, p. 041022, 2013.

- [12] S. Zhang, H. Zhang, H. Li and S. Cui, “Tensor-based spectral analysis of cascading failures over multilayer complex systems,” in Proc. 56th Allerton Conf. on Communication, Control, and Computing, Monticello, USA, Oct. 2018, pp. 997-1004.

- [13] Z. Huang, C. Wang, M. Stojmenovic, and A. Nayak, “Characterization of cascading failures in interdependent cyberphysical systems,” IEEE Transactions on Computers, vol. 64, no. 8, pp. 2158-2168, Aug. 2015.

- [14] S. V. Buldyrev, R. Parshani, G. Paul, H. E. Stanley, and S. Havlin, “Catastrophic cascade of failures in interdependent networks,” Nature, vol. 464, no. 7291, pp. 1025-1028, Apr. 2010.

- [15] S. Gomez, A. Diaz-Guilera, J. Gomez-Gardenes, C. J. Perez-Vicente, Y. Moreno, and A. Arenas, “Diffusion dynamics on multiplex networks,” Physical Review Letters, vol. 110, no. 2, p. 028701, Jan. 2013.

- [16] F. Grassi, A. Loukas, N. Perraudin and B. Ricaud, “A time-vertex signal processing framework: scalable processing and meaningful representations for time-series on graphs,” in IEEE Trans. Signal Processing, vol. 66, no. 3, pp. 817-829, Feb. 2018.

- [17] P. Das and A. Ortega, “Graph-based skeleton data compression,” 2020 IEEE 22nd International Workshop on Multimedia Signal Processing (MMSP), Tampere, Finland, Sep. 2020, pp. 1-6.

- [18] J. S. Stanley, E. C. Chi and G. Mishne, “Multiway graph signal processing on tensors: integrative analysis of irregular geometries,” in IEEE Signal Processing Magazine, vol. 37, no. 6, pp. 160-173, 2020.

- [19] M. A. Porter, “What is a multilayer network,” Notices of the AMS, vol. 65, no. 11, pp. 1419-1423, Dec. 2018.

- [20] M. De Domenico, V. Nicosia, A. Arenas, and V. Latora, “Structural reducibility of multilayer networks,” Nature Communications, vol. 6, no. 6864, pp. 1-9, Apr. 2015.

- [21] S. Chen, R. Varma, A. Sandryhaila and J. Kovačević, “Discrete signal processing on graphs: sampling theory,” in IEEE Transactions on Signal Processing, vol. 63, no. 24, pp. 6510-6523, Dec. 15, 2015.

- [22] A. Sandryhaila and J. M. F. Moura, “Discrete signal processing on graphs: graph filters,” in Proceedings of 2013 IEEE ICASSP, Vancouver, Canada, May 2013, pp. 6163-6166.

- [23] T. G. Kolda and B. W. Bader, “Tensor decompositions and applications,” SIAM Review, vol. 51, no. 3, pp. 455-500, Aug. 2009.

- [24] H. A. L. Kiers, “Towards a standardized notation and terminology in multiway analysis,” Journal of Chemometrics: A Journal of the Chemometrics Society, vol. 14, no. 3, pp. 105-122, Jan. 2000.

- [25] L. De Lathauwer, B. De Moor, and J. Vandewalle, “A multilinear singular value decomposition,” SIAM Journal on Matrix Analysis and Applications, vol. 21, no. 4, pp. 1253-1278, Jan. 2000.

- [26] A. Afshar, J. C. Ho, B. Dilkina, I. Perros, E. B. Khalil, L. Xiong, and V. Sunderam, “CP-ORTHO: an orthogonal tensor factorization framework for spatio-temporal data,” Proc. 25th ACM SIGSPATIAL Intl. Conf. Advances in Geographic Info. Syst., Redondo Beach, USA, Jan. 2017, p. 67.

- [27] I. V. Oseledets, “Tensor-train decomposition,” SIAM Journal on Scientific Computing, vol. 33, no. 5, pp. 2295-2317, Jun. 2011.

- [28] A. Bogojeska, S. Filiposka, I. Mishkovski and L. Kocarev, “On opinion formation and synchronization in multiplex networks,” 2013 21st TELFOR, Belgrade, Serbia, Nov. 2013, pp. 172-175.

- [29] A. C. Kinsley, G. Rossi, M. J. Silk, and K. VanderWaal, “Multilayer and multiplex networks: an introduction to their use in veterinary epidemiology,” Frontiers in Veterinary Science, vol. 7, p. 596, Sep. 2020.

- [30] M. De Domenico, C. Granell, M. A. Porter, and A. Arenas, “The physics of spreading processes in multilayer networks,” Nature Physics, vol. 12, no. 10, pp. 901-906, Aug. 2016.

- [31] A. Loukas, “Graph reduction with spectral and cut guarantees,” Journal of Machine Learning Research, vol. 20, no. 116, pp. 1-42, Jun. 2019.

- [32] Q. Shi, Y.-M. Cheung, Q. Zhao and H. Lu, “Feature extraction for incomplete data via low-rank tensor decomposition with feature regularization,” in IEEE Transactions on Neural Networks and Learning Systems, vol. 30, no. 6, pp. 1803-1817, Jun. 2019.

- [33] M. Jouni, M. D. Mura and P. Comon, “Hyperspectral image classification using tensor CP decomposition,” IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, Jul. 2019, pp. 1164-1167.

- [34] A. Sandryhaila and J. M. F. Moura, “Discrete signal processing on graphs: graph filters,” in Proceedings of 2013 IEEE ICASSP, Vancouver, Canada, May 2013, pp. 6163-6166.

- [35] S. Chen, F. Cerda, P. Rizzo, J. Bielak, J. H. Garrett and J. Kovačević, “Semi-supervised multiresolution classification using adaptive graph filtering with application to indirect bridge structural health monitoring,” in IEEE Trans. Signal Processing, vol. 62, no. 11, pp. 2879-2893, Jun. 2014.

- [36] S. Mallat, A Wavelet Tour of Signal Processing, 3rd ed. New York, NY, USA: Academic, 2008.

- [37] U. V. Luxburg, “A tutorial on spectral clustering,” Statistics and Computing, vol. 17, no. 4, pp. 395-416, 2007.

- [38] H. Abdi and L. J. Williams, “Principal component analysis,” Wiley Interdisciplinary Reviews: Computational Statistics, vol. 2, no. 4, pp. 433-459, 2010.

- [39] M. Salehi, R. Sharma, M. Marzolla, M. Magnani, P. Siyari and D. Montesi, “Spreading processes in multilayer networks,” in IEEE Transactions on Network Science and Engineering, vol. 2, no. 2, pp. 65-83, Apr.-Jun. 2015.

- [40] M. J. Keeling and P. Rohani, Modeling Infectious Diseases in Humans and Animals. Princeton, NJ, USA: Princeton University Press, 2011.

- [41] D. Martin, C. Fowlkes, D. Tal and J. Malik, “A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics,” Proceedings Eighth IEEE ICCV, Vancouver, BC, Canada, Jul. 2001, pp. 416-423.

- [42] X. Ji, J. F. Henriques, and A. Vedaldi, “Invariant information clustering for unsupervised image classification and segmentation,” in Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, Nov. 2019, pp. 9865-9874.

- [43] P. F. Felzenszwalb and D. P. Huttenlocher, “Efficient graph-based image segmentation,” International Journal of Computer Vision, vol. 59, no. 2, pp. 167-181, 2004.