Siamese Keypoint Prediction Network

for Visual Object Tracking

Abstract

Visual object tracking aims to estimate the location of an arbitrary target in a video sequence given its initial bounding box. By utilizing offline feature learning, the siamese paradigm has recently been the leading framework for high performance tracking. However, current existing siamese trackers either heavily rely on complicated anchor-based detection networks or lack the ability to resist to distractors. In this paper, we propose the Siamese keypoint prediction network (SiamKPN) to address these challenges. Upon a Siamese backbone for feature embedding, SiamKPN benefits from a cascade heatmap strategy for coarse-to-fine prediction modeling. In particular, the strategy is implemented by sequentially shrinking the coverage of the label heatmap along the cascade to apply loose-to-strict intermediate supervisions. During inference, we find the predicted heatmaps of successive stages to be gradually concentrated to the target and reduced to the distractors. SiamKPN performs well against state-of-the-art trackers for visual object tracking on four benchmark datasets including OTB-100, VOT2018, LaSOT and GOT-10k, while running at real-time speed. 111The code is available at https://github.com/ZekuiQin/SiamKPN

1 Introduction

Visual object tracking is the task of predicting the location of an arbitrary target in a video sequence provided only the target’s bounding box in the first frame. Like other computer vision tasks such as image classification, object detection and semantic segmentation, object tracking is very challenging due to appearance variations caused by deformation, viewpoint, scale, occlusion, illumination, etc. Besides, the task is even more difficult to solve when considering the background clutter and similar distractors. In literature, a classical approach to visual object tracking is the discriminative correlation filter [5, 18]. In the deep learning era, however, this approach is difficult to leverage end-to-end deep feature learning for even better performance.

To address this issue, the Siamese learning paradigm [6] has been adopted and developed to harvest from offline deep feature learning [45, 2]. This paradigm learns a shared feature embedding network for both the target region and the search image, thus formulates visual object tracking as a similarity learning problem. The first implementation is SINT [45] which trains the Siamese network by sampling pairs of patches. although effective in tracking accuracy, SINT is far from real-time due to the redundant and inefficient patch-based feature extraction. As a simple modification, SiamFC [2] utilizes fully-convolutional operations to learn the Siamese network and solves the matching problem via an efficient cross-correlation between two feature maps. Along this line of research, CFNet [46] incorporates the correlation filter as a differentiable layer into the Siamese framework. RASNet [50] adopts the attention mechanism to improve the quality of the cross-correlation response. In these methods, the prediction modeling part is restricted to simple architectures which results in limited performance to some extent.

To improve the prediction modeling, SiamRPN [27] introduces the region proposal network (RPN) from the object detection literature [37] upon the Siamese network. DaSiamRPN [59] further handles distractors by augmenting the training data to include semantically hard negative pairs. Recently, the backbone is successfully replaced by ResNet [16] in SiamRPN++ [26] via a spatial aware sampling strategy to maintain useful translation invariance and the depth-wise cross-correlation to stablize training. Another work [57] resorts to the cropping-inside residual (CIR) unit to modify ResNet, Inception [44] and ResNeXt [53] to accomodate deeper and wider backbone networks.

Concurrently, some methods utilize the cascade and the branching strategies to improve tracking accuracy and robustness of SiamRPN. For example, C-RPN [14] presents a Siamese cascade RPN framework to lend strength from stacking multiple RPNs which are coarse-to-finely trained according to adjusted anchor schemes. SPM-Tracker [47] designs a series-parallel structure to fuse a coarse matching stage for robustness to distractors and a fine matching stage for discrimination power. Though effective at large, all the above RPN-based methods heavily depends on the choice of the intricate anchor scheme to achieve reasonable tracking performance.

In this paper, we propose a Siamese keypoint prediction network (SiamKPN) for visual object tracking. The whole network consists of a modified ResNet-50 Siamese backbone for feature learning, and a cascade of compact KPN heads for prediction modeling. In particular, we employ the outputs of multiple layers from the backbone as features, while each KPN head is constructed by standard convolutions and one depth-wise cross-correlation. By enforcing loose-to-strict intermediate supervisions, the cascade of predicted heatmaps can gradually concentrate to the target and reduce to the distractors. Worth noting that, our method is motivated by both the cascade and the anchor-free strategy in the recent object detection literature [7, 25, 58], though there are key differences. Firstly, as far as our knowledge, we are the first to consider the anchor-free scheme in the Siamese paradigm for object tracking. Secondly, the anchor-based cascade method adjusts the anchors for each stage to refine the prediction, while our novel cascade heatmap strategy applies loose-to-strict intermediate supervisions to guide the refinement process.

After offline training with a multi-task loss, SiamKPN provides an effective and efficient tracker without online updating. Specifically, the SiamKPN tracker is robust to similar distractors to some extent, while running at real-time speed. We evaluate the SiamKPN tracker through comprehensive experiments on four tracking benchmarks consisting of OTB-100 [52], VOT2018 [24], LaSOT [12] and GOT-10k [20]. In particular, the SiamKPN tracker with three stages (SiamKPN-3s) performs well against state-of-the-art deep trackers especially when compared with other Siamese trackers. Figure 1 presents some of the representative results on three challenging sequences.

2 Related Works

Aside from the Siamese tracking approach, we briefly review two other main categories of deep visual tracking methods considering the taxonomy of recent surveys [30, 40, 55]. The two categories include feature-extraction tracking and end-to-end tracking.

2.1 Feature-Extraction Tracking

Most of the early deep tracking methods utilize deep networks just for feature extraction and rely on classical approaches for target prediction. For example, CNN-SVM [19] trains a support vector machine to classify positive and negative samples using the network outputs as appearance features. This kind of region-based classification has to resort to sparse sampling for running speed while at the price of declined performance.

A better scheme is to train correlation filters based on the deep features. For example, HCFT [34] adaptively learns correlation filters on multiple convolutional layers to encode the target appearance. C-COT [11] presents a joint learning framework to fuse deep features from different spatial pyramids. ECO [10] introduces a factorized convolution operator, a generative sample space model and a conservative model update strategy to better utilize deep features for robust and efficient tracking. UPDT [4] proposes an adaptive fusion approach to leverage deep and shallow features to improve tracking performance.

2.2 End-to-End Tracking

End-to-end tracking usually learns a unified network to conduct both feature extraction and target prediction. For example, DeepTrack [29] trains a simple CNN model of two convolutions and two fully-connected layers in a purely online manner for visual tracking. MDNet [35] pretrains a shared CNN model and finetunes multiple domain-specific layers during online learning. FCNT [48] employs a pretrained VGG-16 network [39] and learns two additional head networks during visual tracking. STCT [49] exploits ensemble learning to utilize different CNN feature channels. CREST [41] reformulates correlation filter as a network layer with residual learning. DSLT [33] designs a shrinkage loss to improve deep regression tracking.

Recently, ATOM [9] designs the overlap maximization based architecture to predict the intersection over union (IoU) overlap between the target and the proposal boxes. As a modification, DiMP [3] replaces the modulation module with a parametric optimizer to further improve performance. Note that, both ATOM and DiMP handle prediction modeling by leveraging the IoU-Net [21], which still belongs to the anchor-based detection paradigm. In contrast, the prediction modeling in our SiamKPN is fully based on heatmap regressions for center point, target size and offsets estimation.

3 Model Representation

In this section, we first present the basic building blocks of SiamKPN. Then we detail the cascade heatmap scheme and illustrate its effect on coarse-to-fine prediction. Figure 2 presents the whole framework of SiamKPN which consists of a Siamese backbone for feature learning, and a cascade of compact KPN heads for prediction modeling.

3.1 Siamese Backbone

Similar to [26], we employ a modified ResNet-50 to define our Siamese backbone network. To make ResNet-50 suitable for our dense prediction task, we reduce the spatial stride to maintain more features and apply dilated convolutions to increase its receptive field. In particular, the original spatial strides in conv and conv layers are converted to one thus yielding spatially larger feature maps. Meanwhile, the original dilation rates in conv and conv blocks are changed to and to increase the receptive field. Based on this modified ResNet-50 backbone subnetwork, we extract the outputs of conv, conv and conv layers as features, and apply convolutions to adjust channels before feeding them into the following head subnetwork.

3.2 Keypoint Prediction Head

Figure 3 illustrates the architecture of the KPN head. As show in the figure, it is constructed by three convolutions and one depth-wise cross-correlation. More specifically, each KPN head involves the following flow of operations,

| (1) | ||||

| (2) | ||||

| (3) |

where Conv and Corr are abbreviations of convolution and cross-correlation. Besides, and denote the parameters of the two convolutions for processing target and search feature maps respectively, stands for that of an internal adjustment convolution. We have made explicit the stage number to be consistent with the following notations and are actually the output feature maps of the Siamese backbone for the target and search images. Note that the search feature maps are fed into the next stage while being further processed by another two convolutions to obtain the predicted heatmaps .

Aside from the architecture, we would like to elaborate a little on the meanings of different channels in the predicted heatmaps. In our implementation, three types of tasks are defined which include the center point, point offsets and target size estimation respectively. In particular, we use one channel to handle the center point estimation task thus it represents the response map of the target location . Meanwhile, two offsets channels are utilized to address the discretization error due to stride resulting in . Besides, another two channels are employed to estimate the target size in terms of height and width .

3.3 Cascade Heatmap Supervision

Unlike cascading the RPN head in [14] which requires careful adjustments on the anchor scheme sequentially, cascading the KPN head can be easily achieved by a direct repetitive stacking. In addition, the whole architecture supports refinement along the cascade by successively shrinking the variance of the label heatmap. To this end, we have the shrinking version of the Gaussian heatmap which is given as below,

| (4) |

where denote the coordinates of an arbitrary point in the heatmap, represent the coordinates of a target center point, stands for the stage number, controls the shrinkage strength of the variance in the Gaussian function. Hence, as the number of stage increases, the Gaussian heatmap becomes more peaked around the target center point. In other words, the supervision signal is getting stricter along the cascade.

To illustrate the effect of our proposed scheme, we compare it with a naive stacking with fixed variance. Figures 4(a) and 4(b) give an example of the sequentially evolving heatmaps of the two strategies. As shown in the figures, the stacking with fixed variance can slightly help concentrate the predicted heatmap to the target, however it also strengthens the heatmap scores for the similar distractor. In contrast, the shrinking variance scheme can well enhance the heatmap scores in the target and reduce that in the background, thus easier to distinguish the target from the similar distractor as the cascade gets deeper.

It is worth mentioning that, the above scheme can also be applied to point offsets and target size estimation. In particular, the point offsets label are calculated as the discretization gap between the accurate position and a stride-clamped version, i.e., for position and stride . Besides, we define the target size label as the ground-truth height and width around the center point and zero otherwise.

4 Algorithms

In this section, we present the training and tracking algorithms for SiamKPN. Generally speaking, the training of SiamKPN is done in an end-to-end manner with intermediate supervisions, while the SiamKPN tracker is utilized without online adaptation to achieve high accuracy and robustness running in real time.

4.1 Offline Training

During the offline training phase, we prepare the label heatmaps by setting and considering that the search image feature map is in size . Similar to [25, 58], we train each KPN head with a multi-task loss. In particular, the keypoint estimation channel is optimized by a weight-balanced version of the focal loss [31], while the offsets and target size estimation channels are supervised via a smoothed loss.

More specifically, by using Equations (1) and (4), the keypoint estimation loss between the predicted and label heatmap in our framework is defined as below

| (5) |

where denotes the indicator function. Note that we have omitted the stage number for notational simplicity. We set the focal loss hyper-parameters as , and in all our experiments.

Besides, the offsets and target size estimation losses are defined as follows

| (6) | ||||

| (7) | ||||

| (10) |

By combining all the losses across different stages, we obtain the overall training objective as

| (11) |

where and trade-off the balance among the keypoint, offsets and target size estimation. We set and through all our experiments.

4.2 Online Tracking

For the online tracking phase, we first crop around the target and resize it to given its bounding box of the first frame in a video sequence. After going through the backbone and the head subnetworks, the target feature maps are adjusted to obtain several matching templates in size along the cascade and these templates are then kept unchanged during the whole tracking process. Given the predicted target location in the previous frame, we crop an approximately two-times larger image region centered on this location and resize it to . After applying the templates along the cascade, we use the outmost response maps to predict the target location in the current frame.

To get the predicted bounding box, the center point response map is first transformed to the range from 0 to 1 by applying the sigmoid function. By thresholding, the points with higher scores are involved in the subsequent process while the remaining points are ignored. Based on the selected points, the candidate bounding boxes are obtained by considering the corresponding point offsets and target size. To fight against the bounding box changes in adjacent frames, we add penalties for changes in target scale and aspect ratio given by the below function

| (12) |

where is a hyper-parameter, represent the target scales and aspect ratios of adjacent frames respectively. The score of each point is multiplied by its penalty factor to get the penalized scores. Based on the penalized scores, we introduce a Gaussian smooth function to suppress large displacements from the target to yield the final score for each candidate point. To this end, the location with the highest score corresponds to the predicted center of the target. As a post-processing step, the target size of the center point is a weighted average using two adjacent frames. Therefore, the whole process involves three hyper parameters, namely the penalty coefficient, the Gaussian smoothing window coefficient and the target size smoothing coefficient.

5 Experiments

In this section, we evaluate SiamKPN on several visual object tracking benchmarks including OTB-100 [52], VOT2018 [24], LaSOT [12] and GOT-10k [20]. SiamKPN is implemented in Python using PyTorch on GTX 1080Ti GPUs.

5.1 Implementation details

Network Architecture. The modified ResNet-50 backbone outputs the conv, conv and conv layer as features. Their channels are adjusted from 512, 1024 and 2048 to 256, then fed into the KPN cascade. When passing through the cascade, the channels of the target and the search feature maps are maintained at 256, while the spatial sizes are kept as and respectively. Inside of each KPN, the adjustment convolution only operates on the center locations of the target feature map. Hence, the obtained feature map plays as convolutional kernels over the search feature map during the depth-wise cross correlation. The output of each KPN is further processed by two convolutions to give the predicted heatmaps in 5 channels. A layer-wise aggregation is employed to merge the three branches to produce the final prediction of each stage.

Training Datasets. The training datasets consist of the train splits from Youtube-BB [36], LaSOT [12], GOT-10k [20] and COCO [32] datasets. In particular, Youtube-BB provides amounts of sparse-labeled videos, LaSOT and GOT-10k provides frame-by-frame labeled videos, and COCO images are used to increase class diversity. With the ratio 4:2:2:1, we sample 450000 target-search pairs similar to [27] per epoch with several data augmentation operations including random shift, random scale change, random blur, random color jitter and negative samples [59]. Each target-search pair of images are in size and which follows the same dataset curation procedure in [2].

Learning. SiamKPN is trained by the adaptive moment estimation (Adam) optimizer [23] over 5 GPUs with a total batch size of 80. The ResNet-50 backbone is pre-trained on ImageNet [38]. During fine-tuning, only the last three convolutional blocks of the backbone and the whole head subnetwork are trained. In particular, for the head subnetwork, the learning rate is step-wisely decayed from 0.005 to 0.002 for the first 5 epochs and exponentially decayed from 0.002 to 0.0005 for the last 15 epochs. As for the backbone, we set the learning rate to zero for the first 10 epochs and one tenth of that in the head subnetwork for the last 10 epochs. Finally, we set the shrinking factor to 0.9 for the supervision.

Inference. During tracking, we normalize the predicted center point heatmap of the final stage by sigmoid function. Based on this score map, we select top-scored points by thresholding it over 0.15 and clamping the number of points in the range from 8 to 32. As for the three hyper-parameters, a two-level grid search is employed to find the best configuration. The first-level searches each hyper-parameter from 0 to 0.9 with an interval of 0.1 for all the models of the last 10 epochs. Then, we apply the second-level search with an interval of 0.01 around the so-far best configuration.

5.2 Results on OTB-100 [52]

OTB-100 contains 100 representative sequences and has eleven challenge attributes, including background clutters, scale variation, deformation and so on. There are twenty grayscale sequences among them. OTB uses one pass evaluation (OPE) to evaluate trackers with two metrics, precision and area under curve (AUC) of the success plot. The precision plot illustrates the percentage of frames whose distance between the estimated location and ground truth is within the given threshold of 20 pixels. The success plot is defined by the ratios of successful frames by varying the threshold from 0 to 1.

Figure 5 presents the comparison results between SiamKPN-3s and state-of-the-art trackers on OTB-100. As shown in the figure, SiamKPN-3s ranks first both in success plot and precision plot and is ahead of the second-place tracker UPDT by 1.7% in AUC score. Besides, among all the Siamese trackers, SiamKPN-3s outperforms the previous best SiamRPN++ with relative gains of 2.3% and 1.4% in terms of success and precision respectively. This validates that our cascade heatmap architecture can provide more accurate predictions.

5.3 Results on VOT2018 [24]

| EAO | Accuracy | Robustness | EAO | Accuracy | Robustness | ||

|---|---|---|---|---|---|---|---|

| SiamFC [2] | 0.188 | 0.50 | 0.59 | UPDT [4] | 0.378 | 0.536 | 0.184 |

| SiamRPN+ [57] | 0.30 | 0.52 | 0.41 | SiamRPN [27] | 0.383 | 0.586 | 0.276 |

| DaSiamRPN [59] | 0.326 | 0.569 | 0.337 | MFT [24] | 0.385 | 0.505 | 0.140 |

| SPM [47] | 0.338 | 0.58 | 0.30 | LADCF [54] | 0.389 | 0.503 | 0.159 |

| SiamMask [51] | 0.347 | 0.602 | 0.288 | ATOM [9] | 0.401 | 0.590 | 0.204 |

| DRT [43] | 0.356 | 0.519 | 0.201 | SiamRPN++ [26] | 0.414 | 0.600 | 0.234 |

| RCO [24] | 0.376 | 0.507 | 0.155 | DiMP-50 [3] | 0.440 | 0.597 | 0.153 |

| SiamKPN-3s | 0.440 | 0.606 | 0.192 |

VOT2018 contains 60 more challenging sequences. Different from OPE test on OTB, VOT challenge reinitializes trackers at the failure frame. It also has a burn-in period of ten frames, which means ten frames after initialization will be labeled as invalid for accuracy computation. Besides, VOT uses accuracy (A), robustness (R), and expected average overlap (EAO) metrics to evaluate trackers. Particularly, EAO score can comprehensively reflect accuracy and robustness.

We compare SiamKPN-3s with both Siamese trackers and DCF trackers on VOT2018. As shown in Table 1 and Figure 6, SiamKPN-3s ranks first according to EAO, surpassing SiamRPN++ and ATOM with a relative gain of 6.3% and 9.7% respectively. Without any online updating, our method achieves same EAO as DiMP and outperforms it on accuracy. In addition, unlike other Siamese based trackers, SiamKPN-3s improves robustness in great margin. The robustness score of SiamKPN-3s is better than all other Siamese trackers. Even more, SiamKPN-3s has higher robustness score than ATOM, which is a discriminative tracker with online updating. These results are reasonable since the variance-decay strategy can help SiamKPN-3s suppress distractors and improve robustness.

A comparison of quality and speed between SiamKPN-3s and state-of-the-art trackers on VOT2018 is shown in Figure 7. We visualize the Expected Average Overlap (EAO) with respect to the Frames-Per-Seconds (FPS). Note that the FPS axis is in the log scale and we set the real-time threshold at 20 FPS. In the figure, the top-performed CF trackers run at slow speed while Siamese trackers run faster. Our SiamKPN-3s gets best EAO score while runs at 24 FPS in real-time, achieving a better trade-off between performance and speed.

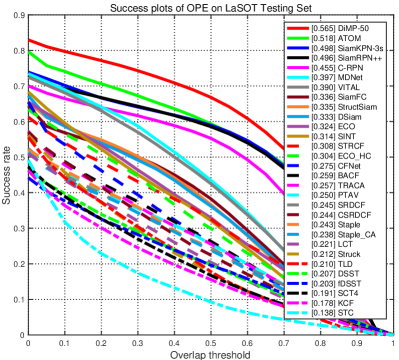

5.4 Results on LaSOT [12]

| AUC | Pre | AUC | Pre | ||

|---|---|---|---|---|---|

| KCF [18] | 0.178 | 0.170 | DSiam [15] | 0.333 | 0.318 |

| Staple [1] | 0.243 | 0.239 | StructSiam [56] | 0.335 | 0.326 |

| PTAV [13] | 0.250 | 0.245 | SiamFC [2] | 0.336 | 0.331 |

| TRACA [8] | 0.257 | 0.230 | VITAL [42] | 0.390 | 0.362 |

| BACF [22] | 0.259 | 0.236 | MDNet [35] | 0.397 | 0.370 |

| CFNet [46] | 0.275 | 0.263 | C-RPN [14] | 0.455 | 0.425 |

| STRCF [28] | 0.308 | 0.296 | SiamRPN++ [26] | 0.496 | 0.467 |

| SINT [45] | 0.314 | 0.294 | ATOM [9] | 0.518 | 0.478 |

| ECO [10] | 0.324 | 0.302 | DiMP-50 [3] | 0.564 | 0.534 |

| SiamKPN-3s | 0.498 | 0.489 |

To further validate the proposed framework on a larger and more challenging dataset, we conduct experiments on LaSOT. The LaSOT dataset provides a large-scale, high-quality dense annotations with 1,400 videos in total and 280 videos in the testing set. LaSOT has 70 categories objects with each containing twenty sequences, and the average video length is 2512 frames, which is useful to evaluate long-term trackers. LaSOT adopts One-Pass Evaluation (OPE) test success and precision similar to OTB. The precision is computed by comparing distance between predicted box and ground truth bounding box in pixels. The success is computed as the Intersection Over Union (IoU) between predicted box and ground truth bounding box.

Table 2 and Figure 8 report the overall comparisons between our SiamKPN-3s tracker and other methods on LaSOT testing set. Worth noting that the robustness measure is essential on LaSOT since zero-overlapping predictions will be included if a tracker loses the target in a long-term sequence. From this point, trackers with online updating shall perform better on LaSOT. Especially, DiMP ranks first in both success and precision. However, among trackers without online updating, our SiamKPN-3s outperforms all other trackers, including SiamRPN++. In particular, SiamKPN-3s achieves a larger precision score that is 4.7% relatively higher than SiamRPN++. Very unexpectedly, SiamKPN-3s even outperforms ATOM which is a recent tracker with online updating. From the above comparison, SiamKPN-3s shows great potentiality for long-term tracking.

5.5 Results on GOT-10k [20]

| MDNet | CF2 | ECO | C-COT | GOTURN | SiamFC | SiamFCv2 | SiamRPN++ | ATOM | DiMP-50 | SiamKPN-3s | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| [35] | [34] | [11] | [10] | [17] | [2] | [46] | [26] | [9] | [3] | ||

| 0.303 | 0.297 | 0.309 | 0.328 | 0.375 | 0.353 | 0.404 | 0.615 | 0.634 | 0.717 | 0.606 | |

| 0.099 | 0.088 | 0.111 | 0.107 | 0.124 | 0.098 | 0.144 | 0.329 | 0.402 | 0.492 | 0.362 | |

| AO | 0.299 | 0.315 | 0.316 | 0.325 | 0.347 | 0.348 | 0.374 | 0.517 | 0.556 | 0.611 | 0.529 |

GOT-10k is also a large-scale dataset containing over 10000 video segments and has 180 test videos. The train and test splits have no overlap in object classes, thus overfitting on particular classes is avoided. In addition, this benchmark requires all trackers to use the train split only for model training while external datasets are forbidden. We strictly follow this protocol and retrain SiamKPN-3s solely on the train split of GOT-10k. Table 3 shows the comparison results between SiamKPN-3s and other methods on the test split of GOT-10k. Not astonishing, DiMP achieves the best performance since online updating is important for tracking unseen class object. However, for trackers without online updating, our SiamKPN-3s achieves the best and AO scores with relative gains of 10% and 2.3% over SiamRPN++.

5.6 Ablation Study

To investigate the impact of different components for our method, we conduct two ablation studies using OTB-100 and VOT2018.

Numbers of Stages: Table 4 presents the performance of SiamKPN by varying the number of stages. With more stages, SiamKPN gathers more strength from the refinement process though in a diminishing manner of the performance gains. It is also not astonishing that the tracking speed is decreased by increasing the number of stages. However, the best performing SiamKPN-3s still runs at real-time speed with 24 FPS. As a side observation, by recalling that in Figure 5 and Table 1, one can find that the basic one-stage SiamKPN achieves comparable results with SiamRPN++ on the OTB-100 and VOT2018 benchmarks.

| One stage | Two stages | Three stages | |

|---|---|---|---|

| SUC on OTB-100 | 0.687 | 0.702 | 0.712 |

| PRE on OTB-100 | 0.906 | 0.916 | 0.927 |

| EAO on VOT2018 | 0.413 | 0.428 | 0.440 |

| Speed on VOT2018 | 40 FPS | 32 FPS | 24 FPS |

Shrinking Variance: During training, the shrinking variance in the heatmap supervision is important for guiding our framework to gradually refine the predictions. To show its effectiveness, we compare the shrinking variance strategy using factor with a fixed variance strategy, i.e. . Table 5 shows the comparison results of SiamKPN-3s by using the two different strategies. One can observe that, with shrinking variance, our tracker can predict more accurately on OTB-100. This observation validates the importance of applying loose-to-strict supervision signals along the cascade.

| w/o var decay | w/ var decay | |

|---|---|---|

| SUC on OTB-100 | 0.705 | 0.712 |

| PRE on OTB-100 | 0.916 | 0.927 |

6 Conclusion

A Siamese keypoint prediction network has been developed for visual object tracking. Although a lot of Siamese networks have been designed in the literature, there still lacks a high-performing scheme to address the challenges of the task. The proposed SiamKPN model reduces this gap, by providing a cascade heatmap scheme to achieve both tracking accuracy and robustness. Training SiamKPN is implemented with loose-to-strict supervisions and based on a multi-task loss in an end-to-end manner. When applying SiamKPN for tracking, the predicted heatmap of successive stages has found to be gradually concentrated to the target while reduced to the distractors. Based purely on offline training, SiamKPN performs favorably against state-of-the-art Siamese trackers and those methods with online learning modules, while running at real-time speed.

References

- [1] Bertinetto, L., Valmadre, J., Golodetz, S., Miksik, O., Torr, P.H.S.: Staple: Complementary learners for real-time tracking. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 1401–1409 (2016)

- [2] Bertinetto, L., Valmadre, J., Henriques, J.F., Vedaldi, A., Torr, P.H.: Fully-convolutional siamese networks for object tracking. In: European Conference on Computer Vision (ECCV) Workshops. pp. 850–865 (2016)

- [3] Bhat, G., Danelljan, M., Gool, L.V., Timofte, R.: Learning discriminative model prediction for tracking. In: IEEE International Conference on Computer Vision (ICCV). pp. 6182–6191 (2019)

- [4] Bhat, G., Johnander, J., Danelljan, M., Shahbaz Khan, F., Felsberg, M.: Unveiling the power of deep tracking. In: European Conference on Computer Vision (ECCV). pp. 483–498 (2018)

- [5] Bolme, D.S., Beveridge, J.R., Draper, B.A., Lui, Y.M.: Visual object tracking using adaptive correlation filters. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 2544–2550. IEEE (2010)

- [6] Bromley, J., Guyon, I., LeCun, Y., Säckinger, E., Shah, R.: Signature verification using a “Siamese” time delay neural network. In: Advances in Neural Information Processing Systems (NeurIPS). pp. 737–744 (1994)

- [7] Cai, Z., Vasconcelos, N.: Cascade R-CNN: Delving into high quality object detection. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 6154–6162 (2018)

- [8] Choi, J., Jin Chang, H., Fischer, T., Yun, S., Lee, K., Jeong, J., Demiris, Y., Young Choi, J.: Context-aware deep feature compression for high-speed visual tracking. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 479–488 (2018)

- [9] Danelljan, M., Bhat, G., Khan, F.S., Felsberg, M.: ATOM: Accurate tracking by overlap maximization. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 4660–4669 (2019)

- [10] Danelljan, M., Bhat, G., Shahbaz Khan, F., Felsberg, M.: ECO: Efficient convolution operators for tracking. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 6931–6939 (2017)

- [11] Danelljan, M., Robinson, A., Khan, F.S., Felsberg, M.: Beyond correlation filters: Learning continuous convolution operators for visual tracking. In: European Conference on Computer Vision. pp. 472–488. Springer (2016)

- [12] Fan, H., Lin, L., Yang, F., Chu, P., Deng, G., Yu, S., Bai, H., Xu, Y., Liao, C., Ling, H.: LaSOT: A high-quality benchmark for large-scale single object tracking. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 5374–5383 (2019)

- [13] Fan, H., Ling, H.: Parallel tracking and verifying: A framework for real-time and high accuracy visual tracking. In: IEEE International Conference on Computer Vision (ICCV). pp. 5487–5495 (2017)

- [14] Fan, H., Ling, H.: Siamese cascaded region proposal networks for real-time visual tracking. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 7952–7961 (2019)

- [15] Guo, Q., Feng, W., Zhou, C., Huang, R., Wan, L., Wang, S.: Learning dynamic siamese network for visual object tracking. In: IEEE International Conference on Computer Vision (ICCV). pp. 1781–1789 (2017)

- [16] He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 770–778 (2016)

- [17] Held, D., Thrun, S., Savarese, S.: Learning to track at 100 fps with deep regression networks. In: European Conference on Computer Vision. pp. 749–765. Springer (2016)

- [18] Henriques, J.F., Caseiro, R., Martins, P., Batista, J.: High-speed tracking with kernelized correlation filters. IEEE Transactions on Pattern Analysis and Machine Intelligence 37(3), 583–596 (2014)

- [19] Hong, S., You, T., Kwak, S., Han, B.: Online tracking by learning discriminative saliency map with convolutional neural network. In: International Conference on Machine Learning (ICML). pp. 597–606 (2015)

- [20] Huang, L., Zhao, X., Huang, K.: GOT-10k: A large high-diversity benchmark for generic object tracking in the wild. arXiv preprint arXiv:1810.11981 (2018)

- [21] Jiang, B., Luo, R., Mao, J., Xiao, T., Jiang, Y.: Acquisition of localization confidence for accurate object detection. In: European Conference on Computer Vision (ECCV). pp. 784–799 (2018)

- [22] Kiani Galoogahi, H., Fagg, A., Lucey, S.: Learning background-aware correlation filters for visual tracking. In: IEEE International Conference on Computer Vision (ICCV). pp. 1144–1152 (2017)

- [23] Kingma, D., Ba, J.: Adam: A method for stochastic optimization. In: International Conference on Learning Representations (ICLR) (2015)

- [24] Kristan, M., Leonardis, A., et al.: The sixth Visual Object Tracking VOT2018 challenge results. In: European Conference on Computer Vision (ECCV) Workshops. pp. 3–53 (2018)

- [25] Law, H., Deng, J.: CornerNet: Detecting objects as paired keypoints. In: European Conference on Computer Vision (ECCV). pp. 734–750 (2018)

- [26] Li, B., Wu, W., Wang, Q., Zhang, F., Xing, J., Yan, J.: SiamRPN++: Evolution of siamese visual tracking with very deep networks. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 4282–4291 (2019)

- [27] Li, B., Yan, J., Wu, W., Zhu, Z., Hu, X.: High performance visual tracking with siamese region proposal network. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 8971–8980 (2018)

- [28] Li, F., Tian, C., Zuo, W., Zhang, L., Yang, M.H.: Learning spatial-temporal regularized correlation filters for visual tracking. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 4904–4913 (2018)

- [29] Li, H., Li, Y., Porikli, F.: DeepTrack: Learning discriminative feature representations online for robust visual tracking. IEEE Transactions on Image Processing 25(4), 1834–1848 (2015)

- [30] Li, P., Wang, D., Wang, L., Lu, H.: Deep visual tracking: Review and experimental comparison. Pattern Recognition 76, 323–338 (2018)

- [31] Lin, T.Y., Goyal, P., Girshick, R., He, K., Dollár, P.: Focal loss for dense object detection. In: IEEE International Conference on Computer Vision (ICCV). pp. 2980–2988 (2017)

- [32] Lin, T.Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., Dollár, P., Zitnick, C.L.: Microsoft COCO: Common objects in context. In: European Conference on Computer Vision (ECCV). pp. 740–755. Springer (2014)

- [33] Lu, X., Ma, C., Ni, B., Yang, X., Reid, I., Yang, M.H.: Deep regression tracking with shrinkage loss. In: European Conference on Computer Vision (ECCV). pp. 369–386 (2018)

- [34] Ma, C., Huang, J.B., Yang, X., Yang, M.H.: Hierarchical convolutional features for visual tracking. In: IEEE International Conference on Computer Vision (ICCV). pp. 3074–3082 (2015)

- [35] Nam, H., Han, B.: Learning multi-domain convolutional neural networks for visual tracking. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 4293–4302 (2016)

- [36] Real, E., Shlens, J., Mazzocchi, S., Pan, X., Vanhoucke, V.: YouTube-BoundingBoxes: A large high-precision human-annotated data set for object detection in video. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 5296–5305 (2017)

- [37] Ren, S., He, K., Girshick, R., Sun, J.: Faster R-CNN: Towards real-time object detection with region proposal networks. In: Advances in Neural Information Processing Systems (NeurIPS). pp. 91–99 (2015)

- [38] Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., Huang, Z., Karpathy, A., Khosla, A., Bernstein, M., et al.: ImageNet large scale visual recognition challenge. International Journal of Computer Vision 115(3), 211–252 (2015)

- [39] Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. In: International Conference on Learning Representations (ICLR) (2015)

- [40] Smeulders, A.W., Chu, D.M., Cucchiara, R., Calderara, S., Dehghan, A., Shah, M.: Visual tracking: An experimental survey. IEEE Transactions on Pattern Analysis and Machine Intelligence 36(7), 1442–1468 (2013)

- [41] Song, Y., Ma, C., Gong, L., Zhang, J., Lau, R.W.H., Yang, M.H.: Crest: Convolutional residual learning for visual tracking. In: IEEE International Conference on Computer Vision (ICCV). pp. 2574–2583 (2017)

- [42] Song, Y., Ma, C., Wu, X., Gong, L., Bao, L., Zuo, W., Shen, C., Lau, R.W., Yang, M.H.: Vital: Visual tracking via adversarial learning. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 8990–8999 (2018)

- [43] Sun, C., Wang, D., Lu, H., Yang, M.H.: Correlation tracking via joint discrimination and reliability learning. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 489–497 (2018)

- [44] Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., Erhan, D., Vanhoucke, V., Rabinovich, A.: Going deeper with convolutions. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 1–9 (2015)

- [45] Tao, R., Gavves, E., Smeulders, A.W.: Siamese instance search for tracking. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 1420–1429 (2016)

- [46] Valmadre, J., Bertinetto, L., Henriques, J., Vedaldi, A., Torr, P.H.S.: End-to-end representation learning for correlation filter based tracking. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 5000–5008 (2017)

- [47] Wang, G., Luo, C., Xiong, Z., Zeng, W.: SPM-Tracker: Series-parallel matching for real-time visual object tracking. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 3643–3652 (2019)

- [48] Wang, L., Ouyang, W., Wang, X., Lu, H.: Visual tracking with fully convolutional networks. In: IEEE International Conference on Computer Vision (ICCV) (December 2015)

- [49] Wang, L., Ouyang, W., Wang, X., Lu, H.: STCT: Sequentially training convolutional networks for visual tracking. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 1373–1381 (2016)

- [50] Wang, Q., Teng, Z., Xing, J., Gao, J., Hu, W., Maybank, S.: Learning attentions: Residual attentional siamese network for high performance online visual tracking. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 4854–4863 (2018)

- [51] Wang, Q., Zhang, L., Bertinetto, L., Hu, W., Torr, P.H.: Fast online object tracking and segmentation: A unifying approach. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 1328–1338 (2019)

- [52] Wu, Y., Lim, J., Yang, M.H.: Object tracking benchmark. IEEE Transactions on Pattern Analysis and Machine Intelligence 37(9), 1834–1848 (2015)

- [53] Xie, S., Girshick, R., Dollár, P., Tu, Z., He, K.: Aggregated residual transformations for deep neural networks. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 1492–1500 (2017)

- [54] Xu, T., Feng, Z.H., Wu, X.J., Kittler, J.: Learning adaptive discriminative correlation filters via temporal consistency preserving spatial feature selection for robust visual object tracking. IEEE Transactions on Image Processing (2019)

- [55] Yilmaz, A., Javed, O., Shah, M.: Object tracking: A survey. ACM Computing Surveys (CSUR) 38(4), 13 (2006)

- [56] Zhang, Y., Wang, L., Qi, J., Wang, D., Feng, M., Lu, H.: Structured siamese network for real-time visual tracking. In: European Conference on Computer Vision (ECCV). pp. 355–370 (2018)

- [57] Zhang, Z., Peng, H.: Deeper and wider siamese networks for real-time visual tracking. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 4591–4600 (2019)

- [58] Zhou, X., Wang, D., Krähenbühl, P.: Objects as points. arXiv preprint arXiv:1904.07850 (2019)

- [59] Zhu, Z., Wang, Q., Li, B., Wu, W., Yan, J., Hu, W.: Distractor-aware siamese networks for visual object tracking. In: European Conference on Computer Vision (ECCV). pp. 103–119 (2018)