1{zhuoyuan, xcyan, urtasun, yumer}@uber.com 2[email protected]

ShapeAdv: Generating Shape-Aware

Adversarial 3D Point Clouds

Abstract

We introduce ShapeAdv, a novel framework to study shape-aware adversarial perturbations that reflect the underlying shape variations (e.g., geometric deformations and structural differences) in the 3D point cloud space. We develop shape-aware adversarial 3D point cloud attacks by leveraging the learned latent space of a point cloud auto-encoder where the adversarial noise is applied in the latent space. Specifically, we propose three different variants including an exemplar-based one by guiding the shape deformation with auxiliary data, such that the generated point cloud resembles the shape morphing between objects in the same category. Different from prior works, the resulting adversarial 3D point clouds reflect the shape variations in the 3D point cloud space while still being close to the original one. In addition, experimental evaluations on the ModelNet40 benchmark demonstrate that our adversaries are more difficult to defend with existing point cloud defense methods and exhibit a higher attack transferability across classifiers. Our shape-aware adversarial attacks are orthogonal to existing point cloud based attacks and shed light on the vulnerability of 3D deep neural networks.

1 Introduction

Deep neural networks (DNNs) [35, 37, 53] have achieved state-of-the-art performance in various 3D perception tasks [6, 24, 34, 36, 43, 69] and have been widely applied in safety-critical applications including robotic grasping [28, 62] and autonomous driving [44, 63]. However, several recent studies [7, 48, 57, 59] raised concerns regarding the vulnerability of DNNs when applied to 3D sensory data such as point clouds. For example, carefully crafted adversarial 3D point clouds can induce arbitrary prediction errors in modern platforms. Recent works [26, 57, 65] proposed several heuristics to construct adversarial 3D point clouds by injecting adversarial noise directly to the victim object. Among the heuristics, attacks based on independent point shifting or addition can be resolved by statistical denoising or outlier removal mechanisms [68], while attacks based on adding adversarial clusters or objects fail to capture shape variations of a certain 3D point cloud due to significant changes to the true shape of the victim object. Indeed, modeling shape variations of a 3D point cloud is challenging as it requires reasoning about the structural factors (e.g., two chair legs are connected by a horizontal bar), geometric factors (e.g., length of the chair leg), and the interactions in between. At the same time, understanding the failure modes of DNNs towards adversarial 3D perturbations which reflect shape variations in the point cloud space is an important but under-explored.

In this work, we are interested in generating shape-aware adversarial point perturbations to an existing 3D point cloud. More specifically, we would like our perturbations to reflect certain shape variations in local geometry, global geometry, or structures while keeping the overall shape close to the original 3D point cloud. We propose a two-stage framework to generate shape-aware adversarial examples or ShapeAdv by leveraging a widely used point cloud auto-encoder network [1, 18, 64]. First, we learn to encode the high-dimensional 3D point cloud data into a lower-dimensional compact representation in the latent space through unsupervised shape reconstruction. As the auto-encoder network is well trained to reconstruct input shape, the learned latent space contains essential geometry and structure information about the 3D object shape that approximates the shape manifold. Second, we propose to add perturbation in the latent space and use our decoder network to propagate the signal to the 3D point cloud space. Consequently, a small perturbation on the latent space would cause a small change on the point space, so that the resulting point cloud is still close to the input shape. This is different from the previous approaches which directly add an adversarial noise to the point space. Our method is also supported by the empirical finding [1, 15, 55, 64] that linear interpolation on the learned latent space can produce smooth and continuous shape morphing.

Furthermore, we extend the proposed framework by incorporating auxiliary point clouds from the same category as the input when generating adversarial attacks. As multiple shapes are used to restrict the shape of the adversary, the generated adversarial shape resembles both the input and auxiliary point clouds. Finally, we demonstrate that auto-encoders can also be used for adversarial defense for 3D point clouds.

To show the effectiveness of our method, we attack the commonly used PointNet classifier [35] on the ModelNet40 [43, 56] dataset. We compare our proposed attacks with previous methods: adding or shifting points, and adding clusters or objects [57]. While all attacks can achieve attack success rate, we observe that our attacks are hard to defend against compared to simple baseline attacks which introduce only a few outliers; on the other hand, adding clusters or objects significantly change the geometry of the input. We experiment with two different auto-encoder networks, namely, the MLP baseline [1] and AtlasNet [18]. Our results show that AtlasNet preserves the detail of shapes as it generates adversarial point clouds by deforming the local surfaces which can be partially removed by existing defense methods. In comparison, the MLP baseline generates a global geometric deformation as the adversarial perturbation, which makes the defense challenging. We consider this as a trade-off between the expressive power of point decoder and the strength of adversarial perturbations. Finally, we show that our proposed attacks improve black-box transferability to other models, such as PointNet++ [37] and DGCNN [53].

To summarize, the contributions of our shape-aware adversarial 3D point cloud generation are as follows:

-

•

We introduce a novel framework for generating shape-aware adversarial 3D point clouds by injecting an adversarial noise in the latent space of a point auto-encoder.

-

•

We propose three different variants to generate such shape-aware attacks with different additional constraint: by minimizing the difference between the input and adversary in either the point space or latent space, and also by incorporating auxiliary point clouds from the same category with the input in the constraint.

-

•

We illustrate that the injected shape-aware perturbation reflects certain shape variations (e.g., geometric deformation or structural difference) in the 3D point cloud space.

-

•

Compared to existing point cloud attack methods that directly operate in the raw point cloud space, the proposed attack methods are significantly more difficult to defend against with existing defense methods and exhibit higher attack transferability.

2 Related work

Deep 3D shape generation.

Generative 3D shape modeling [4, 5] has become a popular research topic in machine learning, computer vision, and graphics. The past few years have witnessed tremendous advances in deep generative modeling of 3D voxels [11, 15, 54, 55, 56, 61], point clouds [1, 12, 14, 18, 20, 29, 64, 66], and surface meshes [16, 23, 25, 27, 50, 51, 52, 70]. Wu et al. [56] introduced the first deep generative models on 3D volumetric shapes using stacked Restricted Boltzmann Machines with 3D convolutions operating on 3D occupancy grids. [15, 54, 55, 61] extended this framework with encoder-decoder 3D convolutional architectures and in-network LSTM modules [11].

Due to several practical challenges (e.g., memory and computationally expensive 3D convolution operations) in generating high-fidelity 3D voxels, most recent works have shifted the focus to generative modeling of point clouds. Compared to the voxel representation, operations in point clouds are computationally more efficient; for example, the dimension of voxel fits 3D convolution, while that of point clouds fits 1D convolution. However, point clouds require permutation-invariant operations because the order of points can be random, which limits a straightforward transfer from other domains. Fan et al. [12] introduced a deep learning-based framework to synthesize 3D point cloud from a single image using the Chamfer distance and Earth mover’s distance to better preserve the shape invariance under point permutation. Achlioptas et al. [1] proposed a two-step training method for an auto-encoder, for learning latent representation space of 3D point clouds and generating 3D point clouds from the latent representation. To improve the MLP based point auto-encoder, Groueix et al. [18] generates 3D point clouds in a piece-wise planar fashion where each planar surface is deformed by a separate neural network. Our proposed shape-aware adversarial method can be crafted with different architectures, as point auto-encoder is a widely used architecture for shape reconstruction and generation. Besides 3D point cloud representation, the idea of generating an adversarial attack on the latent space can potentially be applicable to other 3D data representations, such as voxel grids and surface meshes.

Adversarial examples.

Early studies [17, 49] have revealed the vulnerability of modern image classifiers by carefully crafted adversarial examples, which can induce arbitrary prediction errors while being imperceptible by human. This is achieved by optimizing an objective that maximizes the prediction errors while restricting the perturbation magnitude under an norm. Adversarial attacks have gained tremendous attentions in machine learning, computer vision, and security communities with two parallel efforts focused on generating adversarial attacks [9, 31, 32, 33, 58, 67] and devising effective defenses against such mechanisms [8, 19, 21, 30, 39, 41, 42, 60, 62] on natural images.

While adversarial examples in the 2D image domain have been extensively studied, generating 3D adversarial examples is relatively under-explored. Xiao et al. [59] introduced a method to inject adversarial 3D shape deformations to a victim object so as to fool the 2D object detectors when projecting into the 2D image space using a differentiable renderer. Cao et al. [7] further investigated machine learning-based LiDAR spoofing attacks in the real world. Recently, several works [26, 48, 57, 65] have investigated the problem of generating adversarial signals on the raw 3D point clouds. For example, among the attacks proposed by [57], adding or shifting few points is easily defended by outlier removal methods [26, 68], and adding a few clusters or objects makes a significant difference from the original shape, such that an appropriate preprocessing or segmentation stage can remove them.

Unlike prior works that generate adversarial noises directly in the point cloud space, our work injects adversarial perturbations on the learned shape manifold using latent representation of a point auto-encoder, such that the adversary reflects the shape variations of a 3D point cloud. Our work is also related to recent studies on semantic or “unrestricted” adversarial examples in the image domain [2, 3, 13, 22, 38, 46, 47] using generative models. To the best of our knowledge, the proposed shape-aware adversarial point cloud generation framework is the first study on 3D point cloud adversaries in a shape-aware fashion using the latent space of a point cloud auto-encoder.

3 ShapeAdv: Shape-Aware Adversarial Attacks

In this section, we describe our proposed ShapeAdv attacks and defense methods.

3.1 Background

Adversarial attacks on 3D point clouds.

A 3D point cloud is a set of points sampled from the surface of an object, where each point is represented by a tuple of Cartesian coordinates (XYZ) in the point space . Let be a classification model, which takes a 3D point cloud as an input and predicts its label . Since the number of points and their order vary depending on the sampling, is designed to be invariant to the dimension of , usually achieved by a global max pool or average pool operation [35, 53].

Given a point cloud data and its ground truth label , the goal of an adversarial attack is to lead to misclassify the label of an input , by finding an adversary satisfying:

| (1) |

where is a distance metric to measure the similarity between the original data and the optimized adversary . In targeted attacks, a target label is provided such that the adversary is optimized to satisfy , while is sufficient in untargeted attacks.

In adversarial attacks on 3D point clouds, two types of strategies have been studied [26, 48, 57, 65]: adversarial point perturbation and adversarial point generation. Adversarial point perturbation adds a small perturbation to , i.e., , while adversarial point generation augments points to , i.e., .

Learned latent space as an approximation to the shape manifold.

Different from prior works, we propose to inject an adversarial perturbation to the latent representation of a point cloud. To achieve this, we take an auto-encoder , which consists of an encoder network and a decoder network . In this setting, given a data and its label , we optimize the adversary in the latent space to be similar to the encoded data , such that it leads the model to misclassify the decoded output . Similar to Eq. (1), we optimize the following:

| (2) |

where is a distance metric to measure the similarity between the input and its adversary in the latent space. For this purpose, any auto-encoder is applicable, but a high-quality auto-encoder would produce better quality of the generated adversarial attack, i.e., should be small enough for any .

3.2 Shape-Aware Adversarial Attacks in the Latent Space

Since Eq. (2) could not be directly optimized via gradient descent, we reformulate the optimization problem as:

| (3) |

where controls the balance between the adversarial loss and the similarity between the input and the adversary, is the distance metric, is the latent representation of the input data, and is the adversary in the point space . Here, any adversarial loss can be applicable, but we follow the most effective formulation of the CW attack [9], as described below: let be the logits (or unnormalized probabilities) predicted by the model that is from the label , such that . Then, for a targeted attack,

| (4) |

and for an untargeted attack,

| (5) |

where is the hinge loss. In the following, we specify different choices of the regularization in our approach.

Shape-aware attack in the latent space.

It is straightforward to minimize the difference between the input and the adversary in the latent space. We then solve the following optimization problem:

| (6) |

where is the decoded adversary. We regularize and to be close with respect to distance. To distinguish with other proposed methods, we refer to this method as ShapeAdv-Latent .

Shape-aware attack in the point space.

In ShapeAdv-Latent , we implicitly assume that local similarity is preserved: i.e., as long as is similar to , the adversary is also similar to . Alternatively, we can directly constrain the similarity between the decoded adversary and the input , as our point decoder is differentiable. More specifically, let and be the collection of points in the input and adversary, respectively. We apply the squared Chamfer distance as the metric, which is permutation-invariant and effective in approximating the shape manifold of 3D point cloud data [12]:111Strictly speaking, the Chamfer distance is not a valid metric because it does not satisfy the triangle inequality. However, it is empirically shown to be effective.

| (7) |

where is the decoded adversary. We refer to this method as ShapeAdv-Chamfer.

Shape-aware attack with auxiliary point clouds.

In the above two methods, we optimize the adversarial perturbation in any direction in the latent space. However, if the quality of the latent space is not perfect, the perturbation in the latent space would cause an undesirable perturbation in the point space. To avoid such perturbation in the point space, we propose to leverage auxiliary point clouds sampled from the category of the input to guide the direction in the latent space. Specifically, given a pair of data and its label , we find nearest training data whose label is .222We use the Chamfer distance in the point space to find the nearest neighbors. Let be the input data and its nearest neighbor training data be . We then solve the following optimization problem:

| (8) |

where is the decoded adversary. The coefficient in the second term could be a tunable hyperparameter to give different weights to the input and auxiliary point clouds, but we could not find significant differences in terms of the attack performance quantitatively. We refer to this method as ShapeAdv-Auxiliary.

To analyze the adversary generated by this attack, suppose we have only one auxiliary point cloud. Then minimizing the second term in Eq. (8) forces to be similar to both the input data and the auxiliary point cloud , and its optimal value is expected to be a linear interpolation between them. We note that this holds in a valid metric only. Since the Chamfer distance is not a valid metric, the triangle inequality does not hold in general, such that the optimal value may not be a linear interpolation of and . However, we use the Chamfer distance here, because we found that its qualitative results empirically follow our analysis and show a good performance. In general, when we take auxiliary point clouds, the adversary would expected to be in a convex polyhedron . Therefore, this formulation also serves as a method to analyze the robustness of the classifier under shape deformation in the same class; in other words, if such an adversary exists, then the model is not robust to shape deformation.

On the other hand, we can restrict the search space of adversary in the latent space to be a convex polyhedron . In this case, we no longer need the second term in Eq. (8) and is formulated as a convex combination of the input and the auxiliary point clouds, i.e., where is optimizable. However, we empirically found that such formulation is not very effective.

3.3 Shape-Aware Adversarial Defense

While we focused on using point auto-encoders to generate attacks, they can also be used to defend against adversarial point clouds. First, as our latent space approximates the shape manifold, adversarial attacks out of the shape manifold can be projected on the shape manifold when it is encoded by , such that the output decoded by may not have an adversarial perturbation; in other words, auto-encoding has an effect of noise removal, if the noise is out of the shape manifold. Therefore, given a test input which can be either a clean or adversarial data, is the defended output, such that the prediction after defense can be expressed as

| (9) |

Similar defense mechanisms have also been studied in recent works [19, 21, 41, 42, 45, 47], which mostly focused on evaluating their method in a small 2D image dataset, such as MNIST. In contrast to the 2D image representation, the 3D point cloud we study in this paper is a collection of unordered points irregularly distributed in the 3D, which makes the defense challenging. We refer to this defense method as PointAE Defense.

4 Experimental Evaluation

In this section, we compare our shape-aware attacks with the state-of-the-art attack methods for 3D point clouds against different defense mechanisms.

4.1 Experimental Setup

Datasets and 3D models.

We conduct our experiments on the 3D point cloud classification benchmark aligned ModelNet40 [43, 56], and follow the same experimental settings as reported in [57]. This dataset consists of CAD models from common object categories, where objects are used for training and are for testing.333The compared methods and ours do not require validation, as they are optimization-based. We uniformly sample points on the surface of each object in the point space in the Cartesian coordinate (XYZ), and normalize them into a unit ball by centering and scaling. To evaluate the performance of adversarial attacks, we follow the protocol in [57] for targeted attack, which uses victim-target pairs sampled from 10 major categories (airplane, bed, bookshelf, bottle, chair, monitor, sofa, table, toilet, and vase). For untargeted attacks, we use all the testing examples.

The victim model for adversarial attack is the popular PointNet [35], which has shown the state-of-the-art performance in many tasks. For the attack transferability analysis, we also use PointNet++ [37] and DGCNN [53] as additional 3D point cloud classification models. We train the 3D point cloud classification models by minimizing the standard cross-entropy loss on aligned ModelNet40.

To learn the mapping from raw 3D point cloud data to the latent space, we leverage the point cloud auto-encoder (PointAE) with a latent space with 128 dimension approximating the shape manifold. Here, we consider two different architectures of PointAE proposed in different literature: MLP [1] and AtlasNet [18]. We pre-train PointAE on ShapeNetCore [10]; because the distribution of categories in ModelNet40 is highly imbalanced, training only on ModelNet40 leads to a severe performance downgrade. We note that PointAE-MLP was also trained on ShapeNetCore in [1]. In summary, we pre-train the PointAE on ShapeNetCore [10] using more than object instances and fine-tune the auto-encoder on the aligned ModelNet40 dataset for epochs. We find that such shape pre-training is crucial to learn a good latent space with a low reconstruction error, and effective shape deformation and interpolation in the latent space.

Attack and defense methods.

We compare the state-of-the-art adversarial attacks for 3D point clouds with our proposed methods. Point shifting attack (Shift-Point) [57] perturbs the victim point cloud on the point space while minimizing the perturbation magnitude under the constraint. Point addition attacks [57] introduce new points to the original victim point cloud, where we compare three strategies: (1) adding a number of points independently (Add-Point), (2) adding several clusters of points (Add-Cluster), and (3) adding several point clouds from another object belonging to a different category (Add-Object).

As described in Section 3, we introduce three variants of the shape-aware adversarial attacks by injecting adversarial perturbations in the latent space. We additionally constrain the perturbation by (1) minimizing the distance in the latent space (ShapeAdv-Latent ), (2) minimizing the Chamfer distance in the point space (ShapeAdv-Chamfer), and (3) minimizing the Chamfer distance from the auxiliary point clouds sampled from the category of the input as well as the input (ShapeAdv-Auxiliary).

For the defense experiments, we consider the sparse outlier removal (SOR) [40, 68] and our proposed auto-encoder based method. SOR is a simple defense method that removes outlier points in the point space, which is shown to be effective in [68]; for each point, it first computes the average distance from its -nearest neighbors, and then filters out points if the average distance is larger than , where and are the mean and standard deviation of the average distances, and and are hyperparameters. We set the hyperparameters to be and , which is more conservative than the original paper [68] and shows a strong defense performance against the adversarial point clouds. In PointAE Defense, as described in Section 3.3, each point cloud in the test dataset is auto-encoded and then considered as an input of the classification model, as in Eq. (9). Specifically, for each point cloud in the test dataset, we use the reconstruction-based method described in Section 3.3: using the direct output from the encoder model for decoding as in Eq. (9), referred as PointAE Defense. To show the classification performance after applying defense mechanisms, we report the test accuracy with the reconstructed point clouds in Table 1.

Evaluation metrics.

We use both attack success rate as well as the perturbation magnitude measure (using the Chamfer distance) as our major evaluation metrics. For attack success rate, we compute the number of successfully attacked object instances divided by the total number. For the Chamfer distance measure, we report the quantitative results in the best, average, and worst performance over category-wise performances, as some category pairs are more difficult to attack than others. The best case represents the most easily attacked victim class, average case for attacking all classes, and worst case for the most difficult class.

| Attack Type | Targeted | Untargeted | ||

| Attack / Decoder | MLP | AtlasNet | MLP | AtlasNet |

| Latent (Eq. (6)) | 2.99 / 8.56 / 14.75 | 1.97 / 4.29 / 6.59 | 2.99 / 6.26 / 11.12 | 1.44 / 3.26 / 5.52 |

| Chamfer (Eq. (7)) | 2.14 / 4.55 / 8.82 | 1.46 / 3.15 / 5.15 | 1.36 / 3.47 / 5.68 | 1.00 / 2.70 / 4.42 |

| Auxiliary (Eq. (8)) | 2.47 / 5.16 / 9.61 | 2.25 / 4.12 / 6.10 | 1.46 / 4.61 / 8.27 | 1.20 / 3.86 / 6.46 |

| ShapeAdv | Airplane Bottle | Bottle Chair | Chair Airplane | |||

| Front | Side | Front | Top | Front | Side | |

| No Attack |

|

|

|

|

|

|

| MLP-Latent |

|

|

|

|

|

|

| MLP-Chamfer |

|

|

|

|

|

|

| Atlas-Latent |

|

|

|

|

|

|

| Atlas-Chamfer |

|

|

|

|

|

|

| ShapeAdv Auxiliary | Bottle | Chair | Monitor | Sofa | Table | Vase |

| Input |

|

|

|

|

|

|

| Auxiliary |

|

|

|

|

|

|

| PointAE-MLP |

|

|

|

|

|

|

| PointAE-AtlasNet |

|

|

|

|

|

|

4.2 Experimental Results

Overall analysis: shape-aware adversarial attacks.

First, we measure the attack success rate of our ShapeAdv methods under both targeted and untargeted settings, and found that the attack success rate is 100% for all methods. We report the perturbation magnitude in the Chamfer distance in Table 2. Overall, adversarial point clouds generated by the AtlasNet [18] have relatively small perturbations compared to the MLP baseline [1]. Compared to the other variants, our ShapeAdv-Chamfer achieves the lowest distance, as it is directly used in the optimization objective when generating the adversarial attacks. In particular, our ShapeAdv-Auxiliary generates attacks that deviate further from the original shape as we use one more auxiliary point cloud to guide the shape deformation, such that the distance to the original shape is not always minimized. As in Table 2, the trend is consistent in both targeted and untargeted settings, while untargeted attacks are relatively easier to accomplish than targeted ones.

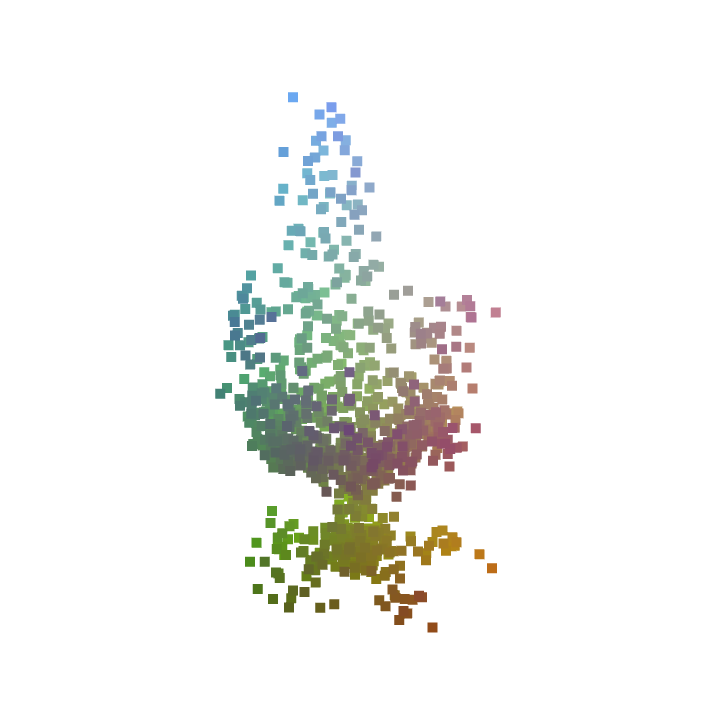

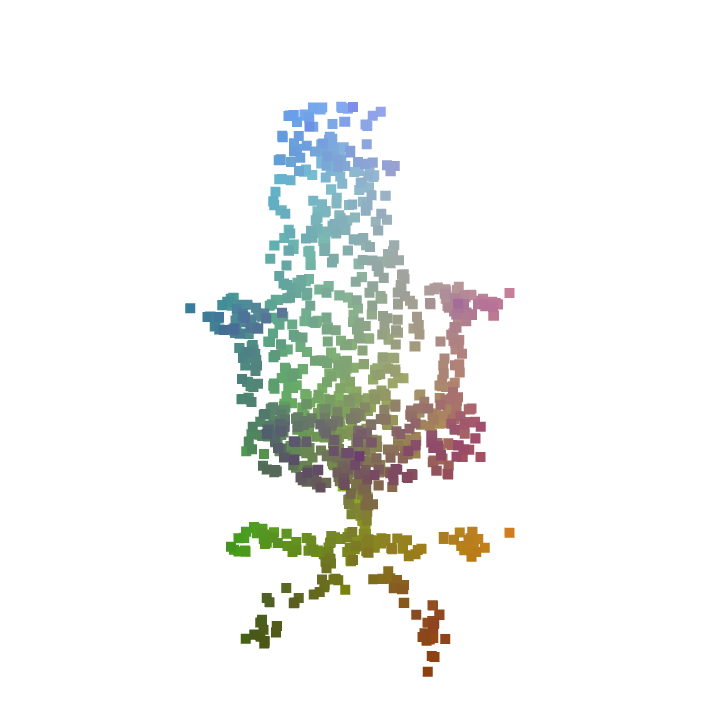

In Figure 2, we visualize our adversarial examples for targeted attacks. Both methods generate perceptually plausible 3D point clouds similar to the shape of the original one with noticeable shape deformations. AtlasNet tends to preserve local geometric details of the original point cloud; the MLP baseline tends to generate a coarse shape of the same semantic category while being slightly different from the original point cloud. Compared to ShapeAdv-Chamfer which uses the Chamfer distance objective, examples generated by ShapeAdv-Latent have relatively larger deformations, which is consistent with the distance measure in our quantitative evaluation. Taking account of its better visualization, we use AtlasNet for ShapeAdv below, unless otherwise stated.

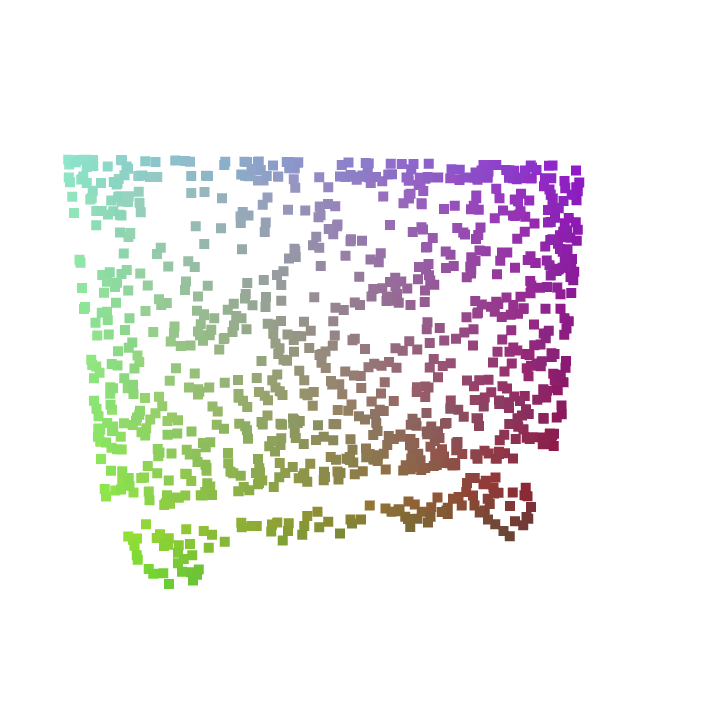

Deformation attacks with auxiliary point clouds.

As seen in Figure 3, the deformed shapes guided by auxiliary point clouds under adversarial attacks are perceptually similar to both input and auxiliary point cloud in one or several aspects. Moreover, this attack works in a more controllable fashion in which the deformation can be guided by the geometry and structure of auxiliary point cloud. For example, in the third column of Figure 3, the method is able to generate an adversarial chair without chair arms from the input chair with two arms. This can be potentially useful when analyzing the robustness of modern 3D point cloud classifiers given a specific shape deformation.

| Attack Method | Airplane Bottle | Bottle Chair | Chair Airplane | |||

| Front | Side | Front | Top | Front | Side | |

| No Attack |

|

|

|

|

|

|

| Shift-Point [57] |

|

|

|

|

|

|

| Add-Point [57] |

|

|

|

|

|

|

| Add-Cluster [57] |

|

|

|

|

|

|

| Add-Object [57] |

|

|

|

|

|

|

| ShapeAdv Latent (Ours) |

|

|

|

|

|

|

| ShapeAdv Chamfer (Ours) |

|

|

|

|

|

|

| Evaluation Metric | Attack Success Rate (%) () | Chamfer distance () () | |||||

| Attack / Defense | SOR [40] | AE-MLP | AE-AtlasNet | No Def. | SOR [40] | AE-MLP | AE-AtlasNet |

| Shift-Point [57] | 10.0 | 9.6 | 9.5 | 0.15 | 0.78 | 4.51 | 3.91 |

| Add-Point [57] | 7.8 | 10.3 | 9.8 | 0.09 | 0.62 | 5.13 | 4.20 |

| Add-Cluster [57] | 63.5 | 53.2 | 34.0 | 17.60 | 19.21 | 22.23 | 12.42 |

| Add-Object [57] | 68.3 | 37.1 | 31.0 | 12.16 | 13.86 | 15.36 | 10.81 |

| MLP-Latent (Eq. (6)) | 34.4 | 24.7 | 23.4 | 8.56 | 9.60 | 9.84 | 9.37 |

| MLP-Chamfer (Eq. (7)) | 20.8 | 14.6 | 15.1 | 4.55 | 5.63 | 5.74 | 5.59 |

| Atlas-Latent (Eq. (6)) | 15.8 | 11.2 | 12.7 | 4.29 | 4.82 | 5.59 | 5.02 |

| Atlas-Chamfer (Eq. (7)) | 15.4 | 10.8 | 10.4 | 3.15 | 3.90 | 5.03 | 4.38 |

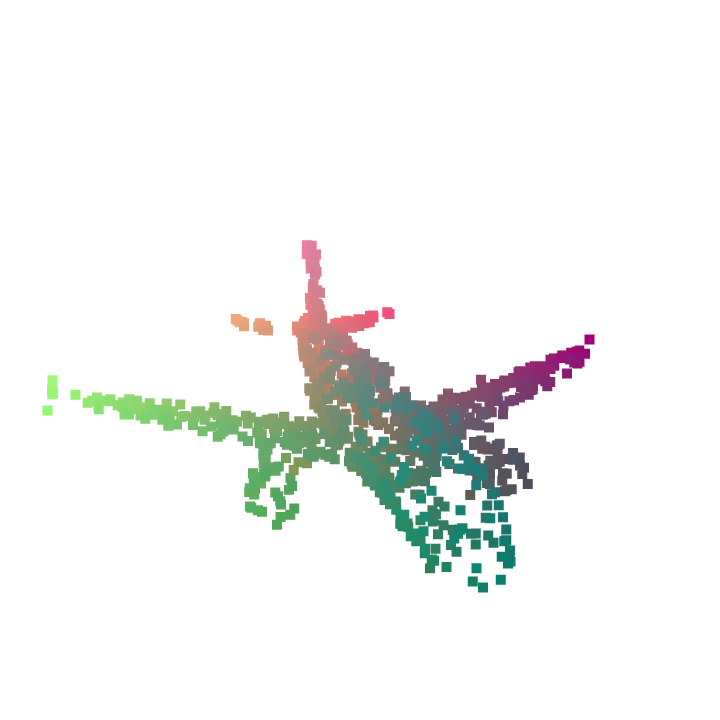

Shape-aware adversarial attacks against defense methods.

We compare our shape-aware attacks with existing point cloud attack methods proposed by [57] and visualize the results in Figure 4. We evaluate the proposed shape-aware attacks against two defense methods including sparse outlier removal (SOR) [68] and our auto-encoder based defense method, which can also be interpreted as Defense-GAN [41] on 3D point clouds. SOR is designed to remove point outliers from the raw point cloud while our AE-based method performs the defense through the auto-encoder. As in Table 3, our shape-aware adversarial attacks are generally more difficult to defend than the existing point perturbation attacks and attacks based on adding individual points. Compared to them, adversarial point clouds generated by our shape-aware attacks have noticeable geometric deformations as we do not use point-to-point correspondences to constrain the perturbation. Also, our methods exhibit different statistics compared to attacks by adding points from point clusters or objects, as the resulting shapes from those methods are severely deformed from the origin ones (the Chamfer distance is higher). In summary, we believe that our shape-aware adversarial attacks are orthogonal to existing adversarial attacks on point clouds.

Comparisons between the two architectures (MLP and AtlasNet) demonstrate that ShapeAdv generated by the MLP baseline is relatively harder to defend against compared to AtlasNet. First, this implies that AtlasNet tends to inject adversarial perturbations on the local geometry surfaces which can be partially removed by the existing defense methods, while the MLP baseline tends to inject global deformations as adversarial perturbations which makes the defense challenging. When it comes to 3D point cloud reconstruction, AtlasNet is more expressive and detail-preserving with a patch-based decoder that constructs the piecewise planar surfaces, where each planar surface is independent from each other (each patch is generated by a separate deformation-based decoder with different model weights). However, the detail-preserving adversaries can be possibly considered as outliers by the point defense methods. Second, existing defense methods consistently fail at defending against adversaries with global geometric deformation or structure difference while being effective against adversary with only local geometric deformation.

Shape-aware attack transferability.

Regarding the safety-critical concerns related to the PointNet model [35], we further extend our study on black-box attack transferability. Basically, we study how well the attacks generated by our shape-aware attack methods can be transferred to other classifiers such as PointNet++ [37] and DGCNN [53]. As in Table 4, our latent-space attacks exhibit stronger transferability compared to all existing methods. Here, we exclude the comparisons with methods that generate additional clusters or objects as they lead to severe shape deformation to the original object shapes.

| PointNet++ | DGCNN | PointNet∗ | |

| Shift-Point [57] | 3.9% | 1.9% | 5.5% |

| Add-Point [57] | 3.6% | 7.4% | 7.8% |

| MLP-Latent (Eq. (6)) | 24.7% | 23.5% | 13.9% |

| MLP-Chamfer (Eq. (7)) | 16.6% | 17.4% | 10.8% |

| MLP-Auxiliary (Eq. (8)) | 13.5% | 14.8% | 6.5% |

| Atlas-Latent (Eq. (6)) | 13.6% | 14.2% | 11.6% |

| Atlas-Chamfer (Eq. (7)) | 13.9% | 13.0% | 11.0% |

| Atlas-Auxiliary (Eq. (8)) | 10.8% | 11.8% | 7.7% |

5 Conclusion

In this paper, we have studied the robustness of 3D point cloud classifiers by exploiting the shape-aware adversarial attacks. In particular, we proposed to inject adversarial perturbations to the learned latent space of a point auto-encoder as an approximation to the shape manifold. By introducing shape deformations in the latent space, we are able to explore shape variations of a certain class in the adversarial point cloud generation. We then extended our shape-aware attacks by guiding the shape deformation with auxiliary point clouds, which reflects the process of shape morphing in the latent space. Moreoever, we have shown that the learned latent representation is directly applicable to a defense method against perturbations away from it. We believe our shape-aware adversarial attacks are orthogonal to existing attacks generated directly on the point cloud, which can broaden the landscape of adversarial robustness on 3D point cloud. Besides 3D point cloud representation, the idea of generating adversary on the latent space can potentially be applicable to other 3D data representations, such as voxel grid and surface mesh.

References

- [1] Achlioptas, P., Diamanti, O., Mitliagkas, I., Guibas, L.: Learning representations and generative models for 3d point clouds. In: ICML (2018)

- [2] Alzantot, M., Sharma, Y., Elgohary, A., Ho, B.J., Srivastava, M., Chang, K.W.: Generating natural language adversarial examples. arXiv preprint arXiv:1804.07998 (2018)

- [3] Bhattad, A., Chong, M.J., Liang, K., Li, B., Forsyth, D.A.: Big but imperceptible adversarial perturbations via semantic manipulation. ICLR (2020)

- [4] Blanz, V., Vetter, T.: A morphable model for the synthesis of 3d faces. In: ACM SIGGRAPH. vol. 99, pp. 187–194 (1999)

- [5] Bogo, F., Kanazawa, A., Lassner, C., Gehler, P., Romero, J., Black, M.J.: Keep it smpl: Automatic estimation of 3d human pose and shape from a single image. In: ECCV (2016)

- [6] Brock, A., Lim, T., Ritchie, J.M., Weston, N.: Generative and discriminative voxel modeling with convolutional neural networks. arXiv preprint arXiv:1608.04236 (2016)

- [7] Cao, Y., Xiao, C., Cyr, B., Zhou, Y., Park, W., Rampazzi, S., Chen, Q.A., Fu, K., Mao, Z.M.: Adversarial sensor attack on lidar-based perception in autonomous driving. arXiv preprint arXiv:1907.06826 (2019)

- [8] Carlini, N., Wagner, D.: Defensive distillation is not robust to adversarial examples. arXiv preprint arXiv:1607.04311 (2016)

- [9] Carlini, N., Wagner, D.: Towards evaluating the robustness of neural networks. In: 2017 IEEE Symposium on Security and Privacy (S&P). IEEE (2017)

- [10] Chang, A.X., Funkhouser, T., Guibas, L., Hanrahan, P., Huang, Q., Li, Z., Savarese, S., Savva, M., Song, S., Su, H., et al.: Shapenet: An information-rich 3d model repository. arXiv preprint arXiv:1512.03012 (2015)

- [11] Choy, C.B., Xu, D., Gwak, J., Chen, K., Savarese, S.: 3d-r2n2: A unified approach for single and multi-view 3d object reconstruction. In: ECCV (2016)

- [12] Fan, H., Su, H., Guibas, L.J.: A point set generation network for 3d object reconstruction from a single image. In: CVPR (2017)

- [13] Frosst, N., Sabour, S., Hinton, G.: Darccc: Detecting adversaries by reconstruction from class conditional capsules. arXiv preprint arXiv:1811.06969 (2018)

- [14] Gadelha, M., Wang, R., Maji, S.: Multiresolution tree networks for 3d point cloud processing. In: ECCV (2018)

- [15] Girdhar, R., Fouhey, D.F., Rodriguez, M., Gupta, A.: Learning a predictable and generative vector representation for objects. In: ECCV (2016)

- [16] Gkioxari, G., Malik, J., Johnson, J.: Mesh r-cnn. In: ICCV (2019)

- [17] Goodfellow, I.J., Shlens, J., Szegedy, C.: Explaining and harnessing adversarial examples. In: ICLR (2015)

- [18] Groueix, T., Fisher, M., Kim, V.G., Russell, B.C., Aubry, M.: Atlasnet: A papier-mâché approach to learning 3d surface generation. In: CVPR (2018)

- [19] Jalal, A., Ilyas, A., Daskalakis, C., Dimakis, A.G.: The robust manifold defense: Adversarial training using generative models. arXiv preprint arXiv:1712.09196 (2017)

- [20] Jiang, L., Shi, S., Qi, X., Jia, J.: Gal: Geometric adversarial loss for single-view 3d-object reconstruction. In: ECCV (2018)

- [21] Jin, G., Shen, S., Zhang, D., Dai, F., Zhang, Y.: Ape-gan: Adversarial perturbation elimination with gan. In: ICASSP (2019)

- [22] Joshi, A., Mukherjee, A., Sarkar, S., Hegde, C.: Semantic adversarial attacks: Parametric transformations that fool deep classifiers. In: ICCV (2019)

- [23] Kanazawa, A., Black, M.J., Jacobs, D.W., Malik, J.: End-to-end recovery of human shape and pose. In: CVPR (2018)

- [24] Landrieu, L., Simonovsky, M.: Large-scale point cloud semantic segmentation with superpoint graphs. In: CVPR (2018)

- [25] Li, J., Xu, K., Chaudhuri, S., Yumer, E., Zhang, H., Guibas, L.: Grass: Generative recursive autoencoders for shape structures. ACM Transactions on Graphics (TOG) 36(4), 52 (2017)

- [26] Liu, D., Yu, R., Su, H.: Extending adversarial attacks and defenses to deep 3d point cloud classifiers. In: ICIP (2019)

- [27] Liu, S., Li, T., Chen, W., Li, H.: Soft rasterizer: A differentiable renderer for image-based 3d reasoning. arXiv preprint arXiv:1904.01786 (2019)

- [28] Mahler, J., Liang, J., Niyaz, S., Laskey, M., Doan, R., Liu, X., Ojea, J.A., Goldberg, K.: Dex-net 2.0: Deep learning to plan robust grasps with synthetic point clouds and analytic grasp metrics. arXiv preprint arXiv:1703.09312 (2017)

- [29] Mandikal, P., Navaneet, K., Agarwal, M., Babu, R.V.: 3d-lmnet: Latent embedding matching for accurate and diverse 3d point cloud reconstruction from a single image. In: BMVC (2018)

- [30] Meng, D., Chen, H.: Magnet: a two-pronged defense against adversarial examples. In: Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security. pp. 135–147. ACM (2017)

- [31] Moosavi-Dezfooli, S.M., Fawzi, A., Frossard, P.: Deepfool: a simple and accurate method to fool deep neural networks. In: CVPR (2016)

- [32] Papernot, N., McDaniel, P., Goodfellow, I., Jha, S., Celik, Z.B., Swami, A.: Practical black-box attacks against machine learning. In: Proceedings of the 2017 ACM on Asia conference on computer and communications security. pp. 506–519. ACM (2017)

- [33] Papernot, N., McDaniel, P., Jha, S., Fredrikson, M., Celik, Z.B., Swami, A.: The limitations of deep learning in adversarial settings. In: Security and Privacy (EuroS&P), 2016 IEEE European Symposium on (2016)

- [34] Qi, C.R., Liu, W., Wu, C., Su, H., Guibas, L.J.: Frustum pointnets for 3d object detection from rgb-d data. In: CVPR (2018)

- [35] Qi, C.R., Su, H., Mo, K., Guibas, L.J.: Pointnet: Deep learning on point sets for 3d classification and segmentation. In: CVPR (2017)

- [36] Qi, C.R., Su, H., Nießner, M., Dai, A., Yan, M., Guibas, L.J.: Volumetric and multi-view cnns for object classification on 3d data. In: CVPR (2016)

- [37] Qi, C.R., Yi, L., Su, H., Guibas, L.J.: Pointnet++: Deep hierarchical feature learning on point sets in a metric space. In: NeurIPS (2017)

- [38] Qiu, H., Xiao, C., Yang, L., Yan, X., Lee, H., Li, B.: Semanticadv: Generating adversarial examples via attribute-conditional image editing. arXiv preprint arXiv:1906.07927 (2019)

- [39] Ranjan, R., Sankaranarayanan, S., Castillo, C.D., Chellappa, R.: Improving network robustness against adversarial attacks with compact convolution. arXiv preprint arXiv:1712.00699 (2017)

- [40] Rusu, R.B., Marton, Z.C., Blodow, N., Dolha, M., Beetz, M.: Towards 3d point cloud based object maps for household environments. Robotics and Autonomous Systems 56(11), 927–941 (2008)

- [41] Samangouei, P., Kabkab, M., Chellappa, R.: Defense-gan: Protecting classifiers against adversarial attacks using generative models. In: ICLR (2018)

- [42] Schott, L., Rauber, J., Bethge, M., Brendel, W.: Towards the first adversarially robust neural network model on mnist. In: ICLR (2019)

- [43] Sedaghat, N., Zolfaghari, M., Amiri, E., Brox, T.: Orientation-boosted voxel nets for 3d object recognition. In: British Machine Vision Conference (BMVC) (2017)

- [44] Shi, S., Wang, X., Li, H.: Pointrcnn: 3d object proposal generation and detection from point cloud. In: CVPR (2019)

- [45] Song, Y., Kim, T., Nowozin, S., Ermon, S., Kushman, N.: Pixeldefend: Leveraging generative models to understand and defend against adversarial examples. In: ICLR (2018)

- [46] Song, Y., Shu, R., Kushman, N., Ermon, S.: Constructing unrestricted adversarial examples with generative models. In: NeurIPS (2018)

- [47] Stutz, D., Hein, M., Schiele, B.: Disentangling adversarial robustness and generalization. In: CVPR (2019)

- [48] Su, J.C., Gadelha, M., Wang, R., Maji, S.: A deeper look at 3d shape classifiers. In: ECCV Workshop (2018)

- [49] Szegedy, C., Zaremba, W., Sutskever, I., Bruna, J., Erhan, D., Goodfellow, I., Fergus, R.: Intriguing properties of neural networks. ICLR (2014)

- [50] Tewari, A., Zollhofer, M., Kim, H., Garrido, P., Bernard, F., Perez, P., Theobalt, C.: Mofa: Model-based deep convolutional face autoencoder for unsupervised monocular reconstruction. In: ICCV (2017)

- [51] Tung, H.Y., Tung, H.W., Yumer, E., Fragkiadaki, K.: Self-supervised learning of motion capture. In: NeurIPS (2017)

- [52] Varol, G., Ceylan, D., Russell, B., Yang, J., Yumer, E., Laptev, I., Schmid, C.: Bodynet: Volumetric inference of 3d human body shapes. In: ECCV (2018)

- [53] Wang, Y., Sun, Y., Liu, Z., Sarma, S.E., Bronstein, M.M., Solomon, J.M.: Dynamic graph cnn for learning on point clouds. ACM Transactions on Graphics (TOG) (2019)

- [54] Wu, J., Wang, Y., Xue, T., Sun, X., Freeman, B., Tenenbaum, J.: Marrnet: 3d shape reconstruction via 2.5 d sketches. In: NeurIPS (2017)

- [55] Wu, J., Zhang, C., Xue, T., Freeman, B., Tenenbaum, J.: Learning a probabilistic latent space of object shapes via 3d generative-adversarial modeling. In: NeurIPS (2016)

- [56] Wu, Z., Song, S., Khosla, A., Yu, F., Zhang, L., Tang, X., Xiao, J.: 3d shapenets: A deep representation for volumetric shapes. In: CVPR (2015)

- [57] Xiang, C., Qi, C.R., Li, B.: Generating 3d adversarial point clouds. In: CVPR (2019)

- [58] Xiao, C., Li, B., Zhu, J.Y., He, W., Liu, M., Song, D.: Generating adversarial examples with adversarial networks. In: IJCAI (2018)

- [59] Xiao, C., Yang, D., Li, B., Deng, J., Liu, M.: Meshadv: Adversarial meshes for visual recognition. In: CVPR (2019)

- [60] Xu, W., Evans, D., Qi, Y.: Feature squeezing: Detecting adversarial examples in deep neural networks. arXiv preprint arXiv:1704.01155 (2017)

- [61] Yan, X., Yang, J., Yumer, E., Guo, Y., Lee, H.: Perspective transformer nets: Learning single-view 3d object reconstruction without 3d supervision. In: NeurIPS (2016)

- [62] Yan, Z., Guo, Y., Zhang, C.: Deep defense: Training dnns with improved adversarial robustness. In: NeurIPS (2018)

- [63] Yang, B., Luo, W., Urtasun, R.: Pixor: Real-time 3d object detection from point clouds. In: CVPR (2018)

- [64] Yang, G., Huang, X., Hao, Z., Liu, M.Y., Belongie, S., Hariharan, B.: Pointflow: 3d point cloud generation with continuous normalizing flows. In: ICCV (2019)

- [65] Yang, J., Zhang, Q., Fang, R., Ni, B., Liu, J., Tian, Q.: Adversarial attack and defense on point sets. arXiv preprint arXiv:1902.10899 (2019)

- [66] Yang, Y., Feng, C., Shen, Y., Tian, D.: Foldingnet: Point cloud auto-encoder via deep grid deformation. In: CVPR (2018)

- [67] Zhao, Z., Dua, D., Singh, S.: Generating natural adversarial examples. ICLR (2018)

- [68] Zhou, H., Chen, K., Zhang, W., Fang, H., Zhou, W., Yu, N.: Dup-net: Denoiser and upsampler network for 3d adversarial point clouds defense. In: ICCV (2019)

- [69] Zhou, Y., Tuzel, O.: Voxelnet: End-to-end learning for point cloud based 3d object detection. In: CVPR (2018)

- [70] Zuffi, S., Kanazawa, A., Berger-Wolf, T., Black, M.J.: Three-d safari: Learning to estimate zebra pose, shape, and texture from images” in the wild”. In: ICCV (2019)